Cracking a skill-specific interview, like one for Process Data Analysis and Reporting, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Process Data Analysis and Reporting Interview

Q 1. Explain the difference between descriptive, predictive, and prescriptive analytics.

The three types of analytics – descriptive, predictive, and prescriptive – represent a progression in sophistication and the insights they provide. Think of them as stages in a detective story:

- Descriptive Analytics: This is the ‘what happened’ stage. It summarizes historical data to understand past performance. Imagine reviewing sales figures for the last year – you’re describing what happened, identifying trends and patterns. Common tools include dashboards showing key metrics like total sales, average order value, and customer demographics. For example, a descriptive analysis might reveal that sales were highest in December.

- Predictive Analytics: This moves to the ‘what might happen’ stage. It uses statistical techniques and machine learning algorithms to forecast future outcomes based on historical data. Continuing our sales example, predictive analytics might forecast next year’s sales based on past trends, seasonality, and external factors like economic growth. We might use regression models or time series analysis to build our prediction.

- Prescriptive Analytics: This is the ‘what should we do’ stage. It uses optimization techniques to recommend actions that will lead to the desired outcome. Building on the previous examples, prescriptive analytics might suggest optimal pricing strategies, inventory levels, or marketing campaigns to maximize sales next year. This often involves simulation and optimization algorithms.

In essence, descriptive analytics provides a historical context, predictive analytics forecasts the future, and prescriptive analytics suggests the best course of action.

Q 2. What are the key performance indicators (KPIs) you would track for a manufacturing process?

The KPIs for a manufacturing process depend on the specific industry and product, but some common ones include:

- Overall Equipment Effectiveness (OEE): This measures the share of planned production time that is truly productive, meaning the equipment is running at full speed and making good parts. It is the product of the availability, performance, and quality rates.

- Production Rate/Throughput: The number of units produced per unit of time. This helps determine if the process meets production targets.

- Defect Rate/Yield: The defect rate is the percentage of units produced that are defective; yield is its complement, the percentage of good units. A high defect rate (low yield) indicates quality issues that need addressing.

- Mean Time Between Failures (MTBF): The average time between equipment failures. This indicates equipment reliability.

- Mean Time To Repair (MTTR): The average time it takes to repair equipment after a failure. A high MTTR suggests inefficiencies in maintenance.

- Inventory Turnover Rate: How many times inventory is used or sold and then replaced over a given period. This shows how efficiently raw materials and finished goods are managed.

- Cycle Time: The total time required to complete a production cycle from start to finish. Shorter cycle times indicate process efficiency.

- Labor Cost per Unit: This measures the efficiency of labor and can highlight areas for improvement.

Tracking these KPIs provides a comprehensive view of the manufacturing process’s efficiency and effectiveness. Regular monitoring allows for timely intervention and optimization.
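To make the arithmetic behind a few of these KPIs concrete, here is a minimal sketch. All shift figures are hypothetical and chosen only for illustration, and MTBF and MTTR are computed in their simplest form (operating time and downtime divided by the number of failures).

```python
# Hypothetical figures for one shift, used only to illustrate the formulas.
planned_time_min = 480        # scheduled production time
downtime_min = 60             # unplanned stops during the shift
ideal_cycle_time_min = 0.5    # ideal minutes per unit
total_units = 700             # units produced, good or bad
good_units = 665              # units that passed inspection
failures = 3                  # number of breakdowns in the shift

run_time_min = planned_time_min - downtime_min

# OEE is the product of its three component rates.
availability = run_time_min / planned_time_min
performance = (ideal_cycle_time_min * total_units) / run_time_min
quality = good_units / total_units
oee = availability * performance * quality

mtbf_min = run_time_min / failures   # mean operating time between failures
mttr_min = downtime_min / failures   # mean repair time per failure

print(f"Availability {availability:.1%}, performance {performance:.1%}, quality {quality:.1%}")
print(f"OEE {oee:.1%}, MTBF {mtbf_min:.0f} min, MTTR {mttr_min:.0f} min")
```

Breaking OEE into its three factors is useful in an interview answer because it shows where the loss comes from: downtime, slow running, or scrap.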

Q 3. Describe your experience with ETL (Extract, Transform, Load) processes.

ETL (Extract, Transform, Load) is the backbone of any data warehousing or business intelligence project. My experience involves designing, implementing, and maintaining ETL processes using a variety of tools. I’m proficient in tools such as Informatica PowerCenter, Apache Kafka (for streaming data into pipelines), and custom scripting in Python.

A typical ETL process I might manage includes:

- Extract: Retrieving data from various sources, such as databases (SQL Server, Oracle, MySQL), flat files (CSV, TXT), APIs, and cloud-based storage (AWS S3, Azure Blob Storage). This often involves dealing with diverse data formats and structures.

- Transform: Cleaning, validating, and transforming the extracted data into a consistent format suitable for analysis. This can include handling missing values, standardizing data types, removing duplicates, and performing data aggregations. I frequently use scripting to automate these tasks.

- Load: Loading the transformed data into a target data warehouse or data lake. This requires ensuring data integrity and performance, often using optimized loading techniques.

For example, in a recent project, I built an ETL pipeline using Python and the Pandas library to extract sales data from multiple CSV files, transform them by cleaning inconsistencies and calculating aggregate metrics, and load them into a PostgreSQL database for further analysis. This improved reporting speed significantly.
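The shape of such a pipeline can be sketched in a few lines. This is not the project code: to keep the example self-contained it uses Python's built-in `csv` and `sqlite3` modules in place of Pandas and PostgreSQL, and the CSV contents, column names, and `sales_data` table are hypothetical.

```python
import csv
import io
import sqlite3

# Two in-memory "files" stand in for the CSV exports (hypothetical data).
CSV_SOURCES = [
    "region,sales\nNorth,100.50\nSouth, 80\nNorth,\n",
    "region,sales\nsouth,20\nEast,45.25\n",
]

def extract(sources):
    """Yield raw rows (dicts) from every CSV source."""
    for text in sources:
        yield from csv.DictReader(io.StringIO(text))

def transform(rows):
    """Normalize region names, drop rows without a sales value, parse numbers."""
    for row in rows:
        sales = (row["sales"] or "").strip()
        if not sales:
            continue  # skip rows with a missing sales figure
        yield row["region"].strip().title(), float(sales)

def load(records, conn):
    """Insert cleaned records into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales_data (region TEXT, sales REAL)")
    conn.executemany("INSERT INTO sales_data VALUES (?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(CSV_SOURCES)), conn)

# Aggregate metric computed in the target database.
totals = conn.execute(
    "SELECT region, SUM(sales) FROM sales_data GROUP BY region ORDER BY region"
).fetchall()
print(totals)
```

Keeping extract, transform, and load as separate functions makes each stage testable on its own, which is the point worth stressing when describing a pipeline in an interview.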

Q 4. How do you handle missing data in your analysis?

Missing data is a common challenge in data analysis. The best approach depends on the nature and extent of the missing data and the analysis goals. There’s no one-size-fits-all solution.

My strategies include:

- Deletion: If the missing data is minimal and random, I might consider listwise deletion (removing entire rows with missing values). However, this can lead to significant data loss if not used cautiously.

- Imputation: This involves filling in missing values with estimated values. Common imputation techniques include:

  - Mean/Median/Mode Imputation: Replacing missing values with the mean, median, or mode of the available data. Simple but can distort the distribution if many values are missing.

  - Regression Imputation: Predicting missing values using a regression model based on other variables.

  - K-Nearest Neighbors (KNN) Imputation: Filling in missing values based on the values of similar data points.

  - Multiple Imputation: Creating multiple plausible imputed datasets and combining the results to account for uncertainty in the imputed values. This is a more sophisticated approach.

- Model-based approaches: Employing models that handle missing data natively, such as maximum likelihood estimation.

Before choosing a method, I thoroughly investigate the reason behind missing data (Missing Completely at Random (MCAR), Missing at Random (MAR), or Missing Not at Random (MNAR)) to determine the best imputation technique. Each method has its strengths and weaknesses, and the choice depends on the specific context.
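As a small illustration of the two simplest options, the sketch below applies listwise deletion and median imputation to a hypothetical list of readings, with `None` marking a missing value. It uses only the standard library; in practice a library such as Pandas would usually do this work.

```python
from statistics import median

# Hypothetical sensor readings; None marks a missing value.
readings = [12.0, None, 15.0, 14.0, None, 40.0, 13.0]

observed = [x for x in readings if x is not None]

# How much is missing? This drives the choice of method.
missing_share = 1 - len(observed) / len(readings)

# Option 1: listwise deletion keeps only the observed values.
deleted = observed

# Option 2: median imputation fills each gap with the median of the observed
# values; the median is less sensitive than the mean to the extreme value 40.0.
fill_value = median(observed)
imputed = [fill_value if x is None else x for x in readings]

print(f"{missing_share:.0%} missing, fill value {fill_value}")
print(imputed)
```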

Q 5. What are some common data visualization techniques, and when would you use each?

Data visualization is crucial for communicating insights effectively. The choice of technique depends on the type of data and the message you want to convey.

- Bar charts: Excellent for comparing categorical data, showing the frequency of different categories.

- Line charts: Ideal for showing trends over time or relationships between continuous variables.

- Scatter plots: Useful for exploring the relationship between two continuous variables. Correlation can be visually assessed.

- Histograms: Show the distribution of a single continuous variable.

- Pie charts: Effective for showing proportions of a whole, but best used with a limited number of categories.

- Box plots: Display the distribution of a continuous variable, highlighting central tendency, spread, and outliers.

- Heatmaps: Show correlations or values across a matrix. Useful for visualizing large datasets.

- Geographic maps: Ideal for visualizing location-based data.

For example, if I’m analyzing sales data across different regions, I might use a bar chart to compare sales figures for each region. To show trends in sales over time, I would use a line chart. If exploring the relationship between advertising spend and sales, a scatter plot would be appropriate.

Q 6. What statistical methods are you familiar with, and how have you applied them?

I’m familiar with a wide range of statistical methods, including:

- Descriptive statistics: Mean, median, mode, standard deviation, variance, percentiles. Used to summarize and understand data distributions.

- Inferential statistics: Hypothesis testing, t-tests, ANOVA, chi-squared tests. Used to draw conclusions about populations based on sample data.

- Regression analysis: Linear regression, multiple regression, logistic regression. Used to model relationships between variables and make predictions.

- Time series analysis: ARIMA, Exponential Smoothing. Used to model and forecast time-dependent data.

- Clustering techniques: K-means, hierarchical clustering. Used to group similar data points together.

In a recent project, I used linear regression to model the relationship between various factors (temperature, humidity, and machine age) and equipment failure rate in a manufacturing plant. The results helped identify key factors contributing to equipment breakdowns, enabling targeted preventative maintenance efforts.
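For readers who want to see the mechanics, here is a minimal sketch of simple (one-variable) linear regression fitted by ordinary least squares, along with R-squared. The machine-age and failure figures are hypothetical and are not the project data; a real analysis with several predictors would typically use a statistics library.

```python
from statistics import mean

# Hypothetical observations: machine age (years) vs. failures per year.
age = [1, 2, 3, 4, 5]
failures = [2.1, 2.9, 4.2, 4.8, 6.0]

x_bar, y_bar = mean(age), mean(failures)

# Least-squares estimates for the line: failures = intercept + slope * age.
sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(age, failures))
sxx = sum((x - x_bar) ** 2 for x in age)
slope = sxy / sxx
intercept = y_bar - slope * x_bar

# R-squared: share of the variation in failures explained by the line.
predicted = [intercept + slope * x for x in age]
ss_res = sum((y - p) ** 2 for y, p in zip(failures, predicted))
ss_tot = sum((y - y_bar) ** 2 for y in failures)
r_squared = 1 - ss_res / ss_tot

print(f"slope={slope:.2f}, intercept={intercept:.2f}, R^2={r_squared:.3f}")
```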

Q 7. Explain your experience with SQL and database querying.

I have extensive experience with SQL and database querying. I’m proficient in writing complex queries to extract, manipulate, and analyze data from various relational databases. My skills include:

- Data retrieval: Using `SELECT` statements to retrieve specific data based on various conditions.

- Data manipulation: Using clauses like `JOIN`, `WHERE`, `GROUP BY`, `HAVING`, and `ORDER BY` to combine, filter, aggregate, and sort data.

- Data modification: Using `INSERT`, `UPDATE`, and `DELETE` statements to modify data in the database.

- Database design: Understanding database normalization and designing efficient database schemas.

- Stored procedures: Creating and using stored procedures to encapsulate and reuse common database operations.

- Performance optimization: Writing efficient SQL queries using indexes and optimizing query execution plans.

For example, I frequently use SQL to extract data for reporting and analysis. A typical query might look like this:

SELECT region, SUM(sales) AS total_sales FROM sales_data GROUP BY region ORDER BY total_sales DESC;

This query summarizes total sales by region and orders the results in descending order, providing a quick overview of sales performance across different regions. I’m also comfortable using various database management systems including MySQL, PostgreSQL, and SQL Server.
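To try the query without a database server, it can be run against an in-memory SQLite database from Python. This is only a sketch: SQLite stands in for the systems named above, and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales_data (region TEXT, sales REAL)")
conn.executemany(
    "INSERT INTO sales_data VALUES (?, ?)",
    [("North", 120.0), ("South", 75.0), ("North", 30.0), ("East", 90.0)],
)

query = """
    SELECT region, SUM(sales) AS total_sales
    FROM sales_data
    GROUP BY region
    ORDER BY total_sales DESC;
"""
rows = conn.execute(query).fetchall()
for region, total_sales in rows:
    print(region, total_sales)
```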

Q 8. How do you identify and address outliers in your datasets?

Identifying and addressing outliers is crucial for accurate data analysis. Outliers are data points that significantly deviate from the rest of the data. They can skew results and lead to misleading conclusions. My approach involves a multi-pronged strategy:

Visual Inspection: I begin by visualizing the data using histograms, box plots, and scatter plots. This allows me to quickly identify potential outliers that stand out visually. For example, a box plot clearly shows data points beyond the whiskers, representing potential outliers.

Statistical Methods: I employ various statistical techniques to quantitatively identify outliers. These include Z-score analysis (identifying data points that fall outside a certain number of standard deviations from the mean), the Interquartile Range (IQR) method (identifying data points outside a specific range based on the quartiles), and modified Z-score. For example, a Z-score of greater than 3 or less than -3 might flag a data point as an outlier.

Domain Knowledge: Context is key. I always consider the business context and domain knowledge. An outlier might not always be an error; it could represent a genuine, albeit unusual, event. For instance, a significantly higher sales figure on a particular day might be due to a successful marketing campaign, not an error.

Handling Outliers: Once identified, the treatment depends on the context. Options include removal (if clearly erroneous), winsorizing (capping values at a certain percentile), or transformation (e.g., logarithmic transformation to reduce the influence of extreme values). Careful consideration is given to avoid data bias.

For instance, in analyzing website traffic, I might find an unusually high number of visits on a specific day. Through investigation, I might discover a viral social media post driving this traffic. In such cases, removing the data point would be wrong. Instead, I’d document it and potentially segment my analysis to explore this spike separately.
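The two statistical checks can be sketched with the standard library. The visit counts are hypothetical, and the thresholds (3 standard deviations, 1.5 times the IQR) are the conventional defaults rather than fixed rules.

```python
from statistics import mean, stdev, quantiles

# Hypothetical daily visit counts with one obvious spike.
visits = [102, 98, 105, 110, 97, 101, 99, 104, 100, 450]

# Z-score method: distance from the mean in standard deviations.
mu, sigma = mean(visits), stdev(visits)
z_outliers = [v for v in visits if abs(v - mu) / sigma > 3]

# IQR method: flag points more than 1.5 * IQR beyond the quartiles.
q1, _, q3 = quantiles(visits, n=4)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
iqr_outliers = [v for v in visits if v < lower or v > upper]

print("Z-score flags:", z_outliers)
print("IQR flags:", iqr_outliers)
```

With only ten points, the spike inflates the standard deviation so much that its own Z-score stays just under 3 and the Z-score method flags nothing, while the IQR rule catches it. This is one reason to combine methods rather than rely on a single threshold.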

Q 9. Describe your experience with data mining techniques.

I have extensive experience with various data mining techniques, applying them to extract meaningful insights from large datasets. My experience covers:

Association Rule Mining: Using algorithms like Apriori and FP-Growth to discover relationships between variables. For example, identifying frequently purchased items together in a supermarket to optimize product placement.

Classification: Employing techniques like decision trees, support vector machines (SVMs), and logistic regression to predict categorical outcomes. For example, predicting customer churn based on their past behavior.

Clustering: Using algorithms like K-means and hierarchical clustering to group similar data points. For example, segmenting customers into different groups based on their demographics and purchasing patterns.

Regression: Using linear and non-linear regression models to predict continuous outcomes. For example, predicting sales revenue based on marketing spend.

I’m proficient in selecting the appropriate technique based on the specific problem and data characteristics. I always focus on model evaluation and selection to ensure optimal performance and generalizability.

Q 10. How do you ensure the accuracy and reliability of your data analysis?

Ensuring data accuracy and reliability is paramount. My approach is built on several key principles:

Data Validation: I rigorously validate data at every stage, checking for inconsistencies, errors, and missing values. This involves data profiling, range checks, and consistency checks against known data sources.

Data Source Verification: I carefully evaluate the credibility and reliability of data sources. I consider factors like data collection methods, potential biases, and data quality documentation.

Data Cleaning and Preprocessing: I employ various data cleaning techniques to handle missing values, outliers, and inconsistencies. This could involve imputation, smoothing, or transformation techniques, always documenting the applied methods.

Version Control: I use version control systems to track changes to the data and analysis, allowing for reproducibility and auditability.

Cross-Validation: I employ cross-validation techniques to assess the generalizability and robustness of my analytical models, ensuring they are not overfitting to the specific dataset.

For instance, if I’m analyzing sales data, I’d verify the data against the sales ledger and investigate any discrepancies. I’d also ensure that the data accurately reflects sales across different regions and product categories.

Q 11. What is your experience with data cleaning and preparation?

Data cleaning and preparation are foundational to any successful data analysis project. My experience includes:

Handling Missing Values: I employ various imputation techniques like mean/median imputation, mode imputation, and more sophisticated methods like k-nearest neighbors imputation, based on the nature of the data and the amount of missingness. I always carefully consider the potential impact of imputation on the analysis.

Outlier Detection and Treatment: As discussed earlier, I use statistical methods and visualization to identify and handle outliers appropriately.

Data Transformation: I often transform data to improve model performance or meet the assumptions of specific statistical methods. This includes scaling (standardization, normalization), and encoding categorical variables (one-hot encoding, label encoding).

Data Consistency Checks: I perform rigorous checks to ensure data consistency across different sources and columns, identifying and resolving discrepancies.

Data Deduplication: I use various techniques to identify and remove duplicate records from the dataset, ensuring data integrity.

In a recent project analyzing customer data, I had to handle missing values in the customer’s address field. I used a combination of imputation with known data and pattern matching to fill in missing information, ensuring data completeness without introducing bias.
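Three of the steps listed above (deduplication, standardization, and one-hot encoding) can be sketched as follows. The records and field names are hypothetical, and the example uses plain Python rather than a data-frame library so that each step is visible.

```python
from statistics import mean, pstdev

# Hypothetical customer records; the second row duplicates the first.
records = [
    {"id": 1, "segment": "retail", "spend": 100.0},
    {"id": 1, "segment": "retail", "spend": 100.0},
    {"id": 2, "segment": "wholesale", "spend": 300.0},
    {"id": 3, "segment": "online", "spend": 200.0},
]

# Deduplicate on the full record while preserving first-seen order.
seen, unique = set(), []
for rec in records:
    key = tuple(sorted(rec.items()))
    if key not in seen:
        seen.add(key)
        unique.append(rec)

# Standardize spend to zero mean and unit variance (z-scores).
spend = [r["spend"] for r in unique]
mu, sigma = mean(spend), pstdev(spend)
scaled = [(s - mu) / sigma for s in spend]

# One-hot encode the categorical segment column.
categories = sorted({r["segment"] for r in unique})
one_hot = [[int(r["segment"] == c) for c in categories] for r in unique]

print(categories)
print(one_hot)
```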

Q 12. How do you communicate complex analytical findings to non-technical stakeholders?

Communicating complex analytical findings to non-technical stakeholders requires clear, concise, and engaging communication. I prioritize the following:

Storytelling: I frame my findings within a compelling narrative, using plain language and avoiding technical jargon. I focus on the key insights and their implications for the business.

Visualizations: I use clear and informative visualizations such as charts, graphs, and dashboards to illustrate key findings. I avoid overwhelming the audience with too much detail.

Interactive Dashboards: For ongoing monitoring and exploration, I build interactive dashboards that allow stakeholders to explore the data at their own pace. Tools like Tableau and Power BI are instrumental here.

Summary Reports: I prepare concise summary reports that highlight the key findings, recommendations, and next steps. These reports are tailored to the specific audience’s needs and level of understanding.

Active Listening and Feedback: I actively solicit feedback from stakeholders to ensure they understand the findings and their implications. I adapt my communication style based on their feedback.

For example, instead of saying “the coefficient of determination (R-squared) is 0.85,” I might say “Our model explains 85% of the variation in sales, which means it fits the historical data well.” I focus on the practical implications rather than the technical details.

Q 13. What tools and technologies are you proficient in (e.g., Tableau, Power BI, R, Python)?

I am proficient in a wide range of tools and technologies for data analysis and reporting, including:

Business Intelligence Tools: Tableau, Power BI – for creating interactive dashboards and reports.

Programming Languages: R, Python – for statistical modeling, data manipulation, and automation.

Databases: SQL and NoSQL databases – for storing and querying data, and as sources and targets in extraction, transformation, and loading (ETL) work.

Cloud Platforms: AWS, Azure, GCP – for managing and analyzing large datasets in the cloud.

Statistical Software: SPSS, SAS – for advanced statistical analysis.

My skills encompass the entire data analysis workflow, from data acquisition and cleaning to model building, visualization, and reporting.

Q 14. Describe a time you had to troubleshoot a data issue.

In a recent project analyzing customer transaction data, I encountered inconsistent date formats. This was causing errors in time-series analysis and impacting our ability to track trends accurately.

My troubleshooting involved the following steps:

Data Inspection: I first examined the data to identify the various date formats present. This involved using data profiling tools and visual inspection.

Pattern Recognition: I looked for patterns in the inconsistent formats to understand the potential causes.

Data Cleaning Script: I developed a script in Python using the `pandas` library to standardize the date formats. This involved using regular expressions to identify and convert different date formats into a consistent format (YYYY-MM-DD).

Testing and Validation: After applying the cleaning script, I thoroughly tested the results to ensure the dates were correctly formatted and that no data was lost or incorrectly converted.

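# Example Python code snippet (illustrative):

import pandas as pd

# Hypothetical sample; in practice df holds the transaction data.
df = pd.DataFrame({'Date': ['01/15/2024', '2024-01-16']})

# Parses MM/DD/YYYY only; other formats become NaT (errors='coerce') and need a separate pass.
df['Date'] = pd.to_datetime(df['Date'], format='%m/%d/%Y', errors='coerce')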

This resolved the issue and allowed for accurate time-series analysis. The experience highlighted the importance of thorough data validation and the value of robust data cleaning processes.
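One way to handle several formats at once, sketched here with the standard library instead of pandas, is to try each known format in turn and keep track of anything that fails to parse. The list of formats is an assumption for illustration; it would come from the data inspection step.

```python
from datetime import datetime

# Candidate formats are assumptions; extend the list to match the real data.
# Order matters for ambiguous inputs: 03/07/2024 is read here as MM/DD/YYYY.
KNOWN_FORMATS = ["%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y"]

def to_iso_date(raw):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

raw_dates = ["2024-03-05", "03/07/2024", "09.03.2024", "not a date"]
cleaned = [to_iso_date(d) for d in raw_dates]

# Anything left unparsed is reported rather than silently dropped.
unparsed = [d for d, c in zip(raw_dates, cleaned) if c is None]

print(cleaned)
print("Needs manual review:", unparsed)
```

Collecting the unparsed values is what supports the validation step: it shows exactly which records were not converted.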

Q 15. How do you prioritize tasks when working on multiple projects?

Prioritizing tasks across multiple projects requires a structured approach. I typically employ a combination of methods, starting with a clear understanding of project deadlines and business priorities. I use tools like the Eisenhower Matrix (urgent/important) to categorize tasks and focus on high-impact activities first. For instance, if one project is nearing a critical deadline with potentially significant consequences, I’ll allocate more time to it, even if other projects are also demanding attention. I also use project management software to track progress and dependencies and to allocate resources effectively. This visual representation helps me see the big picture and identify potential bottlenecks or conflicts that could affect the timeline.

Furthermore, I regularly communicate with stakeholders to ensure alignment on priorities and adjust my task allocation accordingly. Transparency and proactive communication are crucial to manage expectations and avoid misunderstandings. Regularly reviewing my task list and re-prioritizing as needed is a dynamic process that helps me adapt to changing circumstances and maintain efficiency.

Q 16. What is your experience with A/B testing and experimental design?

A/B testing and experimental design are fundamental to my work. I have extensive experience designing and implementing A/B tests to compare different versions of reports, dashboards, or data visualization techniques. This involves defining a clear hypothesis, selecting appropriate metrics, carefully controlling for confounding variables, and ensuring sample sizes are large enough to detect a meaningful effect. For example, I recently conducted an A/B test to compare two different dashboard designs. One used a traditional tabular format, while the other employed interactive charts and graphs. By tracking key metrics like user engagement time and task completion rate, we determined that the interactive design significantly improved user experience and efficiency.

My experimental design approach always emphasizes rigorous methodology to ensure the results are reliable and actionable. This includes utilizing appropriate statistical tests (e.g., t-tests, chi-squared tests) and accounting for potential biases. Understanding the limitations of A/B testing and interpreting the results cautiously is crucial to avoid drawing inaccurate conclusions.
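As an illustration of the kind of test involved, the sketch below runs a two-proportion z-test on task completion rates for two dashboard designs. The counts are hypothetical, not the results of the test described above, and the helper name `two_proportion_z_test` is my own.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for H0: both proportions are equal."""
    p_a, p_b = success_a / n_a, success_b / n_b
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts: users who completed the task with design A vs. design B.
z, p_value = two_proportion_z_test(success_a=120, n_a=200, success_b=150, n_b=200)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference in completion rates is unlikely to be due to chance alone, but it says nothing about whether the difference is large enough to matter, which is why the effect size should be reported alongside it.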

Q 17. Describe your process for developing reports and dashboards.

My process for developing reports and dashboards is iterative and user-centric. It begins with a thorough understanding of the stakeholders’ needs and objectives. I start by asking clarifying questions to understand what information they require, how they will use it, and what their level of technical expertise is. This helps me tailor the design and content appropriately.

Next, I gather and clean the relevant data, ensuring data accuracy and integrity. I then select the most effective visualization techniques to clearly and concisely communicate the insights. I favor interactive dashboards that allow for exploration and deeper dives into the data whenever possible. For instance, I might use interactive charts that allow users to filter the data by different dimensions, or drill down to view more granular details. Once a draft is complete, I present it to the stakeholders for feedback and make revisions based on their input. The iterative process ensures the final product is both effective and meets their specific needs. Finally, I document the report’s methodology and data sources for future reference and reproducibility.

Q 18. How do you ensure data security and privacy?

Data security and privacy are paramount in my work. I adhere to strict protocols to protect sensitive information. This includes implementing appropriate access controls, limiting data exposure to only authorized personnel, and using encryption techniques to secure data both in transit and at rest. I am familiar with various data privacy regulations, such as GDPR and CCPA, and I tailor my practices to comply with relevant legislation.

Furthermore, I regularly review and update security measures to address evolving threats. Data anonymization and pseudonymization techniques are often employed to protect individual identities whenever possible. Regular security audits and penetration testing are important to identify and mitigate vulnerabilities proactively. I believe in a multi-layered approach, combining technical safeguards with robust organizational policies and employee training, to create a strong security posture.

Q 19. How familiar are you with Agile methodologies?

I am very familiar with Agile methodologies, and I have incorporated Agile principles into my data analysis workflows. I believe in iterative development and continuous feedback. This translates into breaking down large analysis projects into smaller, manageable tasks, and regularly collaborating with stakeholders to ensure the analysis aligns with their evolving needs.

The Agile approach allows for flexibility and adaptability. In practice, I frequently use tools like Jira or Trello to manage tasks, track progress, and facilitate communication among team members. The short, iterative cycles allow for faster turnaround times and provide ample opportunities for course correction, ensuring that the analysis remains relevant and valuable throughout the process. This collaborative approach helps ensure that everyone stays informed and engaged, which enhances the quality of the results and increases stakeholder satisfaction.

Q 20. What are some common challenges you’ve encountered in data analysis, and how did you overcome them?

One common challenge is dealing with incomplete or inconsistent data. This often requires significant data cleaning and preprocessing, which can be time-consuming. To overcome this, I use a combination of techniques such as data imputation, outlier detection, and data transformation. For instance, I might use k-nearest neighbors to impute missing values or employ robust statistical methods that are less sensitive to outliers. I also carefully document the data cleaning steps to ensure transparency and reproducibility.

Another challenge is communicating complex technical findings to non-technical stakeholders. To address this, I focus on clear and concise communication, using visualizations that are easy to understand, and avoiding technical jargon. I create narratives that emphasize the key takeaways and their implications for the business. Using storytelling techniques, such as focusing on trends and patterns, can effectively communicate the main points without overwhelming the audience with detailed analysis.

Q 21. Explain your experience with different data formats (e.g., CSV, JSON, XML).

I have extensive experience working with various data formats, including CSV, JSON, and XML. I am proficient in using programming languages such as Python and R to read, manipulate, and analyze data from these formats.

For instance, when working with large CSV files, I often utilize libraries like Pandas in Python for efficient data manipulation and analysis. JSON data, being commonly used in web applications, requires different parsing techniques and often involves working with nested structures; libraries like `json` in Python readily handle this. XML, with its hierarchical structure, requires different parsing methods, and I’m skilled in using libraries that provide efficient navigation and extraction from XML documents. The choice of tool depends largely on the specific data format and the type of analysis I need to perform. My experience extends to handling less common formats as well, and I am always ready to learn and adapt to new data structures as needed.
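The sketch below reads the same two hypothetical records from CSV, JSON, and XML using only Python's standard library, to show how the parsing differs while the resulting structure stays the same. The field and element names are made up for the example.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# The same two records in three formats (hypothetical data).
csv_text = "region,sales\nNorth,120\nSouth,75\n"
json_text = '{"orders": [{"region": "North", "sales": 120}, {"region": "South", "sales": 75}]}'
xml_text = """
<orders>
  <order region="North"><sales>120</sales></order>
  <order region="South"><sales>75</sales></order>
</orders>
""".strip()

# CSV: flat rows, every value arrives as a string.
from_csv = [(r["region"], float(r["sales"])) for r in csv.DictReader(io.StringIO(csv_text))]

# JSON: nested structure, numbers are already typed.
from_json = [(o["region"], float(o["sales"])) for o in json.loads(json_text)["orders"]]

# XML: hierarchical, values live in attributes and child elements.
from_xml = [
    (order.get("region"), float(order.findtext("sales")))
    for order in ET.fromstring(xml_text).iter("order")
]

print(from_csv == from_json == from_xml)
```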

Q 22. How do you validate the accuracy of your data sources?

Data source validation is crucial for ensuring the reliability of your analysis. It’s like checking the ingredients before baking a cake – if your ingredients are bad, your cake will be bad too! My approach is multi-faceted and involves several key steps:

Source Credibility Assessment: I first evaluate the reputation and expertise of the data source. Is it a reputable organization? Do they have a track record of providing accurate data? For example, government statistical agencies generally offer more reliable data than an anonymous blog.

Data Profiling and Exploration: I then perform exploratory data analysis (EDA) to understand the data’s characteristics. This involves checking for things like data types, missing values, outliers, and inconsistencies. Tools like Python’s Pandas library are invaluable here. For instance, I might identify unexpected negative values in a variable representing sales figures, indicating a data entry error.

Comparison with other sources (Triangulation): Whenever possible, I compare data from multiple, independent sources. If the data points align, it strengthens confidence in its accuracy. Discrepancies, however, require further investigation – perhaps one source has a different definition or methodology.

Data Validation Rules: I establish validation rules based on business logic and domain knowledge. For example, if I’m analyzing customer ages, I would ensure no values are below zero or unrealistically high. These rules can be implemented using data quality tools or programming scripts.

Regular Monitoring: Data quality isn’t a one-time event. I implement ongoing monitoring and alerts to detect potential issues. For instance, setting up anomaly detection triggers to flag unexpected spikes or drops in key metrics.

By combining these techniques, I can build confidence in the accuracy of my data sources and minimize the risk of drawing incorrect conclusions.
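Validation rules of the kind described above can be expressed as small, named checks that run over every record. This is a minimal sketch; the field names, thresholds, and sample records are assumptions for illustration.

```python
# Each rule maps a label to a predicate that a valid record must satisfy.
# Field names and thresholds are illustrative assumptions.
RULES = {
    "age is between 0 and 120": lambda r: r["age"] is not None and 0 <= r["age"] <= 120,
    "sales is non-negative": lambda r: r["sales"] is not None and r["sales"] >= 0,
    "region is present": lambda r: bool(r["region"]),
}

def validate(records):
    """Return a list of (row_index, failed_rule) pairs."""
    return [
        (i, label)
        for i, rec in enumerate(records)
        for label, check in RULES.items()
        if not check(rec)
    ]

records = [
    {"age": 34, "sales": 250.0, "region": "North"},
    {"age": -2, "sales": 80.0, "region": "South"},
    {"age": 51, "sales": -15.0, "region": ""},
]
issues = validate(records)
for row, rule in issues:
    print(f"row {row}: failed '{rule}'")
```

Because each rule has a readable label, the output doubles as a data quality report that can be shared with the team that owns the source.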

Q 23. Describe your experience with data warehousing concepts.

Data warehousing is fundamental to my work. I think of it as a central repository, a well-organized library for all your business data. It’s essential for efficient data analysis and reporting. My experience encompasses the entire lifecycle, from design to implementation and maintenance. This includes:

Data Modeling: I’m proficient in designing dimensional models (star schema, snowflake schema) to effectively organize data for analysis. Understanding the nuances of fact tables and dimension tables is crucial for efficient querying and reporting.

ETL Processes: I have experience with Extract, Transform, Load (ETL) processes, which involve extracting data from various sources, transforming it into a consistent format, and loading it into the data warehouse. I’m familiar with ETL tools like Informatica and Talend, as well as scripting languages like Python for custom ETL development.

Data Warehousing Technologies: I’m experienced working with various data warehousing platforms, including cloud-based solutions like Amazon Redshift and Snowflake, and on-premises solutions like Teradata. I understand the importance of choosing the right technology to meet the specific needs of a project.

Data Governance: I understand the importance of data governance within the data warehouse, including data quality checks, access control, and metadata management. This ensures data integrity and compliance with regulations.

In a recent project, I designed and implemented a data warehouse for a large retail company, improving their reporting capabilities significantly. By centralizing their data, we reduced reporting time from days to hours and provided more actionable insights for business decisions.
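To make the star schema idea from the data modeling point concrete, the sketch below builds a tiny in-memory example with Python’s built-in `sqlite3` module. The table names (`fact_sales`, `dim_product`, `dim_date`) and the sample rows are invented for illustration and are not taken from any real project.

```python
import sqlite3

# In-memory database so the example leaves nothing behind.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, month TEXT);
-- The fact table holds the measures plus a foreign key to each dimension.
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    amount REAL
);
INSERT INTO dim_product VALUES (1, 'Toys'), (2, 'Books');
INSERT INTO dim_date VALUES (10, '2024-01'), (11, '2024-02');
INSERT INTO fact_sales VALUES (1, 10, 100.0), (1, 11, 50.0), (2, 10, 30.0);
""")

# A typical reporting query: join the fact table to its dimensions, then aggregate.
rows = conn.execute("""
    SELECT p.category, d.month, SUM(f.amount) AS total
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    JOIN dim_date AS d ON d.date_id = f.date_id
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
""").fetchall()

for category, month, total in rows:
    print(category, month, total)
```

The point of the layout is that every report follows the same shape: one central fact table joined to small descriptive dimension tables, which keeps queries predictable.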

Q 24. How do you measure the success of your data analysis efforts?

Measuring the success of data analysis isn’t simply about generating reports; it’s about demonstrating the impact on business decisions. I use a combination of quantitative and qualitative metrics.

Quantitative Metrics: These are measurable outcomes directly related to business objectives. For example:

- Improved Efficiency: Did the analysis lead to a reduction in processing time, cost savings, or increased productivity?

- Increased Revenue: Did the insights drive sales growth or improved customer retention?

- Reduced Risks: Did the analysis help identify and mitigate potential problems or risks?

Qualitative Metrics: These assess the impact on decision-making and overall business strategy:

- Actionable Insights: Were the findings clear, concise, and easily understood by stakeholders? Did they lead to informed decisions?

- Stakeholder Satisfaction: Did the analysis meet the needs and expectations of business users and leadership? Did it improve their understanding of the business?

- Improved Communication: Did the analysis facilitate better communication and collaboration within the organization?

It’s important to define clear KPIs (Key Performance Indicators) upfront to ensure the analysis directly addresses business needs. For example, if the goal is to reduce customer churn, we’d track metrics such as churn rate before and after implementing recommendations based on our analysis.
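The churn example can be sketched in a few lines. The customer counts below are made-up numbers used only to show the calculation, and `churn_rate` is a hypothetical helper; the definition used here (customers lost divided by customers at the start of the period) is one common convention, and an organization may define churn differently.

```python
def churn_rate(customers_at_start, customers_lost):
    """Share of customers present at the start of a period who left during it."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return customers_lost / customers_at_start


# Illustrative figures only, not real results.
before = churn_rate(customers_at_start=2000, customers_lost=160)
after = churn_rate(customers_at_start=2100, customers_lost=126)

# Relative change in churn between the two periods.
relative_change = (after - before) / before

print(f"Churn before: {before:.1%}")
print(f"Churn after:  {after:.1%}")
print(f"Relative change: {relative_change:.1%}")
```

A before/after comparison like this shows association, not proof of cause; other changes in the same period can also move the churn rate.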

Q 25. What is your experience with time series analysis?

Time series analysis is a powerful technique for analyzing data collected over time. Think stock prices, weather patterns, or website traffic – all are time series data. My experience covers various aspects of this field:

Forecasting: I use techniques like ARIMA, Exponential Smoothing, and Prophet to forecast future trends. For example, I’ve successfully predicted customer demand for a retail company, optimizing inventory levels and reducing waste.

Decomposition: I decompose time series data into its components (trend, seasonality, residuals) to gain a deeper understanding of underlying patterns. This helps isolate the impact of different factors and make more accurate predictions.

Anomaly Detection: I identify unusual patterns or outliers in time series data, which could signal problems or opportunities. For example, a sudden drop in website traffic might indicate a technical issue needing immediate attention.

Tools and Techniques: I’m proficient in R and in Python libraries such as statsmodels and Prophet (the prophet package, formerly published as fbprophet) for time series analysis.

In one project, I used time series analysis to identify seasonal patterns in energy consumption for a utility company. This allowed them to optimize their energy generation and reduce costs.
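For readers who want to see what one of the forecasting techniques named above does, here is a minimal sketch of simple exponential smoothing written in plain Python. It is a teaching example, not a substitute for library implementations such as those in statsmodels; the function name, the smoothing factor `alpha = 0.3`, and the demand figures are assumptions chosen for illustration.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the one-step-ahead forecast after smoothing the whole series.

    Each new level is a weighted average of the latest observation and the
    previous level: level = alpha * value + (1 - alpha) * level.
    """
    if not series:
        raise ValueError("series must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # initialize the level with the first observation
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


# Illustrative weekly demand figures.
weekly_demand = [120, 130, 125, 140, 150]
forecast = simple_exponential_smoothing(weekly_demand, alpha=0.3)
print(f"Next-week forecast: {forecast:.1f}")
```

A higher `alpha` reacts faster to recent changes, while a lower one smooths out noise. This basic form ignores trend and seasonality, which is why methods such as ARIMA or Prophet are used for richer patterns.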

Q 26. Explain your understanding of regression analysis.

Regression analysis is a statistical method used to model the relationship between a dependent variable and one or more independent variables. It’s like trying to find the best-fitting line through a scatter plot of data points. My understanding includes:

Linear Regression: This is the most basic form, assuming a linear relationship between variables. I use it to understand how changes in independent variables affect the dependent variable. For example, I might use it to model the relationship between advertising spend and sales revenue.

Multiple Regression: This extends linear regression to include multiple independent variables, allowing for a more complex model. This is useful when there are several factors influencing the dependent variable.

Non-linear Regression: When the relationship between variables isn’t linear, I employ non-linear regression techniques to find a better fit. This might involve using polynomial or exponential functions.

Model Evaluation: I rigorously evaluate regression models, using R-squared and adjusted R-squared to assess goodness of fit and coefficient p-values to assess statistical significance.

For instance, I recently used multiple regression to model the factors influencing customer satisfaction. We identified key drivers and implemented targeted improvements to enhance customer experience.
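The advertising example from the linear regression point can be worked through by hand. The sketch below fits a one-variable least-squares line and computes R-squared in plain Python; the function name and the spend and sales figures are invented for illustration, and in practice a library such as statsmodels would also report p-values and adjusted R-squared.

```python
def fit_simple_linear_regression(x, y):
    """Ordinary least squares for y = intercept + slope * x.

    Returns (slope, intercept, r_squared).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length, at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must contain at least two distinct values")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R-squared: share of the variance in y explained by the fitted line.
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    r_squared = 1 - ss_res / ss_tot if ss_tot > 0 else float("nan")
    return slope, intercept, r_squared


# Illustrative data: advertising spend and sales revenue, in thousands.
ad_spend = [10, 20, 30, 40, 50]
sales = [25, 41, 62, 79, 103]

slope, intercept, r_squared = fit_simple_linear_regression(ad_spend, sales)
print(f"sales = {intercept:.2f} + {slope:.2f} * ad_spend (R-squared = {r_squared:.3f})")
```

The slope reads as the expected change in sales for each extra unit of advertising spend, which is the kind of interpretation an interviewer will usually ask for.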

Q 27. How do you handle conflicting data from multiple sources?

Conflicting data from multiple sources is a common challenge in data analysis. It’s like hearing different versions of a story; you need to carefully evaluate each source to determine the most accurate account. My approach involves:

Identifying the Discrepancies: The first step is to pinpoint the exact conflicts. This often involves data profiling and comparison techniques.

Investigating the Sources: I delve deeper into the sources of the conflicting data. This includes examining data collection methods, data definitions, and potential biases. Perhaps one source uses a different measurement scale or has a different data update frequency.

Prioritizing Data Quality: Based on my assessment, I prioritize data sources according to their reliability and accuracy. I might favor data from a more reputable or validated source.

Data Reconciliation: I may attempt to reconcile conflicting data through techniques like averaging, weighted averaging, or using a more sophisticated reconciliation algorithm. This requires careful consideration of the data’s nature and potential biases.

Flagging Inconsistencies: In cases where reconciliation is not feasible, I explicitly flag the inconsistencies in my analysis, highlighting potential areas of uncertainty.

Transparency is key; I always document the methods used to handle conflicting data and acknowledge potential limitations in the analysis.
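The reconciliation and flagging steps can be combined in a small routine. This is a sketch under stated assumptions: the source names (`crm`, `billing`), the reliability weights, and the tolerance are all hypothetical, and weighted averaging only makes sense for numeric values measured on the same scale.

```python
def reconcile(values_by_source, weights, tolerance):
    """Combine conflicting numeric values from several sources.

    Returns (estimate, needs_review): a reliability-weighted average, and a
    flag that is True when the sources disagree by more than `tolerance`.
    """
    if not values_by_source:
        raise ValueError("at least one source value is required")
    total_weight = sum(weights[source] for source in values_by_source)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    estimate = sum(
        value * weights[source] for source, value in values_by_source.items()
    ) / total_weight
    spread = max(values_by_source.values()) - min(values_by_source.values())
    return estimate, spread > tolerance


# Hypothetical example: two systems report different monthly revenue for one customer.
reported = {"crm": 100.0, "billing": 110.0}
reliability = {"crm": 1.0, "billing": 3.0}  # billing is trusted more in this example

estimate, needs_review = reconcile(reported, reliability, tolerance=5.0)
print(estimate, needs_review)  # prints 107.5 True
```

Keeping the `needs_review` flag alongside the estimate means the disagreement is recorded in the output rather than hidden by the average.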

Q 28. Describe your experience with process automation tools.

Process automation is essential for efficiency and scalability in data analysis and reporting. It’s about automating repetitive tasks, freeing up time for more strategic work. My experience includes:

ETL Automation: I automate ETL processes using tools like Informatica PowerCenter or Apache Airflow to ensure consistent and reliable data loading.

Report Generation Automation: I automate the generation of reports using scripting languages like Python and tools like Tableau or Power BI. This allows for scheduled and automated report delivery.

Data Quality Automation: I use automation to implement data quality checks and validation rules, identifying and addressing data inconsistencies proactively.

Workflow Automation: I design and implement automated workflows for data analysis tasks, optimizing processes and reducing manual intervention.

In a previous role, I automated a monthly reporting process that previously took days to complete. By using Python and scheduled tasks, I reduced the time to just a few hours, significantly improving efficiency and reducing the risk of human error.
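A report like the one described could be produced by a small script that a scheduler (cron, Windows Task Scheduler, or an orchestration tool) runs each month. The sketch below covers only the aggregation and CSV formatting step, using the standard library; the function name, the field names `date` and `amount`, and the sample transactions are assumptions for illustration.

```python
import csv
import io
from collections import defaultdict


def build_monthly_report(rows):
    """Aggregate transaction rows into per-month totals and return CSV text.

    Each row is a dict with an ISO date string ('YYYY-MM-DD') and an amount.
    """
    totals = defaultdict(float)
    for row in rows:
        month = row["date"][:7]  # 'YYYY-MM' prefix of the ISO date
        totals[month] += row["amount"]

    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["month", "total_amount"])
    for month in sorted(totals):
        writer.writerow([month, f"{totals[month]:.2f}"])
    return buffer.getvalue()


# Illustrative input; a real job would read this from a database or file.
transactions = [
    {"date": "2024-01-05", "amount": 100.0},
    {"date": "2024-01-20", "amount": 50.0},
    {"date": "2024-02-03", "amount": 20.0},
]

report_csv = build_monthly_report(transactions)
print(report_csv)
```

Returning the CSV as text keeps the function easy to test; writing it to a file or emailing it can then be a separate, equally small step.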

Key Topics to Learn for Process Data Analysis and Reporting Interview

- Data Collection & Cleaning: Understanding various data sources, methods for data extraction, and techniques for handling missing or inconsistent data. Practical application: Cleaning and transforming raw data from a manufacturing process to identify bottlenecks.

- Descriptive Statistics & Data Visualization: Calculating key metrics (mean, median, standard deviation, etc.) and creating insightful visualizations (charts, graphs, dashboards) to communicate findings effectively. Practical application: Presenting key performance indicators (KPIs) using dashboards to monitor process efficiency.

- Process Improvement Methodologies: Familiarity with Lean, Six Sigma, or other process improvement methodologies and how data analysis supports process optimization. Practical application: Using statistical process control (SPC) charts to identify and address variations in a production line.

- Statistical Analysis & Hypothesis Testing: Applying statistical tests to analyze data, identify trends, and draw meaningful conclusions. Practical application: Determining if a new process improvement initiative has significantly reduced error rates.

- Data Storytelling & Reporting: Communicating complex data insights clearly and concisely through written reports and presentations. Practical application: Creating a compelling presentation summarizing findings and recommendations for process improvements to stakeholders.

- Data Mining & Predictive Modeling (Advanced): Exploring techniques for predicting future outcomes based on historical data (optional, depending on the seniority of the role). Practical application: Using regression analysis to forecast future demand based on historical sales data.

- Database Management Systems (DBMS): Understanding the fundamentals of relational databases (SQL) and data warehousing is crucial for efficient data retrieval and manipulation. Practical application: Writing SQL queries to extract specific data points for analysis.
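To practice the hypothesis testing topic above, it helps to work one test end to end. The sketch below runs a one-sided two-proportion z-test on error rates before and after a process change. The counts are invented for illustration, the function name is hypothetical, and the normal approximation it relies on assumes reasonably large samples.

```python
import math


def two_proportion_z_test(errors_before, n_before, errors_after, n_after):
    """One-sided z-test of H0: rates are equal vs H1: the 'before' rate is higher.

    Returns (z, p_value).
    """
    p_before = errors_before / n_before
    p_after = errors_after / n_after
    # Pooled error rate under the null hypothesis of no difference.
    pooled = (errors_before + errors_after) / (n_before + n_after)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_before + 1 / n_after))
    if standard_error == 0:
        raise ValueError("cannot test when the pooled rate is 0 or 1")
    z = (p_before - p_after) / standard_error
    # Upper-tail probability of the standard normal distribution.
    p_value = 0.5 * math.erfc(z / math.sqrt(2))
    return z, p_value


# Illustrative counts: 50 errors in 1000 units before, 30 in 1000 after.
z, p_value = two_proportion_z_test(50, 1000, 30, 1000)
print(f"z = {z:.2f}, one-sided p-value = {p_value:.4f}")
```

If the p-value falls below the significance level chosen in advance (0.05 is a common choice), the reduction is unlikely to be due to chance alone.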

Next Steps

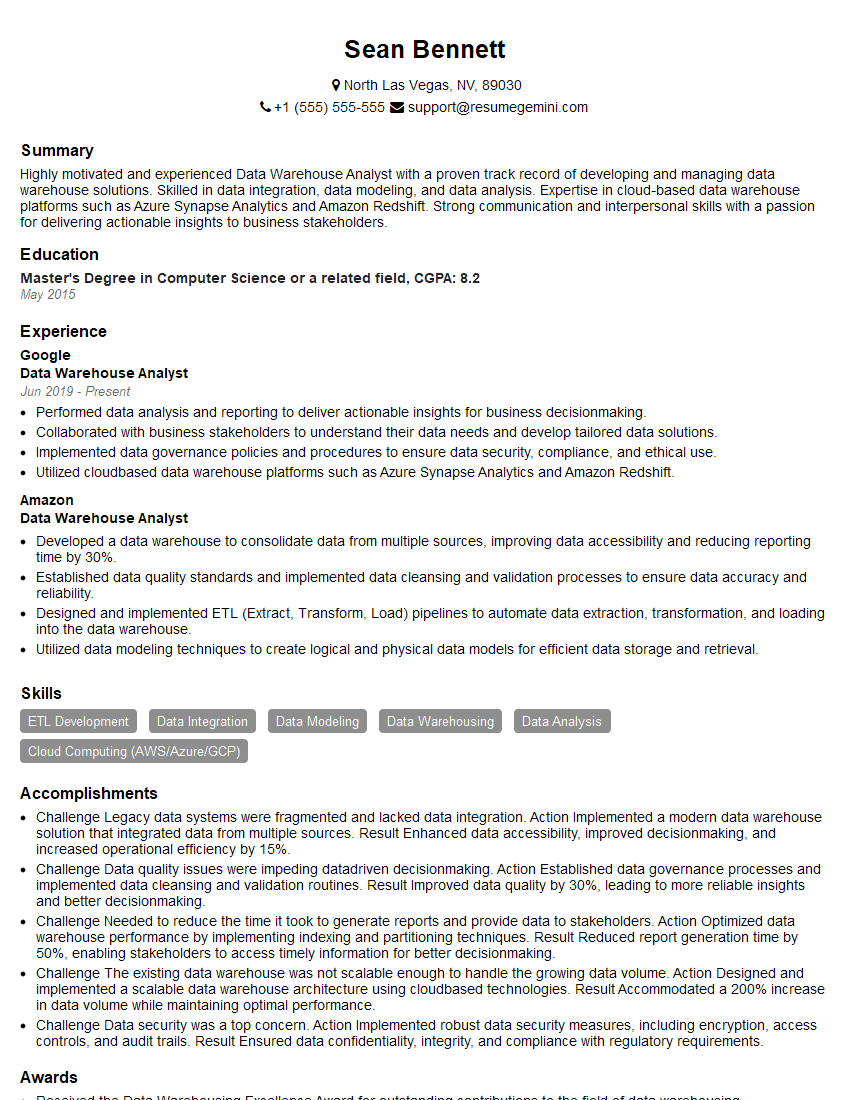

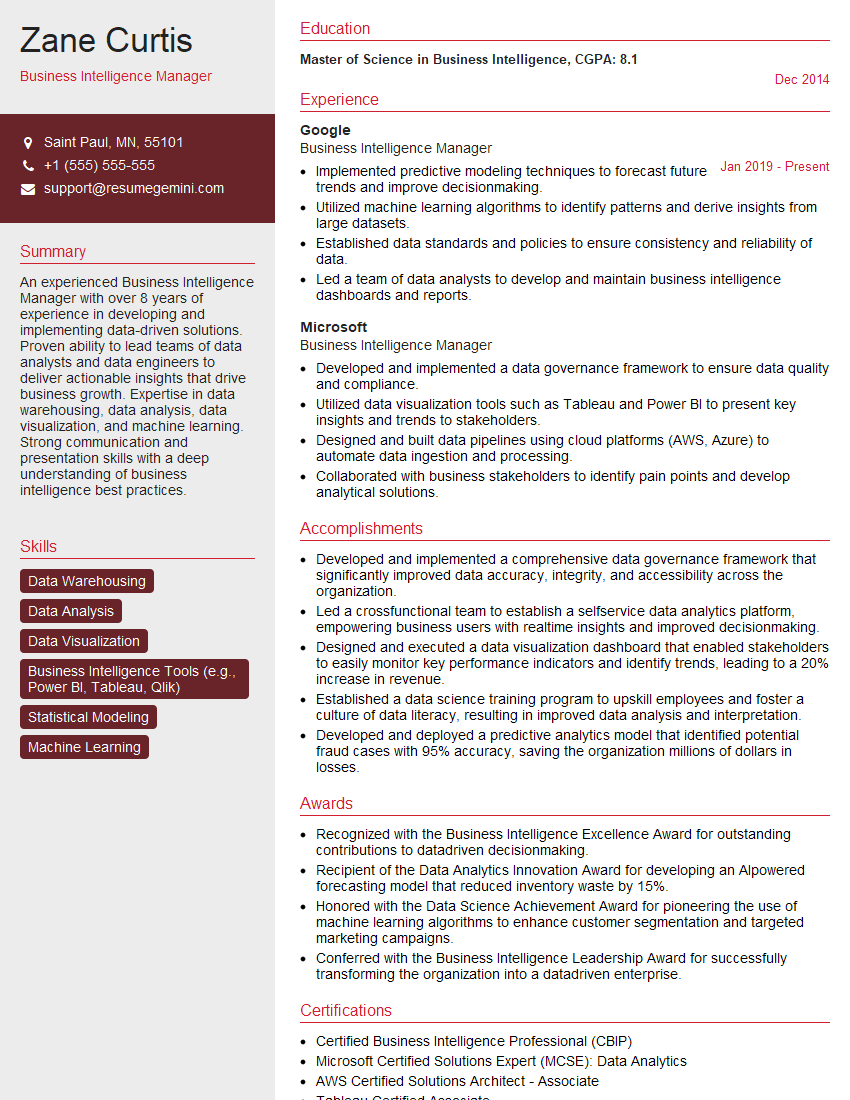

Mastering Process Data Analysis and Reporting is crucial for career advancement in today’s data-driven world. Strong analytical skills and the ability to communicate insights effectively are highly sought-after qualities. To significantly increase your job prospects, crafting an ATS-friendly resume is essential. ResumeGemini is a trusted resource to help you build a professional resume that highlights your skills and experience effectively. Examples of resumes tailored to Process Data Analysis and Reporting are available to guide you through the process. Take the next step and build a resume that showcases your potential!
