Every successful interview starts with knowing what to expect. In this blog, we’ll take you through the top 3D Animation Software interview questions, breaking them down with expert tips to help you deliver impactful answers. Step into your next interview fully prepared and ready to succeed.

Questions Asked in 3D Animation Software Interview

Q 1. Explain the difference between keyframing and tweening.

Keyframing and tweening are fundamental animation techniques in 3D software. Think of keyframing as setting the major poses or positions of an object or character at specific points in time. These are your ‘keyframes,’ defining the beginning and end points of a movement, and any crucial in-between moments. Tweening, on the other hand, is the process the software uses to automatically generate the frames between those keyframes, creating the illusion of smooth motion. It essentially fills in the gaps, interpolating the values (position, rotation, scale) between your keyframes.

For example, imagine animating a bouncing ball. You’d keyframe the ball at its highest point, then at the ground, and maybe a couple of points in between to control the arc. The software’s tweening algorithm would calculate all the frames in between, making the ball’s movement look natural. Without tweening, you would have to manually create every single frame, a tremendously time-consuming process.

The difference is crucial for efficiency. Keyframing provides control over the overall animation, defining the crucial moments. Tweening handles the tedious intermediate work, saving you significant time and effort. The choice of tweening type (linear, ease in, ease out, etc.) impacts the smoothness and feel of the animation.
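To make the relationship concrete, here is a minimal Python sketch of what a tweening step might look like. The function names, the keyframe format, and the bouncing-ball values are illustrative assumptions, not the API of any particular 3D package.

```python
from bisect import bisect_right


def linear(t: float) -> float:
    """Constant-speed interpolation."""
    return t


def ease_in_out(t: float) -> float:
    """Smoothstep easing: slow start, slow end."""
    return t * t * (3.0 - 2.0 * t)


def tween(keyframes, frame, easing=linear):
    """Interpolate a value at `frame` from sorted (frame, value) keyframes."""
    frames = [f for f, _ in keyframes]
    if frame <= frames[0]:
        return keyframes[0][1]
    if frame >= frames[-1]:
        return keyframes[-1][1]
    i = bisect_right(frames, frame)  # index of the first keyframe after `frame`
    (f0, v0), (f1, v1) = keyframes[i - 1], keyframes[i]
    t = (frame - f0) / (f1 - f0)     # normalized position between the two keys
    return v0 + (v1 - v0) * easing(t)


# Hypothetical bouncing-ball height keys: top, ground, top.
ball_height = [(0, 10.0), (12, 0.0), (24, 10.0)]

for frame in (0, 3, 6, 12):
    print(frame, tween(ball_height, frame), tween(ball_height, frame, ease_in_out))
```

Only three keyframes are authored by hand; every other frame comes from `tween`, and swapping the easing function changes the feel of the motion without touching the keys.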

Q 2. Describe your experience with various 3D modeling techniques (e.g., polygon modeling, NURBS modeling).

I’m proficient in both polygon modeling and NURBS modeling, each suited to different types of 3D assets. Polygon modeling involves creating 3D shapes using polygons (triangles, quads) – it’s ideal for hard-surface modeling like buildings, vehicles, or characters with defined, sharp edges. My experience includes using polygon modeling extensively to create detailed game assets, where optimizing polygon count for performance is vital. I’ve utilized tools like extrusion, beveling, and loop cuts to sculpt forms and refine details.

NURBS (Non-Uniform Rational B-Splines) modeling, conversely, allows for the creation of smooth, organic shapes. It’s excellent for creating curves, surfaces, and models requiring a high degree of precision and smoothness, such as characters, cars, or even architectural elements with flowing designs. I’ve used NURBS modeling to create sleek product designs and highly detailed characters requiring smooth deformations. The ability to manipulate control points offers great control over the final form. I’ve frequently switched between these techniques based on the project requirements, sometimes even combining them for optimal results.

Q 3. What are your preferred 3D animation software packages and why?

My preferred 3D animation software packages are Autodesk Maya and Blender. I choose Maya for its industry-standard tools and established workflow, particularly useful for large-scale productions requiring collaborative efforts and extensive plugin support. Its animation tools and character rigging capabilities are among the strongest available, allowing me to tackle challenging animation tasks smoothly.

Blender, on the other hand, is an incredible open-source alternative with powerful tools. I appreciate its versatility and cost-effectiveness, making it perfect for personal projects, experiments, and quick prototyping. Its integrated modeling, sculpting, animation, simulation, and rendering capabilities provide a complete pipeline in one package. The large and active community also means readily available resources and support.

Ultimately, my preference depends on the project’s scope, budget, and specific needs. Both programs have their strengths, and I’m comfortable working with either one.

Q 4. How do you approach creating realistic character animation?

Creating realistic character animation involves a multi-faceted approach. It begins with a solid understanding of anatomy and movement. I study reference material – videos, photographs, even observing people in real life – to capture the nuances of human movement and expression. This informs the posing and keyframing process, ensuring believable and natural-looking animation.

Beyond that, I pay close attention to weight distribution, physics, and secondary actions. Weight distribution dictates how a character moves and reacts to forces; correctly animating it is essential for realism. Secondary actions, like the subtle movement of clothing or hair, add another layer of realism and enhance the overall performance. Finally, I leverage advanced techniques such as motion capture data to create highly realistic movements, which I then refine and adjust in the software.

For example, animating a character walking involves more than simply moving their legs; it’s about animating the subtle sway of their arms, the bounce in their step, and the natural curvature of their spine. These details contribute to a believable and engaging animation.

Q 5. Explain your understanding of rigging and skinning.

Rigging and skinning are essential for creating animated characters. Rigging is the process of creating a skeleton or armature – a structure of bones and joints – inside the 3D model. This structure allows for the character’s movement, defining how different parts of the body can bend, twist, and rotate. The rig’s complexity depends on the character and animation requirements, ranging from simple rigs for basic movements to highly complex ones for detailed facial expressions and intricate actions.

Skinning, on the other hand, is the process of attaching the character’s surface geometry (the skin) to the underlying rig. This creates a connection between the bones and the mesh, so that when you move a bone in the rig, the corresponding area of the model deforms accordingly. This requires careful weight painting to ensure smooth and natural-looking deformations. Improper weight painting can lead to unnatural deformations, such as stretching or pinching of the skin.

Imagine it like building a puppet: the rig is the internal structure of sticks and joints, and skinning is the process of covering those sticks with fabric or material to create a realistic puppet.
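The role of weight painting can be illustrated with a small sketch of linear blend skinning, a common way to deform a mesh from bone transforms. This is a simplified 2D version under stated assumptions: each bone is just a pivot and a rotation angle, and the names are hypothetical rather than taken from any rigging toolkit.

```python
import math


def rotate_about(point, pivot, angle_deg):
    """Rotate a 2D point around a pivot by angle_deg (counter-clockwise)."""
    a = math.radians(angle_deg)
    dx, dy = point[0] - pivot[0], point[1] - pivot[1]
    return (pivot[0] + dx * math.cos(a) - dy * math.sin(a),
            pivot[1] + dx * math.sin(a) + dy * math.cos(a))


def skin_vertex(vertex, bones, weights):
    """Linear blend skinning: weighted sum of the vertex as moved by each bone.

    bones   -- list of (pivot, angle_deg) pairs (a toy stand-in for bone matrices)
    weights -- one weight per bone; they are expected to sum to 1
    """
    x = y = 0.0
    for (pivot, angle), w in zip(bones, weights):
        px, py = rotate_about(vertex, pivot, angle)
        x += w * px
        y += w * py
    return (x, y)


# Hypothetical arm: the upper arm stays still, the forearm bends 90 degrees at the elbow.
bones = [((0.0, 0.0), 0.0), ((1.0, 0.0), 90.0)]

print(skin_vertex((2.0, 0.0), bones, (0.0, 1.0)))  # wrist follows the forearm only
print(skin_vertex((1.5, 0.0), bones, (0.5, 0.5)))  # vertex near the elbow blends both bones
```

The blended vertex near the elbow lands between the two bone results, which is why poorly painted weights show up as pinching or stretching around joints.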

Q 6. Describe your experience with different types of shaders.

My experience with shaders encompasses various types, from simple diffuse shaders to complex physically-based rendering (PBR) shaders. Diffuse shaders define the basic color and reflectivity of a surface. They’re simple to use but lack realism in terms of lighting and material interactions.

PBR shaders, conversely, model the material’s properties much more accurately. They use parameters such as roughness and metalness, along with inputs like normal maps, to simulate how light interacts with the material. I’ve extensively used PBR shaders to create realistic-looking materials like wood, metal, and skin. These shaders are essential for creating photorealistic renders. Other shaders I have experience with include subsurface scattering shaders (for materials like skin and wax), emissive shaders (for self-illuminating objects), and displacement shaders (for adding high-frequency detail to surfaces).

The selection of a shader depends entirely on the desired visual effect and the level of realism required. For example, a cartoon render might use a simple diffuse shader, while a realistic film render would almost certainly use PBR shaders.
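As a rough illustration of the gap between the two, the sketch below computes a Lambert diffuse term and two building blocks often found in metallic-roughness PBR models: a base reflectance blended by the metallic value, and Schlick's Fresnel approximation. The 0.04 dielectric reflectance is a widely used convention that is assumed here, and the function names are made up for this example.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lambert(base_color, normal, light_dir):
    """Simple diffuse shading: color scaled by the cosine of the light angle."""
    n_dot_l = max(0.0, dot(normalize(normal), normalize(light_dir)))
    return tuple(c * n_dot_l for c in base_color)


def base_reflectance(base_color, metallic, dielectric_f0=0.04):
    """Blend between a fixed dielectric reflectance and the base color (assumed convention)."""
    return tuple(dielectric_f0 * (1.0 - metallic) + c * metallic for c in base_color)


def schlick_fresnel(f0, cos_theta):
    """Schlick's approximation: reflectance rises toward 1 at grazing angles."""
    return tuple(f + (1.0 - f) * (1.0 - cos_theta) ** 5 for f in f0)


gold = (1.0, 0.8, 0.3)  # hypothetical base color
print(lambert(gold, (0, 0, 1), (0, 1, 1)))
print(schlick_fresnel(base_reflectance(gold, metallic=1.0), cos_theta=0.5))
```

The diffuse function only needs a color and a light direction, while the PBR pieces already depend on material parameters and viewing angle, which is where the extra realism comes from.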

Q 7. How do you manage large, complex 3D scenes?

Managing large, complex 3D scenes requires a structured approach. One of the key strategies is to use proper organization. This involves grouping objects logically, using layers effectively, and employing a naming convention that’s clear and consistent. This makes navigation and selection much easier.

Optimizing geometry and reducing polygon counts is crucial for performance. High-poly models suitable for close-ups can be swapped out with lower-poly versions for distant shots. Level of Detail (LOD) systems automate this process, dynamically switching between different levels of detail based on camera distance. Furthermore, using proxies and instancing significantly reduces scene complexity, especially when dealing with repetitive elements like trees or grass.

Lastly, utilizing efficient rendering techniques, such as rendering passes and optimizing render settings, is essential. It’s also beneficial to leverage render layers to separate elements and composite them later, providing more flexibility in the post-production process. Regularly backing up your work is also a critical aspect of managing such scenes, mitigating potential data loss.
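The LOD idea mentioned above can be sketched in a few lines of Python. The distance thresholds and mesh names are hypothetical; real engines and DCC tools expose their own LOD settings.

```python
import math
from bisect import bisect_right

# Hypothetical switch distances (scene units) and the mesh used beyond each one.
LOD_DISTANCES = [10.0, 50.0, 200.0]
LOD_MESHES = ["tree_lod0", "tree_lod1", "tree_lod2", "tree_billboard"]


def pick_lod(camera_pos, object_pos):
    """Choose a mesh variant from the camera-to-object distance."""
    distance = math.dist(camera_pos, object_pos)
    # bisect_right counts how many thresholds the distance has reached or passed.
    return LOD_MESHES[bisect_right(LOD_DISTANCES, distance)]


camera = (0.0, 0.0, 0.0)
for z in (5.0, 30.0, 120.0, 500.0):
    print(z, pick_lod(camera, (0.0, 0.0, z)))
```

Keeping the thresholds in a sorted list makes it easy to tune them per asset without changing the selection logic.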

Q 8. What is your process for creating realistic lighting and shadows?

Creating realistic lighting and shadows involves a multi-step process that goes beyond simply placing lights in a scene. It’s about understanding the interplay of light sources, materials, and the environment to achieve a believable result. My approach usually begins with a thorough understanding of the scene’s mood and time of day. I’ll consider the primary light source (e.g., sun, lamp) and then add secondary and tertiary sources to create depth and realism.

Light Source Selection and Placement: I experiment with different light types – area lights, point lights, spotlights – each providing unique characteristics. For instance, area lights simulate soft, diffused light, while spotlights create focused beams. Their placement is crucial; a sun directly overhead will cast different shadows than a low-hanging sun.

Shadow Properties: I carefully adjust shadow parameters to achieve the desired effect. This includes adjusting shadow softness (penumbra), color, and distance to simulate atmospheric effects. For example, soft shadows contribute to a warm, inviting atmosphere, while hard shadows add a dramatic feel.

Global Illumination (GI): GI methods, such as radiosity or photon mapping, are key to creating realistic indirect lighting. This simulates the way light bounces off surfaces, creating subtle illumination in areas not directly lit. It’s like how light bounces around a room, making dimly lit corners slightly brighter.

Material Properties: The surface properties of the objects in the scene heavily influence how light interacts with them. I use physically-based rendering (PBR) techniques, ensuring accurate reflectance, roughness, and metallic values for each material. A shiny metal will reflect light differently than a matte wooden surface.

Ambient Occlusion: This technique simulates the darkening of areas where surfaces are close together, adding realism to crevices and corners. Imagine the shadow in the corner of a room where two walls meet.

For example, when recreating a sunset scene, I’d start with a large area light simulating the sun, add smaller area lights representing the sky glow, and use volumetric lighting to create realistic atmospheric scattering. I would then carefully tune shadow parameters to create long, soft shadows stretching across the landscape, mimicking the time of day.
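The link between light size and shadow softness can be estimated with similar triangles. The sketch below is a simplified model that assumes a flat light, occluder edge, and receiver that are all parallel; the function name and parameters are illustrative.

```python
def penumbra_width(light_size, occluder_distance, receiver_distance):
    """Approximate penumbra width from similar triangles.

    Distances are measured from the light. A larger light, or a larger gap
    between occluder and receiver, produces a wider (softer) shadow edge.
    """
    if not 0 < occluder_distance <= receiver_distance:
        raise ValueError("expected 0 < occluder_distance <= receiver_distance")
    return light_size * (receiver_distance - occluder_distance) / occluder_distance


print(penumbra_width(light_size=2.0, occluder_distance=4.0, receiver_distance=6.0))
print(penumbra_width(light_size=0.0, occluder_distance=4.0, receiver_distance=6.0))
```

A light of size zero (an ideal point light) gives a zero-width penumbra, which matches the hard shadows described above.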

Q 9. Explain your understanding of UV mapping and texturing.

UV mapping and texturing are fundamental to adding detail and visual appeal to 3D models. UV mapping is the process of projecting a 3D model’s surface onto a 2D plane, creating a ‘UV map’. This 2D map then acts as a canvas for applying textures, which are images providing color, detail, and surface properties. Think of it like wrapping a present – the flat wrapping paper (UV map) is carefully applied to the 3D gift (model).

UV Mapping Techniques: Various techniques exist, including planar mapping (simple projection), cylindrical mapping, and spherical mapping. The choice depends on the model’s geometry and desired results. Complex models often require advanced unwrapping techniques to minimize distortion and seams.

Texture Creation and Application: Once the UV map is created, I use texture painting software or import existing textures. These can be simple solid colors or highly detailed photorealistic images. The process involves carefully selecting appropriate textures based on the model’s material and desired aesthetic. For example, a wooden crate would use a wood texture, whereas a metal surface would employ a metallic texture.

Texture Resolution and Optimization: Higher resolution textures offer more detail but increase file sizes. It’s crucial to balance detail with performance, especially in real-time applications. Techniques like tiling and normal mapping can create the illusion of high detail with lower resolution textures.

Normal Maps and other techniques: To create more realistic detail without excessively increasing polygon count, techniques such as normal mapping, displacement mapping, and ambient occlusion maps are used. A normal map adds surface detail (bumps, scratches) to a model, allowing artists to simulate depth without requiring many extra polygons.

For example, in creating a character, I would meticulously unwrap the model’s clothing and skin into separate UV maps, minimizing distortion. I would then paint detailed textures for each, perhaps using a photorealistic skin texture and a fabric texture for the clothing, carefully adjusting the UV seams to hide any visual imperfections.
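The planar and spherical projections mentioned earlier can be sketched as follows. The axis choices and the orientation of the UV square are assumptions made for this example; each package defines its own conventions.

```python
import math


def planar_uv(vertices):
    """Project (x, y, z) vertices along Z and normalize X/Y into the 0-1 UV square."""
    xs = [v[0] for v in vertices]
    ys = [v[1] for v in vertices]
    min_x, min_y = min(xs), min(ys)
    width = (max(xs) - min_x) or 1.0   # avoid dividing by zero on flat input
    height = (max(ys) - min_y) or 1.0
    return [((x - min_x) / width, (y - min_y) / height) for x, y, _ in vertices]


def spherical_uv(vertex):
    """Map a non-origin point to UVs from its longitude (u) and latitude (v)."""
    x, y, z = vertex
    r = math.sqrt(x * x + y * y + z * z)
    u = 0.5 + math.atan2(z, x) / (2.0 * math.pi)
    v = 0.5 + math.asin(max(-1.0, min(1.0, y / r))) / math.pi
    return (u, v)


print(planar_uv([(0, 0, 0), (2, 4, 1), (1, 2, 5)]))
print(spherical_uv((1.0, 0.0, 0.0)))
```

Planar projection ignores depth entirely, which is why it distorts on curved surfaces and why complex models usually need a proper unwrap instead.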

Q 10. How do you troubleshoot technical issues in your animation pipeline?

Troubleshooting in an animation pipeline requires a systematic approach. My process involves identifying the issue, isolating the cause, and implementing a solution. I usually follow these steps:

Identify the Problem: Carefully document the error, including any error messages, specific circumstances when it occurs, and the expected vs. actual outcome.

Isolate the Source: This might involve checking individual components of the pipeline (modeling, rigging, animation, rendering). I systematically disable or isolate parts of the scene or workflow to pinpoint the problematic area. The process is often iterative.

Consult Resources: This includes reviewing documentation, searching online forums, consulting with colleagues, or contacting software support. Sometimes, the error message itself will lead to a solution.

Experiment with Solutions: Based on the research, I try potential fixes. This could involve adjusting settings, reinstalling software, recompiling shaders, or even simplifying parts of the model to test if a specific component is the source.

Version Control: I utilize version control systems like Git to track changes and revert to previous working states if necessary. This ensures that troubleshooting doesn’t cause irreversible damage.

Testing and Validation: Once a fix is implemented, thorough testing is crucial to ensure the problem is resolved without introducing new issues.

For instance, if rendering suddenly stops, I might first check render settings, then RAM usage, and finally investigate the scene’s complexity to see if it exceeds system capabilities. If textures appear corrupted, I’d check file paths, texture resolution, and potentially the UV map for errors.
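The file-path check for corrupted or missing textures is easy to automate. The helper below is a minimal sketch; how the list of texture paths is gathered from a scene depends on the software and is not shown, and the example paths are hypothetical.

```python
from pathlib import Path


def find_missing_textures(texture_paths):
    """Return the paths that do not point to an existing file on disk."""
    return [p for p in texture_paths if not Path(p).is_file()]


# Hypothetical paths collected from a scene's materials.
scene_textures = ["textures/crate_wood.png", "textures/crate_metal.png"]
for path in find_missing_textures(scene_textures):
    print("missing texture:", path)
```

Running a check like this before a render rules out one common cause early, so the remaining investigation can focus on resolution or UV problems.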

Q 11. What are your preferred methods for creating realistic hair and fur?

Creating realistic hair and fur is a computationally intensive task demanding specialized techniques. My preferred methods involve a combination of techniques depending on the desired level of realism and performance requirements.

Hair and Fur Systems: Most 3D software packages include built-in hair and fur systems that use particle simulations. These systems allow for creating individual strands or fibers, which are then dynamically simulated to react to gravity, wind, and other forces. The parameters controlling strand length, thickness, curl, and clumping are crucial for achieving realism.

Grooming Tools: Dedicated grooming tools within these systems allow for combing, styling, and shaping the hair to create specific hairstyles. This often involves combing and brushing simulations to control the overall look.

Advanced Shading Techniques: Achieving realism also requires advanced shading techniques, like subsurface scattering (SSS). SSS simulates how light penetrates beneath the hair surface, giving it a translucent and lifelike appearance. Proper texturing is also critical, mimicking the way individual hairs reflect light.

Optimization Techniques: Hair and fur simulations can be very demanding on computing resources. Techniques like hair cards (flat planes mimicking hair clumps) and level of detail (LOD) systems are often used to optimize performance for real-time rendering.

For example, creating a character’s flowing hair might involve simulating thousands of individual strands using a hair particle system. I would then use grooming tools to style the hair, apply realistic shading with SSS, and optimize the hair simulation for the project’s requirements. I might use hair cards for less-detailed areas, like the background, to conserve resources and maintain a realistic look.

Q 12. Describe your experience with particle systems and FX simulation.

Particle systems and FX simulation are essential for creating realistic effects such as fire, smoke, water, explosions, and other dynamic phenomena. My experience spans various techniques and software, ensuring I can create visually compelling effects tailored to project needs.

Particle Simulation Techniques: Understanding different particle behaviors is key. This includes how particles react to forces like gravity, wind, and collisions. Creating believable fluid simulations often requires advanced techniques such as SPH (Smoothed Particle Hydrodynamics) or FLIP (Fluid Implicit Particle) methods.

Emitter and Modifier Settings: Carefully adjusting emitter parameters (such as particle rate, lifetime, size, and initial velocity) significantly impacts the final effect. Modifiers can then shape and control particle behavior over time, influencing their movement and interactions. For example, a vortex modifier can create swirling patterns in smoke or water.

Rendering Techniques: Volumetric rendering is essential for creating realistic smoke and fire effects. This technique renders the particles as volumes, allowing for the simulation of light scattering and absorption within the particles. This contrasts with surface rendering, which only renders the particle’s outer surface.

Software and Plugins: Various software packages and plugins offer advanced capabilities in FX simulation. My familiarity with these tools enables me to optimize the simulations for the best visual outcome.

For example, in creating a fire effect, I’d use a particle emitter to generate particles, apply a heat source and wind forces to guide their movement, and use volumetric rendering to create realistic flame and smoke. I might also use a turbulence modifier to make the fire less uniform, creating more randomness in its behavior.
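The emitter, force, and lifetime concepts above can be shown with a tiny particle update loop. This is a bare-bones sketch using simple Euler-style integration; the class, the parameter names, and the crude turbulence term are assumptions for illustration and do not reflect any specific FX package.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    pos: list
    vel: list
    age: float = 0.0


def emit(count, speed, rng):
    """Spawn particles at the origin with upward, slightly randomized velocities."""
    return [Particle([0.0, 0.0, 0.0],
                     [rng.uniform(-0.2, 0.2) * speed, speed, rng.uniform(-0.2, 0.2) * speed])
            for _ in range(count)]


def step(particles, dt, gravity=(0.0, -9.81, 0.0), lifetime=2.0, turbulence=0.0, rng=None):
    """Advance all particles by dt and drop the ones older than `lifetime`."""
    alive = []
    for p in particles:
        p.age += dt
        if p.age > lifetime:
            continue
        for i in range(3):
            # Crude turbulence: a random velocity nudge per axis.
            jitter = rng.uniform(-turbulence, turbulence) if (rng and turbulence) else 0.0
            p.vel[i] += (gravity[i] + jitter) * dt
            p.pos[i] += p.vel[i] * dt
        alive.append(p)
    return alive


rng = random.Random(7)
particles = emit(100, speed=3.0, rng=rng)
for _ in range(30):
    particles = step(particles, dt=1 / 30, turbulence=2.0, rng=rng)
print(len(particles), "particles alive after one second")
```

Emitter settings live in `emit`, while forces and lifetime live in `step`, which mirrors the emitter/modifier split described above.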

Q 13. How familiar are you with motion capture data and its integration into animation?

Motion capture (mocap) data significantly enhances realism in character animation by providing a foundation of realistic human or animal movement. My experience involves acquiring, cleaning, and integrating mocap data into animation pipelines. This includes:

Data Acquisition: I understand the process of mocap data acquisition, whether using optical, inertial, or magnetic systems. Different systems offer varying levels of accuracy and are suited for different applications.

Data Cleaning and Retargeting: Raw mocap data often requires cleaning and retargeting. This involves removing noise, fixing inconsistencies, and transferring the motion data from the mocap rig to the 3D character model. This is crucial for achieving a natural look.

Integration with Animation Software: I’m proficient in importing and integrating mocap data into popular 3D animation software. This includes properly mapping the mocap data to the character rig’s joints and adjusting the data to fit the animation requirements.

Blending and Editing: Mocap data is often a starting point, and manual animation is usually used to refine the motion and adjust it to better fit the scene’s context. Blending between mocap and keyframe animation is a common practice.

For instance, in animating a fight scene, I might use mocap data to capture the realistic movements of martial artists, then refine the animation using keyframe animation to improve the timing and add stylistic touches. Retargeting would map that data to my character rig, ensuring accurate movement.
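Noise removal during cleanup can be as simple as smoothing each animation channel. The sketch below uses a centered moving average, which is one basic option and an assumption on my part; production tools typically offer more selective filters that preserve sharp, intentional motion.

```python
def smooth_channel(samples, radius=2):
    """Centered moving average over one mocap channel (e.g. a joint's rotation in X).

    The window shrinks near the start and end so the clip keeps its length.
    """
    smoothed = []
    for i in range(len(samples)):
        lo = max(0, i - radius)
        hi = min(len(samples), i + radius + 1)
        window = samples[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed


# Hypothetical per-frame elbow rotation with a one-frame spike.
noisy = [10.0, 10.5, 11.0, 25.0, 12.0, 12.5, 13.0]
print(smooth_channel(noisy, radius=1))
```

A larger radius removes more jitter but also softens fast actions such as punches, so the value is usually tuned per clip or per joint.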

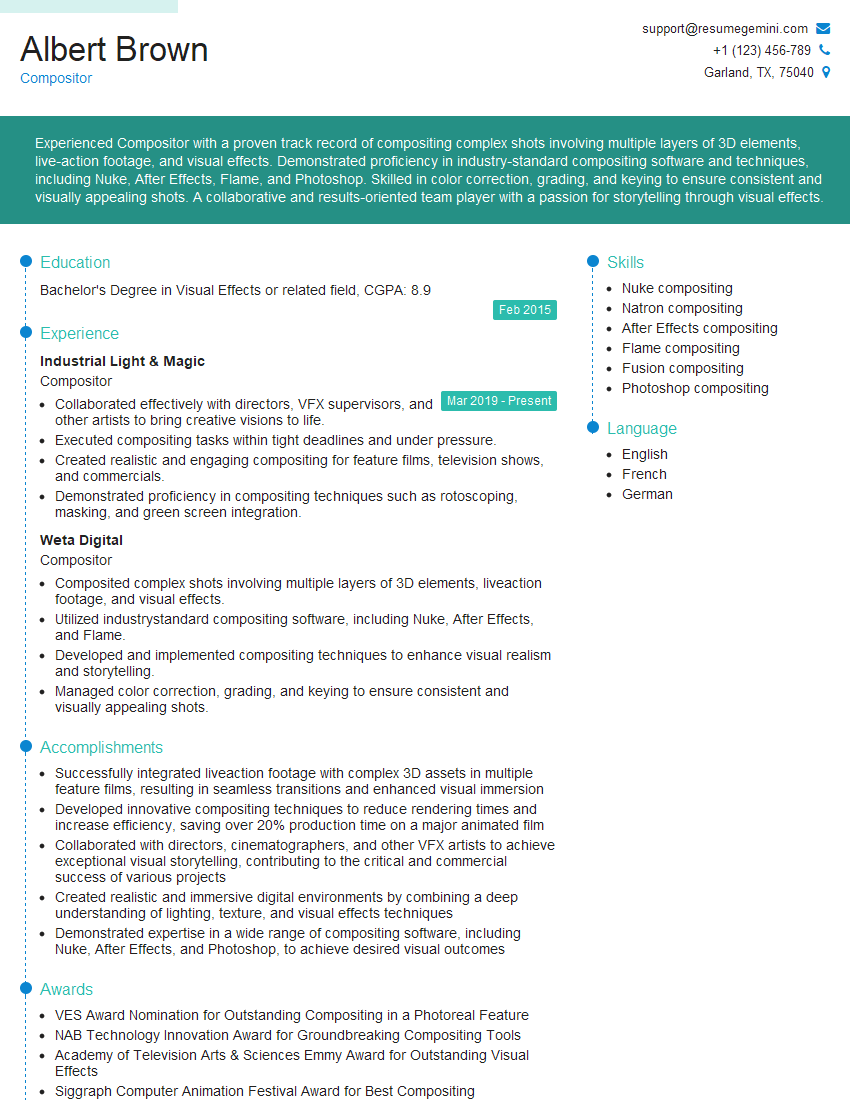

Q 14. Explain your experience with compositing and post-processing techniques.

Compositing and post-processing are crucial steps in finalizing the animation, adding final polish and enhancing the visual quality. My experience encompasses a wide range of techniques used to blend elements from various render passes, adjust color, and add visual effects.

Compositing Software: I’m proficient with compositing software like Nuke or After Effects, understanding how to blend different render layers (e.g., background, character, effects) to create a unified image. This involves techniques like keying, rotoscoping, and color correction.

Color Grading and Correction: I can adjust color and contrast to achieve a consistent look and feel across the entire animation, correcting for lighting inconsistencies or matching the overall tone to the project’s style. Techniques such as curves and color wheels are frequently employed.

Adding Visual Effects: Post-processing includes adding final visual effects like lens flares, glows, and other finishing touches. This step helps refine the visual impact and enhance the overall aesthetic.

Depth of Field and other effects: Techniques like depth of field, motion blur, and chromatic aberration can be added to enhance realism and create a cinematic feel. These effects help give the animation more depth and realism.

For example, I might composite a character rendered in 3D onto a live-action background, carefully matching the lighting and color to create a seamless blend. Then, I would use color grading to adjust the overall mood and contrast, potentially adding lens flares and subtle motion blur to enhance the realism. These steps add that professional ‘final polish’ to complete the animation.
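Layering a rendered character over a background comes down to the "over" operation. The sketch below shows it for a single pixel and assumes premultiplied-alpha RGBA values in the 0 to 1 range; the function name is illustrative.

```python
def over(fg, bg):
    """Composite a premultiplied RGBA foreground pixel over a background pixel.

    Each channel, including alpha, is fg + bg * (1 - fg_alpha).
    """
    fg_alpha = fg[3]
    return tuple(f + b * (1.0 - fg_alpha) for f, b in zip(fg, bg))


character = (0.5, 0.0, 0.0, 0.5)   # half-transparent red, premultiplied
background = (0.0, 0.0, 1.0, 1.0)  # opaque blue
print(over(character, background))
```

Where the foreground is fully transparent the background passes through untouched, and where it is fully opaque the background is hidden.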

Q 15. Describe your workflow for creating a short animation from concept to final render.

My animation workflow is an iterative process, refining the visuals and story until the final render. It starts with conceptualization: sketching ideas and building a storyboard to visualize the narrative flow. Then comes modeling, where I create the 3D assets (characters, environments, props) using software like Maya or Blender. Next is rigging, giving the models an internal skeleton for animation.

I then proceed to animation itself, meticulously keyframing the poses and movements, often using animation curves in the graph editor to fine-tune the timing and smoothness. After animation comes texturing, adding color, detail, and surface properties to make the assets look realistic or stylized, depending on the project’s needs. Lighting is crucial: I carefully arrange lights and shaders to achieve the desired mood and visual appeal, often experimenting with different lighting setups to get the right look. Finally, rendering takes place, where the software processes all the data to create the final image sequence.

Throughout this entire process, I constantly iterate, reviewing and refining each stage before moving on to the next, utilizing feedback and tests to ensure quality. For example, during the lighting phase, I might render test animations using lower settings to quickly assess different lighting styles without waiting for a full high-resolution render.

Q 16. What is your experience with version control systems for 3D assets?

Version control is fundamental for collaborative projects and preventing data loss. I’ve extensively used Git and Perforce, alongside production-tracking tools like ShotGrid (formerly Shotgun). My approach involves creating separate branches for different tasks, like modeling a character or animating a specific scene. This allows multiple artists to work concurrently without overwriting each other’s progress. Regular commits with descriptive messages track changes precisely. This detailed record allows easy rollback to previous versions if needed, which is invaluable for rectifying errors or exploring alternative designs. For example, I might branch to create a new version of a character model with different textures, and only merge it back into the main branch after testing and approval. I believe this system maintains project organization and facilitates efficient collaborative workflows.

Q 17. How do you optimize 3D models and animations for performance?

Optimizing 3D models and animations is crucial for smooth playback and efficient rendering. For models, I focus on polygon reduction: lowering the polygon count without significantly impacting visual quality, using techniques like decimation and retopology. I also optimize textures, using smaller image sizes and compression where they don’t visibly compromise fidelity. For animations, reducing the number of keyframes without compromising the fluidity of movement is important. Motion capture data in particular is usually keyed on every frame, so simplifying its curves can shrink files considerably, and procedural animation can replace baked keys altogether where the motion is rule-driven. Furthermore, I leverage level of detail (LOD) systems, switching to simpler models at greater distances to reduce rendering load. This is essential in large environments. For instance, in a scene with a vast landscape, I might use high-detail models for close-up shots but switch to lower-poly versions for distant views. Constant testing on different hardware configurations is critical to ensure performance across various systems.
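Keyframe reduction can be sketched as a curve simplification pass. The version below works in the spirit of the Ramer-Douglas-Peucker algorithm but measures error as the vertical difference in value, and it assumes the reduced keys will be linearly interpolated; both are simplifying assumptions, and the function name is hypothetical.

```python
def simplify_keys(keys, tolerance):
    """Drop keys that linear interpolation between kept keys reproduces within tolerance.

    keys -- sorted list of (frame, value) pairs
    """
    if len(keys) < 3:
        return list(keys)
    (f0, v0), (f1, v1) = keys[0], keys[-1]
    worst_index, worst_error = 0, 0.0
    for i in range(1, len(keys) - 1):
        frame, value = keys[i]
        predicted = v0 + (v1 - v0) * (frame - f0) / (f1 - f0)
        error = abs(value - predicted)
        if error > worst_error:
            worst_index, worst_error = i, error
    if worst_error <= tolerance:
        return [keys[0], keys[-1]]           # the straight line is good enough
    # Keep the worst offender and simplify each side independently.
    left = simplify_keys(keys[:worst_index + 1], tolerance)
    right = simplify_keys(keys[worst_index:], tolerance)
    return left[:-1] + right


baked = [(0, 0.0), (1, 1.0), (2, 2.0), (3, 1.0), (4, 0.0)]
print(simplify_keys(baked, tolerance=0.01))
```

Raising the tolerance removes more keys at the cost of accuracy, so it is worth comparing the simplified curve against the original on fast or subtle motion.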

Q 18. How do you handle feedback and critiques during the animation process?

Handling feedback and critiques is an integral part of the creative process. I actively solicit feedback throughout the pipeline, from initial concept sketches to final renders. I find it useful to have regular reviews with clients or team members, where we discuss the progress, addressing specific concerns and exploring alternative approaches. I treat constructive criticism as an opportunity to enhance the project. I visualize feedback using annotations on screenshots or directly in the software, creating a clear record of the revisions. This ensures that everyone is on the same page and promotes open communication. For instance, if feedback suggests a change in character expression, I may adjust the animation by modifying keyframes and re-rendering a short section for immediate feedback and review.

Q 19. What are your preferred methods for creating realistic water or smoke effects?

Realistic water and smoke effects are usually achieved through a combination of techniques. For water, I frequently use fluid simulation tools within my 3D software, defining parameters like density, viscosity, and surface tension to control the behavior. This allows for dynamic and realistic movements of water, which can be further enhanced by adding realistic shaders, with features like refraction and reflections to mimic the way light interacts with the water. For smoke, I utilize similar simulation tools, defining the source and behavior of the smoke particles. The density and opacity of the smoke are adjusted to create visual variations. Using volume rendering techniques enhances realism. Both water and smoke often benefit from the use of particle systems, adding details such as splashes or wisps of smoke, adding dynamism and realism. For example, simulating a waterfall involves careful setup of fluid dynamics, mesh resolution, and particle effects to achieve the appropriate cascading, foam, and spray.

Q 20. Describe your experience with different render engines (e.g., Arnold, V-Ray, Cycles).

I have extensive experience with various render engines, including Arnold, V-Ray, and Cycles. Arnold excels at producing high-quality photorealistic images and is particularly strong in handling complex lighting scenarios and detailed materials. V-Ray also offers excellent photorealism and is renowned for its flexibility and broad integration with different 3D packages. Cycles, Blender’s integrated renderer, is a path-traced renderer providing excellent control over light interactions and materials. I choose a render engine based on the project’s specific requirements. For example, for fast turnaround on a Blender project with simpler geometry, Cycles might be my preferred choice due to its tight integration with Blender and its GPU rendering support. However, for projects requiring exceptionally high realism and complex effects in a studio pipeline, Arnold or V-Ray would be more appropriate, even with longer render times.

Q 21. Explain your understanding of color spaces and color management.

Understanding color spaces and color management is crucial for maintaining visual consistency throughout the animation pipeline. Color spaces define the range of colors that can be represented digitally. Common spaces include sRGB (for screens) and Adobe RGB (often used for print work). Color management ensures that colors are accurately translated from one color space to another, avoiding unexpected shifts in hue and saturation. Inaccurate color management can lead to inconsistencies between the screen display, the final render, and the printed output. For example, if a scene is rendered in a wide gamut color space (like Adobe RGB) and the final output is for an sRGB screen, colors can appear oversaturated or desaturated unless they are converted properly. A well-defined color workflow, involving profiles for each device, ensures accurate color representation across the entire process. Most 3D and compositing packages include a color management system for this; Maya, Blender, and Nuke, for example, support OpenColorIO configurations.
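One concrete piece of a color-managed workflow is the sRGB transfer function, which converts between linear light values (what a renderer computes) and the encoded values stored for display. The sketch below implements the standard piecewise sRGB curve for a single channel; it does not perform gamut conversion between color spaces, which requires a matrix transform as well.

```python
def linear_to_srgb(c: float) -> float:
    """Encode a linear-light channel value (0-1) with the sRGB transfer function."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def srgb_to_linear(c: float) -> float:
    """Decode an sRGB-encoded channel value (0-1) back to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


mid_gray_linear = 0.18
encoded = linear_to_srgb(mid_gray_linear)
print(encoded, srgb_to_linear(encoded))
```

Skipping this step, or applying it twice, is a common reason a render looks washed out or too dark when it moves between applications.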

Q 22. How do you create convincing character lip-sync?

Convincing character lip-sync is crucial for believable animation. It involves precisely matching mouth movements to audio, creating a seamless and natural illusion. This process goes beyond simply opening and closing the mouth; it requires understanding phonetics, facial muscle articulation, and the nuances of expression.

The process typically begins with recording high-quality audio. Then, in 3D software like Autodesk Maya or Blender, we create a facial rig (joints, blend shapes, or both) for the character’s head and face, allowing for precise control of individual facial movements. We then work with visemes (pre-defined mouth shapes corresponding to different sounds) and auto lip-sync tools, which analyze the audio and automatically suggest corresponding mouth shapes. However, manual adjustments are almost always necessary to refine the animation and add realism, paying close attention to subtle details like tongue movement and jaw position. For example, the ‘f’ sound requires a different lip position than the ‘b’ sound, and these differences must be accurately reflected in the animation. Finally, we review and refine the lip-sync multiple times, often working in conjunction with the audio engineer to ensure the lip movements align perfectly with the audio’s rhythm and emotional content.
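The first automatic pass can be pictured as a lookup from timed phonemes to visemes. In the sketch below, the phoneme labels, viseme names, and timings are all hypothetical; real tools use their own phoneme sets and usually blend between shapes rather than switching abruptly.

```python
# Hypothetical phoneme-to-viseme table; several sounds share one mouth shape.
PHONEME_TO_VISEME = {
    "B": "MBP", "P": "MBP", "M": "MBP",   # lips pressed together
    "F": "FV", "V": "FV",                 # lower lip against upper teeth
    "AA": "AA",                           # open jaw
    "OW": "O", "UW": "O",                 # rounded lips
}


def viseme_track(phonemes, default="rest"):
    """Turn (time, phoneme) pairs into (time, viseme) keys, merging repeats."""
    track = []
    for time, phoneme in phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme, default)
        if not track or track[-1][1] != viseme:
            track.append((time, viseme))
    return track


timed_phonemes = [(0.00, "B"), (0.08, "AA"), (0.20, "F"), (0.27, "V")]
print(viseme_track(timed_phonemes))
```

The resulting keys are only a starting point; the manual pass then adjusts timing, holds, and intensity so the mouth reads naturally with the performance.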

Q 23. What is your experience with creating realistic cloth and fabric simulations?

I have extensive experience with realistic cloth and fabric simulation, using both procedural and physically based techniques. Procedural techniques use pre-programmed rules to define how the fabric moves, which can be efficient but sometimes lack realism. Physically based simulations, on the other hand, model the actual physics of fabric, such as its weight, stiffness, and resistance to bending and stretching, leading to far more lifelike results. I’m proficient with the built-in simulation tools of several packages, including Maya’s nCloth and Blender’s cloth simulation.

For example, in a recent project involving a character wearing a flowing cape, I used physically-based simulation to achieve realistic drapery and movement. I carefully adjusted parameters such as the fabric’s weight, stiffness, and air resistance to achieve the desired level of realism. I also used collision detection to ensure the cape interacted naturally with the character’s body and the environment. Understanding the limitations of the software is crucial – overly complex simulations can be computationally expensive, so optimizing the simulation parameters is always necessary. Dealing with self-collisions (parts of the cloth interacting with itself) requires attention and often the use of collision groups and other techniques to resolve potential issues.
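
To show what the weight, stiffness, and air-resistance parameters actually do, here is a minimal Python sketch of a physically based simulation: a single pinned strand of cloth particles using Verlet integration and distance constraints. It is a toy model under stated assumptions, not how nCloth or Blender's solver works internally. The function name and parameters are hypothetical, `damping` stands in for air resistance, and `iterations` acts as a rough stiffness control.

```python
def simulate_strand(n=6, rest=0.2, steps=200, dt=1 / 60,
                    gravity=-9.81, damping=0.02, iterations=20):
    """Simulate a strand of n particles pinned at particle 0.

    Returns the final list of [x, y] positions. More constraint iterations
    make the strand stiffer (less stretch); more damping mimics air drag.
    """
    # Start horizontal so the strand swings down under gravity.
    pos = [[i * rest, 0.0] for i in range(n)]
    prev = [p[:] for p in pos]
    for _ in range(steps):
        # Verlet integration: velocity is implied by the previous position.
        for i in range(1, n):  # particle 0 is pinned and never integrated
            x, y = pos[i]
            vx = (x - prev[i][0]) * (1 - damping)
            vy = (y - prev[i][1]) * (1 - damping)
            prev[i] = [x, y]
            pos[i] = [x + vx, y + vy + gravity * dt * dt]
        # Constraint relaxation: pull neighbours back to their rest distance.
        for _ in range(iterations):
            for i in range(n - 1):
                a, b = pos[i], pos[i + 1]
                dx, dy = b[0] - a[0], b[1] - a[1]
                dist = (dx * dx + dy * dy) ** 0.5 or 1e-9
                corr = (dist - rest) / dist
                if i == 0:
                    # The pinned end stays put, so the neighbour moves fully.
                    b[0] -= dx * corr
                    b[1] -= dy * corr
                else:
                    a[0] += dx * corr * 0.5
                    a[1] += dy * corr * 0.5
                    b[0] -= dx * corr * 0.5
                    b[1] -= dy * corr * 0.5
    return pos
```

A full cloth solver extends the same idea to a 2D grid of particles and adds bending constraints, collisions, and self-collision handling, which is where most of the computational cost mentioned above comes from.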

Q 24. Describe your familiarity with different animation principles (e.g., squash and stretch, anticipation).

My understanding of animation principles is foundational to my work. These are the guiding rules that create believable, engaging animation. I’m intimately familiar with principles such as:

- Squash and Stretch: Giving objects exaggerated deformation to convey weight and flexibility (think of a bouncing ball).

- Anticipation: Preparing the audience for an action (e.g., a character leaning back before a jump).

- Staging: Clearly communicating the action and emotion to the viewer.

- Follow Through and Overlapping Action: The parts of the character continue moving after the main action has stopped, adding realism (like hair swaying after a head turn).

- Slow In and Slow Out: Actions begin and end gradually for more realistic movement.

- Arcs: Most natural movements follow curved paths rather than straight lines.

- Secondary Action: Adding subtle actions that complement the main action, enhancing character expression.

- Timing: The speed and rhythm of movements significantly impact the overall feel.

- Exaggeration: Enhancing natural movement to make it more expressive and engaging.

- Solid Drawing: Understanding form, weight, volume, and structure contributes to realism and believability.

These principles aren’t just theoretical; I actively apply them in every project to enhance the quality and realism of the animation.
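
Two of these principles translate directly into small formulas. The Python sketch below is illustrative only and the function names are hypothetical: `ease_in_out` uses the smoothstep curve as one common way to get Slow In and Slow Out, and `squash_scale` shows the volume-preserving scaling usually associated with Squash and Stretch.

```python
def ease_in_out(t):
    """Smoothstep easing: maps 0..1 to 0..1 with zero speed at both ends."""
    t = min(1.0, max(0.0, t))  # clamp so out-of-range input stays valid
    return t * t * (3 - 2 * t)


def squash_scale(stretch):
    """Return (sx, sy, sz) that scales y by `stretch` while keeping volume.

    stretch > 1 elongates the object, stretch < 1 squashes it; the other two
    axes compensate so that sx * sy * sz stays equal to 1.
    """
    side = 1 / stretch ** 0.5
    return (side, stretch, side)
```

For a bouncing ball, `squash_scale(0.5)` would flatten the ball on impact while widening it, and `ease_in_out` would slow the motion near the top of the arc instead of moving at constant speed.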

Q 25. How do you stay up-to-date with the latest advancements in 3D animation technology?

Keeping up with advancements in 3D animation is crucial. I actively engage in several strategies to stay current:

- Industry Publications and Websites: I regularly read publications like 3D World and CGSociety, and follow influential blogs and websites.

- Conferences and Workshops: Attending industry events like SIGGRAPH provides invaluable opportunities to learn about cutting-edge technologies and network with other professionals.

- Online Courses and Tutorials: Platforms like Udemy, Coursera, and Skillshare offer excellent opportunities to deepen my expertise in specific areas, such as new rendering techniques or animation software updates.

- Following Industry Leaders: I follow prominent animators and studios on social media and subscribe to their newsletters.

- Experimentation and Practice: I dedicate time to exploring new software features, techniques, and plugins, regularly experimenting with personal projects.

This multifaceted approach ensures I maintain a strong grasp of the latest developments and incorporate them into my workflow.

Q 26. Describe a time you had to overcome a technical challenge in your 3D animation work.

In one project, we encountered significant challenges simulating the realistic interaction of water with a highly detailed character model. The sheer volume of polygons and the complexity of the water simulation made both the simulation and the rendering incredibly slow and unstable.

To overcome this, we implemented several strategies. Firstly, we optimized the character model, reducing the polygon count without sacrificing visual fidelity by using techniques such as level of detail (LOD) modeling. Secondly, we experimented with different water simulation parameters, finding a balance between realism and performance. We also employed a proxy geometry technique; a simplified version of the model was used for the initial water interaction calculations, then replaced with the high-resolution model for the final render. This significantly reduced the computational burden without sacrificing the final result. Finally, we used a render farm to distribute the workload across multiple machines, allowing us to complete the rendering in a reasonable time frame. The successful resolution of this challenge involved a combination of technical skill, problem-solving, and teamwork.
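
The LOD and proxy-geometry ideas can be summarized in a short Python sketch. It is a simplified illustration with hypothetical names and made-up distance thresholds, not the setup used on that project: the simulation stage always receives the lightweight proxy, and the render stage picks a level of detail from the camera distance.

```python
def pick_lod(distance, thresholds=(5.0, 15.0, 40.0)):
    """Return an LOD index: 0 is the full-resolution mesh, higher is coarser.

    thresholds are assumed camera distances (in scene units) at which the
    next, lighter version of the model takes over.
    """
    for level, limit in enumerate(thresholds):
        if distance < limit:
            return level
    return len(thresholds)


def mesh_for_stage(stage, distance):
    """Choose which version of the model a pipeline stage should load."""
    if stage == "simulation":
        return "proxy"  # simplified collision mesh for the water solver
    return f"lod{pick_lod(distance)}"
```

Keeping this choice in one place makes it easy to verify that the expensive high-resolution mesh is only loaded where it is visible in the final frame.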

Q 27. How do you collaborate effectively with other members of a 3D animation team?

Effective collaboration is essential in 3D animation. I approach teamwork with a focus on clear communication, active listening, and mutual respect. I believe in the power of open communication where every team member feels comfortable sharing ideas and concerns.

Specifically, I frequently use project management tools like Asana or Jira to track progress, deadlines, and tasks. I also make heavy use of version control systems, like Git, to manage and share assets and ensure seamless integration of individual contributions. Regular team meetings are crucial to address challenges, brainstorm solutions, and maintain alignment on project goals. Furthermore, I actively seek feedback from others and offer constructive criticism in return, fostering a collaborative and supportive environment. My aim is always to contribute positively to the team, helping us achieve a shared vision through open dialogue and effective coordination.

Q 28. What are your salary expectations?

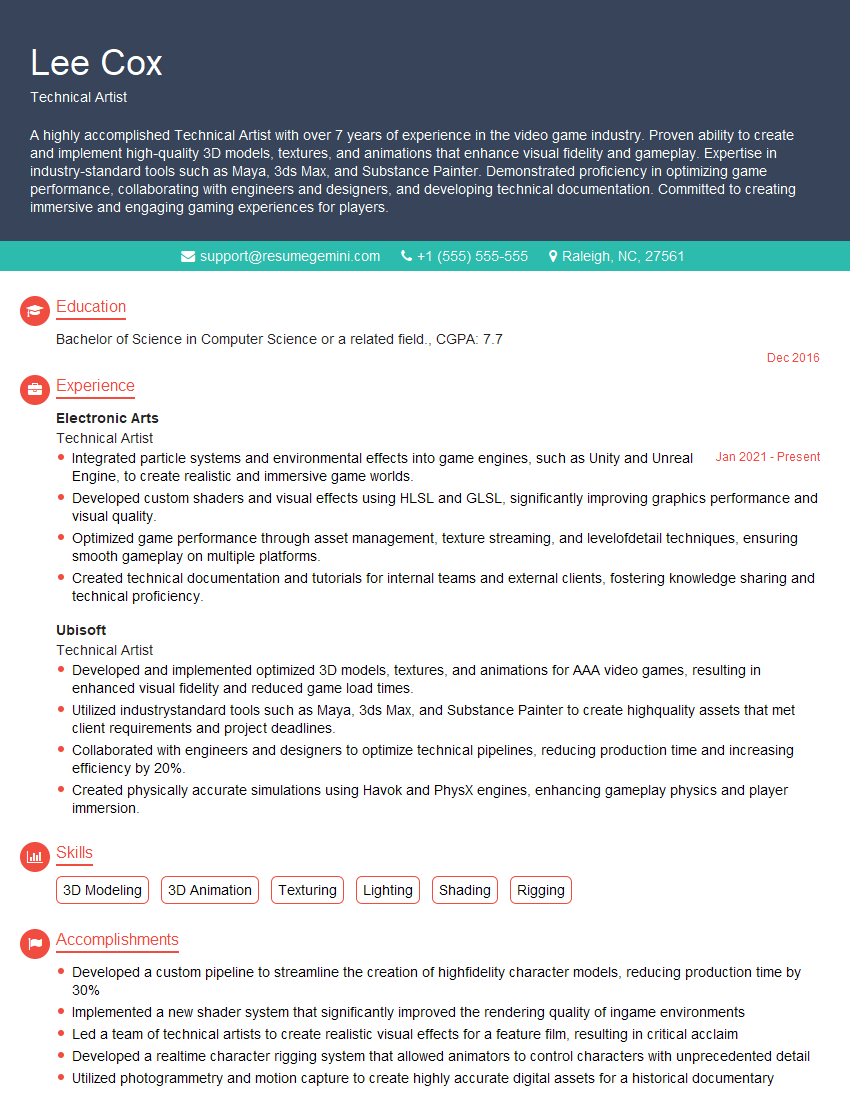

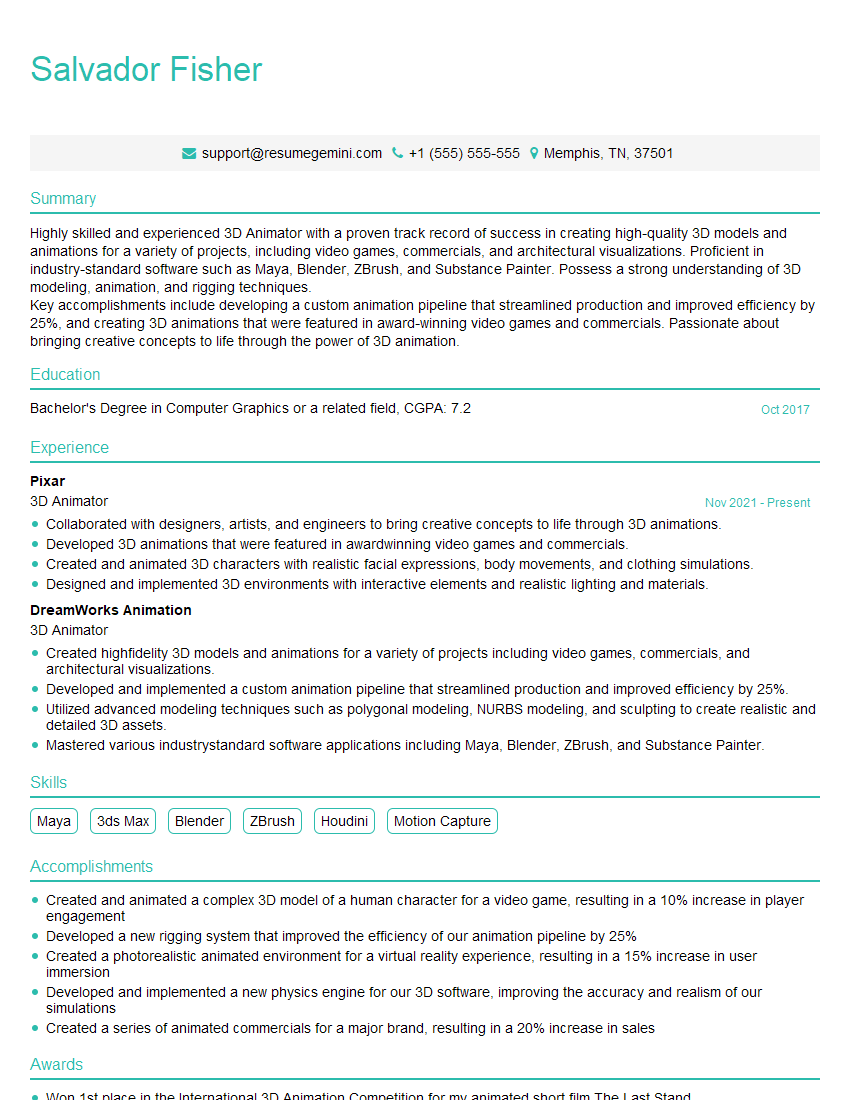

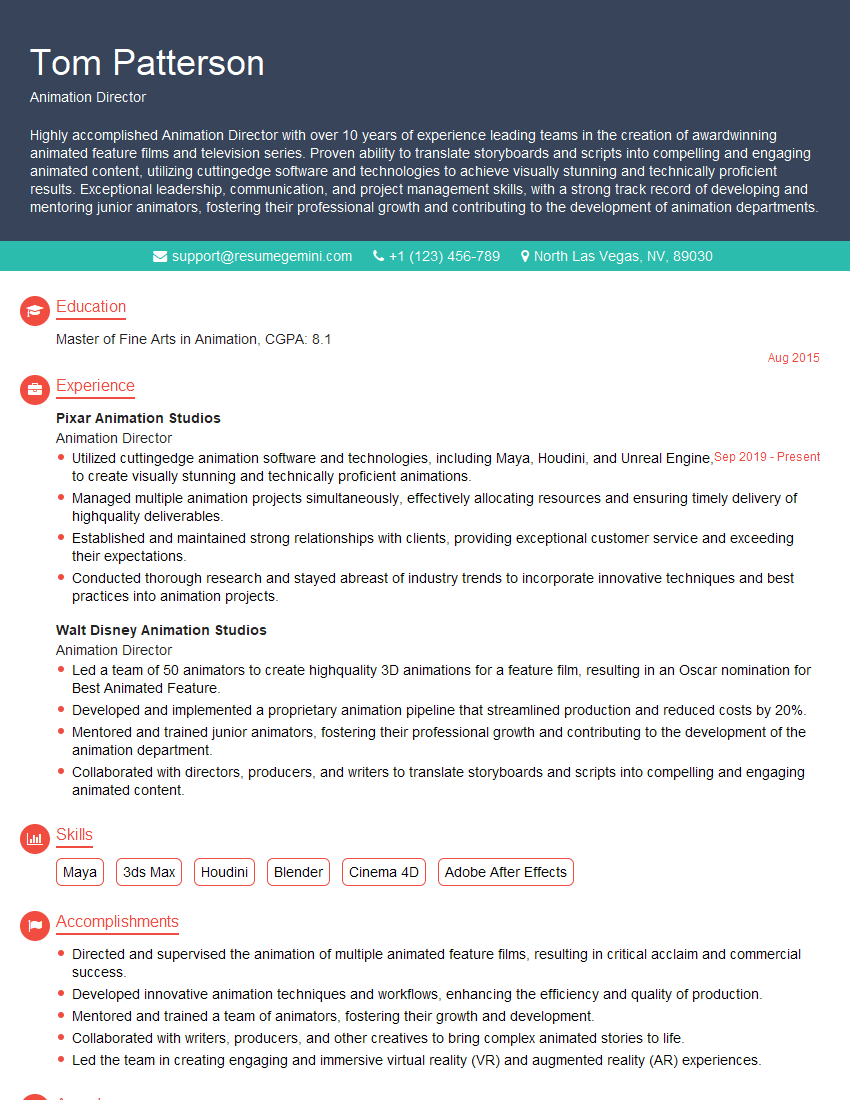

My salary expectations are commensurate with my experience and skillset in the 3D animation industry. Considering my extensive experience with advanced software, my proficiency in various techniques, and my consistent track record of delivering high-quality work, I am targeting a salary range of [Insert Salary Range Here]. I am open to discussing this further and aligning expectations based on the specific details of the role and company benefits.

Key Topics to Learn for Your 3D Animation Software Interview

- Modeling Fundamentals: Understanding polygon modeling, NURBS surfaces, and sculpting techniques. Practical application: Creating believable characters or environments for animation.

- Rigging and Animation Principles: Mastering skeletal rigging, skinning, and animation techniques like squash and stretch, anticipation, and follow-through. Practical application: Animating a character to walk, jump, or interact with the environment convincingly.

- Texturing and Shading: Working with different texture maps (diffuse, normal, specular), shaders, and material properties to achieve realistic or stylized visuals. Practical application: Creating realistic skin, metal, or wood textures.

- Lighting and Rendering: Understanding lighting principles (three-point lighting, global illumination), different render engines, and optimizing render times. Practical application: Creating visually appealing and mood-setting scenes.

- Software-Specific Features: Become proficient in the specific tools and workflows of the software you’re being interviewed for (e.g., Maya, Blender, 3ds Max). Explore advanced features and shortcuts for efficient workflow.

- Workflow and Pipeline: Understanding the stages of a 3D animation project, including asset creation, animation, lighting, rendering, and compositing. Practical application: Discussing your experience managing your own projects efficiently.

- Problem-Solving & Troubleshooting: Develop your ability to troubleshoot common animation issues, optimize performance, and efficiently resolve technical challenges. Practical application: Describe how you’ve overcome a specific technical hurdle in a past project.

Next Steps

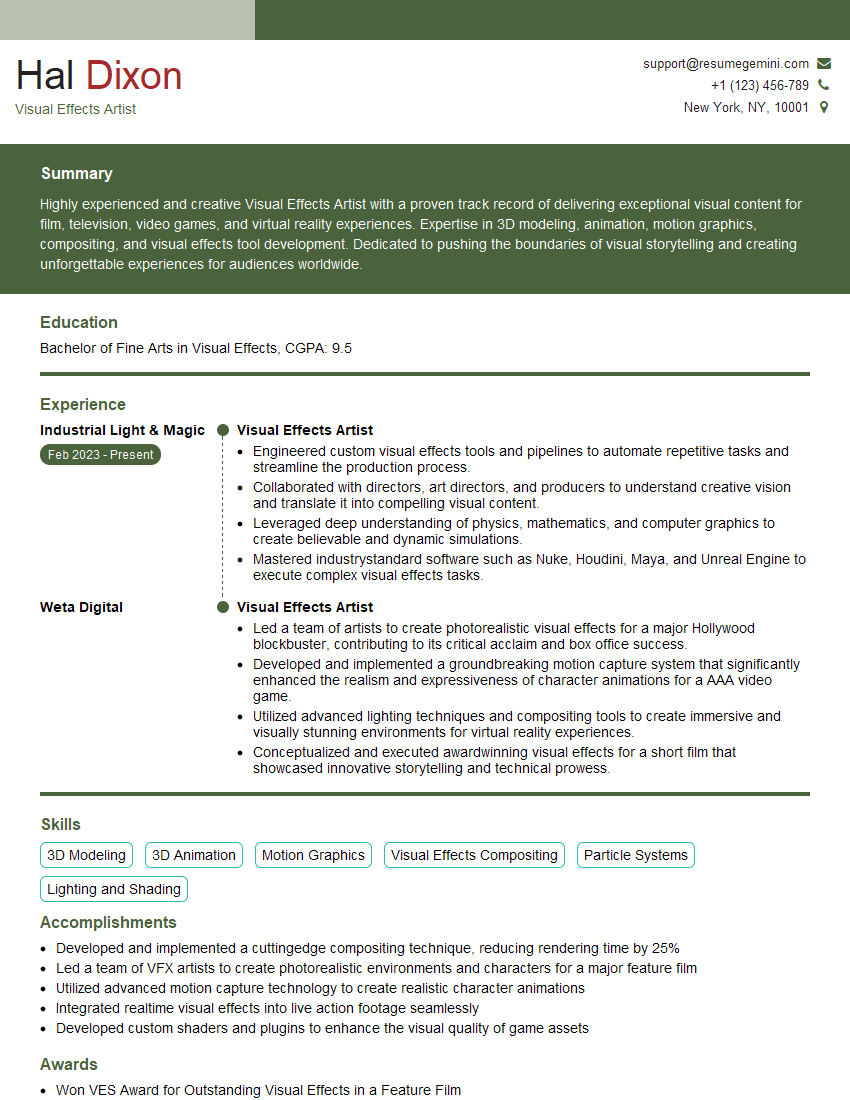

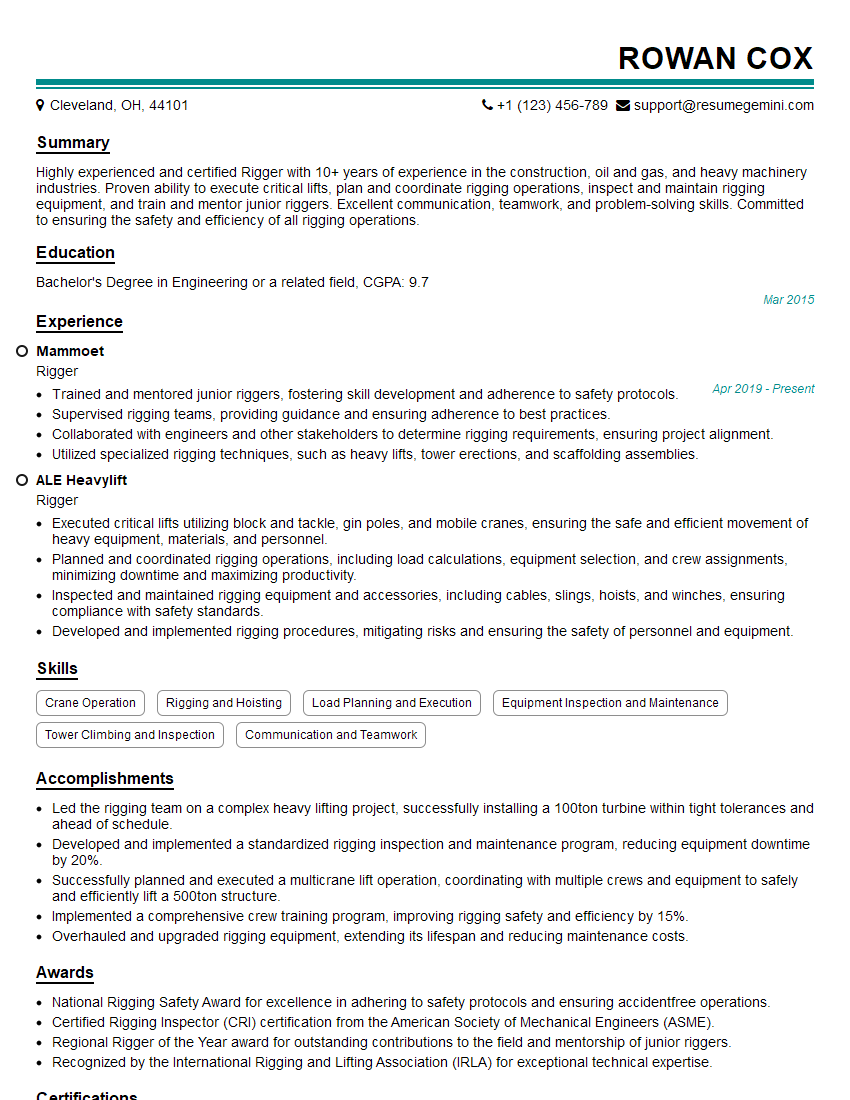

Mastering 3D animation software is crucial for a successful and rewarding career in this dynamic field. It opens doors to exciting opportunities in film, games, advertising, and more! To significantly boost your job prospects, create an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a fantastic resource to help you craft a professional and compelling resume that stands out. They provide examples of resumes tailored specifically to 3D animation software professionals, giving you a head start in showcasing your talents. Invest time in building a strong resume – it’s your first impression with potential employers.
