Unlock your full potential by mastering the most common Processor Architecture (x86, ARM) interview questions. This blog offers a deep dive into the critical topics, so that you’re prepared not only to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Processor Architecture (x86, ARM) Interview

Q 1. Explain the difference between x86 and ARM architectures.

x86 and ARM are two dominant instruction set architectures (ISAs) for processors, differing significantly in their design philosophies and applications. x86, primarily used in desktop and server computers, is a complex instruction set computing (CISC) architecture known for its backward compatibility and powerful instructions. ARM, prevalent in mobile devices, embedded systems, and increasingly servers, is a reduced instruction set computing (RISC) architecture emphasizing efficiency and low power consumption.

- Instruction Set Complexity: x86 uses complex instructions that often perform multiple operations at once. ARM utilizes simpler instructions, generally requiring more instructions to accomplish the same task. This leads to differences in code size and execution speed depending on the application.

- Addressing Modes: x86 offers a wider variety of addressing modes, providing flexibility but potentially adding complexity. ARM employs a simpler, more regular set of addressing modes.

- Endianness: x86 is little-endian (the least significant byte is stored at the lowest address). ARM is bi-endian: it can operate in little-endian or big-endian (most significant byte first) mode, although almost all current ARM systems run little-endian.

- Power Consumption: ARM’s simpler instructions and efficient design generally result in lower power consumption compared to x86, making it ideal for battery-powered devices.

- Applications: x86 dominates the desktop and server markets, known for its high computational power. ARM is the dominant architecture in mobile devices, IoT, and is making significant inroads into the server space.

Imagine it like this: x86 is like a Swiss Army knife – powerful and versatile but potentially complex. ARM is more like a set of precision screwdrivers – simpler, efficient, and tailored for specific tasks. The best choice depends on your needs.
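To make the endianness point concrete, here is a small Python sketch that uses the standard struct module to show how the same 32-bit integer is laid out in memory, byte by byte, under each convention. The value 0x12345678 is arbitrary.

```python
import struct

value = 0x12345678

# "<I": 32-bit unsigned integer, little-endian (x86; ARM in its usual configuration)
little = struct.pack("<I", value)
# ">I": the same integer, big-endian (ARM's optional mode; also network byte order)
big = struct.pack(">I", value)

print(little.hex())  # 78563412 -> least significant byte (0x78) at the lowest address
print(big.hex())     # 12345678 -> most significant byte (0x12) at the lowest address
```

Code that reinterprets raw bytes, such as a network parser or file-format reader, should state the byte order explicitly like this; otherwise it silently depends on the host CPU.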

Q 2. Describe the different addressing modes in x86.

x86 addressing modes specify how the CPU accesses data in memory. They define how the effective address of an operand is calculated. Key x86 addressing modes include:

- Register Addressing: The operand is directly in a register (e.g., mov eax, ebx). This is the fastest mode because it doesn’t involve memory access.

- Immediate Addressing: The operand is encoded directly within the instruction itself (e.g., add eax, 5). This is efficient for small constant values.

- Direct Addressing: The operand’s address is specified directly in the instruction (e.g., mov eax, [0x1000]). This address is fixed.

- Indirect Addressing: The operand’s address is contained in a register (e.g., mov eax, [ebx]). The address is variable and depends on the register’s content.

- Base + Index Addressing: The operand’s address is calculated by adding a base register, an index register (often scaled), and an optional displacement (e.g., mov eax, [ebx + ecx*4 + 10]). This is often used for array access.

- Relative Addressing: The operand’s address is calculated relative to the instruction pointer (IP). This is frequently used for branching.

For example, in base+index addressing, accessing elements in an array stored in memory is highly efficient. The base register points to the start of the array, the index register holds the array index (scaled appropriately if the data type is larger than a byte), and the displacement could be used for offsets.
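The effective-address arithmetic for base + index addressing can be sketched in Python. This models only the calculation, not the CPU. The array address 0x2000 is hypothetical, and the 32-bit wraparound assumes 32-bit addressing.

```python
def effective_address(base: int, index: int = 0, scale: int = 1, disp: int = 0) -> int:
    """Compute base + index*scale + disp, as in mov eax, [ebx + ecx*4 + 10]."""
    # The x86 SIB byte can only encode scale factors of 1, 2, 4 or 8
    if scale not in (1, 2, 4, 8):
        raise ValueError("x86 scale must be 1, 2, 4 or 8")
    # Wrap to 32 bits, as a 32-bit address computation would
    return (base + index * scale + disp) & 0xFFFFFFFF


# Hypothetical array of 4-byte ints starting at 0x2000 (held in the base register)
array_base = 0x2000
print(hex(effective_address(array_base, index=3, scale=4)))           # 0x200c: element 3
print(hex(effective_address(array_base, index=3, scale=4, disp=10)))  # 0x2016: plus displacement 10
```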

Q 3. What are the key features of ARM’s NEON architecture?

ARM’s NEON architecture is a SIMD (Single Instruction, Multiple Data) extension that significantly accelerates media processing, image manipulation, and other computationally intensive tasks. Key features include:

- SIMD Instructions: NEON allows performing the same operation on multiple data elements simultaneously, using 64-bit or 128-bit registers. This parallelism speeds up processing substantially.

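- Vector Registers: NEON uses a dedicated bank of vector registers (32 × 128-bit registers in AArch64; 16 × 128-bit, also viewable as 32 × 64-bit, in AArch32), enabling efficient handling of multiple data items in parallel.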

- Data Types: Supports 8-, 16-, 32-, and 64-bit integers as well as single-precision floating point (and double precision in AArch64), making it flexible.

- Multiple vector and element sizes: Instructions can operate on 64-bit or 128-bit vectors divided into 8-, 16-, 32-, or 64-bit lanes, so the same hardware adapts to the data width of the application.

- Integration with ARM Core: NEON is tightly integrated with the ARM core, minimizing overhead and latency.

Consider image processing: NEON can apply a filter to multiple pixels simultaneously, accelerating the filtering process dramatically compared to scalar processing. This is a common application where the parallel processing capabilities shine.
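The image-processing case can be modelled in Python. The function below imitates the lane-by-lane behaviour of a 128-bit unsigned saturating add, which C code reaches through the ACLE intrinsic vqaddq_u8. The Python function name and the pixel values are hypothetical, and this models the semantics only. It is not NEON code.

```python
def vqaddq_u8_model(a: list, b: list) -> list:
    """Model of a 128-bit unsigned saturating add over 16 lanes of 8 bits."""
    if not (len(a) == len(b) == 16):
        raise ValueError("a 128-bit register holds exactly 16 x 8-bit lanes")
    # Every lane is computed independently and clamped at 255 instead of wrapping
    return [min(x + y, 255) for x, y in zip(a, b)]


# Brighten 16 grayscale pixels by 40 in one (modelled) vector operation
pixels = [0, 10, 50, 100, 150, 200, 215, 216, 230, 240, 250, 255, 1, 2, 3, 4]
brightened = vqaddq_u8_model(pixels, [40] * 16)
print(brightened)
```

Saturation matters for pixels. A plain wrapping 8-bit add would turn a bright 216 + 40 into a black 0, whereas the saturating version clamps it at 255.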

Q 4. Explain the concept of pipelining in a CPU.

Pipelining in a CPU is a technique that allows multiple instructions to be processed concurrently. Think of it like an assembly line in a factory. Instead of completing one instruction fully before starting the next, each instruction is broken down into smaller stages, and different stages can process different instructions simultaneously. This significantly improves instruction throughput (instructions per second).

A typical pipeline might consist of stages like instruction fetch (IF), instruction decode (ID), execute (EX), memory access (MEM), and write-back (WB). Instruction 1 might be in the MEM stage while Instruction 2 is in the EX stage, and Instruction 3 is in the ID stage, all happening at the same time.

However, hazards can stall the pipeline: data hazards (where one instruction needs the result of a previous instruction), structural hazards (two instructions competing for the same hardware), and control hazards such as branch mispredictions. Even so, pipelining is crucial for modern CPUs to achieve high performance.
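The throughput gain can be estimated with the usual idealized formula. With k one-cycle stages and no hazards, n instructions take k + (n - 1) cycles instead of n × k. The sketch below assumes exactly that. The stall_cycles parameter is a crude, hypothetical stand-in for hazards.

```python
def unpipelined_cycles(n_instructions: int, n_stages: int) -> int:
    # Each instruction passes through every stage before the next one starts
    return n_instructions * n_stages


def pipelined_cycles(n_instructions: int, n_stages: int, stall_cycles: int = 0) -> int:
    # The first instruction needs n_stages cycles to fill the pipeline; after that,
    # one instruction completes per cycle unless hazards insert stall cycles (bubbles)
    return n_stages + (n_instructions - 1) + stall_cycles


n, k = 100, 5  # 100 instructions through a 5-stage IF/ID/EX/MEM/WB pipeline
print(unpipelined_cycles(n, k), pipelined_cycles(n, k))              # 500 104
print(f"{unpipelined_cycles(n, k) / pipelined_cycles(n, k):.2f}x")   # 4.81x
print(pipelined_cycles(n, k, stall_cycles=20))                       # 124
```

For large n the speedup approaches the number of stages, which is why every stall cycle directly eats into the benefit.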

Q 5. What are branch predictors and how do they work?

Branch predictors attempt to guess whether a branch instruction (like a conditional jump) will be taken or not, before the branch condition is evaluated. This allows the CPU to start fetching instructions from the predicted path, avoiding stalls. If the prediction is correct, it speeds up execution; if incorrect, the pipeline is flushed and refilled from the correct path, causing a performance penalty.

Different prediction techniques exist:

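- Static Prediction: Uses a fixed rule, such as always predicting taken (or not taken), or predicting backward branches (typically loops) as taken and forward branches as not taken. Simple, but less accurate than dynamic prediction.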

- Dynamic Prediction: Uses a history table to track past branch behavior and predicts based on that history. More accurate but more complex.

Modern processors often use sophisticated dynamic branch prediction schemes, such as two-level predictors or even more elaborate techniques to improve accuracy. The goal is to minimize pipeline stalls due to branch mispredictions, essential for high performance.
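A common building block of dynamic predictors is the 2-bit saturating counter. The sketch below is a minimal version indexed by the branch address. The table size, the initial "weakly not taken" state, and the branch address 0x400 are arbitrary assumptions, and real predictors layer global history on top of this.

```python
class TwoBitPredictor:
    """Per-entry 2-bit saturating counters: 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self, table_size: int = 1024):
        self.table_size = table_size
        self.counters = [1] * table_size  # start every entry as "weakly not taken"

    def _index(self, pc: int) -> int:
        # Index the history table with the low-order bits of the branch address
        return pc % self.table_size

    def predict(self, pc: int) -> bool:
        return self.counters[self._index(pc)] >= 2

    def update(self, pc: int, taken: bool) -> None:
        i = self._index(pc)
        if taken:
            self.counters[i] = min(self.counters[i] + 1, 3)
        else:
            self.counters[i] = max(self.counters[i] - 1, 0)


# A loop branch taken 9 times, then not taken at loop exit; the loop runs 3 times
pred = TwoBitPredictor()
outcomes = ([True] * 9 + [False]) * 3
correct = 0
for taken in outcomes:
    if pred.predict(0x400) == taken:
        correct += 1
    pred.update(0x400, taken)
print(f"{correct}/{len(outcomes)} correct")  # 26/30 correct
```

Because one not-taken outcome only moves the counter from "strongly taken" to "weakly taken", the loop branch mispredicts once per loop exit, where a 1-bit scheme would mispredict twice (at the exit and again on re-entry).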

Q 6. Describe the different cache levels in a modern CPU.

Modern CPUs employ multiple levels of cache memory to bridge the speed gap between the processor and main memory (RAM). Each level is smaller and faster than the one below it, forming a hierarchical structure.

- L1 Cache: Extremely fast and small, typically integrated directly onto the CPU die. Often split into L1 data cache (for data) and L1 instruction cache (for instructions).

- L2 Cache: Larger and somewhat slower than L1, but still very fast. On many modern CPUs each core has its own private L2, although some designs share an L2 among a cluster of cores.

- L3 Cache: The largest cache level, shared by all cores. Slower than L1 and L2, but still significantly faster than main memory.

The cache hierarchy operates on the principle of locality of reference: recently accessed data is likely to be accessed again soon (temporal locality), and data near a recently accessed address is likely to be accessed next (spatial locality). By keeping frequently used data in the faster caches, the average memory access time is drastically reduced, leading to significant performance improvements.
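The payoff of the hierarchy can be quantified with average memory access time (AMAT = hit time + miss rate × miss penalty), applied level by level from the bottom up. The latencies and miss rates below are hypothetical round numbers chosen for illustration. They are not measurements of any real CPU.

```python
def amat(hit_time: float, miss_rate: float, miss_penalty: float) -> float:
    # Average memory access time = hit time + miss rate * miss penalty
    return hit_time + miss_rate * miss_penalty


# Hypothetical latencies in cycles and local miss rates (illustrative only)
dram = 200
l3 = amat(hit_time=40, miss_rate=0.20, miss_penalty=dram)  # 40 + 0.20 * 200 = 80
l2 = amat(hit_time=12, miss_rate=0.30, miss_penalty=l3)    # 12 + 0.30 * 80  = 36
l1 = amat(hit_time=4, miss_rate=0.05, miss_penalty=l2)     # 4  + 0.05 * 36  = 5.8
print(f"effective access time ~ {l1:.1f} cycles vs {dram} cycles for DRAM alone")
```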

Q 7. Explain the concept of cache coherence.

Cache coherence ensures that all caches in a multi-core system maintain a consistent view of memory. If multiple cores access and modify the same data, cache coherence protocols ensure that all cores see the most up-to-date version of that data. Without this, inconsistencies and data corruption could occur.

Several protocols achieve cache coherence, including:

- Snooping Protocols: Each cache monitors (snoops) the bus for memory accesses from other caches. If a cache detects a write to data it also holds, it invalidates its copy or updates it, ensuring consistency.

- Directory-Based Protocols: A central directory keeps track of which caches hold copies of each memory block. When a write occurs, the directory notifies only the caches holding that data, reducing bus traffic.

Imagine a shared whiteboard. Without cache coherence, multiple people could write on it simultaneously, resulting in a messy and inaccurate final result. Cache coherence protocols are like a system of rules that ensure only one person can write at a time, maintaining a consistent and accurate representation on the whiteboard.
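To show the snooping idea in code, here is a toy model of MESI, a widely used invalidation-based snooping protocol (it reappears under Q23). It tracks only the state of a single cache line across several cores' private caches and ignores data values, bus arbitration, and write-back timing.

```python
from enum import Enum


class State(Enum):
    M = "Modified"   # only copy, dirty (differs from memory)
    E = "Exclusive"  # only copy, clean
    S = "Shared"     # possibly several clean copies
    I = "Invalid"    # not present


class CacheLine:
    """MESI state of one memory line in each core's private cache."""

    def __init__(self, n_cores: int):
        self.state = [State.I] * n_cores

    def read(self, core: int) -> None:
        if self.state[core] != State.I:
            return  # read hit: no state change
        holders = [c for c, s in enumerate(self.state) if c != core and s != State.I]
        if holders:
            # Other caches snoop the read; an M or E owner is downgraded to Shared
            # (an M owner would also supply or write back the dirty data)
            for c in holders:
                self.state[c] = State.S
            self.state[core] = State.S
        else:
            self.state[core] = State.E  # nobody else has it: exclusive and clean

    def write(self, core: int) -> None:
        # Broadcast an invalidation so that this core becomes the only writer
        for c in range(len(self.state)):
            if c != core:
                self.state[c] = State.I
        self.state[core] = State.M

    def states(self) -> list:
        return [s.name for s in self.state]


line = CacheLine(n_cores=2)
line.read(0)
print(line.states())   # ['E', 'I']
line.read(1)
print(line.states())   # ['S', 'S']
line.write(1)
print(line.states())   # ['I', 'M']
line.read(0)
print(line.states())   # ['S', 'S']
```

The key step is in write(): a core must invalidate every other copy before modifying the line, which is exactly the "one writer at a time" rule from the whiteboard analogy.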

Q 8. What are the different types of memory in a computer system?

Computer systems employ a hierarchy of memory types, each with different speeds, capacities, and costs. Think of it like a filing cabinet: you keep frequently used documents on your desk (fast access), less frequently used ones in drawers (slower), and archived ones in a separate storage facility (slowest).

- Registers: The fastest memory, residing directly within the CPU. They hold data actively being processed. Imagine these as the CPU’s scratchpad.

- Cache: A smaller, faster memory sitting between the CPU and RAM. It stores frequently accessed data, significantly speeding up processing. Think of it as a well-organized desk drawer containing your most needed items.

- RAM (Random Access Memory): Main memory; stores data and instructions currently in use by the operating system and programs. It’s volatile, meaning data is lost when power is off. This is akin to your main filing cabinet, holding many documents you work with.

- Secondary Storage: Non-volatile memory like hard disk drives (HDDs), solid-state drives (SSDs), and optical drives. They store large amounts of data persistently. Imagine this as your off-site storage facility, containing many documents but requiring more time to access.

The interaction between these levels, known as the memory hierarchy, is crucial for efficient system performance. For instance, when the CPU needs data that is not already in a register, it looks in the L1 cache, then in the lower cache levels, and then in RAM. If the data is not in RAM at all, the operating system must bring it in from secondary storage, which is orders of magnitude slower.

Q 9. Explain virtual memory and its advantages.

Virtual memory is a memory management technique that gives each process its own virtual address space, which the hardware maps onto physical memory. Because pages that are not currently needed can be kept on disk (in a swap file or partition), it also lets a computer use more memory than is physically installed. Imagine having a small desk but access to a massive warehouse: you keep the documents you use most on your desk, and when you need something else, you fetch it from the warehouse and move a less frequently used item back.

Advantages:

- Increased Capacity: Run programs larger than physical RAM allows.

- Improved Efficiency: Enables multiple programs to run concurrently, even when the combined memory requirements exceed physical RAM.

- Memory Protection: Isolates processes from each other, preventing one program from corrupting another’s memory space.

For example, if your computer has 8GB of RAM and a program requires 12GB, virtual memory lets the program run by keeping the pages it is not actively using on disk. The operating system moves pages between RAM and disk (paging, or swapping) transparently, but disk access is far slower than RAM, so if the system spends most of its time moving pages back and forth (known as “thrashing”), performance collapses.

Q 10. What are interrupts and how are they handled?

Interrupts are signals that temporarily halt the CPU’s current execution to handle a more urgent event. Think of it as a phone call interrupting a meeting – you pause the meeting to answer, then return to it.

Handling Interrupts:

- Interrupt Request (IRQ): A hardware device or software event generates an interrupt request.

- Interrupt Handling: The CPU saves its current state (registers, program counter) and jumps to a pre-defined interrupt handler routine.

- Interrupt Service Routine (ISR): The ISR addresses the event that triggered the interrupt (e.g., handling a key press, data arrival, or an error).

- Return from Interrupt: Once the ISR completes, the CPU restores its saved state and resumes execution from where it left off.

Different interrupt types have different priorities. For example, a hardware failure might interrupt a less critical task. Interrupt handling is a fundamental part of operating system functionality, enabling multitasking and responsiveness.

Q 11. Explain the concept of memory segmentation.

Memory segmentation is a memory management scheme that divides a program’s address space into logical segments. Each segment represents a distinct part of the program (code, data, stack, etc.). Think of it as dividing a large document into chapters, each with a specific topic.

Concept: Each segment has its own base address (starting address) and limit (size). On x86, segment registers such as CS, DS, and SS hold selectors that identify the segment being accessed; each address is formed as base + offset and checked against the limit. Different segments can have different access rights, which improves memory organization and protection. Segmentation was central to early x86 designs: in 16-bit real mode it let 16-bit registers address a 1 MB (20-bit) address space. In 64-bit mode it is largely disabled in favor of a flat address space managed by paging (the FS and GS bases are the main exceptions). ARM does not use segmentation and relies on paging instead.

A key advantage of segmentation is sharing: different programs can share the same code segment without conflicts, as long as their data segments are separate. However, because segments vary in size, it can lead to external memory fragmentation.
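The address arithmetic behind x86 segmentation is easy to sketch. The first function models 16-bit real mode, where physical address = segment × 16 + offset. The second is a heavily simplified protected-mode check that ignores descriptor tables, access rights, and expand-down segments. SegmentFault is a hypothetical stand-in for the fault the CPU would raise.

```python
class SegmentFault(Exception):
    """Stand-in for the protection fault (typically #GP) raised on a limit violation."""


def real_mode_address(segment: int, offset: int) -> int:
    # Real mode: physical = segment * 16 + offset, truncated to 20 bits as on the 8086
    return ((segment << 4) + offset) & 0xFFFFF


def protected_mode_address(base: int, limit: int, offset: int) -> int:
    # Simplified protected-mode rule: the offset must lie within the segment limit
    if offset > limit:
        raise SegmentFault(f"offset {offset:#x} exceeds limit {limit:#x}")
    return (base + offset) & 0xFFFFFFFF


print(hex(real_mode_address(0x1234, 0x0010)))  # 0x12350
print(hex(real_mode_address(0x1235, 0x0000)))  # 0x12350: same byte, different segment:offset
print(hex(protected_mode_address(base=0x400000, limit=0xFFFF, offset=0x20)))  # 0x400020
```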

Q 12. Describe the different stages of the instruction cycle.

The instruction cycle is the fundamental process by which a CPU executes instructions. It’s a repetitive sequence of steps: fetch an instruction, decode it, execute it, and write back the result. Think of it as an assembly line producing cars; each step plays a critical role.

- Fetch: The CPU retrieves the next instruction from memory, using the program counter (PC) to know where to find it. The PC holds the address of the next instruction to be executed.

- Decode: The CPU decodes the fetched instruction, determining the operation to be performed and the operands involved.

- Execute: The CPU performs the operation specified by the instruction. This might involve arithmetic calculations, data movement, or control flow changes.

- Write Back: The result of the execution is written back to a register or memory location.

This cycle repeats for each instruction in the program, forming the basic rhythm of computer execution. Modern CPUs often overlap these stages for improved performance, but the underlying principles remain the same.
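The cycle can be illustrated with a tiny interpreter for a hypothetical accumulator machine (the LOAD/ADD/STORE/HALT opcodes are invented for this sketch). Each pass through the loop performs one fetch, decode, execute, and write-back.

```python
# Hypothetical program for a one-register (accumulator) machine
program = [
    ("LOAD", 5),     # acc = 5
    ("ADD", 7),      # acc = acc + 7
    ("STORE", 0),    # mem[0] = acc
    ("HALT", None),
]


def run(program):
    pc, acc, mem = 0, 0, [0] * 4
    while True:
        instr = program[pc]        # Fetch: read the instruction the PC points to
        pc += 1                    # ... and advance the PC to the next instruction
        opcode, operand = instr    # Decode: split into operation and operand
        # Execute, then write the result back to the accumulator or memory
        if opcode == "LOAD":
            acc = operand
        elif opcode == "ADD":
            acc = acc + operand
        elif opcode == "STORE":
            mem[operand] = acc
        elif opcode == "HALT":
            return acc, mem
        else:
            raise ValueError(f"illegal opcode {opcode!r}")


print(run(program))  # (12, [12, 0, 0, 0])
```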

Q 13. What are superscalar processors?

Superscalar processors are CPUs capable of issuing and executing more than one instruction per clock cycle. Instead of processing one instruction after another, they use multiple execution units to work on different instructions concurrently. Think of it as having multiple assembly lines producing cars at the same time. This significantly boosts performance.

This is achieved by fetching, decoding, and dispatching several instructions each cycle to multiple execution units (for example, several ALUs plus load/store units). Superscalar designs are almost always pipelined as well, so the two techniques combine: pipelining overlaps the stages of successive instructions, while superscalar issue pushes several instructions through the same stage at once. Modern high-performance CPUs are predominantly superscalar, leveraging this approach to improve throughput.

The effectiveness of superscalar processing relies on the ability of the CPU to find independent instructions (instructions that do not need each other’s results). If many instructions depend on the result of previous ones, the advantage shrinks. Consequently, both compiler optimization (instruction scheduling) and hardware techniques such as out-of-order execution play a critical role in maximizing superscalar performance.

Q 14. Explain the concept of out-of-order execution.

Out-of-order execution is a CPU technique that allows instructions to be executed in an order different from their program order. This increases performance by avoiding stalls caused by dependencies between instructions. Imagine construction workers working in parallel – sometimes they work on tasks in a different order than the architect’s initial plan, resulting in faster completion.

How it works: The CPU examines a window of upcoming instructions and dispatches any whose operands are ready, regardless of their position in the original program order. An instruction that depends on the result of a previous one waits until that result is available, while independent instructions behind it proceed. A reorder buffer tracks instructions in program order and commits (retires) their results in that order, so the final results are consistent with the original program sequence.

Out-of-order execution is a crucial component in modern high-performance processors, allowing for significant performance improvements. However, it adds complexity to the CPU design and requires careful management to prevent errors.
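The benefit can be shown with a small cycle-by-cycle model. It makes strong simplifying assumptions: one instruction issued per cycle, unlimited execution units, registers already renamed so only true dependencies matter, and in-order retirement (the reorder buffer) left out. The instruction list and the 10-cycle load latency are hypothetical.

```python
# (name, destination register, source registers, latency in cycles)
instrs = [
    ("i1", "r1", [], 10),       # r1 = load [...]  (assumed cache miss: 10 cycles)
    ("i2", "r2", ["r1"], 1),    # r2 = r1 + 1      (must wait for the load)
    ("i3", "r3", [], 1),        # r3 = 4           (independent)
    ("i4", "r4", ["r3"], 1),    # r4 = r3 * 2      (independent of the load)
]


def simulate(instrs, out_of_order: bool) -> int:
    """Return the cycle at which the last result becomes available."""
    ready_at = {}             # register -> cycle its value becomes available
    pending = list(instrs)    # not yet issued, kept in program order
    cycle = finish = 0
    while pending:
        # In-order issue may only consider the oldest pending instruction
        window = pending if out_of_order else pending[:1]
        for ins in window:
            _, dest, srcs, latency = ins
            # A source is ready only if no older, still-pending instruction produces it
            # and its value is available by this cycle (unknown registers = initial values)
            older_dests = {p[1] for p in pending[:pending.index(ins)]}
            if all(s not in older_dests and ready_at.get(s, 0) <= cycle for s in srcs):
                ready_at[dest] = cycle + latency
                finish = max(finish, cycle + latency)
                pending.remove(ins)
                break             # single issue: at most one instruction per cycle
        cycle += 1
    return finish


print(simulate(instrs, out_of_order=False))  # 13
print(simulate(instrs, out_of_order=True))   # 11
```

In order, the independent i3 and i4 sit behind the stalled i2; out of order, they run while the load is still outstanding. With more independent work after the load, the gap grows.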

Q 15. What is a micro-operation (μop)?

A micro-operation (μop) is a simple, low-level operation that the processor’s execution units actually carry out. Think of it as a tiny step in a larger process. Processors, especially x86 designs, decode each machine instruction into one or more μops. A simple register-to-register instruction such as ADD R1, R2, R3 (add the contents of registers R2 and R3 and store the result in R1) typically becomes a single μop, while an x86 instruction that operates on memory, such as add [rbx], eax, is typically split into separate load, add, and store μops. This decomposition lets a complex instruction set feed a simpler, RISC-like execution core and gives the scheduler finer-grained work to reorder. Modern processors, especially those with out-of-order execution capabilities, rely heavily on μops to enhance performance.

Q 16. Explain the difference between RISC and CISC architectures.

RISC (Reduced Instruction Set Computer) and CISC (Complex Instruction Set Computer) architectures differ fundamentally in their instruction set design. CISC architectures, like the classic x86, feature a large number of complex instructions that can perform multiple operations (for example, a memory load plus an arithmetic operation) in a single instruction. This often involves intricate microcode within the processor to handle the instruction’s execution. In contrast, RISC architectures, such as ARM, employ a smaller, simpler set of instructions, each performing a single, well-defined operation; they are typically load/store architectures, in which only load and store instructions access memory. Classic RISC designs aimed for each instruction to complete in one clock cycle per pipeline stage. This simpler, more uniform design makes pipelining and parallel processing easier.

Q 17. What are the advantages and disadvantages of RISC vs. CISC?

RISC Advantages: Simpler instruction decoding, easier pipelining, historically higher clock speeds for a given process technology, generally higher performance in certain applications, reduced hardware complexity. RISC Disadvantages: Requires more instructions to accomplish complex tasks, potentially leading to larger program sizes.

CISC Advantages: Fewer instructions needed for complex operations, potentially smaller program sizes, potentially faster execution for specific complex operations. CISC Disadvantages: More complex instruction decoding, harder to pipeline efficiently, historically lower clock speeds than RISC designs on similar technology, increased hardware complexity. Modern x86 processors narrow these gaps by decoding instructions into RISC-like micro-operations internally.

Consider this analogy: Imagine building with LEGOs. CISC is like having a few giant, specialized LEGO structures – powerful, but less versatile. RISC is like having many smaller, simpler bricks – more adaptable, and better for complex designs once mastered.

Q 18. Explain the role of a Translation Lookaside Buffer (TLB).

The Translation Lookaside Buffer (TLB) is a cache that speeds up virtual-to-physical address translation. In modern operating systems, programs run in virtual memory, where addresses used by the program (virtual addresses) are mapped to physical addresses in RAM. The TLB stores recently used address translations, avoiding the slower process of looking them up in the page table. When the processor needs to access memory, it first checks the TLB. If the translation is found (a TLB hit), it’s used directly; otherwise (a TLB miss), the page table is consulted, the translation is performed, and the result is often added to the TLB for future use.
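The hit/miss flow can be sketched as a tiny fully associative TLB with LRU replacement sitting in front of a page table. The 4 KiB page size, 4-entry capacity, and page-table contents are assumptions for illustration, and a dict lookup stands in for the hardware page walk.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed 4 KiB pages


class TLB:
    def __init__(self, page_table: dict, entries: int = 4):
        self.page_table = page_table   # virtual page number -> physical frame number
        self.entries = entries
        self.cache = OrderedDict()     # cached translations, least recently used first
        self.hits = self.misses = 0

    def translate(self, vaddr: int) -> int:
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn in self.cache:
            self.hits += 1                     # TLB hit: use the cached translation
            self.cache.move_to_end(vpn)        # mark as most recently used
        else:
            self.misses += 1                   # TLB miss: consult the page table
            self.cache[vpn] = self.page_table[vpn]  # KeyError here ~ a page fault
            if len(self.cache) > self.entries:
                self.cache.popitem(last=False)  # evict the least recently used entry
        return self.cache[vpn] * PAGE_SIZE + offset


tlb = TLB(page_table={0: 7, 1: 3, 2: 9}, entries=4)
for addr in range(0, 3 * PAGE_SIZE, 64):   # walk 3 pages in 64-byte steps
    tlb.translate(addr)
print(tlb.hits, tlb.misses)                # 189 3
```

Only the first access to each page misses; the other accesses within that page reuse the cached translation.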

Q 19. Describe the different types of memory access (e.g., direct, indirect).

Memory access modes can be broadly categorized:

- Direct Addressing: The memory address is explicitly specified in the instruction. For example, LOAD R1, 1000 loads the contents of memory location 1000 into register R1.

- Indirect Addressing: The memory address is stored in a register or another memory location. The instruction specifies the location holding the address, not the address itself. For instance, LOAD R1, (R2) loads the contents of the memory location whose address is stored in register R2 into register R1.

- Register Indirect Addressing: The common special case of indirect addressing in which a register holds the pointer, as in the LOAD R1, (R2) example. In memory indirect addressing, by contrast, the pointer itself sits in memory.

- Indexed Addressing: An index register is added to a base address to calculate the effective memory address. This is useful for accessing arrays or other sequential data structures.

- Base + Offset Addressing: Combines a base address (usually held in a register) with a constant offset to calculate the final address, for example to reach a field inside a structure.

The choice of addressing mode impacts program size and execution speed. Direct addressing is simple, but each instruction must encode a full, fixed address, which makes instructions longer. Memory indirect addressing offers flexibility but needs an extra memory access to fetch the pointer, whereas register indirect addressing avoids that cost.

Q 20. How does a cache work and what are its performance benefits?

A cache is a smaller, faster memory that sits between the CPU and main memory (RAM). It stores frequently accessed data and instructions, allowing the CPU to retrieve them much faster than accessing main memory. Imagine a librarian keeping frequently requested books close at hand. This significantly reduces the average memory access time, a critical factor in overall system performance. Caches are typically hierarchical, with multiple levels (L1, L2, L3, etc.), each progressively larger and slower.

Performance Benefits: Reduced memory access time, improved CPU utilization, faster program execution.
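A minimal direct-mapped cache model shows where the benefit comes from. It tracks only tags, not data, and the 64-line × 64-byte geometry (4 KiB in total) is a hypothetical choice.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that records which block each line holds (no data)."""

    def __init__(self, num_lines: int = 64, line_size: int = 64):
        self.num_lines = num_lines
        self.line_size = line_size
        self.tags = [None] * num_lines
        self.hits = self.misses = 0

    def access(self, addr: int) -> bool:
        block = addr // self.line_size   # memory block containing this byte
        index = block % self.num_lines   # the single line this block may occupy
        tag = block // self.num_lines    # tells apart blocks that share that line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.misses += 1
        self.tags[index] = tag           # fill (or replace) the line on a miss
        return False


cache = DirectMappedCache()
for addr in range(0, 4096, 4):        # sequential 4-byte reads over 4 KiB
    cache.access(addr)
print(cache.hits, cache.misses)       # 960 64

conflict = DirectMappedCache()
for _ in range(10):
    conflict.access(0)                # block 0  -> line 0, tag 0
    conflict.access(4096)             # block 64 -> line 0, tag 1: evicts the other block
print(conflict.hits, conflict.misses)  # 0 20
```

Sequential reads miss only once per 64-byte line thanks to spatial locality. Two addresses exactly 4 KiB apart map to the same line and keep evicting each other, a conflict-miss pattern that set-associative caches are designed to reduce.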

Q 21. Explain the concept of cache replacement policies (e.g., LRU, FIFO).

Cache replacement policies determine which data is evicted from the cache when it’s full and a new block needs to be added. Several policies exist:

- LRU (Least Recently Used): Evicts the block that hasn’t been accessed for the longest time. Intuitive and generally efficient, but requires tracking access history, adding complexity.

- FIFO (First-In, First-Out): Evicts the block that was loaded earliest, regardless of how recently it was used. Simple to implement but can be inefficient if frequently used blocks are evicted early.

- Random Replacement: Randomly selects a block for eviction. Simple, but unpredictable performance.

- Optimal Replacement: Evicts the block that will be used farthest in the future (theoretically optimal, but impossible to implement in practice).

The choice of policy impacts cache performance. LRU tends to perform well in practice but can be more complex to implement than FIFO or Random. The best policy depends on the workload characteristics and cache design.
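The difference between LRU and FIFO shows up even on a tiny trace. The sketch below counts misses for a two-entry, fully associative cache on a hypothetical access sequence in which block A is "hot".

```python
from collections import OrderedDict, deque


def count_misses_lru(accesses, capacity):
    cache = OrderedDict()                  # keys ordered from least to most recently used
    misses = 0
    for block in accesses:
        if block in cache:
            cache.move_to_end(block)       # a hit refreshes the block's recency
        else:
            misses += 1
            if len(cache) == capacity:
                cache.popitem(last=False)  # evict the least recently used block
            cache[block] = True
    return misses


def count_misses_fifo(accesses, capacity):
    cache, order = set(), deque()          # order = insertion order only
    misses = 0
    for block in accesses:
        if block not in cache:             # hits do not change FIFO order
            misses += 1
            if len(cache) == capacity:
                cache.discard(order.popleft())  # evict the block inserted first
            cache.add(block)
            order.append(block)
    return misses


trace = ["A", "B", "A", "C", "A", "D", "A", "E"]
print(count_misses_lru(trace, 2), count_misses_fifo(trace, 2))  # 5 6
```

LRU keeps the hot block A resident, while FIFO evicts A simply because it arrived first, costing an extra miss.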

Q 22. Discuss different techniques for power management in processors.

Power management in processors is crucial for extending battery life in mobile devices and reducing energy costs in data centers. It involves a variety of techniques aimed at minimizing power consumption without significantly impacting performance. These techniques can be broadly categorized into:

- Clock gating: This technique disables the clock signal to inactive parts of the processor, preventing them from consuming power. Think of it like turning off individual lights in a room you’re not using.

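- Voltage scaling: Reducing the supply voltage lowers power consumption sharply, because dynamic power grows with the square of the voltage. However, transistors switch more slowly at lower voltage, so the operating frequency must be reduced as well. It’s a trade-off between performance and power saving, which is why voltage scaling is usually paired with dynamic frequency scaling (together known as DVFS).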

- Dynamic frequency scaling (DFS): This adjusts the processor’s clock speed based on the workload. When the processor is idle or under light load, the clock speed is lowered, conserving power. When demanding tasks arrive, the clock speed increases to meet performance requirements. This is common in laptops and smartphones.

- Power gating: This involves completely powering down inactive cores or modules within the processor. It’s a more aggressive approach than clock gating, resulting in significant power savings but with a latency penalty when the module needs to be reactivated.

- Thermal throttling: If the processor gets too hot, it will automatically reduce its clock speed or power consumption to prevent damage. This is a safety mechanism, not a deliberate power saving technique, but it indirectly contributes to power management.

- Sleep states: Processors offer various sleep states (e.g., C-states in x86), where different parts of the processor are powered down to varying degrees depending on the level of sleep. The deeper the sleep state, the lower the power consumption, but the longer the wake-up time.

Modern processors often employ a combination of these techniques to achieve optimal power efficiency. The specific implementation varies widely depending on the processor architecture, target application, and power budget.
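Voltage scaling is so effective because of the standard approximation for dynamic (switching) power in CMOS logic, P ≈ α·C·V²·f, where α is the activity factor and C is the switched capacitance. The numbers below are purely illustrative.

```python
def dynamic_power(activity: float, capacitance: float, voltage: float, freq_hz: float) -> float:
    # Classic CMOS switching-power approximation: P = alpha * C * V^2 * f
    return activity * capacitance * voltage ** 2 * freq_hz


# Illustrative values for the same chip before and after one DVFS step
base = dynamic_power(activity=0.2, capacitance=1e-9, voltage=1.0, freq_hz=3.0e9)
scaled = dynamic_power(activity=0.2, capacitance=1e-9, voltage=0.8, freq_hz=2.4e9)
print(f"{scaled / base:.3f}")  # 0.512
```

Cutting voltage and frequency by 20% each leaves 80% of the clock speed but only about half of the dynamic power (0.8² × 0.8 = 0.512).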

Q 23. What are the challenges in designing a multi-core processor?

Designing multi-core processors presents several significant challenges:

- Inter-core communication: Efficiently transferring data between cores is crucial. Poorly designed communication mechanisms can create bottlenecks, negating the performance benefits of multiple cores. This often involves specialized interconnect fabrics like ring buses or mesh networks.

- Cache coherence: Ensuring that all cores have a consistent view of data held in their private caches is vital. Inconsistencies can lead to unpredictable behavior and data corruption. Sophisticated cache coherence protocols (e.g., MESI) are essential.

- Power consumption: Adding cores raises total power draw at a given clock speed, so careful power management techniques are necessary to keep power consumption and thermal issues in check.

- Thermal management: The increased power consumption also leads to higher temperatures. Effective cooling solutions are vital to prevent thermal throttling and processor damage. This often involves sophisticated heat sinks and fans.

- Software development challenges: Writing efficient parallel programs that effectively utilize multiple cores requires specialized programming skills and tools. Poorly parallelized code can lead to performance degradation instead of improvement.

- Complexity of design and verification: Designing and verifying the correctness of a multi-core processor is significantly more complex than a single-core processor due to the increased number of components and interactions.

Successfully addressing these challenges requires careful consideration of architectural choices, advanced power management strategies, and robust verification methodologies. The design process often involves extensive simulation and testing to identify and mitigate potential issues.

Q 24. Explain the concept of SIMD (Single Instruction, Multiple Data).

SIMD, or Single Instruction, Multiple Data, is a parallel processing paradigm where a single instruction operates on multiple data points simultaneously. Imagine it like having multiple workers performing the same task on different pieces of material at the same time. This contrasts with SISD (Single Instruction, Single Data), the traditional sequential processing model.

For example, consider adding two vectors: [1, 2, 3] and [4, 5, 6]. A SIMD instruction could perform all three additions (1+4, 2+5, 3+6) concurrently, significantly faster than performing them sequentially. This is particularly useful for tasks involving media processing, image manipulation, scientific computation, and cryptography.

SIMD instructions are implemented through specialized hardware units within the processor, often containing multiple execution units capable of operating in parallel. The effectiveness of SIMD depends on the data being processed being amenable to parallel operations and the availability of appropriate SIMD instructions.
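The vector example can be imitated in plain Python with the SWAR ("SIMD within a register") trick. Four 8-bit lanes are packed into one 32-bit integer and added with a single integer addition, plus a fix-up that stops carries from crossing lane boundaries. This is a software analogy, not a hardware SIMD instruction; units such as SSE or NEON do the same kind of lane-wise work directly in hardware on wider registers.

```python
HIGH = 0x80808080  # top bit of each 8-bit lane
LOW = 0x7F7F7F7F   # low 7 bits of each lane


def pack(lanes):
    # Pack four 8-bit values into one 32-bit word, lane 0 in the lowest byte
    return sum((v & 0xFF) << (8 * i) for i, v in enumerate(lanes))


def unpack(word, n=4):
    return [(word >> (8 * i)) & 0xFF for i in range(n)]


def add_u8x4(a, b):
    # One addition handles all lanes: adding only the low 7 bits means no carry
    # can spill into the neighbouring lane (max 0x7F + 0x7F = 0xFE)
    low = (a & LOW) + (b & LOW)
    # Restore each lane's top bit with XOR; any carry out of a lane is discarded,
    # so every lane wraps modulo 256 independently
    return low ^ ((a ^ b) & HIGH)


# [1, 2, 3] + [4, 5, 6], with the unused fourth lane set to 0
print(unpack(add_u8x4(pack([1, 2, 3, 0]), pack([4, 5, 6, 0]))))  # [5, 7, 9, 0]
```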

Q 25. Describe different instruction set extensions (e.g., MMX, SSE, AVX).

Instruction set extensions enhance a processor’s capabilities by adding new instructions optimized for specific types of operations. These extensions often target multimedia processing, scientific computing, or cryptography.

- MMX (Multimedia Extensions): An early x86 extension that added support for processing multiple integers simultaneously. It focused on applications like video and audio processing.

- SSE (Streaming SIMD Extensions): Extended MMX by adding support for single-precision floating-point operations, enhancing its capabilities for graphics and scientific computing. SSE2 further improved performance by adding double-precision support.

- AVX (Advanced Vector Extensions): A significant advancement that widened SIMD registers from SSE’s 128 bits to 256 bits and added more powerful instructions. AVX-512 extends this to 512-bit registers and many more instructions. AVX is commonly used in high-performance computing applications.

These extensions provide substantial performance improvements for applications that can leverage them effectively. However, they introduce complexity as not all processors support all extensions. Programmers need to carefully consider the target processor and its instruction set capabilities.

ARM also has its own SIMD extensions like NEON, providing similar benefits for ARM-based processors.

Q 26. How does virtualization work at the processor level?

Processor-level virtualization allows multiple virtual machines (VMs) to run concurrently on a single physical processor. This is achieved through a combination of hardware and software mechanisms.

Hardware support: Modern processors include features like:

- Hardware-assisted virtualization extensions (e.g., Intel VT-x, AMD-V): These provide mechanisms for efficient context switching between VMs, protecting the hypervisor (the software that manages the VMs) from malicious code within the VMs, and improving performance.

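- Memory management unit (MMU) enhancements: Second-level address translation (Intel EPT, AMD NPT) lets the MMU translate each guest’s physical addresses into host physical addresses in hardware. Each VM gets its own isolated view of memory without the hypervisor having to intercept every guest page-table update.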

Software support: A hypervisor or virtual machine monitor (VMM) is required. This software manages the resources of the physical processor and distributes them to the VMs. It handles tasks such as scheduling, memory allocation, and I/O operations.

In essence, the processor’s hardware assists in isolating the VMs, creating the illusion that each VM has its own dedicated processor and memory. The hypervisor ensures the smooth operation of these isolated environments.
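With second-level address translation, every guest memory access goes through two mappings. The sketch below models both at page granularity with plain dicts. The table contents are hypothetical, and real hardware walks multi-level tables (with results cached in the TLB) rather than dicts.

```python
PAGE = 4096  # assumed 4 KiB pages

# Hypothetical mappings, page number -> page number
guest_page_table = {0x10: 0x2, 0x11: 0x5}  # owned by the guest OS: guest virtual -> guest physical
second_level = {0x2: 0x80, 0x5: 0x42}      # owned by the hypervisor: guest physical -> host physical


def translate(guest_vaddr: int) -> int:
    page, offset = divmod(guest_vaddr, PAGE)
    guest_phys_page = guest_page_table[page]       # stage 1, controlled by the guest
    host_phys_page = second_level[guest_phys_page]  # stage 2, controlled by the hypervisor
    return host_phys_page * PAGE + offset


print(hex(translate(0x10123)))  # 0x80123
print(hex(translate(0x11ABC)))  # 0x42abc
```

The guest can rewrite its own table freely, but it can only ever reach host memory that the hypervisor's second-level table maps for it.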

Q 27. Explain the role of the MMU (Memory Management Unit).

The Memory Management Unit (MMU) is a crucial component of a computer system that translates virtual addresses used by programs into physical addresses in RAM. This translation serves several vital purposes:

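- Address space protection: Each process gets its own virtual address space. If a program tries to access memory that is not mapped into its space, the MMU raises a fault instead of completing the access, and the operating system typically terminates the offending process. A faulty or malicious program therefore cannot corrupt other processes or the kernel, which is essential for security and multitasking.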

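- Memory sharing: The MMU enables efficient, controlled sharing of memory between processes by mapping the same physical pages into several address spaces, as is done for shared libraries and shared-memory regions.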

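- Virtual memory: The MMU makes virtual memory possible, letting programs use more memory than is physically installed by keeping some pages in swap space on secondary storage (disk or SSD). When a program touches a page that is not resident, the MMU raises a page fault; the operating system then loads the page from swap (evicting another page if necessary) and resumes the program. The MMU detects the missing pages, while the OS performs the actual paging and swapping.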

- Memory protection: The MMU helps enforce access control permissions, allowing different levels of access (read, write, execute) to different memory regions. This further enhances security and stability.

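The MMU uses page tables, maintained by the operating system, to perform the translation. These tables map virtual pages to physical page frames and record each page’s permissions. On x86 and ARM the tables are multi-level, so a translation requires a series of table lookups (a page-table walk) before the physical address is found. To avoid repeating this walk on every access, recent translations are cached in a translation lookaside buffer (TLB).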
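A page-table walk can be sketched in C. This simplified model is loosely based on 32-bit x86’s classic two-level scheme (4 KiB pages, with the virtual address split 10/10/12 bits into directory index, table index, and offset). The toy 16 KiB physical memory, the helper names, and the reduced entry format (only present and writable bits) are assumptions for illustration, and the TLB is omitted.

```c
#include <stdbool.h>
#include <stdint.h>

#define PAGE_SIZE    4096u
#define PTE_PRESENT  0x1u
#define PTE_WRITABLE 0x2u
#define FRAME_MASK   0xFFFFF000u          /* upper 20 bits hold the frame address */
#define PHYS_WORDS   (4u * PAGE_SIZE / 4u) /* toy 16 KiB physical memory, no bounds checks */

static uint32_t phys_mem[PHYS_WORDS];

static uint32_t phys_read32(uint32_t paddr) { return phys_mem[paddr / 4u]; }
static void phys_write32(uint32_t paddr, uint32_t v) { phys_mem[paddr / 4u] = v; }

typedef enum { XLATE_OK, XLATE_NOT_PRESENT, XLATE_PROTECTION } xlate_status;

/* Walk a two-level table whose directory sits at physical address `root`. */
static xlate_status translate(uint32_t root, uint32_t vaddr, bool is_write, uint32_t *paddr) {
    uint32_t dir_idx = vaddr >> 22;              /* top 10 bits */
    uint32_t tbl_idx = (vaddr >> 12) & 0x3FFu;   /* middle 10 bits */
    uint32_t offset  = vaddr & (PAGE_SIZE - 1u); /* low 12 bits */

    uint32_t pde = phys_read32(root + dir_idx * 4u);   /* first lookup */
    if (!(pde & PTE_PRESENT))
        return XLATE_NOT_PRESENT;

    uint32_t pte = phys_read32((pde & FRAME_MASK) + tbl_idx * 4u); /* second lookup */
    if (!(pte & PTE_PRESENT))
        return XLATE_NOT_PRESENT;

    /* Simplification: a write is allowed only if both levels mark the page writable. */
    if (is_write && !(pde & pte & PTE_WRITABLE))
        return XLATE_PROTECTION;

    *paddr = (pte & FRAME_MASK) | offset;
    return XLATE_OK;
}
```

An `XLATE_NOT_PRESENT` or `XLATE_PROTECTION` result corresponds to the fault that the operating system handles, for example by loading the page from swap or terminating the process. A real MMU also checks user/kernel privilege and execute permission.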

Q 28. Describe your experience with processor architecture design tools.

Throughout my career, I have extensively used various processor architecture design tools, including:

- SystemVerilog/VHDL: For hardware description and modeling. I’ve utilized these languages for detailed modeling of processor cores, caches, and memory controllers, creating both RTL and behavioral models for simulation and verification.

- Simulation tools (ModelSim, QuestaSim): For simulating and verifying the designs. I have used these tools to identify and debug design flaws and ensure functional correctness before physical implementation.

- Synthesis tools (Synopsys Design Compiler, Cadence RTL Compiler): To convert the HDL code into a netlist suitable for physical implementation. I have experience optimizing designs for area, performance, and power using these tools.

- Static timing analysis (STA) tools (Synopsys PrimeTime, Cadence Tempus): To verify timing closure, confirming that all timing constraints are met so the processor operates correctly at the target clock frequency.

- Formal verification tools (Cadence Jasper, Synopsys VC Formal): For verifying the correctness of the design against specifications, using techniques like model checking and equivalence checking. This increases confidence in the design’s reliability and robustness.

My experience extends to utilizing these tools in both academic and industrial projects, contributing to the design and verification of various processor architectures, including RISC-V and custom instruction set architectures. I have been involved in the full design flow, from initial architectural design to final physical implementation and verification.

Key Topics to Learn for Processor Architecture (x86, ARM) Interview

- Instruction Set Architectures (ISA): Understand the fundamental differences between x86 (CISC) and ARM (RISC) architectures, including instruction formats and addressing modes, and how these choices affect instruction pipelining. Consider the trade-offs between complexity and performance.

- Pipeline Stages and Hazards: Understand instruction pipelining in depth, including common hazards (data, control, structural) and the techniques used to mitigate them (forwarding, branch prediction, stalling).

- Cache Memory Hierarchy: Master the concepts of caches (L1, L2, L3), cache coherence protocols (e.g., MESI), and their impact on overall system performance. Be prepared to discuss cache replacement algorithms (LRU, FIFO).

- Memory Management Units (MMUs): Explain virtual memory, paging, segmentation, and their roles in memory protection and efficient memory utilization. Understand translation lookaside buffers (TLBs).

- Parallel Processing and Multi-core Architectures: Discuss multi-core processors, thread management, synchronization primitives (mutexes, semaphores), and the challenges of parallel programming.

- Interrupts and Exception Handling: Understand interrupt mechanisms, interrupt handling routines, and exception handling in both x86 and ARM architectures. Be prepared to discuss different types of interrupts and their priorities.

- Practical Application: Analyze performance bottlenecks in code execution related to cache misses, pipeline stalls, or memory access patterns. Discuss optimization techniques to improve performance.

- Advanced Topics (Optional): Explore topics like out-of-order execution, branch prediction algorithms, vector processing (SIMD), and virtualization technologies relevant to either architecture for a competitive edge.
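The effect of memory access patterns on cache misses can be made concrete with a small simulation. This sketch assumes a hypothetical direct-mapped cache of 64 lines of 64 bytes (4 KiB) and a 64 × 64 matrix of 4-byte integers stored row-major starting at address 0, and counts misses for row-major versus column-major traversal. In a direct-mapped cache each memory line can occupy only one slot, so there is no replacement choice and policies such as LRU or FIFO are not modeled.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical toy parameters: 64 lines x 64 bytes = 4 KiB direct-mapped cache. */
#define LINE_SIZE 64u
#define NUM_LINES 64u
#define MATRIX_N  64u   /* 64 x 64 matrix of int32_t, row-major, based at address 0 */

typedef struct {
    uint64_t tag[NUM_LINES];
    int valid[NUM_LINES];
    unsigned long misses;
} dm_cache;

/* Simulate one access: each address maps to exactly one slot (its set). */
static void cache_access(dm_cache *c, uint64_t addr) {
    uint64_t line = addr / LINE_SIZE;   /* memory line containing the byte */
    uint64_t set  = line % NUM_LINES;   /* the only slot that line may occupy */
    uint64_t tag  = line / NUM_LINES;   /* distinguishes lines sharing a slot */
    if (!c->valid[set] || c->tag[set] != tag) {
        c->misses++;                    /* cold or conflict miss: fetch and replace */
        c->valid[set] = 1;
        c->tag[set] = tag;
    }
}

/* Count misses when visiting every matrix element in the given order. */
static unsigned long count_misses(int row_major) {
    dm_cache c;
    memset(&c, 0, sizeof c);
    for (uint64_t outer = 0; outer < MATRIX_N; outer++) {
        for (uint64_t inner = 0; inner < MATRIX_N; inner++) {
            uint64_t row = row_major ? outer : inner;
            uint64_t col = row_major ? inner : outer;
            cache_access(&c, (row * MATRIX_N + col) * sizeof(int32_t));
        }
    }
    return c.misses;
}
```

With these parameters, row-major order incurs 256 misses (one compulsory miss per 64-byte line, then 15 hits), while column-major order misses on all 4096 accesses: the 256-byte stride between rows maps each column onto only 16 slots, so lines are evicted before the next column can reuse them.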

Next Steps

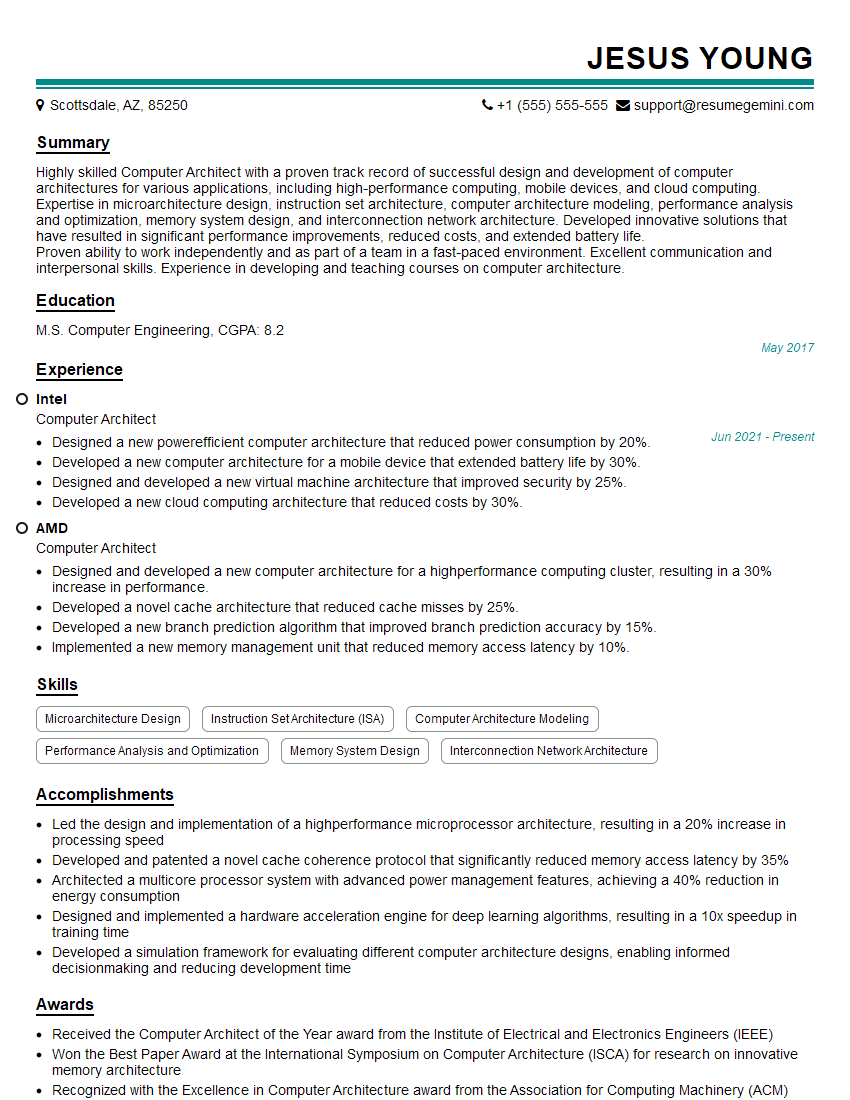

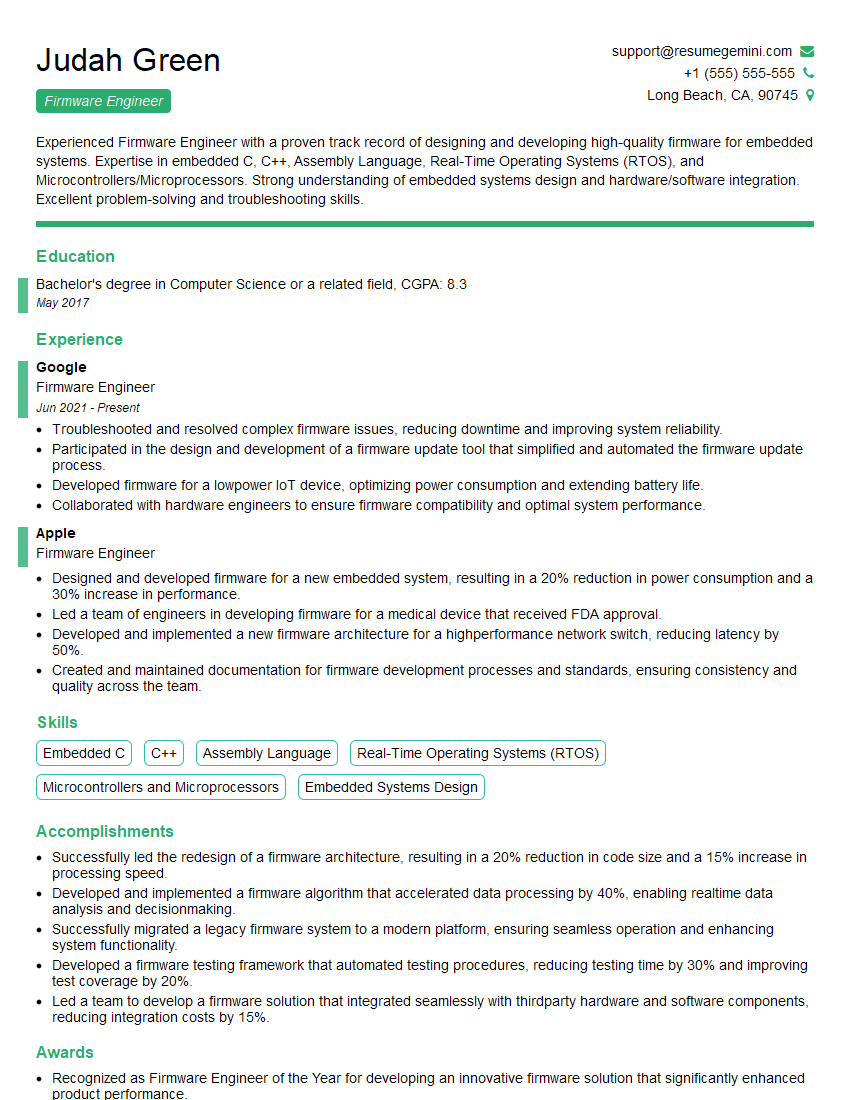

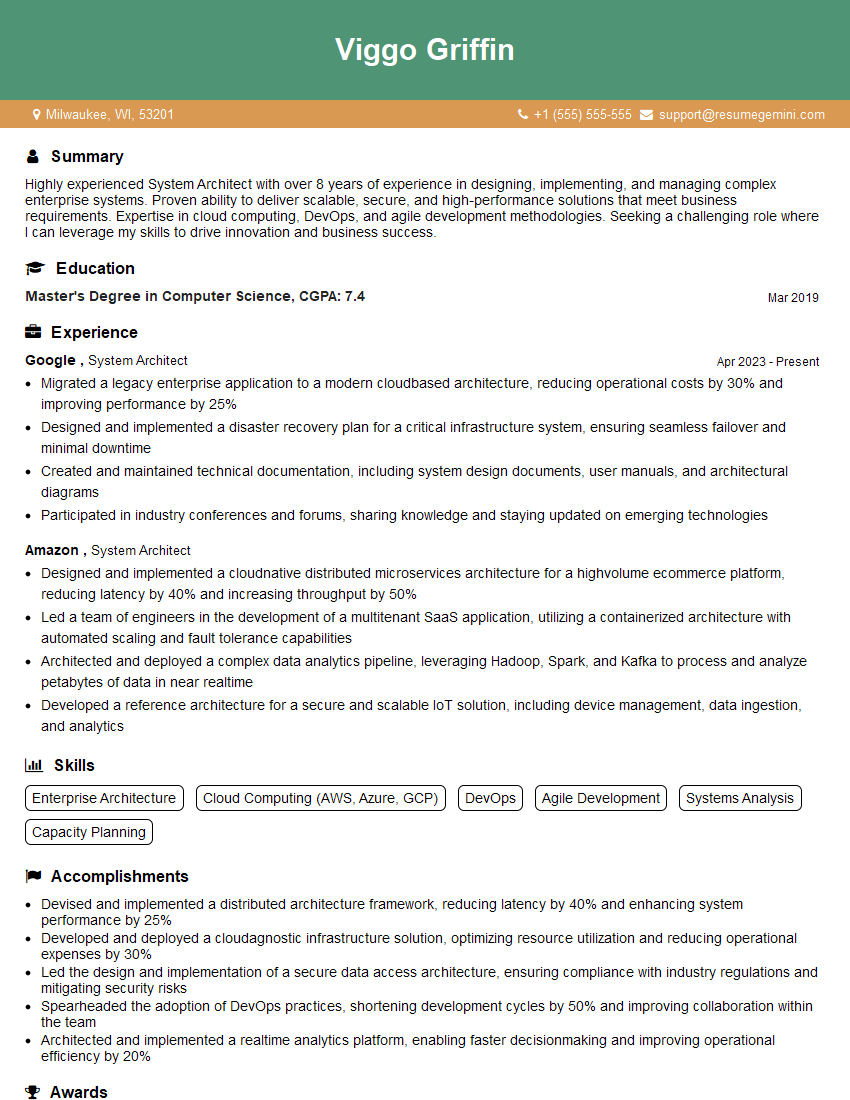

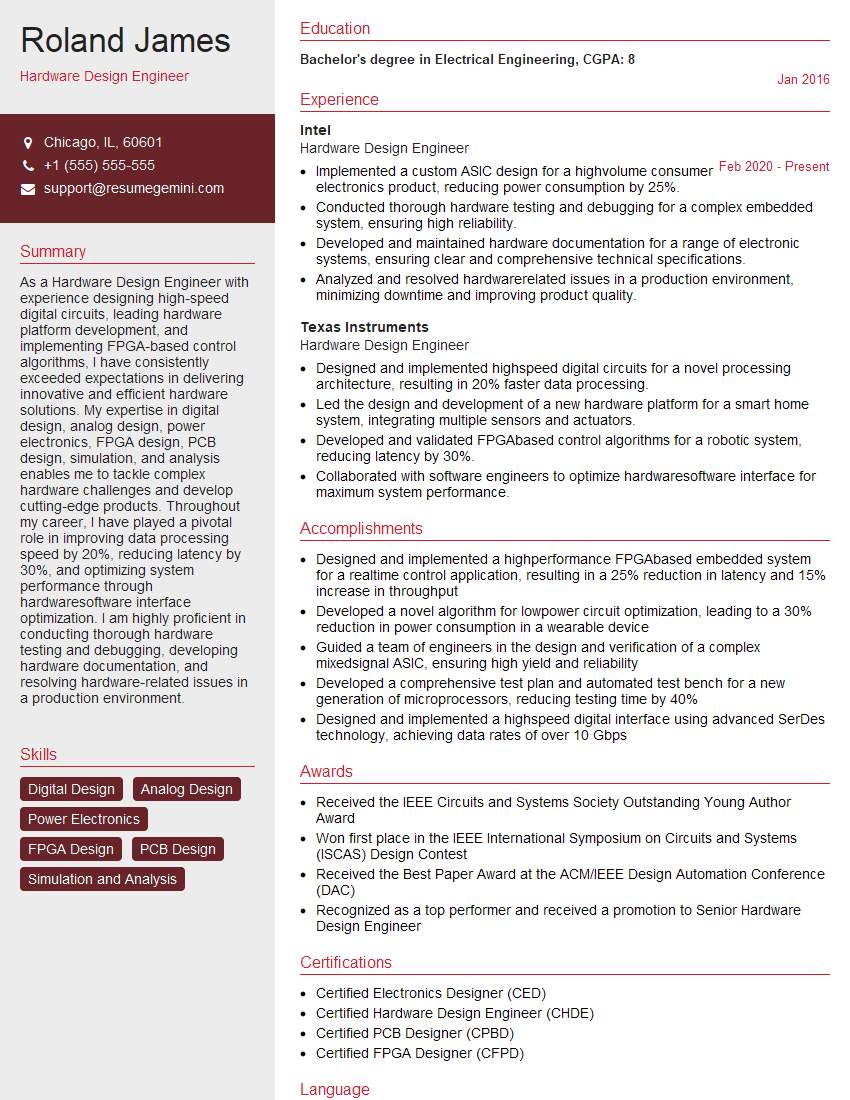

Mastering Processor Architecture (x86 and ARM) is crucial for career advancement in various fields, including embedded systems, high-performance computing, and software engineering. A strong understanding of these architectures demonstrates a deep technical proficiency highly valued by employers. To increase your job prospects, create an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. ResumeGemini offers examples of resumes tailored to Processor Architecture (x86, ARM) roles, providing a template for your own resume creation. Invest in crafting a compelling resume – it’s your first impression with potential employers!
