Cracking a skill-specific interview, like one for Fusion compositing, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in a Fusion Compositing Interview

Q 1. Explain the difference between keyframing and expression-based animation in Fusion.

In Fusion, both keyframing and expressions offer ways to animate parameters, but they differ significantly in their approach. Keyframing is a direct, visual method: you set values at specific points in time, and Fusion interpolates between them. Think of it like plotting a series of points on a graph; Fusion connects the dots to create the animation. Expressions, on the other hand, use code to control parameter values dynamically. In Fusion that code is Lua-based: short Simple Expressions can be typed directly into a parameter, and full Lua scripting is available for more involved logic. This allows for far more complex, procedural animation. It’s like giving Fusion a formula to follow, which makes responsive, data-driven animation possible.

Example: Let’s say you want to animate a layer’s position. With keyframing, you’d manually set the X and Y values of a Transform node’s Center at various frames. With an expression, you might enter something like Point(0.5 + time*0.005, 0.5 + sin(time*0.1)*0.1) on that Center, where time is the current frame number and positions use Fusion’s normalized 0 to 1 coordinates. The layer then drifts horizontally at a constant speed while oscillating vertically in a sine wave. Keyframing is great for simple, hand-tuned animation, while expressions shine when you need precise control, procedural generation, or animation tied to other parameters within the composite.
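
The difference can be sketched in plain Python. This is a minimal illustration, not Fusion code. `interpolate_keyframes` uses linear interpolation for brevity, whereas Fusion’s default spline curves ease in and out. `expression_center` is a hypothetical position "expression": a constant drift in X plus a sine oscillation in Y, in normalized 0 to 1 coordinates. The numbers are arbitrary, and `time` stands in for the current frame.

```python
import math


def interpolate_keyframes(keys, frame):
    """Linearly interpolate between (frame, value) keyframes, holding the end values."""
    keys = sorted(keys)
    if frame <= keys[0][0]:
        return keys[0][1]
    if frame >= keys[-1][0]:
        return keys[-1][1]
    for (f0, v0), (f1, v1) in zip(keys, keys[1:]):
        if f0 <= frame <= f1:
            t = (frame - f0) / (f1 - f0)
            return v0 + t * (v1 - v0)


def expression_center(time):
    """Procedural position: constant drift in x, sine oscillation in y (normalized coords)."""
    x = 0.5 + time * 0.005
    y = 0.5 + math.sin(time * 0.1) * 0.1
    return (x, y)


# Keyframing: the artist supplies values and the curve fills in the frames between.
print(interpolate_keyframes([(0, 0.25), (24, 0.75)], 12))  # 0.5
# Expression: the value is computed from the frame number on every frame.
print(expression_center(0))  # (0.5, 0.5)
```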

Q 2. Describe your experience with node-based compositing workflows.

My experience with node-based compositing workflows in Fusion is extensive. I’m highly proficient in building complex composites using a node-based structure, understanding the flow of data and how each node impacts the final output. I frequently leverage this approach for managing layers, effects, and transitions. The strength of this methodology lies in its modularity; it simplifies complex operations by breaking them into manageable chunks and allowing for easy adjustments and iterative refinement. For example, if I need to tweak a color correction, I only need to modify the Color Corrector node without affecting the rest of the composite.
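
The modularity described here can be illustrated with a toy model. Each node is a function, and one branch of the tree is an ordered list of nodes. This is a conceptual sketch only: `make_gain`, `make_offset`, and `run_chain` are hypothetical helpers, not Fusion APIs.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[float, float, float]
Node = Callable[[Pixel], Pixel]


def make_gain(gain: float) -> Node:
    """A color-correction-style node: multiply every channel by a gain."""
    return lambda p: tuple(c * gain for c in p)


def make_offset(offset: float) -> Node:
    """A node that adds a constant to every channel (a crude lift)."""
    return lambda p: tuple(c + offset for c in p)


def run_chain(nodes: List[Node], pixel: Pixel) -> Pixel:
    """Feed a pixel through each node in order, like a linear branch of a node tree."""
    for node in nodes:
        pixel = node(pixel)
    return pixel


chain = [make_gain(2.0), make_offset(0.1)]
print(run_chain(chain, (0.25, 0.5, 0.125)))

# Tweaking the correction means swapping one node; the rest of the chain is untouched.
chain[0] = make_gain(1.5)
print(run_chain(chain, (0.25, 0.5, 0.125)))
```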

I’m also experienced in organizing nodes into clear and well-documented structures, making complex projects more manageable. Techniques like grouping nodes and using custom node names help improve workflow efficiency and understanding. I believe this systematic organization is crucial for collaborative projects and for maintaining projects over longer periods.

Q 3. How do you manage color space transformations in Fusion?

Color space management is critical for accurate color reproduction and for preventing unexpected color shifts in Fusion. I typically handle it with Fusion’s color management tools. First, I set the correct color space for each input, usually determined from the camera’s metadata or source information. I then work in one consistent, usually scene-linear, color space throughout the composite, for example linear Rec.709 primaries, or ACEScg when working in ACES. Finally, I transform to the output color space. In practice this means placing conversion nodes, such as Gamut or OCIO ColorSpace, wherever footage enters or leaves the working space in the node tree.

For instance, if I’m compositing footage shot with different cameras, I first transform all of it to the common working color space. This prevents mismatched colors and makes blends, blurs, and merges behave consistently across sources. Then, before output, a final transformation to the target color space (such as sRGB for web or a print profile) ensures accurate color at the intended destination. I also make sure my monitor is correctly calibrated and that the viewer applies the right view transform or LUT. That way, what I see matches the intended display rather than the raw working-space values.
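
Here is a concrete example of why the working space matters. The sketch below implements the standard sRGB transfer functions (IEC 61966-2-1). It shows that a 50/50 mix of black and white in linear light differs from a straight average of the encoded values. It covers only the transfer curve, not a change of primaries, which color space conversion nodes also handle; the function names are illustrative.

```python
def srgb_to_linear(v: float) -> float:
    """Decode an sRGB-encoded value (0-1) to linear light using the piecewise sRGB curve."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(v: float) -> float:
    """Encode a linear-light value (0-1) back to sRGB for display or web delivery."""
    if v <= 0.0031308:
        return v * 12.92
    return 1.055 * v ** (1 / 2.4) - 0.055


black, white = 0.0, 1.0
# Mixing the encoded values directly gives 0.5 ...
naive_mix = (black + white) / 2
# ... whereas mixing in linear light and re-encoding gives a noticeably brighter value.
linear_mix = linear_to_srgb((srgb_to_linear(black) + srgb_to_linear(white)) / 2)
print(naive_mix, round(linear_mix, 4))
```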

Q 4. Explain your process for creating realistic smoke or fire effects in Fusion.

Creating realistic smoke or fire effects in Fusion typically involves a multi-stage process. I usually start with a particle simulation, using either Fusion’s built-in particle tools (pEmitter, pTurbulence, pRender, and related nodes) or third-party plugins. These systems allow for complex movement and variation in density and opacity. I then use procedural textures and noise to shape the particles’ appearance, adding details such as glowing embers, wispy smoke trails, or turbulent flames.

After generating the base effect, I refine the look through layering and blending. I might add subtle glows and highlights with Glow nodes and light wraps. Color correction and grading are crucial to a photorealistic result, as are subtle volumetric lighting effects. Finally, I integrate the smoke or fire with the live-action footage, using keying, masking, and Merge nodes so the elements sit convincingly in the plate.

A typical workflow might use a particle system for the base effect, followed by a Color Corrector node to fine-tune the color and a Glow node to add bloom around the hottest areas. Layering several passes usually looks more convincing than a single pass: for example, a dense core, softer outer smoke, and sparse embers.
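
The threshold, blur, and add idea behind a glow can be sketched on a single row of linear pixel values. This is a simplified illustration with hypothetical `threshold`, `radius`, and `gain` parameters. It is not the algorithm used by Fusion’s Glow node, which offers more filter types and controls.

```python
from typing import List


def box_blur(row: List[float], radius: int) -> List[float]:
    """1D box blur; samples past the ends are clamped to the edge pixel."""
    n = len(row)
    out = []
    for i in range(n):
        total = 0.0
        for k in range(-radius, radius + 1):
            j = min(max(i + k, 0), n - 1)
            total += row[j]
        out.append(total / (2 * radius + 1))
    return out


def glow(row: List[float], threshold: float = 0.8, radius: int = 2,
         gain: float = 0.5) -> List[float]:
    """Isolate values above a threshold, blur them, and add them back (additive glow)."""
    highlights = [max(v - threshold, 0.0) for v in row]
    blurred = box_blur(highlights, radius)
    return [v + gain * b for v, b in zip(row, blurred)]


# A single hot pixel (value 2.0) spreads a soft halo into its neighbours.
print(glow([0.0, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0]))
```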

Q 5. How do you troubleshoot common compositing problems such as flickering or artifacts?

Troubleshooting flickering or artifacts in Fusion often involves a systematic approach. First, I check for issues in the source footage itself. Flickering might stem from problems during acquisition (like interlacing issues or sensor noise). Artifacts can be caused by compression problems or damaged files.

If the source footage is clean, I move on to the compositing nodes. Flickering can result from mismatched frame rates or resolutions between layers. Artifacts often arise from incorrect blending modes, poor filter settings, or numerical precision issues, such as processing in 8-bit where float is needed. I examine each node in turn, checking for problems like double-premultiplied or unpremultiplied alpha, incorrect scaling, and aliasing. I also zoom in on the affected areas to pinpoint the source.

Strategies for solving these problems include:

- correcting mismatched frame rates and resolutions
- adjusting blending modes
- using anti-aliasing or better filtering
- reviewing the node structure for calculation errors

Checking each node methodically, rather than guessing, is key to eliminating these issues.
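
One way to make the first check (is the flicker already in the source?) measurable is to track each frame’s mean luminance and flag isolated jumps. The sketch below assumes linear RGB pixels and uses Rec.709 luma weights. A frame counts as a flicker candidate only when it differs from both neighbours in the same direction by more than a hypothetical `tolerance`, so gradual lighting changes are not flagged.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def luminance(rgb: RGB) -> float:
    """Rec.709 luma weights applied to a linear RGB triple."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def frame_mean(pixels: Sequence[RGB]) -> float:
    """Average luminance of one frame."""
    return sum(luminance(p) for p in pixels) / len(pixels)


def flicker_frames(means: Sequence[float], tolerance: float = 0.05) -> List[int]:
    """Indices of frames that jump above, or dip below, BOTH neighbours by more than tolerance."""
    flagged = []
    for i in range(1, len(means) - 1):
        prev_m, cur, next_m = means[i - 1], means[i], means[i + 1]
        if prev_m <= 0 or next_m <= 0:
            continue  # avoid dividing by zero on black frames
        d_prev = (cur - prev_m) / prev_m
        d_next = (cur - next_m) / next_m
        # Same sign on both sides means a spike or a dip, not a gradual ramp.
        if abs(d_prev) > tolerance and abs(d_next) > tolerance and d_prev * d_next > 0:
            flagged.append(i)
    return flagged


print(flicker_frames([0.50, 0.50, 0.60, 0.50, 0.50]))  # [2]
```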

Q 6. What are some techniques for optimizing render times in Fusion?

Optimizing render times in Fusion relies on several strategies. A key one is to work at reduced resolution during the early stages of compositing, for example with Fusion’s proxy mode, and switch to full resolution only for the final render. Halving both dimensions cuts the pixel count to a quarter, which significantly reduces processing time.

Another technique is to pre-render elements wherever possible. If part of the composite is highly complex (e.g., a detailed particle effect), it’s often more efficient to render that element once to disk and read it back in. The main composite then no longer recalculates it on every frame. I also make effective use of Fusion’s caching, since keeping frequently viewed results cached avoids repeating the same calculations.

Render settings matter as well. Several choices can significantly improve render performance:

- processing at a lower bit depth where full float precision isn’t needed
- avoiding unnecessary passes
- disabling branches that don’t contribute to the output

Finally, hardware has a large impact: sufficient RAM and a fast CPU/GPU are essential for quick rendering.
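
Reduced-resolution previews and caching can both be shown in a few lines. `proxy_pixel_count` shows why a half-resolution proxy is roughly four times cheaper. `functools.lru_cache` stands in for a node cache: once a frame has been computed for a given set of settings, asking again returns the stored result. `expensive_particles` is a hypothetical placeholder for a costly node, and Fusion’s own caching differs in detail.

```python
from functools import lru_cache

evaluations = {"count": 0}


def proxy_pixel_count(width: int, height: int, proxy: int) -> int:
    """Pixels processed when previewing at 1/proxy of the resolution in each dimension."""
    return (width // proxy) * (height // proxy)


@lru_cache(maxsize=None)
def expensive_particles(frame: int, quality: int) -> float:
    """Hypothetical stand-in for a costly node; counts how often it really runs."""
    evaluations["count"] += 1
    return frame * 0.01 * quality


full = proxy_pixel_count(3840, 2160, 1)
half = proxy_pixel_count(3840, 2160, 2)
print(full // half)  # 4

expensive_particles(10, 2)
expensive_particles(10, 2)  # served from the cache, no recomputation
print(evaluations["count"])  # 1
```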

Q 7. Describe your experience with rotoscoping and keying techniques.

Rotoscoping and keying are fundamental compositing skills. Rotoscoping is the process of tracing an object’s outline over time. It is essential for isolating moving objects with complex, irregular shapes, especially when keying fails. Rather than redrawing every frame, it usually means animating spline shapes with keyframes, often assisted by tracking data. I’m proficient with Fusion’s Polygon and B-Spline masks for building accurate shapes. I use them to cut out or isolate the subject from the background, which gives me precise control and lets me handle intricate details manually.

Keying, on the other hand, extracts a foreground subject from its background using color or brightness information. I’m experienced with chroma keying (green or blue screen) and luminance keying. I’m also experienced with the refinement steps that follow, such as spill suppression and color correction of the edges. I choose the keying method based on the background and the desired outcome. I then adjust the keyer’s parameters to achieve clean mattes with minimal spill or edge artifacts. Both rotoscoping and keying usually need further refinement for a truly clean, polished result, such as edge blur, erode/dilate, and garbage mattes.
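
The color-difference principle behind many green-screen keyers can be sketched per pixel, together with one common spill-suppression rule. The sketch assumes normalized RGB values and a hypothetical `gain` that sets how hard the matte is pushed. It is not how Fusion’s keyers are implemented; production keyers add screen-color, edge, and clean-plate controls.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def green_screen_alpha(rgb: RGB, gain: float = 2.0) -> float:
    """Color-difference matte: the more green exceeds red and blue, the more transparent."""
    r, g, b = rgb
    return clamp01(1.0 - (g - max(r, b)) * gain)


def despill_green(rgb: RGB) -> RGB:
    """Limit green to the average of red and blue, one common spill-suppression rule."""
    r, g, b = rgb
    return (r, min(g, (r + b) / 2), b)


print(green_screen_alpha((0.1, 0.8, 0.1)))  # screen pixel -> transparent
print(green_screen_alpha((0.8, 0.6, 0.5)))  # skin tone -> opaque
print(despill_green((0.5, 0.7, 0.3)))       # green fringe pulled back toward neutral
```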

Q 8. How do you work with multiple layers and blend modes in Fusion?

Working with multiple layers and blend modes in Fusion is fundamental to compositing. Think of it like layering paint on a canvas, but with far more control. In Fusion, layers are combined with Merge nodes. Each Merge takes a background and a foreground input, and its apply mode (the blend mode) dictates how the two interact.

Fusion offers a vast array of blend modes, each impacting how the pixels of the top layer combine with those of the underlying layer. For instance, ‘Normal’ mode simply places the top layer over the bottom. ‘Multiply’ darkens the underlying layer, often used for shadows or darkening effects. ‘Screen’ brightens it, great for highlights or adding glows. ‘Add’ combines the pixel values directly, creating bright, often overexposed effects.

- Normal: Standard layering, no blending.

- Multiply: Darkens underlying layer.

- Screen: Brightens underlying layer.

- Overlay: Blends both, depending on base layer brightness.

- Difference: Shows the difference between layers.
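
The per-channel arithmetic behind these modes can be sketched with normalized values, where `top` is the foreground and `base` the background. The sketch deliberately ignores alpha and premultiplication, which a Merge node also has to handle. Fusion’s exact formulas may also differ in details such as clipping, so treat this as an illustration of the math, not Fusion’s implementation.

```python
def blend(mode: str, top: float, base: float) -> float:
    """Per-channel blend of normalized values; top = foreground, base = background."""
    if mode == "normal":
        return top  # top simply replaces base (alpha ignored here)
    if mode == "multiply":
        return top * base  # never brighter than either input
    if mode == "screen":
        return 1 - (1 - top) * (1 - base)  # never darker than either input
    if mode == "add":
        return top + base  # can exceed 1.0, hence the "overexposed" look
    if mode == "overlay":
        # Overlay switches behaviour on the base layer's brightness.
        if base < 0.5:
            return 2 * top * base
        return 1 - 2 * (1 - top) * (1 - base)
    if mode == "difference":
        return abs(top - base)
    raise ValueError(f"unknown mode: {mode}")


for mode in ["normal", "multiply", "screen", "add", "overlay", "difference"]:
    print(mode, blend(mode, 0.5, 0.25))
```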

Managing multiple layers efficiently involves organizing elements, naming nodes clearly, and using Fusion’s node-based structure for non-destructive editing. For example, I often use groups to isolate complex elements or effects, so I can adjust them without affecting the rest of the composition. A complex shot might have separate branches for background plates, foreground elements, visual effects, and color correction. Each branch is neatly organized in its own group or underlay within the node tree, and I often color-code them for further clarity.

Q 9. Explain your understanding of color correction and grading in Fusion.

Color correction and grading in Fusion are crucial for achieving the desired look and feel of a shot. Color correction fixes inconsistencies and inaccuracies in footage, while grading is a more stylistic process that enhances mood and atmosphere. In Fusion, both are mainly done with nodes such as Color Corrector, Color Curves, and Color Gain, with a Luma Keyer used to isolate parts of the image for targeted adjustments.

The Color Corrector offers precise adjustments for individual color channels (red, green, blue), letting me correct color casts or balance exposure. For example, I might use it to remove the orange cast from a scene shot under tungsten lighting. For more stylistic work, Color Curves and Color Gain (lift, gamma, gain) let me shape contrast and tonal balance. For instance, I might use Color Curves to warm the midtones slightly for a more cinematic look. Luma keying, on the other hand, isolates specific luminance ranges, which is essential for creating clean mattes or adjusting specific areas of an image.

I often chain these nodes to build up a grade. For instance, I might start with a Color Corrector to fix color imbalances, then use Color Curves to apply a stylistic look, perhaps boosting contrast. I finish with a further correction limited by a Luma Keyer matte, so only specific tonal ranges of the image are tweaked.
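
The chain described here can be sketched per pixel: a corrective balance followed by an adjustment limited to a luminance range. The white-balance step assumes you have sampled something that should be neutral, such as a grey card. The soft luma matte uses Rec.709 weights. The `low`/`high` thresholds and `gain` are hypothetical values, not Fusion defaults.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def white_balance(rgb: RGB, neutral: RGB) -> RGB:
    """Scale channels so a sampled neutral (e.g. a grey card) ends up equal in R, G, B."""
    target = sum(neutral) / 3
    return tuple(c * target / n for c, n in zip(rgb, neutral))


def luma(rgb: RGB) -> float:
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def luma_matte(rgb: RGB, low: float, high: float) -> float:
    """Soft luma key: 0 below low, 1 above high, linear ramp in between."""
    return max(0.0, min(1.0, (luma(rgb) - low) / (high - low)))


def grade_highlights(rgb: RGB, gain: float, low: float = 0.6, high: float = 0.8) -> RGB:
    """Apply a gain only where the luma matte is on, mixing by the matte value."""
    m = luma_matte(rgb, low, high)
    return tuple(c * (1 - m) + c * gain * m for c in rgb)


balanced = white_balance((0.6, 0.5, 0.4), neutral=(0.6, 0.5, 0.4))  # warm cast removed
print(balanced)
print(grade_highlights((0.1, 0.1, 0.1), 1.2))  # shadows untouched
print(grade_highlights((1.0, 1.0, 1.0), 1.2))  # highlights boosted
```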

Q 10. How familiar are you with Fusion’s particle systems?

I’m very familiar with Fusion’s particle systems. They’re incredibly versatile tools for creating realistic or stylized effects, ranging from simple sparks and dust to complex simulations of smoke, fire, or even crowds. Fusion’s particle system is based on a node-based workflow, allowing for non-destructive manipulation of particle properties.

The pEmitter node defines the particles’ basic properties: size, speed, color, lifespan, and so on. The real power, however, lies in using other particle nodes to shape their behavior. For example, a pDirectionalForce node could simulate wind pushing a cloud of smoke, while pTurbulence adds random variation to particle movement for a more natural look. The emitter’s style settings (such as points, blobs, N-gons, or bitmaps) determine how the particles look when pRender renders them.

For instance, in a recent project involving a magical explosion, I used a Particle System combined with a variety of force fields and particle shape modifiers to achieve a visually stunning effect. Each aspect of the effect, such as the initial burst, the lingering smoke, and the glowing embers, was controlled by different nodes, allowing for fine-grained adjustments and iterative refinements.
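
The particle concepts described above map onto a very small simulation loop: an emitter, a directional force such as wind, turbulence, and a lifespan. The sketch below is a toy model with made-up units and parameter values. It is not how Fusion’s particle nodes work internally.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Particle:
    x: float
    y: float
    vx: float
    vy: float
    age: int = 0


def emit(count: int, rng: random.Random) -> List[Particle]:
    """Emitter: spawn particles at the origin with a small random upward velocity."""
    return [
        Particle(0.0, 0.0, rng.uniform(-0.01, 0.01), rng.uniform(0.02, 0.04))
        for _ in range(count)
    ]


def step(particles: List[Particle], wind=(0.005, 0.0), turbulence=0.002,
         lifespan: int = 48, rng: Optional[random.Random] = None) -> List[Particle]:
    """Advance one frame: add wind and random jitter to velocity, move, age, and cull."""
    rng = rng or random.Random(0)
    alive = []
    for p in particles:
        p.vx += wind[0] + rng.uniform(-turbulence, turbulence)  # directional force + turbulence
        p.vy += wind[1] + rng.uniform(-turbulence, turbulence)
        p.x += p.vx
        p.y += p.vy
        p.age += 1
        if p.age < lifespan:  # particles die when they reach their lifespan
            alive.append(p)
    return alive


rng = random.Random(42)
particles = emit(100, rng)
for _ in range(10):
    particles = step(particles, rng=rng)
mean_x = sum(p.x for p in particles) / len(particles)
print(len(particles), round(mean_x, 3))  # the wind pushes the cloud toward +x
```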

Q 11. Describe your experience using Fusion’s tracker.

Fusion’s tracking tools are essential for integrating CGI elements seamlessly into live-action footage. Each method suits a different challenge: point tracking follows individual features, planar tracking follows flat surfaces, and 3D camera tracking reconstructs the full camera move. Accuracy is paramount, and I use a variety of techniques to get the best results.

I typically start by carefully selecting tracking points, since the choice of points greatly affects tracking quality. I look for distinct, high-contrast features that stay visible and recognizable throughout the shot. When the target is a flat surface, such as a screen, wall, or sign, I use the planar tracker, which excels at following moving planes. I reserve 3D camera tracking for more complex sequences, where it allows precise integration of 3D models into the scene with a believable sense of depth.

I always verify the tracker’s accuracy before using the results in my compositing. Sometimes manual adjustments are necessary to fine-tune the results. Additionally, the solver settings can often impact precision and performance. I usually begin with a simpler solver and only utilize more complex ones when necessary. Knowing the limitations of each solver is vital.
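
At its core, a point tracker searches a small window around the feature’s last known position for the offset where the stored pattern matches best. The sketch below uses sum of squared differences on a grayscale image stored as nested lists, with a hypothetical `search` radius. It is a simplified illustration. Production trackers add subpixel refinement, normalized correlation, and pattern updating.

```python
from typing import List, Tuple

Image = List[List[float]]


def ssd(image: Image, pattern: Image, ox: int, oy: int) -> float:
    """Sum of squared differences between the pattern and the image region at (ox, oy)."""
    total = 0.0
    for y, row in enumerate(pattern):
        for x, v in enumerate(row):
            d = image[oy + y][ox + x] - v
            total += d * d
    return total


def track(image: Image, pattern: Image, guess: Tuple[int, int],
          search: int = 3) -> Tuple[int, int]:
    """Return the top-left offset near `guess` where the pattern matches best."""
    ph, pw = len(pattern), len(pattern[0])
    ih, iw = len(image), len(image[0])
    gx, gy = guess
    best = None
    for oy in range(max(0, gy - search), min(ih - ph, gy + search) + 1):
        for ox in range(max(0, gx - search), min(iw - pw, gx + search) + 1):
            score = ssd(image, pattern, ox, oy)
            if best is None or score < best[0]:
                best = (score, ox, oy)
    return best[1], best[2]


# A bright 2x2 feature that has moved to (5, 4); the previous position was (3, 3).
image = [[0.0] * 10 for _ in range(10)]
for y in (4, 5):
    for x in (5, 6):
        image[y][x] = 1.0
pattern = [[1.0, 1.0], [1.0, 1.0]]
print(track(image, pattern, guess=(3, 3)))  # (5, 4)
```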

Q 12. How do you manage large and complex Fusion projects?

Managing large and complex Fusion projects requires a structured approach. The key is organization, clear naming conventions, and utilizing Fusion’s features effectively. I start by breaking down the project into smaller, manageable components. This involves creating distinct compositions for different elements or shots, which can later be combined.

I use a hierarchical node structure, grouping related operations together for clarity. I consistently give all nodes meaningful names, making it easier for me (and collaborators) to understand the purpose of each part. Version control is also essential, allowing me to track changes and revert to previous versions if needed. I prefer to work non-destructively, keeping corrections in separate nodes and instancing or linking parameters where appropriate. This lets me modify the project quickly and easily.

Furthermore, I leverage Fusion’s scripting capabilities when appropriate to automate repetitive tasks or create custom tools. This boosts productivity and ensures consistency throughout a large project. For example, I might use a script to generate a batch of nodes with specific settings for a set of similar shots.
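
A script of that kind usually starts from a shared template plus per-shot overrides. The sketch below shows only that data-preparation step, in plain Python. The node names, parameters, and shot codes are hypothetical. Applying the result inside a comp would go through Fusion’s scripting API (Lua or Python), which is not shown here.

```python
import copy
from typing import Dict, List

Settings = Dict[str, Dict[str, float]]

# Hypothetical template shared by a run of similar shots.
TEMPLATE: Settings = {
    "CC_Main": {"gain": 1.0, "saturation": 1.0},
    "Blur_Defocus": {"size": 2.0},
}


def build_shot_settings(shots: List[str], overrides: Dict[str, Settings]) -> Dict[str, Settings]:
    """Copy the template for each shot, then apply that shot's overrides (if any)."""
    result = {}
    for shot in shots:
        settings = copy.deepcopy(TEMPLATE)  # deep copy so shots never share nested dicts
        for node, params in overrides.get(shot, {}).items():
            settings.setdefault(node, {}).update(params)
        result[shot] = settings
    return result


batch = build_shot_settings(
    ["sh010", "sh020", "sh030"],
    {"sh020": {"Blur_Defocus": {"size": 4.0}}},
)
for shot, settings in batch.items():
    print(shot, settings["Blur_Defocus"]["size"])
```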

Q 13. Explain your experience with Fusion’s 3D workspace.

Fusion’s 3D workspace is a powerful tool for integrating 3D models and elements into composites. It supports 3D object manipulation, lighting, and shadows. It also works with tracked camera data, so the virtual camera in the 3D scene can match the camera used for the footage. It exchanges data with other 3D packages through file formats such as FBX, Alembic, and OBJ.

My workflow typically involves importing a 3D model, aligning it to the tracked camera, and then using lights and materials to integrate the model realistically into the scene. The Renderer 3D node offers a choice of renderers (a software renderer and an OpenGL renderer). Support for several 3D file formats adds further flexibility, and the interactive 3D viewer gives immediate feedback as I adjust the scene against the live footage.

For instance, I used the 3D workspace to integrate a CGI spaceship into a live-action space battle scene. After accurately tracking the camera movements, I imported the 3D model of the spaceship, adjusted its position and orientation based on the camera track, and used lighting effects to match the overall look of the scene. The 3D workspace is crucial for tasks that require a high level of depth and realism.
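
Matching a CG element to a tracked camera ultimately relies on the pinhole projection model. The sketch below projects a camera-space point to pixel coordinates. It assumes a camera looking down +Z with Y up, square pixels, no lens distortion, and a horizontal film back given in millimetres. Fusion’s Camera 3D node has its own conventions and parameters, so this illustrates the math only.

```python
from typing import Tuple


def project(point: Tuple[float, float, float], focal_length_mm: float,
            sensor_width_mm: float, image_width: int, image_height: int) -> Tuple[float, float]:
    """Project a camera-space point (x right, y up, z forward) to pixel coordinates."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point is behind the camera")
    # Focal length expressed in pixels, from the ratio of focal length to film back width.
    focal_px = focal_length_mm / sensor_width_mm * image_width
    u = image_width / 2 + focal_px * x / z
    v = image_height / 2 - focal_px * y / z  # image rows increase downward
    return u, v


print(project((0.0, 0.0, 10.0), 35.0, 36.0, 1920, 1080))  # (960.0, 540.0)
print(project((1.0, 0.5, 10.0), 35.0, 36.0, 1920, 1080))
```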

Q 14. What are your preferred methods for creating matte paintings in Fusion?

Creating matte paintings in Fusion involves a combination of techniques, focusing on seamlessly integrating hand-painted elements with live-action footage or CGI backgrounds. My preferred methods involve a multi-layered approach, beginning with establishing a base plate and carefully matching the perspective and lighting.

I start by creating a rough sketch based on reference images and then progressively refine the matte painting with various brushes and filters. I pay close attention to perspective, color matching, and light interaction to ensure a realistic integration with the surrounding elements. Fusion’s rotoscoping and masking tools give precise control over where the painting sits in the scene. I then use mask inputs and Merge apply modes to blend it smoothly with the rest of the image.

For example, I’ve used this process to extend the environment of a shot, creating realistic extensions of buildings, landscapes, or skies. The key is to gradually build up layers of detail while meticulously matching the color temperature, lighting, and perspective of the existing scene. Often I use multiple reference images taken from various angles, lighting conditions, and times of day to maintain realism and depth.
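
A quick, objective starting point for matching color temperature is to compare the average color of a region that should look the same in both the painting and the plate, and derive per-channel gains from it. This is a crude first pass. It assumes the two reference regions show similar material under the same light. The helper names are hypothetical, and final matching is still done by eye.

```python
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def channel_means(pixels: Sequence[RGB]) -> RGB:
    """Average R, G, B over a reference region."""
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))


def match_gains(painting_ref: Sequence[RGB], plate_ref: Sequence[RGB]) -> RGB:
    """Per-channel gains mapping the painting's reference average onto the plate's."""
    src = channel_means(painting_ref)
    dst = channel_means(plate_ref)
    return tuple(d / s if s > 0 else 1.0 for s, d in zip(src, dst))


def apply_gains(pixel: RGB, gains: RGB) -> RGB:
    return tuple(c * g for c, g in zip(pixel, gains))


# A neutral grey painting region versus a warmer plate region.
gains = match_gains([(0.5, 0.5, 0.5), (0.3, 0.3, 0.3)], [(0.5, 0.4, 0.3)])
print(gains)
print(apply_gains((0.4, 0.4, 0.4), gains))
```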

Q 15. How do you collaborate effectively with other members of a VFX team?

Effective collaboration in VFX is paramount. In Fusion compositing, this involves clear communication, organized file management, and a proactive approach. I start by ensuring everyone understands the overall shot breakdown and individual responsibilities. We use version control systems like Git to track changes and avoid conflicts. Regular check-ins, utilizing tools like Slack or project management software, keep everyone informed of progress and identify potential roadblocks early. For example, if I’m working on a complex element integration, I’ll share pre-comps and WIPs frequently to receive feedback and ensure the composite aligns with the director’s vision and the needs of other artists like 3D modellers or animators. This collaborative spirit prevents costly revisions later in the pipeline. Finally, I believe in fostering a supportive environment where everyone feels comfortable sharing ideas and raising concerns.


Q 16. What are some common challenges you encounter in Fusion compositing, and how do you address them?

Common challenges in Fusion compositing include managing complex node trees, dealing with differing image resolutions and color spaces, and troubleshooting performance bottlenecks. To manage complex node trees, I rely on a modular approach, grouping similar operations into sub-comps. This improves organization and reduces errors. When dealing with resolution and color space issues, I meticulously check the metadata of each image file and utilize Fusion’s color management tools to achieve consistency. For performance issues, I optimize my node tree by using efficient nodes, pre-rendering elements where possible, and utilizing Fusion’s cache system. For instance, a recent project involved compositing a highly detailed cityscape. To avoid slowdowns, I pre-rendered sections of the city as individual plates, reducing the computational load on the main composite. Proactive problem-solving through meticulous organization and preemptive optimization is crucial.

Q 17. Explain your understanding of different image file formats and their implications for compositing.

Understanding image file formats is essential for successful compositing. Formats differ in compression, bit depth, and alpha channel support.

- .exr (OpenEXR) stores 16-bit half or 32-bit float data, so it can hold high-dynamic-range values above 1.0. It also supports lossless compression and multiple channels (such as render passes) in one file, which makes it crucial for preserving detail in complex composites.
- .png offers lossless compression and alpha channel support, making it suitable for matte paintings and elements that need crisp edges.
- .jpeg uses lossy compression and has no alpha channel, so it only suits less critical elements where file size is the primary concern.

The format you choose affects both image quality and file size, and therefore rendering times and storage space. Ignoring these factors can lead to compression artifacts, color banding, clipped highlights, or longer render times. In my workflow, I prioritize .exr for intermediate steps and select the final format based on the project’s delivery requirements.
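
The practical difference between an 8-bit format and a float format like EXR can be shown with a simple quantization sketch. It assumes values are stored linearly as 8-bit codes, which real 8-bit files usually are not, since they are typically gamma-encoded. It therefore illustrates the two failure modes, clipping and banding, rather than any specific file format.

```python
def to_8bit(value: float) -> int:
    """Quantize a normalized value to an 8-bit code (0-255), clipping out-of-range values."""
    return max(0, min(255, round(value * 255)))


def from_8bit(code: int) -> float:
    """Convert an 8-bit code back to a normalized value."""
    return code / 255


def roundtrip_8bit(value: float) -> float:
    return from_8bit(to_8bit(value))


# A bright HDR highlight is clipped to 1.0; a float format would keep 4.0.
print(roundtrip_8bit(4.0))  # 1.0
# Two slightly different values collapse to the same code, the root of banding.
print(to_8bit(0.5), to_8bit(0.501))  # 128 128
```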

Q 18. How do you use Fusion’s built-in tools for creating and managing masks?

Fusion provides a comprehensive suite of masking tools, and I use them frequently. The Rectangle, Ellipse, Polygon, and B-Spline masks cover everything from simple shapes to precise, Bezier-controlled outlines. For moving subjects, I rotoscope by animating Polygon or B-Spline masks over time, often driven by tracker data to reduce keyframing. For more complex selections, I use keyer nodes, which derive a matte from color or brightness. The Luma Keyer isolates areas by luminance, while the Chroma Keyer (or the Delta Keyer and Ultra Keyer for green screen work) removes a specific color. For example, isolating a character in front of a green screen calls for a chroma-based keyer. I then refine the resulting mattes by softening edges, with a mask’s Soft Edge control or a blur, and by using the Erode/Dilate node to shrink or grow the matte. Fusion’s strength lies in combining these tools effectively, from simple shots to extremely complex compositions.
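
Erode, dilate, and feathering are simple neighbourhood operations, shown below on a one-dimensional matte for readability. This is a conceptual sketch with a hypothetical `radius` parameter. Fusion’s own erode/dilate and soft-edge controls work in 2D and offer more filter shapes.

```python
from typing import List


def dilate(mask: List[float], radius: int) -> List[float]:
    """Grow a matte: each sample takes the maximum of its neighbourhood."""
    n = len(mask)
    return [max(mask[max(0, i - radius):min(n, i + radius + 1)]) for i in range(n)]


def erode(mask: List[float], radius: int) -> List[float]:
    """Shrink a matte: each sample takes the minimum of its neighbourhood."""
    n = len(mask)
    return [min(mask[max(0, i - radius):min(n, i + radius + 1)]) for i in range(n)]


def feather(mask: List[float], radius: int) -> List[float]:
    """Soften edges with a box blur (windows are truncated at the ends)."""
    n = len(mask)
    out = []
    for i in range(n):
        window = mask[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


matte = [0, 0, 1, 1, 1, 0, 0]
print(dilate(matte, 1))  # [0, 1, 1, 1, 1, 1, 0]
print(erode(matte, 1))   # [0, 0, 0, 1, 0, 0, 0]
print(feather(matte, 1))
```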

Q 19. Describe your experience with using external plugins or scripts in Fusion.

I have extensive experience using external plugins and scripts in Fusion. These extend Fusion’s capabilities significantly, often offering specialized tools not readily available within the core software. For example, I’ve used particle effect plugins to enhance scenes with realistic effects like dust and debris, and I’ve used scripts for automating repetitive tasks, such as batch-processing multiple images or generating complex procedural textures. This familiarity extends to understanding how to troubleshoot issues that may arise from using third-party tools, involving debugging scripts and resolving compatibility problems. Knowing how to properly install, configure and use these resources is an important part of boosting my workflow, enabling faster turnaround times and access to more advanced compositing techniques.

Q 20. How familiar are you with different compositing techniques like screen-space reflections or depth of field?

I am very familiar with advanced techniques like screen-space reflections (SSR) and depth of field (DOF). SSR simulates reflections using only the information already rendered on screen: color, depth, and surface normals. This makes it fast, but objects outside the frame cannot appear in the reflections. In compositing it is usually achieved with specialized nodes or external plugins, and it can dramatically enhance realism. DOF simulates the natural blurring of out-of-focus objects, adding depth and realism to a shot. It’s often implemented by driving a depth-based blur, such as Fusion’s Depth Blur node, with a Z-depth pass from the 3D render, or through image-based techniques. In practice, I’ve used these techniques on numerous projects. For example, on a recent automotive commercial, I used SSR to create realistic reflections of the car’s environment on its chrome surfaces. For a fantasy film, I used DOF to support the visual storytelling, drawing the viewer’s attention to specific elements and creating a cinematic feel.
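
For depth of field driven by a depth pass, the standard thin-lens circle-of-confusion formula estimates how much blur each depth needs. The result can then be converted to pixels for the plate. The sketch below assumes distances and focal length in millimetres, a known sensor width, and square pixels. A particular defocus node may map depth to blur size differently.

```python
def coc_mm(subject_dist_mm: float, focus_dist_mm: float,
           focal_mm: float, f_number: float) -> float:
    """Thin-lens circle-of-confusion diameter on the sensor:
    |S2 - S1| / S2 * f^2 / (N * (S1 - f)), with S1 = focus distance, S2 = subject distance."""
    return (abs(subject_dist_mm - focus_dist_mm) / subject_dist_mm
            * focal_mm ** 2 / (f_number * (focus_dist_mm - focal_mm)))


def coc_pixels(coc: float, sensor_width_mm: float, image_width_px: int) -> float:
    """Convert a CoC diameter from millimetres on the sensor to pixels in the plate."""
    return coc / sensor_width_mm * image_width_px


# 50 mm lens at f/2.8 focused at 3 m; an object at 6 m on a 36 mm-wide sensor, 1920 px plate.
blur_px = coc_pixels(coc_mm(6000, 3000, 50, 2.8), 36.0, 1920)
print(round(blur_px, 2))  # 8.07
```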

Q 21. Explain your understanding of the importance of image resolution and aspect ratio in compositing.

Image resolution and aspect ratio are critical in compositing. Higher resolution provides more detail and allows more flexible scaling and cropping without significant loss of quality. Low-resolution elements, by contrast, may show pixelation and artifacts, especially when enlarged. Aspect ratio is the proportional relationship between an image’s width and height. If elements with different aspect ratios (or pixel aspect ratios) are combined carelessly, they end up stretched or misaligned. For example, combining a 4K plate with a 2K element without proper scaling will lead to a noticeable resolution mismatch. Maintaining consistency in both resolution and aspect ratio is essential for a clean, professional-looking composite. My workflow always starts by establishing a common resolution and aspect ratio for all elements, which prevents later issues. I do any scaling uniformly, with high-quality resampling filters, to minimize artifacts.
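
Uniform scaling keeps an element’s aspect ratio intact when conforming it to the working resolution. There are two common choices: fit the whole image inside the frame, or fill the frame and crop the overflow. They come down to taking the smaller or the larger of the two axis ratios. This is a minimal sketch assuming square pixels; the function names are illustrative.

```python
from typing import Tuple


def fit_scale(src_w: int, src_h: int, dst_w: int, dst_h: int) -> float:
    """Uniform scale that fits the whole source inside the target (letterbox or pillarbox)."""
    return min(dst_w / src_w, dst_h / src_h)


def fill_scale(src_w: int, src_h: int, dst_w: int, dst_h: int) -> float:
    """Uniform scale that covers the whole target (the overflow gets cropped)."""
    return max(dst_w / src_w, dst_h / src_h)


def scaled_size(src_w: int, src_h: int, scale: float) -> Tuple[int, int]:
    """Resulting size after a uniform scale, rounded to whole pixels."""
    return round(src_w * scale), round(src_h * scale)


# A 2048x1080 (DCI 2K) element placed in a 3840x2160 (UHD) comp:
fit = fit_scale(2048, 1080, 3840, 2160)
fill = fill_scale(2048, 1080, 3840, 2160)
print(fit, scaled_size(2048, 1080, fit))    # 1.875 (3840, 2025)
print(fill, scaled_size(2048, 1080, fill))  # 2.0 (4096, 2160)
```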

Q 22. Describe your workflow for creating a realistic digital double in Fusion.

Creating a realistic digital double in Fusion is a multi-stage process that depends on meticulous planning and precise execution. It starts with acquiring high-quality plate footage of the actor or subject, ideally shot with consistent lighting and camera movement. I then rotoscope the subject across the shot with Fusion’s spline masks, creating a matte that isolates them from the background. Next, the subject is modeled in 3D, either in external 3D software or, if the detail is less crucial, with Fusion’s own 3D tools. The model is textured using high-resolution images or scans to achieve photorealism. The crucial step is precise tracking: matching the camera perspective in Fusion so the 3D model overlays the rotoscoped area exactly. Subsurface scattering is typically handled in the 3D package’s renderer, since Fusion’s built-in materials don’t provide it. Displacement can be applied either there or in Fusion’s 3D environment, and the rendered passes are then integrated in Fusion. Careful blending, using color correction and light matching, produces a nearly invisible transition between the digital double and the original footage.

For instance, in a recent project involving a digital double replacing an actor in a fast-paced action scene, the use of sophisticated tracking and the meticulously created mask was key to ensuring the smoothness and realism of the effect. Without that careful preparation, any flaws in the 3D model or placement would be glaringly obvious.

Q 23. How familiar are you with using Fusion’s built-in tools for creating and manipulating text?

I’m very familiar with Fusion’s text tools, which go well beyond simple text creation. I often use the Text+ node to add titles, annotations, and more complex graphic elements; it provides controls for font selection, kerning, leading, and more. Beyond basic text, I frequently treat the text as a shape, combining it with masks and effects for more stylized results. For example, I might use the Text 3D node to create extruded, three-dimensional lettering, or apply distortion effects for a dynamic look. Keyframing in Fusion allows smooth text animation, which is critical for title sequences and visual cues.

In one project, I had to create a dynamic animated title sequence with text appearing from different directions, morphing, and ultimately dissolving. I used Fusion’s text tools, combined with keyframing, and various expressions to control these animations. The flexibility provided by Fusion allowed me to craft a title sequence that perfectly matched the tone and style of the film, exceeding what more limited text-editing software could offer.

Q 24. What are your preferred techniques for creating and maintaining a clean compositing pipeline?

Maintaining a clean compositing pipeline is paramount. My strategies focus on a highly organized node structure, using consistent naming conventions for layers and nodes to make everything easily identifiable. This means using a hierarchical structure in the node tree, grouping related nodes and using comments liberally to improve readability. I also rely heavily on the use of masks and pre-comps to break down complex shots into manageable pieces. Pre-composing allows me to work on specific aspects of a shot in isolation before integrating them into the main composition. This compartmentalization prevents conflicts and makes troubleshooting and revisions much simpler. Using color-coded nodes helps distinguish different elements and their roles. For example, using a specific color for background plates, another for foreground elements, and yet another for VFX elements helps greatly in maintaining visual clarity in a complex node tree.

Imagine a complex scene with multiple layers – background, foreground, VFX elements, and lighting passes. A messy node tree can quickly become overwhelming. By grouping the nodes for each element, using descriptive names, and adding comments, I’ve simplified the process, even for the most complex shots, making teamwork easier and reducing errors.

Q 25. How do you manage version control in your Fusion projects?

Version control is crucial. For Fusion projects, I mainly use an external version control system such as Git. Fusion has no native Git integration, so I keep my compositions as files in a project folder tracked by Git. I commit regularly with detailed descriptions of each change, which lets me revert to previous versions when needed. For extremely large projects, I combine Git for overall project management with incremental saves in Fusion, saving frequently under versioned, descriptive file names. Together, this preserves the complete history of my work and guards against catastrophic data loss.

This process is particularly crucial for collaborative projects, allowing multiple artists to work concurrently while maintaining a clear version history and minimizing potential merge conflicts.
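
Saving frequently under versioned, descriptive file names is easy to automate. The sketch below bumps a trailing `_vNNN` suffix while preserving zero padding. The naming pattern is a hypothetical convention, not something Fusion enforces.

```python
import re

# Hypothetical convention: a trailing _vNNN just before the file extension.
VERSION_RE = re.compile(r"_v(\d+)(\.\w+)$")


def next_version(filename: str) -> str:
    """Return the filename with its _vNNN suffix incremented, keeping the zero padding."""
    m = VERSION_RE.search(filename)
    if not m:
        raise ValueError(f"no _vNNN version suffix in {filename!r}")
    digits, ext = m.group(1), m.group(2)
    bumped = str(int(digits) + 1).zfill(len(digits))
    return filename[:m.start()] + f"_v{bumped}{ext}"


print(next_version("sh010_comp_v003.comp"))  # sh010_comp_v004.comp
print(next_version("sh010_comp_v099.comp"))  # sh010_comp_v100.comp
```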

Q 26. Describe a time you had to troubleshoot a complex technical issue in Fusion.

I once encountered a perplexing issue involving a subtle flickering effect on a digital double during a particularly demanding scene. Initial checks revealed no obvious errors in the tracking or compositing nodes. After careful investigation, I discovered the issue stemmed from a minor discrepancy in the frame rate of the 3D model animation and the plate footage. The difference was imperceptible upon visual inspection, but it caused a subtle flicker on the edges of the digital double during fast motion. The solution involved carefully re-rendering the 3D animation with a precisely matched frame rate, ensuring the synchronization of the two elements. This involved a detailed review of my render settings in my 3D software, then re-rendering only the problematic portions of the 3D model, before re-importing it into Fusion. This highlights the importance of meticulously checking all details in every element of your compositing workflow.
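
The kind of mismatch described here is easy to underestimate. The sketch below samples a source sequence by time onto a target timeline and reports target frames that repeat the previous source frame. It assumes nearest-frame sampling, whereas real retiming may blend or interpolate. It also uses exact fractions, so 23.976 fps is represented as 24000/1001 rather than a rounded float.

```python
from fractions import Fraction
from typing import List, Union

Rate = Union[int, Fraction]


def source_frame(target_frame: int, target_fps: Rate, source_fps: Rate) -> int:
    """Nearest source frame at the moment a given target frame is displayed."""
    t = Fraction(target_frame) / target_fps  # time in seconds
    return round(t * source_fps)


def duplicated_frames(n_frames: int, target_fps: Rate, source_fps: Rate) -> List[int]:
    """Target frames that show the same source frame as the frame before them."""
    dups = []
    prev = None
    for f in range(n_frames):
        s = source_frame(f, target_fps, source_fps)
        if s == prev:
            dups.append(f)
        prev = s
    return dups


ntsc_film = Fraction(24000, 1001)  # "23.976" fps
print(duplicated_frames(1000, 24, ntsc_film))  # [501]
print(duplicated_frames(1000, 24, 24))         # []
```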

Q 27. Explain your understanding of Fusion’s node tree structure and its implications for workflow.

Fusion’s node-based structure is its core strength. It is essentially a visual programming language in which each node performs a specific function (e.g., applying a filter, creating a mask, adjusting color), and the output of one node becomes the input of the next, forming a chain of operations. This structure provides great flexibility and modularity, which is why understanding how information flows through the node tree is essential. A well-organized tree makes complex shots manageable, streamlines revisions, and facilitates collaboration, while a poorly organized one quickly becomes confusing and difficult to troubleshoot. I work methodically: I organize nodes into logical groups, label them clearly, and use pre-compositions to isolate distinct parts of the compositing process. This makes it easier for others (and me) to understand the work, even months later.

Think of it like a factory assembly line. Each node is a station performing a specific task, and the final product is the finished composite. A well-structured line ensures efficient and accurate production.
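The data flow described above can be modeled in a few lines of Python. This is a toy sketch, not Fusion’s scripting API: `Node` and `evaluate` are hypothetical names, and each "image" is reduced to a single float so the flow of data stays visible. Each node pulls results from its inputs, applies its own operation, and passes the result downstream, so editing one node changes only the results that depend on it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Node:
    """A toy compositing node: a name, an operation, and its upstream input nodes."""
    name: str
    op: Callable[..., float]
    inputs: List["Node"] = field(default_factory=list)


def evaluate(node: Node, cache: Optional[Dict[str, float]] = None) -> float:
    """Pull-evaluate `node`: evaluate its inputs first, then apply its op.

    Results are cached by node name, so a node feeding several branches
    is computed only once per evaluation.
    """
    if cache is None:
        cache = {}
    if node.name not in cache:
        upstream = [evaluate(n, cache) for n in node.inputs]
        cache[node.name] = node.op(*upstream)
    return cache[node.name]


# A tiny tree: two sources, a gain-style "color correction", and a 50/50 mix.
background = Node("Background", lambda: 0.25)
foreground = Node("Foreground", lambda: 0.5)
grade = Node("Grade", lambda x: x * 2.0, [foreground])
mix = Node("Mix", lambda bg, fg: 0.5 * bg + 0.5 * fg, [background, grade])

print(evaluate(mix))  # 0.625

# Tweak only the Grade node; every other node is left untouched.
grade.op = lambda x: x * 1.5
print(evaluate(mix))  # 0.5
```

Caching per evaluation mirrors the idea that a node shared by several branches only needs to be computed once, which is one reason a clean, well-structured tree also tends to render efficiently.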

Q 28. What are your strategies for maintaining organization and efficiency while working in Fusion?

Maintaining organization and efficiency in Fusion takes a multi-pronged approach. First, I use consistent naming conventions for files and nodes to avoid confusion. Second, a well-defined folder structure keeps assets and compositions organized and quick to find. Third, templates for common tasks, such as pre-built compositions with standard settings or custom tools (macros) for frequently used effects, streamline workflows and ensure consistency. Finally, I document my work with comments and notes, which is especially helpful on complex or collaborative projects. Together, these practices keep the workflow smooth, reduce errors, and make collaboration easier.

A well-organized project in Fusion is like a well-stocked and clearly labeled kitchen – everything is where it should be, making it easy to find what you need when you need it.
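A folder structure is easiest to keep consistent when a script creates it. The minimal Python sketch below builds the folders for one shot; the layout in `SHOT_SUBFOLDERS` is a hypothetical example rather than a Fusion or studio standard, so adapt the names to your own conventions.

```python
from pathlib import Path
from typing import List

# Hypothetical per-shot layout; rename to match your facility's conventions.
SHOT_SUBFOLDERS = (
    "comps",            # Fusion .comp files (the part worth tracking in Git)
    "plates",           # original camera footage
    "elements/3d",      # CG renders brought into the comp
    "elements/mattes",  # roto and key mattes
    "renders/precomp",  # intermediate renders
    "renders/final",    # delivered output
    "reference",        # client notes, stills, and reference clips
)


def planned_folders(project_root: Path, shot: str) -> List[Path]:
    """Return the folder paths for one shot without touching the disk."""
    shot_dir = project_root / "shots" / shot
    return [shot_dir / sub for sub in SHOT_SUBFOLDERS]


def create_shot_folders(project_root: Path, shot: str) -> List[Path]:
    """Create the shot's folders; exist_ok=True makes re-running harmless."""
    paths = planned_folders(project_root, shot)
    for path in paths:
        path.mkdir(parents=True, exist_ok=True)
    return paths
```

Calling `create_shot_folders(Path("my_project"), "sh010")` creates the seven folders under `my_project/shots/sh010`; running it again leaves existing folders and their contents in place.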

Key Topics to Learn for a Fusion Compositing Interview

- Node-Based Workflow: Understand the fundamental principles of Fusion’s node-based compositing system, including node connections, data flow, and the importance of a well-organized node tree. Practical application: Efficiently building complex compositing shots.

- Keying Techniques: Master various keying methods (e.g., chroma keying and luma keying, using tools such as Fusion’s Delta Keyer, Ultra Keyer, and Luma Keyer) and understand their strengths and weaknesses. Practical application: Cleanly extracting subjects from their backgrounds for seamless integration.

- Color Correction and Grading: Become proficient in color space management, color correction tools, and advanced color grading techniques within Fusion. Practical application: Achieving consistent color and mood across shots.

- Matte Creation and Refinement: Learn to create and refine various types of mattes (e.g., alpha mattes, luminance mattes) for precise compositing and effects. Practical application: Creating realistic and believable composite shots.

- Roto- and Paint-Based Techniques: Develop skills in rotoscoping and painting for intricate cleanup and compositing tasks. Practical application: Removing unwanted elements or adding details to maintain visual consistency.

- Particle Systems and Simulations: Understand the principles and application of Fusion’s particle system for creating realistic effects (e.g., smoke, fire, dust). Practical application: Enhancing the realism of visual effects.

- 3D Compositing in Fusion: Learn how to integrate 3D elements into your compositions, including importing 3D models and working with camera projections. Practical application: Creating immersive and complex composite shots.

- Performance Optimization: Understand techniques for optimizing Fusion projects for faster render times and smoother workflow. Practical application: Efficiently managing project size and render time to meet deadlines.

- Troubleshooting and Problem-Solving: Develop strategies for identifying and resolving common compositing challenges. Practical application: Maintaining workflow efficiency by quickly diagnosing and resolving technical issues.
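To ground the keying and matte-creation topics above, here is a minimal per-pixel Python sketch of a luma key followed by an "over" composite. It uses the Rec. 709 luma coefficients and a simple linear ramp between two thresholds; it illustrates the underlying math only and does not reproduce the controls or algorithms of Fusion’s keyer or Merge tools. Pixels are assumed to be linear RGB floats in the 0 to 1 range, and the function names are hypothetical.

```python
from typing import Tuple

Pixel = Tuple[float, float, float]  # linear RGB, 0.0 to 1.0

REC709 = (0.2126, 0.7152, 0.0722)  # Rec. 709 luma coefficients


def luminance(rgb: Pixel) -> float:
    """Weighted sum of R, G, B using the Rec. 709 coefficients."""
    return sum(c * w for c, w in zip(rgb, REC709))


def luma_matte(rgb: Pixel, low: float, high: float) -> float:
    """Alpha from luminance: 0 at or below `low`, 1 at or above `high`, linear in between.

    The ramp width (high - low) acts as a simple edge-softness control.
    """
    y = luminance(rgb)
    if y <= low:
        return 0.0
    if y >= high:
        return 1.0
    return (y - low) / (high - low)


def over(fg: Pixel, alpha: float, bg: Pixel) -> Pixel:
    """'Over' for a straight-alpha foreground: fg * alpha (premultiply) + bg * (1 - alpha)."""
    return tuple(f * alpha + b * (1.0 - alpha) for f, b in zip(fg, bg))


if __name__ == "__main__":
    fg, bg = (0.9, 0.85, 0.8), (0.1, 0.2, 0.4)
    alpha = luma_matte(fg, low=0.2, high=0.6)
    print(alpha, over(fg, alpha, bg))
```

Widening the gap between `low` and `high` softens the matte edge at the cost of a less solid core, the same trade-off that comes up when refining a key with any keyer.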

Next Steps

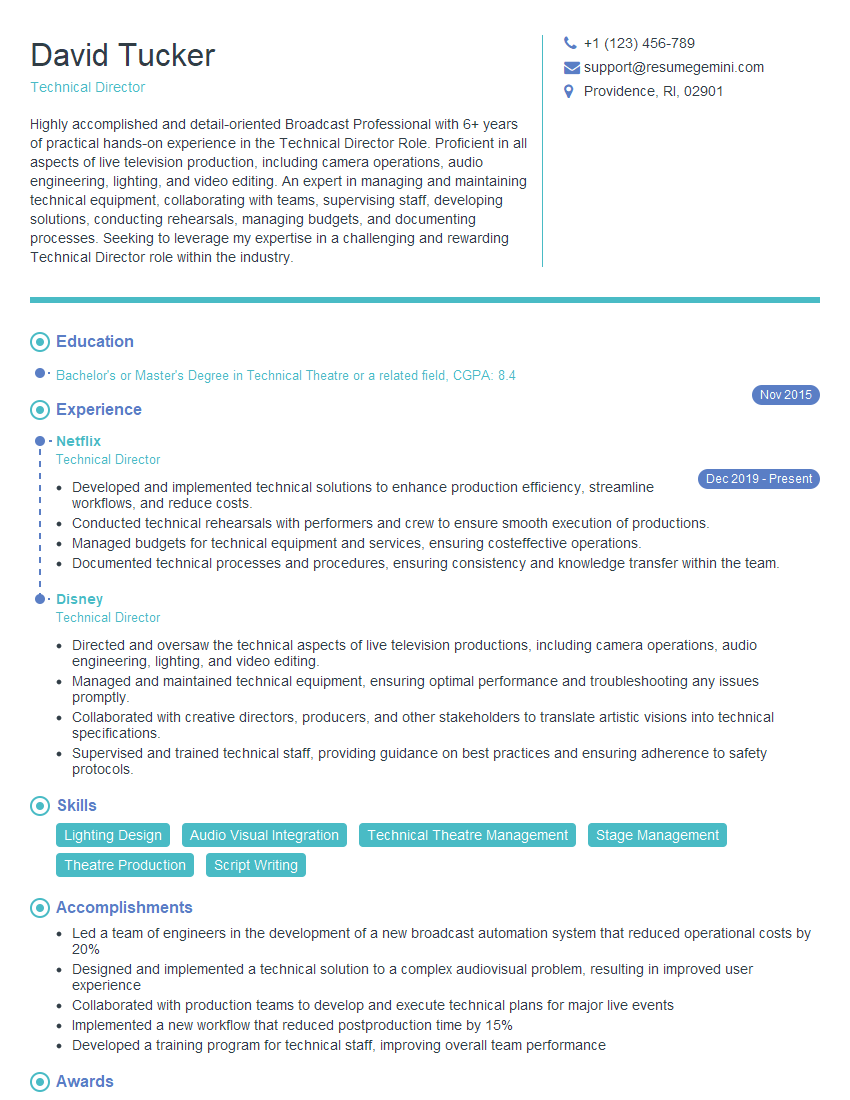

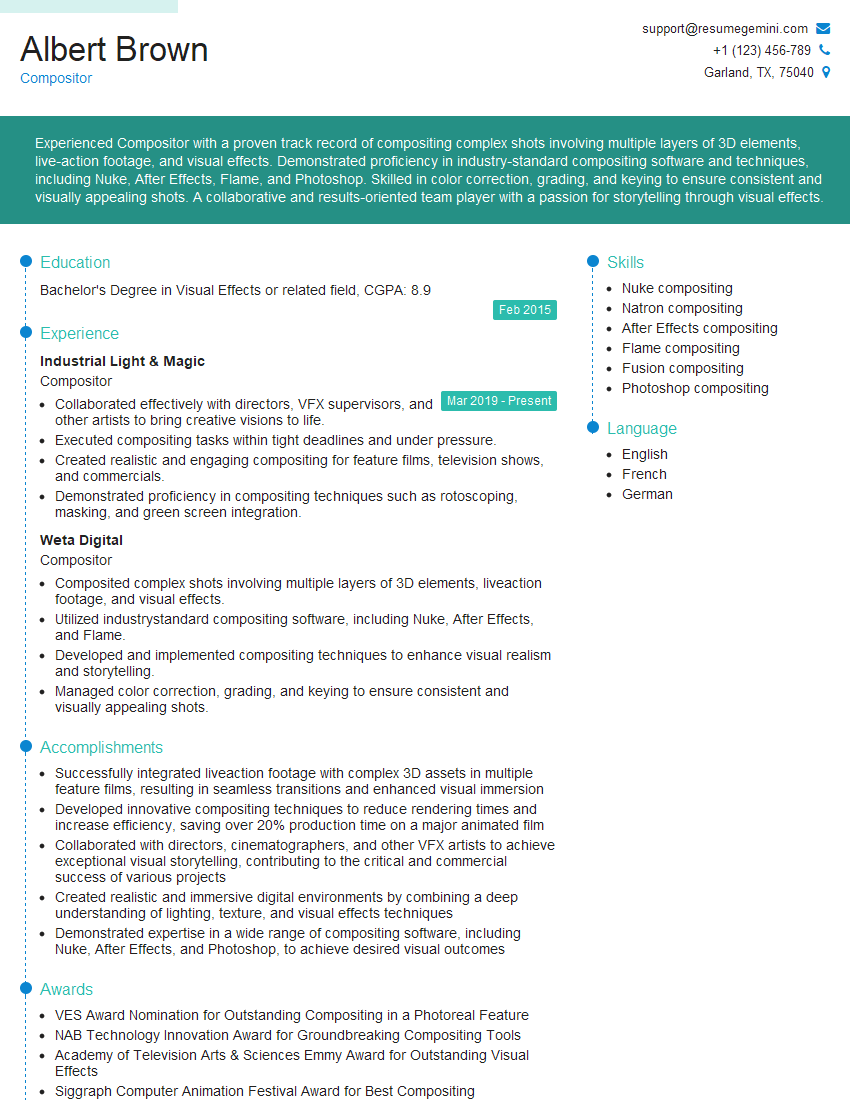

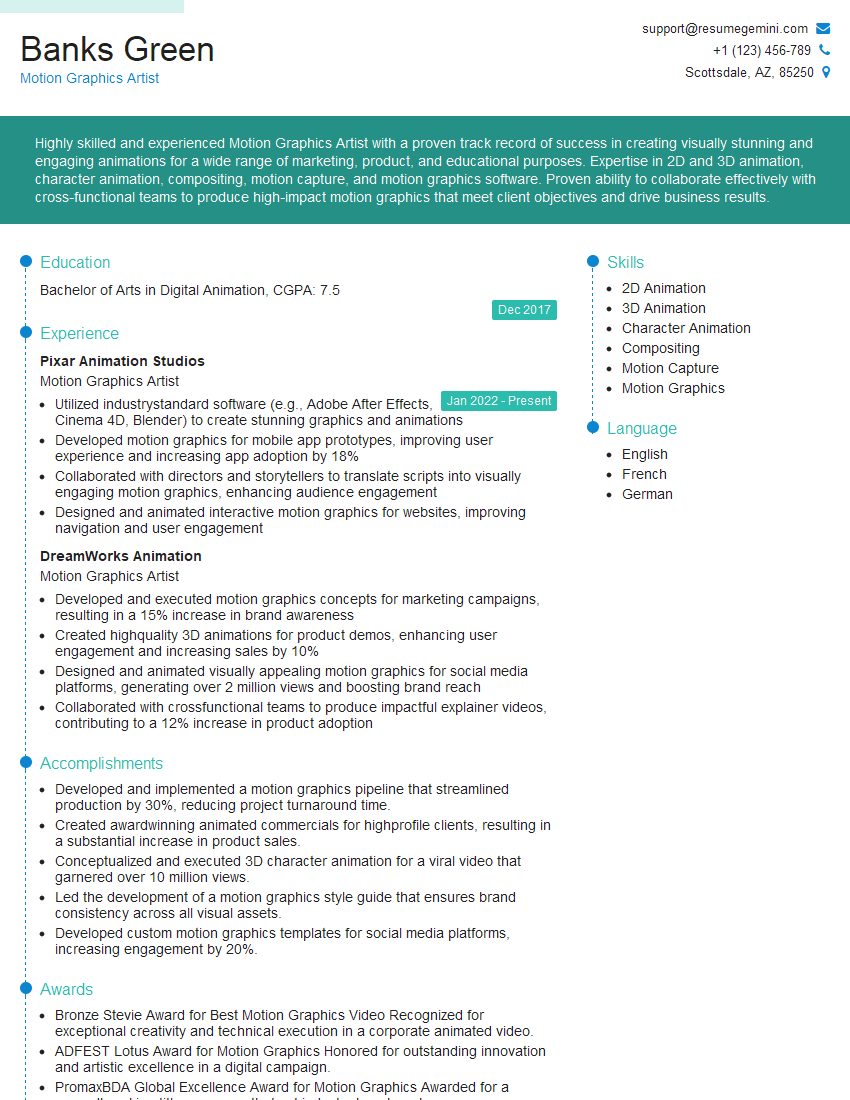

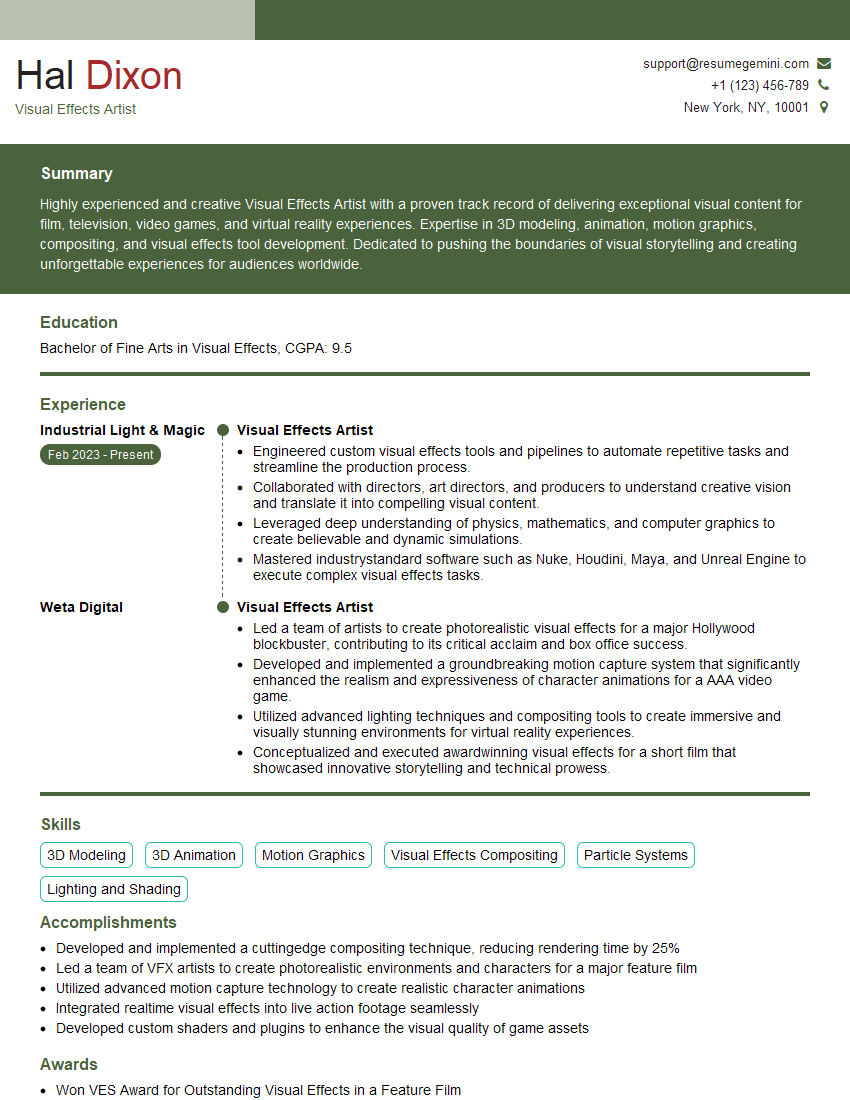

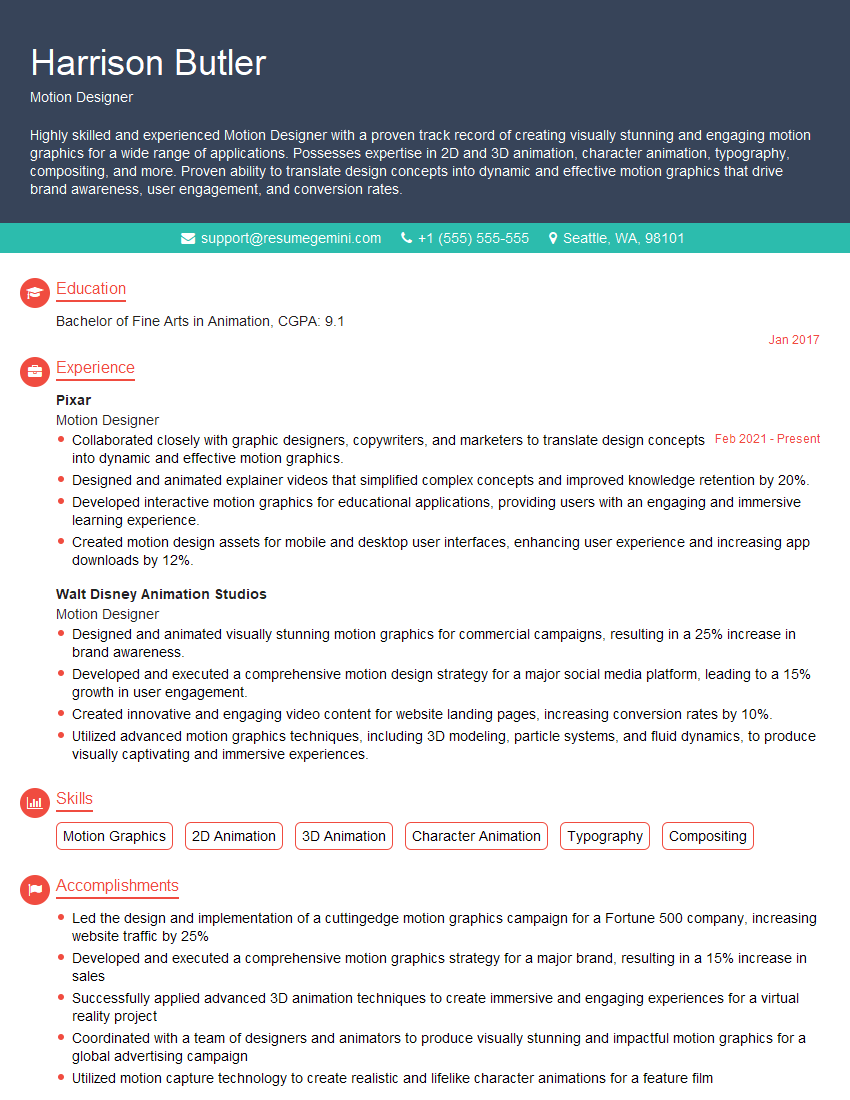

Mastering Fusion compositing significantly enhances your career prospects in visual effects, motion graphics, and post-production. A strong understanding of Fusion is highly sought after by studios and companies worldwide. To stand out, create an ATS-friendly resume that highlights your skills and experience. ResumeGemini is a trusted resource to help you build a professional and impactful resume. Examples of resumes tailored to Fusion compositing are available to help guide your efforts.
