The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Knowledge of Quality Assurance Techniques interview questions offers sample answers and tips to help you give clear, confident responses and stand out as a candidate.

Questions Asked in Knowledge of Quality Assurance Techniques Interview

Q 1. Explain the difference between verification and validation.

Verification and validation are both crucial aspects of quality assurance, but they address different aspects of the software development process. Think of it like building a house: verification checks if you’re building the house correctly according to the blueprints, while validation checks if you’ve built the right house – the one that meets the client’s needs.

Verification is the process of evaluating a software system or component to determine whether the products of a given development phase satisfy the conditions imposed at the start of that phase. It’s about ensuring that the software meets its specifications. This often involves reviews, inspections, and static analysis of code to check for errors and compliance with standards. For example, verifying that a function correctly calculates a sum based on its defined algorithm.

Validation, on the other hand, is the process of evaluating a software system or component during or at the end of the development process to determine whether it fulfills its intended use and the needs of its users. Where verification checks the software against its specification, validation checks it against the customer’s actual needs and expectations. This usually involves testing the software with real-world data and scenarios. For example, validating that an e-commerce checkout process correctly handles various payment methods and securely processes transactions. In essence, verification confirms that you’re building the product right, while validation confirms you’re building the right product.

Q 2. Describe the software development lifecycle (SDLC) models you are familiar with.

I’m familiar with several SDLC models, each with its own strengths and weaknesses. The choice of model depends on the project’s size, complexity, and requirements.

- Waterfall Model: This is a linear, sequential approach where each phase must be completed before the next begins. It’s simple to understand and manage but lacks flexibility for changes.

- Iterative Model: This model breaks the project into smaller iterations, with each iteration producing a working version of the software. It allows for early feedback and adaptation to changing requirements but requires careful planning.

- Agile Models (Scrum, Kanban): These are iterative and incremental models focused on collaboration, flexibility, and frequent delivery of working software. Scrum uses sprints (short iterations) and defined roles, while Kanban focuses on visualizing workflow and limiting work in progress. They are excellent for complex projects with evolving requirements.

- Spiral Model: This model combines elements of iterative and waterfall models, incorporating risk assessment at each iteration. It’s suitable for high-risk projects requiring careful risk management.

- V-Model: This is an extension of the waterfall model in which each development phase is paired with a corresponding testing phase, and test planning for a phase starts alongside the matching development activity. It’s robust but less flexible than agile methodologies.

My experience includes significant work within Agile frameworks, particularly Scrum, due to its adaptability and focus on delivering value incrementally.

Q 3. What are the different types of software testing?

Software testing encompasses a wide range of techniques, broadly categorized as follows:

- Unit Testing: Testing individual components or modules of the software in isolation.

- Integration Testing: Testing the interaction between different modules or components.

- System Testing: Testing the entire system as a whole, verifying that all components work together correctly.

- Acceptance Testing: Testing the software to ensure it meets the user’s requirements and is ready for deployment. This often involves user acceptance testing (UAT).

- Regression Testing: Retesting after code changes to ensure that new changes haven’t introduced new bugs or broken existing functionality.

- Performance Testing: Assessing the software’s responsiveness, stability, and scalability under various load conditions.

- Security Testing: Identifying vulnerabilities and weaknesses in the software’s security mechanisms.

- Usability Testing: Evaluating the software’s ease of use and user-friendliness.

The specific types of testing employed will depend on the project’s requirements and risk profile.

Q 4. Explain the difference between black-box and white-box testing.

Black-box and white-box testing are two fundamental approaches to software testing that differ in their knowledge of the internal structure and workings of the software.

Black-box testing treats the software as a ‘black box,’ meaning the tester doesn’t know the internal implementation details. Tests are designed based on the software’s specifications and functionality, without examining the code. This approach is excellent for identifying issues from a user’s perspective and ensuring that the software meets its requirements. Examples include functional testing and user acceptance testing.

White-box testing, on the other hand, involves examining the internal structure and code of the software. Tests are designed to cover specific code paths and logic, aiming to achieve high code coverage. This allows for more thorough testing of individual components and can reveal internal logic errors or vulnerabilities. Unit testing is the typical example; integration tests can also be white-box when they are designed around known internal interfaces and code paths.

Both black-box and white-box testing are valuable and often used in conjunction to ensure comprehensive testing.

Q 5. What is test-driven development (TDD)?

Test-driven development (TDD) is an agile software development approach where tests are written before the code they are intended to test. It’s a cycle of ‘red-green-refactor’:

- Red: Write a failing test that defines a specific requirement or functionality.

- Green: Write the simplest code possible to make the test pass.

- Refactor: Improve the code’s design and structure while ensuring the tests still pass.

This approach ensures that the code meets the specified requirements and improves code quality by encouraging modularity and maintainability. It’s particularly useful for complex projects requiring a high degree of code correctness and robustness. Think of it as agreeing on the inspection checklist for each room before building it, and passing that inspection before moving on to the next room, rather than building the entire house and then seeing if it works.
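A minimal sketch of one red-green-refactor pass, written with Python’s built-in `unittest` module. The `cart_total` function and its discount rule are hypothetical and exist only to illustrate the cycle: the tests would be written first and fail, then the function is added to make them pass.

```python
import unittest


def cart_total(prices, discount_rate=0.0):
    """Return the sum of prices after applying a fractional discount."""
    if not 0.0 <= discount_rate <= 1.0:
        raise ValueError("discount_rate must be between 0 and 1")
    return round(sum(prices) * (1.0 - discount_rate), 2)


class CartTotalTest(unittest.TestCase):
    # In TDD these tests come first and fail (red) until cart_total
    # exists and behaves as specified (green). Refactoring then happens
    # with the tests acting as a safety net.
    def test_sums_prices(self):
        self.assertEqual(cart_total([10.0, 5.5]), 15.5)

    def test_applies_discount(self):
        self.assertEqual(cart_total([100.0], discount_rate=0.2), 80.0)

    def test_rejects_invalid_discount(self):
        with self.assertRaises(ValueError):
            cart_total([10.0], discount_rate=1.5)
```

Running `python -m unittest` against a file containing this code executes the three tests.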

Q 6. Describe your experience with Agile methodologies.

I have extensive experience with Agile methodologies, primarily Scrum. In my previous role at [Previous Company Name], I was part of a Scrum team responsible for [Project Description]. We followed Scrum practices rigorously, including daily stand-ups, sprint planning, sprint reviews, and sprint retrospectives. I played a key role in [Specific Role and Contributions, e.g., defining acceptance criteria, creating and executing test plans, collaborating with developers, identifying and reporting bugs].

My experience highlights my ability to adapt to changing requirements, collaborate effectively within a team, and deliver high-quality software within short iterations. I’m comfortable with Agile principles such as iterative development, continuous integration, and test-driven development. I’ve seen firsthand how Agile practices improve communication, enhance team collaboration and lead to better software products.

Q 7. How do you prioritize test cases?

Prioritizing test cases is crucial for effective testing, especially when dealing with limited time and resources. Several techniques can be used, often in combination:

- Risk-Based Prioritization: Prioritize test cases that cover areas with higher risk of failure or significant impact on the system. This often involves considering the criticality of functionality and potential consequences of failures.

- Business Value Prioritization: Prioritize test cases based on the business value of the features they cover. Focus on testing functionalities that are most important to the customer or business goals.

- Requirement Coverage Prioritization: Prioritize test cases that ensure comprehensive coverage of all requirements, ensuring all functionalities and specifications are tested.

- Test Case Dependency Prioritization: Identify dependencies between test cases and prioritize those that are independent to enable parallel execution and faster testing.

A practical approach is to combine these techniques: build a matrix that scores each test case on several factors, such as risk, business value, and requirement coverage, and use the resulting ranking to order testing activities. This keeps the testing effort balanced across those factors.
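One way such a ranking could be computed is a weighted score per test case. The sketch below is illustrative only: the weights, the 1 to 5 rating scale, and the `RatedCase` and `rank` names are assumptions, not a standard.

```python
from dataclasses import dataclass

# Hypothetical weights; a real team would agree on these with stakeholders.
WEIGHTS = {"risk": 0.5, "business_value": 0.3, "coverage": 0.2}


@dataclass(frozen=True)
class RatedCase:
    case_id: str
    risk: int            # 1 (low) to 5 (high)
    business_value: int  # 1 (low) to 5 (high)
    coverage: int        # 1 (low) to 5 (high)


def priority_score(case):
    """Weighted sum of the three ratings for one test case."""
    return (
        WEIGHTS["risk"] * case.risk
        + WEIGHTS["business_value"] * case.business_value
        + WEIGHTS["coverage"] * case.coverage
    )


def rank(cases):
    """Return the cases ordered from highest to lowest priority score."""
    return sorted(cases, key=priority_score, reverse=True)


cases = [
    RatedCase("TC_Checkout_001", risk=5, business_value=5, coverage=3),
    RatedCase("TC_Profile_004", risk=2, business_value=2, coverage=4),
    RatedCase("TC_Login_001", risk=4, business_value=5, coverage=5),
]
ordered_ids = [case.case_id for case in rank(cases)]
```

With these example ratings the checkout case ranks first, the login case second, and the profile case last.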

Q 8. How do you handle bugs or defects found during testing?

Discovering a bug is just the beginning; effective handling is crucial. My process involves several key steps. First, I meticulously reproduce the bug, documenting the exact steps to trigger it. This includes the environment (OS, browser, etc.), inputs, and expected vs. actual results. I then capture screenshots or screen recordings as visual evidence. Next, I classify the bug based on its severity (critical, major, minor) and priority (urgent, high, low). This helps prioritize fixes. I utilize a bug tracking system, like Jira or Bugzilla, to formally log the bug, including all documentation. This ensures transparency and traceability. Finally, I communicate the bug details to the development team and follow up to ensure it’s addressed and verified as resolved.

For example, I once found a critical bug in an e-commerce application where users couldn’t add items to their cart. Following my process, I detailed the steps, provided screenshots of the error message, classified it as a critical bug, and logged it in Jira. The team quickly addressed it, and I verified the fix before release.

Q 9. What is a test plan, and what are its key components?

A test plan is a comprehensive document that outlines the testing strategy and activities for a software project. Think of it as a roadmap guiding the entire testing process. Its key components include:

- Test Scope: What parts of the software will be tested?

- Test Objectives: What are the goals of testing (e.g., find critical bugs, ensure performance)?

- Test Strategy: What types of testing (unit, integration, system, etc.) will be used?

- Test Environment: What hardware, software, and network configurations will be used?

- Test Schedule: When will each testing phase occur?

- Test Deliverables: What reports and documents will be produced?

- Test Resources: Who will be involved in testing and what tools will be used?

- Risk Assessment: What are the potential risks and how will they be mitigated?

A well-defined test plan ensures everyone is on the same page, avoids confusion, and helps manage the testing process effectively.

Q 10. What is a test case, and how do you write effective ones?

A test case is a set of actions executed to verify a specific functionality or feature of the software. It’s like a recipe for testing: you follow the steps, and you expect a certain outcome. An effective test case needs to be:

- Clear and Concise: Easy to understand and follow.

- Specific: Tests a single functionality.

- Repeatable: Can be executed multiple times with the same results.

- Independent: Doesn’t depend on the outcome of other test cases.

- Documented: Includes steps, expected results, and actual results.

Example of a test case for a login form:

Test Case ID: TC_Login_001

Test Case Name: Verify successful login with valid credentials

Steps:

1. Navigate to the login page.

2. Enter valid username: "testuser"

3. Enter valid password: "password123"

4. Click the login button.

Expected Result: User is successfully logged in and is redirected to the home page.

Actual Result: [To be filled after execution]

Writing effective test cases is essential to comprehensive testing and helps identify issues early.
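The login test case above could be automated along these lines. This is a sketch in the plain-`assert` style that pytest collects; the `login` function and the in-memory `VALID_USERS` store are hypothetical stand-ins for the real application, and the second test case ID is invented for illustration.

```python
# Hypothetical in-memory user store standing in for the real application.
VALID_USERS = {"testuser": "password123"}


def login(username, password):
    """Return the page the user lands on after a login attempt."""
    if username in VALID_USERS and VALID_USERS[username] == password:
        return "home"
    return "login"


def test_tc_login_001_valid_credentials():
    # Steps 1-4 of TC_Login_001 collapsed into one call.
    assert login("testuser", "password123") == "home"


def test_tc_login_002_invalid_password():
    # Negative counterpart: a wrong password keeps the user on the login page.
    assert login("testuser", "wrong-password") == "login"
```

Each test checks one behavior and does not depend on the other, which matches the "specific" and "independent" criteria listed earlier.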

Q 11. Explain your experience with different testing tools.

I have extensive experience with a variety of testing tools. For defect tracking, I’ve used Jira and Bugzilla extensively. For test management, I’ve used TestRail and Zephyr. For performance testing, I’m proficient in JMeter and LoadRunner. I also have experience with automated testing tools like Selenium (for UI testing), Rest-Assured (for API testing), and Appium (for mobile testing). My experience includes choosing the right tool for the job, configuring and using them effectively, and integrating them into the overall testing process.

Q 12. Describe your experience with test automation frameworks.

I’ve worked with several test automation frameworks, including Selenium WebDriver with Java, Cucumber for Behavior-Driven Development (BDD), and Cypress for end-to-end testing. In choosing a framework, I consider factors like the project’s size and complexity, the technology used, and the team’s expertise. For example, I used the Selenium framework with Java for automating UI tests for a large web application. This involved designing test suites, implementing page object models for maintainability, and integrating tests with CI/CD pipelines for continuous testing. My experience includes not only implementing the framework but also maintaining and updating it as the application evolves. Proper framework design is critical for scalability and long-term success.

Q 13. How do you ensure test coverage?

Ensuring adequate test coverage is vital to identify and prevent defects. This is achieved through a combination of techniques. Firstly, I utilize requirement traceability matrices (RTM) to map test cases to requirements, ensuring all requirements are covered by tests. Secondly, I employ various testing methodologies like black-box testing (functional, integration, system) and white-box testing (unit, integration). This provides different perspectives on the software’s behavior. Thirdly, I use code coverage tools to measure how much of the code is executed during testing, providing insights into areas that might require more thorough testing. Finally, risk-based testing helps prioritize test efforts on critical functionality. The goal is not 100% code coverage (often impractical), but rather achieving sufficient coverage to mitigate significant risks.
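A requirement traceability matrix can be checked mechanically. The sketch below assumes the matrix is available as a simple mapping from test case IDs to the requirement IDs they cover; the IDs and function names are illustrative.

```python
def uncovered_requirements(requirements, traceability):
    """Return requirement IDs that no test case is mapped to."""
    covered = {req for reqs in traceability.values() for req in reqs}
    return sorted(set(requirements) - covered)


def requirement_coverage(requirements, traceability):
    """Percentage of requirements covered by at least one test case."""
    total = len(set(requirements))
    if total == 0:
        raise ValueError("at least one requirement is needed")
    missing = len(uncovered_requirements(requirements, traceability))
    return 100 * (total - missing) / total


requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
traceability = {
    "TC_Login_001": ["REQ-1"],
    "TC_Cart_002": ["REQ-2", "REQ-3"],
}
gaps = uncovered_requirements(requirements, traceability)
coverage = requirement_coverage(requirements, traceability)
```

Here `gaps` lists the one requirement that still needs a test case, and `coverage` reports three of four requirements covered.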

Q 14. What are some common metrics used in QA?

Several metrics are crucial for monitoring the quality and effectiveness of the testing process. These include:

- Defect Density: The number of defects found per unit of size, typically per thousand lines of code (KLOC) or per feature.

- Defect Severity: A classification (critical, major, minor) of defects based on their impact.

- Defect Rate: The number of defects found during a specific time period.

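- Test Case Execution Rate: The percentage of planned test cases that have been executed; it is usually reported alongside the pass rate, the percentage of executed test cases that passed.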

- Test Coverage: The percentage of requirements or code covered by test cases.

- Test Execution Time: The time it takes to execute a set of tests.

Analyzing these metrics helps to identify trends, pinpoint areas needing improvement, and assess the overall quality of the software.
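As a rough sketch, a few of these metrics reduce to simple ratios. The definitions below are assumptions made for the example (density per thousand lines of code, execution rate as executed over planned, pass rate as passed over executed); teams define them differently, and the sample numbers are invented.

```python
def defect_density(defects, lines_of_code):
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def execution_rate(executed, planned):
    """Percentage of planned test cases that were executed."""
    if planned <= 0:
        raise ValueError("planned must be positive")
    return 100 * executed / planned


def pass_rate(passed, executed):
    """Percentage of executed test cases that passed."""
    if executed <= 0:
        raise ValueError("executed must be positive")
    return 100 * passed / executed


density = defect_density(defects=30, lines_of_code=12_000)
executed_pct = execution_rate(executed=180, planned=200)
passed_pct = pass_rate(passed=171, executed=180)
```

Tracking the same ratios release over release is what makes trends visible; a single snapshot says little on its own.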

Q 15. Explain your experience with performance testing.

Performance testing is crucial for ensuring an application can handle the expected workload without performance degradation. My experience encompasses a wide range of techniques, from load testing to stress testing and endurance testing. I’m proficient in using tools like JMeter and LoadRunner to simulate realistic user loads, monitor key performance indicators (KPIs) like response time, throughput, and resource utilization (CPU, memory, network), and identify bottlenecks.

For instance, in a recent project involving an e-commerce website, we used JMeter to simulate thousands of concurrent users adding items to their carts and checking out. We identified a bottleneck in the database query responsible for updating inventory, which we addressed by optimizing the database schema and adding caching mechanisms. The result was a significant improvement in response time and overall website stability under heavy load. I also have experience with performance testing mobile applications, considering factors specific to mobile networks and device capabilities.

My approach to performance testing is data-driven. I carefully analyze requirements, define realistic test scenarios, and establish clear success criteria before executing tests. Post-testing, I meticulously analyze the results, identify root causes of performance issues, and work collaboratively with developers to implement solutions.
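Clear success criteria are often expressed as a percentile target on response time. The sketch below uses the nearest-rank percentile method on a list of response times in milliseconds; the sample values and the 500 ms threshold are invented for illustration and are not output from JMeter or LoadRunner.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numeric samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_target(response_times_ms, pct, threshold_ms):
    """True if the given percentile is at or below the threshold."""
    return percentile(response_times_ms, pct) <= threshold_ms


# Hypothetical response times collected during a load test run.
response_times_ms = [120, 135, 150, 160, 180, 210, 250, 300, 420, 900]
p90 = percentile(response_times_ms, 90)
p90_ok = meets_target(response_times_ms, 90, threshold_ms=500)
p95_ok = meets_target(response_times_ms, 95, threshold_ms=500)
```

In this sample the 90th percentile meets the 500 ms target while the 95th does not, because a single slow outlier dominates the tail.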

Q 16. How do you perform security testing?

Security testing is a critical aspect of software development, aiming to identify vulnerabilities before malicious actors can exploit them. My approach encompasses various techniques, including static and dynamic application security testing (SAST and DAST). SAST involves analyzing the codebase without execution, identifying potential vulnerabilities like SQL injection or cross-site scripting (XSS) flaws. DAST, conversely, involves testing the application while it’s running, simulating attacks to uncover vulnerabilities.

I’m experienced in using tools like OWASP ZAP (for DAST) and SonarQube (for SAST). Beyond these automated tools, I also perform manual security tests, focusing on areas like authentication and authorization, input validation, session management, and error handling. A crucial aspect is penetration testing, where I simulate real-world attacks to uncover vulnerabilities.

For example, in a project involving a banking application, I discovered a vulnerability in the password reset functionality that allowed unauthorized access. This was identified through a combination of DAST and manual testing. My detailed report included the vulnerability’s severity, steps to reproduce it, and recommended mitigation strategies. Addressing these vulnerabilities is a collaborative process, working closely with developers to implement fixes and ensure the application’s security.
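A manual input-validation check for SQL injection can be turned into a small regression test. The sketch below uses Python’s built-in `sqlite3` module with an in-memory database; the `users` table, the `find_user` function, and the payload are illustrative assumptions and are unrelated to the banking project described above.

```python
import sqlite3


def make_test_db():
    """Create an in-memory database with two sample users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


def find_user(conn, username):
    # Parameterized query: the driver treats username strictly as data,
    # so quote characters in the input cannot change the SQL statement.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()


def test_injection_payload_returns_no_rows():
    conn = make_test_db()
    # A classic payload that would match every row if the query were
    # built by string concatenation.
    assert find_user(conn, "' OR '1'='1") == []
    assert find_user(conn, "alice") == [(1, "alice")]
```

A test like this documents the expected behavior and fails if the query is later rewritten with string concatenation.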

Q 17. What is your experience with mobile application testing?

My experience with mobile application testing is extensive and spans both iOS and Android platforms. This involves testing various aspects, including functionality, usability, performance, and security, across a range of devices and operating systems. I’m familiar with different testing methodologies, including automated testing using tools like Appium and Espresso, and manual testing to cover user flows and edge cases.

A significant part of mobile testing focuses on handling different screen sizes, resolutions, and network conditions. I also have experience testing specific mobile features like location services, camera integration, and push notifications. Beyond functional testing, I conduct performance tests to evaluate responsiveness, battery drain, and data usage.

For example, in a recent project involving a mobile banking app, we conducted extensive compatibility testing across different Android and iOS versions and devices to ensure seamless functionality. This involved using emulators and real devices to cover various screen sizes and OS versions. We also performed thorough performance testing under different network conditions (3G, 4G, Wi-Fi) to identify potential performance bottlenecks.

Q 18. How do you handle conflicting priorities?

Handling conflicting priorities is an inevitable part of QA. My approach involves prioritizing tasks based on risk and impact. I start by understanding the objectives of each task, assessing its potential impact on the project, and identifying the associated risks if it’s not completed on time or with the required quality.

I use a prioritization matrix to rank tasks based on urgency and importance. High-risk, high-impact tasks always take precedence. I then communicate clearly with stakeholders, explaining the prioritization rationale and any potential trade-offs. This collaborative approach helps manage expectations and ensures everyone is aligned on the priorities. I also utilize project management tools and techniques to track progress and adjust priorities as needed, maintaining transparency and facilitating effective communication.

For example, if facing a conflict between finishing functional testing and completing performance testing before a release, I would prioritize based on the risk assessment. If a critical functionality is at risk of failure, I would allocate more resources there. A transparent communication strategy would inform stakeholders of any potential delays or trade-offs.

Q 19. Describe a time you had to deal with a critical bug.

In a previous project involving a financial trading platform, we discovered a critical bug just days before the launch. The bug caused incorrect calculation of trade values under certain market conditions, potentially leading to significant financial losses.

My immediate response was to reproduce the bug, isolate the root cause, and assess its impact. We implemented a triage process to prioritize the bug fix over other tasks. Collaboration was crucial; I worked closely with developers, business analysts, and project managers to expedite the resolution. We used version control to manage the bug fix and rigorous regression testing to ensure it didn’t introduce new issues. Clear communication with stakeholders was vital; we kept them updated regularly on the progress and the expected timeline for the fix.

The bug was successfully fixed and the launch proceeded without significant delay. The experience highlighted the importance of comprehensive testing, proactive risk management, and efficient team collaboration in addressing critical issues.

Q 20. How do you contribute to continuous improvement in QA processes?

Continuous improvement is a cornerstone of effective QA. I actively contribute by participating in regular testing process reviews, suggesting improvements based on my experience and observations. This involves analyzing test metrics, identifying areas for automation, and recommending new tools or techniques.

I also advocate for the adoption of best practices and standards. This includes promoting the use of test management tools, establishing clear test processes, and implementing robust defect tracking systems. I actively participate in knowledge sharing sessions, mentoring junior team members, and staying up-to-date with the latest testing methodologies and technologies.

For example, I identified an opportunity to improve our regression testing process by implementing automated tests, significantly reducing testing time and improving efficiency. I presented a proposal outlining the benefits and costs, and worked with the team to implement the solution. This resulted in a more efficient and reliable testing process.

Q 21. What is your experience with risk management in testing?

Risk management in testing is about proactively identifying, assessing, and mitigating potential risks that could impact the quality and timely delivery of software. My approach involves a systematic process. First, I identify potential risks through various means, including reviewing requirements, analyzing previous test results, and understanding the technical complexities of the application.

I then assess each risk based on its likelihood and potential impact. A risk matrix is a useful tool for this. This assessment informs the development of mitigation strategies. For example, if a high-risk feature is identified, we might dedicate more resources to testing it or implement additional automated tests.

Regular risk reviews are essential. Throughout the testing cycle, I monitor the identified risks and make necessary adjustments to the mitigation strategies. Clear communication and collaboration with stakeholders are key, ensuring that everyone understands the potential risks and is aligned on the chosen mitigation strategies.

For instance, in a project with a tight deadline, we identified a risk of insufficient testing time. We mitigated this by prioritizing critical functionalities, implementing more automated tests, and closely monitoring progress against the schedule. Regular risk reviews allowed us to adjust our plan as needed, successfully delivering the product on time and with acceptable quality.
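The likelihood and impact assessment behind a risk matrix can be sketched as a simple product. The 1 to 5 scale and the thresholds for "high", "medium", and "low" are assumptions chosen for this example; organizations set their own.

```python
def risk_score(likelihood, impact):
    """Multiply likelihood by impact, each rated from 1 (low) to 5 (high)."""
    for value in (likelihood, impact):
        if value not in range(1, 6):
            raise ValueError("ratings must be integers from 1 to 5")
    return likelihood * impact


def risk_level(score):
    """Map a score to a level using hypothetical thresholds."""
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


# Hypothetical risks rated as (likelihood, impact).
risks = {
    "payment calculation error": (4, 5),
    "slow report export": (2, 3),
    "typo in help text": (1, 4),
}
levels = {name: risk_level(risk_score(*rating)) for name, rating in risks.items()}
```

Risks that come out as "high" are the ones that would receive extra test effort or additional automated tests.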

Q 22. How do you work with developers and other stakeholders?

Collaboration is key in QA. I believe in proactive communication and building strong relationships with developers and all stakeholders. I achieve this through various methods. Firstly, I actively participate in sprint planning and daily stand-up meetings to understand development progress and potential roadblocks early. This allows for timely feedback and prevents late-stage surprises. Secondly, I advocate for clear and concise defect reporting, ensuring developers have all the information they need to reproduce and resolve issues effectively. I use a consistent format for bug reports, including steps to reproduce, expected versus actual results, screenshots or screen recordings, and severity levels. Finally, I foster a collaborative environment where developers and testers see themselves as a single team working towards a shared goal of quality software. Rather than pointing fingers, we focus on finding solutions and improving the overall development process. For example, in a recent project, I noticed a recurring pattern in a specific module’s bugs. Instead of simply reporting them individually, I collaborated with the developer to understand the root cause. This led to a significant improvement in code quality and reduced the number of defects in subsequent sprints.

Q 23. What is your experience with different types of test environments?

My experience encompasses a wide range of test environments, from simple development sandboxes to complex, multi-tiered production-like setups. I’ve worked with virtual machines (VMs), cloud-based environments like AWS and Azure, and on-premise servers. I’m familiar with the usual tiers: Development (DEV), Testing (TEST), Staging (often also used for User Acceptance Testing, UAT), and Production. Each environment has unique characteristics and configurations. For instance, a DEV environment might be unstable and prone to changes, while a staging or UAT environment should mirror production as closely as possible to ensure realistic testing. I understand the importance of configuring these environments to accurately reflect real-world usage scenarios. In one project, we struggled to replicate a specific network latency issue in our testing environment. After careful analysis, we implemented a network emulator to mimic the real-world conditions and successfully identified and resolved the performance bottleneck.

Q 24. How do you stay up-to-date with the latest QA trends and technologies?

Staying current in QA requires a multi-faceted approach. I regularly read industry publications and blogs, attend webinars and conferences, and actively participate in online communities. This helps me stay abreast of new testing methodologies, tools, and technologies. I also actively pursue certifications to validate my knowledge and skills. For example, I recently completed a course on automated testing with Selenium, expanding my skillset in UI testing. Moreover, I actively contribute to open-source projects and engage in peer-learning opportunities, gaining practical experience and learning from experts across the globe. Continuous learning ensures I remain competitive and proficient in adapting to the evolving landscape of software quality assurance.

Q 25. What are some challenges you’ve faced in previous QA roles?

One of the biggest challenges I’ve faced involved testing a legacy system whose codebase was complex and poorly documented, which made the system’s intricacies hard to understand. To overcome this, I adopted a risk-based testing approach, focusing on critical functionalities and areas most likely to impact users. I created detailed test cases, prioritized them based on risk, and collaborated closely with senior developers to understand the system’s architecture. This allowed us to efficiently identify and address high-priority issues, even with limited information. Another significant challenge was dealing with tight deadlines while maintaining high quality standards. I used techniques such as test automation and parallel testing to streamline the testing process and meet deadlines without compromising quality. When deadlines were extremely tight, I narrowed the scope to the most critical functionalities and worked closely with the development team to address the most impactful bugs first. Prioritization and clear communication were key to successful project delivery under pressure.

Q 26. How do you approach testing complex systems?

Testing complex systems requires a structured and systematic approach. I typically employ a combination of testing techniques, including:

- Risk-based testing: Identifying and prioritizing high-risk areas for thorough testing.

- Modular testing: Breaking down the system into smaller, manageable modules for individual testing.

- Integration testing: Testing the interaction between different modules.

- Regression testing: Ensuring that new changes don’t break existing functionality.

- Test automation: Automating repetitive tests to save time and resources.

Q 27. Explain your experience with database testing.

My experience with database testing includes verifying data integrity, consistency, and accuracy. I’m proficient in using SQL to write queries to check data validity and identify inconsistencies. I’ve used various techniques including:

- Data validation: Verifying that data is accurate, complete, and conforms to business rules.

- Data integrity: Ensuring that data is consistent across different tables and databases.

- Performance testing: Evaluating database performance under different loads.

- Security testing: Verifying that data is protected from unauthorized access.
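Data integrity and validation checks of this kind are usually plain SQL queries. The sketch below runs two such checks against an in-memory SQLite database through Python’s built-in `sqlite3` module; the `customers` and `orders` tables and their contents are hypothetical.

```python
import sqlite3


def build_sample_db():
    """Create a small database containing one orphan and one bad total."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL
        );
        INSERT INTO customers VALUES (1, 'Ada');
        INSERT INTO orders VALUES (10, 1, 25.0), (11, 2, 40.0), (12, 1, -5.0);
        """
    )
    return conn


def orphaned_orders(conn):
    """Integrity check: orders whose customer_id matches no customer."""
    rows = conn.execute(
        """
        SELECT o.id FROM orders AS o
        LEFT JOIN customers AS c ON c.id = o.customer_id
        WHERE c.id IS NULL
        ORDER BY o.id
        """
    ).fetchall()
    return [row[0] for row in rows]


def invalid_totals(conn):
    """Validation check: orders with a missing or negative total."""
    rows = conn.execute(
        "SELECT id FROM orders WHERE total IS NULL OR total < 0 ORDER BY id"
    ).fetchall()
    return [row[0] for row in rows]


conn = build_sample_db()
orphans = orphaned_orders(conn)
bad_totals = invalid_totals(conn)
```

In the sample data, order 11 points at a customer that does not exist and order 12 has a negative total, so each check reports exactly one row.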

Q 28. How do you handle pressure and tight deadlines?

Handling pressure and tight deadlines requires strong organizational skills, effective time management, and clear communication. I prioritize tasks based on risk and impact, focusing on critical areas first. I break down large tasks into smaller, manageable steps, allowing for better tracking of progress. I also use tools like project management software (Jira, for example) to effectively manage my workload and track deadlines. When faced with high pressure, I maintain open communication with the team, ensuring everyone is informed and aligned. I also actively look for ways to streamline processes and optimize workflows to improve efficiency. A proactive and collaborative approach is critical in mitigating pressure and meeting deadlines consistently. For example, during a particularly challenging project launch, I collaborated closely with the development team to proactively identify and resolve potential issues early in the process, avoiding last-minute crises and ensuring a successful launch.

Key Topics to Learn for Knowledge of Quality Assurance Techniques Interview

- Software Development Life Cycle (SDLC) Models: Understand various SDLC methodologies (Waterfall, Agile, DevOps) and how QA fits within each. Consider practical applications like test planning within an Agile sprint.

- Test Planning & Strategy: Learn to create comprehensive test plans, including scope definition, test environment setup, risk assessment, and resource allocation. Practice applying these principles to different project scenarios.

- Test Case Design Techniques: Master various techniques like equivalence partitioning, boundary value analysis, decision table testing, and state transition testing. Be prepared to explain how you’d apply these to real-world examples.

- Test Automation Frameworks: Familiarize yourself with popular frameworks like Selenium, Appium, or Cypress. Discuss your experience with automation tools and scripting languages (e.g., Python, Java).

- Defect Tracking and Reporting: Understand the process of identifying, documenting, and tracking defects using tools like Jira or Bugzilla. Practice clear and concise defect reporting to facilitate efficient resolution.

- Performance and Security Testing: Gain a foundational understanding of performance testing methodologies (load, stress, endurance) and security testing principles (OWASP Top 10). Discuss relevant tools and approaches.

- Different Testing Types: Be prepared to discuss various testing types (unit, integration, system, user acceptance testing) and their purpose within the QA process. Highlight your experience with different levels of testing.

- Quality Metrics and Reporting: Understand key QA metrics (defect density, test coverage, etc.) and how to effectively communicate testing results to stakeholders. Consider how to present data clearly and concisely.
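As one way to practice the test case design techniques listed above, the sketch below applies boundary value analysis to an inclusive integer range. The rule being tested (ages 18 through 65 are accepted) and both function names are hypothetical, chosen only to show how boundary inputs are derived.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Return the classic boundary test inputs for an inclusive integer range."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


def is_valid_age(age: int) -> bool:
    """Hypothetical rule under test: ages 18 through 65 inclusive are accepted."""
    return 18 <= age <= 65


# Print each boundary input alongside the result of the rule under test.
for value in boundary_values(18, 65):
    print(value, is_valid_age(value))
```

The two values just outside the range (17 and 66) should be rejected and the four values on or just inside the edges should be accepted. In an interview, walking through why these six inputs were chosen usually matters more than the code itself.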

Next Steps

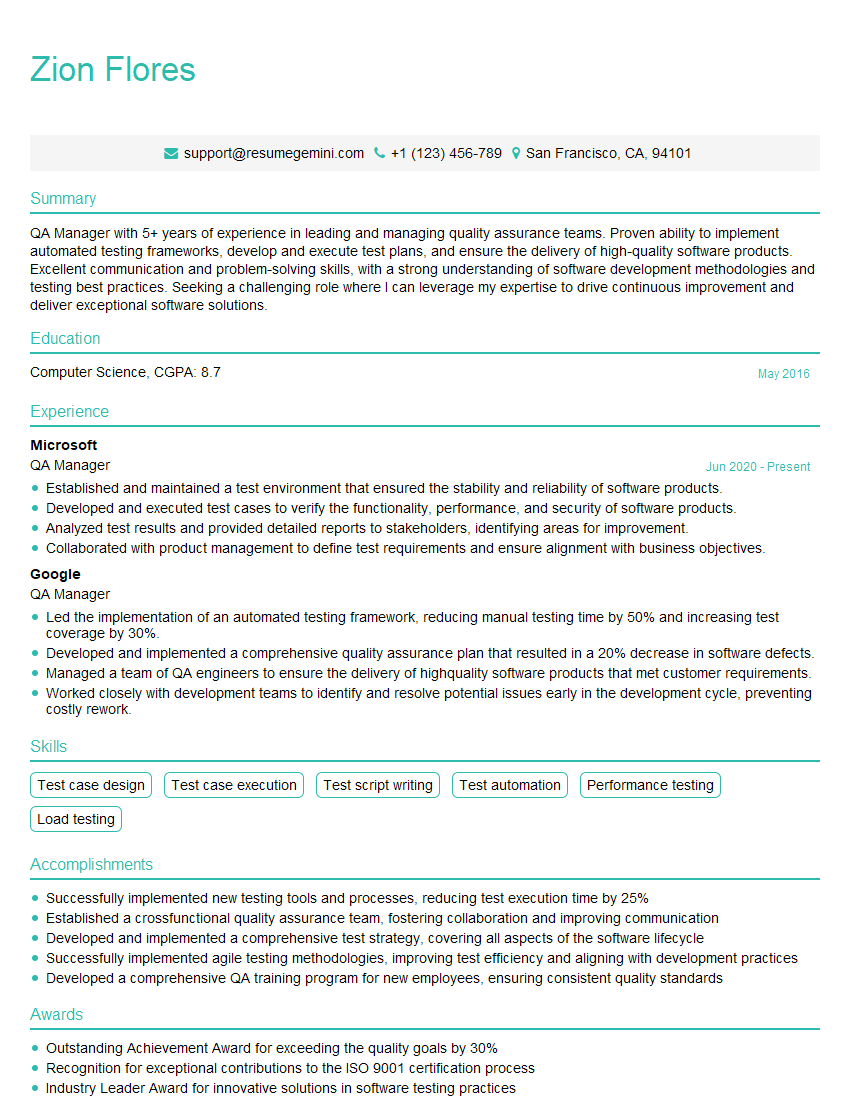

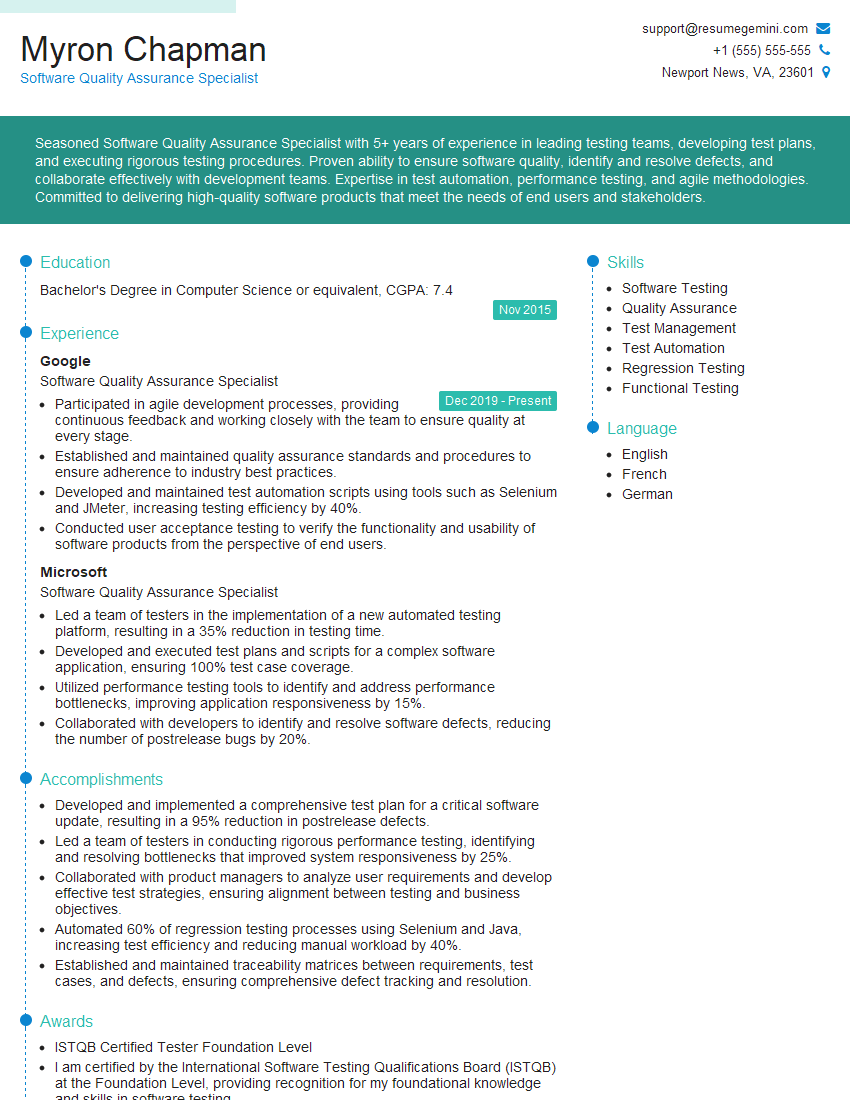

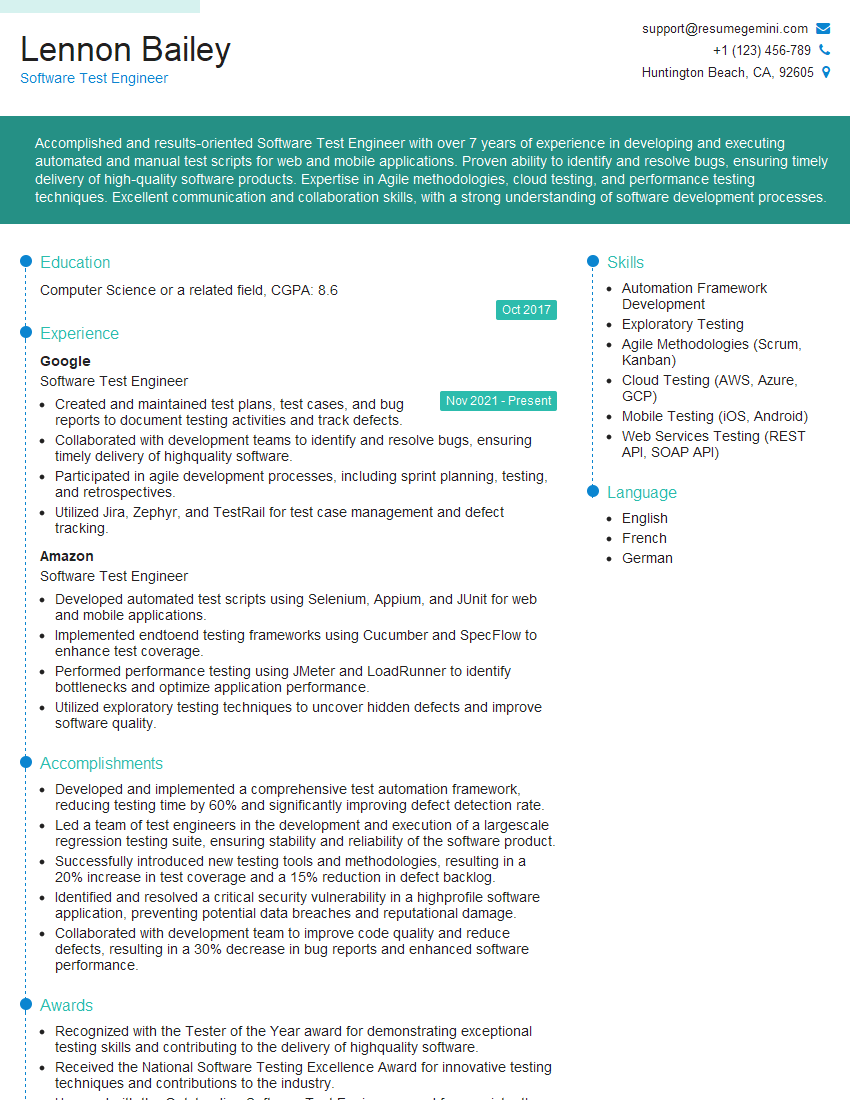

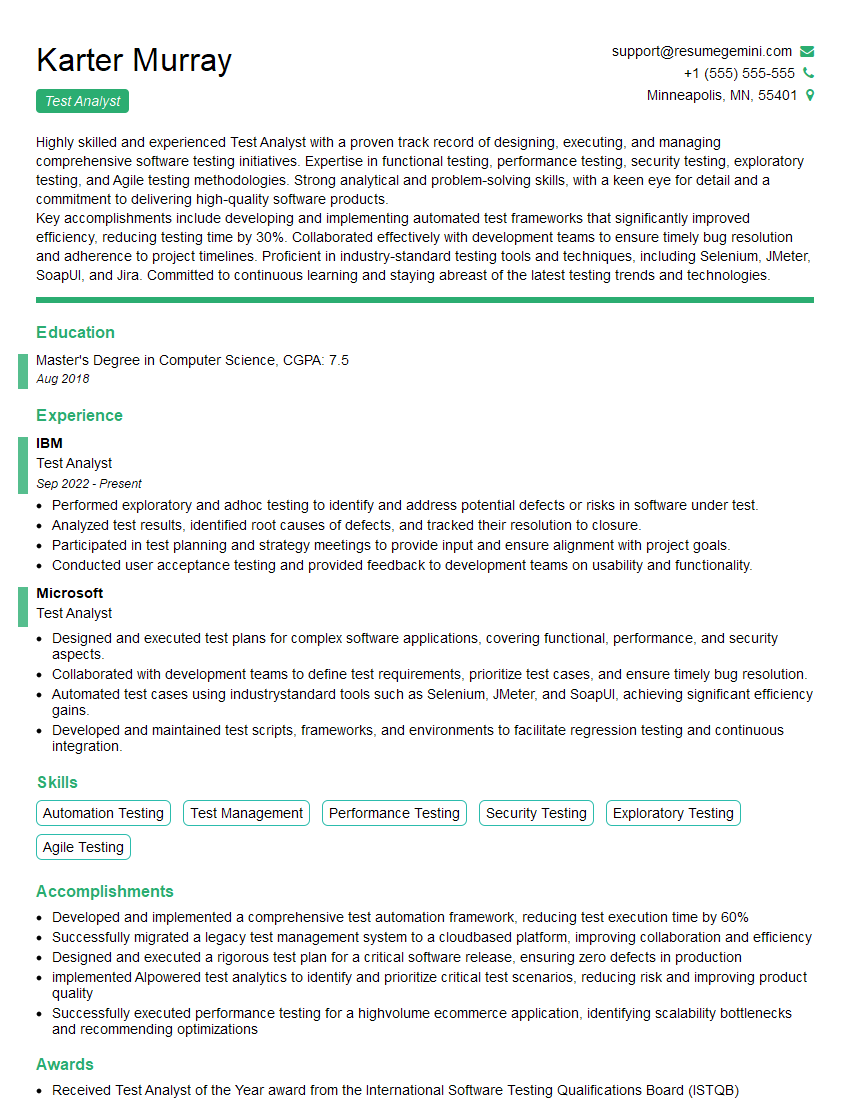

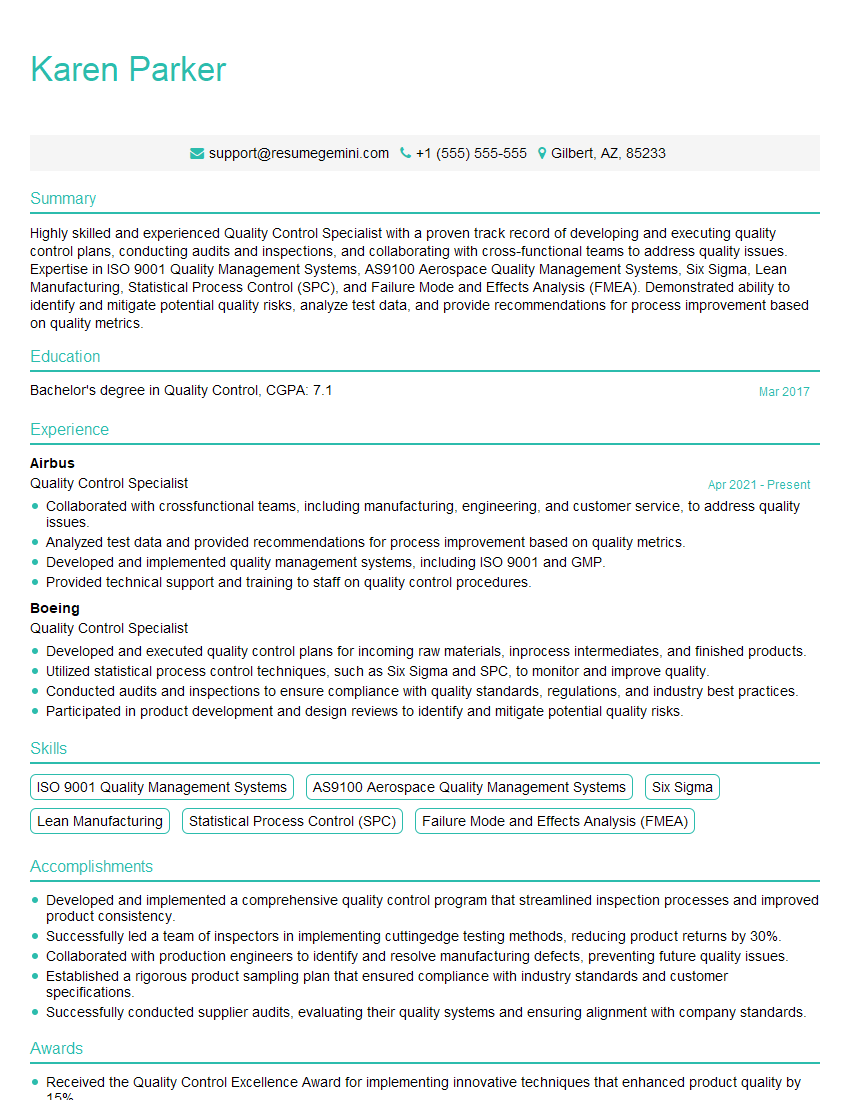

Mastering Quality Assurance techniques is crucial for career advancement in the ever-evolving tech landscape. A strong understanding of these concepts significantly increases your marketability and opens doors to exciting opportunities. To maximize your chances, create an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a valuable resource to help you build a professional and impactful resume. We provide examples of resumes tailored to Knowledge of Quality Assurance Techniques to guide you. Invest the time in crafting a compelling resume – it’s your first impression and a key to unlocking your career goals.
