Every successful interview starts with knowing what to expect. In this blog post, we’ll take you through the top Analytical Thinking and Decision Making interview questions, breaking them down with expert tips to help you deliver impactful answers. Step into your next interview fully prepared and ready to succeed.

Questions Asked in Analytical Thinking and Decision Making Interviews

Q 1. Describe your approach to solving a complex problem with limited data.

When faced with a complex problem and limited data, my approach centers on a structured process that prioritizes understanding, exploration, and informed estimation. I begin by clearly defining the problem, breaking it down into smaller, manageable components. This helps to focus my efforts and identify the most critical data gaps.

Next, I explore available data sources thoroughly, looking for any patterns or trends, even if they’re weak. I might employ techniques like exploratory data analysis (EDA) to visualize the data and uncover hidden insights. Qualitative information, such as expert opinions or anecdotal evidence, can also be surprisingly valuable in complementing limited quantitative data.

Where data is truly sparse, I rely on informed estimates built with statistical modeling or simulation. For instance, if I’m predicting customer churn with limited historical data, I might use Bayesian methods to incorporate prior beliefs and expert knowledge into the model. Sensitivity analysis then shows how much my conclusions depend on uncertain assumptions.
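A minimal sketch of the Bayesian idea, assuming a Beta prior on the churn rate (the standard conjugate choice for a yes/no outcome). The expert prior of 10% churn, the prior strengths, and the counts of 5 churners out of 30 customers are hypothetical numbers; looping over prior strengths doubles as a simple sensitivity analysis.

```python
def posterior_churn(prior_rate: float, prior_strength: float,
                    churned: int, observed: int) -> tuple[float, float, float]:
    """Beta-Binomial update of a churn rate.

    The prior Beta(alpha0, beta0) encodes an expert estimate `prior_rate`
    carrying the weight of `prior_strength` pseudo-customers (0 = data only).
    Returns (alpha, beta, posterior mean churn rate).
    """
    alpha0 = prior_rate * prior_strength
    beta0 = (1 - prior_rate) * prior_strength
    alpha = alpha0 + churned               # add observed churners
    beta = beta0 + (observed - churned)    # add observed retained customers
    return alpha, beta, alpha / (alpha + beta)


# Hypothetical data: 5 of 30 customers churned; expert prior of 10% churn.
for strength in (0, 10, 20, 50):
    _, _, mean = posterior_churn(0.10, strength, churned=5, observed=30)
    print(f"prior strength {strength:>2}: posterior mean churn = {mean:.3f}")
```

With a weak prior the estimate stays close to the raw 5/30; as the prior strengthens, it moves toward the expert’s 10%. If the business decision would flip across that range, the prior assumption deserves more scrutiny.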

Throughout this process, transparency and communication are crucial. I clearly document my assumptions, methods, and the limitations of my analysis, ensuring that the conclusions are presented with appropriate caveats. The goal isn’t to claim certainty with insufficient information, but to provide the best possible estimate supported by available evidence and sound methodology.

Q 2. Explain a time you identified a flawed assumption in a dataset or analysis.

During a project analyzing customer purchase behavior, we initially assumed a uniform distribution of customer spending across different product categories. However, our analysis revealed a highly skewed distribution, with a significant portion of revenue concentrated in a small number of product categories. This flawed assumption, if left uncorrected, would have led to inaccurate predictions of future sales and ineffective inventory management.

I identified the flaw through careful visual inspection of the data during exploratory data analysis. Histograms and box plots clearly showed the non-uniform distribution. Further investigation revealed a seasonal element influencing purchasing behavior, concentrated within specific product categories during particular times of year. We corrected this by segmenting the data by seasonality and employing a more appropriate distribution model to accurately reflect the purchasing patterns. This correction significantly improved the accuracy of our forecasts and informed more effective resource allocation.
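Alongside histograms and box plots, a quick numeric check of a uniform-spending assumption is to compare each season’s largest category share with the 1/k share that uniformity implies. The records below are hypothetical and only illustrate the calculation.

```python
from collections import defaultdict

# Hypothetical (season, category, revenue) records, for illustration only.
sales = [
    ("summer", "outdoor", 900), ("summer", "apparel", 60),
    ("summer", "electronics", 40),
    ("winter", "outdoor", 50), ("winter", "apparel", 300),
    ("winter", "electronics", 650),
]


def top_category_share(records):
    """Return {season: (top_category, its_revenue_share, uniform_share)}."""
    by_season = defaultdict(lambda: defaultdict(float))
    for season, category, revenue in records:
        by_season[season][category] += revenue
    result = {}
    for season, totals in by_season.items():
        total = sum(totals.values())
        top = max(totals, key=totals.get)
        # Under a uniform assumption every category would earn 1/k of revenue.
        result[season] = (top, totals[top] / total, 1 / len(totals))
    return result


for season, (top, share, uniform) in top_category_share(sales).items():
    print(f"{season}: {top} earns {share:.0%} of revenue (uniform would be {uniform:.0%})")
```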

Q 3. How do you prioritize tasks when facing competing deadlines and multiple projects?

Prioritizing tasks with competing deadlines requires a structured approach combining urgency, importance, and dependency analysis. I typically sort tasks by urgency and importance using an Eisenhower Matrix (Urgent/Important), which helps me focus my energy on high-impact tasks first.

Understanding task dependencies is also crucial. Some tasks must be completed before others can begin, so a clear understanding of the workflow is essential. Tools like Gantt charts can be incredibly helpful for visualizing task timelines and dependencies.
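Urgency, importance, and dependencies can be combined in a short sketch. It uses Python’s standard-library `graphlib.TopologicalSorter` (Python 3.9+) so that a task is only scheduled once its prerequisites are done, then picks the best Eisenhower quadrant among the ready tasks. The task names, flags, and dependencies are hypothetical, and the greedy rule is one reasonable choice, not the only one.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks: name -> (urgent, important).
TASKS = {
    "clean data": (True, True),
    "build model": (False, True),
    "write report": (True, True),
    "reply to vendor": (True, False),
    "update wiki": (False, False),
}
# Hypothetical dependencies: task -> tasks that must finish first.
DEPENDS_ON = {"build model": {"clean data"}, "write report": {"build model"}}

# Eisenhower quadrants in order: do first, schedule, delegate, drop/defer.
QUADRANT_RANK = {(True, True): 0, (False, True): 1, (True, False): 2, (False, False): 3}


def schedule(tasks, depends_on):
    """Greedy order: among tasks whose prerequisites are done, take the best quadrant."""
    sorter = TopologicalSorter({t: depends_on.get(t, set()) for t in tasks})
    sorter.prepare()  # raises graphlib.CycleError if dependencies form a cycle
    ready, order = [], []
    while sorter.is_active():
        ready.extend(sorter.get_ready())
        ready.sort(key=lambda t: (QUADRANT_RANK[tasks[t]], t))  # quadrant, then name
        nxt = ready.pop(0)
        order.append(nxt)
        sorter.done(nxt)
    return order


print(schedule(TASKS, DEPENDS_ON))
```

The printed order is clean data, build model, write report, reply to vendor, update wiki: “write report” is urgent and important but still waits for “build model”, which is why dependency analysis matters alongside the matrix.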

Finally, effective communication is key. I openly discuss priorities and potential bottlenecks with my team and stakeholders, ensuring everyone is aligned and aware of potential delays or resource constraints. Regularly reviewing and adjusting the priority list based on changing circumstances helps maintain flexibility and adaptability in a dynamic environment.

Q 4. Walk me through your process for evaluating the risks associated with a particular decision.

Evaluating the risks associated with a decision involves a systematic process that identifies potential negative outcomes and assesses their likelihood and impact. I typically begin by identifying the plausible outcomes of the decision, both positive and negative. This brainstorming process can involve techniques like SWOT analysis (Strengths, Weaknesses, Opportunities, Threats) or a decision tree.

For each identified risk, I then assess its likelihood of occurrence and potential impact. This often involves both qualitative judgments (e.g., high, medium, low) and quantitative estimations (e.g., probability of failure, financial loss). A risk matrix helps visualize this information, facilitating comparison of different risks.
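A risk matrix often boils down to a likelihood-times-impact score mapped to bands. The sketch below assumes 1-5 scales and hypothetical band thresholds (12 and above is high, 6 to 11 is medium); real organizations set their own scales and cut-offs, and the risks listed are illustrative.

```python
# Hypothetical risks scored on 1-5 scales: name -> (likelihood, impact).
risks = {
    "key supplier delay": (4, 3),
    "data breach": (2, 5),
    "budget overrun": (3, 3),
    "minor UI bug": (4, 1),
}


def rate(likelihood: int, impact: int) -> str:
    """Bucket a likelihood x impact score into a risk-matrix band (assumed thresholds)."""
    score = likelihood * impact
    if score >= 12:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


# Rank risks from highest to lowest combined score.
ranked = sorted(risks.items(), key=lambda kv: kv[1][0] * kv[1][1], reverse=True)
for name, (lik, imp) in ranked:
    print(f"{name:<20} score={lik * imp:>2}  band={rate(lik, imp)}")
```

Many teams also flag any maximum-impact risk, such as the data breach here, for mitigation regardless of its combined score.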

Finally, I develop mitigation strategies for the most significant risks. These strategies might include contingency plans, risk transfer (e.g., insurance), or risk avoidance altogether. The choice of mitigation strategy depends on the cost and effectiveness of various options. The result is an informed decision that accounts for potential downsides and includes proactive strategies to mitigate them.

Q 5. How do you approach interpreting conflicting data from multiple sources?

Interpreting conflicting data from multiple sources requires a critical and systematic approach. I start by carefully examining each data source for potential biases, inaccuracies, or methodological differences. This might involve reviewing the data collection methods, sample sizes, and the potential influence of external factors.

Next, I look for patterns and commonalities among the datasets, even if they seem contradictory at first glance. This often reveals underlying truths or highlights areas where more investigation is needed. Statistical methods such as meta-analysis can combine estimates from different sources rigorously, weighting each source by its precision, which reflects both sample size and measurement reliability.
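For the meta-analytic step, a common starting point is fixed-effect, inverse-variance pooling: each source’s estimate is weighted by 1/SE², so larger and more reliable sources count for more. The three sources and their numbers are hypothetical.

```python
import math

# Hypothetical estimates of the same quantity (e.g., conversion uplift in
# percentage points) from three sources: name -> (estimate, standard error).
sources = {"survey": (2.0, 1.0), "web logs": (1.2, 0.4), "panel": (3.5, 2.0)}


def fixed_effect_pool(estimates):
    """Inverse-variance (fixed-effect) pooling: precise sources get more weight."""
    weights = {k: 1 / se**2 for k, (_, se) in estimates.items()}
    total_w = sum(weights.values())
    pooled = sum(weights[k] * est for k, (est, _) in estimates.items()) / total_w
    pooled_se = math.sqrt(1 / total_w)
    return pooled, pooled_se


pooled, se = fixed_effect_pool(sources)
print(f"pooled estimate = {pooled:.2f} (SE {se:.2f})")
```

If the sources disagree by more than their standard errors can explain, a random-effects model, which lets the true value vary between sources, is usually the safer choice.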

If discrepancies remain after careful analysis, I try to understand the reasons for the conflict. It could be due to differences in definitions, measurement methods, or sampling biases. Further investigation, potentially including triangulation (cross-checking against an additional, independent source or method), is crucial to resolving the conflict and reaching a more reliable conclusion.

Q 6. Describe a situation where you had to make a difficult decision with incomplete information. What was your process?

In a previous role, we needed to decide whether to launch a new product with incomplete market research data. The available data suggested potential but didn’t definitively prove market demand. The decision was crucial, as a failed launch would have significant financial repercussions.

My approach involved a structured decision-making framework. First, I gathered all available information, even incomplete data points, and clearly articulated the uncertainties. I then explored different scenarios, using sensitivity analysis to understand how the outcome would change under different market conditions. We also sought expert opinions from marketing and sales, incorporating their qualitative insights into our assessment.

Despite incomplete information, we decided to launch a smaller-scale pilot program rather than a full-scale launch. This allowed us to test the market response with less risk, gathering valuable data to refine our strategies before a larger commitment. This phased approach reduced uncertainty and allowed us to make a more informed decision based on real-world feedback, rather than relying solely on incomplete initial data.

Q 7. How do you ensure the accuracy and reliability of your data analysis?

Ensuring the accuracy and reliability of data analysis is paramount. My approach involves a multi-faceted strategy focused on data quality, methodological rigor, and validation. I start by carefully vetting the data sources, ensuring they are reputable and relevant to the analysis. This includes examining data collection methods, potential biases, and the accuracy of data entry.

Methodologically, I favor rigorous and transparent approaches. This includes clearly documenting my analysis steps, using appropriate statistical techniques, and employing robust validation methods. Cross-validation (repeatedly fitting a model on part of the data and testing it on the held-out remainder) guards against results that only hold for one particular sample, and comparing results across different models or datasets helps confirm that my findings are robust.

Furthermore, I always check for errors and inconsistencies in the data. Outlier detection and data cleaning help me find erroneous data points that could skew the results, which I then correct or remove once I have confirmed they are errors rather than genuine extreme values. Finally, peer review and external validation, where feasible, provide an additional layer of quality control, ensuring that my analyses are accurate, reliable, and able to withstand scrutiny.
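One common outlier rule is Tukey’s fences, the same rule box plots use to draw outlier points: flag values more than 1.5 times the interquartile range (IQR) beyond the quartiles. This sketch uses the standard library’s `statistics.quantiles`; the order counts are hypothetical.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Flag points outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical daily order counts with one suspicious data-entry value.
orders = [102, 98, 105, 99, 101, 97, 103, 100, 1000, 104]
print(iqr_outliers(orders))  # [1000]
```

Flagged values are candidates for investigation, not automatic deletions: here 1000 looks like a data-entry error, but a genuine demand spike should be kept.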

Q 8. Explain a time you identified a critical error in an analysis. What actions did you take?

During a project analyzing customer churn, I discovered a significant discrepancy. My initial analysis, using a simple regression model, indicated that customer service interactions were the primary driver of churn. However, a deeper dive revealed a flaw: the model didn’t account for the fact that customers who were already planning to churn were more likely to contact customer service as a last resort. The correlation was real, but the model was reading cause and effect backwards: impending churn was driving the service contacts.

My immediate actions were:

- Verification: I revisited the data cleaning and preprocessing steps to ensure no errors had occurred there.

- Alternative Modeling: I implemented a survival analysis model, which is better suited for analyzing time-to-event data like churn. This model allowed me to control for other factors influencing churn, like contract length and demographics.

- Sensitivity Analysis: I performed sensitivity analysis to understand how my results varied based on the assumptions of the model.

- Communication: I documented my findings and the methodological changes, clearly explaining the initial error and the revised analysis to my team. We discussed the implications for our customer retention strategies.

The revised analysis, incorporating the survival model, correctly identified marketing campaign effectiveness and product usability issues as the key drivers of churn, leading to a more targeted and effective customer retention plan.
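Part of why survival analysis suits churn is that it uses customers who have not churned yet (right-censored observations) instead of discarding them. A from-scratch Kaplan-Meier estimator illustrates this on hypothetical durations; adjusting for covariates such as contract length would need a regression model like Cox proportional hazards, which libraries such as lifelines provide.

```python
from collections import Counter


def kaplan_meier(durations, churned):
    """Kaplan-Meier survival curve as [(time, probability still active)].

    durations: months each customer was observed.
    churned:   True if the customer churned at that time, False if they were
               still active when observation stopped (right-censored).
    """
    events = Counter(t for t, c in zip(durations, churned) if c)
    exits = Counter(durations)        # everyone leaves the risk set at their duration
    at_risk = len(durations)
    survival, curve = 1.0, []
    for t in sorted(exits):
        churn_count = events.get(t, 0)
        if churn_count:
            survival *= 1 - churn_count / at_risk
            curve.append((t, survival))
        at_risk -= exits[t]           # churned and censored customers both leave
    return curve


# Hypothetical data for eight customers.
durations = [2, 3, 3, 5, 6, 8, 8, 12]
churned = [True, True, False, True, False, True, False, False]
for month, prob in kaplan_meier(durations, churned):
    print(f"month {month:>2}: {prob:.3f} still active")
```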

Q 9. How do you communicate complex analytical findings to a non-technical audience?

Communicating complex analytical findings to a non-technical audience requires translating technical jargon into plain language and focusing on the story, not the methodology. I use a storytelling approach, combining clear visualizations with simple language.

Here’s my strategy:

- Start with the “So What?”: Begin by highlighting the key insights and their implications in plain language. What are the main takeaways and why do they matter?

- Use Visualizations: Charts and graphs are powerful tools for communicating complex data simply and effectively. I prefer clear and uncluttered charts like bar graphs, line graphs, and pie charts – avoiding overly complicated designs.

- Analogy and Metaphor: Relating complex concepts to everyday experiences can make them more accessible. For example, I might explain statistical significance by asking how likely it is that a fair coin lands heads ten times in a row: possible, but rare enough to make you suspect the coin.

- Focus on the Narrative: Frame the analysis as a story, with a beginning (the problem), a middle (the analysis), and an end (the solution or recommendations).

- Interactive Dashboards (when appropriate): For more involved audiences, interactive dashboards can allow them to explore the data at their own pace and delve into details if they wish.

For example, instead of saying “The p-value was less than 0.05, indicating statistical significance,” I might say, “If there were no real link between X and Y, we would see a pattern this strong less than 5% of the time, so the relationship is unlikely to be a fluke.”
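For the analyst’s own reference, the coin analogy can be made exact with the binomial distribution. This sketch computes the chance of 10 heads in 10 fair flips and of 9 or more heads; the second figure is the same kind of one-sided tail probability a p-value reports.

```python
from math import comb


def prob_at_least(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p): a one-sided tail probability."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


print(f"P(10 heads in 10 fair flips)   = {prob_at_least(10, 10):.4f}")  # 1/1024
print(f"P(9 or more heads in 10 flips) = {prob_at_least(9, 10):.4f}")   # 11/1024
```

The results are roughly 0.1% and 1%, which is the intuition to convey: if the coin were fair, results this extreme would be rare.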

Q 10. What are some common biases that can affect decision-making, and how do you mitigate them?

Cognitive biases are systematic errors in thinking that can affect our judgments and decisions. Some common biases include:

- Confirmation Bias: The tendency to search for, interpret, favor, and recall information in a way that confirms or supports one’s prior beliefs or values.

- Anchoring Bias: Over-reliance on the first piece of information received (the “anchor”) even if it’s irrelevant.

- Availability Heuristic: Overestimating the likelihood of events that are easily recalled, often due to their vividness or recent occurrence.

- Overconfidence Bias: Overestimating one’s own abilities or the accuracy of one’s predictions.

To mitigate these biases, I employ several strategies:

- Data-Driven Approach: Relying on objective data and evidence, rather than gut feelings or assumptions.

- Seeking Diverse Perspectives: Engaging with colleagues who have different viewpoints and experiences to challenge my assumptions and identify blind spots.

- Structured Decision-Making Frameworks: Utilizing frameworks like cost-benefit analysis or decision trees to ensure a systematic, less biased approach.

- Sensitivity Analysis: Testing the robustness of my conclusions by varying the assumptions or inputs to the analysis.

- Regular Self-Reflection: Actively reflecting on my own biases and potential sources of error.

For instance, when evaluating a new product launch, I’d seek data from several sources and challenge my own assumptions by considering alternative scenarios, so that I am not anchored to the initial market research.

Q 11. How do you use data visualization to enhance decision-making?

Data visualization is crucial for enhancing decision-making by making complex data more understandable and actionable. It transforms raw data into easily digestible visual representations, revealing patterns, trends, and outliers that are easy to miss in tables of numbers.

I use data visualization in several ways:

- Identifying Trends and Patterns: Line graphs are effective for showing trends over time, while scatter plots reveal correlations between variables.

- Comparing Data: Bar charts excel at comparing different categories or groups; pie charts can show parts of a whole when there are only a few categories.

- Highlighting Outliers: Box plots effectively showcase data distribution and highlight outliers that may warrant further investigation.

- Communicating Insights: Visualizations are a powerful tool for presenting findings to both technical and non-technical audiences.

- Interactive Dashboards: These allow users to explore data dynamically, filtering and drilling down into specific aspects.

For example, visualizing website traffic data using a line graph can quickly show seasonal patterns or the impact of a marketing campaign. A heatmap can illustrate geographic variations in sales performance, pinpointing high-performing and underperforming regions.

Q 12. Describe a time you used statistical modeling to support a business decision.

In a previous role, we were considering expanding our product line into a new market segment. To support this decision, I built a regression model to predict the potential market demand for the new product.

The model incorporated factors such as:

- Market size: Data on the population size and demographics of the target segment.

- Competitor analysis: Data on the existing competitors in the market, their market share, and pricing strategies.

- Pricing strategy: Different pricing scenarios were tested in the model.

- Marketing spend: Various levels of marketing investment were included to assess their impact on sales.

The model generated forecasts for different scenarios, allowing us to assess the potential profitability and risk associated with entering the new market. The results showed that under certain pricing and marketing scenarios, the market entry would be profitable. This quantitative analysis significantly influenced the decision to proceed with the product line expansion, and the model’s projections helped justify the investment and provided a framework for monitoring performance post-launch.
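The scenario step can be sketched as a grid over price and marketing spend fed through a demand equation. The coefficients below are hypothetical stand-ins for a fitted regression (they are not from the project described), and the model is deliberately linear to keep the arithmetic visible.

```python
from itertools import product

# Hypothetical coefficients standing in for a fitted demand regression.
BASE_UNITS = 2000      # intercept
PRICE_COEF = -25       # units lost per $1 increase in price
MARKETING_COEF = 0.06  # units gained per $1 of marketing spend
UNIT_COST = 20
FIXED_COST = 15_000


def forecast_units(price, marketing):
    """Linear demand forecast, floored at zero."""
    return max(0.0, BASE_UNITS + PRICE_COEF * price + MARKETING_COEF * marketing)


def profit(price, marketing):
    """Contribution margin on forecast units, minus marketing and fixed costs."""
    return (price - UNIT_COST) * forecast_units(price, marketing) - marketing - FIXED_COST


scenarios = {(p, m): profit(p, m) for p, m in product((30, 45, 60), (0, 10_000, 20_000))}
for (p, m), value in sorted(scenarios.items(), key=lambda kv: kv[1], reverse=True):
    flag = "profitable" if value > 0 else "loss"
    print(f"price ${p}, marketing ${m:,}: profit ${value:,.0f} ({flag})")
```

With a purely linear model the best scenario always sits at a corner of the grid; a real demand model would normally include diminishing returns to marketing and report uncertainty around each forecast.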

Q 13. How familiar are you with different statistical methods and when would you apply each?

I am familiar with a range of statistical methods and choose the most appropriate based on the research question, data type, and assumptions.

Here are a few examples:

- Regression Analysis (Linear, Logistic, etc.): Used to model the relationship between a dependent variable and one or more independent variables. Linear regression is suitable for continuous dependent variables, while logistic regression is used for binary outcomes.

- Hypothesis Testing (t-tests, ANOVA, Chi-square): Used to test specific hypotheses about population parameters. A t-test compares means between two groups, while ANOVA compares means across multiple groups. A chi-square test checks for association between categorical variables.

- Time Series Analysis (ARIMA, Exponential Smoothing): Used to analyze data collected over time, identifying trends, seasonality, and other patterns. Helpful for forecasting future values.

- Clustering (K-means, Hierarchical): Used to group similar data points together. Useful for customer segmentation or market research.

- Survival Analysis (Kaplan-Meier, Cox proportional hazards): Used to analyze time-to-event data, like customer churn or equipment failure.

The choice of method depends heavily on the specific context. For example, if I am analyzing customer satisfaction scores, I might use a t-test to compare satisfaction levels between two customer segments. If forecasting sales is the goal, a time series model is more appropriate. For understanding how various factors influence when customers churn, survival analysis is often a strong choice because it handles customers who have not churned yet. It’s important to consider the data’s properties and each method’s assumptions, and to interpret the results carefully.
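To make the t-test example concrete, this sketch computes Welch’s t statistic, a variant that does not assume equal variances in the two segments, from scratch. The scores are hypothetical; `scipy.stats.ttest_ind(a, b, equal_var=False)` returns the same statistic along with a p-value.

```python
import math
import statistics


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    vb = statistics.variance(b) / len(b)  # squared standard error of mean(b)
    t = (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va**2 / (len(a) - 1) + vb**2 / (len(b) - 1))
    return t, df


# Hypothetical satisfaction scores (1-10) for two customer segments.
segment_a = [7, 8, 6, 9, 7, 8, 7, 8]
segment_b = [5, 6, 7, 5, 6, 4, 6, 5]
t, df = welch_t(segment_a, segment_b)
print(f"t = {t:.2f}, df = {df:.1f}")
```

For these scores it gives t of about 4.32 with 14 degrees of freedom.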

Q 14. Explain the concept of ‘root cause analysis’ and provide a specific example of its application.

Root cause analysis (RCA) is a systematic approach to identifying the underlying cause of a problem, rather than just addressing the symptoms, so that the problem does not recur. Think of it like fixing a leaky faucet – you could just keep wiping up the water, or you could find and fix the leak itself.

The “5 Whys” technique is a common RCA method. You repeatedly ask “Why?” to drill down to the root cause. Let’s illustrate with an example:

Problem: High customer complaints about late deliveries.

- Why? Because many orders were not shipped on time.

- Why? Because the warehouse was understaffed during peak season.

- Why? Because we didn’t hire enough temporary workers for the peak season.

- Why? Because the demand forecasting was inaccurate.

- Why? Because we didn’t adequately account for the impact of a new marketing campaign on order volume.

The root cause is the inaccurate demand forecasting due to a failure to account for the marketing campaign’s impact. Solving the problem requires improving the demand forecasting model, not just hiring more temporary staff.

Other RCA methods include Fishbone Diagrams (Ishikawa diagrams) and Fault Tree Analysis. The choice of method depends on the complexity of the problem and the available data. The key is to be systematic and avoid jumping to conclusions based on surface-level observations.

Q 15. How do you differentiate between correlation and causation?

Correlation and causation are often confused, but they represent distinct relationships between variables. Correlation simply indicates a statistical relationship between two or more variables – they tend to change together. This relationship can be positive (both increase together), negative (one increases as the other decreases), or zero (no apparent relationship). Causation, on the other hand, means that one variable directly influences or causes a change in another. Just because two variables are correlated doesn’t mean one causes the other.

Example: Ice cream sales and crime rates are often positively correlated – both tend to be higher in the summer. However, this doesn’t mean that eating ice cream causes crime, or vice versa. The underlying factor is the warm weather, which influences both.

To differentiate, you need to consider other factors, use control groups, and ideally conduct experiments to establish a causal link. Regression analysis can quantify an association and adjust for confounders you have measured, but on observational data alone it cannot prove causation.

Q 16. How do you handle uncertainty and ambiguity in your decision-making process?

Uncertainty and ambiguity are inherent in many decision-making situations. My approach involves a structured process:

- Define the problem clearly: Start by carefully outlining the problem, including known and unknown factors. The more precise the problem definition, the better we can address the uncertainties.

- Gather information: Actively seek out all relevant data, even if it’s incomplete, and note how reliable each source is. Multiple data sources provide a more robust picture.

- Assess risks and probabilities: Using available data and expert judgment, estimate the likelihood of different outcomes. Techniques like scenario planning can be helpful here, where we create several possible future scenarios based on various assumptions.

- Use decision-making frameworks: Employ frameworks such as decision trees or multi-criteria decision analysis (MCDA) to systematically evaluate options and their potential consequences. These tools help quantify and weigh different factors, even with uncertainty.

- Embrace iterative decision-making: Recognize that decisions may need adjustments as new information becomes available. A continuous monitoring and feedback loop is crucial.

Example: In launching a new product, market research might yield incomplete data on consumer preferences. Instead of waiting for perfect information, I’d gather available data, conduct focus groups, and utilize market research models to predict potential success based on various scenarios (e.g., high vs. low adoption rates). The launch would then be treated as a pilot, with ongoing monitoring and adjustments based on market response.
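Treating the launch as a decision tree makes the value of a pilot explicit. The probabilities and payoffs below (in $M) are hypothetical; the pilot branch assumes it reveals adoption before the full investment, so the company scales up only in the high-adoption case.

```python
# Hypothetical probabilities and payoffs in $M, for illustration only.
P_HIGH = 0.4        # chance that adoption turns out high
PILOT_COST = 0.3


def expected_value(branches):
    """Expected value at a chance node; branches are (probability, payoff) pairs."""
    return sum(p * v for p, v in branches)


options = {
    # Commit fully now: big win if adoption is high, big loss if it is low.
    "full launch": expected_value([(P_HIGH, 5.0), (1 - P_HIGH, -2.0)]),
    # Pilot first: adoption is revealed, then scale up only if it is high
    # (slightly lower payoff because of the delay); otherwise stop.
    "pilot first": expected_value([(P_HIGH, 4.5 - PILOT_COST), (1 - P_HIGH, -PILOT_COST)]),
    "no launch": 0.0,
}
for name, ev in options.items():
    print(f"{name:<12} EV = {ev:+.2f}M")
print("best option:", max(options, key=options.get))
```

With these made-up numbers, full launch only overtakes the pilot once the chance of high adoption exceeds 0.68, which is the kind of threshold worth testing in a sensitivity analysis.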

Q 17. Explain your understanding of cost-benefit analysis.

Cost-benefit analysis (CBA) is a systematic approach to evaluating the merits of a project or decision by comparing its total costs to its total benefits. It’s a powerful tool for making informed choices, particularly when resources are scarce.

A well-conducted CBA involves:

- Identifying all relevant costs: This includes direct costs (e.g., materials, labor), indirect costs (e.g., opportunity costs, administrative overhead), and intangible costs (e.g., environmental impact).

- Quantifying costs and benefits: Expressing both costs and benefits in monetary terms (or other comparable units) is crucial. This might involve using estimates and making assumptions about future events.

- Discounting future cash flows: Because money today is worth more than money in the future (due to inflation and potential investment opportunities), future costs and benefits must be discounted to their present values.

- Calculating the net present value (NPV): The NPV is the sum of the discounted benefits minus the sum of the discounted costs. A positive NPV indicates that, at the chosen discount rate and under the stated assumptions, the benefits outweigh the costs.

- Sensitivity analysis: This involves examining how the NPV changes when assumptions about costs and benefits are varied. This helps assess the robustness of the analysis and identify critical uncertainties.

Example: A company might use CBA to decide whether to invest in a new manufacturing facility. They’d estimate the costs of construction, equipment, labor, etc. and the benefits from increased production and sales. The CBA would then show whether the investment is likely to generate a positive return.
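The discounting and NPV steps reduce to NPV = sum over years t of (B_t - C_t) / (1 + r)^t, where B_t and C_t are the benefits and costs in year t and r is the discount rate. A minimal sketch with a hypothetical facility ($10M up front, then $3M net benefit a year for five years), repeated across discount rates as a simple sensitivity analysis:

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[t] is the net flow (benefits - costs) in year t."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


# Hypothetical facility, in $M: 10 to build now, then 3 net benefit per year for 5 years.
flows = [-10.0] + [3.0] * 5

# Sensitivity analysis: how does the verdict change with the discount rate?
for rate in (0.05, 0.10, 0.20):
    print(f"discount rate {rate:.0%}: NPV = {npv(rate, flows):+.2f}M")
```

With these made-up figures the NPV is positive at 5% and 10% but turns negative at 20%, so the decision hinges on the discount rate chosen.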

Q 18. Describe a situation where you had to justify a recommendation based on quantitative data.

During a project to optimize our customer service call center, I analyzed call handling times, customer satisfaction scores, and agent productivity metrics. I found a strong correlation between average handling time and customer satisfaction: longer calls were associated with lower satisfaction scores. I used regression analysis to quantify this relationship, estimating that a reduction in average handling time by 1 minute could lead to an X% increase in customer satisfaction.

My recommendation was to invest in a new call routing system and implement updated training protocols for agents, focusing on efficient call resolution techniques. This recommendation was supported by a detailed cost-benefit analysis showing a significant return on investment in terms of improved customer satisfaction and reduced operational costs. The quantitative data presented in charts and graphs, clearly illustrating the relationship between handling time and customer satisfaction, helped secure leadership buy-in.

Q 19. How do you stay updated on the latest advancements in analytical tools and techniques?

Staying current in the rapidly evolving field of analytics requires a multi-faceted approach:

- Professional development courses and conferences: I regularly attend workshops and conferences to learn about new techniques and tools. These events often feature leading experts and offer hands-on experience with cutting-edge technologies.

- Online learning platforms and resources: Sites like Coursera, edX, and DataCamp offer excellent courses on various analytical techniques, programming languages (like Python and R), and data visualization tools.

- Industry publications and journals: Keeping up with industry publications, journals, and research papers allows me to stay abreast of the latest findings and advancements in my field.

- Networking and collaboration: Engaging with colleagues, attending meetups, and participating in online communities fosters the exchange of knowledge and best practices. Discussions with peers are a fantastic source of learning.

- Hands-on projects and experimentation: Applying new techniques and tools to real-world datasets helps solidify understanding and builds practical experience.

Q 20. Describe your experience with different data analysis software and tools.

I possess extensive experience with a range of data analysis software and tools, including:

- Programming Languages: Python (with libraries like Pandas, NumPy, Scikit-learn), R (with packages like dplyr, ggplot2)

- Data Visualization Tools: Tableau, Power BI, matplotlib, seaborn

- Statistical Software: SPSS, SAS

- Databases: SQL, with MySQL and PostgreSQL as database management systems

- Cloud Computing Platforms: AWS, Azure, GCP (for data storage and processing)

My proficiency in these tools enables me to effectively handle diverse data analysis tasks, from data cleaning and preprocessing to complex statistical modeling and insightful data visualization.

Q 21. How do you define success in your analytical work?

Success in my analytical work is defined by a combination of factors:

- Accuracy and reliability of insights: My analyses must be rigorously conducted and produce reliable, defensible conclusions. This includes using appropriate methodologies, validating findings, and carefully considering potential biases.

- Actionable recommendations: The insights I provide should be directly applicable and drive informed decision-making. They need to be clear, concise, and easily understood by the intended audience.

- Impact and contribution to business goals: Ultimately, my work should contribute meaningfully to the achievement of organizational objectives, whether it’s improving efficiency, increasing revenue, or mitigating risk. A successful analysis is one that leads to tangible improvements.

- Communication and collaboration: Effectively communicating my findings to both technical and non-technical audiences is critical. Collaboration with stakeholders is key to ensuring the analysis addresses the right questions and the results are effectively implemented.

Q 22. How do you measure the effectiveness of a decision you’ve made?

Measuring the effectiveness of a decision isn’t a simple yes or no; it’s a multifaceted process requiring careful consideration of both intended and unintended consequences. We need to establish clear, measurable objectives before making the decision. This forms our baseline for evaluation. Then, post-decision, we track key performance indicators (KPIs) related to those objectives.

- Quantitative Metrics: For example, if the decision was to launch a new marketing campaign, we’d track website traffic, sales conversions, and customer acquisition cost. Rising traffic and conversions together with a falling acquisition cost, meeting or beating the pre-defined targets, would indicate effectiveness.

- Qualitative Metrics: We also need to consider less easily quantifiable aspects like customer satisfaction (through surveys or reviews), employee morale (if the decision impacted the team), and brand reputation. This often involves gathering qualitative data like feedback forms or conducting interviews.

- Comparative Analysis: Comparing the actual outcomes against a counterfactual – what would have happened if a different decision had been made – provides valuable insight. This might involve scenario planning or A/B testing.
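When A/B testing is an option, a two-proportion z-test is one standard way to check whether a difference in conversion rates is larger than chance would explain. The visitor and conversion counts are hypothetical; the normal tail probability comes from `math.erfc`, so no third-party library is needed.

```python
import math


def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (pooled variance)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical test: control converts 200 of 5,000 visitors, variant 250 of 5,000.
z, p = two_proportion_z(200, 5000, 250, 5000)
print(f"z = {z:.2f}, two-sided p = {p:.3f}")
```

Here the variant’s 5% versus 4% conversion gives z of about 2.41 and p of roughly 0.016.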

Ultimately, measuring effectiveness requires a holistic approach, combining quantitative data with qualitative insights to create a complete picture of the decision’s impact.

Q 23. How do you deal with conflicting priorities in a fast-paced environment?

Conflicting priorities in a fast-paced environment are a common challenge. My approach involves a structured prioritization framework, often employing a combination of techniques.

- Prioritization Matrix: I use a matrix plotting urgency versus importance. High urgency, high importance tasks get immediate attention. High importance, low urgency tasks are scheduled. Low importance tasks may be delegated or deferred.

- MoSCoW Method: This involves categorizing requirements as Must have, Should have, Could have, and Won’t have. This allows for a clear understanding of which tasks are essential and which can be sacrificed if necessary to meet deadlines.

- Timeboxing: I allocate specific time blocks to each task, enforcing focus and preventing scope creep. This ensures that even amidst many competing demands, progress is made on each critical item.

- Communication and Collaboration: Open communication with stakeholders is key. It ensures everyone is on the same page about priorities and helps manage expectations. Sometimes, renegotiating deadlines or adjusting scope becomes necessary.
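MoSCoW and timeboxing combine naturally: fill a fixed block of hours in Must, Should, Could order and never schedule the Won’t items. The backlog and hour estimates are hypothetical, and the greedy rule (skip an item that doesn’t fit, keep trying smaller ones) is one simple choice.

```python
# Hypothetical backlog: (task, MoSCoW category, estimated hours).
backlog = [
    ("fix revenue report", "Must", 6),
    ("refresh churn model", "Should", 8),
    ("add dashboard filter", "Could", 3),
    ("migrate old notebooks", "Won't", 10),
    ("answer audit request", "Must", 4),
    ("tidy chart styles", "Could", 2),
]
PRIORITY = {"Must": 0, "Should": 1, "Could": 2}  # "Won't" is deliberately absent


def plan_timebox(backlog, hours_available):
    """Greedily fill a fixed timebox in MoSCoW order; "Won't" items are never scheduled."""
    candidates = sorted(
        (item for item in backlog if item[1] in PRIORITY),
        key=lambda item: PRIORITY[item[1]],  # stable sort keeps original order within a category
    )
    planned, deferred, used = [], [], 0
    for task, _category, hours in candidates:
        if used + hours <= hours_available:
            planned.append(task)
            used += hours
        else:
            deferred.append(task)
    return planned, deferred, used


planned, deferred, used = plan_timebox(backlog, hours_available=20)
print("planned:", planned, f"({used}h)")
print("deferred:", deferred)
```

With 20 hours available, the sketch schedules both Must items, the Should item, and the 2-hour Could item, deferring the dashboard filter. If a Must item ever lands in the deferred list, that is the signal to renegotiate scope or deadlines with stakeholders.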

Essentially, it’s about strategically allocating resources – time, effort, and personnel – based on a clear understanding of the relative value of each task. It’s a continuous process of re-evaluation and adjustment.

Q 24. Describe a time you had to make a quick, high-stakes decision. What was the outcome?

In a previous role, we faced a critical system failure during peak hours. The system processed vital financial transactions, and the downtime was causing significant financial losses and reputational damage. It was a high-stakes situation demanding a quick decision.

After a quick assessment traced the failure to a database server crash, we had to choose between attempting a quick fix that risked further damage and initiating a full system rollback, which would be slower but safer: it meant a longer period of downtime but would ensure data integrity.

We opted for the rollback, which meant a longer outage (about 3 hours). While frustrating for our clients, this decision minimized further damage, prevented data loss, and protected the company’s reputation. A post-incident analysis then established why the server had crashed, and we implemented preventive measures to avoid a recurrence.

The short-term outcome was negative because of the downtime, but choosing data integrity over a rapid, potentially risky fix proved to be the right call in the long term.

Q 25. How do you handle criticism of your analytical work?

Criticism is an invaluable opportunity for growth and improvement. I welcome constructive criticism and view it as a chance to refine my analytical work and learn from others’ perspectives. My approach involves:

- Active Listening: I listen carefully to understand the critic’s points, asking clarifying questions to ensure full comprehension.

- Objective Assessment: I objectively evaluate the criticism, separating emotional reactions from factual observations. I examine whether the points raised are valid and supported by evidence.

- Seeking Clarification: If aspects of the criticism are unclear, I seek further clarification to better understand the concerns.

- Reflection and Improvement: I reflect on the feedback and identify areas for improvement in my methodology, data handling, or communication. I incorporate the constructive feedback into my future work.

- Documentation: For significant projects, I maintain detailed documentation of my analytical process, allowing for easy review and addressing concerns effectively.

If the criticism is unfounded or based on misinterpretations, I politely but firmly explain my rationale, providing supporting evidence to substantiate my conclusions. My goal is continuous improvement, and constructive criticism is essential for that journey.

Q 26. Describe your approach to identifying and evaluating potential solutions to a problem.

My approach to identifying and evaluating potential solutions is systematic and iterative. It involves:

- Problem Definition: First, I clearly define the problem. This often involves understanding its root causes, not just the symptoms.

- Brainstorming: I brainstorm potential solutions using techniques like mind mapping or lateral thinking, encouraging diverse perspectives.

- Prioritization: I prioritize potential solutions based on feasibility, cost, and expected impact. A decision matrix or cost-benefit analysis can be helpful here.

- Solution Evaluation: I evaluate the shortlisted solutions using criteria like efficiency, effectiveness, risk mitigation, and scalability. This may involve prototyping or simulations.

- Refinement and Iteration: The solution may need refinement based on the evaluation results. This is an iterative process. I’m open to revisiting and improving upon the selected solution.

- Implementation Plan: Once a solution is finalized, I develop a comprehensive implementation plan that includes timelines, resource allocation, and risk management strategies.
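The prioritization step can be sketched as a weighted decision matrix. Each option is scored on each criterion, the scores are multiplied by the criterion weights, and the weighted totals are ranked. The weights, the 1 to 5 scale, and the three options below are hypothetical. Cost is scored so that a higher number always means "better" (cheaper), which keeps the sum meaningful.

```python
# Hypothetical weights for the criteria named above (they sum to 1.0)
WEIGHTS = {"feasibility": 0.3, "cost": 0.3, "impact": 0.4}

def rank_solutions(scores, weights=WEIGHTS):
    """Rank options by weighted score, highest first.

    scores maps option name -> {criterion: score on a 1-5 scale}; a higher
    score is always better, so a cheap option gets a high "cost" score.
    """
    totals = {
        option: sum(weights[c] * crit[c] for c in weights)
        for option, crit in scores.items()
    }
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical options for illustration
options = {
    "Automate the workflow": {"feasibility": 3, "cost": 2, "impact": 5},
    "Hire a contractor":     {"feasibility": 5, "cost": 3, "impact": 3},
    "Keep the status quo":   {"feasibility": 5, "cost": 5, "impact": 1},
}
for name, total in rank_solutions(options):
    print(f"{name}: {total:.2f}")
```

Here the totals are close (3.6, 3.5 and 3.4), so a small change in the weights could reorder them. That is a good cue to run a quick sensitivity check before moving on to evaluation.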

This structured approach ensures that I explore a range of possibilities before settling on the most effective and feasible solution, while considering various factors to ensure its success.

Q 27. How do you balance the need for speed in decision-making with the need for thorough analysis?

Balancing speed and thoroughness in decision-making is a delicate act, akin to navigating a tightrope. It requires a contextual approach, where the urgency and complexity of the situation dictate the level of analysis.

In high-pressure situations requiring immediate action, I might employ a simplified decision-making framework, focusing on critical factors and readily available information. This could involve using heuristics or rules of thumb drawn from past experience.

For more complex decisions with significant consequences, a more thorough analysis is warranted. This may involve using advanced analytical techniques, data modeling, and scenario planning to explore the potential outcomes of different options. The key is to prioritize the most impactful analyses while managing time constraints. This may require breaking down the problem into smaller, manageable parts and allocating time strategically.

In essence, it’s about adapting the level of analysis to the context, recognizing that sometimes a quick, informed decision is better than a perfect but delayed one. It’s about finding the sweet spot between informed speed and robust analysis.

Q 28. How would you approach the analysis of a large, unstructured dataset?

Analyzing a large, unstructured dataset requires a methodical approach. My strategy typically involves these steps:

- Data Exploration and Cleaning: The first step is exploring the data to understand its structure, identify missing values, and handle outliers. This may involve using tools like Python’s pandas library for data manipulation and cleaning.

- Data Reduction and Feature Engineering: Given the size of the data, I might apply dimensionality reduction, such as feature selection or principal component analysis (PCA), to reduce the number of variables while retaining important information. Feature engineering is also crucial: creating new features from existing ones can improve predictive power.

- Exploratory Data Analysis (EDA): I’d employ EDA techniques (using visualization tools like Matplotlib, Seaborn, or Tableau) to identify patterns, trends, and relationships within the data. This helps formulate hypotheses and guide subsequent analysis.

- Modeling and Analysis: Depending on the objective (e.g., prediction, clustering, or anomaly detection), I’d choose appropriate analytical techniques. This could range from simple statistical methods to advanced machine learning algorithms. Techniques like natural language processing (NLP) might be used if text data is present.

- Model Evaluation and Interpretation: It’s crucial to evaluate the model’s performance using appropriate metrics. For instance, accuracy, precision, recall, and F1-score are common metrics in classification problems. The results need to be interpreted in the context of the business problem.
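The classification metrics mentioned in the last step follow directly from the counts of true and false positives and negatives. The minimal sketch below computes them from scratch for a binary classifier. The labels are made-up toy data. In practice, a library such as scikit-learn (its `sklearn.metrics` module) provides equivalent functions.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when no positives are predicted or present
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Toy labels for illustration
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 0, 0]
print(classification_metrics(y_true, y_pred))
```

On imbalanced data, accuracy alone can look good even when the model misses most positives. Precision and recall are usually the more telling pair.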

Throughout the process, iterative refinement is key. The results of each step inform the next, allowing an adaptive approach to the analysis. This keeps the work efficient and the insights useful, even with the complexities of big, unstructured data.

Key Topics to Learn for Analytical Thinking and Decision Making Interview

- Data Analysis & Interpretation: Understanding various data types, identifying patterns, drawing inferences, and communicating findings effectively. Practical application: Analyzing sales figures to identify trends and inform marketing strategies.

- Problem Decomposition & Structuring: Breaking down complex problems into smaller, manageable parts; defining clear objectives and constraints. Practical application: Developing a structured approach to solve a customer service issue involving multiple stakeholders.

- Critical Evaluation & Hypothesis Testing: Assessing the validity of information, formulating hypotheses, and designing tests to validate them. Practical application: Evaluating the effectiveness of a new marketing campaign using A/B testing methodology.

- Decision-Making Frameworks: Utilizing frameworks like cost-benefit analysis, decision trees, or prioritization matrices to guide decision-making processes. Practical application: Choosing between different project options based on resource allocation and potential ROI.

- Risk Assessment & Mitigation: Identifying potential risks and developing strategies to mitigate their impact. Practical application: Developing contingency plans for a project launch, considering potential delays or market changes.

- Communication & Collaboration: Effectively communicating analytical findings and collaborating with others to solve problems. Practical application: Presenting data-driven recommendations to senior management, effectively justifying your conclusions.

- Logical Reasoning & Deductive/Inductive Thinking: Applying logic to solve problems and draw conclusions from evidence. Practical application: Troubleshooting a technical issue by systematically eliminating possible causes.
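As a small illustration of the decision-tree framework listed above, the sketch below models one decision node (which project to fund). Each project leads to a chance node with possible outcomes. The option with the highest expected net value (expected payoff minus upfront cost) is chosen. The costs, probabilities, and payoffs are hypothetical.

```python
def expected_value(outcomes):
    """Expected payoff of a chance node: sum of probability * payoff."""
    total_p = sum(p for p, _ in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(p * payoff for p, payoff in outcomes)

def best_option(options):
    """Decision node: net expected value per option and the option that maximizes it."""
    net = {name: expected_value(outcomes) - cost
           for name, (cost, outcomes) in options.items()}
    return max(net, key=net.get), net

# Hypothetical options: (upfront cost, [(probability, payoff), ...])
projects = {
    "Project A": (100_000, [(0.6, 250_000), (0.4, 50_000)]),
    "Project B": (60_000, [(0.8, 120_000), (0.2, 40_000)]),
}
choice, net = best_option(projects)
print(choice, net)
```

Expected value ignores risk appetite. Project A wins on average but has a wider spread of outcomes, so a risk-averse team might still prefer Project B.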

Next Steps

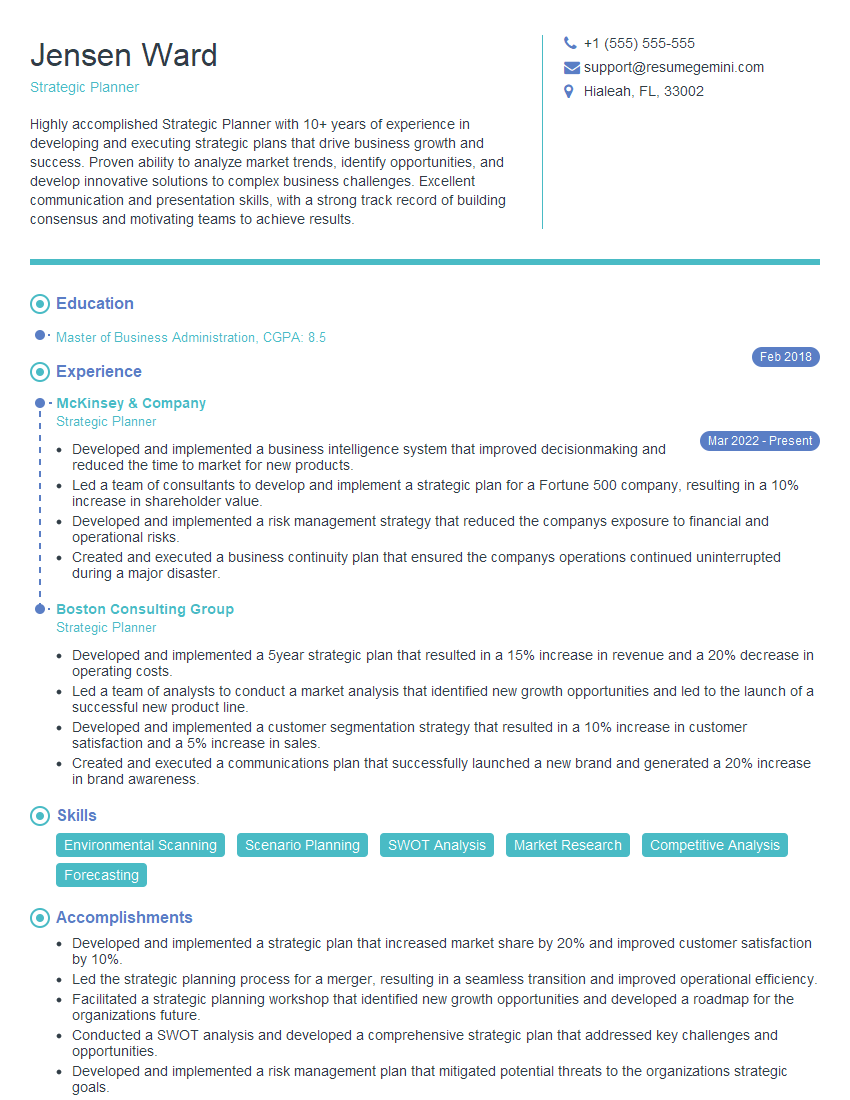

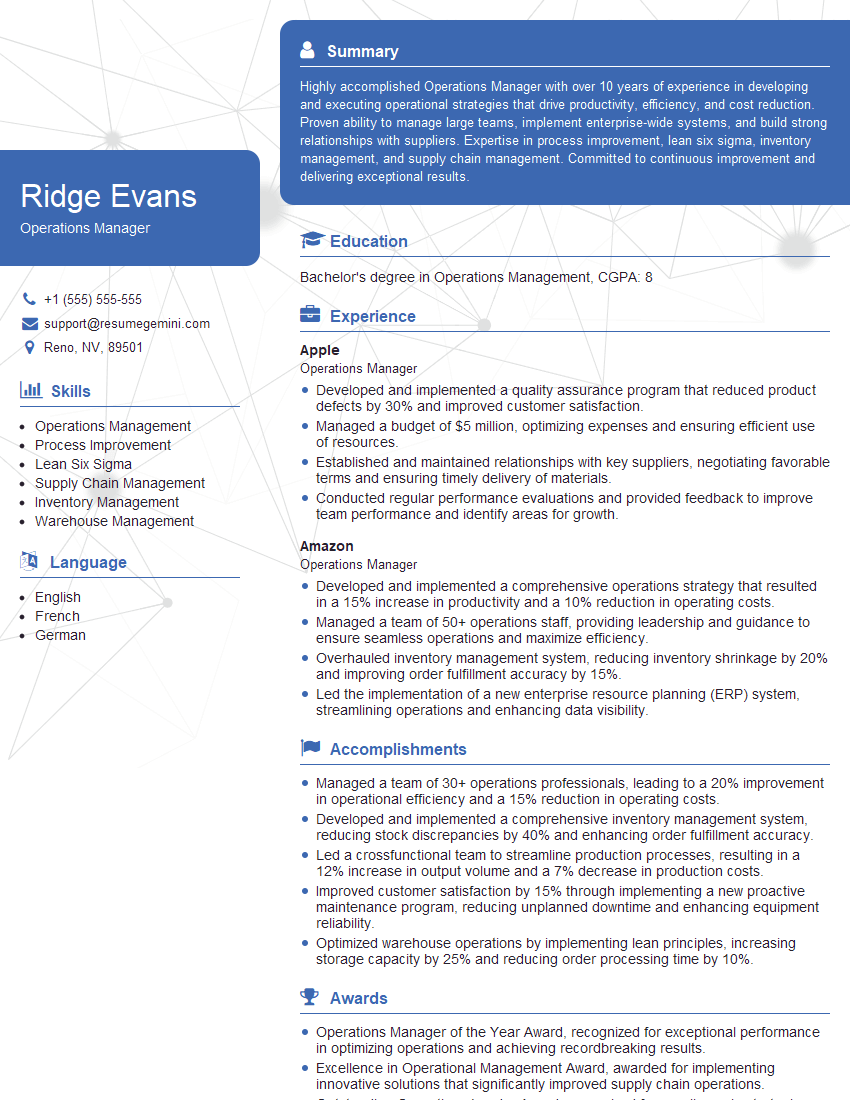

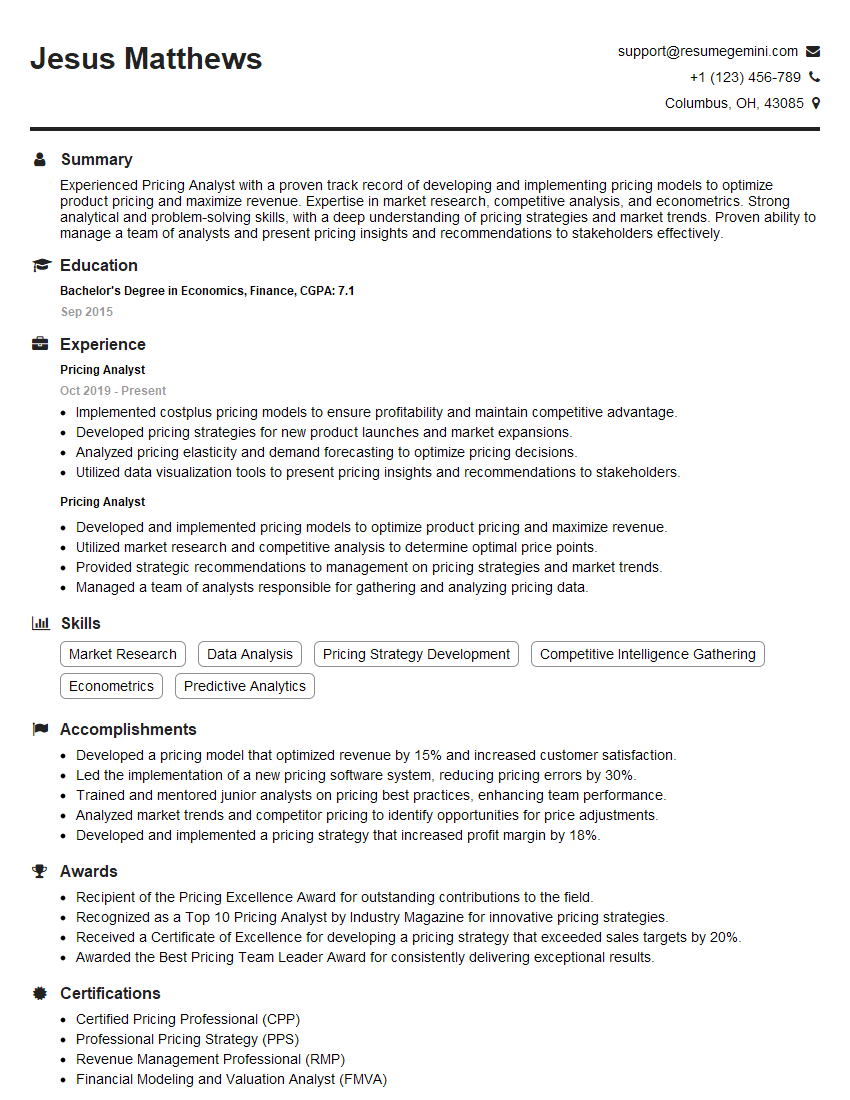

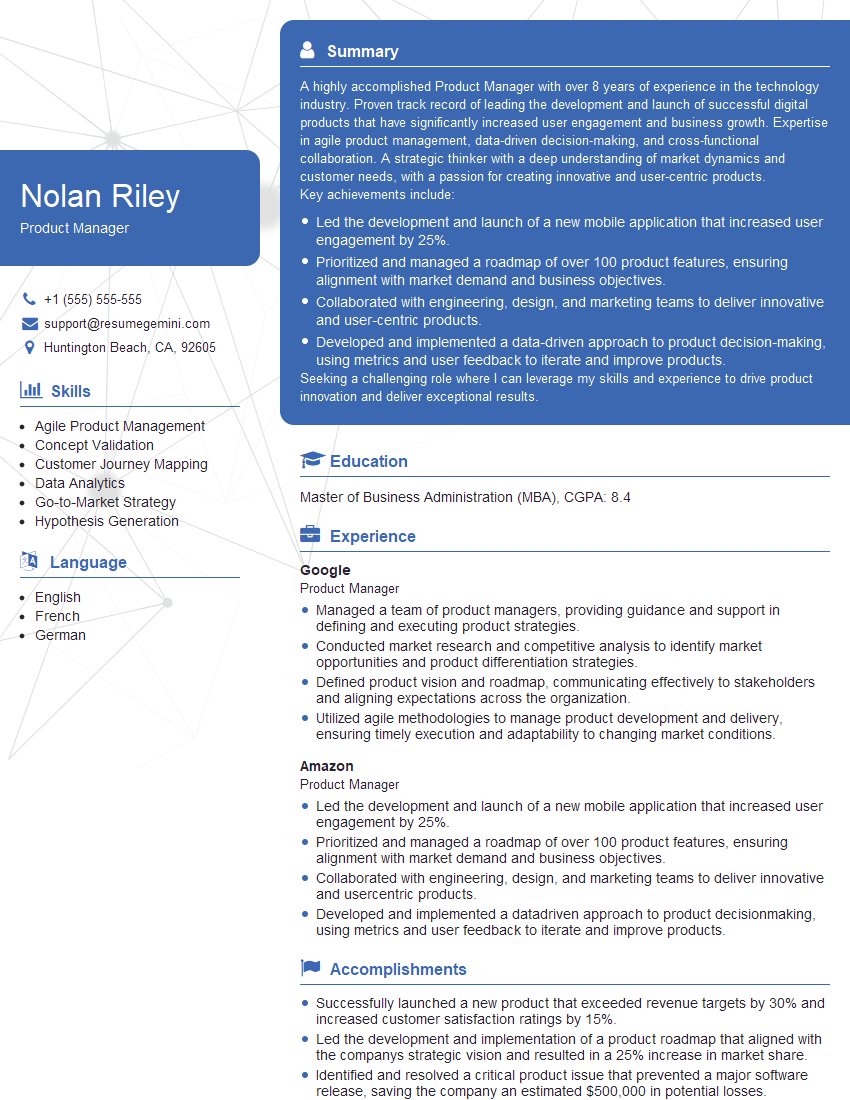

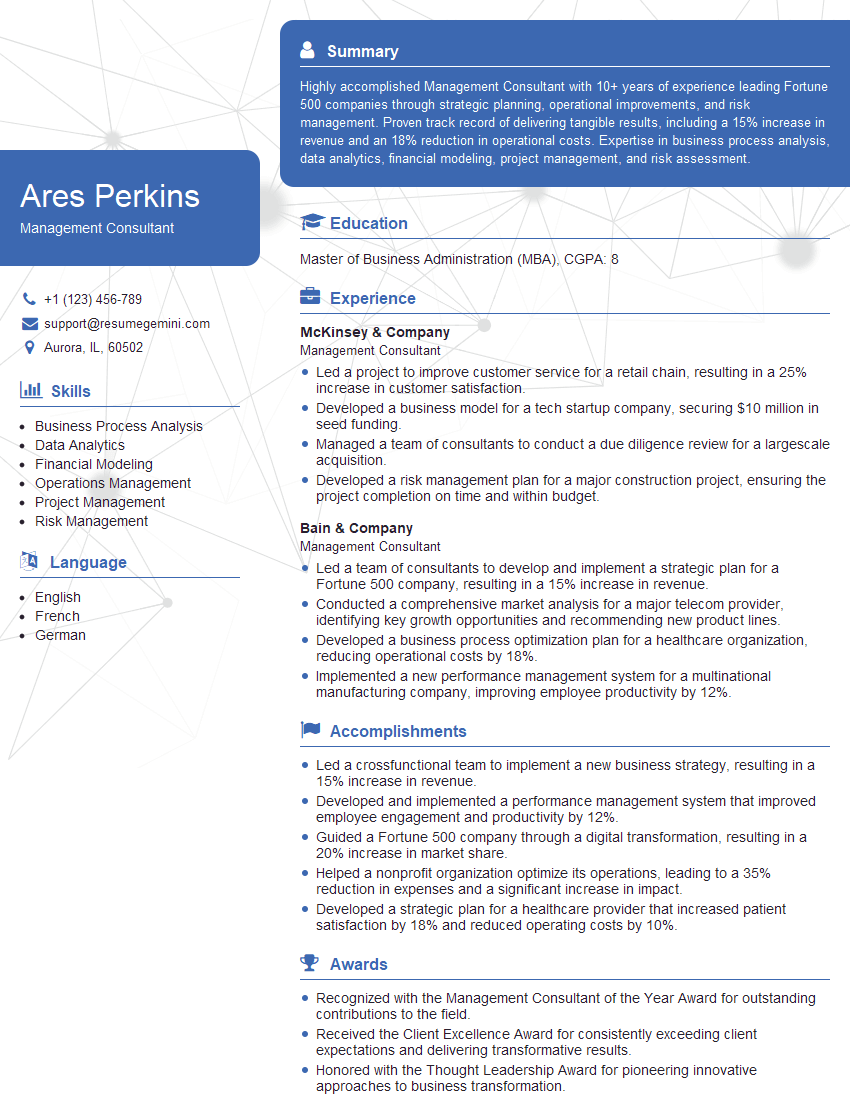

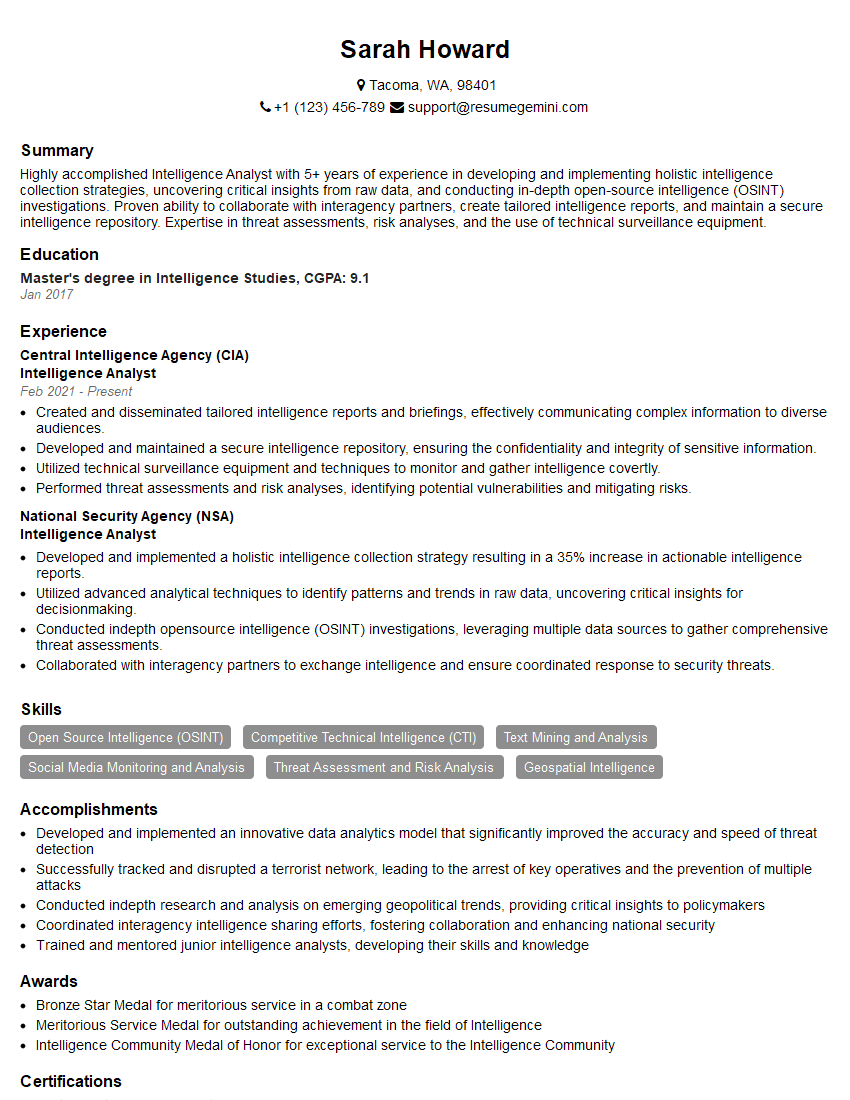

Mastering Analytical Thinking and Decision Making is crucial for career advancement in today’s data-driven world. These skills are highly sought after across numerous industries, leading to greater responsibility and higher earning potential. To significantly boost your job prospects, it’s essential to present these abilities effectively on your resume. Creating an ATS-friendly resume is key to getting your application noticed by recruiters. ResumeGemini is a trusted resource to help you build a professional and impactful resume that showcases your skills effectively. We provide examples of resumes tailored to highlight Analytical Thinking and Decision Making capabilities, allowing you to see practical implementation and inspiration for your own resume.
