Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Process Variation Analysis interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Process Variation Analysis Interview

Q 1. Explain the concept of process capability and its importance.

Process capability refers to a process’s ability to consistently produce outputs within specified limits. Think of it like a basketball player’s free-throw percentage – a high percentage indicates a capable process (consistent shots), while a low percentage indicates a less capable process (inconsistent shots). It’s crucial because it tells us how well a process meets customer requirements and specifications. A high process capability minimizes defects and waste, leading to increased customer satisfaction and reduced costs. It’s measured using indices like Cp and Cpk, which compare the process’s natural variation to the tolerance limits set by the customer or design specifications.

For example, a manufacturing process producing engine parts needs to ensure the parts fall within a specific size range. A high process capability indicates the process consistently produces parts within that range, minimizing rejects and ensuring quality.

Q 2. Describe different types of process variation (common cause vs. special cause).

Process variation is the natural fluctuation in outputs from a process. There are two main types: common cause and special cause variation.

- Common cause variation is the inherent, ever-present variability within a process. It’s the background noise, the random fluctuations we expect to see. Think of it as the consistent, small variations in the size of cookies baked from the same recipe – some are slightly larger, some slightly smaller, but all are within an acceptable range.

- Special cause variation, on the other hand, is unusual or unexpected variation that signals a problem. It’s a signal that something outside of the usual process is happening. This could be a machine malfunction, a change in raw materials, or a new operator making errors. Think of it as a sudden, large change in the size of the cookies – maybe one batch is significantly smaller than others, indicating a problem with the oven temperature or ingredient quantity.

Understanding the difference between these two types is critical for effective process improvement. Addressing common cause variation requires systemic changes to the process itself, while special cause variation requires immediate investigation and correction of the root cause.

Q 3. How do you identify special cause variation in a process?

Identifying special cause variation requires careful analysis of process data. We often use control charts for this purpose (explained more in detail in the following answers), but other methods include:

- Run Charts: Simple plots of data over time can reveal trends or shifts that indicate special cause variation.

- Control Charts: These provide statistically defined limits for identifying points outside of common cause variation.

- Process Audits: A thorough examination of the process can uncover flaws or unusual events that could contribute to variation.

- Data Analysis Tools: Statistical tools such as regression analysis or ANOVA can help pinpoint factors causing unexpected variation.

In short, you look for anything unusual or unexpected. This might be a single point far outside the typical range, a series of points trending upwards or downwards, or a sudden shift in the average.

For example, if you’re monitoring the weight of bags of flour, and suddenly one bag is significantly lighter than the others, that’s a clear indication of special cause variation, and you’d investigate the packaging process or weighing machine.

Q 4. What are control charts and how are they used in process variation analysis?

Control charts are powerful visual tools used to monitor process variation and identify special cause variation. They plot data points over time, with statistically calculated control limits. These limits represent the expected range of variation due to common causes. Points falling outside these limits signal potential special cause variation, requiring investigation. Control charts essentially help distinguish between random fluctuations and significant changes in a process. They’re an essential part of Statistical Process Control (SPC).

They’re used in a wide range of industries, from manufacturing and healthcare to finance and software development, to monitor process stability and prevent defects. They provide a visual and statistical approach to understanding process behavior.
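For individual measurements such as the flour-bag weights in Q3, one common choice is an individuals (I-MR) chart. The sketch below is a minimal illustration, not a definitive implementation. It assumes the limits are computed from a stable baseline period, and the function names and sample weights are hypothetical. It uses the standard individuals-chart constant 2.66 (3/d2, with d2 = 1.128 for moving ranges of two consecutive points).

```python
from statistics import fmean


def individuals_limits(baseline: list[float]) -> tuple[float, float, float]:
    """Return (LCL, center line, UCL) for an individuals chart built from baseline data."""
    center = fmean(baseline)
    # Moving range: absolute difference between each pair of consecutive observations.
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    mr_bar = fmean(moving_ranges)
    # 2.66 = 3 / d2, where d2 = 1.128 for moving ranges of size 2.
    return center - 2.66 * mr_bar, center, center + 2.66 * mr_bar


def out_of_control(points: list[float], lcl: float, ucl: float) -> list[int]:
    """Indices of points falling outside the control limits."""
    return [i for i, x in enumerate(points) if x < lcl or x > ucl]


# Hypothetical bag weights in grams, collected while the process was stable.
baseline = [1000, 1002, 998, 1001, 999, 1000, 1003, 997, 1001, 999]
lcl, center, ucl = individuals_limits(baseline)
print(f"LCL={lcl:.2f}  CL={center:.2f}  UCL={ucl:.2f}")
print(out_of_control([1001, 985, 999], lcl, ucl))  # -> [1]: the 985 g bag is flagged
```

Computing the limits from a baseline rather than from all the data matters: a single very light bag included in the limit calculation would widen the limits and make itself harder to detect.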

Q 5. Explain the difference between X-bar and R charts and when to use each.

X-bar and R charts are normally used as a pair to monitor continuous data collected in subgroups, but each focuses on a different aspect of the process:

- X-bar chart tracks the average (mean) of subgroups of data points over time. It monitors the central tendency of the process. Think of it like tracking the average weight of bags of sugar from multiple batches.

- R chart monitors the range of variation within each subgroup. It monitors the dispersion or spread of the process. Think of it like tracking the difference between the heaviest and lightest bags of sugar in each batch.

When to use which:

- Use X-bar and R charts together when you’re measuring continuous data (e.g., weight, length, temperature) and have subgroups of data (e.g., multiple samples taken at different times). The X-bar chart shows if the average is shifting, while the R chart shows if the variability is increasing or decreasing.

- If you only need to monitor variation (e.g., the variation in the time it takes to complete a task), the R chart alone might suffice.

- Other charts, like p-charts (for proportions) or c-charts (for counts), are used for attribute data (data classified into categories).

Q 6. Describe the process of constructing a control chart.

Constructing a control chart involves several steps:

- Define the process characteristic to be monitored: Decide what you’re measuring (e.g., weight, diameter, time).

- Collect data: Gather data in subgroups (e.g., samples of 5 items produced every hour). Aim for at least 20-25 subgroups for a robust analysis.

- Calculate statistics: For X-bar and R charts, calculate the mean (X-bar) and range (R) for each subgroup.

- Calculate control limits: Use the appropriate formulas (based on subgroup size and data type) to calculate the upper and lower control limits (UCL and LCL) for both the X-bar and R charts. For X-bar and R charts, the limits are built from the grand mean (X-double-bar), the average range (R-bar), and tabulated constants (A2, D3, D4) that depend on the subgroup size.

- Plot the data: Plot the X-bar and R values on their respective charts.

- Analyze the chart: Look for points outside the control limits or patterns indicating special cause variation.

There are specific formulas for calculating control limits (using factors from statistical tables), but statistical software packages can automate this process. The key is ensuring appropriate subgroup selection and sufficient data for accurate results.
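The limit calculation in the steps above can be sketched as follows. This is a minimal example, assuming equal-size subgroups of 2 to 6 items; the function name is hypothetical, and the constants are the standard tabulated A2, D3, D4 values for those subgroup sizes. The usage data uses only three subgroups for brevity, whereas a real study should use the 20 to 25 recommended above.

```python
from statistics import fmean

# Standard control-chart constants (A2, D3, D4) by subgroup size n.
CONSTANTS = {
    2: (1.880, 0.0, 3.267),
    3: (1.023, 0.0, 2.574),
    4: (0.729, 0.0, 2.282),
    5: (0.577, 0.0, 2.114),
    6: (0.483, 0.0, 2.004),
}


def xbar_r_limits(subgroups: list[list[float]]) -> dict[str, tuple[float, float, float]]:
    """Return (LCL, center line, UCL) for the X-bar chart and the R chart."""
    n = len(subgroups[0])
    if any(len(s) != n for s in subgroups):
        raise ValueError("all subgroups must have the same size")
    if n not in CONSTANTS:
        raise ValueError(f"no constants tabulated here for subgroup size {n}")
    a2, d3, d4 = CONSTANTS[n]
    means = [fmean(s) for s in subgroups]            # X-bar for each subgroup
    ranges = [max(s) - min(s) for s in subgroups]    # R for each subgroup
    x_dbar = fmean(means)                            # grand mean: X-bar chart center line
    r_bar = fmean(ranges)                            # average range: R chart center line
    return {
        "xbar": (x_dbar - a2 * r_bar, x_dbar, x_dbar + a2 * r_bar),
        "r": (d3 * r_bar, r_bar, d4 * r_bar),
    }


data = [[10, 12, 11, 9, 8], [11, 11, 10, 12, 11], [9, 10, 11, 10, 10]]  # hypothetical
print(xbar_r_limits(data))
```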

Q 7. Interpret a control chart showing out-of-control points.

Out-of-control points on a control chart indicate a potential problem within the process. Points outside the upper or lower control limits (UCL and LCL) suggest special cause variation, but certain non-random patterns inside the limits do too. Interpreting a chart therefore means looking for several patterns:

- One point outside the control limits: This strongly suggests a special cause. Investigate the conditions surrounding that data point to identify the root cause.

- Several points near a control limit: For example, two of three consecutive points beyond two standard deviations on the same side of the center line suggest a shift in the process mean or an increase in variability. This warrants further investigation.

- A trend of points consistently increasing or decreasing: This indicates a systematic drift in the process, requiring immediate attention.

- Stratification or clustering of points: Points clustered in a particular area might indicate a cause related to a specific time or subgroup.

- Cycles or patterns within the control limits: Unusual repetitive patterns suggest periodic influences on the process that need analysis.
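Several of the patterns above can be checked automatically. The sketch below implements three commonly used rules: a point beyond 3σ, a run of points on one side of the center line, and a steady trend. Variants of these appear in the Western Electric and Nelson rule sets (for example, run lengths of 8 or 9), so the default lengths here are assumptions you can adjust. The function names are hypothetical, and `sigma` is assumed to be estimated beforehand (e.g., from R-bar/d2).

```python
def beyond_limits(points: list[float], center: float, sigma: float) -> list[int]:
    """Indices of points more than 3 sigma from the center line."""
    return [i for i, x in enumerate(points) if abs(x - center) > 3 * sigma]


def run_same_side(points: list[float], center: float, run_length: int = 8) -> list[int]:
    """Indices where at least `run_length` consecutive points sit on one side of the center."""
    flagged, run, prev_side = [], 0, 0
    for i, x in enumerate(points):
        side = (x > center) - (x < center)  # +1 above, -1 below, 0 exactly on the line
        if side != 0 and side == prev_side:
            run += 1
        else:
            run = 1 if side != 0 else 0     # a point on the center line breaks the run
        prev_side = side
        if run >= run_length:
            flagged.append(i)
    return flagged


def trend(points: list[float], length: int = 6) -> list[int]:
    """Indices where `length` consecutive points are steadily increasing or decreasing."""
    flagged, up, down = [], 1, 1
    for i in range(1, len(points)):
        up = up + 1 if points[i] > points[i - 1] else 1
        down = down + 1 if points[i] < points[i - 1] else 1
        if up >= length or down >= length:
            flagged.append(i)
    return flagged


points = [10.5, 10.2, 10.8, 10.1, 10.3, 10.6, 10.4, 10.9, 14.0]  # hypothetical
print(beyond_limits(points, center=10.0, sigma=1.0))  # -> [8]
print(run_same_side(points, center=10.0))             # -> [7, 8]
print(trend([1, 2, 3, 4, 5, 6]))                      # -> [5]
```

A flagged index is a prompt to investigate that point in time, not a diagnosis in itself.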

When you find out-of-control points, it’s crucial not to simply react. You need to investigate the root cause. Was there a machine malfunction? Did a new operator introduce errors? Understanding the cause is essential for implementing effective corrective actions and preventing future occurrences.

Q 8. What is process capability index (Cpk) and how is it calculated?

The process capability index, Cpk, is a statistical measure that assesses how well a process can consistently produce output within the specified customer tolerance limits. It tells us how capable our process is of meeting customer requirements. A higher Cpk indicates better process capability.

Cpk is calculated using the following formula:

Cpk = min[(USL - μ)/(3σ), (μ - LSL)/(3σ)]

Where:

- USL = Upper Specification Limit

- LSL = Lower Specification Limit

- μ = Process Mean

- σ = Process Standard Deviation

In simpler terms, we’re comparing the distance between the process mean and each specification limit to the process variation (3σ is used because ±3σ around the mean covers approximately 99.7% of a normal distribution). The smaller of these two ratios is the Cpk. If the process is centered, then Cpk equals Cp (discussed below).

Example: Imagine a manufacturing process producing bolts with a target diameter of 10mm. The customer’s tolerance is ±0.1mm (USL = 10.1mm, LSL = 9.9mm). After collecting data, we find the process mean (μ) is 10.05mm and the standard deviation (σ) is 0.02mm. Cpk = min[(10.1 – 10.05)/(3*0.02), (10.05 – 9.9)/(3*0.02)] = min[0.83, 2.50] = 0.83. The upper specification limit determines the result because the mean sits closer to it.
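The bolt example can be checked with a few lines of code. This is a minimal sketch that takes μ and σ as already estimated; the function names are hypothetical. It also computes Cp, which shows how the two indices diverge when the process is off center.

```python
def cp(usl: float, lsl: float, sigma: float) -> float:
    """Tolerance width divided by the 6-sigma process spread (ignores centering)."""
    return (usl - lsl) / (6 * sigma)


def cpk(usl: float, lsl: float, mu: float, sigma: float) -> float:
    """Distance from the mean to the nearer spec limit, in units of 3 sigma."""
    return min((usl - mu) / (3 * sigma), (mu - lsl) / (3 * sigma))


print(round(cp(10.1, 9.9, 0.02), 2))          # -> 1.67
print(round(cpk(10.1, 9.9, 10.05, 0.02), 2))  # -> 0.83
```

Here Cp is about 1.67 while Cpk is only 0.83: the spread would fit comfortably inside the tolerance, but the mean is shifted toward the upper limit. Re-centering the process at 10.00mm would raise Cpk to match Cp.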

Q 9. What is the significance of Cp and Cpk values?

Cp and Cpk are both crucial process capability indices, but they convey slightly different information:

- Cp (Process Capability): Cp measures the inherent capability of the process, regardless of its centering. It is the ratio of the tolerance width to the process spread: Cp = (USL - LSL) / (6σ). A Cp of 1 indicates the process spread (6σ) is equal to the tolerance; Cp > 1 means the process spread is smaller than the tolerance. Cp doesn’t account for process centering.

- Cpk (Process Capability Index): Cpk considers both the process spread and its centering relative to the specification limits. It’s a more comprehensive indicator because it accounts for both the variability and the offset of the mean from the center of the specification range. A Cpk of 1 means the nearest specification limit sits exactly 3σ from the mean, leaving no safety margin; many organizations require a minimum Cpk of 1.33.

Significance: High Cp and Cpk values are desirable. They indicate a process that is both precise (low variation) and accurate (centered on target). These indices are vital for continuous improvement, supplier selection, and demonstrating conformance to customer requirements. Low values signal potential quality issues, highlighting the need for corrective actions.

Q 10. Explain the concept of process sigma level.

The process sigma level represents the number of standard deviations between the process mean and the nearest specification limit (equivalently, Z = 3 × Cpk). It is closely linked to defects per million opportunities (DPMO): the higher the sigma level, the fewer defects are expected. The sigma level is usually calculated from the shorter tail, that is, the distance from the mean to the closer specification limit rather than the farther one, because the closer limit is where most out-of-specification output occurs and therefore dominates the defect rate. This is the same reason Cpk uses the smaller of its two ratios.

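For example, under the common Six Sigma convention, which assumes the process mean drifts by 1.5σ over the long term, a 3-sigma process produces approximately 66,800 defects per million opportunities (DPMO), while a 6-sigma process produces about 3.4 DPMO. Without that shift, a centered process with both specification limits at ±3σ produces roughly 2,700 DPMO. All of these figures are derived from the normal distribution.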

Sigma levels are widely used to benchmark process performance against industry standards and aid in identifying improvement targets. They provide a common language for discussing quality levels across different processes and industries.
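The conversion from sigma level to DPMO can be reproduced from the normal distribution. The sketch below is a minimal illustration with hypothetical function names. It shows both conventions: the Six Sigma one, which assumes a 1.5σ long-term shift and counts only the dominant tail (as the usual conversion tables do), and a centered process with both tails counted.

```python
import math


def upper_tail(z: float) -> float:
    """P(Z > z) for a standard normal variable."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def dpmo_shifted(sigma_level: float, shift: float = 1.5) -> float:
    """Long-term DPMO assuming the mean has drifted `shift` SDs toward the nearer limit.

    Only the nearer (dominant) tail is counted, matching conventional sigma tables.
    """
    return upper_tail(sigma_level - shift) * 1_000_000


def dpmo_centered(sigma_level: float) -> float:
    """DPMO for a centered process with both spec limits `sigma_level` SDs from the mean."""
    return 2 * upper_tail(sigma_level) * 1_000_000


for level in (3, 4, 5, 6):
    print(level, round(dpmo_shifted(level), 1), round(dpmo_centered(level), 3))
```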

Q 11. How do you determine the appropriate sample size for process variation analysis?

Determining the appropriate sample size for process variation analysis is crucial for achieving accurate results. The sample size depends on several factors:

- Desired precision: A larger sample size provides greater precision in estimating process parameters.

- Process variability: Higher process variability requires a larger sample size.

- Confidence level: The desired confidence level (e.g., 95%, 99%) influences the sample size.

- Acceptable margin of error: A smaller margin of error necessitates a larger sample size.

Several methods exist for calculating sample size, including:

- Power analysis: This statistical method helps determine the sample size needed to detect a specific effect size with a given power and significance level.

- Rule of thumb: Some guidelines suggest a minimum sample size of 30 observations. However, this rule is not always sufficient and should be used cautiously.

In practice, a pilot study can be conducted to estimate process variability and then use this estimate in a power analysis to determine the final sample size. Software packages like Minitab or JMP can greatly assist in performing these calculations.
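When the goal is to estimate a process mean to within a chosen margin of error, the precision, variability, confidence, and margin factors listed above combine into the familiar formula n = (z·σ / E)². The sketch below applies it; note this is the margin-of-error calculation, not a full power analysis. It assumes σ is known or estimated from a pilot study and that the normal approximation holds; the function name and inputs are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_for_mean(sigma: float, margin: float, confidence: float = 0.95) -> int:
    """Smallest n so the confidence interval half-width for the mean is at most `margin`."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided critical value
    return math.ceil((z * sigma / margin) ** 2)


# Hypothetical pilot estimate: sigma = 0.02mm; we want the mean to within ±0.005mm.
print(sample_size_for_mean(0.02, 0.005))        # -> 62
print(sample_size_for_mean(0.02, 0.005, 0.99))  # a higher confidence level needs more samples
```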

Q 12. Describe different methods for estimating process parameters (mean, standard deviation).

Several methods exist for estimating process parameters, each with its advantages and limitations:

- Descriptive Statistics: This is the simplest method, using sample mean (x̄) and sample standard deviation (s) as estimators for the population mean (μ) and standard deviation (σ), respectively. These are calculated directly from the collected data.

- Maximum Likelihood Estimation (MLE): MLE finds the parameter values that maximize the likelihood of observing the collected data. It’s often used for more complex distributions.

- Method of Moments: This method equates sample moments (e.g., mean, variance) to population moments, resulting in equations that can be solved for the parameters.

- Bayesian Estimation: This approach incorporates prior knowledge about the parameters into the estimation process. It’s useful when prior information is available, leading to more robust estimates, especially with small sample sizes.

The choice of method depends on the data characteristics, the desired precision, and the availability of prior information. For example, for normally distributed data, descriptive statistics are often sufficient, while for non-normal data, transformations or more robust methods may be necessary.
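For normally distributed data, the descriptive and likelihood-based approaches differ mainly in the divisor used for the standard deviation. The sketch below compares them on hypothetical measurements. The sample standard deviation uses n − 1, while the normal maximum likelihood estimate (which here coincides with the method-of-moments estimate) uses n.

```python
from statistics import fmean, pstdev, stdev

data = [9.98, 10.02, 10.01, 9.99, 10.00]  # hypothetical measurements (mm)

mean_hat = fmean(data)   # estimate of the process mean (same under all three methods here)
s = stdev(data)          # sample standard deviation, n - 1 divisor
sigma_mle = pstdev(data) # normal MLE / method-of-moments estimate, n divisor

print(f"mean={mean_hat:.3f}  s={s:.5f}  sigma_MLE={sigma_mle:.5f}")
```

With only five observations the two standard deviation estimates differ by about 10%; the gap shrinks as the sample grows.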

Q 13. What are the assumptions underlying the use of control charts?

The effective use of control charts relies on several key assumptions:

- Data Independence: Observations should be independent of each other. Autocorrelation or trends in the data violate this assumption. This is often checked through autocorrelation analysis.

- Data Normality (often): Many control charts, such as X̄ and R charts, assume the data is normally distributed or approximately so. However, robust methods exist for non-normal data (discussed in the next question).

- Process Stability: Control limits should be calculated from data collected while the process was in a state of statistical control, meaning that the variation is consistent over time and no assignable causes of variation are present. Out-of-control points are identified via control chart rules and require investigation.

- Common Cause Variation Only: Control charts are designed to detect special cause variation, so the baseline data used to set the limits should reflect common cause variation only. Special causes found in the baseline should be investigated and those points removed before the limits are finalized; otherwise the limits may be inflated and fail to detect future problems.

Violating these assumptions can lead to inaccurate interpretations of the control chart, potentially leading to misinformed decisions regarding process improvement efforts. Before applying any control chart, it’s essential to examine the data for compliance with these assumptions.
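The independence assumption can be screened with a lag-1 autocorrelation check. The sketch below is a rough screen under stated assumptions: it uses the large-sample approximation that |r1| above about 2/√n suggests dependence. The function names are hypothetical, and a full autocorrelation analysis would examine more lags.

```python
import math
from statistics import fmean


def lag1_autocorrelation(x: list[float]) -> float:
    """Correlation between each observation and the next one."""
    m = fmean(x)
    num = sum((a - m) * (b - m) for a, b in zip(x, x[1:]))
    den = sum((a - m) ** 2 for a in x)
    return num / den


def looks_autocorrelated(x: list[float], z: float = 2.0) -> bool:
    """Rough large-sample screen: |r1| > z / sqrt(n) suggests the data are not independent."""
    return abs(lag1_autocorrelation(x)) > z / math.sqrt(len(x))


drifting = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]  # hypothetical steadily rising readings
print(round(lag1_autocorrelation(drifting), 2), looks_autocorrelated(drifting))  # -> 0.7 True
```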

Q 14. How do you handle non-normal data in process variation analysis?

Handling non-normal data in process variation analysis requires careful consideration. Here are some strategies:

- Transformations: Applying mathematical transformations (e.g., logarithmic, square root, Box-Cox) can sometimes normalize the data. The goal is to find a transformation that makes the transformed data approximately normal. This may involve several attempts.

- Non-parametric methods: These methods do not rely on assumptions of normality. Examples include rank-based tests and control charts that are based on ranks or medians instead of means and standard deviations.

- Robust methods: These methods are less sensitive to outliers and deviations from normality. Examples include using the median and median absolute deviation (MAD) instead of the mean and standard deviation.

- Bootstrapping: This resampling technique allows for estimating the distribution of process parameters without assuming a specific distributional form.
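The robust approach above can be sketched as follows: the median and median absolute deviation (MAD) replace the mean and standard deviation. The scale factor 1.4826 makes the MAD a consistent estimate of σ when the data are normal. The function name and data are hypothetical; the example includes one outlier to show how differently the two spread estimates react.

```python
from statistics import median, stdev


def mad(x: list[float]) -> float:
    """Median absolute deviation from the median."""
    med = median(x)
    return median([abs(v - med) for v in x])


data = [10.0, 10.1, 9.9, 10.0, 10.2, 9.8, 15.0]  # hypothetical, with one outlier
robust_sigma = 1.4826 * mad(data)  # scaled to estimate sigma for normal data

print(f"median={median(data):.2f}  robust sigma={robust_sigma:.3f}  stdev={stdev(data):.3f}")
```

The single 15.0 reading inflates the ordinary standard deviation to roughly 1.9, while the MAD-based estimate stays near 0.15.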

The choice of method depends on the severity of the non-normality and the specific goals of the analysis. For instance, if the data exhibits mild deviations from normality, a transformation might be sufficient. However, for severely skewed data, non-parametric or robust methods might be more appropriate.

It’s crucial to always assess the normality of the data before applying standard statistical techniques. Tools like histograms, Q-Q plots, and normality tests (e.g., Shapiro-Wilk test) can help determine the suitability of the normality assumption. If the assumption is violated, using appropriate methods for non-normal data ensures accurate and reliable conclusions.

Q 15. Explain the concept of Gage R&R studies and their importance.

Gage R&R (Repeatability and Reproducibility) studies assess the variation inherent in a measurement system. Imagine you’re weighing ingredients for a cake: some variation is expected due to the scale itself (repeatability), and some variation comes from different people using the scale (reproducibility). A Gage R&R study quantifies these variations to determine whether your measurement system is precise enough for its intended purpose (accuracy, or bias, is assessed separately). It’s crucial because unreliable measurements lead to flawed conclusions about your process and product quality. If your measuring device is unreliable, any attempts at process improvement will be based on faulty data.

Essentially, a Gage R&R study helps determine if the variation from the measurement system is significantly smaller than the variation within the process itself. If the measurement system variation is too large, it masks the true process variation, hindering effective process improvement efforts.

Career Expert Tips:

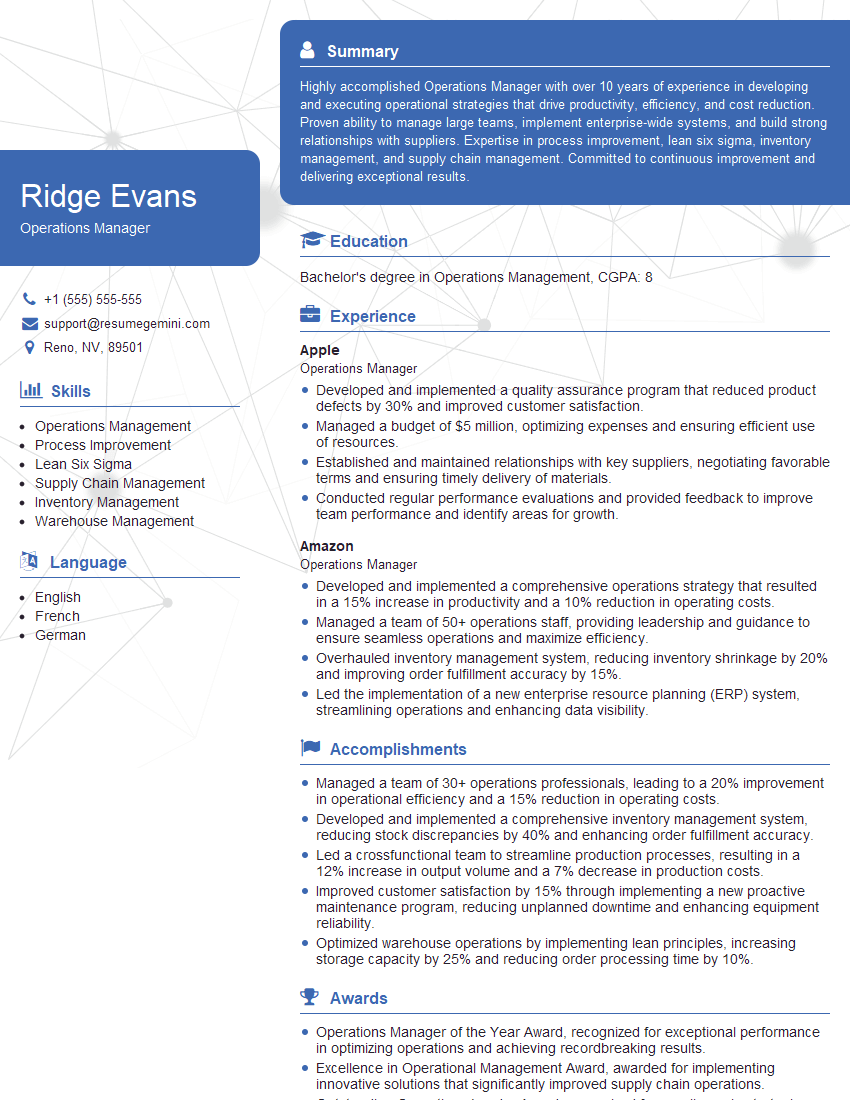

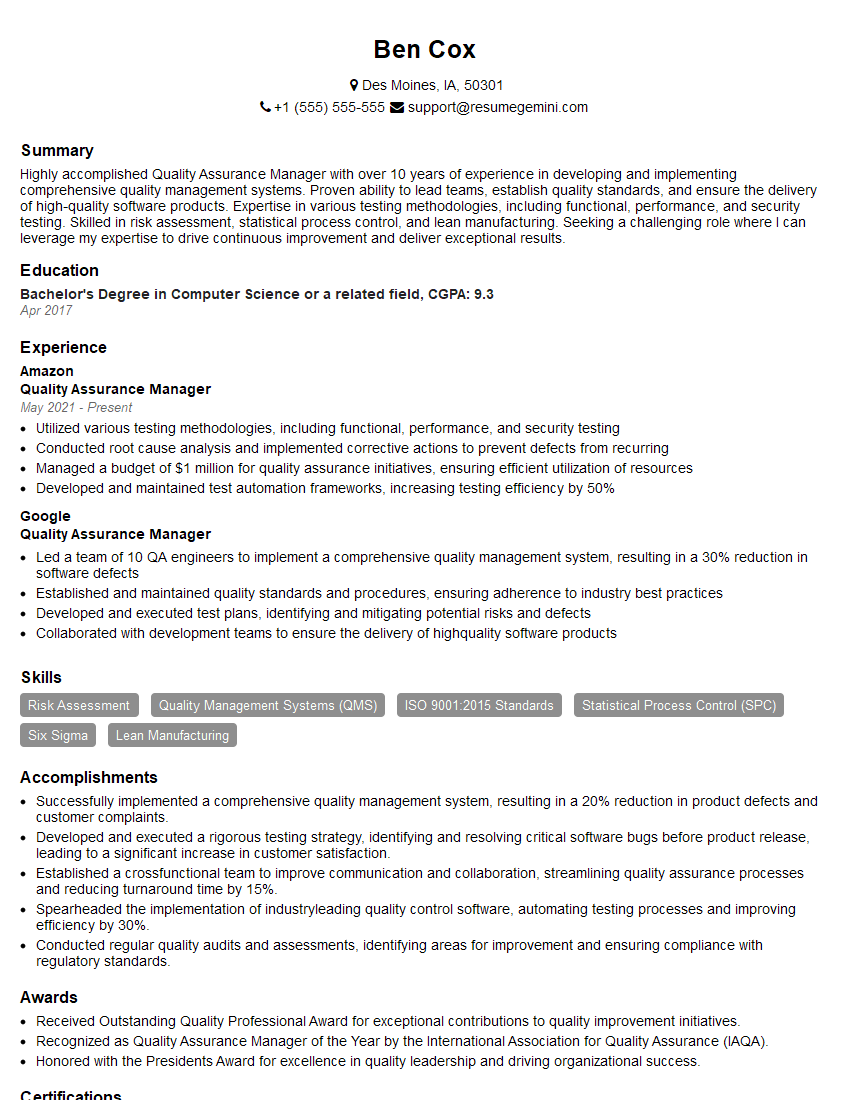

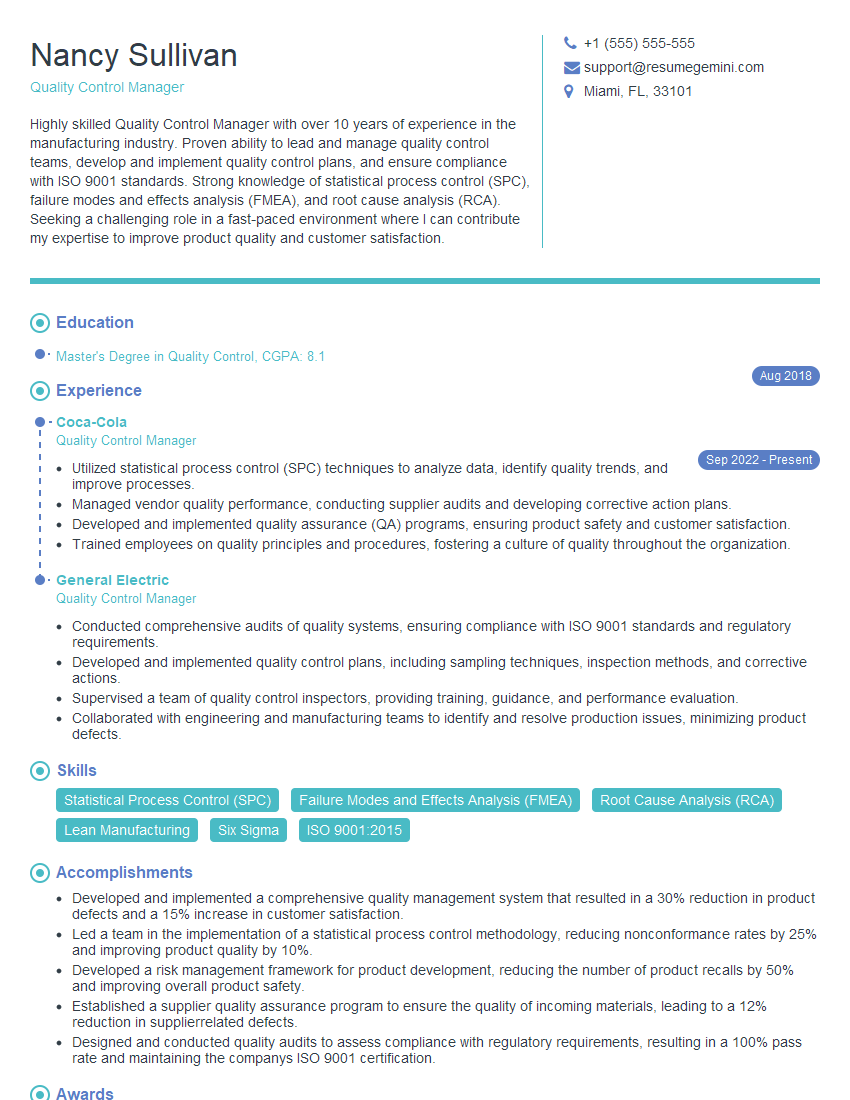

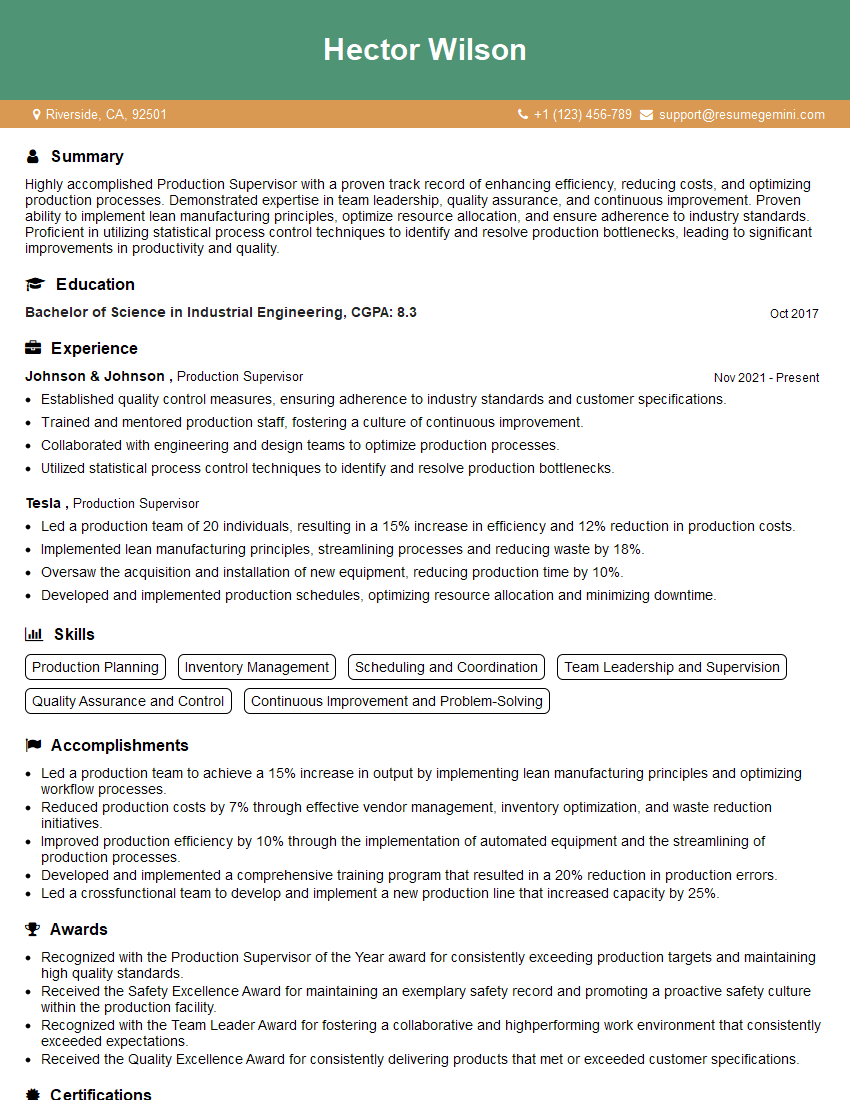

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Don’t miss out on holiday savings! Build your dream resume with ResumeGemini’s ATS optimized templates.

Q 16. How do you perform a Gage R&R study and interpret its results?

A Gage R&R study typically involves multiple appraisers measuring multiple parts multiple times. The data is then analyzed using ANOVA (Analysis of Variance) to separate the variation due to repeatability (variation from the same appraiser measuring the same part multiple times), reproducibility (variation from different appraisers measuring the same part), and part-to-part variation (the actual variation in the parts themselves). Results are often summarized in a table and graphically displayed.

Steps:

- Planning: Define the measurement system, select parts representative of the process variation, and determine the number of appraisers and repetitions.

- Data Collection: Each appraiser measures each part multiple times, recording the results.

- Data Analysis: Use statistical software (like Minitab or JMP) to perform an ANOVA and calculate key metrics like %Study Var, %Repeatability, %Reproducibility, and %Part-to-Part Variation.

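- Interpretation: These percentages show the proportion of the total variation attributed to each source. Generally, you want the total measurement system variation (repeatability plus reproducibility, reported as total Gage R&R) to be a small share of the total variation. A widely used AIAG guideline for %Study Var treats under 10% as acceptable, 10% to 30% as possibly acceptable depending on the application, and over 30% as unacceptable. Note that only variance-based percentages (%Contribution) add up to 100%; %Study Var values are based on standard deviations and do not.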

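Example Interpretation: Suppose the %Contribution figures are 5% for repeatability, 2% for reproducibility, and 93% for part-to-part. The measurement system then accounts for 7% of the total variance, which corresponds to about 26% of study variation (√0.07 ≈ 0.26): usable for many purposes, but in the marginal 10% to 30% band of the common AIAG guideline. If instead repeatability contributes 20% and reproducibility 15%, the measurement system is creating significant variation and needs improvement (e.g., better training for appraisers, more precise equipment).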
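The difference between %Contribution and %Study Var can be seen by converting variance components into both. The sketch below assumes the variance components have already been estimated by the ANOVA step (for example, in Minitab or JMP); the function name is hypothetical, and the inputs reuse the example figures as variance components.

```python
import math


def gage_rr_percentages(var_repeat: float, var_reprod: float, var_part: float) -> dict[str, float]:
    """Convert ANOVA variance components into %Contribution and %Study Var."""
    total = var_repeat + var_reprod + var_part
    var_grr = var_repeat + var_reprod  # variances add; standard deviations do not
    return {
        "%Contribution (GRR)": 100 * var_grr / total,
        "%Study Var (GRR)": 100 * math.sqrt(var_grr / total),
        "%Study Var (part)": 100 * math.sqrt(var_part / total),
    }


result = gage_rr_percentages(5, 2, 93)
for name, value in result.items():
    print(f"{name}: {value:.1f}%")
```

The total Gage R&R is 7.0% of the variance but about 26.5% of study variation, and the two %Study Var figures sum to well over 100%, which is why they should not be added together.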

Q 17. Describe different methods for reducing process variation.

Reducing process variation involves a multifaceted approach. Here are several key methods:

- Improved Equipment and Technology: Upgrading to more precise and reliable machines significantly reduces variation caused by equipment limitations.

- Process Standardization: Implementing standardized procedures ensures consistency in how tasks are performed, minimizing human error and variation.

- Operator Training: Well-trained operators are more likely to perform tasks consistently and correctly, reducing variation.

- Preventive Maintenance: Regularly maintaining equipment prevents unexpected breakdowns and performance issues, leading to more stable output.

- Statistical Process Control (SPC): Implementing SPC charts allows for real-time monitoring of the process, identifying and addressing deviations from targets before they become major problems.

- Design of Experiments (DOE): DOE helps identify the factors that most significantly impact process variation, allowing for targeted improvements.

- Automation: Automating repetitive tasks minimizes human error and inconsistency.

- Material Selection and Control: Using consistent, high-quality materials minimizes variation caused by material inconsistencies.

- Environmental Controls: Controlling factors like temperature and humidity can reduce variation caused by environmental fluctuations.

Q 18. What are some common sources of process variation in manufacturing?

Common sources of process variation in manufacturing are numerous and interconnected. Let’s categorize some:

- Machinery and Equipment: Wear and tear, miscalibration, and inherent variations in machine performance.

- Materials: Inconsistencies in raw materials, such as differences in density, composition, or impurities.

- Operators: Human error, skill variations, fatigue, and inconsistent adherence to procedures.

- Environment: Temperature, humidity, vibrations, and other environmental factors affecting the process.

- Measurement System: As discussed earlier, inaccuracies and inconsistencies in measurement devices.

- Process Design: Poorly designed processes lacking clear instructions, inadequate controls, or inefficient workflows.

- Process Parameters: Variations in settings or parameters like temperature, pressure, or speed.

For example, inconsistent mixing of ingredients in a food processing plant can lead to variations in the final product’s texture and taste. This variation could stem from issues with the mixing machine, differences in ingredient quality, or variations in the operator’s mixing technique.

Q 19. How do you use process variation analysis to improve product quality?

Process variation analysis is fundamental to improving product quality. By identifying and quantifying the sources of variation, we can take targeted actions to reduce defects and enhance consistency. This involves:

- Identifying Key Characteristics: Determining which product characteristics are critical to quality.

- Data Collection and Analysis: Gathering data on these characteristics and analyzing it using statistical methods to identify sources of variation.

- Root Cause Analysis: Investigating the root causes of significant variations, often using tools like Pareto charts or fishbone diagrams.

- Implementing Corrective Actions: Implementing solutions to address the identified root causes, such as improvements to equipment, processes, or training.

- Monitoring and Control: Continuously monitoring the process to ensure the implemented solutions are effective and maintaining improved quality levels.

For example, if a process variation analysis reveals that a significant portion of defects is caused by inconsistent raw material quality, the solution might involve stricter supplier quality controls or switching to a more reliable supplier.
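A Pareto analysis, one of the root cause tools mentioned above, is how a finding like this typically surfaces: defect causes are sorted by frequency and accumulated until the few dominant ones stand out. The sketch below uses hypothetical defect counts and a hypothetical function name.

```python
def pareto(counts: dict[str, int]) -> list[tuple[str, int, float]]:
    """Sort causes by count (descending) and attach the cumulative percentage."""
    total = sum(counts.values())
    rows, running = [], 0
    for cause, n in sorted(counts.items(), key=lambda kv: kv[1], reverse=True):
        running += n
        rows.append((cause, n, 100 * running / total))
    return rows


defects = {"mixing": 30, "raw material": 45, "other": 10, "operator": 15}  # hypothetical
for cause, n, cum in pareto(defects):
    print(f"{cause:<13}{n:>4}{cum:>7.1f}%")
```

In this made-up data, raw material and mixing together account for 75% of defects, so they would be the first targets for root cause analysis.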

Q 20. How do you use process variation analysis to reduce manufacturing costs?

Reducing process variation directly translates to lower manufacturing costs. Less variation means:

- Fewer Defects: Reduced scrap, rework, and waste, saving on material and labor costs.

- Improved Efficiency: Consistent processes lead to smoother workflows and increased productivity.

- Less Downtime: Fewer process disruptions due to defects mean more efficient use of equipment and personnel.

- Better Inventory Control: Reduced need for excess inventory to compensate for defects.

- Reduced Warranty Claims: Fewer product failures mean fewer warranty claims and associated costs.

Consider a scenario where a manufacturing process produces a significant amount of defective parts. The costs associated with scrapping these parts, reworking them, or handling customer complaints directly impact the bottom line. By implementing process variation analysis and reducing the defect rate, the company can significantly decrease these costs and improve profitability.

Q 21. Explain the relationship between process variation and customer satisfaction.

The relationship between process variation and customer satisfaction is direct and significant. High process variation translates to inconsistent product quality, leading to dissatisfied customers. Customers expect a certain level of consistency and reliability in the products they purchase. Large variations in product characteristics (e.g., dimensions, performance, appearance) lead to:

- Lower Quality Perception: Inconsistent quality erodes trust and lowers customer perception of the product.

- Increased Complaints and Returns: Products with high variation are more likely to malfunction or fail to meet expectations, leading to increased complaints and returns.

- Negative Word-of-Mouth: Dissatisfied customers often share their negative experiences, damaging the brand’s reputation.

- Loss of Revenue and Market Share: In the long run, inconsistent product quality can lead to a loss of revenue and market share to competitors who deliver higher quality and consistency.

Therefore, reducing process variation is crucial for enhancing customer satisfaction and building brand loyalty. Consistent quality fosters positive customer experiences, repeat business, and positive word-of-mouth referrals.

Q 22. How do you present your findings from process variation analysis to stakeholders?

Presenting findings from a process variation analysis to stakeholders requires clarity, conciseness, and a focus on actionable insights. I begin by summarizing the key findings in a non-technical way, highlighting the impact of variation on key performance indicators (KPIs). Then, I use visuals like charts and graphs (e.g., histograms, control charts, Pareto charts) to illustrate the data and make complex information easily digestible. For instance, instead of saying ‘the Cp value is 1.2’, I would explain ‘the specification window is only about 1.2 times as wide as the process’s natural spread, so there is little margin, and any drift off center will quickly produce non-conforming products’. I then propose specific, prioritized recommendations for improvement, outlining the potential impact (e.g., cost savings, reduced defects, improved efficiency) and the resources needed for implementation. Finally, I conclude by outlining next steps and establishing a timeline for monitoring the effectiveness of implemented solutions. A question-and-answer session is crucial to address any stakeholder concerns and to ensure alignment on the proposed action plan.

Q 23. Describe your experience with statistical software packages (e.g., Minitab, JMP).

I’m proficient in several statistical software packages, including Minitab and JMP. My experience spans data cleaning, exploratory data analysis (EDA), statistical process control (SPC) chart creation and analysis (e.g., X-bar and R charts, p-charts, c-charts), capability analysis (Cp, Cpk), and hypothesis testing. In Minitab, for example, I frequently utilize the ‘Assistant’ feature for guided analysis and report generation, especially when working with less experienced colleagues. In JMP, I leverage its powerful visual capabilities for exploring complex datasets and its robust modeling tools for advanced analyses. I’m also adept at using R and Python for more specialized analyses and data visualization, particularly when dealing with large datasets or unconventional data structures. A recent project involved using JMP to perform a Design of Experiments (DOE) to optimize a manufacturing process, resulting in a 15% reduction in defect rates.

Q 24. How do you handle conflicting data in process variation analysis?

Handling conflicting data in process variation analysis requires a systematic approach. First, I thoroughly investigate the source of the conflict. This might involve examining data collection methods, checking for recording errors, or identifying potential outliers. For example, if data from two different measuring instruments show significant discrepancies, I’d assess the calibration status and accuracy of both instruments. If errors are identified, I’d correct them or remove flawed data points. Next, I analyze the data using robust statistical methods that are less sensitive to outliers, such as using the median instead of the mean. If inconsistencies persist despite these measures, I might consider further investigation, such as conducting a root cause analysis to identify the underlying process issues driving the discrepancies. Transparency is key: I meticulously document my methodology and any assumptions or limitations in my analysis to ensure the integrity and trustworthiness of my findings.

Q 25. What are some limitations of process variation analysis?

Process variation analysis, while powerful, has limitations. Firstly, it assumes that the data is representative of the entire process. If the data sample is biased or insufficient, the analysis may lead to inaccurate conclusions. Secondly, the analysis may not reveal the root cause of variation; it identifies the presence and extent of variation, but further investigation is often required to understand the ‘why’. Thirdly, the analysis relies on historical data, which may not accurately predict future performance, especially if process changes are introduced. Finally, the effectiveness of the analysis depends heavily on data quality. Inaccurate or incomplete data will inevitably lead to flawed results. For example, if a key process variable is not measured consistently, the analysis might underestimate the true extent of process variation.

Q 26. Describe a time you identified and solved a problem related to process variation.

In a previous role, we noticed an increase in customer complaints about the inconsistent color of a particular product. Using control charts in Minitab, I analyzed the historical color data, revealing significant variation beyond the acceptable limits. The initial investigation revealed no obvious problems with the machinery or raw materials. However, a detailed review of the process steps, along with operator interviews, revealed that the mixing process was not consistently following the established procedure. Operators were using different mixing speeds and times, resulting in varying color outcomes. We addressed this by implementing a standardized operating procedure with clear instructions and visual aids, along with providing additional training to the operators. Subsequent monitoring using control charts showed a significant reduction in color variation and customer complaints, demonstrating the effectiveness of the problem-solving approach.

Q 27. How do you stay current with advances in process variation analysis techniques?

Staying current in process variation analysis involves a multifaceted approach. I regularly attend conferences and workshops focusing on quality control and statistical methods. I also actively participate in online professional communities and forums, engaging with other experts and learning about the latest developments. I subscribe to relevant journals and publications in the field of quality management and statistics. Furthermore, I actively seek out opportunities for professional development through online courses and training programs offered by organizations such as ASQ (American Society for Quality). This continuous learning helps me stay abreast of emerging techniques and tools, ensuring that my analysis methods remain accurate and relevant.

Q 28. Explain the difference between attribute and variable data in process control.

In process control, attribute data and variable data represent different types of quality characteristics. Variable data are continuous measurements, expressed on a numerical scale. Examples include weight, length, temperature, or the time to complete a task. Variable data provide more detailed information, allowing for precise statistical analysis and calculation of metrics like mean, standard deviation, and capability indices. Attribute data, in contrast, are categorical and represent the presence or absence of a characteristic, often expressed as counts or proportions. Examples include the number of defects per unit, the percentage of non-conforming items, or whether a part is conforming or non-conforming. Attribute data are often simpler to collect but provide less detailed information compared to variable data. The choice between using attribute or variable data depends on the nature of the quality characteristic being measured and the level of detail required for analysis.
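The difference shows up in what each data type lets you compute. The minimal sketch below assumes hypothetical shaft diameters (variable data) and hypothetical counts of nonconforming parts in subgroups of 50 (attribute data). The diameters support both a mean and a standard deviation, while the attribute counts reduce to a proportion, summarized here with standard p-chart limits, p̄ ± 3√(p̄(1 − p̄)/n), clipped to the range 0 to 1.

```python
import math
import statistics

# Variable data: hypothetical shaft diameters in mm (continuous measurements)
diameters = [25.02, 24.98, 25.01, 25.00, 24.99, 25.03]

# Attribute data: hypothetical count of nonconforming parts in each subgroup of 50
defectives = [3, 5, 2, 4, 6, 4]
SUBGROUP_SIZE = 50

def variable_summary(x):
    """Continuous data support both a location (mean) and a spread (sample std dev)."""
    return statistics.mean(x), statistics.stdev(x)

def p_chart_limits(counts, n):
    """Return (LCL, center line, UCL) for a p-chart with a constant subgroup size n."""
    p_bar = sum(counts) / (n * len(counts))
    sigma_p = math.sqrt(p_bar * (1 - p_bar) / n)
    # A proportion cannot fall below 0 or exceed 1, so clip the limits
    return max(0.0, p_bar - 3 * sigma_p), p_bar, min(1.0, p_bar + 3 * sigma_p)

mean_d, sd_d = variable_summary(diameters)
lcl, p_bar, ucl = p_chart_limits(defectives, SUBGROUP_SIZE)
print(f"diameter mean = {mean_d:.3f} mm, std dev = {sd_d:.4f} mm")
print(f"p-chart: LCL = {lcl:.3f}, p-bar = {p_bar:.3f}, UCL = {ucl:.3f}")
```

The diameters yield both a center and a spread, which is what capability indices such as Cp and Cpk require. The defective counts yield only an average proportion of 8% with limits around it, which says how often parts fail but not by how much.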

Key Topics to Learn for Process Variation Analysis Interview

- Statistical Process Control (SPC): Understanding control charts (X-bar and R, p-chart, c-chart), process capability analysis (Cp, Cpk), and their application in identifying and reducing variation.

- Measurement System Analysis (MSA): Evaluating the accuracy and precision of measurement systems, including Gauge R&R studies, to ensure data reliability in variation analysis.

- Design of Experiments (DOE): Applying DOE methodologies (e.g., factorial designs, Taguchi methods) to identify the key factors contributing to process variation and optimize process parameters.

- Root Cause Analysis (RCA): Utilizing various RCA techniques (e.g., 5 Whys, Fishbone diagrams, Fault Tree Analysis) to effectively pinpoint the underlying causes of process variation.

- Process Mapping and Value Stream Mapping: Visualizing the process flow to identify bottlenecks and areas prone to significant variation.

- Data Analysis Techniques: Proficiency in statistical software (e.g., Minitab, JMP) for data analysis, including hypothesis testing, regression analysis, and ANOVA.

- Six Sigma methodologies: Understanding DMAIC (Define, Measure, Analyze, Improve, Control) and its application in reducing process variation and improving quality.

- Practical Applications: Be prepared to discuss how you’ve applied these concepts in real-world scenarios, focusing on problem-solving and results achieved.

- Advanced Topics (for Senior Roles): Explore topics like advanced statistical modeling, multivariate analysis, and robust design techniques.
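As a quick refresher on the SPC item above, the sketch below computes X-bar and R chart limits and the Cp and Cpk indices in plain Python. It assumes subgroups of size five and uses the standard published control chart constants for n = 5 (A2 = 0.577, D3 = 0, D4 = 2.114, d2 = 2.326), with within-subgroup sigma estimated as R̄/d2. The data and specification limits are hypothetical.

```python
import statistics

# Control chart constants for subgroups of size n = 5 (standard SPC tables)
A2, D3, D4, d2 = 0.577, 0.0, 2.114, 2.326
SUBGROUP_SIZE = 5

def xbar_r_limits(subgroups):
    """Return (LCL, center line, UCL) for the X-bar chart and for the R chart."""
    if any(len(s) != SUBGROUP_SIZE for s in subgroups):
        raise ValueError("these constants only apply to subgroups of size 5")
    means = [statistics.mean(s) for s in subgroups]
    ranges = [max(s) - min(s) for s in subgroups]
    x_dbar = statistics.mean(means)   # grand mean (center of the X-bar chart)
    r_bar = statistics.mean(ranges)   # average range (center of the R chart)
    xbar_chart = (x_dbar - A2 * r_bar, x_dbar, x_dbar + A2 * r_bar)
    r_chart = (D3 * r_bar, r_bar, D4 * r_bar)
    return xbar_chart, r_chart

def cp_cpk(x_dbar, r_bar, lsl, usl):
    """Capability indices using within-subgroup sigma estimated as R-bar / d2."""
    sigma_within = r_bar / d2
    cp = (usl - lsl) / (6 * sigma_within)
    cpk = min(usl - x_dbar, x_dbar - lsl) / (3 * sigma_within)
    return cp, cpk

# Hypothetical subgroups of five measurements each
data = [
    [10, 11, 9, 10, 10],
    [12, 10, 11, 11, 11],
    [9, 10, 10, 8, 8],
]
xbar_chart, r_chart = xbar_r_limits(data)
cp, cpk = cp_cpk(xbar_chart[1], r_chart[1], lsl=6, usl=13)
print("X-bar chart (LCL, CL, UCL):", xbar_chart)
print("R chart (LCL, CL, UCL):", r_chart)
print(f"Cp = {cp:.2f}, Cpk = {cpk:.2f}")
```

With these sample values, Cp is about 1.36 and Cpk about 1.16: Cpk is lower because the process mean (10) sits closer to the upper specification limit (13) than to the lower one (6). Capability indices are only meaningful once the control charts show the process is in statistical control.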

Next Steps

Mastering Process Variation Analysis significantly enhances your career prospects in various industries, opening doors to advanced roles with higher earning potential and greater responsibility. A well-crafted resume is crucial in showcasing your skills and experience to potential employers. Building an ATS-friendly resume is key to ensuring your application gets noticed. We highly recommend using ResumeGemini to create a professional and effective resume that highlights your expertise in Process Variation Analysis. ResumeGemini provides examples of resumes tailored specifically to this field, helping you present your qualifications in the most impactful way.
