Are you ready to stand out in your next interview? Understanding and preparing for Advanced Proficiency in SQL interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Advanced Proficiency in SQL Interview

Q 1. Explain the difference between INNER JOIN, LEFT JOIN, RIGHT JOIN, and FULL OUTER JOIN.

SQL joins are used to combine rows from two or more tables based on a related column between them. Let’s break down the four main types:

- INNER JOIN: Returns rows only when there is a match in both tables. Think of it as the overlapping area of the two circles in a Venn diagram. If a row in one table doesn’t have a corresponding match in the other, it’s excluded from the result.

- LEFT (OUTER) JOIN: Returns all rows from the left table (the one specified before LEFT JOIN), even if there’s no match in the right table. For rows in the left table without a match, the columns from the right table are NULL.

- RIGHT (OUTER) JOIN: Similar to LEFT JOIN, but it returns all rows from the right table (the one specified after RIGHT JOIN), with NULL values in the left table’s columns for unmatched rows.

- FULL (OUTER) JOIN: Returns all rows from both tables. Matching rows are combined as in an INNER JOIN; for a row with no match, the columns from the other table are NULL. This gives you the complete picture from both tables.

Example: Let’s say we have two tables, Customers and Orders, linked by a CustomerID.

SELECT * FROM Customers INNER JOIN Orders ON Customers.CustomerID = Orders.CustomerID;

This INNER JOIN would only show customers who have placed orders (a customer with several orders appears once per order).

SELECT * FROM Customers LEFT JOIN Orders ON Customers.CustomerID = Orders.CustomerID;

This LEFT JOIN would show all customers, and those without orders would have NULL values in the Orders table columns.
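To see the difference concretely, the sketch below runs both joins against a tiny in-memory SQLite database through Python’s built-in sqlite3 module. The rows are hypothetical sample data. Because SQLite versions older than 3.39 have no FULL OUTER JOIN, the sketch also emulates one with a LEFT JOIN plus the unmatched right-hand rows, a common portable workaround.

```python
import sqlite3

# In-memory database with hypothetical Customers/Orders sample rows
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, CustomerName TEXT);
    CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, CustomerID INTEGER);
    INSERT INTO Customers VALUES (1, 'Ann'), (2, 'Bob'), (3, 'Cid');
    -- Ann has two orders, Bob one, Cid none; order 13 references a missing customer
    INSERT INTO Orders VALUES (10, 1), (11, 1), (12, 2), (13, 99);
""")

# INNER JOIN: only matched pairs; Cid and order 13 both disappear
inner = conn.execute("""
    SELECT c.CustomerName, o.OrderID
    FROM Customers c INNER JOIN Orders o ON c.CustomerID = o.CustomerID
    ORDER BY o.OrderID
""").fetchall()

# LEFT JOIN: every customer; Cid gets NULL (None in Python) for OrderID
left = conn.execute("""
    SELECT c.CustomerName, o.OrderID
    FROM Customers c LEFT JOIN Orders o ON c.CustomerID = o.CustomerID
    ORDER BY c.CustomerID, o.OrderID
""").fetchall()

# FULL OUTER JOIN emulated: LEFT JOIN rows plus orders with no matching customer
full = conn.execute("""
    SELECT c.CustomerName, o.OrderID
    FROM Customers c LEFT JOIN Orders o ON c.CustomerID = o.CustomerID
    UNION ALL
    SELECT NULL, o.OrderID
    FROM Orders o
    WHERE NOT EXISTS (SELECT 1 FROM Customers c WHERE c.CustomerID = o.CustomerID)
""").fetchall()

print(inner)      # [('Ann', 10), ('Ann', 11), ('Bob', 12)]
print(left)       # [('Ann', 10), ('Ann', 11), ('Bob', 12), ('Cid', None)]
print(len(full))  # 5
```

Note that Ann appears twice in both joins because she has two orders; joins multiply rows rather than collapsing them.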

Q 2. How do you optimize SQL queries for performance?

Optimizing SQL queries for performance is crucial for any application. Here are key strategies:

- Use Indexes: Indexes are like the index in a book – they speed up data retrieval. Create indexes on frequently queried columns, especially those used in WHERE clauses and join conditions.

- Write Efficient Queries: Avoid using SELECT *; select only the necessary columns. Use appropriate data types and avoid unnecessary subqueries.

- Optimize Joins: Choose the join type the result actually needs (an INNER JOIN usually gives the optimizer less work than a FULL OUTER JOIN), and make sure join columns are indexed and share the same data type.

- Use Query Hints: Some database systems allow query hints to influence the query optimizer’s choices (e.g., forcing certain indexes or join methods). Use these cautiously and with a deep understanding.

- Analyze Execution Plans: Most database systems provide tools to analyze the execution plan of a query. This shows how the database will process the query, revealing potential bottlenecks.

- Data Partitioning: For very large tables, partitioning can significantly improve query performance by splitting the data into smaller, more manageable chunks.

- Caching: Utilize database caching mechanisms to store frequently accessed data in memory.

- Upgrade Hardware: Sometimes, the simplest solution is to upgrade the database server’s hardware (more RAM, faster processors).

- Database Tuning: Regular database tuning by a DBA ensures optimal performance and resource allocation.

Example: Instead of

SELECT * FROM Products WHERE Category = 'Electronics';

use

SELECT ProductID, ProductName, Price FROM Products WHERE Category = 'Electronics';

This reduces the amount of data transferred.
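Selecting fewer columns can also change the execution plan: if every column a query needs is in an index, the database can answer it from the index alone (a covering index). A minimal sketch using SQLite’s EXPLAIN QUERY PLAN via Python’s sqlite3 module; the index idx_products_category and the Description column are hypothetical, and because plan wording differs between SQLite versions, only key phrases are checked.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Products (
        ProductID INTEGER PRIMARY KEY,
        ProductName TEXT, Category TEXT, Price REAL, Description TEXT
    );
    -- Hypothetical index holding every column the narrow query needs
    CREATE INDEX idx_products_category ON Products (Category, ProductName, Price);
""")

def plan(sql: str) -> str:
    """Join the 'detail' column of EXPLAIN QUERY PLAN into one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, ("Electronics",)).fetchall()
    return " | ".join(row[3] for row in rows)

# SELECT * needs Description, which is not in the index, so each hit requires a table lookup
star_plan = plan("SELECT * FROM Products WHERE Category = ?")
# ProductID is the rowid, which every SQLite index stores, so this query is fully covered
narrow_plan = plan("SELECT ProductID, ProductName, Price FROM Products WHERE Category = ?")

print(star_plan)    # mentions USING INDEX idx_products_category
print(narrow_plan)  # mentions USING COVERING INDEX idx_products_category
```

Other systems expose the same information through their own tools (for example EXPLAIN in PostgreSQL and MySQL, or graphical plans in SQL Server).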

Q 3. Describe different indexing strategies and when to use them.

Indexing strategies are vital for query optimization. Different index types suit different scenarios:

- B-tree Index: The most common type, suitable for equality, range, and sorting operations. Good for columns used in WHERE, ORDER BY, and JOIN clauses.

- Hash Index: Very fast for equality searches, but not suitable for range searches or sorting. Typically used for columns frequently used in equality comparisons.

- Full-text Index: Designed for searching within textual data, supporting complex search queries like wildcard searches and stemming.

- Spatial Index: Used for querying geographic data (e.g., points, polygons) based on location or proximity.

- Composite Index: An index on multiple columns. Column order is crucial: the index can be used efficiently only when a query filters on its leftmost column or columns (the leftmost-prefix rule), so put the columns most often filtered on first.

When to use them:

- Use indexes on frequently queried columns, especially those in WHERE clauses.

- Avoid over-indexing; too many indexes can slow down data modification operations (inserts, updates, deletes).

- Consider composite indexes when queries frequently filter on multiple columns.

- Use full-text indexes for searching large volumes of text data.

Example: A B-tree index on the CustomerID column in the Orders table would dramatically speed up queries like SELECT * FROM Orders WHERE CustomerID = 123;
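The leftmost-prefix rule for composite indexes can be observed directly in SQLite. This is a minimal sketch with a hypothetical composite index on (CustomerID, OrderDate); it assumes no ANALYZE statistics exist (so SQLite’s skip-scan optimization does not kick in) and checks only for the index name, since plan wording varies by version.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, CustomerID INTEGER, OrderDate TEXT, Amount REAL);
    -- Hypothetical composite index: CustomerID is the leftmost column
    CREATE INDEX idx_orders_customer_date ON Orders (CustomerID, OrderDate);
""")

def plan(sql, params):
    """Return the EXPLAIN QUERY PLAN details for a query as one string."""
    return " | ".join(r[3] for r in conn.execute("EXPLAIN QUERY PLAN " + sql, params))

# Filters on both columns, in index order: index used for both
both = plan("SELECT * FROM Orders WHERE CustomerID = ? AND OrderDate >= ?", (123, "2024-01-01"))
# Filters on the leftmost column only: index still usable
leading = plan("SELECT * FROM Orders WHERE CustomerID = ?", (123,))
# Filters on the second column only: no leftmost prefix, so a full table scan
trailing = plan("SELECT * FROM Orders WHERE OrderDate >= ?", ("2024-01-01",))

for name, detail in [("both", both), ("leading", leading), ("trailing", trailing)]:
    print(name, "->", detail)
```

If queries often filter on OrderDate alone, a separate index on OrderDate (or a different column order) would be needed.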

Q 4. Explain the concept of normalization and its different forms.

Database normalization is the process of organizing data to reduce redundancy and improve data integrity. It involves breaking down larger tables into smaller ones and defining relationships between them. Different forms exist:

- 1NF (First Normal Form): Eliminate repeating groups of data within a table. Each column should contain atomic values (indivisible values).

- 2NF (Second Normal Form): Be in 1NF and eliminate redundant data that depends on only part of the primary key (in tables with composite keys).

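- 3NF (Third Normal Form): Be in 2NF and remove transitive dependencies, where a non-key column depends on another non-key column rather than directly on the primary key.

- BCNF (Boyce-Codd Normal Form): A stricter version of 3NF in which every determinant must be a candidate key; it addresses anomalies that 3NF can miss when a table has overlapping candidate keys.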

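Example: Consider a table storing customer information with several phone numbers in one row, for example as a comma-separated list or as repeating columns such as Phone1 and Phone2. This is not in 1NF because the phone numbers form a repeating group. Normalizing it would create a separate CustomerPhones table with CustomerID and PhoneNumber, one row per number.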

Normalization reduces data redundancy (saving storage space), improves data integrity (preventing inconsistencies), and simplifies data modification (changes only need to be made in one place).

Q 5. How do you handle NULL values in SQL?

NULL values represent the absence of a value. Here are strategies for handling them:

- IS NULL and IS NOT NULL: Use these operators in WHERE clauses to filter rows based on the presence or absence of NULL values. A comparison such as Email = NULL never evaluates to true, because NULL is not equal to anything, including another NULL.

- COALESCE (or IFNULL in MySQL and SQLite, ISNULL in SQL Server): Returns its first non-NULL argument, so it can replace NULL values with a specified value. Useful for displaying a default value or for calculations.

- NVL (Oracle-specific): Similar to COALESCE, but takes exactly two arguments.

- Outer Joins: As discussed earlier, outer joins keep rows that have no match and fill the missing columns with NULL, so queries over their results must be ready to handle those NULLs.
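The NULL rules listed above are easy to verify against a small table. A minimal sketch using Python’s sqlite3 module and an in-memory SQLite database with hypothetical customer rows; it also shows that COUNT(column) skips NULLs while COUNT(*) does not.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, CustomerName TEXT, Email TEXT);
    INSERT INTO Customers VALUES (1, 'Ann', 'ann@example.com'), (2, 'Bob', NULL), (3, 'Cid', NULL);
""")

# "= NULL" compares against an unknown value, so the condition is never true
eq_null = conn.execute("SELECT CustomerName FROM Customers WHERE Email = NULL").fetchall()

# IS NULL is the correct test for a missing value
is_null = conn.execute(
    "SELECT CustomerName FROM Customers WHERE Email IS NULL ORDER BY CustomerID"
).fetchall()

# COALESCE returns its first non-NULL argument
display = conn.execute(
    "SELECT CustomerName, COALESCE(Email, 'Unknown') FROM Customers ORDER BY CustomerID"
).fetchall()

# COUNT(*) counts every row; COUNT(Email) counts only non-NULL emails
counts = conn.execute("SELECT COUNT(*), COUNT(Email) FROM Customers").fetchone()

print(eq_null)   # []
print(is_null)   # [('Bob',), ('Cid',)]
print(display)   # [('Ann', 'ann@example.com'), ('Bob', 'Unknown'), ('Cid', 'Unknown')]
print(counts)    # (3, 1)
```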

Example: To find customers with missing email addresses:

SELECT * FROM Customers WHERE Email IS NULL;

To replace NULL email addresses with ‘Unknown’:

SELECT CustomerName, COALESCE(Email, 'Unknown') AS EmailAddress FROM Customers;

Q 6. What are common SQL injection vulnerabilities and how can they be prevented?

SQL injection is a serious security vulnerability where malicious SQL code is inserted into an application’s input to manipulate the database. This can lead to data breaches, unauthorized access, or even complete database compromise.

- Vulnerability: Suppose an application directly concatenates user input into a SQL query. If a user enters malicious input such as ' OR '1'='1, the condition WHERE username = '' OR '1'='1' becomes true for every row, altering the intended query.

- Prevention:

- Parameterized Queries/Prepared Statements: This is the most effective defense. Instead of concatenating user input directly, use parameterized queries, where input values are treated as data, not as executable code.

- Input Validation: Strictly validate all user inputs to ensure they conform to expected formats and lengths. Sanitizing input (for example, rejecting unexpected characters) adds defense in depth but does not replace parameterized queries.

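- Stored Procedures: Use stored procedures to encapsulate database logic and prevent direct SQL execution from the application. This only helps if the procedure itself does not build dynamic SQL by concatenating its parameters.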

- Least Privilege: Grant database users only the necessary permissions. Avoid granting excessive privileges that could allow malicious activity.

- Regular Security Audits: Conduct regular security audits and penetration testing to identify and address vulnerabilities.
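The difference between concatenation and parameterization can be demonstrated in a few lines. This is a minimal sketch using Python’s sqlite3 module, where the ? placeholder plays the same role as the ? in a JDBC PreparedStatement; the Users rows and both helper functions are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Users (username TEXT PRIMARY KEY, role TEXT);
    INSERT INTO Users VALUES ('alice', 'admin'), ('bob', 'user');
""")

def find_user_unsafe(username):
    # VULNERABLE: user input becomes part of the SQL text
    query = "SELECT username FROM Users WHERE username = '" + username + "'"
    return conn.execute(query).fetchall()

def find_user_safe(username):
    # The ? placeholder sends the input as a bound value, never as SQL code
    return conn.execute("SELECT username FROM Users WHERE username = ?", (username,)).fetchall()

payload = "' OR '1'='1"
leaked = find_user_unsafe(payload)   # WHERE username = '' OR '1'='1' matches every row
blocked = find_user_safe(payload)    # looks for a user literally named "' OR '1'='1"

print(len(leaked))  # 2
print(blocked)      # []
```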

Example (Vulnerable):

String query = "SELECT * FROM Users WHERE username = '" + username + "'";

Example (Safe):

PreparedStatement statement = connection.prepareStatement("SELECT * FROM Users WHERE username = ?");
statement.setString(1, username);

Q 7. Explain the use of CTEs (Common Table Expressions).

CTEs (Common Table Expressions) are temporary, named result sets that can be referenced within a single query. They’re useful for simplifying complex queries and improving readability.

- Recursive CTEs: Can be used to perform hierarchical queries, such as traversing tree structures.

- Non-recursive CTEs: Used to break down complex queries into smaller, more manageable parts.

Benefits:

- Improved Readability: Make complex queries easier to understand and maintain.

- Code Reusability: A CTE can be referenced multiple times within a single query.

- Modularity: Break down complex logic into smaller, reusable units.

Example (Non-recursive):

WITH CustomerOrders AS (
    SELECT CustomerID, COUNT(*) AS OrderCount
    FROM Orders
    GROUP BY CustomerID
)
SELECT c.CustomerName, co.OrderCount
FROM Customers c
JOIN CustomerOrders co ON c.CustomerID = co.CustomerID;

This query uses a CTE called CustomerOrders to calculate the number of orders for each customer before joining it with the Customers table. This improves readability compared to writing the same logic as a nested subquery.
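The CTE can be run as-is in SQLite. This minimal sketch uses Python’s sqlite3 module with hypothetical sample rows, and adds a second query that references the same CTE twice (once for the rows, once for the average), illustrating the reuse benefit listed earlier.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, CustomerName TEXT);
    CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, CustomerID INTEGER);
    INSERT INTO Customers VALUES (1, 'Ann'), (2, 'Bob'), (3, 'Cid');
    INSERT INTO Orders VALUES (10, 1), (11, 1), (12, 2);
""")

# The CTE from the text; Cid has no orders, so the inner JOIN drops him
rows = conn.execute("""
    WITH CustomerOrders AS (
        SELECT CustomerID, COUNT(*) AS OrderCount
        FROM Orders
        GROUP BY CustomerID
    )
    SELECT c.CustomerName, co.OrderCount
    FROM Customers c
    JOIN CustomerOrders co ON c.CustomerID = co.CustomerID
    ORDER BY c.CustomerName
""").fetchall()

# The same CTE referenced twice: customers whose order count is above average
above_avg = conn.execute("""
    WITH CustomerOrders AS (
        SELECT CustomerID, COUNT(*) AS OrderCount
        FROM Orders
        GROUP BY CustomerID
    )
    SELECT CustomerID FROM CustomerOrders
    WHERE OrderCount > (SELECT AVG(OrderCount) FROM CustomerOrders)
""").fetchall()

print(rows)       # [('Ann', 2), ('Bob', 1)]
print(above_avg)  # [(1,)]
```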

Q 8. How do you perform data aggregation using SQL?

Data aggregation in SQL involves summarizing data from multiple rows into a single row. Think of it like consolidating a spreadsheet’s many entries into concise summary statistics. We use aggregate functions to achieve this. These functions operate on sets of values and return a single value.

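- COUNT(*): Counts the number of rows. COUNT(column_name) counts only the non-NULL values in that column.

- SUM(column_name): Calculates the sum of values in a column.

- AVG(column_name): Calculates the average of values in a column.

- MIN(column_name): Finds the minimum value in a column.

- MAX(column_name): Finds the maximum value in a column.

Apart from COUNT(*), these functions ignore NULL values.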

Example: Let’s say we have a table called Sales with columns ProductID, QuantitySold, and Price. To find the total revenue for each product, we would use:

SELECT ProductID, SUM(QuantitySold * Price) AS TotalRevenue
FROM Sales
GROUP BY ProductID;

The GROUP BY clause groups rows with the same ProductID, and the SUM() function calculates the total revenue for each group. This is a very common task in any data analysis role, crucial for understanding sales trends, inventory management, and more.
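A small worked example makes the grouping visible. This sketch runs the query against hypothetical Sales rows in an in-memory SQLite database (via Python’s sqlite3 module), then adds HAVING, which filters groups after aggregation, and an ungrouped aggregate that collapses the whole table into one row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Sales (ProductID INTEGER, QuantitySold INTEGER, Price REAL);
    INSERT INTO Sales VALUES
        (1, 2, 10.0), (1, 3, 10.0),   -- product 1: 20 + 30 = 50
        (2, 1, 99.5),                 -- product 2: 99.5
        (3, 4, 2.5), (3, 1, 2.5);     -- product 3: 10 + 2.5 = 12.5
""")

# One output row per ProductID; SUM runs separately inside each group
revenue = conn.execute("""
    SELECT ProductID, SUM(QuantitySold * Price) AS TotalRevenue
    FROM Sales
    GROUP BY ProductID
    ORDER BY ProductID
""").fetchall()

# HAVING filters whole groups after aggregation (WHERE filters rows before it)
big_sellers = conn.execute("""
    SELECT ProductID, SUM(QuantitySold * Price) AS TotalRevenue
    FROM Sales
    GROUP BY ProductID
    HAVING SUM(QuantitySold * Price) > 20
    ORDER BY ProductID
""").fetchall()

# Without GROUP BY, the aggregates summarize the entire table
summary = conn.execute("SELECT COUNT(*), MIN(Price), MAX(Price) FROM Sales").fetchone()

print(revenue)      # [(1, 50.0), (2, 99.5), (3, 12.5)]
print(big_sellers)  # [(1, 50.0), (2, 99.5)]
print(summary)      # (5, 2.5, 99.5)
```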

Q 9. Describe different ways to handle transactions in SQL.

SQL transactions are sequences of operations treated as a single unit of work. Imagine a bank transaction: you wouldn’t want just the debit to happen without the corresponding credit. Transactions ensure data integrity and consistency. They’re controlled using BEGIN TRANSACTION, COMMIT, and ROLLBACK statements (the exact keyword for starting a transaction varies: BEGIN TRANSACTION in SQL Server, START TRANSACTION or BEGIN in MySQL and PostgreSQL).

- BEGIN TRANSACTION: Starts a transaction. All subsequent SQL statements are part of this transaction.

- COMMIT: Saves all changes made within the transaction and ends it. The changes are permanently written to the database.

- ROLLBACK: Undoes all changes made within the transaction. The database is restored to its state before the transaction began.

Example: Consider transferring money between two accounts. We need both the debit and credit to succeed; otherwise, we’d have an inconsistent state. A transaction guarantees atomicity (all or nothing).

BEGIN TRANSACTION;
UPDATE Accounts SET Balance = Balance - 100 WHERE AccountID = 1;
UPDATE Accounts SET Balance = Balance + 100 WHERE AccountID = 2;
COMMIT;

If either UPDATE fails, the application (or an error handler such as a TRY...CATCH block in SQL Server) must issue ROLLBACK instead of COMMIT, which undoes both changes. This is crucial for maintaining data consistency in financial systems and other applications requiring accuracy.
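The commit-or-rollback pattern can be sketched with Python’s sqlite3 module, where using the connection as a context manager (`with conn:`) commits if the block succeeds and rolls back if an exception escapes. The Accounts table, its CHECK constraint, and the transfer helper are hypothetical; the rowcount check treats an UPDATE that matches no account as a failure, since SQL does not raise an error for that by itself.

```python
import sqlite3

def setup() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # CHECK constraint makes an overdraft fail inside the transaction
    conn.execute("CREATE TABLE Accounts (AccountID INTEGER PRIMARY KEY, "
                 "Balance INTEGER NOT NULL CHECK (Balance >= 0))")
    conn.executemany("INSERT INTO Accounts VALUES (?, ?)", [(1, 500), (2, 200)])
    conn.commit()
    return conn

def transfer(conn: sqlite3.Connection, src: int, dst: int, amount: int) -> bool:
    """Move `amount` from src to dst atomically; return True only if committed."""
    try:
        with conn:  # COMMIT on success, ROLLBACK if an exception leaves the block
            debit = conn.execute(
                "UPDATE Accounts SET Balance = Balance - ? WHERE AccountID = ?", (amount, src))
            credit = conn.execute(
                "UPDATE Accounts SET Balance = Balance + ? WHERE AccountID = ?", (amount, dst))
            if debit.rowcount != 1 or credit.rowcount != 1:
                raise ValueError("unknown account")  # forces a rollback of the debit
        return True
    except (sqlite3.IntegrityError, ValueError):
        return False

def balances(conn: sqlite3.Connection) -> dict:
    return dict(conn.execute("SELECT AccountID, Balance FROM Accounts ORDER BY AccountID"))

conn = setup()
ok = transfer(conn, 1, 2, 100)            # both updates succeed -> committed
after_ok = balances(conn)                 # {1: 400, 2: 300}
bad_target = transfer(conn, 1, 99, 100)   # credit matches no row -> debit rolled back
after_bad_target = balances(conn)         # {1: 400, 2: 300}
overdraft = transfer(conn, 2, 1, 1000)    # CHECK (Balance >= 0) fails -> rolled back
after_overdraft = balances(conn)          # {1: 400, 2: 300}
```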

Q 10. Explain the concept of stored procedures and their benefits.

Stored procedures are named blocks of SQL code that are stored in the database and reused. Think of them as reusable functions, but for databases. They encapsulate SQL logic and can improve performance, security, and maintainability.

- Improved Performance: The database can cache and reuse the procedure’s execution plan instead of parsing and optimizing the same statements on every call. Many databases also cache plans for parameterized queries sent from the application, so the advantage over those may be modest.

- Enhanced Security: You can grant execute permissions on stored procedures without granting direct access to underlying tables, improving database security.

- Reduced Network Traffic: Instead of sending multiple SQL statements over the network, you send a single call to the stored procedure, minimizing network traffic.

- Better Code Maintainability: Stored procedures centralize database logic, making code easier to manage and update.

Example: A stored procedure to get customer details by ID:

CREATE PROCEDURE GetCustomerDetails (@CustomerID INT)
AS
BEGIN
    SELECT * FROM Customers WHERE CustomerID = @CustomerID;
END;

This SQL Server procedure can then be called as often as needed, for example with EXEC GetCustomerDetails @CustomerID = 42;, without rewriting the SELECT statement. This is immensely beneficial in large applications where the same logic is used repeatedly throughout the application.

Q 11. How do you troubleshoot and debug SQL queries?

Troubleshooting SQL queries involves a systematic approach. It often starts with understanding the expected results and comparing them to the actual output. Debugging is crucial for ensuring correctness.

- Examine Error Messages: Database systems provide detailed error messages. Carefully read and understand these messages. They usually pinpoint the problem.

- Check Query Syntax: Ensure correct syntax and proper use of keywords, operators, and functions. Even a small typo can cause a failure.

- Analyze Execution Plan: Many database systems provide query execution plans. These plans visualize how the query is executed, highlighting performance bottlenecks.

- Use Logging and Tracing: Enable database logging and tracing to monitor query execution and identify slow queries or unexpected behavior.

- Simplify the Query: Break down complex queries into smaller, simpler parts to identify the source of the error. A common approach is to comment out parts of the query and run it iteratively.

- Test with Sample Data: Use small, known datasets to test the query and isolate problems before running it against the full dataset.

Tools like SQL Server Profiler (superseded by Extended Events in recent SQL Server versions) and the explain-plan features of other database systems are invaluable for identifying the root cause of slow or incorrect query execution. Always start with the simplest explanations; it’s often a simple mistake, not a complex problem.
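"Test with Sample Data" and "Simplify the Query" work well together. In this minimal sketch (Python’s sqlite3 module, hypothetical Orders and Payments tables), a tiny fixture with a hand-computed answer exposes a classic bug: joining two child tables multiplies rows, inflating the sums. Counting the joined rows confirms the cause, and aggregating each table before the join fixes it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Orders   (OrderID INTEGER PRIMARY KEY, CustomerID INTEGER, Amount INTEGER);
    CREATE TABLE Payments (PaymentID INTEGER PRIMARY KEY, CustomerID INTEGER, Amount INTEGER);
    -- Known answer by hand: customer 1 ordered 10 + 20 = 30 and paid 5 + 5 + 5 = 15
    INSERT INTO Orders   VALUES (1, 1, 10), (2, 1, 20);
    INSERT INTO Payments VALUES (1, 1, 5), (2, 1, 5), (3, 1, 5);
""")

# Buggy: 2 orders x 3 payments = 6 joined rows, so both sums are inflated
buggy = conn.execute("""
    SELECT o.CustomerID, SUM(o.Amount), SUM(p.Amount)
    FROM Orders o JOIN Payments p ON p.CustomerID = o.CustomerID
    GROUP BY o.CustomerID
""").fetchone()

# Simplification step: inspect the join on its own to see the row multiplication
joined_rows = conn.execute(
    "SELECT COUNT(*) FROM Orders o JOIN Payments p ON p.CustomerID = o.CustomerID"
).fetchone()[0]

# Fixed: aggregate each child table first, then join one row per customer
fixed = conn.execute("""
    WITH OrderTotals AS (SELECT CustomerID, SUM(Amount) AS Ordered FROM Orders GROUP BY CustomerID),
         PaymentTotals AS (SELECT CustomerID, SUM(Amount) AS Paid FROM Payments GROUP BY CustomerID)
    SELECT ot.CustomerID, ot.Ordered, pt.Paid
    FROM OrderTotals ot JOIN PaymentTotals pt ON pt.CustomerID = ot.CustomerID
""").fetchone()

print(buggy)        # (1, 90, 30)
print(joined_rows)  # 6
print(fixed)        # (1, 30, 15)
```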

Q 12. How do you write efficient SQL queries for large datasets?

Writing efficient SQL queries for large datasets is crucial for performance. Inefficient queries can run for hours, exhaust memory or temporary storage, and slow down every other workload on the server. Optimizing for large data requires a deep understanding of database design and query optimization strategies.

- Use Indexes Appropriately: Indexes speed up data retrieval. Carefully choose columns to index based on frequent search criteria.

- Avoid SELECT *: Only retrieve the necessary columns to minimize data transfer.

- Optimize JOIN Operations: Use appropriate JOIN types (inner, left, right, full) based on your needs and ensure efficient join conditions.

- Use Subqueries Judiciously: Subqueries can be slow if not optimized. Consider using joins as an alternative where applicable.

- Limit the Use of Functions in WHERE Clauses: Wrapping an indexed column in a function (for example, YEAR(OrderDate) = 2024) usually prevents the database from using the index on that column; where possible, rewrite the condition as a range on the bare column.

- Partitioning: For extremely large tables, consider partitioning, which splits a table into smaller pieces so that queries can skip partitions that cannot contain matching rows (partition pruning).

- Analyze Execution Plans: Regularly review query execution plans to identify performance bottlenecks and optimize accordingly.

Remember, the best approach starts with well-designed database schemas and smart query design. A small tweak in the query can make a huge difference when dealing with millions of rows.
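The effect of wrapping a column in a function can be seen in SQLite’s query plans. SQLite has no YEAR() function, so this sketch uses substr() on dates stored as ISO-8601 text (which sort correctly as strings); the table and the index idx_orders_date are hypothetical, and only the index name is checked because plan wording varies by version.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, OrderDate TEXT, Amount REAL);
    CREATE INDEX idx_orders_date ON Orders (OrderDate);
""")

def plan(sql: str) -> str:
    """Return the EXPLAIN QUERY PLAN details for a query as one string."""
    return " | ".join(r[3] for r in conn.execute("EXPLAIN QUERY PLAN " + sql))

# Function applied to the column: the index on OrderDate cannot be used
wrapped = plan("SELECT * FROM Orders WHERE substr(OrderDate, 1, 4) = '2024'")

# Same filter expressed as a range on the bare column: index range search
ranged = plan("SELECT * FROM Orders "
              "WHERE OrderDate >= '2024-01-01' AND OrderDate < '2025-01-01'")

print(wrapped)  # a full SCAN of Orders
print(ranged)   # a SEARCH using idx_orders_date
```

Some systems can also index the expression itself (expression or function-based indexes), which is an alternative when the query cannot be rewritten.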

Q 13. Explain the difference between clustered and non-clustered indexes.

Clustered and non-clustered indexes are ways to organize data to speed up data retrieval. Think of them as different ways to sort a deck of cards.

- Clustered Index: A clustered index determines the order in which the table’s rows are stored; the rows themselves live in the leaf level of the index. It’s like sorting your cards by suit and then by rank. A table can have only one clustered index because the data can be stored in only one order.

- Non-Clustered Index: A non-clustered index stores data in a separate structure, pointing to the actual data rows. It’s like having a separate index card for each card in your deck, indicating where to find it. A table can have multiple non-clustered indexes.

Analogy: Imagine a library. A clustered index is like organizing books by their Dewey Decimal classification; the physical arrangement reflects the order. A non-clustered index is like a card catalog—it provides an alternative way to find books (by author, title, etc.), without changing the books’ physical arrangement.

Choosing the right type of index is critical for optimal query performance. A clustered index suits the column or columns most often used for range queries and sorting (often the primary key), because matching rows are stored together. Non-clustered indexes add further lookup paths on other columns, and a table can have many of them.

Q 14. How do you use window functions in SQL?

Window functions perform calculations across a set of table rows that are somehow related to the current row. They differ from aggregate functions because they return a value for every row in the specified partition, not a single aggregated value for the entire group.

Example: Let’s say you have a table of sales data. You want to calculate the running total of sales for each customer. This can be done using the SUM() function as a window function:

SELECT CustomerID, OrderDate, SalesAmount,
       SUM(SalesAmount) OVER (PARTITION BY CustomerID ORDER BY OrderDate) AS RunningTotal
FROM Sales;

Here, the SUM() function calculates the running total of SalesAmount for each CustomerID, ordered by OrderDate. The OVER clause specifies the partition (CustomerID) and the order (OrderDate) for the calculation. Window functions are powerful tools for data analysis, enabling efficient calculation of running totals, moving averages, ranks, and more.

Other common window functions include RANK(), ROW_NUMBER(), LAG(), and LEAD(). Each offers unique functionalities to enrich data analysis. They are particularly useful when you need to incorporate context from related rows within a dataset without resorting to more complex joins.
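The running total, plus LAG() and RANK(), can be tried in SQLite, which supports window functions from version 3.25 onward (recent Python builds ship a newer SQLite). A minimal sketch with hypothetical Sales rows; note that every input row survives, each gaining extra computed columns.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Sales (CustomerID INTEGER, OrderDate TEXT, SalesAmount INTEGER);
    INSERT INTO Sales VALUES
        (1, '2024-01-05', 100), (1, '2024-02-10', 50), (1, '2024-03-01', 25),
        (2, '2024-01-20', 300), (2, '2024-02-02', 10);
""")

rows = conn.execute("""
    SELECT CustomerID, OrderDate, SalesAmount,
           -- running total restarts for each customer
           SUM(SalesAmount) OVER (PARTITION BY CustomerID ORDER BY OrderDate) AS RunningTotal,
           -- previous order's amount for the same customer (NULL for the first)
           LAG(SalesAmount) OVER (PARTITION BY CustomerID ORDER BY OrderDate) AS PreviousAmount,
           -- rank across all rows, largest sale first
           RANK() OVER (ORDER BY SalesAmount DESC) AS AmountRank
    FROM Sales
    ORDER BY CustomerID, OrderDate
""").fetchall()

for row in rows:
    print(row)
# (1, '2024-01-05', 100, 100, None, 2)
# (1, '2024-02-10', 50, 150, 100, 3)
# (1, '2024-03-01', 25, 175, 50, 4)
# (2, '2024-01-20', 300, 300, None, 1)
# (2, '2024-02-02', 10, 310, 300, 5)
```

With ORDER BY inside OVER, the default frame includes all rows that tie on OrderDate, so two sales on the same date would share one running-total value; ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW gives a strict row-by-row total instead.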

Q 15. Describe different types of database locks and their impact on concurrency.

Database locks are mechanisms that control access to data, preventing conflicts when multiple users or processes try to modify the same data simultaneously. Think of it like a library – locks ensure only one person can borrow a specific book at a time. The impact on concurrency (the ability of multiple tasks to run simultaneously) is significant; without locks, data corruption can easily occur. There are several types:

- Shared Locks (S Locks): Allow multiple users to read data concurrently but prevent any user from modifying it. Imagine several people reading the same book simultaneously.

- Exclusive Locks (X Locks): Prevent all other users from accessing the data, whether reading or writing. This is like someone borrowing a book and taking it home.

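- Update Locks (U Locks): Used by SQL Server when a transaction reads data it intends to modify. A U lock is compatible with shared locks but not with another U lock, and it is converted to an exclusive lock when the update actually happens. This prevents the common deadlock in which two transactions hold shared locks on the same row and both try to upgrade them.

- Intent Locks: These signal a transaction’s intention to acquire shared or exclusive locks at a lower level of the data hierarchy (e.g., an intent-exclusive lock on a table before exclusive locks on some of its rows). They let the engine check a table-level lock request without inspecting every row or page lock.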

The choice of lock depends on the specific application and the required level of concurrency. Overuse of exclusive locks can significantly reduce concurrency, leading to performance bottlenecks, while insufficient locking can lead to data inconsistency. Database management systems (DBMS) use sophisticated lock management algorithms to optimize concurrency and minimize lock contention.


Q 16. How do you optimize query performance using query hints?

Query hints provide a way to instruct the database optimizer on how to execute a query. The optimizer usually does a great job, but in complex scenarios, or with specific knowledge of the data, you might override its choices. These hints can be crucial for performance tuning.

For example, you might use a hint to force the optimizer to use a specific index, or to join tables in a particular order. It’s important to understand that hints should be used judiciously. Overusing them can lead to unexpected behavior or even decrease performance if you don’t understand the underlying mechanics. They should only be applied after thorough performance analysis and testing.

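Example (Oracle):

SELECT /*+ INDEX(mytable myindex) */ * FROM mytable WHERE condition;

This hint instructs the optimizer to use the index ‘myindex’ on the table ‘mytable’. SQL Server expresses the same idea as a table hint:

SELECT * FROM mytable WITH (INDEX(myindex)) WHERE condition;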

Important Note: The specific syntax and available hints vary considerably between database systems (SQL Server, Oracle, MySQL, PostgreSQL, etc.). Always refer to your DBMS’s documentation for the correct syntax and the potential impact of using specific query hints.
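SQLite does not use the /*+ ... */ hint syntax, but its INDEXED BY and NOT INDEXED clauses play a similar role and make the effect of a hint easy to observe. A minimal sketch using Python’s sqlite3 module; mytable, idx_a, and idx_b are hypothetical names.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE mytable (id INTEGER PRIMARY KEY, a INTEGER, b INTEGER);
    CREATE INDEX idx_a ON mytable (a);
    CREATE INDEX idx_b ON mytable (b);
""")

def plan(sql: str) -> str:
    """Return the EXPLAIN QUERY PLAN details for a query as one string."""
    return " | ".join(r[3] for r in conn.execute("EXPLAIN QUERY PLAN " + sql))

# Either index could serve this filter; INDEXED BY pins the choice to idx_b
forced = plan("SELECT * FROM mytable INDEXED BY idx_b WHERE a = 1 AND b = 2")

# NOT INDEXED forbids index use, so the planner falls back to a full scan
no_index = plan("SELECT * FROM mytable NOT INDEXED WHERE a = 1 AND b = 2")

print(forced)    # a SEARCH using idx_b
print(no_index)  # a full SCAN of mytable
```

As with hints in other systems, pinning an index freezes a decision the optimizer would otherwise revisit as data changes, so it is best kept for cases confirmed by measurement.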

Q 17. What are the different data types in SQL and their uses?

SQL offers a rich set of data types to accommodate various kinds of data. Choosing the correct data type is essential for data integrity and query performance. Here are some common types:

- INTEGER/INT: Whole numbers (e.g., 10, -5, 0).

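- FLOAT/DOUBLE: Approximate floating-point numbers (e.g., 3.14, -2.5). For exact values such as money, use DECIMAL/NUMERIC(p, s), which stores a fixed number of digits.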

- VARCHAR(n)/CHAR(n): Variable-length and fixed-length character strings respectively. ‘VARCHAR’ is generally preferred for better space efficiency unless fixed-length is absolutely needed.

- DATE/TIME/DATETIME: Store date and time information. The specific types and their formats vary across DBMS.

- BOOLEAN/BIT: True/False values. Often represented as 1/0.

- BLOB (Binary Large Object): Stores large binary data like images or documents.

- TEXT: Stores large text data. Treatment can vary based on the DBMS.

Selecting appropriate data types helps reduce storage space, improves query performance (the database can optimize operations based on the data type), and ensures data integrity by preventing invalid data from being entered.

Q 18. Explain the concept of database triggers.

Database triggers are stored procedures that automatically execute in response to certain events on a particular table or view. They’re like automated responders that perform actions based on changes in your database. They ensure data integrity, enforce business rules, or audit changes automatically.

Example Scenarios:

- Auditing: A trigger can log every update to a table, recording the old and new values for auditing purposes.

- Data Validation: A trigger can prevent invalid data from being inserted into a table. For instance, preventing negative values in a ‘quantity’ column.

- Cascading Updates: A trigger can automatically update related tables when data in a primary table changes.

Example (Conceptual): A trigger on an ‘Orders’ table might automatically update an ‘Inventory’ table when a new order is placed, decreasing the inventory count of the ordered items.

Triggers enhance data integrity and enforce business rules automatically, making your database more robust and less prone to errors. However, complex triggers can potentially impact performance, so they should be designed carefully.
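Both the validation and the inventory scenarios above can be sketched with SQLite triggers through Python’s sqlite3 module. The table and trigger names are hypothetical, and trigger syntax differs between systems (SQL Server, for example, uses inserted/deleted pseudo-tables instead of NEW); this only illustrates the idea.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Inventory (ProductID INTEGER PRIMARY KEY, Quantity INTEGER NOT NULL);
    CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, ProductID INTEGER NOT NULL,
                         Quantity INTEGER NOT NULL);

    -- Validation trigger: abort an INSERT that asks for more units than are in stock
    CREATE TRIGGER trg_orders_validate BEFORE INSERT ON Orders
    BEGIN
        SELECT RAISE(ABORT, 'insufficient stock')
        WHERE NEW.Quantity > (SELECT Quantity FROM Inventory WHERE ProductID = NEW.ProductID);
    END;

    -- Business-rule trigger: decrement stock once an order row has been inserted
    CREATE TRIGGER trg_orders_inventory AFTER INSERT ON Orders
    BEGIN
        UPDATE Inventory SET Quantity = Quantity - NEW.Quantity
        WHERE ProductID = NEW.ProductID;
    END;

    INSERT INTO Inventory VALUES (1, 10);
""")

conn.execute("INSERT INTO Orders (ProductID, Quantity) VALUES (1, 3)")  # accepted
try:
    conn.execute("INSERT INTO Orders (ProductID, Quantity) VALUES (1, 50)")  # too many units
    error_message = None
except sqlite3.DatabaseError as exc:  # RAISE(ABORT, ...) surfaces as a database error
    error_message = str(exc)

stock = conn.execute("SELECT Quantity FROM Inventory WHERE ProductID = 1").fetchone()[0]
order_count = conn.execute("SELECT COUNT(*) FROM Orders").fetchone()[0]

print(stock, order_count, error_message)  # 7 1 insufficient stock
```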

Q 19. How do you create and manage user roles and permissions in a database?

Managing user roles and permissions is crucial for database security. This involves creating different user accounts with varying levels of access to prevent unauthorized data modification or viewing. The process usually involves:

- Creating Users: Define user accounts with unique usernames and passwords.

- Creating Roles: Define roles representing different access levels (e.g., ‘administrator’, ‘data_entry’, ‘read_only’). Roles group permissions.

- Granting Permissions: Assign specific permissions (e.g., SELECT, INSERT, UPDATE, DELETE) to individual roles. You can grant permissions on specific tables, columns, or even views.

- Assigning Roles to Users: Assign roles to users, giving them the combined permissions of the assigned roles.

Example (Conceptual): Create a ‘data_entry’ role that can only INSERT and UPDATE data in the ‘Products’ table but cannot DELETE or view data from other tables. Then assign this role to specific users.

Effective role management minimizes security risks and ensures users only access the data they need, improving data security and adhering to regulatory compliance requirements.

Q 20. How do you handle data integrity constraints in SQL?

Data integrity constraints are rules that ensure data accuracy and consistency. They prevent invalid data from entering the database. Common types include:

- NOT NULL: Ensures a column cannot have NULL values.

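- UNIQUE: Ensures all non-NULL values in a column (or combination of columns) are distinct. Unlike a primary key, a table can have several UNIQUE constraints, and UNIQUE columns can usually hold NULLs.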

- PRIMARY KEY: Uniquely identifies each row in a table. It’s a combination of NOT NULL and UNIQUE constraints.

- FOREIGN KEY: Creates a link between two tables, ensuring referential integrity. It prevents actions that would destroy links between related data (e.g., deleting a record in a parent table if child records referencing it still exist).

- CHECK: Ensures values in a column satisfy a specific condition (e.g., age > 0).

Example: A ‘Customers’ table might have a ‘CustomerID’ as the PRIMARY KEY, and an ‘Orders’ table might have a ‘CustomerID’ as a FOREIGN KEY, referencing the ‘Customers’ table. This prevents creating an order for a non-existent customer.

Data integrity constraints are vital for maintaining accurate and reliable data. They reduce errors, improve data quality, and help ensure consistency throughout your database.
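Each constraint type can be exercised with one bad INSERT. This minimal sketch uses Python’s sqlite3 module with hypothetical Customers and Orders tables. One SQLite-specific detail: foreign keys are enforced only after PRAGMA foreign_keys = ON, whereas most other systems enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FOREIGN KEY only when enabled
conn.executescript("""
    CREATE TABLE Customers (
        CustomerID INTEGER PRIMARY KEY,
        Email TEXT NOT NULL UNIQUE,
        Age INTEGER CHECK (Age > 0)
    );
    CREATE TABLE Orders (
        OrderID INTEGER PRIMARY KEY,
        CustomerID INTEGER NOT NULL REFERENCES Customers (CustomerID)
    );
    INSERT INTO Customers VALUES (1, 'ann@example.com', 34);
""")

def violates(sql: str) -> bool:
    """Return True if the statement is rejected by a constraint."""
    try:
        conn.execute(sql)
        return False
    except sqlite3.IntegrityError:
        return True

results = {
    "NOT NULL":    violates("INSERT INTO Customers VALUES (2, NULL, 20)"),
    "UNIQUE":      violates("INSERT INTO Customers VALUES (3, 'ann@example.com', 20)"),
    "CHECK":       violates("INSERT INTO Customers VALUES (4, 'bob@example.com', -5)"),
    "PRIMARY KEY": violates("INSERT INTO Customers VALUES (1, 'cid@example.com', 40)"),
    "FOREIGN KEY": violates("INSERT INTO Orders VALUES (10, 99)"),  # customer 99 does not exist
}
valid_order_ok = not violates("INSERT INTO Orders VALUES (11, 1)")  # customer 1 exists

print(results)         # every value is True
print(valid_order_ok)  # True
```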

Q 21. Explain the concept of database views.

Database views are virtual tables based on the result-set of an SQL statement. They don’t store data themselves; they provide a customized or simplified view of the underlying base tables. Think of them as pre-defined queries stored as named objects.

Benefits of Views:

- Data Security: Views can restrict access to sensitive data by only showing selected columns or rows.

- Data Simplification: Views can combine data from multiple tables into a single, easier-to-query structure.

- Query Simplification: Complex queries can be encapsulated in a view, simplifying access for other users or applications.

- Data Integrity: An updatable view defined WITH CHECK OPTION rejects inserts and updates made through the view that would produce rows the view itself cannot show, which helps enforce business rules.

Example: A view could be created to display only customer names and order totals, hiding the underlying tables containing detailed order information. This simplifies data access for reporting while protecting detailed order data.

Views are powerful tools for managing data access, simplifying queries, and enhancing security and usability of your database.
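The reporting example above can be sketched in SQLite via Python’s sqlite3 module. The tables, the Phone column standing in for sensitive data, and the view name CustomerOrderTotals are hypothetical. Inserting a new order and re-querying the view shows that it stores no data of its own: it re-runs its query against the base tables.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, CustomerName TEXT, Phone TEXT);
    CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, CustomerID INTEGER, Amount REAL);
    INSERT INTO Customers VALUES (1, 'Ann', '555-0100'), (2, 'Bob', '555-0101');
    INSERT INTO Orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0);

    -- Exposes names and totals only; Phone and individual orders stay hidden
    CREATE VIEW CustomerOrderTotals AS
    SELECT c.CustomerName, SUM(o.Amount) AS OrderTotal
    FROM Customers c JOIN Orders o ON o.CustomerID = c.CustomerID
    GROUP BY c.CustomerID, c.CustomerName;
""")

before = conn.execute("SELECT * FROM CustomerOrderTotals ORDER BY CustomerName").fetchall()
conn.execute("INSERT INTO Orders VALUES (13, 2, 5.0)")  # change a base table only
after = conn.execute("SELECT * FROM CustomerOrderTotals ORDER BY CustomerName").fetchall()

print(before)  # [('Ann', 65.0), ('Bob', 15.0)]
print(after)   # [('Ann', 65.0), ('Bob', 20.0)]
```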

Q 22. Describe different ways to perform data backup and recovery.

Data backup and recovery are crucial for ensuring data availability and business continuity. There are several methods, each with its strengths and weaknesses. Think of it like having multiple copies of a crucial document – you want different copies stored in different ways, just in case one method fails.

- Full Backup: This involves copying the entire database at a specific point in time. It’s like making a complete photocopy of your document. It’s resource-intensive but provides a complete restore point. Restoration is usually faster than other methods.

- Differential Backup: This backs up only the data that has changed since the last full backup. It’s like noting down the changes made to your document since the last complete copy. It requires less storage than full backups, but restoring requires both the full and differential backups.

- Incremental Backup: This backs up only the data that has changed since the last backup (full or incremental). It’s similar to tracking every tiny change made to your document. It minimizes storage space and backup time but restoring is the most complex, as it requires the full backup and all subsequent incremental backups.

- Transaction Log Backups (for systems that support them): These backups capture all transactions recorded in the log since the previous log backup. This is like tracking every keystroke made in your document. Useful for point-in-time recovery and minimizing data loss.

The choice of method depends on factors like recovery time objective (RTO) – how quickly you need to restore data – recovery point objective (RPO) – how much data loss is acceptable – and available storage space. Many organizations employ a combination of methods, for example, a full backup weekly, differential backups daily, and transaction log backups hourly for maximum protection.

Q 23. How do you monitor database performance?

Monitoring database performance is essential for maintaining efficiency and preventing issues. Think of it like checking your car’s vital signs – oil pressure, temperature, etc. – to ensure smooth operation. Effective monitoring involves several key areas:

- Query Performance: Tools like SQL Server Profiler or Extended Events (SQL Server), EXPLAIN PLAN (Oracle), EXPLAIN ANALYZE and the pg_stat_statements extension (PostgreSQL), and the slow query log (MySQL) help identify slow-running queries that need optimization.

- Resource Utilization: Monitoring CPU, memory, I/O, and network usage reveals bottlenecks. Database management systems (DBMS) usually provide built-in monitoring tools or interfaces for this.

- Blocking and Deadlocks: These occur when multiple transactions interfere with each other. Monitoring tools can pinpoint these situations for resolution.

- Transaction Performance: Tracking transaction commit times and error rates provides insights into overall database health.

- Schema Integrity: Regular checks for data integrity ensure data accuracy and consistency.

By using a combination of built-in monitoring tools and third-party performance monitoring software, we can identify performance bottlenecks, optimize queries, and ensure the database runs smoothly. Regular monitoring and proactive adjustments are key to preventing performance issues before they impact users.

Q 24. Explain your experience with different database systems (e.g., MySQL, PostgreSQL, SQL Server, Oracle).

I have extensive experience with several database systems, each with its own strengths and nuances. It’s like knowing different types of cars – each has its pros and cons for different situations.

- MySQL: A robust and versatile open-source relational database system, widely used for web applications of all sizes. I’ve used it extensively for projects requiring scalability and cost-effectiveness.

- PostgreSQL: Another powerful open-source relational database, known for its advanced features like support for JSON, PostGIS (geospatial extensions), and robust data types. I’ve found it particularly useful for applications requiring complex data modeling and advanced features.

- SQL Server: A commercial relational database management system from Microsoft, known for its enterprise-grade features, excellent integration with other Microsoft products, and robust security features. I’ve used it in large-scale enterprise applications demanding high performance and reliability.

- Oracle: A leading commercial relational database, known for its scalability, performance, and comprehensive features for managing large and complex datasets. I’ve worked on projects utilizing Oracle’s advanced features such as RAC (Real Application Clusters) for high availability.

My experience includes designing schemas, writing optimized queries, tuning performance, and managing backups and recovery for these systems. The specific tools and techniques used vary depending on the system, but the core principles of database design and management remain consistent.

Q 25. How do you use recursive CTEs?

Recursive Common Table Expressions (CTEs) are a powerful tool for handling hierarchical data. Think of exploring a family tree: you start at the top and recursively traverse down to find all descendants.

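A recursive CTE is a CTE that references itself. It has two parts joined by UNION ALL: an anchor member that produces the starting rows, and a recursive member that joins back to the CTE. The recursive member is evaluated repeatedly against the rows produced by the previous iteration. It stops when an iteration returns no new rows. Here's a simple example that walks a hierarchy of departments: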

```sql
WITH RECURSIVE DepartmentHierarchy AS (
    -- Anchor member: start with top-level departments
    SELECT department_id, department_name, parent_department_id
    FROM Departments
    WHERE parent_department_id IS NULL
    UNION ALL
    -- Recursive member: add the children of rows found in the previous iteration
    SELECT d.department_id, d.department_name, d.parent_department_id
    FROM Departments d
    INNER JOIN DepartmentHierarchy dh ON d.parent_department_id = dh.department_id
)
SELECT * FROM DepartmentHierarchy;
```

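This query starts with the top-level departments (WHERE parent_department_id IS NULL). It then repeatedly joins Departments to the rows found in the previous iteration, descending one level at a time until no more child departments are found (the leaf nodes). UNION ALL accumulates the rows from every iteration. The syntax shown works in PostgreSQL, MySQL 8.0+, and SQLite. SQL Server omits the RECURSIVE keyword and writes plain WITH. Oracle also uses plain WITH, but it requires a column list after the CTE name. Recursive CTEs are invaluable for navigating hierarchical structures such as organizational charts, bills of materials, and many other real-world scenarios.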
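To make the iterations visible, here's a minimal sketch that runs the same query shape against an in-memory SQLite database through Python's sqlite3 module. The sample rows and the extra depth column are assumptions added for illustration. Each recursive step increments depth by one, so the column records how many levels below a top-level department each row sits.

```python
import sqlite3

# In-memory SQLite database; the Departments rows are hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Departments (
    department_id        INTEGER PRIMARY KEY,
    department_name      TEXT NOT NULL,
    parent_department_id INTEGER REFERENCES Departments(department_id)
);
INSERT INTO Departments VALUES
    (1, 'Head Office', NULL),
    (2, 'Engineering', 1),
    (3, 'Sales',       1),
    (4, 'Backend',     2),
    (5, 'Frontend',    2);
""")

query = """
WITH RECURSIVE DepartmentHierarchy AS (
    -- Anchor member: top-level departments at depth 0
    SELECT department_id, department_name, parent_department_id, 0 AS depth
    FROM Departments
    WHERE parent_department_id IS NULL
    UNION ALL
    -- Recursive member: children of the rows found in the previous iteration
    SELECT d.department_id, d.department_name, d.parent_department_id, dh.depth + 1
    FROM Departments d
    INNER JOIN DepartmentHierarchy dh ON d.parent_department_id = dh.department_id
)
SELECT department_name, depth
FROM DepartmentHierarchy
ORDER BY depth, department_id;
"""

rows = conn.execute(query).fetchall()
for name, depth in rows:
    print("  " * depth + name)  # indent each department by its level
```

If the data could contain a cycle (a department that is indirectly its own parent), this query would never terminate. A depth limit in the recursive member's WHERE clause is a common safeguard.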

Q 26. Explain the concept of partitioning and its benefits.

Database partitioning is a technique of dividing a large database table into smaller, more manageable pieces called partitions. Think of it like dividing a large library into smaller sections based on genre – fiction, non-fiction, etc. This improves performance and manageability.

- Improved Query Performance: Queries that filter on the partition key only need to scan the relevant partitions (partition pruning). This can significantly reduce processing time on large tables.

- Enhanced Data Management: Partitioning makes maintenance easier. For example, old data can be archived or removed by detaching or dropping a whole partition instead of running a large DELETE.

- Scalability: Adding new partitions is often simpler than expanding the entire table.

- Parallel Processing: Partitions can be processed in parallel, further improving query speed.

The choice of partitioning strategy (range, list, hash) depends on the data distribution and query patterns. Range partitioning suits time-series data. List partitioning suits discrete values such as region codes. Hash partitioning spreads rows roughly evenly across a fixed number of partitions when there is no natural range. Partitioning can dramatically improve performance and manageability for very large databases, making it a powerful tool for handling massive datasets.
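Partition pruning usually accounts for most of the speedup. The toy Python model below is not how any particular DBMS implements partitioning. It only illustrates the idea, assuming hypothetical quarterly range partitions on an order date. A query checks each partition's bounds first and scans only the partitions whose range can overlap the requested interval.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class RangePartition:
    """One partition holding rows whose key falls in [lower, upper)."""
    lower: date
    upper: date
    rows: list = field(default_factory=list)


class RangePartitionedTable:
    """Toy model of a table range-partitioned on an order date."""

    def __init__(self, bounds):
        # bounds must be sorted; partition i covers [bounds[i], bounds[i + 1])
        self.partitions = [RangePartition(lo, hi) for lo, hi in zip(bounds, bounds[1:])]

    def insert(self, order_date, payload):
        # Route the row to the single partition whose range contains its key.
        for p in self.partitions:
            if p.lower <= order_date < p.upper:
                p.rows.append((order_date, payload))
                return
        raise ValueError(f"no partition covers {order_date}")

    def query(self, start, end):
        """Return rows with start <= date < end and the number of partitions scanned."""
        # Pruning: skip any partition whose range cannot overlap [start, end).
        scanned = [p for p in self.partitions if p.lower < end and start < p.upper]
        rows = [r for p in scanned for r in p.rows if start <= r[0] < end]
        return rows, len(scanned)


quarters = [date(2023, 1, 1), date(2023, 4, 1), date(2023, 7, 1),
            date(2023, 10, 1), date(2024, 1, 1)]
orders = RangePartitionedTable(quarters)
orders.insert(date(2023, 2, 14), "order A")
orders.insert(date(2023, 5, 3), "order B")
orders.insert(date(2023, 11, 20), "order C")

may_rows, may_scanned = orders.query(date(2023, 5, 1), date(2023, 6, 1))     # touches Q2 only
wide_rows, wide_scanned = orders.query(date(2023, 2, 1), date(2023, 6, 1))   # touches Q1 and Q2
print(may_rows, may_scanned)
print(wide_rows, wide_scanned)
```

A real DBMS applies the same overlap test to partition bounds at planning or execution time. A one-month query on a table with years of data therefore reads only a small fraction of it.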

Q 27. Describe your experience with database replication and high availability.

Database replication and high availability are essential for ensuring data redundancy and minimizing downtime. It’s like having multiple copies of important data stored in different locations. This ensures continued service even if one server fails.

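- Replication: This involves copying data from a primary database to one or more secondary databases (replicas), which provides data redundancy. Replication can be synchronous or asynchronous. In synchronous replication, the primary waits for a replica to confirm each change before the commit completes. In asynchronous replication, the primary commits immediately and replicas catch up afterwards. Synchronous replication avoids losing committed data on failover, at the cost of higher write latency. Asynchronous replication is faster but can lose the most recent transactions if the primary fails.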

- High Availability (HA): This keeps the database available despite hardware failures or other disruptions, commonly through clustering and failover mechanisms. If the primary database fails, a replica is promoted to take over. Depending on the setup, the promotion happens automatically or is performed by an operator.
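The synchronous/asynchronous trade-off is easiest to see at failover time. The toy Python model below is a sketch and not any DBMS's replication protocol. Its classes and method names are hypothetical. It has one primary and one replica and simulates a crash in which writes that were acknowledged but not yet shipped are lost.

```python
from collections import deque


class Replica:
    """Holds a copy of the data and applies writes shipped from the primary."""

    def __init__(self):
        self.data = {}

    def apply(self, key, value):
        self.data[key] = value


class Primary:
    """Toy primary that replicates each write to a single replica."""

    def __init__(self, replica, synchronous):
        self.data = {}
        self.replica = replica
        self.synchronous = synchronous
        self.pending = deque()  # writes acknowledged but not yet shipped (async mode)

    def write(self, key, value):
        self.data[key] = value
        if self.synchronous:
            # Synchronous: the write reaches the replica before it is acknowledged.
            self.replica.apply(key, value)
        else:
            # Asynchronous: acknowledge now, ship later.
            self.pending.append((key, value))

    def ship_pending(self):
        """Deliver queued writes to the replica (e.g. a background replication cycle)."""
        while self.pending:
            key, value = self.pending.popleft()
            self.replica.apply(key, value)


def fail_over(primary):
    """Simulate a primary crash: unshipped writes are lost; the replica's data survives."""
    primary.pending.clear()
    return dict(primary.replica.data)


sync_primary = Primary(Replica(), synchronous=True)
sync_primary.write("order:1", "paid")
sync_primary.write("order:2", "paid")
sync_state = fail_over(sync_primary)

async_primary = Primary(Replica(), synchronous=False)
async_primary.write("order:1", "paid")
async_primary.ship_pending()             # the first write reaches the replica
async_primary.write("order:2", "paid")   # the crash happens before this one is shipped
async_state = fail_over(async_primary)

print("after sync failover: ", sync_state)
print("after async failover:", async_state)
```

In the synchronous run both acknowledged writes survive. In the asynchronous run the second one is lost, which is the replication-lag risk described above.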

My experience includes implementing both synchronous and asynchronous replication using various tools and techniques depending on the specific DBMS. I’ve also worked on setting up high-availability clusters, ensuring minimal downtime during failures. Understanding replication and HA techniques is critical for building robust and resilient database systems.

Q 28. How would you approach designing a database schema for a specific complex scenario (e.g., e-commerce platform)?

Designing a database schema for a complex scenario like an e-commerce platform requires careful planning and consideration. It’s like designing the blueprint for a complex building – you need to think about every detail to ensure its functionality and scalability.

A typical e-commerce schema would include tables for:

- Products: Product information (ID, name, description, price, category, etc.).

- Categories: Product categories (ID, name, description, parent category, etc.).

- Customers: Customer details (ID, name, address, email, etc.).

- Orders: Order information (ID, customer ID, order date, total amount, etc.).

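- Order Items: Details of each item in an order (order ID, product ID, quantity, unit price at the time of purchase, etc.). Storing the price here preserves the order's history even if the product's price changes later.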

- Inventory: Information on product stock (product ID, quantity, warehouse location, etc.).

- Payments: Payment information (ID, order ID, payment method, amount, etc.).

- Reviews: Customer reviews for products (ID, product ID, customer ID, rating, review text, etc.).

Relationships between these tables would be enforced with foreign keys to maintain referential integrity. Indexes on frequently queried columns, such as foreign keys, would be crucial for query performance. Normalization would reduce data redundancy and improve consistency, and the design would also need to scale with growing data volumes and traffic. Finally, the schema should be reviewed and refined iteratively as feedback comes in and the platform's business requirements evolve.
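As a starting point, here's a minimal sketch of four of the core tables, run through Python's sqlite3 module. It is an illustration, not a complete e-commerce schema. The column names, the choice to store money as integer cents (to avoid floating-point rounding), and the sample rows are assumptions. The sketch shows foreign keys, CHECK constraints, an index on a foreign key, and a query that computes an order total from its line items.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when this is enabled

conn.executescript("""
CREATE TABLE Customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    email       TEXT NOT NULL UNIQUE
);
CREATE TABLE Products (
    product_id  INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    price_cents INTEGER NOT NULL CHECK (price_cents >= 0)
);
CREATE TABLE Orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES Customers(customer_id),
    order_date  TEXT NOT NULL
);
CREATE TABLE OrderItems (
    order_id         INTEGER NOT NULL REFERENCES Orders(order_id),
    product_id       INTEGER NOT NULL REFERENCES Products(product_id),
    quantity         INTEGER NOT NULL CHECK (quantity > 0),
    unit_price_cents INTEGER NOT NULL,  -- price at the time of purchase
    PRIMARY KEY (order_id, product_id)
);
-- Index the foreign key used to look up a customer's orders
CREATE INDEX idx_orders_customer ON Orders(customer_id);

INSERT INTO Customers VALUES (1, 'Ada', 'ada@example.com');
INSERT INTO Products VALUES (10, 'Keyboard', 4999), (11, 'Mouse', 1999);
INSERT INTO Orders VALUES (100, 1, '2024-03-01');
INSERT INTO OrderItems VALUES (100, 10, 1, 4999), (100, 11, 2, 1999);
""")

# Order total = sum of quantity * unit price over the order's line items.
total = conn.execute(
    "SELECT SUM(quantity * unit_price_cents) FROM OrderItems WHERE order_id = ?",
    (100,),
).fetchone()[0]
print("order 100 total (cents):", total)

# The foreign key rejects an order for a customer that does not exist.
fk_error = None
try:
    conn.execute("INSERT INTO Orders VALUES (101, 999, '2024-03-02')")
except sqlite3.IntegrityError as exc:
    fk_error = str(exc)
print(fk_error)
```

Categories, Inventory, Payments, and Reviews would follow the same pattern. Each gets its own primary key and references Products, Orders, or Customers through foreign keys.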

Key Topics to Learn for Advanced Proficiency in SQL Interview

- Window Functions: Understand concepts like partitioning, ordering, and the use of functions like RANK(), ROW_NUMBER(), LAG(), and LEAD(). Practical application: Analyzing sales trends over time, identifying top performers within different regions.

- Common Table Expressions (CTEs): Master the use of CTEs for simplifying complex queries and improving readability. Practical application: Breaking down a large query into smaller, manageable logical units for better understanding and maintainability.

- Advanced JOINs: Go beyond basic JOINs and explore FULL OUTER JOINs, LEFT/RIGHT JOINs with additional conditions (including how placing a filter in the ON clause rather than the WHERE clause changes the result), and their implications for data retrieval. Practical application: Combining data from multiple tables with complex relationships to answer business questions.

- Indexing and Query Optimization: Learn how to analyze query execution plans, identify performance bottlenecks, and create efficient indexes to improve query speed. Practical application: Tuning slow-running queries to achieve optimal performance in production environments.

- Stored Procedures and Functions: Understand the creation, use, and benefits of stored procedures and functions for code reusability and data integrity. Practical application: Encapsulating complex business logic into reusable units for better database management.

- Transactions and Concurrency Control: Learn about ACID properties, isolation levels, and techniques for handling concurrent access to the database. Practical application: Ensuring data consistency and integrity in multi-user environments.

- Data Modeling and Normalization: Understand the normal forms (such as 1NF, 2NF, 3NF, and BCNF) and how to apply them when designing efficient, scalable databases. Practical application: Building robust database schemas that minimize data redundancy and improve data integrity.

- Database Security: Explore concepts of user roles, permissions, and security best practices to protect sensitive data. Practical application: Implementing secure access controls and preventing unauthorized data access.
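For the window-function topic, here's a small practice sketch using Python's sqlite3 module. SQLite has supported window functions since version 3.25. The Sales table and its rows are hypothetical. The first query uses a CTE and RANK() to rank reps within each region by total sales. The second uses LAG() to compute each rep's month-over-month change.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Sales (
    region TEXT NOT NULL,
    rep    TEXT NOT NULL,
    month  TEXT NOT NULL,      -- 'YYYY-MM'
    amount INTEGER NOT NULL
);
INSERT INTO Sales VALUES
    ('East', 'Ana',  '2024-01', 500),
    ('East', 'Ana',  '2024-02', 700),
    ('East', 'Ben',  '2024-01', 650),
    ('West', 'Cruz', '2024-01', 400),
    ('West', 'Cruz', '2024-02', 300);
""")

# Top performers per region: aggregate in a CTE, then rank within each region.
ranking = conn.execute("""
WITH RepTotals AS (
    SELECT region, rep, SUM(amount) AS total
    FROM Sales
    GROUP BY region, rep
)
SELECT region, rep, total,
       RANK() OVER (PARTITION BY region ORDER BY total DESC) AS region_rank
FROM RepTotals
ORDER BY region, region_rank;
""").fetchall()

# Sales trend: difference from the same rep's previous month (NULL for the first month).
changes = conn.execute("""
SELECT rep, month, amount,
       amount - LAG(amount) OVER (PARTITION BY rep ORDER BY month) AS change
FROM Sales
ORDER BY rep, month;
""").fetchall()

for row in ranking:
    print(row)
for row in changes:
    print(row)
```

Swapping RANK() for ROW_NUMBER() changes only how ties are numbered, and LEAD() looks at the next row instead of the previous one. Both are good follow-up exercises.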

Next Steps

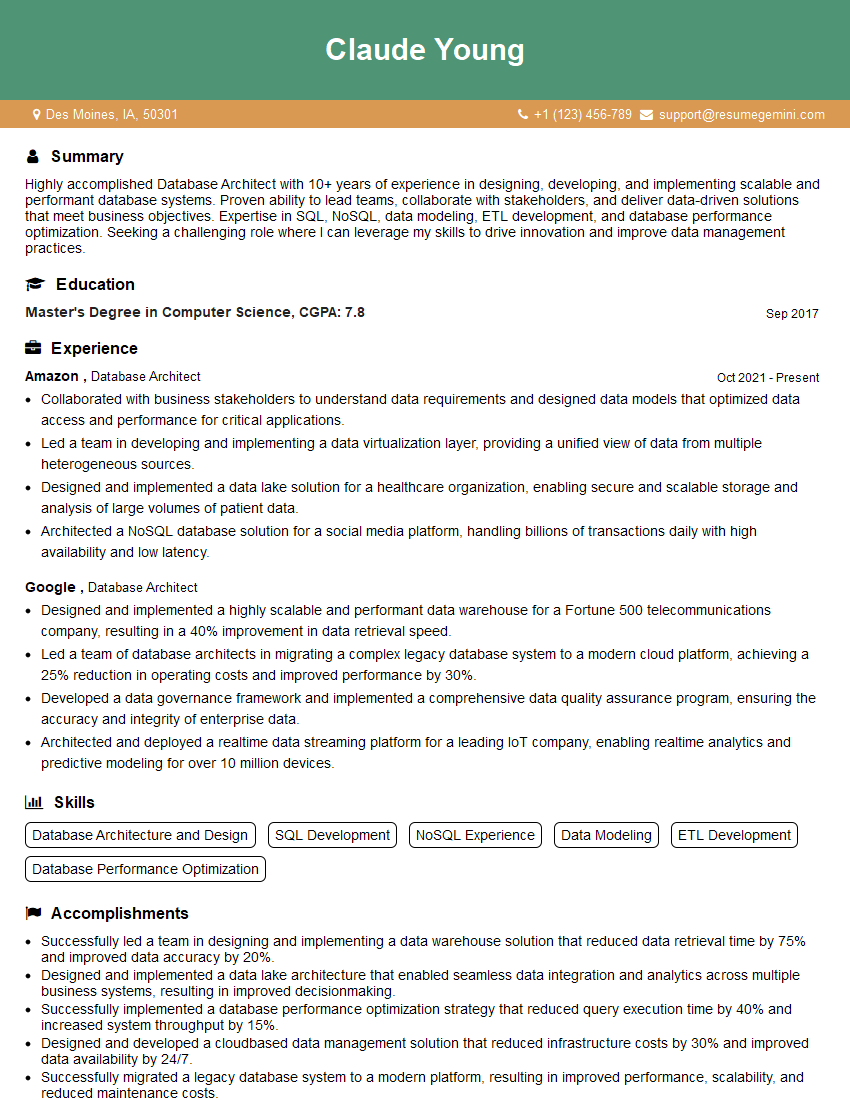

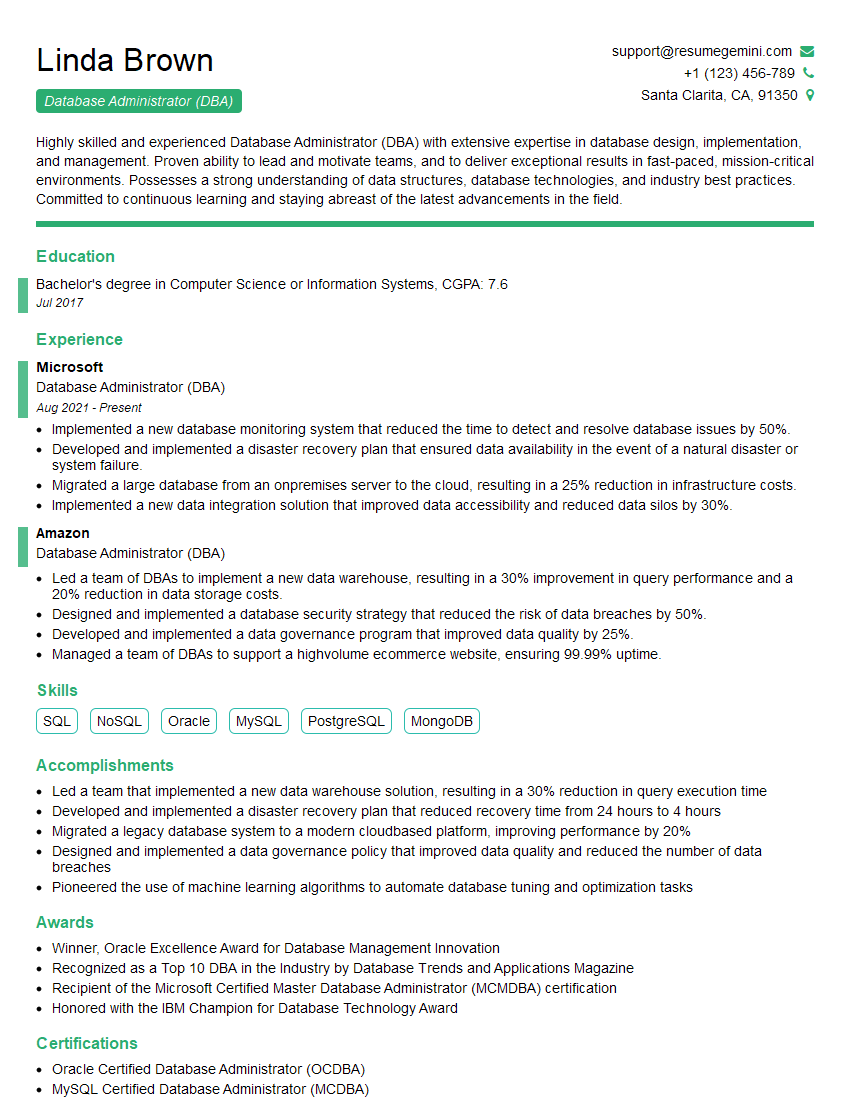

Mastering Advanced Proficiency in SQL significantly boosts your career prospects, opening doors to high-demand roles in data analysis, database administration, and software development. An ATS-friendly resume is crucial for getting your application noticed by recruiters. To enhance your job search, we strongly encourage you to use ResumeGemini, a trusted resource for crafting professional and effective resumes. ResumeGemini provides examples of resumes tailored to Advanced Proficiency in SQL, helping you showcase your skills and experience effectively.
