Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Analytical and Data Interpretation interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Analytical and Data Interpretation Interview

Q 1. Explain the difference between correlation and causation.

Correlation and causation are two distinct concepts in statistics. Correlation refers to a statistical relationship between two or more variables; it indicates how strongly they are related. Causation, on the other hand, implies a direct cause-and-effect relationship between variables – one variable directly influences the other.

Think of it this way: correlation is like noticing that two things often happen together, while causation is like understanding that one thing *makes* the other thing happen. For instance, ice cream sales and crime rates might be positively correlated (both increase in summer), but this doesn’t mean that increased ice cream sales *cause* an increase in crime. The underlying factor – the hot weather – is the cause of both. It’s crucial to avoid assuming causation from correlation alone. Rigorous methods like randomized controlled trials are often necessary to establish causality.

In a professional context, mistaking correlation for causation can lead to flawed conclusions and ineffective strategies. For example, a company might observe a correlation between increased ad spending and increased sales, but this correlation might be due to seasonal factors, and increasing ad spend might not always lead to a direct increase in sales. A proper analysis would involve isolating and controlling for confounding factors.
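To make the confounding idea concrete, here is a minimal simulation sketch. All numbers are made up for illustration: a hypothetical temperature series drives both ice cream sales and crime, and neither series affects the other. The `pearson` and `residuals` helpers are my own small functions, not from any library.

```python
import random
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def residuals(ys, xs):
    """What is left of ys after removing a least-squares linear fit on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return [y - (my + slope * (x - mx)) for x, y in zip(xs, ys)]

rng = random.Random(0)
# Hypothetical data: temperature is the common cause of both series.
temperature = [rng.uniform(0, 35) for _ in range(500)]
ice_cream = [2.0 * t + rng.gauss(0, 5) for t in temperature]
crime = [1.5 * t + rng.gauss(0, 5) for t in temperature]

raw_r = pearson(ice_cream, crime)
# Partial correlation: correlate the two series after removing temperature from each.
partial_r = pearson(residuals(ice_cream, temperature), residuals(crime, temperature))
print(f"raw r = {raw_r:.2f}, r after controlling for temperature = {partial_r:.2f}")
```

The raw correlation comes out strong, while the correlation after controlling for temperature sits near zero, which is the pattern you would expect when a third variable explains the association.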

Q 2. How would you handle missing data in a dataset?

Handling missing data is a crucial step in data analysis. Ignoring missing values can lead to biased results. The best approach depends on the nature of the data, the amount of missing data, and the reason for its absence. Several techniques exist:

- Deletion: This involves removing rows or columns with missing values. Listwise deletion removes entire rows with any missing data; pairwise deletion uses available data for each analysis. This method is simple but can lead to significant information loss if many values are missing.

- Imputation: This involves replacing missing values with estimated values. Common methods include mean/median/mode imputation (replacing with the average/middle/most frequent value), regression imputation (predicting values based on other variables), and k-Nearest Neighbors imputation (using the values from similar data points). Imputation preserves more data, but it can introduce bias if not done carefully.

- Multiple Imputation: This creates multiple plausible imputed datasets and analyzes each separately, combining the results to provide more robust estimates. This addresses the uncertainty inherent in single imputation.

The choice of method is crucial. For example, mean imputation is simple, but it shrinks the variable’s variance and is distorted by skew or outliers, in which case the median is usually a safer fill value. Regression imputation is more sophisticated but requires careful consideration of the relationships between variables. In my experience, I often start with exploratory data analysis to assess the pattern of missing data and then decide on the most suitable imputation method based on its characteristics.
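As a small illustration of deletion versus simple imputation, here is a dependency-free sketch. The `ages` column and its values are hypothetical, with `None` standing in for a missing entry. In practice a library such as Pandas would usually do this work.

```python
from statistics import mean, median

# Hypothetical column with gaps; None marks a missing value.
ages = [23, 31, None, 45, 29, None, 120, 35]

# Deletion: keep only the observed entries.
observed = [a for a in ages if a is not None]

# Mean vs. median imputation: fill every gap with one summary value.
mean_fill = mean(observed)
median_fill = median(observed)
mean_imputed = [a if a is not None else mean_fill for a in ages]
median_imputed = [a if a is not None else median_fill for a in ages]

missing_share = ages.count(None) / len(ages)
print(f"missing share: {missing_share:.0%}")
print(f"mean fill: {mean_fill:.1f}, median fill: {median_fill}")
```

The single extreme value (120) pulls the mean fill well above the median fill, which is why checking the distribution before choosing a fill value matters.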

Q 3. Describe your experience with different data visualization techniques.

I’m proficient in various data visualization techniques, tailoring my choices to the specific data and the insights I aim to convey. My experience includes:

- Histograms and Density Plots: For visualizing the distribution of a single continuous variable. I use these frequently to understand the central tendency, spread, and skewness of data.

- Scatter Plots and Line Charts: For showing the relationship between two or more continuous variables. I use these to identify trends, correlations, and potential outliers.

- Bar Charts and Pie Charts: For visualizing categorical data or proportions. These are effective for presenting summaries of data distributions.

- Box Plots: For comparing the distribution of a continuous variable across different categories. They’re excellent for showcasing median, quartiles, and outliers.

- Heatmaps: For representing correlation matrices or other tabular data. They effectively highlight patterns in large datasets.

- Geographic Maps: For visualizing data with geographic location information. I’ve used these to analyze spatial trends and patterns.

I believe that effective visualization is key to making data understandable and actionable. I always consider the audience and the message I want to convey when selecting a visualization technique. For instance, a complex heatmap might be appropriate for an expert audience but could be overwhelming for a less technical audience. In such cases, simpler charts like bar charts or line graphs are more suitable.

Q 4. What are some common data cleaning techniques you’ve used?

Data cleaning is a critical step in any data analysis project. It involves identifying and addressing inconsistencies, inaccuracies, and errors in the data. My experience encompasses a range of techniques:

- Handling Missing Values: As discussed earlier, I employ various imputation or deletion techniques depending on the context.

- Outlier Detection and Treatment: I use various methods (e.g., box plots, Z-scores) to identify outliers. Depending on the cause and impact, I might remove them, transform the data (e.g., using logarithmic transformations), or investigate further to understand the reason for their presence.

- Data Transformation: I often transform data to meet the assumptions of statistical methods. This might involve standardizing data (using z-scores), log-transforming skewed data, or creating dummy variables for categorical data.

- Error Correction: This involves identifying and correcting errors like typos, inconsistent formats, or duplicate entries. This often requires manual review and validation.

- Data Deduplication: Removing duplicate entries is crucial to ensure data integrity and avoid bias in the analysis.

For example, in a project involving customer data, I had to address inconsistent spellings of customer names and inconsistent date formats. I implemented automated scripts to standardize these elements before further analysis.
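A standardization script of that kind might look roughly like the sketch below. The records, the list of accepted date formats, and the helper names are all assumptions for illustration. In particular, reading `15/01/2023` as day-first is a choice that would need confirming against the real source.

```python
from datetime import datetime

# Hypothetical raw records: inconsistent name casing/spacing and date formats.
raw = [
    ("  alice SMITH ", "2023-01-15"),
    ("Alice Smith", "15/01/2023"),
    ("Bob  Jones", "Jan 20, 2023"),
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")  # assumed input formats

def clean_name(name):
    """Collapse repeated whitespace and apply title case."""
    return " ".join(name.split()).title()

def clean_date(text):
    """Try each known format in turn and return an ISO 8601 date string."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {text!r}")

cleaned = []
seen = set()
for name, date in raw:
    record = (clean_name(name), clean_date(date))
    if record not in seen:  # drop exact duplicates that appear after cleaning
        seen.add(record)
        cleaned.append(record)

print(cleaned)
```

Raising on an unrecognized format, rather than guessing, keeps bad values visible so they can be reviewed manually.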

Q 5. Explain your understanding of A/B testing.

A/B testing, also known as split testing, is a method used to compare two versions of something (e.g., a webpage, an email, an advertisement) to determine which performs better. It involves randomly assigning users to one of the two versions (A or B) and measuring a key metric (e.g., click-through rate, conversion rate). This randomized assignment is crucial for ensuring that differences in outcomes are not due to other factors.

The process typically involves defining a hypothesis, selecting a metric to measure, assigning users randomly to versions A and B, collecting data, and then statistically analyzing the results to determine if there is a significant difference between the two versions. Statistical significance testing (e.g., t-tests, chi-square tests) is crucial in determining whether any observed differences are truly meaningful or just due to random chance. A well-designed A/B test carefully considers factors like sample size, duration, and the potential for confounding variables.

I’ve used A/B testing in several projects to optimize website design, marketing campaigns, and product features. For example, I helped a client test different calls to action on their website landing page. By randomly assigning visitors to either the original page or the revised page, we identified a significant improvement in conversion rates with the revised version.
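For a conversion-rate comparison like this, one common choice is a two-proportion z-test. The sketch below uses only the standard library. The visitor and conversion counts are hypothetical, not figures from the project described above.

```python
from math import sqrt, erf

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value

# Hypothetical counts: 200 of 5,000 visitors convert on A, 260 of 5,000 on B.
z, p_value = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

The normal approximation behind this test assumes reasonably large samples. With small counts, an exact test would be the safer choice.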

Q 6. How do you identify outliers in a dataset?

Identifying outliers is crucial because they can significantly influence the results of statistical analyses and may represent errors in data collection or genuine anomalies. Various methods can be used:

- Visual Inspection: Using scatter plots, box plots, and histograms allows for quick visual identification of data points that deviate significantly from the rest of the data.

- Z-scores: This method calculates the number of standard deviations each data point is from the mean. Data points whose absolute Z-score exceeds a chosen threshold (commonly 3) are considered outliers.

- IQR (Interquartile Range): This method calculates the difference between the 75th and 25th percentiles of the data. Data points falling outside a certain range (e.g., 1.5 times the IQR below the first quartile or above the third quartile) are identified as outliers.

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise): This clustering algorithm groups data points based on density, identifying outliers as points that do not belong to any cluster.

The choice of method depends on the nature of the data and the desired level of sensitivity. For example, Z-scores are sensitive to the presence of other outliers, while the IQR method is more robust. It’s often beneficial to use a combination of methods to gain a comprehensive understanding of outliers in the data.
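The Z-score and IQR rules can be sketched in a few lines of standard-library Python. The sample data is hypothetical, and the thresholds (3 standard deviations, 1.5 times the IQR) are the conventional defaults rather than fixed rules.

```python
from statistics import mean, pstdev, quantiles

def zscore_outliers(values, threshold=3.0):
    """Values whose absolute z-score exceeds the threshold."""
    mu, sigma = mean(values), pstdev(values)
    return [v for v in values if abs(v - mu) / sigma > threshold]

def iqr_outliers(values, k=1.5):
    """Values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical sample: twenty ordinary readings plus one extreme value.
data = [10, 12, 11, 13, 12, 11, 10, 12, 13, 11] * 2 + [95]
print(zscore_outliers(data))  # [95]
print(iqr_outliers(data))     # [95]
```

Note that the extreme value inflates the mean and standard deviation used by the Z-score rule itself, which is why that rule can miss outliers in small samples while the quartile-based rule is less affected.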

Q 7. What statistical methods are you familiar with?

My statistical methods expertise encompasses a broad range, including:

- Descriptive Statistics: Calculating measures of central tendency (mean, median, mode), dispersion (variance, standard deviation, range), and skewness. I use these to summarize and understand the key features of data distributions.

- Inferential Statistics: This involves using sample data to make inferences about the population. My experience includes t-tests, ANOVA (analysis of variance), chi-square tests, regression analysis (linear, multiple, logistic), and correlation analysis. I use these to test hypotheses and build predictive models.

- Time Series Analysis: Analyzing time-dependent data, including forecasting techniques like ARIMA (Autoregressive Integrated Moving Average) and exponential smoothing.

- Hypothesis Testing: Formulating and testing hypotheses using appropriate statistical tests to determine whether observed differences or relationships are statistically significant.

- Regression Modeling: Developing and evaluating regression models to predict outcomes based on predictor variables. I have experience with model selection techniques (e.g., stepwise regression) and model evaluation metrics (e.g., R-squared, adjusted R-squared, RMSE).

I’m also familiar with statistical software packages such as R and Python (with libraries like Pandas, NumPy, Scikit-learn, and Statsmodels) to execute these analyses efficiently and effectively.

Q 8. How would you approach analyzing a large dataset?

Analyzing a large dataset requires a systematic approach. It’s not just about loading it into a tool and hoping for insights; it’s about understanding the data, cleaning it, exploring it, and finally, drawing meaningful conclusions. My approach involves several key steps:

- Understanding the Business Problem: Before touching the data, I need to understand the questions we’re trying to answer. What are the goals of this analysis? This guides the entire process.

- Data Exploration and Profiling: This involves using descriptive statistics and visualizations to understand the dataset’s characteristics – data types, distribution, missing values, outliers. Tools like Pandas in Python are invaluable here. For example, I’d check for skewed distributions, identify potential errors, and understand the relationships between different variables.

- Data Cleaning and Preprocessing: This is often the most time-consuming step. It involves handling missing values (imputation or removal), dealing with outliers (transformation or removal), and potentially transforming variables (e.g., log transformation for skewed data).

- Feature Engineering: This is where I create new variables from existing ones to improve the model’s performance. For example, I might create interaction terms or derive new features from dates.

- Exploratory Data Analysis (EDA): This involves using visualization tools (e.g., Matplotlib, Seaborn in Python) and statistical methods to identify patterns, trends, and relationships within the data. This helps formulate hypotheses and refine the analysis.

- Model Building and Selection: Depending on the business problem, I’d choose appropriate analytical techniques – regression, classification, clustering, etc. I’d also consider model evaluation metrics relevant to the problem (e.g., accuracy, precision, recall, AUC).

- Model Deployment and Monitoring: Once the model is built, it might need to be deployed and monitored over time to ensure its performance remains consistent. This often involves creating dashboards and alerts.

For instance, if analyzing customer churn data, I’d focus on identifying factors contributing to churn, building a predictive model, and potentially recommending actions to reduce churn.

Q 9. Describe a time you had to interpret complex data to solve a problem.

In a previous role, we were experiencing a significant drop in website conversions. The marketing team had several hypotheses, but no clear data-driven evidence. The data was quite complex, consisting of website traffic data, user behavior data (clicks, scroll depth, time spent), A/B testing results, and marketing campaign data.

My approach was to:

- Consolidate the data: I combined data from various sources into a single dataset, ensuring data consistency and addressing inconsistencies.

- Segment the data: I segmented users based on different criteria (e.g., traffic source, device type, geographic location) to identify potential patterns. This revealed a significant drop in conversions from mobile users.

- Analyze user behavior: I compared user behavior patterns between high-converting and low-converting segments. This highlighted a usability issue on the mobile version of the website.

- Visualize the findings: I created dashboards and reports illustrating the key findings, clearly showing the correlation between mobile usability and conversion rates.

The visualization clearly showed the problem. By communicating these findings, the development team prioritized fixing the mobile usability issues, resulting in a significant increase in conversions from mobile users. This demonstrated the power of data interpretation to solve real-world business problems.

Q 10. What are your preferred tools for data analysis?

My preferred tools for data analysis depend on the task and the size of the data, but generally include:

- Programming Languages: Python (with libraries like Pandas, NumPy, Scikit-learn, Matplotlib, Seaborn) and R are my go-to languages for data manipulation, analysis, and modeling. Python’s versatility makes it ideal for diverse tasks.

- Databases: SQL is essential for querying and managing relational databases. I’m proficient in writing complex queries to extract and transform data.

- Big Data Technologies: For very large datasets, I’m experienced with tools like Spark and Hadoop.

- Data Visualization Tools: Tableau and Power BI are excellent for creating interactive and shareable dashboards.

- Cloud Computing Platforms: AWS, Google Cloud Platform, and Azure offer scalable resources for data storage, processing, and analysis.

The choice of specific tools depends on the context. For smaller datasets, Python and SQL might suffice, whereas for large, complex datasets, tools like Spark are necessary.

Q 11. How do you communicate data findings to a non-technical audience?

Communicating data findings to a non-technical audience requires translating complex technical concepts into clear, concise, and engaging narratives. My approach involves:

- Focus on the Story: Frame the data analysis as a story with a clear beginning (the problem), middle (the findings), and end (the recommendations).

- Visualizations: Use charts, graphs, and other visuals to represent complex data in an easily understandable format. Avoid overwhelming the audience with too much detail; focus on the key takeaways.

- Plain Language: Avoid jargon and technical terms; use simple, everyday language that everyone can understand. Analogies and metaphors can be powerful tools.

- Focus on the Impact: Emphasize the significance of the findings and their implications for the business. What actions can be taken based on the analysis?

- Interactive Presentations: Interactive dashboards and presentations allow non-technical audiences to explore the data themselves, fostering better understanding.

For example, instead of saying “The p-value is less than 0.05, indicating statistical significance,” I might say “The link we see between X and Y is very unlikely to be a fluke, so investing in X looks like a reasonable way to improve Y.”

Q 12. Explain your experience with SQL or other database querying languages.

I have extensive experience with SQL, using it daily for data extraction, transformation, and loading (ETL) processes. I’m proficient in writing complex queries involving joins, subqueries, aggregations, and window functions. I can optimize queries for performance and handle large datasets efficiently.

For example, I’ve used SQL to:

- Extract data from multiple tables: Using joins to combine data from different tables based on common keys.

- Aggregate data: Calculating summary statistics (e.g., sums, averages, counts) using aggregate functions.

- Filter data: Selecting specific rows based on certain criteria using WHERE clauses.

- Sort data: Ordering data based on specific columns using ORDER BY clauses.

Here’s an example of a SQL query to calculate the average order value:

    SELECT AVG(order_total) AS average_order_value FROM orders;

Beyond SQL, I’m also familiar with NoSQL databases such as MongoDB for handling unstructured or semi-structured data.
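To try the average order value query without a database server, one option is Python’s built-in `sqlite3` module with an in-memory database. The table layout and the rows below are hypothetical. Only the `orders` table name and `order_total` column come from the query above.

```python
import sqlite3

# In-memory database with a hypothetical orders table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, order_total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer, order_total) VALUES (?, ?)",
    [("alice", 20.0), ("bob", 35.0), ("alice", 50.0), ("carol", 15.0)],
)

# The average order value query from the text.
(average_order_value,) = conn.execute(
    "SELECT AVG(order_total) AS average_order_value FROM orders"
).fetchone()

# Filtering, aggregating, and sorting combined in one statement.
per_customer = conn.execute(
    """
    SELECT customer, COUNT(*) AS orders, SUM(order_total) AS revenue
    FROM orders
    WHERE order_total >= 20
    GROUP BY customer
    ORDER BY revenue DESC
    """
).fetchall()

print(average_order_value)  # 30.0
print(per_customer)         # [('alice', 2, 70.0), ('bob', 1, 35.0)]
conn.close()
```

The second query shows how WHERE, GROUP BY, and ORDER BY fit together: rows are filtered first, then grouped, then sorted.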

Q 13. Describe your experience with data mining techniques.

Data mining techniques are crucial for uncovering hidden patterns and insights in large datasets. My experience includes applying various techniques, including:

- Association Rule Mining (Apriori Algorithm): Identifying relationships between items in transactional data (e.g., market basket analysis to understand which products are frequently purchased together).

- Classification: Building models to predict categorical outcomes (e.g., customer churn prediction, spam detection). I’ve used algorithms like decision trees, support vector machines (SVMs), and logistic regression.

- Clustering: Grouping similar data points together (e.g., customer segmentation, anomaly detection). K-means and hierarchical clustering are techniques I’ve used.

- Regression: Modeling the relationship between variables to predict continuous outcomes (e.g., sales forecasting, price prediction). Linear regression and other regression models are frequently used.

In a project involving customer segmentation, I used K-means clustering to group customers based on their purchasing behavior, demographics, and engagement metrics. This allowed the marketing team to tailor their campaigns to specific customer segments, leading to improved marketing efficiency.

Q 14. How do you validate the accuracy of your data analysis?

Validating the accuracy of data analysis is critical to ensure the reliability of conclusions and recommendations. My approach involves several steps:

- Data Validation: This involves checking the data for accuracy, completeness, and consistency. This includes examining data sources, performing data profiling, and identifying outliers or errors.

- Cross-Validation: Using techniques like k-fold cross-validation to evaluate the performance of machine learning models and prevent overfitting.

- Sensitivity Analysis: Assessing how sensitive the results are to changes in assumptions or input data. This helps understand the robustness of the analysis.

- Peer Review: Having other analysts review the analysis process and findings to identify potential biases or errors.

- Compare to External Data: When possible, comparing the findings to external data sources or benchmarks to validate the results.

- Documenting the Process: Maintaining detailed documentation of the data analysis process, including data sources, methods used, and assumptions made. This is crucial for reproducibility and transparency.

For instance, in a regression model, I would check the model’s R-squared, adjusted R-squared, and other diagnostic statistics to ensure a good fit and assess the accuracy of predictions. I would also perform residual analysis to identify potential violations of model assumptions.
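As a rough sketch of k-fold cross-validation for a regression model, the code below fits a one-variable least-squares line on each training split and reports the average held-out RMSE. The data is simulated, and the helper names are my own. With real projects, Scikit-learn’s cross-validation utilities would normally replace the hand-written split.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k near-equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def fit_line(xs, ys):
    """Least-squares slope and intercept for a single predictor."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx

def cross_validated_rmse(xs, ys, k=5):
    """Average RMSE on the held-out fold across k train/test splits."""
    scores = []
    for test_idx in k_fold_indices(len(xs), k):
        held_out = set(test_idx)
        train = [i for i in range(len(xs)) if i not in held_out]
        slope, intercept = fit_line([xs[i] for i in train], [ys[i] for i in train])
        sq_err = [(ys[i] - (slope * xs[i] + intercept)) ** 2 for i in test_idx]
        scores.append((sum(sq_err) / len(sq_err)) ** 0.5)
    return sum(scores) / k

# Simulated data: y = 3x + 2 plus Gaussian noise with standard deviation 1.
rng = random.Random(1)
xs = [rng.uniform(0, 10) for _ in range(100)]
ys = [3 * x + 2 + rng.gauss(0, 1) for x in xs]
print(f"5-fold RMSE: {cross_validated_rmse(xs, ys):.2f}")
```

Because every point is scored only by a model that never saw it, the held-out RMSE is a more honest estimate of predictive error than the in-sample fit.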

Q 15. What are some common challenges in data analysis, and how have you overcome them?

Data analysis, while powerful, presents several challenges. One common hurdle is data quality: incomplete, inconsistent, or inaccurate data can lead to flawed conclusions. I’ve overcome this by implementing rigorous data cleaning processes, involving checks for missing values, outlier detection using techniques like box plots and Z-scores, and employing data imputation methods like K-Nearest Neighbors or multiple imputation for missing data, depending on the nature and extent of the missingness. Another challenge is data bias; datasets can reflect existing societal biases, leading to unfair or discriminatory outcomes. To mitigate this, I focus on understanding the data’s origin and potential biases, employing techniques like stratified sampling and careful feature engineering to ensure fairness and representativeness. Finally, the curse of dimensionality, where having too many variables makes analysis computationally expensive and difficult to interpret, is a common problem. I handle this by using dimensionality reduction techniques like Principal Component Analysis (PCA) or feature selection methods to identify the most relevant variables.

For instance, in a project analyzing customer churn, I discovered significant inconsistencies in customer address data. By carefully examining the data and using geographical mapping tools, I identified a data entry error leading to an inaccurate churn rate calculation. Implementing a data validation step prevented similar errors in future analyses.

Q 16. How would you interpret a regression analysis output?

Interpreting a regression analysis output involves understanding several key components. The coefficients indicate the change in the dependent variable for a one-unit change in the independent variable, holding other variables constant. The p-values associated with each coefficient tell us the statistical significance of that variable; a low p-value (typically below 0.05) suggests a statistically significant relationship. The R-squared value represents the proportion of variance in the dependent variable explained by the independent variables; a higher R-squared indicates a better fit. Finally, the residuals (the differences between observed and predicted values) provide insights into the model’s accuracy and the presence of any patterns or outliers.

Imagine analyzing the relationship between advertising spend (independent variable) and sales (dependent variable). A regression output might show a positive coefficient for advertising spend with a low p-value, indicating that increased advertising spend is significantly associated with increased sales. The R-squared might be 0.7, suggesting that 70% of the variation in sales can be explained by advertising spend. Examining the residuals plot could reveal potential non-linearity or heteroscedasticity (unequal variance) in the data, requiring model adjustments.
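The quantities in a regression output can be reproduced by hand for the one-predictor case. The sketch below computes the slope, intercept, R-squared, and residuals with the ordinary least-squares formulas. The ad spend and sales figures are invented for illustration, and p-values are left out because they need a t-distribution that the standard library does not provide.

```python
def simple_linear_regression(xs, ys):
    """Return slope, intercept, R-squared, and residuals for y ~ x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    ss_res = sum(r ** 2 for r in residuals)   # unexplained variation
    ss_tot = sum((y - my) ** 2 for y in ys)   # total variation
    r_squared = 1 - ss_res / ss_tot
    return slope, intercept, r_squared, residuals

# Hypothetical monthly figures (in thousands): ad spend and sales.
ad_spend = [10, 12, 15, 18, 20, 22, 25, 30]
sales = [110, 118, 135, 140, 158, 160, 178, 195]

slope, intercept, r_squared, residuals = simple_linear_regression(ad_spend, sales)
print(f"slope = {slope:.2f} (change in sales per one-unit change in ad spend)")
print(f"intercept = {intercept:.2f}, R-squared = {r_squared:.3f}")
print("residuals:", [round(r, 1) for r in residuals])
```

Plotting the residuals against the predictor is the usual next step: a curve or a funnel shape in that plot would point to non-linearity or heteroscedasticity.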

Q 17. Explain the concept of hypothesis testing.

Hypothesis testing is a statistical method used to make inferences about a population based on a sample of data. It involves formulating a null hypothesis (H0), which represents the status quo or a claim we want to disprove, and an alternative hypothesis (H1), which is the opposite of the null hypothesis. We then collect data and use statistical tests to determine whether there’s enough evidence to reject the null hypothesis in favor of the alternative hypothesis. The process involves setting a significance level (alpha), usually 0.05, which represents the probability of rejecting the null hypothesis when it’s actually true (Type I error). If the p-value from the test is less than alpha, we reject the null hypothesis; otherwise, we fail to reject it.

For example, let’s say we want to test whether a new drug reduces blood pressure. The null hypothesis would be that the drug has no effect on blood pressure (H0: no effect), and the alternative hypothesis would be that the drug reduces blood pressure (H1: reduction). We’d conduct a t-test comparing the blood pressure of patients who received the drug to those who received a placebo. If the p-value is less than 0.05, we’d reject the null hypothesis and conclude that the drug significantly reduces blood pressure.
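The decision rule can be shown with a short sketch. Note that it swaps in a permutation test for the t-test described above, because the standard library has no t-distribution. The logic of comparing a p-value with alpha is the same. The blood pressure readings are hypothetical.

```python
import random

def permutation_test(treated, control, n_permutations=5000, seed=0):
    """One-sided permutation test: is the treated mean lower than the control mean?"""
    rng = random.Random(seed)
    observed = sum(control) / len(control) - sum(treated) / len(treated)
    pooled = list(treated) + list(control)
    n_treated = len(treated)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Under H0 the group labels are arbitrary, so reshuffle them.
        rng.shuffle(pooled)
        fake_treated, fake_control = pooled[:n_treated], pooled[n_treated:]
        diff = sum(fake_control) / len(fake_control) - sum(fake_treated) / len(fake_treated)
        if diff >= observed:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    return (at_least_as_extreme + 1) / (n_permutations + 1)

# Hypothetical systolic blood pressure readings (mmHg).
drug = [128, 131, 125, 122, 130, 127, 124, 129, 126, 123]
placebo = [138, 141, 135, 139, 144, 137, 140, 136, 142, 134]

alpha = 0.05
p_value = permutation_test(drug, placebo)
print(f"p = {p_value:.4f}; reject H0: {p_value < alpha}")
```

Rejecting H0 here only says the difference is unlikely under “no effect”. Whether the reduction is large enough to matter clinically is a separate question.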

Q 18. How would you handle skewed data?

Skewed data, where the distribution is not symmetrical, can affect the results of many statistical analyses. Several techniques exist to handle skewed data. Data transformation is a common approach; logarithmic, square root, or Box-Cox transformations can often normalize the distribution, making it more suitable for analysis. Alternatively, if the skewness is due to outliers, we could consider removing them after careful consideration of their potential impact or using robust statistical methods less sensitive to outliers. Using non-parametric methods, which make fewer assumptions about the data distribution, can also be beneficial when dealing with highly skewed data.

Consider income data, which is often right-skewed (long tail to the right). Taking the logarithm of the income values often makes the distribution more symmetrical and allows the use of methods that assume normality. If only a few extremely high incomes are causing the skewness, we could explore ways to handle those values; capping them at a reasonable level, or creating a separate category for very high incomes to avoid distorting the mean or standard deviation.
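To see the effect of these options, the sketch below measures skewness before and after a log transform and after capping. The income figures and the cap of 100 are hypothetical, and `skewness` is a plain implementation of the third standardized moment.

```python
from math import log

def skewness(values):
    """Population skewness: the third standardized moment."""
    n = len(values)
    mu = sum(values) / n
    m2 = sum((v - mu) ** 2 for v in values) / n
    m3 = sum((v - mu) ** 3 for v in values) / n
    return m3 / m2 ** 1.5

# Hypothetical right-skewed incomes (in thousands).
incomes = [22, 25, 28, 30, 32, 35, 38, 42, 48, 55, 70, 95, 180, 400]

log_incomes = [log(v) for v in incomes]   # requires strictly positive values
cap = 100                                 # assumed cap, chosen only for illustration
capped = [min(v, cap) for v in incomes]

print(f"raw skewness:    {skewness(incomes):.2f}")
print(f"log skewness:    {skewness(log_incomes):.2f}")
print(f"capped skewness: {skewness(capped):.2f}")
```

Both options reduce the skew, but they answer different questions: the log transform changes the scale for every value, while capping alters only the extreme ones.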

Q 19. Describe your experience with different types of data (e.g., categorical, numerical).

My experience encompasses a wide range of data types. I’m proficient in working with numerical data, including continuous variables (e.g., height, weight, temperature) and discrete variables (e.g., count of items, number of customers). I frequently utilize descriptive statistics (mean, median, standard deviation) and various statistical tests to analyze numerical data. I’m also comfortable handling categorical data, which includes nominal variables (e.g., color, gender) and ordinal variables (e.g., education level, customer satisfaction rating). For categorical data, I often employ techniques like frequency distributions, cross-tabulations, and chi-square tests. Furthermore, I have extensive experience with text data, applying natural language processing (NLP) techniques like sentiment analysis and topic modeling to extract meaningful insights. Finally, I have worked with temporal data (data with a time component) using time series analysis techniques for forecasting and trend identification. This experience allows me to adapt my analytical approach to the specific characteristics of each dataset.

Q 20. How do you ensure data quality and integrity?

Ensuring data quality and integrity is paramount. My approach involves a multi-step process. Firstly, data validation at the source helps prevent errors from entering the dataset. This involves checking for data type consistency, range constraints, and adherence to predefined rules. Secondly, data cleaning involves identifying and addressing issues like missing values, outliers, and inconsistencies. Techniques such as imputation and outlier treatment are employed as appropriate. Thirdly, data transformation might be needed to make the data suitable for analysis, potentially involving standardization or normalization. Finally, regular data audits help monitor data quality over time and identify potential problems early on. This may include comparing the current data to historical data or against external sources. Documentation plays a crucial role, clearly outlining data sources, cleaning processes, and any transformations applied.

For instance, in a recent project, I implemented automated checks to ensure consistency in date formats and validated customer IDs against a master database, preventing duplicate entries and improving data accuracy.

Q 21. What are some common metrics used to evaluate the performance of a model?

The choice of metrics for evaluating model performance depends on the specific type of model and the business objective. For classification models (predicting categories), common metrics include accuracy (overall correctness), precision (proportion of true positives among predicted positives), recall (proportion of true positives among actual positives), and the F1-score (harmonic mean of precision and recall). The AUC-ROC curve (Area Under the Receiver Operating Characteristic curve) provides a measure of the model’s ability to distinguish between classes. For regression models (predicting continuous values), common metrics include Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and R-squared. These metrics quantify the difference between predicted and actual values. Beyond these standard metrics, business-specific KPIs may also be relevant, such as customer lifetime value or conversion rate, depending on the context of the model.

For example, in a fraud detection model where blocking legitimate customers is costly, high precision may be prioritized to minimize false positives (flagging legitimate transactions as fraudulent), even if it means slightly lower recall (missing some actual fraudulent transactions). However, in a medical diagnosis model, high recall is prioritized to ensure all true cases are identified, even if it increases the number of false positives.
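The classification and regression metrics above are simple enough to compute by hand, which can help when explaining them. This is a minimal sketch with hypothetical labels and predictions. In a real project, Scikit-learn’s metrics module would typically be used instead.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

def rmse_and_mae(actual, predicted):
    """Root mean squared error and mean absolute error for a regression model."""
    errors = [a - p for a, p in zip(actual, predicted)]
    rmse = (sum(e ** 2 for e in errors) / len(errors)) ** 0.5
    mae = sum(abs(e) for e in errors) / len(errors)
    return rmse, mae

# Hypothetical labels: 1 = fraud, 0 = legitimate.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)  # accuracy 0.8; precision, recall, and F1 all 0.75

print(rmse_and_mae([3, 5, 7], [2, 5, 9]))
```

Here one missed fraud case lowers recall and one false alarm lowers precision, so the example shows both kinds of error at once.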

Q 22. How do you choose the appropriate statistical test for a given problem?

Choosing the right statistical test is crucial for drawing valid conclusions from your data. It depends entirely on several factors: the type of data you have (categorical, continuous, ordinal), the number of groups you’re comparing, the research question you’re asking (e.g., are you testing for differences, relationships, or making predictions?), and the assumptions of the test (like normality of data distribution).

Think of it like choosing the right tool for a job. You wouldn’t use a hammer to screw in a screw, right? Similarly, using the wrong statistical test can lead to inaccurate results and flawed interpretations.

- For comparing means between two groups: If your data is normally distributed and variances are equal, you’d use an independent samples t-test. If variances are unequal, you’d use a Welch’s t-test. If the data is not normally distributed, a Mann-Whitney U test (a non-parametric test) is more appropriate.

- For comparing means among more than two groups: A one-way ANOVA (Analysis of Variance) is used if data is normally distributed. A Kruskal-Wallis test is the non-parametric alternative for non-normally distributed data.

- For analyzing the relationship between two continuous variables: Pearson’s correlation is suitable for linearly related, normally distributed data. Spearman’s rank correlation is used when the relationship is monotonic but not necessarily linear, or when the data is not normally distributed.

- For analyzing the relationship between a categorical and a continuous variable: An independent samples t-test or ANOVA (depending on the number of categories) might be used.

In practice, I always start by carefully examining the data, checking for normality and other assumptions. I then select the appropriate test based on these characteristics and the research question. I also document my choices and rationale clearly, ensuring transparency and reproducibility of my analysis.
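The rules of thumb above can be written down as a small decision helper. This is only a sketch of the logic described in this answer, assuming independent groups and a continuous outcome; the function name `suggest_test` and its parameters are hypothetical, and real test selection also involves checking sample size, paired designs, and other assumptions.

```python
def suggest_test(n_groups, normal, equal_variance=True):
    """Suggest a test for comparing independent groups on a continuous outcome.

    n_groups       -- number of groups being compared (2 or more)
    normal         -- True if the data looks approximately normal
    equal_variance -- True if group variances look similar (two-group case)
    """
    if n_groups < 2:
        raise ValueError("Need at least two groups to compare.")

    if n_groups == 2:
        if not normal:
            return "Mann-Whitney U test"
        if equal_variance:
            return "independent samples t-test"
        return "Welch's t-test"

    # More than two groups
    return "one-way ANOVA" if normal else "Kruskal-Wallis test"


print(suggest_test(2, normal=True, equal_variance=False))  # Welch's t-test
print(suggest_test(4, normal=False))                       # Kruskal-Wallis test
```

Writing the decision down this way also doubles as documentation of the rationale, which helps with the transparency and reproducibility mentioned above.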

Q 23. Explain your understanding of different sampling methods.

Sampling methods are crucial for obtaining a representative subset of a larger population, allowing us to make inferences about the population without having to analyze every single data point. The choice of sampling method heavily influences the accuracy and generalizability of our findings.

- Probability Sampling: Every member of the population has a known, non-zero probability of being selected. This includes:

  - Simple Random Sampling: Each member has an equal chance of selection. Think of a lottery draw.

  - Stratified Sampling: The population is divided into strata (subgroups) and samples are randomly drawn from each stratum. Useful when you want representation from all subgroups.

  - Cluster Sampling: The population is divided into clusters, and a random sample of clusters is selected. All members within the selected clusters are included. Cost-effective for geographically dispersed populations.

  - Systematic Sampling: Selecting every kth member from a list after a random starting point. Simple but can be biased if the list has a pattern.

- Non-Probability Sampling: The probability of selection is unknown. This is often used when probability sampling is impractical or impossible, but it limits the generalizability of findings.

  - Convenience Sampling: Selecting readily available participants. Easy but highly prone to bias.

  - Quota Sampling: Similar to stratified sampling, but the selection within each stratum isn’t random.

  - Snowball Sampling: Participants refer others. Useful for hard-to-reach populations, but can be biased.

The choice of sampling method depends on factors like the research question, budget, time constraints, and access to the population. I always carefully consider potential biases and limitations of the chosen method and clearly document these limitations in my reports.
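Three of the probability sampling methods above can be sketched with Python’s standard `random` module. The population here is synthetic (100 hypothetical customers in two regions), and the helper names are illustrative rather than part of any library.

```python
import random
from collections import defaultdict


def simple_random_sample(population, n, rng):
    """Every member has an equal chance of being selected."""
    return rng.sample(population, n)


def systematic_sample(population, k, rng):
    """Pick a random start in the first k items, then take every kth item."""
    start = rng.randrange(k)
    return population[start::k]


def stratified_sample(population, key, fraction, rng):
    """Draw the same fraction at random from each stratum (at least one item)."""
    strata = defaultdict(list)
    for item in population:
        strata[key(item)].append(item)
    sample = []
    for members in strata.values():
        n = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, n))
    return sample


# Synthetic population: 80 customers in the north, 20 in the south.
population = [{"id": i, "region": "north" if i < 80 else "south"}
              for i in range(100)]
rng = random.Random(42)  # fixed seed so the sketch is reproducible

srs = simple_random_sample(population, 10, rng)
sys_sample = systematic_sample(population, 10, rng)
strat = stratified_sample(population, lambda row: row["region"], 0.1, rng)

print(len(srs), len(sys_sample), len(strat))
```

With a 10% fraction, the stratified sample always contains 8 northern and 2 southern customers, whereas a simple random sample of 10 could miss the south entirely.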

Q 24. Describe your experience with time series analysis.

Time series analysis involves analyzing data points collected over time to understand patterns, trends, and seasonality. It’s used extensively in various fields like finance (stock price prediction), economics (GDP forecasting), and meteorology (weather forecasting).

My experience involves using various techniques, including:

- Decomposition: Separating a time series into its components (trend, seasonality, cyclical, and residual) to better understand the underlying patterns. This often involves moving averages or STL (Seasonal and Trend decomposition using Loess).

- ARIMA modeling: Autoregressive Integrated Moving Average models are powerful tools for forecasting time series data by modeling the relationship between past and present values. Model selection involves determining the appropriate order (p, d, q) parameters.

- Exponential Smoothing: A family of forecasting methods that assigns exponentially decreasing weights to older observations. Simple exponential smoothing, Holt’s method (for trend), and Holt-Winters method (for trend and seasonality) are commonly used.

- Prophet (from Meta): A robust and widely used forecasting algorithm specifically designed for business time series data that handles seasonality and trend changes effectively.

For example, in a previous role, I used ARIMA modeling to forecast customer demand for a retail company, which helped optimize inventory levels and reduce storage costs. The key is to choose the appropriate model based on the characteristics of the time series data and the forecasting horizon. Thorough model diagnostics, including residual analysis, are essential to ensure the model’s accuracy and reliability.
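As an illustration of the simplest technique in the list, here is a minimal sketch of simple exponential smoothing. The demand figures are invented, the smoothing factor `alpha` is chosen arbitrarily, and initializing the level with the first observation is one common convention among several.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the smoothed level after each observation.

    Each new level is alpha * latest value + (1 - alpha) * previous level,
    so older observations receive exponentially decreasing weight.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1].")
    level = series[0]  # assumption: start from the first observation
    levels = [level]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
        levels.append(level)
    return levels


demand = [100, 110, 105, 120]  # illustrative monthly demand
levels = simple_exponential_smoothing(demand, alpha=0.5)
forecast = levels[-1]  # one-step-ahead forecast is the last level
print(levels, forecast)
```

A higher `alpha` reacts faster to recent changes, and a lower `alpha` gives a smoother series. This basic form has no trend or seasonal term, which is what Holt’s and Holt-Winters methods add.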

Q 25. How do you identify and address biases in data?

Identifying and addressing biases in data is paramount for ensuring the validity and reliability of any analysis. Biases can significantly distort results and lead to incorrect conclusions.

My approach starts with checking for the most common types of bias:

- Data Collection Bias: This occurs during the data collection process. For example, selection bias happens when the sample is not representative of the population. Sampling bias occurs when certain groups are over or under-represented. I mitigate this by employing appropriate sampling methods (as discussed earlier) and documenting the sampling procedure meticulously.

- Measurement Bias: This arises from inaccuracies in how data is measured. For example, observer bias occurs when the researcher’s expectations influence the observations. To address this, I use standardized measurement instruments and blind or double-blind procedures where appropriate.

- Confirmation Bias: This is a cognitive bias where we tend to favor information that confirms our preconceived notions. I combat this by developing hypotheses *before* data analysis and ensuring my analysis is objective and unbiased.

Once biases are identified, I address them through various techniques, including data cleaning, transformation, weighting, and using robust statistical methods that are less sensitive to outliers. Documenting the biases and how they were handled is essential for transparency and reproducibility.
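Weighting is one of the techniques mentioned above. The sketch below shows post-stratification weighting on invented survey data, assuming the true population shares of each group are known from an outside source; the function names and numbers are hypothetical.

```python
from collections import Counter


def poststratification_weights(sample_groups, population_shares):
    """Weight each group by (population share) / (sample share)."""
    counts = Counter(sample_groups)
    n = len(sample_groups)
    return {group: population_shares[group] / (counts[group] / n)
            for group in counts}


def weighted_mean(values, groups, weights):
    """Mean of values where each observation carries its group's weight."""
    total_weight = sum(weights[g] for g in groups)
    return sum(v * weights[g] for v, g in zip(values, groups)) / total_weight


# Illustrative sample: "young" respondents are over-represented (80% vs. 50%).
groups = ["young"] * 8 + ["old"] * 2
values = [10] * 8 + [20] * 2          # e.g. hours of weekly app usage
shares = {"young": 0.5, "old": 0.5}   # assumed known population shares

weights = poststratification_weights(groups, shares)
unweighted = sum(values) / len(values)
weighted = weighted_mean(values, groups, weights)
print(unweighted, weighted)
```

The unweighted mean (12) is pulled toward the over-represented group, while the weighted mean (15) reflects the assumed 50/50 population split. Weighting only corrects for the variables it is based on, so it does not remove bias from unmeasured factors.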

Q 26. How familiar are you with data warehousing and ETL processes?

I have significant experience with data warehousing and ETL (Extract, Transform, Load) processes. Data warehousing involves designing and implementing a central repository for storing large volumes of data from disparate sources, allowing for efficient querying and analysis. ETL processes are the crucial steps involved in populating the data warehouse.

My experience includes:

- Designing dimensional data models: Creating star or snowflake schemas to organize data for efficient querying and reporting.

- Developing ETL pipelines: Using tools like Apache NiFi, Apache Kafka (for streaming ingestion), or cloud-based services (AWS Glue, Azure Data Factory) to extract data from various sources, transform it (cleaning, aggregation, data type conversion), and load it into the data warehouse.

- Data quality management: Implementing data validation rules and monitoring data quality metrics to ensure data accuracy and consistency.

- Database administration: Managing and optimizing the performance of the data warehouse database (e.g., using SQL Server, PostgreSQL, or cloud-based databases).

For example, in a previous project, I designed and implemented an ETL pipeline to consolidate sales data from multiple retail stores into a central data warehouse, allowing for comprehensive sales analysis and reporting. This involved dealing with various data formats, handling inconsistencies, and ensuring data quality.
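A toy version of such a pipeline can be sketched with the Python standard library alone. Everything here is illustrative: the two in-memory CSV strings stand in for store exports, the `sales` table layout is an assumption, and an in-memory SQLite database stands in for the warehouse.

```python
import csv
import io
import sqlite3
from datetime import datetime

# Hypothetical raw exports: different date formats, one missing amount.
STORE_A = "date,amount\n2024-01-05,100.50\n2024-01-06,\n"
STORE_B = "date,amount\n05/01/2024,80\n"


def extract(raw_csv):
    """Extract: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows, store, date_format):
    """Transform: drop rows without an amount, normalize dates and types."""
    clean = []
    for row in rows:
        if not row["amount"]:
            continue  # data quality rule: skip records with missing amount
        sale_date = datetime.strptime(row["date"], date_format).date()
        clean.append((store, sale_date.isoformat(), float(row["amount"])))
    return clean


def load(conn, records):
    """Load: insert the cleaned records into the warehouse table."""
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (store TEXT, sale_date TEXT, amount REAL)")

load(conn, transform(extract(STORE_A), "A", "%Y-%m-%d"))
load(conn, transform(extract(STORE_B), "B", "%d/%m/%Y"))

total = conn.execute("SELECT SUM(amount) FROM sales").fetchone()[0]
row_count = conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
print(row_count, total)
```

Keeping extract, transform, and load as separate functions makes each step easy to test on its own, which is the same separation that dedicated ETL tools enforce at a larger scale.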

Q 27. Describe your experience with predictive modeling.

Predictive modeling involves building statistical models to predict future outcomes based on historical data. It’s a core component of many data science applications, from fraud detection to customer churn prediction.

My experience encompasses various predictive modeling techniques, including:

- Regression models (linear, logistic, polynomial): Linear and polynomial regression predict continuous outcomes, while logistic regression predicts categorical outcomes (classification).

- Decision trees and ensemble methods (Random Forest, Gradient Boosting): Powerful techniques for handling complex relationships and high-dimensional data.

- Support Vector Machines (SVM): Effective for both classification and regression tasks, particularly in high-dimensional spaces.

- Neural networks: Complex models capable of learning intricate patterns and making accurate predictions, often used for image recognition or natural language processing.

The process typically involves data preprocessing, feature engineering, model selection, training, evaluation (using metrics like accuracy, precision, recall, AUC), and deployment. Model selection involves considering the nature of the data, the problem being addressed, and the desired level of interpretability. For instance, in a project focused on customer churn prediction, I compared several models (logistic regression, random forest, gradient boosting) before selecting the best-performing model based on its AUC score and ease of interpretation.
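Comparing candidate models by AUC, as in the churn example, can be sketched as follows. The scores are invented stand-ins for the predicted probabilities of two hypothetical models; the AUC is computed directly from its definition as the probability that a randomly chosen positive is scored above a randomly chosen negative.

```python
def roc_auc(y_true, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("Need at least one positive and one negative label.")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Illustrative hold-out labels (1 = churned) and hypothetical model scores.
y_true = [0, 0, 1, 1]
candidates = {
    "model_a": [0.1, 0.4, 0.35, 0.8],
    "model_b": [0.2, 0.3, 0.6, 0.9],
}

auc_by_model = {name: roc_auc(y_true, s) for name, s in candidates.items()}
best = max(auc_by_model, key=auc_by_model.get)
print(auc_by_model, best)
```

The pairwise loop is quadratic and only meant to show the idea; library implementations use a faster rank-based calculation. AUC should also be weighed against interpretability, as noted above, rather than used as the only selection criterion.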

Q 28. Explain your understanding of different machine learning algorithms.

Machine learning algorithms are the core building blocks of predictive modeling and other data science applications. They are categorized broadly into supervised, unsupervised, and reinforcement learning.

- Supervised Learning: The algorithm learns from labeled data (data with known inputs and outputs). Examples include:

  - Linear Regression: Predicts a continuous outcome based on a linear relationship with predictor variables.

  - Logistic Regression: Predicts a categorical outcome (e.g., 0 or 1).

  - Support Vector Machines (SVM): Finds the optimal hyperplane to separate data points into different classes.

  - Decision Trees: Creates a tree-like model to classify or regress data.

  - Random Forest: An ensemble method that combines multiple decision trees.

  - Gradient Boosting Machines (GBM): Another ensemble method that builds trees sequentially, correcting errors from previous trees.

- Unsupervised Learning: The algorithm learns patterns from unlabeled data. Examples include:

  - K-means clustering: Groups data points into clusters based on similarity.

  - Principal Component Analysis (PCA): Reduces the dimensionality of data while retaining most of the variance.

- Reinforcement Learning: The algorithm learns through trial and error by interacting with an environment and receiving rewards or penalties. Used in robotics and game playing.

My familiarity extends across these categories. The choice of algorithm depends on the problem, the type of data, and the desired outcome. I’m proficient in implementing and tuning these algorithms using Python libraries like scikit-learn and TensorFlow/Keras.
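To show what an unsupervised algorithm does under the hood, here is a minimal pure-Python sketch of K-means. The points and the starting centroids are made up, and passing the initial centroids in by hand is a simplification for reproducibility; library implementations typically choose them with a smarter initialization.

```python
def kmeans(points, centroids, iterations=10):
    """Basic K-means: assign points to the nearest centroid, then re-center."""
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            distances = [sum((a - b) ** 2 for a, b in zip(point, c))
                         for c in centroids]
            clusters[distances.index(min(distances))].append(point)

        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                new_centroids.append(
                    tuple(sum(dim) / len(cluster) for dim in zip(*cluster)))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid

        if new_centroids == centroids:
            break  # converged: assignments can no longer change
        centroids = new_centroids
    return centroids, clusters


# Illustrative 2-D data with two visibly separate groups.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
centroids, clusters = kmeans(points, centroids=[(1, 1), (8, 8)])
print(centroids)
print(clusters)
```

No labels are supplied anywhere: the grouping emerges only from distances between points, which is the defining difference from the supervised algorithms listed above.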

Key Topics to Learn for Analytical and Data Interpretation Interview

- Descriptive Statistics: Understanding measures of central tendency (mean, median, mode), dispersion (variance, standard deviation), and their application in interpreting data sets. Practical application: Analyzing sales figures to identify peak seasons and areas for improvement.

- Inferential Statistics: Grasping concepts like hypothesis testing, confidence intervals, and regression analysis. Practical application: Determining the significance of A/B testing results or predicting future trends based on historical data.

- Data Visualization: Mastering the creation and interpretation of various charts and graphs (bar charts, histograms, scatter plots, etc.) to effectively communicate insights. Practical application: Presenting complex data findings to both technical and non-technical audiences.

- Data Cleaning and Preprocessing: Learning techniques for handling missing values, outliers, and inconsistencies in datasets to ensure data accuracy and reliability. Practical application: Preparing raw data for analysis using tools like SQL or Python.

- Problem-solving methodologies: Developing a structured approach to tackling analytical problems, including defining the problem, formulating hypotheses, and validating conclusions. Practical application: Effectively approaching case studies and real-world analytical challenges during the interview process.

- Specific analytical tools and techniques: Familiarize yourself with relevant software or methodologies (e.g., SQL, Excel, R, Python libraries like Pandas and NumPy) depending on the specific job description. Practical application: Demonstrating proficiency in analyzing data using your preferred tools.

Next Steps

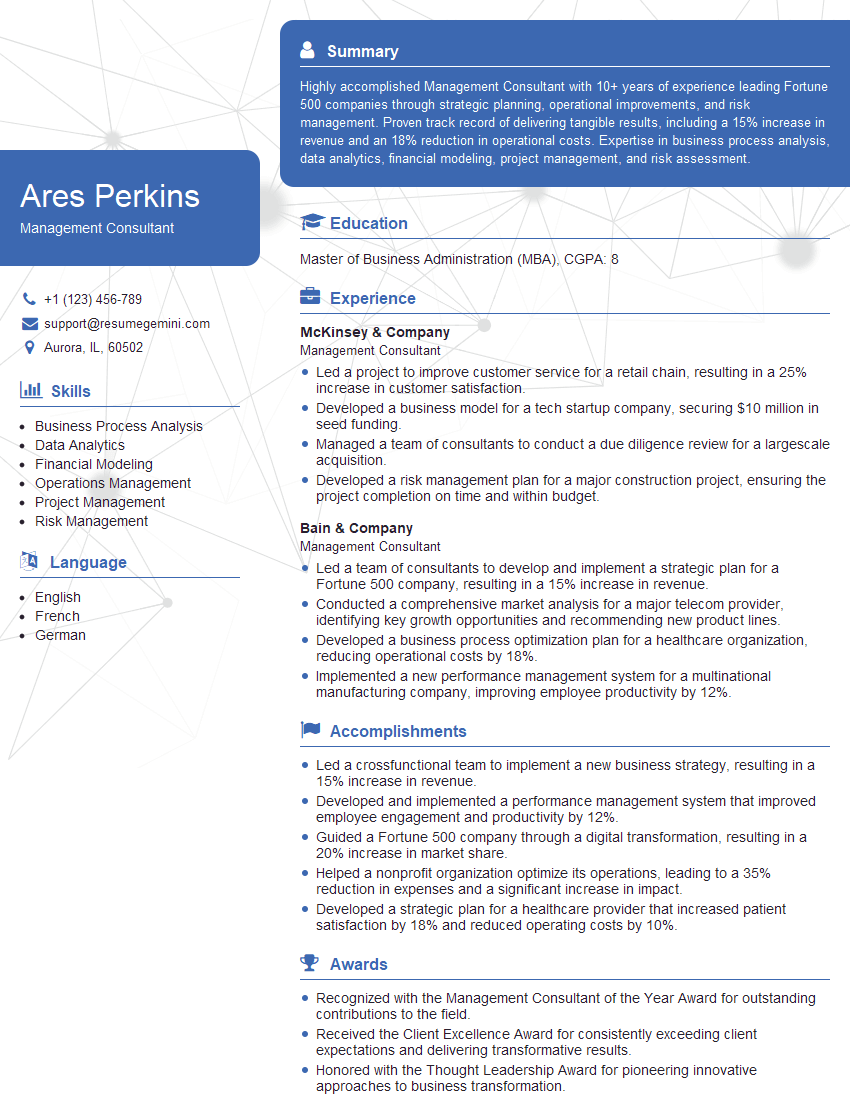

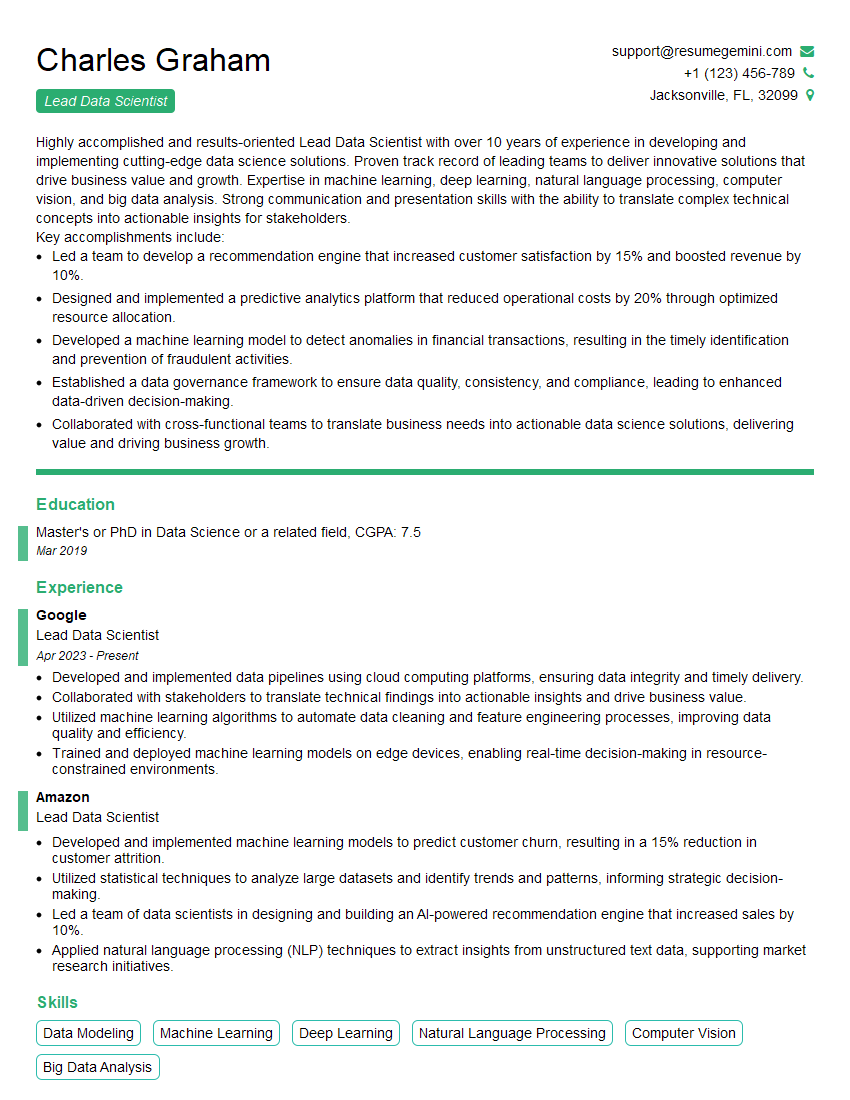

Mastering Analytical and Data Interpretation is crucial for career advancement in today’s data-driven world. It opens doors to exciting opportunities and allows you to contribute significantly to strategic decision-making. To maximize your chances of landing your dream role, crafting an ATS-friendly resume is essential. This ensures your application gets noticed by recruiters and hiring managers. We highly recommend using ResumeGemini to build a professional and impactful resume that showcases your analytical skills effectively. ResumeGemini provides examples of resumes tailored specifically to Analytical and Data Interpretation roles, helping you present your qualifications in the best possible light.
