Cracking a skill-specific interview, like one for Barcoding and Data Entry, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Barcoding and Data Entry Interview

Q 1. Explain the different types of barcodes you are familiar with (e.g., UPC, EAN, QR Code).

Barcodes are visual representations of data, allowing for efficient automated data capture. Several types exist, each with specific applications.

- UPC (Universal Product Code): A barcode commonly found on retail products in North America. The standard UPC-A version consists of 12 digits that identify the manufacturer and the specific product. For example, a UPC might look like this: 0 70475 34567 8. In this classic layout, the first six digits identify the manufacturer, the next five identify the product, and the last digit is a check digit used for error detection. (Under current GS1 rules the manufacturer's company prefix can vary in length, so the 6/5 split is not fixed.)

- EAN (European Article Number, now officially International Article Number): Similar to UPC, but used internationally. The most common form, EAN-13, has 13 digits, and a shorter EAN-8 exists for small packages. EAN-13 is a superset of UPC-A: adding a leading 0 to a UPC-A code gives the equivalent EAN-13, which lets one numbering system identify products worldwide.

- QR Code (Quick Response Code): A two-dimensional barcode that can store significantly more data than linear barcodes like UPC and EAN. QR codes can include text, URLs, contact information, and other data, and are often scanned using smartphones. They look like a square grid of black and white modules. A QR code can encode a URL such as https://www.example.com/product/12345, so a smartphone user can open the page without typing the address.

- Code 39: A very common alphanumeric barcode that's easily read by scanners. It uses narrow and wide bars and spaces to encode uppercase letters, digits, and a few symbols. While less dense than codes such as Code 128, it is self-checking and highly reliable, and it is commonly used for inventory management and asset tracking.

Understanding the differences between these barcode types is crucial because it determines how they are generated, scanned, and integrated into various systems.
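The check digit is what lets a system catch most mistyped or misread UPC and EAN codes. The sketch below assumes the standard GS1 mod-10 rule shared by UPC-A, EAN-13, and EAN-8: weight the data digits 3, 1, 3, ... starting from the rightmost one, then pick the digit that brings the total up to a multiple of 10. For the digits 0 70475 34567 this works out to a check digit of 8. The function names are hypothetical.

```python
def gs1_check_digit(body: str) -> int:
    """Compute the GS1 mod-10 check digit for the data digits in `body`
    (e.g. the first 11 digits of a UPC-A or the first 12 of an EAN-13)."""
    if not body.isdigit():
        raise ValueError("body must contain only digits")
    total = 0
    # Weights alternate 3, 1, 3, ... starting from the rightmost data digit.
    for i, ch in enumerate(reversed(body)):
        weight = 3 if i % 2 == 0 else 1
        total += int(ch) * weight
    # Smallest digit that makes the weighted sum a multiple of 10.
    return (10 - total % 10) % 10


def is_valid_gs1(code: str) -> bool:
    """Return True if `code` (spaces allowed) has a correct GS1 check digit."""
    digits = code.replace(" ", "")
    if not digits.isdigit() or len(digits) not in (8, 12, 13, 14):
        return False
    return gs1_check_digit(digits[:-1]) == int(digits[-1])
```

Because EAN-13 is UPC-A with a leading 0, the same function accepts both `"0 70475 34567 8"` and `"0070475345678"`.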

Q 2. Describe your experience with data entry software and applications.

I have extensive experience with various data entry software and applications, including spreadsheet programs like Microsoft Excel and Google Sheets, dedicated data entry applications such as KeyCapture and Data Entry Express, and database management systems (DBMS) like MySQL and SQL Server. My experience includes designing data entry forms, developing data validation rules, and importing/exporting data between various systems. In one role, I developed a custom data entry application in Excel using VBA macros to automate repetitive tasks, which improved data entry speed and accuracy by 30%. It included automated data validation and error checking, ensuring that only valid data could be entered.

Q 3. What is your typing speed and accuracy?

My typing speed is consistently above 80 words per minute with an accuracy rate exceeding 99%. This is based on multiple typing tests conducted over the years. I continuously practice to maintain this level of proficiency and adapt to varying keyboard layouts when necessary. Accuracy is paramount in my work, and I maintain rigorous attention to detail. Speed is useful but accuracy is essential in data entry and prevents downstream errors.
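Typing-test figures like these are usually derived from simple arithmetic. The sketch below assumes one common convention: a "word" is 5 characters, net speed subtracts one word per uncorrected error per minute, and accuracy is the share of characters typed without an uncorrected error. Individual typing tests may define these slightly differently.

```python
def typing_metrics(chars_typed: int, uncorrected_errors: int, minutes: float):
    """Return (gross_wpm, net_wpm, accuracy_percent) under the
    5-characters-per-word convention."""
    if minutes <= 0 or chars_typed <= 0:
        raise ValueError("need a positive duration and character count")
    gross_wpm = (chars_typed / 5) / minutes
    # Each uncorrected error costs one word per minute of the test.
    net_wpm = max(gross_wpm - uncorrected_errors / minutes, 0.0)
    accuracy = 100 * (chars_typed - uncorrected_errors) / chars_typed
    return gross_wpm, net_wpm, accuracy
```

For example, 2,000 characters in 5 minutes with 10 uncorrected errors gives 80 gross WPM, 78 net WPM, and 99.5% accuracy.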

Q 4. How do you ensure data accuracy during data entry?

Ensuring data accuracy is a top priority in my work. I employ several methods to achieve this:

- Data validation: I use built-in validation rules in software to ensure data conforms to specified formats and ranges. For example, I might set up a rule to only accept numbers within a certain range for quantities or specific date formats.

- Double-entry method: For critical data, I use a double-entry system, where another person independently enters the same data and the two versions are compared to find discrepancies.

- Regular checks and verification: I conduct regular checks and comparisons of data entered against source documents to identify and correct errors early on.

- Source data quality: I ensure source data is accurate and clear. A mistake in the source data will propagate downstream, so it’s key to verify the inputs are correct.
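The comparison step of the double-entry method is easy to automate. This is a minimal sketch, assuming each operator's batch is a list of dictionaries keyed by a shared record ID; the function and field names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Record = Dict[str, str]


def compare_double_entry(
    first: List[Record], second: List[Record], key: str
) -> List[Tuple[str, str, Optional[str], Optional[str]]]:
    """Return (record_id, field, first_value, second_value) for every field
    where the two independently keyed batches disagree."""
    a = {r[key]: r for r in first}
    b = {r[key]: r for r in second}
    mismatches = []
    # A record missing from one batch shows up as None for each of its fields.
    for rid in sorted(a.keys() | b.keys()):
        ra, rb = a.get(rid, {}), b.get(rid, {})
        for field in sorted(ra.keys() | rb.keys()):
            if ra.get(field) != rb.get(field):
                mismatches.append((rid, field, ra.get(field), rb.get(field)))
    return mismatches
```

Each mismatch is then resolved against the source document rather than by picking either operator's value by default.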

Q 5. What methods do you use to identify and correct data entry errors?

I identify and correct data entry errors using a combination of techniques:

- Automated error checks: Many software applications provide automated error checks, flagging inconsistencies or invalid data. I meticulously review these alerts.

- Data comparison: I often compare entered data with source documents to spot discrepancies. Spreadsheet lookup and comparison formulas make this fast, even for long lists.

- Data validation rules: The same validation rules that prevent errors also highlight them by rejecting invalid input at the moment it is entered, so mistakes are caught before they are saved.

- Regular audits: Periodic audits of entered data help identify systemic errors or trends, leading to process improvements. This can reveal underlying issues with processes or data sources.

Q 6. Describe your experience with barcode scanners and their different functionalities.

I have extensive experience with various barcode scanners, from basic handheld laser scanners to more advanced imagers and presentation scanners. I’m familiar with their different functionalities, including:

- Data transmission: Understanding how scanners transmit data (e.g., USB, keyboard wedge, Bluetooth) is essential for seamless integration into different systems.

- Decoding capabilities: I understand the various barcode symbologies that different scanners can read, ensuring compatibility with the required barcodes. This might involve adjusting scanner settings for optimal performance.

- Damaged-barcode reading: Advanced imagers use more robust decoding algorithms, and 2D symbologies such as QR Code include built-in error correction, so even partially damaged barcodes can often still be read accurately.

- Connectivity options: I’m proficient in connecting scanners to various devices and systems, optimizing data flow and reducing manual intervention.

Q 7. How familiar are you with data validation techniques?

I’m very familiar with data validation techniques, which are essential for maintaining data quality. These techniques help ensure data accuracy, completeness, and consistency. They include:

- Range checks: Verifying that numerical data falls within a predefined range (e.g., age must be between 0 and 120).

- Format checks: Ensuring data conforms to a specific format (e.g., phone numbers, email addresses).

- Check digits: Using algorithms to verify the accuracy of numeric codes (e.g., ISBNs, UPCs).

- Cross-field validation: Checking consistency between different fields (e.g., start and end dates).

- Lookup tables: Validating data against a predefined list of acceptable values (e.g., states, countries).
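The five techniques above can be combined into a single record validator. This is a minimal sketch; the field names, the `NNN-NNN-NNNN` phone format, and the small state list are assumptions for illustration, not a standard schema.

```python
import re
from datetime import date
from typing import Any, Dict, List

# Hypothetical lookup table and phone format, for illustration only.
VALID_STATES = {"CA", "NY", "TX", "WA"}
PHONE_PATTERN = re.compile(r"\d{3}-\d{3}-\d{4}")  # e.g. 555-123-4567


def upc_a_is_valid(code: str) -> bool:
    """Check-digit test for a 12-digit UPC-A code."""
    if len(code) != 12 or not code.isdigit():
        return False
    d = [int(c) for c in code]
    # Digits in odd positions (1st, 3rd, ..., 11th) are weighted by 3.
    total = 3 * sum(d[0:11:2]) + sum(d[1:11:2])
    return (10 - total % 10) % 10 == d[11]


def validate(record: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the record passed."""
    errors = []
    if not 0 <= record["age"] <= 120:                  # range check
        errors.append("age out of range")
    if not PHONE_PATTERN.fullmatch(record["phone"]):   # format check
        errors.append("phone not in NNN-NNN-NNNN format")
    if not upc_a_is_valid(record["upc"]):              # check digit
        errors.append("UPC check digit mismatch")
    if record["end_date"] < record["start_date"]:      # cross-field check
        errors.append("end_date before start_date")
    if record["state"] not in VALID_STATES:            # lookup table
        errors.append("unknown state code")
    return errors
```

Returning all problems at once, rather than stopping at the first, lets the person entering data fix a record in one pass.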

Q 8. How do you handle large volumes of data entry?

Handling large volumes of data entry efficiently requires a systematic approach. It’s not just about speed, but accuracy and preventing errors. My strategy involves a combination of techniques:

- Data Validation and Pre-processing: Before I begin, I meticulously check the data source for inconsistencies or errors. Cleaning the data upfront significantly reduces issues later. For example, if I’m entering customer addresses, I’d verify that the postal codes are correct and consistent with the city and state.

- Automation: Wherever possible, I automate repetitive tasks. This might involve using macros in Excel, scripting in Python, or employing specialized data entry software with features like intelligent character recognition (ICR) and optical character recognition (OCR) if dealing with scanned documents. For instance, I might create a macro to automatically format data into the correct columns.

- Batch Processing: Instead of entering data one record at a time, I work in batches. This allows for a rhythm and improves focus. Breaking down a large task into smaller, manageable chunks makes the whole process less overwhelming. I’d often work with batches of 50-100 records, then take a short break to prevent burnout.

- Regular Checks and Verification: I perform frequent spot checks of my work, comparing against the source document to ensure accuracy. Regular checks, even small ones throughout a session, significantly improve the overall data quality and reduce the time spent on corrections at the end.

Think of it like building a house – laying a solid foundation (data cleaning) and utilizing the right tools (automation and software) speeds up construction and prevents costly mistakes later on.
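The batch-and-spot-check routine described above can be sketched as follows. The batch size of 50 and a sample of 5 records per batch are assumptions chosen to match the ranges mentioned; the function names are hypothetical.

```python
import random
from typing import Iterator, List, Optional, Sequence, TypeVar

T = TypeVar("T")


def batches(records: Sequence[T], size: int = 50) -> Iterator[Sequence[T]]:
    """Yield consecutive slices of at most `size` records."""
    for start in range(0, len(records), size):
        yield records[start:start + size]


def spot_check_sample(batch: Sequence[T], k: int = 5,
                      seed: Optional[int] = None) -> List[T]:
    """Pick up to `k` random records from a finished batch to re-verify
    against the source document."""
    rng = random.Random(seed)
    return rng.sample(list(batch), min(k, len(batch)))
```

Sampling at random, rather than always checking the first few records, catches errors that creep in late in a batch as fatigue sets in.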

Q 9. What are your strategies for maintaining focus and efficiency during long data entry sessions?

Maintaining focus and efficiency during long data entry sessions is crucial for accuracy and productivity. My strategies include:

- Ergonomics: I ensure my workstation is ergonomically set up to prevent strain and fatigue. This includes proper posture, a comfortable chair, and sufficient lighting. A well-set-up workspace minimizes physical discomfort, which can lead to mental fatigue.

- Short Breaks: I regularly take short breaks (5-10 minutes) every hour. These breaks allow me to stretch, walk around, and clear my head, preventing mental burnout. Even a quick walk can significantly improve concentration.

- Minimize Distractions: I minimize distractions like social media or email notifications during data entry. I usually put my phone on silent and close unnecessary tabs on my computer. A distraction-free environment is essential for efficient work.

- Background Music: I find that instrumental music or ambient sounds can help create a focused atmosphere. The key is to find music without lyrics that doesn’t distract.

- Change of Pace: If possible, I vary the type of data I’m entering to prevent monotony. This keeps the work engaging and prevents boredom.

Essentially, I treat data entry like a marathon, not a sprint. Consistent effort, smart breaks, and a comfortable environment significantly impact productivity and accuracy.

Q 10. Describe a time you had to meet a tight deadline for data entry. How did you manage it?

I once had to enter over 5,000 customer survey responses before the end of the business day. The deadline was incredibly tight. To manage it, I:

- Prioritized the Critical Data: I focused on the most essential fields first, ensuring those were complete and accurate. This allowed me to deliver a core dataset quickly, even if some less important data points were incomplete.

- Enlisted Help (if possible): I discussed the situation with my supervisor and explored the possibility of teamwork and additional resources. Although this wasn't feasible in this particular case, it's always an important consideration.

- Used Automation Tools: I leveraged Excel’s features and macros to import and format data efficiently. Using copy-paste commands efficiently saved time during data input.

- Double-Checked Regularly: I performed regular checks for errors, which reduced the amount of time spent correcting mistakes at the end. A few smaller checks are much more efficient than one giant check at the end.

- Worked in Focused Sprints: I worked in concentrated bursts with short breaks in between, maintaining focus and energy throughout the process. This prevented burnout and ensured consistent accuracy.

The key was strategic planning, using the right tools, and maintaining a consistent pace. By prioritizing, using automation, and staying focused, I met the tight deadline while maintaining data quality.

Q 11. How do you prioritize tasks when you have multiple data entry assignments?

Prioritizing multiple data entry assignments requires a structured approach. I use a combination of methods:

- Urgency and Importance: I prioritize tasks based on their urgency and importance. This is often represented using an Eisenhower Matrix (Urgent/Important). Tasks that are both urgent and important get immediate attention.

- Deadline-Based Prioritization: Tasks with the closest deadlines are given priority. I list tasks with their due dates to ensure deadlines are met efficiently.

- Data Volume and Complexity: I also consider the volume and complexity of each task. A large, complex task might require more time, thus influencing the order of tasks.

- Dependencies: I identify dependencies between tasks. If one task needs to be completed before another can start, I ensure that the prerequisite task is completed first.

Essentially, I create a prioritized task list that considers all relevant factors. This ensures that I allocate my time effectively and meet all deadlines successfully.
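The deadline and dependency rules above can be combined mechanically: a task becomes available only once its prerequisites are done, and among available tasks the earliest deadline goes first. The sketch below uses a topological sort (Kahn's algorithm) with a deadline-ordered heap; it covers deadlines and dependencies only, not the Eisenhower Matrix, and the task names are hypothetical.

```python
import heapq
from datetime import date
from typing import Dict, List, Set


def schedule(deadlines: Dict[str, date],
             depends_on: Dict[str, Set[str]]) -> List[str]:
    """Order tasks so prerequisites come first, breaking ties by deadline."""
    # Number of unfinished prerequisites for each task.
    remaining = {t: len(depends_on.get(t, set())) for t in deadlines}
    dependents: Dict[str, List[str]] = {t: [] for t in deadlines}
    for task, prereqs in depends_on.items():
        for p in prereqs:
            dependents[p].append(task)

    # Heap of tasks whose prerequisites are all done, earliest deadline first.
    ready = [(deadlines[t], t) for t, n in remaining.items() if n == 0]
    heapq.heapify(ready)
    order = []
    while ready:
        _, task = heapq.heappop(ready)
        order.append(task)
        for nxt in dependents[task]:
            remaining[nxt] -= 1
            if remaining[nxt] == 0:
                heapq.heappush(ready, (deadlines[nxt], nxt))

    if len(order) != len(deadlines):
        raise ValueError("circular dependency between tasks")
    return order
```

In the test data, `invoice_export` has the earliest deadline but still lands last, because it cannot start until the tasks it depends on are finished.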

Q 12. How familiar are you with different data formats (e.g., CSV, Excel, XML)?

I am highly familiar with various data formats, including CSV, Excel, and XML. My experience includes:

- CSV (Comma Separated Values): I regularly work with CSV files, which are simple and widely used for exchanging data between different applications. I am proficient in importing and exporting CSV data, as well as cleaning and manipulating data within the file format.

- Excel (XLSX, XLS): I’m highly proficient in using Microsoft Excel, including data manipulation, formula creation, and advanced features like pivot tables. I can also use Excel to clean, transform, and prepare data before importing it into databases.

- XML (Extensible Markup Language): I have experience working with XML, including parsing XML files using various tools and programming languages (e.g., Python with libraries like lxml). XML is particularly useful when dealing with structured, hierarchical data.

- JSON (JavaScript Object Notation): In recent years I’ve also gained familiarity with JSON, particularly for handling data from web APIs. This is important in modern data integration scenarios.

My understanding of these formats enables me to work efficiently with a wide range of data sources and applications.
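To illustrate how these formats map onto the same records, the sketch below reads one small inventory list from CSV, XML, and JSON into identical Python dictionaries. It uses the standard library's `xml.etree.ElementTree` rather than lxml (whose `etree` offers a largely compatible API); the sample data and element names are made up for illustration.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# The same two hypothetical inventory records in three formats.
CSV_TEXT = "sku,qty\nA1,12\nB2,5\n"
XML_TEXT = "<items><item sku='A1' qty='12'/><item sku='B2' qty='5'/></items>"
JSON_TEXT = '[{"sku": "A1", "qty": 12}, {"sku": "B2", "qty": 5}]'


def from_csv(text):
    # CSV values are always strings, so quantities need explicit conversion.
    return [{"sku": r["sku"], "qty": int(r["qty"])}
            for r in csv.DictReader(io.StringIO(text))]


def from_xml(text):
    root = ET.fromstring(text)
    return [{"sku": el.get("sku"), "qty": int(el.get("qty"))}
            for el in root.iter("item")]


def from_json(text):
    # JSON already distinguishes numbers from strings; int() guards against "12".
    return [{"sku": r["sku"], "qty": int(r["qty"])} for r in json.loads(text)]
```

Normalizing every source into one shape like this is what makes later validation and database loading format-independent.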

Q 13. Describe your experience with database management systems.

My experience with database management systems (DBMS) includes working with both relational (SQL) and NoSQL databases. I have:

- SQL Databases (e.g., MySQL, PostgreSQL, SQL Server): I’m proficient in writing SQL queries for data retrieval, insertion, update, and deletion. I understand database normalization principles and can design efficient database schemas. I’ve also worked with stored procedures and triggers to automate database tasks.

- NoSQL Databases (e.g., MongoDB): I have experience working with NoSQL databases, particularly MongoDB, for handling large volumes of unstructured or semi-structured data. I understand the differences between relational and NoSQL databases and can select the appropriate database technology for a given task.

- Data Import/Export: I am skilled in importing and exporting data to and from various database systems using different methods (e.g., command-line tools, scripting).

My DBMS skills are integral to ensuring efficient data management and retrieval in my work.
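A small example of importing data and running insert, update, delete, and select statements, using Python's built-in `sqlite3` module as a stand-in for MySQL, PostgreSQL, or SQL Server. The table layout is hypothetical. Parameterized queries (`?` placeholders) keep entered values from being interpreted as SQL, and the `CHECK` constraint enforces a simple validation rule inside the database itself.

```python
import csv
import io
import sqlite3


def load_inventory(conn: sqlite3.Connection, csv_text: str) -> int:
    """Create the table if needed and bulk-insert rows from CSV text."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS inventory ("
        " sku TEXT PRIMARY KEY,"
        " qty INTEGER NOT NULL CHECK (qty >= 0))"
    )
    rows = [(r["sku"], int(r["qty"])) for r in csv.DictReader(io.StringIO(csv_text))]
    with conn:  # commits on success, rolls back if any row fails
        conn.executemany("INSERT INTO inventory (sku, qty) VALUES (?, ?)", rows)
    return len(rows)


conn = sqlite3.connect(":memory:")
load_inventory(conn, "sku,qty\nA1,12\nB2,5\n")
with conn:
    conn.execute("UPDATE inventory SET qty = qty - ? WHERE sku = ?", (2, "A1"))
    conn.execute("DELETE FROM inventory WHERE sku = ?", ("B2",))
print(conn.execute("SELECT sku, qty FROM inventory").fetchall())  # [('A1', 10)]
```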

Q 14. Explain the importance of data integrity in your work.

Data integrity is paramount in my work. It refers to the accuracy, consistency, and reliability of data. Maintaining data integrity ensures that the information is trustworthy and can be used for accurate analysis and decision-making. Compromised data integrity can have serious consequences, such as:

- Inaccurate Reporting and Analysis: Errors in data lead to flawed reports and analyses, resulting in poor business decisions.

- Financial Losses: Incorrect data can lead to financial losses, especially in areas like billing or inventory management.

- Compliance Issues: In regulated industries, maintaining data integrity is essential for complying with legal and regulatory requirements.

- Reputational Damage: Data breaches or inconsistencies can damage an organization’s reputation.

To maintain data integrity, I employ several strategies:

- Data Validation: I rigorously validate data at every stage of the entry process. This includes using data validation rules in spreadsheets or databases.

- Data Cleaning: I meticulously clean and standardize data to ensure consistency and accuracy.

- Regular Backups: I back up my work on a regular schedule to prevent data loss.

- Version Control: For larger projects, I utilize version control systems (like Git) to track changes and revert to previous versions if necessary.

Maintaining data integrity is not merely a technical requirement; it’s a fundamental responsibility that ensures the reliability and usefulness of information.
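One concrete integrity check that complements regular backups is comparing cryptographic digests of the original file and its copy. This is a minimal sketch using SHA-256 from Python's `hashlib`; the function names are hypothetical. It detects accidental corruption or unintended changes, but not deliberate tampering by someone who can also alter the stored digest.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large exports don't need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def backup_matches(original: Path, backup: Path) -> bool:
    """True if the backup is byte-for-byte identical to the original."""
    return sha256_of(original) == sha256_of(backup)
```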

Q 15. How do you troubleshoot common issues with barcode scanning?

Troubleshooting barcode scanning issues often involves a systematic approach. First, we need to identify whether the problem lies with the scanner, the barcode itself, or the software/system interpreting the scan.

- Scanner Problems: Check the scanner’s power source, cable connections, and whether it’s properly configured. Test with a known good barcode. If the scanner is wireless, ensure sufficient signal strength and battery life. Sometimes, simply cleaning the scanner’s lens can resolve issues.

Example: A dirty lens might cause misreadings or intermittent scans.

- Barcode Problems: Inspect the barcode for damage, smudging, or poor print quality. Ensure the barcode is printed correctly according to the symbology specifications. Low-quality printing can result in decoding failures.

Example: A barcode that's faded or partially obscured will not scan reliably.

- Software/System Issues: Verify that the software or system correctly interprets the scanned data. Check for driver updates for the scanner, and ensure that the data format settings in your system match the barcode symbology (e.g., UPC-A, EAN-13, Code 128). Incorrect settings can lead to data entry errors.

Example: If your system expects a 12-digit UPC-A code but the scanner is configured to transmit UPC-A symbols as 13-digit EAN-13 (with a leading 0), the scanned value won't match the stored product code and the lookup fails.

By systematically investigating these areas, we can usually pinpoint and rectify the problem quickly. For complex issues, reviewing log files from the scanner and the receiving system is also important for tracking the source of errors.
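For the UPC-A/EAN-13 mismatch described above, one software-side fix is to normalize scanned values before looking them up. This is a minimal sketch, assuming the product table stores 12-digit UPC-A codes and the scanner may add a leading 0 and a trailing carriage return or newline (a common keyboard-wedge suffix). The function name is hypothetical.

```python
def normalize_to_upc_a(scanned: str) -> str:
    """Convert a scanned value to the 12-digit UPC-A form used for lookups."""
    code = scanned.strip()  # drop a trailing Enter/CR sent by the scanner
    if not code.isdigit():
        raise ValueError(f"unexpected characters in scan: {code!r}")
    if len(code) == 12:
        return code
    if len(code) == 13 and code.startswith("0"):
        # A UPC-A transmitted as EAN-13 carries an extra leading zero.
        return code[1:]
    raise ValueError(f"not a UPC-A code: {code!r}")
```

A genuine EAN-13 from outside North America (one that does not start with 0) is rejected rather than silently truncated, so it can be routed to a separate lookup.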


Q 16. How familiar are you with RFID technology?

I’m quite familiar with RFID (Radio-Frequency Identification) technology. While it’s different from barcoding, it offers a powerful alternative for tracking and identifying items. I understand its advantages and limitations compared to barcode systems.

RFID uses radio waves to identify and track tags attached to objects. Unlike barcodes, RFID tags don't require line-of-sight for reading. They can be read through many non-metallic materials such as cardboard, plastic, and fabric (metal and liquids can block or weaken the signal), making them ideal for applications where items might be stacked or obscured. This is a key advantage over barcodes, especially in inventory management or supply chain applications.

My knowledge extends to different RFID frequencies (e.g., LF, HF, UHF), tag types (passive, active), and reader technologies. I’m aware of the implementation challenges, such as signal interference and the cost of implementing an RFID system. In fact, I once helped a client migrate from a barcode-based inventory system to an RFID system, optimizing it to reduce the signal interference problems caused by the metallic storage racks.

Q 17. What are some common data entry errors and how can they be prevented?

Common data entry errors range from simple typos and transposition errors (switching digits) to more complex mistakes involving incorrect data formats or missing values.

- Typos and Transposition Errors: These are often caused by human error and fatigue. They can lead to inaccurate records and downstream problems.

- Incorrect Data Formats: Entering data in the wrong format (e.g., using commas instead of periods for decimal numbers) can make the data unusable.

- Missing Values: Leaving important fields blank can compromise data integrity and analytics.

Prevention strategies include using data validation techniques within software applications. This involves:

- Input masks: These guide users to enter data in the correct format (e.g., phone number format).

- Data type validation: The system automatically checks if the entered data matches the expected type (e.g., number, text, date).

- Drop-down lists and pre-defined values: Limit user choices to avoid incorrect entries.

- Double data entry: Having two people enter the same data and compare their entries.

- Automated checks: Regularly perform data quality checks to identify and fix inconsistencies.

These methods reduce the risk of errors and improve overall data accuracy.
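When auditing entries against source documents, it helps to classify each mismatch so systemic problems (for example, frequent transpositions from fatigue) stand out. This is a minimal sketch with hypothetical category labels; it only distinguishes the simple cases named above.

```python
def classify_error(source: str, entered: str) -> str:
    """Label how an entered value differs from its source value."""
    if source == entered:
        return "match"
    if len(source) != len(entered):
        return "length mismatch"  # e.g. a dropped or extra character
    diffs = [i for i, (s, e) in enumerate(zip(source, entered)) if s != e]
    if len(diffs) == 1:
        return "single-character typo"
    if (len(diffs) == 2 and diffs[1] == diffs[0] + 1
            and source[diffs[0]] == entered[diffs[1]]
            and source[diffs[1]] == entered[diffs[0]]):
        return "adjacent transposition"  # e.g. 12345 entered as 12354
    return "other"
```

Counting these labels per operator or per form field turns individual corrections into the kind of trend data that drives process improvements.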

Q 18. Describe your experience with data cleaning and cleansing techniques.

Data cleaning and cleansing is a crucial part of my workflow. I have extensive experience using various techniques to improve data quality. My approach is iterative and typically involves several steps:

- Identifying and Handling Missing Values: This can involve replacing missing values with averages, medians, or using predictive modeling to estimate the missing values, depending on the context. Sometimes, missing values simply need to be flagged for further investigation.

- Detecting and Correcting Inconsistent Data: This often involves identifying and standardizing inconsistent formats (e.g., different date formats), spellings, or abbreviations.

- Removing Duplicates: I use various techniques to identify and remove duplicates, including deduplication tools that compare records based on key fields. Careful analysis is needed to ensure that legitimate entries are not mistakenly removed.

- Smoothing Noisy Data: This involves techniques like binning or regression to remove outliers or smooth noisy data points.

- Transforming Data: This may involve creating new variables from existing ones, recoding values, or converting data types to make it suitable for analysis or reporting.

I use a combination of programming languages (such as Python with Pandas, and SQL) and dedicated data cleaning tools. For example, I once cleaned a customer database with over 100,000 records. The work involved removing duplicate entries, standardizing address formats, and correcting inconsistent customer information, which significantly improved the quality and accuracy of the data used for marketing campaigns.
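Several of these steps can be shown in a few lines. This sketch uses only the Python standard library rather than Pandas, so it runs without extra packages. It standardizes names, emails, and dates, removes duplicates by normalized email, and flags (rather than deletes) records with missing or unparseable values. The accepted date formats and field names are assumptions for illustration.

```python
from datetime import datetime
from typing import Dict, List, Optional

DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")  # assumed input formats


def to_iso_date(raw: str) -> Optional[str]:
    """Parse any accepted date format into ISO 8601, or None if none match."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_records(records: List[Dict[str, str]]) -> List[Dict[str, object]]:
    cleaned, seen = [], set()
    for rec in records:
        email = rec.get("email", "").strip().lower()
        if email and email in seen:
            continue  # duplicate of a record already kept
        if email:
            seen.add(email)
        signup = to_iso_date(rec.get("signup", ""))
        cleaned.append({
            "name": " ".join(rec.get("name", "").split()).title(),
            "email": email,
            "signup": signup,
            # Flag for manual review instead of guessing missing values.
            "needs_review": not email or signup is None,
        })
    return cleaned
```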

Q 19. How do you handle conflicting or duplicate data?

Handling conflicting or duplicate data requires a careful and methodical approach. The first step is to identify the source of the conflict or duplication. This may involve examining data entry procedures, source systems, or integration processes.

Once identified, I analyze the conflicting data to determine the most accurate or reliable version. This may involve manual review, comparison of data sources, or using data quality rules and algorithms to prioritize certain records. If data is truly duplicated, a clear decision needs to be made on which record should be retained, and the others should be flagged or archived to preserve data provenance.

For example, I recently worked on a project where two different systems were maintaining customer contact information. By identifying the source of discrepancies and applying data quality rules, we successfully consolidated the data into a single, accurate, and reliable database. The process involved developing rules to prioritize data from the system with more comprehensive data validation procedures.
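The prioritization rule from that project (prefer the system with stronger validation, fall back to the other when it has no value) can be sketched field by field. This is a hypothetical illustration: "primary" stands for the more rigorously validated source, and the per-field provenance record reflects the goal of preserving where each value came from.

```python
from typing import Dict

Records = Dict[str, Dict[str, str]]  # customer_id -> field -> value


def merge_contacts(primary: Records, secondary: Records) -> Dict[str, dict]:
    """Merge two customer sources; primary wins whenever it has a non-empty value."""
    merged = {}
    for cid in sorted(primary.keys() | secondary.keys()):
        p, s = primary.get(cid, {}), secondary.get(cid, {})
        fields, source = {}, {}
        for field in sorted(p.keys() | s.keys()):
            if p.get(field):
                fields[field], source[field] = p[field], "primary"
            else:
                # Fall back to the secondary system (which may also be empty).
                fields[field], source[field] = s.get(field), "secondary"
        merged[cid] = {"fields": fields, "source": source}
    return merged
```

Keeping the `source` map alongside the merged values makes it possible to audit, or reverse, any individual decision later.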

Q 20. How do you ensure compliance with data security regulations?

Data security is paramount. My approach involves adherence to relevant regulations such as GDPR, CCPA, and HIPAA, depending on the context.

- Access Control: I ensure that only authorized personnel have access to sensitive data through role-based access control systems.

- Data Encryption: I use encryption to protect data both in transit and at rest, so that intercepted or stolen data cannot be read without the decryption keys.

- Data Loss Prevention (DLP): I implement DLP measures to prevent sensitive data from leaving the organization’s network without authorization.

- Regular Security Audits: I participate in regular security audits to identify and address vulnerabilities in data storage and handling practices.

- Data Minimization: I only collect and retain the data necessary for the specific purpose. This minimizes risk and compliance overhead.

I am trained to handle sensitive personal information responsibly and ethically and always implement robust security procedures to comply with all relevant regulations and best practices. My experience includes implementing data masking and anonymization techniques to protect sensitive information while still permitting data analysis.
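Two of the techniques mentioned, masking and pseudonymization, are sketched below with Python's standard `hmac` and `hashlib` modules. Masking hides most of a value for display; keyed hashing replaces an identifier with a stable token so records can still be joined for analysis. Note that keyed hashing is pseudonymization, not anonymization: under GDPR, pseudonymized data is still personal data, and the key must be protected. The function names and key are illustrative.

```python
import hashlib
import hmac


def mask_card(number: str, visible: int = 4) -> str:
    """Show only the last `visible` digits, e.g. for on-screen display."""
    digits = "".join(ch for ch in number if ch.isdigit())
    return "*" * (len(digits) - visible) + digits[-visible:]


def pseudonymize(value: str, key: bytes) -> str:
    """Stable keyed token for an identifier; the same input always maps to
    the same token, but it cannot be recomputed without the key."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()
```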

Q 21. Describe your experience with report generation from data entry.

I have extensive experience generating reports from data entry systems. This usually involves using various tools, depending on the data’s complexity and the client’s needs. My experience includes using business intelligence (BI) tools, spreadsheet software, and programming languages to create comprehensive and informative reports.

My reporting skills encompass:

- Data Aggregation and Summarization: Creating summary tables, charts, and graphs to visualize data patterns and trends.

- Data Filtering and Sorting: Creating reports that focus on specific subsets of data based on criteria like dates, locations, or values.

- Report Customization: Adapting reports to meet specific user requirements by adjusting the layout, content, and formatting.

- Data Export and Distribution: Exporting reports in various formats (e.g., PDF, Excel, CSV) for distribution to stakeholders.

For instance, I once developed a series of automated weekly reports on sales performance for a retail company that extracted data directly from the point-of-sale (POS) system. These reports helped management to track sales trends, identify top-performing products, and make better business decisions.
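The aggregation and export steps of such a report can be sketched with the standard library. This assumes a point-of-sale extract has already been turned into `(iso_week, sku, amount)` tuples; that shape and the function name are hypothetical. Revenue is summed per product per week, sorted with the top sellers first, and written as CSV text ready to attach or load into a spreadsheet.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def weekly_sales_summary(sales: Iterable[Tuple[str, str, float]]) -> str:
    """Return CSV text of revenue per (week, sku), best sellers first each week."""
    totals: Dict[Tuple[str, str], float] = defaultdict(float)
    for week, sku, amount in sales:
        totals[(week, sku)] += amount
    # Sort by week ascending, then by revenue descending within the week.
    rows = sorted(totals.items(), key=lambda kv: (kv[0][0], -kv[1]))
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["week", "sku", "revenue"])
    for (week, sku), revenue in rows:
        writer.writerow([week, sku, f"{revenue:.2f}"])
    return out.getvalue()
```

For real financial reporting, `decimal.Decimal` would avoid floating-point rounding in the totals; floats keep the sketch short.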

Q 22. How do you stay updated on new technologies related to data entry and barcoding?

Staying current in the dynamic fields of data entry and barcoding requires a multifaceted approach. I actively participate in online communities like LinkedIn groups focused on data management and supply chain technologies. These groups often host discussions on emerging trends and new software releases. I also subscribe to industry newsletters and publications such as those offered by GS1 (Global Standards One), a leading organization in barcoding standards. Furthermore, I regularly attend webinars and conferences, both online and in-person, to learn about the latest advancements in barcode scanners, data entry software, and related technologies. Finally, I dedicate time to exploring vendor websites and technical documentation to understand new features and capabilities being offered by leading providers.

Q 23. What is your understanding of data warehousing and business intelligence?

Data warehousing is the process of collecting and storing data from various sources into a central repository, optimized for querying and analysis. Think of it as a giant, organized library for your company’s data. Business intelligence (BI) then uses that stored data to generate insights and support strategic decision-making. BI tools analyze the data in the warehouse, identifying trends, patterns, and anomalies that can inform business strategy. For example, a retail company might use data warehousing to combine sales data, customer data, and inventory data. BI tools could then analyze this data to predict future sales, identify best-selling products, and optimize inventory levels. My experience includes working with data warehousing systems to track and analyze barcode data, optimizing efficiency and improving business decisions based on these insights.

Q 24. Explain your proficiency with different keyboard shortcuts for efficient data entry.

Proficiency in keyboard shortcuts is crucial for efficient data entry. My daily workflow heavily relies on these shortcuts. For example, I use Ctrl+C (or Cmd+C on Mac) to copy data, Ctrl+X/Cmd+X to cut, and Ctrl+V/Cmd+V to paste. These are essential for moving data quickly between fields. The Tab key is invaluable for moving to the next field (Shift+Tab moves back), and Ctrl+Z/Cmd+Z (Undo) quickly reverses mistakes. Beyond these basics, I'm comfortable using Ctrl+A/Cmd+A (Select All) for selecting entire data sets, and Ctrl+F/Cmd+F (Find) for quickly searching within large datasets. I've also mastered application-specific shortcuts, such as those in inventory management systems, to further streamline data input.

Q 25. Describe your experience with inventory management systems and their integration with barcoding.

My experience with inventory management systems (IMS) spans several years, integrating barcoding extensively to enhance accuracy and efficiency. I’ve worked with various IMS, from simple spreadsheet-based systems to sophisticated enterprise resource planning (ERP) systems. The integration with barcoding is always central – it’s how we move from manual data entry to automated tracking. For example, in a warehouse setting, a barcode scanner reads the barcode on a product, automatically updating the IMS with information about its location, quantity, and status. This eliminates manual data entry, significantly reducing errors and increasing the speed of inventory updates. I’ve also worked on projects involving the customization of IMS to accommodate specific barcode symbologies and data structures. This includes configuring the system to read different barcode types and ensuring the data captured is accurately stored and analyzed within the system.
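The scan-to-update flow described above can be reduced to a small in-memory model. This is a hypothetical sketch, not any particular IMS or ERP API. Each scan carries the barcode, an action, a quantity, and optionally a bin location. A real system would write these updates to a database inside a transaction.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Inventory:
    on_hand: Dict[str, int] = field(default_factory=dict)   # barcode -> quantity
    location: Dict[str, str] = field(default_factory=dict)  # barcode -> bin ID

    def apply_scan(self, barcode: str, action: str, qty: int = 1,
                   bin_id: str = "") -> None:
        """Update stock from one scan; rejects picks that exceed stock."""
        code = barcode.strip()  # scanners often append Enter/CR
        if action == "receive":
            self.on_hand[code] = self.on_hand.get(code, 0) + qty
        elif action == "pick":
            current = self.on_hand.get(code, 0)
            if qty > current:
                raise ValueError(f"cannot pick {qty} of {code}; only {current} on hand")
            self.on_hand[code] = current - qty
        else:
            raise ValueError(f"unknown action: {action!r}")
        if bin_id:
            self.location[code] = bin_id
```

Rejecting a pick that would drive stock negative surfaces a counting or scanning error immediately instead of letting it propagate into reports.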

Q 26. How do you manage your time effectively when handling multiple data entry tasks?

Managing multiple data entry tasks effectively requires a structured approach. I prioritize tasks based on urgency and importance using methods like the Eisenhower Matrix (urgent/important). I break down large tasks into smaller, manageable chunks. I set realistic deadlines for each task and use time-blocking techniques to allocate specific time slots for focused work. Regular breaks are also crucial to maintain focus and avoid burnout. I utilize tools like task management software to keep track of deadlines and progress. Finally, I proactively communicate with colleagues to ensure coordination and avoid potential bottlenecks. This proactive and organized approach ensures that I consistently meet deadlines and maintain high accuracy, even under pressure.

Q 27. What is your experience working with different barcode symbologies?

My experience encompasses various barcode symbologies, each with its own strengths and weaknesses. I'm proficient in working with common symbologies such as Code 128 (high density, full ASCII character set), UPC-A (used in retail), EAN-13 (used in retail internationally), and Code 39 (self-checking, uppercase alphanumeric, widely used in industry and asset tracking). Understanding the nuances of each symbology is critical, as the data structure and encoding methods differ. For instance, Code 128 can encode all 128 ASCII characters and packs data more densely than Code 39, making it suitable for applications that need more information in limited label space. I've also worked with less common symbologies based on project requirements. This experience ensures I can select the optimal symbology for any given application and troubleshoot any reading or scanning issues that might arise due to the particular characteristics of the chosen symbology.
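Character sets are often the first filter when choosing a symbology. The sketch below is a rough rule of thumb, not a complete selection procedure: it assumes the 43-character base set of Code 39 (digits, uppercase letters, space, and `- . $ / + %`), ignores the Full ASCII Code 39 extension, and ignores label size and industry requirements. The function name is hypothetical.

```python
import string

# Base Code 39 data characters ('*' is reserved as the start/stop character).
CODE39_CHARS = set(string.digits + string.ascii_uppercase + " -.$/+%")


def suggest_symbology(data: str) -> str:
    """Suggest a symbology family based only on the characters in `data`."""
    if not data:
        raise ValueError("nothing to encode")
    if set(data) <= CODE39_CHARS:
        return "Code 39 (or Code 128 for a denser label)"
    if all(ord(ch) < 128 for ch in data):
        return "Code 128"
    return "2D symbology such as QR Code"
```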

Q 28. Explain your understanding of data normalization and its significance.

Data normalization is the process of organizing data to reduce redundancy and improve data integrity. Think of it like organizing a cluttered closet: instead of having multiple copies of the same item scattered around, you keep one of each in its proper place. This is achieved by splitting data into two or more related tables and defining relationships between them. Normalization reduces storage space and simplifies updates. It also improves consistency. Because each fact is stored in only one place, changing it once is enough, and no stale copies remain elsewhere to contradict it. For example, if a customer can have multiple addresses, normalization would involve creating separate tables for customers and addresses, linked by a customer ID. The customer's details are then stored once rather than repeated on every address row. This prevents duplication and ensures that an update to a customer's record is reflected consistently across the database. In barcoding and data entry, normalization is vital for maintaining the accuracy and consistency of product information, customer data, and inventory tracking. It underpins the reliability and maintainability of databases used with barcode systems.
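The customer/address example can be sketched with Python's built-in `sqlite3` module. The table and column names and the sample rows are hypothetical, chosen only to illustrate the two-table layout linked by a customer ID.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE addresses (
    address_id  INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    street      TEXT NOT NULL,
    city        TEXT NOT NULL
);
""")

# The customer is stored once; each address row refers to it by customer_id.
conn.execute("INSERT INTO customers (customer_id, name) VALUES (1, 'Acme Corp')")
conn.executemany(
    "INSERT INTO addresses (customer_id, street, city) VALUES (?, ?, ?)",
    [(1, "1 Main St", "Springfield"), (1, "99 Dock Rd", "Shelbyville")],
)

# One UPDATE changes the name for every address, because there is only one copy.
conn.execute("UPDATE customers SET name = 'Acme Corporation' WHERE customer_id = 1")

rows = conn.execute("""
    SELECT c.name, a.city
    FROM customers AS c
    JOIN addresses AS a ON a.customer_id = c.customer_id
    ORDER BY a.address_id
""").fetchall()
```

The join returns `('Acme Corporation', 'Springfield')` and `('Acme Corporation', 'Shelbyville')`. In a single flat table, the name would be repeated on each address row, so a missed row during an update would leave the data inconsistent.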

Key Topics to Learn for Barcoding and Data Entry Interview

- Barcode Symbologies: Understanding different barcode types (e.g., EAN, UPC, Code 128) and their applications in various industries. This includes knowing their structure and limitations.

- Data Entry Techniques: Mastering efficient keyboarding skills, data validation methods, and error detection/correction strategies. Consider speed and accuracy benchmarks.

- Data Integrity and Accuracy: Explore the importance of maintaining data accuracy and the consequences of errors. Discuss quality control measures and best practices.

- Barcode Scanning Equipment: Familiarity with different types of barcode scanners (handheld, presentation, fixed-mount) and their operational characteristics.

- Data Entry Software and Systems: Understanding various data entry software applications and database management systems (DBMS) used in the industry. Be prepared to discuss your experience with specific systems if you have any.

- Data Cleaning and Transformation: Learn about techniques for cleaning and transforming raw data to ensure consistency and accuracy before entry. This includes handling missing data and outliers.

- Workflow Processes: Understanding the typical workflow involved in barcoding and data entry processes, including receiving, processing, and verifying data.

- Troubleshooting and Problem-Solving: Develop your ability to identify and resolve common issues encountered during barcoding and data entry, such as scanner malfunctions or data inconsistencies.

- Data Security and Confidentiality: Discuss the importance of data security and adhering to best practices for protecting sensitive information during data entry.
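Several of these topics (data validation, error detection, and cleaning) come together in check-digit verification, a common first line of defense against mistyped or misread product codes. The Python sketch below uses hypothetical helper names. It strips the spaces and hyphens often found in printed codes, then validates the final check digit of a UPC-A (12-digit) or EAN-13 (13-digit) code using the standard GS1 weighting of alternating 3 and 1 from the right.

```python
def clean_barcode(raw: str) -> str:
    """Remove spaces and hyphens that often appear in printed or typed codes."""
    return "".join(ch for ch in raw if ch not in " -")


def gtin_check_digit_ok(code: str) -> bool:
    """Validate the check digit of a UPC-A (12 digits) or EAN-13 (13 digits) code."""
    if len(code) not in (12, 13) or not (code.isascii() and code.isdigit()):
        return False
    digits = [int(d) for d in code]
    body, check = digits[:-1], digits[-1]
    # Weights alternate 3, 1, 3, ... starting from the rightmost body digit.
    total = sum(d * (3 if i % 2 == 0 else 1) for i, d in enumerate(reversed(body)))
    return (10 - total % 10) % 10 == check
```

For example, `clean_barcode("0 36000 29145 2")` yields `"036000291452"`, which passes the check, as does the EAN-13 code `"4006381333931"`. The sample UPC shown under Q 1, 0 70475 34567 6, would be flagged: its first eleven digits produce a check digit of 8, not 6. A check digit catches all single-digit errors but not every transposition, so it complements rather than replaces other validation.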

Next Steps

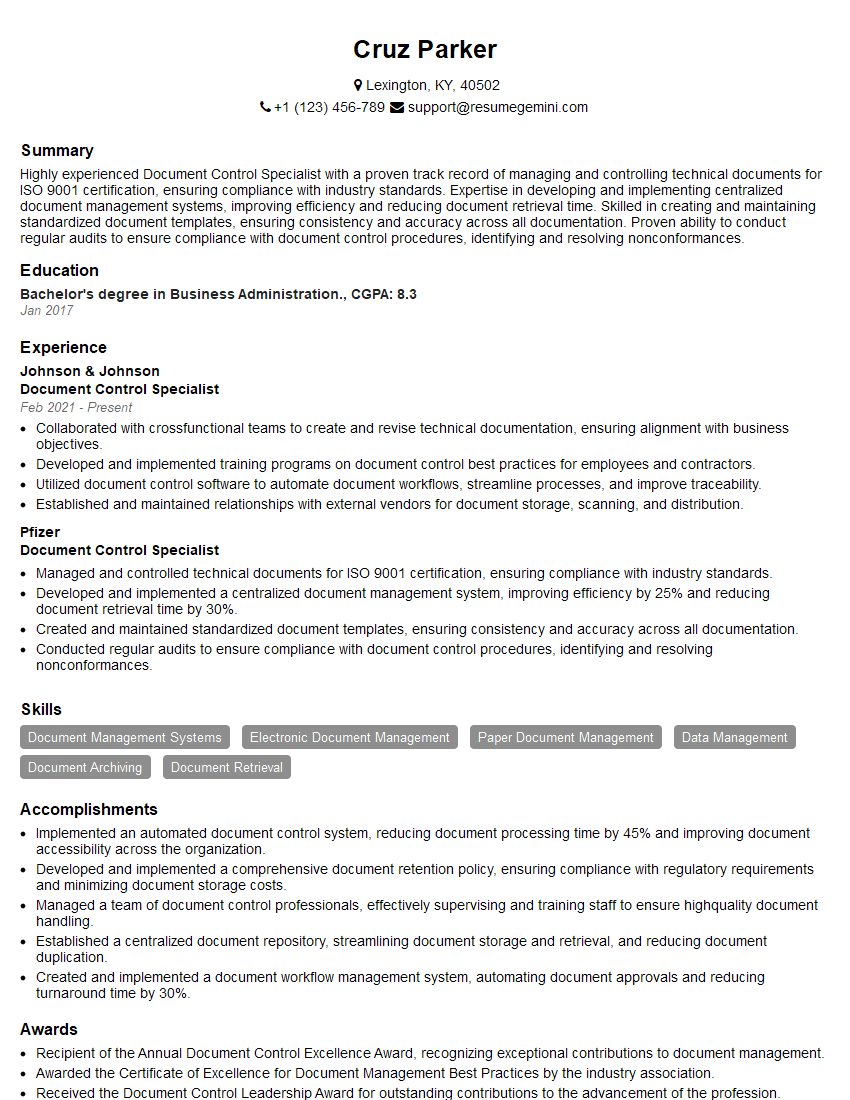

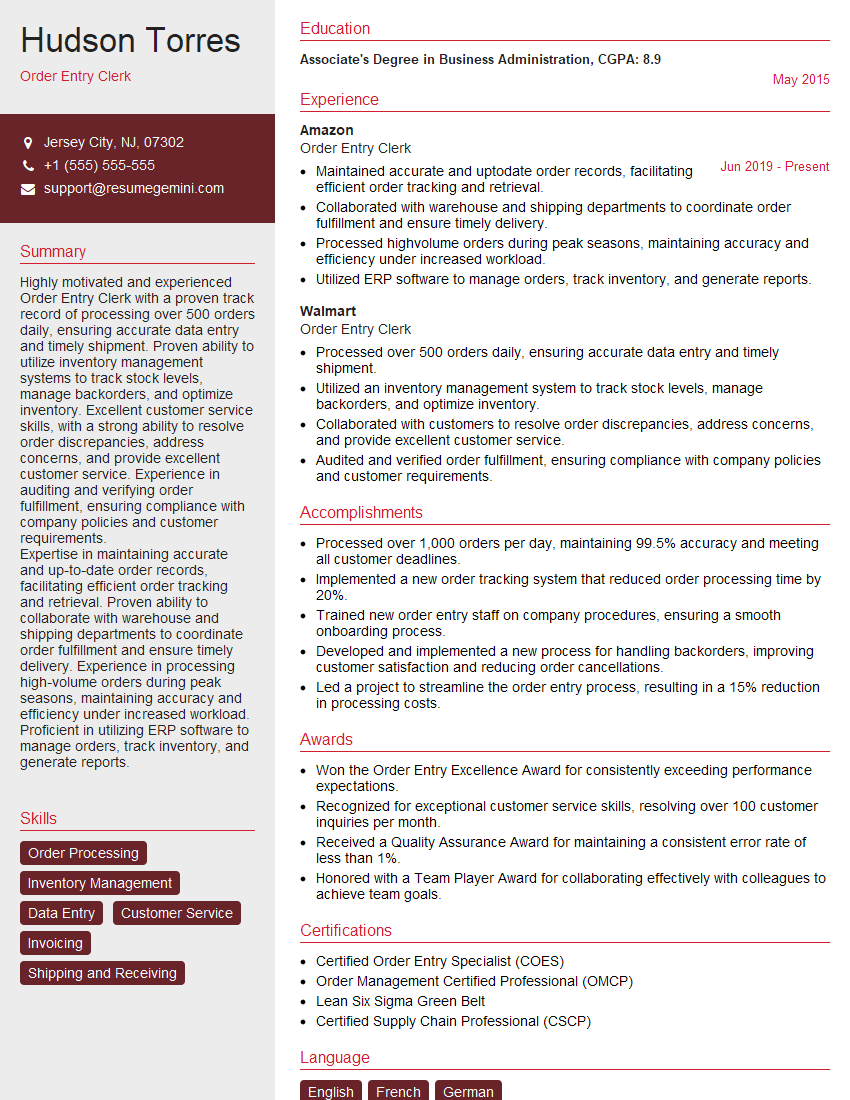

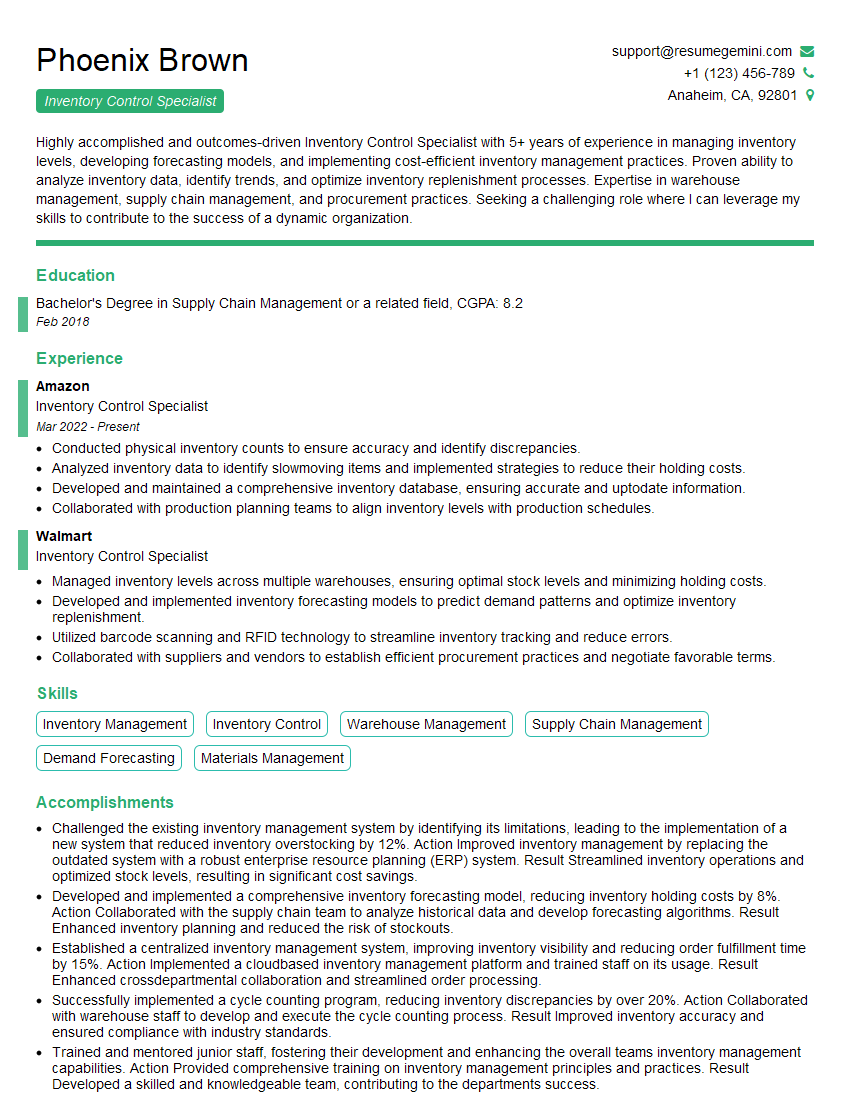

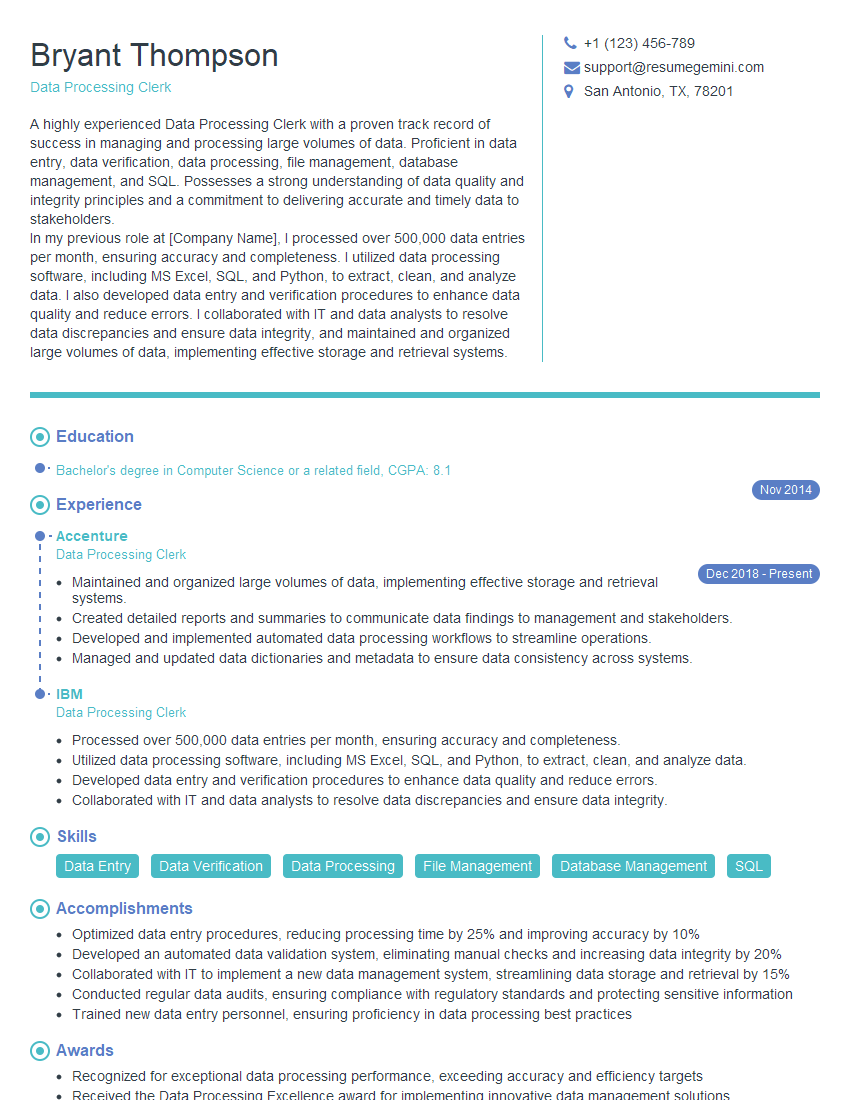

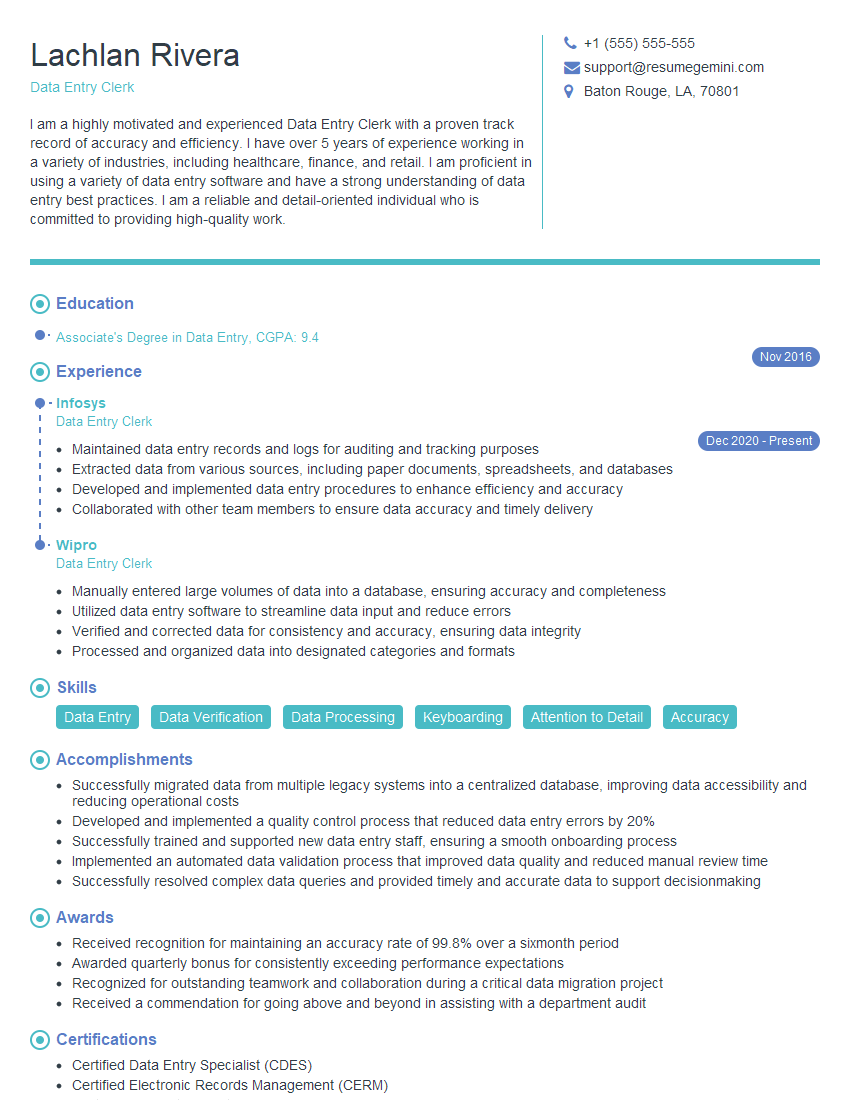

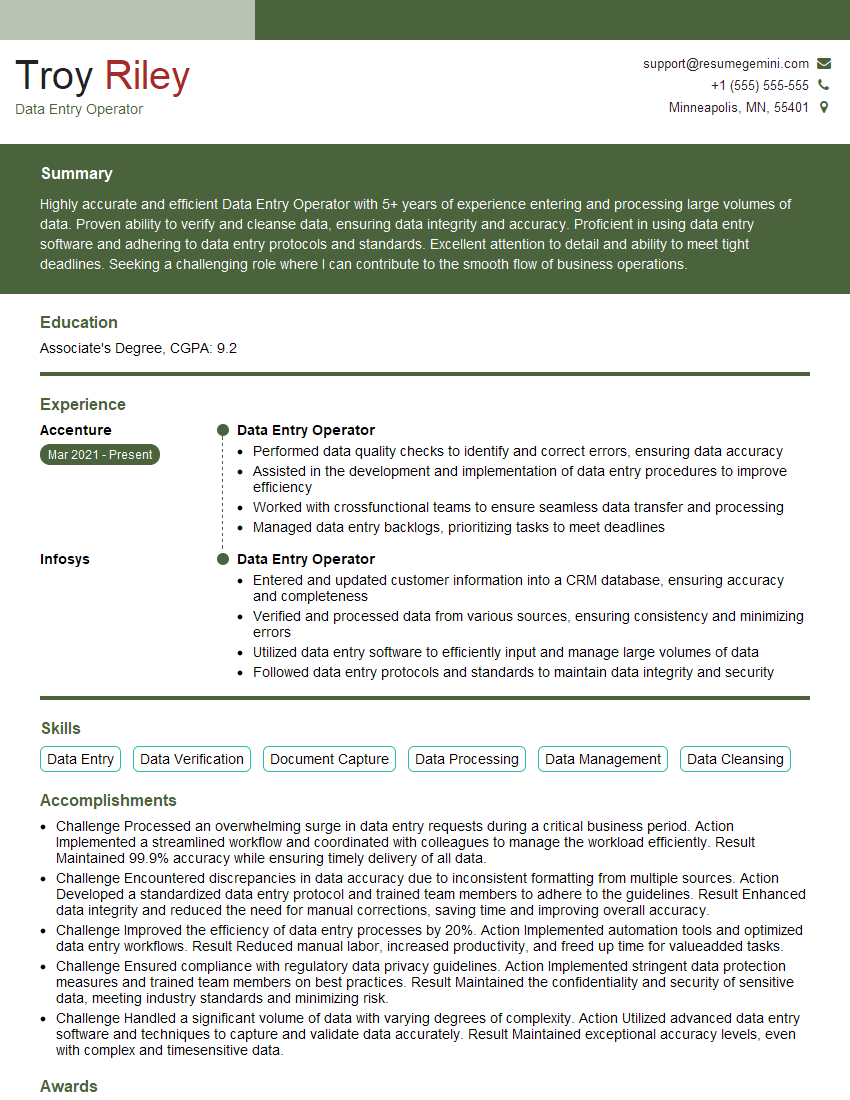

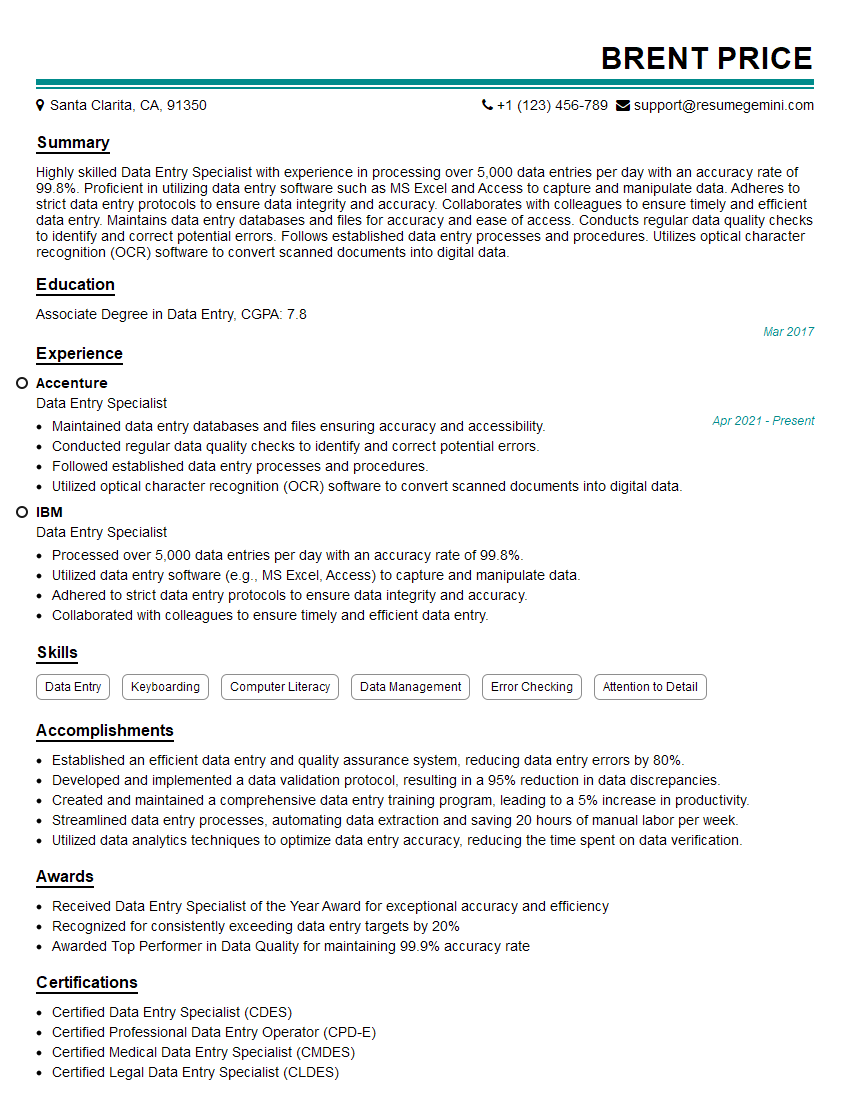

Mastering barcoding and data entry opens doors to diverse roles in logistics, healthcare, manufacturing, and retail. These skills are used across all of these industries and can support a stable career path. To maximize your job prospects, invest time in crafting an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource that can significantly enhance your resume-building experience. They provide examples of resumes tailored to the Barcoding and Data Entry field to help you present your qualifications in the best possible light.
