Unlock your full potential by mastering the most common Mapping and GPS Systems interview questions. This blog offers a deep dive into the critical topics, ensuring you’re not only prepared to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Mapping and GPS Systems Interview

Q 1. Explain the difference between latitude and longitude.

Latitude and longitude are coordinates that specify the precise location of a point on the Earth’s surface. Think of it like a grid system wrapped around a sphere.

Latitude measures the angular distance north or south of the Equator. The Equator is 0° latitude, the North Pole is 90° North, and the South Pole is 90° South. Latitude lines run parallel to the Equator and are called parallels.

Longitude measures the angular distance east or west of the Prime Meridian, which passes through Greenwich, England. The Prime Meridian is 0° longitude. Longitude lines run from the North Pole to the South Pole and are called meridians. Longitude ranges from 0° to 180° East and from 0° to 180° West.

For example, a location with coordinates 34°N, 118°W is located approximately 34 degrees north of the Equator and 118 degrees west of the Prime Meridian – this is near Los Angeles.
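Software usually stores such coordinates as signed decimal degrees rather than as degrees plus a hemisphere letter. The following is a minimal Python sketch of the conversion. It assumes the common convention that north and east are positive and south and west are negative, and the function name is illustrative:

```python
def dms_to_decimal(degrees, minutes=0.0, seconds=0.0, hemisphere="N"):
    """Convert degrees/minutes/seconds plus a hemisphere letter to signed decimal degrees.

    Convention assumed here: north and east are positive, south and west negative.
    """
    hemisphere = hemisphere.upper()
    if hemisphere not in ("N", "S", "E", "W"):
        raise ValueError(f"unknown hemisphere: {hemisphere!r}")
    if not (0 <= minutes < 60 and 0 <= seconds < 60):
        raise ValueError("minutes and seconds must be in [0, 60)")
    value = abs(degrees) + minutes / 60.0 + seconds / 3600.0
    limit = 90.0 if hemisphere in ("N", "S") else 180.0
    if value > limit:
        raise ValueError(f"{value} exceeds the {limit} degree limit")
    return -value if hemisphere in ("S", "W") else value


# The rounded Los Angeles coordinates from the example above.
lat = dms_to_decimal(34, hemisphere="N")   # 34.0
lon = dms_to_decimal(118, hemisphere="W")  # -118.0
```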

Q 2. Describe the various map projections and their applications.

Map projections are ways of representing the three-dimensional surface of the Earth on a two-dimensional map. Because it’s impossible to perfectly flatten a sphere without distortion, different projections emphasize different properties (like area, shape, distance, or direction).

- Mercator Projection: A conformal projection that preserves angles and local shapes but distorts area severely toward the poles. It’s commonly used for navigation because lines of constant compass bearing (rhumb lines) appear as straight lines.

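- Lambert Conformal Conic Projection: A conformal projection that keeps distortion low along one or two standard parallels. This makes it well suited to mid-latitude regions with a predominantly east-west extent. For regions that extend mainly north-south, a transverse Mercator projection is the usual choice. It is widely used for aeronautical charts and for State Plane zones that are longer east-west.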

- Albers Equal-Area Conic Projection: Preserves area accurately, making it suitable for thematic mapping where the relative size of features is important (e.g., population density maps).

- Robinson Projection: A compromise projection that attempts to balance distortions in shape, area, distance, and direction. Often used for world maps because it shows the entire globe with relatively little distortion.

The choice of projection depends entirely on the map’s purpose. A navigator needs accuracy of bearing, while a cartographer displaying population distribution needs accurate area representation.

Q 3. What are the different types of GPS errors and how can they be mitigated?

GPS errors can be categorized into several types:

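- Atmospheric Errors: The ionosphere and troposphere delay the signals, which produces timing (range) errors. Ionospheric delay depends on signal frequency, so dual-frequency receivers can remove most of it. Single-frequency receivers rely on broadcast correction models, and tropospheric delay is also corrected with models.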

- Multipath Errors: Signals bounce off buildings or other surfaces before reaching the receiver, causing delayed and distorted signals. This can be reduced using advanced signal processing techniques and antenna design.

- Receiver Noise: Electronic noise in the receiver can affect signal reception. High-quality receivers with signal processing algorithms help minimize this.

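- Satellite Geometry (DOP): Geometry is not an error source in itself, but it scales all the other errors. When the visible satellites are clustered in one part of the sky, the dilution of precision is high and accuracy drops. DOP is reported as several values, with GDOP as the overall measure. Tracking more satellites that are well spread across the sky lowers DOP and improves accuracy.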

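- Satellite Clock Errors: Even the atomic clocks on board the satellites drift slightly. A clock error of 1 nanosecond corresponds to about 30 cm of range error. Ground control stations monitor this drift and upload clock-correction parameters, which are broadcast in the navigation message for receivers to apply.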

Mitigation strategies often involve using multiple receivers, differential GPS (DGPS), or real-time kinematic (RTK) GPS, which use additional data to correct errors. Sophisticated algorithms and error models are also employed to minimize the impact of these errors.
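The effect of satellite geometry can be quantified. DOP values depend only on the directions to the satellites. If H is the design matrix built from the unit line-of-sight vectors plus a receiver-clock column, then Q = (HᵀH)⁻¹, and each DOP is the square root of a sum of diagonal entries of Q.

The following is a minimal, dependency-free Python sketch. It assumes satellites are given as azimuth/elevation pairs in degrees. The helper names and the two sky layouts are hypothetical. In practice, a library routine such as numpy.linalg.inv would replace the hand-written inverse:

```python
import math


def invert(matrix):
    """Invert a small square matrix with Gauss-Jordan elimination and partial pivoting."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular matrix: degenerate satellite geometry")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * c for a, c in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def dop(sky):
    """DOP values from a list of (azimuth_deg, elevation_deg) for the tracked satellites."""
    if len(sky) < 4:
        raise ValueError("need at least four satellites")
    H = []
    for az_deg, el_deg in sky:
        az, el = math.radians(az_deg), math.radians(el_deg)
        # Unit vector from receiver to satellite in local east/north/up axes.
        e, n, u = math.cos(el) * math.sin(az), math.cos(el) * math.cos(az), math.sin(el)
        H.append([-e, -n, -u, 1.0])  # last column: receiver clock term
    HtH = [[sum(row[i] * row[j] for row in H) for j in range(4)] for i in range(4)]
    q = invert(HtH)
    return {
        "GDOP": math.sqrt(q[0][0] + q[1][1] + q[2][2] + q[3][3]),
        "PDOP": math.sqrt(q[0][0] + q[1][1] + q[2][2]),
        "HDOP": math.sqrt(q[0][0] + q[1][1]),
        "VDOP": math.sqrt(q[2][2]),
        "TDOP": math.sqrt(q[3][3]),
    }


spread = [(0, 90), (0, 20), (120, 20), (240, 20)]     # one overhead, three low and spread out
clustered = [(0, 45), (30, 60), (330, 60), (0, 80)]   # all in the northern sky
```

Comparing dop(spread) with dop(clustered) shows the clustered layout producing a much larger GDOP, even though both use four satellites.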

Q 4. How does GPS triangulation work?

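GPS positioning uses the signals from multiple GPS satellites to pinpoint a location. Strictly speaking, it relies on trilateration (distance measurements) rather than triangulation (angle measurements). Each satellite transmits its orbital data (ephemeris), from which its precise position can be computed, together with the time the signal was sent. The receiver measures when each signal arrives, and by multiplying the travel time by the speed of light it calculates the distance to each satellite. Because the receiver's clock is far less accurate than the satellites' atomic clocks, these distances all contain the same clock error and are called pseudoranges.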

With signals from at least four satellites, the receiver can solve a system of equations for four unknowns: its three-dimensional position (latitude, longitude, and altitude) plus its own clock bias. Geometrically, each measured distance defines a sphere centered on a satellite, and the receiver lies where the spheres intersect. Three spheres would be enough with a perfect receiver clock; the fourth measurement is what allows the clock error to be solved for.
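The pseudorange model can be written as ρᵢ = ‖sᵢ − r‖ + b. Here sᵢ is the satellite position, r is the receiver position, and b is the receiver clock bias expressed in meters.

The following is a minimal, dependency-free Python sketch of the usual iterative least-squares (Gauss-Newton) solution. It assumes Earth-centered Cartesian coordinates in meters, and it ignores atmospheric delays, satellite clock errors, and Earth rotation. The function names and the synthetic satellite geometry are hypothetical:

```python
import math


def solve_linear(A, b):
    """Solve the small dense system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system: degenerate satellite geometry")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def solve_position(sats, pseudoranges, guess=(0.0, 0.0, 0.0, 0.0), max_iter=20, tol=1e-8):
    """Estimate receiver position (x, y, z) and clock bias b, all in meters.

    Model: rho_i = |s_i - r| + b, solved by Gauss-Newton least squares.
    The default guess is the Earth's center, which typically converges in a few steps.
    """
    if len(sats) < 4:
        raise ValueError("need at least four satellites: three coordinates plus clock bias")
    x, y, z, b = guess
    for _ in range(max_iter):
        J, res = [], []
        for s, rho in zip(sats, pseudoranges):
            d = math.dist(s, (x, y, z))
            res.append(rho - (d + b))  # measured minus predicted pseudorange
            # Partial derivatives of the predicted pseudorange w.r.t. x, y, z, b.
            J.append([(x - s[0]) / d, (y - s[1]) / d, (z - s[2]) / d, 1.0])
        # Normal equations: (J^T J) delta = J^T res
        JtJ = [[sum(row[i] * row[j] for row in J) for j in range(4)] for i in range(4)]
        Jtr = [sum(row[i] * r for row, r in zip(J, res)) for i in range(4)]
        dx, dy, dz, db = solve_linear(JtJ, Jtr)
        x, y, z, b = x + dx, y + dy, z + dz, b + db
        if math.sqrt(dx * dx + dy * dy + dz * dz + db * db) < tol:
            break
    return (x, y, z), b


# Synthetic check: five satellites, a receiver on the surface, a 3 km clock bias.
sats = [(0.0, 0.0, 26_560_000.0), (20_000_000.0, 0.0, 17_000_000.0),
        (-10_000_000.0, 17_320_000.0, 17_000_000.0),
        (-10_000_000.0, -17_320_000.0, 17_000_000.0), (0.0, 20_000_000.0, 10_000_000.0)]
true_pos, true_bias = (0.0, 0.0, 6_371_000.0), 3_000.0
rho = [math.dist(s, true_pos) + true_bias for s in sats]
pos, bias = solve_position(sats, rho)
```

With more than four satellites, the same code computes a least-squares fit. This is why receivers use every satellite they track rather than just four.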

Q 5. Explain the concept of georeferencing.

Georeferencing is the process of assigning real-world coordinates (latitude and longitude, or coordinates in a projected system) to points on an image or map. This links the data to a real-world location. Think of it as giving a digital image a ‘geo-address’.

For example, a scanned historical map might be georeferenced by identifying common features (roads, landmarks) that have known coordinates in a modern GIS database. This allows you to overlay the historical map onto a modern map, enabling spatial analysis and comparisons across time.

Georeferencing is crucial for integrating diverse datasets and making them spatially meaningful. It’s commonly used in tasks such as creating maps from aerial photography, aligning satellite imagery, and integrating various geographic datasets.
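For a scanned map, the simplest georeferencing transform is a six-parameter affine fit. It maps pixel (column, row) positions to map coordinates and is estimated by least squares from ground control points (GCPs). GIS georeferencing tools typically also offer higher-order polynomial and spline transforms.

The following is a minimal Python sketch. It assumes at least three non-collinear GCPs and a projected target coordinate system. The function names and the sample coordinates are hypothetical:

```python
def _det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(A, rhs):
    """Solve a 3x3 linear system with Cramer's rule (adequate for a system this small)."""
    d = _det3(A)
    if abs(d) < 1e-9:
        raise ValueError("control points are collinear or duplicated")
    solution = []
    for k in range(3):
        Ak = [row[:] for row in A]
        for i in range(3):
            Ak[i][k] = rhs[i]
        solution.append(_det3(Ak) / d)
    return solution


def fit_affine(gcps):
    """Least-squares affine fit from ground control points.

    gcps: list of ((col, row), (x, y)) pairs. Returns coefficient triples (a, b) with
    x = a0 + a1*col + a2*row and y = b0 + b1*col + b2*row.
    """
    if len(gcps) < 3:
        raise ValueError("need at least three ground control points")
    design = [(1.0, float(c), float(r)) for (c, r), _ in gcps]
    xs = [float(x) for _, (x, _y) in gcps]
    ys = [float(y) for _, (_x, y) in gcps]
    # Normal equations (A^T A) coeffs = A^T target, solved once for x and once for y.
    AtA = [[sum(d[i] * d[j] for d in design) for j in range(3)] for i in range(3)]
    a = _solve3(AtA, [sum(d[i] * x for d, x in zip(design, xs)) for i in range(3)])
    b = _solve3(AtA, [sum(d[i] * y for d, y in zip(design, ys)) for i in range(3)])
    return a, b


def pixel_to_world(coeffs, col, row):
    """Apply the fitted transform to one pixel position."""
    a, b = coeffs
    return a[0] + a[1] * col + a[2] * row, b[0] + b[1] * col + b[2] * row


# Hypothetical GCPs for a north-up scan with 2 m pixels.
gcps = [((0, 0), (500_000, 4_100_000)), ((1000, 0), (502_000, 4_100_000)),
        ((0, 800), (500_000, 4_098_400)), ((1000, 800), (502_000, 4_098_400))]
coeffs = fit_affine(gcps)
```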

Q 6. What are the key differences between raster and vector data?

Raster and vector data are two fundamental ways of representing geographic information in GIS.

- Raster data represents geographic features as a grid of cells (pixels), each containing a value representing a particular characteristic. Think of it like a digital photograph; each pixel has a color value. Examples include satellite imagery, aerial photographs, and scanned maps.

- Vector data represents geographic features as points, lines, and polygons. Each feature has a precise location and attributes (e.g., a point representing a well, a line representing a road, and a polygon representing a lake). Vector data is best for representing discrete features with sharp boundaries.

The key difference lies in how they represent spatial information: raster is cell-based and continuous, while vector is object-based and discrete. The choice between raster and vector depends on the application and the nature of the geographic data. For example, analyzing land cover changes using satellite images would require raster data, while managing a road network would use vector data.

Q 7. Describe your experience with GIS software (e.g., ArcGIS, QGIS).

I have extensive experience with both ArcGIS and QGIS, having utilized them for numerous projects involving spatial analysis and cartography. In ArcGIS, I’ve worked extensively with its geoprocessing tools for tasks like creating buffers, overlaying datasets, and performing spatial joins. I am proficient in using its various extensions for specialized analysis, such as 3D Analyst and Spatial Analyst. I’ve also used ArcGIS Pro for creating interactive maps and web applications.

My experience with QGIS focuses on its open-source capabilities, which allows for flexibility and customization. I’ve used QGIS for processing large raster datasets, creating custom map layouts, and conducting geospatial analysis. For example, I’ve used it to process satellite imagery for land-use change analysis and create custom cartographic visualizations for publication.

Beyond basic functionality, I have experience utilizing both platforms for advanced tasks such as creating geodatabases, scripting using Python, and implementing spatial statistical methods.

Q 8. How do you handle spatial data inconsistencies?

Handling spatial data inconsistencies is crucial for the accuracy and reliability of any GIS project. Inconsistencies can arise from various sources, including errors in data collection, different data projections, or variations in data formats. My approach involves a multi-step process:

- Data Cleaning and Preprocessing: This initial step involves identifying and correcting obvious errors like duplicate entries, missing values, and outliers. Tools like ArcGIS Pro or QGIS offer powerful functionalities for this, including editing tools and spatial queries.

- Data Transformation and Standardization: Next, I ensure all data is in a consistent projection and coordinate system. Tools like ogr2ogr (part of GDAL) can be used for format conversion and coordinate system transformations. For example, reprojecting data from a UTM zone to geographic WGS84 coordinates is often necessary to ensure compatibility.

- Spatial Consistency Checks: I employ spatial analysis techniques like topology checks (e.g., verifying that lines connect properly at vertices and that polygons don’t overlap) to detect inconsistencies. Software packages usually offer built-in topology rules that automatically flag errors.

- Data Integration and Reconciliation: When dealing with multiple datasets, integrating them requires addressing discrepancies. This might involve using spatial joins to link data based on location, or using fuzzy matching techniques for slightly different place names. The choice of method depends on the nature of the data and the level of error tolerance.

- Validation and Quality Control: Finally, I always perform thorough quality checks to ensure the final data is consistent and accurate. This might involve visual inspection, statistical analysis, or comparison against independent data sources.
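The fuzzy name-matching step can be as simple as a normalized string-similarity score. The following is a minimal Python sketch that uses the standard library's difflib.SequenceMatcher. The 0.85 threshold and the helper names are illustrative assumptions; a real pipeline would tune the threshold and send borderline scores for manual review:

```python
from difflib import SequenceMatcher


def normalize(name):
    """Lower-case, replace punctuation with spaces and collapse whitespace."""
    cleaned = "".join(ch if ch.isalnum() else " " for ch in name.lower())
    return " ".join(cleaned.split())


def best_match(name, candidates, threshold=0.85):
    """Return (candidate, score) for the most similar candidate, or (None, score) below threshold."""
    target = normalize(name)
    best, best_score = None, 0.0
    for cand in candidates:
        score = SequenceMatcher(None, target, normalize(cand)).ratio()
        if score > best_score:
            best, best_score = cand, score
    return (best, best_score) if best_score >= threshold else (None, best_score)


# A misspelled gazetteer entry still links to the right record.
match, score = best_match("Springfeild", ["Springfield", "Shelbyville"])
```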

For instance, I once worked on a project where cadastral data from multiple sources had inconsistencies in property boundaries. By using topology rules and manual editing in ArcGIS, I was able to identify and resolve overlaps and gaps, ensuring the final map accurately reflected the land ownership.

Q 9. Explain your understanding of spatial analysis techniques.

Spatial analysis techniques are the heart of GIS, allowing us to extract meaningful information from geographic data. They range from simple measurements to complex modeling. My understanding encompasses several key areas:

- Buffering: Creating zones around features. For example, creating a 500-meter buffer around a hospital to assess its service area.

- Overlay Analysis: Combining multiple layers to identify spatial relationships. Intersection, union, and difference are common operations – imagine overlaying population density and flood risk maps to identify vulnerable areas.

- Network Analysis: Analyzing movement and connectivity on networks like roads or pipelines. This can be used for route optimization or emergency response planning.

- Proximity Analysis: Measuring distances between features. This helps in finding nearest neighbors, determining travel times, or assessing spatial accessibility.

- Spatial Interpolation: Estimating values at unsampled locations based on known values. Useful for creating continuous surfaces like elevation models or pollution concentrations.

- Geostatistics: Using statistical methods to analyze spatially correlated data, accounting for spatial autocorrelation. Commonly used in environmental modeling.

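PostGIS example calculating the distance between two geometries. For the geometry type, ST_Distance returns the result in the units of the data’s coordinate reference system, which means degrees for unprojected data; casting to geography returns meters.

SELECT ST_Distance(geom1, geom2) FROM my_table;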
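Outside a database, proximity calculations on latitude/longitude data are often approximated with the haversine great-circle formula on a spherical Earth. The following is a minimal Python sketch of a nearest-neighbor lookup. It assumes a mean Earth radius of 6,371,008.8 m, which is accurate to within a fraction of a percent compared with ellipsoidal distances. The function names and sample features are hypothetical:

```python
import math

EARTH_RADIUS_M = 6_371_008.8  # mean Earth radius: a spherical approximation


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))  # clamp guards rounding


def nearest(origin, features):
    """Return (name, distance_m) of the feature closest to origin=(lat, lon)."""
    return min(((name, haversine_m(*origin, lat, lon)) for name, lat, lon in features),
               key=lambda item: item[1])


hospitals = [("A", 34.06, -118.25), ("B", 34.5, -118.0)]
closest = nearest((34.05, -118.25), hospitals)
```

For large feature sets, this brute-force scan is exactly what a spatial index avoids.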

In a recent project, I used spatial interpolation to create a continuous surface of rainfall intensity across a region using sparse rainfall gauge data. This allowed for better flood risk assessment compared to relying solely on point measurements.
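Inverse distance weighting (IDW) is one of the simplest interpolation methods. Each estimate is a weighted average of the samples, with weights 1/dᵖ. Geostatistical methods such as kriging instead derive the weights from a fitted variogram.

The following is a minimal Python sketch of IDW. It assumes planar (projected) coordinates and the common default power p = 2. The function name and gauge values are hypothetical:

```python
import math


def idw(samples, x, y, power=2.0):
    """Inverse distance weighted estimate at (x, y) from (xi, yi, value) samples."""
    num = den = 0.0
    for xi, yi, value in samples:
        d = math.hypot(x - xi, y - yi)
        if d == 0.0:
            return value  # exactly on a sample point: return the measured value
        w = 1.0 / d ** power
        num += w * value
        den += w
    return num / den


# Two hypothetical rain gauges (x, y in meters, rainfall in mm).
gauges = [(0.0, 0.0, 10.0), (10.0, 0.0, 20.0)]
midpoint_estimate = idw(gauges, 5.0, 0.0)  # equidistant, so the plain average
```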

Q 10. What are some common applications of remote sensing in mapping?

Remote sensing plays a vital role in creating and updating maps. Data acquired from satellites and aerial platforms provides crucial information for a wide range of mapping applications:

- Land Cover Classification: Identifying different land cover types (forests, urban areas, water bodies) using multispectral or hyperspectral imagery. Algorithms like supervised and unsupervised classification are used to interpret the spectral signatures.

- Elevation Modeling: Generating Digital Elevation Models (DEMs) from LiDAR or stereo aerial photography, essential for terrain analysis and 3D visualization.

- Change Detection: Monitoring changes over time, such as deforestation, urban sprawl, or glacial retreat, by comparing images taken at different dates.

- Environmental Monitoring: Assessing water quality, monitoring pollution, or detecting agricultural stress by analyzing spectral characteristics.

- Disaster Response: Providing rapid damage assessments after natural disasters like earthquakes or floods.
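The simplest change-detection technique is image differencing: subtract co-registered images from two dates and threshold the difference. The following is a minimal Python sketch on plain nested lists. It assumes the images are already co-registered and radiometrically comparable, for example the same vegetation index computed for both dates. The threshold is an illustrative parameter that would normally be chosen from the data:

```python
def change_mask(before, after, threshold):
    """Flag pixels whose absolute difference between the two dates exceeds threshold.

    before/after: equally sized 2-D lists (rows of pixel values).
    """
    if len(before) != len(after) or any(len(a) != len(b) for a, b in zip(before, after)):
        raise ValueError("images must have the same dimensions (co-register them first)")
    return [[abs(new - old) > threshold for old, new in zip(row_before, row_after)]
            for row_before, row_after in zip(before, after)]


# Hypothetical index values: one pixel drops sharply (e.g. cleared vegetation).
before = [[0.8, 0.8], [0.7, 0.2]]
after = [[0.8, 0.3], [0.7, 0.2]]
mask = change_mask(before, after, threshold=0.2)
```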

For instance, in a forestry project, we utilized satellite imagery to map forest cover, assess deforestation rates, and monitor forest health over several decades, allowing for effective conservation planning.

Q 11. Describe your experience working with different coordinate systems.

Experience with various coordinate systems is fundamental. Understanding their differences is crucial for data accuracy and integration. I have extensive experience with:

- Geographic Coordinate Systems (GCS): Latitude and longitude based, using a spherical or ellipsoidal model of the Earth (e.g., WGS84).

- Projected Coordinate Systems (PCS): Transforming GCS coordinates onto a flat plane, using different map projections (e.g., UTM, State Plane). Each projection distorts the Earth’s surface differently, so the choice depends on the application and area.

- Local Coordinate Systems: Custom coordinate systems used for specific projects or areas, often based on a local datum.
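Choosing a suitable UTM zone is a frequent first step when reprojecting WGS84 data for measurement. The following is a minimal Python sketch of the standard 6° zone formula and the matching EPSG codes, which are 326xx for the northern hemisphere and 327xx for the southern. It deliberately ignores the Norway and Svalbard zone exceptions, and the function names are illustrative:

```python
import math


def utm_zone(lat, lon):
    """Return the standard UTM zone (e.g. '11N') for a WGS84 latitude/longitude.

    Ignores the special zone exceptions around Norway and Svalbard.
    """
    if not -80.0 <= lat <= 84.0:
        raise ValueError("UTM covers 80°S to 84°N; use UPS near the poles")
    if not -180.0 <= lon <= 180.0:
        raise ValueError("longitude out of range")
    zone = int(math.floor((lon + 180.0) / 6.0)) % 60 + 1  # 6° wide zones, numbered 1-60
    return f"{zone}{'N' if lat >= 0 else 'S'}"


def utm_epsg(lat, lon):
    """EPSG code for the WGS 84 / UTM zone: 326xx north, 327xx south."""
    zone = int(utm_zone(lat, lon)[:-1])
    return (32600 if lat >= 0 else 32700) + zone


la_zone = utm_zone(34.0, -118.0)  # Los Angeles
```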

I’m proficient in using GIS software to reproject data and handle coordinate transformations. Misunderstanding projections can lead to significant errors in distance, area, and shape calculations, so accuracy in projection management is paramount. For example, I successfully resolved a major discrepancy in a project where data from different sources were using different datums and projections. I used a GIS to reproject all data to a common projection before proceeding with the spatial analysis.

Q 12. How familiar are you with spatial databases (e.g., PostGIS, Oracle Spatial)?

I’m very familiar with spatial databases, particularly PostGIS and Oracle Spatial. They are essential for managing and querying large geospatial datasets efficiently. My expertise includes:

- Data Modeling: Designing database schemas to efficiently store and manage spatial data, including geometry types (points, lines, polygons).

- Spatial Queries: Using SQL extensions like PostGIS’s ST_Contains, ST_Intersects, and ST_Distance functions to retrieve data based on spatial relationships.

- Spatial Indexing: Implementing spatial indexes (e.g., R-trees) to optimize query performance on large datasets.

- Data Management: Implementing procedures for data loading, updating, and backup.

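PostGIS example of a spatial query. The query polygon must use the same SRID as geom, and ST_GeomFromText accepts the SRID as an optional second argument.

SELECT * FROM my_table WHERE ST_Intersects(geom, ST_GeomFromText('POLYGON(...)'));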
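Spatial databases answer queries like this in two phases. First, the spatial index returns candidate rows whose bounding boxes overlap the query shape. Then only those candidates go through the exact geometry test.

The following is a minimal, pure-Python sketch of that idea for points and a single polygon. A plain bounding-box filter stands in for a real R-tree, and the exact test is the ray-casting (even-odd) point-in-polygon check. The data and function names are hypothetical, and points lying exactly on the boundary are not handled consistently:

```python
def bbox(polygon):
    """Bounding box (min_x, min_y, max_x, max_y) of a list of (x, y) vertices."""
    xs, ys = zip(*polygon)
    return min(xs), min(ys), max(xs), max(ys)


def point_in_polygon(x, y, polygon):
    """Ray casting (even-odd rule); polygon is an unclosed list of (x, y) vertices."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray (so y1 != y2)
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def points_in_polygon(points, polygon):
    """Filter: cheap bounding-box test. Refine: exact point-in-polygon test."""
    minx, miny, maxx, maxy = bbox(polygon)
    candidates = [(pid, x, y) for pid, x, y in points
                  if minx <= x <= maxx and miny <= y <= maxy]
    return [pid for pid, x, y in candidates if point_in_polygon(x, y, polygon)]


# An L-shaped parcel: "b" passes the bounding-box filter but lies in the notch.
parcel = [(0, 0), (4, 0), (4, 2), (2, 2), (2, 4), (0, 4)]
wells = [("a", 1, 1), ("b", 3, 3), ("c", 5, 5), ("d", 1, 3)]
hits = points_in_polygon(wells, parcel)
```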

In a previous project, we utilized PostGIS to manage a large-scale land-use database, enabling rapid querying of land parcels based on location, area, and other attributes, greatly improving efficiency compared to traditional file-based systems.

Q 13. How do you ensure data accuracy and integrity in a GIS project?

Ensuring data accuracy and integrity is critical. My approach involves a combination of best practices throughout the project lifecycle:

- Data Source Evaluation: Carefully selecting reliable data sources and assessing their accuracy and completeness.

- Metadata Management: Thoroughly documenting data sources, attributes, projections, and any known limitations.

- Data Validation: Performing checks for inconsistencies, errors, and outliers at each stage of data processing.

- Version Control: Using version control systems to track changes and allow for reverting to previous versions if necessary.

- Quality Assurance/Quality Control (QA/QC): Implementing systematic procedures to detect and correct errors.

- Data Backup and Recovery: Implementing robust backup and recovery mechanisms to prevent data loss.

Imagine working with survey data. A small error in a coordinate can propagate through the entire analysis. I would always perform rigorous checks, possibly through comparisons with existing data or ground truthing to ensure accuracy. Proper metadata ensures that others understand the limitations of the data, fostering trust and collaboration.
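Some of these checks are easy to automate before data enters the analysis. The following is a minimal Python sketch of rule-based coordinate validation. It assumes point records of (id, latitude, longitude) in decimal degrees. The specific rules are illustrative choices: range checks, the (0, 0) "null island" default, an expected project extent, and exact duplicates. The function and record names are hypothetical:

```python
def validate_points(records, bounds=None):
    """Return a list of (record_id, problem) for suspicious coordinate records.

    records: iterable of (record_id, lat, lon).
    bounds: optional (min_lat, min_lon, max_lat, max_lon) for the expected project extent.
    """
    problems, seen = [], {}
    for rid, lat, lon in records:
        if lat is None or lon is None:
            problems.append((rid, "missing coordinate"))
            continue
        if not (-90 <= lat <= 90 and -180 <= lon <= 180):
            problems.append((rid, "out of valid lat/lon range (swapped axes?)"))
            continue
        if lat == 0 and lon == 0:
            problems.append((rid, "null island (0, 0): likely a default value"))
        if bounds and not (bounds[0] <= lat <= bounds[2] and bounds[1] <= lon <= bounds[3]):
            problems.append((rid, "outside project extent"))
        key = (round(lat, 7), round(lon, 7))  # ~1 cm precision for duplicate detection
        if key in seen:
            problems.append((rid, f"duplicate of {seen[key]}"))
        else:
            seen[key] = rid
    return problems


survey = [("p1", 34.05, -118.25), ("p2", -118.25, 34.05), ("p3", 0.0, 0.0),
          ("p4", 34.05, -118.25), ("p5", None, 10.0)]
issues = validate_points(survey, bounds=(33.0, -119.0, 35.0, -117.0))
```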

Q 14. Describe your experience with data visualization techniques for mapping data.

Effective data visualization is key to communicating spatial information. I’m experienced with a wide range of techniques:

- Thematic Mapping: Using color, symbols, and patterns to represent data values across a map (choropleth, proportional symbol, dot density maps).

- Cartographic Design Principles: Applying principles of map design to create clear, accurate, and aesthetically pleasing maps.

- Interactive Mapping: Creating web maps using tools like Leaflet or ArcGIS JavaScript API, allowing users to explore data dynamically.

- 3D Visualization: Creating 3D models and visualizations for better understanding of complex spatial relationships.

- Data Dashboards: Creating interactive dashboards that combine maps with other data visualizations to provide comprehensive insights.

For instance, in a public health project, I used interactive maps to visualize the spread of a disease, allowing public health officials to easily identify hotspots and track trends over time. Effective use of color and symbology was crucial in conveying the severity of the outbreak.

Q 15. Explain your process for creating a thematic map.

Creating a thematic map involves visually representing geographical data to highlight a specific theme or pattern. It’s like telling a story with your data, where the map is the canvas and the data points are the characters. My process typically follows these steps:

- Data Acquisition and Preparation: This involves gathering the necessary data, which could be anything from census data to satellite imagery. Data cleaning and processing are crucial; ensuring accuracy and consistency is paramount. For example, I might need to project data from different sources into a common coordinate system.

- Choosing a Suitable Base Map: I select a base map that best suits my theme and audience. This could be a road map, a topographic map, or even a satellite image. The choice depends on the level of detail needed and the message I want to convey.

- Selecting an Appropriate Classification Method: This step determines how the data will be visually represented. Common methods include equal interval, quantile, natural breaks, and standard deviation. The best method depends on the data distribution and the message I want to communicate. For instance, for income data, a quantile classification might highlight differences between income brackets more effectively than an equal interval classification.

- Map Design and Symbology: This involves choosing colors, symbols, and labels to represent the data clearly and effectively. The goal is to create a visually appealing and informative map that’s easy to understand. Color choice is particularly important; using a color scheme that is both visually appealing and easy to distinguish between categories is crucial.

- Map Layout and Annotation: I add a title, legend, scale bar, north arrow, and any other necessary annotations to ensure the map is clear and complete. This is a crucial step to making sure the map tells its story accurately and completely.

- Quality Control and Review: Finally, I thoroughly check the map for accuracy and consistency before finalizing it. Peer review is also helpful to catch any potential errors or inconsistencies.
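The difference between classification methods is easy to see on skewed data such as incomes. The following is a minimal Python sketch of equal-interval and quantile class breaks for a plain list of numbers. The function names and sample values are hypothetical. Natural breaks (Jenks) is omitted because it requires an optimization step:

```python
import math


def equal_interval_breaks(values, k):
    """Upper bounds of k classes of equal width between min and max."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / k
    return [lo + width * i for i in range(1, k)] + [hi]


def quantile_breaks(values, k):
    """Upper bounds of k classes holding (roughly) equal numbers of observations."""
    ordered = sorted(values)
    n = len(ordered)
    return [ordered[min(n - 1, math.ceil(n * i / k) - 1)] for i in range(1, k + 1)]


def classify(value, breaks):
    """Index of the first class whose upper bound is >= value."""
    for i, upper in enumerate(breaks):
        if value <= upper:
            return i
    return len(breaks) - 1


incomes = [12, 15, 18, 20, 22, 25, 30, 45, 80, 200]  # hypothetical, in thousands
eq = equal_interval_breaks(incomes, 4)   # [59.0, 106.0, 153.0, 200]
q = quantile_breaks(incomes, 4)          # [18, 22, 45, 200]
```

With equal intervals, eight of the ten areas fall into the lowest class. Quantiles spread them across all four classes.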

For example, I once created a thematic map showing the distribution of air pollution levels in a city. I used air quality sensor data, overlaid it on a street map, and employed a color scale to represent different pollution levels. The map effectively highlighted pollution hotspots, allowing city planners to target interventions more effectively.

Q 16. How do you deal with large geospatial datasets?

Handling large geospatial datasets requires efficient strategies and tools. Think of it like organizing a massive library – you wouldn’t try to find a book by searching every shelf individually! My approach involves:

- Data Subsetting and Sampling: For initial analysis and visualization, I often work with subsets of the data, selecting relevant areas or features. This allows for faster processing and exploration.

- Geospatial Databases: I leverage powerful geospatial database systems like PostGIS (PostgreSQL extension) or ArcGIS geodatabases. These systems are optimized for storing, querying, and analyzing large datasets efficiently. They allow for complex spatial queries and analysis to be performed much faster than if you were trying to handle the data in a flat file format.

- Cloud Computing: Cloud platforms such as Amazon Web Services (AWS) or Google Cloud Platform (GCP) provide scalable computing resources for processing and analyzing large geospatial datasets. Their tools and services allow for parallel processing, significantly reducing processing time. This becomes especially important when working with massive raster datasets like satellite imagery.

- Data Compression and Formats: Choosing appropriate data formats and compression techniques is crucial for minimizing storage space and improving processing speeds. For example, using GeoTIFF with appropriate compression for raster data or optimized vector formats.

- Parallel Processing: Utilizing parallel processing techniques and tools allows you to break down the analysis into smaller chunks that can be processed simultaneously on multiple processors, leading to substantial time savings.

For example, when working with a nationwide land cover dataset, I used a cloud-based processing environment to perform change detection analysis by dividing the dataset into smaller tiles and processing them in parallel.
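The tile-and-parallelize pattern can be sketched in a few lines of Python. This is a minimal illustration that assumes the two rasters are in-memory nested lists of equal size; in practice, each worker would read only its own window from disk. It uses ThreadPoolExecutor to keep the example portable. CPU-bound pure-Python work would normally use ProcessPoolExecutor instead, which has the same map interface, to sidestep the global interpreter lock. All names are hypothetical:

```python
from concurrent.futures import ThreadPoolExecutor


def tile_windows(width, height, tile):
    """Yield (col_off, row_off, w, h) windows covering a width x height raster."""
    for row_off in range(0, height, tile):
        for col_off in range(0, width, tile):
            yield col_off, row_off, min(tile, width - col_off), min(tile, height - row_off)


def count_changed(window, before, after, threshold):
    """Per-tile work: count pixels whose value changed by more than threshold."""
    col_off, row_off, w, h = window
    return sum(
        abs(after[r][c] - before[r][c]) > threshold
        for r in range(row_off, row_off + h)
        for c in range(col_off, col_off + w)
    )


def parallel_change_count(before, after, tile=256, threshold=0.2, workers=4):
    """Split the rasters into tiles, process tiles concurrently, and sum the results."""
    height, width = len(before), len(before[0])
    windows = list(tile_windows(width, height, tile))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        counts = pool.map(lambda win: count_changed(win, before, after, threshold), windows)
        return sum(counts)
```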

Q 17. What are some common challenges in GPS mapping, and how do you overcome them?

GPS mapping faces several challenges. Think of it like trying to find your way using only a slightly inaccurate compass – you’ll get close, but not perfectly. Common challenges include:

- Signal Obstructions: Buildings, terrain, and dense tree canopy (especially when wet) can block or weaken GPS signals, leading to inaccurate positioning or loss of fix. Ordinary cloud cover has negligible effect. Obstruction is particularly problematic in urban canyons and dense forests.

- Multipath Errors: Signals reflecting off surfaces like buildings or water can cause errors in positioning, as the receiver might interpret the reflected signal as a direct signal.

- Atmospheric Effects: The ionosphere and troposphere can affect the speed of GPS signals, causing slight delays and inaccuracies in positioning.

- Receiver Noise and Errors: The GPS receiver itself can introduce errors due to internal noise or imperfections in its components.

To overcome these challenges, various techniques are employed:

- Differential GPS (DGPS): This technique uses a reference station with a known location to correct for errors in the GPS signal. (This is explained in more detail in a later question.)

- Real-Time Kinematic (RTK) GPS: RTK uses carrier-phase measurements together with real-time corrections from a nearby base station (or a network of reference stations) to reach centimeter-level accuracy. This is crucial for high-precision applications like surveying.

- Sensor Fusion: Combining GPS data with other sensors like IMUs (Inertial Measurement Units) or LiDAR can improve accuracy and reliability, especially in challenging environments. The other sensors help to fill in the gaps or correct errors in the GPS data.

- Data Post-Processing: Applying sophisticated post-processing techniques to the raw GPS data can help to smooth out errors and improve accuracy.

Q 18. What is your experience with GPS receivers and their functionalities?

My experience with GPS receivers spans a wide range, from basic handheld units to sophisticated geodetic receivers. I’m proficient in operating and utilizing various functionalities including:

- Position Determination: Accurate determination of latitude, longitude, and altitude is the fundamental functionality. I have extensive experience working with various receiver types to optimize accuracy for different applications.

- Data Logging: Recording positional data, time stamps, and other relevant information for later processing and analysis. I’m familiar with different data logging formats and their use in various applications.

- Differential GPS Correction: Using DGPS to enhance accuracy by applying corrections from a reference station. This is a common practice that I have extensive experience with.

- Signal Tracking and Analysis: Monitoring the signal strength, quality, and number of satellites being tracked. I can diagnose issues and identify sources of signal degradation.

- Communication Interfaces: Interfacing GPS receivers with other devices and systems over serial connections, using standard message formats such as NMEA 0183 for position output and RTCM for correction data.

- Configuration and Calibration: I understand the various receiver settings and can optimize them for different applications and environmental conditions.
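As an example of working with NMEA output, a GGA sentence carries the fix time, position, fix quality, satellite count, HDOP, and altitude. The following is a minimal Python sketch that validates the checksum and parses one sentence. It assumes a well-formed GGA sentence with a valid fix; empty fields are not handled. The sample sentence is a widely used illustration rather than real project data, and the function names are hypothetical:

```python
def nmea_checksum(body):
    """XOR of all characters between '$' and '*'."""
    value = 0
    for ch in body:
        value ^= ord(ch)
    return value


def _to_degrees(field, hemi):
    """Convert NMEA ddmm.mmmm / dddmm.mmmm to signed decimal degrees."""
    dot = field.index(".")
    degrees = float(field[:dot - 2])
    minutes = float(field[dot - 2:])
    value = degrees + minutes / 60.0
    return -value if hemi in ("S", "W") else value


def parse_gga(sentence):
    """Parse a GGA sentence into a dict after verifying its checksum."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        raise ValueError("not an NMEA sentence")
    body, checksum = sentence[1:].split("*", 1)
    if int(checksum[:2], 16) != nmea_checksum(body):
        raise ValueError("checksum mismatch")
    f = body.split(",")
    if not f[0].endswith("GGA"):
        raise ValueError("not a GGA sentence")
    return {
        "time_utc": f[1],
        "lat": _to_degrees(f[2], f[3]),
        "lon": _to_degrees(f[4], f[5]),
        "fix_quality": int(f[6]),  # 0 = none, 1 = GPS, 2 = DGPS, 4 = RTK fixed, 5 = RTK float
        "satellites": int(f[7]),
        "hdop": float(f[8]),
        "altitude_m": float(f[9]),  # above mean sea level
    }


fix = parse_gga("$GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,*47")
```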

For instance, in a recent project involving precision agriculture, I used RTK GPS receivers to accurately map crop fields, enabling precise application of fertilizers and pesticides. The precision of the positioning was critical for the success of that project.

Q 19. Explain the difference between absolute and relative positioning in GPS.

The difference between absolute and relative positioning in GPS boils down to the reference point used to determine location. Imagine trying to pinpoint your location on a map:

- Absolute Positioning: This determines the position of a receiver relative to a known global coordinate system, typically WGS84. Think of it as finding your location on a world map by using your GPS coordinates directly. The accuracy of absolute positioning is often limited by factors like signal obstructions and atmospheric conditions.

- Relative Positioning: This determines the position of one receiver relative to another. It’s like figuring out your location by measuring your distance and direction from a known landmark. Relative positioning is generally more accurate than absolute positioning because it eliminates or reduces certain error sources common to both receivers.

For example, absolute positioning is used for navigation apps in your phone, providing you with coordinates relative to the earth. Relative positioning is commonly used in surveying, where the distance between two points is more important than their absolute coordinates relative to the earth.

Q 20. What is differential GPS (DGPS) and how does it improve accuracy?

Differential GPS (DGPS) significantly improves GPS accuracy by correcting for errors in the raw GPS signal. It’s like having a second, more precise compass to refine your direction. Here’s how it works:

A base station with a precisely known location receives the same GPS signals as the roving receiver whose position we want to determine. The base station compares its known position with the position calculated from the GPS signals. Any difference between the known position and the GPS-calculated position represents errors in the GPS signal. These errors are then broadcast to the roving receiver (often via radio signals), which uses them to correct its own position calculations.

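This correction significantly reduces errors caused by atmospheric effects, satellite clock inaccuracies, and orbit errors, because these are nearly identical for receivers that are close together. Multipath and receiver noise are local to each receiver and are not removed. The improvement can be dramatic, going from several meters with standalone GPS to the meter or sub-meter level with code-based DGPS. Centimeter-level accuracy requires carrier-phase techniques such as RTK. The accuracy depends on factors like the distance between the base station and the rover, signal conditions, and the type of DGPS correction being applied.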
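In practice, DGPS services usually broadcast per-satellite pseudorange corrections (for example, in RTCM messages) rather than a single position offset, so the rover can apply them to whichever satellites it tracks.

The following is a minimal Python sketch of that range-domain idea. It assumes the base station knows its surveyed position and the satellite positions, and that both receivers see the same errors. Any base-station clock bias becomes a common offset that the rover's own clock-bias estimate absorbs. All names and values are hypothetical:

```python
import math


def pseudorange_corrections(base_pos, sat_positions, base_pseudoranges):
    """At the base station: correction = true geometric range - measured pseudorange."""
    return {
        sat_id: math.dist(base_pos, sat_positions[sat_id]) - rho
        for sat_id, rho in base_pseudoranges.items()
    }


def apply_corrections(rover_pseudoranges, corrections):
    """At the rover: add the base's correction for each satellite both receivers track."""
    return {
        sat_id: rho + corrections[sat_id]
        for sat_id, rho in rover_pseudoranges.items()
        if sat_id in corrections
    }


# Synthetic example: per-satellite errors (meters) shared by two nearby receivers.
sats = {"G01": (15_600_000.0, 7_540_000.0, 20_140_000.0),
        "G02": (18_760_000.0, 2_750_000.0, 18_610_000.0),
        "G03": (17_610_000.0, 14_630_000.0, 13_480_000.0)}
common_error = {"G01": 7.5, "G02": -3.2, "G03": 4.1}
base = (6_378_137.0, 0.0, 0.0)
rover = (6_378_000.0, 5_000.0, 1_000.0)
base_rho = {s: math.dist(base, p) + common_error[s] for s, p in sats.items()}
rover_rho = {s: math.dist(rover, p) + common_error[s] for s, p in sats.items()}
rover_rho["G09"] = 21_000_000.0  # tracked by the rover only, so it cannot be corrected
fixed = apply_corrections(rover_rho, pseudorange_corrections(base, sats, base_rho))
```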

DGPS is crucial in many applications where high accuracy is needed, such as surveying, precision agriculture, and construction.

Q 21. How would you approach a mapping project with limited resources?

Approaching a mapping project with limited resources requires careful planning and prioritization. It’s similar to building a house with a limited budget – you need to focus on the essentials. My approach would be:

- Define Scope and Objectives Clearly: Restrict the project’s scope to a manageable area and focus on the most critical data. This could involve using a smaller study area or focusing on specific data elements only.

- Utilize Free and Open-Source Tools: Leverage free and open-source GIS software like QGIS, along with free and open-source data sources. This significantly reduces software costs.

- Creative Data Acquisition: Explore cost-effective data acquisition methods. This might involve using readily available data like OpenStreetMap data, or conducting fieldwork with simple equipment. Crowd-sourced data can also be a viable option in certain circumstances.

- Prioritize Data Quality: Focus on data quality rather than quantity. It’s better to have less, high-quality data than a lot of inaccurate data.

- Strategic Partnerships: Collaborate with other organizations or institutions that might have access to relevant data or resources. Shared costs and resources can help significantly.

- Volunteer Support: Consider recruiting volunteers to help with data collection or other tasks. This can save on labor costs.

- Phased Approach: Break down the project into smaller, manageable phases. This allows for iterative progress and adjustments based on available resources.

For instance, I once mapped a community’s informal settlements using readily available satellite imagery and volunteered data from local community members. This allowed us to create a useful map despite very limited funding.

Q 22. Describe your experience with cartographic design principles.

Cartographic design principles are the foundational rules and guidelines for creating effective and visually appealing maps. They focus on clarity, accuracy, and ease of interpretation. My experience encompasses a wide range of these principles, from choosing appropriate map projections to selecting suitable symbology and typography. For example, I’ve worked on projects where choosing a Mercator projection was necessary for navigation, even though it distorts area at higher latitudes, and then clearly communicated that distortion to the map user. I also have extensive experience in designing thematic maps, where the selection of color ramps and data classification methods significantly affect the message communicated.

Consider designing a map for emergency responders. The principles of visual hierarchy become crucial. We’d use distinct symbols for hospitals, fire stations, and hazardous material locations. Clear labeling, a readily understandable legend, and appropriate scale would also be paramount for rapid decision-making during a crisis. I’ve successfully applied these principles to design maps for various clients, optimizing communication and facilitating better spatial understanding.

- Map Projection Selection: Choosing the right projection for the specific geographic area and intended purpose (e.g., navigation, thematic analysis).

- Symbol Design: Creating visually distinct and easily interpretable symbols for features and data.

- Typography and Labeling: Selecting readable fonts and strategically placing labels to avoid visual clutter.

- Color Theory: Using color effectively to enhance visual communication and highlight key information. Designing for color vision deficiency (for example, avoiding schemes that depend on red-green contrast) is critical here.

- Data Classification: Applying appropriate methods for categorizing data values (e.g., equal interval, quantile, natural breaks).
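To make the difference between two of these classification methods concrete, here is a minimal Python sketch of equal-interval and quantile breaks. The function names and sample values are hypothetical. The quantile rule is a simple nearest-rank variant, and GIS packages may compute it slightly differently. Natural breaks (Jenks) is not shown.

```python
def equal_interval_breaks(values, n_classes):
    """Upper bounds that split [min, max] into n_classes intervals of equal width."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_classes
    # Use the exact maximum for the last bound to avoid floating-point drift.
    return [lo + width * i for i in range(1, n_classes)] + [hi]


def quantile_breaks(values, n_classes):
    """Upper bounds that put (roughly) the same number of values in each class."""
    ordered = sorted(values)
    n = len(ordered)
    # Nearest-rank rule: the bound for class i is the ceil(n * i / k)-th smallest value.
    return [ordered[-(-n * i // n_classes) - 1] for i in range(1, n_classes + 1)]


def classify(value, breaks):
    """Index of the first class whose upper bound is >= value."""
    for i, upper in enumerate(breaks):
        if value <= upper:
            return i
    return len(breaks) - 1


densities = [2, 3, 5, 8, 12, 20, 35, 60, 110, 400]  # hypothetical, right-skewed values
equal = equal_interval_breaks(densities, 4)
quant = quantile_breaks(densities, 4)
print(equal)  # [101.5, 201.0, 300.5, 400]
print(quant)  # [5, 12, 60, 400]
print([classify(v, equal) for v in densities])  # [0, 0, 0, 0, 0, 0, 0, 0, 1, 3]
print([classify(v, quant) for v in densities])  # [0, 0, 0, 1, 1, 2, 2, 2, 3, 3]
```

On these skewed values, equal intervals place eight of the ten features in the lowest class, while quantiles spread them across all four classes. This trade-off is why the choice of classification method changes what a thematic map appears to say.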

Q 23. Explain your understanding of map scales and their impact on data representation.

Map scale represents the ratio between the distance on a map and the corresponding distance on the ground. It dictates the level of detail and the amount of data that can be effectively represented. A large-scale map (e.g., 1:1000) shows a small area with high detail, suitable for planning a building site. A small-scale map (e.g., 1:1,000,000) shows a large area with less detail, useful for regional planning. The choice of scale profoundly affects the type of data that can be meaningfully presented.
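As a worked example, the ratio reduces to simple arithmetic. The sketch below uses hypothetical helper names and assumes map distances in centimeters and ground distances in meters for a 1:N scale.

```python
def ground_distance_m(on_map_cm, scale_denominator):
    """Ground distance in meters for a distance measured on a 1:N map in centimeters."""
    return on_map_cm * scale_denominator / 100.0  # 100 cm per meter


def map_distance_cm(on_ground_m, scale_denominator):
    """Length in centimeters that a ground distance in meters occupies on a 1:N map."""
    return on_ground_m * 100.0 / scale_denominator


print(ground_distance_m(5, 1_000))      # 50.0 (5 cm at 1:1000 is 50 m)
print(ground_distance_m(5, 1_000_000))  # 50000.0 (5 cm at 1:1,000,000 is 50 km)
print(map_distance_cm(10, 1_000_000))   # 0.001 (a 10 m house would be 0.01 mm wide)
```

The last line shows why individual buildings have to be aggregated or omitted on small-scale maps.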

For instance, individual trees might be visible on a large-scale map, but only forests or woodlands would be shown on a small-scale map. Data aggregation and generalization become necessary at smaller scales. Imagine trying to display every house in a city on a map of the entire country—it would be illegible. Therefore, understanding scale is crucial for making informed decisions about data representation, simplification and generalization techniques used during map production. Misinterpreting scale can lead to inaccurate analysis and poor decision-making.
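Line simplification is one of the generalization techniques mentioned here, and a widely used algorithm for it is Ramer-Douglas-Peucker. The following is a minimal planar sketch in plain Python. The road coordinates and tolerance are hypothetical, and the tolerance is assumed to be in the same projected units as the coordinates. Production tools, such as Shapely's `simplify`, implement this more robustly and offer topology-preserving variants.

```python
import math


def perpendicular_distance(p, a, b):
    """Distance from point p to the infinite line through a and b (to a if a == b)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * px - dx * py + bx * ay - by * ax) / math.hypot(dx, dy)


def douglas_peucker(points, tolerance):
    """Keep only vertices that deviate from the simplified line by more than tolerance."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    # Find the interior vertex farthest from the chord first-last.
    split, max_dist = 0, -1.0
    for i in range(1, len(points) - 1):
        d = perpendicular_distance(points[i], first, last)
        if d > max_dist:
            split, max_dist = i, d
    if max_dist <= tolerance:
        return [first, last]  # all interior vertices are within tolerance
    # Otherwise keep the farthest vertex and simplify each half recursively.
    left = douglas_peucker(points[: split + 1], tolerance)
    right = douglas_peucker(points[split:], tolerance)
    return left[:-1] + right  # drop the duplicated split vertex


# Hypothetical digitized road: two nearly straight legs meeting at a corner.
road = [(0, 0), (1, 0.05), (2, 0), (2, 1), (2.05, 2), (2, 3)]
print(douglas_peucker(road, tolerance=0.2))  # [(0, 0), (2, 0), (2, 3)]
```

A larger tolerance removes more vertices, which is roughly what moving to a smaller map scale calls for.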

Q 24. How do you ensure the security and privacy of geospatial data?

Securing and protecting geospatial data is paramount. My approach involves a multi-layered strategy that encompasses technical, administrative, and procedural measures. Technically, we encrypt data in transit with TLS (HTTPS) and at rest with AES. Access control mechanisms, such as role-based access control (RBAC), ensure that only authorized personnel can access sensitive information. Where data describes individuals, we anonymize or spatially generalize it (for example, by aggregating exact points to coarser areas) to reduce the risk of revealing identities.

Administratively, we adhere to strict data governance policies, audit access logs, and conduct periodic security assessments. Employee training on data security best practices is essential. Procedurally, we follow the principle of least privilege, granting users only the access level they need. We also maintain detailed audit and provenance logs to trace how data is used and detect potential security breaches quickly. This proactive approach minimizes risks and supports compliance with relevant regulations such as GDPR.
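One simple form of spatial generalization is snapping each point to the center of a fixed grid cell, so that every location in the cell is published with the same coordinate. The following is a minimal sketch. It assumes WGS84 latitude/longitude in decimal degrees and an illustrative 0.01° cell size. Snapping alone does not guarantee anonymity, because a cell containing a single household still identifies it, so it is usually combined with aggregation or minimum-count rules.

```python
import math


def generalize_coordinate(lat, lon, cell_deg=0.01):
    """Snap a WGS84 point to the center of the grid cell that contains it.

    All points in the same cell produce the same output, so the published
    location no longer pinpoints a single address. cell_deg=0.01 is an
    illustrative choice: about 1.1 km north-south, and narrower east-west
    away from the Equator because meridians converge.
    """
    snapped_lat = (math.floor(lat / cell_deg) + 0.5) * cell_deg
    snapped_lon = (math.floor(lon / cell_deg) + 0.5) * cell_deg
    # Round away floating-point noise from the multiplication.
    return round(snapped_lat, 6), round(snapped_lon, 6)


home = generalize_coordinate(34.0522, -118.2437)
neighbor = generalize_coordinate(34.0531, -118.2449)
print(home, home == neighbor)  # (34.055, -118.245) True
```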

Q 25. Describe a time you had to troubleshoot a GPS or mapping system issue.

During a large-scale environmental monitoring project, our GPS devices started reporting inaccurate coordinates. We initially suspected GPS signal interference, but after checking antenna placement and signal strength, we ruled it out. The issue turned out to be a systematic error in the GPS data logging software.

Our troubleshooting involved a systematic approach:

- Data Analysis: We visually inspected the GPS tracks and noticed a repeating pattern of offset coordinates. This suggested a consistent error and helped rule out random glitches.

- Software Review: We examined the GPS logging software’s code and found a bug that incorrectly calculated coordinates under certain conditions.

- Testing and Verification: We created a test environment to reproduce the error and verify the fix in the software.

- Data Rectification: Once the bug was fixed, we reprocessed the collected data, correcting the coordinates to ensure data accuracy.
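The following is a hypothetical illustration of how logging software can introduce a systematic offset; it is not necessarily the bug in this project. A classic mistake is reading NMEA 0183 latitude/longitude fields, which are encoded as degrees and decimal minutes (ddmm.mmmm / dddmm.mmmm), as if they were decimal degrees. The sketch shows the correct conversion using sample values in the style of an NMEA GGA sentence.

```python
def nmea_to_decimal(field, hemisphere):
    """Convert an NMEA ddmm.mmmm / dddmm.mmmm field to signed decimal degrees."""
    raw = float(field)
    degrees = int(raw // 100)       # leading digits are whole degrees
    minutes = raw - degrees * 100   # the rest is minutes, not a decimal fraction
    value = degrees + minutes / 60.0
    return -value if hemisphere in ("S", "W") else value


lat = nmea_to_decimal("4807.038", "N")   # about 48.1173
lon = nmea_to_decimal("01131.000", "E")  # about 11.5167
buggy_lat = float("4807.038") / 100      # 48.07038: treats minutes as a decimal fraction
print(round(lat - buggy_lat, 4))         # 0.0469 degrees, roughly 5 km too far south
```

With the buggy parsing, the error is minutes × (1/60 − 1/100). It always points the same way and resets at each whole degree, so it shows up as a systematic pattern rather than random noise.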

This experience highlighted the importance of rigorous data validation and quality control. A seemingly simple GPS error led to extensive investigation and careful data correction to ensure project integrity. The timely identification and rectification prevented significant project delays and inaccurate analysis.

Q 26. What are your preferred methods for data quality control in GIS?

Data quality control in GIS is crucial for reliable analysis. My preferred methods combine automated and manual checks. Automated checks use GIS software capabilities to validate spatial and attribute data. They cover geometric errors (e.g., self-intersections, overlaps), attribute consistency (e.g., valid data types, range checks), and topological errors. A very common automated check is detecting sliver polygons: thin, unintended polygons that usually result from digitizing or overlay errors.
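Sliver detection can be automated with a shape index such as the thinness ratio 4πA/P², which is 1 for a circle and approaches 0 for long, narrow polygons. The sketch computes it in plain Python using the shoelace formula. It assumes coordinates in a projected (planar) CRS. The dictionary input, polygon ids, and 0.1 threshold are illustrative assumptions. Real QC rules usually combine a shape index with a minimum-area test.

```python
import math


def polygon_area(ring):
    """Planar area of a simple polygon ring (unclosed list of (x, y)) via the shoelace formula."""
    edges = zip(ring, ring[1:] + ring[:1])
    return abs(sum(x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in edges)) / 2.0


def polygon_perimeter(ring):
    """Sum of edge lengths, including the closing edge back to the first vertex."""
    return sum(math.dist(p, q) for p, q in zip(ring, ring[1:] + ring[:1]))


def thinness_ratio(ring):
    """4*pi*A / P**2: 1.0 for a circle, about 0.785 for a square, near 0 for slivers."""
    perimeter = polygon_perimeter(ring)
    return 4 * math.pi * polygon_area(ring) / perimeter**2 if perimeter else 0.0


def find_slivers(polygons, threshold=0.1):
    """Ids of polygons whose thinness ratio falls below the (illustrative) threshold."""
    return [pid for pid, ring in polygons.items() if thinness_ratio(ring) < threshold]


parcels = {
    "parcel_a": [(0, 0), (10, 0), (10, 8), (0, 8)],     # compact parcel
    "gap_1": [(0, 0), (100, 0), (100, 0.5), (0, 0.5)],  # long, thin overlay artifact
}
print(find_slivers(parcels))  # ['gap_1']
```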

Manual checks involve visual inspection of the data on interactive GIS maps. This includes looking for obvious errors the automated checks missed, validating data against external sources (e.g., comparing a dataset with high-resolution imagery), and verifying accuracy through field surveys or ground truthing. To quantify positional error, we often compute the root mean square error (RMSE) between dataset coordinates and higher-accuracy check points.
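Positional RMSE is computed from paired check points. Each dataset coordinate is matched to a higher-accuracy reference position, for example from a field survey. The following is a minimal sketch. It assumes both lists are in the same projected CRS, so differences are in meters. The sample points are made up.

```python
import math


def positional_rmse(measured, reference):
    """Horizontal RMSE = sqrt(mean(dx**2 + dy**2)) over paired (x, y) check points."""
    if not measured or len(measured) != len(reference):
        raise ValueError("need two non-empty point lists of equal length")
    squared = [
        (mx - rx) ** 2 + (my - ry) ** 2
        for (mx, my), (rx, ry) in zip(measured, reference)
    ]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical check points (meters): dataset vs. survey-grade reference.
dataset = [(500003.0, 4200004.0), (500100.0, 4200100.0)]
survey = [(500000.0, 4200000.0), (500100.0, 4200100.0)]
print(positional_rmse(dataset, survey))  # about 3.54 m
```

Feeding raw latitude/longitude in degrees into this function would mix angular units, so project the points first.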

It’s an iterative process: automated checks flag potential issues, and manual checks confirm and resolve them, refining the dataset’s accuracy and validity before further analysis. The choice of techniques also depends on the type of data and project requirements.

Q 27. What are some emerging trends in mapping and GPS technology?

Mapping and GPS technology is evolving rapidly. Some key emerging trends include:

- Increased use of AI and Machine Learning: AI is automating many GIS tasks, such as feature extraction from imagery, change detection, and predictive modeling. This drastically improves efficiency and accuracy in processing huge amounts of data.

- Rise of 3D GIS and Virtual Reality: We’re moving beyond 2D maps to create immersive 3D models of the world, allowing for richer visualizations and simulations. VR integration enhances spatial understanding and communication of complex geographic data.

- Integration of IoT and Sensor Data: GPS and mapping are becoming integrated with the Internet of Things (IoT) to create smart environments. Data from sensors (e.g., air quality, traffic flow) is overlaid onto maps for real-time monitoring and analysis.

- Development of Open-Source Tools and Data: The availability of open-source GIS software (e.g., QGIS) and open data initiatives (e.g., OpenStreetMap) has democratized access to spatial technology and increased collaboration within the field.

- Advancements in GNSS Technology: With additional satellite constellations (e.g., Galileo, BeiDou) now operating alongside GPS and GLONASS, receivers can track more satellites. This improves precision and reliability in challenging environments such as urban canyons. Multi-GNSS techniques that combine signals from several constellations are improving accuracy further.

These advancements are transforming many aspects of our lives, from urban planning to disaster response and environmental monitoring.

Q 28. Describe your experience with Python libraries used in geospatial analysis (e.g., GDAL, GeoPandas).

I have extensive experience using Python libraries for geospatial analysis, particularly GDAL and GeoPandas. GDAL (Geospatial Data Abstraction Library) reads, writes, and manipulates a wide range of geospatial formats. These include raster formats such as GeoTIFF and, through its OGR vector component, vector formats such as shapefiles. I use it heavily for data preprocessing, format conversion, and geoprocessing tasks such as raster clipping, reprojection, and mosaic creation. Here’s a simple example of clipping a raster using GDAL through Python:

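```python
from osgeo import gdal

gdal.UseExceptions()  # raise Python exceptions instead of returning None on errors

# Clip input.tif to the polygon(s) in clip.shp. cropToCutline=True also shrinks
# the output extent to the cutline's bounding box rather than only masking
# the pixels that fall outside it.
dataset = gdal.Open('input.tif')
output = gdal.Warp('output.tif', dataset, cutlineDSName='clip.shp', cropToCutline=True)

# Releasing the references flushes output.tif to disk and closes both files.
output = None
dataset = None
```

GeoPandas builds on the pandas library to provide convenient data structures (GeoSeries and GeoDataFrame) and functions for working with vector data. It simplifies tasks such as spatial joins, overlay analysis, and geometric operations. I’ve frequently used GeoPandas for spatial queries, calculating distances and areas, and performing spatial analysis on vector data. It streamlines workflows significantly and makes complex spatial operations easier.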
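In GeoPandas, a spatial join is done with `geopandas.sjoin`, for example with `predicate="within"`, which uses Shapely geometry predicates and a spatial index. To show what a "within" join computes, here is a plain-Python sketch; it is not GeoPandas' implementation. It pairs an even-odd ray-casting point-in-polygon test with a brute-force join over hypothetical zones. The sketch ignores holes and does not handle points lying exactly on a boundary consistently, whereas the library predicates define those cases precisely.

```python
def point_in_polygon(x, y, ring):
    """Even-odd rule: a ray from (x, y) toward +x crosses the boundary an odd number of times if inside."""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def join_points_to_zones(points, zones):
    """Brute-force 'within' join: point id -> id of the first zone containing it, or None."""
    return {
        pid: next((zid for zid, ring in zones.items() if point_in_polygon(x, y, ring)), None)
        for pid, (x, y) in points.items()
    }


zones = {
    "north": [(0, 10), (10, 10), (10, 20), (0, 20)],
    "south": [(0, 0), (10, 0), (10, 10), (0, 10)],
}
points = {"a": (5, 5), "b": (5, 15), "c": (20, 5)}
print(join_points_to_zones(points, zones))  # {'a': 'south', 'b': 'north', 'c': None}
```

The brute-force loop tests every point against every zone. This quadratic cost is exactly what the spatial index in real libraries avoids.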

Both GDAL and GeoPandas are crucial components of my geospatial toolkit. Their combination allows me to effectively handle various types of geospatial data and perform a wide range of analyses, increasing my efficiency and analytical power.

Key Topics to Learn for Mapping and GPS Systems Interview

- Geospatial Data Fundamentals: Understanding different data formats (shapefiles, GeoJSON, GeoTIFF), projections, coordinate systems (WGS84, UTM), and data transformations.

- GPS Technology: How GPS works (satellites, signals, trilateration from measured ranges), accuracy limitations (atmospheric effects, multipath), and differential GPS techniques.

- Mapping Software and Tools: Familiarity with GIS software (ArcGIS, QGIS), online mapping platforms (Google Maps API, Mapbox), and data visualization techniques.

- Spatial Analysis Techniques: Understanding spatial relationships (proximity, overlay), spatial statistics, and applying these techniques to solve real-world problems.

- Cartography and Map Design: Principles of effective map design, symbolization, and communication of spatial information.

- Database Management Systems (DBMS) for Geospatial Data: Working with spatial databases (PostGIS, Oracle Spatial) and querying geospatial data efficiently.

- Practical Applications: Discuss experience or knowledge of applications in areas like navigation, urban planning, environmental monitoring, logistics, or asset tracking.

- Problem-Solving and Algorithm Design: Be prepared to discuss your approach to solving spatial problems, potentially involving algorithms related to routing, pathfinding, or geoprocessing.

- Current Trends: Stay updated on emerging technologies like remote sensing, LiDAR, and the use of AI/ML in geospatial analysis.
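GPS positioning rests on ranging, which means recovering a position from distances to points whose locations are known. Real receivers solve for three-dimensional position plus the receiver clock bias from pseudoranges to at least four satellites, typically with iterative least squares. The sketch below strips this down to a noise-free 2D case with three hypothetical anchors. It is meant only to show how distances become a position.

```python
import math


def trilaterate_2d(anchors, ranges):
    """Recover (x, y) from three known anchor positions and exact distances to them.

    Subtracting the first circle equation (x - xi)^2 + (y - yi)^2 = ri^2 from the
    other two cancels the quadratic terms, leaving a 2x2 linear system that is
    solved with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges
    a, b = 2 * (x2 - x1), 2 * (y2 - y1)
    c = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    d, e = 2 * (x3 - x1), 2 * (y3 - y1)
    f = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a * e - b * d
    if det == 0:
        raise ValueError("anchors are collinear, so the position is ambiguous")
    return (c * e - b * f) / det, (a * f - c * d) / det


# Hypothetical anchors and the distances a receiver at (3, 4) would measure.
anchors = [(0, 0), (10, 0), (0, 10)]
ranges = [math.dist((3, 4), anchor) for anchor in anchors]
x, y = trilaterate_2d(anchors, ranges)
print(round(x, 6), round(y, 6))  # 3.0 4.0
```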

Next Steps

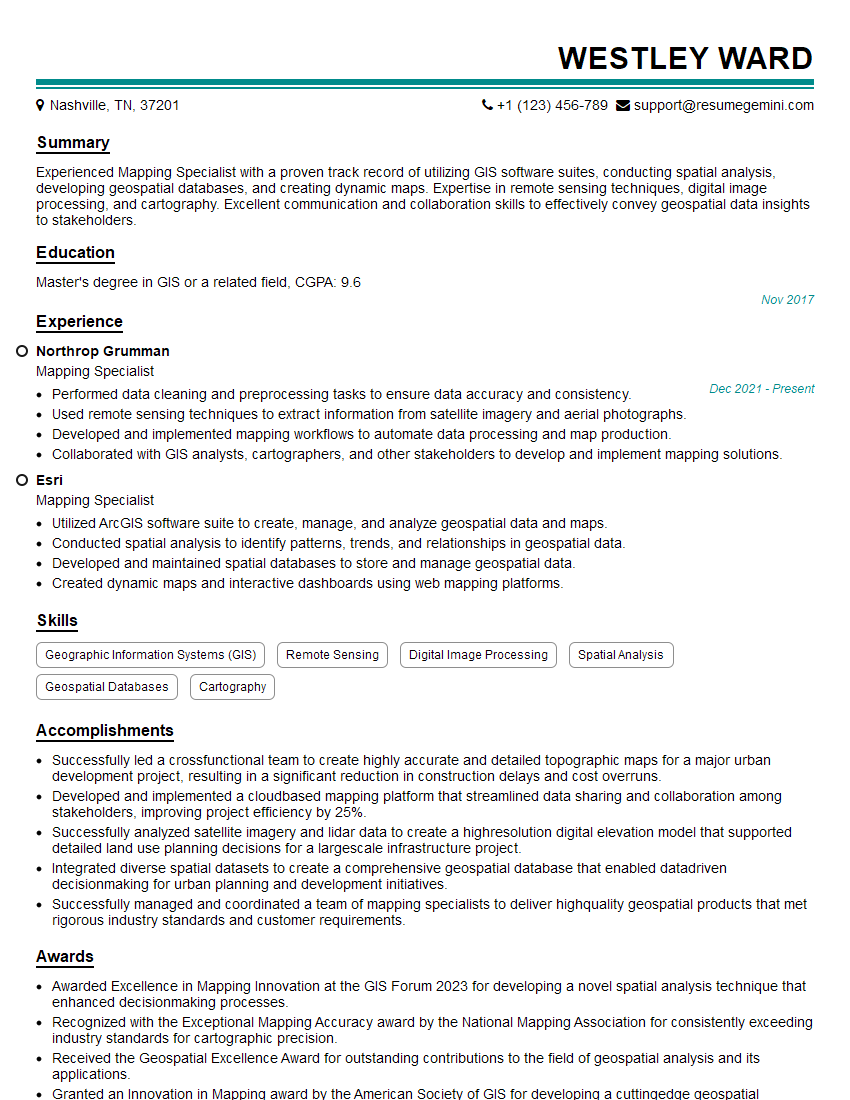

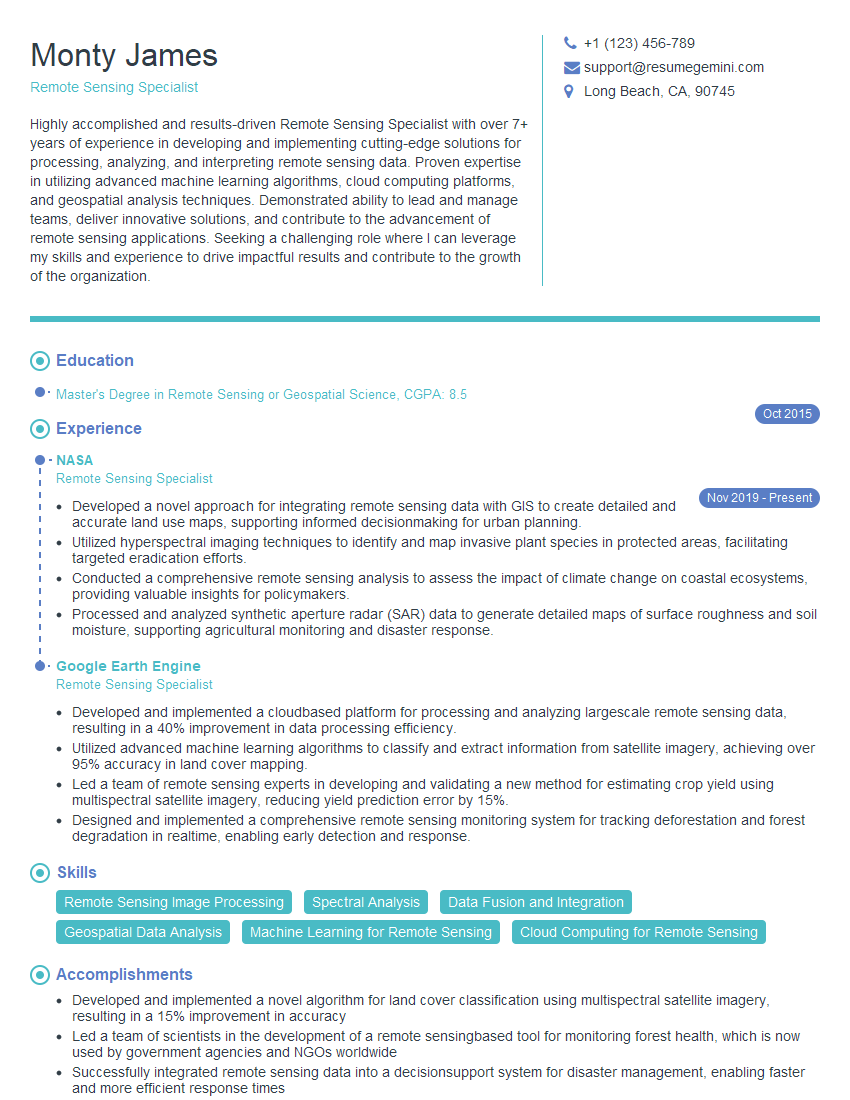

Mastering Mapping and GPS Systems opens doors to exciting careers in various sectors, offering strong growth potential and high demand. A well-crafted resume is crucial for showcasing your skills and experience effectively to potential employers. An ATS-friendly resume, optimized for Applicant Tracking Systems, significantly increases your chances of getting your application noticed. To create a compelling and effective resume that highlights your unique qualifications in Mapping and GPS Systems, we encourage you to utilize ResumeGemini. ResumeGemini provides a user-friendly platform for building professional resumes, and we offer examples of resumes tailored specifically to the Mapping and GPS Systems field to help you get started.
