Are you ready to stand out in your next interview? Understanding and preparing for interview questions on data acquisition and analysis techniques is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in a Data Acquisition and Analysis Techniques Interview

Q 1. Explain the difference between structured and unstructured data.

The key difference between structured and unstructured data lies in how organized and easily searchable it is. Think of it like this: structured data is neatly arranged in a filing cabinet with clearly labeled folders and documents, while unstructured data is more like a huge pile of papers scattered on a desk.

Structured data is highly organized and conforms to a predefined data model. It’s typically stored in relational databases with rows and columns, and each data point has a specific type (e.g., integer, text, date). Examples include data from spreadsheets, relational databases (like SQL databases), and CSV files. Imagine a customer database with columns for Name, Address, Phone Number, and Purchase History. Each entry follows the same structure.

Unstructured data, conversely, lacks a predefined format or organization. It’s difficult to search and analyze directly without significant preprocessing. Examples include text documents, images, audio files, video recordings, and social media posts. Think of the billions of tweets on Twitter – each is unique in format and content.

Semi-structured data falls in between. It has some organizational properties but doesn’t fully conform to a relational model. XML and JSON files are common examples. These often have tags or keys, providing some structure, but are not as rigidly defined as a relational database.
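
As a rough illustration of how semi-structured data relates to the structured form, the sketch below flattens a small JSON document into uniform, table-like rows. The field names (`name`, `phone`, `orders`, `sku`, `qty`) and the `flatten` helper are invented for this example.

```python
import json

# Hypothetical semi-structured input: keys give some structure,
# but records need not share the same fields.
raw = """
[
  {"name": "Ada", "phone": "555-0100", "orders": [{"sku": "A1", "qty": 2}]},
  {"name": "Lin", "orders": [{"sku": "B7", "qty": 1}, {"sku": "A1", "qty": 5}]}
]
"""

def flatten(customers):
    """Turn nested customer documents into flat, table-like rows."""
    rows = []
    for customer in customers:
        for order in customer.get("orders", []):
            rows.append({
                "name": customer["name"],
                "phone": customer.get("phone"),  # a missing key becomes None
                "sku": order["sku"],
                "qty": order["qty"],
            })
    return rows

rows = flatten(json.loads(raw))
for row in rows:
    print(row)
```

Every resulting row has the same four columns, which is what a relational table would require.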

Q 2. Describe your experience with various data acquisition methods (APIs, web scraping, databases).

My experience with data acquisition spans various methods. I’ve extensively used APIs, web scraping, and database interactions to collect data for numerous projects.

- APIs (Application Programming Interfaces): I’ve worked with numerous APIs, including those from Twitter, Google Maps, and various financial data providers. For example, I used the Twitter API to collect tweets for sentiment analysis, staying within its rate limits and handling its authentication requirements. Understanding API documentation and rate limiting is crucial for efficient data acquisition.

- Web Scraping: I have experience using Python libraries like Beautiful Soup and Scrapy to extract data from websites. A recent project involved scraping product information (prices, reviews, descriptions) from e-commerce sites. Ethical considerations and respecting robots.txt are paramount when web scraping. I always ensure adherence to website terms of service.

- Databases: I’m proficient in querying and extracting data from both SQL and NoSQL databases. I’ve used SQL to retrieve data from large relational databases using optimized queries to ensure speed and efficiency. With NoSQL databases like MongoDB, I’ve worked with JSON-like documents, which allow for a flexible schema and efficient storage of semi-structured data.

Choosing the right data acquisition method depends greatly on the data source, the desired data format, and the project’s scale and constraints. My approach always involves careful planning and consideration of potential limitations.
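
The point about respecting robots.txt can be checked in code before any page is requested. This is a minimal sketch using the standard library `urllib.robotparser`. The robots.txt content, the `example-bot` user agent, and the URLs are placeholders, and a real scraper would download the file from the target site rather than hard-code it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download it
# from the target site instead of hard-coding it.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

def allowed(url, user_agent="example-bot"):
    """Return True if the robots.txt rules permit fetching the URL."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)

print(allowed("https://example.com/products/1"))
print(allowed("https://example.com/private/report"))
```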

Q 3. How do you handle missing data in a dataset?

Missing data is a common problem in real-world datasets. Ignoring it can lead to biased or inaccurate analyses. My approach to handling missing data is multifaceted and depends on the nature and extent of the missingness.

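- Deletion: If only a small fraction of values is missing and the missingness is completely random, listwise deletion (removing entire rows with missing values) might be acceptable. However, it can discard a substantial share of the data when missing values are widespread, and it can bias the results when the missingness is not random.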

- Imputation: This is often the preferred method. It involves replacing missing values with estimated ones. Techniques include:

- Mean/Median/Mode imputation: Simple, but it shrinks the variance of the variable and weakens its correlations with other variables, and it can introduce bias if the missingness is not random.

- Regression imputation: Predicts missing values based on other variables using regression models. This is more sophisticated but requires assumptions about the relationships between variables.

- K-Nearest Neighbors (KNN) imputation: Replaces missing values based on the values of similar data points. It’s useful when dealing with non-linear relationships.

- Multiple Imputation: Generates multiple plausible imputed datasets, addressing uncertainty associated with imputation. This produces more robust estimates.

The best approach requires careful consideration of the context. I would often start with exploratory data analysis to understand the pattern of missing data and then choose the appropriate technique. Documenting the imputation method is crucial for reproducibility and transparency.
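
To make two of these options concrete, here is a small sketch of listwise deletion and median imputation in plain Python. The toy rows, the column names, and the helper names `listwise_delete` and `impute_median` are assumptions for illustration. In practice a library such as pandas or scikit-learn would usually do this work.

```python
from statistics import median

# Toy dataset; None marks a missing value. Column names are illustrative.
rows = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 61000},
    {"age": 29, "income": None},
    {"age": 41, "income": 58000},
]

def listwise_delete(data):
    """Keep only rows with no missing values."""
    return [r for r in data if all(v is not None for v in r.values())]

def impute_median(data, column):
    """Fill missing values in one column with the median of the observed values."""
    observed = [r[column] for r in data if r[column] is not None]
    fill = median(observed)
    return [{**r, column: fill if r[column] is None else r[column]} for r in data]

complete = listwise_delete(rows)
imputed = impute_median(impute_median(rows, "age"), "income")
print(len(complete), imputed)
```

Deletion keeps only two of the four rows here, while imputation keeps all four at the cost of inventing two values.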

Q 4. What are some common data cleaning techniques?

Data cleaning is crucial for ensuring data quality and accuracy. It’s often the most time-consuming part of the data analysis process. Some common techniques I employ include:

- Handling Missing Values: As previously discussed, this involves deletion or imputation.

- Outlier Detection and Treatment: Outliers are data points that deviate significantly from the rest. I use techniques like box plots, scatter plots, and statistical methods (e.g., Z-score) to identify outliers. Treatment options include removal, transformation (e.g., logarithmic transformation), or winsorization (capping values).

- Data Transformation: This involves converting data into a more suitable format. For example, I might standardize numerical data (using Z-score normalization) to have a mean of 0 and a standard deviation of 1, or convert categorical data into numerical representations using one-hot encoding.

- Smoothing: Used to reduce noise in time series data or other data with trends. Techniques include moving averages or exponential smoothing.

- Data Deduplication: Identifying and removing duplicate records to ensure data integrity.

- Inconsistency Resolution: Addressing inconsistencies in data formats or naming conventions. This might involve standardizing data entries to ensure uniformity.

The specific cleaning techniques used depend on the dataset’s characteristics and the analysis goals. A well-defined cleaning strategy is critical for accurate and reliable results.
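
Deduplication and inconsistency resolution often go together, because duplicates only become visible once formats are standardized. The following is a sketch under the assumption that a normalized email address identifies a record. The record fields and the helper names are hypothetical.

```python
def normalize(record):
    """Standardize formatting so equivalent entries compare equal."""
    return {
        "name": " ".join(record["name"].split()).title(),
        "email": record["email"].strip().lower(),
    }

def deduplicate(records):
    """Drop records whose normalized email was already seen, keeping the first."""
    seen = set()
    unique = []
    for record in map(normalize, records):
        if record["email"] not in seen:
            seen.add(record["email"])
            unique.append(record)
    return unique

raw = [
    {"name": "ada  lovelace", "email": "Ada@Example.com "},
    {"name": "Ada Lovelace", "email": "ada@example.com"},
    {"name": "LIN CHEN", "email": "lin@example.com"},
]
clean = deduplicate(raw)
print(clean)
```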

Q 5. Explain the importance of data validation and verification.

Data validation and verification are fundamental to ensuring data quality and trustworthiness. They are two distinct but related processes.

Data Validation is the process of ensuring that data conforms to predefined rules and constraints. It focuses on preventing invalid data from entering the system. Think of it as a gatekeeper. This often involves checking data types, ranges, formats, and consistency. For example, validating that an age is a positive integer or that a date is in a correct format. Validation often happens during data entry or import.

Data Verification is the process of confirming that data is accurate and reliable after it has been collected. This involves comparing the data against trusted sources or using techniques to detect errors or inconsistencies. Imagine verifying customer addresses by comparing them against a postal service database. Verification often involves cross-checking data from multiple sources or using checksums.

Both validation and verification are crucial for preventing errors, improving data quality, and building trust in the analysis results. Neglecting these steps can lead to flawed conclusions and inaccurate decision-making.
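
The two validation rules mentioned above (a positive integer age and a correctly formatted date) could be sketched as follows. The field names `age` and `signup_date`, the `YYYY-MM-DD` format, and the `validate` function are assumptions made for the example.

```python
from datetime import datetime

def validate(record):
    """Return a list of rule violations; an empty list means the record passes."""
    errors = []
    age = record.get("age")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(age, int) or isinstance(age, bool) or age <= 0:
        errors.append("age must be a positive integer")
    try:
        # strptime also rejects impossible dates such as 2023-02-30.
        datetime.strptime(str(record.get("signup_date")), "%Y-%m-%d")
    except ValueError:
        errors.append("signup_date must be in YYYY-MM-DD format")
    return errors

print(validate({"age": 42, "signup_date": "2023-05-17"}))
print(validate({"age": -3, "signup_date": "17/05/2023"}))
```

Returning all violations rather than stopping at the first makes it easier to report every problem with a record at import time.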

Q 6. Describe your experience with different database systems (SQL, NoSQL).

My experience encompasses both SQL and NoSQL database systems. The choice between them depends largely on the nature of the data and the application requirements.

SQL (Structured Query Language) databases are relational databases that use a structured schema with predefined tables and relationships. They are excellent for managing structured data with well-defined relationships between entities. I’ve used SQL extensively for projects requiring complex queries, joins, and transactions, ensuring data integrity and consistency. My experience includes working with systems like MySQL, PostgreSQL, and SQL Server. I’m familiar with optimizing SQL queries for performance in large datasets.

NoSQL (Not Only SQL) databases are non-relational databases that offer flexibility in schema design and are well-suited for handling large volumes of unstructured or semi-structured data. I’ve used NoSQL databases like MongoDB for projects involving JSON-like documents, where schema flexibility is crucial. These are often preferred when dealing with rapidly evolving data structures or handling large amounts of semi-structured data like social media posts or sensor readings.

I understand the strengths and limitations of each type and can choose the appropriate system based on the project’s needs. My expertise allows me to effectively leverage the features of both systems to build robust and scalable data solutions.
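
The contrast between the two models can be shown side by side. In this sketch an in-memory SQLite database stands in for a relational system, and a plain Python dictionary stands in for a document that a store such as MongoDB would hold. The table and field names are invented.

```python
import json
import sqlite3

# Relational side: fixed schema, relationships expressed through keys and joins.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        amount REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Lin');
    INSERT INTO orders VALUES (1, 1, 20.0), (2, 1, 35.5), (3, 2, 12.0);
""")
totals = conn.execute("""
    SELECT c.name, SUM(o.amount)
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()
print(totals)

# Document side: the same customer as one self-contained, JSON-like document
# with the orders nested inside it, so no join is needed.
document = {"name": "Ada", "orders": [{"amount": 20.0}, {"amount": 35.5}]}
doc_total = sum(order["amount"] for order in document["orders"])
print(json.dumps(document), doc_total)
```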

Q 7. How do you perform data transformation and feature engineering?

Data transformation and feature engineering are crucial steps in preparing data for analysis and modeling. They involve manipulating and creating new features to improve the performance and interpretability of models.

Data Transformation focuses on changing the format or structure of existing variables. Common transformations include:

- Scaling: Normalizing numerical data to a specific range (e.g., 0 to 1 or -1 to 1), or standardizing it to a mean of 0 and a standard deviation of 1.

- Encoding: Converting categorical variables into numerical representations (e.g., one-hot encoding, label encoding).

- Log Transformation: Applying a logarithmic function to reduce skewness in data.

- Data Aggregation: Combining multiple data points into a single summary statistic (e.g., calculating the average, sum, or count).

Feature Engineering involves creating new features from existing ones. This often requires domain knowledge and creativity. Examples include:

- Creating interaction terms: Combining two or more variables to capture their interaction effects.

- Generating polynomial features: Adding polynomial terms of existing features to capture non-linear relationships.

- Extracting features from text data: Using techniques like TF-IDF or word embeddings to extract meaningful features from text documents.

- Creating time-based features: Extracting features like day of the week, month, or time of day from timestamps.

Effective data transformation and feature engineering can significantly improve model accuracy and predictive power. I approach this process iteratively, experimenting with different transformations and features to find the optimal combination.
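
A few of the transformations and features listed above can be sketched with the standard library alone. The function names are illustrative, `standardize` uses the population standard deviation as an assumption, and both scaling functions assume the input values are not all identical.

```python
from datetime import datetime
from statistics import mean, pstdev

def min_max(values):
    """Rescale values linearly to the range [0, 1] (assumes max > min)."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Rescale values to mean 0 and population standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def one_hot(values):
    """Encode categories as 0/1 indicator columns, in sorted category order."""
    categories = sorted(set(values))
    return [[int(v == c) for c in categories] for v in values]

def time_features(timestamp):
    """Derive simple calendar features from an ISO 8601 timestamp string."""
    dt = datetime.fromisoformat(timestamp)
    return {"weekday": dt.weekday(), "month": dt.month, "hour": dt.hour}

print(min_max([10, 20, 40]))
print(standardize([2, 4, 6]))
print(one_hot(["red", "blue", "red"]))
print(time_features("2024-03-15T14:30:00"))
```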

Q 8. What are your preferred data visualization tools and techniques?

My preferred data visualization tools depend heavily on the context of the analysis, but I frequently use a combination of tools to leverage their strengths. For interactive dashboards and exploratory data analysis, I find Tableau and Power BI incredibly powerful and user-friendly. Their drag-and-drop interfaces allow for quick prototyping and iteration, crucial in the early stages of understanding data. For more complex visualizations and when needing greater control over aesthetics and customization, I often turn to Python libraries like Matplotlib, Seaborn, and Plotly. These offer extensive flexibility in creating publication-quality figures and interactive web-based visualizations. Finally, for statistical graphics and those requiring rigorous statistical approaches, R with ggplot2 is indispensable.

In terms of techniques, I prioritize clarity and effective communication. I avoid overly cluttered or misleading visuals and always consider the audience and their level of understanding when choosing chart types. For example, a simple bar chart is often better than a complex network graph if the data allows, emphasizing the importance of selecting the right tool for the message. I regularly employ techniques such as using color effectively, labeling axes clearly, and providing informative titles to ensure the data story is easily understood.

Q 9. Explain your understanding of different data analysis methodologies (e.g., statistical analysis, machine learning).

Data analysis methodologies encompass a broad range of techniques, with statistical analysis and machine learning being two prominent approaches. Statistical analysis focuses on summarizing and interpreting data using established statistical principles. This can involve descriptive statistics (like mean, median, standard deviation) to understand data characteristics, inferential statistics (hypothesis testing, confidence intervals) to draw conclusions about a population based on a sample, and regression analysis to model relationships between variables. Think of it as rigorously extracting insights from data using established mathematical methods.

Machine learning, on the other hand, employs algorithms that allow computers to learn patterns from data without being explicitly programmed. This is particularly valuable when dealing with large and complex datasets where traditional statistical methods might be insufficient. Machine learning techniques can be categorized into supervised learning (e.g., regression, classification), where the algorithm learns from labeled data, and unsupervised learning (e.g., clustering, dimensionality reduction), where the algorithm identifies patterns in unlabeled data. For instance, I might use linear regression to predict house prices based on features like size and location, or clustering algorithms to group customers with similar purchasing behaviors.

Q 10. How do you select appropriate statistical methods for a given dataset and research question?

Selecting appropriate statistical methods involves a careful consideration of several factors. First and foremost is the research question. What are we trying to learn from the data? Are we looking for relationships between variables, comparing groups, or making predictions? The type of research question dictates the appropriate statistical test. For example, if we want to compare the means of two groups, we might use a t-test; if we want to model the relationship between multiple variables, we might use multiple linear regression. The type of data is also crucial. Are the variables continuous, categorical, or ordinal? Different methods are applicable depending on the data’s nature. Finally, the data’s characteristics, such as its distribution (normal or skewed), sample size, and potential outliers, need to be carefully evaluated. For example, for non-normal data with small sample size, non-parametric methods might be preferred over parametric ones. The process often involves exploring the data visually, performing preliminary analysis, and potentially consulting statistical literature to choose the most appropriate and robust method.
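
For the two-group comparison mentioned above, the test statistic itself is simple to compute. The sketch below uses Welch's variant of the t-test, which does not assume equal variances. That choice, the sample values, and the `welch_t` helper are assumptions for illustration. Turning the statistic into a p-value needs a t-distribution, which a statistics library would normally supply.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)  # squared standard errors
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

# Hypothetical measurements for two independent groups.
group_a = [5.1, 4.9, 5.6, 5.8, 5.2]
group_b = [4.1, 4.4, 3.9, 4.6, 4.0]
t, df = welch_t(group_a, group_b)
print(round(t, 2), round(df, 1))
```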

Q 11. Describe your experience with ETL processes.

ETL (Extract, Transform, Load) processes are central to any data analysis project involving multiple data sources. My experience encompasses the entire ETL pipeline, from designing and implementing data extraction strategies to building robust data transformation and loading procedures. I’ve worked with various tools and technologies, including SQL Server Integration Services (SSIS), Apache Kafka, and Python libraries like Pandas and PySpark. A recent project involved extracting customer data from multiple databases, transforming it to a unified format, and loading it into a data warehouse for reporting and analysis. This involved handling data inconsistencies, cleaning missing values, and ensuring data integrity throughout the process. I’ve also built custom ETL pipelines using Python to process data from diverse sources such as APIs, CSV files, and NoSQL databases. The key to efficient ETL is careful planning and the use of appropriate tools and techniques to handle large volumes of data efficiently, reliably, and securely.
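
The three ETL stages can be sketched end to end on a tiny scale. Here a CSV string stands in for the source system and an in-memory SQLite table for the warehouse. The column names, the rule of defaulting a missing `spend` to 0, and the rule of dropping repeated ids are assumptions chosen for the example, not a general recommendation.

```python
import csv
import io
import sqlite3

# Hypothetical source extract; in practice this might come from a file or an API.
SOURCE_CSV = """customer_id,name,signup_date,spend
1, Ada Lovelace ,2023-01-05,120.50
2,lin chen,2023-02-11,
2,lin chen,2023-02-11,
3,Sam Roy,2023-03-20,80
"""

def extract(text):
    """Extract: read raw rows from CSV text."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: tidy names, default missing spend to 0.0, drop repeated ids."""
    seen, out = set(), []
    for row in rows:
        cid = int(row["customer_id"])
        if cid in seen:
            continue
        seen.add(cid)
        spend = float(row["spend"]) if row["spend"].strip() else 0.0
        out.append((cid, row["name"].strip().title(), row["signup_date"], spend))
    return out

def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE customers "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT, spend REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(SOURCE_CSV)), conn)
result = conn.execute("SELECT id, name, spend FROM customers ORDER BY id").fetchall()
print(result)
```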

Q 12. Explain your understanding of data warehousing and data lake concepts.

Data warehousing and data lakes represent distinct approaches to data storage and management, each with its own strengths and weaknesses. A data warehouse is a centralized repository designed for analytical processing. Data is structured, organized, and optimized for querying. Think of it as a highly organized library, where all books are neatly categorized and easily accessible for research. Data warehouses are typically used for reporting, business intelligence, and decision support, focusing on historical data.

In contrast, a data lake is a storage repository that holds raw data in its original format, regardless of its structure or type. It’s more like a large storage facility where everything is kept as it arrived. This flexibility allows for storing structured, semi-structured, and unstructured data side by side. Data lakes are ideal for exploratory data analysis, big data processing, and machine learning, where the structure of data might not be known in advance or may evolve over time. I’ve worked with both, often leveraging a data lake for initial data ingestion and processing before loading structured data into a data warehouse for analytical reporting. The choice between the two depends heavily on the specific needs of the project.

Q 13. How do you ensure data quality throughout the data lifecycle?

Ensuring data quality is a continuous process spanning the entire data lifecycle. It starts with understanding the data’s origin, its intended use, and potential sources of error. Data profiling techniques, such as checking for missing values, outliers, and data type inconsistencies, are crucial in the early stages. Data cleansing involves handling missing values (imputation or removal), correcting inconsistencies, and removing duplicates. During the transformation phase of ETL, data validation rules and constraints help ensure data integrity. Regular data quality monitoring, both manual and automated, is vital to detect and address issues promptly. This includes establishing metrics to track key data quality indicators and using automated tools to flag potential problems. Furthermore, documenting data lineage and implementing version control enables tracing data back to its source and understanding changes over time. Establishing clear data governance policies and procedures within the organization is critical to fostering a culture of data quality.

Q 14. Describe your experience with data mining techniques.

My experience with data mining techniques encompasses various methods used to discover patterns, anomalies, and insights from large datasets. I’ve applied association rule mining (e.g., Apriori algorithm) to identify relationships between products frequently purchased together, enabling improved product placement and targeted marketing strategies. Classification techniques like decision trees and support vector machines have been used for customer segmentation and risk assessment. Clustering algorithms, such as k-means and DBSCAN, have helped identify customer groups with similar purchasing behaviors, allowing for personalized marketing campaigns. Regression models have been used to predict customer churn or future sales trends. I’ve also worked with anomaly detection methods to identify fraudulent transactions or equipment malfunctions. The selection of a specific data mining technique depends on the nature of the data and the research question. It’s a process that involves careful consideration of the strengths and limitations of different methods and requires significant domain knowledge to interpret the results effectively and avoid misleading conclusions.
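
The two quantities behind association rule mining, support and confidence, can be computed directly on a small example. This sketch enumerates all item pairs by brute force. It is not the Apriori algorithm itself, which additionally prunes candidates whose subsets are infrequent. The baskets and the 0.5 support threshold are invented.

```python
from itertools import combinations

# Hypothetical market baskets.
baskets = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "butter"},
]

def support(itemset):
    """Fraction of baskets that contain every item in the itemset."""
    return sum(itemset <= basket for basket in baskets) / len(baskets)

def confidence(antecedent, consequent):
    """Estimated P(consequent | antecedent) from the baskets."""
    return support(antecedent | consequent) / support(antecedent)

items = sorted(set().union(*baskets))
frequent_pairs = [
    pair for pair in combinations(items, 2) if support(set(pair)) >= 0.5
]
print(frequent_pairs)
print(confidence({"bread"}, {"butter"}))
```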

Q 15. How do you evaluate the accuracy and performance of a predictive model?

Evaluating the accuracy and performance of a predictive model is crucial for ensuring its reliability. We typically use a combination of metrics and techniques, tailored to the specific problem and model type. For classification problems, metrics like accuracy, precision, recall, and the F1-score provide a comprehensive view of the model’s performance. For regression problems, metrics such as Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and R-squared are commonly used.

Beyond these basic metrics, techniques like cross-validation are employed to detect overfitting and to estimate how well the model generalizes. K-fold cross-validation, for instance, splits the data into k subsets, trains the model on k-1 subsets, and tests it on the remaining subset. This process is repeated k times, so that each subset serves as the test set once, and the results are averaged to give a more robust estimate of model performance. Furthermore, for classification problems a crucial step involves creating a confusion matrix, which tabulates the model’s predictions against the actual values, allowing for a deeper understanding of its strengths and weaknesses. Finally, we always consider the business context. A model with high accuracy might not be useful if its predictions are not actionable or cost-effective in a real-world setting.

For example, imagine building a model to predict customer churn. High recall is critical, as we want to identify as many at-risk customers as possible, even if it means some false positives. Conversely, when each false alarm is costly, such as in a fraud detection system that automatically blocks flagged transactions, high precision becomes more important.
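
The classification metrics and the k-fold split described above can be written out in a few lines. This is a sketch for binary labels where 1 is the positive class. The labels are made up, and the folds are contiguous for simplicity, whereas a real evaluation would normally shuffle (and often stratify) the data first.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    return tp, fp, fn, tn

def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1, returning 0.0 where a ratio is undefined."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def k_fold_indices(n, k):
    """Yield (train, test) index lists for k contiguous folds over n samples."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, test
        start += size

y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
print(confusion_counts(y_true, y_pred))
print(precision_recall_f1(y_true, y_pred))
print([test for _, test in k_fold_indices(7, 3)])
```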

Q 16. Explain your understanding of different machine learning algorithms.

Machine learning algorithms can be broadly categorized into supervised, unsupervised, and reinforcement learning. Supervised learning uses labeled data to train models that predict outcomes (e.g., linear regression, logistic regression, support vector machines, decision trees, random forests). Unsupervised learning deals with unlabeled data, aiming to discover patterns or structures (e.g., clustering algorithms like k-means, dimensionality reduction techniques like PCA). Reinforcement learning focuses on training agents to make decisions in an environment to maximize rewards (e.g., Q-learning).

Let’s take a closer look at some popular algorithms:

- Linear Regression: Predicts a continuous output variable based on a linear relationship with input variables. Think predicting house prices based on size and location.

- Logistic Regression: Predicts a categorical output variable (often binary). Useful for tasks like spam detection or credit risk assessment.

- Support Vector Machines (SVM): Finds the optimal hyperplane to separate data points into different classes. Effective in high-dimensional spaces.

- Decision Trees: Creates a tree-like model to classify or regress data based on a series of decisions. Easy to interpret but prone to overfitting.

- Random Forests: An ensemble method that combines multiple decision trees to improve accuracy and reduce overfitting.

- K-Means Clustering: Groups data points into k clusters based on their similarity. Useful for customer segmentation or anomaly detection.

The choice of algorithm depends heavily on the nature of the data and the problem being solved. Consider the size of the dataset, the number of features, the type of output variable, and the desired interpretability of the model.
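
As one concrete instance, linear regression with a single feature has a closed-form least-squares solution. The sketch below fits it to made-up house data (size against price). The numbers and the `fit_line` helper are purely illustrative, and a real model would use several features and a library implementation.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

# Hypothetical data: house size (square metres) vs. price (thousands).
sizes = [50, 70, 90, 110]
prices = [150, 210, 270, 330]
slope, intercept = fit_line(sizes, prices)
predicted = slope * 100 + intercept  # predicted price for a 100 square metre house
print(slope, intercept, predicted)
```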

Q 17. How do you handle outliers in a dataset?

Outliers are data points that significantly deviate from the rest of the data. Handling them is critical as they can skew results and negatively impact model performance. The approach depends on the nature of the outlier and the context of the analysis.

Here are some common strategies:

- Detection: Identify outliers using techniques like box plots, scatter plots, Z-score, or Interquartile Range (IQR).

- Removal: Simply remove the outliers if they’re deemed to be errors or irrelevant to the analysis. However, this should be done cautiously, as you might lose valuable information.

- Transformation: Apply transformations like log transformation or Box-Cox transformation to reduce the impact of outliers. This compresses the range of the data, minimizing the influence of extreme values.

- Winsorization/Trimming: Replace extreme values with less extreme ones (Winsorization) or remove a certain percentage of the most extreme values (Trimming).

- Robust Methods: Use statistical methods that are less sensitive to outliers, such as median instead of mean, or robust regression techniques.

For example, if I were analyzing customer purchase data and encountered an outlier with an unusually high purchase value, I might investigate it. If it’s due to a data entry error, I’d correct it. If it’s a legitimate but unusual purchase (e.g., a large business purchase), I might retain it but use robust methods to mitigate its impact on the analysis.
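
Detection with the IQR rule and a capping treatment could look like the sketch below. The purchase values are invented, and capping at the IQR fences is only one variant of winsorization (percentile-based caps are also common). The quartiles come from `statistics.quantiles` with its default method, so other tools may give slightly different fences.

```python
from statistics import quantiles

def iqr_bounds(values, k=1.5):
    """Return (lower, upper) fences at k * IQR beyond the first and third quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

def find_outliers(values):
    """List the values that fall outside the IQR fences."""
    lower, upper = iqr_bounds(values)
    return [v for v in values if v < lower or v > upper]

def winsorize(values):
    """Cap values at the IQR fences instead of dropping them."""
    lower, upper = iqr_bounds(values)
    return [min(max(v, lower), upper) for v in values]

purchases = [20, 22, 25, 27, 30, 31, 35, 1000]
print(find_outliers(purchases))
print(winsorize(purchases))
```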

Q 18. What are some common challenges in data acquisition and how do you overcome them?

Data acquisition presents numerous challenges, including data quality issues (incompleteness, inconsistencies, errors), data accessibility limitations (data silos, restricted access), data volume and velocity (big data challenges), and data security concerns (privacy, confidentiality).

Here’s how I overcome these:

- Data Cleaning and Preprocessing: Implement robust data cleaning pipelines to handle missing values, outliers, and inconsistencies. This often involves techniques like imputation, standardization, and normalization.

- Data Integration: Develop strategies to integrate data from various sources, addressing inconsistencies in formats and schemas. ETL (Extract, Transform, Load) processes are vital here.

- Data Governance and Quality Control: Establish clear data governance procedures to ensure data quality, consistency, and compliance with regulations. This includes defining data standards and implementing quality checks at various stages of the acquisition process.

- Data Security and Privacy: Implement appropriate security measures to protect data throughout its lifecycle. This includes encryption, access controls, and adherence to data privacy regulations.

- Big Data Technologies: For large-scale data acquisition, leverage technologies like Hadoop or Spark to handle the volume, velocity, and variety of data efficiently.

For instance, when acquiring customer data from multiple databases, I would create an ETL pipeline to extract, transform (standardize formats, handle inconsistencies), and load data into a unified data warehouse for analysis. This ensures data quality and consistency across sources.

Q 19. How do you communicate complex data insights to non-technical audiences?

Communicating complex data insights to non-technical audiences requires translating technical jargon into plain language and using visualizations to effectively convey key findings. Instead of focusing on statistical details, I prioritize the story and the implications of the data.

My approach involves:

- Storytelling: Frame the data insights within a compelling narrative that highlights the key takeaways and their relevance to the audience.

- Visualizations: Use charts, graphs, and other visuals to simplify complex data and make it easier to understand. Choose appropriate visualization types depending on the data and the message.

- Analogies and Metaphors: Use relatable analogies and metaphors to explain complex concepts in a simplified manner. This makes the information more accessible and memorable.

- Interactive Dashboards: For more interactive communication, create dashboards that allow users to explore the data themselves and discover insights.

- Plain Language: Avoid technical jargon and use clear, concise language that everyone can understand.

For example, when presenting customer churn analysis to executives, I wouldn’t overwhelm them with statistical models. Instead, I’d focus on the key drivers of churn, illustrating them with clear visuals (e.g., a bar chart showing the impact of customer service issues on churn) and summarizing the key actions needed to reduce churn.

Q 20. Explain your experience with version control systems for data projects (e.g., Git).

Version control is indispensable for data projects, ensuring collaboration, traceability, and reproducibility. I have extensive experience with Git, utilizing it for managing code, data, and documentation throughout the project lifecycle.

My workflow typically involves:

- Repository Setup: Creating a Git repository to store all project-related files. This includes code for data processing, analysis scripts, and even data files (especially smaller datasets).

- Branching and Merging: Employing branching strategies to isolate development work, allowing multiple team members to contribute concurrently without conflicts. Regularly merging changes back into the main branch ensures everyone works with the latest updates.

- Committing and Pushing: Frequently committing changes with clear and descriptive commit messages that explain the modifications. Pushing these changes to a remote repository (like GitHub or GitLab) facilitates collaboration and backups.

- Pull Requests: Using pull requests for code reviews and collaborative development, ensuring that changes are reviewed and approved before merging into the main branch.

- Conflict Resolution: Proficiently resolving merge conflicts, either manually or using Git tools, to maintain a consistent project history.

Using Git allows me to track changes, revert to previous versions if necessary, and collaborate effectively with others. For larger datasets, I might use Git LFS (Large File Storage) to manage large files efficiently.

Q 21. Describe your experience with big data technologies (e.g., Hadoop, Spark).

I have experience with big data technologies like Hadoop and Spark, enabling me to process and analyze massive datasets that exceed the capacity of traditional relational databases. I understand the distributed computing paradigms these technologies offer.

My experience includes:

- Hadoop: Working with the Hadoop Distributed File System (HDFS) for storing and managing large datasets, and using MapReduce for parallel processing of data. I’m familiar with the Hadoop ecosystem, including tools like Hive and Pig for data querying and manipulation.

- Spark: Utilizing Spark’s in-memory processing capabilities for faster and more efficient data analysis compared to Hadoop MapReduce, which writes intermediate results to disk. I’ve worked with Spark SQL for structured data processing and Spark MLlib for machine learning tasks on large datasets.

- Data Warehousing and ETL: Building data pipelines to ingest, process, and load data into data warehouses using big data tools. This ensures data is available for analysis and reporting.

- Cloud-based Big Data Services: Leveraging cloud platforms (like AWS, Azure, or GCP) that provide managed big data services. This simplifies the management and scalability of big data infrastructure.

For example, I’ve used Spark to perform real-time analysis of streaming data from social media platforms, processing terabytes of data to identify trending topics or customer sentiment. The scalability and speed of Spark were crucial for this task.
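
The MapReduce paradigm mentioned above can be illustrated without a cluster. This single-process sketch only mimics the three phases (map, shuffle, reduce) on a word count. It is not Hadoop or Spark code, and the documents and function names are placeholders.

```python
from collections import defaultdict
from functools import reduce

def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]

def shuffle(pairs):
    """Shuffle: group emitted values by key, as the framework would across nodes."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reduce: combine the values for each key into a single count."""
    return {key: reduce(lambda a, b: a + b, values) for key, values in groups.items()}

documents = ["Spark is fast", "Hadoop is reliable", "spark scales"]
pairs = [pair for doc in documents for pair in map_phase(doc)]
counts = reduce_phase(shuffle(pairs))
print(counts)
```

In a real cluster the map and reduce calls run in parallel on different machines, and the shuffle step moves data between them over the network.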

Q 22. How do you ensure data security and privacy?

Data security and privacy are paramount in my work. I employ a multi-layered approach, starting with robust data encryption both in transit and at rest. This involves using protocols like TLS/SSL for secure communication and encryption algorithms like AES-256 for data storage. Access control is crucial; I implement role-based access control (RBAC) to restrict access to sensitive data based on individual roles and responsibilities. For example, only authorized analysts would have access to personally identifiable information (PII). Furthermore, I rigorously adhere to data minimization principles, collecting only the necessary data and deleting it when no longer required. Regular security audits and penetration testing are also integral to identifying and mitigating vulnerabilities. Finally, I ensure compliance with relevant data privacy regulations like GDPR and CCPA, implementing procedures for data subject requests and breach notification.

Think of it like a fortress: encryption is the walls, access control is the gatekeepers, data minimization is reducing the size of the fortress, and audits are the regular inspections to ensure everything is secure. Each layer adds to the overall security posture.
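As a rough illustration of the access-control layer, here is a minimal RBAC sketch in Python. The role names, permission names, and the list of PII fields are all hypothetical; a production system would normally rely on the access controls of the database or cloud platform rather than application code like this.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"read_records"},
    "privacy_analyst": {"read_records", "read_pii"},
    "admin": {"read_records", "read_pii", "delete_records"},
}

# Hypothetical set of fields treated as personally identifiable information.
PII_FIELDS = {"name", "email", "phone"}


def is_allowed(role, permission):
    """Return True if the role has been granted the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def view_record(role, record):
    """Return a copy of the record, masking PII unless the role may read it."""
    if not is_allowed(role, "read_records"):
        raise PermissionError(f"role {role!r} may not read records")
    if is_allowed(role, "read_pii"):
        return dict(record)
    return {k: ("***" if k in PII_FIELDS else v) for k, v in record.items()}


record = {"name": "Ada", "email": "ada@example.com", "total_spend": 120}
masked = view_record("analyst", record)
full = view_record("privacy_analyst", record)
```

Unknown roles get no permissions by default, so access has to be granted explicitly rather than removed after the fact.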

Q 23. Explain your experience with data governance policies and procedures.

My experience with data governance is extensive. I’ve been involved in developing and implementing data governance policies across several projects, focusing on data quality, security, and compliance. This includes defining data ownership, establishing data quality metrics, and creating data dictionaries to ensure consistency and clarity. I’ve worked with various teams to establish data governance frameworks, utilizing tools to monitor data quality and compliance. For instance, in a previous role, we implemented a data quality monitoring system that alerted us to anomalies or inconsistencies in our datasets, allowing for prompt remediation. This ensured the accuracy and reliability of our analyses and reports, ultimately contributing to better business decisions.

A key aspect of this is fostering a data-driven culture where everyone understands their responsibility in maintaining data quality and security. We achieve this through regular training and communication.
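A data quality monitor of the kind described above can start very simply. The sketch below is an assumption about what such a check might look like, not the system from that project: the function name, the 5% threshold, and the sample rows are hypothetical. It flags any required field whose share of missing values exceeds a limit.

```python
def check_quality(rows, required_fields, max_null_rate=0.05):
    """Return alert messages for fields whose missing-value rate exceeds the limit."""
    total = len(rows)
    if total == 0:
        return ["dataset is empty"]
    alerts = []
    for field in required_fields:
        # Treat None, an empty string, and an absent key as missing.
        missing = sum(1 for row in rows if row.get(field) in (None, ""))
        rate = missing / total
        if rate > max_null_rate:
            alerts.append(f"{field}: {rate:.0%} missing (limit {max_null_rate:.0%})")
    return alerts


# Made-up sample rows for illustration only.
rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 3, "email": ""},
    {"id": 4, "email": "d@example.com"},
]
alerts = check_quality(rows, ["id", "email"])
```

In practice the alerts would feed a dashboard or notification channel, and the thresholds would come from the agreed data quality metrics.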

Q 24. Describe a time you had to deal with a large and complex dataset. What challenges did you face and how did you overcome them?

I once worked with a massive dataset containing billions of customer transactions over five years. The sheer volume presented several challenges. Processing this data on a single machine was impractical, so we used a distributed computing framework (Hadoop/Spark). This required careful partitioning of the data so that work was spread evenly across the cluster. Another challenge was the data’s inconsistency: some fields were missing, while others contained errors. We used data cleaning techniques, including imputation for missing values and anomaly detection to identify and correct erroneous data points. Finally, a dataset of this size and width is hard to visualize and interpret directly, so we applied dimensionality reduction techniques like PCA to simplify the data before plotting it and before applying machine learning algorithms for predictive modeling.

Overcoming these challenges involved meticulous planning, selecting the right tools and technologies, and a strong understanding of distributed computing and data cleaning techniques. Teamwork and effective communication were also crucial in coordinating the various aspects of the project.
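The two cleaning steps mentioned above, imputation and anomaly detection, can be sketched with the Python standard library alone. This is one possible approach under stated assumptions, not the method used on that project: it imputes with the median and flags outliers with the modified z-score (median absolute deviation scaled by 0.6745, with the commonly used cutoff of 3.5). The function names and sample amounts are hypothetical.

```python
import statistics


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


def flag_outliers(values, threshold=3.5):
    """Flag values whose modified z-score (based on the MAD) exceeds the threshold."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        # All deviations are zero for at least half the data; nothing to flag.
        return [False] * len(values)
    return [abs(0.6745 * (v - median) / mad) > threshold for v in values]


# Made-up transaction amounts: one missing value and one suspicious entry.
amounts = [20.0, 22.5, None, 19.0, 21.0, 950.0, 23.0]
imputed = impute_median(amounts)
flags = flag_outliers(imputed)
```

Median-based statistics are used here because a single extreme value distorts the mean and standard deviation, which can hide the very outlier being looked for.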

Q 25. What are some ethical considerations in data analysis?

Ethical considerations in data analysis are paramount. Bias is a major concern; algorithms trained on biased data can perpetuate and amplify existing inequalities. For instance, a facial recognition system trained primarily on images of white faces might perform poorly on images of people with darker skin tones. Therefore, ensuring data representativeness and mitigating biases during data collection and model building are critical. Privacy is another key consideration; we must always protect the privacy of individuals whose data we are analyzing, adhering to relevant regulations and ethical guidelines. Transparency is essential; we need to be clear about how data is being collected, used, and analyzed, and we should be prepared to justify our methods and findings. Finally, we must consider the potential societal impact of our analyses and strive to use data for good, avoiding its misuse for discriminatory or harmful purposes.

Ethical data analysis requires a conscious effort to be aware of potential biases and their consequences and to prioritize responsible data handling practices.
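One simple way to check for the kind of bias described above is to compare a model's accuracy across groups instead of looking only at the overall number. The sketch below assumes each record is a hypothetical `(group, actual, predicted)` tuple; the data is made up purely to show the calculation.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute prediction accuracy separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, actual, predicted in records:
        total[group] += 1
        correct[group] += int(actual == predicted)
    return {group: correct[group] / total[group] for group in total}


# Made-up evaluation records: (group, actual label, predicted label).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]
per_group = accuracy_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
```

A large gap between groups is a signal to revisit how representative the training data is before the model is used for decisions.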

Q 26. How do you stay up-to-date with the latest advancements in data acquisition and analysis?

Staying current in this rapidly evolving field requires continuous learning. I actively participate in online courses offered by platforms like Coursera and edX, focusing on areas like deep learning, advanced statistical modeling, and big data technologies. I regularly attend industry conferences and workshops to learn about the latest advancements and network with other professionals. I also follow prominent researchers and practitioners on social media platforms and read peer-reviewed publications to stay abreast of the latest research findings. Subscription to relevant journals and newsletters ensures that I am informed about new methods and tools.

Continuous learning isn’t just about keeping up; it’s about staying ahead of the curve and being able to apply the latest techniques to solve real-world problems.

Q 27. Describe your experience with A/B testing and experimental design.

A/B testing and experimental design are crucial for evaluating the effectiveness of different interventions. My experience involves designing controlled experiments to compare different versions of websites, marketing campaigns, or product features. This involves defining a clear hypothesis, randomly assigning users to different groups (A and B), and carefully measuring the key metrics. For example, I might conduct an A/B test to compare the click-through rates of two different website designs. The design process includes calculating the sample size needed to detect the smallest effect of interest with adequate statistical power at the chosen significance level, ensuring the randomization process is robust, and controlling for confounding variables. After the experiment, I first clean and prepare the data, including checking for outliers, and then analyze the results using statistical methods, such as hypothesis testing, to determine whether there is a statistically significant difference between the two groups.

Proper experimental design and statistical analysis are crucial to ensure the results are reliable and meaningful.
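For the click-through example, the hypothesis test could be a two-proportion z-test. The following is a minimal sketch using only the standard library; the function name and the click counts are hypothetical, and it assumes samples large enough for the normal approximation to hold.

```python
import math


def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """Return (z, two-sided p-value) for the difference in click-through rates."""
    rate_a = clicks_a / users_a
    rate_b = clicks_b / users_b
    # Pooled rate under the null hypothesis that both designs perform the same.
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up results: design A gets 200 clicks from 5000 users, design B gets 260.
z, p_value = two_proportion_z_test(200, 5000, 260, 5000)
significant = p_value < 0.05
```

The significance threshold (0.05 here) should be fixed before the experiment starts, alongside the sample size, so the result is not influenced by peeking at the data.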

Q 28. How do you prioritize different data analysis tasks?

Prioritizing data analysis tasks involves a blend of strategic considerations and practical constraints. I use a framework that considers the business value, urgency, feasibility, and resource availability. High-value tasks that deliver significant business insights and are urgently needed get priority. I also assess the feasibility – some tasks might require significant resources or expertise which might delay them. Resource constraints such as time, personnel, and computing power also play a role in prioritization. I often use a combination of methods like MoSCoW (Must have, Should have, Could have, Won’t have) and prioritization matrices to rank tasks based on these factors. This structured approach ensures that we focus our efforts on the most impactful analyses first, maximizing the return on investment of our data analysis work.

It’s not just about speed, it’s about strategic alignment and efficient resource allocation.
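A prioritization matrix like the one described above can be expressed as a weighted score. In this sketch the weights, the 1 to 5 rating scale, and the task names are all hypothetical; the point is only to show how the four factors combine into a ranking.

```python
# Hypothetical weights for each factor; they sum to 1.0.
WEIGHTS = {"value": 0.4, "urgency": 0.3, "feasibility": 0.2, "resources": 0.1}


def priority_score(task):
    """Weighted sum of the task's 1-5 ratings (higher means do it sooner)."""
    return sum(weight * task[factor] for factor, weight in WEIGHTS.items())


def rank_tasks(tasks):
    """Return tasks ordered from highest to lowest priority score."""
    return sorted(tasks, key=priority_score, reverse=True)


# Made-up tasks rated 1 (low) to 5 (high) on each factor.
tasks = [
    {"name": "churn model", "value": 5, "urgency": 4, "feasibility": 3, "resources": 2},
    {"name": "dashboard refresh", "value": 3, "urgency": 5, "feasibility": 5, "resources": 4},
    {"name": "exploratory clustering", "value": 2, "urgency": 1, "feasibility": 4, "resources": 3},
]
ranked_names = [task["name"] for task in rank_tasks(tasks)]
```

The scores are a starting point for discussion with stakeholders, not a replacement for it; adjusting the weights makes the trade-offs explicit.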

Key Topics to Learn for Knowledge of Data Acquisition and Analysis Techniques Interview

- Data Acquisition Methods: Understanding various data sources (databases, APIs, web scraping, sensors), data formats (structured, semi-structured, unstructured), and best practices for data collection, ensuring data quality and integrity.

- Data Cleaning and Preprocessing: Mastering techniques like handling missing values, outlier detection and treatment, data transformation (normalization, standardization), and feature engineering to prepare data for analysis.

- Exploratory Data Analysis (EDA): Proficiency in using statistical summaries, data visualization (histograms, scatter plots, box plots), and other techniques to gain insights from data and identify patterns before formal modeling.

- Data Analysis Techniques: Understanding and applying statistical methods (regression, hypothesis testing, ANOVA), machine learning algorithms (classification, regression, clustering), and their appropriate application based on the data and problem.

- Data Visualization and Communication: Creating effective visualizations (charts, dashboards) to communicate findings clearly and concisely to both technical and non-technical audiences. Practicing explaining complex data insights in a simple, understandable way.

- Database Management Systems (DBMS): Familiarity with SQL and NoSQL databases, including querying, data manipulation, and understanding database design principles for efficient data storage and retrieval.

- Big Data Technologies (Optional): Depending on the role, understanding concepts related to Hadoop, Spark, or cloud-based data platforms might be beneficial. Focus on demonstrating understanding of scalable data processing techniques.

- Ethical Considerations: Understanding data privacy, bias in data, and responsible data handling practices.

Next Steps

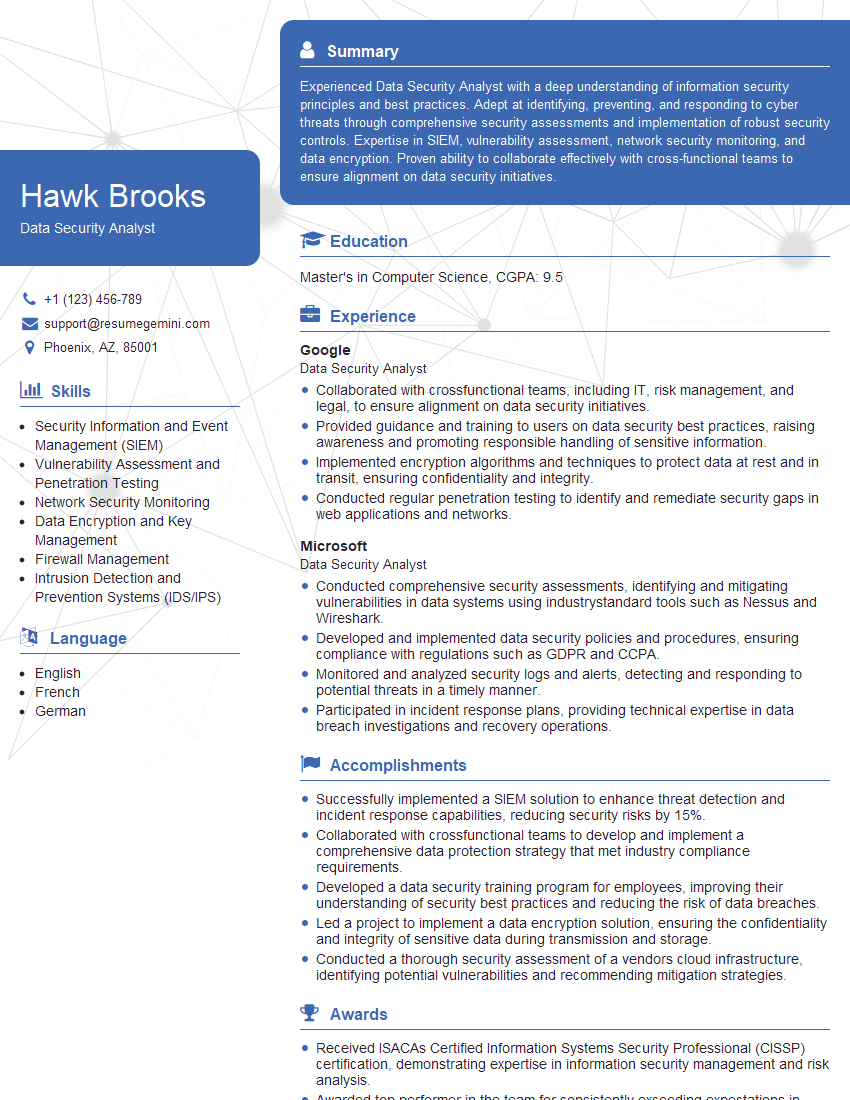

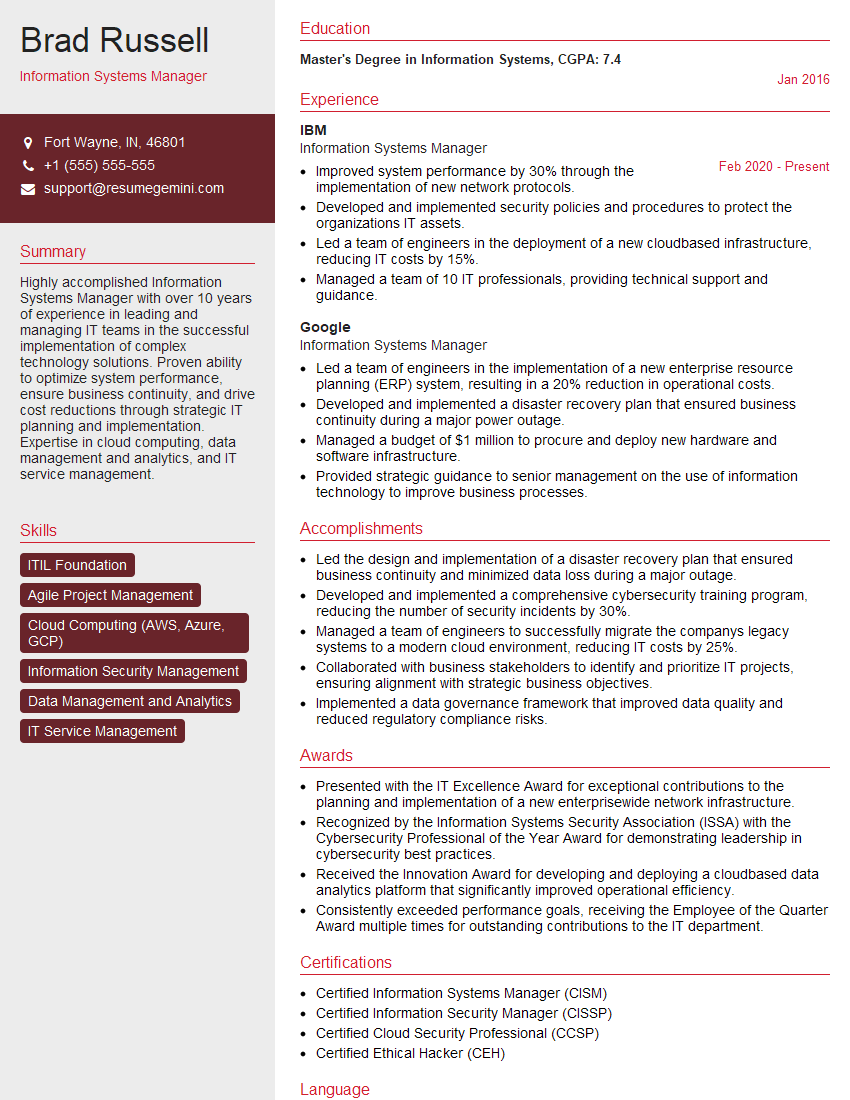

Mastering data acquisition and analysis techniques is crucial for career advancement in today’s data-driven world. These skills are highly sought after across numerous industries, opening doors to exciting and rewarding opportunities. To maximize your job prospects, crafting an ATS-friendly resume that highlights your expertise is essential. ResumeGemini is a trusted resource that can help you build a professional and impactful resume tailored to your specific skills and experience. We provide examples of resumes designed for candidates with expertise in data acquisition and analysis techniques to guide you in creating your own compelling application materials.
