The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Assembly and Testing interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Assembly and Testing Interview

Q 1. Explain the difference between verification and validation in assembly testing.

In assembly testing, verification and validation are distinct but equally crucial processes ensuring the quality of the final product. Think of it like baking a cake: verification checks if you’re following the recipe correctly (are you using the right ingredients and steps?), while validation checks if the final product meets your expectations (is the cake delicious and properly baked?).

Verification focuses on confirming that the assembled product adheres to the design specifications. This involves detailed checks at each stage of assembly, comparing the actual implementation against the intended design. For example, verifying that the correct number of components are used, that connections are made properly, and that components are placed in the right location.

Validation, on the other hand, focuses on determining if the assembled product meets the overall requirements and functionalities. This involves testing the complete assembly to ensure it performs as intended. For example, testing the functionality of a circuit board after assembly to ensure all components work together correctly, or validating the structural integrity of a mechanical assembly under load.

In essence, verification confirms that you built the product *right*, while validation confirms that you built the *right* product.

Q 2. Describe your experience with automated test equipment (ATE).

I have extensive experience using Automated Test Equipment (ATE) in various assembly testing contexts. My experience spans different ATE platforms, including Teradyne, Advantest, and Schlumberger systems. I’m proficient in programming ATE systems using languages like C++, Python, and proprietary ATE scripting languages. A significant portion of my work involved developing and implementing automated test routines for high-volume manufacturing of complex electronic assemblies. This includes defining test strategies, writing test programs, configuring the ATE hardware, and analyzing test results.

For instance, I developed an automated test program for a high-speed data acquisition system that used a Teradyne UltraFLEX system. This program automatically tested the functionality of multiple analog and digital circuits, with a throughput exceeding 100 units per hour. The process involved analyzing the circuit schematics, creating a comprehensive test plan, implementing the test program in the ATE’s scripting language, debugging and optimizing the test sequence, and creating comprehensive reports with statistical analysis of test outcomes.

Q 3. How do you handle test failures during assembly?

Handling test failures during assembly requires a systematic approach. The first step is always to thoroughly document the failure. This includes documenting the specific test that failed, the exact error message (if any), and relevant environmental conditions. After documentation, I typically follow these steps:

- Isolate the Problem: Using debugging tools and techniques, I narrow down the potential sources of the failure. This may involve visual inspection, checking component values, and using diagnostic tools.

- Root Cause Analysis: Once the problem area is identified, I conduct a root cause analysis to determine the underlying reason for the failure. This may involve reviewing assembly procedures, inspecting component quality, and examining the design specifications.

- Corrective Action: Based on the root cause analysis, I implement appropriate corrective actions. This may involve repairing faulty components, correcting assembly procedures, or modifying the design.

- Verification and Retesting: Once the corrective actions are implemented, I re-run the test to verify that the failure has been resolved. I also perform additional tests to ensure that the correction didn’t introduce other issues.

- Documentation and Reporting: All actions taken, including the root cause analysis and corrective actions, are meticulously documented and reported. This information is vital for continuous improvement and preventing similar failures in the future.

For example, in one instance where a power supply consistently failed during testing, systematic investigation revealed a faulty solder joint, which was fixed by correcting the soldering technique. Documenting the cause and the fix kept the issue from recurring.

Q 4. What are the common types of assembly tests?

Common types of assembly tests encompass a broad spectrum depending on the assembly’s complexity and intended functionality. These tests can be categorized as:

- In-circuit testing (ICT): Tests individual components and their interconnections on a printed circuit board (PCB) to identify faulty components or incorrect connections.

- Functional testing: Verifies that the assembled product performs its intended function according to specifications. This may involve testing the overall functionality, individual modules, or specific performance metrics.

- Environmental testing: Assesses the assembly’s ability to withstand environmental conditions such as temperature extremes, humidity, vibration, and shock. This is crucial for products destined for harsh environments.

- Mechanical testing: Evaluates the mechanical properties of the assembly, such as strength, durability, and dimensional accuracy. Examples include stress testing, fatigue testing, and dimensional verification.

- Electrical testing: Verifies the electrical characteristics of the assembly, such as voltage, current, resistance, and impedance. This often includes measurements using multimeters, oscilloscopes, and other specialized electrical test equipment.

The choice of tests often depends on the assembly type. For example, a complex electronic assembly might require ICT, functional, and environmental testing, while a simpler mechanical assembly might focus on mechanical and dimensional tests.

Q 5. Explain your experience with different testing methodologies (e.g., unit, integration, system).

My experience encompasses various testing methodologies, applied strategically depending on the complexity of the system under test.

- Unit testing: I have extensively used this at the component level within an assembly, testing individual parts independently to isolate faults. For instance, testing individual transistors before integration into a larger circuit. This allows faster debugging and problem identification.

- Integration testing: I’m skilled in assembling and testing multiple components or modules together to ensure proper interaction and communication. This is vital for identifying interface issues between different parts of the final assembly.

- System testing: I’m proficient in evaluating the completed assembly as a whole to ensure that it meets the overall design specifications and fulfills its intended functionality. This includes testing against requirements, performance criteria, and user acceptance criteria.

In practice, these methods are often combined; unit testing informs integration testing, which then informs system testing. A well-structured approach using these methods ensures thorough testing and minimizes unexpected problems later in the process.

Q 6. Describe your experience with debugging assembly errors.

Debugging assembly errors requires a methodical approach that combines technical expertise with problem-solving skills. My process typically includes:

- Reproduce the error: The first step is to reliably reproduce the error. This often involves careful execution of the failing test case, noting all parameters and conditions.

- Analyze the error: Using debugging tools such as logic analyzers, oscilloscopes, and debuggers, I examine the assembly’s behavior during the error. This might involve examining signals, memory contents, and program flow.

- Isolate the problem: I use a divide-and-conquer approach, systematically isolating the faulty component or section of the code. This may involve removing components or using partial assemblies to narrow down the source of the problem.

- Review Design and Assembly Procedures: A thorough review of the design specifications and assembly procedures can identify potential issues leading to the error. This includes checking for design flaws, misinterpretations, or assembly errors.

- Implement Corrective Actions: After identifying the root cause, I implement the necessary corrections, which can range from replacing faulty components to modifying the software or hardware design.

- Retest: Once corrections are made, I rigorously retest the assembly to confirm that the error has been resolved.

For example, in a recent issue with an embedded system, careful signal analysis and a design review revealed a timing conflict caused by inaccurate clock signal generation.

Q 7. How do you ensure the accuracy and reliability of your test results?

Ensuring accurate and reliable test results is paramount. My approach focuses on several key aspects:

- Calibration and Maintenance: Regular calibration and maintenance of test equipment are essential to guarantee the accuracy of measurements. This includes documented calibration schedules and rigorous maintenance procedures.

- Test Fixture Design: Well-designed test fixtures minimize measurement errors and ensure consistent testing conditions. Proper grounding, shielding, and signal integrity are vital.

- Test Procedure Development: Clear and unambiguous test procedures prevent human error and ensure consistent results. The procedures should include detailed steps, expected outcomes, and pass/fail criteria.

- Statistical Process Control (SPC): Implementing SPC techniques helps monitor test data for trends and outliers, providing insights into process variation and potential problems. Control charts, for example, are valuable tools for this.

- Traceability: Maintaining traceability throughout the entire testing process is crucial. This involves careful record-keeping, documenting all test results, calibration data, and corrective actions taken.

- Peer Review: Having colleagues review test procedures and results adds another layer of verification and helps identify potential biases or errors.

Through these measures, confidence in the reliability and accuracy of test results is significantly enhanced, leading to higher-quality products and fewer costly mistakes.

Q 8. What are some common challenges in assembly testing and how have you overcome them?

Assembly testing, while crucial for ensuring product quality, presents unique challenges. One major hurdle is the complexity of the process itself. With numerous components and steps, isolating the root cause of a failure can be time-consuming. Another significant challenge lies in achieving high test coverage, as testing every possible combination of parts and assembly sequences can be impractical. Finally, balancing speed and thoroughness is critical; we need to deliver products quickly without compromising quality.

To overcome these challenges, I’ve employed several strategies. For complex failures, I use a structured debugging approach, starting with a visual inspection and gradually narrowing down the potential culprits. I utilize fault injection techniques—intentionally introducing minor defects to examine error handling and resilience. In terms of test coverage, I prioritize risk-based testing, focusing on areas identified as higher risk through Failure Mode and Effects Analysis (FMEA). Automation is also key – robotic systems or specialized tools execute repetitive tests, allowing us to increase testing speed and reduce human error. Lastly, I meticulously analyze historical data to identify recurring issues, allowing preventative measures to be implemented to reduce future problems.

Q 9. Describe your experience with test fixture design and development.

Test fixture design is fundamental to effective assembly testing. A well-designed fixture ensures consistent and repeatable testing. My experience encompasses designing fixtures for a variety of applications, from simple mechanical clamping systems to complex automated setups integrating sensor feedback. I’ve worked with both off-the-shelf and custom-designed fixtures. A critical aspect of my work is to account for potential variations in the components being tested—this involves designing fixtures that can accommodate tolerances and still provide accurate measurements.

For instance, in testing the assembly of a small motor, I designed a fixture that precisely positioned the motor housing, allowing for consistent torque measurement during the assembly process. The fixture included adjustable clamping mechanisms to accommodate minor variations in the housing dimensions. It also incorporated sensors to measure torque and rotational speed. The software controlling the fixture automated the torque application and recorded the readings, providing reliable, repeatable data. This automated approach significantly improved efficiency compared to manual testing.

Q 10. How do you manage and document test procedures?

Managing and documenting test procedures is essential for reproducibility and traceability. My approach involves a combination of structured documentation and version control. I utilize a standardized template for test procedures, including detailed steps, expected results, and acceptance criteria. This template ensures consistency across all test procedures and allows for easy review and update. The procedures are stored in a centralized repository (usually a version control system like Git) to facilitate collaborative work and track changes over time. Each revision is tagged with a date and description, making it simple to audit the history of testing.

Furthermore, test reports are automatically generated after each test run, summarizing the results and highlighting any failures. These reports include relevant data points such as timestamps, sensor readings, and images captured during the test. This detailed documentation provides a complete audit trail, allowing for quick identification of problems and improved future iterations.
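As a rough illustration of the automated reporting described above, the sketch below checks each measurement against its limits and summarizes the run as JSON. The function name `build_report`, the record keys (`step`, `value`, `low`, `high`), and the sample readings are all hypothetical; a real report would follow whatever template the team has standardized on.

```python
import json
from datetime import datetime, timezone

def build_report(procedure_rev, measurements):
    """Summarize one test run.

    `measurements` is a list of dicts with hypothetical keys:
    step (name), value (reading), low/high (acceptance limits).
    """
    results = []
    for m in measurements:
        # A step passes when the reading lies inside its acceptance limits.
        passed = m["low"] <= m["value"] <= m["high"]
        results.append({**m, "passed": passed})
    failures = [r["step"] for r in results if not r["passed"]]
    return {
        "procedure_rev": procedure_rev,  # ties the run to a procedure revision
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "results": results,
        "failures": failures,
        "overall": "PASS" if not failures else "FAIL",
    }

# Illustrative readings only, not real data.
report = build_report("rev-B", [
    {"step": "5V rail", "value": 5.02, "low": 4.75, "high": 5.25},
    {"step": "fastener torque (N*m)", "value": 1.9, "low": 2.0, "high": 2.5},
])
print(json.dumps(report, indent=2))
```

Recording the procedure revision in every report is one simple way to link a result back to the exact version of the procedure that produced it.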

Q 11. What software or tools have you used for assembly testing?

Throughout my career, I’ve used a wide range of software and tools for assembly testing. These include National Instruments LabVIEW for data acquisition and control of automated test systems; Python with libraries like NumPy and SciPy for data analysis and statistical process control; and specialized software from equipment vendors for calibration and sensor management. For example, in one project, we used LabVIEW to interface with a vision system to automatically inspect the assembly of printed circuit boards, identifying any misaligned components or soldering defects. For managing large datasets generated during testing, I relied on databases to store and retrieve data efficiently. The selection of the tools always depends on the specific requirements of the project, balancing cost, functionality, and ease of use.

Q 12. Explain your experience with statistical process control (SPC) in assembly.

Statistical Process Control (SPC) plays a crucial role in monitoring and improving assembly processes. My experience with SPC involves using control charts to track key process parameters: X-bar and R charts for measured (variable) data such as assembly time and component dimensions, and c and u charts for count data such as defects per unit. By regularly monitoring these charts, I can identify trends and patterns indicating potential issues before they lead to major problems. For example, an upward trend in defect rate might signal the need for adjustments to the assembly process or preventative maintenance of equipment.
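To make the control-chart idea concrete, here is a minimal sketch that computes X-bar and R chart limits from subgroups of five measurements. The constants A2, D3, and D4 are the standard tabulated values for a subgroup size of 5; the function name `xbar_r_limits` and the measurement values are made up for illustration.

```python
from statistics import mean

# Standard control-chart constants for subgroups of size 5.
A2, D3, D4 = 0.577, 0.0, 2.114

def xbar_r_limits(subgroups):
    """Return (LCL, center line, UCL) for the X-bar chart and the R chart."""
    if any(len(g) != 5 for g in subgroups):
        raise ValueError("constants above are only valid for subgroups of 5")
    xbars = [mean(g) for g in subgroups]           # subgroup averages
    ranges = [max(g) - min(g) for g in subgroups]  # subgroup ranges
    grand_mean, r_bar = mean(xbars), mean(ranges)
    return {
        "xbar": (grand_mean - A2 * r_bar, grand_mean, grand_mean + A2 * r_bar),
        "range": (D3 * r_bar, r_bar, D4 * r_bar),
    }

# Illustrative component dimensions in mm, not real data.
subgroups = [
    [10.0, 10.2, 9.9, 10.1, 10.0],
    [10.1, 10.0, 10.3, 9.8, 10.0],
    [9.9, 10.0, 10.1, 10.2, 10.0],
]
limits = xbar_r_limits(subgroups)
print(limits)
```

A subgroup average or range that falls outside these limits is the usual signal to stop and look for an assignable cause.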

The data collected for SPC charts is also used to determine process capability indices (Cp and Cpk), offering a quantitative assessment of the process’s ability to meet specifications. This information assists in setting goals for continuous improvement, and by identifying the assignable causes of variation, we can focus efforts on the most significant areas for quality enhancement.
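The capability indices can be sketched in a few lines. This example uses the textbook definitions Cp = (USL - LSL) / 6σ and Cpk = min(USL - μ, μ - LSL) / 3σ. As a simplifying assumption it estimates σ with the overall sample standard deviation; many SPC references estimate it from within-subgroup variation instead. The function name, spec limits, and samples are illustrative.

```python
from statistics import mean, stdev

def process_capability(samples, lsl, usl):
    """Return (Cp, Cpk) for measurements against lower/upper spec limits."""
    mu, sigma = mean(samples), stdev(samples)
    cp = (usl - lsl) / (6 * sigma)               # potential capability (spread only)
    cpk = min(usl - mu, mu - lsl) / (3 * sigma)  # penalizes an off-center process
    return cp, cpk

# Illustrative measurements in mm against a 9.7-10.3 mm specification.
cp, cpk = process_capability([9.9, 10.0, 10.1, 10.0, 10.0], lsl=9.7, usl=10.3)
print(f"Cp={cp:.2f}  Cpk={cpk:.2f}")
```

Cpk can never exceed Cp; the two are equal only when the process mean sits exactly midway between the specification limits.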

Q 13. How do you prioritize test cases based on risk and criticality?

Prioritizing test cases is vital, especially when dealing with constrained time and resources. I utilize a risk-based approach, employing techniques such as Failure Mode and Effects Analysis (FMEA) to identify potential failure modes and their associated risks. Test cases are then prioritized based on the severity and probability of these failure modes. For instance, a failure mode with high severity (e.g., safety-critical) and high probability receives top priority, while less critical failures are assigned lower priority.

I also consider the criticality of different system functionalities. Test cases focused on core functionalities and essential components are usually prioritized higher. This ensures that the most important aspects of the product are thoroughly tested. Alongside FMEA, I consider test coverage metrics to ensure that we are testing the most crucial functionalities while optimizing our efforts.
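A minimal sketch of this prioritization, assuming each failure mode has been scored on 1-10 scales for severity and probability. The class name `Case`, the scales, and the example entries are hypothetical. Note that a classic FMEA risk priority number also multiplies in a detection rating, which is left out here to match the two factors named above.

```python
from dataclasses import dataclass

@dataclass
class Case:
    name: str
    severity: int     # 1 (minor) .. 10 (safety-critical), assumed scale
    probability: int  # 1 (rare) .. 10 (frequent), assumed scale

    @property
    def risk(self) -> int:
        # Simple risk score: severity times probability.
        return self.severity * self.probability

def prioritize(cases):
    """Highest risk first; ties are broken in favor of higher severity."""
    return sorted(cases, key=lambda c: (c.risk, c.severity), reverse=True)

# Illustrative test cases, not taken from a real FMEA.
ordered = prioritize([
    Case("label placement", severity=2, probability=5),
    Case("battery protection circuit", severity=10, probability=3),
    Case("connector retention", severity=9, probability=6),
])
for c in ordered:
    print(f"{c.risk:3d}  {c.name}")
```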

Q 14. Describe your experience with different types of sensors used in assembly testing.

My experience with sensors in assembly testing covers a broad range of technologies. These include force sensors (measuring insertion force or clamping pressure), torque sensors (assessing the tightness of fasteners), vision systems (inspecting component alignment and quality), proximity sensors (detecting the presence of components), and temperature sensors (monitoring heat build-up during soldering or other processes). The choice of sensor depends heavily on the specific testing requirements.

For example, on an automotive assembly line, we might use vision systems to ensure that all components are correctly installed and oriented, while force sensors would monitor the insertion force during the assembly of critical components. Beyond choosing the right sensors, the methodology for data acquisition, processing, and analysis is critical. On high-speed lines, fast sensor response and processing times are important. Careful calibration of sensors and maintaining their accuracy are paramount.

Q 15. How do you troubleshoot issues related to test equipment malfunctions?

Troubleshooting test equipment malfunctions requires a systematic approach. Think of it like diagnosing a car problem – you wouldn’t just start replacing parts randomly. Instead, you follow a logical process.

- Initial Observation: Carefully note the error messages, unusual readings, or behavior exhibited by the equipment. Document everything precisely.

- Calibration Check: First, ensure the equipment is properly calibrated. A simple calibration issue can often mimic a more serious problem.

- Visual Inspection: Look for any obvious physical damage, loose connections, or signs of overheating. A burnt resistor or a loose cable is easily missed but can cause significant problems.

- Component Testing: If the problem persists, systematically test individual components. This might involve isolating sections of the equipment and verifying their functionality using known good inputs and checking against expected outputs.

- Signal Tracing: Using an oscilloscope or logic analyzer, trace the signals through the equipment to identify the point of failure. This is where understanding the internal workings of the equipment is crucial.

- Reference Documentation: Consult the equipment’s service manual, schematics, or troubleshooting guides. Manufacturers often provide valuable resources.

- Seeking External Support: If all else fails, contact the manufacturer’s support or a qualified technician. Sometimes, specialized knowledge is required.

For example, I once encountered a situation where a spectrum analyzer displayed erratic readings. After careful inspection, I found a loose internal connection causing intermittent signal loss. A simple resoldering fixed the problem. In another case, a faulty calibration led us down a rabbit hole of complex troubleshooting before we realized the issue was a simple miscalibration.

Q 16. Explain your understanding of design for testability (DFT).

Design for Testability (DFT) focuses on making a design easier to test. It’s all about building testability into the product from the beginning, rather than adding it as an afterthought. Think of it as designing a house with easy access to all systems for maintenance. This saves time and money in the long run.

- Accessibility: DFT ensures easy access to internal nodes and signals for testing. This often involves adding test points or using boundary-scan technology (discussed later).

- Controllability: The ability to control internal signals and set the system into various states for testing is crucial. This might involve adding special test modes or registers.

- Observability: It’s vital to be able to observe the internal state of the system easily during testing. This often includes dedicated outputs for monitoring critical signals.

- Built-in Self-Test (BIST): Integrating self-testing capabilities into the design allows the system to check its own functionality without external equipment. This is particularly useful for deployed systems where access is limited.

A good DFT strategy can significantly reduce the time and cost of testing, leading to higher quality products and faster time-to-market.

Q 17. How familiar are you with JTAG and boundary scan testing?

I’m highly familiar with JTAG (Joint Test Action Group) and boundary-scan testing. JTAG, standardized as IEEE 1149.1, is an interface that allows access to internal nodes of an integrated circuit for testing and programming purposes. Boundary-scan is a specific application of JTAG that focuses on testing the connections between components on a printed circuit board (PCB).

Boundary-scan uses dedicated test circuitry within each integrated circuit to allow testing of the connections between the ICs. Imagine it like having tiny little sensors on each chip that can check their connections to neighbouring chips. This is invaluable for finding problems like shorts, open circuits, or incorrect routing on a PCB.

I have extensive experience using JTAG and boundary-scan tools for both in-circuit testing (ICT) and functional testing. It’s incredibly useful for identifying hard-to-find issues that might otherwise only be discovered after assembly.
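The interconnect check that boundary-scan performs can be illustrated with a small software model. This is only a simulation of the idea, not a JTAG driver: one chip drives a walking-ones pattern onto the nets, the neighbouring chip captures what arrives, and any mismatch flags a suspect net. The fault model (shorted nets behave as wired-OR, an open net reads 0 at the receiver) and all function names are assumptions made for the example.

```python
def walking_ones(n):
    """Yield n patterns, each driving exactly one net high."""
    return [[1 if j == i else 0 for j in range(n)] for i in range(n)]

def make_board(shorts=(), opens=()):
    """Model the nets between two chips.

    shorts: pairs of net indices shorted together (assumed wired-OR).
    opens:  net indices that are broken (receiver assumed to read 0).
    """
    def transfer(driven):
        received = list(driven)
        for a, b in shorts:
            received[a] = received[b] = driven[a] | driven[b]
        for net in opens:
            received[net] = 0
        return received
    return transfer

def find_faulty_nets(transfer, n):
    """Drive every pattern and collect nets whose captured value differs."""
    faulty = set()
    for pattern in walking_ones(n):
        captured = transfer(pattern)
        faulty.update(i for i, (d, c) in enumerate(zip(pattern, captured)) if d != c)
    return sorted(faulty)

board = make_board(shorts=[(0, 1)], opens=[3])
print(find_faulty_nets(board, 4))
print(find_faulty_nets(make_board(), 4))
```

In this model the shorted pair shows up because driving one net also raises its neighbour, and the open net shows up because its driven 1 never arrives.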

Q 18. Describe your experience with programming test equipment (e.g., LabVIEW, Python).

I have significant experience programming test equipment using LabVIEW and Python. LabVIEW’s graphical programming environment is particularly well-suited for instrument control and data acquisition. I’ve used it to develop automated test sequences for various applications, including data logging, signal analysis, and stimulus generation. Think of LabVIEW as building with LEGO – you visually connect blocks representing different instrument functions and data processing steps.

Python, with its versatility and extensive libraries like PyVISA and NumPy, provides a powerful alternative for instrument control and data analysis. Its flexibility allows for more complex automation and integration with other systems. I’ve used Python to create custom test scripts and integrate them with database systems for data management and reporting.

For example, I developed a LabVIEW application to control a multi-channel data acquisition system, performing automated measurements and generating comprehensive test reports. Another project involved writing a Python script to automate a complex system-level test, reducing test time by over 50%.
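A hedged sketch of what a small Python measurement routine might look like. The helper works with any object that exposes a `query(command)` method returning a string, which is how a PyVISA resource behaves. So that the example runs without hardware, it uses a stand-in `FakeMeter` class. The SCPI command `MEAS:VOLT:DC?` is common on digital multimeters but is instrument-specific, and the VISA address in the comment is hypothetical.

```python
def measure_dc_voltage(instrument, samples=5):
    """Query a DC voltage reading several times and return the average."""
    readings = [float(instrument.query("MEAS:VOLT:DC?")) for _ in range(samples)]
    return sum(readings) / len(readings)

class FakeMeter:
    """Stand-in for a real instrument so the sketch runs without hardware."""

    def query(self, command):
        if command == "*IDN?":
            return "ACME,DMM-1000,0,1.0"  # made-up identification string
        if command == "MEAS:VOLT:DC?":
            return "+4.998E+00"           # made-up reading
        raise ValueError(f"unsupported command: {command}")

# With real hardware (assumes pyvisa is installed and the address is valid):
#   import pyvisa
#   meter = pyvisa.ResourceManager().open_resource("GPIB0::22::INSTR")
meter = FakeMeter()
print(meter.query("*IDN?"))
voltage = measure_dc_voltage(meter)
print(f"average: {voltage:.3f} V")
```

Keeping the measurement logic independent of how the instrument object is created makes the same routine testable on a bench without equipment attached.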

Q 19. How do you handle conflicting priorities between speed and quality during assembly testing?

Balancing speed and quality in assembly testing is a crucial aspect of efficient manufacturing. It’s a constant negotiation – cutting corners on quality to save time can be disastrous, while excessive testing slows down production. The key is finding the optimal balance.

- Risk-Based Testing: Prioritize testing based on the criticality of the components and potential failure modes. Focus more resources on high-risk areas.

- Statistical Sampling: Instead of testing every single unit, use statistical methods to determine a representative sample size. This reduces testing time while still providing confidence in the quality.

- Automation: Automation dramatically speeds up testing without compromising quality. Automated test equipment can perform repetitive tasks much faster and more consistently than humans.

- Process Optimization: Continuously evaluate the assembly and testing processes to identify and eliminate bottlenecks. Streamlining processes reduces both time and errors.

For instance, implementing a statistical sampling plan reduced our testing time by 40% without impacting the quality of our product. This enabled us to significantly increase throughput while maintaining a high level of reliability.
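One simple way to size a sample, shown here only as an illustration since the plan actually used is not described above, is a zero-failure (success-run) plan. If n randomly chosen units all pass, we can claim with confidence C that the defect rate is at most p, provided n ≥ ln(1 - C) / ln(1 - p). The function name is hypothetical, and the formula assumes random sampling from a large lot.

```python
import math

def zero_failure_sample_size(max_defect_rate, confidence):
    """Units that must all pass to claim the defect rate is at most
    `max_defect_rate` with the given confidence (zero failures allowed)."""
    if not (0 < max_defect_rate < 1 and 0 < confidence < 1):
        raise ValueError("both arguments must lie strictly between 0 and 1")
    return math.ceil(math.log(1 - confidence) / math.log(1 - max_defect_rate))

# 95% confidence that no more than 1% of units are defective.
n = zero_failure_sample_size(max_defect_rate=0.01, confidence=0.95)
print(n)  # 299
```

If any sampled unit fails, the claim no longer holds and the lot typically goes to full inspection or a larger sample.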

Q 20. How do you ensure traceability throughout the assembly and testing process?

Traceability in assembly and testing is paramount. It ensures that every component, process, and test result can be linked back to its origin and history. Think of it as a meticulously maintained audit trail.

- Unique Identification: Assigning unique identifiers (serial numbers, barcodes) to each component and assembly allows for clear tracking throughout the entire process.

- Database Management: Use a database system to record all information related to components, assemblies, test results, and personnel involved. This creates a centralized repository of information.

- Automated Data Logging: Integrate automated data logging into the test equipment to record test results directly into the database. This minimizes the potential for human error.

- Documentation Control: Maintain detailed documentation of all procedures, test plans, and test results. This ensures that processes are consistently followed and results are easily accessible.

A robust traceability system provides transparency and accountability, allowing quick identification of issues and enabling corrective actions. It also plays a vital role in regulatory compliance and quality assurance.
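The database and automated-logging points above could be sketched with Python's built-in `sqlite3` module. The table layout, column names, station IDs, and serial numbers are hypothetical; a production system would more likely use a shared database server and a richer schema.

```python
import sqlite3

# In-memory database for the sketch; a real line would use a persistent store.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE test_results (
        serial    TEXT NOT NULL,     -- unique ID of the unit under test
        station   TEXT NOT NULL,     -- which tester produced the result
        step      TEXT NOT NULL,
        value     REAL,              -- measured value, NULL for pass/fail-only steps
        passed    INTEGER NOT NULL,  -- 1 = pass, 0 = fail
        tested_at TEXT DEFAULT CURRENT_TIMESTAMP
    )
""")

def log_result(serial, station, step, value, passed):
    """Record one test step; called by the test program, not typed by hand."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO test_results (serial, station, step, value, passed) "
            "VALUES (?, ?, ?, ?, ?)",
            (serial, station, step, value, int(passed)),
        )

def history(serial):
    """Return every recorded step for one unit, in the order it was logged."""
    return conn.execute(
        "SELECT station, step, value, passed FROM test_results "
        "WHERE serial = ? ORDER BY rowid",
        (serial,),
    ).fetchall()

log_result("SN-0001", "ICT-1", "5V rail", 5.02, True)
log_result("SN-0002", "ICT-1", "5V rail", 4.60, False)
log_result("SN-0001", "FCT-2", "boot check", None, False)
print(history("SN-0001"))
```

Keying every row on the unit's serial number is what lets a later failure be traced back through each station the unit passed through.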

Q 21. What is your experience with root cause analysis of assembly failures?

Root cause analysis of assembly failures requires a structured approach. I typically follow a framework like the 5 Whys, Fishbone diagrams (Ishikawa diagrams), or Failure Mode and Effects Analysis (FMEA). The goal is to move beyond simply identifying the symptom and get to the root of the problem.

- Data Collection: Gather all relevant information regarding the failure, including test data, visual inspection reports, and operator logs.

- Symptom Definition: Clearly define the observed failure. Avoid jumping to conclusions.

- Root Cause Identification: Use techniques like the 5 Whys (repeatedly asking “Why?” to peel back the layers of cause and effect) or a Fishbone diagram (identifying potential causes categorized by factors like materials, methods, environment) to pinpoint the underlying causes.

- Corrective Actions: Develop and implement corrective actions to address the identified root causes. This might involve changes to the design, processes, or components.

- Verification: Verify the effectiveness of the corrective actions to ensure the problem is resolved and doesn’t recur.

For example, using the 5 Whys, I once traced a recurring solder joint failure back to an inadequate pre-heating stage in the reflow oven. A simple adjustment to the oven’s profile eliminated the problem.

Q 22. Describe your experience with fault isolation and diagnosis in assembly.

Fault isolation and diagnosis in assembly language is like detective work. You’re given a program that’s malfunctioning, and you need to pinpoint the exact instruction causing the problem. My approach involves a systematic process. First, I’d carefully examine the assembly code, looking for obvious errors like incorrect addressing modes, invalid instructions, or logical flaws. Then, I’d utilize debugging tools, such as a debugger with breakpoints and single-stepping capabilities, to execute the code line by line. This allows me to monitor register values, memory contents, and flags at each step, identifying the point where the program deviates from expected behavior.

For instance, if a program unexpectedly crashes, setting a breakpoint just before the suspected crash location helps isolate the culprit. If the issue involves incorrect data manipulation, carefully observing the register contents helps trace the source of corrupted data. I might also incorporate logging statements within the assembly code to track variable values or program flow during execution, providing a trail for analysis. Finally, after identifying the fault, I meticulously document the steps involved in identifying and resolving the issue, including the relevant code snippets and any observations made during the debugging process.

In one project involving embedded systems, a device malfunctioned due to a subtle issue in the interrupt handling routine. Using a debugger, I pinpointed an incorrect calculation affecting the interrupt vector, leading to a crash. By meticulously reconstructing the execution flow and examining the register states, I swiftly identified and corrected the fault.

Q 23. How familiar are you with different types of failure analysis techniques?

My familiarity with failure analysis techniques is extensive. I’m proficient in several methods. Static analysis, for example, involves inspecting the code without actually executing it. This is useful for detecting potential problems like buffer overflows or undefined behavior, early in the development process. Dynamic analysis, on the other hand, involves executing the code and observing its behavior. This could involve techniques like tracing, profiling, and memory debugging to identify runtime issues.

Beyond these, I’ve used fault injection techniques, deliberately introducing errors to assess the robustness of the system. This can reveal vulnerabilities that might otherwise go unnoticed. Furthermore, I have experience in using reverse engineering techniques to understand the functionality of existing systems and isolate points of failure. For example, I might use disassemblers to convert machine code back into assembly language to better understand its operation and identify potential weak points.

In a real-world scenario, a system might fail due to a race condition. By utilizing dynamic analysis and observing execution timing using profiling tools, coupled with static analysis of concurrency control mechanisms, I can successfully diagnose the root cause.

Q 24. Explain your experience with generating test reports and documentation.

Generating clear and comprehensive test reports and documentation is critical to ensure effective communication and collaboration. My reports typically include a detailed summary of the testing activities, a list of test cases executed, the results of each test case (pass/fail), a description of any defects or failures encountered, and a comprehensive analysis of the findings. I use various tools to generate these reports. For instance, I use spreadsheets or dedicated test management software for data organization and then integrate this information into comprehensive reports.

I also ensure the reports contain sufficient detail for developers to understand the nature of defects and reproduce them. This usually involves including screenshots, logs, and code snippets. Moreover, I believe that well-structured documentation is equally essential. I adhere to standard formats (e.g., using templates) to enhance consistency and readability. The documentation includes sections detailing test methodologies, procedures, and any specific tools or configurations used. Clear diagrams or flowcharts are incorporated where appropriate to visualize complex processes.

In a recent project, we discovered an intermittent network connectivity issue during testing. My comprehensive report clearly outlined steps to reproduce the problem, providing logs capturing the network errors and timestamps. This detailed reporting enabled developers to promptly identify and fix the underlying network driver issue.

Q 25. What experience do you have with working in a cleanroom environment?

I have extensive experience working in cleanroom environments, specifically in the context of embedded systems development and testing. Cleanroom environments are crucial for preventing contamination that could affect sensitive components or lead to unreliable results. My experience encompasses adhering to strict protocols regarding personal protective equipment (PPE), such as wearing cleanroom suits, gloves, and head coverings. I’m proficient in following contamination control procedures, including electrostatic discharge (ESD) precautions.

My understanding extends to cleanroom classifications (e.g., ISO Class 7 under ISO 14644-1) and the importance of maintaining a controlled environment. I understand the procedures for entering and exiting cleanrooms, as well as the proper handling and storage of equipment and materials within a cleanroom setting. In practice, this means diligently following established protocols: performing periodic equipment checks, cleaning workstations, and logging activities to maintain the integrity and cleanliness of the working space.
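To make the classification concrete, ISO 14644-1 relates the class number N to a maximum particle concentration using the formula C = 10^N × (0.1 / D)^2.08, where D is the particle size in micrometres and C is in particles per cubic metre. The sketch below applies that formula; the function names are hypothetical, and the published tables in the standard (which round the values) remain the authoritative limits.

```python
def iso_max_particles(iso_class, particle_size_um):
    """Approximate ISO 14644-1 limit, in particles per cubic metre,
    for particles of at least `particle_size_um` micrometres."""
    return 10 ** iso_class * (0.1 / particle_size_um) ** 2.08


def within_class(measured_per_m3, iso_class, particle_size_um):
    """True if a measured concentration is at or below the computed limit."""
    return measured_per_m3 <= iso_max_particles(iso_class, particle_size_um)


# ISO Class 7 at 0.5 um: the formula gives a value close to the tabulated 352,000.
limit_class7 = iso_max_particles(7, 0.5)
print(round(limit_class7))
```

A particle counter reading of, say, 200,000 particles per cubic metre at 0.5 µm would pass `within_class(200_000, 7, 0.5)`, while the same reading would fail a Class 6 check.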

During a project involving the assembly of high-precision sensors, my adherence to cleanroom protocols ensured the avoidance of contamination that could have negatively impacted the sensors’ accuracy and reliability.

Q 26. How do you stay updated on the latest technologies and advancements in assembly and testing?

Staying updated in the rapidly evolving fields of assembly and testing requires a multi-pronged approach. I actively participate in industry conferences and webinars, which expose me to the latest research, trends, and best practices. I regularly read industry publications and journals, keeping abreast of technological advancements and new tools. Furthermore, I actively engage with online communities and forums, participating in discussions and leveraging the collective knowledge within these networks.

I also make use of online learning platforms offering courses and tutorials on new technologies and methodologies. This allows me to deepen my knowledge in areas like automated testing frameworks, advanced debugging techniques, and new assembly language features. Finally, I engage in personal projects, applying new knowledge and exploring the practical aspects of emerging technologies. This hands-on experience helps solidify understanding and foster innovation.

Recently, I completed a course on a new automated test framework and applied what I learned to improve our team’s testing efficiency.

Q 27. Describe your experience with working with cross-functional teams in an assembly environment.

Collaborating effectively within cross-functional teams is essential in assembly environments. My experience includes working alongside engineers from different disciplines, including hardware, software, and manufacturing, on numerous projects. I utilize strong communication skills, ensuring clarity and understanding across teams with differing technical backgrounds. I actively participate in team meetings and discussions, contributing my expertise and listening attentively to others’ perspectives.

To ensure smooth collaboration, I leverage collaborative tools to facilitate communication and project management. This includes using shared documentation systems, version control repositories, and project management software. I’m adept at resolving conflicts and facilitating constructive discussions, always prioritizing the project’s success. I see my role as a bridge between the different disciplines, translating technical details and ensuring alignment on goals and priorities.

In one project, I worked with hardware engineers to debug a board-level issue, providing assembly-level diagnostics that helped identify a faulty component. This required a clear understanding of both the hardware and the software aspects of the system.

Q 28. How do you handle pressure and deadlines in a fast-paced assembly and testing environment?

Working in a fast-paced assembly and testing environment often involves handling pressure and tight deadlines. My approach involves prioritization and effective time management. I start by carefully reviewing project requirements and timelines, breaking down complex tasks into smaller, manageable components. I use project management techniques to track progress and identify potential bottlenecks early on. Open communication with team members is crucial, allowing for proactive problem-solving and adjustments to the schedule if needed.

Furthermore, I take a proactive approach, anticipating potential challenges and preparing contingency plans, which minimizes disruptions and keeps the project on track. When facing intense pressure, I focus on remaining calm and organized, working through one achievable step at a time so that I stay focused and avoid feeling overwhelmed. This methodical approach ensures that quality remains paramount even under pressure.

During a critical product launch, we faced an unexpected delay due to a hardware fault. By remaining calm, prioritizing tasks, and communicating effectively with the team, we were able to mitigate the impact and deliver the product on time, with minimal impact on quality.

Key Topics to Learn for Assembly and Testing Interview

- Assembly Language Fundamentals: Understanding registers, memory addressing modes, instruction sets, and basic assembly programming concepts. Practical application: Analyzing assembly code to understand program execution flow and optimize performance.

- Testing Methodologies: Grasping different testing approaches such as unit testing, integration testing, system testing, and regression testing. Practical application: Designing and implementing effective test plans and test cases to ensure software quality.

- Debugging Techniques: Mastering debugging tools and techniques for identifying and resolving software defects in assembly code. Practical application: Using debuggers to step through code, inspect variables, and pinpoint the root cause of errors.

- Software Development Lifecycle (SDLC): Familiarity with the stages of the SDLC and how testing fits within each phase. Practical application: Understanding the role of testing in ensuring software meets requirements and quality standards.

- Test Automation Frameworks: Exposure to automated testing frameworks relevant to assembly language projects. Practical application: Writing automated tests to improve efficiency and reduce manual testing efforts.

- Version Control Systems (e.g., Git): Understanding the importance of version control in collaborative software development and testing. Practical application: Using Git for tracking changes, collaborating with team members, and managing different versions of code and test scripts.

- Assembly’s Role in Embedded Systems: Exploring the specific challenges and considerations of testing in embedded systems environments. Practical application: Designing tests to account for hardware limitations and real-time constraints.
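To connect the testing topics above to something concrete, here is a small sketch of unit and regression tests written with Python's standard `unittest` module. The `checksum8` function is a hypothetical example of the kind of low-level routine found in embedded code, and the "previously reported" overflow case is invented for illustration.

```python
import unittest


def checksum8(data):
    """Hypothetical 8-bit additive checksum: sum of all bytes modulo 256."""
    return sum(data) % 256


class Checksum8Tests(unittest.TestCase):
    def test_empty_input(self):
        # Unit test: boundary case with no data.
        self.assertEqual(checksum8(b""), 0)

    def test_small_values(self):
        # Unit test: 1 + 2 + 3 = 6, no wraparound involved.
        self.assertEqual(checksum8(b"\x01\x02\x03"), 6)

    def test_wraparound_regression(self):
        # Regression test for an (invented) earlier bug where sums above 255
        # were not wrapped: 255 + 255 = 510, and 510 mod 256 = 254.
        self.assertEqual(checksum8(b"\xff\xff"), 254)
```

Saved to a file, the tests can be run with `python -m unittest`, which makes them easy to include in an automated regression run.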

Next Steps

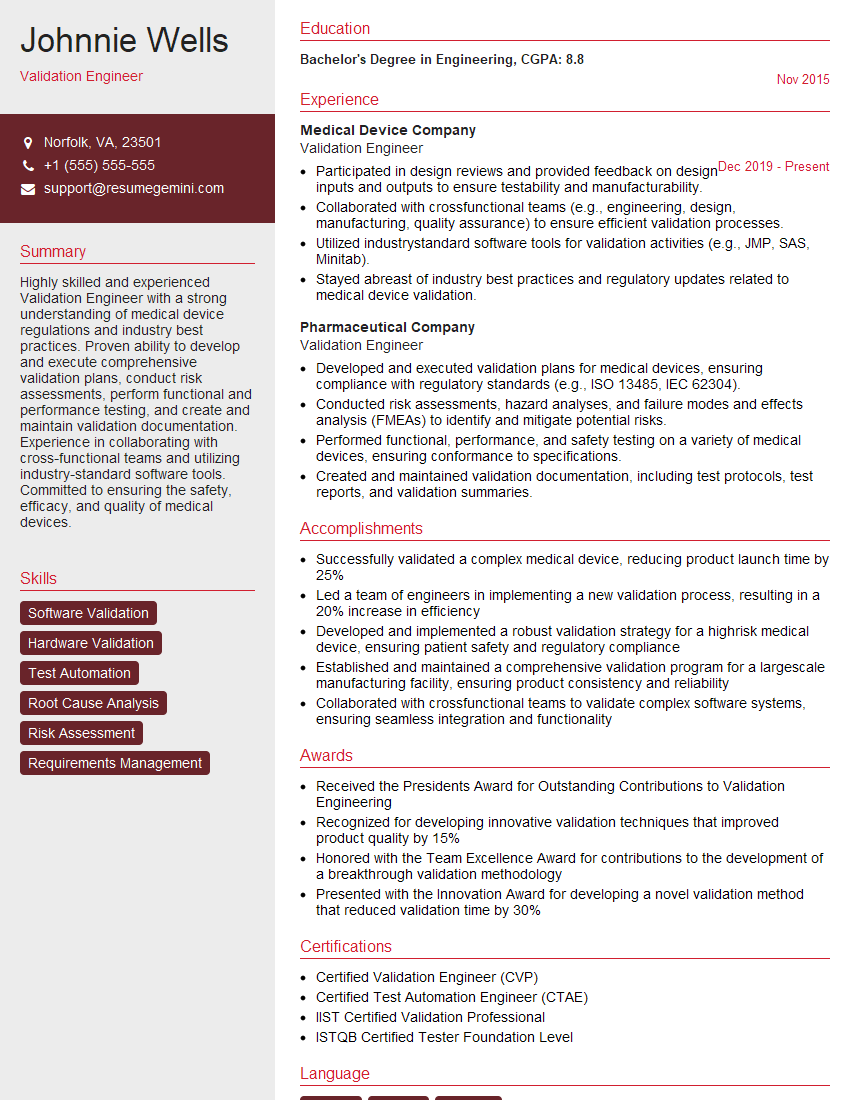

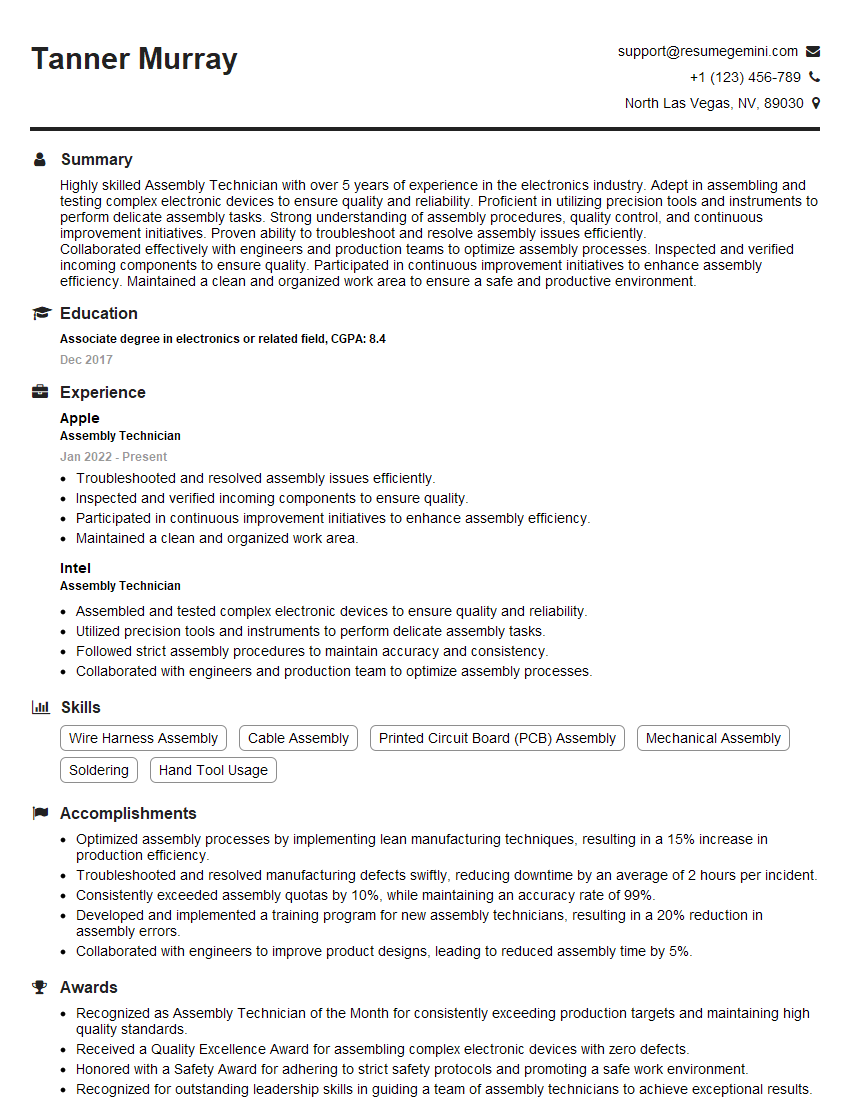

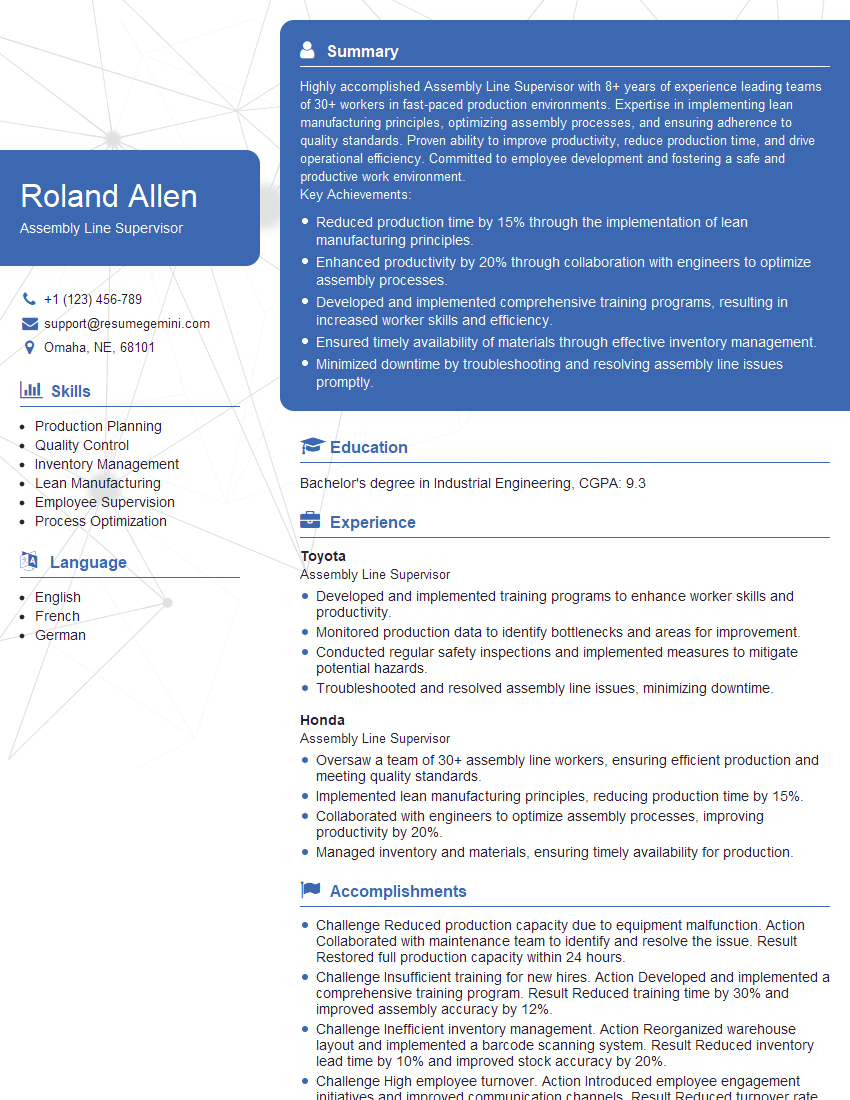

Mastering Assembly and Testing opens doors to exciting career opportunities in embedded systems, game development, performance optimization, and more. A strong foundation in these areas significantly enhances your value to potential employers. To maximize your job prospects, create an ATS-friendly resume that clearly highlights your skills and experience. ResumeGemini is a trusted resource for building professional resumes that make a lasting impression. They provide examples of resumes tailored to Assembly and Testing roles to guide you through the process. Take the next step towards your dream job – craft a compelling resume that showcases your expertise.
