Interviews are more than just a Q&A session; they’re a chance to prove your worth. This blog covers essential Site Reliability Engineering interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Site Reliability Engineering Interview

Q 1. Explain the four golden signals of monitoring.

The four golden signals (latency, traffic, errors, and saturation) are fundamental metrics for monitoring the health and performance of any system. Together they give a holistic view that lets engineers quickly identify and diagnose issues.

- Latency: This measures the response time of your system. High latency indicates slow performance, potentially due to bottlenecks or inefficient code. For example, if your website takes more than 3 seconds to load, that’s high latency and a bad user experience.

- Traffic: This tracks the volume of requests or transactions your system is handling. Sudden spikes or drops can signal problems, such as a surge in demand or a service outage. Think of it like the number of cars on a highway; too many, and you get traffic jams.

- Errors: This measures the rate of failed requests or exceptions within your system. A high error rate is a clear indication of problems that need immediate attention. Examples include 5xx server errors or application exceptions.

- Saturation: This refers to the resource utilization of your system. High saturation in CPU, memory, or network indicates your system is nearing its capacity limit, leading to performance degradation. Think of it like a nearly full parking lot; there’s little room for new cars.

By constantly monitoring these four signals, you gain a comprehensive understanding of your system’s health and can proactively address potential issues before they impact users.
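To make the four signals concrete, here is a minimal Python sketch that summarizes one window of request records. It assumes each request is logged with a latency and an HTTP status code, and that saturation (here, CPU utilization as a fraction) comes from a separate host metric; the `Request` type and function names are hypothetical. Latency is reported as the p99 of successful requests only, because fast-failing errors can otherwise make latency look deceptively healthy.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    timestamp: float   # seconds since the start of the window
    latency_ms: float
    status: int        # HTTP status code


def percentile(values, p):
    """Nearest-rank percentile (p in 0..100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def golden_signals(requests, window_s, cpu_utilization):
    """Summarize one window of request records into the four golden signals."""
    # Latency of successful requests only; 5xx responses are counted as errors instead.
    latencies = [r.latency_ms for r in requests if r.status < 500]
    errors = sum(1 for r in requests if r.status >= 500)
    return {
        "latency_p99_ms": percentile(latencies, 99) if latencies else None,
        "traffic_rps": len(requests) / window_s,
        "error_rate": errors / len(requests) if requests else 0.0,
        "saturation": cpu_utilization,  # utilization of the most constrained resource
    }
```

In practice a monitoring system computes these continuously from histograms and counters, but the arithmetic is the same.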

Q 2. Describe your experience with SLOs (Service Level Objectives) and SLAs (Service Level Agreements).

SLOs (Service Level Objectives) and SLAs (Service Level Agreements) are crucial for defining and managing service expectations. An SLO is a target level for a measurable indicator of service health, such as latency or error rate, while an SLA is a contract with customers that spells out the consequences, often financial, of failing to meet a committed level.

In my previous role, we defined SLOs for our core services, such as API response time (99.9% of requests under 200 ms) and error rate (below 1%). We used these SLOs to drive improvements in our infrastructure and code. We then incorporated them into SLAs with internal teams and external clients, specifying penalties for consistently failing to meet the agreed-upon levels. For example, if we missed our API response-time SLO for three consecutive months, that triggered a review process to identify the root cause and develop remediation plans.

My experience with SLOs and SLAs taught me the importance of setting realistic yet ambitious targets, clearly communicating them to stakeholders, and constantly monitoring progress towards those targets. It’s a crucial aspect of establishing trust and ensuring accountability.
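A common way to put an SLO such as a 99.9% target to work is an error budget: the amount of unreliability the target allows. The sketch below, with hypothetical function names, computes the budget for a time period, the share of a request-based budget still unspent, and the burn rate (1.0 means the budget would be used up exactly at the end of the period).

```python
from datetime import timedelta


def error_budget(slo: float, period: timedelta) -> timedelta:
    """Total unavailability the SLO permits over the period (e.g. 0.999 over 30 days)."""
    return period * (1 - slo)


def budget_remaining(slo: float, total_requests: int, failed_requests: int) -> float:
    """Fraction of a request-based error budget still unspent; negative means the SLO is breached."""
    allowed_failures = total_requests * (1 - slo)
    return 1 - failed_requests / allowed_failures


def burn_rate(slo: float, window_error_rate: float) -> float:
    """How fast the budget is being consumed relative to a steady, exactly-on-target pace."""
    return window_error_rate / (1 - slo)
```

For a 99.9% SLO over 30 days, `error_budget` gives 43 minutes 12 seconds of allowable unavailability. A sustained burn rate well above 1 is a typical trigger for paging someone or pausing risky releases.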

Q 3. How do you handle on-call rotations and escalations?

Effective on-call rotations and escalations are essential for maintaining system stability. We use a well-defined schedule that distributes on-call responsibilities equitably across the team, and a tiered escalation system that routes each issue to the most appropriate individual or team.

Our process includes:

- Rotating Schedules: We use a tool to manage our on-call rotations, ensuring fair distribution and minimizing burnout. The schedule is visible to everyone, promoting transparency.

- Clear Communication Channels: We rely on tools like PagerDuty or Opsgenie for alerts and communication. This ensures rapid response to critical situations.

- Escalation Paths: If an on-call engineer can’t resolve an issue, there’s a clear path to escalate it to more senior engineers or specialized teams. This ensures that problems are addressed even during off-peak hours.

- Post-Incident Reviews: After significant incidents, we conduct thorough postmortems to analyze what happened, identify root causes, and implement preventive measures. This process continuously improves our response capabilities.
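Scheduling tools such as PagerDuty and Opsgenie handle rotations and escalation for you, but the underlying logic is simple. This sketch assumes a fixed round-robin roster with weekly shifts and escalation tiers keyed by minutes without acknowledgement; all names, tiers, and timeouts are hypothetical.

```python
from __future__ import annotations

from datetime import date


def on_call_for(day: date, roster: list[str], rotation_start: date, shift_days: int = 7) -> str:
    """Return who is primary on call on `day` for a fixed round-robin rotation."""
    shifts_elapsed = (day - rotation_start).days // shift_days
    return roster[shifts_elapsed % len(roster)]


def escalation_target(minutes_unacked: int, tiers: list[tuple[int, str]]) -> str:
    """Pick the highest tier whose timeout has elapsed; tiers are (minutes, target), sorted ascending."""
    target = tiers[0][1]
    for threshold, name in tiers:
        if minutes_unacked >= threshold:
            target = name
    return target
```

With tiers `[(0, "primary"), (15, "secondary"), (30, "engineering-manager")]`, an alert still unacknowledged after 20 minutes goes to the secondary.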

In my experience, a well-defined and practiced on-call process is key to minimizing downtime and ensuring a smooth operation, even during unexpected events.

Q 4. What are your preferred monitoring tools and why?

My preferred monitoring tools depend on the specific needs of the system, but generally, I value a layered approach combining various tools.

- Datadog: This is a powerful, centralized platform for monitoring metrics, logs, and traces across multiple services. Its dashboards, alerting, and visualization features are excellent for understanding system behavior.

- Prometheus & Grafana: This open-source combination offers great flexibility and scalability. Prometheus collects metrics, and Grafana provides beautiful dashboards and visualizations for analyzing the data. It’s particularly useful for complex systems requiring custom metrics and dashboards.

- New Relic: Excellent for application performance monitoring (APM), New Relic helps pinpoint performance bottlenecks within application code. This is invaluable for understanding how application changes impact overall system performance.

- CloudWatch (AWS), Cloud Monitoring (GCP), or Azure Monitor: These cloud-native monitoring services are essential for monitoring cloud infrastructure resources, such as compute, storage, and networking. They are deeply integrated with the respective cloud platforms.

The choice of tools often depends on factors like existing infrastructure, team expertise, budget, and the complexity of the system. I often favor a hybrid approach, combining commercial and open-source tools to leverage the strengths of each.

Q 5. Describe your experience with incident management and postmortems.

Incident management and postmortems are critical for learning from failures and preventing future incidents. My approach follows a structured process.

- Incident Response: During an incident, we prioritize containing the issue, mitigating its impact, and restoring service. This involves communicating clearly with stakeholders and following established runbooks.

- Postmortem Analysis: After the incident is resolved, we conduct a thorough postmortem using a blameless framework. We analyze the root cause, identify contributing factors, and develop actionable recommendations to prevent similar incidents in the future.

- Actionable Recommendations and Implementation: The postmortem is not just a report; it’s a roadmap for improvement. We assign owners to implement the recommended changes, track their progress, and verify their effectiveness.

- Documentation and Knowledge Sharing: We meticulously document the incident and its resolution, updating runbooks and internal knowledge bases. This shared knowledge helps the team learn from past mistakes and improves response times in future incidents.

I’ve found that a structured approach to incident management and postmortems fosters a culture of continuous learning and improvement, leading to a more resilient and reliable system. It also strengthens the team’s ability to handle future disruptions efficiently.

Q 6. Explain your understanding of capacity planning and performance testing.

Capacity planning and performance testing are crucial for ensuring system scalability and performance. Capacity planning involves predicting future resource needs based on historical data and projected growth. Performance testing evaluates the system’s ability to handle expected and peak loads.

My experience involves using a combination of techniques for capacity planning:

- Historical Data Analysis: We analyze historical metrics (traffic, CPU usage, memory consumption) to understand trends and predict future resource needs.

- Load Forecasting: We utilize forecasting models to project future growth based on various factors, including seasonal trends and marketing campaigns.

- Simulation and Modeling: We use simulation tools to model different scenarios and test the system’s capacity under various loads.
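Historical analysis and load forecasting often start with a simple trend line. The sketch below fits an ordinary least-squares line to daily peak CPU utilization (a hypothetical input, one value per day) and estimates how many days remain before the trend crosses a capacity threshold. A straight line ignores seasonality and campaign spikes, so treat the result as a first approximation.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_threshold(daily_peaks, threshold):
    """Days after the last observation until the fitted trend reaches `threshold`.

    Returns None when there is too little data or the trend is flat or falling.
    """
    if len(daily_peaks) < 2:
        return None
    xs = list(range(len(daily_peaks)))
    slope, intercept = linear_fit(xs, daily_peaks)
    if slope <= 0:
        return None
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - xs[-1])
```

For daily peaks of 50, 52, 54, 56, and 58 percent and an 80% threshold, the trend crosses the threshold 11 days after the last observation.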

For performance testing, we use a mix of techniques:

- Load Testing: We simulate realistic user traffic to determine the system’s performance under expected load.

- Stress Testing: We push the system beyond its expected capacity limits to identify breaking points and bottlenecks.

- Endurance Testing: We run the system under sustained load to evaluate its stability and performance over extended periods.
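Dedicated tools such as JMeter, Locust, or k6 are the right choice for real load, stress, and endurance tests, but the measurement they perform can be sketched in a few lines. This version calls a hypothetical `target` function from a thread pool and reports failures and latency percentiles; raising `concurrency` step by step approximates a stress test, and running it for much longer approximates an endurance test.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def run_load_test(target, total_requests, concurrency):
    """Call `target` total_requests times across `concurrency` threads; report failures and latency."""

    def timed_call(_):
        start = time.perf_counter()
        try:
            target()
            ok = True
        except Exception:
            ok = False
        return (time.perf_counter() - start) * 1000, ok

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total_requests)))

    latencies = sorted(ms for ms, _ in results)

    def pct(p):
        # Nearest-rank percentile over the sorted latencies.
        return latencies[max(1, math.ceil(p / 100 * len(latencies))) - 1]

    return {
        "requests": total_requests,
        "failures": sum(1 for _, ok in results if not ok),
        "p50_ms": pct(50),
        "p99_ms": pct(99),
    }
```

Against a real service, `target` would issue an HTTP request to a staging environment rather than run in-process.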

Effective capacity planning and performance testing allow for proactive scaling, preventing performance bottlenecks and ensuring a positive user experience.

Q 7. How do you ensure system reliability and availability?

Ensuring system reliability and availability requires a multi-faceted approach encompassing several key strategies.

- Redundancy and Failover Mechanisms: Implementing redundant systems and failover mechanisms ensures that if one component fails, another takes over seamlessly, minimizing downtime. This includes redundant servers, databases, and networks.

- Automated Monitoring and Alerting: Proactive monitoring and automated alerting systems provide early warnings of potential issues, allowing for timely intervention and preventing major outages. This requires comprehensive instrumentation and effective alerting strategies.

- Regular Maintenance and Updates: Regular maintenance, including patching, upgrades, and security audits, is crucial for identifying and addressing vulnerabilities and ensuring system stability. A well-defined maintenance schedule is paramount.

- Disaster Recovery Planning: Developing and regularly testing a robust disaster recovery plan is vital for ensuring business continuity in case of major incidents or natural disasters. This plan should outline procedures for data backup, system restoration, and communication.

- Code Quality and Testing: Thorough testing and code reviews ensure high-quality code with fewer bugs, improving system stability and reducing the likelihood of outages. This includes unit testing, integration testing, and end-to-end testing.

- Capacity Planning and Performance Testing (as discussed above): Proactive capacity planning and rigorous performance testing prevent performance bottlenecks and ensure the system can handle expected and peak loads.
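The payoff of redundancy can be estimated with basic probability. Assuming failures are independent and failover is instantaneous (both optimistic), components in series multiply their availabilities, while a tier of N replicas is down only when all of them are down at once. The function names are hypothetical.

```python
from math import prod


def serial_availability(*components):
    """Every component in the chain must be up, so availabilities multiply."""
    return prod(components)


def redundant_availability(component, replicas):
    """The tier is down only if all independent replicas are down at the same time."""
    return 1 - (1 - component) ** replicas
```

Two replicas at 99% each give roughly 99.99% together, but any single dependency in series caps the whole chain below its own availability, which is why redundancy has to cover every tier. Correlated failures, such as both replicas sharing a rack or a region, break the independence assumption.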

A combination of these strategies, coupled with a strong culture of reliability and continuous improvement, is critical for achieving high system reliability and availability. It’s an ongoing process that requires constant attention and adaptation.

Q 8. What is your experience with automation and scripting (e.g., Python, Bash)?

Automation and scripting are fundamental to Site Reliability Engineering (SRE). My experience spans several years, primarily with Python and Bash. I’ve used Python for complex tasks like automating infrastructure provisioning, building monitoring dashboards, and creating custom tools for log analysis and incident response. For example, I developed a Python script that automatically detects and alerts on anomalous CPU usage across our server fleet, preventing performance degradation. Bash scripting has been instrumental in automating repetitive administrative tasks, such as deploying software updates and managing system configurations. I often use Bash for quick, one-off tasks because of its rapid iteration cycle and tight integration with the Linux command line. I am also proficient with infrastructure-as-code and configuration management tools such as Terraform and Ansible, which are powerful extensions of my scripting abilities.

For instance, I once used Ansible to automate the deployment of a new microservice across multiple servers, ensuring consistent configurations and minimizing human error. This significantly improved the deployment speed and reliability. I am comfortable working with various other scripting languages as needed.
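A common way to build the kind of CPU anomaly detector described above is a rolling z-score: flag a sample that sits far above the mean of the preceding window. This is an illustration under stated assumptions, not the original script. It assumes each host reports CPU percentages at a fixed interval; `window` and `z_threshold` are illustrative defaults, and the alerting step is left out.

```python
from statistics import mean, stdev


def cpu_anomalies(samples, window=30, z_threshold=3.0):
    """Indices whose CPU value is more than z_threshold std devs above the trailing window mean."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # A perfectly flat history has sigma == 0; skip it rather than divide by zero.
        if sigma > 0 and (samples[i] - mu) / sigma > z_threshold:
            anomalies.append(i)
    return anomalies


def fleet_anomalies(fleet, **kwargs):
    """Map each host name to its anomalous sample indices, omitting hosts with none."""
    return {host: idx for host, samples in fleet.items() if (idx := cpu_anomalies(samples, **kwargs))}
```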

Q 9. How do you approach troubleshooting complex system issues?

Troubleshooting complex system issues requires a methodical approach. I typically follow a structured process: first, I gather as much information as possible, including error logs, metrics, and user reports. Next, I reproduce the issue if possible, as this helps isolate the problem and rule out transient errors. Then, I employ a process of elimination, starting with the most likely causes. I frequently use tools like tcpdump for network analysis, strace for system call tracing, and various monitoring dashboards to get a complete view of the system’s health.

One example involved a sudden surge in database latency. By analyzing database logs and monitoring CPU usage, I discovered a specific query causing contention. After optimizing the query, latency returned to normal. I believe in documenting every step of the troubleshooting process, which is crucial for future reference and for collaborating with other team members.
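Hunting down a query like the one in that example usually comes down to grouping queries that differ only in literal values and ranking them by total time spent. Built-in facilities such as PostgreSQL’s `pg_stat_statements` do this natively; the sketch below shows the idea over a hypothetical list of `(sql, duration_ms)` pairs parsed from a slow-query log.

```python
import re
from collections import defaultdict


def fingerprint(sql: str) -> str:
    """Normalize a query so calls differing only in literal values group together."""
    sql = re.sub(r"'(?:[^'\\]|\\.)*'", "?", sql)   # string literals
    sql = re.sub(r"\b\d+(?:\.\d+)?\b", "?", sql)   # numeric literals
    return re.sub(r"\s+", " ", sql).strip().lower()


def top_queries(entries, limit=3):
    """entries: iterable of (sql, duration_ms). Returns [(fingerprint, total_ms, calls)] by total time."""
    totals = defaultdict(lambda: [0.0, 0])
    for sql, duration_ms in entries:
        stats = totals[fingerprint(sql)]
        stats[0] += duration_ms
        stats[1] += 1
    ranked = sorted(totals.items(), key=lambda kv: kv[1][0], reverse=True)
    return [(fp, total, calls) for fp, (total, calls) in ranked[:limit]]
```

Ranking by total time rather than by single slowest call surfaces cheap queries that run so often they dominate database load.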

Q 10. Explain your experience with different cloud platforms (e.g., AWS, Azure, GCP).

I have extensive experience with AWS, Azure, and GCP, having designed and deployed systems on each platform. My experience includes using various services such as compute (EC2, Azure VMs, Compute Engine), storage (S3, Azure Blob Storage, Cloud Storage), databases (RDS, Azure SQL Database, Cloud SQL), and networking (Amazon VPC, Azure Virtual Network, Google Cloud VPC). I understand the nuances of each platform, including their strengths and weaknesses, and can choose the most appropriate service for specific requirements.

For example, I used AWS Lambda to build a serverless architecture for processing large datasets, taking advantage of its scalability and cost-effectiveness. On Azure, I’ve worked extensively with Azure DevOps for CI/CD pipeline implementation. On GCP, I’ve used Google Kubernetes Engine (GKE) to orchestrate containerized applications. My understanding extends beyond individual services; I am proficient in managing billing, security, and compliance aspects across these platforms. I also have practical experience in migrating systems between cloud providers.

Q 11. Describe your experience with containerization technologies (e.g., Docker, Kubernetes).

Containerization technologies like Docker and Kubernetes are essential for modern SRE practices. My experience includes building Docker images, deploying and managing containers using Kubernetes, and implementing robust CI/CD pipelines using container registries. Docker enables efficient packaging and deployment of applications, while Kubernetes provides the orchestration and management capabilities required for scalability and high availability.

I’ve used Kubernetes to create self-healing deployments with automated rolling updates and scaling based on resource utilization. For example, I configured horizontal pod autoscaling so that the number of pods increased automatically with CPU usage, keeping application performance consistent during peak demand. This significantly improved the application’s resilience and reduced operational overhead. I also understand the importance of container security and have practical experience implementing security best practices, such as security contexts and pod security policies (PodSecurityPolicy was removed in Kubernetes 1.25 in favor of Pod Security Admission).
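The CPU-based scaling described above is what the Kubernetes Horizontal Pod Autoscaler does. Its documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change while the ratio stays within a tolerance (0.1 by default). The sketch reproduces only that rule plus min/max clamping; the real controller also accounts for pod readiness and stabilization windows, and in practice you declare a `HorizontalPodAutoscaler` resource rather than computing replicas yourself. Parameter values are illustrative.

```python
import math


def desired_replicas(current_replicas, current_cpu, target_cpu,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """HPA-style decision from average CPU utilization (percent of requested CPU)."""
    ratio = current_cpu / target_cpu
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave the deployment alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 pods averaging 90% CPU against a 60% target scale to 6, while 4 pods at 63% stay put because the ratio is within tolerance.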

Q 12. What are your strategies for preventing outages and minimizing downtime?

Preventing outages and minimizing downtime requires a multi-faceted approach. This includes proactive monitoring, robust alerting, comprehensive testing (unit, integration, system), regular code deployments, and a strong incident management process. Furthermore, chaos engineering helps identify weaknesses before they result in outages. Implementing automated rollback strategies is also crucial to rapidly recover from failed deployments.

For example, we implemented a comprehensive monitoring system that provides real-time visibility into the health of our applications and infrastructure. This system generates alerts based on predefined thresholds, allowing us to respond to potential issues before they escalate into major incidents. We also conduct regular load tests to ensure the systems can handle expected peak traffic. Our strong incident management process ensures effective collaboration and knowledge sharing during and after incidents. Canary and blue-green deployments also help reduce the impact of errors on live systems.
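Automated rollback after a canary release needs a promotion rule. The sketch below compares the canary’s error rate with the baseline’s and returns `promote`, `rollback`, or `wait`; the 1.5x ratio, the 0.1% floor, and the 500-request minimum are hypothetical tuning values. Tools such as Argo Rollouts and Flagger automate this kind of analysis with richer metrics.

```python
def canary_verdict(baseline_errors, baseline_total, canary_errors, canary_total,
                   max_ratio=1.5, min_requests=500):
    """Decide whether to promote, roll back, or keep observing a canary release."""
    if canary_total < min_requests:
        return "wait"  # not enough canary traffic to judge yet
    baseline_rate = baseline_errors / baseline_total if baseline_total else 0.0
    canary_rate = canary_errors / canary_total
    # Absolute floor so a near-zero baseline doesn't make a single canary error fatal.
    allowed = max(baseline_rate * max_ratio, 0.001)
    return "rollback" if canary_rate > allowed else "promote"
```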

Q 13. Explain your understanding of different database technologies and their reliability aspects.

I’m familiar with a range of database technologies, including relational databases (like MySQL, PostgreSQL, and Oracle) and NoSQL databases (like MongoDB, Cassandra, and Redis). Understanding the reliability aspects of each is crucial. Relational databases offer ACID properties (Atomicity, Consistency, Isolation, Durability), ensuring data integrity, but are typically harder to scale horizontally than NoSQL systems. NoSQL databases suit high-volume or loosely structured data, but many trade strong consistency for availability and horizontal scale, offering eventual or tunable consistency instead (Cassandra, for example, lets you choose a consistency level per request).

The reliability of a database depends on factors such as replication, backup and recovery strategies, high availability configurations, and performance tuning. For example, I’ve implemented read replicas for improved performance and database sharding for scalability in high-volume applications. I understand the importance of proper indexing and query optimization to ensure database responsiveness. I also have experience implementing different failover mechanisms to ensure high availability. Choosing the right database technology for a specific application depends on the application’s requirements and scaling needs.
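For Dynamo-style replicated stores such as Cassandra, the consistency trade-off can be stated precisely: with N replicas, a read that contacts R replicas is guaranteed to see the latest acknowledged write (which reached W replicas) when R + W > N, because the two replica sets must overlap. A minimal sketch with hypothetical function names:

```python
def quorum(replication_factor):
    """Majority of replicas, e.g. 2 of 3 or 3 of 5."""
    return replication_factor // 2 + 1


def read_sees_latest_write(replication_factor, write_acks, read_replicas):
    """True when every read replica set must overlap every acknowledged write set (R + W > N)."""
    return read_replicas + write_acks > replication_factor


def replicas_that_can_fail(replication_factor, required_acks):
    """How many replicas can be down while a request needing `required_acks` still succeeds."""
    return replication_factor - required_acks
```

With N = 3, reading and writing at a quorum of 2 is strongly consistent and still tolerates one replica being down; reading and writing at 1 is faster but can return stale data. This is the textbook overlap argument; real systems add details, such as how concurrent writes are resolved, that it doesn’t capture.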

Q 14. How do you manage technical debt in a production environment?

Managing technical debt is an ongoing process in any production environment. It’s vital to balance the need for immediate improvements with long-term maintainability. My approach involves prioritizing technical debt based on its impact on reliability, performance, and security. I use tools to track and categorize technical debt. Then I establish a plan to address it systematically, often incorporating it into the sprint backlog.

For instance, I might prioritize fixing a known security vulnerability over improving the performance of an infrequently used feature. We regularly conduct code reviews to identify areas for improvement and to prevent the accumulation of new technical debt. I also advocate for writing clean, well-documented code to reduce the likelihood of future problems. Open communication within the team and with stakeholders is essential to ensure everyone understands the importance of addressing technical debt and its impact on the overall system health.

Q 15. Describe your experience with CI/CD pipelines and automation.

CI/CD (Continuous Integration/Continuous Delivery) pipelines are the backbone of modern software development, automating the process of building, testing, and deploying software. My experience spans several years, working with various tools and technologies. I’ve extensively used tools like Jenkins, GitLab CI, and CircleCI to build robust and efficient pipelines.

For example, in a previous role, we migrated from a manual deployment process to a fully automated CI/CD pipeline using Jenkins. This involved creating various stages: code commit triggers, automated build processes, comprehensive testing suites (unit, integration, and end-to-end), and finally, automated deployment to different environments (staging, production). We utilized infrastructure-as-code (IaC) with tools like Terraform to manage our infrastructure, allowing for reproducible deployments across environments. This significantly reduced deployment time from several hours to minutes, minimizing human error and increasing the frequency of releases. We implemented blue/green deployments to minimize downtime during releases, ensuring a seamless transition.

Another project involved integrating security scanning tools directly into the pipeline, performing static and dynamic analysis to catch vulnerabilities early in the development process. This proactive approach helped us maintain a high level of security in our applications.

Q 16. How do you balance feature development with maintaining system stability?

Balancing feature development with system stability is a crucial aspect of SRE. It’s not about choosing one over the other, but rather finding the optimal balance to ensure continuous improvement while mitigating risk. This often involves employing techniques like:

- Feature flags/toggles: Allowing features to be deployed but disabled until fully tested and ready for release. This allows for quicker deployments without immediate impact on the production system.

- Canary deployments: Rolling out new features to a small subset of users before a full release, allowing for early detection and mitigation of issues in a controlled environment.

- A/B testing: Comparing different versions of features to identify which performs better and has less negative impact on the system.

- Prioritization and risk assessment: Carefully prioritizing features based on their impact and risk, ensuring that the most critical features are developed and tested thoroughly.

- Robust monitoring and alerting: Implementing comprehensive monitoring systems that alert on potential issues, allowing for quick responses and preventing minor issues from escalating.
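Feature flags and percentage-based canary rollouts are often implemented with deterministic bucketing, so a given user sees the same variant on every request. The sketch hashes a hypothetical flag name and user ID with SHA-256 into one of 10,000 buckets; hosted services such as LaunchDarkly or Unleash provide this along with targeting rules and kill switches.

```python
import hashlib


def flag_enabled(flag_name: str, user_id: str, rollout_percent: float) -> bool:
    """Deterministically bucket a user so the same user always gets the same answer."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < rollout_percent * 100                # e.g. 10% -> buckets 0..999
```

Because a user’s bucket never changes, raising the rollout from 10% to 20% keeps everyone who already had the feature and adds new users, which keeps the canary population stable.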

A practical example involved prioritizing a crucial security patch over a new feature request. Although the new feature was highly requested, addressing the security vulnerability was paramount to maintaining system stability and preventing potential breaches. This highlights the importance of context-aware prioritization.

Q 17. What metrics do you use to measure the success of your SRE efforts?

Measuring the success of SRE efforts requires a multi-faceted approach, focusing on both system reliability and team efficiency. Key metrics include:

- MTTR (Mean Time To Recovery): The average time it takes to restore service after an outage. Lower MTTR indicates better incident response capabilities.

- MTBF (Mean Time Between Failures): The average time between system failures. A higher MTBF indicates greater system reliability.

- Error Rate/Failure Rate: The frequency of errors or failures in the system. Lower rates suggest improved stability.

- Availability/Uptime: The percentage of time the system is operational. The aim is to meet the availability SLO, not to reach 100%.

- Change Failure Rate: The percentage of deployments that cause incidents. Lower rates indicate better deployment processes and stability.

- Alert fatigue/Noise reduction rate: Monitoring the effectiveness of alert systems by measuring false positives and ensuring alerts are actionable and valuable.

- Team velocity & efficiency: Measuring the team’s ability to complete tasks, address issues, and contribute to improvements. Examples include time spent on on-call duties, time spent on incident response, and the number of incidents resolved.
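Most of these metrics fall out of an incident log. The sketch below assumes incidents are recorded as start and end times in hours within a reporting period, plus deployment counts; it computes MTTR as mean downtime per incident and MTBF as uptime divided by the number of failures, which is one common convention (definitions vary between teams). The `Incident` type is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    start_h: float  # hours since the start of the reporting period
    end_h: float


def reliability_report(incidents, period_h, deployments, failed_deployments):
    """MTTR, MTBF, availability, and change failure rate for one reporting period."""
    downtime = sum(i.end_h - i.start_h for i in incidents)
    n = len(incidents)
    return {
        "mttr_h": downtime / n if n else 0.0,
        "mtbf_h": (period_h - downtime) / n if n else float("inf"),
        "availability": 1 - downtime / period_h,
        "change_failure_rate": failed_deployments / deployments if deployments else 0.0,
    }
```

For a 720-hour month with two incidents lasting 1.5 and 0.5 hours, and 3 failed deployments out of 60, this gives an MTTR of 1 hour, an MTBF of 359 hours, about 99.72% availability, and a 5% change failure rate.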

By tracking these metrics, we gain insights into system performance, identify areas for improvement, and demonstrate the impact of SRE initiatives.

Q 18. Explain your experience with chaos engineering and fault injection.

Chaos engineering is a proactive approach to building resilience by intentionally injecting failures into a system to identify vulnerabilities before they impact users. My experience involves designing and executing chaos experiments using tools like Gremlin and LitmusChaos.

In one project, we simulated network partitions to test the resilience of our microservices architecture. By strategically introducing latency and disconnections, we identified a single point of failure within our service mesh. This discovery allowed us to proactively implement circuit breakers and improve fault tolerance, preventing potential cascading failures in a real-world scenario. We also used fault injection techniques to test the behavior of our database under various load conditions, identifying performance bottlenecks and improving database resilience.

It’s crucial to approach chaos engineering systematically, beginning with small-scale experiments and gradually increasing complexity. Proper planning, clear objectives, and detailed analysis of results are essential for obtaining valuable insights and improving system resilience.
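Tools like Gremlin and LitmusChaos inject faults at the infrastructure level, but the experiment described above can be illustrated in-process: wrap a dependency so a fraction of calls fail, and check that a circuit breaker fails fast instead of letting callers pile up. Class and function names are hypothetical, and the breaker is deliberately simple (consecutive-failure threshold, trial calls after a timeout, not thread-safe).

```python
import random
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is rejected without being attempted."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures; lets calls through again after `reset_timeout_s`."""

    def __init__(self, failure_threshold=3, reset_timeout_s=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.clock = clock        # injectable so experiments and tests can control time
        self.failures = 0
        self.opened_at = None     # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout_s:
                raise CircuitOpenError("circuit open; failing fast")
            # Half-open: let this call through. `failures` is still at or above the
            # threshold, so one more failure reopens the circuit immediately.
            self.opened_at = None
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0         # any success closes the circuit fully
        return result


def inject_faults(fn, failure_rate, rng=None):
    """Fault injection: wrap `fn` so roughly `failure_rate` of calls raise ConnectionError."""
    rng = rng or random.Random()

    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise ConnectionError("injected fault")
        return fn(*args, **kwargs)

    return wrapper
```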

Q 19. How do you ensure security best practices are followed in your SRE work?

Security is paramount in SRE. Ensuring best practices are followed involves a multi-layered approach:

- Secure coding practices: Enforcing secure coding standards and conducting regular security code reviews.

- Vulnerability scanning and penetration testing: Regularly scanning for vulnerabilities and performing penetration testing to identify security weaknesses.

- Principle of least privilege: Granting users only the necessary permissions to perform their tasks.

- Secure configuration management: Using infrastructure-as-code tools to manage infrastructure configurations and ensure security configurations are applied consistently and correctly.

- Secrets management: Using dedicated secrets management tools to securely store and manage sensitive information like API keys and database credentials. Examples include HashiCorp Vault or AWS Secrets Manager.

- Regular security audits and compliance checks: Conducting regular security audits to ensure compliance with relevant regulations and security standards.

For example, we integrated automated security scans into our CI/CD pipeline to identify vulnerabilities early in the development lifecycle. This proactive approach helped us prevent vulnerabilities from reaching production, minimizing the risk of security breaches.
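A pipeline security check can be as small as a pattern scan that fails the build. This sketch looks for two well-known secret formats, AWS access key IDs and PEM private key headers; the rule set and function names are illustrative and far narrower than dedicated scanners such as gitleaks or truffleHog.

```python
import re

# Long-term AWS access key IDs are "AKIA" followed by 16 uppercase letters or digits.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def find_secrets(text):
    """Return (line_number, pattern_name) for each line that matches a secret pattern."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


def scan_files(paths):
    """Print findings and return a CI-friendly exit code: 1 if anything matched, else 0."""
    found = False
    for path in paths:
        with open(path, encoding="utf-8", errors="ignore") as f:
            for lineno, name in find_secrets(f.read()):
                print(f"{path}:{lineno}: possible {name}")
                found = True
    return 1 if found else 0
```

In a CI step, `sys.exit(scan_files(sys.argv[1:]))` makes any finding fail the stage.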

Q 20. Describe your experience with logging and tracing systems.

Robust logging and tracing systems are critical for monitoring, debugging, and incident response. My experience encompasses designing and implementing centralized logging and tracing solutions using tools like Elasticsearch, Fluentd, Kibana (EFK stack), Prometheus, Grafana, and Jaeger.

A key aspect of effective logging is structured logging, where data is logged in a consistent and machine-readable format (like JSON). This allows for easier analysis and automated alerting. Tracing, on the other hand, provides end-to-end visibility into the flow of requests through a distributed system, enabling quick identification of bottlenecks and errors.
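Structured logging needs no special library in Python: a custom `logging.Formatter` can emit one JSON object per line. The sketch copies a few `extra=` fields onto the output; the field names (`trace_id`, `user_id`, `duration_ms`) are hypothetical, with `trace_id` standing in for the ID a tracing system such as Jaeger would propagate, so log lines can be joined to traces.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record):
        payload = {
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Keys passed via `extra=` become attributes on the record; copy the known ones.
        for key in ("trace_id", "user_id", "duration_ms"):
            if hasattr(record, key):
                payload[key] = getattr(record, key)
        return json.dumps(payload)


def make_logger(name, stream=None):
    """Logger that writes JSON lines to `stream` (stderr when None)."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

Usage: `make_logger("checkout").info("order placed", extra={"trace_id": "abc123", "duration_ms": 42})` produces a line that a log pipeline can index by `trace_id` without regex parsing.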

In a recent project, we implemented distributed tracing using Jaeger to track requests across multiple microservices. This provided crucial insights into request latency and error rates, enabling us to identify and address performance bottlenecks and resolve issues much faster than with traditional logging alone. We also implemented custom dashboards in Grafana to visualize key metrics, providing a single source of truth for system health and performance.

Q 21. What is your approach to incident communication and transparency?

Incident communication and transparency are crucial for building trust and fostering collaboration. My approach focuses on:

- Timely communication: Providing updates promptly and regularly, even if there is no immediate resolution.

- Clear and concise messaging: Using simple and straightforward language to avoid technical jargon.

- Multiple communication channels: Utilizing various channels like email, Slack, and status pages to reach a broad audience.

- Post-incident reviews (PIRs): Conducting thorough post-incident reviews to identify root causes, implement corrective actions, and prevent future occurrences.

- Publicly accessible status page: Maintaining a public status page to inform users about outages and service disruptions, keeping them updated on progress.

For instance, during a recent incident, we leveraged a status page to keep users informed about the issue, its impact, and our progress toward resolution. We also used a dedicated Slack channel for internal communication, facilitating quick collaboration and efficient information sharing among team members.

Transparency, even during challenging situations, builds trust and allows stakeholders to understand the situation and its impact.

Q 22. How do you handle conflicting priorities and competing demands?

Handling conflicting priorities requires a structured approach. Think of it like a skilled air traffic controller managing multiple planes – each with its own destination and urgency. My strategy involves several key steps:

- Prioritization Matrix: I use a matrix to categorize tasks based on urgency and importance (e.g., Eisenhower Matrix). This helps visually separate critical tasks from less important ones.

- Data-Driven Decisions: Instead of relying on gut feeling, I gather data to support prioritization. For instance, if two projects compete for resources, I’d analyze their potential impact on service level objectives (SLOs) and customer experience.

- Stakeholder Alignment: Open communication is crucial. I discuss competing priorities with stakeholders, explaining the trade-offs involved and collaboratively deciding on the best course of action. This ensures everyone is informed and on board.

- Timeboxing and Delegation: I break down large tasks into smaller, manageable chunks and allocate time effectively using timeboxing techniques. When possible, I delegate tasks to free up time for higher-priority items.

- Continuous Monitoring and Adjustment: I regularly review the progress and readjust priorities as needed. Unexpected issues often arise, requiring adjustments to the original plan.

For example, during a recent incident, we had a critical bug fix competing with a planned infrastructure upgrade. Using a prioritization matrix, coupled with data on the bug’s impact (high user impact, major service degradation), we prioritized the bug fix, scheduling the upgrade for a less critical time.

Q 23. Explain your experience with different monitoring and alerting systems.

My experience spans several monitoring and alerting systems, each with its strengths and weaknesses. I’ve worked extensively with:

- Prometheus and Grafana: This powerful combination offers excellent time-series data collection and visualization. Prometheus’s pull-based model is robust and scalable, while Grafana’s dashboards provide customizable views for monitoring metrics and visualizing key performance indicators (KPIs).

- Datadog: A comprehensive platform providing monitoring, alerting, tracing, and logging. Its ease of use and rich feature set make it ideal for teams needing a unified monitoring solution. The automated dashboards and anomaly detection features are particularly useful.

- CloudWatch: AWS’s native monitoring service seamlessly integrates with other AWS services. It’s a cost-effective option for organizations heavily reliant on AWS, offering strong metrics and log analysis capabilities. However, its complexity can be a barrier for less experienced users.

- New Relic: A comprehensive APM (Application Performance Monitoring) platform focusing on application performance insights. It excels in providing detailed transaction traces and application-level metrics, aiding in identifying bottlenecks and performance issues.

Choosing the right system depends on the specific needs of the organization and the technology stack. For instance, a microservices architecture might benefit from Prometheus and Grafana’s scalability, while a monolithic application could leverage Datadog’s comprehensive feature set.
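Much of alert-fatigue reduction comes from not paging on momentary spikes. Prometheus alerting rules address this with a `for` duration: an alert moves from inactive to pending when its condition first becomes true and fires only if the condition holds for the whole duration. The sketch approximates that behavior with a count of consecutive evaluations; the class name, threshold, and count are hypothetical.

```python
class PendingAlert:
    """Fire only after the condition has held for `for_evaluations` consecutive checks."""

    def __init__(self, threshold, for_evaluations):
        self.threshold = threshold
        self.for_evaluations = for_evaluations
        self.consecutive = 0

    def evaluate(self, value):
        """Return 'inactive', 'pending', or 'firing' for the latest sample."""
        if value <= self.threshold:
            self.consecutive = 0  # condition cleared: reset the pending streak
            return "inactive"
        self.consecutive += 1
        return "firing" if self.consecutive >= self.for_evaluations else "pending"
```

With a 5% error-rate threshold and three evaluations, a single bad sample followed by recovery never pages anyone, while a sustained breach fires on the third consecutive check.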

Q 24. How do you stay updated on the latest technologies and trends in SRE?

Staying updated in the rapidly evolving world of SRE requires a multi-pronged approach:

- Conferences and Meetups: Attending industry conferences like SREcon and DevOpsDays offers invaluable insights from leading experts and provides networking opportunities.

- Online Courses and Certifications: Platforms like Coursera, edX, and A Cloud Guru provide structured learning paths covering advanced SRE concepts and technologies.

- Blogs and Publications: Following reputable blogs, such as Google’s SRE blog and various tech publications, allows me to stay abreast of the latest trends and best practices.

- Open Source Contributions: Contributing to open-source projects allows for hands-on experience with cutting-edge technologies and exposes me to diverse coding styles and problem-solving approaches.

- Community Engagement: Participating in online forums and communities like Stack Overflow and Reddit’s r/sre fosters collaboration and knowledge sharing.

For example, I recently completed a course on Chaos Engineering, which strengthened my ability to design and implement resilience testing strategies for our infrastructure.

Q 25. Describe a time you had to make a difficult decision under pressure.

During a major service outage, we faced a difficult decision under intense pressure. A critical database node failed, triggering a cascading failure that affected multiple services. We had two options:

- Option A (Faster, Riskier): Immediately restore from an older backup. This was faster but risked losing any data written after that backup was taken.

- Option B (Slower, Safer): Attempt a more time-consuming repair process, minimizing the risk of data loss but potentially prolonging the outage.

After a brief assessment, weighing the impact on users against the risk of data loss, we chose Option B. Though slower, the careful approach prevented significant data loss, which would have had far more severe consequences in the long run. The post-incident analysis revealed underlying infrastructure weaknesses, leading to improvements in our disaster recovery plan.

Q 26. How do you contribute to a culture of learning and improvement within your team?

Fostering a culture of learning and improvement is a core responsibility. I achieve this through:

- Knowledge Sharing Sessions: Organizing regular team meetings where we discuss challenges, share best practices, and learn from incidents. These sessions are crucial for disseminating knowledge and preventing past mistakes from recurring.

- Mentorship and Pair Programming: Mentoring junior team members and practicing pair programming helps create a supportive learning environment. This approach facilitates knowledge transfer and improves coding standards across the team.

- Internal Training Programs: Developing internal training programs tailored to specific skill gaps within the team ensures everyone stays up-to-date on relevant technologies and procedures.

- Post-Incident Reviews (PIRs): Conducting thorough PIRs after incidents, focusing not on blame, but on identifying root causes and implementing preventative measures. This fosters a culture of continuous improvement.

- Promoting Experimentation and Innovation: Encouraging team members to experiment with new tools and technologies, allowing them to expand their skill sets and share their knowledge with colleagues.

For instance, I recently initiated a series of workshops on Chaos Engineering, equipping the team with the skills needed to improve the resilience of our systems.

Q 27. Explain your understanding of different deployment strategies (e.g., blue/green, canary).

Deployment strategies are crucial for minimizing downtime and risk during releases. Here are a few common strategies:

- Blue/Green Deployment: Two identical environments exist: blue (live) and green (staging). New code is deployed to the green environment and tested thoroughly, and then traffic is switched from blue to green. If issues arise, traffic can be switched back to blue just as quickly. This is relatively low-risk and easy to roll back.

- Canary Deployment: A small subset of users is routed to the new version of the application while the majority remains on the older version. This allows for gradual rollout, monitoring the performance and stability of the new version in a real-world environment before a full deployment. This minimizes the impact of any unforeseen problems.

- Rolling Deployment: New code is deployed incrementally, one batch of servers at a time, until the whole fleet runs the new version. Each batch can be monitored before the next one starts, and the rollout can be paused or rolled back if problems appear. It’s often used with automated tools that orchestrate the deployment process across multiple servers.

- A/B Testing: Often implemented with feature flags, A/B testing exposes different versions of a feature to different user segments. Collecting data on how each version performs helps choose the best option before full deployment.
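Canary releases and A/B tests both need to send a fixed share of users to the new version. They also need to keep each user on the same version across requests. One common approach is to hash a stable user ID into 100 buckets and compare the bucket with the rollout percentage. The sketch below assumes every request carries such an ID. The function name and the `salt` value are hypothetical.

```python
import hashlib


def route_version(user_id: str, canary_percent: int, salt: str = "release-42") -> str:
    """Deterministically return "canary" or "stable" for a user.

    `salt` is a hypothetical per-release value; changing it reshuffles
    which users land in the canary group.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    # Map the first 8 bytes of the hash to a stable bucket in [0, 100).
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "canary" if bucket < canary_percent else "stable"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    share = sum(route_version(u, 5) == "canary" for u in users) / len(users)
    # The observed share will be close to, but not exactly, 5%.
    print(f"canary share at 5%: {share:.3f}")
```

A user's bucket never changes. As a result, widening the rollout from 5% to 25% to 100% only adds users to the canary group, and nobody flips back and forth between versions mid-rollout.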

The best approach depends on the application’s complexity, criticality, and risk tolerance. For example, a critical financial application might favor a blue/green deployment due to its low-risk nature, while a less critical application might use canary deployment for a more gradual rollout.
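The low risk of blue/green comes from the fact that the cutover is a single pointer flip, so rollback is just as fast. The minimal in-memory sketch below assumes a hypothetical `health_check` callable for each environment. In production, the "pointer" would typically be a load balancer target or DNS record rather than a Python attribute.

```python
from typing import Callable, Dict


class BlueGreenRouter:
    """Hypothetical router that sends all traffic to one of two environments."""

    def __init__(self, health_checks: Dict[str, Callable[[], bool]], live: str = "blue"):
        self.health_checks = health_checks  # e.g. {"blue": check_blue, "green": check_green}
        self.live = live

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def switch(self) -> bool:
        """Cut traffic over to the idle environment only if it passes its health check."""
        target = self.idle
        if not self.health_checks[target]():
            return False  # keep serving from the current live environment
        self.live = target
        return True

    def rollback(self) -> None:
        # The previous environment is still running untouched, so rollback is the same flip.
        self.live = self.idle


if __name__ == "__main__":
    router = BlueGreenRouter({"blue": lambda: True, "green": lambda: True})
    print(router.switch(), router.live)  # green passes its check, so traffic moves to green
    router.rollback()
    print(router.live)                   # traffic is back on blue
```

Gating the switch on a health check keeps a broken green environment from ever receiving live traffic.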

Key Topics to Learn for Site Reliability Engineering Interview

- System Design and Architecture: Understanding distributed systems, microservices, and various architectural patterns is crucial. Consider how to design for scalability, reliability, and maintainability.

- Monitoring and Alerting: Learn how to implement effective monitoring strategies using tools like Prometheus, Grafana, or Datadog. Practice designing alerting systems that minimize noise while ensuring critical issues are addressed promptly.

- Incident Management and Response: Master the incident management lifecycle, from initial detection to post-mortem analysis. Develop strong communication and collaboration skills for effective teamwork during critical situations.

- Automation and Infrastructure as Code (IaC): Gain proficiency in tools like Terraform, Ansible, or Chef for automating infrastructure deployments and management. Understand the benefits and challenges of IaC.

- Capacity Planning and Performance Optimization: Learn techniques for predicting future resource needs and optimizing system performance. This includes understanding load balancing, caching, and database optimization strategies.

- Security Best Practices: Familiarize yourself with security considerations in SRE, including access control, vulnerability management, and incident response planning related to security breaches.

- Cloud Platforms (AWS, Azure, GCP): Understanding at least one major cloud provider is essential. Focus on services relevant to SRE, such as compute, storage, networking, and monitoring tools.

- Containerization and Orchestration (Docker, Kubernetes): Gain practical experience with containerization and orchestration technologies. Understand how these tools contribute to scalability, deployment, and management of applications.

- Data Analysis and Troubleshooting: Develop skills in analyzing system logs and metrics to identify performance bottlenecks and troubleshoot issues effectively.
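As a small example of the log and metric analysis in the last item, the sketch below extracts request latencies from log lines and reports p50, p95, and p99. This kind of summary quickly shows whether a slowdown affects every request or only the tail. The `latency_ms=` log field is an assumed format; the regular expression would need adapting to real logs. The percentile uses the nearest-rank method.

```python
import math
import re
from typing import Dict, Iterable, List

# Assumed log format: "... path=/api/orders status=200 latency_ms=123.4"
LATENCY_RE = re.compile(r"latency_ms=(\d+(?:\.\d+)?)")


def parse_latencies(lines: Iterable[str]) -> List[float]:
    """Extract latency values, skipping lines that lack the field."""
    values = []
    for line in lines:
        match = LATENCY_RE.search(line)
        if match:
            values.append(float(match.group(1)))
    return values


def percentile(sorted_values: List[float], p: int) -> float:
    """Nearest-rank percentile: smallest value with at least p% of the data at or below it."""
    if not sorted_values:
        raise ValueError("no latency data")
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def latency_summary(lines: Iterable[str]) -> Dict[str, float]:
    values = sorted(parse_latencies(lines))
    return {f"p{p}": percentile(values, p) for p in (50, 95, 99)}


if __name__ == "__main__":
    logs = [f"GET /api/orders status=200 latency_ms={ms}" for ms in range(1, 101)]
    logs.append("healthcheck ok")  # no latency field, so it is ignored
    print(latency_summary(logs))
```

Comparing these percentiles before and after a deploy, or across hosts, is often the fastest way to locate a bottleneck.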

Next Steps

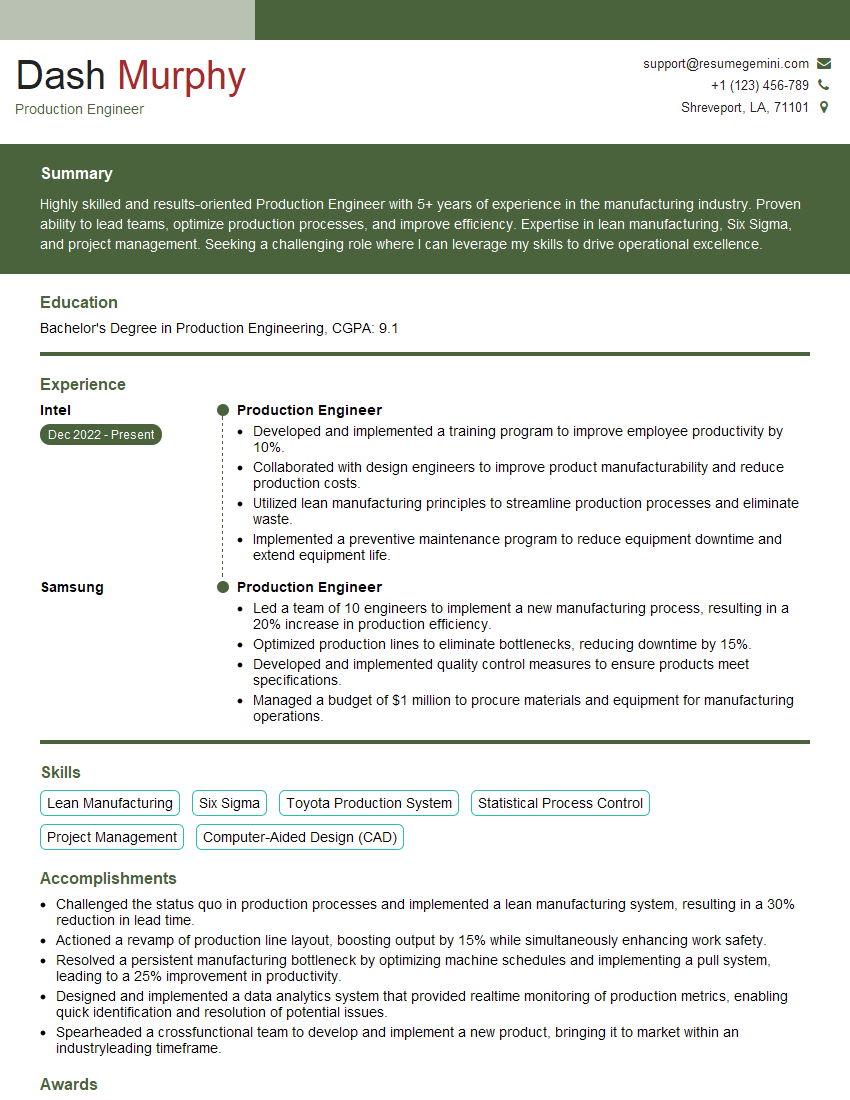

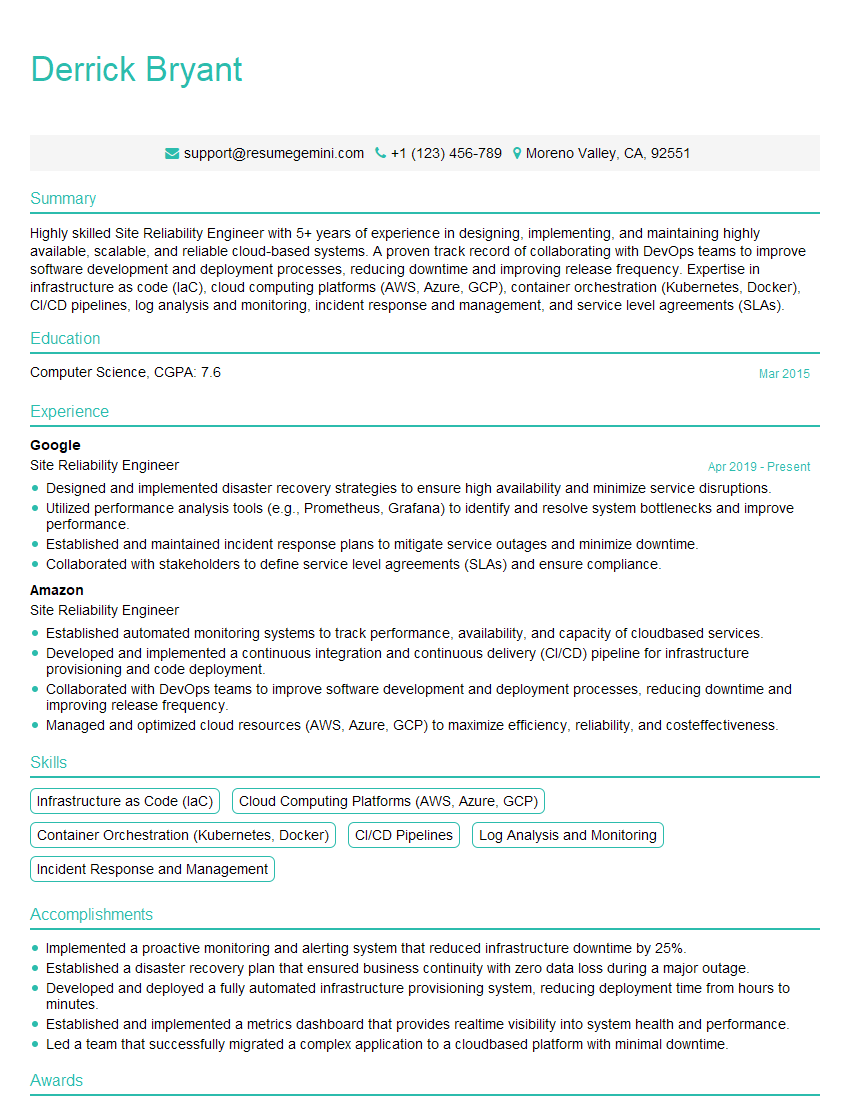

Mastering Site Reliability Engineering opens doors to high-demand, high-impact roles offering significant career growth and competitive compensation. To maximize your job prospects, it’s vital to present your skills effectively. Create an ATS-friendly resume that showcases your achievements and technical expertise. ResumeGemini is a trusted resource to help you build a professional and impactful resume that stands out. It provides examples of resumes tailored to Site Reliability Engineering, enabling you to craft a document that accurately reflects your qualifications and experience.
