Every successful interview starts with knowing what to expect. In this blog post, we’ll take you through the top CGI and Motion Capture interview questions, breaking them down with expert tips to help you deliver impactful answers. Step into your next interview fully prepared and ready to succeed.

Questions Asked in CGI and Motion Capture Interview

Q 1. Explain the difference between keyframing and motion capture.

Keyframing and motion capture are both methods for creating animation, but they differ significantly in their approach. Keyframing is a manual process where an animator sets key poses at specific points in time, and the software interpolates the movement between those poses. Think of a flipbook in which the animator draws only the most important pages (the key poses) and the pages in between are filled in automatically. Motion capture, on the other hand, involves recording the movement of a real actor using specialized cameras or sensors and then translating that movement to a 3D character. It’s like having a real-life model perform the action and then digitally transferring their performance to your character.

The key difference lies in control and realism. Keyframing offers precise control over every aspect of the animation, allowing for stylized or exaggerated movements. Motion capture, while offering very realistic movements, requires post-processing, cleanup, and retargeting to perfectly fit the digital character.

For example, creating a stylized cartoon run would likely be more efficient using keyframing, whereas a realistic depiction of a person walking would benefit greatly from motion capture.
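To make the "software interpolates" step concrete, here is a minimal sketch of how an in-between value can be computed from two keys on a single animation channel. It assumes simple linear interpolation with an optional smoothstep ease; production packages such as Maya typically use editable Bezier or spline tangents instead, and the function names here are hypothetical.

```python
from bisect import bisect_right

def smoothstep(t: float) -> float:
    """Ease-in/ease-out remapping of t in [0, 1]."""
    return t * t * (3.0 - 2.0 * t)

def evaluate_channel(keys: list[tuple[float, float]], frame: float, ease: bool = False) -> float:
    """Value of one animation channel at `frame`, given (frame, value) keys sorted by frame."""
    times = [t for t, _ in keys]
    # Hold the first/last key outside the keyed range
    if frame <= times[0]:
        return keys[0][1]
    if frame >= times[-1]:
        return keys[-1][1]
    # Find the pair of keys that brackets the requested frame
    i = bisect_right(times, frame)
    (t0, v0), (t1, v1) = keys[i - 1], keys[i]
    u = (frame - t0) / (t1 - t0)  # normalized position between the two keys
    if ease:
        u = smoothstep(u)
    return v0 + (v1 - v0) * u

# Hypothetical keys: a control moves 0 -> 100 -> 50 over 20 frames
keys = [(0.0, 0.0), (10.0, 100.0), (20.0, 50.0)]
print(evaluate_channel(keys, 2.5))             # 25.0 (linear in-between)
print(evaluate_channel(keys, 2.5, ease=True))  # 15.625 (eased in-between)
```

The eased version starts slower and speeds up, which is the kind of timing control an animator shapes by hand with tangents.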

Q 2. Describe your experience with various rigging techniques.

My rigging experience encompasses a range of techniques, from simple bone rigs suitable for basic character animation to complex rigs employing advanced features like inverse kinematics (IK) and stretchy bones. I’m proficient in creating rigs for both bipedal and quadrupedal characters, as well as non-organic objects requiring specific types of deformation. I’ve worked extensively with:

- Forward Kinematics (FK): The traditional method, where the animator rotates each joint directly and every child joint follows its parent down the chain (shoulder, then elbow, then wrist).

- Inverse Kinematics (IK): A more sophisticated approach that allows for manipulating the end effector (like a hand or foot) and having the joints adjust accordingly, simplifying complex poses.

- Spline IK: Drives a joint chain along a curve, producing smooth, natural bends; it is especially useful for spines, necks, tails, and tentacles.

- Muscle Systems and Facial Rigging: Designing rigs for realistic facial expressions and muscle deformation.

I’ve utilized various software solutions for rigging, including Autodesk Maya, 3ds Max, and Blender. My experience also includes adapting and troubleshooting existing rigs, ensuring they function efficiently and smoothly.
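The difference between FK and IK is easiest to see on a two-bone limb. The sketch below, a simplified 2D arm with the shoulder at the origin (an assumption for clarity; real rigs work in 3D and add a pole vector to choose the bend plane), computes the wrist from joint angles with FK, then solves the joint angles from a wrist target with the law of cosines, the classic analytic two-bone IK. Function names are hypothetical.

```python
import math

def forward_kinematics(upper: float, lower: float, shoulder: float, elbow: float) -> tuple[float, float]:
    """FK: joint angles (radians) -> wrist position, with the shoulder at the origin."""
    elbow_x, elbow_y = upper * math.cos(shoulder), upper * math.sin(shoulder)
    # The child's rotation is relative to its parent, so the angles accumulate
    total = shoulder + elbow
    return elbow_x + lower * math.cos(total), elbow_y + lower * math.sin(total)

def two_bone_ik(upper: float, lower: float, target_x: float, target_y: float) -> tuple[float, float]:
    """Analytic IK: wrist target -> (shoulder, elbow) angles in radians."""
    dist = math.hypot(target_x, target_y)
    # Clamp unreachable targets so the arm fully extends (or folds) instead of failing
    dist = min(upper + lower, max(abs(upper - lower), dist, 1e-9))
    # Law of cosines: interior angle at the elbow
    cos_inner = (upper**2 + lower**2 - dist**2) / (2 * upper * lower)
    elbow = math.pi - math.acos(max(-1.0, min(1.0, cos_inner)))
    # Law of cosines: angle between the upper bone and the shoulder-to-target line
    cos_offset = (upper**2 + dist**2 - lower**2) / (2 * upper * dist)
    shoulder = math.atan2(target_y, target_x) - math.acos(max(-1.0, min(1.0, cos_offset)))
    return shoulder, elbow

# Solve for a hypothetical reachable target, then confirm the result with FK
s, e = two_bone_ik(1.0, 0.8, 0.5, 1.2)
print(forward_kinematics(1.0, 0.8, s, e))  # approximately (0.5, 1.2)
```

The animator only moves the target (the end effector); the solver fills in both joint rotations, which is why IK simplifies posing planted feet or hands.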

Q 3. How do you optimize 3D models for real-time rendering?

Optimizing 3D models for real-time rendering is crucial for maintaining high frame rates in games or interactive applications. This involves a multi-pronged approach focused on reducing polygon count, optimizing textures, and leveraging efficient rendering techniques.

- Poly Reduction: Lowering the polygon count of the model while preserving as much visual quality as possible. Techniques such as decimation and retopology are frequently employed.

- Texture Optimization: Using GPU-friendly compressed texture formats (like BC7 or ASTC) and appropriate texture resolutions. Larger textures consume more memory and memory bandwidth.

- Level of Detail (LOD): Creating multiple versions of the model with varying polygon counts; the engine switches to lower-poly versions at greater distances to improve performance.

- Normal Maps and other Baking Techniques: These techniques allow you to add surface detail without increasing polygon count significantly. For example, baking high-poly details onto normal maps creates the illusion of high detail on a low-poly model.

- Material Optimization: Simple shaders and efficient material configurations are essential. Avoid overly complex shaders that impact rendering performance.

For example, a character model might have a high-poly version for close-ups and a much lower-poly version for distance shots.
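A distance-based LOD switch can be sketched in a few lines. The thresholds and triangle counts below are hypothetical; engines such as Unreal and Unity typically switch on projected screen size rather than raw distance, and often add a little hysteresis so a model does not flicker between levels at a boundary.

```python
import math
from dataclasses import dataclass

@dataclass
class LodLevel:
    name: str
    triangles: int
    max_distance: float  # use this level while the camera is closer than this (metres)

def select_lod(levels: list[LodLevel], distance: float) -> LodLevel:
    """Return the most detailed level whose distance band contains `distance`.

    `levels` must be ordered from most to least detailed.
    """
    for level in levels:
        if distance < level.max_distance:
            return level
    return levels[-1]  # beyond every threshold, keep the coarsest mesh

# Hypothetical LOD chain for a character
character_lods = [
    LodLevel("LOD0", 50_000, 10.0),
    LodLevel("LOD1", 12_000, 30.0),
    LodLevel("LOD2", 3_000, 80.0),
    LodLevel("LOD3", 500, math.inf),
]
for d in (5.0, 25.0, 200.0):
    print(d, select_lod(character_lods, d).name)
```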

Q 4. What software packages are you proficient in for CGI and motion capture?

My proficiency spans a broad range of software used in CGI and motion capture, including:

- Autodesk Maya: A comprehensive 3D modeling, animation, and rendering software used extensively throughout my career.

- Autodesk 3ds Max: Another strong 3D suite, particularly known for its polygon modeling capabilities and rendering plugins.

- Blender: A powerful open-source alternative offering comparable features to commercial software.

- MotionBuilder: Specifically designed for motion capture data processing, cleanup, and retargeting.

- Substance Painter/Designer: Used for creating high-quality textures.

- Unreal Engine/Unity: I am experienced in real-time rendering and integration within these game engines.

I am adaptable and comfortable learning new software as needed for project requirements.

Q 5. Explain your process for creating realistic skin shaders.

Creating realistic skin shaders involves a deep understanding of both the visual properties of skin and the capabilities of rendering software. The key is to accurately simulate the subsurface scattering of light that occurs within skin tissue. I achieve this through a combination of techniques:

- Subsurface Scattering (SSS): This is the most crucial element. SSS simulates how light penetrates the skin and scatters beneath the surface, creating a translucent effect. Various SSS techniques exist, from simple approximations to more sophisticated methods using dedicated SSS shaders.

- Diffuse and Specular Maps: These textures determine how light reflects and scatters from the surface. Diffuse maps control the overall color and texture, while specular maps determine the shininess.

- Normal Maps: These add fine surface details like pores and wrinkles without requiring a high polygon count.

- Layered Shaders: Often, a layered approach is needed to capture nuances like blood vessels and underlying muscle tones.

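- Principled BSDF (Bidirectional Scattering Distribution Function): Principled shaders, such as Blender’s Principled BSDF node, combine diffuse, specular, and subsurface scattering controls in a single physically based model. They provide a comprehensive set of controls for material appearance, and physically based shading of this kind is now standard in both offline and real-time rendering pipelines.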

I often experiment and iterate on different shader configurations to achieve the desired level of realism, considering the overall lighting setup and the style of the project.

Q 6. Describe your experience with motion capture data cleanup and retargeting.

Motion capture data often requires significant cleanup and retargeting. Raw motion capture data frequently contains noise, unwanted movements, and inconsistencies that need to be addressed before it can be applied to a 3D character. My process involves:

- Noise Reduction: Filtering out minor jitters and artifacts in the motion data using various smoothing and filtering techniques within software like MotionBuilder.

- Cleanup: Manually correcting errors and inconsistencies, such as fixing unrealistic poses or removing unwanted movements.

- Retargeting: Mapping the motion capture data from the actor’s rig to the 3D character’s rig. This often requires significant adjustments due to differences in body proportions and skeletal structures.

- Blending and Editing: Combining different motion capture clips and fine-tuning the animation using curves and other editing tools.

A common challenge is handling differences in the actor’s movement and the character’s anatomy. For example, adjusting the foot-planting animations when retargeting motion from an actor to a character with smaller feet requires careful attention to detail.
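Here is a minimal sketch of the simplest part of retargeting, assuming both skeletons share the same rest pose and joint orientations (real tools such as MotionBuilder characterize each skeleton to compensate when they don't). The joint names, the `Pose` type, and the hip-height scaling rule are illustrative assumptions: local rotations are copied through a name map, and root translation is scaled by the ratio of hip heights so a shorter character takes proportionally shorter strides.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]

@dataclass
class Pose:
    root_position: Vec3          # hip/root translation in scene units
    rotations: dict[str, Vec3]   # joint name -> local rotation (Euler degrees)

def retarget_pose(pose: Pose, joint_map: dict[str, str],
                  source_hip_height: float, target_hip_height: float) -> Pose:
    """Rename joints onto the target skeleton and scale root motion to its proportions."""
    # Shorter legs should cover less ground per stride, otherwise the feet slide
    scale = target_hip_height / source_hip_height
    root = (pose.root_position[0] * scale,
            pose.root_position[1] * scale,
            pose.root_position[2] * scale)
    # Copy local rotations joint-for-joint; unmapped joints are dropped
    rotations = {joint_map[name]: rot for name, rot in pose.rotations.items() if name in joint_map}
    return Pose(root, rotations)

# Hypothetical joint names for an actor skeleton and a smaller character
joint_map = {"LeftUpLeg": "thigh_L", "LeftLeg": "calf_L", "Hips": "pelvis"}
actor_pose = Pose((0.4, 1.0, 2.0),
                  {"Hips": (0.0, 5.0, 0.0), "LeftUpLeg": (-30.0, 0.0, 0.0), "Neck": (10.0, 0.0, 0.0)})
print(retarget_pose(actor_pose, joint_map, source_hip_height=1.0, target_hip_height=0.5))
```

Scaling the root keeps stride length in proportion to leg length, but planted feet usually still need IK foot-locking afterwards to stop residual sliding.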

Q 7. How do you handle complex character animation with many bones and constraints?

Animating complex characters with numerous bones and constraints necessitates a structured and organized workflow. My strategy is:

- Hierarchical Organization: Using a well-defined hierarchy of bones improves control and reduces conflicts between different parts of the rig.

- Constraint Management: Careful planning and implementation of constraints (like IK, pole vectors, and orientation constraints) is essential to prevent unexpected behavior. Constraints must work together seamlessly.

- Layered Animation: Decomposing complex movements into simpler, more manageable layers allows for easier control and editing. This could involve creating separate animation layers for subtle facial movements, locomotion, and larger body gestures.

- Scripting and Procedural Animation: For extremely complex animations, scripting or procedural animation techniques can automate repetitive tasks and streamline the workflow. For example, using MEL scripts in Maya can create automated rigging or animation tools.

- Testing and Iteration: Regularly testing the animation for glitches and inconsistencies throughout the process ensures the final product is smooth and natural.

For example, a character with a highly detailed face could have separate layers for the eyes, mouth, and eyebrows, allowing for individual control and smoother blends between different expressions.
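Layering can be sketched as evaluating channels bottom to top for a single frame. The two modes below are similar in spirit to the additive and override modes of Maya’s animation layers, but this is a simplified, hypothetical model, not Maya’s actual evaluation; channel names are made up for the example.

```python
def evaluate_layers(base: dict[str, float],
                    layers: list[tuple[dict[str, float], float, str]]) -> dict[str, float]:
    """Combine animation layers, evaluated bottom to top, for a single frame.

    Each layer is (channel values, weight, mode), where mode is "additive" or "override".
    """
    result = dict(base)
    for values, weight, mode in layers:
        for channel, value in values.items():
            current = result.get(channel, 0.0)
            if mode == "additive":
                # Offset on top of everything below (e.g. a subtle facial tweak)
                result[channel] = current + weight * value
            elif mode == "override":
                # Blend toward this layer's value, replacing what is below at weight 1.0
                result[channel] = current + weight * (value - current)
            else:
                raise ValueError(f"unknown layer mode: {mode}")
    return result

# Hypothetical channels: body motion on the base layer, gesture and face layers above it
base = {"spine.rotateY": 10.0, "jaw.rotateX": 0.0}
layers = [
    ({"spine.rotateY": 5.0}, 1.0, "additive"),
    ({"jaw.rotateX": 20.0}, 0.5, "override"),
]
print(evaluate_layers(base, layers))
```

Because each layer only touches its own channels, an animator can mute or re-weight the face layer without disturbing the locomotion underneath.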

Q 8. What are the different types of motion capture markers and their applications?

Motion capture markers come in various types, each suited for different applications. The choice depends on factors like the level of detail required, the budget, and the environment.

- Passive Markers: These are retroreflective markers that don’t require a power source. Infrared cameras illuminate them and track the light they reflect back to the system. They are cost-effective but can be affected by stray reflections, ambient infrared light, and occlusion (when a marker is hidden from the cameras by the actor’s body, a prop, or another performer). Think of the small reflective balls you often see actors wearing in motion capture shoots.

- Active Markers: These markers contain LEDs that emit their own light, making them less susceptible to ambient light interference. Many active systems can also identify each marker individually, which reduces labeling errors, but active markers are more expensive and require batteries or other power sources. This type is frequently used for complex movements or scenes with difficult lighting conditions.

- Inertial Sensors (IMU): These are small, self-contained units that measure acceleration and rotation. They don’t rely on cameras for tracking, making them suitable for environments where camera-based systems are impractical. However, their accuracy can drift over time, requiring calibration and potentially fusion with other data sources. Commonly used in virtual reality and augmented reality applications.

- Magnetic Sensors: These rely on magnetic fields to track movement and are primarily used in specific applications where camera-based systems are difficult to deploy. They are less common than other methods due to sensitivity to magnetic interference.

For example, in a high-budget feature film focusing on realistic character animation, active markers would likely be preferred for their accuracy. A lower-budget independent film might use passive markers, carefully controlling the lighting environment to minimize interference. For a virtual reality game where the player’s body needs to be tracked in an unconstrained environment, inertial sensors would be a better choice.
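The drift problem with inertial sensors, and why fusing them with other data helps, can be shown with a complementary filter, one common and simple fusion technique (commercial suits often use more elaborate Kalman-style filters). This sketch assumes a single tilt angle, a hypothetical constant gyro bias, and an ideal, noise-free accelerometer tilt reading; heading (yaw) cannot be corrected this way and needs a magnetometer or optical reference.

```python
def integrate_gyro(rates: list[float], dt: float, start_angle: float = 0.0) -> float:
    """Dead-reckon an angle (degrees) by summing gyro rates (deg/s); any bias accumulates."""
    angle = start_angle
    for rate in rates:
        angle += rate * dt
    return angle

def complementary_filter(rates: list[float], accel_angles: list[float],
                         dt: float, alpha: float = 0.98) -> list[float]:
    """Fuse gyro rates with accelerometer tilt angles into a drift-bounded estimate."""
    angle = accel_angles[0]
    estimates = []
    for rate, accel_angle in zip(rates, accel_angles):
        # Trust the gyro for quick changes, but pull slowly toward the absolute accel reading
        angle = alpha * (angle + rate * dt) + (1.0 - alpha) * accel_angle
        estimates.append(angle)
    return estimates

# A hypothetical sensor held still at 0 degrees for 60 s at 100 Hz, with a 0.5 deg/s gyro bias
dt, samples = 0.01, 6000
gyro = [0.5] * samples
accel = [0.0] * samples
print(integrate_gyro(gyro, dt))                   # drifts to about 30 degrees
print(complementary_filter(gyro, accel, dt)[-1])  # settles near 0.245 degrees
```

Pure integration drifts without bound, while the fused estimate settles at a small constant offset (alpha * bias * dt / (1 - alpha)), which is the behavior calibration and sensor fusion aim for.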

Q 9. Explain your workflow for creating realistic lighting in a CGI scene.

Creating realistic lighting in a CGI scene is a multi-step process involving understanding light sources, materials, and the overall mood. My workflow typically follows these steps:

- Reference Gathering: I start by gathering reference images and videos of real-world scenes that match the desired look and feel. This helps in understanding how light interacts with objects and the environment.

- Light Source Placement and Properties: I then set up the primary light sources (key light, fill light, rim light, etc.) in my 3D software. I carefully adjust parameters like intensity, color temperature, and shadow softness to achieve the desired effect. I might use different light types (e.g., point lights, spotlights, area lights) to simulate various sources like sun, lamps, or ambient light.

- Material Definition: Realistic lighting depends heavily on how materials reflect and absorb light. I pay close attention to material properties like reflectivity, roughness, and color. I often use physically based rendering (PBR) techniques to ensure realistic interactions between light and surfaces.

- Global Illumination: To simulate realistic indirect lighting (light bouncing off multiple surfaces), I use global illumination techniques such as radiosity or path tracing. This adds subtle details and realism that are often missing in simple direct lighting setups. Think of how light indirectly illuminates a room – that’s what global illumination simulates.

- Ambient Occlusion: Ambient occlusion simulates the darkening of areas where surfaces are close together and don’t receive much direct light. This adds another layer of realism, especially in crevices and corners.

- Color Grading and Post-Processing: Finally, I use color grading techniques to fine-tune the colors, contrast, and overall mood. This often involves adjusting saturation, exposure, and contrast to enhance the final look.

For example, in a scene depicting a sunset over a forest, I’d use a warm, low-angle directional (sun) light to simulate the sun, a soft fill light from the sky, and darker shadows in the forest to create depth and realism. The leaves on the trees would be rendered using PBR materials with appropriate reflectivity to capture the subtle interplay of light and shadows.

Q 10. How do you troubleshoot issues with motion capture data during post-processing?

Troubleshooting motion capture data requires a systematic approach. Issues can range from simple errors to complex problems requiring advanced techniques.

- Data Visualization: The first step is to thoroughly visualize the raw motion capture data in dedicated software. This allows me to identify obvious problems like missing markers, noisy data points, or incorrect marker labeling.

- Noise Reduction: Motion capture data is often noisy due to factors like camera limitations or actor movement. I employ filtering techniques (like median filters or Kalman filters) to smooth out the data without losing crucial details. This is a delicate balancing act: too much filtering softens genuine motion, while too little leaves the data jittery.

- Marker Occlusion Handling: When markers are temporarily blocked from view, the tracking system may produce incorrect data. I often use interpolation or predictive algorithms to fill in the missing gaps, based on the preceding and succeeding data points. Sophisticated systems can use data from other markers to make educated estimations.

- Retargeting: If the motion capture data doesn’t match the target character’s rig (skeleton), I use retargeting techniques to map the motion data onto the correct skeletal structure. This requires careful consideration of scale, proportions, and joint relationships.

- Manual Cleaning and Editing: Sometimes, manual cleanup is necessary. This involves manually adjusting individual frames or keyframes to correct errors or improve the quality of the animation. This is often the most time-consuming but sometimes essential step.

- Advanced Techniques: For complex issues, I may employ more advanced techniques like machine learning algorithms to automatically identify and correct errors or use specialized software that allows for detailed editing and refinement of the motion data.

For instance, if a marker is consistently jittering, I’d apply a smoothing filter. If a marker is briefly occluded, I’d use interpolation to reconstruct its position. If the motion-capture data comes from a large performer, I would need to retarget it to fit my smaller character model.
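The first two fixes can be sketched on a single coordinate of one marker: linear interpolation to fill occluded frames, followed by a median filter to knock out a one-frame spike. This is a simplified illustration under stated assumptions (one axis at a time, `None` marking occluded frames, gaps only in the interior of the take); cleanup tools usually offer spline or rigid-body gap filling as well.

```python
from statistics import median
from typing import Optional

def fill_gaps(samples: list[Optional[float]]) -> list[Optional[float]]:
    """Linearly interpolate runs of None (occluded frames) between valid samples."""
    filled = list(samples)
    valid = [i for i, v in enumerate(samples) if v is not None]
    for a, b in zip(valid, valid[1:]):
        for i in range(a + 1, b):  # only frames strictly inside a gap
            u = (i - a) / (b - a)
            filled[i] = samples[a] + (samples[b] - samples[a]) * u
    # Gaps at the very start or end stay None: there is nothing to interpolate from
    return filled

def median_filter(samples: list[float], radius: int = 1) -> list[float]:
    """Replace each sample with the median of its neighborhood to suppress spikes.

    Expects gap-free data (run fill_gaps first).
    """
    return [median(samples[max(0, i - radius): i + radius + 1]) for i in range(len(samples))]

# Hypothetical X coordinate of one marker: two occluded frames, then a one-frame spike
marker_x = [0.0, 1.0, None, None, 4.0, 5.0, 60.0, 7.0, 8.0]
repaired = fill_gaps(marker_x)
print(median_filter(repaired))
```

The spike at 60.0 disappears, but the filter also nudges the edges and the frames next to the spike, a small example of the balancing act between too much and too little filtering.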

Q 11. Describe your experience with different compositing techniques.

Compositing is crucial for integrating CGI elements seamlessly into live-action or other CGI scenes. I’m proficient in a variety of techniques:

- Keying: This involves isolating the subject (CGI element or live-action foreground) from its background. Common methods include chroma keying (green screen) and luma keying (based on brightness values). Careful preparation of the footage is vital for clean keying; lighting and color consistency are paramount.

- Matte Painting: This technique involves creating digital backgrounds or extending existing ones, frequently used to enhance landscapes or create environments not practically achievable during filming. It requires skill in matching lighting, perspective, and texture with the footage.

- Depth of Field Effects: These create a more realistic sense of depth by blurring elements based on distance from the camera. Precise matching of depth maps (from the CGI and live-action) is crucial for believable results.

- Motion Blur and Temporal Effects: Matching motion blur and other temporal effects between CGI and live-action elements is essential for smooth integration. This often requires careful frame-by-frame adjustments.

- Color Correction and Grading: Consistent color grading across all elements ensures visual harmony. This frequently involves matching the color temperature, saturation, and contrast to ensure a seamless blend.

For example, in a film, I might use chroma keying to pull a live-action actor off a green screen, then use matte painting to create a detailed fantasy environment behind them, ensuring careful matching of lighting and shadows for smooth integration.
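At its core, keying produces an alpha (matte) value per pixel, and compositing then layers the foreground over the background with the Porter-Duff "over" operation. The sketch below uses a deliberately crude green color-difference key with a hypothetical `gain` parameter; production keyers such as Keylight or Primatte add spill suppression, edge treatment, and much more. CGI renders usually arrive with their own alpha channel, in which case only the over step applies.

```python
Pixel = tuple[float, float, float]  # linear RGB, 0.0-1.0

def green_screen_alpha(pixel: Pixel, gain: float = 2.0) -> float:
    """Crude color-difference key: the more green exceeds red and blue, the more transparent."""
    r, g, b = pixel
    excess_green = g - max(r, b)
    return min(1.0, max(0.0, 1.0 - gain * excess_green))

def over(front: Pixel, alpha: float, back: Pixel) -> Pixel:
    """Porter-Duff 'over' for a straight (unpremultiplied) foreground color."""
    return tuple(f * alpha + k * (1.0 - alpha) for f, k in zip(front, back))

# Hypothetical pixels: pure screen green, a skin tone, and a matte-painted sky behind them
screen, skin, sky = (0.1, 0.9, 0.1), (0.8, 0.6, 0.5), (0.3, 0.4, 0.7)
for px in (screen, skin):
    a = green_screen_alpha(px)
    print(a, over(px, a, sky))
```

The green pixel becomes fully transparent and shows the sky; the skin pixel stays opaque. Pixels in between (hair, motion-blurred edges) get partial alpha, which is where real keys need the most care.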

Q 12. What are the common challenges in integrating CGI with live-action footage?

Integrating CGI with live-action footage presents several challenges:

- Matching Lighting: Achieving consistent lighting between the CGI and live-action elements is crucial. Differences in color temperature, intensity, and shadow detail can make the CGI look unnatural or out of place. This often necessitates careful lighting and matching techniques in post-production.

- Camera Movement and Perspective: CGI elements need to be perfectly integrated with the live-action camera movement and perspective. Any mismatches can lead to noticeable inconsistencies. Techniques like camera tracking and 3D matchmoving are employed to address this.

- Texture and Material Consistency: CGI elements need to have similar texture and material properties to their live-action counterparts. Discrepancies in surface roughness, reflectivity, or shading can create a jarring effect. Careful modeling and texturing are required.

- Performance Capture and Animation: When integrating CGI characters into live-action scenes, achieving believable performance is challenging. This requires skilled animators and often involves techniques like motion capture to capture the nuances of human movement. If the character’s movement doesn’t match the context of the scene, the result can feel unnatural.

- Resolution and Detail: Matching the resolution and level of detail between the CGI and live-action elements can be demanding. High-resolution CGI needs to be scaled appropriately to avoid artifacts or noticeable inconsistencies.

For example, if a CGI character interacts with a live actor, it’s critical that the lighting on both is consistent. A mismatched camera angle, where the perspective doesn’t match up, could make the scene look unconvincing.

Q 13. Explain your approach to creating believable facial expressions in animation.

Creating believable facial expressions in animation is a complex process that requires a multi-pronged approach:

- High-Quality 3D Models: It all begins with a detailed facial model that accurately captures the subtle nuances of human anatomy. This includes accurate muscle structure, realistic skin texturing, and precise control over individual facial features.

- Facial Rigging: A well-designed rig with appropriate controls for the jaw, eyes, mouth, cheeks, and other facial muscles is essential. The rig needs to be intuitive and efficient for animators to use, allowing subtle control over the expressions.

- Motion Capture: Motion capture technology can record the subtle movements of a performer’s face, typically using specialized cameras and facial capture systems. This data provides a foundation for realistic animation but usually needs refining.

- Animation Techniques: Skilled animators use various techniques to refine the captured data and achieve expressive results. This involves observing real human facial expressions and translating them into believable animations using keyframing or more advanced methods like muscle simulation or procedural animation.

- Subtlety and Timing: Believable facial expressions depend not just on the shapes of the expressions but also on the timing and subtlety of the movements. A small, gradual change can often be more expressive than a sudden, exaggerated one. Subtle changes in eye movement can significantly enhance believability.

For instance, to create a surprised expression, I wouldn’t just open the eyes and mouth wide – I’d subtly animate the raising of the eyebrows, the widening of the eyes, and the quick, almost involuntary opening of the mouth, ensuring the timing is natural and convincing.
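The text does not specify how the facial rig is built; one common approach is blend shapes (morph targets), where each expression is a sculpted copy of the neutral face and the rig mixes their offsets by weight. The sketch below assumes such a blendshape rig, with a made-up two-vertex "face" and hypothetical shape names, to show how staggered weights produce an in-between frame of an expression.

```python
Vec3 = tuple[float, float, float]

def apply_blendshapes(neutral: list[Vec3], targets: dict[str, list[Vec3]],
                      weights: dict[str, float]) -> list[Vec3]:
    """Deform a neutral face: vertex = neutral + sum(weight * (target - neutral))."""
    result = [list(v) for v in neutral]
    for name, weight in weights.items():
        for i, (n, t) in enumerate(zip(neutral, targets[name])):
            for axis in range(3):
                # Each shape contributes its offset from the neutral pose, scaled by its weight
                result[i][axis] += weight * (t[axis] - n[axis])
    return [tuple(v) for v in result]

# Hypothetical two-vertex "face": one brow vertex and one jaw vertex
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
targets = {
    "browRaise": [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)],
    "jawOpen":   [(0.0, 0.0, 0.0), (1.0, -2.0, 0.0)],
}
# One in-between frame of a surprise: the brows lead while the jaw lags behind
print(apply_blendshapes(neutral, targets, {"browRaise": 0.5, "jawOpen": 0.25}))
```

Animating those weights over time with offset timing, rather than snapping every shape to 1.0 at once, is what gives the expression its natural build.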

Q 14. How do you maintain consistency in animation across multiple shots?

Maintaining consistency in animation across multiple shots requires careful planning, organization, and attention to detail.

- Reference Sheets and Style Guides: Creating comprehensive reference sheets and style guides outlining character poses, movements, and expressions ensures consistency across the entire project. This serves as a visual benchmark for the entire animation team.

- Shot Breakdown and Storyboarding: A thorough shot breakdown and storyboarding phase helps in planning the overall flow of animation and identifying potential inconsistencies early on. This ensures that character movements and actions are consistent across various scenes.

- Rigging and Animation Standards: Using standardized rigging techniques and animation practices across all shots guarantees consistency in character movements. This includes establishing clear guidelines for joint limits, controls, and animation workflows.

- Version Control and Collaboration Tools: Employing robust version control systems and collaboration tools allows for seamless communication and collaboration among animators. These tools ensure that everyone works with the latest version of assets and can easily access previous versions for reference.

- Review and Feedback Processes: Regular reviews and feedback sessions help to identify and address inconsistencies before they become major issues. This ensures that the entire team is aligned with the overall vision and maintains a consistent animation style.

For example, if a character has a specific walking style in one shot, that same walking style must be consistently used throughout the entire film, including subtle details like arm and head movements. A consistent reference guide helps keep the entire team on the same page.

Q 15. How do you address performance issues when rendering complex CGI scenes?

Optimizing performance in complex CGI scenes is crucial for efficient workflow and timely project completion. It’s a multi-pronged approach focusing on scene complexity reduction, efficient rendering techniques, and hardware optimization.

Level of Detail (LOD): Implementing LODs significantly reduces polygon count. Faraway objects use low-poly models, while close-ups utilize high-resolution ones. Think of it like viewing a landscape: you see individual trees up close, but from afar, they blend into a textured mass.

Geometry Optimization: Cleaning up geometry is key. Removing unnecessary polygons, merging similar meshes, and keeping topology clean (avoiding needless subdivisions and hidden faces) improve rendering speed. Imagine sculpting a clay figure: you wouldn’t want unnecessary bumps and details that don’t contribute to the overall shape.

Efficient shaders: Complex shaders can heavily impact performance. Using simpler, optimized shaders where possible can dramatically improve render times. A simple diffuse shader is far less computationally expensive than a physically-based rendering (PBR) shader with subsurface scattering. Choose the level of realism appropriate for your project’s needs.

Rendering techniques: Ray tracing, and path tracing in particular, can produce beautiful results but is computationally expensive. Consider rasterization, or reducing ray depth and sample counts, for faster renders when appropriate. A balance between realism and render time is often necessary.

Hardware optimization: Utilizing render farms and investing in powerful hardware with multiple GPUs significantly accelerates rendering. Distributing the workload across multiple machines is like having a team work on a project simultaneously—each person handles a part, resulting in faster overall completion.
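Distributing a shot across a render farm usually starts by splitting the frame range into chunks that individual machines can pick up. The sketch below shows that split plus a naive round-robin assignment; the node names are hypothetical, and real farm managers schedule dynamically as machines free up rather than fixing the plan in advance.

```python
def chunk_frames(first: int, last: int, chunk_size: int) -> list[tuple[int, int]]:
    """Split an inclusive frame range into (start, end) tasks."""
    return [(f, min(f + chunk_size - 1, last)) for f in range(first, last + 1, chunk_size)]

def assign_round_robin(tasks: list[tuple[int, int]],
                       nodes: list[str]) -> dict[str, list[tuple[int, int]]]:
    """Hand tasks to render nodes in turn (a stand-in for a real farm scheduler)."""
    plan: dict[str, list[tuple[int, int]]] = {node: [] for node in nodes}
    for i, task in enumerate(tasks):
        plan[nodes[i % len(nodes)]].append(task)
    return plan

tasks = chunk_frames(1, 240, 50)
print(tasks)
print(assign_round_robin(tasks, ["node01", "node02", "node03"]))
```

Smaller chunks balance load better across machines, while larger chunks reduce the per-task overhead of loading the scene.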

Q 16. Explain your understanding of different camera tracking methods.

Camera tracking involves reconstructing the camera’s movement in a real-world scene, typically from video footage, to accurately integrate CGI elements. Several methods exist:

2D Tracking: This method follows feature points in the footage across frames in image space. It is simpler and faster, and works well for tasks like stabilization or pinning an element to a surface, but on its own it does not recover the camera’s 3D position and orientation, so it falls short with complex camera movements.

3D Tracking: This uses many 2D feature tracks, often combined with known points in the scene (markers or objects with known dimensions), to solve for the camera’s position and orientation in 3D. It provides higher accuracy, suitable for projects demanding precise integration of CGI.

Solve Tracking: This advanced method combines 2D and 3D tracking techniques for enhanced accuracy and robustness, particularly in challenging scenarios with limited feature points or complex camera movement. This often involves iterative refinement of the camera solve.

Matchmoving: This is the general term for matching CGI cameras and objects to the motion in live-action footage, encompassing the tracking techniques above. It requires skill and careful planning, often involving iterative refinement and adjustments.

The choice of method depends on factors such as the complexity of the scene, the accuracy requirements, and the available resources (hardware and software).
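Camera solvers are commonly judged by reprojection error: project each reconstructed 3D point through the solved camera and measure how far it lands from the tracked 2D feature. The sketch below only evaluates that error (it does not solve anything) for a simplified pinhole camera that can translate and pan around one axis; OpenCV-style axes (x right, y down, z forward) and all numbers are assumptions for illustration.

```python
import math

Vec3 = tuple[float, float, float]

def project(point: Vec3, cam_pos: Vec3, yaw: float, focal_px: float,
            cx: float, cy: float) -> tuple[float, float]:
    """Pinhole projection for a camera that only pans (yaw, radians) about its y axis.

    Uses OpenCV-style axes: x right, y down, z forward (at yaw 0 the camera looks down +Z).
    """
    dx, dy, dz = (p - c for p, c in zip(point, cam_pos))
    # Express the offset in camera space: right = (cos, 0, -sin), forward = (sin, 0, cos)
    x_cam = math.cos(yaw) * dx - math.sin(yaw) * dz
    z_cam = math.sin(yaw) * dx + math.cos(yaw) * dz
    if z_cam <= 0:
        raise ValueError("point is behind the camera")
    return cx + focal_px * x_cam / z_cam, cy + focal_px * dy / z_cam

def reprojection_rms(points: list[Vec3], tracks: list[tuple[float, float]], **camera) -> float:
    """Root-mean-square pixel distance between projected 3D points and their 2D tracks."""
    squared = []
    for point, (u, v) in zip(points, tracks):
        pu, pv = project(point, **camera)
        squared.append((pu - u) ** 2 + (pv - v) ** 2)
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical 1920x1080 plate, camera at the origin, and three tracked features
camera = dict(cam_pos=(0.0, 0.0, 0.0), yaw=0.0, focal_px=1000.0, cx=960.0, cy=540.0)
points = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0), (0.0, 0.5, 5.0)]
tracks = [(960.0, 540.0), (1063.0, 544.0), (960.0, 640.0)]  # second track is 5 px off
print(reprojection_rms(points, tracks, **camera))
```

A solver iteratively adjusts the camera (and often the 3D points) to drive this number down; a persistently high error usually points to bad tracks or a wrong lens assumption.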

Q 17. What are the best practices for creating and managing textures?

Texture creation and management are crucial for realistic CGI. Best practices include:

Resolution and Compression: Choose an appropriate resolution for the detail level required, balancing quality with file size. Use suitable formats and compression: block-compressed formats (BCn, often stored in DDS files) for real-time engines, or OpenEXR with lossless compression for high-dynamic-range data. Overly high resolutions waste memory and slow down rendering.

Texture Organization: Create a clear and organized texture library with a consistent naming convention. Using a hierarchical folder structure can improve workflow and makes assets easily accessible.

Texture Mapping: Use appropriate mapping techniques like UV unwrapping to ensure seamless and efficient texture application. Careful UV unwrapping minimizes distortion and avoids stretching or seams.

Normal Maps and other Detail Maps: Supplement base color textures with normal maps, specular maps, and other detail maps to add surface detail without increasing polygon count. These subtle details dramatically enhance realism. It’s like adding fine brushstrokes to a painting for more detail.

Version Control: Use version control systems suited to large binary files, such as Perforce or Git with Git LFS, to track texture changes and manage different versions. This prevents accidental overwrites and ensures efficient collaboration among artists.
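The resolution and compression trade-off is easy to quantify. This sketch estimates GPU memory for a square texture using the commonly cited sizes (RGBA8 at 4 bytes per pixel, BC1 at 8 bytes and BC7 at 16 bytes per 4x4 block) and a full mip chain, which adds roughly one third. It ignores driver padding and alignment, so treat the numbers as estimates.

```python
# Bytes per pixel for uncompressed formats, bytes per 4x4 block for block-compressed ones
FORMATS = {
    "RGBA8": ("pixel", 4),
    "BC1": ("block", 8),
    "BC7": ("block", 16),
}

def texture_bytes(width: int, height: int, fmt: str, mipmaps: bool = True) -> int:
    """Approximate GPU memory for a 2D texture, ignoring driver padding and alignment."""
    kind, size = FORMATS[fmt]
    levels = max(width, height).bit_length() if mipmaps else 1  # full chain down to 1x1
    total = 0
    w, h = width, height
    for _ in range(levels):
        if kind == "pixel":
            total += w * h * size
        else:
            # Block formats store 4x4 pixel blocks, so small mips round up to one block
            total += ((w + 3) // 4) * ((h + 3) // 4) * size
        w, h = max(1, w // 2), max(1, h // 2)
    return total

MiB = 1024 * 1024
for fmt in ("RGBA8", "BC7"):
    print(fmt, texture_bytes(4096, 4096, fmt, mipmaps=False) / MiB,
          round(texture_bytes(4096, 4096, fmt) / MiB, 1))
```

A single 4K RGBA8 texture costs about 85 MiB with mips, versus about 21 MiB as BC7, which is why both compression and right-sized resolutions matter.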

Q 18. Describe your experience with different types of shaders and their applications.

Shaders are programs that determine how light interacts with surfaces in a 3D scene. Different shader types offer unique visual effects.

Diffuse Shaders: These simulate basic light scattering, providing a flat, matte appearance. They are simple and computationally inexpensive, ideal for quick renders or low-detail models.

Specular Shaders: These simulate light reflection, creating shiny surfaces. The level of shininess (specular intensity) can be adjusted. Used to create metallic, glassy, or other reflective surfaces.

Phong and Blinn-Phong Shaders: These are common models for simulating both diffuse and specular lighting. They provide a good balance between realism and performance.

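Physically Based Rendering (PBR) Shaders: These shaders approximate light interaction based on real-world physics, such as energy conservation and microfacet reflectance. They produce highly realistic, consistent results under varied lighting and cost more than the simplest models, although modern game engines use them routinely in real time. PBR shaders are the standard for photorealistic rendering.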

Subsurface Scattering Shaders: These shaders simulate light scattering beneath the surface of materials like skin or wax. This creates a more realistic look for translucent objects.

The choice of shader depends on the visual style and performance requirements of your project. Simpler shaders often suit stylized visuals or tight performance budgets, while PBR is favored for realism.
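To show what the diffuse and Blinn-Phong models actually compute, here is a minimal per-light evaluation: a Lambert diffuse term plus a specular highlight driven by the half-vector between the light and view directions. It is a sketch for a single light with no attenuation or color management, and the material values are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]

def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    return (0.0, 0.0, 0.0) if length == 0 else tuple(c / length for c in v)

def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))

def blinn_phong(normal: Vec3, to_light: Vec3, to_eye: Vec3,
                diffuse: Vec3, specular: Vec3, shininess: float) -> Vec3:
    """Color for one light: Lambert diffuse plus a Blinn-Phong specular highlight."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_eye)
    n_dot_l = max(0.0, dot(n, l))  # Lambert term: surfaces facing the light are brighter
    spec = 0.0
    if n_dot_l > 0.0:
        h = normalize(tuple(a + b for a, b in zip(l, v)))  # half-vector between light and eye
        spec = max(0.0, dot(n, h)) ** shininess            # higher exponent = tighter highlight
    return tuple(d * n_dot_l + s * spec for d, s in zip(diffuse, specular))

# Hypothetical red plastic lit and viewed head-on, then lit from 60 degrees off-axis
red, white = (0.8, 0.1, 0.1), (0.2, 0.2, 0.2)
print(blinn_phong((0, 0, 1), (0, 0, 1), (0, 0, 1), red, white, 32))
print(blinn_phong((0, 0, 1), (math.sin(math.pi / 3), 0, 0.5), (0, 0, 1), red, white, 32))
```

Dropping the specular term leaves a pure diffuse (Lambert) shader, the cheapest option in the list; PBR models replace the ad hoc `shininess` exponent with physically motivated roughness and Fresnel terms.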

Q 19. How do you use reference images and videos effectively during animation?

Reference images and videos are essential for creating believable animation. They serve as guides for posing, movement, and overall aesthetic.

Posing Reference: Images and videos of people or objects in specific poses provide a crucial guide to accurate character rigging and animation. This ensures realistic postures.

Movement Reference: Video clips of real-world movements (e.g., a walk cycle, a fight sequence) serve as blueprints for animation. This ensures natural and believable motion.

Environmental Reference: Pictures and videos of the intended environment help in building accurate and immersive settings.

Integration: Software like Maya, 3ds Max, and Blender allows you to directly import reference images and videos to overlay on your models or animation for live comparison.

Creative Interpretation: References don’t need to be copied verbatim. They serve as inspirational guides that help create a unique and compelling animation.

Q 20. Explain your workflow for creating realistic hair and fur in CGI.

Creating realistic hair and fur requires specialized techniques and tools. My workflow typically involves:

Hair and Fur Systems: Utilizing dedicated hair and fur systems within 3D software packages (like XGen in Maya or Ornatrix) provides the necessary tools to generate and manipulate realistic hair and fur. These systems allow controlling individual strands or using algorithms to automatically generate large amounts of hair.

Grooming: This crucial step involves shaping, styling, and adjusting the hair or fur to achieve the desired look. This includes combing, cutting, and guiding individual strands for a realistic and cohesive appearance.

Simulation: Simulating hair and fur movement adds significant realism, capturing its natural flow and interaction with wind and other forces. This process can be computationally intensive, requiring careful optimization.

Shading and Texturing: Appropriate shaders and textures are essential to mimic the subtle variations in color, shine, and transparency that contribute to realism. Consider using specialized shaders for hair to simulate its unique reflective properties.

Optimization: Hair and fur can quickly become performance-intensive. Optimizing the geometry and shading techniques is crucial to keep render times manageable.
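To show why hair needs its own shading model, here is a minimal sketch of the classic Kajiya-Kay hair model. It treats each strand as a thin cylinder, so lighting depends on the strand's tangent rather than on a surface normal. This is a swapped-in textbook model, not the shader XGen, Ornatrix, or any particular renderer uses (production hair shaders are usually more physically based), and the function name and `shininess` parameter are illustrative assumptions.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def kajiya_kay(tangent, light_dir, view_dir, shininess=64.0):
    """Return (diffuse, specular) terms for one hair strand.

    tangent points along the strand; light_dir and view_dir point from the
    shading point toward the light and the camera. All are 3-tuples.
    `shininess` is a hypothetical artist control for highlight tightness.
    """
    t = normalize(tangent)
    l = normalize(light_dir)
    v = normalize(view_dir)
    # Diffuse: sine of the angle between strand and light (brightest when
    # the light hits the strand side-on, zero when it runs along it).
    t_dot_l = dot(t, l)
    diffuse = math.sqrt(max(0.0, 1.0 - t_dot_l * t_dot_l))
    # Specular: based on the half vector; the highlight peaks when the
    # half vector is perpendicular to the strand.
    h = normalize(tuple(a + b for a, b in zip(l, v)))
    t_dot_h = dot(t, h)
    sin_th = math.sqrt(max(0.0, 1.0 - t_dot_h * t_dot_h))
    specular = sin_th ** shininess
    return diffuse, specular
```

Because the highlight depends on the tangent, combed strands produce the characteristic elongated sheen bands seen on real hair, which a normal-based surface shader cannot reproduce.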

Q 21. What are the ethical considerations when using motion capture technology?

Ethical considerations in motion capture (mocap) are vital. Key concerns include:

Informed Consent: Actors must provide fully informed consent, understanding how their performance will be used and the implications of data capture. They should know who owns the captured data and what restrictions are in place.

Data Privacy and Security: Mocap data is sensitive and needs proper security measures to prevent unauthorized access and misuse. Anonymisation techniques may be considered, depending on context.

Fair Compensation and Credit: Actors should be fairly compensated for their work, with appropriate credit given for their performance. This encompasses appropriate payment and recognition in production credits.

Potential for Misrepresentation: Mocap data might be used to create a character that doesn’t accurately reflect the actor’s image, potentially leading to misrepresentation or defamation. Care must be taken to avoid this.

Representation and Diversity: Mocap should strive for diverse representation, ensuring various body types, ethnicities, and genders are included in the data sets. This will assist in creating more inclusive and representative virtual characters.

Q 22. Describe your experience with different types of particle systems.

Particle systems are fundamental in CGI for simulating a wide range of effects, from explosions and fire to rain and snow. My experience encompasses various types, each with its strengths and weaknesses.

- Emitter-based systems: These are the most common, where particles are emitted from a specific point or area. I’ve used these extensively for creating realistic fire effects in a recent project, adjusting parameters like particle lifespan, velocity, and size to control the flame’s behavior.

- Fluid systems: These are more complex, simulating the movement and interaction of liquids or gases. For example, I’ve used fluid dynamics solvers to create convincing ocean waves and underwater currents in an animated short. These often require significant computational resources.

- Force-based systems: These use forces (gravity, wind, etc.) to manipulate particle trajectories. This is crucial for realistic smoke simulations or for adding subtle movements to dust particles in a desert scene. I’ve fine-tuned these systems to create believable wind effects interacting with foliage in a recent game environment.

- GPU-accelerated systems: Modern particle systems heavily rely on GPU acceleration for performance, enabling the simulation of millions of particles simultaneously. I have extensive experience optimizing particle systems for real-time rendering in video games, leveraging techniques like instancing and level of detail to maintain frame rates.

Understanding the nuances of each type is crucial for creating visually compelling and believable effects. The choice depends heavily on the specific needs of the project – computational resources, desired realism, and the specific effect to be simulated.
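As a minimal sketch of the emitter-based and force-based ideas above, the toy emitter below spawns particles at a fixed rate, randomizes their velocity and lifespan, pushes them with a constant force (gravity, optionally plus wind), and removes them when they expire. The `Emitter` and `Particle` classes and all parameter names are hypothetical and do not correspond to any package's API; a production system would run on the GPU and add collisions, noise fields, and rendering attributes.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    position: list  # [x, y, z]
    velocity: list  # [vx, vy, vz]
    age: float = 0.0
    lifespan: float = 1.0


class Emitter:
    """Hypothetical point emitter with a constant external force."""

    def __init__(self, origin, rate, speed, lifespan,
                 force=(0.0, -9.81, 0.0), seed=0):
        self.origin = origin
        self.rate = rate            # particles spawned per second
        self.speed = speed          # initial speed scale
        self.lifespan = lifespan    # mean lifespan in seconds
        self.force = force          # constant acceleration (gravity + wind)
        self.rng = random.Random(seed)
        self.particles = []
        self._spawn_accumulator = 0.0

    def _spawn(self):
        # Mostly upward direction with a little random spread (e.g. flames).
        direction = [self.rng.uniform(-0.2, 0.2), 1.0,
                     self.rng.uniform(-0.2, 0.2)]
        velocity = [c * self.speed for c in direction]
        life = self.lifespan * self.rng.uniform(0.8, 1.2)
        self.particles.append(Particle(list(self.origin), velocity, 0.0, life))

    def step(self, dt):
        # Emit a whole number of particles, carrying the fractional remainder.
        self._spawn_accumulator += self.rate * dt
        while self._spawn_accumulator >= 1.0:
            self._spawn()
            self._spawn_accumulator -= 1.0
        # Semi-implicit Euler: update velocity from the force, then position.
        for p in self.particles:
            for i in range(3):
                p.velocity[i] += self.force[i] * dt
                p.position[i] += p.velocity[i] * dt
            p.age += dt
        # Remove particles that have outlived their lifespan.
        self.particles = [p for p in self.particles if p.age < p.lifespan]
```

Lifespan, velocity, and the force vector are the same knobs described for the fire and wind examples: shortening lifespans tightens a flame, and adding a horizontal component to `force` bends it as if in wind.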

Q 23. How do you create believable cloth simulations?

Creating believable cloth simulations involves understanding the physics of fabric. It’s not just about visual appearance; it’s about how the material interacts with gravity, wind, and collisions.

My approach involves a multi-step process:

- Choosing the right simulation software: I’ve worked with industry-standard packages like Maya, Houdini, and Unreal Engine’s cloth simulation tools. Each offers different levels of control and realism.

- Defining fabric properties: This is critical. Parameters like mass, stiffness, drag, and friction all drastically affect the simulation’s outcome. A stiff material like denim will behave differently from a flowing silk.

- Mesh design: The quality of the 3D model is crucial. A mesh that is too coarse produces stiff, faceted folds, while an overly dense mesh is computationally expensive. I strive to find a balance between detail and performance.

- Collision detection: Accurately simulating how the cloth interacts with other objects in the scene is essential. This involves setting up collision parameters and defining which objects the cloth should interact with. For example, simulating a flag flapping in the wind requires careful consideration of the collision between the flag and the flagpole.

- Iteration and refinement: It’s rarely a one-and-done process. I often iterate on the simulation parameters, adjusting values and observing the results until a satisfactory level of realism is achieved.
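The fabric properties listed above (mass, stiffness, drag) can be illustrated with a minimal mass-spring sketch: a strip of point masses joined by Hooke's-law springs, pinned at one end like a cape at the collar, and advanced with damped Verlet integration. This is a simplified textbook technique, not how Maya, Houdini, or Unreal Engine implement cloth (their solvers add shear and bend constraints, collisions, and more robust integrators), and the function name and default values are illustrative assumptions.

```python
import math


def simulate_hanging_strip(n=10, rest_len=0.1, stiffness=200.0, mass=0.01,
                           drag=0.02, gravity=-9.81, dt=1.0 / 240.0,
                           steps=960):
    """Hypothetical 2D strip of cloth points; returns final [x, y] positions."""
    # Start horizontal along +x; point 0 is pinned (like a cape's collar).
    pos = [[i * rest_len, 0.0] for i in range(n)]
    prev = [p[:] for p in pos]  # previous positions: zero initial velocity
    for _ in range(steps):
        # Gravity acts on every point.
        forces = [[0.0, mass * gravity] for _ in range(n)]
        # Hooke's-law springs between neighbouring points.
        for i in range(n - 1):
            dx = pos[i + 1][0] - pos[i][0]
            dy = pos[i + 1][1] - pos[i][1]
            length = math.hypot(dx, dy)
            if length == 0.0:
                continue
            tension = stiffness * (length - rest_len)
            fx = tension * dx / length
            fy = tension * dy / length
            forces[i][0] += fx
            forces[i][1] += fy
            forces[i + 1][0] -= fx
            forces[i + 1][1] -= fy
        # Damped Verlet integration; index 0 is skipped because it is pinned.
        for i in range(1, n):
            for k in range(2):
                velocity_term = (pos[i][k] - prev[i][k]) * (1.0 - drag)
                new_value = (pos[i][k] + velocity_term
                             + forces[i][k] / mass * dt * dt)
                prev[i][k] = pos[i][k]
                pos[i][k] = new_value
    return pos
```

Raising `stiffness` gives a denim-like strip that stretches less, while a lower `drag` lets it swing longer, like silk. A stiffer spring also requires a smaller `dt` to stay stable, which is one reason cloth simulation gets expensive.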

For example, in one project, I had to simulate a character’s cape billowing behind them. Achieving a natural-looking drape required careful adjustment of the cloth simulation parameters and meticulous mesh design to avoid self-intersections and unnatural folds.

Q 24. What are the advantages and disadvantages of different motion capture suits?

Motion capture (mocap) suits vary significantly in their technology, cost, and resulting data quality. Here’s a comparison of some common types:

- Optical Mocap: Uses cameras to track reflective markers placed on the performer. Advantages include high accuracy and detailed data. Disadvantages are the need for a dedicated studio environment, potential for marker occlusion, and high cost of equipment.

- Inertial Mocap: Uses inertial measurement units (IMUs) embedded in a suit to track movement. Advantages are portability and lower cost, as it doesn't require a dedicated studio. Disadvantages include lower positional accuracy than optical systems, susceptibility to drift over time, and sensitivity to magnetic interference.

- Magnetic Mocap: Uses magnetic fields to track the performer's movements. Because it does not rely on line of sight, it is robust to occlusion and can be highly accurate. Disadvantages include a limited capture range and interference from nearby metallic objects.

- Hybrid Systems: Combine aspects of optical and inertial systems, often leveraging the strengths of both approaches to enhance accuracy and robustness.

The choice of suit depends on the project’s budget, the required level of detail, and the environment in which the capture will take place. For example, a high-budget feature film might utilize an optical system for its superior accuracy, whereas a low-budget indie project might opt for an inertial system for its portability and cost-effectiveness.
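To illustrate why drift is a concern for inertial systems, the sketch below double-integrates a small constant accelerometer bias. Position error grows roughly as ½·b·t², so doubling the capture time roughly quadruples the error. The bias value and function name are hypothetical, and real inertial suits mitigate this with sensor fusion and body-model constraints (details vary by vendor) rather than integrating raw acceleration.

```python
def position_error_from_bias(bias, duration, dt=0.01):
    """Double-integrate a constant accelerometer bias (m/s^2) with simple
    Euler steps and return the accumulated position error in metres."""
    velocity = 0.0
    position = 0.0
    steps = int(round(duration / dt))
    for _ in range(steps):
        velocity += bias * dt      # bias leaks into velocity...
        position += velocity * dt  # ...and velocity error into position
    return position
```

With a hypothetical bias of 0.01 m/s², `position_error_from_bias(0.01, 10.0)` gives about 0.5 m after only ten seconds, which is why raw inertial data needs correction before it can drive a character's root position.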

Q 25. Explain your experience with virtual production techniques.

Virtual production (VP) techniques are revolutionizing filmmaking by combining real-time rendering with live-action shooting. My experience includes working on projects using LED walls, game engines like Unreal Engine and Unity, and real-time rendering pipelines.

Key aspects of my VP workflow include:

- Pre-visualization: Creating detailed pre-vis helps plan camera movements and lighting setups before shooting, optimizing the process and minimizing time on set.

- Real-time rendering and compositing: Using game engines to render backgrounds and environments in real-time, allowing actors to interact with the virtual environment during filming.

- Camera tracking and matching: Ensuring the virtual environment aligns perfectly with the live-action footage requires precise camera tracking techniques.

- Lighting integration: Matching the lighting in the virtual environment with the real-world lighting on set is critical for photorealism.

- Post-production integration: Combining the virtual and real elements seamlessly in post-production, using tools like Nuke or Fusion.
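Camera tracking and matching come down to giving the virtual camera the same pose and lens as the physical one. As a minimal sketch, the pinhole projection below maps a world-space point to pixel coordinates from a tracked position, a yaw angle, and a focal length and sensor width. The function name and conventions (camera looks along +Z at zero yaw, +Y up, principal point at the image centre, no lens distortion) are assumptions for illustration; real tracking systems solve full 3D rotation, lens distortion, and timing.

```python
import math


def project_point(point, cam_pos, yaw_deg, focal_mm, sensor_width_mm,
                  width_px, height_px):
    """Project a world-space point (x, y, z) through a pinhole camera.

    Hypothetical conventions: at yaw 0 the camera looks along +Z, +Y is up,
    and positive yaw turns the view from +Z toward +X. Returns (u, v) in
    pixels, or None if the point is behind the camera.
    """
    # Translate into camera-relative coordinates.
    x = point[0] - cam_pos[0]
    y = point[1] - cam_pos[1]
    z = point[2] - cam_pos[2]
    # Undo the camera's yaw by rotating the world by -yaw about +Y.
    a = math.radians(-yaw_deg)
    xc = math.cos(a) * x + math.sin(a) * z
    zc = -math.sin(a) * x + math.cos(a) * z
    yc = y
    if zc <= 0.0:
        return None
    # Focal length in pixels, derived from the lens and sensor width.
    f_px = focal_mm / sensor_width_mm * width_px
    u = width_px / 2 + f_px * xc / zc
    v = height_px / 2 - f_px * yc / zc  # image rows grow downward
    return u, v
```

If the virtual camera's focal length or sensor width differs from the real lens, every projected point drifts from where it should be, which is exactly the parallax mismatch that breaks the illusion on an LED wall.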

In a recent project, we used an LED wall to project a virtual cityscape behind actors, allowing us to change the background dynamically without changing the physical set. This significantly reduced production time and costs compared to traditional post-production techniques.

Q 26. Describe your knowledge of different rendering engines.

My experience with rendering engines spans several platforms, each with unique strengths and weaknesses.

- V-Ray: Known for its photorealistic rendering capabilities, particularly in architectural visualization and product design. I’ve used V-Ray extensively for high-quality image rendering, leveraging its advanced features like global illumination and subsurface scattering.

- Arnold: Another industry-standard renderer, widely used in film and VFX and known for its speed and robustness. Its Monte Carlo ray tracing is excellent for creating realistic lighting and shadow effects.

- Redshift: A GPU-accelerated renderer, well suited to large-scale projects that need fast render times. I've used Redshift for fast, near-interactive look development in virtual production workflows.

- RenderMan: A highly versatile and powerful renderer, widely adopted in feature animation and VFX. Its advanced features and control over the rendering process make it a strong choice for complex scenarios.

- Unreal Engine and Unity: These game engines also offer powerful rendering capabilities, suitable for real-time applications and virtual production. I have utilized both for creating interactive experiences and virtual environments.

The optimal rendering engine depends on the project’s specific requirements: render quality, speed, budget, and the software pipeline in use. Choosing the right engine is a crucial decision impacting both the final output and the production workflow.

Q 27. How would you approach creating a convincing underwater scene using CGI?

Creating a convincing underwater scene requires attention to numerous details beyond simply placing objects underwater.

My approach would involve:

- Accurate water simulation: This is fundamental. I would use specialized fluid simulation software or a game engine’s built-in fluid dynamics tools to create realistic water movement, including waves, currents, and refractions.

- Realistic lighting: Underwater, light is absorbed and scattered far more strongly than in air, and longer (red) wavelengths are absorbed first, which shifts colors toward blue and green with depth and distance. I'd adjust the lighting accordingly, accounting for these color shifts and for how deep light penetrates.

- Subsurface scattering: This is crucial for rendering underwater objects realistically. Subsurface scattering simulates how light penetrates and scatters through translucent materials, such as skin, creating a more lifelike appearance.

- Particle effects: Simulating bubbles, dust particles, or other suspended materials enhances realism. I’d use particle systems to create these effects dynamically, taking into account water currents and movement.

- Careful camera work: The camera’s position and movement impact how the scene appears. Underwater scenes often utilize subtle camera shake or movement to enhance the sense of immersion.

- Correct refractive effects: The refraction of light as it passes from water to air creates a unique visual effect. Accurately simulating refraction is key to creating a believable underwater environment.
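The color shift described under realistic lighting can be sketched with the Beer-Lambert law, where each RGB channel is attenuated as exp(-σ·d) over distance d and blended toward a water color as it fades. The coefficients and water color below are illustrative assumptions chosen so that red is absorbed fastest, not measured data, and renderers handle this with volumetric (participating-media) shading rather than a per-color formula.

```python
import math

# Hypothetical per-channel attenuation coefficients (1/m): red is absorbed
# fastest, blue slowest. Illustrative values to tune per look, not data.
ATTENUATION_RGB = (0.45, 0.07, 0.03)
# Hypothetical colour of the in-scattered water "fog" (linear RGB).
WATER_RGB = (0.0, 0.15, 0.25)


def underwater_color(surface_rgb, distance_m, water_rgb=WATER_RGB,
                     coeffs=ATTENUATION_RGB):
    """Attenuate a linear RGB colour over a path length (Beer-Lambert) and
    blend toward the water colour as transmittance falls."""
    out = []
    for c, w, s in zip(surface_rgb, water_rgb, coeffs):
        transmittance = math.exp(-s * distance_m)
        out.append(c * transmittance + w * (1.0 - transmittance))
    return tuple(out)
```

A white object seen through 10 m of water comes out with almost no red and more blue than green, and at very large distances everything converges to the water color, which matches the bluish falloff seen in deep-water footage.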

For example, in one project involving a deep-sea exploration scene, I painstakingly simulated the light attenuation and scattering, creating the bluish hue common in deeper waters, and accurately rendered the refraction of light around the submersible.
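The refraction effects mentioned above follow Snell's law, n₁·sin θ₁ = n₂·sin θ₂. A minimal sketch, assuming a refractive index of about 1.333 for water: rays leaving the water beyond the critical angle (about 48.6°) are totally internally reflected, which is why an underwater camera looking up sees the sky compressed into a bright circle (Snell's window) roughly 97° across, surrounded by reflections of the water below. The function names are illustrative.

```python
import math

N_AIR = 1.0
N_WATER = 1.333  # approximate refractive index of water for visible light


def refraction_angle(theta_in_deg, n1, n2):
    """Snell's law: n1*sin(theta1) = n2*sin(theta2).

    Returns the refracted angle in degrees, or None when the ray is
    totally internally reflected.
    """
    s = n1 / n2 * math.sin(math.radians(theta_in_deg))
    if abs(s) > 1.0:
        return None
    return math.degrees(math.asin(s))


def critical_angle(n_dense, n_light):
    """Incidence angle (degrees) beyond which total internal reflection occurs."""
    return math.degrees(math.asin(n_light / n_dense))
```

For instance, a ray hitting the surface from below at 60° returns `None` (it reflects back down), while a ray entering from the air at 30° bends to about 22° underwater.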

Q 28. Describe your understanding of color spaces and their importance in CGI.

Color spaces are critical in CGI, defining how colors are represented and interpreted. A proper understanding of different color spaces is essential for ensuring color accuracy across different stages of the pipeline.

Common color spaces include:

- sRGB: The standard color space for the internet and most monitors. Its gamut (range of colors) is relatively small, but it is almost universally supported, which makes it the default for web and screen display.

- Adobe RGB: A wider-gamut color space than sRGB, extending mainly into cyans and greens, commonly used in photography and print-oriented graphic design. Images should carry their embedded color profile so that colors stay consistent across devices and software.

- Rec.709: The standard color space for HDTV. It shares sRGB's primaries and D65 white point but is specified with a different transfer (gamma) function, so footage can look subtly wrong if it is interpreted with the other curve.

- ACEScg: The linear, wide-gamut working space of the Academy Color Encoding System (ACES), a framework designed to keep color accurate and consistent throughout the production pipeline, from capture to final output. Converting every source into one common working space reduces the color shifts that can occur when material moves between different color spaces.

Color space management is crucial for consistency. Working in a wide-gamut color space during production (like ACEScg) and converting to the appropriate color space (like sRGB) for final output helps prevent color shifts and ensure the final image accurately represents the artistic intent. Ignoring color spaces can result in color banding, color shifts, and inconsistencies across different platforms and displays, impacting the final aesthetic and perception of the CGI work.
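One concrete reason to work in a linear space is that sRGB values are gamma-encoded, so arithmetic on them is not arithmetic on light. The sketch below implements the standard sRGB transfer functions (decode to linear, encode back) and a blend helper. The helper name `mix_encoded` is illustrative; in a studio pipeline these conversions, and gamut conversions such as sRGB to ACEScg, are normally handled by a color management system such as OpenColorIO rather than hand-written code.

```python
def srgb_to_linear(v):
    """Decode one sRGB-encoded channel value in [0, 1] to linear light."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(x):
    """Encode one linear-light channel value in [0, 1] with the sRGB curve."""
    if x <= 0.0031308:
        return 12.92 * x
    return 1.055 * x ** (1 / 2.4) - 0.055


def mix_encoded(a, b):
    """Average two sRGB-encoded values correctly: decode, mix in linear,
    then re-encode for display."""
    return linear_to_srgb(0.5 * (srgb_to_linear(a) + srgb_to_linear(b)))
```

Averaging black and white naively on the encoded values gives 0.5, while `mix_encoded(0.0, 1.0)` gives roughly 0.735. The difference is the darkened blends, motion blur, and anti-aliasing edges you get when a pipeline ignores which space its numbers live in.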

Key Topics to Learn for CGI and Motion Capture Interview

- 3D Modeling Fundamentals: Understanding polygon modeling, NURBS surfaces, and sculpting techniques. Practical application: Creating realistic characters or environments for a film or game.

- Texturing and Shading: Mastering PBR (Physically Based Rendering) workflows, material creation, and lighting techniques. Practical application: Achieving photorealistic visuals and enhancing the believability of virtual characters and environments.

- Animation Principles: Knowledge of the 12 principles of animation (such as squash and stretch, anticipation, and timing) and their application in CGI and Motion Capture. Practical application: Creating believable and engaging character animations.

- Motion Capture Pipeline: Understanding the process from capturing raw motion data to cleaning, retargeting, and integrating it into a 3D animation software. Practical application: Troubleshooting issues in motion capture data and ensuring seamless integration with CGI elements.

- Software Proficiency: Expertise in industry-standard software such as Maya, 3ds Max, Blender, MotionBuilder, or similar. Practical application: Efficiently using these tools to complete projects within deadlines.

- Rendering and Compositing: Understanding rendering techniques, optimizing render settings, and compositing elements together for final output. Practical application: Creating high-quality visuals that meet industry standards.

- Problem-Solving and Troubleshooting: Ability to identify and resolve technical challenges related to modeling, animation, rendering, and motion capture data. Practical application: Demonstrating resourcefulness and adaptability in tackling complex problems.

- Pipeline Management: Familiarity with various aspects of a production pipeline, including asset management, version control, and collaboration techniques. Practical application: Working effectively within a team and contributing to a smooth production workflow.
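As a small illustration of the cleanup stage in the motion capture pipeline, the sketch below fills occlusion gaps in one marker channel by linear interpolation and then smooths jitter with a centered moving average. Both functions are hypothetical simplifications; commercial mocap tools offer more sophisticated fill methods, such as spline fills and fills driven by neighboring markers, and better filters.

```python
from typing import List, Optional


def fill_gaps(track: List[Optional[float]]) -> List[Optional[float]]:
    """Linearly interpolate missing samples (None) in one marker channel.

    Gaps at the start or end stay None, since there is no sample on one
    side to interpolate from.
    """
    filled = list(track)
    known = [i for i, v in enumerate(track) if v is not None]
    for a, b in zip(known, known[1:]):
        for i in range(a + 1, b):
            t = (i - a) / (b - a)
            filled[i] = track[a] + t * (track[b] - track[a])
    return filled


def moving_average(track: List[float], radius: int = 1) -> List[float]:
    """Smooth jitter with a centered moving average (window 2*radius + 1),
    shrinking the window at the ends of the track."""
    out = []
    for i in range(len(track)):
        lo = max(0, i - radius)
        hi = min(len(track), i + radius + 1)
        window = track[lo:hi]
        out.append(sum(window) / len(window))
    return out
```

For example, `fill_gaps([0.0, None, None, 3.0])` reconstructs `[0.0, 1.0, 2.0, 3.0]`. Over-smoothing is the usual trade-off: a larger `radius` removes more jitter but also softens foot contacts and sharp accents in the performance.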

Next Steps

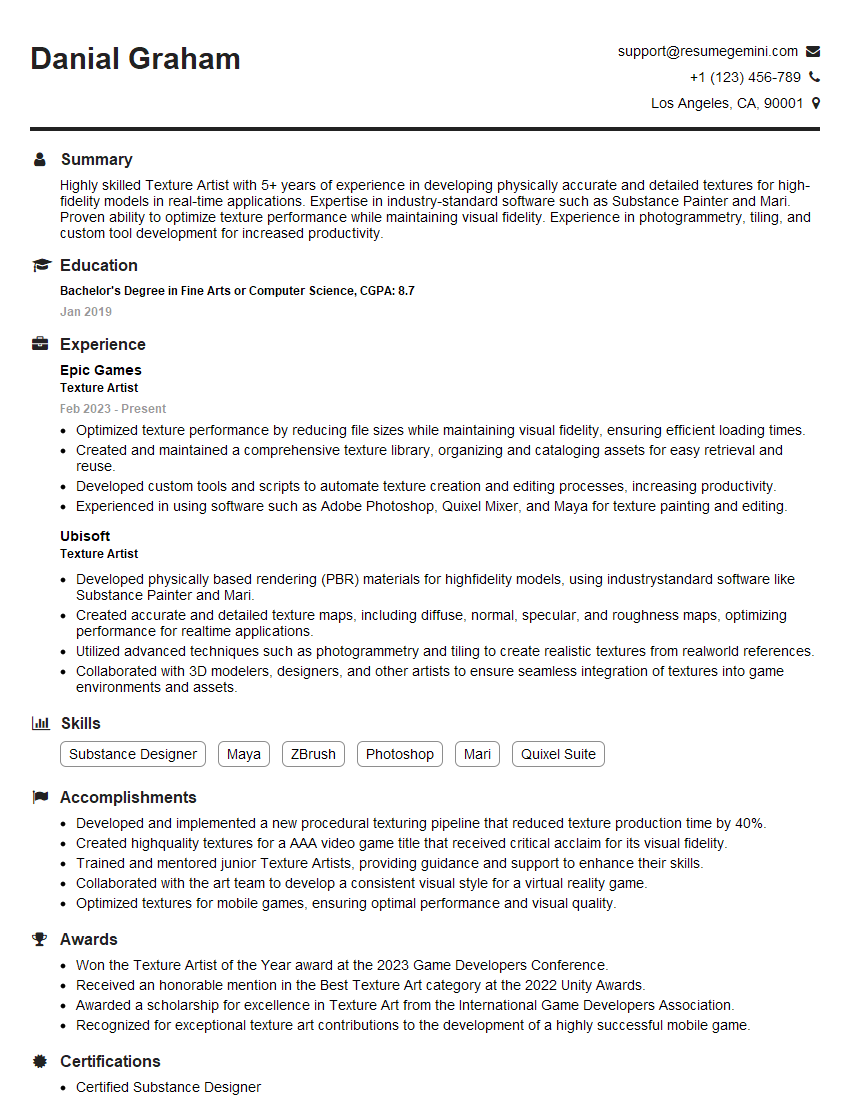

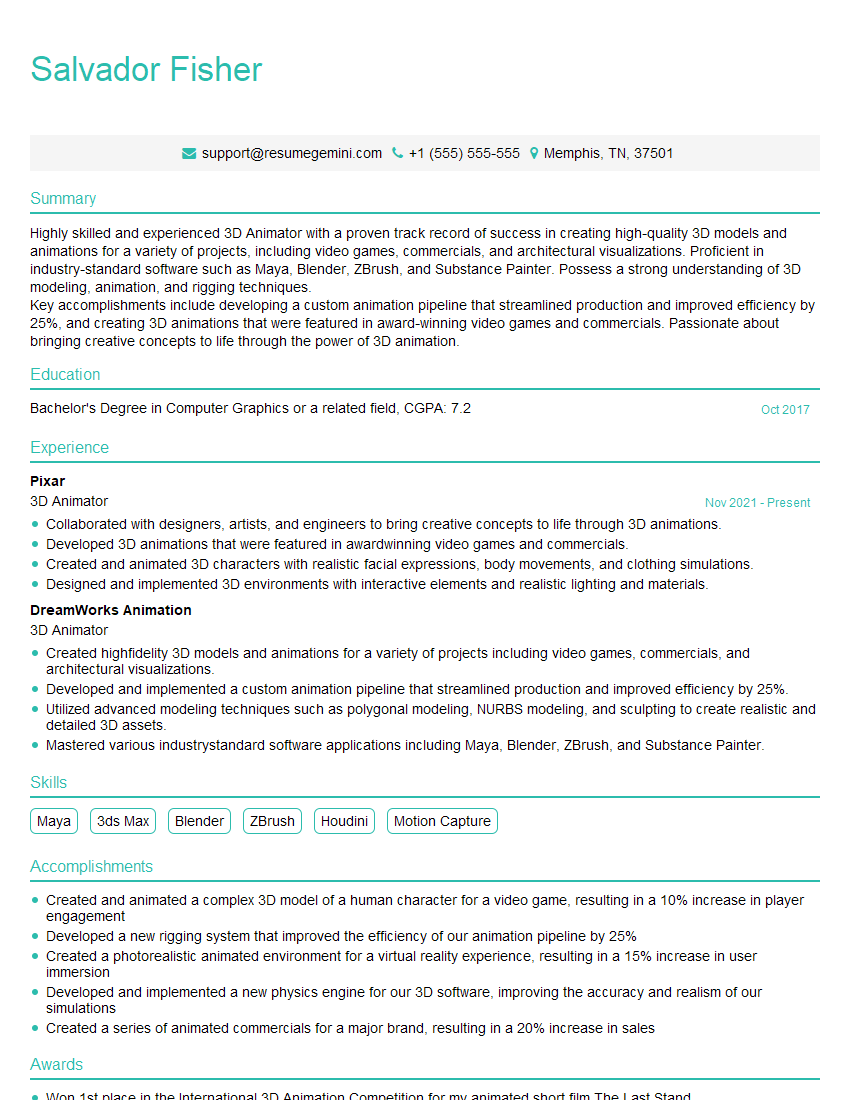

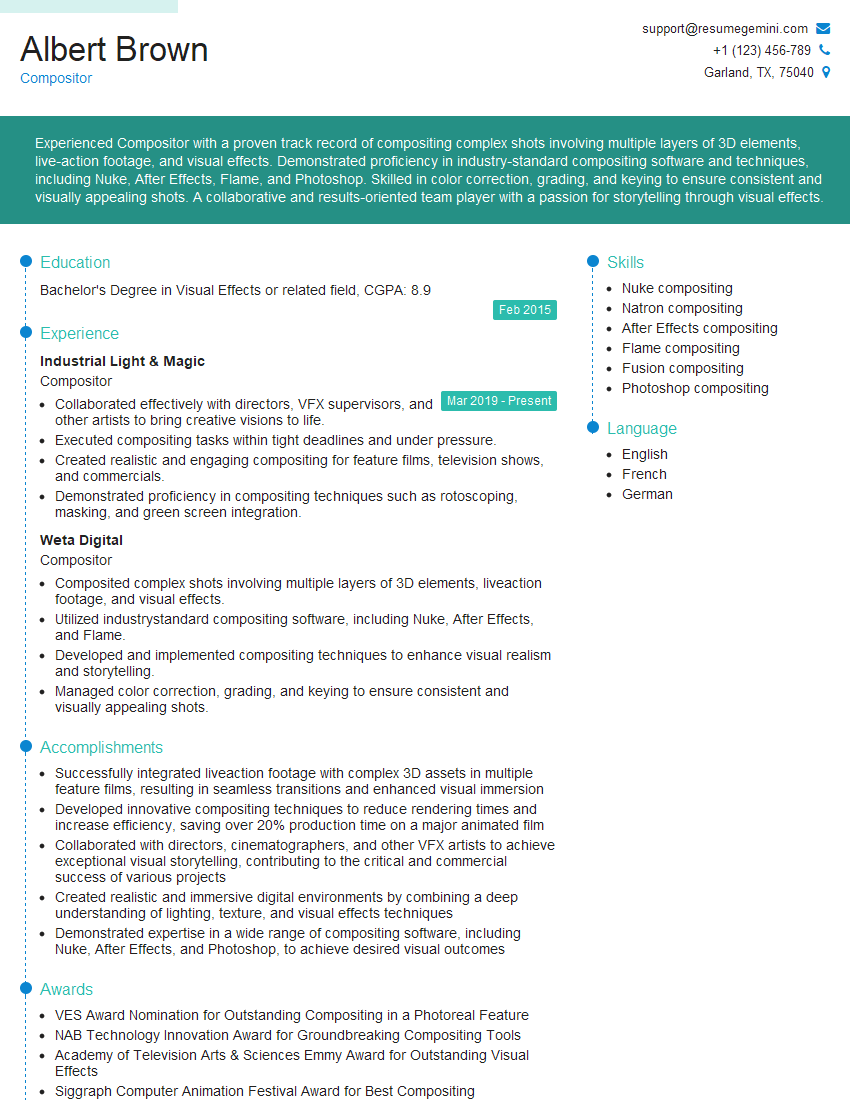

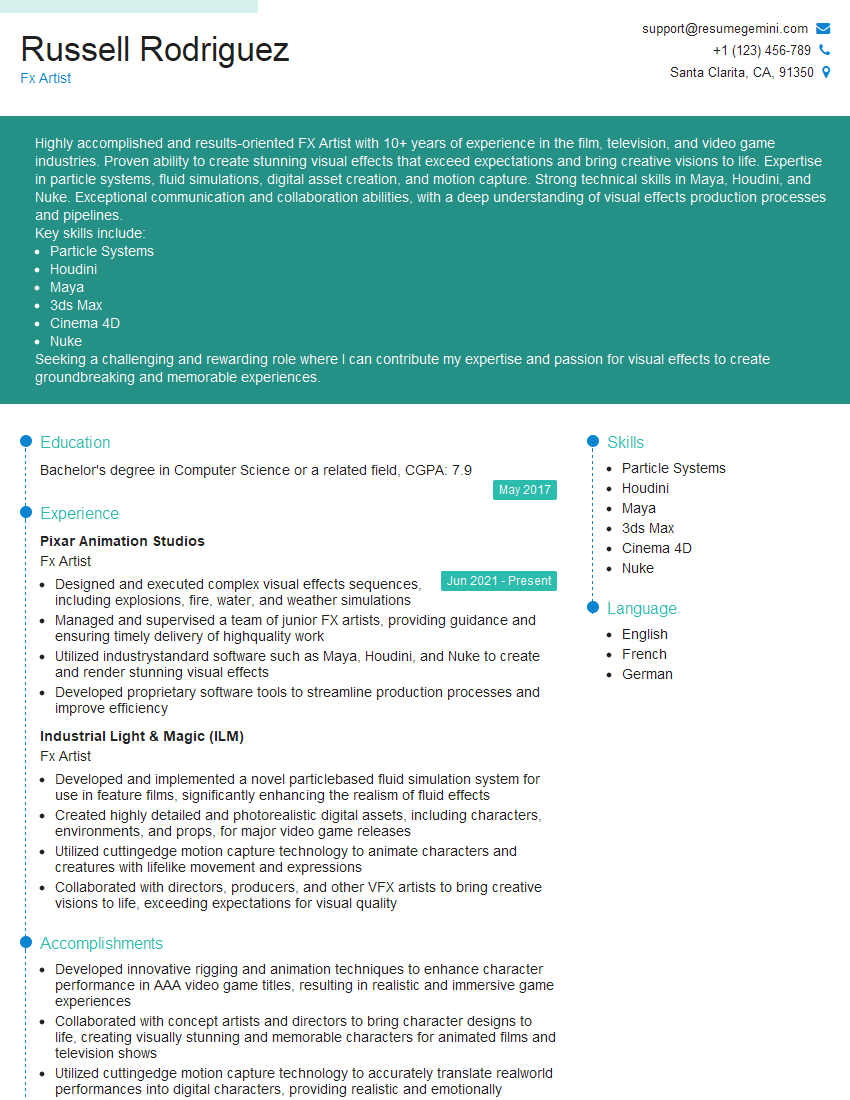

Mastering CGI and Motion Capture opens doors to exciting careers in film, gaming, advertising, and beyond. To maximize your job prospects, create an ATS-friendly resume that effectively highlights your skills and experience. ResumeGemini is a trusted resource to help you build a professional resume that grabs recruiters’ attention. Examples of resumes tailored to CGI and Motion Capture roles are available to guide you. Invest time in crafting a compelling resume – it’s your first impression!
