Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the interview questions employers frequently ask about experience in conducting human factors research using qualitative and quantitative methods, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Interviews on Conducting Human Factors Research Using Qualitative and Quantitative Methods

Q 1. Explain the difference between qualitative and quantitative research methods in human factors.

Qualitative and quantitative research methods in human factors are complementary approaches to understanding user behavior and experience. Qualitative research focuses on why users behave the way they do, exploring in-depth the reasons behind their actions and experiences. It emphasizes rich, descriptive data, often gathered through interviews, observations, and focus groups. Think of it as delving deep into a few specific cases to understand the underlying motivations. Quantitative research, on the other hand, focuses on how many or how much, using numerical data to measure and analyze trends. It employs statistical analysis to identify patterns and relationships in a larger sample population. For example, measuring the time it takes to complete a task or the error rate in a system would be a quantitative approach. In practice, a mixed-methods approach combining both is often the most effective.

Example: Imagine we are designing a new mobile banking app. Qualitative research might involve conducting in-depth interviews with users to understand their frustrations with existing banking apps and their expectations for a new one. Quantitative research could then involve usability testing with a larger group to measure task completion times and error rates for specific functions in the prototype, providing objective data on the app’s usability.

Q 2. Describe your experience designing and conducting user interviews.

Designing and conducting user interviews is a crucial part of my research process. I begin by defining clear objectives and formulating specific, open-ended questions that encourage detailed responses rather than simple ‘yes’ or ‘no’ answers. The interview guide is rigorously tested in pilot interviews to refine its flow and ensure clarity. During the interview itself, I create a comfortable and non-judgmental environment, actively listening to participants and probing for deeper insights when necessary. I always obtain informed consent and ensure participant confidentiality.

Example: In a recent project evaluating a new medical device, I developed an interview protocol focusing on users’ experiences, pain points, and suggestions for improvement. I conducted semi-structured interviews with clinicians and patients, tailoring my approach to the specific audience. The interviews provided rich qualitative data, revealing unexpected challenges in using the device and subsequently informed iterative design improvements.

Q 3. How do you analyze qualitative data, such as interview transcripts or observations?

Analyzing qualitative data, such as interview transcripts or observation notes, requires a systematic approach. It begins with transcription (for interviews), followed by coding, in which I identify recurring themes, patterns, and keywords within the data. I often use thematic analysis, a widely recognized method for identifying patterns and relationships in qualitative data, and software such as NVivo can support this process. After coding, I organize the data into meaningful categories, build narratives, and draw conclusions from the emergent themes. The process is iterative, involving constant review and refinement until a coherent interpretation emerges. Member checking, in which I return to participants for feedback on the analysis, helps confirm that my interpretations reflect their experiences.

Example: In a study examining user experiences with an online grocery service, I coded interview transcripts for themes like ‘ease of use’, ‘product selection’, ‘delivery experience’, and ‘customer service’. Analyzing the frequency and context of these themes revealed that issues with delivery times were a major source of frustration for users, impacting overall satisfaction.
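The frequency part of this analysis can be made concrete with a short tally. The Python sketch below assumes the codes have been exported as hypothetical (participant, theme) pairs. The participant IDs and labels are illustrative and do not come from a real study. The sketch reports both total mentions and the number of distinct participants who raised each theme, because a single vocal participant can inflate raw counts. Counts are only one input, and the context of each coded passage still drives the interpretation.

```python
from collections import Counter

# Hypothetical coded segments: (participant_id, theme) pairs exported from a
# coding tool. The IDs and theme labels here are illustrative only.
coded_segments = [
    ("P01", "delivery experience"), ("P01", "ease of use"),
    ("P02", "delivery experience"), ("P02", "delivery experience"),
    ("P03", "product selection"), ("P03", "delivery experience"),
    ("P04", "customer service"),
]

def theme_frequencies(segments):
    """Count total coded mentions of each theme."""
    return Counter(theme for _, theme in segments)

def theme_reach(segments):
    """Count how many distinct participants mentioned each theme."""
    unique_pairs = set(segments)  # drop repeat mentions by the same participant
    return Counter(theme for _, theme in unique_pairs)

mentions = theme_frequencies(coded_segments)
reach = theme_reach(coded_segments)
for theme, count in mentions.most_common():
    print(f"{theme}: {count} mentions from {reach[theme]} participants")
```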

Q 4. What statistical methods are you proficient in using for quantitative human factors data analysis?

I’m proficient in a range of statistical methods for quantitative data analysis relevant to human factors. This includes descriptive statistics (means, standard deviations, frequencies) to summarize data; inferential statistics like t-tests, ANOVA, and regression analysis to identify significant differences or relationships between variables; and non-parametric tests when data doesn’t meet the assumptions of parametric tests. I also have experience using statistical software packages such as R and SPSS to perform complex analyses.

Example: In a usability study comparing two different website designs, I used a t-test to compare mean task completion times between the two designs. A statistically significant difference in means would suggest that one design is more efficient than the other.
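A t-test of this kind can be sketched with Python's standard library. The version below computes Welch's t statistic and its degrees of freedom. Welch's test is a variant of the two-sample t-test that does not assume equal variances. The sketch assumes each design was tested with a separate group of users, which is a between-subjects design. A within-subjects study would call for a paired t-test instead. The completion times are made up for illustration.

```python
import math
import statistics

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom
    for two independent samples (does not assume equal variances)."""
    mean_a, mean_b = statistics.mean(a), statistics.mean(b)
    var_a, var_b = statistics.variance(a), statistics.variance(b)  # sample variances (n - 1)
    se_a, se_b = var_a / len(a), var_b / len(b)  # squared standard errors of each mean
    t = (mean_a - mean_b) / math.sqrt(se_a + se_b)
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (len(a) - 1) + se_b ** 2 / (len(b) - 1))
    return t, df

# Hypothetical task completion times in seconds (illustrative, not real data).
design_a = [42.1, 38.5, 45.0, 40.2, 39.8, 44.3]
design_b = [35.2, 33.9, 37.5, 36.1, 34.8, 38.0]
t, df = welch_t(design_a, design_b)
print(f"t = {t:.2f}, df = {df:.1f}")
```

To get the p-value, you can run `scipy.stats.ttest_ind(design_a, design_b, equal_var=False)` if SciPy is available. It performs the same Welch test.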

Q 5. Describe your experience with usability testing methodologies.

My experience with usability testing methodologies is extensive. I’ve conducted various types of usability tests, including moderated and unmoderated remote testing and think-aloud protocols, and I complement them with expert inspection methods such as heuristic evaluations and cognitive walkthroughs. I’m skilled in developing test plans, recruiting participants, creating test scripts, conducting tests, collecting data, and analyzing the results to identify usability issues and make design recommendations. I adapt my methodology to the specific product and target audience.

Example: In a recent project evaluating a new software application, I conducted a think-aloud usability test, where participants verbalized their thoughts and actions while interacting with the software. This provided valuable insights into their decision-making processes and helped identify areas of confusion or frustration.

Q 6. How would you measure user satisfaction in a product design context?

Measuring user satisfaction in a product design context calls for a multi-faceted approach that combines quantitative and qualitative methods. Quantitatively, I use standardized questionnaires like the System Usability Scale (SUS) or the User Satisfaction Questionnaire (USQ) to obtain numerical scores reflecting perceived usability and overall satisfaction. Standardized questionnaires are a reliable, efficient way to collect ratings that can be compared across designs, iterations, and studies. Qualitatively, I gather in-depth feedback through post-task interviews or open-ended survey questions to understand the reasons behind the scores. This identifies the specific aspects of the product that contribute to or detract from satisfaction, giving context to the numbers. Combining these methods gives a comprehensive understanding of user satisfaction.

Example: After usability testing a new e-commerce website, I administered the SUS questionnaire to participants, obtaining a numerical score indicating overall usability. Follow-up interviews then allowed me to explore the reasons for high or low scores and understand the specific aspects of the website that influenced user satisfaction.
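SUS uses a fixed scoring rule, sketched here in Python. Odd-numbered items are positively worded and contribute the rating minus 1. Even-numbered items are negatively worded and contribute 5 minus the rating. The sum is multiplied by 2.5 to give a score from 0 to 100. The responses below are hypothetical.

```python
def sus_score(responses):
    """Score one participant's System Usability Scale questionnaire.

    responses: ten ratings on a 1-5 scale, in questionnaire order (item 1 first).
    Odd-numbered items are positively worded; even-numbered items are negatively worded.
    """
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs exactly ten ratings between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1   # odd items: higher agreement is better
        else:
            total += 5 - rating   # even items: lower agreement is better
    return total * 2.5            # rescale the 0-40 sum to 0-100

# Hypothetical responses from one participant.
print(sus_score([4, 2, 5, 1, 4, 2, 5, 2, 4, 1]))
```

A SUS score is not a percentage. It is normally interpreted by comparing it against benchmark data from other studies.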

Q 7. Explain your experience with A/B testing and its application in human factors.

A/B testing, or split testing, is a powerful method for comparing two versions of a design element to determine which performs better. In the context of human factors, this might involve comparing two different layouts of a website, two different button designs, or two different instructions for a task. I typically use A/B testing to evaluate the impact of design changes on key metrics like task completion rate, error rate, and user satisfaction. The results guide iterative improvements and evidence-based design decisions.

Example: To optimize the checkout process on an e-commerce website, we conducted A/B testing, comparing two different versions of the checkout page: one with a simplified layout and the other with the existing, more complex layout. By measuring conversion rates (successful checkouts) and task completion times for each version, we determined that the simplified layout significantly improved performance. This data-driven approach allowed for a demonstrable improvement in the user experience.
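A two-proportion z-test is a common choice for comparing conversion rates like these. The Python sketch below uses only the standard library, and the counts are hypothetical. The test assumes that visitors were randomly assigned to the two versions and that each visit is an independent trial. Task completion times from the same test would need a different analysis, such as the t-test discussed under Q 4.

```python
import math

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two conversion rates,
    using the pooled proportion under the null hypothesis of no difference."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function; doubled for a two-sided p-value.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return p_a, p_b, z, p_value

# Hypothetical counts: existing checkout (A) vs simplified checkout (B).
p_a, p_b, z, p = two_proportion_z_test(successes_a=180, n_a=1000, successes_b=225, n_b=1000)
print(f"A: {p_a:.1%}  B: {p_b:.1%}  z = {z:.2f}  p = {p:.4f}")
```

With small samples or rare conversions, an exact test such as Fisher's exact test is safer than this normal approximation.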

Q 8. What are some common pitfalls to avoid when conducting human factors research?

Common pitfalls in human factors research often stem from flawed study design, inadequate data analysis, or insufficient consideration of the human element. One major pitfall is confirmation bias, where researchers unintentionally seek data that confirms their pre-existing hypotheses and overlook contradictory evidence. For example, a researcher who believes an interface is intuitive might unintentionally lead participants toward confirming that belief during testing. Another common error is inadequate sampling. A sample that’s too small leaves the study underpowered, so real effects may go undetected, while a sample that doesn’t represent the target user population (e.g., testing a new elderly care app only on young adults) can render the findings irrelevant. Poorly defined metrics are another significant pitfall: if success criteria aren’t established before the study begins, it becomes difficult to interpret the results meaningfully. Finally, neglecting individual differences among participants can skew the data and compromise the study’s validity. Researchers should strive for representative samples and carefully consider participant diversity in terms of age, abilities, and experience.
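One way to avoid an underpowered study is to estimate the sample size before recruiting. The sketch below uses the common normal-approximation formula for a two-sided comparison of two independent group means: n = 2 * (z_(1 - alpha/2) + z_(power))^2 / d^2. Here, d is the expected standardized effect size (Cohen's d). The effect sizes in the loop are Cohen's conventional small, medium, and large benchmarks, used only as placeholders. A real study should base d on pilot data or prior literature.

```python
import math
from statistics import NormalDist

def n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate participants per group for a two-sided, two-sample comparison
    of means, using the normal approximation:
        n = 2 * (z_(1 - alpha/2) + z_(power))**2 / d**2
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size_d ** 2)

for d in (0.2, 0.5, 0.8):  # Cohen's conventional small, medium, large effects
    print(f"d = {d}: about {n_per_group(d)} participants per group")
```

This normal approximation slightly underestimates the figure an exact t-test power calculation gives. For final planning, dedicated tools such as G*Power or R's `pwr` package are preferable.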

Q 9. How do you ensure ethical considerations are met during human factors research?

Ethical considerations are paramount in human factors research. Prioritizing participant well-being and ensuring informed consent is fundamental. This means obtaining explicit, written consent from each participant before the study commences, fully explaining the study’s purpose, procedures, risks, and benefits. Participants must be free to withdraw at any time without penalty. Maintaining anonymity and confidentiality is also crucial. Data should be anonymized where possible, and researchers must establish robust protocols to protect participant privacy. Debriefing after the study is essential, because it allows participants to ask questions and express concerns, ensuring a positive experience. Furthermore, any potential discomfort or stress experienced by participants should be minimized and addressed appropriately. For studies involving deception, thorough justification and debriefing are absolutely necessary. Finally, approval from an Institutional Review Board (IRB) or equivalent ethics committee should be obtained before conducting human subjects research, to ensure adherence to ethical guidelines and regulations.
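Sometimes participant records need to stay linkable across sessions without storing names next to the data. Pseudonymization with a keyed hash is one option, and this Python sketch illustrates it under stated assumptions. The `P-` prefix and the 10-character code length are arbitrary, hypothetical choices. The key must be stored separately from the research data. Pseudonymized data is not fully anonymous, because anyone holding the key can re-identify participants by hashing candidate names, so it still needs the same privacy protections.

```python
import hashlib
import hmac
import secrets

def pseudonymize(participant_name: str, key: bytes) -> str:
    """Map an identifying string to a stable code using a keyed hash (HMAC-SHA256).
    The same name and key always give the same code, so sessions can be linked
    without storing the name alongside the research data."""
    normalized = participant_name.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalized, hashlib.sha256)
    return "P-" + digest.hexdigest()[:10]  # hypothetical code format

# In practice the key would be generated once and stored apart from the data.
study_key = secrets.token_bytes(32)
print(pseudonymize("Jane Doe", study_key))
```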

Q 10. Describe your experience with different types of data collection techniques (e.g., surveys, physiological measurements).

My experience encompasses a wide range of data collection techniques. I’ve extensively used surveys to gather quantitative data on user attitudes, preferences, and perceptions; for example, I used a Likert scale survey to assess user satisfaction with a new software interface. I’ve also employed qualitative methods like semi-structured interviews to gain in-depth insights into user experiences and pain points; one project involved interviewing users about their challenges with a complex medical device. In addition, I have considerable experience with physiological measurements. For instance, I’ve used electroencephalography (EEG) to measure brain activity during specific tasks, providing objective indicators of cognitive workload. I’ve also used eye-tracking to analyze visual attention and identify areas of interest or confusion in an interface (more on this in a later answer). Finally, I’ve employed think-aloud protocols, in which participants verbalize their thoughts while performing a task, giving a window into reasoning that would otherwise stay hidden. Choosing the right method depends heavily on the research questions and the nature of the phenomenon being studied.

Q 11. Explain your understanding of human factors principles related to workload, fatigue, and stress.

Understanding workload, fatigue, and stress is critical in human factors. Workload refers to the mental and physical demands placed on a person by a task. High workload can lead to errors and reduced performance. Measuring workload often involves subjective scales (e.g., NASA-TLX) and objective metrics (e.g., task completion time, error rate). Fatigue is a state of reduced physical or mental capacity, often stemming from prolonged exertion or lack of sleep. It impairs performance and increases error rates. We often assess fatigue through self-report questionnaires, physiological measures (e.g., heart rate variability), and performance-based tests. Stress, a response to perceived threats or demands, can manifest physically (e.g., increased heart rate, muscle tension) and psychologically (e.g., anxiety, irritability). We can measure stress through physiological indicators and psychological scales, and understanding its impact on human performance is vital in designing safe and effective systems. In practice, I often integrate multiple measures to get a comprehensive picture of how these factors interact to influence human performance. For example, in a study on air traffic controllers, we measured workload using NASA-TLX, fatigue using a sleepiness scale, and stress via salivary cortisol levels.
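NASA-TLX produces six subscale ratings, each on a 0 to 100 scale: mental demand, physical demand, temporal demand, performance, effort, and frustration. The Python sketch below computes two scores. The first is the Raw TLX, which is the unweighted mean of the ratings. The second is the weighted score, where each weight is the number of times the subscale was chosen across the 15 pairwise comparisons. The ratings and weights are hypothetical values for one participant.

```python
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")

def raw_tlx(ratings):
    """Raw TLX: unweighted mean of the six subscale ratings (each 0-100)."""
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)

def weighted_tlx(ratings, weights):
    """Weighted TLX: each weight is the number of times that subscale was chosen
    in the 15 pairwise comparisons, so the weights sum to 15."""
    if sum(weights[s] for s in SUBSCALES) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15

# Hypothetical ratings and weights for one controller after one scenario.
ratings = {"mental": 80, "physical": 10, "temporal": 70,
           "performance": 40, "effort": 65, "frustration": 50}
weights = {"mental": 5, "physical": 0, "temporal": 4,
           "performance": 2, "effort": 3, "frustration": 1}
print(raw_tlx(ratings), weighted_tlx(ratings, weights))
```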

Q 12. How do you translate research findings into actionable design recommendations?

Translating research findings into actionable design recommendations requires a systematic approach. First, I thoroughly analyze the data, identifying key trends and patterns. Then, I map these findings back to the original research questions and objectives. This allows me to directly connect the data to specific design issues. For example, if eye-tracking data reveals users consistently overlook a critical button, that directly informs the need for a more prominent or better-placed button. Next, I develop specific, measurable, achievable, relevant, and time-bound (SMART) design recommendations. These recommendations should be practical and feasible to implement within the given constraints. Finally, I clearly communicate these recommendations to the design team, providing context, supporting data, and visual aids (e.g., annotated screenshots, flowcharts) to ensure understanding and buy-in. I often present findings in a clear and concise report including both qualitative and quantitative data, supporting figures and tables, and summarizing the key implications for design.

Q 13. Describe a time you had to adapt your research methods due to unforeseen challenges.

During a study on the usability of a new medical device, we initially planned to conduct in-person usability testing with a diverse group of healthcare professionals. However, due to unexpected travel restrictions related to a pandemic, we had to rapidly adapt. We transitioned to remote usability testing using video conferencing and screen sharing software. This required re-designing the testing protocol to accommodate the remote environment, ensuring we could still collect meaningful data. For instance, we implemented more detailed screen recording to capture user interactions and incorporated a remote think-aloud protocol with clear instructions to the participants. While the change was challenging, it ultimately proved successful, demonstrating the adaptability required in human factors research. The resulting data were comparable to what we would have collected in a traditional setting, showcasing the importance of flexibility and problem-solving skills in this field.

Q 14. How familiar are you with eye-tracking technology and its application in usability testing?

I’m very familiar with eye-tracking technology and its application in usability testing. Eye-tracking provides valuable insights into user attention, visual search patterns, and areas of difficulty within an interface. It complements other methods by offering objective data on where users look and for how long, revealing potential usability issues that might otherwise be overlooked. For example, in a recent project, eye-tracking revealed that users consistently missed a crucial error message in a web form because it was visually overshadowed by other elements. This objective data allowed us to make data-driven recommendations to improve the message’s visibility. We typically use heatmaps and gaze plots to visualize the eye-tracking data, facilitating the identification of design improvements. Analyzing fixation durations and saccade lengths provides valuable information about the cognitive processes and difficulty levels encountered while using an interface. The choice of eye-tracking methodology (e.g., remote vs. lab-based) depends on the research goals and practical considerations.
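Heatmaps and gaze plots are usually complemented by area-of-interest (AOI) metrics such as dwell time. The Python sketch below computes total fixation duration and fixation count for each rectangular AOI. It assumes the eye tracker's software has already detected the fixations. The AOI names, coordinates, and fixations are hypothetical, chosen to mirror the overlooked error message described above.

```python
from dataclasses import dataclass

@dataclass
class Fixation:
    x: float            # screen coordinates in pixels
    y: float
    duration_ms: float

@dataclass
class AOI:
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, f: Fixation) -> bool:
        return self.left <= f.x <= self.right and self.top <= f.y <= self.bottom

def aoi_metrics(fixations, aois):
    """Total dwell time and fixation count per area of interest (AOI).
    Fixations outside every AOI are ignored; if AOIs overlap, the first match wins."""
    metrics = {a.name: {"dwell_ms": 0.0, "fixations": 0} for a in aois}
    for f in fixations:
        for a in aois:
            if a.contains(f):
                metrics[a.name]["dwell_ms"] += f.duration_ms
                metrics[a.name]["fixations"] += 1
                break
    return metrics

# Hypothetical AOIs for a web form and a short fixation sequence.
aois = [AOI("error_message", 100, 400, 500, 430), AOI("submit_button", 100, 450, 220, 490)]
fixations = [Fixation(150, 470, 310), Fixation(300, 200, 250), Fixation(160, 465, 280)]
print(aoi_metrics(fixations, aois))
```

In this made-up sequence, the error-message AOI receives no fixations at all. That kind of pattern would flag the message for a visibility fix.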

Q 15. What software or tools are you proficient in using for data analysis in human factors research?

For data analysis in human factors research, I’m proficient in several software packages. My core competency lies in using statistical software like R and SPSS for quantitative analysis. R, in particular, offers incredible flexibility with its extensive libraries for statistical modeling, data visualization, and creating custom analyses. I frequently utilize packages like ggplot2 for creating publication-quality graphs and lme4 for mixed-effects modeling, which is crucial when dealing with repeated measures designs common in human factors studies. For qualitative data analysis, I rely on NVivo and ATLAS.ti. These software packages allow me to manage large datasets of interview transcripts, observations, and other qualitative data, facilitating efficient coding, thematic analysis, and the identification of patterns and trends. I’m also comfortable using spreadsheet software like Excel and Google Sheets for simpler data management and preliminary analyses.

Q 16. Describe your experience working with diverse participant populations in research studies.

I have extensive experience working with diverse participant populations. This includes studies involving individuals with varying levels of technological proficiency, age groups ranging from young children to senior citizens, and individuals with disabilities. For example, in a recent study on the usability of a medical device, we included participants with varying levels of dexterity and visual acuity. To ensure inclusivity and mitigate bias, we employed several strategies. We recruited participants through diverse channels and adapted our research materials and methods to individual needs (e.g., using large-font materials, providing alternative input methods). We also employed trained research assistants who were proficient in working with diverse populations. The crucial aspect is tailoring the research design to the specific needs of the population, so that the data collected are reliable and representative. Thoughtful protocol adaptation is critical to meaningful results and ethical research practices.

Q 17. Explain the concept of human error and how it relates to system design.

Human error is a deviation from an intended course of action. It’s not simply carelessness but often stems from complex interactions between the human operator, the task, and the environment. Think of it like this: a perfectly skilled pilot can still make a mistake if the cockpit controls are poorly designed or if they’re suffering from fatigue. In system design, understanding human error is paramount. We apply this understanding by designing systems that anticipate and mitigate potential errors. This involves considering human limitations in attention, memory, and perception. For instance, designing a system with clear visual cues, simplified workflows, and redundancy to catch mistakes can significantly reduce error rates. We use methods like Human Error Analysis (HEA) to systematically investigate errors and their root causes, helping to design safer and more user-friendly systems.

Q 18. How would you apply human factors principles to improve the safety of a specific system or product (e.g., a medical device, an automobile)?

Let’s consider improving the safety of an automobile. We would start by analyzing current accident data and reports to identify common causes of accidents and the areas where human factors play a significant role. For example, we might discover that driver distraction is a major contributor to accidents. Based on this, we could implement several human factors interventions:

- Improved in-car interface design: Minimizing distractions caused by complex infotainment systems by simplifying the interface, incorporating voice control options, and reducing visual clutter.

- Advanced driver-assistance systems (ADAS): Incorporating features like lane departure warnings, adaptive cruise control, and automatic emergency braking to help drivers avoid accidents.

- Improved ergonomics of the driving environment: Ensuring that controls are easily accessible and intuitive, and reducing driver fatigue by optimizing seat adjustability and visibility.

Q 19. What is your experience with creating and implementing human factors guidelines or standards?

I have experience creating and implementing human factors guidelines in collaboration with engineering teams. In a previous role, I worked on developing usability guidelines for a new medical device, ensuring its design aligned with ISO standards. This involved defining specific metrics for usability, such as task completion time, error rate, and subjective workload. I then participated in iterative testing and design adjustments based on user feedback. I’ve also been involved in creating internal guidelines within my company. These guidelines emphasize best practices for user interface design, task analysis, and usability testing, helping our teams to consistently create user-centered products. The creation of these guidelines frequently involves literature reviews, expert panels, and pilot testing to ensure that they are practical, effective, and adaptable to various contexts.

Q 20. Explain your understanding of cognitive ergonomics and its applications.

Cognitive ergonomics focuses on understanding and optimizing the cognitive processes involved in human-system interaction. It examines how human mental processes – such as attention, memory, decision-making, and problem-solving – affect the performance and safety of tasks. For example, consider designing a control panel for a power plant. Cognitive ergonomics principles would guide the design of the interface to reduce cognitive load and minimize the chance of operator errors. This might involve using clear visual cues, organizing information logically, and minimizing the number of steps required to complete a task. Applications range from workplace design to software development, ensuring that systems and tasks are aligned with human cognitive capabilities. It involves using techniques like cognitive task analysis, mental workload assessment, and situation awareness analysis to improve system design and user experience.

Q 21. How do you ensure the validity and reliability of your human factors research?

Ensuring validity and reliability is crucial. Validity refers to whether the research measures what it intends to measure. We enhance validity by using established, validated instruments and by subjecting our study designs and instruments to peer review and rigorous pilot testing. Reliability refers to the consistency and reproducibility of the results. We support reliability through rigorous research design and standardized procedures, and we employ multiple measures whenever possible. For example, in a usability study, we might use both objective measures (e.g., task completion time, error rate) and subjective measures (e.g., questionnaires, interviews) to assess the usability of a product. Triangulation across multiple data sources allows for a more robust and credible interpretation of the findings. We also meticulously document our methods to ensure transparency and replicability.
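One common reliability check for qualitative coding is inter-rater agreement. Agreement is often summarized with Cohen's kappa, which corrects the raw percentage of agreement for the agreement expected by chance. This Python sketch assumes that two coders each assigned exactly one code to the same set of segments. The code labels are hypothetical.

```python
from collections import Counter

def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assigned one code per segment."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty set of segments")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Agreement expected by chance, from each coder's marginal code frequencies.
    expected = sum(freq_a[c] * freq_b[c] for c in set(freq_a) | set(freq_b)) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when both coders used a single identical code")
    return (observed - expected) / (1 - expected)

# Hypothetical codes assigned to the same eight transcript segments.
coder_a = ["ease", "ease", "delivery", "delivery", "service", "ease", "delivery", "service"]
coder_b = ["ease", "delivery", "delivery", "delivery", "service", "ease", "delivery", "ease"]
print(round(cohens_kappa(coder_a, coder_b), 2))
```

In this example, the coders agree on 6 of 8 segments (75%). Once chance agreement is removed, kappa is about 0.61.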

Q 22. Describe your experience with mixed-methods research designs.

Mixed-methods research combines both qualitative and quantitative approaches to gain a more comprehensive understanding of a research problem. Think of it like this: qualitative methods provide the ‘why’ – the rich, nuanced understanding of human experiences and perspectives – while quantitative methods provide the ‘how many’ – the statistical data to quantify those experiences. In my work, I’ve frequently employed this approach. For example, in a recent study on user interface design, we first conducted user interviews (qualitative) to understand users’ frustrations and pain points with the existing system. We then used A/B testing (quantitative) to compare the effectiveness of different design solutions identified during the qualitative phase, measuring metrics like task completion time and error rates. This integrated approach provided a much richer and more actionable understanding than either method could have achieved alone.

The design of a mixed-methods study is crucial. I often use a concurrent (convergent) design, in which qualitative and quantitative data are collected in parallel and the results are then compared and integrated. Other approaches use sequential designs, in which one method follows the other and the first informs the second. The study above is an example, since the interviews shaped the A/B test. The choice depends on the research question and the resources available.

Q 23. Explain your experience with different sampling techniques in human factors research.

Sampling techniques are vital for ensuring the generalizability of research findings. In human factors, the choice of sampling method depends heavily on the research question and the population of interest. I’ve worked with a range of techniques, including:

- Simple random sampling: Every member of the population has an equal chance of being selected. This is ideal for maximizing generalizability but can be challenging in practice, especially when the population is large or dispersed, or when no complete list of its members exists.

- Stratified sampling: The population is divided into subgroups (strata) based on relevant characteristics (e.g., age, experience level), and participants are randomly selected from each stratum. This ensures representation from all key subgroups.

- Convenience sampling: Participants are selected based on their availability and ease of access. While convenient, this method introduces bias and limits the generalizability of findings. I use this cautiously, usually in exploratory studies or pilot tests.

- Purposive sampling: Participants are selected based on their specific characteristics relevant to the research question (e.g., expert users for usability testing). This is common in qualitative research where specific perspectives are sought.

In a recent study on the usability of a medical device, we employed stratified sampling to ensure representation across different levels of medical expertise among users. This allowed us to identify usability issues specific to different user groups and tailor design recommendations accordingly.
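The stratified approach can be sketched in a few lines of Python. This version draws an equal number of participants at random from each stratum, which is called equal allocation. Proportional allocation is an equally common alternative, in which each stratum's share of the sample matches its share of the population. The pool, the `expertise` field, and the stratum labels are hypothetical. The seed is fixed only so that the draw can be reproduced and documented.

```python
import random
from collections import defaultdict

def stratified_sample(pool, stratum_of, per_stratum, seed=None):
    """Draw the same number of participants at random from each stratum.

    pool: candidate participants; stratum_of: function returning a candidate's stratum.
    Raises if a stratum has fewer candidates than requested.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for candidate in pool:
        strata[stratum_of(candidate)].append(candidate)
    sample = {}
    for name, members in strata.items():
        if len(members) < per_stratum:
            raise ValueError(f"stratum {name!r} has only {len(members)} candidates")
        sample[name] = rng.sample(members, per_stratum)
    return sample

# Hypothetical recruitment pool tagged by level of medical expertise.
pool = [{"id": f"C{i:02d}", "expertise": level}
        for i, level in enumerate(["novice", "intermediate", "expert"] * 6)]
chosen = stratified_sample(pool, lambda c: c["expertise"], per_stratum=3, seed=42)
for stratum, members in chosen.items():
    print(stratum, [m["id"] for m in members])
```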

Q 24. What is your experience with statistical significance testing?

Statistical significance testing is crucial for determining whether observed effects in quantitative data could plausibly be explained by chance alone or are likely to reflect a real effect. I’m proficient in various tests, including t-tests, ANOVA, and chi-squared tests. The choice of test depends on the type of data (e.g., continuous, categorical) and the research design. It’s important to remember that statistical significance doesn’t necessarily equate to practical significance: a statistically significant effect might be too small to matter in a real-world context. I always consider both statistical and practical significance when interpreting results.

For example, in analyzing A/B test data, we might find that a new design leads to a statistically significant reduction in task completion time. However, if the reduction is only a fraction of a second, it might not be practically significant enough to justify the cost and effort of redesigning the system. Therefore, I always report both the p-value, which indicates statistical significance, and an effect size, which quantifies the magnitude of the effect and informs judgments about practical significance.
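An effect size can be computed alongside the significance test. The Python sketch below calculates Cohen's d for two independent groups using the pooled standard deviation, and the timing data are hypothetical. It is worth reporting the raw difference in seconds next to d. When variability is low, even a tiny absolute difference can look like a large standardized effect.

```python
import math
import statistics

def cohens_d(a, b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    n_a, n_b = len(a), len(b)
    pooled_var = ((n_a - 1) * statistics.variance(a)
                  + (n_b - 1) * statistics.variance(b)) / (n_a + n_b - 2)
    return (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled_var)

# Hypothetical task completion times in seconds: old design vs new design.
old = [12.4, 11.9, 12.8, 12.1, 12.6]
new = [12.1, 11.7, 12.5, 11.8, 12.2]
raw_diff = statistics.mean(old) - statistics.mean(new)
print(f"raw difference = {raw_diff:.2f} s, d = {cohens_d(old, new):.2f}")
```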

Q 25. How do you present and communicate your research findings to a technical and non-technical audience?

Effective communication of research findings is crucial. I tailor my communication style to the audience. For technical audiences (e.g., fellow researchers, engineers), I present detailed statistical analyses, methodological explanations, and in-depth discussions of the results. I use charts, graphs, and tables to present data effectively. For non-technical audiences (e.g., stakeholders, clients), I focus on the key findings, their implications, and recommendations for action, using clear, concise language and avoiding jargon. I rely more on visual aids like infographics and plain-language summaries.

In all presentations, whether technical or non-technical, I emphasize the story of the research—the problem, the methodology, the key findings, and the implications. This makes the findings more engaging and memorable for the audience. For example, I might use case studies or anecdotes from user interviews to illustrate key findings and make them more relatable.

Q 26. Describe your understanding of human-computer interaction (HCI) principles.

Human-computer interaction (HCI) principles guide the design of user-centered systems. My understanding encompasses several key areas:

- Usability: The system should be easy to learn, efficient to use, and easy to remember. It should also keep errors few and easy to recover from, and be satisfying to use. These qualities correspond to Nielsen’s five usability components: learnability, efficiency, memorability, errors, and satisfaction.

- Accessibility: The system should be usable by people with disabilities, adhering to guidelines like WCAG (Web Content Accessibility Guidelines).

- User experience (UX): The overall experience of interacting with the system, encompassing emotional responses, satisfaction, and engagement.

- Cognitive ergonomics: Understanding human cognitive processes (attention, memory, perception) to design systems that minimize cognitive load and maximize performance.

- Iterative design: A cyclical process of design, testing, and refinement based on user feedback.

For instance, in a project involving the design of a mobile banking app, we applied HCI principles to ensure the app was intuitive, accessible to users with visual impairments, and provided a positive and engaging user experience. We conducted iterative usability testing throughout the design process to incorporate user feedback and improve the app’s design.
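One part of accessibility for users with visual impairments can be checked numerically: text contrast. WCAG 2.x defines a contrast ratio from the relative luminance of two colors. Level AA requires a ratio of at least 4.5:1 for normal-size text. The Python sketch below implements that formula. The grey-on-white colors are hypothetical choices and are not taken from the project described.

```python
def relative_luminance(rgb):
    """WCAG 2.x relative luminance of an sRGB color given as 0-255 integers."""
    def linearize(channel):
        c = channel / 255
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4
    r, g, b = (linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def contrast_ratio(fg, bg):
    """Contrast ratio (lighter + 0.05) / (darker + 0.05), ranging from 1 to 21."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)

# Hypothetical grey label text (#767676) on a white background.
ratio = contrast_ratio((118, 118, 118), (255, 255, 255))
print(f"{ratio:.2f}:1, passes AA for normal text: {ratio >= 4.5}")
```

A contrast check covers only one aspect of visual accessibility. Screen-reader support and text resizing still need their own evaluation.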

Q 27. What are some current trends or emerging technologies in the field of human factors research?

The field of human factors is constantly evolving. Some current trends and emerging technologies include:

- Artificial intelligence (AI) in human factors research: AI is being used to automate data analysis, personalize user experiences, and create more realistic simulations for testing. For example, AI-powered eye-tracking analysis can provide insights into user attention and cognitive processes.

- Virtual reality (VR) and augmented reality (AR) for usability testing: VR and AR provide immersive environments for testing user interfaces and interactions in realistic contexts. They let researchers stage scenarios that would be costly, impractical, or unsafe to recreate physically, which can make testing more engaging and more ecologically valid.

- Big data and analytics: Analyzing large datasets from user interactions to identify patterns and predict user behavior. This allows for data-driven design and personalized user experiences.

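- Wearable sensors and physiological data: Using wearable sensors to collect physiological data (e.g., heart rate, skin conductance) to measure user stress and workload. These signals provide objective, continuous measures that complement subjective self-reports, although they are indirect indicators and must be interpreted in context.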

- User-centered design approaches focused on ethical considerations and inclusive design: There is growing emphasis on designing systems that are not only usable but also ethical and inclusive, considering the needs and perspectives of all users, including those from diverse backgrounds and abilities.
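Before any AI-based eye-tracking analysis, raw gaze samples are usually reduced to fixations. One widely used classical method is the dispersion-threshold (I-DT) algorithm, which groups consecutive samples that stay within a small spatial area for a minimum duration. The sketch below is that classical algorithm, not an AI method; the sample format, function names, and default thresholds (50 px, 100 ms) are assumptions that would need tuning for a given tracker and viewing distance.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # assumed format: (timestamp_ms, x_px, y_px)


@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    x: float  # centroid of the samples in the fixation
    y: float


def _dispersion(window: Sequence[Sample]) -> float:
    """I-DT dispersion: horizontal spread plus vertical spread of the window."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations_idt(samples: Sequence[Sample], max_dispersion_px: float = 50.0,
                         min_duration_ms: float = 100.0) -> List[Fixation]:
    """Hypothetical I-DT implementation; thresholds are assumed defaults."""
    fixations: List[Fixation] = []
    i, n = 0, len(samples)
    while i < n:
        # Smallest window starting at i that spans at least min_duration_ms.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j >= n:
            break  # not enough data left for a full window
        if _dispersion(samples[i:j + 1]) <= max_dispersion_px:
            # Grow the window while the next sample keeps it within the threshold.
            while j + 1 < n and _dispersion(samples[i:j + 2]) <= max_dispersion_px:
                j += 1
            window = samples[i:j + 1]
            fixations.append(Fixation(
                start_ms=window[0][0], end_ms=window[-1][0],
                x=sum(s[1] for s in window) / len(window),
                y=sum(s[2] for s in window) / len(window)))
            i = j + 1  # continue after the fixation
        else:
            i += 1  # too spread out: drop the first sample and slide the window
    return fixations


# Synthetic 100 Hz gaze stream: a fixation near (100, 100), then one near (400, 300).
gaze = [(10.0 * k, 100.0 + k % 3, 100.0) for k in range(20)]
gaze += [(10.0 * k, 400.0, 300.0 + k % 2) for k in range(20, 40)]
for f in detect_fixations_idt(gaze):
    print(f"{f.start_ms:.0f}-{f.end_ms:.0f} ms at ({f.x:.1f}, {f.y:.1f})")
```

Fixations like these are the usual input to attention measures such as dwell time per area of interest, whether the downstream analysis is statistical or machine-learning based.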

These technologies and approaches are transforming how we conduct human factors research and design user-centered systems. Staying abreast of these developments is critical for maintaining expertise in this dynamic field.
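For the wearable-sensor trend, a common stress- and workload-related index derived from heart data is heart rate variability. The sketch below computes RMSSD (root mean square of successive differences between RR intervals), a standard time-domain HRV measure; lower values during a task than at rest are often read as higher arousal or workload, though that interpretation needs care. The function names and RR values are hypothetical, and real recordings need artifact and ectopic-beat correction, which this sketch omits.

```python
import math
from statistics import mean
from typing import Sequence


def rmssd(rr_intervals_ms: Sequence[float]) -> float:
    """RMSSD in ms: root mean square of successive differences between RR intervals."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [later - earlier for earlier, later in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def mean_heart_rate_bpm(rr_intervals_ms: Sequence[float]) -> float:
    """Mean heart rate implied by the RR intervals (60,000 ms per minute)."""
    return 60_000 / mean(rr_intervals_ms)


# Hypothetical, already-cleaned RR intervals (ms) from a rest baseline and a task.
baseline = [820, 845, 800, 860, 815, 850]
task = [700, 705, 695, 710, 698, 702]
for label, rr in (("baseline", baseline), ("task", task)):
    print(f"{label}: RMSSD {rmssd(rr):.1f} ms, mean HR {mean_heart_rate_bpm(rr):.0f} bpm")
```

Comparing each participant's task values against their own baseline, rather than against other participants, helps control for the large individual differences in resting HRV.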

Key Topics to Learn for Experience in conducting human factors research using qualitative and quantitative methods Interview

- Understanding Human Factors Principles: Review core principles of human factors, including human capabilities and limitations, ergonomics, and usability. Consider the theoretical frameworks that underpin your research approach.

- Qualitative Research Methods: Be prepared to discuss your experience with methods like interviews, focus groups, ethnographic studies, and usability testing. Highlight your ability to analyze qualitative data and draw meaningful conclusions.

- Quantitative Research Methods: Showcase your proficiency in quantitative methods such as surveys, experiments, and statistical analysis. Be ready to explain your choices of statistical tests and interpret results effectively.

- Research Design and Methodology: Demonstrate understanding of different research designs (e.g., experimental, correlational, quasi-experimental) and their application in human factors research. Be able to justify your methodological choices.

- Data Analysis and Interpretation: Explain your experience with both qualitative and quantitative data analysis techniques. Focus on your ability to synthesize findings from diverse data sources and present compelling conclusions.

- Problem-Solving and Critical Thinking: Prepare examples showcasing how you used human factors research to identify and solve problems related to product design, user experience, or workplace safety. Emphasize your analytical skills.

- Communication and Collaboration: Discuss your experience working in teams, presenting research findings, and communicating complex information clearly and concisely to diverse audiences.

- Specific Software & Tools: Mention any statistical software (e.g., SPSS, R), qualitative data analysis software (e.g., NVivo), or usability testing tools you are proficient in.
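The data analysis topics above are easier to discuss if you can explain what a familiar calculation actually does. As a warm-up, here is a minimal Python sketch (standard library only) of two analyses that commonly come up in human factors work, although neither is named in the list itself: Cohen's kappa for agreement between two people coding qualitative data, and Welch's t-test for comparing task times between two designs. The function names and all data are hypothetical.

```python
import math
from collections import Counter
from statistics import mean, variance
from typing import Hashable, Sequence, Tuple


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa: agreement between two coders, corrected for chance."""
    if len(coder_a) != len(coder_b) or len(coder_a) == 0:
        raise ValueError("both coders must label the same non-empty set of excerpts")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: how often the coders would match given their own code frequencies.
    expected = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when both coders use a single identical code")
    return (observed - expected) / (1 - expected)


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom (unequal variances)."""
    se2_a = variance(a) / len(a)  # squared standard error of each group mean
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1))
    return t, df


# Hypothetical data: theme codes from two coders, and task times (s) for two designs.
codes_a = ["navigation", "navigation", "trust", "trust", "security", "navigation"]
codes_b = ["navigation", "trust", "trust", "trust", "security", "navigation"]
print(f"kappa = {cohens_kappa(codes_a, codes_b):.2f}")

times_current = [48.2, 55.1, 61.0, 52.4, 58.7, 49.9]
times_redesign = [41.5, 44.0, 39.8, 47.2, 43.1, 45.6]
t_stat, dof = welch_t(times_current, times_redesign)
print(f"Welch t = {t_stat:.2f}, df = {dof:.1f}")
```

In practice you would get a p-value from a library rather than computing it by hand: in Python, `scipy.stats.ttest_ind(a, b, equal_var=False)` runs the Welch test and `sklearn.metrics.cohen_kappa_score` computes kappa, and in R, `t.test()` applies the Welch correction by default.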

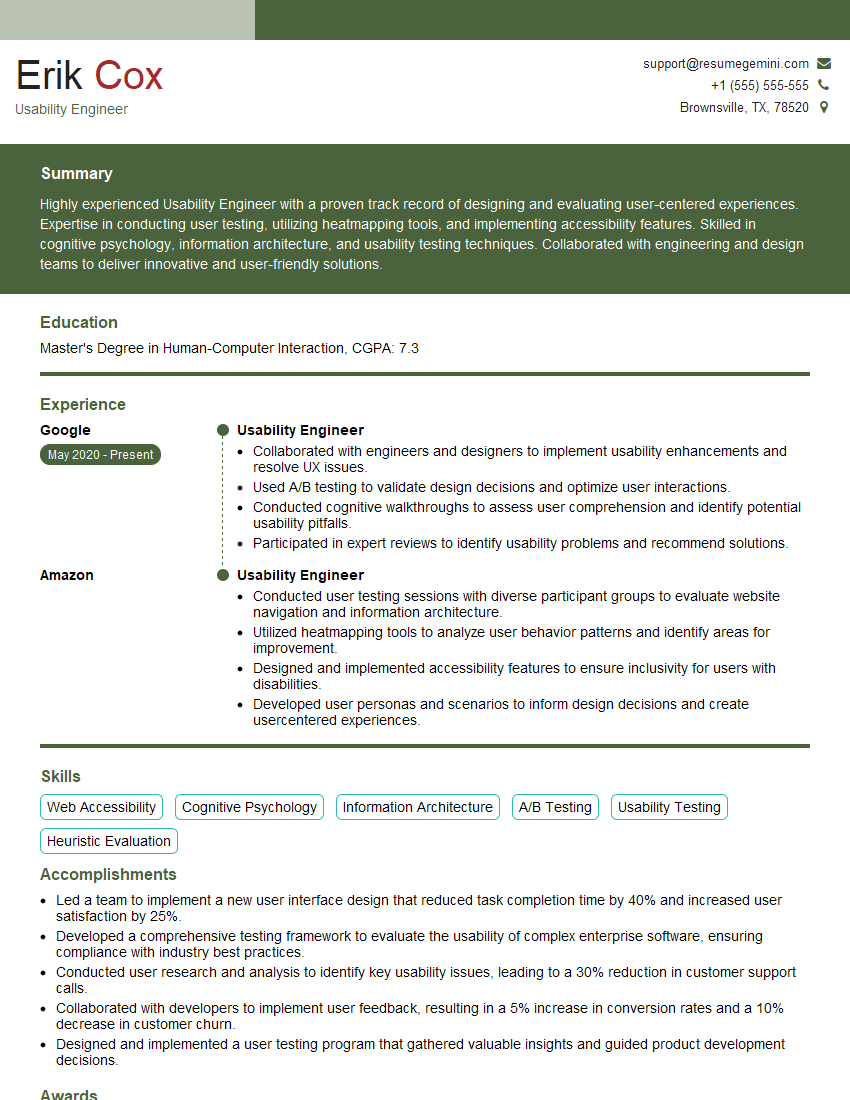

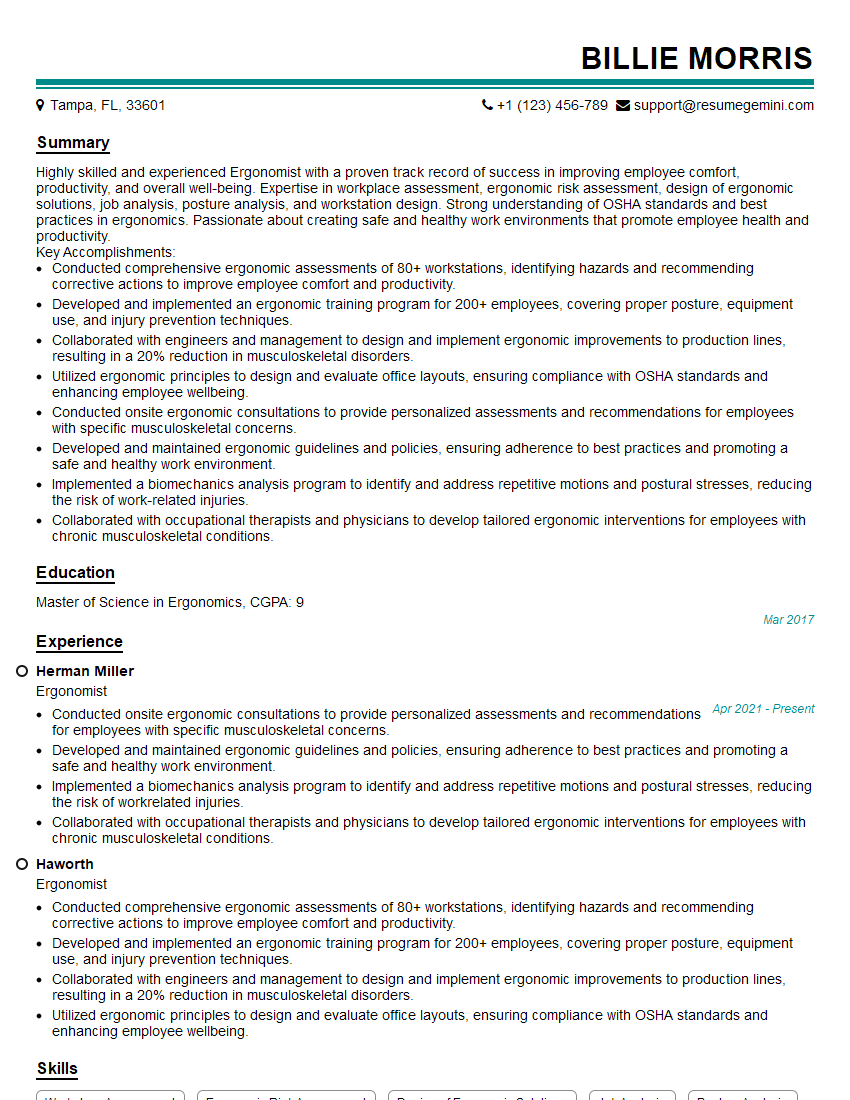

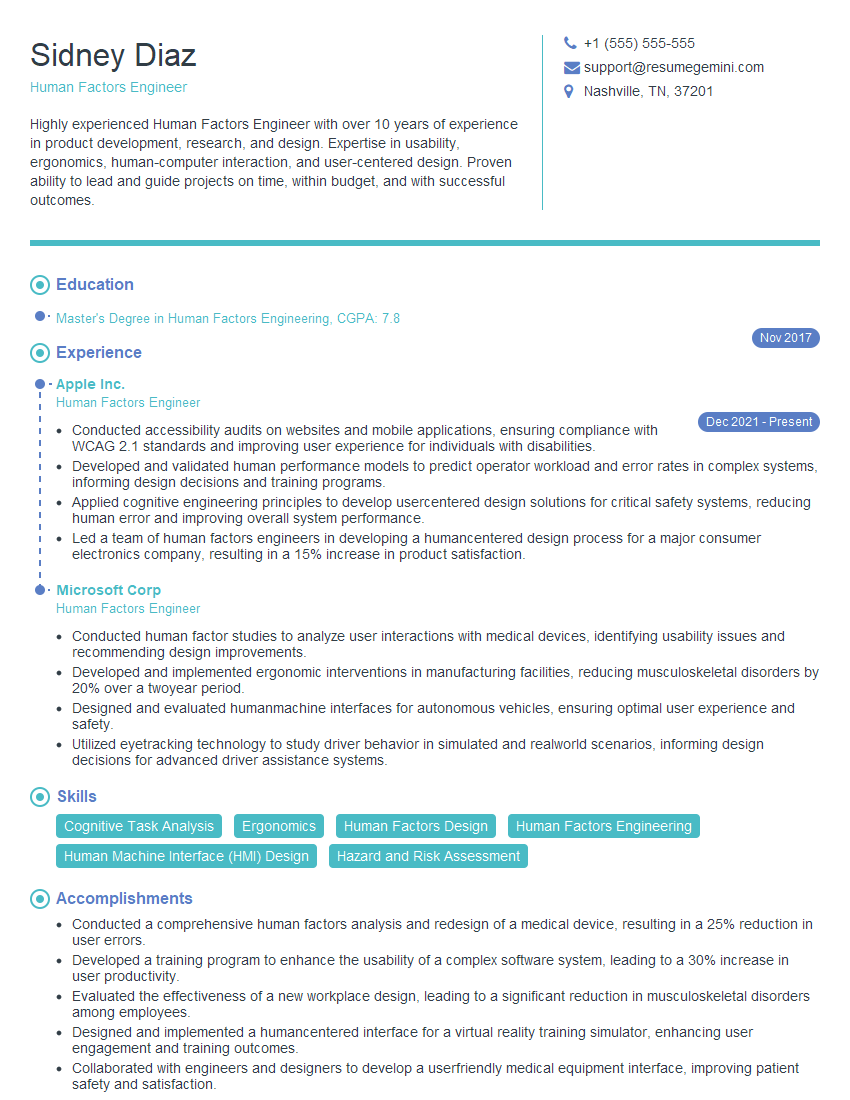

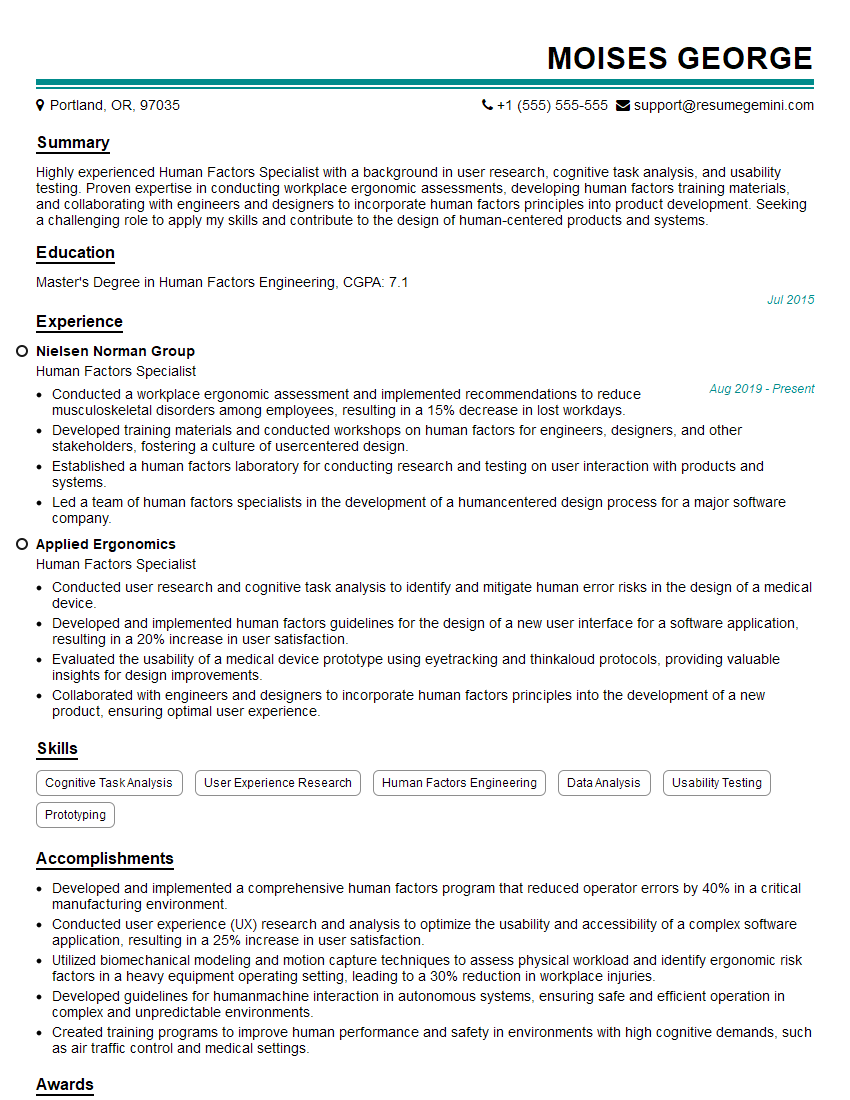

Next Steps

Mastering human factors research using both qualitative and quantitative methods is crucial for career advancement in this field. A strong understanding of these methodologies will significantly enhance your interview performance and job prospects. Creating a resume that works well with applicant tracking systems (ATS) is vital for getting your application noticed. ResumeGemini is a trusted resource that can help you build a professional and impactful resume tailored to your experience. Example resumes tailored to highlight experience in conducting human factors research using qualitative and quantitative methods are available to help you craft a compelling application that showcases your skills effectively.
