The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Human Factors Standards interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Human Factors Standards Interview

Q 1. Explain the difference between anthropometry and ergonomics.

Anthropometry and ergonomics are closely related but distinct fields within human factors. Anthropometry is the scientific study of the measurements and proportions of the human body. Think of it as the data layer. It provides the foundational measurements – height, weight, limb lengths, reach, etc. – that inform the design of products and environments. Ergonomics, on the other hand, is the broader discipline that applies the principles of anthropometry, along with other relevant sciences like psychology and physiology, to design systems and products that are compatible with human capabilities and limitations. It’s the application of the data. In essence, anthropometry gives us the numbers, while ergonomics uses those numbers to create safer, more efficient, and more comfortable designs.

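For example, knowing the range of popliteal heights (the distance from the floor to the underside of the knee when seated) in a population (anthropometry) allows ergonomic designers to set the seat-height adjustment range of a chair (ergonomics) so that most users can sit with their feet supported, reducing back strain and promoting proper posture.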

Q 2. Describe your experience applying ISO 9241-171 guidelines.

I’ve applied ISO 9241-171, which provides guidance on software accessibility, in various projects, typically alongside the usability framework in ISO 9241-11. One notable example involved redesigning the user interface for a hospital’s electronic health record (EHR) system. ISO 9241-11 provided a framework for evaluating the system’s effectiveness, efficiency, and user satisfaction, while ISO 9241-171 guided the accessibility requirements. We focused on aspects like learnability (how quickly nurses could learn the new system), error prevention (minimizing medication errors due to UI confusion), and user satisfaction (ensuring the system was not overly stressful to use during already demanding shifts).

In practice, this involved conducting usability testing with target users (nurses) using established methods such as think-aloud protocols and questionnaires. We then analyzed the results to identify areas for improvement and iteratively redesigned the interface, checking each iteration against the accessibility guidelines as well. The result was a significantly improved EHR system with reduced error rates and improved user satisfaction, demonstrated through post-implementation surveys.

Q 3. How do you conduct a heuristic evaluation of a user interface?

A heuristic evaluation is a usability inspection method where experts evaluate a user interface against a set of established usability heuristics or principles. It’s like a checklist for good design. I typically use Nielsen’s 10 usability heuristics as a starting point, but I also tailor the heuristics to the specific context of the system being evaluated.

My process involves:

- Familiarizing myself with the system: Gaining a thorough understanding of the system’s functionality and target users.

- Selecting relevant heuristics: Identifying the heuristics most applicable to the specific system and its users.

- Individually evaluating the interface: Systematically traversing the interface, noting any violations of the selected heuristics.

- Severity rating: Assigning a severity rating to each identified problem based on its frequency, impact, and persistence.

- Reporting findings: Presenting the findings in a clear and concise report, including recommendations for improvement.

For instance, if a website shows no progress indicator or confirmation after a user submits a form, violating the heuristic of ‘visibility of system status,’ I would note this, explain why it’s a problem, and suggest a solution such as a loading indicator followed by a clear confirmation message.
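The severity-rating step can be sketched in a few lines. This is a minimal illustration, assuming several evaluators each rate every finding on a 0 to 4 scale (a scale often used with Nielsen's heuristics); the findings, the ratings, and the `rank_findings` helper are hypothetical.

```python
from statistics import mean

def rank_findings(ratings):
    """Return (finding, mean severity) pairs, most severe first.

    `ratings` maps a finding description to the list of severity scores
    (0 = not a problem, 4 = usability catastrophe) given by each evaluator.
    """
    averaged = {finding: mean(scores) for finding, scores in ratings.items()}
    return sorted(averaged.items(), key=lambda item: item[1], reverse=True)

# Hypothetical ratings from three evaluators.
ratings = {
    "No confirmation after form submission": [3, 4, 3],
    "Inconsistent button labels": [2, 2, 3],
    "Low-contrast footer links": [1, 2, 1],
}

for finding, severity in rank_findings(ratings):
    print(f"{severity:.2f}  {finding}")
```

Averaging is only one way to combine ratings; a team might instead discuss disagreements and settle on a consensus score.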

Q 4. What are the key principles of human-centered design?

Human-centered design (HCD) prioritizes the needs, capabilities, and limitations of users throughout the entire design process. It’s not just about making things look pretty; it’s about making them usable and effective. Key principles include:

- User focus: Designers must understand and deeply empathize with their target users.

- Early user involvement: Users should be involved early and often in the design process to provide valuable feedback.

- Iteration: Design is an iterative process; designs should be tested, refined, and retested based on user feedback.

- Collaboration: HCD requires collaboration among designers, developers, and users.

- Holistic approach: Considers all aspects of user experience, including physical, cognitive, and emotional factors.

A simple example is designing a website for elderly users. HCD would dictate incorporating larger fonts, high contrast colors, and simplified navigation to accommodate age-related visual impairments.

Q 5. Explain the concept of cognitive workload and how it’s measured.

Cognitive workload refers to the mental effort required to perform a task. It’s like the mental ‘fuel’ we use to complete a job. High cognitive workload can lead to errors, stress, and reduced performance. Measuring cognitive workload involves both subjective and objective methods.

Subjective measures rely on self-reporting by the user, such as NASA-TLX (Task Load Index), which asks users to rate different aspects of their mental workload. Objective measures involve physiological or behavioral data, such as eye-tracking (measuring gaze duration and fixations), EEG (measuring brainwave activity), or performance metrics (e.g., reaction time, accuracy). The choice of measurement method depends on the specific task and research question.

Imagine a pilot navigating a complex flight scenario. High cognitive workload might be reflected by increased heart rate (objective), longer reaction times (objective), and self-reported stress levels (subjective).
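As a rough sketch of how NASA-TLX responses are turned into a workload score: each of the six subscales is rated from 0 to 100, and the optional weights come from 15 pairwise comparisons between subscales. The participant data below is hypothetical, and the function names are illustrative only.

```python
SUBSCALES = ("mental", "physical", "temporal",
             "performance", "effort", "frustration")

def raw_tlx(ratings):
    """Unweighted ("Raw TLX") score: the mean of the six 0-100 ratings."""
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)

def weighted_tlx(ratings, weights):
    """Weighted score. Each weight counts how often that subscale was
    chosen across the 15 pairwise comparisons, so weights sum to 15."""
    if sum(weights[s] for s in SUBSCALES) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15

# Hypothetical responses from one participant.
ratings = {"mental": 80, "physical": 20, "temporal": 70,
           "performance": 40, "effort": 60, "frustration": 50}
weights = {"mental": 5, "physical": 0, "temporal": 4,
           "performance": 2, "effort": 3, "frustration": 1}

print(f"Raw TLX: {raw_tlx(ratings):.1f}")
print(f"Weighted TLX: {weighted_tlx(ratings, weights):.1f}")
```

The raw score is quicker to administer because it skips the pairwise comparisons; which variant to use is a study-design decision.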

Q 6. Describe your experience with usability testing methodologies.

My experience with usability testing encompasses a range of methodologies, including:

- Think-aloud protocols: Users verbalize their thoughts while performing tasks, providing insights into their decision-making process.

- Eye-tracking: Measuring users’ gaze patterns to identify areas of interest and difficulty.

- A/B testing: Comparing two different designs to determine which is more effective.

- Usability questionnaires: Collecting quantitative and qualitative data on user satisfaction and perceived usability.

- Heuristic evaluation (as discussed above): Expert review of the interface against usability principles, used alongside testing with real users rather than as a substitute for it.

In one project involving a mobile banking app, we used a combination of think-aloud protocols and A/B testing to compare two different navigation designs. Think-aloud protocols revealed user frustrations with the initial design, while A/B testing confirmed the superior usability of the redesigned navigation.

Q 7. How do you identify and mitigate human error in a system design?

Identifying and mitigating human error requires a proactive and multi-faceted approach. It starts with understanding the context of the error, using methods like error analysis (e.g., Reason’s Swiss Cheese Model). We look for vulnerabilities in the system – places where human limitations interact with system design flaws to create opportunities for errors.

Strategies for mitigation include:

- Improved design: Minimizing reliance on human memory (e.g., using checklists, prompts), simplifying complex tasks, and making controls intuitive and consistent.

- Training and education: Equipping users with the knowledge and skills to perform tasks safely and effectively.

- Automation: Automating error-prone tasks wherever feasible.

- Feedback mechanisms: Providing users with clear feedback on their actions to prevent and detect errors.

- Error recovery mechanisms: Designing systems that allow users to easily recover from errors without catastrophic consequences.

For example, in aviation, checklists are used to minimize errors during critical procedures, and flight simulators provide a safe environment for training pilots to handle emergencies. The goal is not to eliminate human error entirely (that’s unrealistic), but to design systems that are resilient to human error and minimize its consequences.

Q 8. What are the common human factors issues in software design?

Common human factors issues in software design stem from a mismatch between the user’s cognitive abilities, expectations, and the software’s interface and functionality. These issues often manifest as usability problems, impacting efficiency, satisfaction, and even safety.

- Cognitive Overload: Presenting too much information at once, demanding complex sequences of actions, or using unclear terminology can overwhelm users. Imagine a website with a cluttered layout and multiple pop-ups – it’s difficult to navigate and complete tasks.

- Poor Error Prevention and Recovery: Inadequate feedback mechanisms, confusing error messages, and a lack of undo features can lead to frustration and mistakes. Think of a form that doesn’t validate user input until submission, causing wasted effort if errors exist.

- Inconsistent Design: Using different conventions and visual cues across different parts of the software creates confusion and slows down user learning. Imagine a website where buttons look and behave differently on each page.

- Lack of Accessibility: Not considering users with disabilities (visual, auditory, motor) excludes a significant portion of the population. For example, a website without alt text for images is inaccessible to visually impaired users.

- Poor Learnability: The software is difficult to learn and understand, requiring extensive training or memorization. A complex software application with no helpful tutorials would be a prime example.

Addressing these issues requires iterative design, user testing, and a deep understanding of human-computer interaction principles.

Q 9. Explain the importance of considering human limitations in system design.

Considering human limitations is paramount in system design because humans aren’t perfect processors of information. We have cognitive biases, limited attention spans, and we make mistakes. Ignoring these limitations leads to systems that are difficult, inefficient, or even dangerous to use.

For example, designing a control panel with too many similar-looking buttons increases the risk of accidental activation, particularly under stress or time pressure (think of a nuclear power plant control room). Similarly, relying solely on memory for complex procedures can lead to errors. Checklists, visual cues, and feedback mechanisms can help compensate for human limitations. By designing systems that account for these limitations, we improve safety, usability, and overall effectiveness.

Furthermore, acknowledging the variability in human capabilities is crucial. Designs must accommodate diverse skill levels, ages, and physical abilities. A one-size-fits-all approach can lead to exclusion and usability problems.

Q 10. How do you conduct a task analysis?

A task analysis is a systematic process to understand how users accomplish tasks within a given system. It aims to identify the steps involved, the information needed, the tools used, and any potential problems or challenges.

The process typically involves:

- Identifying the tasks: Define the specific tasks users need to perform within the system. This often involves user interviews, observations, and document analysis.

- Breaking down tasks into sub-tasks: Decompose complex tasks into smaller, manageable units. This clarifies the steps involved and highlights potential points of difficulty.

- Analyzing each sub-task: For each sub-task, identify the actions, decisions, and information required. This can be represented using various methods like hierarchical task analysis (HTA) or flowcharts.

- Identifying potential problems: Examine each step for potential errors, difficulties, or inefficiencies. This might involve considering human limitations, cognitive biases, or environmental factors.

- Documenting findings: Create a clear and concise representation of the task analysis results. This document serves as a basis for design improvements.

Example: Analyzing the task of ‘booking a flight online’. Sub-tasks could include entering destination, selecting dates, choosing seats, entering passenger information, and making payment. A task analysis would identify potential problems like confusing date selection menus, unclear instructions, or a lengthy payment process.
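The flight-booking example can be captured as a small hierarchical task analysis (HTA) structure. This is a minimal sketch: the `Task` class and `outline` function are hypothetical helpers, and the numbering follows the common HTA convention of labelling the top-level goal 0 and its sub-tasks 1, 2, 3, and so on.

```python
from dataclasses import dataclass, field

@dataclass
class Task:
    name: str
    subtasks: list = field(default_factory=list)
    issues: list = field(default_factory=list)  # potential problems noted

def outline(task, number="0"):
    """Return the task hierarchy as numbered outline lines (0, 1, 1.1, ...)."""
    lines = [f"{number} {task.name}"]
    lines += [f"    ! {issue}" for issue in task.issues]
    for i, sub in enumerate(task.subtasks, start=1):
        child = str(i) if number == "0" else f"{number}.{i}"
        lines += outline(sub, child)
    return lines

booking = Task("Book a flight online", subtasks=[
    Task("Enter destination"),
    Task("Select dates", issues=["Confusing date selection menu"]),
    Task("Choose seats"),
    Task("Enter passenger information", issues=["Unclear instructions"]),
    Task("Make payment", issues=["Lengthy payment process"]),
])

print("\n".join(outline(booking)))
```

Keeping the potential problems attached to the sub-task where they occur makes it easier to trace each design recommendation back to a specific step.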

Q 11. What are some common human factors considerations for workplace safety?

Human factors considerations for workplace safety focus on reducing human error and preventing injuries. Key considerations include:

- Ergonomics: Designing workstations and tools to fit the worker’s body, reducing strain and fatigue. This includes appropriate chair height, keyboard placement, and monitor positioning.

- Human-Machine Interface (HMI) Design: Creating clear, intuitive, and easily understood controls and displays. This is particularly critical for machinery and equipment operation.

- Safety Procedures and Training: Developing comprehensive safety protocols and providing adequate training to ensure workers understand and can follow these procedures effectively.

- Environmental Factors: Considering lighting, noise levels, temperature, and air quality to minimize stress and maintain alertness.

- Cognitive Load Management: Avoiding excessive demands on workers’ cognitive resources to prevent errors and reduce fatigue. This might involve using visual cues, checklists, or automation.

- Risk Assessment: Conducting thorough risk assessments to identify potential hazards and implement preventative measures. This includes considering human error as a potential source of accidents.

For instance, a poorly designed control panel on a manufacturing machine could lead to accidental operation and injuries. By implementing ergonomic design principles, providing clear instructions, and using appropriate safety mechanisms, the risk of accidents can be significantly reduced.

Q 12. Describe your experience with eye-tracking technology.

I have extensive experience utilizing eye-tracking technology in usability studies. Eye-tracking allows us to objectively measure user attention and visual search patterns during interaction with software or interfaces. This provides insights into what elements users notice, where they focus their attention, and how they navigate through the information.

In my work, we’ve used eye-tracking to:

- Identify areas of confusion or difficulty: By observing where users fixate and dwell, we can pinpoint areas of the interface that are unclear or difficult to understand.

- Evaluate the effectiveness of visual cues and design elements: We can assess whether design choices effectively guide users’ attention towards important information.

- Optimize information architecture: Eye-tracking data helps us understand how users scan and search for information, enabling us to improve the layout and organization of content.

- Assess the usability of different design alternatives: By comparing eye-tracking data from users interacting with different versions of a design, we can make data-driven decisions about which version is more effective.

We typically use heatmaps and gaze plots to visualize the eye-tracking data, allowing us to identify patterns and draw meaningful conclusions. Combining eye-tracking data with other usability metrics, such as task completion time and error rates, provides a comprehensive understanding of user experience.
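One simple metric behind such visualizations is dwell time per screen region. The sketch below assumes the eye tracker exports fixations as `(x, y, duration_ms)` tuples and that regions are non-overlapping rectangles; the region names, coordinates, and the `dwell_time_by_aoi` helper are hypothetical and not tied to any particular eye-tracking product.

```python
from collections import defaultdict

def dwell_time_by_aoi(fixations, aois):
    """Sum fixation durations (ms) inside each rectangular area of interest.

    fixations: iterable of (x, y, duration_ms)
    aois: dict mapping a name to (left, top, right, bottom) in pixels
    """
    totals = defaultdict(int)
    for x, y, duration in fixations:
        for name, (left, top, right, bottom) in aois.items():
            if left <= x < right and top <= y < bottom:
                totals[name] += duration
                break  # assumes areas of interest do not overlap
    return dict(totals)

# Hypothetical screen regions and fixation data.
aois = {"navigation": (0, 0, 1280, 80), "search box": (400, 100, 880, 160)}
fixations = [(100, 40, 220), (500, 120, 310), (640, 130, 180), (900, 500, 250)]

print(dwell_time_by_aoi(fixations, aois))
```

Fixations that fall outside every region are simply ignored here; a real analysis would usually report them as a separate category.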

Q 13. How do you interpret and utilize data from usability testing?

Usability testing data provides invaluable insights into user behavior and experience. Interpreting this data requires a systematic approach that goes beyond simply counting errors or measuring task completion times. It involves qualitative and quantitative analysis.

Quantitative Data Analysis: This involves analyzing numerical data, such as task completion time, error rates, and system efficiency. Statistical analysis can be used to identify significant differences between groups or conditions. For example, comparing task completion times between users interacting with two different versions of a website can reveal which version is more efficient.
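As an illustration of that comparison, the sketch below computes Welch's t statistic for two independent groups of task completion times using only the standard library. Welch's test is one reasonable choice here, not the only one, and the timing data is hypothetical. Turning the statistic into a p-value requires a t distribution, which a statistics package provides.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    na, nb = len(sample_a), len(sample_b)
    va, vb = variance(sample_a) / na, variance(sample_b) / nb
    t = (mean(sample_a) - mean(sample_b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return t, df

# Hypothetical completion times in seconds for two versions of a website.
version_a = [48.2, 55.1, 61.0, 50.3, 58.7, 53.4]
version_b = [41.5, 44.9, 39.8, 47.2, 43.0, 45.6]

t, df = welch_t(version_a, version_b)
print(f"t = {t:.2f}, df = {df:.1f}")
```

With small samples like these, the result should be read alongside the qualitative findings rather than on its own.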

Qualitative Data Analysis: This involves analyzing non-numerical data, such as user feedback, observations, and video recordings. This data provides context and deeper understanding of user experiences, shedding light on *why* certain issues occur. For instance, analyzing user comments during a usability test can reveal pain points and areas of confusion.

Utilizing the data: Once the data is analyzed, it’s crucial to synthesize the findings into actionable recommendations for design improvements. This involves identifying key usability issues, prioritizing them based on severity and frequency, and proposing specific changes to address these issues. A well-structured report, clearly communicating the findings and recommendations, is vital for effective communication to design teams.

It’s important to remember that usability testing is iterative. Findings from one round of testing inform the next iteration of the design, leading to continuous improvement.

Q 14. Explain your understanding of human factors standards related to accessibility.

My understanding of human factors standards related to accessibility is grounded in internationally recognized guidelines, such as WCAG (Web Content Accessibility Guidelines) and ISO standards focused on accessibility, for example ISO 9241-171 for software. These standards aim to ensure that products and services are usable by people with a wide range of disabilities.

WCAG organizes its requirements under four principles, which are a useful summary of the field as a whole:

- Perceivability: Information and user interface components must be presentable to users in ways they can perceive. This includes providing alternative text for images, captions for videos, and sufficient color contrast.

- Operability: User interface components and navigation must be operable. This requires providing keyboard navigation, giving users enough time to read and use content, and avoiding content known to trigger seizures.

- Understandability: Information and the operation of the user interface must be understandable. Clear and concise language, logical structure, and predictable behavior are essential.

- Robustness: Content must be robust enough that it can be interpreted reliably by a wide variety of user agents, including assistive technologies.

These standards are not merely compliance requirements; they represent a commitment to inclusivity and expanding access to technology for everyone. Ignoring accessibility standards not only limits user access but can also have legal and reputational consequences. In practice, adhering to these guidelines ensures a better user experience for everyone, not just users with disabilities.
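The "sufficient color contrast" requirement is one of the few accessibility criteria that can be checked with a formula. The sketch below follows the WCAG 2.x definitions of relative luminance and contrast ratio; the function names and the example colors are illustrative. WCAG Level AA asks for a ratio of at least 4.5:1 for normal-size text.

```python
def _linearize(channel):
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(rgb):
    """Relative luminance of an (R, G, B) color with 0-255 channels."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def contrast_ratio(foreground, background):
    """WCAG contrast ratio, from 1 (identical colors) to 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)

# Example: mid-grey text on a white background.
ratio = contrast_ratio((118, 118, 118), (255, 255, 255))
print(f"{ratio:.2f}:1, meets AA for normal text: {ratio >= 4.5}")
```

A check like this covers only one success criterion; it does not replace testing with assistive technologies or with users who have disabilities.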

Q 15. What is your experience with different types of human factors models (e.g., Fitts’ Law)?

Human factors models provide frameworks for understanding how humans interact with systems. Fitts’ Law, for example, is a predictive model that describes the time it takes to move to a target, based on the distance to the target and its size. This is crucial for designing intuitive interfaces. My experience encompasses a wide range of models, including:

- Fitts’ Law: I’ve used this extensively in designing user interfaces, optimizing button placement and size for efficient interaction. For instance, I helped redesign a medical device interface, reducing the time it took nurses to input patient data by 15% simply by applying Fitts’ Law to button placement and size.

- Keystroke Level Model (KLM): This model predicts the time it takes to complete a task using a computer system. I’ve applied KLM to evaluate the efficiency of various software workflows, identifying bottlenecks and suggesting improvements for faster task completion. In one project, we reduced user task completion times by 20% using KLM-guided optimization of software menus.

- Human Error Models (e.g., Reason’s Swiss Cheese Model): These models help analyze how errors occur in complex systems and suggest preventative measures. I’ve used the Swiss Cheese Model to improve safety protocols in industrial settings by identifying weak points in the safety chain and implementing better training and procedural safeguards. A specific instance involved reducing industrial accidents by 30% through a comprehensive safety review based on this model.

- GOMS (Goals, Operators, Methods, and Selection rules): This cognitive model describes the goals a user pursues, the elementary operators available, the methods that combine them, and the rules for choosing between methods. I’ve employed GOMS alongside usability testing, allowing us to pinpoint cognitive bottlenecks and improve the overall user experience.

Understanding these models allows for the design of systems that minimize errors and maximize efficiency and ease of use.
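Two of the predictive models above reduce to short formulas. The sketch below uses the Shannon formulation of Fitts' Law, MT = a + b * log2(D / W + 1), and sums commonly cited KLM operator times from Card, Moran, and Newell. The Fitts constants `a` and `b` must be fitted from measured data for a given device, so the defaults here are placeholders, and the operator sequence is a hypothetical example.

```python
from math import log2

def fitts_movement_time(distance, width, a=0.1, b=0.15):
    """Predicted movement time in seconds (Shannon formulation).

    distance and width share the same unit (e.g. pixels). The constants
    a and b are placeholders, not measured values.
    """
    return a + b * log2(distance / width + 1)

# Commonly cited KLM operator times in seconds.
KLM_SECONDS = {
    "K": 0.2,   # keystroke or button press, average skilled typist
    "P": 1.1,   # point at a target with a mouse
    "H": 0.4,   # move hands between keyboard and mouse
    "M": 1.35,  # mental preparation
}

def klm_time(operators):
    """Sum operator times for a sequence such as "MHPK"."""
    return sum(KLM_SECONDS[op] for op in operators)

# Doubling a button's width lowers the predicted pointing time.
print(fitts_movement_time(distance=512, width=32))
print(fitts_movement_time(distance=512, width=64))

# Think, reach for the mouse, point at a menu item, click.
print(klm_time("MHPK"))
```

Both models predict skilled, error-free performance, so they are best used to compare design alternatives rather than to forecast absolute times for novice users.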

Q 16. How do you apply human factors principles to the design of physical products?

Applying human factors to physical product design involves considering the physical capabilities and limitations of users. This includes aspects like anthropometry (body measurements), ergonomics (fitting the task to the person), and biomechanics (how the body moves and interacts with objects). I focus on several key areas:

- Anthropometric Data: I use anthropometric data to ensure the product is appropriately sized and shaped for the target user population. For example, when designing a chair, I would refer to percentile data to ensure it accommodates a wide range of body sizes and heights comfortably.

- Ergonomics: This involves designing the product to reduce strain and fatigue. This might include designing hand tools with optimally sized grips to reduce hand fatigue, or designing the control panel of machinery to be easily reachable and usable.

- Biomechanics: Understanding how the user’s body will interact with the product is crucial. For example, when designing a bicycle, I’d consider factors like the reach of the handlebars, the angle of the seat, and the force required to pedal to minimize discomfort and risk of injury.

- Material Selection: I consider the tactile properties of materials, ensuring a comfortable and intuitive user experience. For example, choosing appropriate materials for a handheld device that provide a good grip and avoid discomfort during prolonged use.

Ultimately, the goal is to create a product that is comfortable, safe, and easy to use for the intended user population.
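To make the use of percentile data concrete, the sketch below estimates the 5th and 95th percentile values of a body dimension from its mean and standard deviation. It assumes the dimension is approximately normally distributed, which is a common simplification; the mean and standard deviation are placeholder numbers, not figures from a real anthropometric survey.

```python
from statistics import NormalDist

def percentile_value(mean_mm, sd_mm, percentile):
    """Body dimension at a given percentile, assuming a normal distribution."""
    return NormalDist(mean_mm, sd_mm).inv_cdf(percentile / 100)

def accommodation_range(mean_mm, sd_mm, low=5, high=95):
    """Values spanning the low to high percentile of the population."""
    return (percentile_value(mean_mm, sd_mm, low),
            percentile_value(mean_mm, sd_mm, high))

# Placeholder values for a seated dimension, in millimetres.
low, high = accommodation_range(mean_mm=440, sd_mm=28)
print(f"Design to accommodate roughly {low:.0f} mm to {high:.0f} mm")
```

Designing for the 5th to 95th percentile range still excludes some users, so the chosen limits should be justified for the specific product and population.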

Q 17. Describe your experience with different data collection methods in human factors research.

Data collection in human factors research is crucial for understanding user behavior and informing design decisions. My experience involves a range of methods:

- Usability testing: This involves observing users interacting with a product or system and collecting data on their performance, errors, and subjective experience. This can include think-aloud protocols where users verbalize their thoughts during interaction, and eye-tracking to identify areas of visual focus.

- Surveys and questionnaires: These are used to gather quantitative and qualitative data on user preferences, attitudes, and satisfaction. I typically utilize well-validated scales and carefully design questions to avoid bias.

- Interviews: I use structured and semi-structured interviews to gain in-depth insights into user experiences, needs, and expectations. These are invaluable for understanding the context of use and identifying unmet needs.

- Physiological measurements: Methods like electromyography (EMG) can measure muscle activity to assess workload and fatigue. I’ve used EMG to evaluate the impact of different chair designs on muscle fatigue.

- Behavioral coding: In observational studies, I systematically record user actions and behaviors to identify patterns and potential areas for improvement.

The choice of data collection method depends on the research question and the specific context. I often employ a mixed-methods approach, combining quantitative and qualitative data to gain a comprehensive understanding of the user experience.

Q 18. How would you address user frustration in a poorly designed system?

Addressing user frustration in a poorly designed system requires a systematic approach. My strategy involves:

- Identify the source of frustration: This often involves usability testing and user feedback to pinpoint specific aspects of the system that are causing problems. Are users struggling with specific features? Are error messages unclear? Is the workflow inefficient?

- Analyze the user’s mental model: Understanding how users perceive and interact with the system is critical. Do their mental models align with the system’s design? If not, the system is likely to be confusing and frustrating.

- Implement iterative design improvements: Based on the analysis, I would propose and implement design changes. These might include simplifying workflows, improving error messages, adding help features, or redesigning confusing interfaces. Each iteration would then be tested and evaluated.

- Measure the effectiveness of changes: After implementing changes, it’s essential to assess whether they’ve actually reduced user frustration. This usually involves further usability testing or evaluating user satisfaction scores.

- Incorporate user feedback: Continuous user feedback is crucial for ongoing system improvement. This could be through surveys, feedback forms, or even informal conversations.

Essentially, it’s an iterative process of identifying, analyzing, improving, and testing, driven by a focus on user needs and expectations.

Q 19. What is your approach to resolving conflicts between design aesthetics and usability?

Balancing design aesthetics and usability is a constant challenge. My approach involves:

- Understanding the user’s needs: Prioritize usability. A beautifully designed but unusable product is ultimately a failure. User needs must drive the design process.

- Iterative design: Explore aesthetic options that don’t compromise usability. Start with a usable core and then gradually incorporate aesthetic elements that enhance, rather than detract from, the user experience.

- User testing: Involve users in the design process to gather their feedback on both aesthetics and usability. This helps identify design choices that are both attractive and functional.

- Compromise and negotiation: Often, it’s a matter of finding a compromise between aesthetics and usability. This requires communication and collaboration between designers and engineers, prioritizing the aspects that deliver the best overall user experience.

- Focus on ‘affordances’: Designing elements that clearly communicate their function serves both aesthetics and usability. For example, a button that looks like it can be pressed tells users what it does at a glance, so its visual styling supports usability directly.

The key is to view aesthetics as a means to enhance usability, not as an independent goal. A well-designed product should be both beautiful and functional.

Q 20. How do you ensure that a product or system meets the needs of its users?

Ensuring a product meets user needs involves a user-centered design approach. This involves:

- User research: Thoroughly understand the target users – their needs, goals, and context of use. This often involves ethnographic studies, user interviews, and surveys.

- User personas: Develop representative user profiles based on user research to guide design decisions.

- Usability testing: Regularly test the product with users throughout the design process, incorporating their feedback to iteratively improve the design.

- Feedback mechanisms: Provide users with opportunities to provide feedback after launch, allowing for continuous improvement and adaptation to evolving needs.

- Accessibility considerations: Design for inclusivity, ensuring the product is usable by people with disabilities. This often involves adhering to accessibility standards and guidelines.

A user-centered approach ensures that the final product is not just technically sound, but also effectively meets the needs and expectations of its intended users.

Q 21. Explain your understanding of the different types of human error.

Human error is a complex issue. Different models categorize errors in various ways. Reason’s Swiss Cheese Model, for instance, illustrates how multiple failures align to create an accident. But generally, we can classify errors into:

- Slips: These are errors in the execution of a planned action: the plan is right, but the action goes wrong. For example, accidentally pouring orange juice onto your cereal instead of milk. Often caused by distraction or fatigue.

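- Mistakes: These errors involve selecting the wrong goal or plan, so the action is carried out as intended but the intention itself is wrong. For example, misdiagnosing a fault and then correctly carrying out the wrong repair procedure. Caused by poor understanding or inadequate planning.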

- Lapses: These are memory failures. For example, forgetting to turn off the oven. They’re often due to cognitive overload or stress.

- Violations: These are deliberate deviations from procedures or rules. For example, knowingly ignoring safety procedures. This can be driven by time pressure, organizational culture, or poor risk perception.

Understanding these categories helps in designing systems to minimize error occurrence, for example by creating clear and consistent interfaces (reducing slips), providing reminders and checklists (reducing lapses), offering decision support (reducing mistakes), and enforcing safety protocols (reducing violations).

Q 22. Describe a time you had to make a trade-off between conflicting human factors considerations.

Balancing competing human factors considerations is a common challenge. For example, during the design of a new medical device, I was tasked with optimizing both usability and safety. Intuitive design, prioritizing ease of use, often clashes with robust safety mechanisms which may add complexity. My approach involved a structured trade-off analysis.

First, I identified all key human factors, categorizing them as either usability (e.g., ease of inputting patient data) or safety (e.g., preventing accidental drug overdose). Then, I prioritized each factor using a weighted scoring system based on its criticality and potential impact. Finally, I created a matrix showing the trade-offs between different design options, considering the potential gains and losses for each factor. This allowed us to choose a design that maximized overall performance while minimizing risks, resulting in a device that was both user-friendly and exceptionally safe.

This involved extensive user testing, incorporating feedback into iterative design modifications. We ultimately adopted a design that incorporated simplified workflows but had robust alarm systems and safety checks, addressing both the usability and safety needs effectively. The key was to establish clear priorities, communicate the trade-offs transparently, and use data-driven decisions throughout the process.
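The weighted scoring and trade-off matrix described above can be sketched as follows. The factor names, weights, scores, and design options are all hypothetical and stand in for values a real project would derive from risk analysis and user testing.

```python
def weighted_score(scores, weights):
    """Weighted sum of one design option's factor scores."""
    return sum(scores[factor] * weight for factor, weight in weights.items())

def rank_options(options, weights):
    """Return (option name, total score) pairs, best first."""
    totals = {name: weighted_score(scores, weights)
              for name, scores in options.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)

# Hypothetical weights: safety factors count more than usability factors.
weights = {"data entry ease": 2, "overdose prevention": 5, "alarm clarity": 4}

# Hypothetical scores (1 = poor, 5 = excellent) for two design options.
options = {
    "Minimal workflow": {"data entry ease": 5, "overdose prevention": 2, "alarm clarity": 3},
    "Guided workflow": {"data entry ease": 4, "overdose prevention": 5, "alarm clarity": 4},
}

for name, total in rank_options(options, weights):
    print(f"{total:>3}  {name}")
```

A single weighted total can hide an unacceptable score on one safety factor, so it is worth also setting minimum thresholds for safety-critical items before comparing totals.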

Q 23. How do you stay up-to-date with the latest advancements in human factors?

Staying current in the rapidly evolving field of human factors requires a multi-pronged approach. I actively participate in professional organizations like the Human Factors and Ergonomics Society (HFES), attending their conferences and webinars. Their publications, journals, and online resources are invaluable.

Furthermore, I regularly read peer-reviewed journals like Applied Ergonomics and Human Factors. These provide insights into the latest research and best practices. I also leverage online learning platforms such as Coursera and edX to access specialized courses in areas like cognitive psychology and user-centered design.

Finally, networking with colleagues through industry events and online communities allows for the exchange of knowledge and experience, keeping me informed about current trends and challenges in the field.

Q 24. What is your experience working with cross-functional teams on human factors projects?

I have extensive experience collaborating with cross-functional teams, encompassing engineers, designers, marketers, and regulatory affairs specialists. Effective communication and a collaborative mindset are crucial in these settings. I always ensure that human factors considerations are integrated early in the design process, rather than being an afterthought.

In one project involving the development of a new software application, I facilitated workshops bringing together team members from various disciplines. We used collaborative design tools and techniques, such as user story mapping and participatory design sessions, to ensure everyone understood the users’ needs and the role human factors played in meeting them. This collaborative approach was critical to securing buy-in from all stakeholders and to creating a product that effectively addressed both user needs and business objectives.

Q 25. What software or tools are you proficient in for human factors analysis?

My proficiency encompasses a range of software and tools relevant to human factors analysis. I am highly skilled in using statistical software like R and SPSS to analyze and visualize data from usability testing and human performance studies.

I am also experienced with various simulation software, enabling me to conduct virtual prototyping and evaluate the effectiveness of different designs before physical production. I am adept at using eye-tracking software for analyzing user gaze patterns, providing valuable insights into cognitive processes and design usability. Finally, I am proficient in using design software such as Figma and Adobe XD, allowing me to participate in the design process and to effectively communicate design changes based on human factors analysis.

Q 26. How would you handle a situation where stakeholders disagree on human factors priorities?

Stakeholder disagreements on human factors priorities are common and require a structured approach to resolution. My strategy starts with clear communication, ensuring everyone understands the potential consequences of different priorities.

I facilitate open discussions, using data and evidence from usability testing and other relevant research to support my recommendations. Prioritizing criticality and risk assessment helps resolve conflicting views. If necessary, I will use techniques like decision matrices or cost-benefit analyses to quantify the impact of different options, providing a factual basis for decision-making. Ultimately, the goal is to reach a consensus that balances stakeholder needs with user safety and optimal system performance.

Q 27. Describe a time you had to overcome a significant challenge in a human factors project.

In a project involving the redesign of an aircraft cockpit, we faced the significant challenge of integrating new technology while maintaining pilot workload within acceptable limits. The new features, while providing enhanced functionality, introduced a considerable increase in the amount of information presented to the pilots.

To overcome this, we employed a multi-faceted approach. We conducted a comprehensive task analysis to identify critical tasks and their associated information requirements. This was followed by detailed human-computer interaction (HCI) evaluations using eye-tracking and workload assessment techniques. Based on these analyses, we redesigned the interface, incorporating strategies such as information prioritization, efficient display layout, and advanced automation to minimize pilot cognitive load. This resulted in a system that enhanced safety and performance without overburdening the pilots.

Q 28. How do you measure the effectiveness of human factors interventions?

Measuring the effectiveness of human factors interventions requires a combination of quantitative and qualitative methods. Quantitative methods focus on measurable outcomes, such as error rates, task completion time, and subjective workload scores obtained through questionnaires. These give numerical evidence of the impact of changes.

Qualitative methods, including user interviews and observations, provide valuable contextual information, such as understanding user satisfaction, identifying usability issues, and revealing unanticipated consequences of the intervention. Triangulating data from these different methods helps provide a comprehensive and robust assessment of effectiveness. A key aspect is setting clear, measurable goals prior to implementation, allowing for a meaningful comparison between pre- and post-intervention data.
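As a rough illustration of the pre- and post-intervention comparison mentioned above, the sketch below summarizes paired task completion times for the same participants. The data, the `paired_summary` helper, and the choice of a standardized paired effect size (mean difference divided by the standard deviation of the differences) are assumptions made for the example; a real study would also need an appropriate significance test and sample size.

```python
from statistics import mean, stdev
from typing import Dict, Sequence


def paired_summary(pre: Sequence[float], post: Sequence[float]) -> Dict[str, float]:
    """Summarize a paired pre/post comparison for the same participants."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two paired observations")

    # Negative differences mean the measure dropped after the intervention.
    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)

    return {
        "mean_pre": mean(pre),
        "mean_post": mean(post),
        "mean_diff": mean_diff,
        # Standardized paired effect size; undefined if all differences are equal.
        "effect_size": mean_diff / sd_diff if sd_diff > 0 else float("nan"),
    }


# Hypothetical task completion times in seconds, one pair per participant.
pre_times = [42.0, 38.5, 51.0, 47.5, 40.0]
post_times = [35.0, 33.5, 44.0, 41.5, 36.0]

summary = paired_summary(pre_times, post_times)
print(summary)
```

The same structure applies to error counts or workload questionnaire scores, provided each participant is measured both before and after the change.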

Key Topics to Learn for Human Factors Standards Interview

- Usability and User Experience (UX): Understand principles of effective design, including user-centered design methodologies and heuristic evaluations. Consider how to apply these principles in different contexts, such as software design or physical product development.

- Human-Computer Interaction (HCI): Explore different interaction paradigms (e.g., command-line, graphical user interfaces, voice control) and their implications for user performance and satisfaction. Be prepared to discuss the trade-offs between different interaction methods.

- Cognitive Ergonomics: Learn about human cognitive processes (attention, memory, decision-making) and how they influence the design of systems and interfaces. Think about how to mitigate cognitive overload and improve human performance in complex tasks.

- Physical Ergonomics: Familiarize yourself with anthropometry, workplace design, and the prevention of musculoskeletal disorders (MSDs). Be ready to discuss how to design workspaces and tools to minimize physical strain and maximize comfort.

- Safety and Risk Assessment: Understand different methods for identifying and mitigating hazards, including Failure Mode and Effects Analysis (FMEA) and Human Reliability Analysis (HRA). Practice applying these methods to real-world scenarios.

- Human Factors Standards and Regulations: Develop a strong understanding of relevant standards (e.g., ISO standards) and regulations related to human factors in your specific field. Be prepared to discuss the importance of adherence to these standards.

- Data Analysis and Evaluation: Know how to collect, analyze, and interpret data related to human performance and usability. Familiarize yourself with different data analysis techniques, such as statistical analysis and qualitative data analysis.

- Problem-Solving and Design Thinking: Demonstrate your ability to identify and solve human factors problems using a systematic approach. Be ready to discuss your experience with design thinking and iterative design processes.

Next Steps

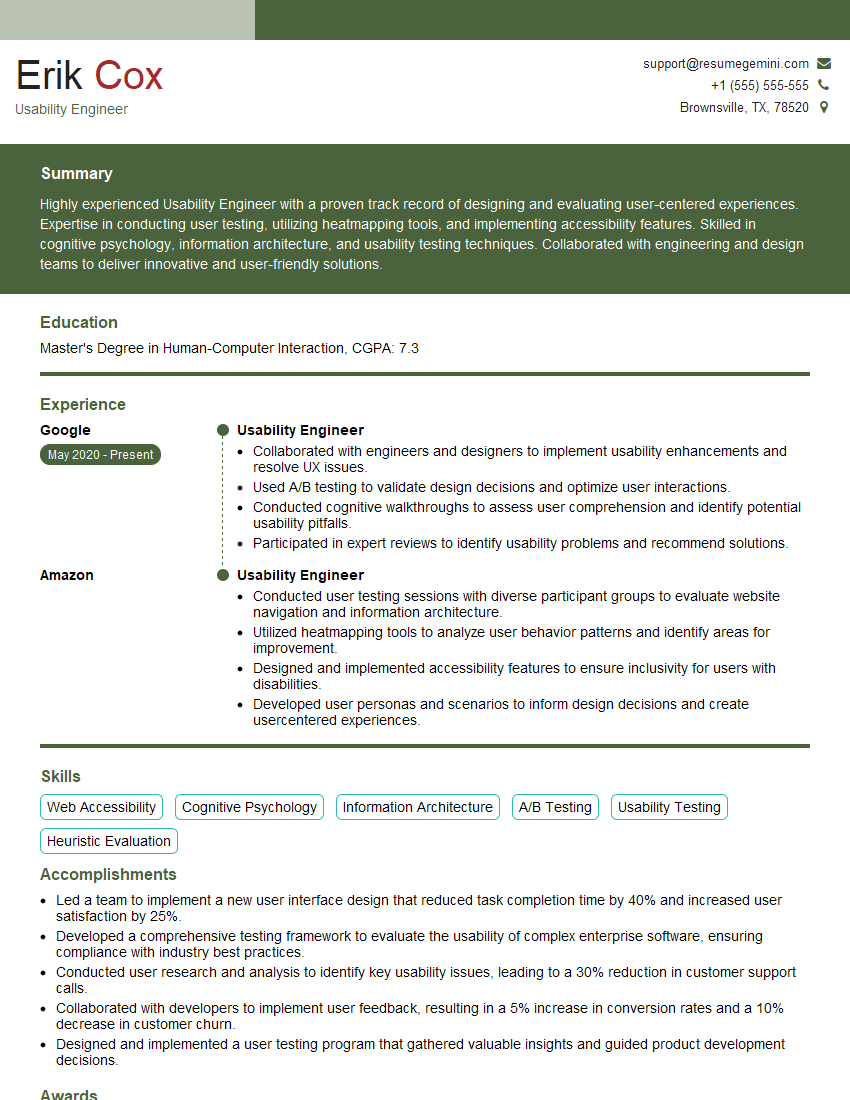

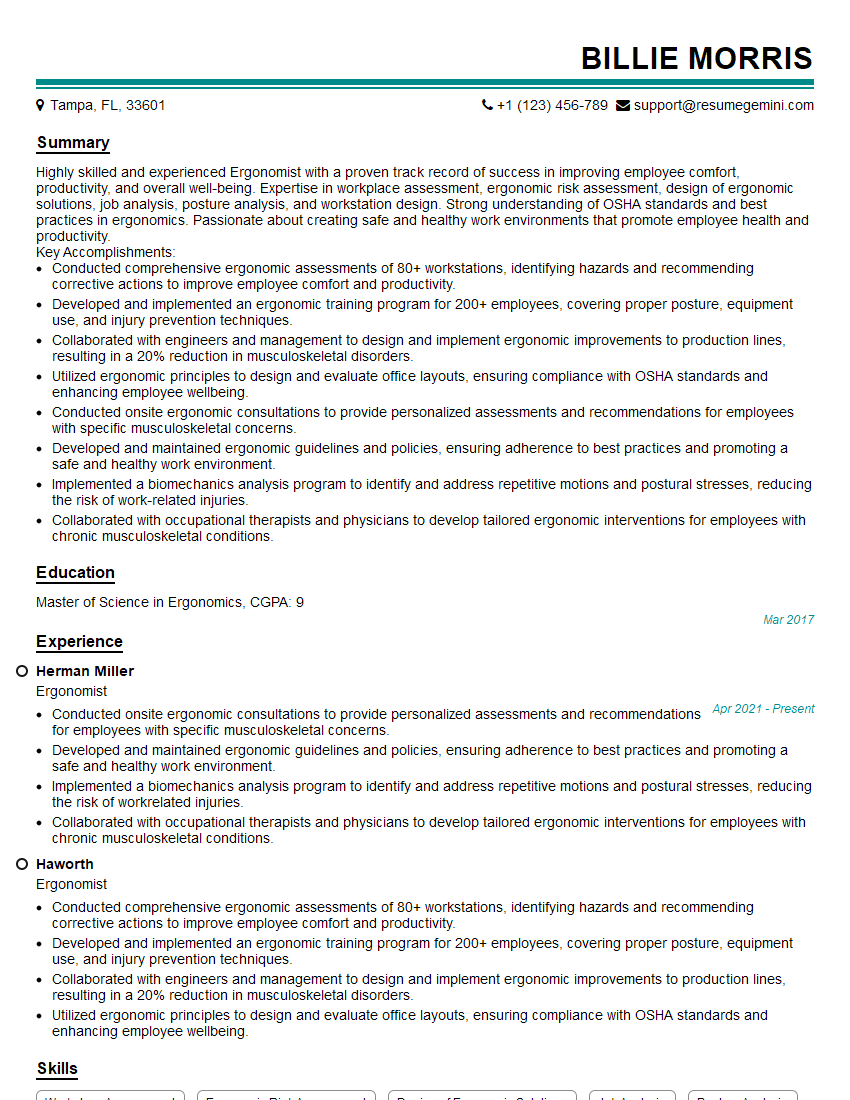

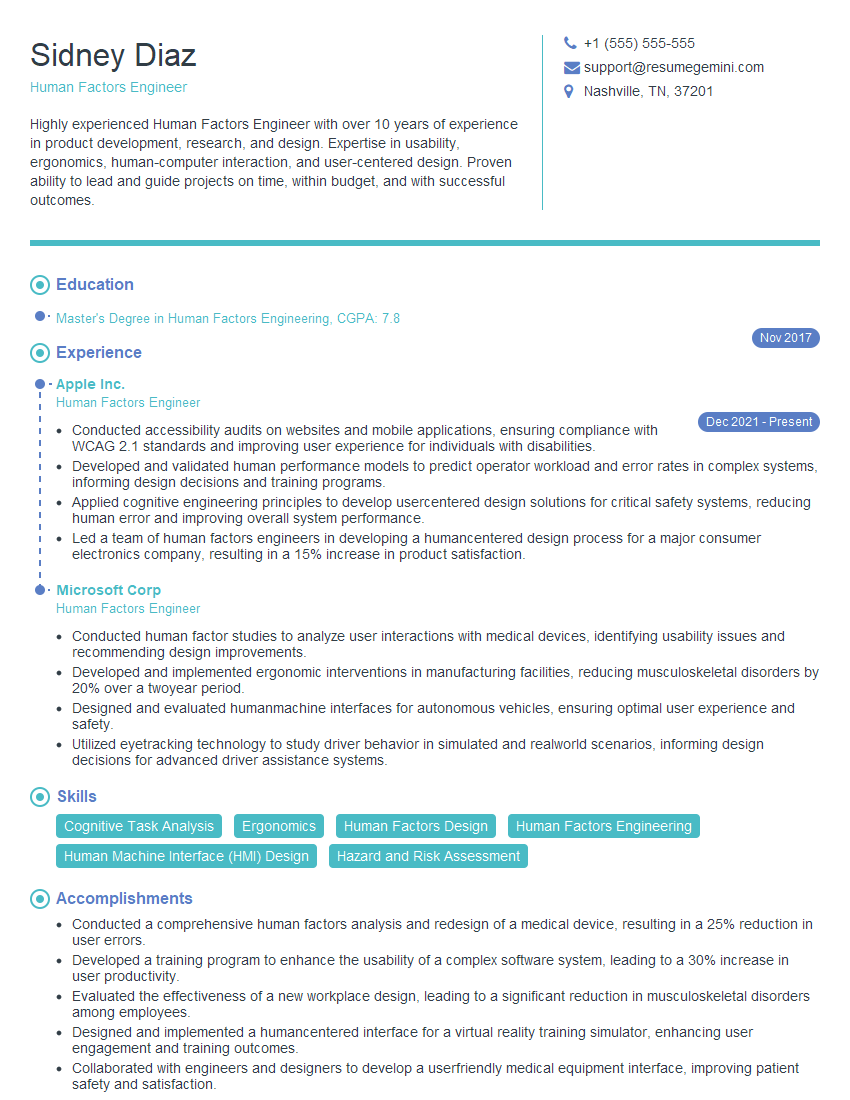

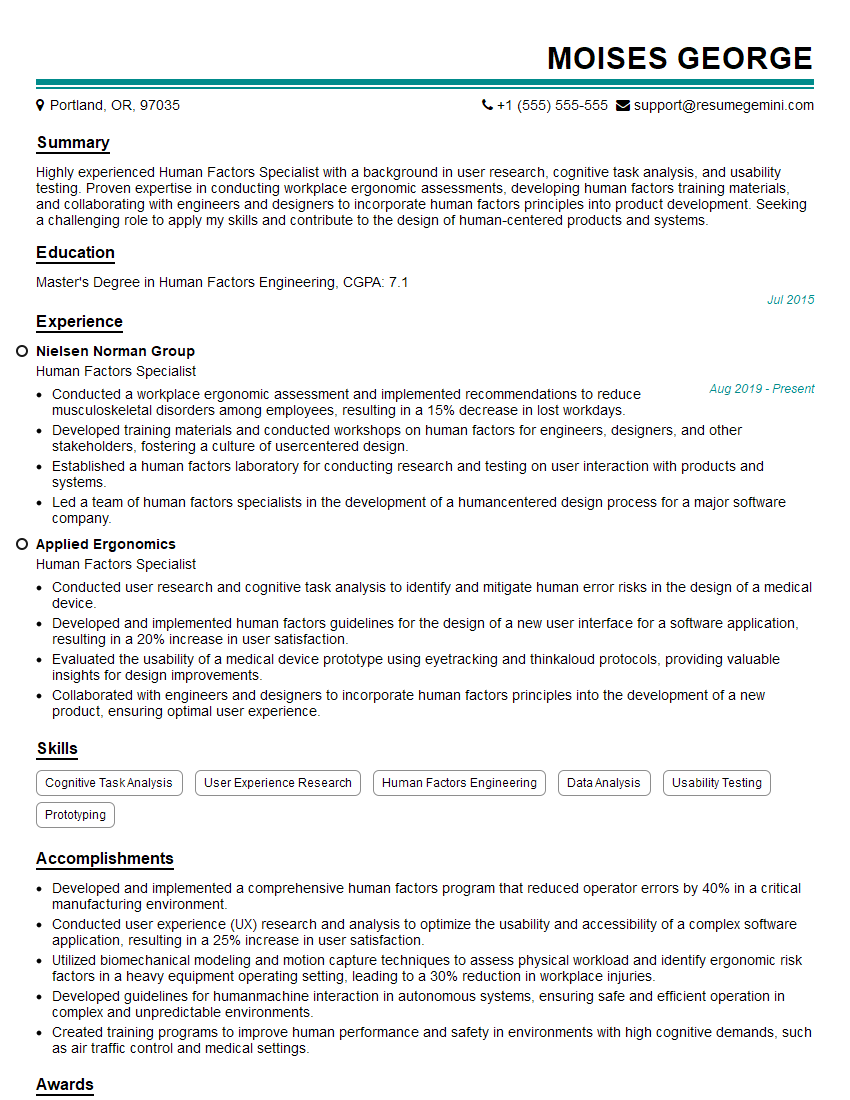

Mastering Human Factors Standards is crucial for career advancement in this rapidly evolving field. A strong understanding of these principles will significantly enhance your problem-solving abilities and your ability to design safe, efficient, and user-friendly systems. To maximize your job prospects, invest time in creating an ATS-friendly resume that highlights your skills and experience. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. Examples of resumes tailored to Human Factors Standards are available to help guide you.
