The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Usability Guidelines interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Usability Guidelines Interview

Q 1. Explain Nielsen’s 10 Usability Heuristics and provide an example of how you’ve applied them.

Nielsen’s 10 Usability Heuristics are widely accepted principles for designing user-friendly interfaces. They provide a framework for evaluating the usability of a system and identifying areas for improvement. They are:

- Visibility of system status: The system should always keep users informed about what is going on, through appropriate feedback within reasonable time.

- Match between system and the real world: The system should speak the users’ language, with words, phrases and concepts familiar to the user, rather than system-oriented terms. Follow real-world conventions, making information appear in a natural and logical order.

- User control and freedom: Users often choose system functions by mistake and will need a clearly marked “emergency exit” to leave the unwanted state without having to go through an extended dialogue. Support undo and redo.

- Consistency and standards: Users should not have to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions.

- Error prevention: Even better than good error messages is a careful design which prevents a problem from occurring in the first place. Either eliminate error-prone conditions or check for them and present users with a confirmation option before they commit to the action.

- Recognition rather than recall: Minimize the user’s memory load by making objects, actions, and options visible. The user should not have to remember information from one part of the dialogue to another. Instructions for use of the system should be visible or easily retrievable whenever appropriate.

- Flexibility and efficiency of use: Accelerators — unseen by the novice user — may often speed up the interaction for the expert user such that the system can cater to both inexperienced and experienced users. Allow users to tailor frequent actions.

- Aesthetic and minimalist design: Dialogues should not contain information which is irrelevant or rarely needed. Every extra unit of information in a dialogue competes with the relevant units of information and diminishes their relative visibility.

- Help users recognize, diagnose, and recover from errors: Error messages should be expressed in plain language (no codes), precisely indicate the problem, and constructively suggest a solution.

- Help and documentation: Even though it is better if the system can be used without documentation, it may be necessary to provide help and documentation. Any such information should be easy to search, focused on the user’s task, list concrete steps to be carried out, and not be too large.

Example: During the design of a new e-commerce checkout process, I applied these heuristics. For ‘Visibility of system status,’ a progress bar was implemented to show the user’s stage in the checkout. For ‘Error prevention,’ a confirmation screen was added before final order submission. For ‘Consistency and standards,’ the checkout process mirrored common online shopping patterns.

Q 2. Describe the difference between usability testing and user research.

While both usability testing and user research aim to improve the user experience, they differ significantly in their scope and methods. User research is a broader field encompassing various methods to understand users, their needs, and their context. This might include surveys, interviews, ethnographic studies, competitive analysis, and more. It aims to provide a deep understanding of the user’s background and motivations. Usability testing, on the other hand, is a specific type of user research focused on evaluating the ease of use and efficiency of a product or system. It directly observes users interacting with a prototype or final product to identify usability issues.

Think of it this way: user research helps you understand *why* users do what they do, while usability testing helps you understand *how well* they can do it with your design.

Q 3. What methods do you use to gather user feedback during usability testing?

Gathering user feedback during usability testing involves a combination of methods to get a holistic view. I typically use:

- Think-aloud protocols: Users verbalize their thoughts and actions while interacting with the system. This provides invaluable insights into their decision-making process.

- Post-task interviews: Structured or semi-structured interviews conducted after completing specific tasks. These help to understand the user’s overall experience and identify areas of frustration or confusion.

- Observation: Passive observation helps to identify nonverbal cues like hesitation or frustration. This can highlight problems that users may not explicitly mention.

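- Heuristic evaluation: Experts evaluate the interface against established usability guidelines (like Nielsen’s heuristics). Strictly speaking this is an expert review rather than user feedback, but it complements testing by catching issues before or between sessions.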

- Questionnaires/Surveys: These are useful for gathering quantitative data and broader feedback on satisfaction and ease of use. They are often used before and after testing sessions.

- Screen recordings and eye-tracking: Technology-assisted methods that give detailed insights into user behavior and attention patterns.

The specific combination of methods depends on the project’s goals, budget, and timeline.

Q 4. How do you prioritize usability issues based on severity and impact?

Prioritizing usability issues involves assessing both their severity (how badly the problem affects a user who encounters it) and their frequency (how many users run into it, and how often). I often use a severity and frequency matrix. Severity can range from (1) minor inconvenience to (4) critical blocking issue, and frequency from (1) rare to (4) very frequent. Multiplying severity by frequency gives a priority score between 1 and 16. For example:

- Severity 4, Frequency 4 = Priority 16 (highest priority)

- Severity 2, Frequency 1 = Priority 2 (low priority)

This gives a consistent, evidence-based way to rank issues: those with the highest priority scores should be addressed first. Severity and frequency ratings are based on observations from usability testing and on user feedback.
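
A minimal Python sketch of this scoring, assuming the 1-4 scales above. The `Issue` class, the `prioritize` function, and the example issues are hypothetical illustrations, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    title: str
    severity: int   # 1 = minor inconvenience ... 4 = critical blocking issue
    frequency: int  # 1 = rare ... 4 = very frequent

    @property
    def priority(self) -> int:
        # Product of the two ratings, so scores range from 1 to 16.
        return self.severity * self.frequency


def prioritize(issues):
    """Return the issues sorted from highest to lowest priority score."""
    for issue in issues:
        if not (1 <= issue.severity <= 4 and 1 <= issue.frequency <= 4):
            raise ValueError(f"ratings out of range for {issue.title!r}")
    return sorted(issues, key=lambda i: i.priority, reverse=True)


# Hypothetical findings from a test round.
issues = [
    Issue("Tooltip text truncated", severity=2, frequency=1),
    Issue("Payment button hidden below fold", severity=4, frequency=4),
    Issue("Ambiguous shipping label", severity=3, frequency=2),
]
for issue in prioritize(issues):
    print(issue.priority, issue.title)
```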

Q 5. Explain your process for conducting a heuristic evaluation.

My process for conducting a heuristic evaluation involves these steps:

- Define the scope: Clearly specify the system components to be evaluated.

- Select evaluators: Choose at least 3 usability experts familiar with the target user group and the applicable heuristics. Diverse perspectives are key.

- Explain the evaluation criteria: Clearly communicate the goals and the heuristics that will guide the evaluation.

- Independent evaluations: Evaluators should conduct their assessments independently to avoid bias.

- Severity rating: Each evaluator assigns a severity rating to each identified problem based on impact and frequency (similar to the matrix mentioned earlier).

- Consolidation and discussion: The evaluators meet to discuss their findings, compare severity ratings, and agree on a final list of issues.

- Prioritization: The usability issues are prioritized based on their severity and frequency.

- Report generation: A report summarizes the findings, including identified usability issues, severity ratings, and recommendations for improvements.
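
A small script can support the consolidation step. This sketch assumes that each evaluator submits a mapping from a shared issue ID to a 1-4 severity rating. The IDs, ratings, and disagreement threshold are hypothetical. For each issue, the script reports how many evaluators found it independently, its mean severity, and whether the ratings are far enough apart to need discussion.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical independent findings: evaluator -> {issue_id: severity (1-4)}.
findings = {
    "evaluator_a": {"no-undo-on-delete": 4, "jargon-in-errors": 2},
    "evaluator_b": {"no-undo-on-delete": 3, "inconsistent-icons": 2},
    "evaluator_c": {"no-undo-on-delete": 4, "jargon-in-errors": 4},
}


def consolidate(findings, disagreement_threshold=2):
    """Merge the evaluators' lists into one summary row per issue."""
    ratings = defaultdict(list)
    for per_evaluator in findings.values():
        for issue_id, severity in per_evaluator.items():
            ratings[issue_id].append(severity)
    summary = [
        {
            "issue": issue_id,
            "found_by": len(values),
            "mean_severity": round(mean(values), 2),
            # Widely differing ratings are flagged for the group discussion.
            "needs_discussion": max(values) - min(values) >= disagreement_threshold,
        }
        for issue_id, values in ratings.items()
    ]
    # Most severe first; ties broken by how many evaluators found the issue.
    return sorted(summary, key=lambda row: (row["mean_severity"], row["found_by"]), reverse=True)


for row in consolidate(findings):
    print(row)
```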

Q 6. How do you incorporate accessibility guidelines (WCAG) into your design process?

Incorporating accessibility guidelines (WCAG) is crucial for creating inclusive designs. I integrate WCAG into my design process from the very beginning. This includes:

- Using accessible design tools: Selecting tools that support accessibility features and allow for checking compliance.

- Following WCAG guidelines: Ensuring that the design meets the WCAG success criteria, which are organized under four principles: content must be perceivable, operable, understandable, and robust.

- Regular accessibility testing: Conducting accessibility reviews throughout the design and development process to catch and address issues early on.

- Using accessibility checkers: Leveraging automated tools that help in identifying potential accessibility problems.

- Involving users with disabilities: Including users with various disabilities in usability testing to gain their feedback and ensure the design meets their needs. This provides real-world insights not easily gained from heuristic analysis or automated tools.

- Developing accessible content: Creating content that is easily understood by all users, including those with cognitive disabilities.

By proactively integrating accessibility into every step, we create products that are usable by as many people as possible.
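
The following toy checker illustrates the kind of test that automated accessibility tools run. It is built on the Python standard library's `html.parser` and flags only `<img>` elements that have no `alt` attribute, which relates to WCAG success criterion 1.1.1 (Non-text Content). The function name and sample markup are hypothetical. A real audit covers many more criteria and still needs manual review and testing with users.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Collects the src of every <img> that has no alt attribute at all.

    An empty alt="" is not flagged: it is the standard way to mark an image
    as purely decorative so that screen readers skip it.
    """

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser also routes self-closing tags like <img ... /> here.
        if tag == "img":
            attributes = dict(attrs)
            if "alt" not in attributes:
                self.missing.append(attributes.get("src") or "(no src)")


def find_missing_alt(html: str) -> list:
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.missing


page = """
<img src="logo.png" alt="Acme logo">
<img src="chart.png">
<img src="divider.png" alt="" />
"""
print(find_missing_alt(page))
```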

Q 7. Describe a time you identified a usability problem and how you solved it.

During the usability testing of a mobile banking app, we observed users struggling to locate the ‘transfer funds’ feature. The icon was small, and the label was not very descriptive. Many users abandoned the task after repeated unsuccessful attempts. This was a high-severity issue due to the significant impact on a core functionality of the app.

Solution: We addressed this by:

- Increasing the size of the icon: Making it more prominent and easily visible.

- Improving the label: Changing the label to a more user-friendly and descriptive one, such as ‘Send Money’.

- Adding visual cues: Using a clearer visual hierarchy to ensure that the button stands out.

- Rearranging the button location: Moving it to a more intuitive and accessible spot in the app’s navigation menu.

Post-implementation usability testing showed a significant increase in successful task completion rates, confirming the effectiveness of our changes.

Q 8. What are some common usability problems you’ve encountered and how did you address them?

One common usability problem is poor navigation. Users often get lost or frustrated when they can’t easily find what they’re looking for. For example, a website with an unclear menu structure or inconsistent labeling can lead to significant usability issues. To address this, I employ user research methods like card sorting and tree testing to understand how users mentally categorize information. This helps me design intuitive navigation structures and clear labeling systems.

Another frequent issue is inconsistent design patterns. If a button looks and behaves differently in various parts of the application, users become confused and may make errors. I solve this by creating and adhering to a comprehensive style guide that dictates visual elements and interaction patterns throughout the application, ensuring a consistent and predictable user experience.

Another recurring problem is unclear calls to action (CTAs). Users might not understand what action they should take next, leading to abandonment. For instance, a form whose submit button isn’t clearly labeled or visually prominent leaves users unsure how to proceed. The solution is to use clear, concise, and visually prominent CTAs. I use A/B testing to compare different CTA designs and optimize for higher conversion rates.
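
Card-sort results are often summarized as a co-occurrence matrix, which gives, for each pair of cards, the share of participants who placed them in the same group. Below is a minimal Python sketch. It assumes an open card sort recorded as one dictionary per participant, mapping each group name to a list of cards. The cards and groups are hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical open card sort: one dict per participant, group name -> cards.
sorts = [
    {"Account": ["Profile", "Password", "Alerts"], "Money": ["Transfer", "Pay bill"]},
    {"Settings": ["Profile", "Password", "Alerts"], "Payments": ["Transfer", "Pay bill"]},
    {"Me": ["Profile"], "Security": ["Password", "Alerts"], "Move money": ["Transfer", "Pay bill"]},
]


def co_occurrence(sorts):
    """Map each card pair (alphabetical order) to the share of participants
    who placed both cards in the same group."""
    counts = Counter()
    for participant in sorts:
        for cards in participant.values():
            for pair in combinations(sorted(cards), 2):
                counts[pair] += 1
    return {pair: count / len(sorts) for pair, count in counts.items()}


for pair, share in sorted(co_occurrence(sorts).items(), key=lambda kv: -kv[1]):
    print(f"{pair[0]} + {pair[1]}: {share:.0%}")
```

Pairs with a share near 100% are strong candidates to sit together in the navigation. In this made-up data, "Transfer" and "Pay bill" were always grouped together, while "Profile" and "Password" were grouped together by two of the three participants.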

Q 9. How do you measure the success of a usability improvement?

Measuring the success of a usability improvement involves both qualitative and quantitative methods. Quantitative metrics include task completion rates, error rates, time on task, and user satisfaction scores (e.g., the System Usability Scale, or SUS). A higher completion rate and lower error rate, coupled with shorter task times and higher SUS scores, indicate an improvement. For example, if a redesign cut average time on task by 20% and raised the SUS score by 15 points, that would be strong evidence of a successful improvement, provided the sample is large enough that the difference is unlikely to be due to chance.
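
SUS scores are computed per participant using a fixed rule. Each odd-numbered (positively worded) item contributes the rating minus 1. Each even-numbered (negatively worded) item contributes 5 minus the rating. The sum is then multiplied by 2.5 to give a score from 0 to 100. Below is a minimal Python sketch of that rule. The function name is illustrative, and a study's SUS score is usually reported as the mean across participants.

```python
def sus_score(responses):
    """Score one participant's System Usability Scale questionnaire.

    responses: ten ratings on a 1-5 scale, in questionnaire order.
    """
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly ten ratings between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1  # positively worded item: agreement is good
        else:
            total += 5 - rating  # negatively worded item: disagreement is good
    return total * 2.5  # rescale the 0-40 sum to 0-100


# Hypothetical responses from two participants.
participants = [[4, 2, 4, 2, 4, 2, 4, 2, 4, 2], [5, 1, 4, 2, 5, 1, 4, 1, 5, 2]]
scores = [sus_score(r) for r in participants]
print(scores, sum(scores) / len(scores))
```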

Qualitative methods provide rich insights into why an improvement did or did not work. This often involves usability testing sessions, where we observe users interacting with the system and gather feedback. By analyzing user behavior and comments, we gain valuable insights into pain points and areas for improvement. For example, observing user frustration during a task might reveal a previously unidentified design flaw, even if the quantitative metrics appear acceptable. Combining quantitative and qualitative data gives a far more complete picture of the usability enhancement’s effectiveness.

Q 10. Explain the concept of cognitive load and how it impacts usability.

Cognitive load refers to the amount of mental effort required to perform a task. High cognitive load can lead to frustration, errors, and task abandonment. It’s essentially the mental ‘processing power’ a user expends. Think of it like your brain’s RAM—too much data or too complex a process can overload it. A simple example is a website with cluttered layouts or overly complex instructions. This forces the user to expend significant mental energy just to understand the basic information, leaving them less capacity to focus on the actual task.

Good usability design aims to minimize unnecessary cognitive load by streamlining information architecture, using clear and concise language, and providing visual cues to guide the user. Effective usability design anticipates users’ mental models and provides sufficient support to reduce their mental effort. For instance, a well-designed form uses clear labels, logical grouping of fields, and helpful error messages to reduce cognitive load on the user. Conversely, poorly designed interfaces with ambiguous labels, confusing navigation, or unexpected behavior can significantly increase cognitive load, leading to a poor user experience.

Q 11. What are some best practices for designing user-friendly forms?

Designing user-friendly forms requires careful consideration of several factors. Clear and concise labels are essential; each field should be clearly labeled with the type of information needed. Avoid jargon or technical terms that users may not understand. Logical grouping of fields using visual cues such as whitespace or section headers improves readability and comprehension. Progressive disclosure, where only relevant information is displayed at each step, prevents information overload. For example, splitting a long form into a short sequence of steps is often easier to complete than a single page with numerous fields.

Input validation and helpful error messages are crucial. Forms should validate inputs inline, as soon as a field is completed rather than only after submission, providing specific and constructive feedback when an error occurs. For example, instead of simply saying “Invalid input,” a message like “Please enter a valid email address” helps the user correct the mistake immediately. Visual feedback on user interaction helps keep users engaged and informed. For instance, an animation or progress indicator showing that a submission is in progress reassures users that their action registered. Finally, consider accessibility: give every field a programmatically associated label and use appropriate ARIA attributes to support the assistive technologies used by people with disabilities.
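
Below is a minimal Python sketch of field-specific validation messages. The field names, the 8-character password rule, and the deliberately loose email pattern are hypothetical examples, not recommendations. The point is that each failing field gets its own actionable message instead of a generic "Invalid input."

```python
import re

# Deliberately loose pattern (something@something.tld), for illustration only.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_signup(form: dict) -> dict:
    """Return {field_name: message} for every field the user needs to fix."""
    errors = {}
    if not form.get("full_name", "").strip():
        errors["full_name"] = "Please enter your full name."
    email = form.get("email", "").strip()
    if not email:
        errors["email"] = "Please enter your email address."
    elif not EMAIL_PATTERN.match(email):
        errors["email"] = "Please enter a valid email address, for example name@example.com."
    if len(form.get("password", "")) < 8:
        errors["password"] = "Your password needs at least 8 characters."
    return errors


print(validate_signup({"full_name": "Ada", "email": "ada@", "password": "short"}))
```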

Q 12. How do you handle conflicting design requirements from stakeholders?

Handling conflicting design requirements from stakeholders requires skillful negotiation and prioritization. I begin by documenting all requirements clearly, ensuring everyone understands the implications of each. Then, I facilitate a discussion to understand the underlying rationale behind each request. Often, seemingly conflicting requirements stem from different perspectives or incomplete understanding of user needs.

My approach involves using a prioritization matrix, weighing requirements based on their importance to users and feasibility. We might use a weighted scoring system, where factors like user impact, business value, and technical complexity are considered. This facilitates a data-driven decision-making process, reducing reliance on opinions alone. I also emphasize the importance of user research data—showing concrete evidence of user preferences helps to objectively justify design choices. Finally, compromises are inevitable. I advocate for iterative design, testing different options, and gathering feedback, allowing us to refine solutions that balance various stakeholders’ needs and user experience.
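
A weighted scoring system like the one described above can be sketched as follows. The weights, the 1-5 rating scale, and the example requirements are hypothetical. Technical complexity is inverted so that harder-to-build requirements score lower.

```python
# Hypothetical weights; a team would agree on its own before scoring.
WEIGHTS = {"user_impact": 0.5, "business_value": 0.3, "technical_complexity": 0.2}


def weighted_score(ratings: dict) -> float:
    """Combine 1-5 ratings into one score.

    Complexity counts against a requirement, so it is inverted:
    a rating of 5 (very complex) contributes like a 1.
    """
    adjusted = dict(ratings)
    adjusted["technical_complexity"] = 6 - ratings["technical_complexity"]
    return round(sum(WEIGHTS[k] * adjusted[k] for k in WEIGHTS), 2)


requirements = {
    "Guest checkout": {"user_impact": 5, "business_value": 4, "technical_complexity": 2},
    "Mandatory account signup": {"user_impact": 2, "business_value": 4, "technical_complexity": 1},
    "Animated product tour": {"user_impact": 2, "business_value": 2, "technical_complexity": 4},
}
ranking = sorted(requirements, key=lambda name: weighted_score(requirements[name]), reverse=True)
for name in ranking:
    print(weighted_score(requirements[name]), name)
```

With these assumed weights, guest checkout scores 4.5, mandatory signup 3.2, and the animated tour 2.0. The numbers give the discussion a structure rather than settling it, and the choice of weights is itself something stakeholders need to agree on.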

Q 13. Explain your understanding of A/B testing and how it relates to usability.

A/B testing is a method of comparing two versions of a design element (e.g., button color, form layout, navigation) to determine which performs better. It’s directly relevant to usability because it allows us to test different design choices and quantify their impact on key metrics like task completion rates, error rates, and user satisfaction.

In a typical A/B test, users are randomly assigned either to a control group, which experiences the original design, or to a test group, which interacts with the modified design. Statistical analysis then determines whether either version leads to a statistically significant improvement in the chosen metrics. For instance, we might test two variations of a signup form: the original, more complex design and a simplified layout. By tracking conversion rates for each version, we can objectively assess which design leads to higher user engagement and more successful form submissions. A/B testing provides a data-driven way to improve usability by quantifying the impact of design changes. However, it shows which version performs better, not why, so it works best alongside qualitative testing.
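
When the metric is a conversion rate, a two-proportion z-test is one common way to check significance. Below is a minimal Python sketch that uses only the standard library. The conversion counts are hypothetical. In practice, the metric, sample size, and significance threshold should be fixed before the test starts.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    Uses the pooled proportion under the null hypothesis that both variants
    convert at the same rate. Returns (z, p_value).
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("both groups are at 0% or 100% conversion; the test is undefined")
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical counts: original form (A) vs simplified form (B).
z, p = two_proportion_z_test(conv_a=200, n_a=1000, conv_b=250, n_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below the chosen threshold (0.05 is a common convention) suggests that the difference is unlikely to be due to chance alone.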

Q 14. Describe your experience with usability testing software and tools.

I have extensive experience with various usability testing software and tools. I’m proficient in using tools like UserTesting.com for remote user testing, enabling me to collect video recordings of user sessions and gather qualitative feedback. For moderated remote testing, I utilize Zoom or Microsoft Teams, facilitating real-time interaction and observation.

For eye-tracking studies, I have used tools such as Tobii Pro to gain insights into visual attention patterns during user interactions. Additionally, I have experience with heatmap tools like Hotjar and Crazy Egg to analyze user behavior on websites and identify areas of interest and frustration. I’m also familiar with analytics platforms like Google Analytics, which provides quantitative data on user behavior and helps inform usability improvements. The choice of tool depends on the specific research questions and the budget available. Each tool offers unique capabilities, allowing me to tailor my approach to the project’s specific needs.

Q 15. How do you analyze user data from usability tests to identify key findings?

Analyzing usability test data involves a systematic approach to uncovering key insights that inform design improvements. It’s not just about counting errors; it’s about understanding why users struggled. My process typically involves these steps:

- Data Consolidation: I gather all data sources – video recordings, session recordings, user feedback forms, and notes from observers. This data is then organized into a manageable format, often using spreadsheets or qualitative data analysis software.

- Qualitative Analysis: I carefully review video recordings and transcripts, identifying patterns in user behavior: recurring difficulties, moments of frustration, and points where users deviated from the intended workflow. I then code these observations so that similar ones can be grouped into recurring themes.

- Quantitative Analysis: I analyze quantitative data, such as task completion rates, error rates, and time on task, to identify trends, testing them for statistical significance where the sample size allows. This helps to quantify the severity of usability issues.

- Synthesis: Finally, I synthesize the qualitative and quantitative data to build a comprehensive understanding of the user experience. This might involve creating affinity diagrams or using other visualization techniques to highlight key themes and patterns.

For instance, if users consistently struggle with a particular form field, I might observe their actions on the video and analyze quantitative data like error rate to confirm the severity of the issue. This provides compelling evidence to support design recommendations.
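
Once observations are coded, a quick tally shows which themes affected the most participants. Below is a minimal Python sketch. It assumes that coded observations are stored as (participant, task, theme) tuples, and the participants and theme codes are hypothetical. It counts distinct participants per theme rather than raw mentions, so one vocal participant cannot inflate a theme.

```python
from collections import defaultdict

# Hypothetical coded observations: (participant, task, theme code).
observations = [
    ("P1", "checkout", "unclear-field-label"),
    ("P1", "checkout", "unclear-field-label"),
    ("P2", "checkout", "unclear-field-label"),
    ("P2", "search", "filter-not-noticed"),
    ("P3", "checkout", "unclear-field-label"),
    ("P4", "search", "filter-not-noticed"),
    ("P4", "checkout", "error-message-ignored"),
]


def theme_summary(observations, total_participants):
    """Return (theme, participants affected, share) rows, most widespread first."""
    participants_by_theme = defaultdict(set)
    for participant, _task, theme in observations:
        participants_by_theme[theme].add(participant)
    summary = [
        (theme, len(people), len(people) / total_participants)
        for theme, people in participants_by_theme.items()
    ]
    return sorted(summary, key=lambda row: row[1], reverse=True)


# Five participants took part; P5 is not listed because they hit no coded issue.
for theme, count, share in theme_summary(observations, total_participants=5):
    print(f"{theme}: {count} participants ({share:.0%})")
```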


Q 16. What are some common usability metrics you track?

Common usability metrics provide a quantitative measure of user experience. They offer a valuable complement to qualitative observations. Some of the most valuable metrics I track include:

- Task Success Rate: The percentage of users who successfully complete a given task.

- Error Rate: The number of errors users make while completing a task, divided by the number of opportunities to make an error.

- Time on Task: The average time it takes users to complete a given task.

- Efficiency: A measure of how quickly users complete tasks successfully. One common formulation is successful task completions per unit of time (for example, tasks completed per minute), so that both slow attempts and failed attempts lower the score.

- System Usability Scale (SUS): A widely used ten-item questionnaire that measures perceived usability, producing a score from 0 to 100.

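- Net Promoter Score (NPS): Measures user willingness to recommend the product or service. It is calculated as the percentage of promoters (ratings of 9 or 10 on a 0-10 scale) minus the percentage of detractors (ratings of 0 to 6).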

- Clickstream Data: Tracks users’ navigation through the interface, revealing patterns of use (or disuse).

By tracking these metrics across different user groups and iterations of a design, I can objectively measure the impact of design changes and identify areas for improvement.
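
Several of these metrics reduce to simple formulas. Below is a minimal Python sketch. It assumes per-attempt success flags, error counts with a known number of error opportunities, task times in minutes, and 0-10 recommendation ratings. The efficiency function uses one common time-based formulation (successful completions per minute, averaged over attempts). The NPS function uses the standard cut-offs of 9-10 for promoters and 0-6 for detractors. All function names are illustrative.

```python
def task_success_rate(outcomes):
    """Share of attempts that succeeded; outcomes is a list of booleans."""
    return sum(outcomes) / len(outcomes)


def error_rate(errors_made, error_opportunities):
    """Errors divided by the number of opportunities to make an error."""
    return errors_made / error_opportunities


def mean_time_on_task(minutes):
    return sum(minutes) / len(minutes)


def time_based_efficiency(attempts):
    """Successful completions per minute, averaged over all attempts.

    attempts is a list of (succeeded, minutes) pairs; failures contribute 0.
    """
    return sum((1 if succeeded else 0) / minutes for succeeded, minutes in attempts) / len(attempts)


def net_promoter_score(ratings):
    """ratings are 0-10 answers to 'How likely are you to recommend...?'"""
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


print(task_success_rate([True, True, False, True]), net_promoter_score([10, 10, 9, 5]))
```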

Q 17. Describe your experience with different types of usability testing (e.g., moderated, unmoderated).

I have extensive experience with both moderated and unmoderated usability testing. Each method offers unique advantages:

- Moderated Usability Testing: Involves a researcher guiding participants through tasks and observing their behavior in real-time. This provides rich qualitative data, including insights into user thought processes and motivations. It’s great for exploring complex tasks or when probing for deeper understanding.

- Unmoderated Usability Testing: Participants complete tasks independently, usually via online platforms. This method allows for broader reach and scalability, as you can test with a larger number of participants. It is usually more cost-effective per participant and best suited to tasks that participants can understand without guidance. Analysis relies primarily on recordings and task completion data.

The choice between the two depends on the project goals, budget, and complexity of the interface. For example, during a recent project involving a complex medical application, moderated testing was preferred to ensure participants received sufficient guidance and to gather in-depth qualitative data. For a simpler e-commerce website, unmoderated testing was sufficient to assess overall usability and reach a larger sample.

Q 18. How do you create user personas and use them to inform your design decisions?

User personas are fictional representations of your target users. They’re based on research and data, not guesses. Creating them involves:

- User Research: Conduct user interviews, surveys, and analyze existing data to understand user needs, behaviors, and motivations.

- Identify Patterns: Look for commonalities and differences among your users. This will help you identify distinct user segments.

- Create Personas: For each user segment, develop a persona with a name, picture, background information, goals, and frustrations. Include details that are relevant to the product or service.

- Use Personas to Inform Design: Use your personas to guide design decisions, ensuring that your design meets the needs and expectations of your target users. For instance, if a persona has limited technical skills, the design should be intuitive and easy to navigate.

For example, when designing a banking app, I created two personas: ‘Busy Professional’ (tech-savvy, prioritizes efficiency) and ‘Cautious Senior’ (less tech-proficient, values security and ease of understanding). This helped us prioritize features and design elements accordingly, leading to a more inclusive and effective application.

Q 19. How do you ensure your designs are inclusive and accessible to users with disabilities?

Inclusive and accessible design is paramount. It’s about creating products and services that are usable by people with a wide range of abilities. My approach incorporates several key strategies:

- Following WCAG Guidelines: I adhere to the Web Content Accessibility Guidelines (WCAG) to ensure that designs meet accessibility standards. This involves using appropriate color contrast, providing alternative text for images, ensuring keyboard navigation, and offering captions for videos.

- Diverse Testing: I ensure that usability testing includes participants with various disabilities. This provides direct feedback on accessibility challenges.

- Using Assistive Technologies: I test designs using assistive technologies such as screen readers and voice recognition software to identify potential barriers for users with visual or motor impairments.

- Considering Cognitive Accessibility: I pay attention to aspects of design that impact users with cognitive disabilities, such as providing clear and concise language, using consistent navigation, and reducing cognitive load.

For instance, in a recent project, we used screen readers to test our website and identified several issues with navigation that were easily fixed. We also employed color contrast analysis tools to ensure adequate readability for users with visual impairments.
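
Color contrast checks follow a published formula. WCAG 2.x defines relative luminance from linearized sRGB values and defines the contrast ratio as (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color. Level AA (success criterion 1.4.3) requires at least 4.5:1 for normal text and 3:1 for large text. Below is a minimal Python sketch of that calculation. The function names are illustrative, and colors are assumed to be six-digit hex strings.

```python
def _linear_channel(value_8bit: int) -> float:
    c = value_8bit / 255
    # sRGB linearization as given in the WCAG 2.x relative-luminance definition.
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear_channel(r) + 0.7152 * _linear_channel(g) + 0.0722 * _linear_channel(b)


def contrast_ratio(foreground: str, background: str) -> float:
    # Order does not matter: the lighter color always goes on top.
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    # WCAG 2.x level AA: 4.5:1 for normal text, 3:1 for large text.
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#767676", "#FFFFFF"), 2), passes_aa("#767676", "#FFFFFF"))
```

For example, the mid-gray #767676 on white just clears 4.5:1, while #777777 falls slightly short. This is why small color tweaks are worth checking with a tool rather than by eye.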

Q 20. How do you communicate your findings from usability testing to stakeholders?

Communicating usability findings effectively is crucial for influencing design decisions. My approach involves creating clear, concise, and visually compelling reports and presentations that emphasize actionable insights. This typically includes:

- Executive Summary: A brief overview of the key findings and recommendations.

- Problem Statement: Clearly articulating the usability problems identified.

- Visualizations: Using charts, graphs, and images to illustrate key findings (e.g., heatmaps showing user interaction patterns).

- User Quotes: Including direct quotes from users to add context and humanize the findings.

- Actionable Recommendations: Providing specific, prioritized recommendations for improving the design.

- Prioritized Issues: Focusing on the most critical usability issues based on severity and impact.

I prioritize presenting information in a way that stakeholders can easily understand, regardless of their technical background. I often use storytelling techniques to highlight the user experience and make the data more relatable.

Q 21. What are some common biases that can affect usability testing?

Several biases can significantly impact the validity of usability testing. It’s crucial to be aware of these biases and take steps to mitigate their influence:

- Confirmation Bias: The tendency to search for or interpret information in a way that confirms preconceived notions. To avoid this, I maintain a neutral perspective and actively seek disconfirming evidence.

- Sampling Bias: When the participants don’t accurately represent the target user population. Careful participant recruitment is key, ensuring a diverse and representative sample.

- Experimenter Bias: The researcher’s expectations influence the interpretation of data. Using blind testing procedures, where observers are unaware of the hypotheses, can help minimize this bias.

- Social Desirability Bias: Participants may behave in ways that they perceive as socially acceptable, rather than reflecting their true behavior. Maintaining a safe and non-judgmental testing environment is crucial.

- Order Effect: The order in which tasks are presented can influence participant performance. Counterbalancing task order across participants is a common solution.

For instance, to mitigate sampling bias, I carefully define my target audience and use appropriate recruitment methods to reach a diverse set of participants that accurately reflect the target population. By actively acknowledging and mitigating these biases, I aim to ensure the reliability and validity of my findings.
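
Counterbalancing is commonly done with a balanced Latin square. In this design, each task appears in each position equally often, and each task directly follows every other task equally often. Below is a minimal Python sketch. The task names are hypothetical, and participants are assumed to be assigned orders in rotation.

```python
def balanced_latin_square(tasks):
    """Return one task order per row of a balanced Latin square.

    Every task appears in every position equally often, and every task is
    immediately followed by every other task equally often. With an odd
    number of tasks the mirrored orders are also needed, so 2n orders are
    returned in that case.
    """
    n = len(tasks)
    # The first row uses the index pattern 0, 1, n-1, 2, n-2, 3, ...
    first = [0]
    for j in range(1, n):
        first.append((j + 1) // 2 if j % 2 == 1 else n - j // 2)
    # Each further row shifts every index by one (mod n).
    rows = [[(index + shift) % n for index in first] for shift in range(n)]
    if n % 2 == 1:
        rows += [row[::-1] for row in rows]
    return [[tasks[i] for i in row] for row in rows]


def order_for_participant(orders, participant_number):
    # Rotate through the orders so each is used equally often across participants.
    return orders[participant_number % len(orders)]


orders = balanced_latin_square(["search", "add to cart", "checkout", "track order"])
for participant in range(4):
    print(participant, order_for_participant(orders, participant))
```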

Q 22. How do you ensure usability testing results are reliable and valid?

Ensuring reliable and valid usability testing results hinges on meticulous planning and execution. Reliability refers to the consistency of the results; if you repeated the test, would you get similar findings? Validity asks whether the test actually measures what it intends to – in this case, the usability of the design.

- Representative Participants: Recruit participants who accurately represent your target audience. A diverse sample of adequate size is crucial: a small, homogeneous group might skew results and limit generalizability.

- Well-Defined Tasks: Create clear, realistic tasks reflecting how users would interact with the system in real-world scenarios. Ambiguous tasks lead to unreliable data.

- Controlled Environment: Minimize external distractions during testing. A quiet, comfortable space helps participants focus and provides more accurate data.

- Standardized Procedures: Follow a consistent testing protocol for all participants. This ensures uniformity and prevents bias from influencing results. Use a pre-determined script for instructions and observations.

- Multiple Methods: Employing multiple data collection methods (e.g., think-aloud protocols, questionnaires, performance metrics) offers a more comprehensive and robust understanding of usability than relying on a single method. Triangulation of results strengthens validity.

- Pilot Testing: Conduct a small-scale pilot test to identify and fix any flaws in the testing procedure before the main study. This is vital for refining the methodology and improving data quality.

- Data Analysis Rigor: Apply appropriate statistical methods (for quantitative data) and thematic analysis (for qualitative data) to thoroughly analyze your data and draw meaningful conclusions. Avoid cherry-picking data to support pre-conceived notions.

For instance, I once conducted a usability test for a banking app. By carefully selecting participants representing different age groups and tech proficiency levels, and by using standardized tasks like ‘transferring funds’ and ‘paying a bill’, we were able to identify key usability issues that a less rigorous approach might have missed. This led to significant improvements in the app’s design.
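
Small samples make completion rates uncertain, so it helps to report a confidence interval alongside them. The adjusted Wald interval is one method often recommended for usability data. It adds z²/2 successes and z² attempts before computing the usual interval. Below is a minimal Python sketch. The example counts are hypothetical, and `z=1.96` corresponds to roughly 95% confidence.

```python
from math import sqrt


def adjusted_wald_interval(successes, attempts, z=1.96):
    """Approximate confidence interval for a task completion rate.

    Behaves better than the plain Wald interval for the small samples
    typical of usability tests. Bounds are clamped to [0, 1].
    """
    n_adj = attempts + z ** 2
    p_adj = (successes + z ** 2 / 2) / n_adj
    margin = z * sqrt(p_adj * (1 - p_adj) / n_adj)
    return max(0.0, p_adj - margin), min(1.0, p_adj + margin)


low, high = adjusted_wald_interval(successes=8, attempts=10)
print(f"8 of 10 completed: plausible range {low:.0%} to {high:.0%}")
```

When 8 of 10 participants complete a task, the interval runs from roughly 48% to 95%. This shows that an 80% completion rate from ten people is an estimate, not a precise figure.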

Q 23. Explain the difference between qualitative and quantitative usability data.

Qualitative and quantitative usability data offer different perspectives on user experience. Qualitative data provides rich, descriptive insights into why users behave a certain way, whereas quantitative data focuses on measuring how much or how often something occurs.

- Qualitative Data: This is descriptive and exploratory. It includes observations from user interviews, think-aloud protocols (where users verbalize their thoughts while using the system), and open-ended survey questions. It provides context, reveals user motivations and feelings, and uncovers unexpected issues. Examples include user comments like “I found that button confusing” or “The navigation was frustrating.”

- Quantitative Data: This involves numerical measurements, allowing for statistical analysis. It includes metrics like task completion time, error rates, number of clicks, and user satisfaction scores (e.g., System Usability Scale – SUS). It provides objective data on user performance and efficiency.

Think of it like this: qualitative data tells the story of the user experience, while quantitative data provides the numbers. A complete usability evaluation benefits from both, giving a holistic understanding. For example, interviews might reveal that users find a particular feature confusing, and you could then quantify the problem by measuring the error rate when using that feature.
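To make the quantifying step concrete, here is a minimal sketch that summarizes observed attempts at one feature. The `Attempt` record format, its field names, and the sample log are hypothetical. "Error rate" here means the share of attempts with at least one error, which is only one of several common definitions.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    """One observed attempt at a task (hypothetical log format)."""
    participant: str
    feature: str
    completed: bool
    errors: int
    seconds: float


def feature_metrics(attempts: list[Attempt], feature: str) -> dict[str, float]:
    """Summarize completion rate, error rate and mean time for one feature."""
    rows = [a for a in attempts if a.feature == feature]
    if not rows:
        raise ValueError(f"no attempts recorded for {feature!r}")
    n = len(rows)
    return {
        "attempts": n,
        "completion_rate": sum(a.completed for a in rows) / n,
        # Share of attempts with at least one error, not errors per attempt.
        "error_rate": sum(a.errors > 0 for a in rows) / n,
        "mean_seconds": sum(a.seconds for a in rows) / n,
    }


# Made-up observations for illustration only.
log = [
    Attempt("P1", "export", True, 0, 42.0),
    Attempt("P2", "export", False, 3, 120.0),
    Attempt("P3", "export", True, 1, 65.0),
    Attempt("P4", "search", True, 0, 18.0),
]
print(feature_metrics(log, "export"))
```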

Q 24. How do you balance usability with other design considerations (e.g., aesthetics, performance)?

Balancing usability with other design considerations requires a strategic approach. Usability should never be sacrificed entirely, but sometimes compromises are necessary. The key is to prioritize and make informed decisions based on user needs and business goals.

- Prioritization Matrix: Use a matrix to weigh the importance of different design aspects (usability, aesthetics, performance) and rank them based on their impact on user experience and business objectives. This helps establish a clear hierarchy of needs.

- Iterative Design: Embrace an iterative process in which you test and refine the design throughout the development cycle. This lets you adjust based on user feedback and insights early, before changes require significant rework.

- User Research: Involve users from the beginning to understand their priorities and expectations. This helps establish a shared understanding of what constitutes a good user experience.

- Trade-off Analysis: Document and justify any compromises made. For example, a simpler design might improve usability but sacrifice some aesthetic appeal. The justification should explicitly state the reasoning behind the trade-off, considering the overall impact on the user experience and business goals.
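A prioritization matrix is often implemented as a weighted scoring model. The sketch below assumes the team has agreed on criterion weights and 1-5 ratings for each option; the function name, option names, and every number in it are hypothetical. The output only ranks options; it does not replace the documented trade-off analysis.

```python
def rank_options(weights: dict[str, float],
                 scores: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Rank design options by weighted score, highest first.

    weights: importance of each criterion (need not sum to 1; they are normalized).
    scores:  per-option rating for each criterion, e.g. on a 1-5 scale.
    """
    total_weight = sum(weights.values())
    ranked = []
    for option, ratings in scores.items():
        # A missing rating raises KeyError rather than silently counting as zero.
        weighted = sum(weights[c] * ratings[c] for c in weights) / total_weight
        ranked.append((option, round(weighted, 2)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical weights and 1-5 ratings, for illustration only.
weights = {"usability": 0.5, "performance": 0.3, "aesthetics": 0.2}
scores = {
    "design A": {"usability": 2, "performance": 2, "aesthetics": 5},
    "design B": {"usability": 4, "performance": 5, "aesthetics": 3},
}
for option, score in rank_options(weights, scores):
    print(f"{option}: {score}")
```

Making the weights explicit is the main benefit: stakeholders can debate the weights directly instead of debating the outcome.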

In a project I worked on, we had to choose between a visually stunning but slower-loading animation and a simpler, faster-loading one. User testing showed that the slower animation caused noticeable frustration and affected task completion rates. We therefore prioritized usability and chose the faster-loading version, even though it was less visually engaging. We documented this decision, citing the improvement in usability metrics that justified the trade-off.

Q 25. Describe your experience with eye-tracking or other user behavior analysis tools.

I have extensive experience with eye-tracking technology and other user behavior analysis tools. These tools provide valuable insights into how users interact with a design, often revealing issues that traditional methods might miss.

- Eye-tracking: This technology measures where users look on a screen, providing data on visual attention, scan paths, and areas of interest. It helps identify design elements that attract or fail to attract attention, and it uncovers usability problems that might not be apparent through observation alone. For example, eye-tracking can reveal whether users are consistently missing important calls to action.

- Heatmaps: Aggregated from eye-tracking data (and, in many tools, from click or mouse data), heatmaps visually represent areas of high and low attention on a screen. They provide a clear, intuitive overview of where users focus.

- Mouse Tracking: This records mouse movements, clicks, and cursor hover time, providing information about users’ navigation and interaction patterns. It can highlight areas of difficulty or confusion during navigation.
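Heatmaps are built by aggregating many gaze fixations or clicks. Real tools typically weight fixations by duration and smooth the result (for example with a Gaussian kernel); the sketch below shows only the core binning step, with a hypothetical function name, a fixed grid cell size, and made-up coordinates.

```python
def heatmap_grid(points: list[tuple[float, float]], width: int, height: int,
                 cell: int = 100) -> list[list[int]]:
    """Count gaze fixations (or clicks) falling into each cell of a screen grid.

    points: (x, y) pixel coordinates with the origin at the top-left corner.
    Returns rows of counts; higher counts mean more attention in that cell.
    """
    cols = -(-width // cell)    # ceiling division so edge pixels get a cell
    rows = -(-height // cell)
    grid = [[0] * cols for _ in range(rows)]
    for x, y in points:
        if 0 <= x < width and 0 <= y < height:   # ignore off-screen samples
            grid[int(y // cell)][int(x // cell)] += 1
    return grid


# Made-up fixation coordinates on a 300 x 300 px area.
fixations = [(50, 40), (60, 55), (150, 30), (90, 260)]
for row in heatmap_grid(fixations, width=300, height=300):
    print(row)
```

A cell that stays at zero where a key element sits (such as a call to action) is the numeric version of the "users never looked there" finding.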

In a recent project involving a complex web application, eye-tracking revealed that users were consistently overlooking a crucial navigation element. This was unexpected, as the element was prominently placed according to our initial design. The eye-tracking data clearly showed that the element’s visual design blended with the background, causing users to miss it. We significantly redesigned the navigation element, which markedly improved usability.

Q 26. What are your favorite usability resources (books, articles, websites)?

My favorite usability resources encompass books, articles, and websites that provide a well-rounded perspective on usability principles and best practices. I constantly refer to these sources to enhance my knowledge and stay informed about the latest advancements in the field.

- Books: “Don’t Make Me Think, Revisited” by Steve Krug, “The Design of Everyday Things” by Don Norman, and “About Face: The Essentials of Interaction Design” by Alan Cooper et al. are invaluable resources offering foundational knowledge and practical insights.

- Articles: I regularly read articles from Nielsen Norman Group and UX Collective, which provide up-to-date research findings, best practices, and expert opinions on various UX-related topics.

- Websites: Beyond those, I frequently visit sites such as the Interaction Design Foundation, which offers online courses and resources on usability and interaction design.

Q 27. How do you stay up-to-date with the latest trends and best practices in usability?

Staying current in the dynamic field of usability requires a proactive and multi-faceted approach.

- Conferences and Workshops: Attending industry conferences such as the UXPA International Conference and ACM CHI provides opportunities to learn about the latest research, trends, and best practices from leading experts in the field.

- Online Courses and Webinars: Platforms like Coursera, edX, and Udemy offer a wide range of courses on usability and UX, providing structured learning and practical exercises.

- Professional Networks: Engaging with professional networks like UXPA and LinkedIn allows for continuous learning through interaction with other professionals, participation in discussions, and access to valuable resources.

- Reading and Research: Regularly reading industry publications, blogs, and research papers keeps me informed about the latest advancements in usability principles and methodologies.

- Testing and Experimentation: I regularly apply new knowledge and techniques in real-world projects, which refines my skills and deepens my understanding.

For example, I recently attended a workshop on accessibility testing and learned to evaluate designs using assistive technologies. This greatly improved my ability to design more inclusive, user-friendly interfaces.

Q 28. Describe a situation where you had to make a difficult usability trade-off. How did you justify your decision?

In one project, we faced a tough usability trade-off concerning the design of a mobile payment app. We discovered that a key feature (a biometric authentication method) was causing significant usability issues for a segment of our users – older adults who were less comfortable with facial recognition technology.

Initially, security was our priority, so we wanted to include this advanced biometric feature. However, usability testing revealed that it caused considerable frustration and affected task completion rates for older users. We had to weigh the security benefits against the usability challenges experienced by a substantial portion of our target audience.

To resolve this, we offered two authentication paths. We kept biometric authentication as an option for users comfortable with it and introduced a simple PIN code as a fallback. This kept the app secure while improving usability for all users. We justified the decision on inclusive-design grounds and used A/B testing to demonstrate higher user satisfaction and task completion rates with the fallback mechanism. We documented the trade-off and presented it to stakeholders, who ultimately agreed that a balanced approach serving both security and accessibility was the best strategy.
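An A/B comparison of task completion rates like the one above is commonly checked with a two-proportion z-test. A minimal sketch follows, with a hypothetical function name; the counts in the usage example are invented. For very small samples, an exact test (such as Fisher's exact test) is a safer choice than this normal approximation.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> tuple[float, float]:
    """Compare two completion rates; return (z statistic, two-sided p-value).

    Uses the pooled-variance normal approximation, which assumes reasonably
    large samples (roughly 10+ successes and failures in each group).
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("both groups are all 0% or all 100%; z is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF, written with erfc.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented counts: variant A (biometric only) vs. variant B (with PIN fallback).
z, p = two_proportion_z_test(success_a=120, n_a=200, success_b=150, n_b=200)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value says the difference is unlikely to be chance; the size of the difference (here 60% vs. 75%) is what stakeholders will actually weigh.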

Key Topics to Learn for Your Usability Guidelines Interview

Ace your upcoming interview by mastering these core concepts. Remember, understanding the “why” behind the guidelines is as important as knowing the “what”.

- User-Centered Design Principles: Understand the core philosophies behind prioritizing user needs and incorporating iterative design processes. Consider how these principles translate into practical design decisions.

- Heuristic Evaluation: Learn to apply Nielsen’s heuristics or other established usability evaluation methods effectively. Practice identifying usability issues in various interfaces and proposing concrete solutions.

- Information Architecture (IA): Grasp the importance of clear and intuitive information organization. Be prepared to discuss different IA models and their impact on user experience.

- Accessibility Guidelines (WCAG): Demonstrate familiarity with accessibility best practices and how they ensure inclusivity in design. Be ready to discuss specific examples and their implementation.

- Usability Testing Methodologies: Understand different testing methods (e.g., moderated vs. unmoderated, think-aloud protocols) and their strengths and weaknesses. Be able to discuss the value of user feedback in the design process.

- Interaction Design Principles: Discuss your understanding of interaction paradigms (e.g., direct manipulation, menu selection) and how they affect user experience. Consider examples of effective and ineffective interaction designs.

- Visual Design and Layout: While not strictly usability, a solid understanding of visual hierarchy, typography, and color theory contributes significantly to a positive user experience. Be prepared to discuss their role in usability.
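For the WCAG topic, one criterion that is easy to check in code is color contrast: WCAG 2.x defines a contrast ratio from the relative luminance of the two colors, and Level AA requires at least 4.5:1 for normal text (3:1 for large text). A minimal sketch of that formula, with hypothetical function names:

```python
def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Relative luminance of an 8-bit sRGB color, per the WCAG 2.x definition."""
    def linearize(channel: int) -> float:
        c = channel / 255
        # Current WCAG text uses 0.04045; older versions used 0.03928, which
        # makes no difference for 8-bit channel values.
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4
    r, g, b = (linearize(ch) for ch in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """WCAG contrast ratio, from 1:1 (identical colors) to 21:1 (black on white)."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


# Gray #777777 text on a white background.
ratio = contrast_ratio((119, 119, 119), (255, 255, 255))
print(f"{ratio:.2f}:1, passes AA body text: {ratio >= 4.5}")
```

#777777 on white comes out just under 4.5:1, so it narrowly fails AA for body text, a useful interview example of a design that looks fine to many people but does not meet the guideline.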

Next Steps: Level Up Your Career

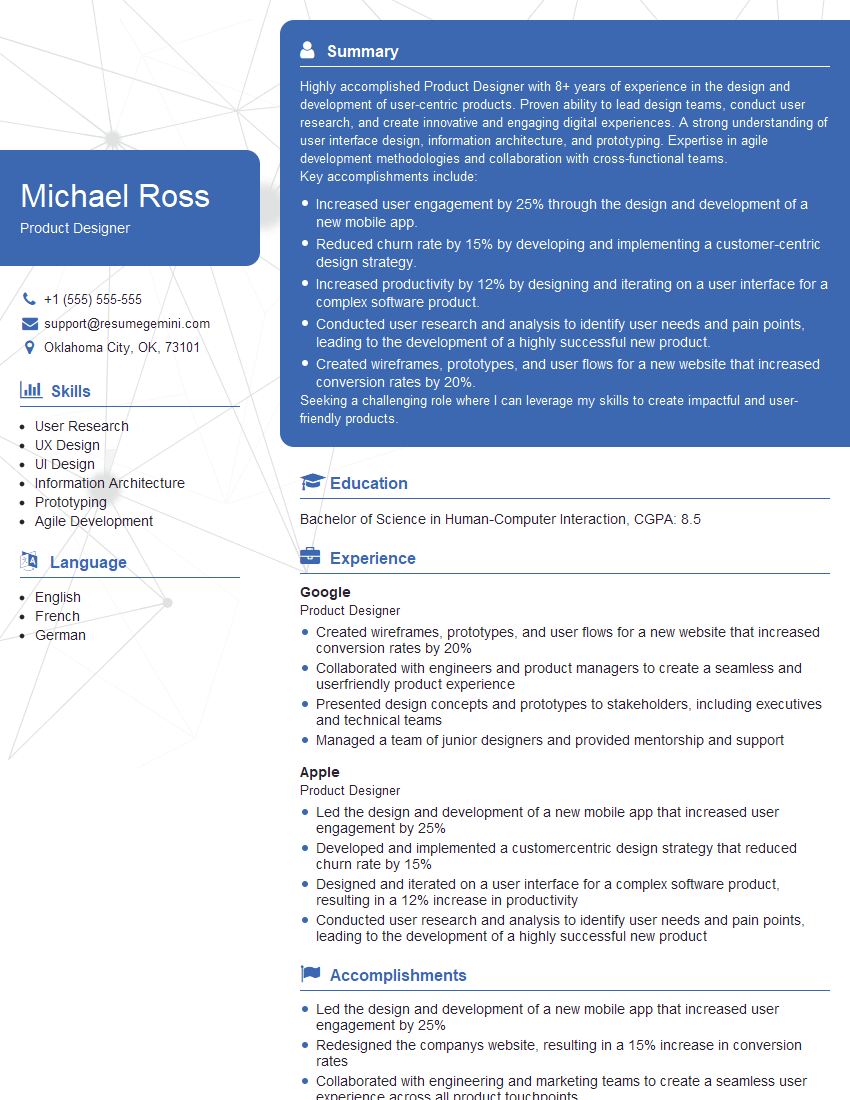

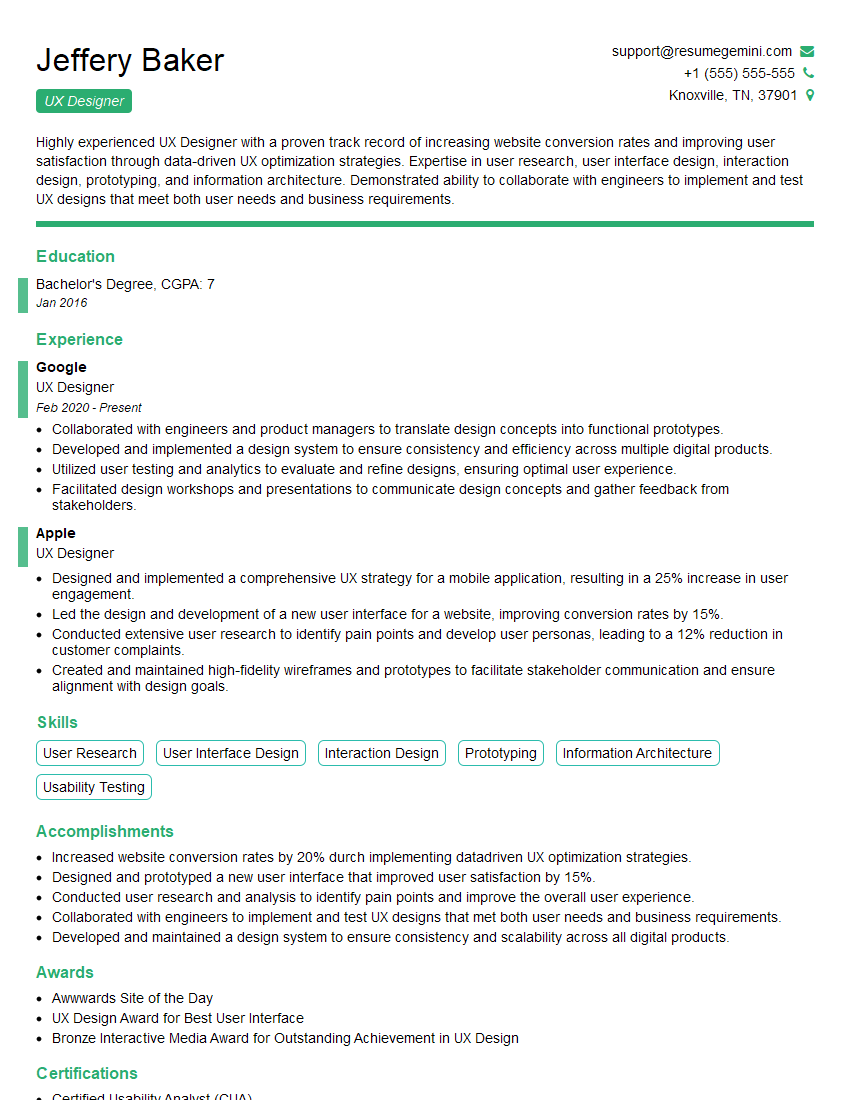

Mastering Usability Guidelines is key to unlocking exciting opportunities in the UX/UI field. A strong understanding of these principles sets you apart and demonstrates your commitment to creating user-centric experiences. To maximize your chances of landing your dream role, invest time in crafting an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource for building professional, impactful resumes. They provide examples of resumes tailored to highlight Usability Guidelines expertise – check them out to gain a competitive edge!
