The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Remote Sensing and Data Acquisition interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Remote Sensing and Data Acquisition Interview

Q 1. Explain the difference between active and passive remote sensing.

The core difference between active and passive remote sensing lies in where the measured energy comes from. Passive systems, such as cameras, measure energy that is reflected or emitted by the Earth’s surface. Think of it like taking a photograph: you’re relying on existing light. Examples include Landsat satellites and aerial photography. They’re well suited to mapping vegetation health (using reflected sunlight) or monitoring thermal conditions (using emitted infrared radiation). Active systems, on the other hand, provide their own source of energy and then measure the energy that is backscattered. This is like shining a flashlight at a wall and measuring the light bouncing back. Radar and LiDAR are prime examples. Radar can penetrate clouds and, at longer wavelengths, foliage, making it invaluable for mapping terrain under adverse weather conditions. LiDAR uses laser pulses to create highly accurate 3D models of the Earth’s surface, useful in urban planning and geological surveys.

Q 2. Describe various spectral bands used in remote sensing and their applications.

Remote sensing utilizes various spectral bands, each revealing different characteristics of the Earth’s surface. These bands are portions of the electromagnetic spectrum.

- Visible (0.4-0.7 µm): This is the range our eyes see. Red, green, and blue bands are commonly used to create color images. Applications include land cover classification and urban mapping.

- Near-Infrared (NIR, 0.7-1.3 µm): Highly sensitive to vegetation; healthy plants reflect strongly in this band. Used for vegetation indices like NDVI (Normalized Difference Vegetation Index) to assess plant health and biomass.

- Shortwave Infrared (SWIR, 1.3-3 µm): Useful for detecting moisture content in soil and vegetation, and for distinguishing between mineral types.

- Thermal Infrared (TIR, 3-15 µm): Measures thermal radiation emitted by the Earth’s surface. Used for monitoring temperature variations, identifying heat sources, and monitoring volcanic activity.

- Microwave (longer wavelengths): Can penetrate clouds and even some ground cover. Used in radar systems for mapping topography, monitoring sea ice, and even observing subsurface features.

The specific bands used depend on the application. For example, a study on vegetation health would heavily utilize the visible and near-infrared bands, whereas a geological survey might focus on SWIR and microwave bands.
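As a small illustration of the NDVI mentioned above, the sketch below computes NDVI = (NIR - Red) / (NIR + Red) for a few pixels. The reflectance values are made up for illustration, and plain Python is used so the example runs without dependencies; real workflows would typically apply the same formula to whole raster arrays.

```python
def ndvi(nir, red):
    """Return NDVI for one pixel; 0.0 when both bands are zero (a convention assumed here)."""
    total = nir + red
    if total == 0:
        return 0.0
    return (nir - red) / total


# Hypothetical surface reflectance values (0-1) for three pixels.
pixels = {
    "dense vegetation": {"nir": 0.50, "red": 0.05},
    "bare soil": {"nir": 0.30, "red": 0.25},
    "water": {"nir": 0.02, "red": 0.04},
}

ndvi_values = {name: ndvi(p["nir"], p["red"]) for name, p in pixels.items()}
for name, value in ndvi_values.items():
    print(f"{name}: NDVI = {value:.2f}")
```

Healthy vegetation lands close to +1 because it reflects strongly in the NIR and absorbs red light, while water tends to come out negative.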

Q 3. What are the advantages and disadvantages of different sensor platforms (e.g., satellite, airborne, UAV)?

Different sensor platforms—satellites, airborne, and UAVs (Unmanned Aerial Vehicles)—each offer unique advantages and disadvantages:

- Satellites:

- Advantages: Large area coverage, repetitive coverage for temporal analysis, global perspective.

- Disadvantages: High cost, spatial resolution limited by the sensor, acquisition times dictated by the orbit and revisit schedule rather than chosen by the user, and atmospheric effects (including cloud cover) can be significant.

- Airborne:

- Advantages: Higher spatial resolution than satellites, better control over data acquisition, flexible flight paths.

- Disadvantages: More expensive than UAVs, limited area coverage per flight, weather dependent.

- UAVs:

- Advantages: Very high spatial resolution, low cost, high maneuverability, rapid data acquisition.

- Disadvantages: Limited flight time, restricted by regulations, susceptible to weather, and generally covers a smaller area than airborne or satellite.

The best platform depends on the project’s scale, budget, and required spatial resolution. For example, monitoring deforestation over a large region would benefit from satellite data, while a detailed survey of a small agricultural field might utilize a UAV.

Q 4. Explain the concept of spatial resolution and its impact on data analysis.

Spatial resolution refers to the size of the smallest discernible detail in a remotely sensed image. It’s often expressed as the ground sample distance (GSD) – the size of a single pixel on the ground. A high spatial resolution image shows finer details, while a low spatial resolution image appears blurry.

The impact on data analysis is significant. High-resolution imagery allows for the identification of smaller features, leading to more accurate classification and analysis. For example, a high-resolution image might differentiate individual trees in a forest, while a low-resolution image would only show a general forested area. The choice of spatial resolution involves a trade-off, however: high-resolution imagery comes at a higher cost, produces far larger files, and requires greater processing power.

Imagine looking at a photo of a city: a high-resolution image shows individual cars, houses and people, allowing you to discern fine details, while a low-resolution image only shows buildings and roads, obscuring smaller objects.
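To make the cost of finer resolution concrete, here is a rough sketch that counts how many pixels are needed to cover the same area at different ground sample distances. The study area and GSD values are hypothetical, and the function name is just for illustration.

```python
def pixels_required(area_km2, gsd_m):
    """Approximate number of pixels needed to cover an area at a given GSD."""
    area_m2 = area_km2 * 1_000_000
    return area_m2 / (gsd_m ** 2)


area_km2 = 100  # hypothetical study area
for gsd_m in (30.0, 10.0, 0.5):
    count = pixels_required(area_km2, gsd_m)
    print(f"GSD {gsd_m} m -> about {count:,.0f} pixels per band")
```

Because the pixel count grows with the inverse square of the GSD, halving the pixel size quadruples the data volume, which is why storage and processing needs climb so quickly.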

Q 5. How do you handle atmospheric effects in remote sensing data?

Atmospheric effects, such as scattering and absorption by gases and aerosols, significantly degrade the quality of remote sensing data. These effects can lead to inaccurate measurements and misinterpretations. Several techniques are used to minimize their impact:

- Atmospheric Correction Models: These models use atmospheric parameters (e.g., water vapor, aerosol content) to estimate and remove atmospheric effects. Popular models include MODTRAN and 6S.

- Dark Object Subtraction: This simple method assumes that the darkest pixels in an image (for example, deep clear water or heavy shadow) should have near-zero reflectance, so any signal recorded there is attributed to atmospheric scattering. This dark value is then subtracted from every pixel in the band to remove some of the scattering effect.

- Empirical Line Methods: These methods establish a relationship between the radiance measured by the sensor and the surface reflectance. They use reference targets (areas with known reflectance) to calibrate the data.

The choice of technique depends on the sensor, atmospheric conditions, and the level of accuracy required. Proper atmospheric correction is crucial for accurate interpretation and quantitative analysis of remote sensing data.
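Dark object subtraction is simple enough to sketch in a few lines. The example below is a minimal version that uses the band minimum as the dark value; the digital numbers are invented, and practical implementations often pick the dark value from a histogram percentile instead of the absolute minimum.

```python
def dark_object_subtraction(band):
    """Subtract the minimum value of a band from every pixel in that band."""
    dark_value = min(min(row) for row in band)
    corrected = [[value - dark_value for value in row] for row in band]
    return corrected, dark_value


# Hypothetical digital numbers for a tiny 3x3 blue band.
blue_band = [
    [62, 70, 85],
    [58, 64, 90],
    [55, 61, 77],
]

corrected_band, haze = dark_object_subtraction(blue_band)
print("Estimated haze value:", haze)
for row in corrected_band:
    print(row)
```

The correction is applied band by band, since scattering is stronger at shorter wavelengths and each band therefore has its own dark value.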

Q 6. Describe different types of image preprocessing techniques.

Image preprocessing is a critical step in remote sensing data analysis. It involves various techniques to enhance image quality and prepare the data for further analysis. Common techniques include:

- Radiometric Correction: Addresses variations in sensor response and illumination. Techniques include sensor calibration, destriping to remove detector-to-detector differences, and normalization to reduce brightness inconsistencies across the image or between acquisition dates.

- Geometric Correction: Addresses distortions in the image geometry due to sensor orientation, Earth’s curvature, and relief displacement. This involves techniques like orthorectification, to create geometrically accurate images.

- Atmospheric Correction: As discussed earlier, this addresses atmospheric scattering and absorption to improve accuracy of spectral measurements.

- Filtering: Techniques like spatial filtering (e.g., smoothing and sharpening filters) can improve image quality by reducing noise or enhancing edges.

- Image Enhancement: Improves visual interpretation and analysis by increasing contrast (for example, through histogram equalization or contrast stretching), sharpening edges, or reducing noise.

The specific preprocessing techniques applied depend on the data quality, the type of analysis to be performed, and the desired level of accuracy. A well-preprocessed dataset is foundational for successful remote sensing applications.

Q 7. What are common geometric corrections applied to remote sensing imagery?

Geometric corrections are applied to remote sensing imagery to rectify geometric distortions that arise from various factors. These corrections aim to create geometrically accurate images where the spatial relationships between features are preserved. Common geometric corrections include:

- Orthorectification: This is the most common and sophisticated technique, removing effects of terrain relief and transforming the image to a map projection. It requires a Digital Elevation Model (DEM) representing the terrain’s elevation.

- Georeferencing: Aligns the image to a known coordinate system using ground control points (GCPs). GCPs are points with known coordinates in both the image and a map or other reference data. The software then uses these points to transform the image’s coordinates.

- Rubber Sheeting: A simpler approach than orthorectification, it uses GCPs to warp the image, removing some geometric distortions. Less accurate than orthorectification for significantly rugged terrain.

- Polynomial Transformation: Uses mathematical functions to model and correct geometric distortions. This method is useful when the distortions are relatively small.

The choice of geometric correction technique depends on the type and extent of distortions present in the imagery, the accuracy requirements, and the availability of ancillary data such as DEMs. Accurate geometric correction is fundamental for tasks like map creation, spatial analysis, and change detection.
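The georeferencing and polynomial transformation ideas above can be sketched with a first-order (affine) fit. The example below solves for the six coefficients exactly from three GCPs using Cramer's rule; the GCP coordinates are hypothetical, and with more than three GCPs a least-squares fit would normally be used instead.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def solve3(matrix, rhs):
    """Solve a 3x3 linear system with Cramer's rule."""
    d = det3(matrix)
    if d == 0:
        raise ValueError("GCPs are collinear; cannot fit a transform")
    solution = []
    for k in range(3):
        replaced = [row[:] for row in matrix]
        for i in range(3):
            replaced[i][k] = rhs[i]
        solution.append(det3(replaced) / d)
    return solution


def fit_affine(gcps):
    """Fit X = a0 + a1*col + a2*row and Y = b0 + b1*col + b2*row from 3 GCPs."""
    design = [[1.0, col, row] for (col, row, _, _) in gcps]
    a = solve3(design, [x for (_, _, x, _) in gcps])
    b = solve3(design, [y for (_, _, _, y) in gcps])
    return a, b


def pixel_to_map(col, row, a, b):
    """Apply the fitted coefficients to one pixel position."""
    return a[0] + a[1] * col + a[2] * row, b[0] + b[1] * col + b[2] * row


# Hypothetical GCPs: (col, row, easting, northing) for a north-up image with 10 m pixels.
gcps = [
    (0, 0, 500000.0, 4100000.0),
    (100, 0, 501000.0, 4100000.0),
    (0, 100, 500000.0, 4099000.0),
]
a, b = fit_affine(gcps)
print(pixel_to_map(50, 50, a, b))
```

For these GCPs the fit recovers a 10 m pixel size, with northing decreasing as the row index increases. Higher-order polynomials add terms such as col*row and col², at the cost of needing more GCPs.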

Q 8. Explain the process of orthorectification.

Orthorectification is the process of geometrically correcting a remotely sensed image to remove distortions caused by terrain relief and sensor geometry. Imagine taking a picture of a mountain range from an airplane – the slopes appear distorted. Orthorectification ‘flattens’ the image, making it geometrically accurate and map-projectable.

The process involves several steps:

- Sensor Model Acquisition: Defining the exact position and orientation of the sensor during image acquisition. This often involves using metadata embedded in the image file.

- Digital Elevation Model (DEM) Acquisition: Obtaining a DEM, which provides elevation data for the area covered by the image. This is crucial for correcting relief displacement.

- Geometric Correction: Applying mathematical transformations to the image pixels based on the sensor model and DEM. This corrects for distortions caused by terrain relief, sensor perspective, and Earth curvature.

- Resampling: Interpolating pixel values to create a new image with the desired spatial resolution and geometry. Common resampling techniques include nearest neighbor, bilinear, and cubic convolution.

The result is an orthorectified image where distances and areas are accurately represented, making it suitable for precise measurements and integration with GIS data. For example, in precision agriculture, an orthorectified image allows for accurate measurement of field areas and crop yields.
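The resampling step is where the choice of technique becomes visible in pixel values. The sketch below compares nearest neighbor and bilinear interpolation on a tiny hypothetical grid, where (x, y) is a fractional column and row position in the source image.

```python
import math


def nearest_neighbor(grid, x, y):
    """Value of the pixel whose center is closest to fractional position (x, y)."""
    return grid[int(round(y))][int(round(x))]


def bilinear(grid, x, y):
    """Distance-weighted average of the four pixels surrounding (x, y)."""
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1 = min(x0 + 1, len(grid[0]) - 1)
    y1 = min(y0 + 1, len(grid) - 1)
    fx, fy = x - x0, y - y0
    top = grid[y0][x0] * (1 - fx) + grid[y0][x1] * fx
    bottom = grid[y1][x0] * (1 - fx) + grid[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


# Hypothetical 2x2 source grid.
grid = [
    [10, 20],
    [30, 40],
]
print(nearest_neighbor(grid, 0.25, 0.25))
print(bilinear(grid, 0.25, 0.25))
```

Nearest neighbor only ever returns values that exist in the source image, which is why it is usually preferred for classified or categorical rasters, while bilinear produces smoother results for continuous data such as elevation.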

Q 9. What is radiometric calibration and why is it important?

Radiometric calibration is the process of converting the digital numbers (DN) recorded by a sensor into physically meaningful units, typically radiance or reflectance. Think of it like calibrating a kitchen scale to ensure accurate weight measurements. Without calibration, the raw sensor data is just a set of numbers without a clear physical interpretation.

It’s crucial because it allows for consistent and comparable analysis across different images and sensors. If we don’t calibrate, we can’t accurately compare the ‘brightness’ of a feature in images taken at different times or with different sensors. For example, comparing the chlorophyll content in vegetation using satellite imagery requires radiometric calibration to ensure accurate reflectance values are used in vegetation indices calculations.

Calibration methods vary depending on the sensor, but often involve using known reference targets (e.g., calibration sites with known reflectance) or atmospheric correction models. Incorrect calibration can lead to inaccurate results and misinterpretations in various applications, from environmental monitoring to urban planning.
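Many sensors publish linear rescaling coefficients in their metadata, so the DN conversion often reduces to a gain and an offset. The sketch below follows that general form, similar to what Landsat Level-1 products use; the coefficient values are hypothetical, and the parameter names are chosen here for illustration.

```python
import math


def dn_to_radiance(dn, gain, offset):
    """Linear rescaling of a digital number to at-sensor radiance."""
    return gain * dn + offset


def dn_to_toa_reflectance(dn, refl_gain, refl_offset, sun_elevation_deg):
    """Rescale a DN to top-of-atmosphere reflectance and correct for sun angle."""
    uncorrected = refl_gain * dn + refl_offset
    return uncorrected / math.sin(math.radians(sun_elevation_deg))


# Hypothetical coefficients; real values come from the scene's metadata file.
dn = 12000
radiance = dn_to_radiance(dn, gain=0.012, offset=-60.0)
reflectance = dn_to_toa_reflectance(
    dn, refl_gain=2.0e-5, refl_offset=-0.1, sun_elevation_deg=30.0
)
print(f"radiance = {radiance:.1f}, TOA reflectance = {reflectance:.3f}")
```

Dividing by the sine of the sun elevation normalizes for illumination geometry, which is part of what makes images from different dates comparable.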

Q 10. Describe different image classification techniques (e.g., supervised, unsupervised).

Image classification assigns each pixel in an image to a specific thematic class (e.g., water, vegetation, urban). There are various techniques, with supervised and unsupervised methods being the most common.

- Supervised Classification: This method requires ‘training’ the classifier by identifying samples of known classes within the image. The classifier then uses these samples to learn the spectral characteristics of each class and assign the rest of the pixels accordingly. Algorithms include Maximum Likelihood, Support Vector Machines (SVM), and Random Forest.

- Unsupervised Classification: This approach doesn’t require prior knowledge of classes. The algorithm automatically groups pixels based on their spectral similarity. K-means clustering is a widely used unsupervised technique. Think of it as automatically grouping similar-colored objects together without knowing beforehand what those objects are.

The choice between supervised and unsupervised classification depends on the available data and the project objectives. Supervised classification is generally more accurate but requires more manual effort, while unsupervised classification is faster but may require post-classification interpretation to determine class meaning.
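To show what the unsupervised grouping looks like in practice, here is a bare-bones k-means sketch on two-band pixels. The reflectance pairs are invented, and the starting centers are fixed so the run is repeatable; library implementations typically use random or smarter initialization.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two spectral vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(pixels, centers, iterations=10):
    """Basic k-means: assign pixels to the nearest center, then update centers."""
    labels = []
    for _ in range(iterations):
        labels = [
            min(range(len(centers)), key=lambda k: squared_distance(p, centers[k]))
            for p in pixels
        ]
        new_centers = []
        for k in range(len(centers)):
            members = [p for p, label in zip(pixels, labels) if label == k]
            if members:
                new_centers.append(tuple(sum(v) / len(members) for v in zip(*members)))
            else:
                new_centers.append(centers[k])  # keep the center of an empty cluster
        if new_centers == centers:
            break  # assignments have stabilized
        centers = new_centers
    return labels, centers


# Hypothetical (red, NIR) reflectance pairs: three water-like, three vegetation-like.
pixels = [(0.04, 0.02), (0.05, 0.03), (0.03, 0.02),
          (0.06, 0.45), (0.05, 0.50), (0.07, 0.48)]
initial_centers = [pixels[0], pixels[3]]
labels, centers = kmeans(pixels, initial_centers)
print(labels)
print(centers)
```

The algorithm only returns cluster numbers; deciding that one cluster is "water" and the other "vegetation" is the post-classification interpretation step described above.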

Q 11. Explain the concept of accuracy assessment in remote sensing.

Accuracy assessment evaluates the correctness of a classification map or other remote sensing product. It’s essential to quantify the reliability and trustworthiness of the results. We want to know how well our classification reflects reality on the ground.

This typically involves comparing the classified image to a reference data set, often obtained through ground truthing (field surveys) or high-resolution imagery. Common metrics include:

- Overall Accuracy: The percentage of correctly classified pixels.

- Producer’s Accuracy: The probability that a pixel of a given class is correctly classified.

- User’s Accuracy: The probability that a pixel classified as a given class actually belongs to that class.

- Kappa Coefficient: A measure of agreement between the classified image and the reference data, accounting for chance agreement.

A high accuracy assessment score indicates a reliable classification, providing confidence in the use of the results for decision-making in areas such as land cover mapping or disaster response.
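All four metrics above come from the same confusion matrix, which the following sketch makes explicit. The counts are hypothetical, and the layout assumed here is rows for reference (ground truth) classes and columns for classified classes; some software uses the transposed layout, which swaps producer's and user's accuracy.

```python
def accuracy_metrics(matrix):
    """Metrics from a confusion matrix with rows = reference, columns = classified."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    diagonal = sum(matrix[i][i] for i in range(n))
    row_totals = [sum(matrix[i]) for i in range(n)]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]

    overall = diagonal / total
    producers = [matrix[i][i] / row_totals[i] for i in range(n)]
    users = [matrix[j][j] / col_totals[j] for j in range(n)]
    # Agreement expected by chance, from the marginal totals.
    expected = sum(row_totals[i] * col_totals[i] for i in range(n)) / total ** 2
    kappa = (overall - expected) / (1 - expected)
    return overall, producers, users, kappa


# Hypothetical counts for classes [water, forest, urban].
confusion = [
    [45, 3, 2],
    [6, 40, 4],
    [1, 7, 42],
]
overall, producers, users, kappa = accuracy_metrics(confusion)
print(f"overall = {overall:.3f}, kappa = {kappa:.3f}")
print("producer's:", [round(p, 3) for p in producers])
print("user's:", [round(u, 3) for u in users])
```

Producer's accuracy divides by the reference totals (how much of the real class was found), while user's accuracy divides by the classified totals (how much of the mapped class is real).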

Q 12. What is the difference between pixel-based and object-based image analysis?

Pixel-based and object-based image analysis (OBIA) are two different approaches to processing remotely sensed data. Pixel-based analysis treats each pixel as an independent unit, while OBIA considers groups of pixels (objects) with similar characteristics.

- Pixel-based analysis: This traditional method classifies individual pixels based on their spectral values. It’s computationally efficient but can be sensitive to noise and mixed pixels (pixels containing multiple land cover types).

- Object-based image analysis (OBIA): This more advanced technique segments the image into meaningful objects (e.g., buildings, trees) before classification. This allows for the incorporation of spatial context and features, leading to improved accuracy, especially in heterogeneous landscapes. OBIA uses segmentation algorithms to group pixels based on spectral and spatial similarity.

Imagine analyzing an aerial image of a city. Pixel-based analysis might struggle to distinguish between a building and a road if their spectral values are similar. OBIA, however, can identify them as distinct objects based on their shape, size, and texture, improving classification accuracy.

Q 13. How do you handle cloud cover in satellite imagery?

Cloud cover is a major challenge in satellite imagery analysis, as clouds obscure the Earth’s surface, preventing the acquisition of clear observations. Several strategies exist to handle this:

- Image Selection: Choosing cloud-free images from a series of acquisitions over time. This might involve selecting a specific time of year with typically less cloud cover or using multiple images to fill in cloud gaps.

- Cloud Masking: Identifying and removing cloud pixels from the image. This often involves thresholding algorithms on specific spectral bands sensitive to cloud reflectance.

- Cloud Filling/Interpolation: Estimating the values of cloud-covered pixels using information from neighboring cloud-free pixels. This can involve spatial interpolation techniques or using data from other images.

- Cloud Removal Techniques: Sophisticated algorithms, such as those using machine learning, are now available for advanced cloud removal from images.

The best strategy depends on the application and the amount of cloud cover present. For instance, for applications requiring a long time series of data, cloud filling might be more appropriate. For projects where high accuracy is paramount and cloud-free images are readily available, selecting a clear image is often preferred.
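Cloud masking and gap filling can be sketched together. The example below is a deliberately simplified version that thresholds a single band and fills masked pixels from a second acquisition; the threshold and reflectance values are assumptions for illustration, and operational cloud masks combine several bands and tests.

```python
CLOUD_THRESHOLD = 0.30  # assumed blue-band reflectance cutoff, for illustration only


def cloud_mask(blue_band, threshold=CLOUD_THRESHOLD):
    """True where a pixel is brighter than the threshold and treated as cloud."""
    return [[value > threshold for value in row] for row in blue_band]


def fill_from_other_date(image, mask, other_image, other_mask):
    """Replace masked pixels with clear pixels from a second date; None if both are cloudy."""
    filled = []
    for r, row in enumerate(image):
        new_row = []
        for c, value in enumerate(row):
            if not mask[r][c]:
                new_row.append(value)
            elif not other_mask[r][c]:
                new_row.append(other_image[r][c])
            else:
                new_row.append(None)
        filled.append(new_row)
    return filled


# Hypothetical blue-band reflectance for the same 2x2 area on two dates.
date1 = [[0.08, 0.55], [0.09, 0.60]]
date2 = [[0.07, 0.10], [0.45, 0.62]]
mask1, mask2 = cloud_mask(date1), cloud_mask(date2)
composite = fill_from_other_date(date1, mask1, date2, mask2)
print(composite)
```

The bottom-right pixel is cloudy on both dates, so it stays empty; adding more dates to the stack is what gradually closes such gaps.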

Q 14. Describe your experience with LiDAR data acquisition and processing.

I have extensive experience in LiDAR data acquisition and processing, spanning various applications from forestry to urban planning. My experience includes:

- Data Acquisition: Planning and executing LiDAR surveys using various platforms, including airborne and terrestrial systems. This involved selecting appropriate sensor settings (e.g., pulse density, flight altitude) based on project requirements and terrain characteristics. I’ve worked with different LiDAR sensor manufacturers and platforms to ensure best practices and data quality.

- Data Processing: I’m proficient in processing LiDAR point clouds using software such as LAStools and ArcGIS. This includes tasks such as data filtering (removing noise and outliers), georeferencing, classification (distinguishing ground points from objects), and generating various derived products such as Digital Terrain Models (DTMs), Digital Surface Models (DSMs), and canopy height models (CHMs).

- Data Analysis and Application: I have utilized processed LiDAR data for various analyses including volumetric calculations, change detection, and feature extraction. For example, I have used LiDAR data to create detailed 3D models of urban areas for city planning projects and to assess forest biomass and structural diversity in ecological studies.

A recent project involved generating high-resolution DTMs from airborne LiDAR data for flood modeling. This required careful processing and quality control to ensure accurate elevation data were used for flood inundation simulations. This demonstrated not only my technical proficiency but also my ability to apply LiDAR data to solve real-world problems.
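One of the derived products mentioned above, the canopy height model, is simply the difference between the surface and terrain models. A minimal sketch, using hypothetical elevations on a tiny grid:

```python
def canopy_height_model(dsm, dtm):
    """CHM = DSM - DTM per cell, with small negative artifacts clamped to zero."""
    return [
        [max(surface - ground, 0.0) for surface, ground in zip(dsm_row, dtm_row)]
        for dsm_row, dtm_row in zip(dsm, dtm)
    ]


# Hypothetical elevations in meters on a 2x3 grid.
dsm = [[125.0, 131.5, 120.2], [140.0, 122.0, 119.8]]
dtm = [[120.0, 120.5, 120.3], [121.0, 121.5, 119.8]]
chm = canopy_height_model(dsm, dtm)
for row in chm:
    print(row)
```

Clamping at zero is a choice made here to handle cells where interpolation noise puts the surface slightly below the ground; it is worth being ready to explain how you treat such artifacts.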

Q 15. Explain different data formats used in remote sensing (e.g., GeoTIFF, HDF).

Remote sensing data comes in various formats, each designed to store specific types of information efficiently. Let’s explore some key formats:

- GeoTIFF (.tif, .tiff): This is a widely used format that combines the advantages of the Tagged Image File Format (TIFF) with geospatial metadata. This metadata includes crucial information about the image’s geographic location, projection, and resolution, making it directly usable in GIS software. Think of it as a highly organized photo album where each photo’s location is precisely marked on a map. It’s great for storing raster data like satellite imagery and aerial photographs.

- HDF (Hierarchical Data Format): HDF is a versatile format capable of storing a wide variety of data types, including raster and vector data, along with associated metadata. It’s especially useful for handling large, complex datasets like those from satellite missions with multiple bands or instruments. Imagine it as a sophisticated filing cabinet that can hold different types of documents (data) and keep them organized by subject (metadata). It is very common for products from sensors like MODIS.

- ENVI (.dat, with a .hdr header): The ENVI format originates from the ENVI software package and is commonly used for storing hyperspectral imagery. It pairs a flat binary data file with a plain-text header describing the dimensions, data type, and band layout, which lets it handle the large number of spectral bands found in such imagery efficiently. Think of this as a specialized database for highly detailed images.

- NetCDF (.nc): NetCDF (Network Common Data Form) is a self-describing format designed for sharing and archiving scientific data. It’s particularly suitable for handling climate data, oceanographic data, and other large multi-dimensional datasets. It’s very robust for collaborative work.

The choice of data format often depends on the specific application, the size of the dataset, and the software used for processing.

Q 16. What GIS software are you proficient in?

I’m proficient in several GIS software packages, including ArcGIS Pro, QGIS, and ERDAS IMAGINE. My experience spans from basic data manipulation and visualization to advanced geoprocessing tasks using spatial analysis tools. For example, in ArcGIS Pro, I regularly use tools for raster and vector data manipulation such as clipping, mosaicking, and overlay analysis. In QGIS, I utilize its open-source capabilities for processing large datasets and customizing workflows. My experience with ERDAS IMAGINE involves primarily working with high-resolution satellite imagery and orthorectification.

Q 17. Describe your experience with programming languages used in geospatial analysis (e.g., Python, R).

Python and R are essential tools in my geospatial analysis workflow. In Python, I extensively use libraries like GDAL/OGR for data I/O, NumPy for numerical computation, Scikit-learn for machine learning algorithms, and Matplotlib/Seaborn for data visualization. A common task is using GDAL to read and process raster data, then using NumPy to perform calculations on the pixel values. For example: from osgeo import gdal; ds = gdal.Open('image.tif'); band = ds.GetRasterBand(1); array = band.ReadAsArray(). This snippet reads the first band of a GeoTIFF into a NumPy array. In R, I utilize packages like ‘raster’, ‘sp’, and ‘rgdal’ for similar tasks, leveraging R’s statistical capabilities for analyzing spatial patterns and modeling. I often combine these languages, using Python for data pre-processing and R for statistical modelling.

Q 18. How do you ensure the quality and accuracy of your data?

Data quality and accuracy are paramount in remote sensing. My approach involves a multi-step process:

- Pre-processing: This includes atmospheric correction to remove atmospheric effects, geometric correction to rectify distortions, and radiometric calibration to ensure accurate measurements. This step is crucial to reduce noise and enhance accuracy.

- Data Validation: I compare my processed data with ground truth data (e.g., field measurements, GPS data) to assess accuracy. Statistical measures like root mean square error (RMSE) help quantify the discrepancies.

- Quality Control Checks: Regular checks during processing, including visual inspections of the data and metadata analysis, help identify and rectify potential errors. I pay particular attention to metadata to ensure proper projection and coordinate systems.

- Uncertainty Assessment: Acknowledging and quantifying uncertainty associated with the data is crucial. This includes uncertainty stemming from sensor limitations, atmospheric conditions, and processing methods. I communicate uncertainty transparently in my results.

This rigorous approach ensures the reliability and credibility of my analyses.
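The RMSE used in the data validation step is straightforward to compute. A minimal sketch, with hypothetical model elevations compared against GPS check points:

```python
import math


def rmse(predicted, observed):
    """Root mean square error between paired predicted and observed values."""
    if len(predicted) != len(observed):
        raise ValueError("inputs must have the same length")
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical elevations (m): model output versus GPS check points.
model_elevation = [102.0, 98.5, 110.0, 105.5]
gps_elevation = [101.0, 99.5, 108.0, 105.5]
error = rmse(model_elevation, gps_elevation)
print(f"RMSE = {error:.3f} m")
```

Because the errors are squared before averaging, a single large discrepancy affects RMSE more than several small ones, so it is worth inspecting the individual residuals as well.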

Q 19. Describe a project where you used remote sensing data to solve a problem.

In a recent project, I utilized Landsat 8 imagery to map deforestation in a rainforest region. The goal was to assess the extent and rate of deforestation over a five-year period. I used image differencing techniques to identify areas of change, and supervised classification to classify forest and non-forest areas. Post-classification accuracy assessment was conducted using ground truth data collected from field surveys and high-resolution imagery. The results provided valuable insights for conservation efforts and land management decisions. My work helped quantify the precise area impacted by deforestation, enabling more efficient resource allocation for reforestation initiatives.

Q 20. How do you handle large datasets in remote sensing?

Handling large remote sensing datasets requires strategic approaches:

- Cloud Computing: Platforms like Google Earth Engine or Amazon Web Services provide scalable computing resources to process massive datasets efficiently. These services allow parallel processing which dramatically speeds up analysis.

- Data Subsetting: Processing only the relevant portion of the data reduces computational demands and improves efficiency. Instead of processing a terabyte of data at once, I focus on smaller areas relevant to my analysis.

- Data Compression: Lossless compression techniques minimize storage space and transfer times while preserving data integrity. This is essential when working with massive files.

- Optimized Algorithms: Efficient algorithms and data structures are key to maximizing performance. Choosing the right algorithms for the task reduces processing time significantly.

Combining these methods ensures that analysis remains feasible and timely, even with extremely large datasets.
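Subsetting is often implemented by walking over a raster in fixed-size tiles so that only one window is in memory at a time. The sketch below only generates the window offsets and sizes; the raster dimensions are hypothetical, and the actual reading would be done by whatever raster library is in use.

```python
def tile_windows(width, height, tile_size):
    """Yield (col_off, row_off, tile_width, tile_height) windows covering a raster."""
    for row_off in range(0, height, tile_size):
        for col_off in range(0, width, tile_size):
            yield (
                col_off,
                row_off,
                min(tile_size, width - col_off),
                min(tile_size, height - row_off),
            )


# Hypothetical raster of 2500 x 1200 pixels processed in 1024-pixel tiles.
windows = list(tile_windows(2500, 1200, 1024))
for window in windows:
    print(window)
```

Edge tiles are clipped to the raster bounds, so the windows cover every pixel exactly once. Independent windows like these are also what makes parallel processing straightforward.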

Q 21. Explain your understanding of different coordinate reference systems (CRS).

Coordinate Reference Systems (CRS) define how geographic coordinates are represented on a map. Understanding CRS is fundamental to remote sensing and GIS. A CRS specifies a datum (a reference ellipsoid approximating the Earth’s shape, together with how it is anchored to the Earth), units of measurement, and, for projected systems, a projection (a method for representing a 3D surface on a 2D plane). Different CRS are suitable for different geographic areas and applications. For example:

- Geographic Coordinate Systems (GCS): These use latitude and longitude to define locations on the Earth’s surface. WGS 84 is a widely used GCS.

- Projected Coordinate Systems (PCS): These transform latitude and longitude to planar coordinates. Common projections include UTM (Universal Transverse Mercator) and Albers Equal-Area Conic. Each projection has distortions, and the choice depends on the application and area of interest. For example, UTM works best for areas that fit within a single 6°-wide zone, which favors regions that are narrow east to west, while Albers is better for large mid-latitude areas with a wide east-west extent where preserving area matters.

Incorrect CRS handling can lead to inaccurate spatial analysis and overlay errors. A common error is mismatched CRS between different datasets, resulting in spatial misalignment. Careful attention to CRS throughout the workflow is crucial for accurate results.
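A common practical question is which UTM zone a dataset belongs in. The sketch below computes the standard zone number from longitude and the matching WGS 84 / UTM EPSG code; it ignores the special zone exceptions around Norway and Svalbard, and the function names are chosen here for illustration.

```python
import math


def utm_zone(longitude_deg):
    """Standard 6-degree UTM zone number (1-60) for a longitude in [-180, 180]."""
    zone = int(math.floor((longitude_deg + 180.0) / 6.0)) + 1
    return min(zone, 60)  # longitude 180 is assigned to zone 60 in this sketch


def wgs84_utm_epsg(longitude_deg, latitude_deg):
    """EPSG code of the WGS 84 / UTM zone: 326xx in the north, 327xx in the south."""
    base = 32600 if latitude_deg >= 0 else 32700
    return base + utm_zone(longitude_deg)


# Approximate coordinates for two cities.
print("Paris:", utm_zone(2.35), wgs84_utm_epsg(2.35, 48.86))
print("Sydney:", utm_zone(151.21), wgs84_utm_epsg(151.21, -33.87))
```

Checking that two datasets report the same EPSG code before overlaying them is a quick way to catch the CRS mismatch problem described above.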

Q 22. What are the ethical considerations involved in using remote sensing data?

Ethical considerations in using remote sensing data are multifaceted and crucial for responsible application. They revolve around privacy, security, and the potential for misuse. For instance, high-resolution imagery could inadvertently capture private property details, raising concerns about individual rights. Furthermore, the data could be manipulated or used for malicious purposes, such as surveillance or military applications. Responsible data handling necessitates adhering to strict protocols, obtaining informed consent where appropriate, and using data anonymization techniques to safeguard privacy. Data provenance and transparency regarding data usage are equally vital for ethical considerations. Think of it like this: we have a powerful tool; we must ensure its use is ethically sound.

- Privacy: Protecting individuals’ identities and preventing unauthorized access to sensitive information.

- Security: Safeguarding data from theft, unauthorized access, and alteration.

- Transparency: Openly disclosing the source, processing methods, and intended use of the data.

- Informed Consent: Obtaining permission from individuals whose data is being collected.

- Data Anonymization: Removing or modifying identifying information to protect privacy.

Q 23. How do you stay up-to-date with the latest advancements in remote sensing technology?

Staying current in the rapidly evolving field of remote sensing requires a multi-pronged approach. I actively participate in professional organizations like the IEEE Geoscience and Remote Sensing Society and attend conferences such as IEEE IGARSS. This provides access to the latest research and networking opportunities. I subscribe to leading journals such as Remote Sensing of Environment and IEEE Transactions on Geoscience and Remote Sensing, regularly reviewing articles to stay abreast of new techniques and algorithms. Furthermore, I utilize online resources, including NASA’s Earthdata website and ESA’s Copernicus Data Space Ecosystem (the successor to the Copernicus Open Access Hub), to access new datasets and explore new applications. Finally, I leverage online learning platforms like Coursera and edX to broaden my skillset in specialized areas, such as deep learning for remote sensing. Continuous learning is paramount in a field as dynamic as remote sensing.

Q 24. Describe your experience with different data sources (e.g., Landsat, Sentinel, Planet).

My experience spans a variety of data sources, each with its unique strengths and limitations. I’ve extensively worked with Landsat data, leveraging its long-term archive for time-series analysis of land cover change. For example, I used Landsat to monitor deforestation rates in the Amazon rainforest over two decades. Sentinel data, particularly Sentinel-2 with its high spatial resolution, has been instrumental in urban planning projects, aiding in detailed land-use classification and change detection. I’ve also incorporated PlanetScope imagery, appreciating its high frequency and near real-time capabilities for monitoring dynamic events like wildfire spread or rapid urbanization. The selection of the dataset is always based on the specific application’s needs, considering factors such as spatial and temporal resolution, spectral range, and data availability.

Q 25. Explain your understanding of spatial statistics.

Spatial statistics are essential for analyzing geographically referenced data, such as that obtained from remote sensing. They allow us to move beyond simple descriptive statistics and explore spatial patterns, relationships, and dependencies. I’m proficient in techniques like spatial autocorrelation analysis (using Moran’s I or Geary’s c), which helps detect spatial clustering or dispersion of features. Kriging is another technique I utilize for spatial interpolation, estimating values at unsampled locations based on the spatial correlation of neighboring points. Geostatistical techniques such as variogram analysis are fundamental in understanding the spatial structure of the data before interpolation. In a practical sense, I used spatial regression to model the relationship between vegetation indices derived from Landsat imagery and environmental variables like rainfall and soil type, allowing for more accurate prediction of biomass across a landscape.
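To illustrate the spatial autocorrelation idea, here is a minimal sketch of global Moran’s I for cells arranged in a line, using binary weights where only adjacent cells count as neighbors. The values and the weighting scheme are assumptions for illustration; real analyses use 2D neighborhoods and usually row-standardized weights.

```python
def morans_i(values, weights):
    """Global Moran's I for values with a square spatial weights matrix."""
    n = len(values)
    mean = sum(values) / n
    deviations = [v - mean for v in values]
    weight_sum = sum(sum(row) for row in weights)
    cross = sum(
        weights[i][j] * deviations[i] * deviations[j]
        for i in range(n)
        for j in range(n)
    )
    variance_term = sum(d * d for d in deviations)
    return (n / weight_sum) * (cross / variance_term)


def chain_weights(n):
    """Binary weights for cells in a line: only adjacent cells are neighbors."""
    return [[1 if abs(i - j) == 1 else 0 for j in range(n)] for i in range(n)]


clustered = [1, 1, 1, 5, 5, 5]
alternating = [1, 5, 1, 5, 1, 5]
w = chain_weights(6)
print("clustered:", morans_i(clustered, w))
print("alternating:", morans_i(alternating, w))
```

The clustered pattern yields a positive value (similar values sit next to each other), while the alternating pattern yields a negative value (neighbors are dissimilar).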

Q 26. How do you handle data uncertainty and errors?

Data uncertainty and errors are inherent in remote sensing. I employ a multi-step approach to address them. First, I carefully assess data quality by examining metadata and performing visual inspections for outliers and artifacts. Atmospheric correction is crucial to minimize the influence of atmospheric effects on the spectral signals. Radiometric and geometric corrections are applied to ensure data accuracy and consistency. Uncertainty quantification is vital; I incorporate methods like error propagation and Monte Carlo simulations to estimate the uncertainty associated with my results. Finally, rigorous validation using ground truth data, such as field measurements or high-accuracy reference datasets, is crucial to evaluate the accuracy and reliability of my analyses. The goal is not to eliminate uncertainty entirely but to quantify and manage it, thus increasing the reliability and trustworthiness of the findings.
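The Monte Carlo approach mentioned above can be sketched briefly: perturb the inputs with random noise many times and look at the spread of the result. The example below propagates an assumed Gaussian uncertainty in red and NIR reflectance into NDVI; the reflectance values, noise level, and independence of the two bands are all assumptions for illustration.

```python
import random
import statistics


def monte_carlo_ndvi(nir, red, sigma, draws=10000, seed=42):
    """Propagate Gaussian band noise into NDVI by repeated random sampling."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    samples = []
    for _ in range(draws):
        nir_s = rng.gauss(nir, sigma)
        red_s = rng.gauss(red, sigma)
        samples.append((nir_s - red_s) / (nir_s + red_s))
    return statistics.mean(samples), statistics.stdev(samples)


mean_ndvi, std_ndvi = monte_carlo_ndvi(nir=0.50, red=0.10, sigma=0.01)
print(f"NDVI = {mean_ndvi:.3f} +/- {std_ndvi:.3f}")
```

Reporting the result as a value with a spread, rather than a single number, is one concrete way to communicate uncertainty in the final product.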

Q 27. What are your strengths and weaknesses in the field of remote sensing and data acquisition?

My strengths lie in my deep understanding of remote sensing principles and my proficiency in various data processing and analysis techniques. I’m adept at handling large datasets and developing robust workflows for data acquisition, preprocessing, and analysis. I possess strong problem-solving skills and experience in applying remote sensing to diverse environmental applications. However, one area I’m continuously working on is enhancing my expertise in advanced machine learning algorithms for remote sensing. While I understand their application, I aim to deepen my understanding of their theoretical foundations and practical implementation to broaden the scope of my analyses and improve the efficiency of my workflows. Continuous self-improvement is key in this rapidly advancing field.

Q 28. Where do you see yourself in 5 years in the field of remote sensing?

In five years, I envision myself as a leading expert in applying advanced remote sensing techniques to address critical environmental challenges. I aim to be involved in cutting-edge research, perhaps leading projects that involve the integration of remote sensing with other data sources (e.g., IoT sensors, in-situ measurements). My goal is to contribute to the development of innovative solutions for environmental monitoring and management, such as improved deforestation detection, precision agriculture, or real-time disaster response systems. I see myself mentoring younger professionals, sharing my knowledge and experience to foster the next generation of remote sensing scientists and contribute significantly to the field’s advancement.

Key Topics to Learn for Remote Sensing and Data Acquisition Interview

- Electromagnetic Spectrum and Sensor Principles: Understand the interaction of electromagnetic radiation with the Earth’s surface and the operating principles of various remote sensing sensors (e.g., optical, thermal, radar).

- Data Acquisition Techniques: Familiarize yourself with different data acquisition methods, including aerial photography, satellite imagery, LiDAR, and UAV-based sensing. Understand the advantages and limitations of each.

- Image Preprocessing and Enhancement: Grasp the concepts of geometric correction, atmospheric correction, and image enhancement techniques to improve data quality and interpretation.

- Remote Sensing Data Analysis: Master techniques for analyzing remote sensing data, including spectral analysis, image classification (supervised and unsupervised), object-based image analysis (OBIA), and change detection.

- Geographic Information Systems (GIS): Develop proficiency in using GIS software for data visualization, spatial analysis, and integration of remote sensing data with other geospatial datasets.

- Practical Applications: Be prepared to discuss applications of remote sensing and data acquisition in various fields, such as environmental monitoring, precision agriculture, urban planning, disaster management, and resource exploration. Consider specific examples and case studies.

- Data Management and Quality Control: Understand the importance of metadata, data quality assessment, and error analysis in remote sensing projects.

- Emerging Technologies: Stay updated on advancements in remote sensing, such as hyperspectral imaging, multispectral imaging, and the use of AI and machine learning in data analysis.

Next Steps

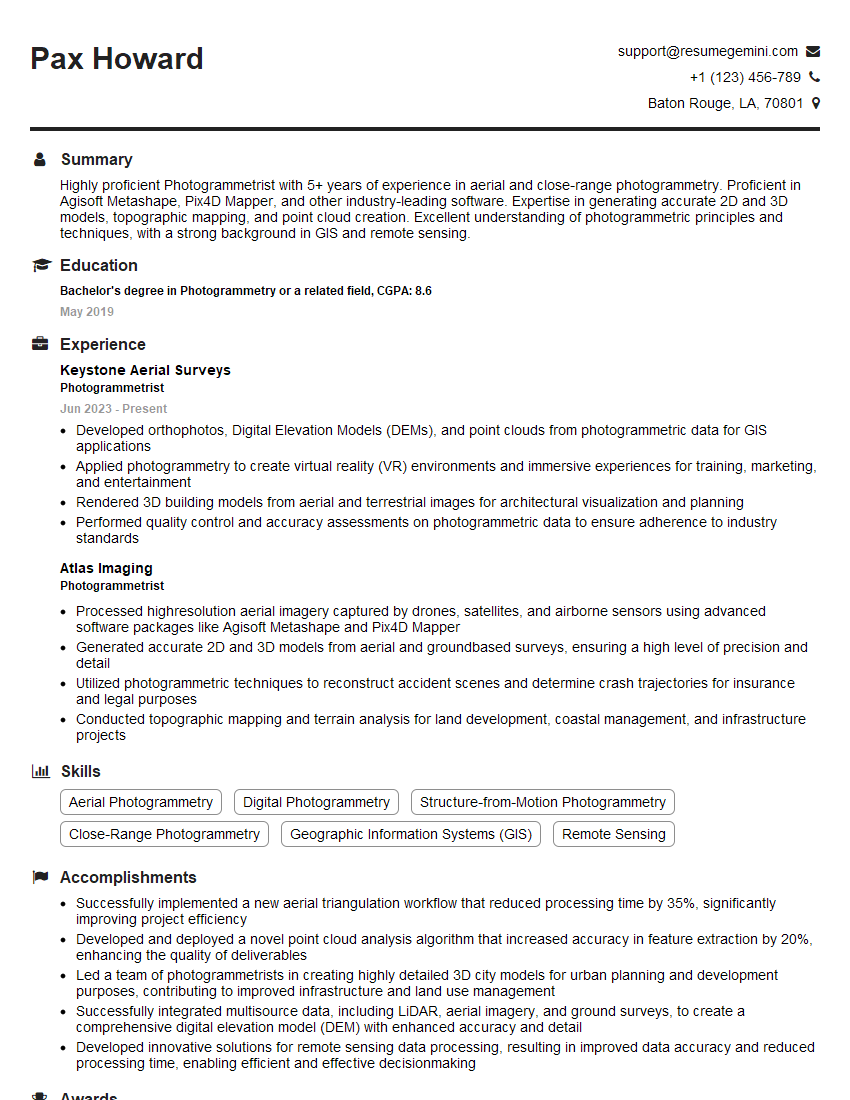

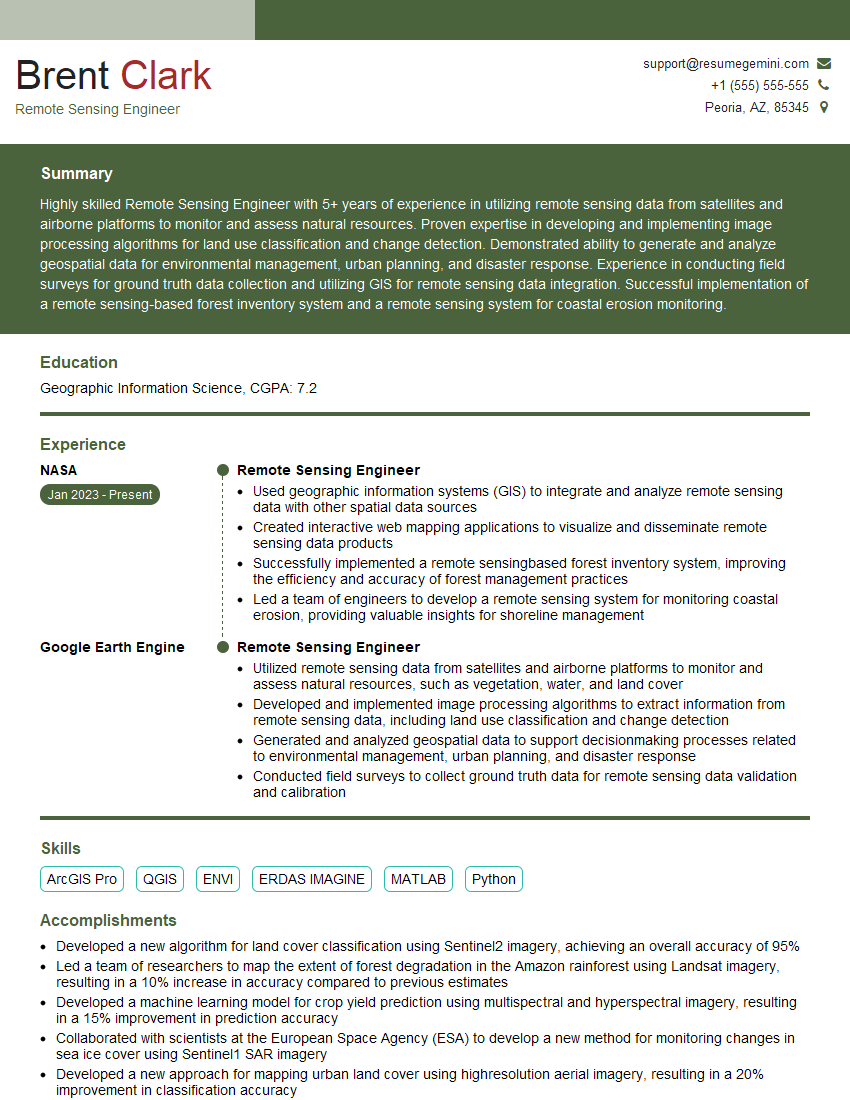

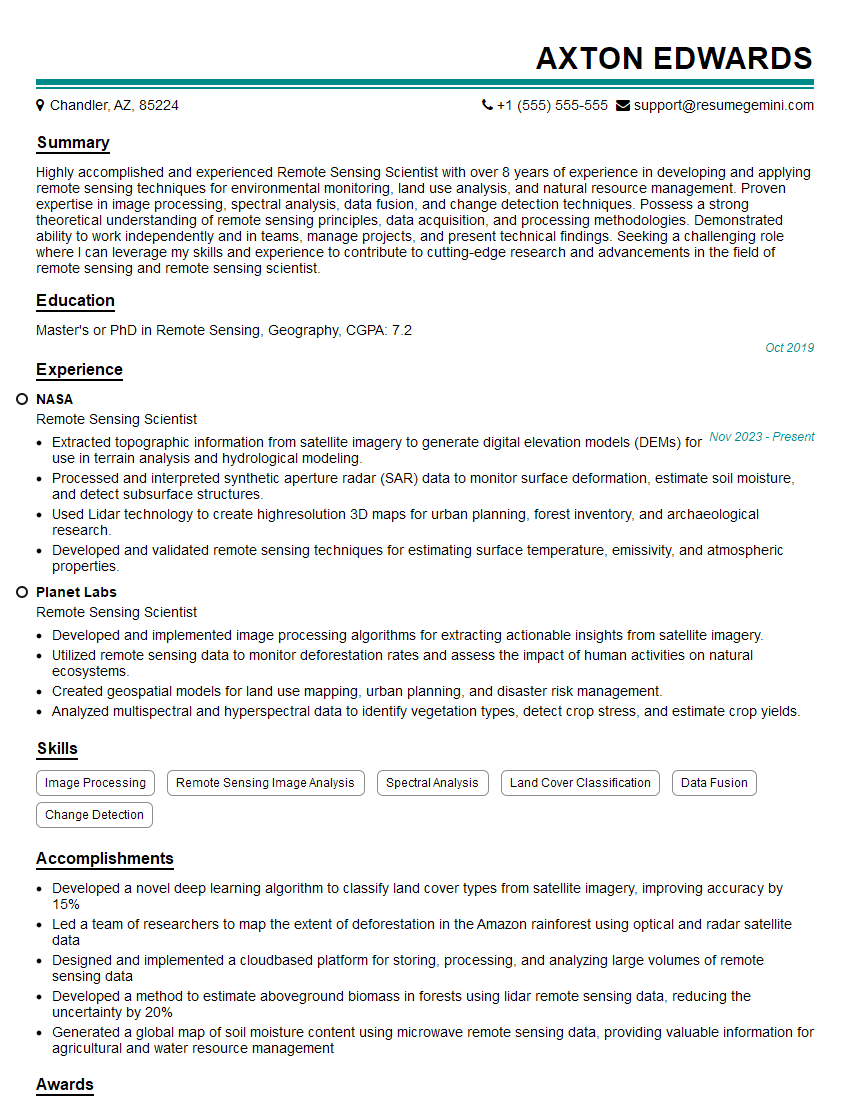

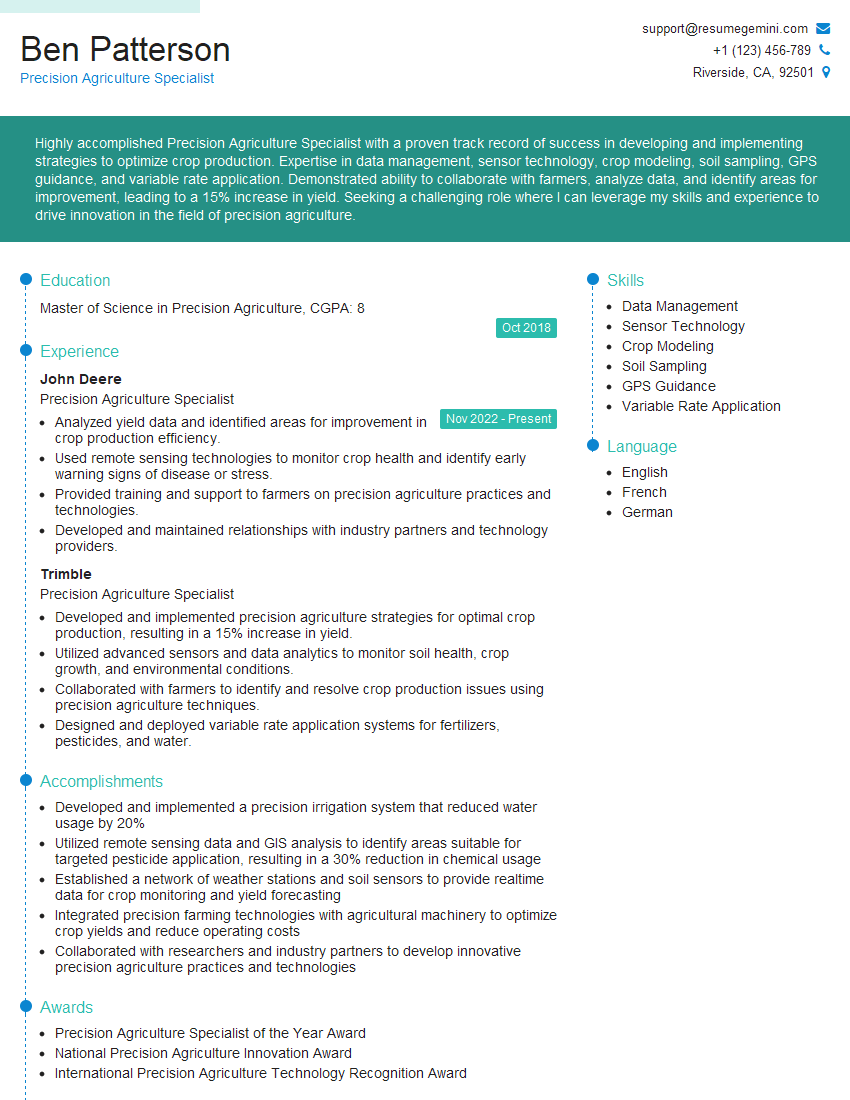

Mastering Remote Sensing and Data Acquisition opens doors to exciting and impactful careers in diverse fields. A strong foundation in these areas is highly valued by employers and significantly enhances your career prospects. To make the most of your job search, creating an Applicant Tracking System (ATS)-friendly resume is crucial. This ensures your qualifications are effectively communicated to potential employers. We strongly recommend using ResumeGemini to build a professional and impactful resume. ResumeGemini provides valuable tools and resources, including examples of resumes tailored to Remote Sensing and Data Acquisition, helping you present your skills and experience in the best possible light.
