Every successful interview starts with knowing what to expect. In this blog, we’ll take you through the top Digital Fires interview questions, breaking them down with expert tips to help you deliver impactful answers. Step into your next interview fully prepared and ready to succeed.

Questions Asked in Digital Fires Interview

Q 1. Explain the difference between live and dead forensics.

Live forensics, also known as real-time forensics, involves analyzing a system while it’s actively running. Think of it like watching a live sporting event – you’re seeing everything unfold in real time. This is crucial for capturing volatile data like RAM contents, network connections, and running processes, which disappear when the system is shut down. Dead forensics, on the other hand, focuses on a system after it’s been powered off. It’s more like reviewing a recording of the game after it’s finished. While you miss the live action, you can still analyze stored data on hard drives, SSDs, and other persistent storage devices. The choice between live and dead forensics depends on the situation and the type of evidence you’re seeking.

For example, imagine investigating a suspected ransomware attack. Live forensics might be essential to identify the running malicious process and its network connections, and to capture encryption keys that may still reside in memory, which can sometimes make it possible to decrypt affected files. After the immediate threat is contained, dead forensics could be used to analyze the system for remnants of the malware, understand its attack vector, and build a stronger case for prosecution.

Q 2. Describe the process of securing a crime scene involving digital evidence.

Securing a digital crime scene is paramount to preserving the integrity of evidence. It’s a multi-step process that begins with isolating the affected system(s) from any network connection to prevent remote alteration or deletion of data. This might involve physically disconnecting network cables or, for mobile devices, placing them in a Faraday bag. Removing power preserves what is on persistent storage but destroys volatile data such as RAM, so any live acquisition should be completed first. Next, document everything: take photographs, make detailed notes of the system’s configuration, and start a chain of custody document. This ensures a clear and unbroken record of who handled the evidence and when. Once documented, the storage devices should be imaged through a write blocker, using forensic tools that create a bit-by-bit copy and leave the original untouched; comparing the hash of the image with that of the original confirms the copy is exact. The image is then used for the actual investigation, ensuring the original evidence remains pristine.

Consider a case involving a stolen laptop. First, you’d disconnect it from the network, photograph its location and state, and note any visible damage. Next, you’d create a forensic image of the hard drive, a bit-for-bit ‘clone’ used for analysis. Throughout, you’d meticulously document the process, including who handled the laptop and at what times. This documented chain of custody is vital in ensuring the admissibility of the evidence in court.

Q 3. What are the common file systems and their vulnerabilities?

Several file systems are commonly used, each with its own forensically relevant weaknesses. NTFS (New Technology File System), the default in Windows, leaves remnants of deleted files that can be recovered through data carving, and its metadata can be manipulated; “timestomping” tools, for instance, alter file timestamps. FAT32 (File Allocation Table 32), older and simpler, has no journaling or file permissions, is prone to corruption and accidental overwriting, and limits individual files to 4 GB. ext4 (fourth extended file system), common in Linux, is relatively robust, but deleted data can still be recovered, and its journal (which records pending metadata changes) may retain older copies of metadata. APFS (Apple File System) is designed for modern macOS and iOS devices. While it is considered secure, Apple’s implementation is closed-source, so vulnerabilities may take longer for outside researchers to identify and for Apple to patch.

For instance, data carving can be used to recover deleted files from any of these file systems: if deleted data has not been overwritten, its remnants may persist on the disk, allowing forensic experts to recover it. TRIM on SSDs and full-disk encryption can sharply limit this. Metadata manipulation can alter timestamps and file attributes in NTFS, making it difficult to determine when a file was actually created or modified and potentially misleading investigators about when a specific event took place. Because NTFS stores timestamps in two places, the $STANDARD_INFORMATION and $FILE_NAME attributes, and many timestamp-altering tools change only the first, comparing the two can expose tampering.
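A minimal sketch of the $STANDARD_INFORMATION ($SI) versus $FILE_NAME ($FN) comparison, assuming the timestamps have already been extracted from an MFT record by a parsing tool into plain dictionaries (the dictionary layout and the function name are hypothetical). It applies two common heuristics: an $SI creation time earlier than the $FN creation time, and $SI values with no sub-second component, which manual timestamp setting often produces. Neither heuristic proves tampering on its own; file copies and archive extraction can trigger them legitimately.

```python
from datetime import datetime
from typing import Dict, List

def timestomp_indicators(si: Dict[str, datetime], fn: Dict[str, datetime]) -> List[str]:
    """Compare hypothetical parsed $SI and $FN timestamps and list suspicious findings."""
    findings = []
    # $FN timestamps are not directly settable through the normal Windows API,
    # so an $SI creation time that is older than the $FN one is a red flag
    if si["created"] < fn["created"]:
        findings.append("$SI creation time precedes $FN creation time")
    for name, ts in sorted(si.items()):
        # NTFS records time in 100 ns units; an exact whole second is unusual
        if ts.microsecond == 0:
            findings.append(f"$SI {name} time has no sub-second component")
    return findings
```

For a file whose $SI creation date was pushed back to an exact round time, both heuristics fire, while a record whose $SI and $FN values agree produces no findings.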

Q 4. How do you handle encrypted data during a digital forensics investigation?

Handling encrypted data requires specialized techniques and, in some cases, court orders. The approach depends on whether the key or password is available. If it is, the data is simply decrypted and analyzed. If not, other routes are needed: recovering keys from a memory capture of the still-running system, dictionary or brute-force password cracking (which can take considerable time and computational resources), or exploiting weaknesses in how the encryption was implemented, since well-designed modern algorithms themselves are rarely breakable. All decryption attempts must be conducted ethically, within the investigator’s legal authority, and with respect for the privacy rights of those involved.

Imagine encountering an encrypted hard drive seized during a drug trafficking investigation. If a suspect provides the password or the investigators can legally obtain it, the drive is decrypted and its contents examined for evidence. If the decryption key is unavailable, investigators might try password cracking, but only within the scope of their legal authority.
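To show why password cracking is slow and depends so heavily on password strength, here is a minimal dictionary-attack sketch. It assumes, hypothetically, that the investigator has recovered a salt and a PBKDF2-HMAC-SHA256 password hash; real containers such as BitLocker or VeraCrypt use their own key-derivation formats and are attacked with dedicated tools, and the function names below are illustrative only.

```python
import hashlib

def derive(password: str, salt: bytes, iterations: int) -> bytes:
    # PBKDF2-HMAC-SHA256: the iteration count makes every single guess deliberately expensive
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)

def dictionary_attack(target: bytes, salt: bytes, iterations: int, wordlist):
    """Try each candidate password; return the match, or None if the list is exhausted."""
    for candidate in wordlist:
        if derive(candidate, salt, iterations) == target:
            return candidate
    return None
```

Each guess costs `iterations` HMAC computations, which is why a long, random password that appears in no wordlist makes this approach impractical.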

Q 5. What are the different types of volatile and non-volatile memory?

Volatile memory, like RAM (Random Access Memory), loses its data when power is removed. Think of it like a whiteboard that is wiped clean the moment the power is cut. This makes capturing RAM crucial during live forensics. Non-volatile memory, on the other hand, retains data even when power is lost. Hard drives (HDDs), solid-state drives (SSDs), and ROM (Read-Only Memory) are examples of non-volatile storage. They’re more like a permanent record book.

In investigating a cybercrime, the contents of RAM (volatile memory) – containing running processes, open files, and network connections – might reveal the perpetrator’s actions at the time of the crime. Meanwhile, data stored on the computer’s hard drive (non-volatile memory) provides a longer-term record of files, system configuration and activity logs that can be analyzed even after the computer is shut down.
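Because volatile data disappears first, collection is usually ordered from most to least volatile. The sketch below encodes the order of volatility listed in RFC 3227 (“Guidelines for Evidence Collection and Archiving”) and sorts a collection plan accordingly; the short category keys and the function name are hypothetical labels chosen for illustration.

```python
# Collection priority, most volatile first, following the order of volatility
# listed in RFC 3227. The category keys are illustrative labels.
VOLATILITY_RANK = {
    "cpu": 0,          # registers, cache
    "memory": 1,       # routing table, ARP cache, process table, kernel statistics, RAM
    "tempfs": 2,       # temporary file systems
    "disk": 3,         # persistent storage (HDD, SSD)
    "remote_logs": 4,  # remote logging and monitoring data
    "physical": 5,     # physical configuration, network topology
    "archive": 6,      # archival media
}

def collection_order(artifacts):
    """Sort (description, category) pairs so the most volatile evidence is collected first."""
    return sorted(artifacts, key=lambda item: VOLATILITY_RANK[item[1]])
```

For example, a plan containing a disk image, a RAM dump, backup tapes, and the contents of /tmp would be reordered to start with the RAM dump and end with the tapes.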

Q 6. Explain the concept of chain of custody in digital forensics.

Chain of custody is a meticulous record-keeping process that documents the handling of evidence from the moment it’s collected until it’s presented in court. It ensures the integrity and authenticity of the evidence by creating an unbroken trail showing who had access to the evidence, when, and under what conditions. This includes detailed documentation of every person who handles the evidence, the date and time, and any changes made to its state. Any discrepancies in the chain of custody can severely weaken the evidence’s admissibility in court.

Imagine a case involving a mobile phone found at a crime scene. The chain of custody document would meticulously trace the phone’s journey: from its initial discovery by the officer, its secure packaging and transport to the forensics lab, the analysis performed, and finally, its transportation to the courtroom. Each step is documented, including signatures and timestamps, creating an auditable trail.
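Chain of custody is normally kept on signed forms or in case-management software. As an illustration of how a digital custody log can be made tamper-evident, here is a minimal sketch in which each entry stores the SHA-256 digest of the entry before it, so editing an earlier entry breaks the chain. The class and field names are hypothetical, and hash-chaining is a design choice for this example, not a legal requirement.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

@dataclass
class CustodyEntry:
    timestamp: str        # ISO 8601, UTC
    handler: str          # person taking custody
    action: str           # e.g. "seized", "transported", "imaged"
    evidence_sha256: str  # hash of the evidence item at this step
    prev_entry_hash: str  # digest of the preceding entry

    def digest(self) -> str:
        # Canonical JSON so identical fields always produce the same digest
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

class CustodyLog:
    def __init__(self) -> None:
        self.entries: list = []

    def record(self, timestamp: str, handler: str, action: str, evidence_sha256: str) -> CustodyEntry:
        prev = self.entries[-1].digest() if self.entries else GENESIS
        entry = CustodyEntry(timestamp, handler, action, evidence_sha256, prev)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Every entry must point at the digest of the entry before it
        prev = GENESIS
        for entry in self.entries:
            if entry.prev_entry_hash != prev:
                return False
            prev = entry.digest()
        return True
```

One limitation: a change to the most recent entry is only detectable if its digest is also stored somewhere else, for example written on the signed paper form.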

Q 7. What are some common methods for data recovery?

Data recovery techniques vary depending on the cause of data loss. For deleted files, simple undelete tools can often restore data from the Recycle Bin or by recovering deleted file entries from the file system. If the data is lost due to drive failure, specialized tools can attempt to recover it from damaged hard drives by scanning the disk surface and reconstructing file fragments. When the file system metadata is lost or damaged but the file contents themselves survive, more sophisticated techniques such as data carving are employed; these identify and reconstruct files from their content alone. Data that has actually been overwritten is, on modern drives, generally not recoverable.

For instance, if a user accidentally deletes important files, a simple recovery tool can often retrieve them from the file system’s unallocated space. If a hard drive is physically damaged, specialized data recovery tools employing sophisticated algorithms and advanced hardware might still recover parts of the lost information. If the file system metadata is gone, data carving is used as a last resort, attempting to reconstruct files from the raw data on the disk.

Q 8. How do you identify and analyze malware?

Identifying and analyzing malware involves a multi-step process that combines automated tools and expert analysis. First, we need to establish the presence of malicious code. This often begins with observing suspicious behavior on a system, such as unusual network activity, sluggish performance, or unexpected program executions. The suspect code is then examined using several complementary techniques.

Static Analysis: Examining the malware without executing it. This involves inspecting the code’s structure, identifying functions, and looking for known malicious patterns or signatures using tools like VirusTotal. This is safer but might miss polymorphic or obfuscated malware.

Dynamic Analysis: Observing the malware’s behavior in a controlled environment (like a sandbox). This reveals how it interacts with the system, what network connections it establishes, and what files it modifies. Tools like Cuckoo Sandbox assist with this. This method is better at detecting advanced malware but requires more resources and expertise.

Behavioral Analysis: This focuses on understanding the malware’s objectives, such as data exfiltration, system compromise, or network disruption. This requires detailed analysis of logs, network traffic, and system processes to uncover the malware’s intentions and impact.

For example, imagine finding a suspicious executable. Static analysis might reveal the presence of known malicious code strings. Dynamic analysis within a sandbox would show attempts to connect to a command-and-control server, revealing its malicious nature. Combining both provides a comprehensive picture.
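A first static-analysis pass often consists of hashing the sample (so it can be looked up on reputation services such as VirusTotal) and pulling out printable strings, much like the Unix `strings` utility. The sketch below does both and flags a few illustrative indicators; the indicator list and the function name are assumptions for this example, and real triage relies on curated signatures such as YARA rules.

```python
import hashlib
import re

# Runs of 4+ printable ASCII bytes, similar to the Unix `strings` utility
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{4,}")
# Illustrative indicators only, not a real signature set
SUSPICIOUS = re.compile(r"https?://|cmd\.exe|powershell", re.IGNORECASE)

def static_triage(sample: bytes) -> dict:
    """Hash a sample and extract printable strings without executing it."""
    strings = [m.group().decode("ascii") for m in PRINTABLE_RUN.finditer(sample)]
    return {
        "sha256": hashlib.sha256(sample).hexdigest(),  # key for hash-reputation lookups
        "strings": strings,
        "suspicious": [s for s in strings if SUSPICIOUS.search(s)],
    }
```

Because the sample is only read, never run, this is safe to do on an analysis workstation; obfuscated or packed malware will, as noted above, often reveal little this way.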

Q 9. What are the legal and ethical considerations in digital forensics?

Legal and ethical considerations are paramount in digital forensics. We must adhere to strict rules to ensure the admissibility of evidence in court and protect individual rights. Key aspects include:

Chain of Custody: Maintaining a meticulous record of who handled the evidence, when, and where. Any break in the chain can compromise the evidence’s integrity and admissibility.

Data Privacy: Respecting the privacy of individuals involved. Only access and analyze data relevant to the investigation, and comply with relevant data protection laws like GDPR or CCPA.

Search Warrants: Obtaining appropriate legal authorization before searching and seizing digital devices. Unauthorized access is a serious offense.

Data Integrity: Ensuring that the digital evidence is not altered or corrupted during the investigation. Using write-blocking devices and forensic tools helps maintain integrity.

Professional Ethics: Maintaining objectivity and avoiding bias in our analysis. Documenting our findings thoroughly and honestly, irrespective of potential outcomes.

For example, if investigating a company’s data breach, we need to ensure that we only access data relevant to the breach and have the necessary legal authority to do so. We must also rigorously maintain the chain of custody to ensure the evidence’s admissibility in court.

Q 10. Describe your experience with forensic tools (e.g., EnCase, FTK, Autopsy).

I have extensive experience with several leading forensic tools. My proficiency includes:

EnCase: I’ve used EnCase for disk imaging, data recovery, and keyword searching across large datasets. Its advanced features are crucial for investigating complex cases. For example, I’ve used EnCase’s timeline feature to reconstruct the sequence of events leading up to a data breach.

FTK (Forensic Toolkit): I’ve leveraged FTK for its efficient processing of large volumes of data, particularly emails and web history. Its integrated features for hash analysis and data carving are invaluable for extracting evidence.

Autopsy: Autopsy’s open-source nature and integration with The Sleuth Kit (TSK) make it a versatile tool. I’ve used Autopsy for its ease of use in analyzing disk images and identifying deleted files or metadata.

I’m comfortable using these tools independently and can adapt to different scenarios and needs. The selection of the appropriate tool depends on the specific case and available resources.

Q 11. Explain the process of network forensics investigation.

Network forensics investigations focus on identifying and analyzing network traffic to uncover malicious activities or security breaches. The process usually involves:

Data Acquisition: Capturing network traffic using packet sniffers like Wireshark or tcpdump. This often involves setting up mirrored ports or utilizing network taps for non-intrusive monitoring.

Data Analysis: Examining the captured network packets to identify suspicious patterns, malicious connections, data exfiltration, or unauthorized access attempts. Protocol analysis is key here.

Correlation: Combining network data with other sources of evidence, such as logs from servers or endpoints, to build a comprehensive picture of the incident.

Incident Reconstruction: Reassembling the sequence of events that led to the breach. This might involve tracing the attacker’s actions, identifying compromised systems, and determining the extent of the damage.

For instance, investigating a suspected denial-of-service attack would involve capturing network traffic, analyzing the volume and source of malicious packets, and correlating this data with server logs to pinpoint the attack’s origin and impact.
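To make the analysis step concrete, here is a minimal sketch that reads a classic libpcap capture (the format written by `tcpdump -w`) and counts packets per IPv4 source address, the kind of tally used to spot the dominant sources in a flood. It assumes Ethernet framing without VLAN tags and does not handle the newer pcapng format; the function name is hypothetical. In practice, Wireshark’s statistics views give the same answer interactively.

```python
import socket
import struct
from collections import Counter

PCAP_MAGICS = (0xA1B2C3D4, 0xA1B23C4D)  # microsecond and nanosecond variants
LINKTYPE_ETHERNET = 1

def count_ipv4_sources(pcap: bytes) -> Counter:
    """Count captured IPv4 packets per source address in a classic pcap file."""
    if len(pcap) < 24:
        raise ValueError("too short for a pcap global header")
    # The magic number reveals the byte order the capture was written in
    if struct.unpack("<I", pcap[:4])[0] in PCAP_MAGICS:
        endian = "<"
    elif struct.unpack(">I", pcap[:4])[0] in PCAP_MAGICS:
        endian = ">"
    else:
        raise ValueError("not a classic pcap file (pcapng is not handled)")
    (linktype,) = struct.unpack(endian + "I", pcap[20:24])
    if linktype != LINKTYPE_ETHERNET:
        raise ValueError("only Ethernet captures are handled")

    counts = Counter()
    offset = 24  # skip the global header
    while offset + 16 <= len(pcap):
        # Per-record header: ts_sec, ts_frac, captured length, original length
        _, _, incl_len, _ = struct.unpack(endian + "IIII", pcap[offset:offset + 16])
        offset += 16
        frame = pcap[offset:offset + incl_len]
        offset += incl_len
        # EtherType 0x0800 (IPv4) at bytes 12-13; IPv4 source address at frame bytes 26-29
        if len(frame) >= 34 and frame[12:14] == b"\x08\x00":
            counts[socket.inet_ntoa(frame[26:30])] += 1
    return counts
```

Keep in mind that source addresses in a denial-of-service flood are often spoofed, so a tally like this is a starting point for correlation with server logs rather than proof of origin.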

Q 12. How do you investigate data breaches?

Investigating data breaches requires a systematic approach:

Containment: Immediately isolating affected systems to prevent further data loss or compromise. This might involve disconnecting servers from the network or blocking malicious IPs.

Evidence Collection: Gathering all relevant data, including logs, system configurations, and network traffic captures, while ensuring data integrity. This might involve creating forensic images of affected hard drives.

Incident Response: Working with incident responders to restore affected systems and implement security measures to prevent future breaches. This may involve patching vulnerabilities, updating security software, and strengthening access controls.

Root Cause Analysis: Identifying the cause of the breach, such as exploited vulnerabilities, weak passwords, or social engineering attacks.

Reporting: Documenting findings and recommendations for preventing future incidents.

For example, if a database has been compromised, the investigation will examine network traffic to identify where the attackers connected from and what data left the network, review server logs to see what actions the attackers took, and check access logs to determine how unauthorized access was gained.

Q 13. How do you handle cloud-based digital forensics investigations?

Cloud-based digital forensics investigations present unique challenges due to the distributed nature of cloud environments and the involvement of third-party service providers. The approach includes:

Legal Holds: Securing relevant data from cloud providers, often requiring legal processes and cooperation.

Data Acquisition: Utilizing cloud forensics tools to collect data from cloud storage services, virtual machines, and other cloud resources. This may involve working with the cloud provider’s APIs or using specialized tools.

Data Analysis: Analyzing cloud logs and metadata to trace events and identify malicious activities.

Collaboration: Working closely with cloud providers to access and analyze data within their environment. Collaboration with the provider can greatly expedite the investigation.

For example, if a data breach occurred on an Amazon Web Services (AWS) instance, we would collect the account’s logs (such as AWS CloudTrail records), take snapshots of the instance’s storage volumes for imaging, and analyze configurations to understand how the breach occurred, working with AWS wherever provider-side data is needed.

Q 14. What are the different types of digital evidence?

Digital evidence encompasses a broad range of data types:

Computer Forensics: Hard drive contents, registry keys, file metadata, and deleted files.

Network Forensics: Network packets, logs from routers and firewalls, and DNS records.

Mobile Forensics: Data from mobile devices like smartphones and tablets, including call logs, text messages, and GPS data.

Cloud Forensics: Logs and data from cloud-based services.

Database Forensics: Data from databases, including logs and transactional data.

Email and Web Forensics: Email content, web browser history, and website logs.

Social Media Forensics: Data from social media platforms, including posts, messages, and profiles.

The admissibility of digital evidence depends on its authenticity, integrity, and relevance to the investigation. Proper chain of custody and adherence to legal procedures are essential for ensuring the acceptance of evidence in a court setting.

Q 15. Explain your experience with various hashing algorithms (MD5, SHA).

Hashing algorithms like MD5 and SHA are crucial in digital forensics for verifying data integrity. They create a practically unique ‘fingerprint’ (hash) for a file. Any change, however small, to the file results in a completely different hash. This is essential for demonstrating that evidence hasn’t been tampered with.

MD5 (Message Digest Algorithm 5) was widely used but is now considered cryptographically broken. Different files can be deliberately constructed to produce the same MD5 hash (a collision). For that reason, we avoid relying on MD5 alone in critical forensic investigations.

SHA (Secure Hash Algorithm) is a family of algorithms that includes SHA-1, SHA-256, and SHA-512. SHA-1 has also been broken (a practical collision was publicly demonstrated in 2017), so SHA-256 and SHA-512, both from the SHA-2 family, are currently preferred for their collision resistance. In my work, I routinely use SHA-256 to generate hashes of evidence files and record them in my chain of custody documentation. If a file is later altered, recomputing its hash reveals the mismatch. This rigorous approach is critical to maintaining the admissibility of evidence in court.

For example, imagine investigating a data breach. I would hash all the files on a suspect’s computer and compare those hashes with hashes of files from the victim’s system. A match shows that an identical copy of a file exists on both systems, which, together with other evidence, strongly supports a finding that the data was transferred.
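The cross-system comparison described above can be sketched in a few lines: hash every file under two directory trees with SHA-256 and report the digests they share. The function names are hypothetical, and in a real case the two trees would be mounted read-only forensic images rather than live systems. Files are read in chunks so large evidence files do not have to fit in memory.

```python
import hashlib
from pathlib import Path

def sha256_file(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def hash_tree(root):
    """Map each SHA-256 digest to the list of files under root that have it."""
    index = {}
    for path in Path(root).rglob("*"):
        if path.is_file():
            index.setdefault(sha256_file(path), []).append(str(path))
    return index

def common_files(root_a, root_b):
    """Digests present in both trees, with the matching paths on each side."""
    a, b = hash_tree(root_a), hash_tree(root_b)
    return {h: (a[h], b[h]) for h in a.keys() & b.keys()}
```

Because matching is by content, a file that was renamed after being copied still shows up as a match.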


Q 16. How do you handle deleted files and data recovery?

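Deleted files aren’t truly gone; the space they occupied is simply marked as available, and their data remains until it is overwritten. Data recovery involves using specialized tools to retrieve this data, and the success rate depends on how much of that space has since been overwritten. On SSDs, the TRIM command lets the drive erase freed blocks soon after deletion, which can sharply reduce the chances of recovery.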

My approach to handling deleted files and data recovery involves:

- Imaging the drive: Creating a forensic copy (image) of the entire drive is the first step. This preserves the original evidence and prevents accidental alteration.

- Using data recovery software: Several tools like FTK Imager, Autopsy, and EnCase can recover deleted files, analyzing slack space and unallocated clusters.

- Analyzing file system metadata: Information such as deleted file names and timestamps often remains in the file system’s metadata, which aids recovery.

- Carving: If file system metadata is damaged, file carving techniques can identify and reconstruct files based on their internal signatures (like header and footer data).
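Signature-based carving can be illustrated with JPEG files, which start with the bytes FF D8 FF and end with the FF D9 end-of-image marker. The sketch below scans raw data for header/footer pairs; it is a simplification that assumes files are stored contiguously (no fragmentation) and stops at the first footer, so a JPEG containing an embedded thumbnail may be truncated. The function name and the size cap are illustrative choices, not taken from any particular tool.

```python
JPEG_HEADER = b"\xff\xd8\xff"  # start-of-image marker plus the first segment marker
JPEG_FOOTER = b"\xff\xd9"      # end-of-image marker

def carve_jpegs(raw: bytes, max_size: int = 20 * 1024 * 1024) -> list:
    """Return byte ranges that look like complete, contiguous JPEG files."""
    carved = []
    pos = 0
    while True:
        start = raw.find(JPEG_HEADER, pos)
        if start == -1:
            break
        end = raw.find(JPEG_FOOTER, start + len(JPEG_HEADER))
        if end == -1:
            break  # no footer anywhere after this header
        if end - start > max_size:
            pos = start + 1  # implausibly large; treat this header as a false positive
            continue
        carved.append(raw[start:end + len(JPEG_FOOTER)])
        pos = end + len(JPEG_FOOTER)
    return carved
```

Production carvers validate the internal structure of each candidate and handle fragmented files, which this sketch does not attempt.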

A real-world example: I once recovered crucial financial records from a seemingly ‘wiped’ hard drive in a fraud investigation, significantly aiding the case. The data had not been fully overwritten, allowing recovery through careful data carving techniques.

Q 17. What is the significance of timestamps in digital forensics?

Timestamps are incredibly significant in digital forensics as they provide a chronological record of file activity. They offer valuable context for analyzing events and establishing timelines. Think of them as the breadcrumbs left behind in the digital world.

Different types of timestamps exist: creation, modification, and access times. These times can help establish when a file was created, last modified, or last accessed. Inconsistencies between these timestamps can highlight potential evidence tampering or manipulation.

Practical Applications:

- Establishing timelines: By analyzing timestamps of emails, documents, and log files, investigators can reconstruct the sequence of events leading to a crime.

- Identifying anomalies: Unexpected timestamps – a file accessed at 3 AM, for instance – may indicate suspicious activity.

- Corroborating evidence: Timestamps can corroborate or contradict witness statements or other evidence.

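Accurate time handling is critical. Timestamps from different systems must be normalized to a common reference such as UTC, accounting for each device’s time zone and any clock drift, and this process must be documented within the chain of custody to avoid challenges to their validity in a court of law.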
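Timeline building largely comes down to this normalization step. The sketch below takes events recorded in each source’s local time, attaches that source’s UTC offset, converts everything to UTC, and sorts the result. The input tuple layout and function name are hypothetical, and a fixed offset per source is an assumption; real sources may need daylight-saving rules and measured clock drift applied.

```python
from datetime import datetime, timedelta, timezone

def build_timeline(events):
    """Normalize (source, local_iso_time, utc_offset_hours, description) tuples to UTC and sort."""
    timeline = []
    for source, local_iso, offset_hours, description in events:
        # Attach the source's recorded UTC offset, then convert to UTC
        local = datetime.fromisoformat(local_iso).replace(
            tzinfo=timezone(timedelta(hours=offset_hours)))
        timeline.append((local.astimezone(timezone.utc), source, description))
    timeline.sort(key=lambda entry: entry[0])
    return timeline
```

For example, a web-server login logged at 09:15 (UTC-5), a USB connection on a laptop at 15:05 (UTC+1), and an email sent at 14:20 UTC sort into the order laptop (14:05 UTC), web server (14:15 UTC), mail server (14:20 UTC), even though the raw local times suggest a different sequence.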

Q 18. Explain your understanding of steganography and its detection.

Steganography is the art of concealing information within other files, like hiding a secret message in an image or an audio file. It differs from cryptography, which scrambles the information to make it unreadable. Steganography aims to make the hidden data’s existence invisible.

Steganography Detection: Detecting steganography involves analyzing the carrier file (the file hiding the data) for inconsistencies. Changes in file size, unusual patterns in file metadata, or subtle alterations in the data itself can be indicators. Specialized tools use statistical analysis and algorithms to detect these anomalies.

Methods of Detection:

- Statistical analysis: Analyzing the distribution of pixel values in an image or frequencies in audio can reveal hidden data.

- Frequency analysis: Detecting unusual patterns in the frequency spectrum of an audio file.

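- Chi-square test: Testing whether pairs of values that differ only in their least significant bit (for example, pixel values 2k and 2k+1) occur with suspiciously equal frequencies, a statistical signature of LSB embedding.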
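The chi-square test above can be sketched as the classic “pairs of values” statistic: for each pair (2k, 2k+1), the expected count of the even value is half the pair total, and the statistic sums the squared deviations. Embedding random message bits in the LSBs tends to equalize each pair, pushing the statistic toward zero. This minimal version assumes 8-bit samples (for example, one color channel of an image) and returns the raw statistic and degrees of freedom; converting that to a probability would need a chi-square distribution function, such as SciPy’s `scipy.stats.chi2.sf`. The function name is hypothetical.

```python
from collections import Counter

def lsb_pair_chi_square(samples):
    """Pairs-of-values chi-square statistic over 8-bit samples; returns (chi2, dof)."""
    counts = Counter(samples)
    chi2 = 0.0
    pairs = 0
    for even in range(0, 256, 2):
        observed = counts.get(even, 0)
        expected = (observed + counts.get(even + 1, 0)) / 2
        if expected > 0:  # skip pairs that never occur
            chi2 += (observed - expected) ** 2 / expected
            pairs += 1
    return chi2, max(pairs - 1, 0)
```

A low statistic relative to the degrees of freedom means the pairs are nearly balanced, which is consistent with (but not proof of) LSB embedding; natural images usually show uneven pairs.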

A real-world scenario might involve detecting a hidden command and control channel used by malware, concealed within an apparently innocent image file sent through email.

Q 19. Describe different methods of data hiding.

Data hiding techniques are diverse and constantly evolving. Methods range from simple to extremely sophisticated, utilizing various types of media.

Common Methods:

- Least Significant Bit (LSB) Insertion: This method hides data in the least significant bits of image pixels or audio samples, causing minimal visible or audible distortion.

- Spread Spectrum: Distributing the hidden data across a broader range of frequencies or pixel values, making it harder to detect.

- Data Hiding in Metadata: Embedding data within the metadata of files, such as image EXIF data or document properties.

- Covering Data within Redundant Information: Using spaces or other redundant information within a file to hide data.

- Null Ciphers: Hiding a message in plain text, for example in the first letter of each word or at fixed positions, so the cover text still reads normally.
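Least Significant Bit insertion, the first method listed above, is simple enough to show directly. This sketch treats the cover as a flat sequence of 8-bit samples (for example, raw pixel bytes, with the image decoding step assumed to happen elsewhere) and writes one message bit into the lowest bit of each sample, so no sample changes by more than 1. The function names are hypothetical, and the message length is assumed to be known to the extractor.

```python
def embed_lsb(cover: bytes, message: bytes) -> bytearray:
    """Write message bits (most significant bit first) into the LSBs of the cover samples."""
    bits = [(byte >> (7 - i)) & 1 for byte in message for i in range(8)]
    if len(bits) > len(cover):
        raise ValueError("cover too small for message")
    stego = bytearray(cover)
    for idx, bit in enumerate(bits):
        stego[idx] = (stego[idx] & 0xFE) | bit  # clear the LSB, then set it to the message bit
    return stego

def extract_lsb(stego, n_bytes: int) -> bytes:
    """Read n_bytes back out of the LSBs, most significant bit first."""
    out = bytearray()
    for b in range(n_bytes):
        value = 0
        for i in range(8):
            value = (value << 1) | (stego[b * 8 + i] & 1)
        out.append(value)
    return bytes(out)
```

Since each sample moves by at most one intensity level, the change is invisible to the eye, which is exactly why detection relies on statistics such as the chi-square test rather than visual inspection.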

The choice of method depends on factors like the desired level of security, the size of the data to hide, and the type of carrier file used.

Q 20. How do you perform a memory analysis?

Memory analysis is a crucial part of digital forensics, particularly in malware investigations and incident response. It involves examining the contents of a computer’s RAM (Random Access Memory) to identify active processes, running malware, network connections, and other crucial information which may not be found elsewhere.

The Process:

- Acquiring Memory: This requires specialized tools and techniques to capture a forensic image of RAM while the system is still running, because powering it off destroys the contents. The acquisition tool itself alters memory slightly, so its use should be documented.

- Analyzing Memory Images: Software like Volatility is used to parse the memory image. This involves identifying processes, analyzing their memory regions, and reconstructing system activity.

- Identifying Malware: Memory analysis can reveal malicious code and its behavior, even if it’s not present on the hard drive.

- Network Forensics: Analyzing network connections and activity within memory can reveal communication with command-and-control servers.

- Credentials Extraction: Memory analysis often uncovers passwords, encryption keys, and other sensitive information stored in RAM.

Memory is volatile, so capturing its contents is time-sensitive and requires careful planning and execution. Imagine a situation where malware is actively encrypting files. Analyzing the memory at that moment could uncover the encryption key before the process completes.
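Structure-aware tools like Volatility parse the operating system’s own data structures. A much cruder but complementary technique is to scan the raw image for byte patterns such as URLs and IP addresses, which can surface command-and-control endpoints quickly. The sketch below does that; the patterns are illustrative, only ASCII text is matched (Windows often stores strings as UTF-16LE, which this misses), and the function name is hypothetical. For a multi-gigabyte dump, the file can be opened with Python’s `mmap` module and the mapping passed in directly, since `re` can search memory-mapped files.

```python
import ipaddress
import re

# ASCII URLs: scheme followed by printable, non-space bytes
URL = re.compile(rb"https?://[\x21-\x7e]{4,200}")
# Dotted quads that are not part of a longer run of digits and dots
IPV4 = re.compile(rb"(?<![\d.])(?:\d{1,3}\.){3}\d{1,3}(?![\d.])")

def scan_dump(data) -> dict:
    """Extract candidate URLs and IPv4 addresses from a raw memory image (bytes or mmap)."""
    urls = {m.group().decode("ascii") for m in URL.finditer(data)}
    ips = set()
    for m in IPV4.finditer(data):
        candidate = m.group().decode("ascii")
        try:
            ipaddress.IPv4Address(candidate)  # rejects impossible values such as 999.1.1.1
        except ValueError:
            continue
        ips.add(candidate)
    return {"urls": sorted(urls), "ipv4": sorted(ips)}
```

Hits from a scan like this carry no process context, so they are leads to confirm with Volatility’s process and network views rather than findings in themselves.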

Q 21. Explain the concept of disk imaging and its importance.

Disk imaging is the process of creating an exact bit-by-bit copy of a hard drive or other storage device. This copy, the ‘forensic image,’ is a critical step in digital investigations because it preserves the original evidence in its entirety.

Importance:

- Preservation of Evidence: The original drive should remain untouched to maintain its integrity as evidence. The image becomes the primary source for analysis.

- Prevention of Data Alteration: Any analysis is performed on the image, protecting the original from accidental or intentional modification.

- Multiple Analysis: Multiple investigators can work on the same case concurrently without risking data conflicts or corruption by working on independent copies.

- Chain of Custody: The creation and handling of the image are meticulously documented as part of the chain of custody.

Commonly used imaging tools include FTK Imager, EnCase, and dd (a command-line utility). I always use hashing to verify the image’s integrity, ensuring it’s a perfect copy of the original. In essence, disk imaging is the foundation for any credible digital forensic investigation.
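The core of what dd-style imaging plus hash verification does can be sketched as follows: copy the source block by block while computing its SHA-256, then re-hash the finished image and compare. The function names and paths are hypothetical; the sketch assumes the source (a device node or file) is attached through a write blocker and opened read-only, and, unlike dedicated imaging tools, it does not handle read errors or bad sectors.

```python
import hashlib

def image_device(source_path, image_path, block_size=1 << 20):
    """Copy source to image block by block, returning the SHA-256 of everything read."""
    digest = hashlib.sha256()
    with open(source_path, "rb") as src, open(image_path, "wb") as dst:
        while True:
            block = src.read(block_size)
            if not block:
                break
            dst.write(block)
            digest.update(block)  # acquisition hash computed in the same pass
    return digest.hexdigest()

def verify_image(image_path, expected_sha256, block_size=1 << 20):
    """Re-hash the image and confirm it still matches the acquisition hash."""
    digest = hashlib.sha256()
    with open(image_path, "rb") as img:
        for block in iter(lambda: img.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest() == expected_sha256
```

The acquisition hash is what goes into the chain of custody record; running the verification again before each analysis session shows that the image has not changed since it was made.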

Q 22. How do you create a forensic write blocker and why is it crucial?

A forensic write blocker is a hardware or software device that prevents any data from being written to a storage device during a forensic investigation. Think of it as a one-way mirror for data – you can read information from the drive, but you absolutely cannot modify or delete anything. This is crucial because any alteration to the original data compromises its integrity and can render it inadmissible in court.

Creating a write blocker usually means using specialized hardware with circuitry that intercepts write commands and prevents them from reaching the storage device. Software-based solutions leverage operating system features to achieve a similar outcome, for example by marking the block device read-only or mounting the drive in read-only mode. A read-only mount alone is not always sufficient, because some file systems (such as ext3 and ext4) may still replay their journal, so software blocking is best applied at the device level. The effectiveness relies on completely isolating the drive from any writing processes, even unintended ones such as automatic updates or system logs.

For example, imagine investigating a suspect’s hard drive. Without a write blocker, simply connecting the drive to a computer for analysis could alter it: the operating system may update access timestamps, replay a file system journal, or create indexing and system files, any of which could overwrite crucial evidence. A write blocker eliminates this risk, ensuring the digital evidence remains in its original state.

Q 23. What are the challenges of investigating mobile device forensics?

Mobile device forensics presents unique challenges compared to traditional computer forensics. The biggest challenges include:

- Data Encryption: Many modern mobile devices utilize strong encryption by default, making access to data difficult without the correct passwords or passcodes. Bypassing this encryption often requires specialized tools and techniques, and can be time-consuming.

- Data Volatility: Data on a mobile device, particularly RAM, is highly volatile and can be lost easily. Quickly securing the device and its data is critical to avoid data loss.

- Device Fragmentation: The vast array of mobile operating systems, manufacturers, and models creates significant device fragmentation. Investigators need to be familiar with numerous operating systems and their specific forensic techniques, which can add significant complexity.

- Cloud Integration: Modern mobile devices heavily rely on cloud storage, which makes it difficult to capture all relevant data from the device alone. Investigating the associated cloud accounts becomes necessary, often requiring legal processes.

- Data Hiding Techniques: Users may employ various data hiding techniques that make evidence difficult to find, such as hidden partitions, encrypted containers, or steganography.

Consider a scenario where a stolen phone is recovered. Accessing its encrypted data requires specialized software and potentially court orders to access cloud backups. Then, data extraction is needed, followed by analysis, correlating phone data with other sources.

Q 24. How do you analyze log files for suspicious activities?

Analyzing log files for suspicious activities involves a systematic approach. First, it is critical to identify the relevant log files, which may include system logs, application logs, web server logs, or network logs. The location and format of these logs vary based on the operating system and the application generating them.

Next, we look for patterns and anomalies. This might involve searching for keywords related to known attacks (e.g., SQL injection attempts, brute-force logins) or analyzing unusual timestamps, IP addresses, or user activity. Tools like grep (on Linux/macOS) or specialized log management systems (e.g., Splunk, ELK stack) can greatly assist in this process.

For instance, if we suspect a data breach, we would look for failed login attempts, unusually large data transfers, or accesses from unexpected locations. We would correlate these findings with other evidence, such as network traffic logs, to establish a timeline and understand the attack’s nature. Analyzing the log entries sequentially is vital, as the order of events is key in piecing together the sequence of actions performed by the attacker. Time correlation is particularly important when looking for patterns across multiple log files from various sources.

```shell
grep -i "failed password" /var/log/auth.log
```

The command above searches auth.log (the authentication log on Debian and Ubuntu systems; Red Hat-based distributions use /var/log/secure) for failed SSH password attempts, ignoring case.
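Going one step beyond grep, the same log can be summarized per source address to surface brute-force attempts. This sketch matches the standard OpenSSH “Failed password for ... from ... port ...” message format and flags any IP with at least a threshold number of failures; the threshold of 5 and the function name are arbitrary assumptions for illustration, and other services log failures in different formats.

```python
import re
from collections import Counter

# OpenSSH failure message, e.g. "Failed password for invalid user admin from 203.0.113.5 port 52114 ssh2"
FAILED_SSH = re.compile(r"Failed password for (?:invalid user )?(?P<user>\S+) from (?P<ip>\S+) port \d+")

def brute_force_suspects(lines, threshold=5):
    """Return {ip: failure_count} for sources with at least `threshold` failed SSH logins."""
    attempts = Counter()
    for line in lines:
        match = FAILED_SSH.search(line)
        if match:
            attempts[match.group("ip")] += 1
    return {ip: n for ip, n in attempts.items() if n >= threshold}
```

On a live system this could be fed with `open("/var/log/auth.log")`; the flagged addresses can then be correlated with successful logins from the same source and with network logs.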

Q 25. What are the different types of computer attacks and their countermeasures?

Computer attacks come in various forms. Some common types include:

- Malware: This includes viruses, worms, trojans, ransomware, and spyware. Countermeasures involve antivirus software, firewalls, intrusion detection systems, and user education.

- Phishing: Tricking users into revealing sensitive information through deceptive emails or websites. Countermeasures include employee training, email filtering, and multi-factor authentication.

- Denial-of-Service (DoS) attacks: Overwhelming a system with traffic to make it unavailable. Countermeasures include robust network infrastructure, rate limiting, and DDoS mitigation services.

- SQL Injection: Exploiting vulnerabilities in database applications to gain unauthorized access. Countermeasures include input validation, parameterized queries, and secure coding practices.

- Man-in-the-Middle (MitM) attacks: Intercepting communication between two parties. Countermeasures involve using HTTPS, VPNs, and strong encryption.
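The parameterized-query countermeasure for SQL injection is easiest to see side by side. The sketch below uses Python’s built-in `sqlite3` module with a hypothetical in-memory `users` table: the unsafe version pastes input into the SQL text, so the classic `' OR '1'='1` payload returns every row, while the parameterized version binds the input as a plain value and matches nothing.

```python
import sqlite3

def demo_db() -> sqlite3.Connection:
    """In-memory table with two hypothetical users, for demonstration only."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn

def find_user_unsafe(conn, username):
    # VULNERABLE: user input is pasted directly into the SQL text
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, username):
    # Parameterized: sqlite3 binds username as a value, so it is never parsed as SQL
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (username,)).fetchall()
```

With `payload = "' OR '1'='1"`, `find_user_unsafe(conn, payload)` returns both users, while `find_user_safe(conn, payload)` returns an empty list.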

Consider a scenario where a company experiences a ransomware attack. The response might include restoring data from backups, investigating the attack vector, and patching the exploited vulnerabilities, followed by stronger security controls to prevent future attacks. In general, each attack type calls for countermeasures tailored to the situation.

Q 26. Describe your experience with incident response methodologies.

My incident response work follows a well-established framework, typically NIST SP 800-61 (the Computer Security Incident Handling Guide) or a similar methodology such as the SANS incident handling process. I am proficient in every phase of incident response; in the common six-phase breakdown, these are:

- Preparation: Developing incident response plans, establishing procedures, and conducting regular training and security assessments.

- Identification: Detecting and identifying security incidents through monitoring tools and alerts.

- Containment: Isolating affected systems to prevent further damage or spread of the incident.

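- Eradication: Removing the threat from the environment, for example by deleting malware, disabling compromised accounts, and closing the vulnerability that was exploited.

- Recovery: Restoring data and systems to normal operation and monitoring them to confirm the threat does not return.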

- Post-incident activity: Conducting a thorough analysis of the incident, documenting findings, and implementing corrective actions to prevent future occurrences.

In a real-world scenario, I might be called upon to respond to a data breach. This would involve immediately isolating affected systems, collecting forensic evidence, identifying the source of the breach, restoring data from backups, and then implementing enhanced security measures (such as multi-factor authentication) to prevent similar events in the future.
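Collecting forensic evidence normally includes recording a cryptographic hash of each acquired item, so that anyone can later confirm it has not changed since acquisition. The sketch below computes SHA-256 digests with Python's `hashlib`; the `custody_entry` record layout, the examiner name, and the temporary file standing in for an evidence image are hypothetical.

```python
import hashlib
import os
import tempfile
from datetime import datetime, timezone


def hash_evidence(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading in chunks so large images need little memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def custody_entry(path: str, examiner: str) -> dict:
    """Build a minimal, hypothetical chain-of-custody record for one evidence file."""
    return {
        "file": path,
        "sha256": hash_evidence(path),
        "examiner": examiner,
        "hashed_at_utc": datetime.now(timezone.utc).isoformat(),
    }


def verify(entry: dict) -> bool:
    """Re-hash the file and compare with the digest recorded at acquisition."""
    return hash_evidence(entry["file"]) == entry["sha256"]


# Demonstration on a throwaway file standing in for an evidence image.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"abc")
entry = custody_entry(tmp.name, examiner="J. Doe")
intact_before = verify(entry)

with open(tmp.name, "ab") as f:
    f.write(b"!")  # simulate a single byte of tampering
intact_after = verify(entry)
os.remove(tmp.name)

print(entry["sha256"], intact_before, intact_after)
```

Changing even one byte produces a completely different digest, which is why the hash recorded at acquisition can anchor the chain of custody.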

Q 27. Explain your knowledge of various operating systems and their file systems.

I possess extensive knowledge of various operating systems and their file systems. This includes:

- Windows: Familiar with the NTFS, FAT32, and exFAT file systems, including their metadata structures, NTFS journaling features ($LogFile and the $UsnJrnl change journal), and forensic analysis techniques.

- macOS: Proficient with the Apple File System (APFS), HFS+, and their characteristics, including how data is stored and recovered.

- Linux: Experienced with ext2, ext3, ext4, and Btrfs file systems, understanding their differences in terms of journaling, metadata, and data structures.

- Android: Knowledgeable about Android’s file system structure, including how applications store data, and techniques for extracting data from encrypted devices.

- iOS: Familiar with the iOS file system and how data is stored and managed on iOS devices, including techniques for extracting data from backups.

Understanding these file systems is critical for effective data recovery and forensic analysis. For example, knowing the location of metadata within a specific file system allows efficient extraction of crucial timestamps, file attributes, and other essential information related to digital evidence.
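As an example of knowing where metadata lives: on an NTFS volume, the boot sector records the sector and cluster sizes and the cluster at which the Master File Table ($MFT) begins, and the MFT holds each file's timestamps and attributes. The sketch below reads those fields with Python's `struct` module. The field offsets follow the standard NTFS boot sector layout; the function and type names and the synthetic sample sector are hypothetical, and the code expects the first 512 bytes of the volume itself.

```python
import struct
from typing import NamedTuple


class NtfsBootInfo(NamedTuple):
    bytes_per_sector: int
    sectors_per_cluster: int
    mft_cluster: int       # logical cluster number where $MFT starts
    mft_record_size: int   # size of one MFT entry in bytes
    mft_offset: int        # byte offset of $MFT from the start of the volume


def parse_ntfs_boot_sector(sector: bytes) -> NtfsBootInfo:
    """Locate $MFT using the fields of an NTFS volume boot sector."""
    if len(sector) < 512 or sector[510:512] != b"\x55\xaa":
        raise ValueError("missing boot sector signature 0x55AA")
    if sector[3:11] != b"NTFS    ":
        raise ValueError("OEM ID is not 'NTFS    '")
    # 0x0B: bytes per sector (uint16), 0x0D: sectors per cluster (uint8).
    # Assumes 0x0D holds a plain count (up to 128); some very large cluster
    # sizes are stored in an encoded form that this sketch does not handle.
    bytes_per_sector, sectors_per_cluster = struct.unpack_from("<HB", sector, 0x0B)
    (mft_cluster,) = struct.unpack_from("<Q", sector, 0x30)
    # 0x40: clusters per MFT record (signed); a negative value -n means 2**n bytes.
    (clusters_per_record,) = struct.unpack_from("<b", sector, 0x40)
    cluster_size = bytes_per_sector * sectors_per_cluster
    if clusters_per_record < 0:
        record_size = 1 << -clusters_per_record
    else:
        record_size = clusters_per_record * cluster_size
    return NtfsBootInfo(bytes_per_sector, sectors_per_cluster, mft_cluster,
                        record_size, mft_cluster * cluster_size)


# Synthetic boot sector with typical values (512-byte sectors, 4 KiB clusters,
# 1 KiB MFT records); a real one would be read from a volume image.
demo = bytearray(512)
demo[3:11] = b"NTFS    "
struct.pack_into("<HB", demo, 0x0B, 512, 8)
struct.pack_into("<Q", demo, 0x30, 786432)
struct.pack_into("<b", demo, 0x40, -10)
demo[510:512] = b"\x55\xaa"
info = parse_ntfs_boot_sector(bytes(demo))
print(info)
```

For a partition image, the first 512 bytes of the file can be passed in directly; for a full-disk image, the reader would first seek to the partition's starting offset taken from the partition table.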

Q 28. How do you stay updated with the latest digital forensics techniques and tools?

Staying updated in the dynamic field of digital forensics requires a multi-pronged approach. I regularly engage in the following activities:

- Industry Conferences and Workshops: Attending conferences such as SANS Institute events and specialized digital forensics conferences to learn about the latest techniques and tools from leading experts.

- Professional Certifications: Pursuing and maintaining certifications like Certified Forensic Computer Examiner (CFCE) to demonstrate expertise and stay current with best practices.

- Professional Publications and Journals: Reading industry journals and publications to keep abreast of emerging threats, trends, and innovative solutions.

- Online Courses and Training: Participating in online courses and training programs offered by reputable organizations to enhance specific skills and deepen my knowledge in niche areas.

- Networking with Peers: Engaging with other digital forensics professionals through online forums, professional organizations, and networking events to share knowledge and learn from experiences.

This continuous learning ensures I’m always equipped with the latest knowledge, tools, and techniques to handle the challenges posed by evolving cyber threats and digital investigation methodologies.

Key Topics to Learn for Digital Fires Interview

- Digital Forensics Fundamentals: Understanding the legal and ethical aspects, data acquisition methods, and chain of custody principles.

- Network Forensics: Analyzing network traffic, identifying intrusions, and reconstructing attack timelines using tools like Wireshark.

- Disk Forensics: Mastering techniques for data recovery, file carving, and analyzing file system metadata.

- Mobile Device Forensics: Extracting data from smartphones and tablets, understanding mobile operating systems, and using specialized forensic tools.

- Cloud Forensics: Investigating data breaches and security incidents in cloud environments, understanding cloud storage and service models.

- Malware Analysis: Identifying and analyzing malicious software, understanding malware behavior and techniques for reverse engineering.

- Data Recovery Techniques: Practical application of data recovery methods, including file recovery and partition recovery, for various storage media.

- Incident Response Methodology: Understanding and applying established incident response frameworks (e.g., NIST) to real-world scenarios.

- Report Writing and Presentation: Developing clear, concise, and professional reports summarizing findings and evidence.

- Tools and Technologies: Familiarity with commonly used forensic tools (e.g., Autopsy, FTK Imager) and their applications.
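File carving, mentioned under Disk Forensics above, recovers files from raw data by their content rather than by file system metadata. A minimal header/footer carver for JPEG images looks for the start-of-image marker (FF D8 FF) and the next end-of-image marker (FF D9). This Python sketch is deliberately naive: it does not handle fragmented files, and an embedded thumbnail's own end marker will truncate the carved image. The function name, size cap, and sample bytes are hypothetical.

```python
def carve_jpegs(data: bytes, max_size: int = 10 * 1024 * 1024) -> list[tuple[int, bytes]]:
    """Header/footer carving: return (offset, bytes) for each SOI..EOI span found in data."""
    soi = b"\xff\xd8\xff"  # start-of-image marker plus the first byte of the next marker
    eoi = b"\xff\xd9"      # end-of-image marker
    carved = []
    pos = 0
    while (start := data.find(soi, pos)) != -1:
        end = data.find(eoi, start + len(soi))
        if end == -1 or end + len(eoi) - start > max_size:
            pos = start + 1  # no plausible footer: skip this header and keep scanning
            continue
        carved.append((start, data[start:end + len(eoi)]))
        pos = end + len(eoi)
    return carved


# Hypothetical raw bytes, e.g. read from unallocated space in a disk image.
raw = b"unallocated junk" + b"\xff\xd8\xff\xe0" + b"JFIF-ish payload" + b"\xff\xd9" + b"more junk"
found = carve_jpegs(raw)
for offset, blob in found:
    print(f"JPEG candidate at offset {offset}, {len(blob)} bytes")
```

Each carved candidate would still need validation, for instance by opening it in an image viewer, because header/footer matching alone can produce false positives.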

Next Steps

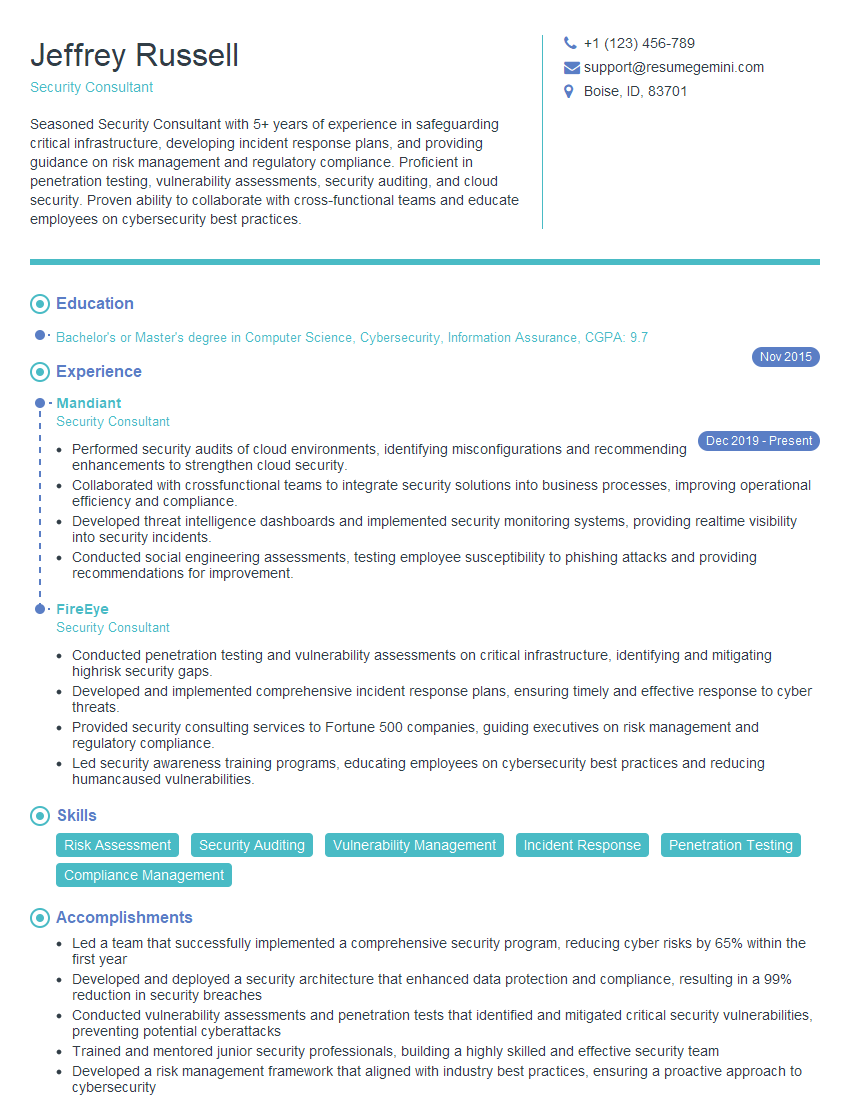

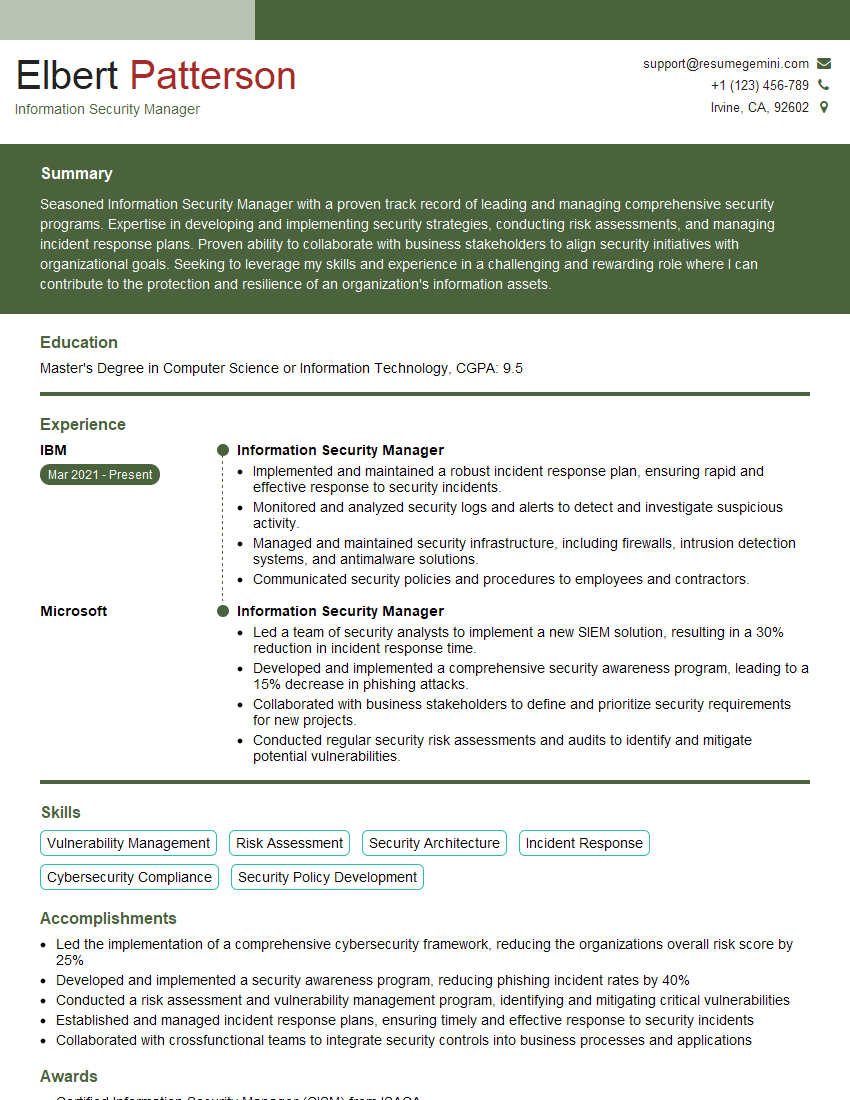

Mastering Digital Fires skills opens doors to exciting and impactful career opportunities in cybersecurity and investigations. To significantly boost your job prospects, crafting an ATS-friendly resume is crucial. This ensures your application gets noticed by recruiters and hiring managers. We highly recommend using ResumeGemini to build a professional and effective resume. ResumeGemini provides valuable tools and resources to help you create a compelling resume that highlights your skills and experience in Digital Fires. Examples of resumes tailored to Digital Fires are available to guide your resume creation process.
