Preparation is the key to success in any interview. In this post, we’ll explore crucial Automation and Technology Integration interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Automation and Technology Integration Interview

Q 1. Explain your experience with different automation frameworks (e.g., Selenium, Robot Framework, UiPath).

My experience spans several automation frameworks, each with its own strengths and weaknesses. Selenium, for instance, drives real browsers through the WebDriver protocol, which makes it well suited to testing web application UIs. I've used it extensively with Java and Python to build test suites for complex web applications. One project involved automating regression testing for an e-commerce platform, which significantly reduced testing time and improved accuracy. Robot Framework, on the other hand, takes a keyword-driven approach, making it easier for teams with varying technical skills to collaborate on test automation. I've used it to integrate various testing tools and build reusable test libraries. Finally, UiPath, a Robotic Process Automation (RPA) tool, is well suited to automating repetitive tasks within desktop applications. I used UiPath to automate data entry from various spreadsheets into a CRM system, freeing employees for higher-value tasks. The choice of framework always depends on the project's requirements: the complexity of the application, the team's skill set, and the overall objectives.

- Selenium: Excellent for web UI automation, supports multiple languages and browsers.

- Robot Framework: Keyword-driven approach, great for collaboration and reusability.

- UiPath: RPA focused, ideal for automating desktop applications and repetitive tasks.
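To make the keyword-driven idea concrete, here is a minimal sketch of a custom Robot Framework keyword library written in Python. It relies on Robot Framework's convention of exposing a library class's public methods as keywords (so `add_item` is used as `Add Item` in test data); the `OrderKeywords` class and its cart logic are hypothetical.

```python
class OrderKeywords:
    """Hypothetical Robot Framework keyword library.

    Robot Framework exposes public methods of a library class as keywords,
    so add_item becomes "Add Item" and cart_should_contain becomes
    "Cart Should Contain".
    """

    def __init__(self):
        self._cart = {}  # sku -> quantity

    def add_item(self, sku, quantity):
        # Values from Robot test data arrive as strings, so convert explicitly.
        self._cart[sku] = self._cart.get(sku, 0) + int(quantity)

    def cart_should_contain(self, sku, quantity):
        actual = self._cart.get(sku, 0)
        if actual != int(quantity):
            # Raising an exception is how a keyword reports a failed step.
            raise AssertionError(f"Expected {quantity} x {sku}, found {actual}")
```

A test case that imports this library can then read as plain steps such as `Add Item    SKU-1    2` followed by `Cart Should Contain    SKU-1    2`, which is what lets less technical team members read and write tests.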

Q 2. Describe your experience with API integration using REST or SOAP.

API integration is a core component of many automation projects. I have extensive experience with both REST and SOAP APIs. REST APIs, using HTTP methods like GET, POST, PUT, and DELETE, are my go-to for most projects due to their simplicity and widespread adoption. For example, I’ve built automation scripts that interact with RESTful APIs to retrieve data from a weather service, process it, and update a database. This involved handling JSON or XML responses and managing authentication tokens. SOAP APIs, while less common now, still find application in enterprise systems. I’ve worked with SOAP APIs using tools like Apache Axis2, handling XML-based requests and responses. A project involved automating the integration of two legacy systems via a SOAP API, reducing manual data transfer and improving data consistency.
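On the SOAP side, the XML payloads can be built and parsed with the standard library. The sketch below assumes a hypothetical `GetCustomer` operation in a made-up service namespace; only the SOAP 1.1 envelope namespace is standard. The HTTP transport (a POST with a `SOAPAction` header, or a generated client such as one from Axis2) is deliberately left out.

```python
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
SVC_NS = "http://example.com/legacy"  # hypothetical service namespace

ET.register_namespace("soap", SOAP_NS)
ET.register_namespace("svc", SVC_NS)

def build_get_customer_request(customer_id):
    """Build a SOAP 1.1 envelope for a hypothetical GetCustomer operation."""
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    operation = ET.SubElement(body, f"{{{SVC_NS}}}GetCustomer")
    ET.SubElement(operation, f"{{{SVC_NS}}}CustomerId").text = str(customer_id)
    return ET.tostring(envelope, encoding="unicode")

def parse_customer_name(response_xml):
    """Return CustomerName from a response, raising if the server sent a Fault."""
    root = ET.fromstring(response_xml)
    fault = root.find(f".//{{{SOAP_NS}}}Fault")
    if fault is not None:
        # In SOAP 1.1, faultstring is an unqualified child of Fault.
        raise RuntimeError(fault.findtext("faultstring", default="SOAP fault"))
    return root.findtext(f".//{{{SVC_NS}}}CustomerName")
```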

Example (REST API call in Python using requests library):

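import requests

# The URL and token are placeholders
response = requests.get('https://api.example.com/data',
                        headers={'Authorization': 'Bearer my_token'}, timeout=10)
response.raise_for_status()  # raise requests.HTTPError on 4xx/5xx responses
data = response.json()  # parse the JSON body into Python objects

Q 3. How do you handle exceptions and errors in automation scripts?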

Robust error handling is critical for reliable automation. My approach involves a multi-layered strategy. Firstly, I use try-except blocks to catch specific exceptions like network errors, file not found errors, or API errors. Secondly, I implement logging mechanisms to record all actions, exceptions, and error messages, which are crucial for debugging and identifying the root cause of issues. Thirdly, I use assertions to verify the expected outcome of each step in the automation process; a failed assertion stops the run at the step where the actual result diverged from the expected one, which makes the problem easy to locate. Finally, I often incorporate mechanisms for retrying failed operations. For instance, if a network request fails, the script might retry it a few times before failing permanently. This improves the resilience of the automation scripts, making them less susceptible to temporary interruptions.
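The retry-with-logging idea can be sketched as a small helper. Which exceptions count as transient is an assumption here: the built-in `ConnectionError` and `TimeoutError` are used so the sketch runs without third-party packages; with the `requests` library you would list `requests.exceptions.ConnectionError` and `requests.exceptions.Timeout` instead.

```python
import logging
import time

logger = logging.getLogger("automation.retry")

def retry(operation, attempts=3, delay=1.0, retry_on=(ConnectionError, TimeoutError)):
    """Call operation(), retrying transient failures with exponential backoff.

    Exceptions not listed in retry_on propagate immediately; after the last
    attempt, the final transient error is re-raised to the caller.
    """
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except retry_on as exc:
            logger.warning("attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                raise
            # Wait 1x, 2x, 4x, ... the base delay between attempts.
            time.sleep(delay * 2 ** (attempt - 1))
```

Re-raising on the last attempt matters: the caller still sees the real error (and its stack trace) instead of a silent `None`.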

Example (Python try-except block):

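try:
    # Code that might raise an exception ('input.csv' is a placeholder path)
    with open('input.csv') as f:
        contents = f.read()
except FileNotFoundError:
    print('File not found')
except Exception as e:
    # Catch-all goes last so the specific handler above runs first
    print(f'An error occurred: {e}')

Q 4. What are the benefits and challenges of cloud-based automation?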

Cloud-based automation offers significant advantages, primarily scalability and cost-effectiveness. You can scale your automation infrastructure up or down with demand and avoid upfront investment in hardware. Cloud platforms also provide a readily available pool of resources for parallel execution, which shortens overall run times. However, there are challenges. Security and data privacy are paramount concerns; you need to ensure your cloud environment is adequately secured. Automation also becomes dependent on internet connectivity: an outage, whether in your connection or at the provider, can halt runs, and network latency can slow them down. Moreover, migrating existing automation solutions to the cloud requires careful planning and execution. I've seen projects where insufficient planning led to increased costs and delays during migration. A well-defined strategy, including cost analysis and a comprehensive security plan, is crucial for successful cloud-based automation.

Q 5. Explain your experience with CI/CD pipelines and automation.

CI/CD pipelines are essential for automating the software development lifecycle, and I have extensive experience integrating automation testing into these pipelines. I typically use tools like Jenkins, GitLab CI, or Azure DevOps to build automated pipelines that trigger automated tests upon code commits. These pipelines include automated builds, unit tests, integration tests, and even UI tests. This ensures that code changes are thoroughly tested before deployment, reducing the risk of introducing bugs into production. For instance, I’ve implemented a CI/CD pipeline where every code push automatically triggers a series of automated tests, generating detailed reports that are used to monitor the quality of the software. This drastically reduced the time it takes to identify and resolve bugs.
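As an illustration of turning test reports into a pipeline decision, here is a sketch of a quality-gate step that reads JUnit-style XML (the format pytest writes with its `--junitxml` option, and that Jenkins, GitLab CI, and Azure DevOps can all ingest). The gate rule, "no failures and no errors", is an assumption; pytest already exits non-zero on failures, so a step like this is mainly useful for aggregating several reports or enforcing custom thresholds.

```python
import xml.etree.ElementTree as ET

def summarize_junit(xml_text):
    """Sum test counts across all <testsuite> elements in a JUnit-style report."""
    root = ET.fromstring(xml_text)
    totals = {"tests": 0, "failures": 0, "errors": 0, "skipped": 0}
    # root.iter() includes the root element itself, so this handles both a
    # <testsuites> wrapper and a bare <testsuite> root.
    for suite in root.iter("testsuite"):
        for key in totals:
            totals[key] += int(suite.get(key, 0))
    return totals

def quality_gate(totals):
    """Return True when the pipeline may proceed (assumed rule: nothing failed)."""
    return totals["failures"] == 0 and totals["errors"] == 0
```

A pipeline step would read the report file, print the totals for the build log, and exit with status 1 when `quality_gate` returns `False`.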

Q 6. Describe your experience with different scripting languages (e.g., Python, PowerShell, JavaScript).

My scripting language proficiency includes Python, PowerShell, and JavaScript. Python is my preferred language for many automation tasks due to its versatility, large ecosystem of libraries (like `requests` for APIs, `selenium` for web automation, and `pytest` for testing), and readability. PowerShell excels for automating tasks within the Windows environment, managing systems, and interacting with Active Directory. I’ve used it to automate system administration tasks, like user provisioning and software deployment. JavaScript is mainly used for front-end web automation and tasks involving browser interactions. The choice of language depends greatly on the specific automation task and the environment it operates within. For instance, Python is ideal for cross-platform API testing and data processing, whereas PowerShell is perfect for managing Windows systems.

Q 7. How do you ensure the scalability and maintainability of your automation solutions?

Scalability and maintainability are crucial aspects of any successful automation solution. To ensure scalability, I design my automation scripts in a modular fashion, using reusable components that can be easily scaled up to handle increasing volumes of data or tasks. For instance, instead of having a single script for all tasks, I create smaller, independent modules. Maintainability involves writing clear, well-documented code, following coding best practices, and using version control systems (like Git). I also incorporate regular code reviews to identify potential issues and ensure the codebase remains clean and easy to understand. Properly structured code makes it simpler to modify, debug, and extend the automation solutions, ensuring longevity and reducing future maintenance efforts. Furthermore, using well-defined naming conventions and establishing a clear coding style guide enhances both readability and maintainability.

Q 8. How do you test and debug automation scripts?

Testing and debugging automation scripts is crucial for ensuring reliability and accuracy. My approach involves a multi-layered strategy combining unit testing, integration testing, and end-to-end testing.

Unit testing focuses on individual components or functions of the script. I use frameworks like pytest (Python) or JUnit (Java) to write tests that verify the functionality of small, isolated parts. For example, if I have a function that extracts data from an email, I’d write unit tests to ensure it correctly handles different email formats and data types.
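A minimal sketch of such unit tests follows. The `extract_order_id` function and the email wording it expects are hypothetical. pytest discovers functions named `test_*` and reports plain `assert` failures with details, so the test code itself needs no pytest import.

```python
import re

# Hypothetical format: "Order #12345", "order 987", etc.
ORDER_ID = re.compile(r"Order\s*#?\s*(\d+)", re.IGNORECASE)

def extract_order_id(email_body):
    """Return the first order number in an email body, or None if absent."""
    match = ORDER_ID.search(email_body)
    return int(match.group(1)) if match else None

# pytest collects test_* functions; plain assert statements are enough.
def test_standard_format():
    assert extract_order_id("Your Order #12345 has shipped") == 12345

def test_lowercase_without_hash():
    assert extract_order_id("re: order 987 delayed") == 987

def test_no_order_id():
    assert extract_order_id("Thanks for contacting support") is None
```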

Integration testing checks how different components interact. This often involves mocking external services (databases, APIs) to isolate the interaction between components.
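Here is a sketch of mocking external services, using Python's standard `unittest.mock`. The `sync_customer` component, the API client, and the repository are hypothetical; the point is that the test checks how the component talks to its collaborators without a real API or database.

```python
from unittest.mock import Mock

def sync_customer(api_client, repository, customer_id):
    """Hypothetical component: fetch a customer from an API and store it."""
    record = api_client.get_customer(customer_id)
    if record is None:
        return False
    repository.save({"id": customer_id, "email": record["email"].lower()})
    return True

def test_sync_saves_normalized_email():
    api = Mock()
    api.get_customer.return_value = {"email": "Ada@Example.com"}  # canned API reply
    repo = Mock()
    assert sync_customer(api, repo, 7) is True
    api.get_customer.assert_called_once_with(7)
    repo.save.assert_called_once_with({"id": 7, "email": "ada@example.com"})

def test_sync_skips_missing_record():
    api = Mock()
    api.get_customer.return_value = None
    repo = Mock()
    assert sync_customer(api, repo, 8) is False
    repo.save.assert_not_called()  # nothing written when the API has no record
```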

End-to-end testing simulates the entire automation process from start to finish. This verifies the script’s overall functionality and catches potential issues stemming from the interaction of multiple components or external systems.

Debugging typically relies on logging statements placed strategically throughout the script. Debuggers built into IDEs like VS Code or IntelliJ are also invaluable for stepping through the code line by line to identify errors, and error messages and stack traces often point directly to the root cause of the problem.

For example, if an API call fails, I'd first check the network logs to determine whether the request was sent correctly and whether the server returned an error. I'd then consult the API documentation to understand the error and correct my script accordingly.

Q 9. Explain your experience with different integration patterns (e.g., message queues, event-driven architecture).

I have extensive experience with various integration patterns, and I tailor my choice to the specific needs of the system. Message queues, like RabbitMQ or Kafka, are ideal for asynchronous communication and decoupling systems. This is particularly useful when systems have varying response times or must handle high volumes of data. Imagine an e-commerce platform: order processing, inventory updates, and shipping notifications can all be handled asynchronously through a message queue, making the system more resilient and scalable.
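The decoupling idea can be shown in-process with Python's thread-safe `queue.Queue`. This is only a stand-in: a broker like RabbitMQ or Kafka adds what this sketch lacks (persistence, delivery across processes and machines, acknowledgements). The order events and the inventory worker are hypothetical.

```python
import queue
import threading

order_events = queue.Queue()
processed = []  # what the consumer has handled, in arrival order

def inventory_worker():
    """Consumer: takes order events off the queue at its own pace."""
    while True:
        event = order_events.get()  # blocks until a message is available
        if event is None:  # sentinel value tells the worker to stop
            break
        processed.append(f"reserved stock for order {event['order_id']}")

worker = threading.Thread(target=inventory_worker)
worker.start()

# Producer: checkout enqueues events and moves on without waiting for inventory.
for order_id in (101, 102, 103):
    order_events.put({"order_id": order_id})

order_events.put(None)
worker.join()
```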

Event-driven architectures (EDA) build upon this concept. Systems react to events published to an event bus. This promotes loose coupling and allows for greater flexibility and scalability. For instance, a change in inventory levels might trigger an event that updates the website’s product availability in real-time, or triggers a re-ordering process automatically.
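A minimal in-process publish/subscribe sketch of the inventory example follows. The `EventBus` class, the `inventory.changed` event name, and the reorder threshold are hypothetical; a production system would use a broker or cloud event service, but the shape is the same: the publisher does not know which handlers react.

```python
from collections import defaultdict

class EventBus:
    """Minimal in-process publish/subscribe bus (illustrative only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # event type -> handlers

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload):
        # The publisher is unaware of who, if anyone, handles the event.
        for handler in self._subscribers[event_type]:
            handler(payload)

bus = EventBus()
availability = {}  # sku -> shown as in stock on the website
reorders = []      # skus queued for automatic re-ordering

def update_availability(event):
    availability[event["sku"]] = event["on_hand"] > 0

def reorder_if_low(event, threshold=5):
    if event["on_hand"] < threshold:
        reorders.append(event["sku"])

bus.subscribe("inventory.changed", update_availability)
bus.subscribe("inventory.changed", reorder_if_low)
bus.publish("inventory.changed", {"sku": "SKU-1", "on_hand": 3})
```

Adding another reaction, such as a notification, means subscribing one more handler; the code that publishes inventory changes stays untouched.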

I’ve also worked with synchronous approaches like REST APIs for tighter integration where immediate responses are required. The choice depends on factors like performance requirements, system complexity, and error handling needs.

My experience encompasses designing and implementing systems that leverage these integration patterns effectively, considering factors like message durability, ordering guarantees, and fault tolerance to build robust and reliable systems.

Q 10. How do you choose the right automation tool for a specific task?

Selecting the right automation tool is crucial for project success. My process involves analyzing several factors:

- Task complexity: Simple tasks might only require scripting languages like Python or PowerShell, while complex ones might benefit from Robotic Process Automation (RPA) tools like UiPath or Automation Anywhere.

- Target systems: The technology stack of the systems you’re integrating with will significantly influence the choice. If you’re working with web applications, Selenium might be suitable. For legacy systems, tools with specific integrations might be necessary.

- Team expertise: Choose tools that align with the team’s skills and experience. A tool with a steep learning curve can slow down the project.

- Scalability and maintainability: Consider the long-term maintainability of the automation. A well-structured, modular approach using a robust framework is often preferable.

- Cost and licensing: Evaluate the cost and licensing implications of the tools. Open-source options offer flexibility, while commercial tools may offer better support and features.

For example, if I needed to automate data entry from a spreadsheet into a CRM, a scripting solution combined with an API might suffice. However, if I needed to automate interactions with a legacy system’s user interface, an RPA tool might be more suitable.
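One way to make these trade-offs explicit is a weighted scoring matrix. The sketch below is an illustration rather than a prescribed method: the weights and the 1 to 5 ratings are hypothetical values for the spreadsheet-to-CRM scenario above, where the CRM is assumed to expose an API.

```python
# Hypothetical weights for the factors listed above (they sum to 1.0).
WEIGHTS = {
    "task_complexity": 0.20,
    "target_systems": 0.30,
    "team_expertise": 0.20,
    "maintainability": 0.15,
    "cost": 0.15,
}

def score(ratings):
    """Weighted sum of 1-5 ratings for one candidate tool."""
    return sum(WEIGHTS[factor] * ratings[factor] for factor in WEIGHTS)

# Hypothetical ratings for the spreadsheet-to-CRM example.
candidates = {
    "Python + REST API": {"task_complexity": 4, "target_systems": 4,
                          "team_expertise": 5, "maintainability": 4, "cost": 5},
    "RPA tool": {"task_complexity": 4, "target_systems": 3,
                 "team_expertise": 3, "maintainability": 3, "cost": 2},
}

ranked = sorted(candidates, key=lambda name: score(candidates[name]), reverse=True)
```

For the legacy-UI case, the RPA tool's `target_systems` rating would rise sharply, which is exactly the shift the example above describes.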

Q 11. Describe your experience with database integration (e.g., SQL, NoSQL).

Database integration is a frequent component of my automation projects. I have extensive experience working with both SQL and NoSQL databases. With SQL databases (like MySQL, PostgreSQL, SQL Server), I use standard SQL queries and stored procedures for data retrieval, manipulation, and insertion. I’m proficient in using database connectors and libraries (e.g., psycopg2 for PostgreSQL in Python) to integrate my automation scripts seamlessly.

For NoSQL databases (like MongoDB, Cassandra), the approach is different. I use the respective database drivers and APIs to interact with the data. Both the query language and the data model differ from SQL, so a good understanding of the specific NoSQL database is required. For example, I'd use pymongo (Python) to interact with MongoDB, which stores and returns JSON-like documents.

In both cases, security best practices are paramount. I always ensure that database credentials are managed securely, and I use parameterized queries or prepared statements to prevent SQL injection vulnerabilities. Transaction management is also crucial to ensure data consistency.
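Parameterized queries and transaction management can be sketched with Python's built-in `sqlite3` module, used here as a runnable stand-in for PostgreSQL. With psycopg2 the pattern is the same, but the placeholder is `%s` instead of `?`. The `invoices` table and its fields are hypothetical.

```python
import sqlite3

def record_invoices(conn, invoices):
    """Insert a batch of invoices atomically using parameterized queries."""
    # "with conn" commits on success and rolls back if any statement raises.
    with conn:
        conn.executemany(
            "INSERT INTO invoices (number, amount) VALUES (?, ?)",  # values bound, never spliced in
            [(inv["number"], inv["amount"]) for inv in invoices],
        )

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE invoices (number TEXT PRIMARY KEY, amount REAL NOT NULL)")

record_invoices(conn, [{"number": "INV-1", "amount": 120.0}])

# A hostile-looking value is stored as plain data, not executed as SQL.
record_invoices(conn, [{"number": "INV-2'; DROP TABLE invoices; --", "amount": 5.0}])

# A batch containing a duplicate key fails as a whole; INV-3 is rolled back too.
try:
    record_invoices(conn, [{"number": "INV-3", "amount": 1.0},
                           {"number": "INV-1", "amount": 2.0}])
except sqlite3.IntegrityError:
    pass
```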

Q 12. Explain your approach to automating a complex business process.

Automating a complex business process requires a structured approach. I typically follow these steps:

- Process mapping: Thoroughly map the current business process, identifying all steps, actors, inputs, and outputs. This often involves collaborating with business stakeholders to gain a deep understanding of the process.

- Decomposition: Break down the complex process into smaller, manageable sub-processes. This simplifies the design, testing, and maintenance of the automation.

- Technology selection: Choose appropriate technologies and tools for each sub-process based on the factors discussed in Q 10.

- Development and testing: Develop and thoroughly test each component individually and as an integrated system, using the testing methodologies discussed earlier.

- Deployment and monitoring: Deploy the automated system to the production environment, and actively monitor its performance and effectiveness.

- Iteration and improvement: Continuously monitor, evaluate, and improve the automated process based on feedback and performance data.

For example, automating an accounts payable process might involve decomposing it into tasks like invoice data extraction (OCR), validation against purchase orders, payment processing, and reporting. Each task can be handled by a separate component, simplifying development and maintenance.
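The decomposition can be sketched as small, separately testable functions wired together by an orchestrator. Everything here is hypothetical: the OCR step is stubbed as parsing a comma-separated line, purchase orders come from an in-memory dict instead of an ERP, and payment is reduced to recording the invoice number.

```python
from dataclasses import dataclass

@dataclass
class Invoice:
    number: str
    po_number: str
    amount: float

# Hypothetical purchase-order amounts; in practice these come from the ERP.
PURCHASE_ORDERS = {"PO-100": 250.0, "PO-200": 80.0}

def extract(raw):
    """Stand-in for the OCR step: parse a 'number, po, amount' line."""
    number, po_number, amount = raw.split(",")
    return Invoice(number.strip(), po_number.strip(), float(amount))

def validate(invoice):
    """Check an invoice against its purchase order; return a problem or None."""
    expected = PURCHASE_ORDERS.get(invoice.po_number)
    if expected is None:
        return f"unknown PO {invoice.po_number}"
    if abs(expected - invoice.amount) > 0.01:
        return f"amount {invoice.amount} does not match PO {expected}"
    return None

def run(raw_invoices):
    """Orchestrate the components and produce a simple report."""
    report = {"paid": [], "exceptions": []}
    for raw in raw_invoices:
        invoice = extract(raw)
        problem = validate(invoice)
        if problem:
            report["exceptions"].append((invoice.number, problem))  # route to a person
        else:
            report["paid"].append(invoice.number)  # payment step stubbed out
    return report
```

Because each stage is a plain function, the OCR stub can later be replaced by a real extraction service without touching validation or reporting.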

Q 13. How do you manage dependencies between different automation components?

Managing dependencies between automation components is critical for maintainability and scalability. I employ several strategies:

- Version control: Utilizing a version control system like Git helps track changes to different components and manage different versions. This allows for easier rollbacks and collaboration.

- Dependency management tools: Tools like pip (Python), npm (Node.js), or Maven (Java) help manage external libraries and dependencies. They ensure consistency across different environments and simplify updates.

- Modular design: Designing components as independent, reusable modules reduces coupling and simplifies integration. Each module exposes a well-defined interface, so other components depend on that interface rather than on its internal implementation details.

- Containerization: Containerization technologies like Docker package components and their dependencies into isolated containers, ensuring consistent execution across different environments. This simplifies deployment and avoids conflicts.

- Configuration management: Centralized configuration management tools allow modification of parameters without changing the code, reducing dependencies on hardcoded values.

For instance, packaging each component as a Docker image pins its runtime and library versions, so the component behaves the same on a developer's machine, a CI runner, and a production host.
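For the configuration-management point, here is a minimal sketch of layered configuration: built-in defaults, then an optional JSON file, then environment-variable overrides. The `APP_` prefix and the setting names are hypothetical; dedicated tools offer far more, but the principle of changing parameters without changing code is the same.

```python
import json
import os

# Hypothetical settings and defaults.
DEFAULTS = {"api_base_url": "https://api.example.com", "batch_size": 100, "timeout_s": 10}

def load_config(path=None, env=os.environ):
    """Merge defaults, an optional JSON file, and APP_* environment overrides."""
    config = dict(DEFAULTS)
    if path:
        with open(path) as f:
            config.update(json.load(f))
    for key, default in DEFAULTS.items():
        env_value = env.get(f"APP_{key.upper()}")
        if env_value is not None:
            # Cast with the default's type so "250" becomes 250 for batch_size.
            config[key] = type(default)(env_value)
    return config
```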

Q 14. Describe your experience with monitoring and logging in automated systems.

Monitoring and logging are vital for the ongoing health and maintenance of automated systems. My approach involves a multi-faceted strategy:

- Centralized logging: I use centralized logging solutions (e.g., ELK stack, Splunk) to aggregate logs from different components into a single location for easier analysis and troubleshooting. This enables efficient search and correlation of log entries for quicker problem identification.

- Structured logging: I utilize structured logging formats (like JSON) to make log analysis easier. This allows for efficient filtering and querying of logs based on specific fields.

- Alerting and notifications: I set up alerts based on critical events (e.g., errors, exceptions, performance thresholds) to promptly identify and address issues. Notifications are sent through appropriate channels (email, Slack, PagerDuty).

- Metrics and dashboards: I use monitoring tools to track key metrics (e.g., execution time, error rates, resource utilization) and create dashboards for visualizing the system’s health. This proactive approach helps to identify potential issues before they escalate.

- Automated testing and reports: Regular automated tests provide insights into the health of the automation systems. Reports from these tests combined with monitoring data provide a holistic view of the system.

For example, if a specific API call consistently experiences high latency, the monitoring dashboards and logs help to pinpoint the problem, perhaps leading to optimization of the API interaction or scaling of the target service.
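Structured logging needs nothing beyond the standard `logging` module: a custom `Formatter` can emit one JSON object per line, which log shippers feeding ELK or Splunk can index field by field. The field names and the `fields` convention for extra data are assumptions made for this sketch.

```python
import json
import logging

class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record):
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Assumed convention: extra={"fields": {...}} adds top-level keys.
        entry.update(getattr(record, "fields", {}))
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)

handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("automation.api")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

# latency_ms becomes a queryable field, e.g. for a "slow API calls" dashboard.
logger.info("API call completed", extra={"fields": {"endpoint": "/orders", "latency_ms": 812}})
```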

Q 15. How do you handle security concerns in automation scripts?

Security is paramount in automation. Think of it like securing your home – you wouldn’t leave the doors unlocked! We employ a multi-layered approach. First, we adhere to the principle of least privilege, granting scripts only the necessary permissions to perform their tasks. This limits the damage if a script is compromised. Second, we use secure coding practices to prevent vulnerabilities like SQL injection or cross-site scripting. This includes input validation, parameterized queries, and escaping special characters. Third, we leverage robust authentication and authorization mechanisms. This could involve integrating with existing security systems, using API keys, or implementing multi-factor authentication where appropriate. Finally, we regularly monitor our scripts for suspicious activity and implement logging and auditing to track all actions. For example, in a financial automation script, we would never hardcode sensitive credentials directly into the code; instead, we’d use a secure vault or environment variables.
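Keeping credentials out of code can be as simple as reading them at runtime from the environment, where a vault agent or the CI system injects them. The variable name `PAYMENTS_API_KEY` is hypothetical, and the vault integration itself is not shown. The sketch fails fast with the variable's name only and offers a masked form for logs.

```python
import os

class MissingSecretError(RuntimeError):
    """Raised when a required credential was not provided at runtime."""

def get_secret(name, env=os.environ):
    """Read a credential injected at runtime (e.g. by a vault agent or CI)."""
    value = env.get(name)
    if not value:
        # Report which variable is missing, never any secret value.
        raise MissingSecretError(f"required secret {name} is not set")
    return value

def mask(secret, visible=4):
    """Return a log-safe form of a secret, showing at most its last characters."""
    return ("****" + secret[-visible:]) if len(secret) > visible else "****"
```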

Q 16. What are the key performance indicators (KPIs) you track for automation projects?

KPIs for automation projects depend on the specific goals, but some common ones include:

- Automation Rate: The percentage of tasks that run without manual intervention. For example, 80% when 80 of 100 data entry tasks are fully automated.

- Error Rate: The percentage of tasks with errors. Ideally, this should be near zero. If it’s consistently above a certain threshold, it signals a need for review and improvement.

- Throughput: The number of tasks completed per unit of time. This measures efficiency gains from automation.

- Cost Savings: The reduction in operational costs achieved through automation.

- Cycle Time: The time taken to complete a task or process. Automation usually significantly reduces this.

- Defect Density: The number of defects found per unit of code size (commonly per thousand lines of code), crucial for software automation.

We regularly track these metrics using dashboards and reporting tools to monitor progress and identify areas for improvement. A sudden spike in the error rate, for instance, would immediately trigger an investigation.
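Several of these KPIs fall out of simple run records. The sketch below assumes one record per task and makes its definitions explicit, because they vary between teams; for instance, error rate here is measured against automated runs only. `RunRecord` and its fields are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class RunRecord:
    task_id: str
    automated: bool    # False if a person had to complete the task
    succeeded: bool
    duration_s: float  # cycle time for this task

def compute_kpis(records, window_hours):
    """Compute a few common automation KPIs (definitions are assumptions)."""
    if not records or window_hours <= 0:
        raise ValueError("need at least one record and a positive time window")
    automated = [r for r in records if r.automated]
    failed = [r for r in automated if not r.succeeded]
    return {
        # Share of all tasks that ran without manual intervention
        "automation_rate": len(automated) / len(records),
        # Share of automated runs that ended in an error
        "error_rate": len(failed) / len(automated) if automated else 0.0,
        # Successfully completed automated tasks per hour
        "throughput_per_hour": (len(automated) - len(failed)) / window_hours,
        # Mean cycle time of automated runs, in seconds
        "avg_cycle_time_s": (sum(r.duration_s for r in automated) / len(automated)
                             if automated else 0.0),
    }
```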

Q 17. How do you ensure the accuracy and reliability of automated data processing?

Accuracy and reliability in automated data processing are critical. We achieve them through a combination of techniques. Firstly, we employ rigorous data validation at each step of the process. This includes checks for data type, format, range, and consistency. Secondly, we implement robust error handling and exception management. If an error occurs, the system should handle it gracefully, preventing data corruption or unexpected behavior. Thirdly, we use checksums or cryptographic hash functions (such as SHA-256) to verify data integrity. These functions produce a fixed-size digest of the data; recomputing the digest later and comparing it with the original reveals whether the data has been altered. Fourthly, we conduct thorough testing, including unit testing, integration testing, and user acceptance testing, to identify and fix inaccuracies or bugs before deployment. Finally, we use data reconciliation techniques to compare processed data against source data and identify any discrepancies.

Imagine a payroll system. Data validation ensures that employee IDs are numeric, salaries are positive numbers, and dates are in the correct format. Error handling ensures that the system doesn’t crash if it encounters an invalid entry. Checksums make sure that data transmitted between systems is not corrupted during transfer.
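The payroll checks and the integrity check can be sketched with the standard library. The field names (`employee_id`, `salary`, `pay_date`) are hypothetical; the validator collects every problem in a row instead of stopping at the first, so one error report covers the whole record.

```python
import hashlib
from datetime import date

def validate_payroll_row(row):
    """Return a list of problems with one payroll row (empty list means valid)."""
    problems = []
    if not str(row.get("employee_id", "")).isdigit():
        problems.append("employee_id must be numeric")
    try:
        if float(row.get("salary", "")) <= 0:
            problems.append("salary must be positive")
    except (TypeError, ValueError):
        problems.append("salary must be a number")
    try:
        date.fromisoformat(row.get("pay_date", ""))
    except (TypeError, ValueError):
        problems.append("pay_date must be an ISO date (YYYY-MM-DD)")
    return problems

def sha256_of(data: bytes) -> str:
    """Digest the sender publishes alongside a file; the receiver recomputes it."""
    return hashlib.sha256(data).hexdigest()
```

If the digest the receiver computes differs from the one sent with the file, the transfer is rejected and retried rather than processed.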

Q 18. Describe your experience with robotic process automation (RPA).

I have extensive experience with Robotic Process Automation (RPA). I’ve used tools like UiPath and Automation Anywhere to automate repetitive, rule-based tasks across various applications. For example, I automated a client’s invoice processing system, which previously involved manual data entry from emails and PDFs into their ERP system. The RPA bot extracted data using OCR, validated it, and then populated the ERP system, resulting in a significant reduction in processing time and human error. Another project involved automating customer onboarding, extracting information from various forms and automatically creating accounts and assigning them to the appropriate teams. My work has focused on identifying suitable processes for automation, designing the automation workflows, developing and testing the bots, and deploying and maintaining them. I’m proficient in configuring bot schedulers, exception handling, and integrating RPA with other systems.

Q 19. How do you manage changes in automated systems?

Managing changes in automated systems requires a structured approach. We typically use a change management process that includes impact assessment, testing, and rollback planning. Before making any changes, we assess the potential impact on other systems and processes so that a change in one part of the system doesn't cause unintended consequences elsewhere. We then implement the changes in a controlled environment, such as a staging or test environment, before deploying them to production. Thorough testing is crucial to ensure that the changes function as expected and don't introduce new errors. Finally, before any production deployment, we prepare a rollback plan in case of unexpected issues. This might involve reverting to a previous version of the system or keeping a backup readily available. The process mirrors how software releases are managed and keeps disruption to a minimum.

Q 20. Explain your experience with version control systems (e.g., Git).

I’m highly proficient with Git, and I use it daily for version control in automation projects. I understand branching strategies, merging, pull requests, and conflict resolution. For example, I recently used Git to manage the development of an automation script that integrated multiple APIs. Each developer worked on different aspects of the integration, using separate branches. We used pull requests to review and merge changes, ensuring that the code remained clean and functional. Git’s ability to track changes, revert to previous versions, and facilitate collaboration is invaluable for managing complex automation projects. It’s like having a detailed history of every change made, allowing for easy troubleshooting and collaboration.

Example: git checkout -b feature/api-integration  # Create a new branch

Q 21. Describe a time you had to troubleshoot a complex automation issue.

In one project, we were automating a data migration process. The script failed intermittently, producing inconsistent results. After initial debugging, we couldn’t pinpoint the issue. We systematically investigated by:

- Enhanced Logging: We increased the level of logging to capture detailed information about each step of the process.

- Data Analysis: We meticulously analyzed the input and output data, looking for patterns or anomalies.

- Environment Check: We verified the environment variables and configurations to rule out issues with external systems.

- Testing: We created smaller, isolated tests to narrow down the failing component.

Eventually, we discovered that a third-party API we were using had intermittent latency issues that affected the data processing. By implementing retry mechanisms and error handling within the script, we successfully resolved the issue and ensured reliable data migration. This experience highlighted the importance of comprehensive debugging techniques and the value of thorough logging and monitoring in troubleshooting complex automation issues.

Q 22. How do you ensure the security and compliance of automated systems?

Ensuring the security and compliance of automated systems is paramount. It’s not just about building the automation; it’s about building it securely and responsibly from the ground up. This involves a multi-layered approach.

Secure Coding Practices: Employing secure coding principles throughout the development lifecycle is fundamental. This includes input validation, output encoding, and preventing SQL injection and cross-site scripting (XSS) vulnerabilities. Regular security code reviews and penetration testing are crucial.

Access Control and Authentication: Robust access control mechanisms are vital to restrict access to sensitive data and system functionalities. Strong authentication methods like multi-factor authentication (MFA) should be implemented. Principle of least privilege should be strictly followed, granting users only the necessary permissions.

Data Encryption: Protecting data at rest and in transit is critical. This involves employing strong encryption algorithms for sensitive data stored in databases and files and for data transmitted over networks. Sound key management, including key rotation and tightly restricted access to keys, is also necessary.

Compliance Frameworks: Adherence to relevant industry standards and compliance frameworks (e.g., ISO 27001, HIPAA, GDPR) is vital. This necessitates documenting processes, implementing security controls, and undergoing regular audits.

Monitoring and Logging: Real-time monitoring of automated systems allows for the detection of anomalies and security threats. Comprehensive logging helps in identifying security breaches, tracking access attempts, and troubleshooting issues. Security Information and Event Management (SIEM) systems can be valuable here.

Vulnerability Management: Regularly scanning for and patching security vulnerabilities in software and hardware components is crucial. This involves utilizing vulnerability scanners and maintaining an up-to-date inventory of all systems and software.

For example, in a financial automation project, we might implement end-to-end encryption for sensitive transaction data, use role-based access control to restrict access to financial reports based on employee roles, and regularly audit the system’s logs for suspicious activity. Failure to address security from the outset can lead to significant financial and reputational damage.
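Role-based access control for the financial-report example can be sketched as a mapping from roles to permissions plus a check at every entry point. The roles, permission strings, and `read_financial_report` function are hypothetical; note that the service account for the payment bot gets only the single permission it needs, in line with least privilege.

```python
# Hypothetical role-to-permission mapping for a financial automation system.
ROLE_PERMISSIONS = {
    "analyst": {"report:read"},
    "controller": {"report:read", "report:approve"},
    "payments_bot": {"payment:submit"},  # service account: least privilege
}

class PermissionDenied(Exception):
    pass

def require(permission, roles):
    """Raise unless at least one of the caller's roles grants the permission."""
    granted = set().union(*(ROLE_PERMISSIONS.get(role, set()) for role in roles))
    if permission not in granted:
        raise PermissionDenied(f"roles {sorted(roles)} lack {permission}")

def read_financial_report(user_roles, report_id):
    require("report:read", user_roles)  # checked before any data is touched
    return f"contents of {report_id}"
```

Denied attempts are also worth logging, since they are exactly the events an audit or SIEM review looks for.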

Q 23. Explain your understanding of different integration methodologies (e.g., Agile, Waterfall).

Agile and Waterfall are software delivery methodologies; applied to integration projects, they shape how we plan and sequence the work of combining different systems or components. They are the two most prominent approaches.

Waterfall: This is a sequential approach where each phase (requirements, design, implementation, testing, deployment, maintenance) must be completed before the next begins. It’s highly structured and well-documented, making it suitable for projects with stable requirements. However, it can be inflexible and less adaptable to changing requirements.

Agile: This iterative and incremental approach emphasizes flexibility and collaboration. Projects are broken down into smaller iterations (sprints), with continuous feedback and adaptation. It’s better suited for projects with evolving requirements, but requires strong communication and collaboration.

The choice depends on the project’s nature and complexity. For instance, integrating a legacy system with a new CRM might benefit from a Waterfall approach due to the well-defined nature of the legacy system’s interface. In contrast, building a new automated testing framework might be better suited to an Agile approach to allow for flexibility and iterative improvements based on testing results.

Q 24. How do you collaborate with other teams (e.g., developers, testers, business analysts) in automation projects?

Collaboration is the cornerstone of successful automation projects. I approach this by fostering open communication and clear expectations.

Regular Meetings: Conducting regular meetings (daily stand-ups, weekly progress meetings) with developers, testers, and business analysts ensures everyone is aligned on progress, challenges, and upcoming tasks.

Shared Tools and Platforms: Utilizing collaborative tools like project management software (Jira, Asana), version control systems (Git), and communication platforms (Slack, Microsoft Teams) facilitates efficient information sharing and teamwork.

Clear Roles and Responsibilities: Defining clear roles and responsibilities upfront prevents confusion and ensures accountability. This also includes establishing a clear escalation path for resolving conflicts or roadblocks.

Feedback Mechanisms: Establishing mechanisms for regular feedback (e.g., code reviews, sprint retrospectives) ensures continuous improvement and identifies potential issues early.

For example, in a recent project involving RPA (Robotic Process Automation) implementation, I worked closely with developers to design the automation workflows, with testers to create test cases and scenarios, and with business analysts to ensure the automation aligned with business requirements. This collaborative approach ensured that the automation met the needs of all stakeholders.

Q 25. How do you document your automation processes and solutions?

Comprehensive documentation is crucial for maintainability, scalability, and knowledge transfer. My approach involves several key aspects:

Process Documentation: Clearly documenting the automation processes, including workflows, inputs, outputs, error handling, and decision points. This often includes flowcharts, diagrams, and written descriptions.

Code Documentation: Writing clear and concise code comments explaining the purpose, functionality, and logic of the code. Following consistent coding standards also improves readability and maintainability.

Technical Specifications: Documenting technical specifications, such as system architecture, hardware and software requirements, and data formats.

User Manuals and Training Materials: Creating user manuals and training materials for users and maintainers of the automation system.

Version Control: Utilizing a version control system (like Git) to track changes to the code and documentation, enabling easy rollback and collaboration.

I typically use a combination of wikis, documentation software, and version control systems to manage documentation. For example, a detailed wiki might explain the overall automation architecture, while code comments would explain the specific logic within individual scripts. Well-maintained documentation ensures smooth handover to other teams and simplifies troubleshooting down the line.
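To illustrate the code-documentation point, here is a minimal sketch of how a single helper in a data-entry bot might be documented. The function `normalize_phone` and its formatting rule are hypothetical. The docstring follows the Google style (Args/Returns/Raises), and its `>>>` lines are doctests, so Python’s built-in `doctest` module can check the documentation against the code automatically.

```python
import doctest
import re


def normalize_phone(raw: str) -> str:
    """Normalize a US phone number to the form ``XXX-XXX-XXXX``.

    Hypothetical helper for a spreadsheet-to-CRM data-entry bot. It strips
    formatting characters and an optional leading country code "1".

    Args:
        raw: Phone number exactly as typed into the source spreadsheet.

    Returns:
        The number formatted as ``XXX-XXX-XXXX``.

    Raises:
        ValueError: If the input does not contain exactly 10 digits
            (after removing an optional leading "1").

    Examples:
        >>> normalize_phone("(555) 123-4567")
        '555-123-4567'
        >>> normalize_phone("+1 555.123.4567")
        '555-123-4567'
    """
    digits = re.sub(r"\D", "", raw)  # keep only the digits
    # Drop a leading US country code so "+1 555..." and "555..." match.
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]
    if len(digits) != 10:
        raise ValueError(f"expected 10 digits, got {len(digits)}: {raw!r}")
    return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"


if __name__ == "__main__":
    # Running the module verifies that the docstring examples still hold.
    doctest.testmod()
```

Running `python -m doctest -v yourfile.py` executes the examples too, which helps stop comments and behavior from drifting apart as the script evolves.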

Q 26. Describe your experience with different automation testing strategies (e.g., unit testing, integration testing).

Automation testing strategies are crucial for ensuring the quality and reliability of automated systems. Different strategies are employed at different stages.

Unit Testing: Testing individual components or modules of the automation system in isolation. This involves writing test cases to verify the functionality of each unit. Examples include testing individual functions or methods within a script using tools like JUnit or pytest.

Integration Testing: Testing the interaction between different components or modules of the system. This verifies that the components work together correctly. For example, testing the integration between an automated data extraction process and a database update process.

System Testing: Testing the entire system as a whole to ensure it meets the specified requirements. This includes functional testing, performance testing, security testing, and usability testing.

Regression Testing: Testing the system after changes or updates to ensure that existing functionality hasn’t been broken. This is crucial when introducing new features or bug fixes.
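The difference between the first two levels can be made concrete with a small pytest-style test file. This is a sketch built on hypothetical components: `parse_order_line` stands in for a data extraction step, `load_orders` for a database update, and an in-memory SQLite database (Python’s built-in `sqlite3`) stands in for the real backend. The unit test exercises one function in isolation; the integration test runs both steps against the database together.

```python
import sqlite3


# --- Hypothetical automation components under test --------------------------

def parse_order_line(line: str) -> tuple[str, int]:
    """Extraction step: turn 'SKU-123, 4' into ('SKU-123', 4)."""
    sku, qty = (part.strip() for part in line.split(","))
    return sku, int(qty)


def load_orders(conn: sqlite3.Connection, lines: list[str]) -> int:
    """Load step: parse each line and insert it into the orders table."""
    rows = [parse_order_line(line) for line in lines]
    conn.executemany("INSERT INTO orders (sku, qty) VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)


# --- Unit test: one function, no database ------------------------------------

def test_parse_order_line():
    assert parse_order_line(" SKU-123 , 4 ") == ("SKU-123", 4)


# --- Integration test: extraction and database update together --------------

def test_load_orders_into_database():
    conn = sqlite3.connect(":memory:")  # throwaway database for this test only
    conn.execute("CREATE TABLE orders (sku TEXT, qty INTEGER)")
    inserted = load_orders(conn, ["SKU-1, 2", "SKU-2, 5"])
    total = conn.execute("SELECT SUM(qty) FROM orders").fetchone()[0]
    conn.close()
    assert inserted == 2
    assert total == 7


if __name__ == "__main__":
    # pytest discovers the test_* functions automatically; this fallback
    # lets the file also run as a plain script.
    test_parse_order_line()
    test_load_orders_into_database()
    print("all tests passed")
```

Keeping these tests in the suite and rerunning them after every change is also the simplest form of regression testing.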

These strategies complement rather than replace one another, and how heavily each is used depends on the complexity of the system; a complex system usually needs all of them. For instance, in a recent project involving an API integration, we ran unit tests on individual API calls, integration tests to verify the interaction between the API and the backend system, and system tests to verify the end-to-end behavior of the entire integration flow.
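For the API-integration case, a unit test of an individual API call typically replaces the network with a test double so it runs quickly and deterministically. Below is a minimal sketch using only the standard library (`urllib.request` and `unittest.mock`). The endpoint URL, the `fetch_temperature` function, and the `temp_c` response field are all hypothetical.

```python
import json
import unittest
import urllib.parse
import urllib.request
from unittest import mock

BASE_URL = "https://api.example.com/weather"  # hypothetical endpoint


def fetch_temperature(city: str) -> float:
    """Unit under test: one API call plus response parsing."""
    query = urllib.parse.urlencode({"city": city})
    with urllib.request.urlopen(f"{BASE_URL}?{query}", timeout=10) as resp:
        payload = json.load(resp)  # reads the body and parses the JSON
    return float(payload["temp_c"])  # hypothetical response field


class FetchTemperatureUnitTest(unittest.TestCase):
    # Patch urlopen so the test never touches the network.
    @mock.patch("urllib.request.urlopen")
    def test_parses_temperature_and_builds_query(self, mock_urlopen):
        fake_resp = mock.MagicMock()
        fake_resp.read.return_value = b'{"temp_c": 21.5}'
        # urlopen is used as a context manager, so stub what __enter__ returns.
        mock_urlopen.return_value.__enter__.return_value = fake_resp

        self.assertEqual(fetch_temperature("Oslo"), 21.5)
        requested_url = mock_urlopen.call_args.args[0]
        self.assertIn("city=Oslo", requested_url)


if __name__ == "__main__":
    unittest.main(argv=["fetch-temperature-tests"], exit=False)
```

The integration tests would then drop the mock and call a real (test or staging) instance of the API, which is where problems with authentication, timeouts, and payload formats tend to surface.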

Q 27. How do you measure the ROI of automation projects?

Measuring the ROI of automation projects involves quantifying both the costs and benefits. Key metrics include:

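Reduced Labor Costs: Calculating the reduction in labor costs due to automation. This requires estimating the time saved per task, multiplying it by the number of times the task runs over the period, and multiplying the result by the hourly cost of human labor (an annual cost must first be converted to an hourly rate).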

Increased Efficiency and Productivity: Measuring the improvement in efficiency and productivity due to automation. This can be expressed in terms of increased throughput, reduced processing time, or improved accuracy.

Reduced Errors and Defects: Quantifying the reduction in the errors and defects that manual handling introduces. This often translates to lower costs associated with rework, corrections, and customer dissatisfaction.

Improved Compliance and Risk Mitigation: Assessing the benefits of improved compliance and reduced risks associated with manual processes. This can be challenging to quantify but is often a significant advantage.

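Return on Investment (ROI): Calculating the ROI by comparing the total benefits achieved with the total cost of the automation project over the same period, typically as (benefits - costs) / costs, expressed as a percentage. It is often reported alongside the payback period, the time it takes for net savings to recover the upfront investment.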

For example, in a customer service automation project, we measured the ROI by comparing the cost of implementing chatbots to the reduction in human agent workload, the improvement in response times, and the increase in customer satisfaction. A detailed cost-benefit analysis is vital for justifying automation initiatives.
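The cost-benefit arithmetic above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the field names, the two-year horizon, and every figure are hypothetical rather than results from a real project, and it uses the simple (benefits - costs) / costs formula with no discounting. Benefits that resist a dollar value, such as customer satisfaction, are left out and would be reported separately.

```python
from dataclasses import dataclass


@dataclass
class AutomationCase:
    """Cost/benefit inputs for one automation project (all are estimates)."""
    tasks_per_year: int            # how often the automated task runs
    hours_saved_per_task: float    # manual time minus automated time
    hourly_labor_cost: float       # fully loaded cost per labor hour
    error_savings_per_year: float  # rework and corrections avoided
    upfront_cost: float            # build, licences, rollout, training
    running_cost_per_year: float   # maintenance, hosting, support


def annual_benefit(c: AutomationCase) -> float:
    """Labor savings (volume x time saved x rate) plus avoided error costs."""
    labor = c.tasks_per_year * c.hours_saved_per_task * c.hourly_labor_cost
    return labor + c.error_savings_per_year


def roi_percent(c: AutomationCase, years: int) -> float:
    """ROI over the period: (total benefit - total cost) / total cost * 100."""
    total_benefit = years * annual_benefit(c)
    total_cost = c.upfront_cost + years * c.running_cost_per_year
    return (total_benefit - total_cost) / total_cost * 100


def payback_months(c: AutomationCase) -> float:
    """Months until net monthly savings repay the upfront cost."""
    net_monthly = (annual_benefit(c) - c.running_cost_per_year) / 12
    if net_monthly <= 0:
        return float("inf")  # the project never pays for itself
    return c.upfront_cost / net_monthly


# Hypothetical example figures, not measured results.
case = AutomationCase(
    tasks_per_year=12_000,
    hours_saved_per_task=0.25,
    hourly_labor_cost=40.0,
    error_savings_per_year=10_000.0,
    upfront_cost=60_000.0,
    running_cost_per_year=10_000.0,
)
print(f"2-year ROI: {roi_percent(case, years=2):.0f}%")  # 2-year ROI: 225%
print(f"Payback: {payback_months(case):.1f} months")     # Payback: 6.0 months
```

Stating the inputs explicitly like this makes the business case easy to challenge and rerun when estimates such as task volume or hours saved are revised.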

Q 28. What are your future goals in the field of automation and technology integration?

My future goals in automation and technology integration center on leveraging emerging technologies to solve complex problems and create innovative solutions. These include:

Deepening Expertise in AI/ML for Automation: Exploring the application of Artificial Intelligence and Machine Learning to create more intelligent and adaptive automation systems. This includes developing self-learning algorithms and predictive models to improve efficiency and decision-making within automation processes.

Exploring Cloud-Native Automation Architectures: Developing and implementing scalable, resilient, and cost-effective cloud-native automation architectures using services like serverless computing and containerization technologies.

Focusing on Hyperautomation: Developing expertise in hyperautomation, which combines different automation technologies (RPA, AI/ML, BPM) to automate end-to-end business processes. This necessitates a holistic approach to automation, covering all aspects of the process from start to finish.

Promoting Responsible Automation: Championing ethical considerations and responsible implementation of automation technologies, ensuring fairness, transparency, and accountability in all automation initiatives.

Ultimately, I aim to contribute to the development of automation solutions that not only increase efficiency but also enhance the human experience and create a more sustainable future.

Key Topics to Learn for Automation and Technology Integration Interview

- Process Automation Fundamentals: Understanding different automation approaches (RPA, BPM, etc.), their strengths and weaknesses, and suitable use cases within various industries.

- Integration Architectures: Familiarity with various integration patterns (e.g., message queues, APIs, ETL processes) and their application in building robust and scalable systems. Practical experience designing and implementing integration solutions is crucial.

- Data Integration and Management: Mastery of data transformation techniques and data quality management, plus experience with data integration tools (e.g., Informatica, Talend). Understanding data governance principles is highly valuable.

- Cloud-Based Integration Platforms: Knowledge of the integration services offered by cloud providers (e.g., AWS, Azure, GCP) and their role in modern automation strategies. Hands-on experience with at least one major cloud provider’s integration services is beneficial.

- API Management and Design: An understanding of RESTful APIs and API security best practices, plus experience with API gateway technologies. The ability to design and document APIs effectively is highly sought after.

- Security Considerations in Automation: Knowledge of security protocols and best practices related to automation and integration, including authentication, authorization, and data encryption. Addressing security concerns from the outset is vital.

- Troubleshooting and Problem-Solving: Demonstrate your ability to diagnose and resolve issues in complex automated systems. Highlight your experience with debugging tools and techniques.

- Emerging Technologies: Stay updated on advancements in AI, machine learning, and their applications in automation and integration. Showcasing awareness of future trends demonstrates forward-thinking.

Next Steps

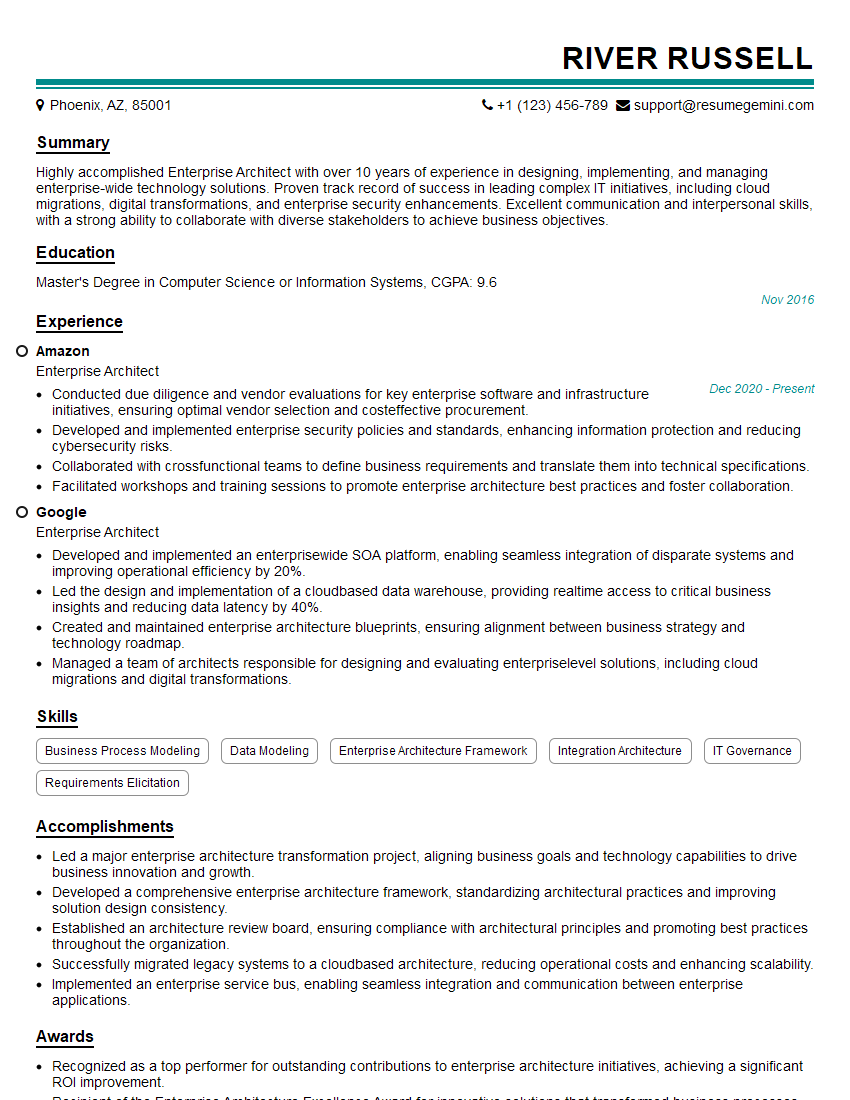

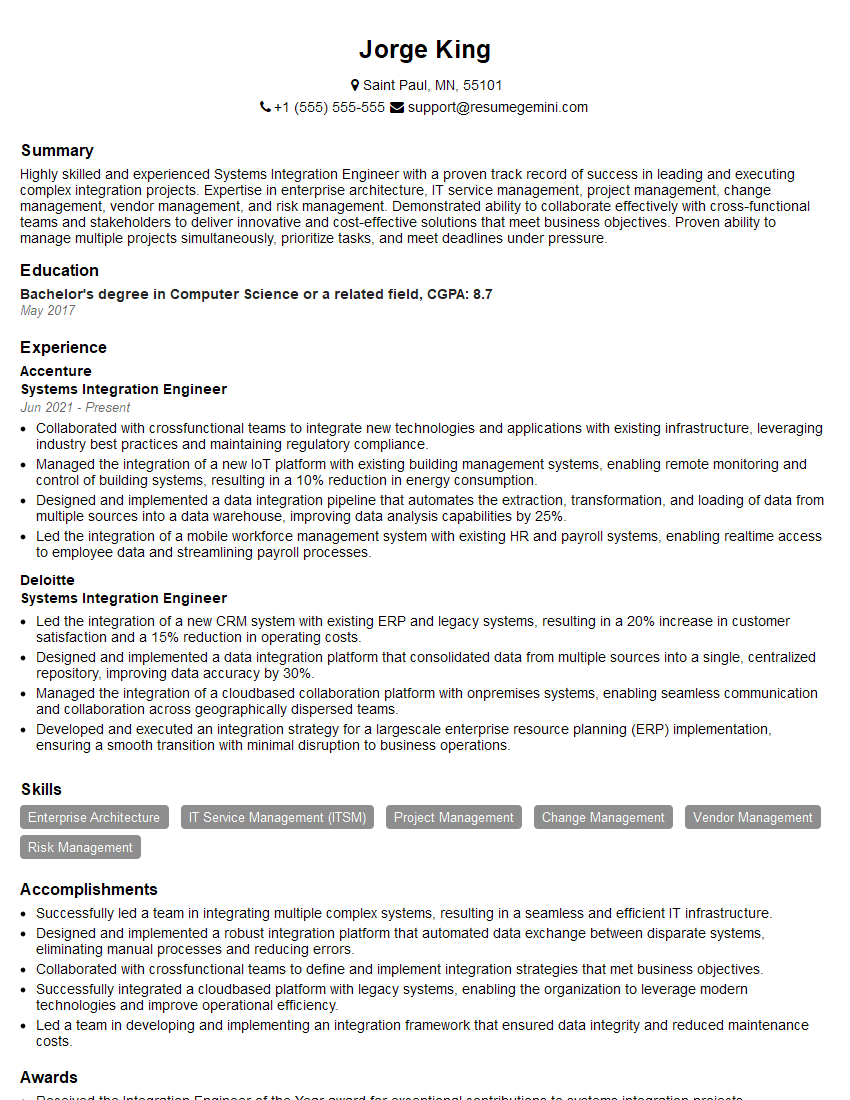

Mastering Automation and Technology Integration opens doors to exciting and high-demand roles, significantly boosting your career trajectory. To stand out, crafting a compelling and ATS-friendly resume is paramount. ResumeGemini is a trusted resource that can help you build a professional and impactful resume, ensuring your skills and experience shine. Examples of resumes tailored specifically to Automation and Technology Integration are available to guide you. Invest time in creating a strong resume—it’s your first impression with potential employers.
