Preparation is the key to success in any interview. In this post, we’ll explore crucial Data Manipulation (Java, Groovy) interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Data Manipulation (Java, Groovy) Interview

Q 1. Explain the difference between ArrayList and LinkedList in Java.

Both ArrayList and LinkedList implement Java’s List interface, but they differ significantly in their underlying implementation and, consequently, in their performance characteristics. Think of it like this: an ArrayList is like a train, where adding or removing cars (elements) in the middle is slow because every later car has to shift, but reaching a specific car by its number is quick and easy. A LinkedList, on the other hand, is like a chain: once you are holding a link, adding or removing a neighbor is easy, but finding a specific link by its number requires traversing the chain, link by link.

- ArrayList: Uses a dynamically resizing array. Accessing elements by index (get(index)) is O(1), constant time. Appending at the end is amortized O(1). Adding or removing elements in the middle is O(n), linear time, because the later elements must be shifted.

- LinkedList: Uses a doubly linked list. Accessing elements by index is O(n), linear time, because you have to traverse the list. Adding or removing at either end, or at a position already reached through an iterator, is O(1); adding or removing by index still pays the O(n) traversal first.

In summary, choose ArrayList for frequent element access by index and few additions/removals in the middle; it is the usual default. Choose LinkedList when you mostly add or remove at the ends, or in the middle while already iterating, and rarely access elements by index. The choice depends heavily on your application’s needs.
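
The trade-off above can be sketched in a few lines. This is a minimal illustration, not a benchmark; the class and method names (ListChoiceDemo, removeEvens) are made up for the example.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedList;
import java.util.List;

class ListChoiceDemo {
    // Removes every even number while iterating.
    // LinkedList: each iterator.remove() is O(1).
    // ArrayList: each iterator.remove() shifts the later elements, O(n).
    static List<Integer> removeEvens(List<Integer> list) {
        Iterator<Integer> it = list.iterator();
        while (it.hasNext()) {
            if (it.next() % 2 == 0) {
                it.remove();
            }
        }
        return list;
    }

    public static void main(String[] args) {
        List<Integer> arrayList = new ArrayList<>(List.of(1, 2, 3, 4, 5, 6));
        List<Integer> linkedList = new LinkedList<>(List.of(1, 2, 3, 4, 5, 6));

        // Same call, different cost: O(1) for ArrayList, O(n) for LinkedList.
        System.out.println(arrayList.get(3));  // prints 4
        System.out.println(linkedList.get(3)); // prints 4

        System.out.println(removeEvens(arrayList));  // prints [1, 3, 5]
        System.out.println(removeEvens(linkedList)); // prints [1, 3, 5]
    }
}
```

Both lists give the same results; only the cost of each operation differs, which is why the choice only matters once the lists get large.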

Q 2. How do you handle null values in Java during data manipulation?

Handling null values is crucial to prevent NullPointerExceptions (NPEs), a common source of Java errors. The best approach depends on the context. Imagine you’re processing a database record, and some fields might be empty.

- Null Checks: The simplest method. Before accessing any member of an object, explicitly check for null: if (myObject != null && myObject.getValue() != null) { ... }

- Optional: Introduced in Java 8, Optional provides a more elegant way to represent values that may be absent. It avoids the need for nested if statements and improves code readability: Optional<String> name = Optional.ofNullable(myObject.getName()); name.ifPresent(System.out::println);

- The Null Object Pattern: Create a special object that represents the absence of a value but behaves like a regular object in terms of method calls. This avoids the need for many null checks.

- Defensive Programming and Exception Handling: Validate inputs at method boundaries, for example with Objects.requireNonNull(value, "message"), so that an unexpected null fails fast with a clear message. Catching NullPointerException in a try-catch block is best kept as a last resort around code you cannot change; when you do, log the error, provide default values, or take other recovery actions.

Choosing the right approach depends on your specific needs. For simple cases, null checks are sufficient; for more complex situations, Optional or the Null Object Pattern improves code readability and maintainability.
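
As a rough sketch of the Optional approach applied to the database-record scenario, the example below chains several steps that would otherwise each need a null check. The Customer class and the "UNKNOWN" default are assumptions made for illustration.

```java
import java.util.Optional;

class NullHandlingDemo {
    // Hypothetical record type whose name field may be null.
    static class Customer {
        private final String name;
        Customer(String name) { this.name = name; }
        String getName() { return name; }
    }

    // Returns the trimmed, upper-cased name, or "UNKNOWN" when the customer
    // is null, the name is null, or the name is blank.
    static String displayName(Customer customer) {
        return Optional.ofNullable(customer)
                .map(Customer::getName)      // a null name yields an empty Optional
                .map(String::trim)
                .filter(s -> !s.isEmpty())
                .map(String::toUpperCase)
                .orElse("UNKNOWN");
    }

    public static void main(String[] args) {
        System.out.println(displayName(new Customer("  Ada "))); // prints ADA
        System.out.println(displayName(new Customer(null)));     // prints UNKNOWN
        System.out.println(displayName(null));                   // prints UNKNOWN
    }
}
```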

Q 3. Describe different ways to sort a list in Java.

Java offers several ways to sort lists. The choice depends on the data type, sorting algorithm preferences (speed, memory usage), and whether you want to modify the original list or create a sorted copy.

- Collections.sort(): This utility method sorts the list in place using a stable, highly optimized merge sort (TimSort). It’s simple and efficient for most cases. Collections.sort(myList);

- List.sort() (Java 8+): An instance method on the list itself. It also modifies the list in place and accepts a Comparator, which can be written as a lambda. myList.sort((a, b) -> a.compareTo(b));

- Custom Comparator: For custom sorting logic (e.g., sorting objects based on a specific field), create a class implementing the Comparator interface. myList.sort(new MyCustomComparator());

- Streams with sorted(): To create a new sorted list without modifying the original, use streams: List<String> sortedList = myList.stream().sorted().collect(Collectors.toList());

For large datasets, consider the performance implications of each method. Collections.sort() and List.sort() run in O(n log n) and are generally efficient, while the streams approach allocates a second list. For very large arrays, Arrays.parallelSort() can spread the work across cores; measure before switching.
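
The difference between sorting in place and producing a sorted copy can be sketched as follows. The class and method names are illustrative only.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

class SortingDemo {
    // Returns a NEW list sorted by length, then alphabetically.
    // The input list is left untouched.
    static List<String> sortedCopy(List<String> words) {
        return words.stream()
                .sorted(Comparator.comparingInt(String::length)
                        .thenComparing(Comparator.<String>naturalOrder()))
                .collect(Collectors.toList());
    }

    // Sorts the given (mutable) list in place, in reverse natural order.
    static void sortDescending(List<String> words) {
        words.sort(Comparator.reverseOrder());
    }

    public static void main(String[] args) {
        List<String> words = new ArrayList<>(List.of("pear", "fig", "apple", "kiwi"));
        System.out.println(sortedCopy(words)); // prints [fig, kiwi, pear, apple]
        System.out.println(words);             // prints [pear, fig, apple, kiwi]
        sortDescending(words);
        System.out.println(words);             // prints [pear, kiwi, fig, apple]
    }
}
```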

Q 4. How do you efficiently search for elements within a large dataset in Java?

Searching efficiently in large datasets is crucial for performance. A naive linear search (checking each element one by one) is terribly slow for large datasets. Appropriate data structures and algorithms are vital.

- Binary Search: If the dataset is sorted, binary search is very efficient (O(log n)). It repeatedly divides the search interval in half. Java’s Arrays.binarySearch() works on sorted arrays and Collections.binarySearch() works on sorted lists; on unsorted data the result is undefined, so sort first.

- Hash Table (HashMap): For unsorted datasets where you need to search by a unique key, a HashMap provides average O(1) search time. It’s extremely fast, provided the keys have a well-distributed hashCode() and a consistent equals().

- Tree-based Structures (TreeSet, TreeMap): These keep their elements sorted, offer logarithmic (O(log n)) search time, and provide additional functionality such as range queries.

- Indexing and Databases: For extremely large datasets, using databases with appropriate indexing strategies is crucial. Database systems are designed to handle efficient searches on massive amounts of data.

The optimal approach depends heavily on the data structure, the nature of the search (exact match, range search, fuzzy search), and the size of the dataset. If you expect frequent searches, selecting an appropriate data structure upfront will pay off immensely.
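
Two of the options above, binary search on a sorted list and a HashMap index, might look like this in practice. The names SearchDemo, indexInSorted, and indexById are assumptions made for this sketch.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class SearchDemo {
    // Binary search on an already-sorted list.
    // Returns the index of the id, or a negative number if it is absent.
    static int indexInSorted(List<Integer> sortedIds, int id) {
        return Collections.binarySearch(sortedIds, id);
    }

    // Builds an id -> name index once, so each later lookup is O(1) on average.
    // Assumes both lists have the same length.
    static Map<Integer, String> indexById(List<Integer> ids, List<String> names) {
        Map<Integer, String> index = new HashMap<>();
        for (int i = 0; i < ids.size(); i++) {
            index.put(ids.get(i), names.get(i));
        }
        return index;
    }

    public static void main(String[] args) {
        List<Integer> ids = List.of(3, 8, 15, 23, 42);
        List<String> names = List.of("a", "b", "c", "d", "e");

        System.out.println(indexInSorted(ids, 23));     // prints 3
        System.out.println(indexInSorted(ids, 9) < 0);  // prints true
        System.out.println(indexById(ids, names).get(8)); // prints b
    }
}
```

Building the index costs O(n) up front, so it pays off when the same dataset is searched many times.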

Q 5. What are the advantages and disadvantages of using HashMaps in Java for data manipulation?

HashMaps are fundamental to Java data manipulation, offering key-value storage with fast average-case access times. Let’s consider their advantages and disadvantages.

- Advantages:

- Fast average-case lookup, insertion, and deletion: O(1) time complexity makes them incredibly efficient for frequent access.

- Flexible key-value pairs: You can store almost any data type as keys and values.

- Dynamic resizing: They automatically grow to accommodate more entries.

- Disadvantages:

- Unordered: HashMaps don’t guarantee any specific order of elements. Iteration order can change.

- Worst-case performance: In situations of many hash collisions (keys mapping to the same bucket), performance degrades to O(n).

- Not thread-safe: For concurrent access, use ConcurrentHashMap.

- Null key and value handling: HashMap allows one null key and multiple null values, so a null returned by get() can mean either a missing key or a stored null.

Consider HashMaps if you require fast access based on unique keys and order isn’t essential. For ordered key-value storage, use TreeMap.
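
A small sketch of that trade-off: count with a HashMap for speed, then copy into a TreeMap only when sorted output is needed. The class and method names here are invented for the example.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class MapDemo {
    // Counts occurrences of each word; HashMap gives O(1) average updates.
    static Map<String, Integer> countWords(List<String> words) {
        Map<String, Integer> counts = new HashMap<>();
        for (String word : words) {
            // merge() inserts 1 for a new key, otherwise adds 1 to the old value.
            counts.merge(word, 1, Integer::sum);
        }
        return counts;
    }

    // Copies the entries into a TreeMap so iteration follows sorted key order.
    static Map<String, Integer> sortedByKey(Map<String, Integer> counts) {
        return new TreeMap<>(counts);
    }

    public static void main(String[] args) {
        Map<String, Integer> counts = countWords(List.of("pear", "apple", "pear", "fig"));
        System.out.println(sortedByKey(counts)); // prints {apple=1, fig=1, pear=2}
    }
}
```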

Q 6. Explain the concept of generics in Java and their use in data manipulation.

Generics are a powerful feature in Java that allows you to write type-safe code. Imagine you have a method that operates on a list. Without generics, you’d write it to accept a raw List, accepting any object type. With generics, you specify the type of objects it should handle. This helps prevent runtime errors and improves code readability.

For example, a simple method to print a list’s elements would look like this without generics:

void printList(List list) {
    for (Object o : list) {
        System.out.println(o);
    }
}

This compiles, but the raw List tells the compiler nothing about the element type. Any code that needs the elements as strings has to cast them, and a wrong element type only surfaces at runtime as a ClassCastException. With generics, it becomes:

void printList(List<String> list) {
    for (String s : list) {
        System.out.println(s);
    }
}

Now, the compiler ensures that only lists of strings can be passed, preventing type-related errors at compile time. Generics are widely used in collections (ArrayList<E>, HashMap<K,V>), improving type safety and making your code cleaner and less error-prone.
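
Generics also let you write one method that works for many element types while staying type-safe. The sketch below is illustrative; GenericsDemo and largest are hypothetical names, and the bound on T simply says "any type that can be compared with itself".

```java
import java.util.List;

class GenericsDemo {
    // Returns the largest element of a non-empty list.
    // Works for Integer, String, or any other Comparable type,
    // and the compiler rejects lists of non-comparable elements.
    static <T extends Comparable<? super T>> T largest(List<? extends T> items) {
        if (items.isEmpty()) {
            throw new IllegalArgumentException("empty list");
        }
        T best = items.get(0);
        for (T item : items) {
            if (item.compareTo(best) > 0) {
                best = item;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        System.out.println(largest(List.of(3, 9, 4)));          // prints 9
        System.out.println(largest(List.of("pear", "apple")));  // prints pear
    }
}
```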

Q 7. How do you handle exceptions during data processing in Java?

Exception handling is essential for robust data processing. Unhandled exceptions can crash your application. Java’s try-catch-finally blocks are used to handle exceptions gracefully.

Example: Imagine reading data from a file. The file might not exist or might be corrupted.

FileReader reader = null; // Declared outside try so the finally block can see it
try {
    // Code that might throw exceptions
    reader = new FileReader("myFile.txt");
    // ... process data
} catch (FileNotFoundException e) {
    // Handle file not found
    System.err.println("File not found: " + e.getMessage());
} catch (IOException e) {
    // Handle other IO errors
    System.err.println("IO error: " + e.getMessage());
} finally {
    // Code that always executes (e.g., closing resources)
    if (reader != null) {
        try {
            reader.close();
        } catch (IOException e) {
            System.err.println("Error closing file: " + e.getMessage());
        }
    }
}

The try block contains code that might throw exceptions. catch blocks handle specific exception types, and more specific types must come before their superclasses (FileNotFoundException before IOException). The finally block (optional) executes regardless of whether an exception occurred; it’s the traditional place for releasing resources (like closing files or database connections). Using specific exception types in catch blocks provides more targeted error handling. Avoid generic catch(Exception e) if possible, as it may mask unexpected errors.
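
Since Java 7, try-with-resources is usually a shorter way to get the same cleanup guarantee: any resource declared in the parentheses is closed automatically when the block exits. A minimal sketch, with a made-up class and method name and a StringReader standing in for a real file:

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;

class ResourceDemo {
    // Counts the non-blank lines in the source.
    // The reader is closed automatically, even if readLine() throws.
    static int countNonBlankLines(Reader source) throws IOException {
        try (BufferedReader reader = new BufferedReader(source)) {
            int count = 0;
            String line;
            while ((line = reader.readLine()) != null) {
                if (!line.trim().isEmpty()) {
                    count++;
                }
            }
            return count;
        }
    }

    public static void main(String[] args) {
        try {
            System.out.println(countNonBlankLines(new StringReader("a\n\nb\n"))); // prints 2
        } catch (IOException e) {
            System.err.println("IO error: " + e.getMessage());
        }
    }
}
```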

Q 8. Describe your experience with different Java data structures (e.g., Sets, Maps, Queues).

Java offers a rich collection of data structures, each tailored for specific tasks. Understanding their strengths and weaknesses is crucial for efficient data manipulation. Let’s explore some key players:

- Sets: Collections of unique elements. Think of a set as a bag where you only keep one of each item. HashSet provides fast lookups (O(1) on average) with no guaranteed order, while TreeSet keeps elements sorted (allowing for efficient range queries) at O(log n) per operation. I’ve used HashSet extensively in scenarios needing quick checks for duplicate entries, like validating unique user IDs in a system.

- Maps: Store data in key-value pairs. Imagine a dictionary: you look up a word (key) to find its definition (value). HashMap offers fast average-case performance for lookups, insertions, and deletions, while TreeMap maintains sorted order by key. I’ve used HashMap frequently to represent configuration data or to quickly access objects based on a unique identifier.

- Queues: Typically follow the FIFO (First-In, First-Out) principle. Like a real-world queue, the first element added is the first one removed. LinkedList and ArrayDeque can both be used as queues, adding and removing at the ends in constant time. PriorityQueue is the exception to FIFO: it always removes the smallest element according to its ordering, which is useful in scheduling algorithms or managing tasks.

Choosing the right data structure significantly impacts performance. For instance, using a HashSet to check for duplicates is far more efficient than iterating through an array.
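
The duplicate check and the two queue behaviors can be sketched like this. The helper names (findDuplicates, drain) are made up for the example.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.PriorityQueue;
import java.util.Queue;
import java.util.Set;

class CollectionsDemo {
    // Returns each ID that appears more than once, in the order
    // its first repeat was seen.
    static List<String> findDuplicates(List<String> ids) {
        Set<String> seen = new HashSet<>();
        List<String> duplicates = new ArrayList<>();
        for (String id : ids) {
            // add() returns false when the element was already in the set.
            if (!seen.add(id) && !duplicates.contains(id)) {
                duplicates.add(id);
            }
        }
        return duplicates;
    }

    // Empties a queue into a list to make its removal order visible.
    static <T> List<T> drain(Queue<T> queue) {
        List<T> out = new ArrayList<>();
        while (!queue.isEmpty()) {
            out.add(queue.poll());
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(findDuplicates(List.of("u1", "u2", "u1", "u3", "u2"))); // prints [u1, u2]
        System.out.println(drain(new ArrayDeque<>(List.of(3, 1, 2))));    // FIFO: prints [3, 1, 2]
        System.out.println(drain(new PriorityQueue<>(List.of(3, 1, 2)))); // by priority: prints [1, 2, 3]
    }
}
```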

Q 9. Explain how to implement a custom comparator in Java for sorting.

Creating a custom comparator in Java allows you to define how objects should be sorted according to specific criteria. This is incredibly powerful when you need sorting beyond the default behavior (e.g., sorting objects based on a non-default field). It involves implementing the Comparator interface.

public class Person {
    String name;
    int age;
    // Constructor, getters, and setters
}

class PersonComparator implements Comparator<Person> {
    @Override
    public int compare(Person p1, Person p2) {
        return Integer.compare(p1.getAge(), p2.getAge()); // Sort by age
    }
}

// Usage:
List<Person> people = new ArrayList<>();
// ... populate people list ...
Collections.sort(people, new PersonComparator());

In this example, we sort a list of Person objects based on age. The compare method defines the sorting logic: a negative value indicates p1 is smaller than p2, 0 means they are equal, and a positive value means p1 is larger. You can adapt this to sort by any criteria relevant to your objects.
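
Since Java 8, the same comparator can often be built from factory methods instead of a dedicated class, which also makes tie-breakers easy to add. The sketch below assumes a Person with getName() and getAge() accessors; ComparatorDemo and sortedNames are illustrative names.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

class ComparatorDemo {
    static class Person {
        private final String name;
        private final int age;
        Person(String name, int age) { this.name = name; this.age = age; }
        String getName() { return name; }
        int getAge() { return age; }
    }

    // Sort by age ascending; people of the same age are ordered by name.
    static final Comparator<Person> BY_AGE_THEN_NAME =
            Comparator.comparingInt(Person::getAge).thenComparing(Person::getName);

    // Returns the names in sorted order without modifying the input list.
    static List<String> sortedNames(List<Person> people) {
        List<Person> copy = new ArrayList<>(people);
        copy.sort(BY_AGE_THEN_NAME);
        List<String> names = new ArrayList<>();
        for (Person p : copy) {
            names.add(p.getName());
        }
        return names;
    }

    public static void main(String[] args) {
        List<Person> people = List.of(
                new Person("Bob", 30), new Person("Carl", 25), new Person("Alice", 25));
        System.out.println(sortedNames(people)); // prints [Alice, Carl, Bob]
    }
}
```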

Q 10. How do you perform data validation in Java?

Data validation is paramount to ensure data integrity and prevent errors. In Java, I typically use a combination of techniques:

- Input Validation: Check data at the point of entry, using methods like regular expressions or simple type checking. For example, ensuring a phone number has the correct format or that an age is a positive integer.

- Annotations: Using validation annotations like @NotNull, @Size, and @Pattern from the Bean Validation API (part of Jakarta EE) simplifies validation, and frameworks like Spring integrate with it directly.

- Custom Validation Logic: For complex rules, create custom validation methods. For instance, checking if a username is already in use within a database.

- Libraries: Libraries like Apache Commons Validator provide pre-built validation routines for common data types and formats.

A layered approach, combining input validation with more robust validation later in the process (e.g., before data persistence), is a best practice. This catches errors early, improving user experience and avoiding costly downstream problems.
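
For the input-validation layer, a hand-rolled check might look like the sketch below. The phone pattern is deliberately simplistic and is an assumption for illustration; real phone formats vary by country. Collecting all problems, rather than failing on the first, lets the caller report everything at once.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

class ValidationDemo {
    // Illustrative rule only: an optional "+" followed by 7 to 15 digits.
    private static final Pattern PHONE = Pattern.compile("\\+?\\d{7,15}");

    // Returns a list of human-readable problems; an empty list means valid.
    static List<String> validate(String phone, String ageText) {
        List<String> errors = new ArrayList<>();
        if (phone == null || !PHONE.matcher(phone).matches()) {
            errors.add("phone: invalid format");
        }
        try {
            int age = Integer.parseInt(ageText); // also rejects null input
            if (age <= 0) {
                errors.add("age: must be positive");
            }
        } catch (NumberFormatException e) {
            errors.add("age: not a number");
        }
        return errors;
    }

    public static void main(String[] args) {
        System.out.println(validate("+4915112345678", "42")); // prints []
        System.out.println(validate("abc", "-3")); // prints [phone: invalid format, age: must be positive]
    }
}
```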

Q 11. What are your preferred methods for debugging data manipulation code in Java?

Debugging data manipulation code requires a multifaceted approach. My preferred methods include:

- Logging: Strategic placement of System.out.println statements (or better yet, using a proper logging framework like Log4j or SLF4J) at critical points allows tracking data transformations. This is simple and often surprisingly effective.

- Debuggers (e.g., IntelliJ IDEA debugger): Stepping through code line by line, inspecting variable values, and setting breakpoints lets me pinpoint precisely where errors occur. This is invaluable for complex scenarios.

- Unit Tests: Writing comprehensive unit tests with assertions helps uncover data manipulation bugs early and prevents regressions. This is arguably the most important aspect of robust development.

- Data Inspection Tools: When dealing with larger datasets, tools that allow data inspection (e.g., database query tools or specialized data viewers) provide a higher-level view to identify data anomalies.

A mix of these techniques, depending on the situation’s complexity, usually allows me to quickly and efficiently identify and resolve issues.

Q 12. Explain the concept of closures in Groovy and how you use them for data manipulation.

Groovy’s closures are anonymous functions that can be passed around and executed later. They’re incredibly useful for data manipulation because they allow for concise and flexible code. A closure encapsulates both code and its surrounding state (i.e. the variables available at the time it’s created).

List numbers = [1, 2, 3, 4, 5]

// Closure to square a number
def squareClosure = { num -> num * num }

// Using the closure with collect()
List squaredNumbers = numbers.collect(squareClosure)
println squaredNumbers // Output: [1, 4, 9, 16, 25]

Here, squareClosure is passed to collect(), which applies the closure to each element of the list. This is much more readable and concise than writing an equivalent Java loop. Closures are also useful with methods like each for iterating and performing actions on elements, and findAll for filtering.

Q 13. How do you handle large datasets efficiently in Groovy?

Handling large datasets efficiently in Groovy often involves leveraging its interoperability with Java and using appropriate libraries. Key strategies include:

- Streaming APIs: Java’s Streams API (available in Groovy) evaluates lazily, so a stream backed by a lazy source, such as Files.lines(), processes records one at a time rather than loading everything into memory at once. This is crucial for datasets exceeding available RAM.

- Groovy’s eachLine method: For text-based data, processing line by line using eachLine is far more memory-efficient than reading the whole file into a list.

- External Libraries: Libraries like Apache Commons CSV or Jackson can efficiently handle parsing and processing large CSV or JSON files, avoiding manual parsing, which can be inefficient and error-prone for large files.

- Database Interaction: For data stored in databases, querying only the necessary data and using efficient database operations (using prepared statements) is vital. Groovy makes it easy to use JDBC or other database connection tools.

The core idea is to avoid loading the entire dataset into memory. Process the data in manageable chunks, using appropriate libraries for optimal performance.
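
Because Groovy can call any Java API, the streaming idea can be shown in plain Java. This is a sketch under stated assumptions: the file has a header row, the second column holds whole numbers, and fields contain no quoted commas. StreamingDemo and sumSecondColumn are hypothetical names.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.stream.Stream;

class StreamingDemo {
    // Sums the second column of a simple CSV file.
    // Files.lines() reads lazily, so only a small part of the file
    // is held in memory at any time.
    static long sumSecondColumn(Path csv) throws IOException {
        try (Stream<String> lines = Files.lines(csv)) {
            return lines.skip(1) // skip the header row
                    .map(line -> line.split(","))
                    .filter(fields -> fields.length > 1)
                    .mapToLong(fields -> Long.parseLong(fields[1].trim()))
                    .sum();
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 1) {
            System.out.println(sumSecondColumn(Paths.get(args[0])));
        } else {
            System.out.println("usage: java StreamingDemo.java <file.csv>");
        }
    }
}
```

The try-with-resources block matters here: the stream holds an open file handle until it is closed.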

Q 14. What are the benefits of using Groovy for data manipulation compared to Java?

Groovy offers several advantages over Java for data manipulation:

- Conciseness: Groovy’s syntax is more concise, leading to shorter, more readable code, especially when working with collections.

- Closures: As discussed, closures provide a powerful mechanism for expressing data transformations elegantly.

- Dynamic Typing: While not always advantageous, dynamic typing can simplify development, particularly during prototyping or when dealing with data of unknown structure.

- Built-in Support for Collections: Groovy provides many helpful methods for collections (lists, maps), making common data manipulation tasks easier.

- Interoperability with Java: Groovy seamlessly integrates with Java libraries, giving you access to the vast Java ecosystem when needed.

However, the choice between Groovy and Java depends on the project’s specific requirements. Java provides better performance for some scenarios, while Groovy’s expressiveness is a significant advantage for rapid prototyping or projects focusing on data manipulation and scripting.

Q 15. Describe your experience with Groovy’s built-in data structures (e.g., lists, maps).

Groovy’s built-in data structures, like Lists and Maps, are incredibly flexible and powerful tools for data manipulation. Lists are ordered collections of objects, similar to Java’s ArrayLists, but with added syntactic sugar. Maps are key-value pairs, much like Java’s HashMaps, but again, Groovy makes working with them significantly easier.

For example, creating and populating a list is straightforward:

List myList = [1, 'apple', 3.14, true]

Accessing elements is also intuitive:

println myList[0] // Prints 1

Maps are equally convenient:

Map myMap = [name: 'John Doe', age: 30, city: 'New York']

Retrieving values is done using the key:

println myMap.name // Prints John Doe

Groovy’s support for dynamic typing means you don’t need to explicitly declare the type of elements within a List or the keys/values in a Map, increasing development speed. This is a huge advantage compared to the more verbose nature of Java’s collections.

In my experience, this ease of use drastically reduces development time and makes code more readable, especially when dealing with complex data structures.

Q 16. How do you use Groovy’s metaprogramming features for data manipulation?

Groovy’s metaprogramming capabilities are a game-changer for data manipulation. Features like property accessors, builders, and closures allow you to manipulate data dynamically and create elegant, concise code.

For instance, let’s say I need to add a new property to an existing object without modifying its class. With metaprogramming, I can do this easily:

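class Person { String name }
def person = new Person(name: 'Jane')
person.metaClass.age = 25 // Dynamically adding the 'age' property to this instance
println person.age // Prints 25

The property is added through the object’s metaClass; assigning person.age = 25 directly on a class that does not declare age throws a MissingPropertyException. This avoids the need to create new classes or alter existing ones, crucial in situations where you might be dealing with external data structures that you can’t modify directly.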

Closures are another powerful tool. They allow for concise manipulation of collections:

List numbers = [1, 2, 3, 4, 5]
List evenNumbers = numbers.findAll { it % 2 == 0 }

This single line uses a closure to filter the list, producing only the even numbers. This is far more readable and concise than equivalent Java code.

In my professional work, metaprogramming has been invaluable for creating flexible and maintainable data processing solutions.

Q 17. Explain how you would implement a data transformation pipeline in Groovy.

Implementing a data transformation pipeline in Groovy is best done using a chain of operations on collections. Groovy’s support for method chaining, combined with its powerful collections, makes this incredibly efficient and readable.

Imagine you have a CSV file containing customer data that needs cleaning and transforming. The pipeline could look like this:

def customerData = readCsv('customers.csv') // Read CSV into a list of maps (readCsv stands in for your own helper)
def cleanedData = customerData.collect { it.name = it.name.trim(); it } // Clean up names
def transformedData = cleanedData.groupBy { it.city } // Group by city
def aggregatedData = transformedData.collectEntries { k, v -> [k, v.size()] } // Count customers per city

This pipeline reads, cleans, groups, and aggregates the data in a clear and concise manner. Each step operates on the output of the previous one, forming a neat and efficient transformation chain. This approach is highly readable and easy to maintain, allowing for easy addition or modification of transformation steps.

Q 18. How do you perform data filtering and aggregation in Groovy?

Groovy offers several ways to perform data filtering and aggregation. The most common approaches leverage Groovy’s collection methods and closures.

Filtering is done using methods like findAll, find, and grep. For example, to find all customers from a specific city:

def newYorkCustomers = customerData.findAll { it.city == 'New York' }

Aggregation uses methods like sum, max, min, and groupBy.

To calculate the total age of all customers:

def totalAge = customerData.sum { it.age }

To group customers by age and count the number in each age group:

def ageGroups = customerData.groupBy { it.age }.collectEntries { k, v -> [k, v.size()] }

These methods, combined with Groovy’s concise syntax, allow for expressive and efficient data processing.
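
For comparison, here is a rough Java counterpart of the same filtering and aggregation using streams. The Customer class is a stand-in with just the two fields the example needs, and the method names are invented for the sketch.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

class AggregationDemo {
    static class Customer {
        final String city;
        final int age;
        Customer(String city, int age) { this.city = city; this.age = age; }
    }

    // Counterpart of groupBy + collectEntries: customers per city,
    // collected into a TreeMap so the keys come out sorted.
    static Map<String, Long> countByCity(List<Customer> customers) {
        return customers.stream()
                .collect(Collectors.groupingBy(c -> c.city, TreeMap::new, Collectors.counting()));
    }

    // Counterpart of findAll + sum: total age of the customers in one city.
    static int totalAge(List<Customer> customers, String city) {
        return customers.stream()
                .filter(c -> c.city.equals(city))
                .mapToInt(c -> c.age)
                .sum();
    }

    public static void main(String[] args) {
        List<Customer> customers = List.of(
                new Customer("New York", 30), new Customer("Boston", 25), new Customer("New York", 40));
        System.out.println(countByCity(customers));          // prints {Boston=1, New York=2}
        System.out.println(totalAge(customers, "New York")); // prints 70
    }
}
```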

Q 19. How do you handle errors and exceptions in Groovy during data processing?

Error handling in Groovy during data processing is crucial for robustness. The standard try-catch block works as expected, but Groovy offers some additional convenience features.

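Groovy also has operators that stop one common class of errors, the NullPointerException, from arising in the first place. The safe-navigation operator (?.) returns null instead of throwing when the receiver is null, and the Elvis operator (?:) supplies a default when a value is null or otherwise false under Groovy truth. Neither operator catches exceptions; those still need try-catch:

def age = data?.age ?: 0 // Uses 0 if data or data.age is null (or already 0, which is also false in Groovy)

This makes the code more compact. You can also handle exceptions more gracefully using custom exception types and logging. In my projects, I frequently use a logging framework (like Logback or SLF4J) to record error details, enabling debugging and monitoring of the data pipeline.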

A well-structured approach incorporates logging at various stages of processing. This allows for pinpointing the exact location and nature of any error within the data pipeline, making debugging significantly easier and quicker.

Q 20. Describe your experience with using Groovy for working with external data sources (e.g., databases, CSV files).

Groovy integrates seamlessly with external data sources. For databases, I frequently use JDBC or an ORM such as GORM, the object-relational mapping layer from the Grails framework. For CSV files, Groovy’s File and String helpers make simple files easy to read and write; for files with quoted fields or embedded commas, a dedicated library such as Apache Commons CSV is the safer choice.

Example using Groovy’s file helpers:

def customerData = new File('customers.csv').readLines().collect { line -> line.split(',').collect { it.trim() } }

This code reads each line of a CSV, splits it into fields using the comma as a delimiter, trims whitespace, and produces a List of Lists. If the first line is a header, each row can then be paired with it to build a list of maps. Note that readLines() loads the whole file into memory, so this suits small files. For databases, GORM simplifies the interaction considerably. For example, reading all customers from a database table might be as simple as:

def customers = Customer.findAll()

(assuming you’ve configured GORM to connect to your database and defined a Customer domain class).

This simplifies data access considerably compared to working directly with JDBC.

Q 21. Compare and contrast Java and Groovy for data manipulation tasks.

Java and Groovy are both powerful languages for data manipulation, but their approaches differ significantly. Java provides strong typing, compile-time checks, and excellent performance. However, its verbosity can make data manipulation tasks more cumbersome. Groovy, on the other hand, offers dynamic typing, a concise syntax, and powerful metaprogramming features, making code significantly more compact and readable.

Consider a simple task like summing a list of numbers. In Java, you’d likely need a loop or stream processing. In Groovy, it’s a single line:

List numbers = [1, 2, 3, 4, 5]
int sum = numbers.sum()

Groovy’s advantages become more pronounced with complex data transformations and when dealing with dynamic or evolving data structures. Java’s strength lies in performance-critical applications requiring robust type safety and compile-time error detection. For prototyping and rapid development, however, Groovy shines.

In practice, I often use Groovy for rapid prototyping and data exploration, then use Java when performance or robust type-safety is paramount. Sometimes, even a hybrid approach works best – leveraging Groovy’s scripting capabilities for parts of the data processing pipeline and using Java for performance-sensitive sections.

Q 22. What are some common performance bottlenecks in data manipulation and how do you address them?

Performance bottlenecks in data manipulation often stem from inefficient algorithms, inadequate data structures, or I/O limitations. Imagine trying to sort a massive deck of cards by hand – it would take forever! Similarly, poorly written code can cripple data processing.

Inefficient Algorithms: Using algorithms with poor time complexity (e.g., nested loops for large datasets instead of optimized algorithms like merge sort or quicksort) significantly impacts performance. For example, searching a large unsorted list with a linear search is far slower than using a binary search on a sorted list.

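Inappropriate Data Structures: Choosing the wrong data structure can dramatically affect performance. Repeatedly inserting or deleting in the middle of a large ArrayList shifts elements every time; a LinkedList avoids the shifting when the position is reached through an iterator, though it is worth measuring, since ArrayList often wins in practice thanks to better memory locality. Similarly, searching a large unsorted list is much slower than a lookup in a HashMap or TreeSet.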

I/O Bottlenecks: Frequent reads and writes to disk or network can be a major bottleneck. Batching operations, using efficient I/O libraries (like buffered readers/writers), and employing techniques like caching can drastically reduce this overhead.

Memory Management: Poor memory management can lead to garbage collection pauses, severely affecting performance. Using efficient data structures and minimizing unnecessary object creation helps here. In Java, understanding weak references and memory-mapped files can also prove beneficial.

Addressing Bottlenecks: Profiling tools (like Java VisualVM or YourKit) are essential for identifying performance bottlenecks. Once identified, strategies like algorithm optimization, data structure selection, database indexing, caching, and code refactoring can significantly improve performance. For instance, switching from a nested loop to a more efficient algorithm can reduce processing time from hours to minutes.

Q 23. How do you ensure data integrity during data manipulation?

Data integrity is paramount in data manipulation. It’s like building a house – a single weak brick can bring the whole structure down. Ensuring data integrity involves techniques that prevent data corruption, inconsistency, or loss.

Input Validation: Always validate data at the input stage. Check for data types, ranges, formats, and consistency before processing. This prevents invalid data from corrupting the system. For example, if you expect an integer age, you’ll need to handle potential non-numeric input.

Data Constraints: Define constraints (e.g., using database constraints or Java annotations) to enforce rules on data values (e.g., primary key, foreign key, unique constraints, or data type restrictions). These enforce data relationships and prevent invalid insertions or updates.

Transactions: Use transactions (particularly in database operations) to ensure atomicity. A transaction is a sequence of operations that are treated as a single unit; either all operations succeed, or none do. This maintains data consistency even in case of errors.

Error Handling: Implement robust error handling to catch and manage exceptions during data manipulation. Log errors, perform rollback operations in transactions, and alert users or administrators about issues.

Data Versioning: Consider implementing data versioning for tracking changes and enabling rollbacks. This is particularly important for critical data.

For example, in Groovy, using constraints with GORM for database interaction helps to ensure data integrity at the database level.

Q 24. Explain your experience with unit testing data manipulation code.

Unit testing is crucial for data manipulation code. It’s like testing individual components of a car before assembling it – you want to make sure each part works perfectly before combining them. I typically use JUnit or Spock (for Groovy) to write unit tests.

My approach involves writing tests that cover various scenarios: edge cases (empty inputs, null values, boundary conditions), normal cases (valid data processing), and error cases (handling exceptions). I aim for high test coverage, ensuring that most code paths are tested. I use mocking frameworks (like Mockito) to isolate the code under test from external dependencies (databases, file systems), making tests more reliable and faster.

Example (JUnit):

@Test
public void testTransformData() {
    DataManipulator manipulator = new DataManipulator();
    List<String> input = Arrays.asList("apple", "banana", "cherry");
    List<String> expected = Arrays.asList("Apple", "Banana", "Cherry");
    List<String> actual = manipulator.transformData(input);
    assertEquals(expected, actual);
}

This simple example shows a JUnit test verifying the transformation of a list of strings. Comprehensive unit tests ensure the reliability and correctness of the data manipulation logic.

Q 25. How do you optimize data manipulation code for speed and efficiency?

Optimizing data manipulation code involves focusing on algorithmic efficiency, data structures, and I/O operations. It’s akin to streamlining a factory assembly line – each improvement boosts overall productivity.

Algorithm Selection: Choose algorithms with optimal time and space complexity. For example, use merge sort or quicksort instead of bubble sort for large datasets.

Data Structures: Select appropriate data structures for specific operations. HashMaps provide O(1) average time complexity for lookups, while linked lists offer O(1) insertions and deletions at a position you have already reached.

Batch Processing: Process data in batches instead of individually to reduce I/O overhead. This is especially effective when dealing with databases or files.

Caching: Implement caching mechanisms to store frequently accessed data in memory to reduce database or file system access.

Code Profiling and Refactoring: Use profiling tools to identify performance bottlenecks and refactor code accordingly. This involves identifying and eliminating inefficient code sections.

Parallel Processing: Leverage multi-core processors by using parallel processing techniques (discussed in the next answer). This can significantly speed up data processing for large datasets.

Example: In Java, switching a stream pipeline to .parallelStream() can speed up CPU-bound operations such as filtering or mapping on large collections, provided the operations are stateless and independent of one another.

Q 26. Describe your experience with parallel processing for data manipulation.

Parallel processing is a powerful technique for accelerating data manipulation, especially when dealing with large datasets. It’s like having multiple workers assembling different parts of a product simultaneously, significantly reducing overall assembly time.

My experience with parallel processing involves using Java’s concurrency utilities (java.util.concurrent), including the Fork/Join framework. I’ve worked with both thread pools and parallel streams to divide data processing tasks among multiple threads. Careful consideration of thread safety, synchronization mechanisms (locks, semaphores), and potential race conditions is crucial when implementing parallel processing.

Example (Java Parallel Streams):

    List<Integer> numbers = Arrays.asList(1, 2, 3, 4, 5, 6, 7, 8, 9, 10);
    // map() is stateless here, so it is safe to run in parallel
    List<Integer> squaredNumbers = numbers.parallelStream()
            .map(n -> n * n)
            .collect(Collectors.toList());

This example uses a parallel stream to square a list of numbers. The parallelStream() method splits the work across the threads of the common Fork/Join pool, and collect() still returns the results in the list’s original order.

However, using parallel processing isn’t always beneficial. The overhead of creating and managing threads can outweigh the benefits for smaller datasets. Proper analysis is essential before introducing parallel processing to determine if it will actually improve performance.
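The thread-pool approach mentioned in this answer can be sketched as follows. This is one possible shape under stated assumptions, not the only way to do it: the class and method names are hypothetical, and the work (summing squares) is a placeholder for a real processing step. Each task reads only its own chunk and returns a value, which sidesteps shared mutable state and the locking it would require.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class ThreadPoolSumSketch {

    // Splits the input into chunks, sums the squares of each chunk on a pool thread,
    // then combines the partial sums on the calling thread.
    static long sumOfSquares(List<Integer> numbers, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            int chunkSize = Math.max(1, (numbers.size() + threads - 1) / threads);
            List<Future<Long>> partials = new ArrayList<>();
            for (int start = 0; start < numbers.size(); start += chunkSize) {
                List<Integer> chunk =
                        numbers.subList(start, Math.min(start + chunkSize, numbers.size()));
                // The task only reads its own chunk, so no synchronization is needed.
                partials.add(pool.submit(() -> {
                    long sum = 0;
                    for (int n : chunk) {
                        sum += (long) n * n;
                    }
                    return sum;
                }));
            }
            long total = 0;
            for (Future<Long> partial : partials) {
                total += partial.get(); // blocks until that chunk has finished
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            throw new IllegalStateException("interrupted while waiting for results", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("a worker task failed", e.getCause());
        } finally {
            pool.shutdown(); // always release the pool's threads
        }
    }

    public static void main(String[] args) {
        List<Integer> numbers = Arrays.asList(1, 2, 3, 4, 5, 6, 7, 8, 9, 10);
        System.out.println(sumOfSquares(numbers, 4));
    }
}
```

Creating a pool per call keeps the sketch self-contained; a long-running application would normally share one pool across calls.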

Q 27. Explain your approach to handling different data formats (JSON, XML, CSV).

Handling different data formats (JSON, XML, CSV) requires using appropriate parsing and serialization libraries. It’s like having different tools for different types of screws – you wouldn’t use a screwdriver on a bolt.

JSON: For JSON processing, I typically use Jackson (com.fasterxml.jackson.core) in Java. It’s efficient and versatile. Groovy ships with built-in JSON support in the groovy.json package (JsonSlurper for parsing, JsonOutput for writing).

XML: For XML, I’ve used libraries like JAXB (Java Architecture for XML Binding) or StAX (Streaming API for XML). JAXB maps XML structures to Java objects and vice versa, which makes processing and manipulation convenient, while StAX reads or writes the document as a stream of events, which suits large files. Note that JAXB was removed from the JDK in Java 11 and must be added as a separate dependency there.

CSV: For CSV, simple libraries like Apache Commons CSV (org.apache.commons:commons-csv) are often sufficient. They provide methods for reading and writing CSV files efficiently. Groovy’s built-in features for string manipulation can also be used for simpler CSV processing, as long as fields never contain quoted commas or line breaks.

The choice of library depends on the complexity of the data and the desired level of control. For simple CSV files, basic string manipulation might suffice, but for complex JSON or XML structures, robust parsing libraries are essential. I always consider factors like performance, ease of use, and error handling when selecting a library.
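To show where basic string manipulation stops being enough for CSV, the sketch below compares a plain split(",") with a small hand-written line parser that honors double-quoted fields. The class CsvLineSketch and the sample line are made up for illustration, and the parser assumes records never span multiple lines; a library such as Apache Commons CSV covers those cases and more.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class CsvLineSketch {

    // Parses one CSV line, honoring double-quoted fields and "" as an escaped quote.
    // Assumption: a record never spans more than one line.
    static List<String> parseLine(String line) {
        List<String> fields = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        boolean inQuotes = false;
        for (int i = 0; i < line.length(); i++) {
            char c = line.charAt(i);
            if (inQuotes) {
                if (c == '"') {
                    if (i + 1 < line.length() && line.charAt(i + 1) == '"') {
                        current.append('"'); // escaped quote inside a quoted field
                        i++;                 // skip the second quote of the pair
                    } else {
                        inQuotes = false;    // closing quote
                    }
                } else {
                    current.append(c);       // commas are ordinary text inside quotes
                }
            } else if (c == '"') {
                inQuotes = true;             // opening quote
            } else if (c == ',') {
                fields.add(current.toString());
                current.setLength(0);        // start the next field
            } else {
                current.append(c);
            }
        }
        fields.add(current.toString());      // the last field has no trailing comma
        return fields;
    }

    public static void main(String[] args) {
        String line = "1,\"Smith, Jane\",\"said \"\"hi\"\"\"";
        // The naive split cuts the quoted name in two and keeps the quote characters.
        System.out.println(Arrays.asList(line.split(",")));
        System.out.println(parseLine(line));
    }
}
```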

Example (Jackson for JSON):

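    ObjectMapper objectMapper = new ObjectMapper();
    // Parse the JSON text into a tree of JsonNode objects
    JsonNode rootNode = objectMapper.readTree(jsonString);
    // path() never returns null, so the chained call is safe
    String name = rootNode.path("name").asText();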

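This Jackson example parses a JSON string into a tree and extracts one value. Note that readTree throws a checked exception on malformed input, and path("name") returns a missing node rather than null when the field is absent, so asText() then yields an empty string.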
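For comparison, the streaming XML approach mentioned above might look like the following with StAX, which is part of the JDK (javax.xml.stream). This is a minimal sketch: the class name, the readNames method, and the name element it looks for are all invented for illustration.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamReader;

final class StaxNamesSketch {

    // Collects the text of every <name> element. The element name is a made-up example,
    // and each <name> is assumed to contain text only (no child elements).
    static List<String> readNames(String xml) {
        List<String> names = new ArrayList<>();
        try {
            XMLInputFactory factory = XMLInputFactory.newInstance();
            // Turn off DTDs and external entities, since the input may be untrusted.
            factory.setProperty(XMLInputFactory.SUPPORT_DTD, false);
            factory.setProperty(XMLInputFactory.IS_SUPPORTING_EXTERNAL_ENTITIES, false);
            XMLStreamReader reader = factory.createXMLStreamReader(new StringReader(xml));
            try {
                // Pull events one at a time instead of loading the whole document.
                while (reader.hasNext()) {
                    int event = reader.next();
                    if (event == XMLStreamConstants.START_ELEMENT
                            && "name".equals(reader.getLocalName())) {
                        names.add(reader.getElementText());
                    }
                }
            } finally {
                reader.close();
            }
        } catch (XMLStreamException e) {
            throw new IllegalArgumentException("malformed XML", e);
        }
        return names;
    }

    public static void main(String[] args) {
        String xml = "<people><person><name>Ada</name></person>"
                + "<person><name>Linus</name></person></people>";
        System.out.println(readNames(xml));
    }
}
```

Because the reader holds only the current event in memory, this style scales to documents that would be too large to bind to objects in one go.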

Key Topics to Learn for Data Manipulation (Java, Groovy) Interview

- Core Java Collections: Understanding Lists, Sets, Maps, and their practical applications in data handling. This includes knowing the differences in performance and appropriate use cases for each.

- Groovy Collections & Data Structures: Exploring Groovy’s enhanced collection capabilities and how they simplify data manipulation compared to Java. Focus on features like dynamic typing and concise syntax.

- Data Structures and Algorithms: Reviewing fundamental data structures like arrays, linked lists, trees, and graphs, and their relevance to efficient data processing. Practice implementing common algorithms like sorting and searching.

- Stream API (Java): Mastering the Java Stream API for efficient and concise data processing. Practice using functional programming concepts like filtering, mapping, and reducing.

- Groovy’s metaprogramming features: Understand how Groovy’s dynamic nature can be used to simplify data manipulation tasks. Explore concepts like closures and builders.

- Data Serialization & Deserialization: Familiarize yourself with techniques like JSON and XML processing in both Java and Groovy. Understand how to parse and manipulate data from these formats.

- Error Handling and Exception Management: Demonstrate a strong understanding of how to handle exceptions gracefully during data manipulation processes, ensuring robustness and preventing application crashes.

- Testing and Debugging: Be prepared to discuss your approach to testing data manipulation code using unit tests and debugging strategies for identifying and resolving issues.

- Database Interaction (JDBC/ORM): Review your knowledge of interacting with databases using JDBC or an Object-Relational Mapper (ORM) framework, such as Hibernate or GORM (Groovy).

- Big Data Concepts (Optional but advantageous): If relevant to the role, consider brushing up on basic concepts related to big data technologies and their application to data manipulation.
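As a starting point for practising the Stream API topic above, here is a small sketch of the filter, map, and reduce pattern plus a grouping collector. The class and method names are hypothetical and the data is arbitrary.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

final class StreamPracticeSketch {

    // filter + map + reduce: sum of the squares of the even numbers
    static int sumOfEvenSquares(List<Integer> numbers) {
        return numbers.stream()
                .filter(n -> n % 2 == 0)
                .map(n -> n * n)
                .reduce(0, Integer::sum);
    }

    // grouping: count words by first letter, with keys kept in sorted order
    static Map<Character, Long> countByFirstLetter(List<String> words) {
        return words.stream()
                .filter(w -> w != null && !w.isEmpty()) // skip entries with no first letter
                .collect(Collectors.groupingBy(
                        w -> w.charAt(0), TreeMap::new, Collectors.counting()));
    }

    public static void main(String[] args) {
        System.out.println(sumOfEvenSquares(Arrays.asList(1, 2, 3, 4, 5, 6)));
        System.out.println(countByFirstLetter(Arrays.asList("apple", "avocado", "banana")));
    }
}
```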

Next Steps

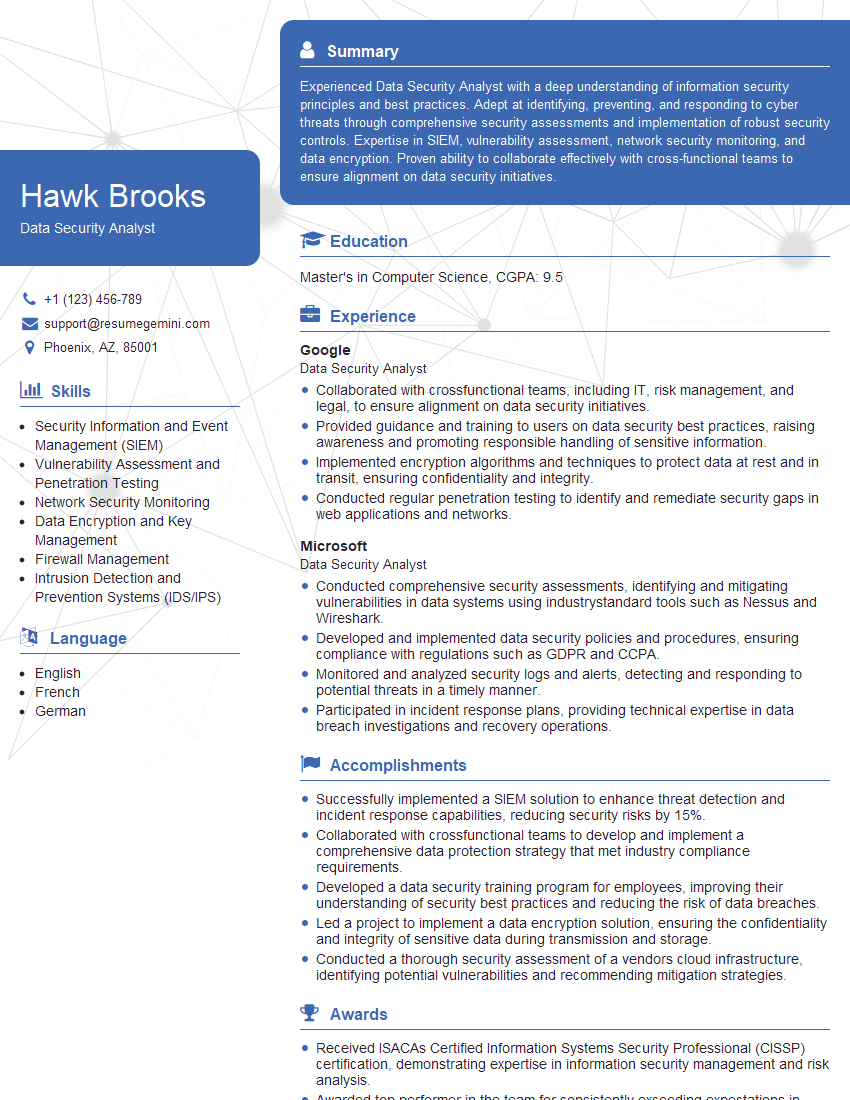

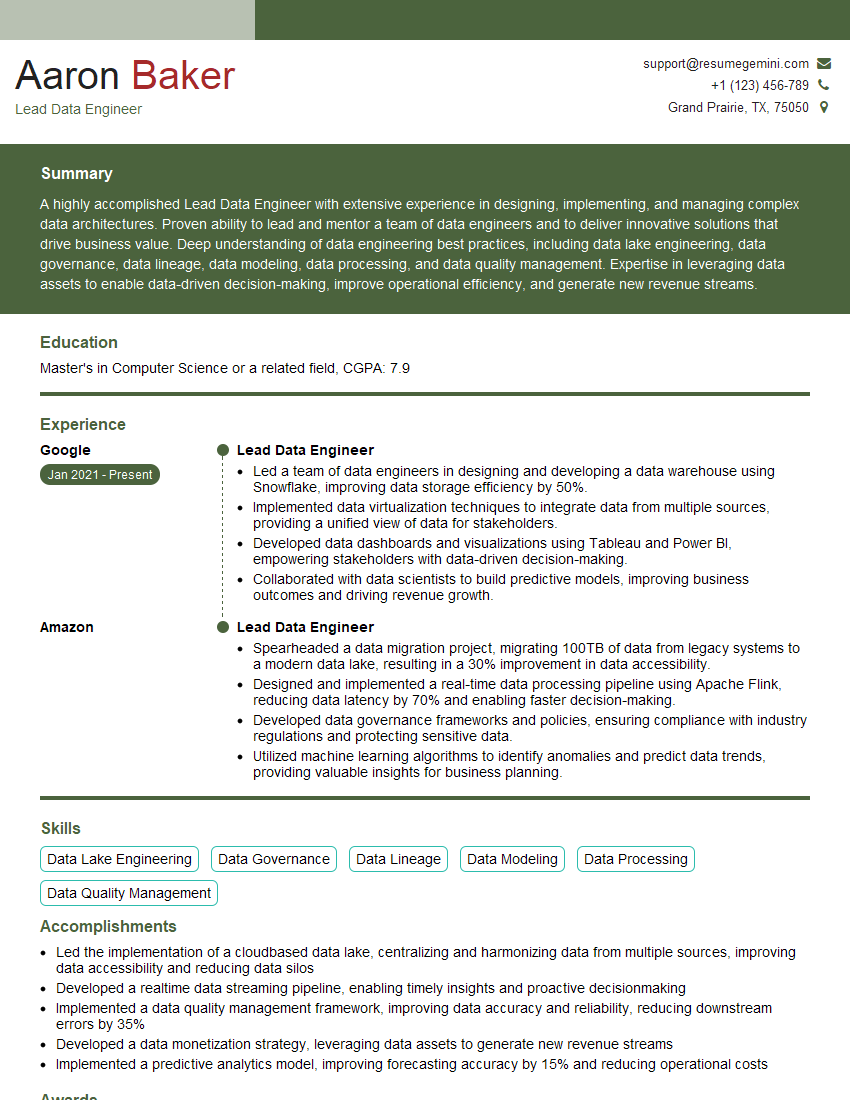

Mastering data manipulation in Java and Groovy is crucial for a successful career in software development, opening doors to diverse and challenging roles. A strong foundation in these skills demonstrates your ability to efficiently process and manage data—a highly sought-after attribute in today’s tech landscape. To further enhance your job prospects, invest time in crafting an ATS-friendly resume that effectively highlights your skills and experience. ResumeGemini is a trusted resource to help you build a professional and impactful resume. Examples of resumes tailored to Data Manipulation (Java, Groovy) roles are available to guide you.
