Unlock your full potential by mastering the most common Computer Systems interview questions. This blog offers a deep dive into the critical topics, ensuring you’re not only prepared to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Computer Systems Interview

Q 1. Explain the difference between a process and a thread.

Processes and threads are both ways of executing a program, but they differ significantly in their resource usage and management. Think of a process as a complete, independent program with its own memory space, while a thread is a lightweight unit of execution *within* a process, sharing the same memory space.

- Process: A process has its own virtual address space and its own system resources (open files, sockets, and so on). Creating a new process is relatively heavy, involving significant overhead. Processes are isolated from each other, enhancing stability; if one crashes, others usually continue running. Example: Modern web browsers typically run tabs in separate processes, so a crash in one tab usually does not take down the others.

- Thread: Threads share the same memory space and resources of their parent process. Creating a new thread is much faster and lighter than creating a process. This shared memory allows for easy communication between threads, but also introduces the risk of race conditions (where multiple threads try to modify the same data simultaneously) if not carefully managed. Example: A word processor might have separate threads for spell checking, auto-saving, and displaying text.

In essence, a process is like a whole apartment building, while threads are like individual apartments within the same building. Each apartment (thread) can do its work independently, but they share the same infrastructure (memory and resources).
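To make the shared-memory point concrete, here is a minimal Python sketch in which several threads update one variable that lives in their common process. The names `counter`, `add_many`, and `run_threads` are illustrative assumptions, not part of any particular codebase. The lock is what prevents the race condition described above.

```python
import threading

counter = 0                      # shared by every thread in this process
counter_lock = threading.Lock()  # guards updates to the shared counter


def add_many(times: int) -> None:
    """Increment the shared counter, taking the lock for each update."""
    global counter
    for _ in range(times):
        with counter_lock:       # without the lock, updates can be lost
            counter += 1


def run_threads(num_threads: int = 4, times: int = 10_000) -> int:
    """Start several threads that all modify the same variable."""
    global counter
    counter = 0
    threads = [
        threading.Thread(target=add_many, args=(times,))
        for _ in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()                 # wait for every thread to finish
    return counter


if __name__ == "__main__":
    print(run_threads())
```

Separate processes would each get their own copy of `counter`, so they would need an explicit mechanism such as pipes, sockets, or shared memory segments to exchange data.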

Q 2. Describe different types of computer system architectures.

Computer system architectures define how different components of a computer system interact. Several key architectures exist:

- Von Neumann Architecture: The most common architecture, where data and instructions are stored in the same memory space and accessed through a single address bus. This design simplifies the hardware but can lead to performance bottlenecks due to the shared bus. Think of it like a single lane road where data and instructions have to compete for access.

- Harvard Architecture: Separates data and instructions into different memory spaces, allowing simultaneous access to both. This enhances performance but necessitates more complex hardware. Imagine a multi-lane highway – dedicated lanes for instructions and data speed up the process.

- Modified Harvard Architecture: This is a hybrid approach. While generally keeping separate data and instruction spaces, it allows some communication between them. It balances the benefits of both architectures, providing increased efficiency without the significant hardware complexity of a pure Harvard architecture.

- Parallel Processing Architectures: Employ multiple processors working together to execute instructions simultaneously. This category includes architectures like multi-core processors, massively parallel processors (MPPs), and distributed systems. They are crucial for handling computationally intensive tasks like scientific simulations or large-scale data analysis.

The choice of architecture depends on the specific requirements of the system, such as performance needs, cost constraints, and power consumption.

Q 3. What are the key components of a computer system?

A computer system comprises several key components working together:

- Central Processing Unit (CPU): The brain of the computer, responsible for executing instructions.

- Memory (RAM): Provides temporary storage for data and instructions currently being used by the CPU. It’s volatile; data is lost when the power is turned off.

- Secondary Storage (Hard Drive, SSD): Provides persistent storage for data even when the power is off.

- Input/Output (I/O) Devices: Devices that allow the computer to interact with the outside world, such as keyboards, mice, monitors, printers, and network interfaces.

- Motherboard: A printed circuit board that connects all the components.

- Power Supply: Provides power to the computer system.

- Operating System (OS): Software that manages the hardware and software resources of the system.

These components interact to carry out tasks. The CPU fetches instructions from memory, processes them, and stores the results back in memory. I/O devices allow interaction with the user or other systems. The OS manages the resources efficiently.
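The fetch, process, and store cycle can be sketched with a toy machine in Python. This is only an illustration: the four opcodes (`LOAD`, `ADD`, `STORE`, `HALT`) and the single accumulator register are assumptions made for the example, not a real instruction set. Instructions and data sit in the same memory list.

```python
def run(memory: list) -> list:
    """Execute a toy program stored in the same memory as its data."""
    pc = 0    # program counter: address of the next instruction
    acc = 0   # accumulator register
    while True:
        op, arg = memory[pc]         # fetch the instruction
        pc += 1
        if op == "LOAD":             # decode and execute it
            acc = memory[arg]
        elif op == "ADD":
            acc += memory[arg]
        elif op == "STORE":
            memory[arg] = acc        # write the result back to memory
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {op}")


program = [
    ("LOAD", 4),    # address 0: acc = memory[4]
    ("ADD", 5),     # address 1: acc += memory[5]
    ("STORE", 6),   # address 2: memory[6] = acc
    ("HALT", 0),    # address 3: stop
    2,              # address 4: data
    3,              # address 5: data
    0,              # address 6: result goes here
]

if __name__ == "__main__":
    print(run(list(program))[6])
```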

Q 4. Explain the concept of virtualization and its benefits.

Virtualization is the creation of a virtual version of something, such as a computer hardware platform, operating system, storage device, or network resources. It allows multiple virtual machines (VMs) to run on a single physical machine, each with its own isolated resources.

- Benefits:

- Cost Savings: Reduces the need for multiple physical machines, saving on hardware costs and energy consumption.

- Increased Resource Utilization: Maximizes the use of existing hardware resources.

- Improved Flexibility: Easily create and manage different environments (e.g., testing new software in a virtual environment).

- Enhanced Security: Isolation between VMs prevents malware from spreading easily.

- Disaster Recovery: Provides backup and restoration capabilities.

Example: A server farm can host multiple VMs, each running a different application or operating system, sharing the same physical hardware.

Q 5. How does a computer’s operating system manage memory?

The operating system (OS) manages memory using various techniques to ensure efficient allocation and prevent conflicts between different processes and applications. Key techniques include:

- Memory Allocation: The OS divides available RAM into smaller blocks and allocates them to processes as needed. Different allocation algorithms (e.g., first-fit, best-fit) are used to optimize memory usage.

- Virtual Memory: Allows programs to use more memory than physically available. It uses a combination of RAM and hard drive space (swap space) to create a larger virtual address space. This allows running more applications than RAM capacity might otherwise permit.

- Paging: Divides both physical and virtual memory into fixed-size blocks (pages). When a process needs a page not currently in RAM, the OS loads it from the hard drive. This technique is more efficient than swapping entire processes.

- Segmentation: Divides memory into logical segments based on program modules. This offers better memory organization and protection.

- Memory Protection: Prevents one process from accessing or modifying the memory of another process, ensuring stability and security.

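The OS continuously tracks memory usage and moves pages between RAM and swap space as needed to keep the system running smoothly. When a process touches memory it is not allowed to access, memory protection blocks the access and the process typically fails with an error such as a segmentation fault.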
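As a rough illustration of paging, the following Python sketch translates a virtual address into a physical one using a page table. The 4096-byte page size is a common value but is assumed here, and `translate`, `PageFault`, and the dictionary-based page table are hypothetical simplifications. Real systems do this in hardware with multi-level tables.

```python
PAGE_SIZE = 4096  # bytes per page; assumed for this example


class PageFault(Exception):
    """Raised when the requested page has no frame in physical memory."""


def translate(virtual_address: int, page_table: dict) -> int:
    """Map a virtual address to a physical address via the page table."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        # A real OS would now load the page from disk and retry.
        raise PageFault(f"page {page_number} is not resident")
    frame_number = page_table[page_number]
    return frame_number * PAGE_SIZE + offset


if __name__ == "__main__":
    table = {0: 7, 1: 5}          # virtual page -> physical frame
    print(hex(translate(0x1234, table)))
```

The offset within the page is unchanged by translation; only the page number is replaced by a frame number.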

Q 6. Describe different types of computer networks.

Computer networks can be classified in several ways based on their size, geographical reach, and topology:

- Personal Area Network (PAN): Connects devices within a small area, such as a person’s desk or a single room. Example: Bluetooth connection between a phone and headphones.

- Local Area Network (LAN): Connects devices within a limited geographical area, such as an office building or a home. Example: Ethernet network connecting computers in a home or office.

- Metropolitan Area Network (MAN): Connects devices within a larger geographical area, such as a city or metropolitan region. Example: A network connecting several university buildings across a city.

- Wide Area Network (WAN): Connects devices across a large geographical area, often spanning multiple countries. Example: The internet.

These networks can have various topologies (the physical or logical layout of the network), such as bus, star, ring, mesh, and tree. The choice of network type and topology depends on factors like scalability, cost, security requirements, and performance needs.

Q 7. What are the advantages and disadvantages of cloud computing?

Cloud computing offers significant advantages but also has drawbacks:

- Advantages:

- Scalability and Elasticity: Easily scale resources up or down as needed, paying only for what is used.

- Cost Savings: Reduce capital expenditure on hardware and IT infrastructure.

- Accessibility: Access data and applications from anywhere with an internet connection.

- Increased Collaboration: Enable easy collaboration among teams and individuals.

- High Availability: Cloud providers usually offer high availability and redundancy.

- Disadvantages:

- Security Concerns: Data security and privacy are concerns when relying on third-party providers.

- Vendor Lock-in: Migrating away from a cloud provider can be difficult and costly.

- Internet Dependency: Requires a reliable internet connection.

- Downtime: Although rare, outages can affect services.

- Cost Management: Uncontrolled usage can lead to unexpected costs.

Choosing cloud computing depends on carefully weighing these advantages and disadvantages against specific business needs and risk tolerance.

Q 8. Explain the concept of RAID and its different levels.

RAID, or Redundant Array of Independent Disks, is a data storage virtualization technology that combines multiple physical disk drive components into a single logical unit for the purposes of data redundancy, performance improvement, or both. Think of it like having multiple assistants working together to complete a task faster and more reliably.

- RAID 0 (Striping): Data is split across multiple disks, increasing read/write speeds. However, there’s no redundancy; if one disk fails, all data is lost. Imagine a book split into chapters across multiple volumes. If one volume is lost, the whole book is gone.

- RAID 1 (Mirroring): Data is duplicated across multiple disks, providing high redundancy. If one disk fails, the other disk contains an identical copy. It’s like having two identical copies of a document—if one is lost, you still have the other.

- RAID 5 (Striping with Parity): Data is striped across multiple disks, with parity information distributed across all disks. It provides both speed and redundancy. One disk can fail without data loss. This is similar to a puzzle where a piece can be rebuilt using information from the others.

- RAID 6 (Striping with Double Parity): Similar to RAID 5, but with double parity information, allowing for two simultaneous disk failures without data loss. This is like having a puzzle with extra pieces—you can still solve it even if a couple of pieces are missing.

- RAID 10 (Mirroring and Striping): Stripes data across mirrored pairs of disks, combining the speed of striping with the redundancy of mirroring. It’s a robust solution, but it needs at least four disks and only half of the raw capacity is usable.

The choice of RAID level depends on the specific needs of the system. For example, a database server might benefit from RAID 10 for both performance and data protection, while a media server might choose RAID 0 for speed, accepting the higher risk.
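The parity idea behind RAID 5 can be sketched in a few lines of Python. Parity is the byte-wise XOR of the data blocks, so any single missing block can be rebuilt by XOR-ing the survivors with the parity block. The block contents and the `xor_blocks` helper below are assumptions for illustration; real arrays rotate parity across disks and work on much larger stripes.

```python
from functools import reduce


def xor_blocks(blocks: list) -> bytes:
    """XOR equal-length byte blocks together, position by position."""
    return bytes(
        reduce(lambda a, b: a ^ b, column) for column in zip(*blocks)
    )


# Three data blocks, as if striped across three disks.
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(data)            # stored on a fourth disk

# Simulate losing the second disk and rebuilding it from the rest.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)

if __name__ == "__main__":
    print(rebuilt == data[1])
```

This also shows why RAID 5 tolerates only one failure: with two blocks missing, a single parity block does not carry enough information to recover both.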

Q 9. How do you troubleshoot network connectivity issues?

Troubleshooting network connectivity issues involves a systematic approach, much like detective work. I start with the most basic checks and move towards more advanced troubleshooting techniques.

- Check the obvious: Are the cables properly connected? Is the device powered on? Is the network adapter enabled?

- Ping the device: Use the `ping` command (e.g., `ping google.com`) to check basic connectivity. A successful ping indicates that basic connectivity is established.

- Check IP configuration: Verify the IP address, subnet mask, and default gateway are correctly configured on the device. Use commands like `ipconfig /all` (Windows) or `ifconfig` (Linux/macOS).

- Examine network settings: Check firewall settings, both on the device and on the network router. Firewalls can sometimes block network connections.

- Use network monitoring tools: Tools like `tcpdump` or Wireshark can capture and analyze network traffic to identify potential issues.

- Check DNS resolution: If you can’t access websites by name, check if the DNS server is resolving names correctly using `nslookup` or `dig`.

- Check for connectivity issues with other devices: Is the problem limited to just one device, or are multiple devices affected? This helps isolate whether the problem is with the device itself or with the network.

- Contact your ISP: If the problem seems to be external to your local network, contact your internet service provider to check for outages.

The key is to be methodical and systematic in your approach. I typically document each step I take, allowing me to retrace my actions and understand the cause of the problem.
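Two of the checks above, DNS resolution and basic reachability, can be scripted. The sketch below uses only Python's standard `socket` module; the function names, the default port 443, and the three-second timeout are assumptions chosen for the example. It tests a TCP connection rather than an ICMP ping, since raw ping usually needs elevated privileges.

```python
import socket
from typing import Optional


def resolve(hostname: str) -> Optional[str]:
    """Return the IPv4 address for a hostname, or None if DNS fails."""
    try:
        return socket.gethostbyname(hostname)
    except socket.gaierror:
        return None


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def diagnose(hostname: str, port: int = 443) -> str:
    """Run the checks in order and report the first one that fails."""
    address = resolve(hostname)
    if address is None:
        return "DNS resolution failed"
    if not can_connect(address, port):
        return f"resolved to {address}, but TCP port {port} is unreachable"
    return f"{hostname} ({address}) is reachable on port {port}"


if __name__ == "__main__":
    print(diagnose("google.com"))
```

Running the checks in a fixed order mirrors the manual process: a DNS failure points at name resolution, while a successful lookup followed by a failed connection points at routing, firewalls, or the remote service.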

Q 10. Describe your experience with database management systems.

I have extensive experience with various database management systems (DBMS), including relational databases like MySQL, PostgreSQL, and Oracle, as well as NoSQL databases such as MongoDB and Cassandra. My experience encompasses database design, implementation, optimization, and administration.

For instance, in a previous role, I designed and implemented a high-availability PostgreSQL database cluster for a large e-commerce application. This involved careful consideration of data modeling, schema design, performance optimization techniques (such as indexing and query optimization), and disaster recovery planning. I also have experience using various database tools to monitor performance, troubleshoot issues and conduct regular backups.

I am comfortable working with SQL and NoSQL databases, selecting the most appropriate type based on the specific application needs. My experience also includes working with cloud-based database services such as Amazon RDS and Google Cloud SQL.

Q 11. Explain the importance of data backup and recovery.

Data backup and recovery are critical for business continuity and data protection. Data loss due to hardware failure, software errors, cyberattacks, or natural disasters can be catastrophic. A robust backup and recovery strategy mitigates these risks.

A good backup strategy should include multiple levels of protection, including:

- Regular backups: Frequent backups (daily, hourly, or even more frequently, depending on the criticality of the data) to ensure that minimal data is lost in case of failure.

- Multiple backup locations: Keeping backups in at least two geographically separate locations protects against disasters that could affect a single location (e.g., fire, flood).

- Different backup types: A combination of full, incremental, and differential backups allows for a balance between speed and storage space. Full backups are done periodically. Incremental backups store only the changes since the last backup of any type, while differential backups store all changes since the last full backup.

- Regular testing: Regularly testing the restoration process confirms that the backups are valid and can actually be restored in an emergency. A recovery plan is only as good as its last successful restoration test.

A well-defined recovery plan is equally essential. This plan should outline the steps needed to restore data from backup, including the time required, resources needed, and roles and responsibilities of the personnel involved.
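The difference between incremental and differential backups comes down to which reference time is used when selecting changed files. The Python sketch below illustrates this with made-up file names and plain numeric timestamps; `files_to_back_up` is a hypothetical helper, not part of any backup tool.

```python
def files_to_back_up(mtimes: dict, since: float) -> list:
    """Return the files modified after the reference time, sorted by name."""
    return sorted(name for name, mtime in mtimes.items() if mtime > since)


# Hypothetical timeline: a full backup at t=100, an incremental at t=200.
last_full = 100
last_backup_of_any_type = 200

# Last-modified time of each file.
mtimes = {"a.txt": 50, "b.txt": 150, "c.txt": 250}

# Incremental: only what changed since the most recent backup.
incremental = files_to_back_up(mtimes, since=last_backup_of_any_type)

# Differential: everything that changed since the last full backup.
differential = files_to_back_up(mtimes, since=last_full)

if __name__ == "__main__":
    print("incremental:", incremental)
    print("differential:", differential)
```

Restoring from incrementals needs the full backup plus every incremental in order, while restoring from a differential needs only the full backup and the latest differential.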

Q 12. How do you ensure data security in a computer system?

Ensuring data security is a multifaceted challenge requiring a layered approach. It’s not a single solution, but a comprehensive strategy.

- Access control: Implementing strong authentication mechanisms (like multi-factor authentication) and authorization policies (limiting user access based on roles and responsibilities) prevents unauthorized access.

- Data encryption: Encrypting data both at rest (on storage devices) and in transit (during network transmission) protects data from unauthorized disclosure, even if it is intercepted.

- Regular security audits and penetration testing: Regularly evaluating the security posture of the system, identifying vulnerabilities, and proactively addressing them before they can be exploited by attackers.

- Intrusion detection and prevention systems (IDS/IPS): Implementing security tools to monitor network traffic and identify malicious activities, such as attempts to intrude into the system.

- Regular software updates and patching: Keeping the operating system, applications, and firmware up-to-date helps mitigate vulnerabilities that might be exploited by attackers.

- Data loss prevention (DLP): Implement measures to prevent sensitive data from being leaked, whether accidentally or intentionally, to unauthorized users or systems.

- Employee training: Educating employees about security best practices such as creating strong passwords, identifying phishing scams, and recognizing social engineering attempts is crucial in minimizing human error, a frequent cause of security breaches.

Data security is an ongoing process, not a one-time fix. Continuous monitoring, adaptation, and improvement are vital to maintain a strong security posture.

Q 13. What are the different types of operating systems?

Operating systems (OS) are the fundamental software that manages computer hardware and software resources and provides common services for computer programs. There are various types, categorized in several ways:

- By architecture: This refers to the processor architecture the OS is built to run on. Common architectures include x86 (used in most desktop and laptop computers), ARM (used in smartphones and embedded systems), and others.

- By purpose: Operating systems can be designed for specific purposes, such as embedded systems (used in appliances and IoT devices), real-time systems (used in critical applications demanding immediate response), or general-purpose systems (used for a broad range of tasks).

- By licensing model: The licensing model dictates how the OS is distributed and used. Examples include open-source OS (like Linux) and proprietary OS (like Windows and macOS). The difference largely boils down to source code availability and commercial licensing.

Some prominent examples include:

- Windows: A proprietary OS dominant on desktops and widely used on enterprise servers.

- macOS: A proprietary OS used primarily on Apple computers.

- Linux: A family of open-source operating systems known for its flexibility and customizability, used extensively in servers, embedded systems, and supercomputers.

- Android: An open-source OS based on Linux, primarily used on mobile devices.

- iOS: A proprietary OS used on Apple mobile devices.

The choice of operating system depends greatly on the specific requirements of the task. For instance, high performance and stability are vital for servers, often leading to the choice of Linux, while ease of use and a rich ecosystem of applications are key factors for desktop users, favoring Windows or macOS.

Q 14. Describe your experience with scripting languages (e.g., Bash, PowerShell).

I have substantial experience with scripting languages like Bash (on Linux/macOS systems) and PowerShell (on Windows systems). These languages are invaluable for automating tasks, managing systems, and enhancing productivity.

In Bash, I frequently use scripts for tasks such as automating backups, managing user accounts, deploying software, and monitoring system performance. For example, I’ve written scripts to automatically check disk space, send email alerts when critical thresholds are exceeded, and perform routine maintenance tasks. A simple example of a Bash script to list all files in a directory could look like this:

    #!/bin/bash
    ls -l /path/to/directory

In PowerShell, I’ve worked on similar automation tasks within the Windows environment. PowerShell’s object-oriented approach allows for more powerful and flexible scripting compared to batch scripting. For example, I’ve built scripts to manage Active Directory users, automate software installations, and gather system information. Here’s a small snippet of PowerShell code that gets the current date and time:

    Get-Date

My scripting skills allow me to efficiently manage systems, troubleshoot issues, and implement robust automation solutions, improving operational efficiency and reducing manual effort.

Q 15. Explain the concept of system calls.

System calls are the fundamental way a program interacts with the operating system’s kernel. Think of them as requests a program makes to the OS to perform tasks it can’t do on its own, like accessing files, managing memory, or handling network communication. They act as a bridge between user-space applications and the kernel, which has privileged access to system hardware and resources.

For example, if your application needs to read data from a file, it doesn’t directly interact with the hard drive. Instead, it makes a system call (e.g., read() in Unix-like systems) to the kernel. The kernel then handles the low-level details of reading the data from the disk and returns the data to your application. This ensures system stability and prevents applications from directly accessing hardware, which could lead to crashes or security vulnerabilities.

Different operating systems have their own sets of system calls, though many have analogous functions. The system call interface provides a consistent and well-defined way for applications to interact with the OS, regardless of the underlying hardware or architecture. This abstraction is crucial for portability and maintainability.
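For a concrete feel, Python's `os` module exposes thin wrappers around the file-related system calls on Unix-like systems. The sketch below writes a file and reads it back using `os.open`, `os.write`, `os.read`, and `os.close`; the `write_then_read` helper and the temporary file path are assumptions for illustration.

```python
import os
import tempfile


def write_then_read(path: str, payload: bytes) -> bytes:
    """Write payload to path and read it back using low-level calls."""
    # open(2): ask the kernel for a file descriptor.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        os.write(fd, payload)        # write(2); fine for small payloads
    finally:
        os.close(fd)                 # close(2): release the descriptor

    fd = os.open(path, os.O_RDONLY)
    try:
        return os.read(fd, len(payload))   # read(2)
    finally:
        os.close(fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(write_then_read(os.path.join(tmp, "demo.txt"), b"hello"))
```

Higher-level functions such as the built-in `open()` add buffering and convenience on top, but they end up issuing the same kernel requests.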

Q 16. How do you monitor system performance?

Monitoring system performance involves observing key metrics to identify bottlenecks, potential problems, and optimize resource utilization. My approach is multifaceted and depends on the system’s role and complexity. I typically use a combination of tools and techniques.

- Resource Monitoring: Tools like `top` (Linux/macOS), Task Manager (Windows), or specialized monitoring agents track CPU usage, memory consumption, disk I/O, and network traffic. Analyzing trends in these metrics helps identify resource-intensive processes or potential hardware limitations.

- System Logging: Examining system logs (e.g., `/var/log/syslog` on Linux) reveals errors, warnings, and other events that may indicate performance problems. Log analysis tools facilitate efficient review of large log files.

- Performance Profiling: Tools like `perf` (Linux) allow detailed analysis of application performance, identifying specific code sections causing slowdowns. This is particularly useful for optimizing application code.

- Synthetic Monitoring: Using automated scripts or tools to simulate user activity and measure response times provides an objective assessment of system responsiveness under load.

In addition to these technical approaches, I also emphasize proactive monitoring by establishing baselines and setting alerts for critical metrics. This allows for timely intervention before problems escalate significantly.
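A threshold-based alert of the kind described above might be sketched as follows in Python. Disk usage is read with the standard `shutil.disk_usage`; the warning and critical levels (80% and 90%) and the function names are assumptions for the example, and real thresholds should come from a measured baseline.

```python
import shutil


def disk_usage_percent(path: str = "/") -> float:
    """Return the percentage of the filesystem at path that is in use."""
    usage = shutil.disk_usage(path)
    if usage.total == 0:
        return 0.0
    return 100.0 * usage.used / usage.total


def check_threshold(value: float, warn: float, crit: float) -> str:
    """Classify a metric against warning and critical thresholds."""
    if value >= crit:
        return "CRITICAL"
    if value >= warn:
        return "WARNING"
    return "OK"


if __name__ == "__main__":
    percent = disk_usage_percent("/")
    status = check_threshold(percent, warn=80.0, crit=90.0)
    print(f"disk usage {percent:.1f}%: {status}")
```

In practice a script like this would run on a schedule and hand anything other than `OK` to an alerting channel such as email or a paging service.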

Q 17. Describe your experience with different types of hardware.

My experience spans a range of hardware, from embedded systems to large-scale server clusters. I’ve worked with various CPU architectures (x86, ARM), different storage solutions (HDDs, SSDs, NVMe), and diverse networking technologies (Ethernet, Fibre Channel).

For instance, I’ve deployed and managed servers using Intel Xeon processors and high-capacity RAID storage arrays in enterprise data centers. I’ve also worked with smaller embedded systems using ARM processors with limited memory and storage resources, requiring careful optimization of software and resource allocation. This diversity has equipped me with the skills to adapt to various hardware configurations and constraints.

Beyond processors and storage, I am also familiar with different types of network interfaces, including 10GbE and Infiniband, and have experience troubleshooting networking issues in high-performance computing environments. This includes working with switches, routers, and firewalls to optimize network performance and ensure security.

Q 18. Explain the concept of caching.

Caching is a technique used to store frequently accessed data in a faster, smaller, and more easily accessible storage location. Think of it like this: Imagine a library where the most popular books are kept near the entrance for easy access. This is analogous to caching data in a computer system.

In computer systems, caches are typically implemented at multiple levels, including:

- CPU Cache: Very fast memory integrated directly into the CPU, storing frequently used instructions and data.

- Memory Cache (RAM): Faster than disk storage, RAM acts as a cache for data frequently accessed from the hard drive or SSD.

- Web Browser Cache: Stores frequently accessed web pages and assets (images, CSS, etc.) to speed up browsing.

- Database Cache: Stores frequently queried data in memory for faster retrieval.

The primary benefit of caching is improved performance. By storing frequently accessed data closer to where it’s needed, applications can retrieve data significantly faster than if they had to access slower storage tiers. The trade-off is that caches have limited space, so effective cache management strategies (like least recently used (LRU) replacement algorithms) are crucial for maximizing efficiency.
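A least recently used (LRU) policy can be sketched with Python's `collections.OrderedDict`, which remembers insertion order and can move a key to the end when it is touched. The `LRUCache` class below is a minimal illustration under that assumption, not a production cache (it is not thread-safe, for example).

```python
from collections import OrderedDict


class LRUCache:
    """A small fixed-capacity cache that evicts the least recently used key."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()   # oldest entry first, newest last

    def get(self, key, default=None):
        if key not in self._items:
            return default            # cache miss
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)   # evict the oldest entry


if __name__ == "__main__":
    cache = LRUCache(capacity=2)
    cache.put("a", 1)
    cache.put("b", 2)
    cache.get("a")        # "a" is now the most recently used
    cache.put("c", 3)     # evicts "b"
    print(cache.get("b"), cache.get("a"), cache.get("c"))
```

For caching function results specifically, the standard library's `functools.lru_cache` decorator applies the same policy without any custom code.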

Q 19. What are the different types of computer system security threats?

Computer system security threats are numerous and constantly evolving. They can broadly be categorized as:

- Malware: This encompasses viruses, worms, Trojans, ransomware, and spyware. Malware can infect systems, steal data, disrupt operations, or encrypt files for ransom.

- Phishing and Social Engineering: These attacks manipulate users into revealing sensitive information or installing malware by exploiting human psychology and trust.

- Denial-of-Service (DoS) Attacks: These attacks overwhelm a system or network with traffic, making it unavailable to legitimate users.

- Data Breaches: Compromises of databases or other systems that expose sensitive user data, leading to identity theft and financial losses.

- Insider Threats: Malicious or negligent actions by authorized personnel within an organization.

- Software Vulnerabilities: Flaws in software that can be exploited by attackers to gain unauthorized access or control.

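- Network and Web Application Attacks: Exploits of weaknesses in network or application security. Man-in-the-middle attacks target traffic in transit, while SQL injection and cross-site scripting (XSS) target web applications that handle input unsafely.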

Addressing these threats requires a multi-layered security approach including strong passwords, firewalls, intrusion detection systems, regular software updates, employee training, and data encryption.
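As one concrete defensive measure against SQL injection, queries can pass user input as parameters instead of building SQL strings by hand. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `users` table, its contents, and the `find_user` helper are assumptions made for the example.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, username: str) -> list:
    """Look up a user with a parameterized query (the ? placeholder)."""
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ?",
        (username,),   # passed as data, never spliced into the SQL text
    )
    return cursor.fetchall()


# Hypothetical in-memory database for the demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany(
    "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
)

if __name__ == "__main__":
    print(find_user(conn, "alice"))
    # A classic injection attempt is treated as a literal, odd username.
    print(find_user(conn, "' OR '1'='1"))
```

Had the query been assembled with string concatenation, the second input would have changed the logic of the `WHERE` clause and matched every row.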

Q 20. How do you handle system failures?

Handling system failures requires a proactive and systematic approach. My strategy involves:

- Redundancy and Failover: Implementing redundant systems and failover mechanisms ensures continued operation even if one component fails. For example, using RAID for data storage or load balancing across multiple servers provides resilience.

- Monitoring and Alerting: Proactive monitoring allows for early detection of potential problems before they escalate into major failures. Automated alerts notify administrators of critical issues, allowing for timely intervention.

- Data Backup and Recovery: Regular backups are crucial for data protection and enable swift restoration in the event of a failure. Testing the recovery process is essential to ensure its effectiveness.

- Root Cause Analysis: After a failure, conducting a thorough root cause analysis is vital to prevent similar incidents in the future. This involves identifying the underlying causes of the failure and implementing corrective measures.

- Incident Response Plan: Having a documented incident response plan outlines the steps to take during and after a system failure, ensuring a coordinated and efficient response.

Furthermore, maintaining detailed documentation of system configurations and procedures greatly aids in troubleshooting and recovery efforts.
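The failover idea can be sketched at the application level: try a primary backend and fall back to replicas if it fails. In the Python example below, backends are plain callables and `call_with_failover` is a hypothetical helper; catching every `Exception` is a simplification, and real code would usually catch only the errors that indicate an unavailable backend.

```python
def call_with_failover(backends: list, request):
    """Try each backend in order and return the first successful result."""
    errors = []
    for backend in backends:
        try:
            return backend(request)
        except Exception as exc:      # simplification: treat any error as a failure
            errors.append(exc)        # remember why this backend failed
    raise RuntimeError(f"all {len(backends)} backends failed: {errors}")


def broken_primary(request):
    """Stand-in for a server that is currently down."""
    raise ConnectionError("primary is unreachable")


def healthy_replica(request):
    """Stand-in for a working standby server."""
    return f"replica handled {request}"


if __name__ == "__main__":
    print(call_with_failover([broken_primary, healthy_replica], "GET /status"))
```

Collecting the errors instead of discarding them keeps the information needed for the root cause analysis step.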

Q 21. Explain your experience with system logging and monitoring.

My experience with system logging and monitoring is extensive. I’ve worked with various logging systems, including syslog, centralized log management platforms like Elasticsearch, Logstash, and Kibana (ELK stack), and application-specific logging frameworks. I understand the importance of structured logging for efficient analysis.

I’ve used these tools to:

- Identify and troubleshoot performance bottlenecks: By analyzing log data, I can pinpoint the cause of slowdowns or errors, helping to improve system efficiency.

- Detect and respond to security incidents: Log monitoring helps identify suspicious activities, such as unauthorized access attempts or malware infections, allowing for timely response and mitigation.

- Track system changes and configurations: Logging provides an audit trail of system changes, aiding in security investigations and configuration management.

- Generate performance reports and dashboards: Visualizing log data through dashboards provides insights into system health and trends over time, facilitating proactive system maintenance.

I also have experience setting up automated alerts based on specific log events, allowing for prompt notification of critical issues. Efficient log management and analysis are key to ensuring system stability, security, and performance.
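Structured logging can be illustrated with Python's standard `logging` module and a formatter that emits one JSON object per line. The field names (`time`, `level`, `logger`, `message`) and the `JsonFormatter` and `make_logger` helpers are assumptions for this sketch; log platforms generally accept whatever schema is applied consistently.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(entry)


def make_logger(name: str, stream=None) -> logging.Logger:
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False              # avoid duplicate output via the root logger
    handler = logging.StreamHandler(stream)   # defaults to stderr when stream is None
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    return logger


if __name__ == "__main__":
    log = make_logger("disk-monitor")
    log.warning("disk usage at %d%%", 91)
```

Because every line is valid JSON, downstream tools can filter on fields such as `level` without fragile text parsing.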

Q 22. Describe your experience with automation tools.

My experience with automation tools spans several years and encompasses a wide range of tools, from scripting languages like Bash and Python to configuration management and infrastructure-as-code tools such as Ansible and Terraform. I’ve used these tools extensively to automate repetitive tasks, streamline workflows, and improve efficiency in various aspects of system administration.

For instance, I used Ansible to automate the deployment and configuration of web servers across multiple data centers. This involved creating playbooks to handle tasks like installing software packages, configuring network settings, and deploying applications. The result was a significant reduction in deployment time and a consistent configuration across all servers, minimizing human error. In another project, I leveraged Python scripting to automate log analysis, identifying potential system issues proactively rather than reactively. This prevented service disruptions and improved overall system stability. My experience extends to CI/CD pipelines using Jenkins and GitLab CI, automating the build, testing, and deployment processes for software applications.

Q 23. Explain your experience with containerization technologies (e.g., Docker, Kubernetes).

Containerization technologies, particularly Docker and Kubernetes, are fundamental to my approach to modern system administration. Docker allows me to package applications and their dependencies into isolated containers, ensuring consistent execution across different environments. This solves the infamous “it works on my machine” problem. Kubernetes, on the other hand, provides an orchestration platform to manage and scale these Docker containers across a cluster of machines.

For example, I’ve used Docker to create and deploy microservices, breaking down monolithic applications into smaller, more manageable components. This approach improved scalability, resilience, and the overall maintainability of the applications. My experience with Kubernetes includes designing and implementing highly available and scalable deployments, leveraging features like deployments, services, and ingress controllers to manage traffic routing and resource allocation. I’ve also extensively used Kubernetes for managing persistent volumes, ensuring data durability for stateful applications.

```dockerfile
# Example Dockerfile for a simple Node.js application
FROM node:16
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
EXPOSE 3000
CMD [ "npm", "start" ]
```

Q 24. What is your experience with serverless computing?

Serverless computing represents a paradigm shift in application development and deployment. Instead of managing servers directly, developers focus on writing code that runs in response to events, without the burden of server provisioning and management. I’ve worked with several serverless platforms, including AWS Lambda and Azure Functions.

In a recent project, we migrated a batch processing job to AWS Lambda. This eliminated the need for constantly running servers, reducing operational costs significantly while improving scalability. The function triggered automatically upon new data arrival, processing it efficiently and scaling based on demand. The reduced operational overhead freed up resources to focus on improving the core application logic, rather than managing infrastructure.
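To make the event-driven model concrete, here is a minimal sketch of a Python Lambda handler. It assumes the trigger is an S3 object-created notification (the project description only says "new data arrival"), and `process_object` is a hypothetical placeholder for the actual batch logic.

```python
from urllib.parse import unquote_plus


def process_object(bucket, key):
    """Hypothetical placeholder: a real job would fetch and transform the object."""
    return f"processed s3://{bucket}/{key}"


def lambda_handler(event, context):
    """Entry point invoked by Lambda; `event` carries the S3 notification records."""
    results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications, so decode them first.
        key = unquote_plus(record["s3"]["object"]["key"])
        results.append(process_object(bucket, key))
    return {"processed": len(results), "results": results}


if __name__ == "__main__":
    # Hand-built sample event for local testing; bucket and key are made up.
    sample_event = {
        "Records": [
            {"s3": {"bucket": {"name": "example-bucket"},
                    "object": {"key": "incoming/report+2024.csv"}}}
        ]
    }
    print(lambda_handler(sample_event, None))
```

Because the handler is a plain function, it can be exercised locally with a hand-built event before it is deployed.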

Q 25. Explain the difference between hardware and software.

The difference between hardware and software is fundamental to computer science. Hardware refers to the physical components of a computer system – the tangible parts you can touch, such as the CPU, RAM, hard drives, monitor, keyboard, and motherboard. Software, conversely, is the set of instructions, data, or programs that tell the hardware what to do. It’s intangible, existing only as code and data stored on the hardware.

Think of it like a car: the hardware is the engine, chassis, wheels, and other physical components. The software is the driving instructions, the navigation system, and the entertainment system. The hardware provides the platform, while the software enables functionality and usability.

Q 26. What is your experience with different types of network topologies?

My experience encompasses a variety of network topologies, including bus, star, ring, mesh, and tree topologies. Each topology has its own strengths and weaknesses concerning scalability, reliability, and cost.

The star topology, for instance, is very common in local area networks (LANs), with all devices connected to a central hub or switch. It is simple to manage and troubleshoot, but the central device is a single point of failure: if it goes down, every attached device loses connectivity. Mesh topologies, on the other hand, offer high redundancy and fault tolerance because they provide multiple paths between devices. They are more complex and costly to build (a full mesh of n devices needs n(n-1)/2 links), but they suit critical systems where downtime is unacceptable. I’ve designed and implemented networks using these different topologies, selecting the most appropriate one based on the specific requirements of the project, such as size, budget, and performance needs.
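The trade-off between star and mesh can be checked with a small Python sketch. It models each topology as an adjacency map, counts links, and tests whether the remaining devices stay connected after one node fails. The function names and the five-device example are assumptions chosen for illustration.

```python
from collections import deque


def star(n):
    """Star: devices 1..n each link only to a central switch (node 0)."""
    adj = {i: set() for i in range(n + 1)}
    for i in range(1, n + 1):
        adj[0].add(i)
        adj[i].add(0)
    return adj


def full_mesh(n):
    """Full mesh: every device links directly to every other device."""
    return {i: {j for j in range(n) if j != i} for i in range(n)}


def link_count(adj):
    """Each link appears in two adjacency sets, so halve the total."""
    return sum(len(neighbors) for neighbors in adj.values()) // 2


def survives_failure(adj, failed):
    """True if all remaining nodes can still reach each other without `failed`."""
    remaining = set(adj) - {failed}
    if not remaining:
        return True
    start = next(iter(remaining))
    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first search that never passes through the failed node
        node = queue.popleft()
        for neighbor in adj[node]:
            if neighbor != failed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen == remaining


if __name__ == "__main__":
    print(link_count(star(5)), survives_failure(star(5), 0))
    print(link_count(full_mesh(5)), survives_failure(full_mesh(5), 0))
```

With five devices, the star needs five links but is partitioned when the switch fails, while the full mesh needs ten links and tolerates the loss of any single node.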

Q 27. Describe your experience with disaster recovery planning.

Disaster recovery planning is crucial for ensuring business continuity in the event of unexpected events like natural disasters, cyberattacks, or hardware failures. My experience includes developing and implementing comprehensive disaster recovery plans involving various techniques, such as data backups, replication, and failover mechanisms.

A key component of my approach is establishing clear recovery time objectives (RTOs) and recovery point objectives (RPOs). The RTO is the maximum acceptable time to restore service after an incident, and the RPO is the maximum acceptable window of data loss, measured back from the moment of failure. I’ve used various backup and recovery tools to ensure data integrity and rapid restoration. I’ve also implemented strategies like high-availability clustering and geographically dispersed data centers to enhance resilience and minimize the impact of disruptions. Regular testing and drills are essential parts of the process, verifying the effectiveness of the plan and identifying areas for improvement.
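A simple way to reason about these objectives is to compare them against the backup schedule and the measured restore time. The Python sketch below assumes periodic backups, where the worst-case data loss equals one full backup interval. The function names and the example figures are hypothetical.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval):
    """A failure just before the next backup loses up to one full interval."""
    return backup_interval


def meets_objectives(backup_interval, estimated_restore_time, rpo, rto):
    """Report whether a backup schedule and restore estimate satisfy the RPO and RTO."""
    return {
        "rpo_met": worst_case_data_loss(backup_interval) <= rpo,
        "rto_met": estimated_restore_time <= rto,
    }


if __name__ == "__main__":
    # Hypothetical figures: nightly backups, 2-hour restore, 4-hour RPO and RTO.
    print(meets_objectives(
        backup_interval=timedelta(hours=24),
        estimated_restore_time=timedelta(hours=2),
        rpo=timedelta(hours=4),
        rto=timedelta(hours=4),
    ))
```

In this example the nightly backup misses a four-hour RPO even though the restore time meets the RTO, which is the kind of gap that would justify more frequent backups or continuous replication.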

Q 28. How do you stay up-to-date with the latest technologies in computer systems?

Staying current in the rapidly evolving field of computer systems requires a multi-pronged approach. I regularly read industry publications like technical journals and blogs, attend conferences and webinars, and actively participate in online communities.

Hands-on experience is equally important, so I actively seek out opportunities to work with new technologies and tools. I regularly contribute to open-source projects, which allows me to learn from other developers and gain practical experience with cutting-edge technologies. Online courses and certifications also play a vital role, providing structured learning and formal recognition of acquired skills. Ultimately, continuous learning is a commitment, not just a task, in this dynamic field.

Key Topics to Learn for Computer Systems Interview

- Operating Systems: Understand core concepts like process management, memory management, file systems, and I/O operations. Explore practical applications in system administration and performance tuning.

- Computer Architecture: Grasp the fundamentals of CPU architecture, memory hierarchy (cache, RAM, disk), and input/output systems. Be prepared to discuss trade-offs and performance implications of different architectural choices.

- Networking: Familiarize yourself with the TCP/IP protocol suite (including TCP, UDP, and IP), network topologies, and security considerations. Understand practical applications in network administration and troubleshooting.

- Data Structures and Algorithms: Master fundamental data structures (arrays, linked lists, trees, graphs) and algorithms (searching, sorting, graph traversal). Practice applying these to solve real-world problems in a computer systems context.

- Databases: Learn about relational and NoSQL databases, database design principles (normalization), and query optimization techniques. Understand practical applications in data management and analysis.

- Distributed Systems: Explore concepts like consistency, fault tolerance, and concurrency in distributed systems. Understand challenges and solutions related to distributed computing.

- System Security: Understand common security threats and vulnerabilities in computer systems. Explore security mechanisms and best practices for system protection.

- Virtualization and Cloud Computing: Learn about virtualization technologies and cloud computing platforms (AWS, Azure, GCP). Understand the benefits and challenges of using virtualized and cloud-based systems.

Next Steps

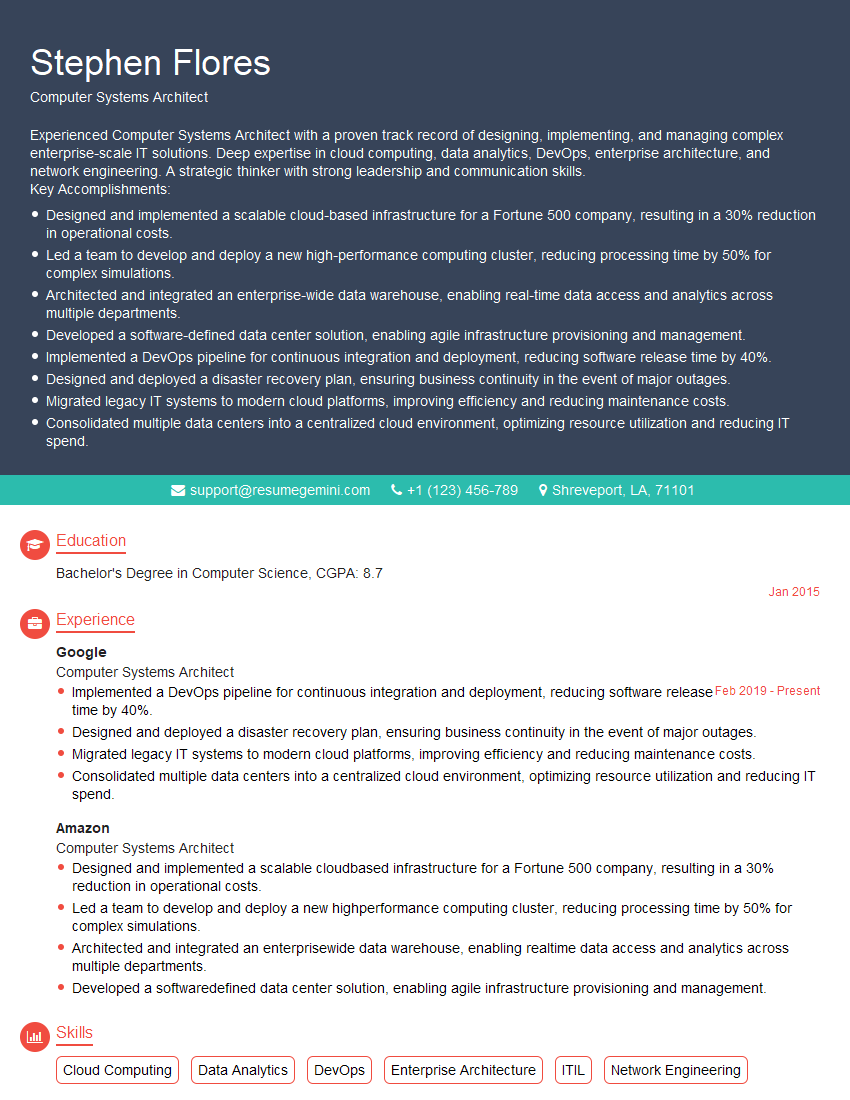

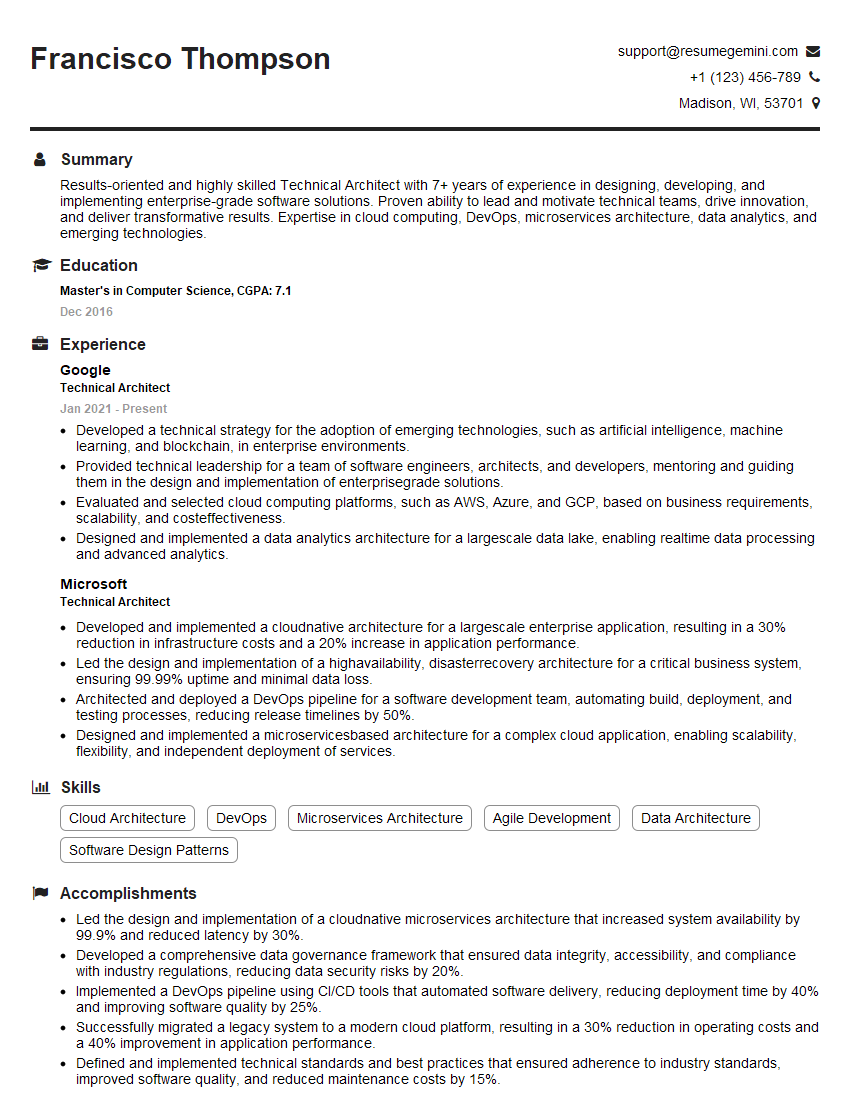

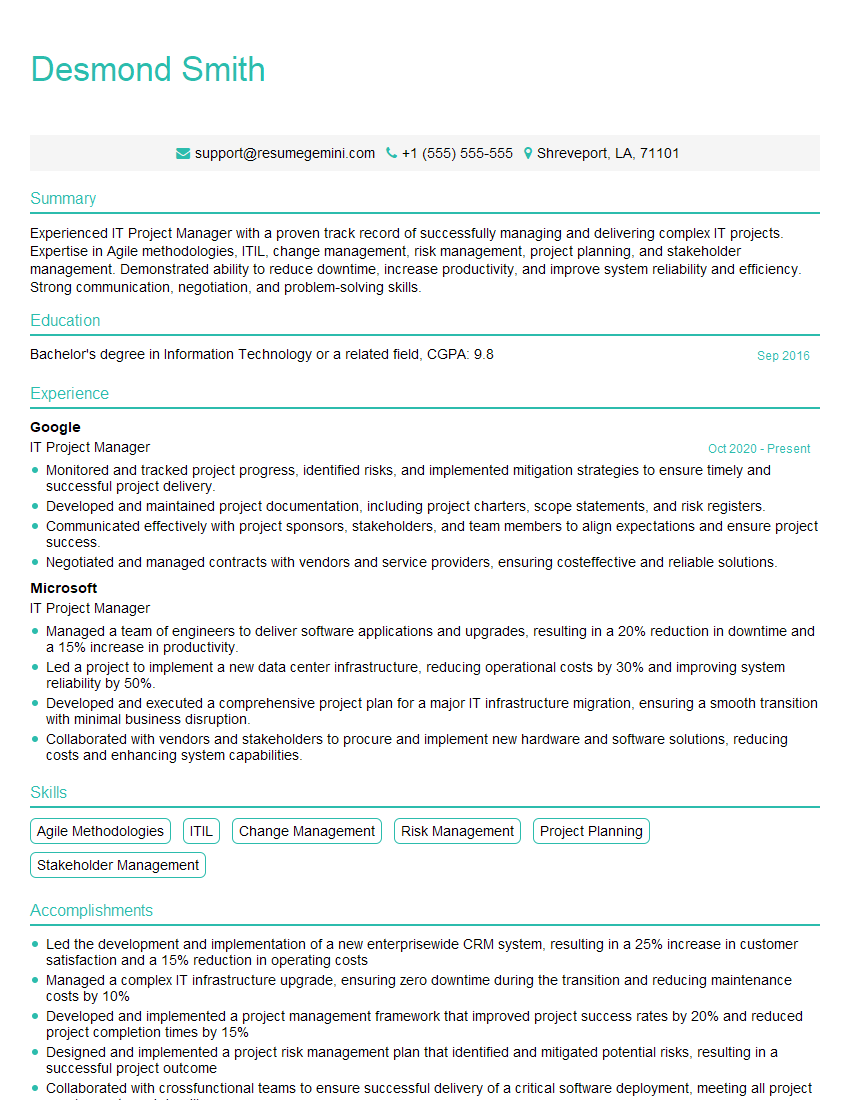

Mastering Computer Systems principles is crucial for a successful and rewarding career in technology. A strong understanding of these concepts opens doors to a wide range of exciting opportunities and allows you to contribute significantly to innovative projects. To maximize your job prospects, invest time in crafting an ATS-friendly resume that effectively highlights your skills and experience. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. We provide examples of resumes tailored to Computer Systems roles to guide you through the process. Take the next step towards your dream career – build a resume that showcases your expertise!
