The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Operational Deployment Planning interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Operational Deployment Planning Interview

Q 1. Describe your experience with different deployment methodologies (e.g., Agile, Waterfall, DevOps).

My experience spans various deployment methodologies, each suited to different project needs. Waterfall, a traditional approach, is characterized by its sequential phases: requirements, design, implementation, testing, deployment, and maintenance. It’s best for projects with stable requirements and minimal expected changes. I’ve used it for large-scale enterprise system upgrades where predictability was paramount. In contrast, Agile methodologies, like Scrum and Kanban, emphasize iterative development and flexibility. I’ve successfully implemented Agile deployments in dynamic environments, prioritizing rapid feedback loops and continuous improvement. This approach proved invaluable for projects requiring adaptability to changing client needs or evolving market conditions. Finally, DevOps represents a cultural shift emphasizing collaboration between development and operations teams. Using DevOps principles, I’ve streamlined the deployment process significantly, achieving shorter release cycles and improved reliability through automation and continuous integration/continuous delivery (CI/CD) pipelines. For instance, in a recent project, transitioning from a purely Waterfall approach to a DevOps approach reduced our deployment time from weeks to days, minimizing downtime and allowing for faster response to market changes.

Q 2. Explain the process you use to define deployment success criteria.

Defining deployment success criteria is crucial for a smooth process. I use a multi-faceted approach focusing on three key areas: Functionality, Performance, and Stability. For Functionality, we ensure all features work as designed, validating against acceptance criteria defined earlier. We employ thorough testing, including unit, integration, and user acceptance testing (UAT). Performance is evaluated by monitoring key metrics like response times, throughput, and resource utilization, ensuring they meet pre-defined thresholds. This often involves load testing to simulate real-world scenarios. Lastly, Stability is assessed by monitoring error rates, uptime, and the overall system’s robustness. For example, for an e-commerce platform deployment, success would mean 99.9% uptime, average response times under 2 seconds, successful order processing, and no critical errors during peak traffic hours. The criteria are documented and shared with all stakeholders upfront, creating a shared understanding of success.
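To make criteria like these checkable rather than aspirational, they can be written down as explicit thresholds. The following is a minimal sketch using the e-commerce figures from the example above; the `DeploymentMetrics` fields and the `evaluate_success` function are hypothetical names chosen for illustration, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class DeploymentMetrics:
    # Hypothetical metric names; in practice these would come from monitoring.
    uptime_percent: float        # measured availability over the review window
    avg_response_seconds: float  # mean response time
    orders_failed: int           # orders that did not complete
    critical_errors: int         # critical errors seen during peak traffic


def evaluate_success(m: DeploymentMetrics) -> list[str]:
    """Return the list of failed criteria; an empty list means success."""
    failures = []
    if m.uptime_percent < 99.9:
        failures.append("uptime below 99.9%")
    if m.avg_response_seconds >= 2.0:
        failures.append("average response time not under 2 seconds")
    if m.orders_failed > 0:
        failures.append("order processing failures")
    if m.critical_errors > 0:
        failures.append("critical errors during peak traffic")
    return failures
```

Returning the list of failed criteria, rather than a single pass/fail flag, gives stakeholders a concrete reason when a deployment is judged unsuccessful.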

Q 3. How do you handle unexpected issues during a deployment?

Unexpected issues during deployment are inevitable. My approach involves a structured, prioritized response. First, we immediately initiate an incident response plan. This involves identifying the issue, determining its impact, and prioritizing mitigation. We utilize monitoring tools to gather real-time data to understand the problem’s scope. Next, we implement a rapid rollback strategy, reverting to the previous stable version if the problem is severe and impacts critical functionalities. Simultaneously, we begin debugging the root cause. This often includes analyzing logs, reviewing code changes, and collaborating with developers to understand the error. Communication is vital during this phase. Regular updates are provided to stakeholders, keeping them informed about the progress and expected resolution time. Post-incident reviews are mandatory; we thoroughly analyze the incident, identifying areas for improvement to prevent similar issues in the future and updating our contingency plans. This iterative approach improves our resilience to future disruptions.

Q 4. What tools and technologies are you familiar with for deployment automation?

My expertise encompasses a wide range of deployment automation tools and technologies. I have extensive experience with configuration management tools like Ansible and Puppet, enabling automated provisioning and configuration of servers. For CI/CD pipelines, I’m proficient with Jenkins, GitLab CI, and Azure DevOps, orchestrating automated builds, testing, and deployments. Containerization technologies like Docker and Kubernetes are key to my workflow, enabling consistent and scalable deployments across various environments. Cloud platforms like AWS and Azure are integral to my deployments, leveraging their services for infrastructure management, deployment automation, and scalability. For example, I recently used Ansible to automate the deployment of a microservices application across multiple AWS EC2 instances, significantly reducing deployment time and eliminating manual configuration errors.

Q 5. Describe your experience with rollback procedures and contingency planning.

Rollback procedures and contingency planning are critical parts of my deployment strategy. We establish clear rollback procedures, ensuring a quick and reliable reversion to a previous stable state in case of issues. This often involves using version control systems like Git to track changes and maintain backups of deployment artifacts. Our contingency plans address potential failure points, including network outages, database errors, and hardware failures. For example, we might have a failover mechanism for critical database servers or utilize redundant infrastructure components. Regular drills and simulations are part of the process, ensuring the team is well-versed in executing rollback and contingency plans and identifying any gaps or weaknesses in these procedures. Documentation is meticulously maintained, detailing the steps for rollback, contingency plans, and contact details for different scenarios. We always prioritize a clear communication plan to facilitate efficient action during emergencies.

Q 6. How do you ensure data integrity during a deployment?

Maintaining data integrity during deployments is paramount. We employ a variety of strategies, starting with robust database backups performed before any deployment commences. This provides a safety net in case of data corruption or accidental deletion. We use techniques like database migrations to manage schema changes in a controlled manner, ensuring data compatibility across different versions. Data validation and consistency checks are incorporated into the deployment process, verifying data accuracy and integrity after the update. For sensitive data, encryption and access controls are enforced throughout the deployment pipeline. Furthermore, rigorous testing, including data integrity tests, is conducted to ensure that data remains accurate and consistent. In cases involving large-scale data updates, we often employ techniques like phased rollouts to minimize risk and allow for quicker identification of issues, ensuring that data corruption is kept to a minimum.
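One simple form of post-update consistency check is to fingerprint a table before and after the deployment and compare the results. The sketch below assumes the rows of a table that the deployment is not supposed to change have already been fetched as tuples; `table_fingerprint` is a hypothetical helper, and a real check would likely run inside the database itself.

```python
import hashlib
from typing import Iterable


def table_fingerprint(rows: Iterable[tuple]) -> tuple[int, str]:
    """Return (row count, SHA-256 digest) for a table's rows, ignoring row order."""
    # Sort the encoded rows so the digest does not depend on fetch order.
    encoded = sorted(repr(row).encode("utf-8") for row in rows)
    digest = hashlib.sha256()
    for item in encoded:
        digest.update(item)
        digest.update(b"\n")  # separator between rows
    return len(encoded), digest.hexdigest()
```

If the fingerprint taken after the deployment differs from the one taken before, the table was modified and the change should be investigated before the rollout continues.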

Q 7. How do you manage risks associated with operational deployment?

Risk management in operational deployment is a continuous process. We start by identifying potential risks throughout the deployment lifecycle, from planning to post-deployment monitoring. This involves brainstorming sessions with the team, reviewing past deployment incidents, and considering potential external factors. Risks are then analyzed and prioritized based on their likelihood and impact. Mitigation strategies are then developed for each high-priority risk, possibly involving technical solutions, process improvements, or contingency planning. Throughout the deployment, risks are monitored and assessed regularly, allowing for proactive adjustments. Post-deployment reviews are crucial for analyzing the effectiveness of risk mitigation strategies and identifying areas for improvement in our overall risk management approach. This iterative approach leads to more robust and secure deployments.
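Prioritizing by likelihood and impact is often done with a simple score. The sketch below assumes a 1-to-5 scale for each factor and a cut-off of 12 for "high priority"; both the scale and the threshold are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    impact: int      # 1 (negligible) to 5 (severe), assumed scale

    @property
    def score(self) -> int:
        # Classic risk-matrix score: likelihood multiplied by impact.
        return self.likelihood * self.impact


def prioritize(risks: list[Risk], threshold: int = 12) -> list[Risk]:
    """Return risks at or above the threshold, highest score first."""
    high = [r for r in risks if r.score >= threshold]
    return sorted(high, key=lambda r: r.score, reverse=True)
```

Risks returned by `prioritize` are the ones that would get an explicit mitigation strategy; the rest are tracked but not actively mitigated.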

Q 8. Explain your experience with capacity planning for deployments.

Capacity planning for deployments is crucial to ensure the new system or updated application performs optimally after deployment. It involves accurately predicting the resource requirements – such as CPU, memory, network bandwidth, and storage – to handle the expected load. This requires a thorough understanding of the application’s behavior, anticipated user growth, and potential peak usage.

My approach involves a multi-step process. First, I analyze historical data and conduct performance testing to establish baseline metrics. Then, I create various capacity models, simulating different scenarios (e.g., a sudden surge in users or a specific transaction volume). These models help to determine the optimal hardware and software configurations needed. Finally, I create a buffer, adding extra capacity to accommodate unexpected spikes or unforeseen issues. For example, if a model predicts needing 10 servers, I might plan for 12 to account for potential problems or unexpected growth. This proactive approach minimizes the risk of performance bottlenecks and ensures a smooth user experience post-deployment.
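The buffer calculation can be expressed in a few lines. This sketch assumes the capacity model yields a peak request rate and a measured per-server throughput; the function name, parameters, and the 20% default headroom are illustrative, chosen so that a baseline of 10 servers becomes 12 as in the example above.

```python
import math


def servers_needed(peak_rps: float, rps_per_server: float, headroom_percent: int = 20) -> int:
    """Estimate server count: baseline for peak load plus a safety buffer."""
    # Baseline: enough servers to carry the modeled peak load.
    base = math.ceil(peak_rps / rps_per_server)
    # Buffer: extra servers for unexpected spikes, rounded up.
    buffer = math.ceil(base * headroom_percent / 100)
    return base + buffer
```

For a modeled peak of 4,800 requests per second and 500 requests per second per server, the baseline is 10 servers and the plan with headroom is 12.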

In one project, we used a combination of load testing tools (like JMeter) and capacity planning software to simulate various user loads on a new e-commerce platform. This analysis identified a critical need to scale database resources more aggressively than initially planned, preventing potential performance degradation during the holiday shopping season.

Q 9. Describe your experience with monitoring and logging during and after deployments.

Monitoring and logging are critical for ensuring deployment success and identifying post-deployment issues. Effective monitoring provides real-time visibility into the system’s health, performance, and resource utilization. Logging, on the other hand, records detailed information about events that occur during and after deployment, creating an audit trail for troubleshooting. These two aspects are indispensable in maintaining and enhancing system stability and availability.

My experience involves implementing comprehensive monitoring solutions using tools like Prometheus, Grafana, and the ELK stack (Elasticsearch, Logstash, Kibana). These tools allow for centralized monitoring of key metrics, such as CPU usage, memory consumption, response times, and error rates. I typically set up automated alerts triggered by threshold breaches, enabling proactive problem resolution. For example, an alert might be configured to notify the operations team if CPU usage exceeds 80% for more than 5 minutes. Thorough logging helps to pinpoint the root cause of issues, providing crucial data for post-mortem analysis and preventative measures.
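The logic behind a sustained-threshold alert such as "CPU above 80% for more than 5 minutes" can be sketched as follows. In a Prometheus setup this kind of condition is typically written as an alerting rule with a `for` duration rather than hand-coded; the function below is only an illustration of the idea, and its name and input format (time-ordered pairs of timestamp in seconds and CPU percent) are assumptions.

```python
def should_alert(samples, threshold=80.0, min_duration_s=300):
    """Return True if CPU stays above the threshold for longer than min_duration_s.

    samples: time-ordered iterable of (timestamp_seconds, cpu_percent).
    """
    breach_start = None
    for ts, cpu in samples:
        if cpu > threshold:
            if breach_start is None:
                breach_start = ts  # first sample of a new breach
            if ts - breach_start > min_duration_s:
                return True
        else:
            breach_start = None  # breach ended; reset the timer
    return False
```

Requiring the breach to persist avoids paging the operations team for short, harmless spikes.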

In a recent project involving a microservices architecture, we leveraged distributed tracing to track requests across multiple services, enabling us to quickly identify bottlenecks and failures. The detailed logs allowed us to pinpoint a specific service failing under load and implement a fix promptly.

Q 10. How do you ensure security during the deployment process?

Security is paramount throughout the entire deployment lifecycle. My approach focuses on securing every step, from code deployment to infrastructure configuration. This requires a multi-layered strategy addressing vulnerabilities at all levels.

First, we utilize secure coding practices and conduct rigorous code reviews to minimize vulnerabilities in the application itself. Then, we employ infrastructure-as-code (IaC) tools such as Terraform or Ansible to automate and standardize the provisioning of infrastructure, ensuring consistent and secure configurations. Access control is critical, using role-based access control (RBAC) to restrict access to sensitive resources only to authorized personnel. Vulnerability scanning tools are used regularly to identify and address potential security weaknesses in the application and infrastructure. We also implement security monitoring and intrusion detection systems to proactively identify and respond to threats. Finally, all deployment processes are carefully documented and auditable.

For instance, we might use a private container registry (for example, a private repository on Docker Hub), along with a secrets management tool like HashiCorp Vault, to securely store and manage sensitive information like API keys and database credentials.

Q 11. How do you handle communication and stakeholder management during a deployment?

Effective communication and stakeholder management are crucial for successful deployments. This involves keeping all stakeholders – including developers, operations teams, and business users – informed throughout the process. Transparency and proactive communication significantly reduce anxiety and uncertainty.

My approach involves creating a clear communication plan, outlining key milestones, timelines, and communication channels. We typically use a combination of tools such as email, instant messaging, and project management software to disseminate information effectively. Regular status updates, including both successes and challenges encountered, are provided to ensure everyone remains informed. Pre-deployment meetings and post-deployment reviews are essential for aligning expectations and gathering feedback.

In a large-scale deployment, I would utilize a communication matrix to define the target audience for each type of message. For instance, technical details would be shared primarily with the development and operations teams, while a high-level overview would be provided to business stakeholders.

Q 12. Explain your approach to testing during the deployment process.

Testing is a critical component of the deployment process, ensuring the new or updated system functions correctly and meets its requirements. It’s not a single event, but rather an integrated process throughout the deployment lifecycle.

My approach involves a multi-stage testing strategy. This includes unit tests, integration tests, system tests, and user acceptance testing (UAT). Automated testing is prioritized wherever possible to improve efficiency and reduce errors. Testing is often integrated with the CI/CD pipeline, ensuring that tests are run automatically as part of the deployment process. The results of the testing process are carefully reviewed and analyzed to identify any defects or issues before they reach production.

For instance, we might use tools like Selenium for UI testing, and Jest for unit testing, integrating them with a CI/CD pipeline to automatically run the test suites before each deployment.

Q 13. How do you measure the success of a deployment?

Measuring the success of a deployment goes beyond simply confirming that the system is operational. It involves evaluating the impact of the deployment on key performance indicators (KPIs).

We define success metrics beforehand, aligning them with the business goals of the deployment. These metrics might include things like uptime, application performance (response times, error rates), user satisfaction (through surveys or feedback), and key business metrics (e.g., conversion rates for an e-commerce site). Post-deployment monitoring and logging data are essential for tracking these metrics. A detailed post-mortem review is conducted after the deployment to identify areas of improvement and lessons learned for future deployments. This analysis helps refine our processes and improve the efficiency and effectiveness of future deployments.

For example, in a recent website upgrade, we measured success based on improvements in page load time, bounce rate, and conversion rates. The post-mortem analysis revealed a small performance bottleneck that was subsequently addressed, further improving the overall user experience.

Q 14. Describe your experience with different deployment environments (e.g., on-premises, cloud).

I have extensive experience with various deployment environments, including on-premises, cloud (AWS, Azure, GCP), and hybrid deployments. Each environment presents its unique challenges and considerations.

On-premises deployments require careful planning and management of the physical infrastructure, including hardware, networking, and security. Cloud deployments offer greater scalability and flexibility but necessitate a strong understanding of cloud-specific services and best practices. Hybrid environments leverage the advantages of both, but require careful orchestration to ensure seamless integration. My approach involves tailoring the deployment strategy to the specific environment, using appropriate tools and techniques to ensure a smooth and secure deployment process.

For example, when deploying to AWS, I would utilize tools like AWS CloudFormation or Terraform for infrastructure provisioning, and AWS Elastic Beanstalk or Kubernetes for application deployment. For an on-premises deployment, I might use configuration management tools like Ansible or Puppet for automated server provisioning and configuration.

Q 15. How do you handle conflicts between development and operations teams?

Conflict between development and operations teams, often termed the “DevOps gap,” is a common challenge. It usually arises from differing priorities, communication breakdowns, and a lack of shared understanding of the deployment process. To address this, I champion a collaborative approach focusing on clear communication and shared responsibility.

- Establish a Shared Goal: I begin by ensuring both teams understand and agree on the overall objectives of a deployment. This means clear definition of success metrics and realistic timelines. For example, a successful deployment might be defined not just by successful code deployment, but also by minimal service disruption and achievement of performance benchmarks.

- Implement Collaborative Tools: Utilizing shared project management tools (Jira, Asana, etc.) and communication platforms (Slack, Microsoft Teams) allows for transparent tracking of progress and efficient issue resolution. Real-time updates and shared documentation ensure everyone is on the same page.

- Foster Cross-Functional Training: Encouraging cross-training between developers and operations engineers builds mutual understanding and empathy. Developers gain operational insight, and operations engineers gain development context. This fosters better problem-solving and reduces misunderstandings.

- Establish a Feedback Loop: Regular retrospectives after deployments allow both teams to identify pain points and areas for improvement. This continuous feedback loop iteratively refines the process and strengthens collaboration. For example, a retrospective might reveal a need for clearer documentation around database schema changes.

In a recent project, a conflict arose between developers pushing frequent, small updates and operations struggling to maintain system stability. By implementing a robust CI/CD pipeline (discussed later) and establishing a clear change management process, we successfully resolved the conflict, achieving both frequent deployments and stable service operations.

Q 16. What is your experience with Infrastructure as Code (IaC)?

Infrastructure as Code (IaC) is a crucial aspect of modern deployment planning. It involves managing and provisioning infrastructure through code, rather than manual processes. This offers significant advantages in terms of consistency, reproducibility, and automation.

- Experience with Terraform and Ansible: I have extensive experience utilizing Terraform for managing cloud infrastructure (AWS, Azure, GCP) and Ansible for automating server configuration and application deployments. I’m proficient in writing, testing, and version-controlling IaC code.

- Benefits of IaC: IaC enhances consistency by ensuring that infrastructure is provisioned identically across different environments (development, staging, production). It promotes reproducibility, allowing for easy recreation of infrastructure in case of failures. The version control aspect enables rollbacks and ensures auditability of infrastructure changes.

- Example: In a recent project, we used Terraform to define and manage our entire AWS environment, including EC2 instances, VPCs, and databases. This allowed us to automate the creation and destruction of environments, speeding up our development cycle and reducing manual effort.

IaC is not just about automation; it’s about treating infrastructure as code, subjecting it to the same rigorous testing and version control as application code. This approach improves quality, reliability, and efficiency.

Q 17. Describe your experience with CI/CD pipelines.

CI/CD pipelines are the backbone of modern, automated deployment processes. CI (Continuous Integration) focuses on integrating code changes frequently, while CD (Continuous Delivery/Deployment) automates the process of releasing those changes to different environments.

- Experience with Jenkins, GitLab CI, and Azure DevOps: I’ve worked extensively with various CI/CD tools, customizing pipelines to fit specific project needs. This includes building, testing, and deploying applications to various environments.

- Pipeline Stages: A typical pipeline consists of stages such as code build, unit testing, integration testing, deployment to staging, acceptance testing, and finally, production deployment. Each stage is automated and triggered by the successful completion of the previous stage.

- Automated Testing: An important aspect of my CI/CD approach is incorporating automated testing at each stage. This ensures that the code is thoroughly tested before it reaches production, minimizing the risk of bugs and failures.

- Monitoring and Rollback Strategies: Post-deployment monitoring is crucial. The pipelines are configured to automatically trigger alerts and rollbacks in case of issues or failures.

For example, in a recent project using Jenkins, we implemented a pipeline that automatically built, tested, and deployed our application to staging and production environments every time code was pushed to the main branch. This significantly reduced deployment time and risk.
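The gating behavior described above, where each stage runs only if the previous one succeeded, can be sketched independently of any CI/CD tool. This is an illustration of the control flow only, not how Jenkins or GitLab CI are configured; `run_pipeline` and the stage callables are hypothetical.

```python
from typing import Callable, Optional

# A stage is a name plus a callable that returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> tuple[list[str], Optional[str]]:
    """Run stages in order and stop at the first failure.

    Returns (names of completed stages, name of the failed stage or None).
    """
    completed: list[str] = []
    for name, step in stages:
        if not step():
            return completed, name  # later stages never run
        completed.append(name)
    return completed, None
```

If integration testing fails, deployment to staging and production is never attempted, which is what keeps a broken build from reaching users.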

Q 18. How do you manage dependencies between different components during a deployment?

Managing dependencies between components is critical for successful deployments. Without proper management, deploying a new component can break existing ones, leading to service disruptions. This requires careful planning and execution.

- Dependency Mapping: I begin by creating a clear dependency map illustrating how different components interact. This map highlights which components rely on other components and in what order they must be deployed.

- Version Control and Orchestration: Utilizing version control for all components ensures that compatible versions are used together. Tools like Kubernetes or Docker Swarm orchestrate the deployment of multiple components, ensuring they are deployed in the correct order and with the correct dependencies.

- Containerization: Containerization (Docker) isolates components and their dependencies, reducing conflicts and ensuring consistent execution environments across different deployment stages.

- Configuration Management: Configuration management tools, like Ansible or Puppet, manage the configuration of each component, ensuring that all the necessary dependencies and settings are correctly configured.

For instance, in an e-commerce application, the payment gateway might depend on the product catalog and order processing systems. Using Docker and Kubernetes, we can containerize each component and deploy them in a coordinated manner, ensuring that the payment gateway only starts after the dependencies are operational.
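A dependency map like the one in this example can be turned into a deployment order with a topological sort. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the component names mirror the e-commerce example and `deployment_order` is a hypothetical helper.

```python
from graphlib import TopologicalSorter


def deployment_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an order in which every component comes after its dependencies.

    dependencies maps each component to the set of components it relies on.
    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(dependencies).static_order())


# Dependency map from the example: the payment gateway relies on two services.
ecommerce = {
    "payment-gateway": {"product-catalog", "order-processing"},
    "product-catalog": set(),
    "order-processing": set(),
}
```

Calling `deployment_order(ecommerce)` places `payment-gateway` after both of its dependencies, and a circular dependency is reported as an error instead of silently producing a bad order.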

Q 19. How do you ensure compliance during a deployment?

Compliance is paramount during deployments. Failing to meet regulatory requirements can have serious consequences. My approach involves integrating compliance checks throughout the deployment process.

- Security Scans: Automated security scans are performed at various stages of the pipeline to identify vulnerabilities. This involves static code analysis, dynamic application security testing (DAST), and software composition analysis (SCA).

- Configuration Audits: Regular audits ensure that infrastructure configurations and application settings comply with organizational policies and industry standards.

- Access Control and Authorization: Strict access control and authorization mechanisms ensure that only authorized personnel can initiate deployments and access sensitive data.

- Compliance Documentation: Detailed documentation tracks all deployment activities and ensures traceability to demonstrate compliance with regulations (e.g., HIPAA, GDPR, PCI DSS).

For example, before deploying a healthcare application, we’d perform rigorous security scans and configuration checks to ensure compliance with HIPAA regulations. Any vulnerabilities or non-compliant configurations would be addressed before the deployment is allowed to proceed.

Q 20. Explain your experience with disaster recovery and business continuity planning for deployments.

Disaster recovery and business continuity planning are essential for ensuring high availability and minimizing downtime during deployments. My experience includes developing and implementing comprehensive plans to address various failure scenarios.

- Disaster Recovery Plan: I develop detailed disaster recovery plans that outline steps to recover from various outages, including hardware failures, natural disasters, and cyberattacks. This plan encompasses data backups, failover mechanisms, and recovery procedures.

- High Availability Architecture: Deployments are designed with high availability in mind. This often involves using redundant systems, load balancers, and geographically distributed infrastructure.

- Testing and Drills: Regular disaster recovery drills simulate failure scenarios and test the effectiveness of recovery plans. This ensures that all procedures are well-understood and that the systems can be recovered quickly and efficiently.

- Business Continuity Plan: A business continuity plan outlines strategies for maintaining business operations during disruptions. This includes identifying critical business functions, developing contingency plans, and establishing communication protocols.

In a previous role, we implemented a disaster recovery plan that involved replicating our database to a separate geographic location. During a major outage in our primary data center, we were able to swiftly failover to the backup location, minimizing service disruption.

Q 21. Describe your experience with automating deployment processes.

Automating deployment processes is fundamental to efficient and reliable deployments. My experience encompasses various automation techniques and tools.

- CI/CD Pipelines (as discussed previously): I use CI/CD pipelines to automate the entire deployment process, from code build to production deployment.

- Infrastructure as Code (IaC): IaC automates the provisioning and management of infrastructure, ensuring consistent environments across different stages.

- Configuration Management Tools: Ansible, Puppet, Chef, etc., automate the configuration of servers and applications.

- Scripting (Bash, Python): I utilize scripting to automate repetitive tasks and integrate different tools within the deployment process.

For example, in a recent project, we automated the entire deployment process using a combination of Jenkins, Ansible, and Terraform. This reduced our deployment time from days to minutes, significantly improving our agility and efficiency.

Q 22. How do you prioritize tasks during a complex deployment?

Prioritizing tasks in a complex deployment hinges on a clear understanding of dependencies and risk. I typically employ a combination of methods. First, I use a prioritization framework, often the MoSCoW method (Must have, Should have, Could have, Won’t have), to categorize tasks based on their business value and criticality. ‘Must-have’ tasks are non-negotiable and form the core of the deployment. ‘Should-have’ tasks add significant value but are less critical. ‘Could-have’ tasks are desirable but can be postponed if time or resources are constrained. ‘Won’t-have’ tasks are excluded from this deployment cycle.

Second, I consider dependencies. Tasks that are prerequisites for others are prioritized higher. For example, database schema changes might need to be completed before application code deployment. I visualize these dependencies using a dependency graph or Gantt chart to ensure a logical sequence. This prevents bottlenecks and allows for parallel execution where possible. Finally, risk assessment plays a crucial role. Tasks with higher potential for failure or significant impact are given priority, allowing for more time to address potential issues.

For instance, during a recent e-commerce website deployment, we prioritized database updates (Must have) and core shopping cart functionality (Must have) before deploying new marketing features (Should have). This ensured the core business functions were operational before introducing potentially less critical changes.
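The ordering described here can be sketched as a sort on MoSCoW category first and risk second. The task dictionaries, the `risk` field, and its numeric scale are assumptions made for illustration; dependency ordering would be handled separately.

```python
# Lower rank means higher priority; "wont" is intentionally absent.
MOSCOW_RANK = {"must": 0, "should": 1, "could": 2}


def plan(tasks: list[dict]) -> list[dict]:
    """Drop 'won't have' tasks, then order by category and by risk (riskiest first)."""
    in_scope = [t for t in tasks if t["priority"] in MOSCOW_RANK]
    return sorted(in_scope, key=lambda t: (MOSCOW_RANK[t["priority"]], -t["risk"]))
```

Within a category, scheduling the riskiest tasks first leaves the most time to react if something goes wrong.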

Q 23. What metrics do you use to track deployment performance?

Tracking deployment performance involves monitoring several key metrics across different stages. I focus on speed, success rate, and impact. Speed metrics include deployment frequency (how often deployments occur), lead time (time from code commit to deployment), and deployment duration (time taken for a single deployment). A high deployment frequency indicates an efficient and agile process. Short lead times and deployment durations demonstrate efficiency and reduce risk.

Success rate is measured by the percentage of successful deployments without critical failures. This is crucial for evaluating the reliability and stability of the deployment pipeline. Impact metrics assess the effects of the deployment on the system. These include key performance indicators (KPIs) such as application response time, error rates, and resource utilization (CPU, memory). We also monitor user experience metrics like page load times and error rates reported from our monitoring tools.

For example, we might track the average deployment time, the percentage of deployments completed without rollback, and changes to application response time post-deployment to evaluate the overall performance of our deployment process. This data helps us identify areas of improvement and optimize our workflow for future releases.
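These metrics are straightforward to compute from deployment records. The sketch below assumes each record carries a commit time, start and finish times, and a rollback flag; the `Deployment` fields and `summarize` are hypothetical, and lead time is measured here from commit to the end of the deployment.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class Deployment:
    committed_at: datetime  # when the change was committed
    started_at: datetime    # when the deployment began
    finished_at: datetime   # when the deployment completed
    rolled_back: bool       # True if the deployment had to be reverted


def summarize(deploys: list[Deployment], window_days: int) -> dict:
    """Compute frequency, lead time, duration, and success rate for a window."""
    lead_s = mean((d.finished_at - d.committed_at).total_seconds() for d in deploys)
    duration_s = mean((d.finished_at - d.started_at).total_seconds() for d in deploys)
    succeeded = sum(not d.rolled_back for d in deploys)
    return {
        "deployments_per_week": len(deploys) * 7 / window_days,
        "avg_lead_time_hours": lead_s / 3600,
        "avg_duration_minutes": duration_s / 60,
        "success_rate_percent": 100 * succeeded / len(deploys),
    }
```

Tracking these numbers per release makes regressions in the deployment process itself visible, not just regressions in the application.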

Q 24. How do you handle change requests during a deployment?

Handling change requests during a deployment requires a rigorous process to balance agility and stability. A formal change management process is essential, typically involving a Change Advisory Board (CAB) to review and approve any changes requested during the deployment window. The impact of each change request is carefully evaluated, considering its potential effects on other components and the overall deployment schedule. Urgent, low-risk changes can sometimes be accommodated through a fast-track process. However, high-risk or significant changes are usually deferred to the next deployment cycle unless critically required.

Effective communication is critical. The development and operations teams need to collaborate effectively to prioritize and manage change requests. Transparent communication with stakeholders keeps everyone informed about any changes impacting timelines or functionality. A well-defined change control process will help mitigate any disruptions or risks during deployment.

For instance, if a critical bug emerges during deployment, we’ll assess its severity. If it requires an immediate fix, a CAB review might be expedited to approve a hotfix deployment; otherwise, it would be scheduled for a later, planned deployment.
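The triage rules described above can be written down as a small decision function. This is only a sketch of one possible policy: the `Decision` names, the `triage` function, and the choice to defer non-urgent low-risk changes are assumptions made for illustration, and a real CAB process would weigh more factors.

```python
from enum import Enum


class Decision(Enum):
    EXPEDITED_CAB = "expedited CAB review for a hotfix"
    FAST_TRACK = "fast-track approval"
    DEFER = "defer to the next deployment cycle"


def triage(risk: str, urgent: bool, critical: bool) -> Decision:
    """Classify a change request raised during a deployment window.

    risk is "low" or "high"; urgent and critical are judgments made by
    the team assessing the request.
    """
    if risk not in ("low", "high"):
        raise ValueError(f"unknown risk level: {risk!r}")
    # Critically required changes go to an expedited CAB review,
    # regardless of risk level.
    if critical:
        return Decision.EXPEDITED_CAB
    # Urgent, low-risk changes can use the fast-track process.
    if urgent and risk == "low":
        return Decision.FAST_TRACK
    # Everything else waits for the next planned deployment
    # (an assumption for non-urgent low-risk requests).
    return Decision.DEFER
```

Encoding the policy this way, even informally, gives the team a shared reference point when a request arrives mid-deployment.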

Q 25. Describe a time you had to troubleshoot a critical deployment issue.

During a large-scale e-commerce platform update, the new version failed to connect to the external payment gateway, which took the checkout process completely offline and caused significant revenue loss and customer dissatisfaction.

We approached the root cause systematically: we verified network connectivity, checked the payment gateway’s service status, reviewed the deployment and application logs, and compared configuration files between the staging and production environments. The comparison showed that a misconfiguration in the new deployment script was preventing the correct settings from being transferred to production. Once this was identified, we rolled back to the previous stable version to restore checkout quickly and limit further downtime. In parallel, we corrected the deployment script, then redeployed the fixed version in a controlled, staged rollout to limit further risk.

Afterwards, we conducted a thorough post-mortem to document the root cause, agree on corrective actions, and define preventative measures. These included improving the deployment scripts and introducing more robust testing procedures to reduce the chance of a similar issue recurring. The incident reinforced the need for rigorous testing, monitoring, and a robust rollback plan.
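Comparing configuration between environments, as in the incident above, is easy to automate as a pre-deployment check. The sketch below assumes each environment's settings have already been loaded into a flat dictionary; the `diff_config` function and the setting names in the example are hypothetical.

```python
MISSING = "<missing>"  # placeholder shown when a key is absent in one environment


def diff_config(staging, production, ignore=()):
    """Return {key: (staging_value, production_value)} for settings that differ.

    Keys listed in `ignore` are expected to differ between environments
    (for example, database hosts) and are skipped.
    """
    differences = {}
    for key in sorted(set(staging) | set(production)):
        if key in ignore:
            continue
        staging_value = staging.get(key, MISSING)
        production_value = production.get(key, MISSING)
        if staging_value != production_value:
            differences[key] = (staging_value, production_value)
    return differences


# Example with made-up settings: the gateway URL never reached production.
staging = {
    "PAYMENT_GATEWAY_URL": "https://gateway.example/v2",
    "REQUEST_TIMEOUT_SECONDS": "30",
    "DB_HOST": "staging-db",
}
production = {
    "REQUEST_TIMEOUT_SECONDS": "30",
    "DB_HOST": "prod-db",
}
for key, (s, p) in diff_config(staging, production, ignore={"DB_HOST"}).items():
    print(f"{key}: staging={s!r} production={p!r}")
```

Running a check like this as a pipeline gate would surface a missing or mismatched setting before traffic reaches the new version.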

Q 26. How do you manage the documentation for deployment procedures?

Maintaining comprehensive documentation is paramount for operational efficiency and successful deployments. We utilize a version-controlled wiki, coupled with automated documentation generation. This wiki serves as a central repository for all deployment-related documents. It includes detailed step-by-step instructions, diagrams illustrating the deployment architecture, scripts used for automating deployments, and troubleshooting guides. Version control allows us to track changes, revert to previous versions if necessary, and maintain a clear audit trail.

Automated documentation generation is a key aspect. Our CI/CD pipeline automatically generates deployment reports, including timestamps, success/failure status, and any encountered errors. These reports provide valuable insights and assist in analyzing deployment performance. We also incorporate documentation directly into our deployment scripts and infrastructure-as-code (IaC) definitions, ensuring consistency and accuracy. This ‘as-code’ approach creates a single source of truth that enhances maintainability and reduces errors.

Regular reviews of the documentation by the deployment team ensure that it remains accurate, up-to-date, and easy to understand. This collaborative process promotes clarity and reduces risks associated with outdated or incomplete instructions.
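As an illustration of the automated reports mentioned above, a pipeline step could assemble a small structured record at the end of each run. This is a sketch under assumptions: the `build_report` function and its field names are hypothetical and do not correspond to the output format of any specific CI/CD tool.

```python
import json
from datetime import datetime, timezone


def build_report(version, environment, started_at, finished_at, errors=()):
    """Build a deployment report with timestamps, status, and errors."""
    errors = list(errors)
    return {
        "version": version,
        "environment": environment,
        "started_at": started_at.isoformat(),
        "finished_at": finished_at.isoformat(),
        "duration_seconds": (finished_at - started_at).total_seconds(),
        # A run with any recorded error is reported as a failure.
        "status": "failure" if errors else "success",
        "errors": errors,
    }


report = build_report(
    version="1.4.2",  # made-up release identifier
    environment="production",
    started_at=datetime(2024, 5, 1, 9, 0, tzinfo=timezone.utc),
    finished_at=datetime(2024, 5, 1, 9, 12, tzinfo=timezone.utc),
)
print(json.dumps(report, indent=2))
```

Storing these records alongside the version-controlled documentation keeps the history of what was deployed, when, and with what outcome in one place.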

Q 27. What is your experience with different deployment strategies (e.g., blue/green, canary)?

I have extensive experience with various deployment strategies, each with its own advantages and disadvantages. Blue/green deployments minimize downtime by deploying a new version alongside the existing one. Once the new version is fully tested and validated, traffic is switched from the old (blue) to the new (green) environment. Because the old environment stays available, this allows near-zero downtime and a rapid rollback by switching traffic back. Canary deployments offer a gradual rollout, releasing the new version to a small subset of users or servers. This allows for early detection of issues in a controlled environment, reducing the impact of widespread failures. Other strategies I’ve used include rolling deployments (gradually updating servers one by one) and A/B testing (releasing different versions to different user groups to compare performance).

The choice of strategy depends on factors such as the application’s criticality, the risk tolerance, and the complexity of the update. For instance, for critical systems with high availability requirements, blue/green deployments are often preferred due to their minimal downtime and easy rollback capabilities. Canary deployments are advantageous when testing new features or significant changes that require careful monitoring of user impact. Rolling deployments are good for simple updates, while A/B testing helps determine the optimal configuration from multiple alternatives.
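To make the canary idea concrete, here is a minimal sketch of one common way to pick the subset of users who see the new version: hash a stable user identifier into a bucket from 0 to 99 and compare it with the rollout percentage. The `in_canary` function is hypothetical, and real systems often do this at the load balancer or service mesh rather than in application code.

```python
import hashlib


def in_canary(user_id: str, percent: int) -> bool:
    """Return True if this user should be routed to the canary version.

    The same user always lands in the same bucket, so raising `percent`
    only adds users to the canary group and never removes existing ones.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the first 4 bytes of the hash to a bucket in the range 0..99.
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


# Route a request: roughly 5% of users see the new version.
version = "canary" if in_canary("user-12345", percent=5) else "stable"
```

Deterministic bucketing keeps each user's experience consistent during the rollout, and setting the percentage back to 0 acts as an immediate rollback for canary traffic.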

Q 28. How do you stay up-to-date with the latest deployment technologies and best practices?

Staying current in the dynamic field of deployment technologies requires a multi-faceted approach. I actively participate in online communities, such as those on Stack Overflow and dedicated forums, engaging in discussions and learning from others’ experiences. Attending industry conferences and webinars provides valuable insights into the latest trends and best practices. Following key industry influencers and thought leaders on social media and through their publications keeps me abreast of the newest developments.

Moreover, I regularly review technical documentation and white papers from leading cloud providers (AWS, Azure, GCP) and DevOps tool vendors (e.g., Jenkins, GitLab CI, Kubernetes). I actively experiment with new tools and technologies in controlled environments, implementing proof-of-concept projects to gain hands-on experience. This allows me to assess the practical applicability of new approaches before incorporating them into production workflows. Finally, continuous learning is crucial, and I invest time in online courses and certifications to strengthen my skills and knowledge base.

Key Topics to Learn for Operational Deployment Planning Interview

- Deployment Strategies: Understanding various deployment methodologies (e.g., blue/green, canary, rolling) and their practical implications for different systems and environments. Consider the trade-offs of each approach.

- Risk Management & Mitigation: Developing strategies to identify, assess, and mitigate potential risks throughout the deployment lifecycle. This includes considering failure scenarios and recovery plans.

- Resource Allocation & Optimization: Efficiently planning and allocating resources (servers, network bandwidth, personnel) to ensure successful and timely deployments. Discuss techniques for optimizing resource utilization.

- Monitoring & Logging: Implementing robust monitoring and logging systems to track deployment progress, identify issues, and facilitate troubleshooting. Explain the importance of observability.

- Automation & CI/CD: Leveraging automation tools and implementing Continuous Integration/Continuous Delivery (CI/CD) pipelines to streamline the deployment process and reduce manual intervention.

- Rollback & Recovery Planning: Defining clear procedures for rolling back deployments in case of failures and ensuring rapid recovery to a stable state. Explore different rollback strategies.

- Capacity Planning & Scaling: Forecasting future needs and planning for scalability to accommodate growth in user traffic or data volume. Discuss vertical and horizontal scaling.

- Security Considerations: Integrating security best practices throughout the deployment process to protect systems and data from vulnerabilities and threats. Mention security scanning and penetration testing.

- Communication & Collaboration: Effectively communicating deployment plans and progress to stakeholders, fostering collaboration among team members, and managing expectations.

Next Steps

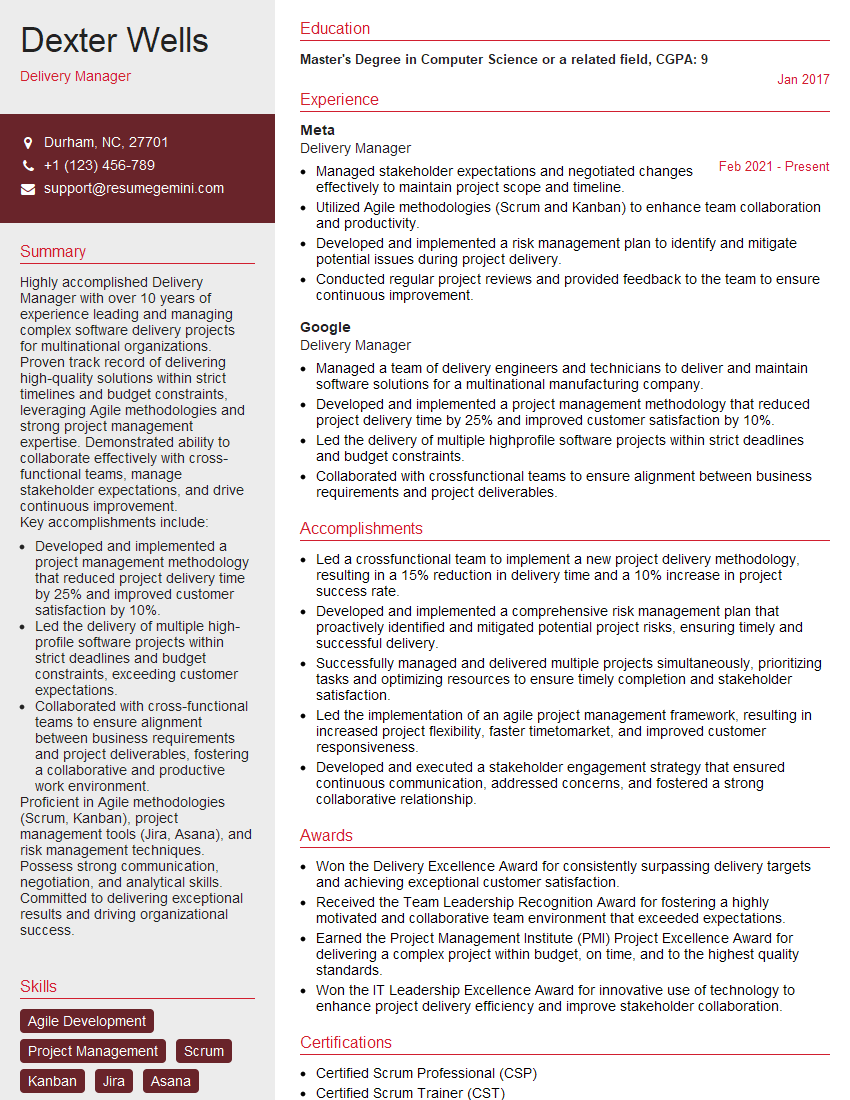

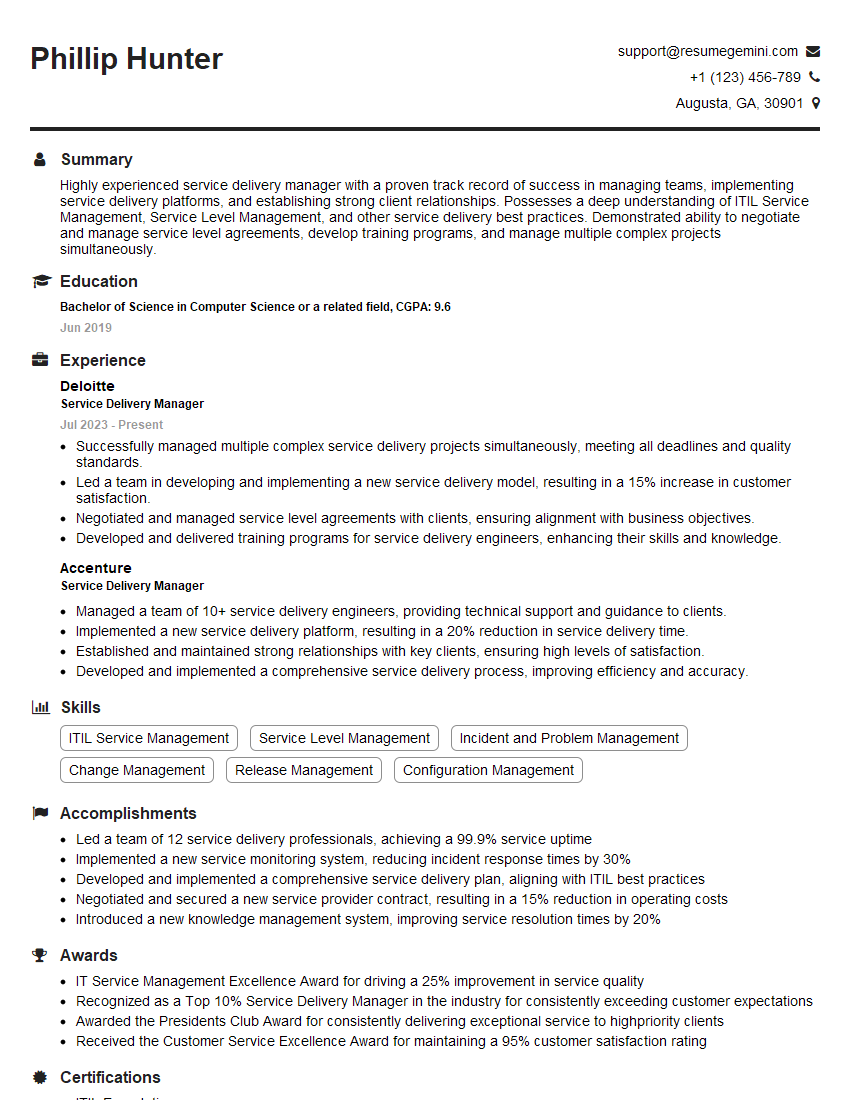

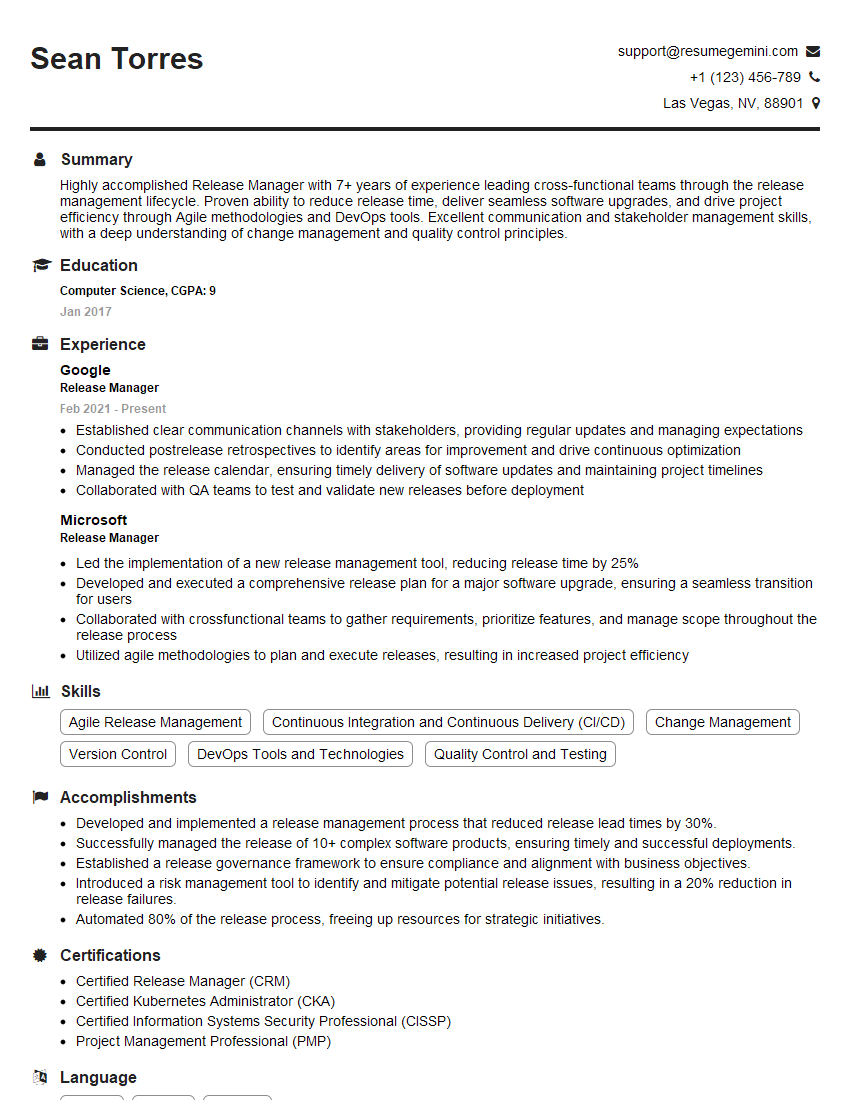

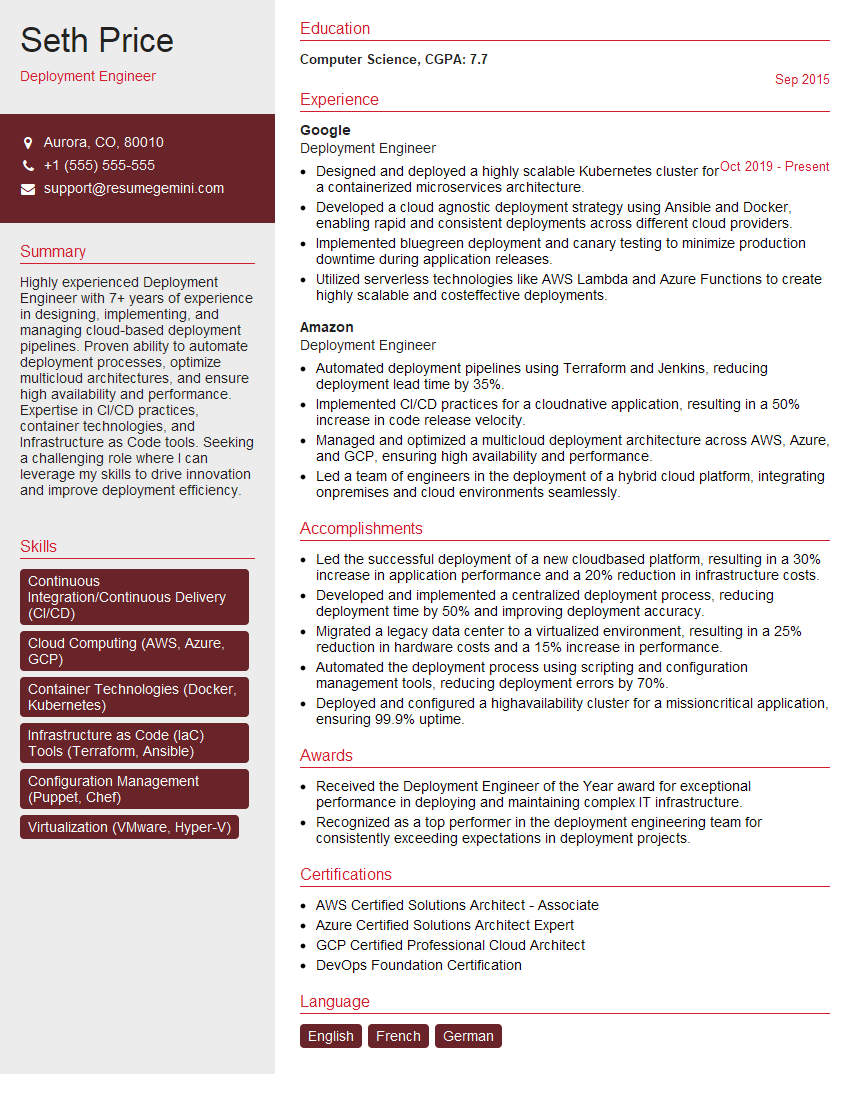

Mastering Operational Deployment Planning is crucial for career advancement in technology, opening doors to leadership roles and high-impact projects. An ATS-friendly resume is essential to highlight your skills and experience to potential employers. To create a compelling and effective resume that showcases your expertise in Operational Deployment Planning, leverage ResumeGemini. ResumeGemini offers a powerful platform to build professional resumes, and examples tailored to Operational Deployment Planning are available to help you get started. Take the next step towards your dream job!
