Unlock your full potential by mastering the most common Developing and Implementing Training Programs interview questions. This blog offers a deep dive into the critical topics, ensuring you’re not only prepared to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Developing and Implementing Training Programs Interview

Q 1. Describe your experience developing training programs using ADDIE or a similar model.

The ADDIE model (Analysis, Design, Development, Implementation, Evaluation) is the cornerstone of my training program development approach. It provides a structured framework ensuring a systematic and effective process.

- Analysis: This stage involves identifying the training need, analyzing the target audience’s knowledge and skills, and defining learning objectives. For example, if a company is experiencing high employee turnover, I’d analyze the root causes – perhaps a lack of training in conflict resolution or communication – to inform the training content.

- Design: Here, I develop the training plan, select appropriate instructional methods (lectures, group activities, simulations), and create storyboards or outlines for the training materials. This might involve choosing between an in-person workshop and an online course, depending on the audience and subject matter.

- Development: This is where the actual training materials are created – presentations, handbooks, eLearning modules, etc. For instance, I might develop interactive eLearning modules incorporating quizzes and simulations to reinforce learning.

- Implementation: This stage focuses on delivering the training, which includes scheduling sessions, preparing the training environment, and providing support to participants. I always ensure adequate resources and a conducive learning environment are in place.

- Evaluation: This final stage involves assessing the effectiveness of the training program. This may involve pre- and post-training assessments, surveys, or observation of on-the-job performance. The evaluation data informs future improvements and helps demonstrate ROI.

I’ve successfully utilized ADDIE in numerous projects, including developing a comprehensive sales training program that resulted in a 15% increase in sales conversions within six months. This involved a thorough needs analysis, identifying key sales skills gaps, and designing interactive training modules complemented by in-person coaching sessions.

Q 2. How do you assess the effectiveness of a training program?

Assessing training effectiveness goes beyond simply asking participants if they enjoyed the program. A robust evaluation considers Kirkpatrick’s four levels of evaluation:

- Reaction: Measuring participant satisfaction and engagement through surveys and feedback forms. This gives a general sense of the training’s acceptance.

- Learning: Assessing whether participants acquired the knowledge and skills taught. This could be done using pre- and post-tests, quizzes, or knowledge checks within the training.

- Behavior: Observing whether participants apply what they learned on the job. This may involve performance reviews, 360-degree feedback, or direct observation by supervisors.

- Results: Measuring the impact of training on organizational goals. This might involve tracking improvements in productivity, sales, customer satisfaction, or other key metrics. For example, if the goal was to reduce errors, we would track the error rate before and after training.

By using a multi-faceted approach incorporating all four levels, we gain a comprehensive understanding of the training’s true impact.
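As a rough illustration of the Learning and Results levels, the sketch below compares pre- and post-test scores and computes the relative change in an error rate. The function names and all numbers are hypothetical and only meant to show the arithmetic, not a standard evaluation tool.

```python
from statistics import mean


def average_gain(pre_scores, post_scores):
    """Level 2 (Learning): mean post-test score minus mean pre-test score."""
    return mean(post_scores) - mean(pre_scores)


def percent_change(before, after):
    """Level 4 (Results): relative change in a metric, in percent.

    A negative value means the metric dropped, which is the desired
    direction for something like an error rate.
    """
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


# Hypothetical data, for illustration only.
pre = [55, 60, 48, 70]
post = [75, 82, 66, 85]

print(f"Average learning gain: {average_gain(pre, post):.2f} points")
print(f"Error-rate change: {percent_change(0.08, 0.05):.1f}%")
```

A before/after comparison like this does not by itself prove the training caused the change, so it is usually read alongside the Reaction and Behavior data.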

Q 3. What methods do you use to identify training needs?

Identifying training needs is crucial for creating effective programs. I employ a variety of methods, including:

- Performance appraisals: Reviewing employee performance reviews to identify skill gaps and areas for improvement.

- Surveys and questionnaires: Gathering feedback from employees, managers, and customers to understand their perspectives on training needs.

- Interviews: Conducting one-on-one interviews with employees and managers to gain in-depth insights into their training requirements. This allows for more nuanced understanding of individual needs.

- Focus groups: Facilitating group discussions to gather diverse perspectives and identify common training needs. This can be particularly helpful in identifying hidden or unspoken issues.

- Observation: Observing employees on the job to identify areas where training could improve their performance. This direct observation often reveals nuances not apparent through other methods.

- Analysis of business data: Examining data such as sales figures, customer satisfaction scores, and accident rates to pinpoint areas where training may improve outcomes. For example, a high number of customer complaints might indicate a need for customer service training.

A combination of these methods provides a holistic view of the training needs, ensuring the program addresses the most critical areas for improvement.

Q 4. Explain your experience in creating engaging and effective eLearning content.

Creating engaging eLearning content requires a blend of instructional design principles and multimedia techniques. I focus on:

- Microlearning: Breaking down large topics into smaller, easily digestible modules. This improves knowledge retention and reduces cognitive overload.

- Interactive elements: Incorporating quizzes, simulations, branching scenarios, and gamification elements to keep learners actively involved. For example, a simulation allowing learners to practice handling customer complaints in a safe environment.

- Multimedia: Utilizing visuals, audio, and video to cater to different learning styles and enhance comprehension. A combination of text, images, and audio narration can reinforce learning.

- Storytelling: Using narratives and real-world examples to connect with learners emotionally and make the content relatable.

- Accessibility: Designing eLearning materials to be accessible to learners with disabilities, ensuring compliance with WCAG (Web Content Accessibility Guidelines). This might involve using alt text for images, providing transcripts for videos, and using appropriate color contrast.

In a recent project, I developed an eLearning course on cybersecurity awareness using gamified scenarios and interactive modules. Learner engagement was significantly higher compared to traditional training methods, with a 20% improvement in knowledge retention scores.

Q 5. How do you adapt training materials for diverse learning styles?

Accommodating diverse learning styles is paramount for effective training. I utilize several strategies:

- Multiple learning modalities: Employing a variety of methods such as visual aids, auditory explanations, hands-on activities, and written materials to cater to visual, auditory, kinesthetic, and reading/writing learners.

- Personalized learning paths: Allowing learners to choose learning paths that suit their preferences and learning styles, providing flexible options for pacing and content delivery.

- Differentiated instruction: Adjusting the teaching methods and materials to meet the individual needs of learners. For example, providing additional support or more challenging tasks to cater to varying skill levels.

- Universal design for learning (UDL): Applying UDL principles to ensure the training is accessible and engaging for all learners, regardless of their abilities or learning styles. This involves providing multiple means of representation, action and expression, and engagement.

For instance, when designing a leadership training program, I’d incorporate role-playing exercises for kinesthetic learners, case studies for analytical learners, and video presentations for visual learners.

Q 6. What strategies do you use to ensure participant engagement during training?

Keeping participants engaged throughout training is crucial for effective learning. My strategies include:

- Interactive activities: Incorporating group discussions, brainstorming sessions, games, and quizzes to promote active participation and knowledge application.

- Real-world examples and case studies: Relating the training content to real-world scenarios to make it more relevant and engaging.

- Storytelling and humor: Using narratives and humor to connect with participants emotionally and maintain their interest.

- Collaboration and peer learning: Encouraging participants to work together, share ideas, and learn from each other.

- Regular feedback and recognition: Providing regular feedback and acknowledging participants’ contributions to boost morale and motivation.

- Technology integration: Utilizing interactive technology such as simulations, gamification, and virtual reality to enhance engagement and create immersive learning experiences.

For example, in a customer service training, I might use a role-playing exercise where participants simulate handling difficult customer situations, thereby making learning practical and memorable.

Q 7. Describe your experience with needs analysis and performance improvement.

Needs analysis and performance improvement are intrinsically linked. Needs analysis identifies the gap between current performance and desired performance, while performance improvement focuses on bridging that gap through targeted interventions, including training.

My experience involves conducting thorough needs analyses using the methods described previously (surveys, interviews, data analysis). This helps identify root causes of performance issues, whether it’s a lack of knowledge, skills, or resources. Once the needs are identified, I design and implement training programs or other interventions aimed at improving performance.

For example, I once worked with a manufacturing company experiencing high rates of product defects. Through needs analysis, I discovered a lack of proper training in quality control procedures. The subsequent training program, which included hands-on workshops and on-the-job coaching, resulted in a significant reduction in product defects and a demonstrable improvement in overall efficiency.

Performance improvement is an iterative process. I regularly monitor progress using key performance indicators (KPIs) and adjust the interventions as needed to maximize their effectiveness. This continuous improvement cycle ensures long-term, sustainable performance enhancement.

Q 8. How do you manage the budget for a training program?

Budget management for a training program is crucial for its success. It involves a detailed process starting with needs assessment to determine the scope and resources required. This includes identifying all costs, from instructor fees and materials to venue rental and technology. I begin by creating a comprehensive budget spreadsheet, categorizing expenses and projecting revenue (if applicable, through participant fees or internal chargebacks). This spreadsheet serves as a dynamic tool, regularly updated throughout the program lifecycle.

For example, if developing a training program on project management, I’d factor in costs for training materials (workbooks, online resources), instructor compensation, potentially software licenses for project management tools used during the training, and room rental or virtual platform fees. Contingency funds are also essential – around 10-15% of the total budget – to account for unexpected expenses. Regular monitoring against the budget is paramount, flagging potential overruns and enabling proactive adjustments.

I also look for cost-saving opportunities. This could involve leveraging existing company resources, negotiating favorable rates with vendors, or utilizing free or open-source tools. Finally, I present a clear, well-documented budget proposal to stakeholders for approval, ensuring transparency and accountability throughout the process.
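The budgeting steps above can be sketched in a few lines. This is a minimal illustration: the category names and amounts are hypothetical, and it assumes the contingency is applied as a percentage of the planned line items, which is one reasonable reading of the 10-15% guideline.

```python
def budget_with_contingency(line_items, contingency_rate=0.10):
    """Sum planned costs and add a contingency reserve on top.

    line_items: dict mapping a cost category to its planned amount.
    contingency_rate: fraction of the subtotal held in reserve (assumed).
    """
    subtotal = sum(line_items.values())
    contingency = subtotal * contingency_rate
    return {
        "subtotal": subtotal,
        "contingency": contingency,
        "total": subtotal + contingency,
    }


def overruns(planned, actual):
    """Return categories where actual spend exceeds plan, with the excess."""
    return {
        category: actual.get(category, 0) - amount
        for category, amount in planned.items()
        if actual.get(category, 0) > amount
    }


# Hypothetical figures for a project management course.
planned = {
    "materials": 2000,
    "instructor": 6000,
    "software_licenses": 1500,
    "venue_or_platform": 2500,
}
actual_to_date = {"materials": 2300, "instructor": 5800}

print(budget_with_contingency(planned, contingency_rate=0.15))
print(overruns(planned, actual_to_date))
```

Running the overrun check at regular intervals is one simple way to flag categories that are drifting before the contingency reserve is needed.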

Q 9. How do you measure the ROI of a training program?

Measuring the Return on Investment (ROI) of a training program isn’t simply about the numbers; it’s about demonstrating the value added to the organization. I use a multi-faceted approach, combining quantitative and qualitative data. Quantitative data might include metrics like improved employee performance (measured through performance appraisals or key performance indicators – KPIs), reduced errors, increased efficiency, or cost savings resulting from improved skills.

For instance, if a sales training program results in a 15% increase in average sales per employee, that’s quantifiable and easily translates into a financial return. Qualitative data, however, is equally important. This includes feedback gathered through post-training surveys, focus groups, or one-on-one interviews with participants and their managers. This provides insights into employee satisfaction, knowledge gain, skill application on the job, and overall program impact. I then analyze this data with a cost-benefit analysis, calculating ROI by weighing the program’s monetary benefits against its total costs.

Ultimately, a successful ROI demonstration requires a clear understanding of the program’s objectives, a well-defined method for measuring those objectives, and a comprehensive analysis of both quantitative and qualitative data. Presenting this information in a clear, concise, and compelling way to stakeholders is crucial to showing the true value of the investment.
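One common way to express training ROI is net benefits divided by costs, as a percentage. The sketch below shows that arithmetic; the function names and dollar figures are hypothetical, and in practice the hard part is estimating the monetary benefits, not the division.

```python
def training_roi(total_benefits, total_costs):
    """ROI in percent: (benefits - costs) / costs * 100."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    return (total_benefits - total_costs) / total_costs * 100


def benefit_cost_ratio(total_benefits, total_costs):
    """Benefit-cost ratio: monetary benefits per unit of cost."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    return total_benefits / total_costs


# Hypothetical figures: a program costing 100,000 that is credited
# with 150,000 in additional margin over the measurement period.
costs = 100_000
benefits = 150_000

print(f"ROI: {training_roi(benefits, costs):.1f}%")
print(f"Benefit-cost ratio: {benefit_cost_ratio(benefits, costs):.2f}")
```

The qualitative feedback described above does not enter this formula directly, but it helps justify how much of the measured benefit can reasonably be attributed to the training.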

Q 10. What software or tools do you use for developing and delivering training?

My toolkit for developing and delivering training is diverse and adapts to the program’s needs. For course content creation, I extensively use Microsoft PowerPoint for presentations, Articulate Storyline 360 and Adobe Captivate for creating engaging e-learning modules, and Camtasia for screen recordings and video editing. For managing learning content and tracking progress, Learning Management Systems (LMS) like Moodle or Canvas are invaluable. These platforms facilitate online delivery, assignments, quizzes, and automated feedback.

For in-person training, tools like Mentimeter or Kahoot! enhance engagement through interactive quizzes and polls. Collaboration tools like Microsoft Teams or Google Workspace streamline communication and collaboration amongst participants and instructors. I also make use of project management software like Asana or Trello to manage tasks, deadlines, and resources effectively during development and delivery of the training program. The specific tools chosen always depend on the program’s learning objectives, budget, and target audience.

Q 11. How do you handle difficult participants or unexpected challenges during training?

Handling difficult participants or unexpected challenges requires a proactive and adaptable approach. First, prevention is key. I establish clear expectations and ground rules at the start of the training. This includes outlining participation norms, communication guidelines, and addressing potential disruptive behaviors. For example, I’d emphasize active listening, respectful communication, and the importance of creating a safe learning environment for all participants.

If challenges arise, I address them directly but diplomatically. A private conversation with the participant often helps to understand the root cause of their behavior. If it’s related to the training content, I adjust my delivery to address their concerns. If the behavior is disruptive, a firm but respectful reminder of the ground rules might be necessary. In extreme cases, I might need to involve HR or management. Unexpected challenges, such as technical glitches during online training or an unexpected absence of a key participant, are handled by having backup plans in place. This might involve pre-recorded content, alternative delivery methods, or a revised schedule.

Flexibility and empathy are essential in navigating these situations, remembering that each participant brings a unique perspective and experience to the learning environment. My goal is always to create a positive and productive learning experience for everyone.

Q 12. Describe your experience with different training delivery methods (e.g., online, in-person, blended).

I have extensive experience with various training delivery methods, recognizing the strengths and weaknesses of each. In-person training excels in fostering immediate interaction, facilitating group discussions, and building rapport. I’ve led numerous in-person workshops and seminars, focusing on creating interactive and engaging sessions through activities, group work, and role-playing. However, in-person training can be expensive and logistically challenging.

Online training, through platforms like Moodle or custom e-learning modules, offers flexibility and scalability. It’s cost-effective and accessible to a wider audience regardless of geographical location. I’ve designed and delivered many online courses, leveraging interactive elements, videos, and assessments to maintain participant engagement. However, online learning can lack the immediate feedback and social interaction of in-person training. Blended learning, combining the best of both worlds, is often the most effective approach. It might involve an online introductory module followed by an in-person workshop, or vice versa. This strategy provides flexibility while also retaining the benefits of direct interaction.

The choice of method is determined by factors such as the training objectives, target audience, budget, technological capabilities, and learning styles.

Q 13. How do you ensure the quality and consistency of training materials?

Maintaining the quality and consistency of training materials is paramount. My approach is multifaceted, beginning with a rigorous development process involving multiple reviews and quality checks. I start with a clear learning objective and design materials that are aligned with those objectives. This involves creating a detailed outline, writing clear and concise content, and using appropriate visuals and interactive elements to enhance understanding.

The materials undergo several rounds of review, starting with a self-review, followed by peer review by other training professionals, and finally, a review by subject matter experts (SMEs) to ensure both pedagogical soundness and accuracy of content. Consistent formatting, branding, and style guidelines are strictly adhered to throughout the development process. Version control is critical: I use tools like Google Docs or dedicated content management systems to track changes and maintain the most up-to-date version of the materials. Following the development phase, a pilot test with a small group of participants helps identify and address any gaps or areas for improvement before widespread deployment. Regular updates and revisions are undertaken based on feedback and changes in industry best practices.

Q 14. How do you incorporate feedback from participants to improve training programs?

Participant feedback is invaluable for program improvement. I employ various methods to collect feedback, including post-training surveys, focus groups, and informal feedback sessions. Surveys are structured to gather quantitative data on participant satisfaction, perceived learning gains, and areas for improvement. Open-ended questions allow for qualitative feedback, providing valuable insights into participant experiences and suggestions.

Focus groups provide opportunities for more in-depth discussions and interactions among participants, allowing for a collaborative exploration of training strengths and weaknesses. Informal feedback, gathered through conversations and observations, provides immediate insights into participant reactions and can help identify immediate areas needing attention. All feedback is carefully analyzed, categorized, and prioritized based on its impact on the training’s effectiveness. This analysis informs revisions to training materials, delivery methods, and overall program design. A feedback loop mechanism, where changes based on feedback are implemented and communicated, helps build trust and improves the overall quality of future training programs.

Q 15. Describe your experience with creating assessments to evaluate training effectiveness.

Creating effective assessments is crucial for evaluating the impact of training programs. My approach involves a multi-faceted strategy that goes beyond simple knowledge checks. I design assessments aligned with the learning objectives, using a variety of methods to gauge different levels of learning – from knowledge recall to application and analysis.

For example, in a training program on project management, I wouldn’t just use a multiple-choice test. Instead, I’d incorporate a combination of methods: a pre-training assessment to identify existing knowledge gaps, a post-training test to measure knowledge acquisition, practical exercises simulating real-world project scenarios, and finally, a follow-up assessment several weeks later to evaluate long-term retention and application of learned skills. This approach provides a comprehensive picture of training effectiveness.

I often use Kirkpatrick’s Four Levels of Evaluation as a framework. This model assesses: Reaction (how participants felt about the training), Learning (what participants learned), Behavior (how participants apply learning on the job), and Results (the impact of the training on organizational goals). Each level requires different assessment methods, ensuring a thorough evaluation.

Q 16. How do you stay current with the latest trends in training and development?

Staying current in the dynamic field of training and development is paramount. I achieve this through a multi-pronged approach. I actively participate in professional development opportunities, such as attending conferences (like ATD’s ICE) and webinars hosted by industry leaders. This keeps me abreast of new methodologies, technologies, and best practices.

I also subscribe to relevant journals and publications, such as Training magazine, and follow thought leaders and organizations on social media platforms like LinkedIn and Twitter. This ensures I’m exposed to a wide range of perspectives and cutting-edge research. Additionally, I actively seek out opportunities for peer learning and knowledge sharing by networking with other professionals in the field.

Finally, I dedicate time to researching new technologies and tools relevant to training delivery, such as new LMS features and innovative e-learning platforms. Continuous learning is essential for remaining competitive and delivering high-quality training programs.

Q 17. What is your experience with learning management systems (LMS)?

My experience with Learning Management Systems (LMS) is extensive. I’m proficient in using several popular platforms, including Moodle, Canvas, and Cornerstone OnDemand. My expertise encompasses more than just basic navigation; I understand how to leverage LMS features to optimize the learning experience. This includes creating and managing courses, tracking learner progress, administering assessments, and generating reports to analyze training effectiveness.

Beyond the technical aspects, I’m adept at integrating various training materials, including videos, interactive exercises, and documents, into an LMS environment. I also understand the importance of creating a user-friendly experience for learners, ensuring intuitive navigation and engaging content. My experience extends to customizing LMS settings to meet specific organizational needs and integrating the LMS with other HR systems.

Q 18. How do you design training programs for specific learning objectives?

Designing training programs for specific learning objectives is a systematic process. It starts with a thorough needs analysis, identifying the knowledge, skills, and attitudes (KSAs) required for successful performance. This analysis involves gathering data through interviews, surveys, and performance reviews to understand the performance gap.

Once the learning objectives are clearly defined, I use instructional design models like ADDIE (Analysis, Design, Development, Implementation, Evaluation) or SAM (Successive Approximation Model) to structure the program. The design phase involves selecting appropriate training methods (e.g., lectures, workshops, simulations, e-learning) and developing engaging and effective content that directly addresses the learning objectives. I always ensure the content is relevant, practical, and aligned with the learners’ needs and learning styles.

For example, if the objective is to improve customer service skills, the training might include role-playing exercises, scenario-based learning, and feedback sessions. The program’s effectiveness would be measured by observing improved customer satisfaction scores and employee performance reviews.

Q 19. How do you ensure accessibility in your training materials?

Accessibility is a core principle in my training material design. I ensure all materials are compliant with WCAG (Web Content Accessibility Guidelines) standards to make them usable by people with disabilities. This involves several key considerations:

- Alternative text for images: Providing descriptive alt text for all images allows screen readers to convey the image’s content to visually impaired learners.

- Captions and transcripts for videos: Captions make videos accessible to deaf and hard-of-hearing learners, while transcripts provide another way to access the content.

- Keyboard navigation: Ensuring all interactive elements are navigable using only a keyboard is vital for learners with motor impairments.

- Color contrast: Using sufficient color contrast between text and background ensures readability for learners with visual impairments.

- Structural markup: Using proper heading tags (<h1> to <h6>), lists (<ul>, <ol>), and other semantic HTML elements makes the content more easily parsed by assistive technologies.
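The color contrast check in the list above can be automated. The sketch below follows the relative luminance and contrast ratio definitions from WCAG 2.x, with the Level AA thresholds of 4.5:1 for normal text and 3:1 for large text. The function names are my own, and the parser assumes six-digit hex colors only.

```python
def _linearize(channel_8bit):
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x formula)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color given as '#RRGGBB' or 'RRGGBB'."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1 (none) up to 21."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def meets_aa(foreground, background, large_text=False):
    """True if the pair meets the WCAG AA minimum contrast for text."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold


# Example: mid-gray text on a white slide background.
print(round(contrast_ratio("#767676", "#FFFFFF"), 2))
print(meets_aa("#767676", "#FFFFFF"))
```

A check like this covers only one accessibility criterion; alt text, captions, and keyboard navigation still need manual or tool-assisted review.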

Furthermore, I provide multiple formats for learning materials, such as offering text-based versions alongside videos or providing audio recordings of key information. This approach ensures broader accessibility for diverse learning needs.

Q 20. Explain your experience with creating training for technical subjects.

I have extensive experience developing training for technical subjects, particularly in areas like software development, data analytics, and cybersecurity. My approach focuses on breaking down complex topics into manageable chunks, using clear and concise language, and employing various instructional techniques to cater to diverse learning styles.

I frequently use visual aids like diagrams, flowcharts, and simulations to illustrate technical concepts. Hands-on activities, such as coding exercises or lab simulations, allow learners to practice their skills in a safe environment. Real-world case studies and examples make the learning relevant and relatable.

For example, when developing training on a new software application, I might begin with an overview of the software’s architecture, then progress to hands-on modules focusing on specific features. Each module would include clear instructions, examples, and opportunities for learners to practice and receive feedback. The process would be iterative, allowing for adjustments based on learner feedback and assessment results.

Q 21. Describe a time you had to develop a training program under tight deadlines.

I once had to develop a comprehensive training program for a new software rollout within a tight three-week deadline. This required a highly efficient and collaborative approach. The first step was prioritizing the most critical learning objectives, focusing on the core functionalities of the software that employees needed to master immediately.

I employed a rapid prototyping methodology, developing short, focused training modules and testing them iteratively with a small group of beta testers. Their feedback informed immediate adjustments, ensuring the final product addressed the key learning needs efficiently. We used readily available assets and templates whenever possible to save time on design and development. I also leveraged online collaboration tools to facilitate communication and feedback sharing among the team.

While the time constraints were challenging, the collaborative approach and focus on core objectives allowed us to deliver a high-quality training program that effectively prepared employees for the software rollout. The success was validated by positive employee feedback and a smooth transition to the new system.

Q 22. How do you handle conflict or disagreements among team members involved in training development?

Conflict is inevitable in team projects, especially when diverse perspectives are involved in training development. My approach prioritizes open communication and collaborative problem-solving. I start by creating a safe space where team members feel comfortable expressing their opinions and concerns. This often involves establishing clear ground rules for respectful dialogue and active listening.

If a disagreement arises, I facilitate a structured discussion, ensuring everyone has a chance to articulate their viewpoint. I focus on understanding the underlying reasons for the conflict, rather than just the surface-level disagreement. This might involve asking clarifying questions like, “Can you tell me more about why you feel that way?” or “What are the potential implications of this approach?”

Once the root causes are identified, we collaboratively brainstorm solutions. This might involve using techniques like compromise, negotiation, or even voting on different options. Throughout the process, I emphasize finding a solution that aligns with the overall training objectives and meets the needs of the target audience. Finally, we document the agreed-upon solution and incorporate it into the training plan.

For instance, in a past project developing leadership training, a conflict arose about the best methodology for a specific module. Through facilitated discussion, we identified differing preferences in learning styles. This led to the development of a hybrid module incorporating both lecture and interactive group activities, addressing the concerns of all team members.

Q 23. What are your preferred methods for tracking participant progress and completion?

Tracking participant progress and completion is crucial for evaluating training effectiveness. My preferred methods utilize a blended approach leveraging technology and traditional methods. This includes Learning Management Systems (LMS) such as Moodle or Canvas for online courses.

These platforms allow for automated tracking of course completion, quiz scores, and assignment submissions. For in-person training, I often use attendance sheets, signed evaluations, and progress tracking sheets where participants self-report their progress on specific assignments. These sheets allow participants to self-monitor their own progress and help identify any areas where they might need additional support.

Beyond these, I also incorporate post-training surveys to gauge learner satisfaction and identify areas for improvement. This allows me to gain qualitative feedback to complement the quantitative data from the LMS and progress sheets. I find combining these methods offers a well-rounded understanding of learner progress, making sure we’re capturing both completion rates and learning outcomes. For example, in a recent sales training program, the LMS tracked completion rates while post-training surveys revealed valuable feedback about the effectiveness of the sales techniques taught, allowing us to adjust the training for the next cohort.
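To make the tracking idea concrete, here is a minimal sketch of how exported progress data might be summarized. The `LearnerRecord` structure, its field names, and the 70% pass mark are assumptions for illustration; a real LMS export from Moodle or Canvas will have its own format.

```python
from dataclasses import dataclass


@dataclass
class LearnerRecord:
    """Hypothetical per-learner row, e.g. from an LMS report export."""
    name: str
    modules_completed: int
    modules_total: int
    quiz_score: float  # percent, 0-100


def completion_rate(records):
    """Percentage of learners who finished every module."""
    if not records:
        return 0.0
    finished = sum(1 for r in records if r.modules_completed >= r.modules_total)
    return finished / len(records) * 100


def needs_support(records, min_score=70.0):
    """Names of learners who are incomplete or scored below min_score."""
    return [
        r.name
        for r in records
        if r.modules_completed < r.modules_total or r.quiz_score < min_score
    ]


# Hypothetical cohort data.
cohort = [
    LearnerRecord("A", 5, 5, 88.0),
    LearnerRecord("B", 3, 5, 72.0),
    LearnerRecord("C", 5, 5, 64.0),
    LearnerRecord("D", 5, 5, 91.0),
]

print(f"Completion rate: {completion_rate(cohort):.0f}%")
print("Follow up with:", needs_support(cohort))
```

Separating completion from quiz performance reflects the point above: a learner can finish every module and still need support, which completion rates alone would hide.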

Q 24. How do you ensure that training materials are aligned with organizational goals?

Aligning training materials with organizational goals is paramount to ensuring the training investment delivers a return. My approach begins with a thorough needs analysis that clearly identifies the organization’s strategic objectives and the skills gap that needs to be addressed through training.

I work closely with stakeholders from different departments to understand their perspectives and align the training content with their priorities. This often involves reviewing strategic plans, conducting interviews with key personnel, and analyzing existing performance data. The resulting training objectives should directly contribute to achieving the organization’s broader goals.

For instance, if the organization’s goal is to increase customer satisfaction, training might focus on improving customer service skills, conflict resolution, or product knowledge. Throughout the development process, I regularly check for alignment, ensuring that every module, activity, and assessment directly contributes to achieving those specific objectives. This ensures the training is not merely an isolated activity but rather a strategic initiative to support the organization’s overall success.

Q 25. Explain your experience with different training evaluation methods (e.g., Kirkpatrick’s model).

Kirkpatrick’s four levels of training evaluation provide a comprehensive framework for assessing training effectiveness. I have extensive experience applying this model to evaluate various training programs.

- Level 1: Reaction: This involves measuring participants’ reactions to the training, typically through post-training surveys or feedback sessions. This helps assess learner satisfaction and identifies areas for improvement in delivery or content.

- Level 2: Learning: This level assesses the knowledge and skills gained by participants. Methods include pre- and post-training tests, quizzes, or practical assessments. This determines whether the training effectively transferred knowledge and skills.

- Level 3: Behavior: This level evaluates whether participants apply their learning on the job. This requires observing participants’ performance, analyzing their work output, or gathering feedback from supervisors. This demonstrates the actual impact on workplace performance.

- Level 4: Results: This level measures the overall impact of the training on organizational outcomes. This often involves analyzing metrics like improved productivity, reduced errors, or increased sales. This demonstrates the ultimate value of the training investment.

I often use a combination of these levels to gain a holistic view of training effectiveness. For example, in a recent leadership development program, we used pre- and post-tests (Level 2), gathered 360-degree feedback from peers and supervisors (Level 3), and analyzed team performance metrics after the training (Level 4) to determine the overall program success.
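
As a rough illustration of the Level 2 pre- and post-test comparison, here is a minimal Python sketch. The function name `learning_gain` and the scores are hypothetical, invented only for this example; a real evaluation would likely also consider sample size and test reliability.

```python
from statistics import mean

def learning_gain(pre_scores, post_scores):
    """Return (mean raw gain, share of participants who improved).

    Assumes both lists are in the same participant order.
    """
    if len(pre_scores) != len(post_scores) or not pre_scores:
        raise ValueError("score lists must be non-empty and the same length")
    gains = [post - pre for pre, post in zip(pre_scores, post_scores)]
    improved = sum(1 for gain in gains if gain > 0)
    return mean(gains), improved / len(gains)

# Hypothetical test scores (out of 100) for five participants
pre = [50, 60, 70, 40, 80]
post = [70, 75, 70, 65, 85]

avg_gain, share_improved = learning_gain(pre, post)
print(f"Average gain: {avg_gain} points; improved: {share_improved:.0%}")
```

A summary like this covers only Level 2; it says nothing about whether the learning is applied on the job (Level 3) or affects organizational results (Level 4).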

Q 26. How do you ensure that training programs are scalable and adaptable to future needs?

Scalability and adaptability are crucial for long-term training effectiveness. I design training programs with these factors in mind from the outset. This involves using modular design principles, where the training is broken down into smaller, self-contained units.

This allows for flexibility in delivery – some modules can be delivered online, others in person, and some can be adapted for different audiences. I also utilize technology that supports scalability, such as Learning Management Systems (LMS) that can handle a large number of participants and easily adapt to different devices and learning platforms. Furthermore, I ensure that the training content is easily updated and revised based on feedback, new developments, or changes in organizational needs.

For example, a compliance training program I designed used a modular approach. The core modules were delivered online through an LMS, allowing for self-paced learning and accessibility across locations. However, we also included in-person workshops for specific, high-risk areas, offering tailored support and interaction. This modular design allowed us to easily scale the training to a larger number of employees across different geographical locations while adapting to specific training needs.

Q 27. Describe your experience with developing and implementing a training program for a specific target audience.

I recently developed and implemented a customer service training program for a team of call center agents. The target audience ranged from entry-level agents to those with several years of experience.

The needs analysis revealed a need for improved product knowledge, conflict resolution skills, and efficient call handling techniques. The training program incorporated a blended learning approach, combining online modules, role-playing exercises, and live coaching sessions. The online modules provided foundational knowledge on product features and company policies, while role-playing allowed agents to practice handling difficult customer interactions in a safe environment. Live coaching sessions focused on personalized feedback and advanced techniques.

We measured the effectiveness of the program at all four of Kirkpatrick’s levels. Post-training surveys indicated high levels of satisfaction (Level 1), knowledge tests demonstrated significant improvements in product knowledge (Level 2), observations of call recordings showed noticeable improvements in call handling techniques (Level 3), and analysis of customer satisfaction scores revealed a significant increase after the training (Level 4). The success of this program was largely due to careful consideration of the target audience’s needs, the use of varied learning methods, and a robust evaluation strategy.

Q 28. How do you utilize data and analytics to inform training program design and improvement?

Data and analytics play a crucial role in informing training program design and improvement. I draw on data from several sources: Learning Management Systems (LMS), performance management systems, and employee surveys.

LMS data provide insights into completion rates, time spent on modules, quiz scores, and overall learner engagement. Performance management data can reveal whether training completion correlates with improved workplace performance. Employee surveys provide qualitative feedback on areas of strength and weakness in the training program. By analyzing these data points, I can identify trends, patterns, and areas that need improvement.

For example, if the LMS shows a high dropout rate on a specific module, it indicates a potential problem with the content or delivery method. This would prompt me to revisit the module, making it more engaging or breaking it down into smaller, more manageable parts. Similarly, if performance data show no correlation between training and improved performance, it might necessitate revisiting the training objectives or delivery methods. Data analysis allows me to move beyond intuition and make informed, evidence-based improvements to the training programs, maximizing their impact and effectiveness.
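
The dropout check described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the module names, the enrollment counts, and the 30% threshold are hypothetical, and the input format is invented here rather than taken from any particular LMS export.

```python
def dropout_rates(module_records):
    """Map each module to the fraction of starters who did not complete it.

    module_records: {module name: (number started, number completed)}
    """
    rates = {}
    for module, (started, completed) in module_records.items():
        if started == 0:
            continue  # nobody started this module, so no rate can be computed
        rates[module] = 1 - completed / started
    return rates

def flag_modules(module_records, threshold=0.3):
    """Return modules whose dropout rate exceeds the (assumed) threshold."""
    rates = dropout_rates(module_records)
    return sorted(module for module, rate in rates.items() if rate > threshold)

# Hypothetical counts for a three-module sales course
records = {
    "Product basics": (100, 92),
    "Handling objections": (92, 55),
    "Closing techniques": (55, 50),
}

flagged = flag_modules(records)
print("Modules to review:", flagged)
```

A flagged module is a prompt for closer review, not a diagnosis; the cause could be the content, the delivery method, or something outside the training itself.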

Key Topics to Learn for Developing and Implementing Training Programs Interview

- Needs Analysis: Identifying learning gaps and organizational needs through surveys, interviews, and performance data. Practical application: Designing a needs analysis questionnaire for a specific department requiring upskilling.

- Learning Objectives & Outcomes: Defining measurable and achievable learning goals using SMART criteria. Practical application: Crafting specific, measurable, achievable, relevant, and time-bound objectives for a new employee onboarding program.

- Curriculum Design & Development: Structuring training content logically, incorporating various learning methods (e.g., lectures, simulations, group activities), and selecting appropriate training materials. Practical application: Developing a training outline for a software program using a blended learning approach.

- Instructional Design Principles: Applying established models (e.g., ADDIE model, Kirkpatrick’s Four Levels of Evaluation) to create engaging and effective training. Practical application: Evaluating the effectiveness of a past training program using Kirkpatrick’s model and suggesting improvements.

- Training Delivery Methods: Choosing the best delivery method (e.g., instructor-led, online, blended) based on learner needs and resources. Practical application: Justifying the selection of a specific delivery method for a particular training program.

- Training Evaluation & Measurement: Assessing the effectiveness of training programs using various methods (e.g., pre- and post-tests, surveys, observations). Practical application: Designing a comprehensive evaluation plan to measure the impact of a leadership development program.

- Technology Integration in Training: Utilizing technology (e.g., LMS, e-learning platforms, virtual reality) to enhance training experiences. Practical application: Recommending appropriate technology for delivering a specific training program and outlining its integration strategy.

- Budgeting and Resource Allocation: Developing realistic budgets and managing resources effectively for training initiatives. Practical application: Creating a detailed budget proposal for a new training program, justifying all costs.

- Stakeholder Management: Effectively communicating with and managing expectations of various stakeholders (e.g., management, trainees, trainers). Practical application: Developing a communication plan to keep stakeholders informed about the progress of a training program.

Next Steps

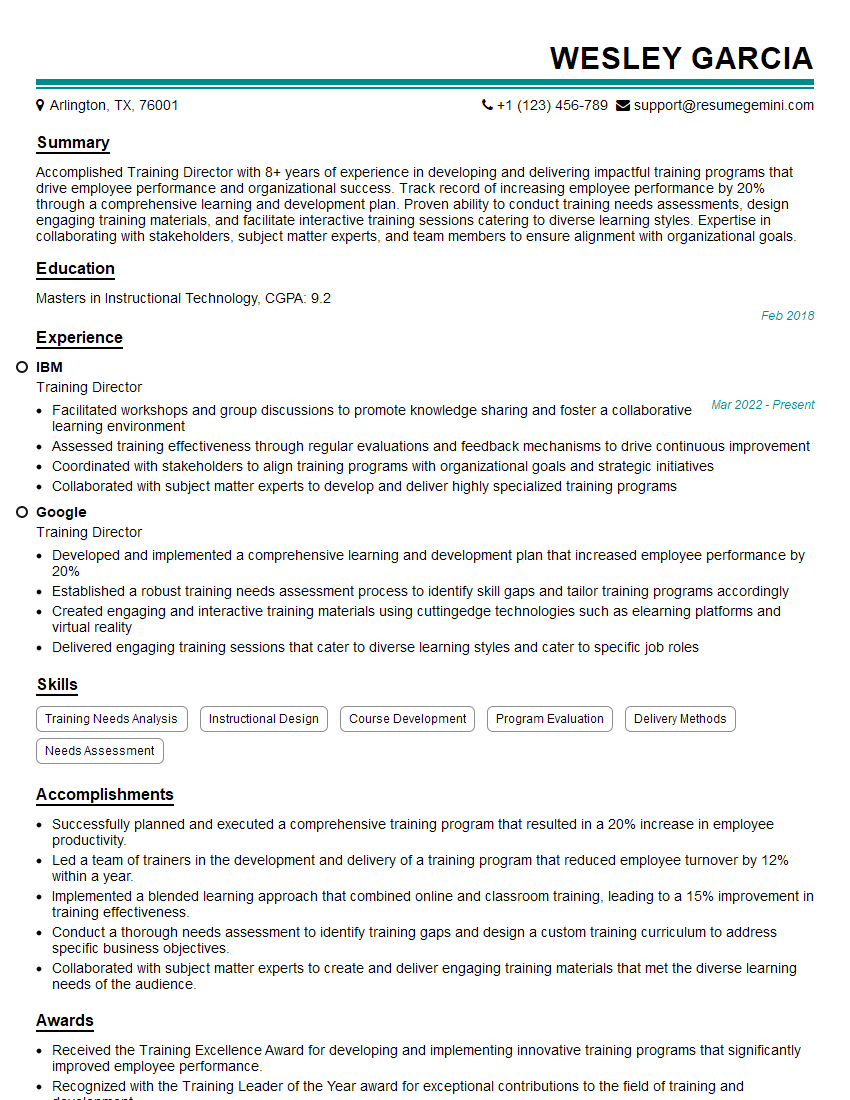

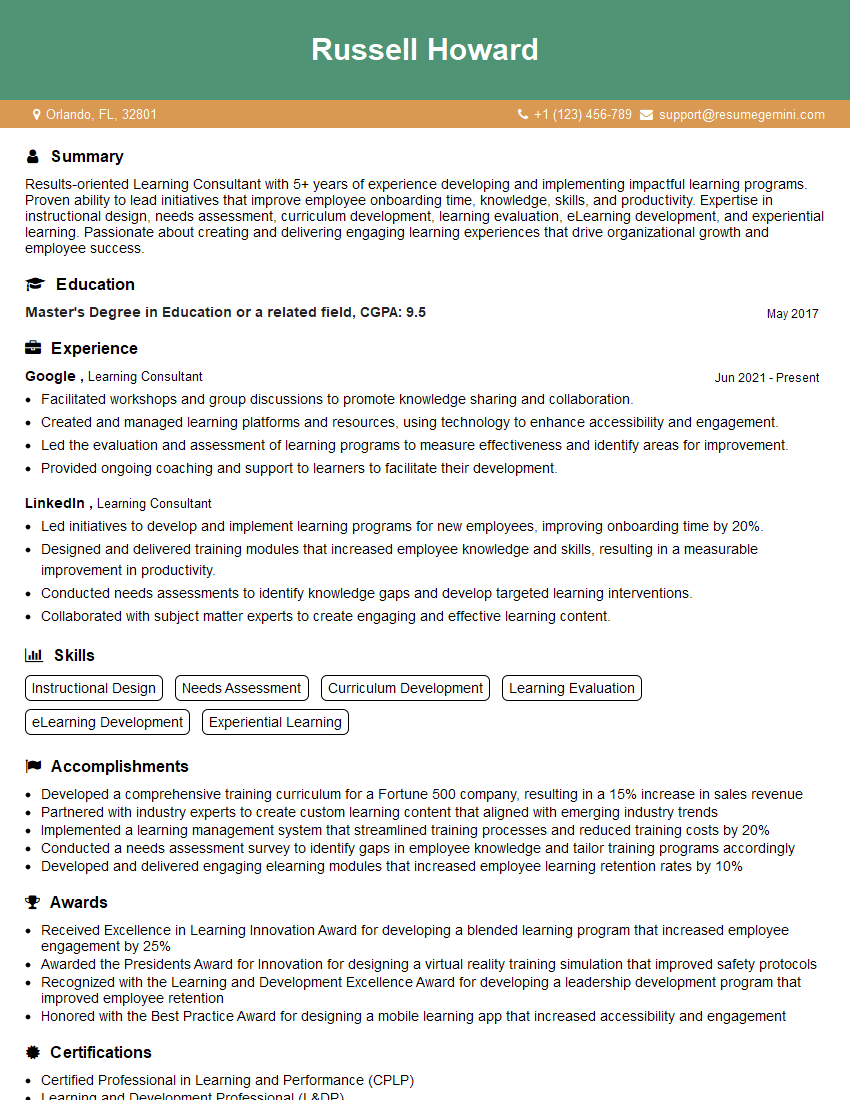

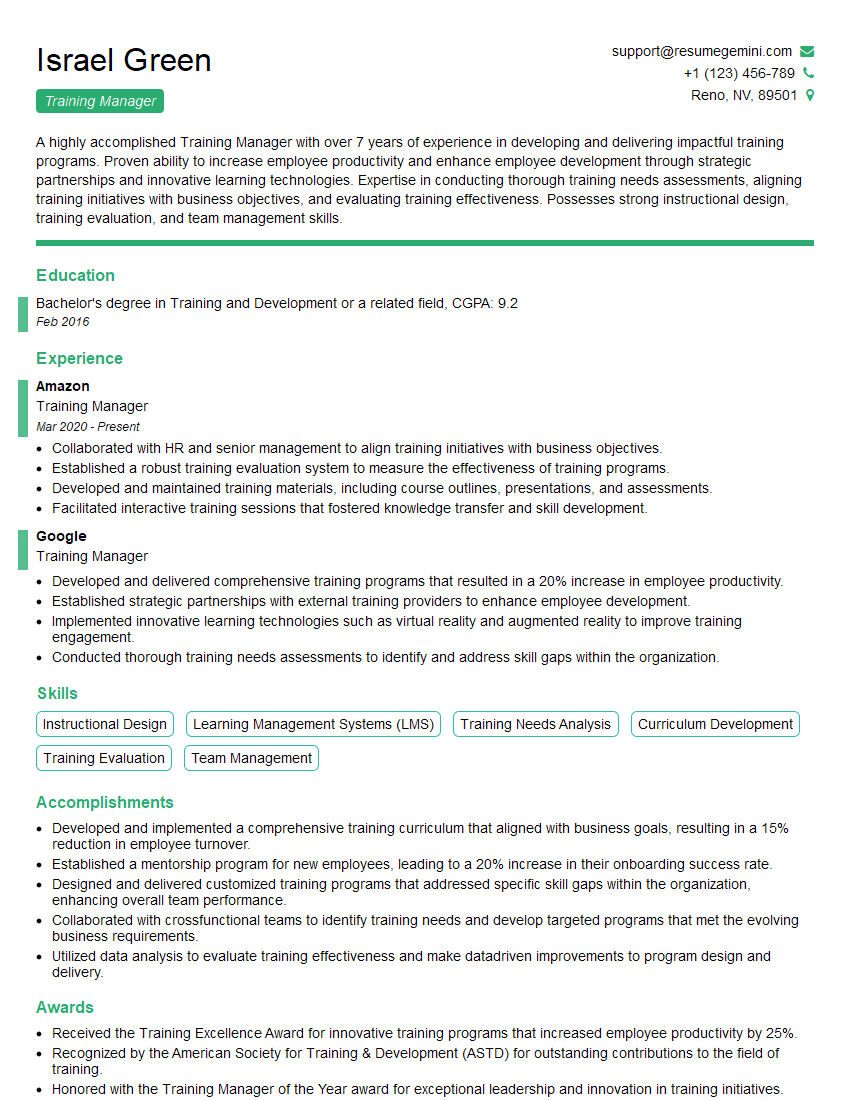

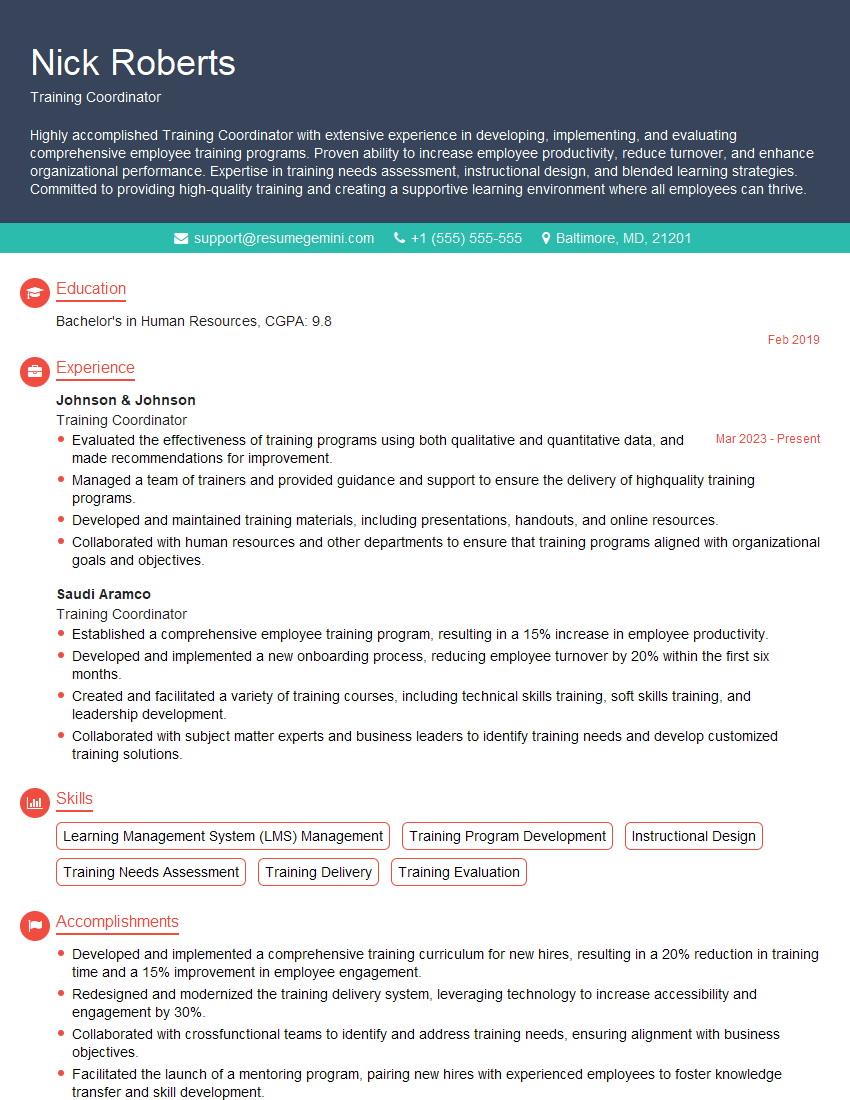

Mastering the development and implementation of training programs is crucial for career advancement in many fields, opening doors to leadership roles and demonstrating your ability to drive organizational growth and improve employee performance. To maximize your job prospects, create an ATS-friendly resume that highlights your skills and experience. ResumeGemini is a trusted resource to help you build a professional and impactful resume that showcases your abilities effectively. Examples of resumes tailored to Developing and Implementing Training Programs are available to guide you. Invest the time to create a compelling application – your future success depends on it!
