Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important interview questions about experience with network simulation and modeling tools and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in Network Simulation and Modeling Tools Interviews

Q 1. Explain the difference between network simulation and emulation.

Network simulation and emulation are both powerful techniques for studying network behavior, but they differ significantly in their approach. Think of it like this: simulation is like a detailed blueprint, while emulation is building a miniature replica.

Simulation uses mathematical models to represent network components and their interactions. It’s a software-based approach that doesn’t require actual network hardware. This allows for flexibility, scalability, and the ability to test extreme scenarios that would be impractical or impossible in a real-world setting. For example, a network with many thousands of nodes can be simulated and analyzed under various conditions, something that would be impractical to build as a physical testbed, although run time and memory grow with the level of detail.

Emulation, on the other hand, runs real protocol stacks, applications, or devices in real time over an artificially reproduced network environment, sometimes with the help of specialized hardware. This provides a higher degree of fidelity because real implementations, rather than models of them, generate and handle the traffic. However, emulation is often more expensive, less flexible, and limited in scale compared to simulation, since it has to keep pace with real time.

In essence, simulation is about representing the behavior of a network, while emulation is about replicating the environment of a network.

Q 2. What are the key advantages and disadvantages of using network simulation tools?

Network simulation tools offer numerous advantages, but they also come with some drawbacks. Let’s examine both:

Advantages:

- Cost-effectiveness: Simulations are significantly cheaper than building and maintaining physical testbeds.

- Flexibility and Scalability: You can easily modify network parameters and scale the network size to test a wide range of scenarios, including extreme conditions.

- Repeatability: Simulations can be run repeatedly with identical parameters, ensuring consistent and reproducible results.

- Safety: Simulating failures or attacks doesn’t risk damaging real equipment.

- Early Stage Design and Prototyping: Allows for testing and refining designs before physical implementation.

Disadvantages:

- Model Accuracy: The accuracy of the results depends heavily on the accuracy of the underlying model. Imperfect models can lead to inaccurate predictions.

- Complexity: Building and validating complex simulations can be time-consuming and require specialized skills.

- Abstraction: Simulations often abstract away certain low-level details, which may affect the accuracy of the results in specific scenarios.

- Validation Challenges: Ensuring that the simulation accurately reflects reality requires careful validation against real-world data or smaller-scale emulations.

Q 3. Describe your experience with different network simulation tools (e.g., NS-3, OMNeT++, QualNet).

I have extensive experience using several network simulation tools, each with its own strengths and weaknesses.

- NS-3: I’ve used NS-3 extensively for research projects focusing on wireless network protocols and performance evaluation. Its modular design and support for a wide range of network technologies make it incredibly versatile. For example, I used NS-3 to simulate a large-scale MANET (Mobile Ad hoc Network) to compare different routing protocols under various mobility models. It’s powerful but can have a steeper learning curve compared to some other tools.

- OMNeT++: OMNeT++ is a very flexible tool that I used for modeling and simulating complex network architectures, particularly those involving heterogeneous components. I’ve found its ability to seamlessly integrate with other simulation tools and its powerful visualization capabilities to be highly valuable. For instance, I integrated it with a traffic generator to assess the performance of a data center network under heavy load.

- QualNet: I’ve leveraged QualNet for simulations requiring high fidelity and detailed modeling of physical layer characteristics, especially in military or commercial scenarios involving significant propagation delays and interference. It’s excellent for simulating realistic channel conditions. However, it’s usually associated with a more considerable commercial cost.

My experience with these tools has allowed me to adapt my modeling approach to suit the specific needs of each project.

Q 4. How do you validate the results of a network simulation?

Validating simulation results is crucial for ensuring their reliability and trustworthiness. It’s not a one-size-fits-all approach, but rather a multi-faceted process.

- Analytical Validation: This involves comparing the simulation results to known analytical models. If you have a simplified analytical model for a specific aspect of the network, you can use it to cross-validate the simulation’s output for that particular aspect.

- Experimental Validation: This involves comparing the simulation results to measurements from a real network or a smaller-scale testbed. This is often the most reliable form of validation, but it can be costly and time-consuming. For example, I once validated a simulation model of a campus wireless network by comparing simulation results (e.g., throughput, delay) with actual measurements taken from the physical network.

- Internal Consistency Checks: This involves checking for consistency within the simulation itself. For example, are the energy consumption and energy generation consistent over time in an energy-aware network model? Are queue lengths and packet drop rates consistent with the simulated network load?

- Sensitivity Analysis: This involves systematically changing the parameters of the simulation to assess the impact of each parameter on the results. This helps to identify which parameters have the largest impact on the simulation results and ensures robustness.

A combination of these techniques usually offers the most robust validation.
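As an illustration of analytical validation, the sketch below simulates a single-server queue and compares the measured mean waiting time with the closed-form M/M/1 result. The rates, packet count, seed, and function names are assumptions chosen for the example, and the tiny simulator (based on Lindley's recursion) stands in for whatever tool is actually being validated.

```python
import random

def simulate_mm1_mean_wait(arrival_rate, service_rate, num_packets, seed=1):
    """Mean time spent waiting in the queue (excluding service)."""
    rng = random.Random(seed)
    wait = 0.0        # waiting time of the current packet
    total_wait = 0.0
    for _ in range(num_packets):
        total_wait += wait
        service = rng.expovariate(service_rate)
        interarrival = rng.expovariate(arrival_rate)
        # Lindley's recursion: W[n+1] = max(0, W[n] + S[n] - A[n+1])
        wait = max(0.0, wait + service - interarrival)
    return total_wait / num_packets

def analytic_mm1_mean_wait(arrival_rate, service_rate):
    """Closed-form M/M/1 mean wait in queue: rho / (mu - lambda)."""
    rho = arrival_rate / service_rate
    return rho / (service_rate - arrival_rate)

# Assumed example rates: 50 packets/s arriving, 100 packets/s served.
lam, mu = 50.0, 100.0
simulated = simulate_mm1_mean_wait(lam, mu, num_packets=200_000)
expected = analytic_mm1_mean_wait(lam, mu)
relative_error = abs(simulated - expected) / expected
print(f"simulated={simulated:.5f}s analytic={expected:.5f}s "
      f"relative error={relative_error:.2%}")
```

A small relative error supports the simulator only for this simple case; a large one points to a bug or a modeling mismatch that should be resolved before trusting more complex scenarios.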

Q 5. What are some common network metrics used in simulations, and how are they interpreted?

Several key network metrics are commonly used in simulations to assess network performance. The interpretation of these metrics can often provide crucial insights:

- Throughput: The amount of data successfully transferred over a network in a given time. High throughput indicates good network performance, while low throughput suggests bottlenecks or congestion.

- Delay (Latency): The time it takes for a packet to travel from the source to the destination. High delay can lead to slow application response times and negatively impact user experience.

- Jitter: Variations in delay. High jitter can affect real-time applications such as voice and video conferencing.

- Packet Loss: The percentage of packets that fail to reach their destination. High packet loss indicates network unreliability and can severely disrupt applications.

- Queue Length: The number of packets waiting to be processed in a queue. Long queues can indicate congestion and lead to increased delay and packet loss.

- Utilization: The percentage of time a network link or device is busy. Sustained high utilization is often a sign of congestion, but it should be read alongside throughput and packet loss: a link kept busy by retransmissions is highly utilized without delivering much useful data.

These metrics need to be interpreted in context. For example, a bulk transfer can tolerate moderate delay as long as throughput stays high, whereas a high packet loss rate is almost always undesirable.
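To make the definitions concrete, here is a minimal sketch that derives throughput, mean delay, jitter, and loss from per-packet records. The record layout and the sample values are hypothetical, and jitter is computed here as the mean absolute difference between consecutive delays, which is one simple definition among several.

```python
from statistics import mean

# Hypothetical records: (send_time_s, recv_time_s or None if lost, size_bytes)
packets = [
    (0.00, 0.020, 1000),
    (0.01, 0.035, 1000),
    (0.02, None, 1000),   # lost packet
    (0.03, 0.052, 1000),
    (0.04, 0.066, 1000),
]

def summarize(packets):
    delivered = [(s, r, b) for s, r, b in packets if r is not None]
    delays = [r - s for s, r, _ in delivered]
    # Observation window: first send to last successful receive.
    duration = max(r for _, r, _ in delivered) - min(s for s, _, _ in packets)
    return {
        "throughput_bps": 8 * sum(b for _, _, b in delivered) / duration,
        "mean_delay_s": mean(delays),
        # Simplified jitter: mean absolute change between consecutive delays.
        "jitter_s": mean(abs(b - a) for a, b in zip(delays, delays[1:])),
        "loss_ratio": 1 - len(delivered) / len(packets),
    }

stats = summarize(packets)
for name, value in stats.items():
    print(f"{name}: {value:.6g}")
```

Real simulators usually export these values directly or through trace files; the point of the sketch is only to show how each metric relates to the raw send and receive events.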

Q 6. Explain the concept of queuing theory and its application in network simulation.

Queuing theory is a mathematical framework for analyzing waiting lines, which are ubiquitous in computer networks. Packets arriving at routers, switches, or servers must wait in queues before being processed. Queuing theory helps us understand and model the behavior of these queues.

Key concepts in queuing theory include:

- Arrival Process: How frequently packets arrive at the queue (e.g., Poisson process).

- Service Time: How long it takes to process a packet.

- Queue Capacity: The maximum number of packets that can be stored in the queue.

- Queue Discipline: The order in which packets are processed (e.g., FIFO, priority queue).

Applications in network simulation:

- Performance Prediction: Queuing theory models help predict average delay, queue length, and packet loss in a network.

- Resource Allocation: Understanding queue behavior informs decisions on bandwidth allocation, buffer sizing, and server capacity.

- Network Design: Queuing models help designers choose the right queuing discipline, buffer sizes, and other parameters to optimize network performance.

For example, in a simulation of a network router, I might use an M/M/1 queuing model (Poisson arrivals, exponential service times, single server) to predict the average delay experienced by packets.
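The standard M/M/1 formulas are short enough to sketch directly. In the example below, the function name and the rates (800 packets/s arriving at a router that serves 1000 packets/s) are assumptions for illustration; the formulas only hold while the arrival rate is below the service rate.

```python
def mm1_metrics(arrival_rate, service_rate):
    """Steady-state M/M/1 results for rates given in packets per second."""
    if arrival_rate >= service_rate:
        raise ValueError("unstable queue: arrival rate must be below service rate")
    rho = arrival_rate / service_rate  # utilization
    return {
        "utilization": rho,
        "mean_packets_in_system": rho / (1 - rho),
        "mean_time_in_system_s": 1 / (service_rate - arrival_rate),
        "mean_wait_in_queue_s": rho / (service_rate - arrival_rate),
    }

# Assumed example load of 80%.
metrics = mm1_metrics(arrival_rate=800, service_rate=1000)
for name, value in metrics.items():
    print(f"{name}: {value:.4g}")
```

Because delay grows as 1 / (service rate - arrival rate), small increases in load near saturation produce disproportionately large delays, which is a useful sanity check against simulation output.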

Q 7. How do you model different network topologies (e.g., star, mesh, bus) in a simulation environment?

Modeling different network topologies in a simulation environment is typically achieved by defining the connections between nodes. Most simulation tools provide mechanisms to define these connections either graphically (through a visual interface) or programmatically (through code).

- Star Topology: A central node (hub or switch) connects to all other nodes. In a simulation, you’d represent this by having a central node connected to each other node with a separate link.

- Mesh Topology: Multiple connections between nodes provide redundancy and fault tolerance. In the simulation, you would explicitly define multiple links between pairs of nodes. The degree of connectivity (number of links per node) would determine the mesh density.

- Bus Topology: All nodes share a common communication channel. You could represent this in a simulation by modeling a single link that all nodes are connected to.

Example using a programmatic approach (conceptual):

Let’s imagine a simplified representation using Python-like pseudo-code:

```python
# Define nodes
nodes = ['A', 'B', 'C', 'D']

# Star topology
star_topology = {
    'center': 'C',
    'connections': [('C', 'A'), ('C', 'B'), ('C', 'D')]
}

# Mesh topology
mesh_topology = {
    'connections': [('A', 'B'), ('A', 'C'), ('B', 'C'), ('B', 'D'), ('C', 'D')]
}

# Bus topology
bus_topology = {
    'bus': 'main_link',
    'connections': [('A', 'main_link'), ('B', 'main_link'),
                    ('C', 'main_link'), ('D', 'main_link')]
}
```

The specific implementation would depend on the chosen simulation tool, but the core concept of defining connections between nodes remains the same.

Q 8. Describe your experience with network traffic generators.

Network traffic generators are crucial for simulating realistic network loads in simulations. They create synthetic traffic mirroring real-world user behavior, allowing us to test network performance under various conditions. I’ve extensively used tools like iperf for simple bandwidth tests and more sophisticated generators like IxChariot and ns-3's built-in traffic generators. IxChariot, for example, allows for highly configurable traffic patterns, including specific protocol distributions, packet sizes, and inter-arrival times, which is vital for mimicking diverse application behaviors like web browsing, video streaming, or VoIP. In one project, we used IxChariot to simulate a surge in users accessing a web server during a promotional event to predict server capacity needs and potential bottlenecks. This allowed us to proactively scale the infrastructure and avoid service disruptions.
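At its simplest, a traffic generator is a source of packet arrival times drawn from a chosen distribution. The sketch below generates Poisson arrivals (exponential inter-arrival times); the function name, rate, duration, and seed are assumptions, and it is not meant to reflect how iperf, IxChariot, or ns-3 implement their generators.

```python
import random

def poisson_arrivals(rate_pps, duration_s, seed=42):
    """Return sorted packet arrival times for a Poisson process."""
    rng = random.Random(seed)
    t = 0.0
    times = []
    while True:
        # Exponential gaps between packets yield a Poisson arrival process.
        t += rng.expovariate(rate_pps)
        if t >= duration_s:
            return times
        times.append(t)

# Assumed example: 200 packets/s offered for 10 seconds.
arrivals = poisson_arrivals(rate_pps=200, duration_s=10)
print(f"generated {len(arrivals)} packets, "
      f"observed rate {len(arrivals) / 10:.1f} packets/s")
```

Swapping the exponential gap for a heavy-tailed or trace-driven one is the usual next step when the traffic being mimicked is bursty, as web and video workloads often are.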

Q 9. How do you handle network failures and congestion in your simulations?

Handling network failures and congestion is a critical aspect of network simulation. I typically model failures using various methods depending on the simulation tool and the type of failure being investigated. For example, in ns-3, we can programmatically introduce link failures, node crashes, or even router malfunctions at specific points in the simulation. To model congestion, I leverage the simulation’s built-in queuing mechanisms and adjust parameters like buffer size, scheduling discipline, and drop policy (e.g., FIFO with tail drop, Weighted Fair Queuing). Analyzing the resulting metrics such as packet loss, queue lengths, and latency helps identify potential congestion points. In a recent project simulating a data center network, we used different queuing disciplines to evaluate their impact on latency for various application types. This allowed us to select the queuing discipline that minimized latency for critical applications.
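The effect of buffer size on loss during a burst can be sketched with a toy FIFO queue that drops arrivals when the buffer is full. The slot-based timing, the function name, and the burst pattern are assumptions made for the example; real simulators model this at the packet-event level.

```python
from collections import deque

def drop_tail_queue(arrivals_per_slot, service_per_slot, buffer_size):
    """Slotted FIFO queue that drops new arrivals once the buffer is full."""
    queue = deque()
    served = dropped = max_queue = 0
    for slot, arrivals in enumerate(arrivals_per_slot):
        for _ in range(arrivals):
            if len(queue) < buffer_size:
                queue.append(slot)   # remember the arrival slot
            else:
                dropped += 1         # tail drop
        max_queue = max(max_queue, len(queue))
        for _ in range(min(service_per_slot, len(queue))):
            queue.popleft()
            served += 1
    return {"served": served, "dropped": dropped,
            "max_queue": max_queue, "left_in_queue": len(queue)}

# Assumed traffic: a two-slot burst that exceeds the service rate.
burst = [1, 1, 6, 6, 1, 0, 0, 0]
small = drop_tail_queue(burst, service_per_slot=2, buffer_size=5)
large = drop_tail_queue(burst, service_per_slot=2, buffer_size=20)
print("buffer=5 :", small)
print("buffer=20:", large)
```

With the 5-packet buffer, 5 of the 15 packets are dropped; the 20-packet buffer absorbs the burst without loss but leaves packets still queued at the end, which is the loss-versus-delay trade-off that buffer sizing has to balance.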

Q 10. What are the different types of network protocols you have modeled?

My experience encompasses a broad range of network protocols, including TCP, UDP, HTTP, FTP, BGP, and OSPF. I’ve modeled these protocols at various levels of detail, from simplified abstractions in tools like OMNeT++ to more detailed implementations in ns-3. For instance, while modeling a content delivery network (CDN), I used a simplified representation of TCP in OMNeT++ to focus on the overall network performance, whereas in another project focusing on the performance of a new BGP routing algorithm, I used a highly detailed implementation within ns-3. The level of detail chosen depends on the specific goals and scope of the simulation.

Q 11. Explain your experience with statistical analysis of simulation results.

Statistical analysis is fundamental to interpreting simulation results. I’m proficient in using statistical methods to analyze performance metrics such as throughput, latency, jitter, packet loss, and queue length. I typically employ techniques like hypothesis testing, confidence intervals, and regression analysis to determine the statistical significance of observed differences. For visualization and analysis, I commonly use tools like R and MATLAB to generate graphs, charts, and statistical summaries of the simulation data. For example, when comparing the performance of two different routing protocols, I would use hypothesis testing to determine if the observed difference in average latency is statistically significant or simply due to random variation.
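A comparison of two protocols might look like the sketch below, written in Python rather than R or MATLAB for brevity. The latency samples are invented for illustration, each value standing for the mean latency of one independent replication. The interval uses a normal approximation, which is optimistic for only ten replications; a t-distribution quantile with the computed degrees of freedom would be the more careful choice.

```python
from math import sqrt
from statistics import NormalDist, mean, variance

# Hypothetical mean latencies (ms), one per replication with a different seed.
protocol_a = [41.2, 39.8, 40.5, 42.1, 40.9, 41.7, 39.5, 40.3, 41.0, 40.6]
protocol_b = [43.0, 44.1, 42.6, 43.8, 44.5, 42.9, 43.3, 44.0, 43.6, 42.7]

def welch_statistic(x, y):
    """Welch's t statistic and its approximate degrees of freedom."""
    vx, vy = variance(x) / len(x), variance(y) / len(y)
    t = (mean(x) - mean(y)) / sqrt(vx + vy)
    dof = (vx + vy) ** 2 / (vx ** 2 / (len(x) - 1) + vy ** 2 / (len(y) - 1))
    return t, dof

def mean_difference_ci(x, y, confidence=0.95):
    """Normal-approximation confidence interval for mean(x) - mean(y)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * sqrt(variance(x) / len(x) + variance(y) / len(y))
    diff = mean(x) - mean(y)
    return diff - half_width, diff + half_width

t_stat, dof = welch_statistic(protocol_a, protocol_b)
low, high = mean_difference_ci(protocol_a, protocol_b)
print(f"t = {t_stat:.2f}, dof = {dof:.1f}, 95% CI = ({low:.2f}, {high:.2f}) ms")
```

If the interval excludes zero, the difference is unlikely to be explained by run-to-run variation alone.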

Q 12. How do you determine the appropriate simulation parameters and duration?

Choosing appropriate simulation parameters and duration is crucial for accurate and efficient simulations. The selection process involves a balance between realism and computational cost. Factors considered include the network topology, traffic characteristics, simulation objectives, and available computational resources. I often start with a smaller-scale simulation to validate the model and then gradually increase the scale and duration as needed. For example, to determine the optimal duration, I’d perform several simulations with increasing durations, monitoring the convergence of key performance indicators. Once the metrics stabilize, I’d consider that as the appropriate simulation duration. The parameters, such as traffic load, packet size distributions, and error rates are based on real-world measurements or representative values from literature.
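The "run until the metrics stabilize" check can be sketched as a test on the running mean of a performance indicator. The function name, window, tolerance, and the synthetic sample series (a transient that decays toward a steady value of 10) are all assumptions for illustration.

```python
def converged_at(samples, window=100, tolerance=0.01):
    """Index at which the running mean changed by less than `tolerance`
    (relative) over the previous `window` samples, or None if it never did."""
    total = 0.0
    running = []
    for i, value in enumerate(samples):
        total += value
        running.append(total / (i + 1))
        if i >= window:
            old, new = running[i - window], running[i]
            if new != 0 and abs(new - old) / abs(new) < tolerance:
                return i
    return None

# Hypothetical metric with a start-up transient that decays over time.
samples = [10.0 + 50.0 / (k + 1) for k in range(5000)]
index = converged_at(samples)
print("running mean stabilized at sample", index)
```

In practice the same check would be applied to several indicators, and the samples collected during the initial transient are often discarded as warm-up before statistics are gathered.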

Q 13. Describe your experience with programming and scripting languages used in network simulation (e.g., Python, C++).

Programming and scripting languages are integral to network simulation. I have significant experience with Python and C++. Python’s versatility is invaluable for pre- and post-processing tasks, automating simulations, and analyzing results. I use Python extensively to generate simulation configurations, parse simulation output, and create visualizations. C++ provides more control and efficiency when implementing complex network protocols or customizing simulation components, particularly in tools like ns-3, which allows its functionality to be extended through C++ code. For example, in one project, I used Python to automate the execution of hundreds of simulations with varying parameters, and then used C++ to implement a new queue management algorithm within ns-3.
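Automating a batch of runs usually starts with generating one configuration per parameter combination and seed. The sketch below shows that step only; the parameter names, values, and the `build_sweep` helper are hypothetical, and launching the actual simulator is left out because it depends on the tool.

```python
import itertools
import json

def build_sweep(parameter_grid, seeds):
    """Return one config dict per parameter combination and random seed."""
    names = sorted(parameter_grid)
    runs = []
    for values in itertools.product(*(parameter_grid[n] for n in names)):
        for seed in seeds:
            config = dict(zip(names, values))
            config["seed"] = seed
            runs.append(config)
    return runs

# Hypothetical parameter grid for illustration.
grid = {
    "num_nodes": [10, 50, 100],
    "traffic_load": [0.3, 0.6, 0.9],
    "queue": ["fifo", "wfq"],
}
runs = build_sweep(grid, seeds=range(1, 6))
print(len(runs), "runs; first config:", json.dumps(runs[0]))
```

Each dictionary can then be written to a file or turned into command-line arguments for the simulator, and the seed column makes it straightforward to group replications during analysis.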

Q 14. How do you handle large-scale network simulations?

Handling large-scale simulations requires careful consideration of computational resources and simulation techniques. Strategies I employ include parallel simulation using message-passing frameworks like MPI, often on clusters or cloud-based resources. Efficient modeling techniques such as abstraction and hierarchical modeling are also critical. Abstractions reduce the level of detail in certain parts of the network, while hierarchical modeling breaks the network down into smaller, manageable sub-networks. Additionally, optimizations such as coarsening the time step where accuracy permits (a smaller step size increases the computational cost rather than reducing it) or using more efficient data structures can significantly improve performance. In a recent project involving a large-scale wireless network, I used a combination of parallel simulation with MPI and hierarchical modeling to reduce simulation time from weeks to days.

Q 15. What are some common challenges faced during network simulation and how did you overcome them?

Network simulation, while powerful, presents several challenges. One common hurdle is achieving an accurate representation of real-world network behavior. Factors like unpredictable traffic patterns, diverse device capabilities, and the sheer complexity of large networks make perfect mirroring difficult. Another challenge is the computational cost; simulating large, intricate networks can require significant processing power and time. Finally, validating the simulation’s accuracy against real-world observations can be a significant undertaking.

To overcome these challenges, I employ several strategies. For accurate representation, I use a combination of synthetic and real-world traffic traces. Synthetic traces allow for controlled experimentation, while real-world traces add realism. To manage computational cost, I utilize efficient simulation algorithms and often resort to modular simulations, breaking down complex networks into smaller, manageable components. For validation, I employ statistical methods to compare simulation outputs to real-world network performance metrics. A key aspect is iterative refinement; I continuously analyze results, adjust parameters, and refine the model based on comparison with real-world data.

Q 16. Explain your experience with performance evaluation techniques for network simulations.

Performance evaluation in network simulations relies on a suite of techniques. Key metrics include throughput, latency, packet loss, jitter, and utilization. I utilize these metrics to assess the performance of different network protocols, topologies, and configurations. For example, I might compare the throughput of TCP and UDP under varying network conditions. Beyond simple metrics, I often use more advanced techniques like queuing theory analysis to understand bottleneck locations and resource contention. Statistical methods such as hypothesis testing are used to determine if observed performance differences are statistically significant.

Furthermore, I leverage visualization tools to represent performance data effectively. Charts and graphs help in identifying trends, bottlenecks, and areas needing improvement. In one project, we used heatmaps to visualize network congestion, which clearly pinpointed areas with high packet loss and allowed for targeted optimization.

Q 17. How do you incorporate real-world network data into your simulations?

Incorporating real-world network data is crucial for building realistic and reliable simulations. I typically obtain this data through various means, such as packet captures using tools like tcpdump or Wireshark, network monitoring systems, and publicly available datasets. The data is then pre-processed and cleaned to remove noise and irrelevant information. This pre-processing step is critical for ensuring the integrity of the simulation.

For example, in a project involving a large university network, we used packet captures from several key network segments to feed into our simulation. This allowed us to model realistic traffic patterns and accurately represent the network’s behavior. This differs from synthetic data, which is helpful for testing specific parameters but lacks real-world variation.
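One simple way to feed captured traffic into a model is to extract the inter-arrival gaps from packet timestamps and resample them when generating traffic. The sketch below assumes the timestamps have already been exported from a capture as a list of seconds; the values, function names, and seed are hypothetical, and resampling gaps independently discards any correlation between consecutive packets.

```python
import random

def interarrival_times(timestamps):
    """Gaps between consecutive packets, after sorting the timestamps."""
    ordered = sorted(timestamps)
    return [b - a for a, b in zip(ordered, ordered[1:])]

def replay_from_trace(gaps, count, seed=7):
    """Generate arrival times by resampling the measured gaps."""
    rng = random.Random(seed)
    t = 0.0
    times = []
    for _ in range(count):
        t += rng.choice(gaps)
        times.append(t)
    return times

# Hypothetical capture timestamps in seconds.
captured = [0.000, 0.004, 0.005, 0.012, 0.013, 0.021]
gaps = interarrival_times(captured)
synthetic = replay_from_trace(gaps, count=100)
print(f"{len(gaps)} measured gaps, {len(synthetic)} generated arrivals, "
      f"last at t={synthetic[-1]:.3f}s")
```

When burst structure matters, replaying the capture in its original order, or fitting a model that preserves correlation, is usually a better choice than independent resampling.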

Q 18. How do you choose the appropriate simulation tool for a given project?

Choosing the right simulation tool depends heavily on the project’s specific needs and constraints. Factors to consider include the complexity of the network, the required level of detail, the available resources (computational power, budget), and the desired level of customization.

For example, for research-oriented protocol studies, an open-source tool like ns-3 often suffices. However, for scenarios that call for vendor-supported device models, network virtualization, or very high fidelity, commercial tools like OPNET (now Riverbed Modeler) or even custom solutions developed using languages like Python and specialized libraries might be necessary. I consider the trade-off between ease of use, modeling capabilities, and computational cost when making a selection. For instance, a simpler tool might be adequate for initial exploration, while a more powerful tool would be needed for in-depth analysis.

Q 19. Explain your experience with visualizing and presenting simulation results.

Visualizing and presenting simulation results is crucial for effective communication and decision-making. I employ a variety of techniques to achieve this. Simple metrics such as throughput and latency are typically represented using line graphs or bar charts. More complex data, like network topology and traffic flows, are effectively shown using interactive maps and network diagrams. I frequently use tools such as MATLAB, Python’s Matplotlib and Seaborn libraries, and specialized network visualization tools.

In one project, we used interactive 3D visualizations to show the spread of congestion in a large-scale wireless network. This allowed stakeholders to readily understand the impact of different network configurations and helped them make informed decisions. Effective visualization is often the key to conveying complex simulation results.

Q 20. How do you ensure the accuracy and reliability of your simulation results?

Ensuring the accuracy and reliability of simulation results is paramount. This involves careful model validation and verification. Model validation compares the simulation’s outputs against real-world data or known analytical results. Model verification ensures the simulation accurately reflects the intended model. Techniques for validation include statistical analysis, comparison with experimental results, and sensitivity analysis. Verification often involves code reviews, unit testing, and rigorous debugging.

For instance, I might compare the simulation’s throughput to measured throughput from a real network under similar conditions. Discrepancies might reveal limitations in the model, prompting refinements or adjustments. Continuous monitoring and iterative refinement are key to building confidence in the simulation’s accuracy.

Q 21. Describe a complex network simulation project you have worked on and your contributions.

One complex project I worked on involved simulating a large-scale Software Defined Network (SDN) for a major telecommunications company. The goal was to optimize network resource allocation and traffic routing to improve performance and reduce costs. The network consisted of hundreds of nodes and diverse traffic patterns. My key contribution was developing a custom simulation model using Python and leveraging a network simulator. This model incorporated detailed SDN controller behavior, realistic traffic patterns obtained from network monitoring, and sophisticated resource allocation algorithms.

The simulation allowed us to evaluate different SDN controller algorithms and network configurations, ultimately leading to a 20% improvement in overall network throughput and a 15% reduction in resource utilization. My role involved not just the technical design and implementation but also collaborating with network engineers to validate the simulation’s accuracy and ensure its relevance to the real-world deployment. This project showcased the power of simulation in optimizing complex network architectures.

Q 22. How familiar are you with different network models (e.g., queuing models, Markov models)?

I’m highly familiar with various network models, crucial for accurate simulations. Queuing models, for instance, are indispensable for analyzing network congestion. They treat network elements as queues, modeling packet arrival and service times to predict delays and throughput. I’ve extensively used M/M/1 and M/G/1 queues to model router behavior and optimize buffer sizes. Markov models, on the other hand, are excellent for representing the probabilistic behavior of network states. For example, I’ve employed Markov chains to analyze the availability and reliability of a network, predicting the probability of system failures and the time to recovery. My experience spans beyond these fundamental models; I’m also proficient in more advanced techniques like Petri nets for modeling complex interactions between network components and fluid models for approximating large-scale network dynamics.

- Queuing Models: Imagine a supermarket checkout. Queuing models help predict wait times based on customer arrival rates and checkout speeds. Similarly, they help us understand packet delays in routers.

- Markov Models: Think of a light switch. It can be either on or off. A Markov model helps determine the probability of the switch being on or off at any given time. Similarly, it helps us understand the probability of a link being up or down.
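The link up/down example corresponds to a two-state Markov chain, whose long-run availability has a closed form. In the sketch below, the per-step failure and repair probabilities and the function names are assumed values for illustration; the iteration simply confirms that the chain settles at the closed-form result.

```python
def steady_state_availability(p_fail, p_repair):
    """Long-run probability that the link is up in a two-state chain."""
    return p_repair / (p_fail + p_repair)

def iterate_chain(p_fail, p_repair, steps, p_up=1.0):
    """Propagate the probability of being up, one time step at a time."""
    for _ in range(steps):
        # Stay up with prob (1 - p_fail), or recover from down with p_repair.
        p_up = p_up * (1 - p_fail) + (1 - p_up) * p_repair
    return p_up

# Assumed per-step probabilities: 1% chance to fail, 20% chance to recover.
closed_form = steady_state_availability(p_fail=0.01, p_repair=0.2)
iterated = iterate_chain(p_fail=0.01, p_repair=0.2, steps=200)
print(f"closed form: {closed_form:.5f}, after 200 steps: {iterated:.5f}")
```

The same pattern extends to chains with more states (for example, degraded modes), where the steady state is found by solving the balance equations instead of using a one-line formula.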

Q 23. What are your thoughts on the future of network simulation technologies?

The future of network simulation is bright, driven by several key trends. We’ll see a greater emphasis on integrating AI and machine learning. AI can automate the generation of realistic network topologies, analyze massive simulation datasets, and even learn optimal network configurations. This will move beyond static, hand-tuned models toward simulations that adapt and learn. The rising adoption of 5G and beyond-5G networks, IoT, and edge computing demands more sophisticated and scalable simulations capable of handling very large numbers of devices and intricate interactions. This means we’ll see more use of parallel and distributed simulation techniques to achieve sufficient speed and accuracy.

Furthermore, the integration of simulation with network management and monitoring tools is vital. This will allow for real-time adjustments and proactive management of network performance. Finally, I expect greater focus on developing more user-friendly and accessible simulation platforms, lowering the barrier to entry for network engineers and designers.

Q 24. How do you handle uncertainties and variability in network parameters in your simulations?

Uncertainties and variability are inherent in real-world networks. I address these using various techniques, primarily Monte Carlo simulation. This involves running numerous simulations with parameters drawn from probability distributions reflecting the uncertainty. For instance, instead of using a fixed bandwidth for a link, I might use a distribution reflecting measured fluctuations in bandwidth. This produces a range of outcomes, providing a more realistic picture of network performance. Another technique is sensitivity analysis, where I systematically vary parameters to determine which have the greatest impact on the simulation results. This helps to identify critical parameters requiring more precise estimation or further investigation. Finally, I use statistical methods to analyze the simulation outputs and quantify the uncertainty in the results. For example, confidence intervals provide a range within which the true performance is likely to fall.
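A Monte Carlo study of this kind can be sketched in a few lines. The example below estimates the time to transfer a file when link bandwidth and round-trip time are uncertain; the distributions, their parameters, the file size, and the deliberately crude transfer-time model are all assumptions chosen for illustration.

```python
import random
from statistics import mean, quantiles

def transfer_time_s(file_bits, bandwidth_bps, rtt_s):
    """Crude model: one round trip of setup plus serialization time."""
    return rtt_s + file_bits / bandwidth_bps

def monte_carlo(num_runs=10_000, seed=3):
    rng = random.Random(seed)
    results = []
    for _ in range(num_runs):
        # Assumed uncertainty: bandwidth ~ Normal(100 Mbit/s, 15 Mbit/s),
        # floored at 1 Mbit/s; RTT uniform between 10 and 30 ms.
        bandwidth = max(1e6, rng.gauss(100e6, 15e6))
        rtt = rng.uniform(0.010, 0.030)
        results.append(transfer_time_s(8e9, bandwidth, rtt))
    return results

times = monte_carlo()
cuts = quantiles(times, n=20)  # 19 cut points: 5%, 10%, ..., 95%
print(f"mean {mean(times):.1f}s, 5th pct {cuts[0]:.1f}s, "
      f"median {cuts[9]:.1f}s, 95th pct {cuts[18]:.1f}s")
```

Reporting percentiles alongside the mean shows the spread of outcomes, which a single run with fixed parameters would hide.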

Q 25. Explain your experience with parallel and distributed simulations.

I have extensive experience in parallel and distributed simulations, essential for large-scale networks. I’ve worked with tools and frameworks that support parallel execution, allowing for significant speed improvements. For instance, I used a parallel simulation framework to model a large data center network, partitioning the simulation into smaller sub-models that were executed concurrently on multiple processors. This greatly reduced the simulation runtime, allowing for more extensive analysis. Distributed simulation becomes necessary when a model is too large for a single machine’s memory or compute. I’ve utilized distributed simulation platforms to simulate the performance of a wide area network spanning multiple continents, where different segments of the network were simulated on different machines, coordinated through a centralized controller. The latency and other effects of wide geographic distances are captured by the link models themselves; distributing the run is what makes a model of that size tractable.

Q 26. How do you measure the performance of different network protocols using simulation?

Measuring the performance of network protocols through simulation is a crucial step in design and optimization. I typically measure metrics such as throughput (amount of data transferred per unit time), latency (delay experienced by packets), packet loss rate, and jitter (variation in packet arrival times). I use different simulation tools and modify the simulation parameters to test various scenarios. For example, I might simulate a TCP/IP network under varying levels of congestion to evaluate the protocol’s effectiveness in handling congestion. I analyze the collected metrics to identify bottlenecks and areas for improvement in the protocol design. Visualization tools are essential for understanding the simulation results; I typically generate graphs and charts to illustrate the relationships between different performance indicators and simulation parameters. This allows for a comprehensive evaluation and informed design choices.

Q 27. Describe your experience with using network simulation to troubleshoot network issues.

Network simulation has proven invaluable in troubleshooting network issues. In one project, we experienced unexpected performance degradation in a large enterprise network. By creating a detailed simulation model of the network, we were able to reproduce the performance issues and pinpoint the root cause to a misconfiguration in the routing protocol. This allowed us to solve the problem quickly and avoid significant downtime. I often use a structured approach to troubleshooting with simulations: First, I gather performance data from the real network. Second, I build a detailed model of the network, replicating the observed behavior and configurations. Third, I systematically modify aspects of the model (e.g., link capacity, routing protocols, device configurations) to isolate the problematic areas. Finally, the solution identified in the simulation is tested on the real network.

Q 28. Explain the trade-offs between simulation accuracy and computational cost.

There’s a fundamental trade-off between simulation accuracy and computational cost. Highly accurate simulations require detailed models capturing many network aspects, leading to increased complexity and longer simulation runtimes. For instance, a detailed simulation of a large-scale wireless network might require modeling individual packets, channel impairments, and mobility patterns. This is computationally expensive. On the other hand, simpler, less detailed models run faster but might not accurately reflect the real-world behavior. The choice depends on the specific problem and resources. In some cases, a simpler model might suffice to gain initial insights, while more complex models are needed for fine-tuning and precise analysis. The key is to strike a balance based on the desired level of detail, time constraints, and computational resources available. Techniques like model abstraction and simplification can help mitigate the computational cost without sacrificing too much accuracy.

Key Topics to Learn for Network Simulation and Modeling Tools Interviews

- Network Topologies: Understanding different network architectures (e.g., star, mesh, bus, ring) and their impact on performance and scalability. Practical application: Analyzing the efficiency of various topologies for specific network scenarios.

- Protocol Modeling: Familiarity with common network protocols (TCP/IP, UDP, BGP, OSPF) and their simulation within chosen tools. Practical application: Simulating network congestion and troubleshooting using protocol-specific analysis.

- Queueing Theory and Performance Metrics: Applying queueing theory to analyze network performance, including metrics like latency, throughput, packet loss, and jitter. Practical application: Optimizing network design to minimize latency and maximize throughput based on simulated results.

- Simulation Software Proficiency: Hands-on experience with popular network simulation tools (e.g., ns-3, OMNeT++, Cisco Packet Tracer). Practical application: Demonstrating proficiency in building, configuring, and analyzing simulated networks.

- Network Security Modeling: Simulating security threats and vulnerabilities within a network environment. Practical application: Designing and evaluating security measures through simulation, such as firewalls and intrusion detection systems.

- Statistical Analysis of Simulation Results: Interpreting simulation outputs to draw meaningful conclusions and make informed decisions about network design and optimization. Practical application: Presenting findings effectively using graphs and statistical measures.

- Troubleshooting and Debugging Simulated Networks: Identifying and resolving issues within simulated network environments. Practical application: Demonstrating problem-solving skills by effectively debugging complex simulated scenarios.
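As a small illustration of the statistical-analysis topic above, the sketch below summarizes a metric measured across independent simulation replications as a mean with a confidence interval. The function name and the sample values are hypothetical. It also uses a normal approximation, which is an assumption that suits larger numbers of replications; with only a handful of runs, a Student's t quantile would give a wider and more appropriate interval.

```python
from statistics import NormalDist, mean, stdev

def confidence_interval(samples, confidence=0.95):
    """Return (low, high) for the mean of `samples`, using a normal approximation."""
    if len(samples) < 2:
        raise ValueError("need at least two replications")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    center = mean(samples)
    half_width = z * stdev(samples) / len(samples) ** 0.5
    return center - half_width, center + half_width

if __name__ == "__main__":
    # Hypothetical mean latencies (ms) from five replications with different seeds.
    latencies_ms = [10.0, 12.0, 11.0, 13.0, 9.0]
    low, high = confidence_interval(latencies_ms)
    print(f"mean latency is between {low:.2f} and {high:.2f} ms (95% CI)")
```

Reporting an interval instead of a single number shows how much of a difference between two designs could be explained by random variation between runs.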

Next Steps

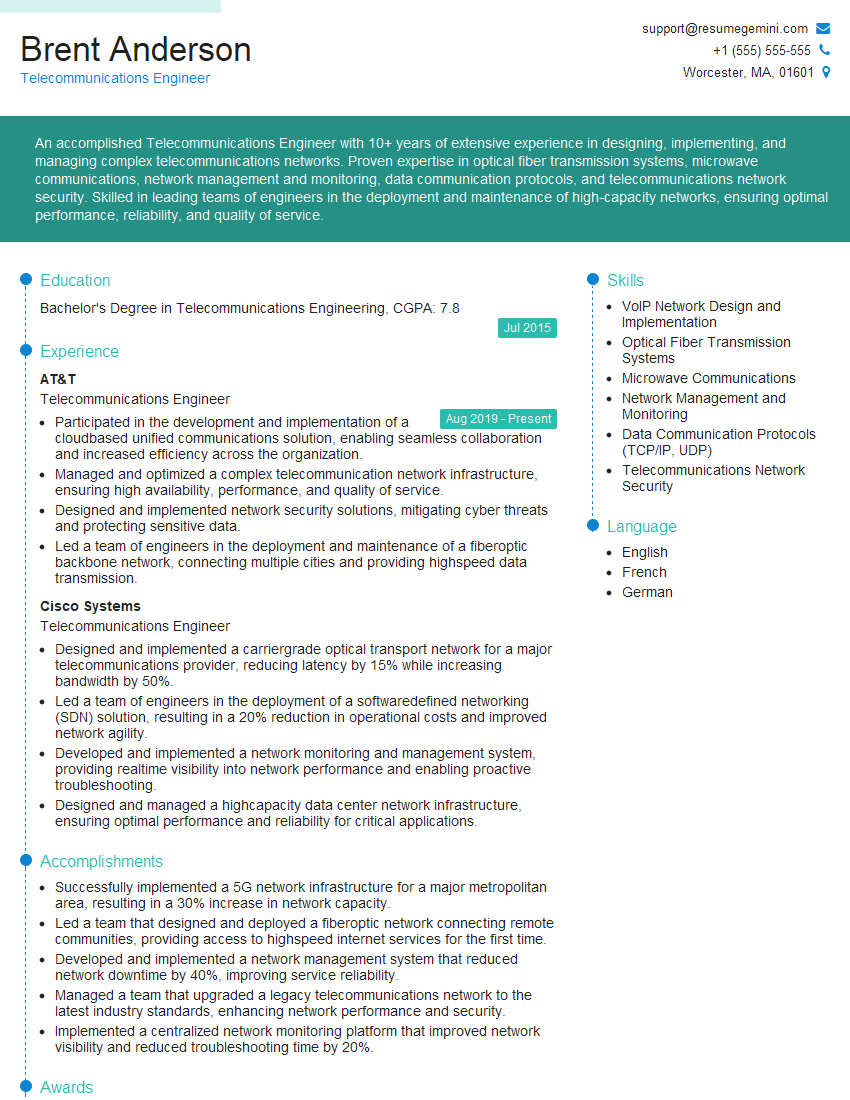

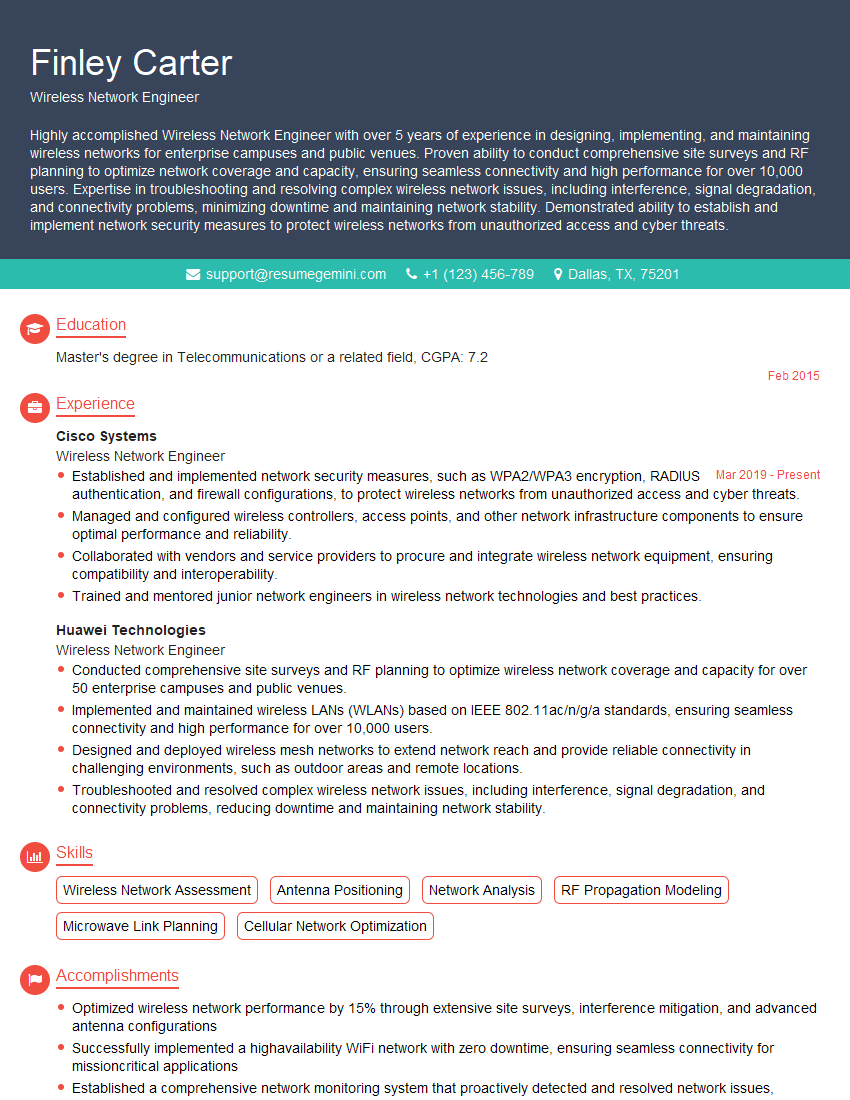

Mastering network simulation and modeling tools significantly enhances your career prospects in networking, opening doors to advanced roles in network design, engineering, and security. A strong understanding of these tools demonstrates a crucial blend of theoretical knowledge and practical application, making you a highly desirable candidate. To maximize your job search success, you also need an ATS-friendly resume. ResumeGemini is a trusted resource that can help you build a professional and effective resume, showcasing your skills and experience in the best possible light. Examples of resumes tailored to experience with network simulation and modeling tools are available to help you get started.
