Are you ready to stand out in your next interview? Understanding and preparing for Network Analytics and Machine Learning interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Network Analytics and Machine Learning Interview

Q 1. Explain the difference between supervised and unsupervised machine learning in the context of network analysis.

In network analysis, both supervised and unsupervised machine learning techniques are powerful tools, but they differ significantly in their approach. Supervised learning uses labeled data – data where we already know the outcome we’re interested in. Think of it like teaching a child to identify different types of fruits: you show them apples, bananas, and oranges, labeling each one. The algorithm learns from these labeled examples and creates a model to predict the type of fruit given a new, unseen example. In network analysis, this could be used for intrusion detection, where labeled network traffic data (normal vs. malicious) trains a model to classify new traffic.

Unsupervised learning, on the other hand, works with unlabeled data. It’s like giving the child a basket of assorted fruits and asking them to group similar fruits together. The algorithm identifies patterns and structures in the data without prior knowledge of the outcomes. This is invaluable for tasks like community detection in social networks, where we identify groups of closely connected users without pre-defined groups. We let the algorithm uncover hidden relationships within the network data itself.

- Supervised Learning Examples in Network Analysis: Intrusion detection, link prediction, QoS prediction.

- Unsupervised Learning Examples in Network Analysis: Community detection, anomaly detection, network clustering.

Q 2. Describe common network data sources and their relevance in machine learning applications.

Network data sources are incredibly diverse, each providing unique insights for machine learning applications. Here are some common ones:

- Network Flow Data: This captures information about network traffic flows, including source and destination IP addresses, ports, protocols, packet counts, and bytes transferred. It’s crucial for tasks like anomaly detection and performance analysis. Think of it as a detailed record of every conversation happening on your network.

- Network Topology Data: Describes the structure of the network, including devices (routers, switches, servers), their connections, and their characteristics. This helps us understand network design and identify potential bottlenecks.

- Log Data: Includes events from various network devices and applications, such as authentication attempts, error messages, and security alerts. This data is vital for security monitoring and troubleshooting.

- System Monitoring Data: This involves CPU usage, memory consumption, disk I/O, and other performance metrics from network devices. Machine learning can predict potential failures based on these metrics.

- Social Network Data: Representing relationships between users in online platforms, this data is crucial for understanding community structures, influence spread, and information dissemination.

The relevance of these data sources hinges on the specific machine learning task. For instance, intrusion detection relies heavily on network flow data and log data, while predicting network failures uses system monitoring data and topology data.

Q 3. How would you handle missing data in a network dataset?

Missing data is a common challenge in network analysis. Ignoring it can lead to biased results and inaccurate models. Here’s a multi-pronged approach:

- Deletion: Simply remove data points with missing values. This is the simplest but can lead to significant data loss if missing data is not random.

- Imputation: Replace missing values with estimated values. This can be done using various methods:

  - Mean/Median/Mode Imputation: Replace with the average, median, or mode of the non-missing values. Simple but can distort the distribution.

  - K-Nearest Neighbors (KNN) Imputation: Estimate missing values based on the values of similar data points. More sophisticated than mean/median/mode.

  - Multiple Imputation: Create multiple plausible imputed datasets and analyze each one separately, combining the results.

  - Model-based imputation: Use a predictive model (e.g., regression) to predict the missing values based on other features.

The best strategy depends on the type of missing data (missing completely at random, missing at random, missing not at random) and the amount of missing data. For example, if missing data is minimal and appears random, simple deletion or mean imputation might suffice. However, for significant or non-random missingness, more advanced imputation techniques like KNN or multiple imputation are necessary. It is also crucial to document the imputation method used and evaluate its impact on the analysis.
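As a rough illustration of the two simplest options above, the following sketch applies deletion and median imputation to a handful of flow records. The records and field names (`bytes`, `latency_ms`) are made up for the example, and `None` is assumed to mark a missing value.

```python
from statistics import median

# Hypothetical flow records; None marks a missing measurement.
flows = [
    {"bytes": 1200, "latency_ms": 10.0},
    {"bytes": 800, "latency_ms": None},
    {"bytes": None, "latency_ms": 14.0},
    {"bytes": 1000, "latency_ms": 12.0},
]

def drop_incomplete(records):
    """Deletion: keep only records with no missing values."""
    return [r for r in records if all(v is not None for v in r.values())]

def impute_median(records):
    """Median imputation: fill each gap with the median of that column."""
    columns = list(records[0].keys())
    medians = {
        c: median(r[c] for r in records if r[c] is not None) for c in columns
    }
    return [
        {c: (r[c] if r[c] is not None else medians[c]) for c in columns}
        for r in records
    ]

complete = drop_incomplete(flows)   # loses half of this tiny dataset
imputed = impute_median(flows)      # keeps every record
```

Deletion halves this small dataset, while imputation keeps all four records at the cost of inventing two values, which is the trade-off described above.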

Q 4. What are some common network visualization techniques and their applications?

Network visualization is crucial for understanding complex network structures and gaining insights from data. Here are some common techniques:

- Node-link diagrams: Represent nodes (entities) and links (relationships) visually. Variations exist, such as force-directed layouts (nodes repel and attract based on connections) and hierarchical layouts (organizing nodes in a tree-like structure). These are great for understanding network topology and community structures.

- Matrix representations: Show relationships as a matrix, where rows and columns represent nodes, and cells indicate the presence or strength of connections. Useful for identifying dense subgraphs or patterns.

- Heatmaps: Visualize data using color gradients, useful for displaying correlation matrices or other numerical data related to nodes or edges.

- Sankey diagrams: Illustrate flows of data or information between nodes, useful for tracking traffic patterns or information dissemination.

Applications: Analyzing social networks, visualizing website traffic, identifying vulnerabilities in network infrastructure, understanding information spread on social media platforms. Choosing the right technique depends on the data and the specific insights we want to obtain.
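The matrix representation mentioned above is easy to build by hand. Here is a minimal sketch, assuming a small undirected network given as an edge list; the node names and the `render` helper are hypothetical.

```python
# Hypothetical undirected edge list between four devices.
nodes = ["A", "B", "C", "D"]
edges = [("A", "B"), ("A", "C"), ("B", "C"), ("C", "D")]

index = {name: i for i, name in enumerate(nodes)}
matrix = [[0] * len(nodes) for _ in nodes]
for u, v in edges:
    matrix[index[u]][index[v]] = 1
    matrix[index[v]][index[u]] = 1  # undirected: mirror the entry

def render(matrix, labels):
    """Return the matrix as text, one row per node."""
    header = "  " + " ".join(labels)
    rows = [
        f"{label} " + " ".join(str(x) for x in row)
        for label, row in zip(labels, matrix)
    ]
    return "\n".join([header] + rows)

print(render(matrix, nodes))
```

A block of ones clustered around the diagonal (here A, B, and C) is the kind of dense subgraph a matrix view makes visible.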

Q 5. Explain various network topology types and their impact on data analysis.

Network topology significantly impacts data analysis. Different topologies influence how data flows and how easily we can detect patterns and anomalies.

- Star Topology: All nodes connect to a central node (e.g., a hub or switch). Simple to manage, but a failure in the central node brings down the entire network.

- Bus Topology: All nodes connect to a single shared cable (the backbone). Cheap and easy to implement, but the backbone is a single point of failure, and performance degrades as more nodes contend for it.

- Ring Topology: Nodes connect to form a closed loop. Data travels in one direction, which can lead to bottlenecks. Failure in one node can severely impact the entire ring.

- Mesh Topology: Nodes connect to multiple other nodes, creating redundancy and fault tolerance. More complex and expensive but provides high reliability.

- Tree Topology: Hierarchical structure resembling a tree, often used in larger networks. Data flows along branches, often towards the root. Provides efficient organization but can have single points of failure at higher levels.

Impact on data analysis: The topology influences how algorithms need to be adapted. For example, in a tree topology there is exactly one path between any two nodes, so path finding is trivial, whereas a mesh offers many alternative paths and calls for a general shortest-path algorithm such as Dijkstra’s. Anomaly detection can be simpler in topologies with little redundancy (star, bus), where traffic follows predictable paths, and harder in a mesh, where traffic between the same pair of nodes can legitimately take several routes.
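To make the fault-tolerance difference concrete, this sketch builds a star and a full mesh and checks whether the remaining nodes stay connected after one node fails. The helper names are hypothetical, and links are assumed to be bidirectional.

```python
from collections import deque

def star(n):
    """Node 0 is the hub; nodes 1..n-1 are leaves."""
    adj = {i: set() for i in range(n)}
    for leaf in range(1, n):
        adj[0].add(leaf)
        adj[leaf].add(0)
    return adj

def full_mesh(n):
    """Every node links to every other node."""
    return {i: {j for j in range(n) if j != i} for i in range(n)}

def survives_failure(adj, failed):
    """True if the remaining nodes can still all reach each other."""
    remaining = [n for n in adj if n != failed]
    if not remaining:
        return True
    seen = {remaining[0]}
    queue = deque([remaining[0]])
    while queue:  # breadth-first search that skips the failed node
        node = queue.popleft()
        for nxt in adj[node]:
            if nxt != failed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return len(seen) == len(remaining)

hub_failure_ok = survives_failure(star(5), failed=0)
leaf_failure_ok = survives_failure(star(5), failed=3)
mesh_failure_ok = all(survives_failure(full_mesh(5), k) for k in range(5))
```

Losing the hub partitions the star, while the mesh tolerates the loss of any single node.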

Q 6. How would you use machine learning to detect anomalies in network traffic?

Machine learning excels at detecting anomalies in network traffic. Several techniques can be used:

- Supervised Learning: If you have labeled data (normal vs. anomalous traffic), you can train a classifier (e.g., Support Vector Machines (SVM), Random Forests, Neural Networks) to identify new anomalous traffic. This requires a well-labeled dataset, which can be a challenge in practice.

- Unsupervised Learning: For unlabeled data, techniques like clustering (k-means, DBSCAN) can group similar network flows, with outliers potentially representing anomalies. One-class SVM can also be used to model ‘normal’ traffic and detect deviations. Autoencoders, a type of neural network, are also very effective in detecting subtle anomalies.

- Statistical Methods: Simple statistical methods like calculating the standard deviation of certain network metrics (e.g., packet size, inter-arrival time) can identify values significantly deviating from the norm.

Example using autoencoders: An autoencoder is trained to learn a compressed representation of normal traffic. When presented with anomalous traffic, the reconstruction error is high, which flags the anomaly. Because normal traffic drifts over time, the model needs periodic retraining on recent data to stay accurate.
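The statistical approach listed above is the easiest one to sketch. The example below assumes a baseline window of packet sizes that is known to be normal, then scores new observations by how many standard deviations they sit from the baseline mean. The data and the threshold of 3 are illustrative assumptions, not recommended values.

```python
from statistics import mean, stdev

def fit_baseline(values):
    """Summarize normal traffic by its mean and sample standard deviation."""
    return mean(values), stdev(values)

def flag_anomalies(observations, mu, sigma, threshold=3.0):
    """Return indices of observations more than `threshold` sigmas from mu."""
    if sigma == 0:
        return [i for i, v in enumerate(observations) if v != mu]
    return [
        i for i, v in enumerate(observations)
        if abs(v - mu) / sigma > threshold
    ]

# Hypothetical packet sizes (bytes) observed during normal operation.
baseline = [500, 520, 480, 510, 490, 505, 495, 515]
mu, sigma = fit_baseline(baseline)

# New observations to score against the baseline.
new_packets = [505, 1400, 498]
anomalies = flag_anomalies(new_packets, mu, sigma)
```

Fitting the statistics on a separate baseline, rather than on the data being scored, keeps a single large outlier from inflating the standard deviation and hiding itself.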

Q 7. Describe different methods for predicting network failures using machine learning.

Predicting network failures is critical for proactive maintenance. Machine learning offers powerful tools:

- Time series analysis: Network performance metrics (CPU utilization, memory usage, packet loss) are time-series data. Algorithms like ARIMA, LSTM (Long Short-Term Memory) neural networks, or Prophet can model these time series and predict future values. Forecasts that cross a known-bad threshold (for example, sustained high CPU utilization or rising packet loss) could indicate an impending failure.

- Classification: Based on historical data of network failures and relevant features (e.g., performance metrics, configuration changes), train a classifier (e.g., logistic regression, decision trees, random forests) to predict the probability of failure.

- Survival analysis: This statistical method focuses on the time until an event occurs (e.g., network failure). It’s useful for predicting the remaining lifetime of network devices or components.

Example using LSTM networks: LSTM networks are adept at handling long-term dependencies in time-series data, making them suitable for predicting failures which might be influenced by events that happened long before the failure.

It’s essential to select appropriate features that are strongly indicative of failures. Feature engineering plays a vital role in the accuracy of failure prediction models. Regular model retraining and evaluation are also crucial to maintain accuracy and adapt to changing network conditions.
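The forecast-then-alert pattern behind the time-series approach can be shown with a much simpler model than ARIMA or an LSTM. The sketch below swaps in simple exponential smoothing as a stand-in forecaster; the CPU readings, the smoothing factor, and the alert threshold are all assumptions made for illustration.

```python
def exponential_smoothing(series, alpha=0.5):
    """Return the smoothed level after the last observation.

    With simple exponential smoothing, this level is also the
    one-step-ahead forecast.
    """
    level = series[0]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
    return level

# Hypothetical CPU utilization (%) sampled at regular intervals.
cpu_percent = [40, 42, 45, 50, 58, 67, 78, 90]

ALERT_THRESHOLD = 75.0  # assumed threshold, tune per device
forecast = exponential_smoothing(cpu_percent, alpha=0.5)
alert = forecast >= ALERT_THRESHOLD
```

A production system would replace `exponential_smoothing` with a model that captures trend and seasonality, but the surrounding logic (forecast, compare to a threshold, raise an alert) stays the same.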

Q 8. What are the ethical considerations of using machine learning in network security?

Ethical considerations in using machine learning (ML) for network security are paramount. We must be mindful of potential biases in the data used to train models, which can lead to discriminatory outcomes. For instance, a model trained primarily on data from one geographic region might perform poorly or unfairly on data from another, potentially misidentifying legitimate traffic from underrepresented regions as malicious.

Privacy is another critical concern. ML models often process sensitive network data, requiring robust anonymization and data protection measures to comply with regulations like GDPR. Transparency and explainability are also vital. We need to understand how a model arrives at its conclusions, especially in security applications where decisions have significant consequences. A ‘black box’ model that flags suspicious activity without providing insights into its reasoning could hinder investigations and potentially lead to false positives, causing disruptions to legitimate services.

Finally, accountability is key. Who is responsible when an ML system makes a mistake? Clearly defined roles and responsibilities are necessary to address potential harm caused by faulty or biased systems. Continuous monitoring and auditing are also essential to detect and mitigate ethical issues proactively.

Q 9. Explain your understanding of graph databases and their applications in network analysis.

Graph databases excel at representing relationships between data points, making them ideal for network analysis where the focus is on connections. Unlike relational databases, which use tables and rows, graph databases use nodes (representing entities like computers, users, or applications) and edges (representing relationships like communication flows or data transfers).

In network analysis, we can model a network’s topology as a graph, where nodes are devices and edges represent connections. This allows us to visualize network architecture, analyze traffic patterns, identify vulnerabilities, and detect anomalies. For instance, a graph database can be used to pinpoint a compromised device by analyzing the unusually high number of connections emanating from it or the nature of the data being transferred. Furthermore, graph databases are efficient at traversing and querying relationships. Multi-hop queries, such as finding every device reachable from a specific server within three hops, are typically simpler to express and faster to run in a graph database than with repeated joins in a relational one.

Examples of graph databases used for network analysis include Neo4j and Amazon Neptune. These databases offer powerful querying languages (like Cypher in Neo4j) that facilitate complex network analyses.

Q 10. How do you evaluate the performance of a machine learning model for network data?

Evaluating the performance of an ML model for network data requires a multifaceted approach. It’s not enough to simply look at accuracy. We must consider the context of network security, which emphasizes minimizing both false positives (flagging benign activity as malicious) and false negatives (missing actual malicious activity).

Common metrics include:

- Precision: Out of all the events flagged as malicious, what percentage were actually malicious?

- Recall (Sensitivity): Out of all the actual malicious events, what percentage did the model correctly identify?

- F1-score: The harmonic mean of precision and recall, providing a balanced measure of performance.

- AUC-ROC (Area Under the Receiver Operating Characteristic Curve): Measures the model’s ability to distinguish between malicious and benign activity across different thresholds.

- Accuracy: While important, accuracy alone can be misleading with imbalanced datasets (more benign than malicious events).

Beyond these metrics, it’s crucial to perform thorough testing on unseen data (a test set separate from the training data) to assess the model’s generalization ability. We also need to consider the model’s explainability. Can we understand why it made certain classifications? This helps build confidence and trust in the model’s decisions.
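The metrics above follow directly from the confusion-matrix counts. Here is a small sketch on made-up labels (1 = malicious, 0 = benign) that also shows why accuracy alone misleads on imbalanced data; the function name and data are hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Compute precision, recall, F1, and accuracy for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall else 0.0
    )
    accuracy = (tp + tn) / len(pairs)
    return {
        "precision": precision, "recall": recall,
        "f1": f1, "accuracy": accuracy,
    }

# Ten hypothetical events, two of them malicious.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_model = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]   # one hit, one miss, one false alarm
y_naive = [0] * 10                          # always predicts "benign"

model_scores = classification_metrics(y_true, y_model)
naive_scores = classification_metrics(y_true, y_naive)
```

Both predictors reach the same accuracy on this data, yet the naive one has zero recall: it never catches an attack.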

Q 11. What are some common performance metrics used to assess network performance?

Network performance is evaluated using several metrics, focusing on different aspects of network health and efficiency.

- Latency: The time delay in transmitting data between two points. High latency can indicate congestion or other network problems.

- Throughput: The amount of data transmitted per unit of time. Low throughput can be a sign of bottlenecks.

- Packet Loss: The percentage of data packets that are lost during transmission. High packet loss indicates unreliable connections.

- Jitter: Variation in latency, which can impact the quality of real-time applications like video conferencing.

- Bandwidth: The maximum rate of data transmission across a network link.

- CPU Utilization: The percentage of CPU resources used by network devices. High utilization can indicate overload.

- Memory Utilization: Similar to CPU utilization, but for memory resources.

These metrics are often monitored using network monitoring tools like Nagios, Zabbix, or PRTG. Analyzing trends in these metrics helps identify performance issues and predict potential problems before they impact users.
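Latency, jitter, and packet loss can all be derived from a series of probe results. The sketch below assumes round-trip times in milliseconds with `None` for a lost probe, and uses one simple definition of jitter (the mean absolute difference between consecutive received RTTs); monitoring tools may define it differently.

```python
from statistics import mean

def summarize_probes(rtts_ms):
    """Summarize probe results: one RTT per probe, None if the probe was lost."""
    received = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms)
    latency = mean(received) if received else None
    # Jitter as the mean absolute change between consecutive received RTTs.
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    jitter = mean(diffs) if diffs else 0.0
    return {
        "latency_ms": latency,
        "jitter_ms": jitter,
        "packet_loss_pct": loss_pct,
    }

# Hypothetical results of five probes; the third was lost.
stats = summarize_probes([20.0, 22.0, None, 21.0, 25.0])
```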

Q 12. Discuss the challenges of applying machine learning to large-scale network datasets.

Applying ML to large-scale network datasets presents several challenges:

- Data Volume: The sheer size of network data requires efficient storage and processing techniques. Distributed computing frameworks like Spark or Hadoop are often necessary.

- Data Velocity: Network data arrives at high speed, demanding real-time or near real-time processing capabilities. This often calls for streaming platforms like Kafka.

- Data Variety: Network data comes in various formats (e.g., flow records, packet captures). Data cleaning and preprocessing become crucial steps to ensure model compatibility.

- Computational Resources: Training complex ML models on large datasets requires significant computational power, potentially involving specialized hardware like GPUs.

- Model Complexity: Finding the right balance between model complexity and performance is crucial. Overly complex models may overfit the training data and perform poorly on unseen data.

- Feature Engineering: Extracting meaningful features from raw network data is crucial for model performance, requiring domain expertise.

Addressing these challenges often involves a combination of techniques such as data sampling, feature selection, model optimization, and distributed computing frameworks.

Q 13. How would you handle imbalanced datasets in network anomaly detection?

Imbalanced datasets, where one class (e.g., malicious activity) is significantly underrepresented compared to another (e.g., benign activity), are a common problem in network anomaly detection. Standard ML models often perform poorly on the minority class (malicious activity), leading to many false negatives.

Here are some techniques to handle imbalanced datasets:

- Resampling Techniques: Oversampling the minority class (by duplicating existing samples or generating synthetic ones, e.g., with SMOTE) or undersampling the majority class can balance the dataset. However, oversampling can lead to overfitting, and undersampling can discard valuable information. Resample only the training data, never the test set.

- Cost-Sensitive Learning: Assign higher weights or penalties to misclassifications of the minority class during model training. This encourages the model to pay more attention to the rare events.

- Anomaly Detection Algorithms: Algorithms specifically designed for anomaly detection, such as One-Class SVM or Isolation Forest, can be particularly effective with imbalanced datasets since they focus on learning the characteristics of the majority class (normal behavior).

- Ensemble Methods: Combining multiple models trained on different resampled versions of the dataset can improve overall performance.

The best approach depends on the specific dataset and the nature of the anomaly. Often, a combination of these techniques is employed for optimal results.
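Two of the techniques above are simple enough to sketch without a library: inverse-frequency class weights for cost-sensitive learning, and random oversampling by duplication. The sample data, labels, and function names are illustrative assumptions.

```python
import random
from collections import Counter

def class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n = len(labels)
    return {cls: n / (len(counts) * k) for cls, k in counts.items()}

def random_oversample(samples, labels, seed=0):
    """Duplicate random minority samples until every class matches the largest."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_x, out_y = list(samples), list(labels)
    for cls, count in counts.items():
        pool = [x for x, y in zip(samples, labels) if y == cls]
        for _ in range(target - count):
            out_x.append(rng.choice(pool))
            out_y.append(cls)
    return out_x, out_y

# Hypothetical training set: 8 benign flows, 2 malicious ones.
samples = list(range(10))
labels = ["benign"] * 8 + ["malicious"] * 2

weights = class_weights(labels)
balanced_x, balanced_y = random_oversample(samples, labels)
balanced_counts = Counter(balanced_y)
```

The weights can be passed to a learner that supports per-class penalties, while the oversampled set can be fed to any learner unchanged.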

Q 14. What are some techniques to reduce dimensionality in network data?

Reducing dimensionality in network data is crucial for improving model performance, reducing computational costs, and enhancing interpretability. High-dimensional data can lead to the ‘curse of dimensionality’, where models become less efficient and more prone to overfitting.

Several techniques can be used:

- Principal Component Analysis (PCA): A linear transformation that projects the data onto a lower-dimensional subspace while preserving as much variance as possible. It’s a widely used method for dimensionality reduction, but it assumes linear relationships in the data.

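- t-distributed Stochastic Neighbor Embedding (t-SNE): A nonlinear dimensionality reduction technique that’s particularly useful for visualizing high-dimensional data in two or three dimensions. It preserves local neighborhoods rather than global distances and is computationally expensive on large datasets, so it is better suited to exploration than to producing input features for a model.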

- Autoencoders: Neural network architectures trained to reconstruct the input data. By using a bottleneck layer with fewer neurons than the input layer, the autoencoder learns a lower-dimensional representation of the data. This is particularly useful for capturing non-linear relationships.

- Feature Selection: Instead of transforming the data, this involves selecting the most relevant features and discarding the less important ones. This can be done using filter methods (e.g., correlation analysis), wrapper methods (e.g., recursive feature elimination), or embedded methods (e.g., L1 regularization).

The choice of technique depends on the characteristics of the data and the specific goals of the analysis. Often, a combination of techniques provides the best results.
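As one concrete instance of a filter method, the sketch below keeps only the features whose Pearson correlation with the label exceeds a cutoff. The feature names, values, and the 0.5 cutoff are assumptions for the example, and correlation only captures linear relationships.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient; 0.0 if either input is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)

def select_features(columns, target, min_abs_corr=0.5):
    """Keep features whose absolute correlation with the target is high enough."""
    return [
        name for name, values in columns.items()
        if abs(pearson(values, target)) >= min_abs_corr
    ]

# Hypothetical per-flow features and a label (1 = anomalous).
columns = {
    "bytes": [100, 200, 300, 400, 5000, 6000],
    "ttl": [64, 64, 64, 64, 64, 64],        # constant, carries no signal
    "port_parity": [0, 1, 0, 1, 0, 1],      # unrelated to the label
}
target = [0, 0, 0, 0, 1, 1]

selected = select_features(columns, target)
```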

Q 15. Describe different feature engineering techniques for network data.

Feature engineering for network data involves transforming raw network data into informative features that machine learning models can effectively utilize. This is crucial because raw data like adjacency matrices or edge lists aren’t directly interpretable by many algorithms. We aim to capture essential network properties. Techniques include:

- Node Features: These describe individual nodes. Examples include degree (number of connections), betweenness centrality (how often a node lies on shortest paths), eigenvector centrality (influence within the network), clustering coefficient (density of connections around a node), and PageRank (importance based on inbound links). We can also incorporate external data like user demographics or device characteristics.

- Edge Features: These describe relationships between nodes. Examples include edge weight (representing connection strength or traffic volume), time stamps (indicating when a connection was established), type of connection (e.g., TCP, UDP), and communication latency.

- Graph-Level Features: These describe the overall network structure. Examples include network density (proportion of existing links to all possible links), diameter (longest shortest path between any two nodes), average path length, and network motifs (recurring subgraphs).

- Structural Features derived from Subgraphs: Analyzing local structures around nodes or communities unveils valuable information. For instance, we can calculate the number of triangles a node participates in, reflecting the node’s embeddedness in densely connected communities.

- Time-Series Features: For dynamic networks, capturing temporal changes is key. We can derive features like the rate of change in node degree, edge creation/deletion frequencies, and the evolution of centrality measures over time.

For example, in a social network, we might engineer features like the number of followers a user has (node feature), the frequency of interactions between users (edge feature), and the overall network clustering coefficient (graph-level feature). Effective feature engineering significantly improves the performance and interpretability of machine learning models applied to network data.
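Several of the node-level and graph-level features above can be computed directly from an edge list. This is a minimal sketch for a small undirected graph; the edge list and helper names are hypothetical, and a library such as NetworkX would normally be used for anything larger.

```python
from itertools import combinations

def build_adjacency(edges):
    """Turn an undirected edge list into a dict of neighbor sets."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj

def node_features(adj, node):
    """Degree, triangle count, and local clustering coefficient of a node."""
    neighbors = adj[node]
    k = len(neighbors)
    # A triangle exists for every pair of neighbors that are linked themselves.
    triangles = sum(1 for a, b in combinations(neighbors, 2) if b in adj[a])
    clustering = 2 * triangles / (k * (k - 1)) if k > 1 else 0.0
    return {"degree": k, "triangles": triangles, "clustering": clustering}

def density(adj):
    """Graph-level feature: existing links divided by all possible links."""
    n = len(adj)
    edge_count = sum(len(nbrs) for nbrs in adj.values()) // 2
    return 2 * edge_count / (n * (n - 1)) if n > 1 else 0.0

edges = [("A", "B"), ("A", "C"), ("B", "C"), ("C", "D")]
adj = build_adjacency(edges)
features = {node: node_features(adj, node) for node in sorted(adj)}
graph_density = density(adj)
```

The resulting `features` dictionary can be flattened into one row per node and passed to a model alongside any external attributes.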

Q 16. Explain the concept of network flow analysis and its applications.

Network flow analysis examines the movement of data or entities through a network. It’s like tracking the flow of traffic on a highway system. We analyze patterns of data transmission, communication paths, and resource utilization. This involves studying the volume, direction, and timing of flows.

Applications are vast:

- Network Security: Identifying anomalous traffic patterns indicative of intrusions or attacks. For example, detecting unusually high traffic volume to a specific server could signal a DDoS attack.

- Traffic Engineering: Optimizing network routing to reduce congestion and improve performance. By analyzing flow patterns, network operators can identify bottlenecks and adjust routing protocols.

- Social Network Analysis: Understanding information diffusion, influence spread, and community structures. Analyzing communication patterns reveals how information flows through social groups.

- Supply Chain Management: Tracking goods movement and optimizing logistics. Analyzing flows in a supply chain reveals bottlenecks and areas for improvement.

- Financial Networks: Detecting fraudulent transactions or systemic risks by monitoring money flows.

Protocols like NetFlow and sFlow are commonly used to export flow data from routers and switches to a collector. Graph algorithms such as shortest-path and maximum-flow computations can then be applied to the collected data.
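A first pass over flow records is often a plain aggregation. The sketch below sums bytes per destination and flags any destination that receives more than half of all traffic, loosely matching the DDoS example above. The record layout `(source, destination, bytes)`, the addresses (taken from documentation ranges), and the 50% cutoff are assumptions.

```python
from collections import defaultdict

def bytes_per_destination(flows):
    """Sum transferred bytes for each destination address."""
    totals = defaultdict(int)
    for _src, dst, nbytes in flows:
        totals[dst] += nbytes
    return dict(totals)

def heavy_destinations(flows, share=0.5):
    """Destinations that receive more than `share` of all bytes."""
    totals = bytes_per_destination(flows)
    grand_total = sum(totals.values())
    return sorted(d for d, b in totals.items() if b / grand_total > share)

# Hypothetical flow records: (source, destination, bytes).
flows = [
    ("198.51.100.1", "192.0.2.10", 5000),
    ("198.51.100.2", "192.0.2.10", 7000),
    ("198.51.100.3", "192.0.2.10", 6000),
    ("198.51.100.1", "192.0.2.20", 1500),
    ("198.51.100.4", "192.0.2.30", 500),
]

totals = bytes_per_destination(flows)
suspects = heavy_destinations(flows)
```

A fixed share is a crude rule; in practice the cutoff would come from a baseline of what each server normally receives.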

Q 17. How can you apply machine learning to optimize network routing?

Machine learning can significantly enhance network routing optimization by learning from historical traffic patterns and adapting to dynamic network conditions. Instead of relying on static routing tables, we can use ML models to predict future traffic demands and proactively adjust routes. Here’s how:

- Predictive Modeling: Time series models (ARIMA, LSTM) can forecast future traffic loads on different links. This information can be used to preemptively adjust routing tables, ensuring sufficient bandwidth on heavily used paths.

- Reinforcement Learning: This powerful technique allows the network to learn optimal routing policies through trial and error. The network acts as an agent, choosing routes, receiving rewards (e.g., reduced latency, improved throughput), and updating its routing strategies based on these rewards. This adapts dynamically to changing network conditions.

- Clustering and Anomaly Detection: Machine learning can identify groups of similar traffic patterns. This helps in better understanding network behavior and detecting anomalies that may disrupt traffic flow. Anomaly detection alerts network administrators to potential problems.

For example, an LSTM model might be trained on past traffic data to predict traffic loads for the next hour. This prediction can then be used to adjust the routing protocol (like OSPF or BGP) to optimize traffic flow. Reinforcement learning could be used to train a system that dynamically adjusts routes based on real-time feedback from the network, adapting to sudden traffic surges or link failures.
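The step from a traffic forecast to a routing decision can be sketched with Dijkstra's algorithm over link costs that grow with predicted utilization. Everything specific here is an assumption for illustration: the four-node topology, the cost formula `base / (1 - utilization)`, and the forecast values, which would come from a trained model in practice. Real protocols such as OSPF compute paths from configured link costs, so this only mirrors the idea.

```python
import heapq

def build_graph(base_cost, predicted_util):
    """Scale each link's base cost by its predicted utilization (0.0 to 1.0)."""
    graph = {}
    for (u, v), cost in base_cost.items():
        util = min(predicted_util.get((u, v), 0.0), 0.99)  # avoid dividing by 0
        graph.setdefault(u, {})[v] = cost / (1.0 - util)
    return graph

def shortest_path(graph, source, target):
    """Dijkstra over non-negative link costs; returns (cost, path)."""
    queue = [(0.0, source, [source])]
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for nxt, weight in graph.get(node, {}).items():
            if nxt not in settled:
                heapq.heappush(queue, (cost + weight, nxt, path + [nxt]))
    return float("inf"), []

# Hypothetical directed links with static base costs.
base_cost = {("A", "B"): 1.0, ("B", "D"): 1.0, ("A", "C"): 2.0, ("C", "D"): 2.0}

# Forecasts: idle network vs. a predicted surge on link B -> D.
off_peak_cost, off_peak_path = shortest_path(build_graph(base_cost, {}), "A", "D")
peak_cost, peak_path = shortest_path(
    build_graph(base_cost, {("B", "D"): 0.9}), "A", "D"
)
```

When the forecast shows link B to D nearly saturated, the cheaper path shifts from A, B, D to A, C, D before congestion actually occurs.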

Q 18. What are some common algorithms used for community detection in network analysis?

Community detection aims to identify groups of densely interconnected nodes in a network. Imagine finding clusters of close friends in a social network. Several algorithms excel at this:

- Louvain Algorithm: A very popular and efficient greedy algorithm that iteratively improves a community structure by optimizing a modularity score (a measure of the quality of the community structure). It’s known for its speed and ability to find high-quality communities even in large networks.

- Label Propagation Algorithm: A simple and fast algorithm that propagates labels (community assignments) through the network. Each node adopts the most frequent label among its neighbors, leading to the formation of clusters.

- Girvan-Newman Algorithm: This algorithm iteratively removes the edge with the highest edge betweenness and recomputes betweenness after each removal. Removing these bridging edges gradually breaks the network into distinct communities. It’s far more computationally expensive than Louvain, so it is practical mainly for small networks, but it often produces high-quality results.

- Infomap Algorithm: This algorithm uses information theory principles to map the network into communities. It excels at finding communities with high compression efficiency, which often aligns with natural community structures.

The choice of algorithm depends on the network size, desired accuracy, and computational resources. Louvain is often a good starting point for its speed and effectiveness. For very large networks, label propagation offers a fast solution, while Girvan-Newman might be preferred for smaller networks where its computational cost is acceptable and a hierarchical view of how the network splits is useful. The Infomap algorithm presents a unique perspective based on information theory that may reveal community structures not identified by other methods.
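The modularity score that Louvain optimizes is straightforward to compute for a given partition. The sketch below uses the per-community form for an undirected, unweighted graph: for each community, the fraction of edges that fall inside it minus the squared fraction of edge endpoints attached to it. The graph (two triangles joined by one bridge) and the partitions are made up for the example.

```python
def modularity(edges, community_of):
    """Modularity of a partition of an undirected, unweighted graph."""
    m = len(edges)
    internal = {}     # edges with both endpoints in the community
    degree_sum = {}   # total degree of the community's nodes
    for u, v in edges:
        cu, cv = community_of[u], community_of[v]
        degree_sum[cu] = degree_sum.get(cu, 0) + 1
        degree_sum[cv] = degree_sum.get(cv, 0) + 1
        if cu == cv:
            internal[cu] = internal.get(cu, 0) + 1
    return sum(
        internal.get(c, 0) / m - (d / (2 * m)) ** 2
        for c, d in degree_sum.items()
    )

# Two triangles (1-2-3 and 4-5-6) joined by the bridge 3-4.
edges = [(1, 2), (1, 3), (2, 3), (4, 5), (4, 6), (5, 6), (3, 4)]

two_groups = {1: "a", 2: "a", 3: "a", 4: "b", 5: "b", 6: "b"}
one_group = {node: "all" for node in range(1, 7)}

q_two = modularity(edges, two_groups)
q_one = modularity(edges, one_group)
```

Splitting the graph at the bridge scores higher than lumping every node together, which is the signal a modularity-based algorithm follows when it moves nodes between communities.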

Q 19. Explain the difference between centrality measures in network analysis.

Centrality measures quantify the importance or influence of nodes within a network. Different measures capture different aspects of importance:

- Degree Centrality: The simplest measure, counting the number of direct connections a node has. Think of it as popularity – a node with many connections is highly central.

- Betweenness Centrality: Measures how often a node lies on the shortest paths between other nodes. High betweenness means a node controls information flow, akin to a gatekeeper.

- Closeness Centrality: Based on the inverse of the average shortest-path distance from a node to all other nodes in the network. A node with high closeness centrality can quickly reach other nodes, representing efficiency in communication.

- Eigenvector Centrality: Considers the importance of a node’s neighbors. A node is central if it is connected to other central nodes, highlighting influential nodes in interconnected communities.

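- PageRank: A variant of eigenvector centrality for directed networks. A node’s score comes from its inbound links, each source node splits its score among its outbound links, and a damping factor models random jumps. It was originally developed to rank web pages for search engines.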

For example, in a social network, a highly influential person might have high eigenvector centrality, while a person connecting disparate groups might have high betweenness centrality. The choice of centrality measure depends on what aspect of importance you want to capture. Often using a combination of centrality measures provides a more complete picture.
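Degree and closeness centrality can be computed with nothing more than a breadth-first search. This sketch assumes a small, connected, undirected graph given as neighbor sets; the graph is hypothetical, and both measures are normalized by the number of other nodes.

```python
from collections import deque

def bfs_distances(adj, source):
    """Shortest-path hop counts from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nxt in adj[node]:
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist

def degree_centrality(adj):
    """Fraction of the other nodes each node is directly linked to."""
    n = len(adj)
    return {node: len(nbrs) / (n - 1) for node, nbrs in adj.items()}

def closeness_centrality(adj):
    """Inverse of the average distance to all other nodes (connected graph)."""
    n = len(adj)
    result = {}
    for node in adj:
        total = sum(bfs_distances(adj, node).values())
        result[node] = (n - 1) / total if total else 0.0
    return result

# Hypothetical network: A - B - C, with D and E both attached to C.
adj = {
    "A": {"B"},
    "B": {"A", "C"},
    "C": {"B", "D", "E"},
    "D": {"C"},
    "E": {"C"},
}

degree = degree_centrality(adj)
closeness = closeness_centrality(adj)
most_central = max(closeness, key=closeness.get)
```

Node C ranks highest on both measures here; on larger graphs the two rankings often diverge, which is why comparing several measures is useful.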

Q 20. How would you use machine learning to improve network security?

Machine learning offers powerful tools to enhance network security. It can detect anomalies, predict attacks, and respond proactively:

- Intrusion Detection Systems (IDS): Machine learning algorithms (e.g., Support Vector Machines, Random Forests, Neural Networks) can be trained on network traffic data to identify malicious patterns. These systems learn to distinguish between normal and anomalous traffic, flagging suspicious activities for investigation.

- Anomaly Detection: Unsupervised learning techniques (e.g., clustering, autoencoders) can identify unusual network behaviors that deviate from established norms. This is useful for detecting zero-day attacks or previously unseen threats.

- Predictive Modeling: Time series analysis and other predictive models can forecast potential attack vectors or vulnerabilities, allowing for proactive mitigation strategies.

- Threat Intelligence Integration: Machine learning can integrate threat intelligence data (information about known attacks and vulnerabilities) to enhance its detection capabilities.

- Vulnerability Prediction: Machine learning models can analyze software code and network configurations to predict potential vulnerabilities before they are exploited.

For example, an autoencoder can be trained on normal network traffic. Deviations between the input and reconstructed data indicate anomalies, potentially suggesting an attack in progress. This approach can catch attacks that traditional signature-based IDS might miss.

Q 21. Describe your experience with various programming languages and tools used in network analytics.

My experience in network analytics spans several programming languages and tools. I’m highly proficient in Python, utilizing libraries like NetworkX, igraph, and scikit-learn for network analysis and machine learning. NetworkX provides powerful tools for graph manipulation and analysis, while igraph offers a highly optimized alternative, especially for large graphs. Scikit-learn provides a comprehensive suite of machine learning algorithms. I also have experience with R, particularly using packages like igraph and the caret package for statistical modeling.

For data visualization, I frequently use tools like Gephi and Cytoscape, which allow for interactive exploration of network structures. These tools are vital for interpreting results and communicating findings effectively. I’m familiar with various database technologies, including SQL and NoSQL databases, necessary for managing and querying large network datasets. Furthermore, I have experience with packet capture and analysis tools such as tcpdump (command line) and Wireshark (graphical) for working with raw network traffic data, and with cloud computing platforms like AWS and Azure for handling large-scale network analytics tasks.

Q 22. Explain your understanding of different network protocols and their relevance to data analysis.

Network protocols are the set of rules that govern communication between devices on a network. Understanding them is crucial for network analytics because the data we analyze originates from these protocols. Different protocols carry different types of information, and this information dictates the analysis techniques we employ.

- TCP/IP (Transmission Control Protocol/Internet Protocol): The foundational protocol suite of the internet. TCP provides reliable, ordered data delivery, while IP handles addressing and routing. Analyzing TCP/IP data allows us to understand application performance, identify bottlenecks, and detect anomalies. For example, analyzing TCP packet sizes and retransmission rates can reveal congestion issues.

- HTTP (Hypertext Transfer Protocol): Used for web communication. Analyzing HTTP logs allows us to understand website traffic patterns, user behavior, and identify potential security threats. For example, we can analyze the frequency of requests to specific pages to understand user engagement.

- DNS (Domain Name System): Translates domain names (like google.com) into IP addresses. Analyzing DNS queries can reveal information about users’ browsing habits and potential malware infections. A sudden spike in DNS queries to suspicious domains could indicate a compromised machine.

- SNMP (Simple Network Management Protocol): Used for managing network devices. Analyzing SNMP data provides insights into device performance, resource utilization, and potential failures. We can monitor CPU usage, memory consumption, and network interface statistics to predict potential issues.

In essence, each protocol provides a unique lens through which we can view network behavior. The choice of analysis techniques depends heavily on the specific protocol and the data it provides.
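As a small illustration of the TCP example above, the sketch below estimates a per-flow retransmission rate from parsed packet records. The record layout `(flow_id, seq_number)` is an assumption for this example, and counting a repeated sequence number as a retransmission is a deliberate simplification; real analyzers also consider payload length, ACKs, and keep-alives.

```python
from collections import defaultdict

def retransmission_rates(packets):
    """Return {flow_id: share of packets whose sequence number was already seen}.

    `packets` is an iterable of (flow_id, seq_number) tuples (assumed layout).
    """
    seen = defaultdict(set)
    totals = defaultdict(int)
    repeats = defaultdict(int)
    for flow_id, seq in packets:
        totals[flow_id] += 1
        if seq in seen[flow_id]:
            repeats[flow_id] += 1      # same sequence number sent again
        else:
            seen[flow_id].add(seq)
    return {flow: repeats[flow] / totals[flow] for flow in totals}

# Hypothetical capture: flow "a" resends sequence number 2 once.
sample = [("a", 1), ("a", 2), ("a", 2), ("a", 3), ("b", 1), ("b", 2)]
rates = retransmission_rates(sample)
```

A flow whose rate climbs well above the others is a reasonable first place to look for congestion or a lossy link.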

Q 23. How would you design a machine learning model to predict user behavior on a network?

Designing a machine learning model to predict user behavior on a network requires a multi-step process, starting with data collection and feature engineering.

- Data Collection: Gather relevant data from various sources like network logs (HTTP, DNS, etc.), user activity data, and device information. This could involve using tools like Wireshark, tcpdump, or dedicated network monitoring systems.

- Feature Engineering: Extract relevant features from the raw data. This might involve creating features such as the frequency of website visits, average session duration, types of websites visited, time of day of activity, location data, and the volume of data transferred. Feature scaling and transformation are also critical steps here.

- Model Selection: Choose an appropriate machine learning model based on the nature of the problem. For predicting user behavior, which is often a classification or regression problem, several models are suitable:

  - Recurrent Neural Networks (RNNs): Excellent for sequential data like user browsing history.

  - Random Forests or Gradient Boosting Machines (GBMs): Robust and effective for handling a large number of features.

  - Support Vector Machines (SVMs): Powerful for high-dimensional data.

- Training and Evaluation: Train the chosen model using a labeled dataset (e.g., users labeled as ‘high-engagement’ or ‘low-engagement’). Rigorously evaluate the model’s performance using appropriate metrics such as precision, recall, and F1-score (for classification) or RMSE and MAE (for regression). Cross-validation gives a more reliable estimate of how the model generalizes and helps detect overfitting.

- Deployment and Monitoring: Deploy the trained model to a production environment and continuously monitor its performance. Regular retraining and model updates are crucial to maintain accuracy as user behavior evolves.

For example, an RNN could predict which users are likely to click on a specific advertisement based on their past browsing patterns and demographics.
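The feature engineering step is often where most of the effort goes. Below is a minimal sketch that turns raw log records into per-user features such as request count, distinct domains, data volume, and average session duration. The record layout `(user, unix_ts, domain, bytes_sent)` and the 30-minute inactivity gap that ends a session are assumptions made for illustration.

```python
from collections import defaultdict

SESSION_GAP = 1800  # seconds of inactivity that closes a session (assumed value)

def user_features(records):
    """Aggregate (user, unix_ts, domain, bytes_sent) records into per-user features."""
    by_user = defaultdict(list)
    for user, ts, domain, nbytes in records:
        by_user[user].append((ts, domain, nbytes))

    features = {}
    for user, events in by_user.items():
        events.sort()  # order by timestamp
        sessions = [[events[0][0], events[0][0]]]  # [start, end] pairs
        for ts, _, _ in events[1:]:
            if ts - sessions[-1][1] > SESSION_GAP:
                sessions.append([ts, ts])   # long pause: start a new session
            else:
                sessions[-1][1] = ts        # extend the current session
        durations = [end - start for start, end in sessions]
        features[user] = {
            "requests": len(events),
            "distinct_domains": len({d for _, d, _ in events}),
            "total_bytes": sum(b for _, _, b in events),
            "sessions": len(sessions),
            "avg_session_seconds": sum(durations) / len(durations),
        }
    return features

# Hypothetical log records.
logs = [
    ("alice", 0, "a.com", 100),
    ("alice", 60, "b.com", 200),
    ("alice", 5000, "a.com", 50),
    ("bob", 10, "a.com", 10),
]
feats = user_features(logs)
```

The resulting dictionaries can be converted to a feature matrix and scaled before being passed to whichever model is selected.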

Q 24. Discuss your experience with cloud-based network analytics platforms.

I have extensive experience with cloud-based monitoring and network analytics services like AWS CloudTrail (API audit logging), Google Cloud’s Network Intelligence Center, and Azure Monitor. These platforms offer scalable and cost-effective solutions for analyzing large volumes of network and activity data. My experience includes:

- Data Ingestion and Processing: Using these platforms’ tools to ingest network logs, flow data, and other relevant information from various sources, and then processing this data using their built-in capabilities or custom scripts.

- Data Visualization and Reporting: Creating dashboards and reports to visualize network performance metrics, identify security threats, and monitor user behavior. This often involves using the platforms’ built-in visualization tools or integrating with third-party business intelligence platforms.

- Anomaly Detection: Leveraging the platforms’ built-in machine learning capabilities or integrating custom machine learning models to detect unusual network activity that may indicate security breaches or performance issues.

- Security Analysis: Utilizing these platforms to analyze network traffic for malicious activity, such as intrusion attempts, malware infections, and data exfiltration. This includes correlating events from different sources to identify complex attacks.

The scalability and flexibility of these cloud platforms are invaluable for handling the ever-increasing volume and complexity of network data. The ability to seamlessly integrate with other cloud services further enhances their utility in a modern network infrastructure.

Q 25. How can you apply time series analysis to network data?

Time series analysis is crucial for understanding network data’s temporal dynamics. Network traffic, device performance metrics, and security events all exhibit time-dependent patterns. Applying time series analysis allows us to identify trends, seasonality, and anomalies in network behavior.

- Trend Analysis: Identifying long-term patterns in network traffic, such as increasing data volume over time. This can inform capacity planning and network upgrades.

- Seasonality Detection: Recognizing recurring patterns within specific time intervals. For example, higher network traffic during peak business hours or specific days of the week. This helps optimize resource allocation and predict potential bottlenecks.

- Anomaly Detection: Identifying unusual deviations from the established patterns. This could signal security breaches, equipment failures, or other critical events requiring immediate attention.

Techniques like ARIMA models, Exponential Smoothing, and Prophet can be used. For example, we could use ARIMA to predict future network bandwidth requirements based on historical data, or Prophet to forecast daily network traffic, accounting for seasonality and trend.

Furthermore, techniques like change point detection can be particularly useful in identifying sudden shifts in network behavior, which could be indicative of a cyberattack or a hardware failure. These insights enable proactive network management and quicker responses to critical incidents.
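To make the anomaly detection idea concrete, here is a minimal sketch that tracks an exponentially weighted mean and variance of a traffic series and flags points that fall far outside the running baseline. It is a simple baseline rather than ARIMA or Prophet, and the smoothing factor, the threshold `k`, the warm-up length, and the sample data are all assumptions chosen for illustration.

```python
def ewma_anomalies(series, alpha=0.3, k=4.0, warmup=5):
    """Return indices whose value deviates more than k running std devs
    from an exponentially weighted moving average of earlier values."""
    mean = series[0]
    var = 0.0
    flagged = []
    for i, x in enumerate(series[1:], start=1):
        deviation = x - mean
        std = var ** 0.5
        if i >= warmup and abs(deviation) > k * std:
            flagged.append(i)
            continue  # keep the outlier out of the baseline
        # Standard exponentially weighted updates for mean and variance.
        mean += alpha * deviation
        var = (1 - alpha) * (var + alpha * deviation ** 2)
    return flagged

# Hypothetical per-minute traffic in Mbps with one spike at index 8.
traffic = [100, 102, 98, 101, 99, 103, 97, 100, 250, 101, 99, 102]
spikes = ewma_anomalies(traffic)
```

Because flagged points do not update the baseline, a genuine and lasting shift in traffic level would keep being flagged, which is the situation where change point detection is the better tool.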

Q 26. Explain your understanding of different network optimization algorithms.

Network optimization algorithms aim to improve network performance by efficiently allocating resources and managing traffic flow. These algorithms address various aspects of network management, from routing to resource allocation.

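- Shortest Path Algorithms (e.g., Dijkstra’s algorithm, Bellman-Ford algorithm): These find the lowest-cost path between nodes in a network, where cost may be latency, hop count, or an administrative metric. Dijkstra’s algorithm requires non-negative edge weights, while Bellman-Ford also handles negative weights. Both are fundamental to routing protocols: link-state protocols such as OSPF use Dijkstra’s algorithm, and distance-vector protocols such as RIP are based on Bellman-Ford.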

- Linear Programming: Used to optimize network resource allocation, such as bandwidth allocation or server placement. This is often used in conjunction with other techniques to find the most efficient use of resources.

- Genetic Algorithms: Evolutionary algorithms used to find near-optimal solutions to complex network optimization problems, especially when dealing with a large number of variables or constraints.

- Simulated Annealing: A probabilistic technique that explores the solution space to find a good solution, even if not the absolute best. This is beneficial when dealing with complex and non-convex optimization problems.

The choice of algorithm depends on the specific optimization problem. For instance, Dijkstra’s algorithm is efficient for finding shortest paths in a network with non-negative link costs, while genetic algorithms and simulated annealing are better suited to problems with many variables or constraints, where exact methods become too slow.
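As a concrete reference point, Dijkstra’s algorithm fits in a few lines with a priority queue. The sketch below assumes the network is given as a dictionary of neighbor-to-latency mappings with non-negative weights; the topology and latency values are made up for the example.

```python
import heapq

def dijkstra(graph, source):
    """Return the lowest total cost from `source` to every reachable node.

    `graph` maps node -> {neighbor: cost}; all costs must be non-negative.
    """
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        cost, node = heapq.heappop(heap)
        if cost > dist.get(node, float("inf")):
            continue  # stale queue entry, a cheaper path was already found
        for neighbor, weight in graph.get(node, {}).items():
            candidate = cost + weight
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(heap, (candidate, neighbor))
    return dist

# Hypothetical topology with link latencies in milliseconds.
topology = {
    "A": {"B": 5, "C": 1},
    "B": {"D": 1},
    "C": {"B": 2, "D": 7},
    "D": {},
}
latency_from_a = dijkstra(topology, "A")
```

Here the direct A to B link costs 5 ms, but routing through C brings it down to 3 ms, which is exactly the kind of decision a link-state routing protocol makes.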

Q 27. What are your experiences with deep learning techniques applied to network data?

Deep learning techniques, particularly Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), have shown great promise in analyzing network data. Their ability to automatically learn complex patterns from large datasets makes them particularly valuable.

- CNNs for Image-based Network Analysis: Representing network data, such as topology or traffic flows, as an image allows CNNs to identify patterns and anomalies that might be missed by traditional methods. For example, a CNN can be trained to identify malicious traffic patterns based on network flow visualizations.

- RNNs for Sequential Data Analysis: RNNs, especially LSTMs and GRUs, excel at analyzing sequential data like network traffic logs. They can capture temporal dependencies to predict future network behavior, detect anomalies, and identify intrusion attempts.

- Autoencoders for Anomaly Detection: Autoencoders can learn a compressed representation of normal network traffic. Inputs that the model reconstructs with high error deviate from this learned representation and are flagged as anomalies that could signal security breaches or performance problems.

However, the application of deep learning requires significant computational resources and expertise in model training and evaluation. Proper data preprocessing and feature engineering are also critical to ensure the model’s accuracy and efficiency.
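One preprocessing step that comes up with sequence models is turning a raw metric series into fixed-length input windows with a next-step target. The sketch below shows one simple way to do that with min-max scaling; the window length and the sample values are assumptions for illustration.

```python
def make_windows(series, window=3):
    """Scale a series to [0, 1] and build (inputs, targets) pairs where each
    input is `window` consecutive values and the target is the next value."""
    lo, hi = min(series), max(series)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a constant series
    scaled = [(v - lo) / span for v in series]
    count = len(scaled) - window
    inputs = [scaled[i:i + window] for i in range(count)]
    targets = [scaled[i + window] for i in range(count)]
    return inputs, targets

# Hypothetical traffic readings.
inputs, targets = make_windows([10, 20, 30, 40, 50], window=3)
```

In a real pipeline the scaling bounds would be computed on the training split only and reused for validation and test data, so that no information leaks from the future.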

Q 28. Discuss a challenging network analytics project you’ve worked on and how you overcame the challenges.

In a previous project for a large telecommunications company, we faced the challenge of detecting and mitigating Distributed Denial-of-Service (DDoS) attacks in real time. The sheer volume of malicious traffic made traditional methods ineffective.

Beyond volume, the attacks varied widely in nature. Filtering on IP addresses alone was insufficient because attackers often used botnets to mask their origins, and the attacks used a range of techniques to evade detection.

To overcome this, we implemented a multi-layered approach:

- Data Collection and Preprocessing: We collected network flow data from multiple points in the network using high-speed data collection tools and performed extensive data cleaning and preprocessing to handle noisy data and outliers.

- Anomaly Detection with Machine Learning: We trained a machine learning model (a combination of an autoencoder for anomaly detection and a Random Forest classifier for attack type classification) on historical network traffic data to identify deviations from normal behavior. The model was specifically designed to handle the high dimensionality and variability of DDoS attacks. Features included packet inter-arrival time, packet size distribution, and source-destination IP address pairs.

- Real-time Response System: We integrated the trained model into a real-time monitoring system that could automatically detect and mitigate attacks within milliseconds. This involved developing a system that could trigger automatic responses, such as rate limiting or blackholing, based on the model’s predictions.

- Continuous Monitoring and Refinement: We established a continuous monitoring system to track the model’s performance and make necessary adjustments. Regular retraining of the model with new data was crucial to maintain its effectiveness against evolving attack techniques.

This multi-faceted approach significantly improved the company’s ability to detect and mitigate DDoS attacks, minimizing service disruptions and safeguarding their network infrastructure.
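The features mentioned above can be computed directly from flow or packet records. The following sketch is illustrative only and not the code from that project: it groups packets by source-destination pair and derives inter-arrival and size statistics, assuming records of the form `(timestamp_s, src_ip, dst_ip, size_bytes)`.

```python
import statistics
from collections import defaultdict

def flow_features(packets):
    """Per (src, dst) pair: packet count, mean inter-arrival gap, size mean and spread."""
    flows = defaultdict(list)
    for ts, src, dst, size in packets:
        flows[(src, dst)].append((ts, size))

    features = {}
    for pair, pkts in flows.items():
        pkts.sort()  # order by timestamp
        times = [t for t, _ in pkts]
        sizes = [s for _, s in pkts]
        gaps = [later - earlier for earlier, later in zip(times, times[1:])]
        features[pair] = {
            "packets": len(pkts),
            "mean_gap_s": statistics.fmean(gaps) if gaps else None,
            "mean_size": statistics.fmean(sizes),
            "size_stdev": statistics.pstdev(sizes),
        }
    return features

# Hypothetical capture: one source sends small packets at a fixed interval.
capture = [
    (0.0, "10.0.0.1", "10.0.0.9", 60),
    (0.5, "10.0.0.1", "10.0.0.9", 60),
    (1.0, "10.0.0.1", "10.0.0.9", 60),
    (0.2, "10.0.0.2", "10.0.0.9", 1500),
]
flows = flow_features(capture)
```

Very regular gaps combined with near-zero size variation, as in the first flow, are the kind of signal that can separate scripted flood traffic from ordinary user activity.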

Key Topics to Learn for Network Analytics and Machine Learning Interview

- Network Fundamentals: Understanding network topologies, protocols (TCP/IP, BGP, OSPF), and network performance metrics (latency, jitter, packet loss). Practical application: Analyzing network traffic patterns to identify bottlenecks.

- Data Collection and Preprocessing: Techniques for gathering network data (e.g., NetFlow, sFlow, packet captures), cleaning, and preparing it for machine learning algorithms. Practical application: Building a robust data pipeline for network anomaly detection.

- Machine Learning Algorithms: Familiarity with supervised (classification, regression) and unsupervised (clustering, anomaly detection) learning algorithms relevant to network analytics. Practical application: Implementing a model to predict network failures.

- Time Series Analysis: Understanding and applying time series analysis techniques to analyze network data, including forecasting and anomaly detection. Practical application: Predicting network traffic spikes.

- Network Security Analytics: Applying machine learning to identify and mitigate security threats, including intrusion detection and prevention. Practical application: Developing a model to detect malicious network activity.

- Model Evaluation and Tuning: Understanding key metrics for evaluating machine learning models (precision, recall, F1-score, AUC) and techniques for optimizing model performance. Practical application: Fine-tuning a model for optimal accuracy and efficiency.

- Cloud Networking and Analytics: Understanding the specifics of cloud networking architectures (AWS, Azure, GCP) and how machine learning is applied within these environments. Practical application: Optimizing resource allocation in a cloud-based network.

- Big Data Technologies: Familiarity with big data technologies like Hadoop, Spark, and NoSQL databases for handling large network datasets. Practical application: Processing terabytes of network logs efficiently.

Next Steps

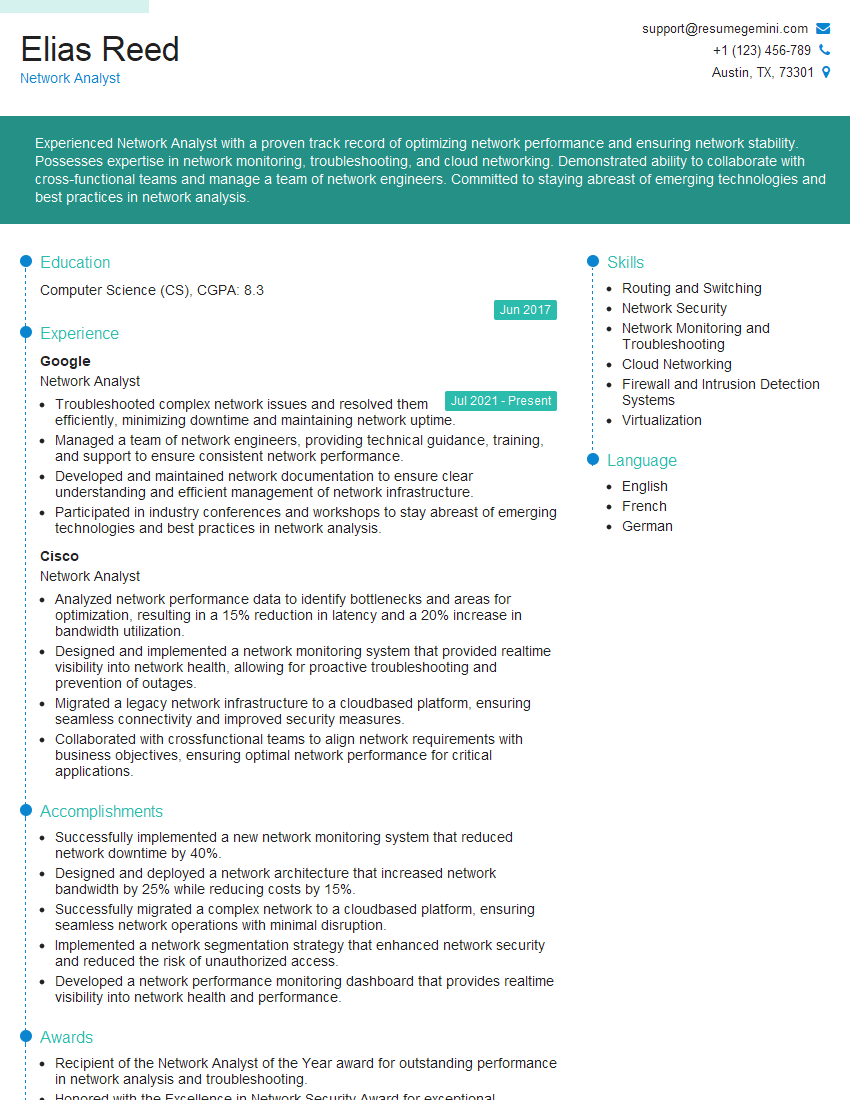

Mastering Network Analytics and Machine Learning significantly enhances your career prospects in a rapidly evolving technological landscape. These skills are highly sought after in various industries, offering exciting opportunities for growth and innovation. To maximize your job search success, it’s crucial to craft a compelling and ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource to help you build a professional and impactful resume. We provide examples of resumes tailored to Network Analytics and Machine Learning to help you get started. Invest the time to create a strong resume – it’s your first impression with potential employers.
