Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important Technical Proficiency in Intelligence Software interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in Technical Proficiency in Intelligence Software Interview

Q 1. Explain your experience with data mining techniques used in intelligence analysis.

Data mining in intelligence analysis involves extracting valuable insights from large datasets to identify patterns, trends, and anomalies relevant to national security or other intelligence objectives. I’ve extensively used techniques like association rule mining (e.g., finding links between seemingly unrelated events or individuals), clustering (grouping similar entities together for easier analysis), and classification (categorizing data points into predefined groups, such as threat levels). For example, I used association rule mining to identify connections between seemingly disparate financial transactions and known terrorist organizations, leading to the disruption of a funding network.

Another crucial technique is anomaly detection, which pinpoints unusual activity that might signal a threat. For example, in a communications network, a sudden spike in encrypted messages from an unusual source could be flagged as an anomaly, which might indicate the setup of a covert operation. I’ve employed various algorithms for this, from simple statistical methods to more sophisticated machine learning models.

- Association Rule Mining: Used to uncover relationships between variables in large datasets.

- Clustering: Grouping similar data points together to reveal underlying structures.

- Classification: Categorizing data into predefined classes based on learned patterns.

- Anomaly Detection: Identifying outliers or unusual data points that warrant further investigation.
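
To make association rule mining more concrete, here is a minimal sketch that computes support and confidence for pairs of co-occurring entities using only the Python standard library. The transactions, entity names, and thresholds are hypothetical; a real workload would more likely use a dedicated Apriori or FP-Growth implementation.

```python
from collections import Counter
from itertools import combinations

# Hypothetical data: each set lists entities observed together in one event.
transactions = [
    {"account_a", "shell_co", "courier_x"},
    {"account_a", "shell_co"},
    {"account_b", "courier_x"},
    {"account_a", "shell_co", "account_b"},
]


def pair_rules(transactions, min_support=0.5, min_confidence=0.8):
    """Return sorted (antecedent, consequent, support, confidence) tuples for item pairs."""
    n = len(transactions)
    item_counts = Counter(item for t in transactions for item in t)
    pair_counts = Counter(
        pair for t in transactions for pair in combinations(sorted(t), 2)
    )
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n  # share of events containing both items
        if support < min_support:
            continue
        for lhs, rhs in ((a, b), (b, a)):
            confidence = count / item_counts[lhs]  # estimate of P(rhs | lhs)
            if confidence >= min_confidence:
                rules.append((lhs, rhs, support, confidence))
    return sorted(rules)


rules = pair_rules(transactions)
for lhs, rhs, support, confidence in rules:
    print(f"{lhs} -> {rhs}: support={support:.2f}, confidence={confidence:.2f}")
```

In an interview, it helps to explain that support filters out rare coincidences while confidence measures how reliably one entity implies the other.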

Q 2. Describe your proficiency in using specific intelligence software platforms (e.g., Palantir, Tableau).

My experience spans several leading intelligence software platforms. I’m highly proficient in Palantir Gotham, a powerful platform renowned for its ability to integrate and analyze massive, disparate datasets. I’ve used it extensively for link analysis, building visual representations of relationships between individuals, organizations, and events. This allows for quick identification of key players and potential vulnerabilities in a complex network. For example, I used Palantir to map a complex criminal organization, revealing previously unknown connections and facilitating successful law enforcement operations.
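
The idea behind link analysis can be illustrated without any particular platform. The sketch below is generic Python, not Palantir's API: it builds an undirected graph from hypothetical relationship pairs and ranks entities by how many direct connections they have (degree), which is one simple way to surface candidate key players.

```python
from collections import defaultdict

# Hypothetical relationships between entities; not real data.
links = [
    ("person_a", "org_x"),
    ("person_b", "org_x"),
    ("person_c", "org_x"),
    ("person_a", "person_b"),
    ("person_c", "event_1"),
]


def build_graph(links):
    """Build an undirected adjacency map from (entity, entity) pairs."""
    graph = defaultdict(set)
    for left, right in links:
        graph[left].add(right)
        graph[right].add(left)
    return graph


def rank_by_degree(graph):
    """Order nodes by number of direct connections, ties broken by name."""
    return sorted(graph, key=lambda node: (-len(graph[node]), node))


graph = build_graph(links)
ranking = rank_by_degree(graph)
print(ranking[0], len(graph[ranking[0]]))
```

Degree is only a starting point; measures such as betweenness often say more about brokers in a network, but they need a graph library or more code than fits here.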

Furthermore, I possess significant experience with Tableau for data visualization and reporting. Tableau’s strength lies in creating interactive dashboards and reports that can effectively communicate complex intelligence findings to non-technical audiences. I’ve used Tableau to create intuitive visualizations of threat trends, allowing analysts and policymakers to quickly grasp critical information and make informed decisions.

Q 3. How do you handle large datasets in an intelligence context? What tools/techniques do you employ?

Handling large datasets in intelligence analysis requires a combination of robust software and efficient data processing techniques. One key strategy is distributed computing, leveraging clusters of machines to process data in parallel. This significantly reduces processing time for tasks like data cleaning, transformation, and analysis. Hadoop and Spark are powerful frameworks for this purpose. I have experience implementing these frameworks to process datasets exceeding terabytes in size.

Another critical aspect is data sampling. Instead of analyzing the entire dataset, which can be computationally expensive and time-consuming, I often draw representative samples that reflect the characteristics of the full dataset. This allows for faster analysis, provided the sampling method preserves rare but important groups and the resulting sampling error is acceptable for the question at hand.
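
One way to keep a sample representative is stratified sampling, where the same fraction is drawn from every group so that small groups are not lost. The following is a minimal sketch under assumed record fields (`id`, `source`); the function name and data are illustrative only.

```python
import random
from collections import defaultdict


def stratified_sample(records, key, fraction, seed=0):
    """Draw the same fraction from each group; every group keeps at least one record."""
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record)
    sample = []
    for members in groups.values():
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical dataset: 90 open-source records and 10 human-source records.
records = (
    [{"id": i, "source": "osint"} for i in range(90)]
    + [{"id": 90 + i, "source": "humint"} for i in range(10)]
)
sample = stratified_sample(records, key="source", fraction=0.1)
print(len(sample))
```

A plain random sample of the same size could easily contain no records from the smaller group, which is the failure mode stratification avoids.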

Furthermore, efficient data storage is paramount. Databases optimized for large datasets, such as NoSQL databases, are crucial for managing and querying large volumes of information efficiently. I’ve used technologies like MongoDB and Cassandra in intelligence applications.

Q 4. Describe your experience with data visualization for intelligence reporting.

Data visualization is crucial for transforming raw intelligence data into actionable insights. I’ve used various techniques to create compelling and informative visualizations. For instance, network graphs are essential for showing relationships between entities, while heatmaps highlight patterns and anomalies in geographic or temporal data. I’ve created interactive dashboards that allow users to drill down into specific data points and explore relationships dynamically.

My experience includes designing visualizations for diverse audiences—from technical analysts to senior policymakers. This requires tailoring the presentation style and complexity to match the audience’s level of understanding. For example, for senior leadership, I’ve focused on high-level summaries and key takeaways, whereas for technical analysts, more granular detail has been provided.

Tools like Tableau, Power BI, and even custom visualizations using programming languages like Python (with libraries such as Matplotlib and Seaborn) are critical for effectively communicating intelligence findings.

Q 5. How do you ensure the accuracy and reliability of intelligence data?

Ensuring data accuracy and reliability is paramount in intelligence work. This requires a multi-faceted approach. First, we need to rigorously evaluate the sources of information, considering their credibility, biases, and potential for manipulation. Triangulation – confirming information from multiple independent sources – is a critical technique.

Data validation and cleaning are also crucial. This involves identifying and correcting errors, inconsistencies, and missing values in the data. This can be a time-consuming process, often involving automated scripts and manual review. For example, I’ve developed Python scripts to automatically detect and flag potential inconsistencies in data entries.

Finally, maintaining a detailed audit trail of data processing steps is vital for transparency and accountability. This allows us to track changes made to the data and retrace steps if necessary.
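
As an illustration of an audit trail, the sketch below chains each log entry to the previous one with a SHA-256 hash, so that a later edit to any entry becomes detectable. The field names are assumptions, and timestamps are left out to keep the example deterministic; a production system would also need secure storage and signing.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(body):
    """Hash the entry body in a stable key order."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log, actor, action):
    """Append an entry that commits to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"actor": actor, "action": action, "prev_hash": prev_hash}
    log.append({**body, "hash": _digest(body)})


def verify(log):
    """Return True only if every entry matches its hash and links to its predecessor."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: entry[k] for k in ("actor", "action", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "analyst_1", "normalized date fields")
append_entry(audit_log, "analyst_2", "removed duplicate rows")
print(verify(audit_log))
```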

Q 6. Explain your understanding of data security and privacy within an intelligence setting.

Data security and privacy are paramount in intelligence work, especially given the sensitivity of the information involved. My experience encompasses handling data in accordance with strict security protocols and regulations, such as those governing classified information. This involves understanding and implementing robust access control mechanisms, encryption techniques (both at rest and in transit), and data loss prevention (DLP) strategies.

I’m familiar with various security best practices, including the principle of least privilege (granting users only the access they need), regular security audits, and incident response planning. My experience also includes working with secure databases and utilizing encryption technologies to protect sensitive intelligence data.

Furthermore, I understand the importance of adhering to privacy regulations and guidelines, ensuring data is handled responsibly and ethically, and protecting the privacy rights of individuals mentioned in intelligence reports.

Q 7. How familiar are you with different data formats used in intelligence gathering (e.g., XML, JSON, CSV)?

I’m proficient in handling various data formats commonly used in intelligence gathering. This includes structured formats such as XML and JSON, which are often used for exchanging data between systems, and CSV, a simple yet widely used format for tabular data. I’ve written scripts to parse and process these formats, transforming them into suitable structures for analysis. For example, I’ve used Python libraries like xml.etree.ElementTree for XML parsing and json for JSON data manipulation.
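
For XML and CSV, a comparable sketch with the standard library might look like the following. The element names, column names, and sample content are hypothetical; the point is that both formats end up as the same list-of-dictionaries structure.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Hypothetical inputs, inlined as strings so the example is self-contained.
xml_text = (
    "<reports>"
    "<report id='r1'><source>osint</source></report>"
    "<report id='r2'><source>humint</source></report>"
    "</reports>"
)
csv_text = "id,source\nr3,sigint\n"


def parse_xml_reports(text):
    """Turn each <report> element into a dict with its id attribute and source text."""
    root = ET.fromstring(text)
    return [
        {"id": report.get("id"), "source": report.findtext("source")}
        for report in root.iter("report")
    ]


def parse_csv_reports(text):
    """Read CSV rows into dicts keyed by the header row."""
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]


records = parse_xml_reports(xml_text) + parse_csv_reports(csv_text)
print(records)
```

Note that `xml.etree.ElementTree` is not hardened against maliciously crafted XML, so untrusted input warrants a safer parser.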

I can also work with semi-structured and unstructured data formats, including plain text documents, emails, and social media posts. Techniques such as natural language processing (NLP) are crucial for extracting meaningful information from these types of data. I’ve used NLP libraries like NLTK and spaCy for tasks like text classification, sentiment analysis, and named entity recognition.

Example Python code snippet for JSON parsing:

```python
import json

with open('data.json') as f:
    data = json.load(f)

# Accessing data elements
print(data['name'])
```

Q 8. Describe your experience with scripting languages (e.g., Python, R) for data analysis in intelligence.

Scripting languages like Python and R are indispensable for data analysis in intelligence work. Their flexibility and extensive libraries allow for efficient data manipulation, statistical analysis, and visualization. Python, with libraries like Pandas and NumPy, excels at handling large datasets, cleaning data, and performing complex calculations. R, with its statistical focus and packages like ggplot2, is powerful for visualizing trends and patterns. For example, I’ve used Python to process large geospatial datasets of intercepted communications, identifying communication patterns between suspected individuals. In another project, I used R to model the probability of certain events based on various intelligence inputs, allowing us to prioritize investigations.

Specifically, I’ve utilized Python’s pandas library for data manipulation and cleaning, scikit-learn for machine learning tasks like classification and regression, and matplotlib and seaborn for data visualization. In R, I’ve extensively used dplyr for data wrangling, ggplot2 for creating informative visualizations, and various statistical modeling packages.

Q 9. How do you approach anomaly detection in large datasets relevant to intelligence work?

Anomaly detection in large intelligence datasets involves identifying unusual patterns or outliers that deviate significantly from established norms. My approach is multi-faceted and leverages a combination of statistical methods and machine learning techniques. It starts with data preprocessing: cleaning, transforming, and normalizing the data to remove noise and inconsistencies. Then, I employ a range of techniques. Statistical methods can highlight outliers directly: the Z-score measures how many standard deviations a value lies from the mean, while the IQR (Interquartile Range) rule flags values that fall well below the first quartile or well above the third. For more complex datasets, I utilize machine learning algorithms, such as One-Class SVM (Support Vector Machine) or Isolation Forest, which are particularly effective in identifying anomalies in high-dimensional spaces.
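
The two statistical methods mentioned above can be sketched in a few lines with Python's `statistics` module. The daily counts are made-up numbers, and the thresholds are common conventions rather than fixed rules. One caveat worth knowing: in a small sample a single extreme value inflates the standard deviation, so its own Z-score may stay below the usual cutoff of 3, which is why this example passes a lower threshold.

```python
import statistics


def zscore_outliers(values, threshold=3.0):
    """Flag values more than `threshold` population standard deviations from the mean."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # all values identical, nothing stands out
    return [v for v in values if abs(v - mean) / stdev > threshold]


def iqr_outliers(values, k=1.5):
    """Flag values beyond k * IQR below the first or above the third quartile."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in values if v < low or v > high]


# Hypothetical number of encrypted messages per day from one source.
daily_message_counts = [12, 15, 11, 14, 13, 12, 16, 14, 13, 95]

print(zscore_outliers(daily_message_counts, threshold=2.5))
print(iqr_outliers(daily_message_counts))
```

The IQR rule is often the more robust choice here because quartiles are barely affected by the outlier itself.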

For example, I once worked on a project where we needed to detect unusual financial transactions potentially linked to illicit activities. We used Isolation Forest to identify transactions that fell outside the typical patterns of legitimate transactions. The algorithm effectively highlighted transactions that were suspicious in terms of amount, frequency, or location. The results were then further investigated by human analysts to verify the findings.

Q 10. Explain your understanding of different intelligence gathering methodologies and how software supports them.

Intelligence gathering methodologies are diverse, ranging from open-source intelligence (OSINT) to human intelligence (HUMINT), signals intelligence (SIGINT), imagery intelligence (IMINT), and more. Software plays a crucial role in supporting each of these. For OSINT, tools can automate web scraping, social media monitoring, and data aggregation from publicly available sources. For HUMINT, software can aid in managing sources, securing communications, and analyzing information obtained from human contacts. SIGINT tools may involve signal processing, decryption, and traffic analysis to extract valuable information from intercepted communications. For IMINT, image analysis software and geospatial systems support the interpretation of imagery.

Imagine using a specialized software suite to analyze social media data. This could involve searching for keywords related to a specific threat, analyzing sentiment, and identifying potential networks. Or consider a database system designed to securely store and manage HUMINT data, ensuring confidentiality and access control.

- OSINT: Software for web scraping, social media monitoring, and data aggregation.

- HUMINT: Secure communication platforms, database management systems for source information.

- SIGINT: Signal processing software, decryption tools, traffic analysis systems.

- IMINT: Image analysis software, geospatial intelligence systems.

Q 11. Describe your experience with building and maintaining databases for intelligence information.

Building and maintaining databases for intelligence information requires a robust and secure system capable of handling sensitive data while ensuring efficient querying and analysis. I have extensive experience designing and implementing relational databases (using SQL) and NoSQL databases, choosing the most suitable approach based on the specific needs of the project. Security is paramount, so I prioritize measures such as encryption, access control, and data auditing. The choice between relational and NoSQL databases depends heavily on the nature of the data and the types of queries that will be performed. Relational databases excel in structured data with defined relationships, while NoSQL databases are more flexible for semi-structured or unstructured data.

For instance, I built a relational database to store structured intelligence reports, linking them to associated individuals, organizations, and events. We implemented stringent access controls to ensure that only authorized personnel could access specific data.
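
A relational design of this kind could be sketched as follows, using an in-memory SQLite database. The table and column names are assumptions chosen for illustration, and SQLite stands in for whatever database engine a real deployment would use; access control and encryption are out of scope for the sketch.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE reports (id INTEGER PRIMARY KEY, title TEXT NOT NULL);
    CREATE TABLE entities (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        kind TEXT NOT NULL  -- e.g. individual, organization, event
    );
    -- Junction table: a report can mention many entities and vice versa.
    CREATE TABLE report_entities (
        report_id INTEGER NOT NULL REFERENCES reports(id),
        entity_id INTEGER NOT NULL REFERENCES entities(id),
        PRIMARY KEY (report_id, entity_id)
    );
    """
)

# Hypothetical sample rows.
conn.executemany("INSERT INTO reports VALUES (?, ?)", [(1, "Report A"), (2, "Report B")])
conn.executemany(
    "INSERT INTO entities VALUES (?, ?, ?)",
    [(1, "Person X", "individual"), (2, "Org Y", "organization")],
)
conn.executemany("INSERT INTO report_entities VALUES (?, ?)", [(1, 1), (1, 2), (2, 2)])


def reports_mentioning(conn, entity_name):
    """Return titles of reports linked to the named entity (parameterized query)."""
    rows = conn.execute(
        """
        SELECT r.title
        FROM reports r
        JOIN report_entities re ON re.report_id = r.id
        JOIN entities e ON e.id = re.entity_id
        WHERE e.name = ?
        ORDER BY r.id
        """,
        (entity_name,),
    )
    return [title for (title,) in rows]


print(reports_mentioning(conn, "Org Y"))
```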

Q 12. How would you design a system to track and analyze specific threats or patterns of interest?

Designing a system to track and analyze specific threats or patterns requires a layered approach. First, define the threats or patterns of interest clearly. This involves identifying key indicators (e.g., keywords, locations, individuals, financial transactions) that are characteristic of the threat. Then, design a data ingestion pipeline to collect relevant data from various sources (e.g., news feeds, social media, databases). This could involve using APIs or web scraping techniques. Next, implement a data processing and analysis engine capable of identifying patterns and anomalies. This will likely involve machine learning algorithms, statistical modeling, and visualization tools.

Finally, establish a system for alert generation and reporting. The system should automatically notify analysts of potentially significant events that match the predefined threat patterns. The system also needs a robust user interface for analysts to interact with the data, conduct investigations, and generate reports.

An example could be a system that tracks online extremist groups. The system ingests data from various online forums and social media platforms, identifies keywords and patterns associated with radicalization, and alerts analysts of potential threats. It also maps the connections between individuals within these groups, providing a comprehensive overview of the network.
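
The indicator-matching and alerting stages of such a system can be reduced to a small sketch. Everything here is hypothetical: the indicator phrases, the item structure, and the rule that two distinct indicators trigger an alert. A real pipeline would add ingestion, scoring models, and analyst review.

```python
import re
from dataclasses import dataclass


@dataclass
class Alert:
    item_id: str
    matched: list  # distinct indicator phrases found in the item


# Hypothetical indicator phrases defined by analysts.
INDICATORS = ["wire transfer", "safe house", "border crossing"]
PATTERN = re.compile(
    "|".join(re.escape(term) for term in INDICATORS), re.IGNORECASE
)


def scan(items, min_matches=2):
    """Raise an alert for each item containing at least `min_matches` distinct indicators."""
    alerts = []
    for item in items:
        matched = sorted({m.group(0).lower() for m in PATTERN.finditer(item["text"])})
        if len(matched) >= min_matches:
            alerts.append(Alert(item["id"], matched))
    return alerts


items = [
    {"id": "post-1", "text": "Arrange the Wire Transfer before the border crossing."},
    {"id": "post-2", "text": "Weather update for the weekend."},
    {"id": "post-3", "text": "The safe house is ready."},
]
alerts = scan(items)
for alert in alerts:
    print(alert.item_id, alert.matched)
```

Requiring more than one indicator is a simple way to reduce false alarms; the threshold is a tuning choice, not a recommendation.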

Q 13. How would you address ethical considerations related to the use of intelligence software and data?

Ethical considerations are paramount when working with intelligence software and data. Privacy, accuracy, and potential for misuse are central concerns. It’s crucial to adhere strictly to the relevant legal protections, laws, and regulations (such as the Fourth Amendment in the US) concerning data collection, storage, and use. Privacy-preserving techniques, such as data anonymization and differential privacy, should be incorporated wherever possible. Transparency and accountability are also vital; audit trails should be maintained to track data access and modifications. Regular ethical reviews of software and data practices are essential to ensure compliance and prevent potential harm.
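
As one small, concrete example of a privacy-preserving step, the sketch below replaces a name with a keyed hash before a record is shared. Strictly speaking this is pseudonymization, which is weaker than full anonymization or differential privacy, since anyone holding the key can recompute the mapping. The key, field names, and record are placeholders.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Map an identifier to a stable token using HMAC-SHA256 (truncated for readability)."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()[:16]


# Placeholder key for illustration; a real key would come from a secrets manager.
key = b"demo-key-not-for-production"

record = {"name": "Jane Doe", "note": "attended meeting"}
shared = {**record, "name": pseudonymize(record["name"], key)}
print(shared)
```

Because the same input always yields the same token, analysts can still link records about one person without seeing the underlying name.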

For example, before deploying any new intelligence software, it’s imperative to conduct a thorough risk assessment evaluating the potential for privacy violations or biases in algorithms. We need to establish clear protocols for handling sensitive data and ensure that all personnel receive training on ethical considerations.

Q 14. Describe your experience with integrating different intelligence data sources.

Integrating diverse intelligence data sources is a critical aspect of effective intelligence analysis. This often involves working with data in various formats and from different sources. Data standardization and transformation are essential first steps. This might involve converting data into a common format (e.g., JSON or XML), cleaning inconsistencies, and handling missing values. Data fusion techniques can then be employed to combine information from different sources, potentially enhancing accuracy and completeness.

For example, integrating data from financial transactions, communication intercepts, and social media activity requires careful consideration of data formats and structures. Data warehousing techniques, such as ETL (Extract, Transform, Load) processes, can help streamline this integration. Furthermore, developing a data schema that effectively represents the relationships between different data sources is crucial for efficient querying and analysis.
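
The transform step of an ETL process can be illustrated by mapping two differently shaped sources onto one common schema. The source field names, date formats, and sample rows below are invented for the example; the common schema (`entity`, `timestamp`, `source`) is likewise an assumption.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical extracts; each source uses its own field names and date format.
financial_rows = [{"account_holder": " jane doe ", "date": "05/03/2024"}]
social_posts = [{"user": "Jane Doe", "created_at": "2024-03-04T18:30:00Z"}]


def from_financial(row):
    """Normalize a financial row (day/month/year dates) to the common schema."""
    return {
        "entity": row["account_holder"].strip().title(),
        "timestamp": datetime.strptime(row["date"], "%d/%m/%Y").date().isoformat(),
        "source": "financial",
    }


def from_social(post):
    """Normalize a social media post (ISO 8601 timestamps) to the common schema."""
    return {
        "entity": post["user"].strip().title(),
        "timestamp": post["created_at"][:10],  # keep the date part only
        "source": "social",
    }


def build_timeline(records):
    """Group unified records by entity, ordered by date."""
    timeline = defaultdict(list)
    for rec in sorted(records, key=lambda r: r["timestamp"]):
        timeline[rec["entity"]].append((rec["timestamp"], rec["source"]))
    return dict(timeline)


unified = [from_financial(r) for r in financial_rows] + [from_social(p) for p in social_posts]
timeline = build_timeline(unified)
print(timeline)
```

Matching entities by normalized name is a simplification; real entity resolution usually needs additional identifiers and fuzzy matching.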

Q 15. Explain your approach to data cleaning and preprocessing in an intelligence context.

Data cleaning and preprocessing in intelligence analysis is crucial for ensuring the accuracy and reliability of our insights. It’s like preparing ingredients before cooking a complex meal – you wouldn’t start cooking without cleaning and chopping your vegetables, right? My approach involves several key steps:

- Data Identification and Validation: First, I meticulously identify the data sources and rigorously validate their authenticity and provenance. This often involves cross-referencing information from multiple sources to confirm accuracy.

- Handling Missing Data: Missing data is common in intelligence work. I employ various strategies, including imputation (using statistical methods to estimate missing values) and removal (if the missing data significantly impacts analysis). The choice depends on the context and the nature of the missing data.

- Data Transformation: This step involves converting data into a suitable format for analysis. This might include converting date formats, standardizing units of measurement, or cleaning up inconsistent text entries. For example, I might use regular expressions to standardize addresses or phone numbers.

- Outlier Detection and Treatment: Outliers, or data points significantly different from others, can skew the analysis. I use statistical methods and visualization techniques to identify and handle outliers, either by removing them (if they represent errors) or investigating their potential significance.

- Data Reduction: In intelligence, we often deal with massive datasets. I employ techniques like feature selection and dimensionality reduction (e.g., Principal Component Analysis) to manage data volume and improve model efficiency.

For instance, in one project involving social media data, I used Python libraries like Pandas and Scikit-learn to clean and preprocess a large dataset of tweets, removing duplicates, handling missing geolocation data, and standardizing hashtags.
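
A few of the cleaning steps listed above (standardizing with regular expressions, handling missing values, removing duplicates) can be sketched with the standard library alone. The sketch assumes 10-digit North American phone numbers and a hypothetical record layout; other formats would need different rules.

```python
import re


def normalize_phone(raw):
    """Return a 10-digit string, or None if the value is missing or malformed."""
    digits = re.sub(r"\D", "", raw or "")
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop the country code
    return digits if len(digits) == 10 else None


def normalize_hashtag(tag):
    """Lowercase the tag and ensure exactly one leading '#'."""
    return "#" + tag.strip().lstrip("#").lower()


def clean(records):
    """Standardize fields, then drop records that become exact duplicates."""
    seen, cleaned = set(), []
    for rec in records:
        row = {
            "phone": normalize_phone(rec.get("phone")),
            "tag": normalize_hashtag(rec["tag"]),
        }
        key = (row["phone"], row["tag"])
        if key not in seen:
            seen.add(key)
            cleaned.append(row)
    return cleaned


# Hypothetical raw records with inconsistent formatting.
raw_records = [
    {"phone": "(555) 010-2345", "tag": "#Alert"},
    {"phone": "+1 555-010-2345", "tag": "alert"},
    {"phone": None, "tag": "#Update"},
]
cleaned = clean(raw_records)
print(cleaned)
```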

Q 16. How do you prioritize and manage multiple tasks related to intelligence analysis and software development?

Prioritizing and managing multiple tasks in intelligence analysis and software development requires a structured and adaptable approach. I utilize several techniques:

- Project Management Methodologies: I leverage Agile principles, employing tools like Scrum or Kanban to break down large projects into smaller, manageable tasks. This allows for iterative development and flexible adaptation to changing priorities.

- Task Prioritization Matrix: I use a matrix based on urgency and importance to rank tasks. High-urgency, high-importance tasks receive immediate attention, while low-urgency tasks are scheduled accordingly.

- Time Management Techniques: I use timeboxing, allocating specific time slots for dedicated tasks to improve focus and efficiency. The Pomodoro Technique is particularly useful for maintaining concentration.

- Collaboration and Communication: Open communication with team members and stakeholders is essential. Regular meetings, progress reports, and clear communication channels ensure everyone is aligned and informed.

- Tools and Technologies: I utilize project management software like Jira or Trello to track progress, assign tasks, and manage deadlines effectively.

For example, when working on a project that involved both developing a new data visualization tool and analyzing incoming intelligence reports, I prioritized the critical analysis tasks based on their impact on immediate operational needs while simultaneously allocating time for the software development sprints.

Q 17. Explain your experience with software development methodologies (e.g., Agile) in an intelligence setting.

In the intelligence domain, Agile methodologies are invaluable. The iterative nature of Agile allows for rapid adaptation to evolving intelligence needs and feedback from stakeholders. My experience with Agile includes:

- Scrum: I’ve participated in several Scrum projects, taking on roles such as Scrum Master or Developer. I’m familiar with sprint planning, daily stand-ups, sprint reviews, and retrospectives. The iterative approach ensures constant feedback loops.

- Kanban: I’ve used Kanban boards to visualize workflows and track progress. This approach is particularly useful for managing multiple, concurrent tasks with varying priorities.

- Test-Driven Development (TDD): I embrace TDD to ensure software quality and reliability. Writing tests first guides the development process and ensures the code meets the requirements.

- Continuous Integration/Continuous Delivery (CI/CD): I’m proficient in implementing CI/CD pipelines to automate testing and deployment, facilitating faster release cycles.

In a recent project developing a real-time threat detection system, we employed Scrum to break down development into two-week sprints, enabling us to incorporate feedback from analysts and adjust functionality as needed.

Q 18. How do you handle incomplete or contradictory data in your analysis?

Incomplete or contradictory data is a frequent challenge in intelligence analysis. My approach involves a combination of techniques:

- Source Verification and Triangulation: I prioritize verifying data from multiple independent sources to identify potential biases or inaccuracies. This triangulation helps to determine the most reliable information.

- Data Fusion and Integration: I use data fusion techniques to combine information from various sources, resolving contradictions where possible. This often involves weighting data based on its reliability and source credibility.

- Statistical Analysis: I employ statistical methods to identify patterns and trends despite incomplete data. For example, I might use regression analysis or machine learning techniques to predict missing values or identify relationships between variables despite data gaps.

- Qualitative Analysis: I use qualitative analysis techniques to interpret the context and meaning behind incomplete or contradictory data. This involves understanding the potential motivations and biases of sources.

- Transparency and Documentation: I meticulously document any assumptions or limitations imposed by incomplete or contradictory data. This transparency ensures that the analysis is appropriately interpreted.

For example, when analyzing conflicting reports about a potential terrorist threat, I cross-referenced information from multiple intelligence agencies, open-source intelligence, and social media, carefully documenting the discrepancies and explaining the reasoning behind my conclusions.
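
Weighting contradictory reports by source reliability can be sketched as a simple weighted vote. The reliability scores, source names, and claims are hypothetical, and the sketch treats sources as independent, which real sources often are not (several may be repeating the same origin).

```python
from collections import defaultdict


def fuse_claims(claims):
    """Return the value with the highest total reliability and its share of the total."""
    scores = defaultdict(float)
    for claim in claims:
        scores[claim["value"]] += claim["reliability"]
    total = sum(scores.values())
    best = max(scores, key=scores.get)
    return best, scores[best] / total


# Hypothetical conflicting reports about the same question.
claims = [
    {"source": "agency_a", "value": "location_1", "reliability": 0.9},
    {"source": "forum_post", "value": "location_2", "reliability": 0.3},
    {"source": "agency_b", "value": "location_1", "reliability": 0.6},
]
value, share = fuse_claims(claims)
print(value, round(share, 2))
```

The share is a rough indicator of agreement, not a calibrated probability, and the dissenting claim should still be documented alongside the conclusion.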

Q 19. How familiar are you with natural language processing (NLP) techniques applied to intelligence data?

I’m highly familiar with NLP techniques applied to intelligence data. NLP is essential for extracting insights from unstructured text data, such as news articles, social media posts, and intercepted communications.

- Named Entity Recognition (NER): I use NER to identify and classify named entities (e.g., people, organizations, locations) in text data. This helps to identify key players and locations in intelligence reports.

- Sentiment Analysis: I leverage sentiment analysis to determine the emotional tone of text, identifying positive, negative, or neutral sentiments. This can help assess the overall sentiment towards certain events or entities.

- Topic Modeling: I use topic modeling techniques (e.g., Latent Dirichlet Allocation) to identify underlying themes and topics within large volumes of text data. This helps to discover hidden patterns and insights.

- Machine Translation: I utilize machine translation tools to overcome language barriers and analyze multilingual data sources.

- Text Summarization: Automatic text summarization helps efficiently process large amounts of text data, providing concise summaries of key information.

In one project, I used Python libraries like NLTK and spaCy to perform sentiment analysis on social media posts to gauge public opinion regarding a specific geopolitical event. This helped identify potential flashpoints and public anxieties.

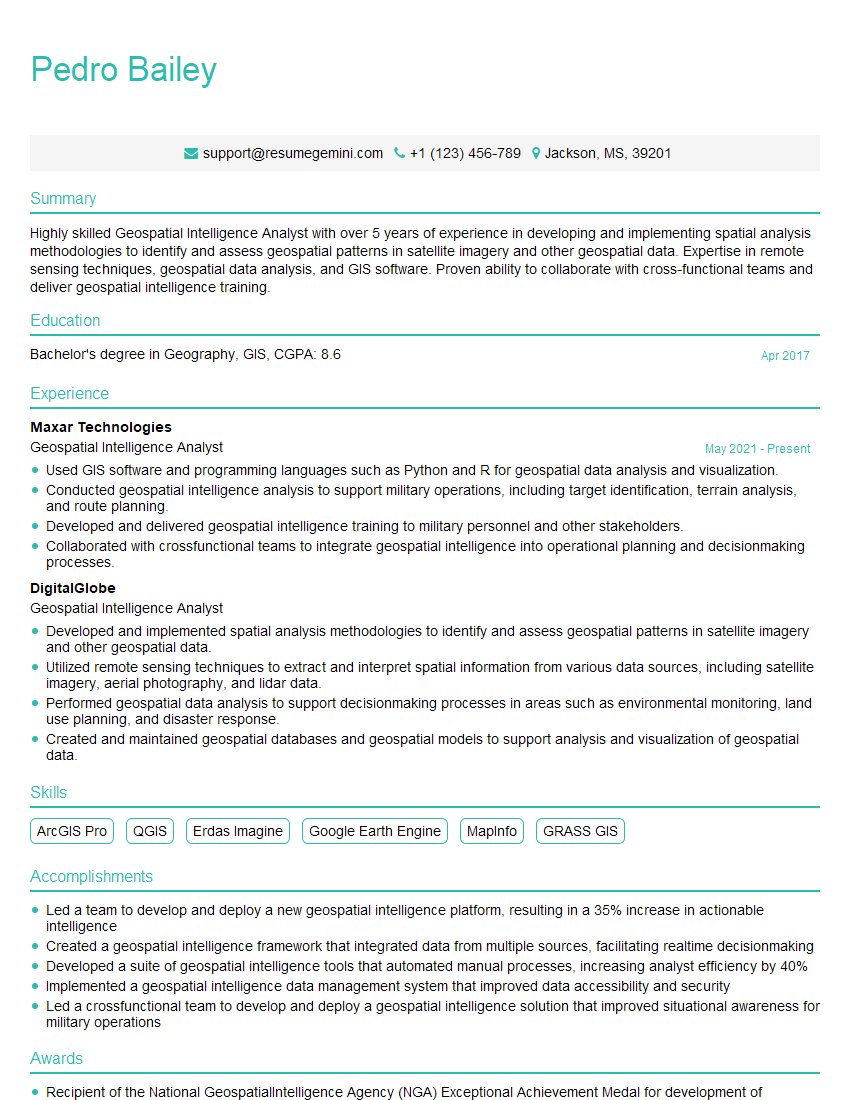

Q 20. Describe your experience working with geospatial data and software.

I possess significant experience working with geospatial data and software. Geospatial intelligence (GEOINT) plays a vital role in understanding events and activities on the Earth’s surface. My experience includes:

- GIS Software: Proficiency in using GIS software such as ArcGIS and QGIS to visualize, analyze, and map geospatial data.

- Data Formats: Experience working with various geospatial data formats, including shapefiles, GeoJSON, and KML.

- Spatial Analysis: Applying spatial analysis techniques such as proximity analysis, overlay analysis, and network analysis to extract meaningful insights from geospatial data.

- Remote Sensing: Familiarity with remote sensing data and techniques, including satellite imagery analysis.

- Geospatial Databases: Experience working with geospatial databases such as PostGIS.

For example, in a recent project, I used ArcGIS to map the movement of suspected smugglers across a region, combining GPS data from intercepted vehicles with satellite imagery to identify patterns and key locations. This visualization aided in the development of effective counter-smuggling strategies.
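
Proximity analysis, one of the spatial techniques listed above, can be shown without GIS software by using the haversine formula for great-circle distance. The coordinates and point names are invented, and the Earth is approximated as a sphere with a mean radius of 6371 km, so results are approximate.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; spherical approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (
        math.sin(dphi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def points_within(points, center, radius_km):
    """Return names of points whose distance to `center` is at most `radius_km`."""
    lat0, lon0 = center
    return [
        name
        for name, lat, lon in points
        if haversine_km(lat, lon, lat0, lon0) <= radius_km
    ]


# Hypothetical GPS fixes as (name, latitude, longitude).
gps_fixes = [("fix_1", 0.0, 0.5), ("fix_2", 0.0, 2.0), ("fix_3", 0.3, 0.3)]
nearby = points_within(gps_fixes, center=(0.0, 0.0), radius_km=100)
print(nearby)
```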

Q 21. How do you evaluate the effectiveness of your intelligence analysis?

Evaluating the effectiveness of intelligence analysis is crucial to ensure that our efforts are providing actionable insights. My approach involves several key aspects:

- Actionable Intelligence: The most important measure is whether the analysis leads to concrete actions that impact the mission. Did the analysis contribute to disrupting a criminal network? Did it influence a policy decision? These are key indicators of success.

- Accuracy and Reliability: A thorough assessment of the accuracy and reliability of the analysis is essential. This involves comparing the analysis findings to subsequent events or data to assess the accuracy of predictions or assessments.

- Timeliness: Intelligence needs to be timely to be effective. Did the analysis provide information when it was needed most? Delays can significantly diminish the impact of the analysis.

- Clarity and Conciseness: The analysis should be clearly communicated and easily understood by the intended audience. The use of effective visualization techniques can greatly enhance clarity and communication.

- Feedback Mechanisms: Establishing clear feedback mechanisms is critical. Regular communication with stakeholders helps gather feedback and improve the quality and effectiveness of future analyses.

For example, we regularly track the effectiveness of our threat assessments by monitoring whether they accurately predicted incidents and whether the information influenced counter-terrorism strategies. This continuous evaluation and feedback cycle is vital in refining our processes and improving our future analyses.

Q 22. Explain your understanding of different types of intelligence (e.g., SIGINT, HUMINT, OSINT).

Intelligence gathering relies on various sources and methods, categorized into different types. Understanding these types is crucial for effective intelligence analysis and operations. Let’s explore three key types:

- SIGINT (Signals Intelligence): This involves intercepting and analyzing electronically transmitted information, such as communications (radio, satellite, internet), radar signals, and other electronic emissions. Think of it like listening in on conversations or monitoring electronic activity to uncover hidden information. For example, analyzing intercepted radio communications between suspected terrorist groups can reveal their plans and locations.

- HUMINT (Human Intelligence): This focuses on gathering information from human sources. This might involve recruiting informants, conducting interviews, or using undercover agents to gain insights directly from individuals. Imagine a spy gathering intelligence by cultivating relationships with people close to a target organization. A classic example is the use of double agents to infiltrate enemy organizations.

- OSINT (Open-Source Intelligence): This leverages publicly available information, including news reports, social media, academic research, and government publications. Think of it as being a skilled detective who gathers clues from publicly available sources. For example, analyzing social media posts to understand public sentiment or tracking a company’s movements through its press releases.

In practice, intelligence agencies often combine these types of intelligence, cross-referencing data from multiple sources to build a more complete picture. This triangulation of information allows for stronger, more reliable conclusions.

Q 23. Describe your experience with predictive modeling using intelligence data.

Predictive modeling with intelligence data involves using statistical techniques and machine learning algorithms to forecast future events or behaviors based on historical data. My experience includes developing models using various techniques such as time series analysis, Bayesian networks, and machine learning classifiers (like random forests or support vector machines). For example, I worked on a project predicting the likelihood of civil unrest in a specific region by analyzing various factors such as social media sentiment, economic indicators, and historical conflict patterns. The model identified key indicators that significantly increased the probability of unrest, allowing for proactive intervention.

The process typically starts with data cleaning and preprocessing, followed by feature engineering (identifying and selecting relevant variables). This is followed by model selection, training, and validation. Finally, the model is deployed and monitored for accuracy and efficacy. Model evaluation often involves metrics like precision, recall, and F1-score, chosen based on the specific problem. For instance, to prevent false positives that might lead to unnecessary interventions, we might prioritize precision.
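
The evaluation metrics mentioned above are easy to compute by hand, which helps when explaining them in an interview. This sketch uses made-up binary labels (1 means the event occurred or was predicted) and plain Python rather than a library.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed events
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return precision, recall, f1


# Hypothetical ground truth and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```

Precision answers "of the alerts raised, how many were real", while recall answers "of the real events, how many were caught"; which one to favor depends on the cost of a false alarm versus a miss.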

    # Example code snippet (Python)
    from sklearn.ensemble import RandomForestClassifier

    # ... data preprocessing and feature engineering ...

    model = RandomForestClassifier()
    model.fit(X_train, y_train)
    predictions = model.predict(X_test)

Q 24. How do you ensure the scalability and maintainability of your intelligence software solutions?

Scalability and maintainability are paramount in intelligence software. To achieve this, I employ several strategies. First, I utilize a modular design, breaking down the software into independent components that can be easily scaled and updated individually. This prevents large-scale refactoring and reduces the risk of cascading failures. Imagine a well-organized toolbox—each tool serves a specific purpose and can be easily replaced or upgraded without affecting the others.

Second, I leverage cloud-based infrastructure for scalability and flexibility. Cloud services allow for on-demand resource allocation, ensuring the software can handle fluctuating workloads. Third, I follow robust coding practices, including version control (e.g., Git) and thorough documentation. Comprehensive documentation makes the software easier to maintain and understand, even for developers who weren’t originally involved in the project. Additionally, consistent use of design patterns and well-defined APIs ensures interoperability and code reusability.
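As a rough illustration of modular design behind a well-defined API, the sketch below defines a small interface and two interchangeable components. All names here (`Enricher`, `CountryTagger`, `PriorityScorer`, `run_pipeline`) are hypothetical and chosen only for this example; a real system would define its own contracts.

```python
from typing import Protocol


class Enricher(Protocol):
    """Interface every pipeline component must satisfy (hypothetical)."""

    def enrich(self, record: dict) -> dict: ...


class CountryTagger:
    def enrich(self, record: dict) -> dict:
        # Return a new dict so the input record is never mutated.
        return {**record, "country": record.get("country", "unknown")}


class PriorityScorer:
    def enrich(self, record: dict) -> dict:
        # Toy rule: flag records whose text mentions "urgent".
        score = 1 if "urgent" in record.get("text", "").lower() else 0
        return {**record, "priority": score}


def run_pipeline(record: dict, components: list[Enricher]) -> dict:
    """Apply each component in order; any one can be swapped independently."""
    for component in components:
        record = component.enrich(record)
    return record


result = run_pipeline(
    {"text": "Urgent: shipment delayed"},
    [CountryTagger(), PriorityScorer()],
)
print(result)
```

Because each component depends only on the shared interface, one can be replaced or scaled out without touching the others, which is the property the toolbox analogy describes.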

Finally, automated testing is crucial. Continuous integration and continuous deployment (CI/CD) pipelines ensure that every change is tested before deployment, which reduces the risk of introducing bugs and helps maintain high software quality.

Q 25. Explain your experience with testing and quality assurance of intelligence software.

Testing and quality assurance (QA) in intelligence software is critical due to the high stakes associated with the information processed. My approach involves a multi-faceted strategy encompassing different testing levels. This starts with unit testing, focusing on individual software components. Integration testing ensures different components work seamlessly together. System testing verifies the entire system functions correctly and meets requirements. User acceptance testing (UAT) involves real users to validate usability and functionality within realistic scenarios.
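The unit-testing level can be sketched with Python's standard `unittest` module. The function under test, `normalize_identifier`, is a hypothetical helper invented for this example.

```python
import unittest


def normalize_identifier(raw: str) -> str:
    """Trim whitespace and upper-case an identifier (hypothetical helper)."""
    return raw.strip().upper()


class NormalizeIdentifierTest(unittest.TestCase):
    def test_strips_surrounding_whitespace(self):
        self.assertEqual(normalize_identifier("  ab12 "), "AB12")

    def test_uppercases_input(self):
        self.assertEqual(normalize_identifier("xy9"), "XY9")


# Run the suite programmatically so the outcome can be inspected in code.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizeIdentifierTest)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
print("all tests passed:", outcome.wasSuccessful())
```

Integration and system tests follow the same pattern but exercise several components together rather than one function in isolation.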

Beyond functional testing, I also prioritize security testing, penetration testing, and performance testing. Security testing identifies vulnerabilities that could be exploited by adversaries. Penetration testing simulates real-world attacks to find weaknesses. Performance testing ensures the system can handle expected and peak loads. Furthermore, thorough documentation of testing procedures and results, including bug tracking and resolution, is vital for maintaining accountability and identifying potential areas for improvement. This meticulous approach ensures software is reliable, secure, and efficient in its mission-critical tasks.

Q 26. How familiar are you with cloud-based solutions for intelligence data storage and analysis?

I am highly familiar with cloud-based solutions for intelligence data storage and analysis. Cloud platforms offer significant advantages, including scalability, cost-effectiveness, and enhanced security features. I have experience with services such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), leveraging their data warehousing, analytics, and machine learning capabilities. For instance, I’ve used Amazon S3 for secure data storage, Amazon EMR for large-scale data processing, and Amazon SageMaker for building and deploying machine learning models.

When working with cloud-based solutions, securing data is paramount. This involves implementing robust access controls, encryption at rest and in transit, and regular security audits to ensure compliance with relevant regulations. Cloud platforms provide a range of security features, but implementing them correctly and maintaining ongoing vigilance is crucial.

Q 27. Describe your understanding of data governance and compliance in the intelligence field.

Data governance and compliance are crucial in the intelligence field due to the sensitive nature of the data handled. This involves establishing clear policies and procedures for data handling, storage, access, and disposal. It’s not just about technical measures but also about establishing a robust organizational culture focused on data integrity and security. Compliance requires adherence to both internal policies and external regulations, which vary significantly depending on the jurisdiction and the type of intelligence activity. Examples include laws related to privacy (e.g., GDPR, CCPA), data security, and national security.

My understanding encompasses implementing access control lists (ACLs), data encryption, and data loss prevention (DLP) measures. Regular data audits ensure adherence to established policies. Data lineage tracking helps understand data flow and provenance. Furthermore, thorough documentation of data governance processes and compliance efforts is critical for demonstrating accountability and transparency. This holistic approach safeguards sensitive information while ensuring responsible data usage.
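A minimal sketch of how an access check and an audit trail might fit together is shown below. The clearance labels, the `Document` and `User` classes, and the `can_read` function are all hypothetical; real systems rely on vetted access-control frameworks rather than hand-rolled checks.

```python
from dataclasses import dataclass, field

# Hypothetical clearance labels, ordered from lowest to highest.
CLEARANCE_ORDER = ["unclassified", "confidential", "secret", "top_secret"]


@dataclass
class Document:
    doc_id: str
    classification: str
    allowed_groups: set = field(default_factory=set)  # the document's ACL


@dataclass
class User:
    name: str
    clearance: str
    groups: set = field(default_factory=set)


audit_log = []  # every decision is recorded for later audits


def can_read(user: User, doc: Document) -> bool:
    """Allow access only if clearance suffices AND the user is on the ACL."""
    cleared = (
        CLEARANCE_ORDER.index(user.clearance)
        >= CLEARANCE_ORDER.index(doc.classification)
    )
    on_acl = bool(user.groups & doc.allowed_groups)
    decision = cleared and on_acl
    audit_log.append((user.name, doc.doc_id, decision))
    return decision


report = Document("rpt-001", "secret", {"region-desk"})
analyst = User("analyst_a", "top_secret", {"region-desk"})
contractor = User("contractor_b", "confidential", {"region-desk"})

print(can_read(analyst, report))
print(can_read(contractor, report))
```

Logging denied requests as well as granted ones is a deliberate choice here: regular audits need to see both to spot misconfigured permissions or probing behavior.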

Key Topics to Learn for Technical Proficiency in Intelligence Software Interview

- Data Analysis & Visualization: Understanding techniques for analyzing large datasets, identifying patterns, and effectively visualizing intelligence findings using tools like Tableau or Power BI. Consider exploring different data visualization best practices for optimal communication of insights.

- Database Management Systems (DBMS): Proficiency in querying and manipulating data within relational (SQL) and NoSQL databases. Practice writing efficient queries and optimizing database performance, and familiarize yourself with common database architectures.

- Programming Languages (Python, R, etc.): Demonstrate competency in at least one relevant programming language, focusing on data manipulation, analysis, and automation tasks within the intelligence context. Prepare examples of your coding skills and problem-solving abilities.

- Machine Learning & AI Applications: Understanding the application of machine learning algorithms (e.g., classification, clustering) for tasks such as anomaly detection, predictive modeling, and pattern recognition within intelligence data. Be prepared to discuss relevant algorithms and their limitations.

- Geospatial Analysis & Mapping: Familiarity with GIS software and techniques for analyzing location-based data, visualizing geographic patterns, and integrating geospatial information into intelligence assessments. Think about how you would use this knowledge to solve real-world problems.

- Data Security & Privacy: Understanding data security protocols, encryption methods, and ethical considerations related to handling sensitive intelligence data. This is a critical area in any intelligence role.

- Open Source Intelligence (OSINT) Techniques: Demonstrate knowledge of methods for collecting and analyzing publicly available information from various sources to support intelligence gathering. Practice explaining your OSINT methodology and its limitations.

Next Steps

Mastering technical proficiency in intelligence software is paramount for a successful and rewarding career in this dynamic field. It unlocks opportunities for impactful work and significant career advancement. To maximize your job prospects, crafting a compelling and ATS-friendly resume is crucial. ResumeGemini offers a trusted platform to build a professional resume that highlights your skills and experience effectively. Take advantage of our resources and examples of resumes tailored to Technical Proficiency in Intelligence Software to showcase your capabilities and land your dream job.
