The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Advanced Manufacturing Systems Modeling interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Advanced Manufacturing Systems Modeling Interview

Q 1. Explain the different types of manufacturing simulation models (discrete event, agent-based, etc.).

Manufacturing simulation models are powerful tools for analyzing and optimizing production processes. Different types of models cater to various levels of complexity and detail. Two prominent types are:

- Discrete Event Simulation (DES): This approach models a system as a sequence of discrete events occurring over time. Each event causes a state change in the system. Think of it like a timeline where you track individual parts moving through a factory. For instance, the arrival of a raw material, the start of a machine operation, or the completion of an assembly are all discrete events. DES is excellent for analyzing throughput, bottlenecks, and resource utilization in manufacturing.

- Agent-Based Simulation (ABS): ABS takes a more granular approach, modeling individual agents (e.g., machines, workers, or even individual parts) that interact with each other and their environment. Agents have their own behaviors, decision-making capabilities, and can adapt to changes. Imagine simulating a whole factory floor where each robot, worker, and conveyor belt is an individual agent making choices and responding to situations. ABS is useful for exploring complex interactions and emergent behavior that might not be apparent with a simpler DES model. For instance, you could use it to study the effects of different communication protocols between agents on overall efficiency.

Other types exist, such as continuous simulation (suitable for chemical processes) and system dynamics (modeling long-term trends), but DES and ABS are most common in manufacturing.
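To make the event-by-event idea behind DES concrete, here is a minimal sketch of a single machine with a first-in, first-out queue. It is an illustration only: the function name, the exponential arrival and service times, and the numbers in the usage line are assumptions, not taken from any particular tool such as Arena or AnyLogic.

```python
import heapq
import random


def simulate_single_machine(n_parts, mean_interarrival, mean_service, seed=0):
    """Minimal discrete event simulation: one machine, one FIFO queue."""
    rng = random.Random(seed)
    events = []  # priority queue of (time, sequence number, kind)
    seq = 0

    # Schedule every arrival up front (assumed exponential interarrival times).
    t = 0.0
    for _ in range(n_parts):
        t += rng.expovariate(1.0 / mean_interarrival)
        heapq.heappush(events, (t, seq, "arrival"))
        seq += 1

    clock = 0.0
    queue = 0          # parts waiting for the machine
    busy = False       # machine state
    busy_time = 0.0
    completed = 0

    while events:
        time, _, kind = heapq.heappop(events)
        if busy:
            busy_time += time - clock  # machine was working since the last event
        clock = time

        if kind == "arrival" and busy:
            queue += 1
        elif kind == "arrival":
            busy = True
            finish = clock + rng.expovariate(1.0 / mean_service)
            heapq.heappush(events, (finish, seq, "departure"))
            seq += 1
        else:  # departure: one part is finished
            completed += 1
            if queue > 0:
                queue -= 1
                finish = clock + rng.expovariate(1.0 / mean_service)
                heapq.heappush(events, (finish, seq, "departure"))
                seq += 1
            else:
                busy = False

    return {
        "completed": completed,
        "makespan": clock,
        "utilization": busy_time / clock,
    }


# Hypothetical inputs: a part arrives every 10 minutes on average, service takes 6.
stats = simulate_single_machine(200, mean_interarrival=10.0, mean_service=6.0)
```

The state only changes when an event is popped from the queue, which is the defining feature of DES; commercial packages wrap the same loop in graphical building blocks.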

Q 2. Describe your experience with discrete event simulation software (e.g., AnyLogic, Arena, Simio).

I have extensive experience with Arena and AnyLogic, two leading discrete event simulation software packages. In my previous role at XYZ Manufacturing, I used Arena to model and optimize our assembly line process. We identified a bottleneck at a specific workstation, which was causing significant delays. The simulation allowed us to experiment with different solutions – adding an extra machine, adjusting worker assignments, or re-sequencing tasks – before implementing any costly changes. This resulted in a 15% increase in throughput and a 10% reduction in lead time.

With AnyLogic, I’ve worked on more complex projects involving agent-based modeling, including a simulation of a large distribution network. This enabled us to optimize inventory levels, transportation routes, and warehouse operations for improved efficiency and reduced costs. The ability of AnyLogic to handle both discrete event and agent-based modeling made it the ideal choice for a project with such multifaceted requirements.

I’m also proficient in Simio, and I’m comfortable using it for both simple and complex projects based on project requirements and client preferences.

Q 3. How do you validate and verify a manufacturing simulation model?

Validation and verification are crucial steps to ensure the credibility of a simulation model. They are distinct processes:

- Verification: This process confirms that the model is built correctly and functions as intended. It checks for coding errors, logical inconsistencies, and whether the model accurately reflects the designed system. Techniques include code reviews, debugging, and unit testing. A simple example would be checking if the simulation correctly calculates the time it takes for a part to move between two workstations based on the defined speed.

- Validation: This process confirms that the model accurately represents the real-world system it’s meant to simulate. This often involves comparing the simulation output to real-world data. For example, we might compare the simulated throughput to historical production data. If there’s a significant discrepancy, the model needs to be refined. Statistical methods such as a confidence interval on the replication means or a two-sample t-test can show whether the gap between simulated and observed output is larger than run-to-run noise.

A common approach is iterative. We start with a simplified model, verify its correctness, and then progressively add complexity, validating at each stage against real-world observations. This ensures that the final model is both accurate and reliable.
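One simple way to carry out the validation comparison described above is to build a confidence interval from several independent replications and check whether the historical figure falls inside it. The sketch below assumes ten replications and made-up throughput numbers; the function name is hypothetical, and the t critical value (2.262 for 9 degrees of freedom at 95%) is passed in rather than computed.

```python
import statistics


def validate_against_history(sim_outputs, historical_mean, t_critical):
    """Return the confidence interval of the simulated mean and whether
    the historical mean lies inside it."""
    mean = statistics.mean(sim_outputs)
    std_error = statistics.stdev(sim_outputs) / len(sim_outputs) ** 0.5
    half_width = t_critical * std_error
    interval = (mean - half_width, mean + half_width)
    return interval, interval[0] <= historical_mean <= interval[1]


# Made-up daily throughput from 10 simulation replications.
replications = [98, 102, 101, 99, 100, 103, 97, 100, 101, 99]

# Hypothetical historical averages to compare against.
interval, plausible = validate_against_history(replications, 100.8, t_critical=2.262)
_, implausible = validate_against_history(replications, 105.0, t_critical=2.262)
```

A historical value outside the interval does not say what is wrong with the model, only that the difference is unlikely to be sampling noise and the model deserves another look.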

Q 4. What are the key performance indicators (KPIs) you would track in a manufacturing simulation?

The key performance indicators (KPIs) tracked in a manufacturing simulation vary based on the project’s objectives, but common metrics include:

- Throughput: The number of units produced per unit time.

- Lead time: The time it takes for a product to move through the entire manufacturing process.

- Work-in-progress (WIP): The amount of inventory at different stages of the process.

- Utilization: The percentage of time machines or workers are actively working.

- Cycle time: The time a station or line takes to complete one unit, which sets the interval between successive finished units.

- Bottleneck identification: Pinpointing areas in the production process that limit overall capacity.

- Inventory costs: The cost associated with holding raw materials and finished goods.

- Cost per unit: The total cost of production divided by the number of units produced.

By monitoring these KPIs, we can identify areas for improvement and assess the impact of different changes on the overall performance of the manufacturing system.

Q 5. Explain the concept of a digital twin in manufacturing.

A digital twin in manufacturing is a virtual representation of a physical asset or system. It’s a dynamic model that mirrors the behavior of its real-world counterpart, allowing for real-time monitoring, analysis, and optimization. Imagine having a virtual copy of your entire factory, updated constantly with real-time data from sensors and other sources.

This virtual copy can be used for a wide range of purposes, including:

- Predictive maintenance: Identifying potential equipment failures before they occur.

- Process optimization: Simulating different scenarios to identify optimal operating parameters.

- Training and education: Providing a safe and cost-effective environment for operator training.

- Virtual commissioning: Testing and validating new equipment or processes before they’re deployed in the real world.

The digital twin leverages simulation techniques to predict the impact of changes, allowing for more informed decision-making and continuous improvement.

Q 6. How can simulation be used to optimize production scheduling?

Simulation is a powerful tool for optimizing production scheduling. By modeling the entire manufacturing process, we can experiment with different scheduling algorithms and strategies without disrupting real-world operations. For example, we might compare the performance of:

- First-come, first-served (FCFS): A simple approach where jobs are processed in the order they arrive.

- Shortest processing time (SPT): Prioritizing jobs with the shortest processing times, which minimizes the average time jobs spend in the system (mean flow time) on a single machine, at the risk of delaying long jobs.

- Earliest due date (EDD): Prioritizing jobs based on their due dates to reduce tardiness.

- Critical ratio scheduling (CRS): Prioritizing the job with the smallest ratio of time remaining until its due date to its remaining processing time. A ratio below 1 means the job is on course to be late.

The simulation allows us to evaluate each scheduling approach under various conditions (e.g., machine breakdowns, varying job arrival rates, changes in product mix) and choose the strategy that maximizes throughput, minimizes lead times, reduces inventory, and balances other objectives.
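The four rules listed above can be compared on a toy problem before building a full model. The sketch below assumes a single machine with all jobs available at time zero; the job data, the `Job` type, and the function names are invented for illustration.

```python
from typing import NamedTuple


class Job(NamedTuple):
    name: str
    processing: float  # hours of work
    due: float         # due time, in hours from now


def evaluate(sequence):
    """Mean flow time and maximum tardiness for jobs run in the given order."""
    clock = 0.0
    flow_times, tardiness = [], []
    for job in sequence:
        clock += job.processing
        flow_times.append(clock)
        tardiness.append(max(0.0, clock - job.due))
    return {
        "mean_flow_time": sum(flow_times) / len(flow_times),
        "max_tardiness": max(tardiness),
    }


def critical_ratio_sequence(jobs):
    """Repeatedly pick the job with the smallest (time until due) / (processing time)."""
    remaining, clock, sequence = list(jobs), 0.0, []
    while remaining:
        nxt = min(remaining, key=lambda j: (j.due - clock) / j.processing)
        remaining.remove(nxt)
        sequence.append(nxt)
        clock += nxt.processing
    return sequence


# Hypothetical job list, in arrival order.
jobs = [Job("A", 6, 8), Job("B", 2, 6), Job("C", 8, 18), Job("D", 3, 15), Job("E", 9, 23)]

rules = {
    "FCFS": list(jobs),
    "SPT": sorted(jobs, key=lambda j: j.processing),
    "EDD": sorted(jobs, key=lambda j: j.due),
    "CR": critical_ratio_sequence(jobs),
}
results = {name: evaluate(sequence) for name, sequence in rules.items()}
```

In this static single-machine setting, SPT gives the lowest mean flow time and EDD the lowest maximum tardiness. A simulation becomes necessary once breakdowns, multiple machines, and random arrivals break those textbook guarantees.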

Q 7. Describe your experience with optimization algorithms used in manufacturing.

I have experience with several optimization algorithms relevant to manufacturing. These algorithms are often used in conjunction with simulation to find optimal solutions. Some examples include:

- Linear Programming (LP): Used for problems where the objective function and constraints are linear. This is suitable for optimizing resource allocation, production planning, and transportation networks, particularly when dealing with a simpler, well-defined problem.

- Mixed Integer Programming (MIP): An extension of LP that handles both continuous and integer variables. This is useful when dealing with discrete decisions, such as machine scheduling or capacity planning.

- Genetic Algorithms (GA): Evolutionary algorithms that mimic natural selection to find near-optimal solutions to complex problems. They are particularly well-suited for problems with non-linearity and many constraints, such as optimizing complex production sequences.

- Simulated Annealing (SA): A probabilistic metaheuristic that explores the solution space by accepting some worse solutions with a probability that shrinks as the search proceeds. This lets it escape the local optima that are common in complex scheduling and layout problems.

The choice of algorithm depends on the specific optimization problem and the characteristics of the manufacturing system. Often, a combination of different techniques may be employed to achieve the best results. I am skilled in selecting and applying the most appropriate algorithm for any given manufacturing optimization challenge.
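As an illustration of the metaheuristic family, here is a small simulated annealing sketch that sequences jobs on one machine to reduce total tardiness. The job data, the swap neighbourhood, and the cooling parameters are all assumptions chosen for readability; in practice they would need tuning for the problem at hand.

```python
import math
import random


def total_tardiness(sequence, processing, due):
    """Sum of lateness beyond the due date over all jobs in the sequence."""
    clock, total = 0, 0
    for job in sequence:
        clock += processing[job]
        total += max(0, clock - due[job])
    return total


def anneal(processing, due, iterations=5000, start_temp=10.0, cooling=0.999, seed=1):
    rng = random.Random(seed)
    current = list(range(len(processing)))
    current_cost = total_tardiness(current, processing, due)
    best, best_cost = current[:], current_cost
    temp = start_temp

    for _ in range(iterations):
        # Neighbour: swap two randomly chosen positions.
        i, j = rng.sample(range(len(current)), 2)
        candidate = current[:]
        candidate[i], candidate[j] = candidate[j], candidate[i]
        cost = total_tardiness(candidate, processing, due)
        delta = cost - current_cost

        # Always accept improvements; accept worse moves with probability exp(-delta / temp).
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            current, current_cost = candidate, cost
            if cost < best_cost:
                best, best_cost = candidate[:], cost
        temp *= cooling  # geometric cooling schedule

    return best, best_cost


# Hypothetical processing times and due dates (same index = same job).
processing = [4, 7, 2, 9, 5, 3]
due = [10, 12, 6, 30, 14, 8]
best_sequence, best_cost = anneal(processing, due)
```

The same loop works when the cost function is a full simulation run instead of a formula, which is how optimization and simulation are typically coupled.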

Q 8. How do you handle uncertainty and variability in manufacturing simulation models?

Uncertainty and variability are inherent in manufacturing. We can’t predict exactly when a machine will fail or how long a task will take. In simulation, we address this using several techniques. One common approach is Monte Carlo simulation, where we use probability distributions to model uncertain parameters. Instead of using single values for things like processing times or machine uptimes, we define ranges and probabilities. The simulation then runs many times, each time sampling from these distributions, generating a range of possible outcomes. This provides a much more realistic picture than a deterministic model would.

For instance, if a machine’s downtime is usually between 1 and 3 hours, we wouldn’t just use 2 hours. Instead, we might use a triangular distribution with a minimum of 1 hour, a maximum of 3 hours, and a most likely value of 2 hours. The simulation software then draws a fresh downtime value from this distribution each time a failure occurs, reflecting the real-world randomness. Another powerful technique is to feed historical data directly into the model as an empirical distribution, which reproduces the observed variability without assuming a particular shape. When real-world data is limited, bootstrapping (resampling the observations with replacement) shows how much the estimates could vary.
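The triangular downtime example can be sketched with Python's standard library. The shift length, production rate, and 30% chance of a failure per shift are invented for illustration; only the 1, 2, and 3 hour downtime figures come from the text above.

```python
import random
import statistics


def simulate_shift(rng, shift_hours=8.0, parts_per_hour=20.0, failure_prob=0.3):
    """Parts produced in one shift, with at most one random breakdown (assumed)."""
    downtime = 0.0
    if rng.random() < failure_prob:
        # Triangular downtime: minimum 1 h, maximum 3 h, most likely 2 h.
        downtime = rng.triangular(1.0, 3.0, 2.0)
    return (shift_hours - downtime) * parts_per_hour


rng = random.Random(42)
outputs = [simulate_shift(rng) for _ in range(10_000)]

summary = {
    "mean": statistics.mean(outputs),
    "p5": statistics.quantiles(outputs, n=20)[0],  # 5th percentile
    "min": min(outputs),
    "max": max(outputs),
}
```

Reporting a low percentile alongside the mean is often more useful to planners than the mean alone, because it shows what a bad shift looks like.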

These sampled values are usually fed into a discrete event simulation (DES), which models the dynamic nature of the system by tracking the timing of the events that trigger changes in it. This allows us to account for unexpected delays or interruptions realistically, including their knock-on effects downstream.

Q 9. Explain the role of data analytics in manufacturing system modeling.

Data analytics plays a crucial role in making manufacturing system models more accurate, insightful, and useful. It provides the foundation for realistic simulation. Think of it as providing the ‘fuel’ for the simulation ‘engine’.

Firstly, data analytics helps in parameter estimation. We leverage historical production data – machine run times, defect rates, material usage, and downtime – to accurately populate the simulation model. This data is used to build probability distributions (as mentioned before) or to directly input empirical distributions. This makes the simulation much more aligned with reality.

Secondly, data analytics helps in model validation. After running the simulation, we compare the output (e.g., throughput, cycle time, inventory levels) to actual historical data. This comparison helps us assess how well the model represents the real manufacturing system. Significant discrepancies point to areas that need refinement in the model.

Thirdly, data analytics can be used to identify patterns and trends in the production data, which might not be immediately apparent. This can highlight potential areas for improvement or risks, further informing the design and parameters of the simulation model.

For example, if we are modeling an injection molding machine, data analytics could reveal that failures tend to occur more frequently on Mondays after long weekends, which should be incorporated in the model for accurate representation of the machine’s behavior.
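When only a small sample of historical data is available, the bootstrap gives a rough sense of how uncertain an estimated input parameter is. The sketch below uses a percentile bootstrap on made-up machine run times; the function name and the data are hypothetical.

```python
import random
import statistics


def bootstrap_mean_interval(samples, n_resamples=2000, alpha=0.05, seed=7):
    """Percentile bootstrap interval for the mean of a small sample."""
    rng = random.Random(seed)
    means = sorted(
        statistics.fmean(rng.choices(samples, k=len(samples)))  # resample with replacement
        for _ in range(n_resamples)
    )
    lower = means[int(n_resamples * alpha / 2)]
    upper = means[int(n_resamples * (1 - alpha / 2)) - 1]
    return lower, upper


# Made-up run times (minutes) for one machine, e.g. pulled from production logs.
run_times = [12.1, 11.8, 13.4, 12.9, 15.2, 11.5, 12.2, 14.8, 12.0, 13.1, 12.6, 16.0]
low, high = bootstrap_mean_interval(run_times)
```

A wide interval suggests collecting more data before trusting the simulation's conclusions about that machine.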

Q 10. How do you identify bottlenecks in a manufacturing process using simulation?

Identifying bottlenecks in a manufacturing process using simulation is a straightforward yet powerful application. The key is to measure resource utilization and queue lengths.

A bottleneck is a point in the process that limits the overall throughput or efficiency. During a simulation run, the software tracks how busy each resource (machines, workers, storage areas) is. A resource with consistently high utilization (close to 100%) and a large queue of jobs waiting to be processed is likely a bottleneck. The simulation also allows us to observe the flow of materials and products, helping us visually identify these bottlenecks.

Step-by-step process:

- Run the simulation: Simulate the manufacturing process under normal operating conditions.

- Analyze resource utilization: Identify resources with consistently high utilization (near 100%).

- Examine queue lengths: Observe the length of queues in front of high-utilization resources. Long queues indicate a significant bottleneck.

- Analyze throughput: Observe the overall throughput of the system. A bottleneck will significantly limit throughput.

- Investigate further: Once bottlenecks are identified, the simulation can be used to test different solutions such as adding capacity, improving efficiency of the bottleneck resource, or re-sequencing tasks.

For example, if a simulation shows a specific assembly station consistently at 95% utilization and a large queue of partially assembled products, this clearly pinpoints a bottleneck. This then allows for targeted interventions like adding another worker to the station, optimizing the assembly steps, or investing in a faster assembly machine.
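The utilization and queue checks in the steps above can be sketched on a small serial line. This example assumes three stations with unlimited buffers, exponential service times, and invented mean times; the station with the highest utilization is reported as the likely bottleneck.

```python
import random


def simulate_line(mean_service, mean_interarrival, n_jobs=500, seed=3):
    """Serial production line, FIFO at every station, unlimited buffers."""
    rng = random.Random(seed)
    n_stations = len(mean_service)
    free_at = [0.0] * n_stations  # time each station finishes its current job
    busy = [0.0] * n_stations     # accumulated processing time per station
    waited = [0.0] * n_stations   # accumulated queueing time per station

    arrival = 0.0
    for _ in range(n_jobs):
        arrival += rng.expovariate(1.0 / mean_interarrival)
        ready = arrival  # time the job is ready for the next station
        for s in range(n_stations):
            start = max(ready, free_at[s])
            waited[s] += start - ready
            service = rng.expovariate(1.0 / mean_service[s])
            busy[s] += service
            free_at[s] = start + service
            ready = free_at[s]

    makespan = free_at[-1]
    return [
        {
            "station": s,
            "utilization": busy[s] / makespan,
            "mean_wait": waited[s] / n_jobs,
        }
        for s in range(n_stations)
    ]


# Hypothetical line: mean service times of 4, 9 and 5 minutes, one job every 10 minutes.
report = simulate_line([4.0, 9.0, 5.0], mean_interarrival=10.0)
bottleneck = max(report, key=lambda row: row["utilization"])
```

Rerunning with a shorter mean time at the flagged station (for example, after adding a second worker) shows whether the bottleneck simply moves to the next-slowest station.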

Q 11. Describe your experience with different types of manufacturing layouts (e.g., U-shaped, cellular).

I have extensive experience with various manufacturing layouts. The choice of layout significantly impacts efficiency and workflow. Let’s look at U-shaped and cellular layouts as examples.

U-shaped layouts are effective for smaller production volumes and are designed to minimize material handling and movement. The U-shape allows for improved worker interaction, easier flow of work, and reduced travel distance. This layout is particularly useful for lean manufacturing principles, as it fosters continuous improvement and visual management. I’ve used simulation to compare U-shaped layouts to linear layouts in various projects, showing consistent improvements in throughput and reduced lead times for U-shaped configurations.

Cellular layouts group machines and workers together to produce a family of similar parts. This reduces material handling, setup times, and work-in-progress inventory. It’s well-suited for mass customization, where a variety of products are produced in relatively small batches. The simulation’s role is to optimize the size and organization of these cells. We can test different groupings of machines and even assign workers to cells in various combinations to find the layout that maximizes output and minimizes changeover times. In one project, simulating various cell arrangements led to a 15% reduction in cycle time for a specific product family.

Beyond U-shaped and cellular, I’m also proficient in modeling fixed-position, process, and product layouts, tailoring the choice to the specific manufacturing process being simulated.

Q 12. How do you incorporate human factors into manufacturing simulation models?

Incorporating human factors into manufacturing simulation models is critical for realism and accuracy. Ignoring human elements like fatigue, variability in work performance, or breaks can lead to unrealistic simulation results.

Several ways to achieve this include:

- Modeling worker performance variability: Instead of assuming a constant work rate, we can use probability distributions to model the variability in individual worker performance based on factors such as experience, skill, and fatigue. This adds realism to the simulation.

- Modeling breaks and downtime: We can incorporate scheduled and unscheduled breaks for workers, factoring in time for lunch, restroom breaks, and other factors. This significantly impacts overall productivity.

- Modeling human errors: Simulation can incorporate the probability of human errors, such as incorrect assembly, and the resulting delays or rework. This aspect is often crucial for ensuring the model isn’t overly optimistic.

- Ergonomics and workspace layout: Simulation can help optimize workspace layout, considering factors like reach distances, posture, and movement to minimize physical strain and improve worker comfort and efficiency.

- Using agent-based modeling: For more complex human-machine interaction, agent-based modeling can simulate the behavior of individual workers, taking into account their decision-making and interactions with each other and the machines.

For example, in a car assembly line simulation, incorporating human error rates, fatigue effects from repetitive tasks, and scheduled breaks can significantly impact the simulated production rate, making the simulation more realistic and the results more valuable for decision-making.

Q 13. Explain the concept of throughput time and its importance in manufacturing.

Throughput time is the total time it takes for a product or job to move through the entire manufacturing process, from start to finish. This includes processing time, queueing time (waiting time), and transportation time. It’s a critical metric for assessing the efficiency and responsiveness of a manufacturing system.

Its importance stems from several factors:

- Inventory management: A shorter throughput time reduces work-in-progress (WIP) inventory, freeing up capital and reducing storage costs.

- Lead time reduction: Faster throughput times enable quicker delivery to customers, improving customer satisfaction and competitiveness.

- Cost reduction: Reducing throughput time generally translates into lower production costs due to lower inventory holding costs and less time spent on processing each unit.

- Improved efficiency: A shorter throughput time signals greater efficiency in utilizing resources and optimizing the manufacturing process.

For example, imagine a bakery. The throughput time is the total time from when the ingredients are received until the finished bread is ready for sale. Reducing this time might involve optimizing oven scheduling, streamlining mixing processes, or improving the flow of baked goods to the sales area. In a simulation, we can model various process improvements to see their impact on the throughput time and make data-driven decisions to optimize the process.
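The link between throughput time and WIP mentioned above is usually expressed through Little's Law, which holds for long-run averages in a stable system. The bakery figures below (120 loaves per hour, 3 hours reduced to 2) are invented purely to show the arithmetic.

```latex
\[
\text{WIP} \;=\; \text{throughput rate} \times \text{throughput time}
\qquad\Longleftrightarrow\qquad
\text{throughput time} \;=\; \frac{\text{WIP}}{\text{throughput rate}}
\]

% Hypothetical bakery producing 120 loaves per hour:
\[
120\ \tfrac{\text{loaves}}{\text{h}} \times 3\ \text{h} = 360\ \text{loaves in process},
\qquad
120\ \tfrac{\text{loaves}}{\text{h}} \times 2\ \text{h} = 240\ \text{loaves in process}.
\]
```

At the same output rate, cutting throughput time by a third removes a third of the work-in-progress, which is why the two metrics are tracked together.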

Q 14. How do you use simulation to evaluate the impact of different equipment configurations?

Simulation is invaluable for evaluating the impact of different equipment configurations. Before investing in new equipment or rearranging existing machinery, we can use simulation to predict the effects on key performance indicators (KPIs).

The process typically involves:

- Baseline model: First, we build a detailed simulation model of the current manufacturing system.

- Alternative configurations: Then, we create alternative models representing different equipment configurations. This might involve adding new machines, upgrading existing ones, rearranging the layout, or changing the number of workers assigned to different areas.

- Simulation runs: We run the simulation for each configuration, collecting data on KPIs such as throughput, cycle time, utilization rates, and work-in-progress inventory.

- Comparison and analysis: Finally, we compare the results from different configurations to identify the best option, based on the chosen KPI criteria.

For instance, consider a company considering buying a new, faster milling machine. Using simulation, we can model the current system with and without the new machine and compare the throughput and cycle times for various product types. The simulation will show the potential improvement in production, allowing informed decisions on the financial viability of the investment. The simulation will also highlight potential bottlenecks that could emerge as a result of the new machine, allowing us to proactively address them.

Q 15. Describe your experience with designing experiments for manufacturing simulations.

Designing experiments for manufacturing simulations involves a structured approach to extract meaningful insights. It starts with clearly defining the objectives – what specific questions are we trying to answer? Are we optimizing throughput, minimizing defect rates, or evaluating the impact of a new machine? Once the objectives are clear, we identify the key input parameters that influence the outcome. These might include machine speeds, buffer sizes, arrival rates of raw materials, or even the skill levels of operators.

Next, we choose the experimental design. Common Design of Experiments (DOE) techniques include full factorial designs (testing all possible combinations of input parameter levels), fractional factorial designs (testing a structured subset, which is more efficient when there are many parameters), and Taguchi methods (aimed at making performance robust to noise factors). The choice of design depends on the complexity of the model and the resources available. Each experiment involves setting the input parameters to specific values and running the simulation, usually with several replications. The results, often multiple performance metrics, are then analyzed statistically to understand the impact of each input parameter and their interactions.

For example, in a simulation of a semiconductor fabrication plant, we might use a fractional factorial design to investigate the effect of different wafer processing times, equipment maintenance schedules, and operator training programs on overall production yield and cycle time. Analyzing the simulation results would then help us identify the most effective combination of these factors.
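The difference between a full and a fractional factorial design is easy to see when the run lists are generated in code. The sketch below uses three invented two-level factors; the half-fraction is built with the common rule that the last factor's coded level is the product of the others.

```python
from itertools import product


def full_factorial(factors):
    """Every combination of the listed levels, one dict per simulation run."""
    names = list(factors)
    return [
        dict(zip(names, combo))
        for combo in product(*(factors[name] for name in names))
    ]


def half_fraction(names):
    """2^(k-1) design in coded levels (-1 = low, +1 = high).

    The last factor is set to the product of the others, so its main effect
    is aliased with their highest-order interaction.
    """
    runs = []
    for combo in product((-1, 1), repeat=len(names) - 1):
        last = 1
        for level in combo:
            last *= level
        runs.append(dict(zip(names, combo + (last,))))
    return runs


# Hypothetical factors and levels for a fab simulation.
factors = {
    "processing_time_min": [40, 50],
    "maintenance_interval_h": [100, 200],
    "operator_training": ["basic", "advanced"],
}
full_runs = full_factorial(factors)        # 2 x 2 x 2 = 8 runs
half_runs = half_fraction(list(factors))   # 4 runs in coded levels
```

The half-fraction halves the simulation effort at the cost of not being able to separate some effects from each other, which is usually acceptable for an initial screening study.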

Q 16. How do you present simulation results to stakeholders?

Presenting simulation results to stakeholders requires clear and concise communication, tailored to their level of technical expertise. I avoid overwhelming them with raw data. Instead, I focus on visually appealing dashboards and reports that highlight key findings. This often involves using charts and graphs to illustrate trends, performance metrics, and the impact of different scenarios.

For example, instead of presenting a massive table of simulation data, I might use a bar chart to show the improvement in throughput achieved by implementing a proposed change. Similarly, I might use a line chart to illustrate the impact of varying buffer sizes on inventory levels. Animations or interactive visualizations can also greatly enhance understanding, especially for less technical stakeholders. A concise executive summary that summarizes the key insights and recommendations is crucial. I also make sure to address any questions or concerns they might have in a clear and accessible manner, fostering a dialogue rather than simply presenting findings.

Q 17. Explain the difference between deterministic and stochastic simulation models.

The core difference lies in how they handle uncertainty. A deterministic simulation model uses fixed, known values for all input parameters. The output is therefore predictable and repeatable. Every time you run the simulation with the same inputs, you get the same results. Think of a simple calculator – you input numbers, and you get a predictable output. In manufacturing, this could model a perfectly efficient assembly line with no variation in processing times or material defects.

In contrast, a stochastic simulation model incorporates randomness and uncertainty. Input parameters are represented by probability distributions, reflecting real-world variability. This means that each simulation run produces different results, even with the same initial conditions, unless the random number seed is fixed. For that reason, stochastic models are run for many replications and the results are reported as averages with confidence intervals. Imagine simulating customer arrivals at a restaurant: arrival times aren’t perfectly predictable, and a stochastic model would reflect this using a probability distribution. In manufacturing, stochastic models better represent real-world scenarios like machine breakdowns, variations in raw material quality, and unpredictable worker performance.
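A short sketch can make the contrast concrete. Both functions below count the parts one station finishes in a shift; the deterministic version uses a fixed cycle time, while the stochastic version draws each cycle time from an exponential distribution. The function names, the 480-minute shift, and the choice of distribution are assumptions for illustration.

```python
import random


def deterministic_output(cycle_time_min, shift_min=480):
    """Fixed cycle time: the same inputs always give the same count."""
    return shift_min // cycle_time_min


def stochastic_output(mean_cycle_time_min, shift_min=480, seed=None):
    """Random cycle times: the count varies from run to run unless the seed is fixed."""
    rng = random.Random(seed)
    clock, parts = 0.0, 0
    while True:
        clock += rng.expovariate(1.0 / mean_cycle_time_min)
        if clock > shift_min:
            return parts
        parts += 1


fixed = deterministic_output(4)
replications = [stochastic_output(4, seed=s) for s in range(20)]
repeatable = stochastic_output(4, seed=1) == stochastic_output(4, seed=1)
```

Fixing the seed is useful for debugging and for comparing scenarios on the same random inputs, while varying it across replications is what produces the spread of outcomes.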

Q 18. How do you handle unexpected events or disruptions in a simulation model?

Handling unexpected events or disruptions in a simulation model requires incorporating elements of flexibility and robustness into the model itself. One approach is to explicitly model these disruptions as part of the input parameters. For example, we might include a probability of machine failure based on historical maintenance data. This allows the simulation to naturally account for these events and their impact on the overall system performance.

Another approach is to use discrete event simulation, which allows for dynamic adjustments based on events. When a disruption occurs (e.g., a machine malfunction), the model can adjust its parameters or execution path to reflect the change and continue the simulation. This might involve diverting work to other machines, adjusting production schedules, or analyzing the impact on overall throughput. In certain cases, sophisticated agent-based models can simulate human decision-making in response to these disruptions.

Finally, sensitivity analysis is crucial. By understanding how sensitive the system is to different types of disruptions, we can prioritize mitigation strategies and identify system vulnerabilities. This allows for proactive planning and improved resilience in the face of unexpected events.

Q 19. Describe your experience with model calibration and parameter estimation.

Model calibration and parameter estimation are critical steps in developing accurate and reliable simulation models. Calibration involves adjusting model parameters to match the behavior observed in real-world data. Parameter estimation involves determining the values of these parameters based on available data, often using statistical techniques like maximum likelihood estimation or Bayesian methods.

For instance, let’s say we’re simulating a chemical reactor. We have historical data on the reaction rate and product yield. We would then calibrate the model’s kinetic parameters (e.g., reaction rate constants) to match this historical data. This could involve adjusting parameters iteratively, comparing simulated results against real-world data, and using statistical measures to quantify the goodness of fit. Bayesian methods are especially useful when prior knowledge or expert opinions about parameters are available.

The goal is to find a set of parameters that minimizes the discrepancy between the simulated and real-world data while maintaining a reasonable level of complexity in the model. This process ensures that our simulation reflects the real-world system as accurately as possible.
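As a small example of maximum likelihood estimation, the sketch below fits an exponential distribution to times between machine failures. For the exponential distribution the estimate has a closed form (the number of observations divided by their sum). The failure data is made up, and the exponential assumption would need checking against real data.

```python
import math


def exponential_mle_rate(times):
    """Maximum likelihood estimate of the exponential rate: n / sum of observations."""
    return len(times) / sum(times)


def exponential_log_likelihood(rate, times):
    """Log-likelihood of the data under an exponential distribution with this rate."""
    return len(times) * math.log(rate) - rate * sum(times)


# Made-up hours between successive failures of one machine.
hours_between_failures = [35.0, 52.0, 18.0, 77.0, 41.0, 29.0]

rate = exponential_mle_rate(hours_between_failures)
mtbf = 1.0 / rate  # mean time between failures implied by the fit
```

The fitted rate can then be used as the failure distribution in the simulation, and the log-likelihood function allows it to be compared against other candidate values or distributions.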

Q 20. How do you assess the sensitivity of a simulation model to input parameters?

Assessing the sensitivity of a simulation model to input parameters is crucial to understand which parameters have the most significant impact on the output. This helps prioritize efforts in data collection, parameter estimation, and model validation. Several techniques are used for sensitivity analysis. One common approach is to systematically vary each input parameter individually, while holding others constant. We then observe the resulting changes in the output variables. This helps identify parameters with high sensitivity – small changes in these parameters lead to large changes in the output.

More sophisticated techniques like variance-based methods (e.g., Sobol indices) can quantify the contribution of individual parameters and their interactions to the output variance. These methods are particularly useful for models with many input parameters and complex interactions. The results of the sensitivity analysis are usually summarized in tables or charts, showing which parameters are most influential. This information guides model simplification (reducing the number of parameters) and informs resource allocation for data collection and validation.

For example, in a supply chain simulation, sensitivity analysis might reveal that lead times are far more influential than unit costs, suggesting that improving lead time prediction accuracy is a higher priority.
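The one-at-a-time approach described above can be sketched in a few lines. The toy throughput model, its parameter names, and the baseline values are all hypothetical stand-ins for a real simulation run.

```python
def line_throughput(rate_a, rate_b, availability):
    """Toy model: two stations in series; the slower one limits output."""
    return min(rate_a, rate_b) * availability


def one_at_a_time(model, baseline, change=0.10):
    """Relative change in output when each input is raised by `change`, one at a time."""
    base_output = model(**baseline)
    effects = {}
    for name, value in baseline.items():
        perturbed = dict(baseline, **{name: value * (1 + change)})
        effects[name] = (model(**perturbed) - base_output) / base_output
    return effects


# Hypothetical baseline: station rates in parts per hour, availability as a fraction.
baseline = {"rate_a": 60.0, "rate_b": 45.0, "availability": 0.9}
effects = one_at_a_time(line_throughput, baseline)
```

Here speeding up the non-bottleneck station has no effect at all, while the other two inputs move output one-for-one. One-at-a-time analysis cannot see interactions between inputs, which is where variance-based methods such as Sobol indices come in.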

Q 21. Explain your experience with different types of manufacturing processes (e.g., batch, continuous, job shop).

I have extensive experience modeling various manufacturing processes. Batch processing, common in pharmaceuticals or food production, involves producing goods in discrete batches. Simulation focuses on optimizing batch sizes, setup times, and processing sequences to maximize throughput and minimize cycle time. I’ve used Arena and AnyLogic to model these scenarios, focusing on factors like equipment utilization and in-process inventory.

Continuous processing, typical in chemical processing or oil refining, involves a continuous flow of materials. Models concentrate on process stability, optimizing flow rates, and maintaining product quality. My experience includes using specialized simulation tools to model chemical reactions and fluid dynamics within these continuous processes.

Finally, job shop processing, prevalent in machine shops or custom manufacturing, involves diverse products with varying processing routes. Modeling focuses on scheduling optimization, minimizing makespan (total time to complete all jobs), and balancing machine utilization. I’ve used simulation software like FlexSim to optimize job sequencing and resource allocation in job shop environments. My experience spans various software platforms and techniques, allowing me to adapt to the specific requirements of each manufacturing process.

Q 22. What are the limitations of manufacturing simulation models?

Manufacturing simulation models, while powerful tools, have inherent limitations. One key limitation is that every model simplifies reality. Models rely on assumptions and abstractions, and the accuracy of the results directly depends on the validity of these assumptions. For example, a model might assume constant processing times for a machine, while in reality, these times can fluctuate due to various factors like tool wear, material variations, and operator skill.

Another limitation stems from data availability and quality. Accurate simulation requires comprehensive and reliable data on machine capabilities, production volumes, material handling times, and potential bottlenecks. Incomplete or inaccurate data can lead to misleading or unreliable results. Similarly, unforeseen events, like machine breakdowns or unexpected supply chain disruptions, are often difficult to incorporate realistically into a model.

Finally, the complexity of the model itself can be a limiting factor. Highly detailed models can be computationally expensive and time-consuming to build and run. Finding the right balance between model detail and computational feasibility is a crucial challenge. Oversimplification leads to inaccurate results, while excessive detail may render the model impractical.

Q 23. How do you choose the appropriate level of detail for a manufacturing simulation?

Choosing the appropriate level of detail for a manufacturing simulation is a critical decision. It’s a balancing act between accuracy and efficiency. Too much detail can lead to a computationally expensive and time-consuming model, while too little detail may fail to capture important aspects of the system. The selection depends heavily on the objectives of the simulation.

For example, if the goal is to assess the impact of a new machine on overall throughput, a high level of detail might be necessary for that specific machine, but other areas can be represented more simply. Conversely, if the objective is to evaluate the effectiveness of a new layout, a more detailed representation of the physical layout and material handling systems might be crucial.

A common approach is to start with a simplified model and incrementally add detail as needed. This allows for early validation of the model and avoids getting bogged down in unnecessary complexity. Sensitivity analysis can help identify which parameters significantly affect the results, guiding the prioritization of detail in subsequent iterations. Using a modular approach, where different parts of the system can be modeled with varying levels of detail, also enhances flexibility and control.
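As a rough illustration of how sensitivity analysis can guide where to add detail, the sketch below perturbs one input at a time by 10% and records the effect on output. The toy throughput model, station names, and rates are hypothetical stand-ins for a real simulation run.

```python
def line_throughput(rates):
    """Toy model: a serial line runs at the pace of its slowest station (parts/hour)."""
    return min(rates.values())


def one_at_a_time_sensitivity(model, baseline, delta=0.10):
    """Largest absolute output change when each input alone is moved by +/- delta."""
    base_output = model(baseline)
    effects = {}
    for name, value in baseline.items():
        changes = []
        for factor in (1 - delta, 1 + delta):
            trial = dict(baseline, **{name: value * factor})
            changes.append(abs(model(trial) - base_output))
        effects[name] = max(changes)
    return effects


# Hypothetical station rates in parts per hour.
BASELINE = {"cutting": 60.0, "welding": 45.0, "painting": 55.0}

effects = one_at_a_time_sensitivity(line_throughput, BASELINE)
for station, effect in sorted(effects.items(), key=lambda item: -item[1]):
    print(f"{station}: output changes by up to {effect:.1f} parts/hour")
```

In this toy case only the welding rate moves the result, so that station would be the first candidate for a more detailed representation. One-at-a-time analysis ignores interactions between inputs, so it is a screening step rather than a complete study.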

Q 24. Describe your experience with integrating simulation models with other enterprise systems (e.g., ERP, MES).

I have extensive experience integrating manufacturing simulation models with enterprise systems like ERP and MES. In one project, we linked a discrete event simulation model of a semiconductor fabrication plant with the client’s MES. This integration allowed us to feed real-time production data, such as machine downtime and work-in-progress inventory, directly into the simulation model, which improved the model’s accuracy and gave a more dynamic and realistic representation of the manufacturing process.

The integration typically involves developing custom interfaces and APIs to exchange data between the simulation model and the enterprise systems. We used standard data formats like XML and JSON to facilitate interoperability. Data transformation and validation are crucial steps to ensure data consistency and integrity, and security considerations are paramount to protect sensitive data. With live data flowing in, our simulations could incorporate real-time constraints, and we used them to predict potential bottlenecks and optimize production schedules, ultimately improving overall equipment effectiveness (OEE).
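The transformation and validation step might look something like the following sketch. The field names (`machine_id`, `downtime_min`, `wip_units`) and the record layout are hypothetical; a real MES export will have its own schema.

```python
import json

# Hypothetical record layout; a real MES export will differ.
REQUIRED_FIELDS = {
    "machine_id": str,
    "downtime_min": (int, float),
    "wip_units": int,
}


def parse_mes_record(raw_json):
    """Validate one JSON record and convert it into simulation inputs."""
    record = json.loads(raw_json)
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record:
            raise ValueError(f"missing field: {field}")
        value = record[field]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            raise ValueError(f"wrong type for field: {field}")
    if record["downtime_min"] < 0 or record["wip_units"] < 0:
        raise ValueError("downtime and WIP must be non-negative")
    return {
        "machine_id": record["machine_id"],
        "downtime_hours": record["downtime_min"] / 60,  # unit conversion
        "wip_units": record["wip_units"],
    }


if __name__ == "__main__":
    sample = '{"machine_id": "ETCH-01", "downtime_min": 30, "wip_units": 12}'
    print(parse_mes_record(sample))
```

Rejecting bad records at the boundary keeps unit errors and missing values from silently distorting the simulation results.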

Another project involved connecting a simulation model to an ERP system to support material requirements planning (MRP). By simulating various production scenarios, we could forecast material requirements and ensure that sufficient materials were available to meet production targets. The benefits were reduced inventory costs and the prevention of production stoppages due to material shortages.

Q 25. How do you measure the return on investment (ROI) of a manufacturing simulation project?

Measuring the ROI of a manufacturing simulation project requires a clear understanding of the project’s objectives and the associated costs and benefits. Costs include software licenses, consultant fees, data acquisition and preparation, and the time spent by internal personnel. Benefits, on the other hand, can be more challenging to quantify. They often include improvements in throughput, reduced lead times, lower inventory costs, increased efficiency, avoidance of costly mistakes, better capacity planning, and improved resource utilization.

To quantify these benefits, we often use metrics like the reduction in production costs per unit, the improvement in OEE, the decrease in inventory holding costs, and the avoidance of potential production delays. We might also consider the cost savings from preventing a production line shutdown. These quantifiable benefits are then compared to the project’s total costs to calculate the ROI. A simple ROI calculation would be (Total Benefits – Total Costs) / Total Costs. However, we also perform a qualitative analysis to assess benefits that are harder to put into numbers, like improved decision-making and employee training.
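The simple ROI formula can be worked through as follows. All cost and benefit figures are hypothetical and exist only to show the arithmetic.

```python
def simulation_roi(total_benefits, total_costs):
    """ROI = (Total Benefits - Total Costs) / Total Costs."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    return (total_benefits - total_costs) / total_costs


# Hypothetical project figures, in the same currency and time horizon.
costs = {
    "software_licenses": 40_000,
    "consulting": 60_000,
    "data_preparation": 20_000,
    "internal_staff_time": 30_000,
}
benefits = {
    "inventory_reduction": 180_000,
    "throughput_gain": 220_000,
    "avoided_downtime": 50_000,
}

roi = simulation_roi(sum(benefits.values()), sum(costs.values()))
print(f"ROI: {roi:.0%}")
```

Keeping costs and benefits as itemized dictionaries makes it easy to show stakeholders which line items drive the result.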

It’s crucial to establish clear, measurable objectives upfront to effectively measure the ROI. Without clear goals, it’s difficult to determine whether the simulation project has achieved its intended outcomes.

Q 26. What are some common challenges faced when implementing simulation models in a manufacturing environment?

Implementing simulation models in a manufacturing environment often presents several challenges. One common challenge is resistance to change. Many people are hesitant to adopt new technologies, especially when they involve changes to existing processes and workflows. Effective communication and training are crucial to address this.

Another challenge is the need for high-quality data. Accurate simulation models require detailed and reliable data on various aspects of the manufacturing process. Data collection, cleaning, and validation can be time-consuming and resource-intensive. Missing or inaccurate data can lead to unreliable simulation results and undermine the value of the entire project.

Furthermore, verifying and validating the model is demanding. Verification checks that the model is implemented as intended, while validation checks that it accurately reflects the real-world manufacturing system; both can be complex and require significant expertise. Incorrect model calibration can lead to inaccurate predictions and misleading conclusions.

Finally, the integration of simulation models with existing enterprise systems can also be challenging. Ensuring seamless data exchange and maintaining data integrity require careful planning and execution. Compatibility issues and security concerns must be addressed appropriately.

Q 27. How would you approach optimizing a manufacturing process with multiple competing objectives?

Optimizing a manufacturing process with multiple competing objectives, such as maximizing throughput while minimizing costs and reducing lead times, requires the use of multi-objective optimization techniques. These techniques aim to find a set of Pareto optimal solutions, where no single objective can be improved without sacrificing another.

One approach is to use weighted sum methods, where each objective is assigned a weight reflecting its relative importance. The weighted sum of the objectives is then minimized or maximized. However, the choice of weights can significantly influence the outcome and may be subjective. The method can also miss Pareto optimal solutions that lie on non-convex regions of the trade-off curve.

Another approach is to use goal programming, which involves setting target values for each objective and minimizing deviations from these targets. This method allows for more flexibility in handling different priorities. Yet another powerful technique is to use population-based metaheuristics such as genetic algorithms or particle swarm optimization, which are well-suited to complex, non-linear, multi-objective problems.

Regardless of the specific method chosen, a thorough sensitivity analysis is essential to understand the trade-offs between different objectives and to assess the robustness of the optimized solution. Visualizing the Pareto front, which represents the set of Pareto optimal solutions, is also helpful in making informed decisions. Finally, practical constraints and limitations must always be considered when interpreting and implementing the results.
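A minimal sketch of two ideas from this answer, Pareto filtering and the weighted sum method, is shown below for two objectives (maximize throughput, minimize cost). The candidate configurations and their numbers are hypothetical, and the min-max normalization is one reasonable choice among several.

```python
# Hypothetical candidates: (name, throughput in parts/hour, cost per hour).
CANDIDATES = [
    ("A", 100, 50),
    ("B", 120, 70),
    ("C", 110, 80),
    ("D", 90, 40),
    ("E", 120, 65),
]


def pareto_front(candidates):
    """Names of candidates that no other candidate dominates."""
    front = []
    for name, thr, cost in candidates:
        dominated = any(
            t2 >= thr and c2 <= cost and (t2 > thr or c2 < cost)
            for _, t2, c2 in candidates
        )
        if not dominated:
            front.append(name)
    return front


def weighted_sum_choice(candidates, throughput_weight):
    """Pick one candidate by a weighted sum of min-max normalized objectives.

    Assumes the candidates differ in both throughput and cost.
    """
    thr_values = [c[1] for c in candidates]
    cost_values = [c[2] for c in candidates]

    def normalize(x, values):
        return (x - min(values)) / (max(values) - min(values))

    def score(candidate):
        _, thr, cost = candidate
        return (throughput_weight * normalize(thr, thr_values)
                - (1 - throughput_weight) * normalize(cost, cost_values))

    return max(candidates, key=score)[0]


if __name__ == "__main__":
    print("Pareto front:", pareto_front(CANDIDATES))
    for weight in (0.2, 0.5):
        print(f"weight {weight}: choose", weighted_sum_choice(CANDIDATES, weight))
```

Changing the weight changes which Pareto optimal candidate is selected, which is exactly the subjectivity the weighted sum method is criticized for.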

Q 28. Describe your experience with using simulation to support capacity planning decisions.

Simulation has proven invaluable in supporting capacity planning decisions. In a recent project for a large automotive parts manufacturer, we used simulation to evaluate different capacity expansion scenarios. The manufacturer was facing increasing demand and needed to determine the optimal way to expand its production capacity. We built a detailed simulation model of their existing production lines, incorporating data on machine capabilities, production rates, and material handling times.

We then simulated various expansion scenarios, including adding new machines, upgrading existing equipment, and implementing lean manufacturing techniques. The simulation allowed us to compare the costs and benefits of each scenario, considering factors such as initial investment costs, operating costs, throughput improvements, and lead time reductions. By visualizing different “what-if” scenarios, we determined the best strategy for expanding production capacity.

The results helped the company make informed decisions about capital investments and resource allocation, ensuring that their capacity expansion strategy aligned with their overall business objectives. Furthermore, by incorporating data on anticipated future demand increases, the simulation model could predict potential bottlenecks, and we identified the necessary capacity adjustments to prevent future production shortages.
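Before building a detailed model, a static rough-cut calculation is a useful sanity check on capacity questions like these. The sketch below is not the simulation described above; the demand figures, cycle time, available hours, and availability are all hypothetical.

```python
import math


def machines_required(annual_demand, cycle_time_min, hours_per_machine, availability):
    """Rough-cut number of machines needed to cover a year's demand."""
    required_hours = annual_demand * cycle_time_min / 60
    effective_hours = hours_per_machine * availability
    return math.ceil(required_hours / effective_hours)


def first_shortfall_year(demand_by_year, installed_machines, **machine_params):
    """First year in which installed capacity no longer covers demand, else None."""
    for year, demand in demand_by_year.items():
        if machines_required(demand, **machine_params) > installed_machines:
            return year
    return None


# Hypothetical demand forecast (parts per year) for planning years 1 to 3.
DEMAND = {1: 120_000, 2: 132_000, 3: 145_000}
PARAMS = {"cycle_time_min": 3, "hours_per_machine": 4000, "availability": 0.85}

if __name__ == "__main__":
    print("first shortfall in year:",
          first_shortfall_year(DEMAND, installed_machines=2, **PARAMS))
```

A static check like this ignores queueing, variability, and product mix, which is precisely what the simulation adds, but a large gap between the two is a signal to re-examine the model inputs.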

Key Topics to Learn for Advanced Manufacturing Systems Modeling Interview

- Discrete-Event Simulation (DES): Understanding DES concepts and common tools (e.g., Arena, AnyLogic) and their application in optimizing manufacturing processes. Practical applications include identifying bottlenecks and improving throughput in a production line.

- Agent-Based Modeling (ABM): Applying ABM to simulate complex interactions within a manufacturing system, such as material flow, resource allocation, and human-robot collaboration. Consider exploring case studies focusing on flexible manufacturing systems.

- System Dynamics Modeling: Using system dynamics to model the long-term behavior of manufacturing systems, considering factors like inventory levels, production capacity, and market demand. A practical example would be forecasting production needs based on sales trends.

- Queueing Theory: Applying queueing theory to analyze and optimize waiting times in manufacturing processes. This includes understanding different queueing models and their implications for resource allocation and efficiency.

- Data Analytics in Manufacturing: Leveraging data analytics techniques (e.g., statistical process control, predictive maintenance) to improve manufacturing system performance. This could involve analyzing sensor data to predict equipment failures and optimize maintenance schedules.

- Optimization Techniques: Familiarity with optimization algorithms (e.g., linear programming, integer programming) for solving manufacturing optimization problems, such as production scheduling and resource allocation.

- Model Validation and Verification: Understanding the importance of validating and verifying models to ensure their accuracy and reliability. This includes techniques for comparing model outputs to real-world data.
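For the queueing theory topic above, the simplest model worth knowing by heart is the M/M/1 queue (one server, Poisson arrivals, exponential service times). The sketch below computes its standard steady-state metrics; the example rates are hypothetical.

```python
def mm1_metrics(arrival_rate, service_rate):
    """Steady-state metrics of an M/M/1 queue (rates in jobs per unit time)."""
    if arrival_rate <= 0 or service_rate <= 0:
        raise ValueError("rates must be positive")
    if arrival_rate >= service_rate:
        raise ValueError("queue is unstable: arrival_rate must be below service_rate")
    rho = arrival_rate / service_rate  # utilization
    return {
        "utilization": rho,
        "avg_in_system": rho / (1 - rho),
        "avg_in_queue": rho ** 2 / (1 - rho),
        "avg_time_in_system": 1 / (service_rate - arrival_rate),
        "avg_wait_in_queue": rho / (service_rate - arrival_rate),
    }


if __name__ == "__main__":
    # Hypothetical workstation: 8 jobs/hour arrive, 10 jobs/hour can be served.
    for name, value in mm1_metrics(8, 10).items():
        print(f"{name}: {value:.2f}")
```

The results satisfy Little's law (average number in system equals arrival rate times average time in system), which is a handy check on any queueing calculation or simulation output.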

Next Steps

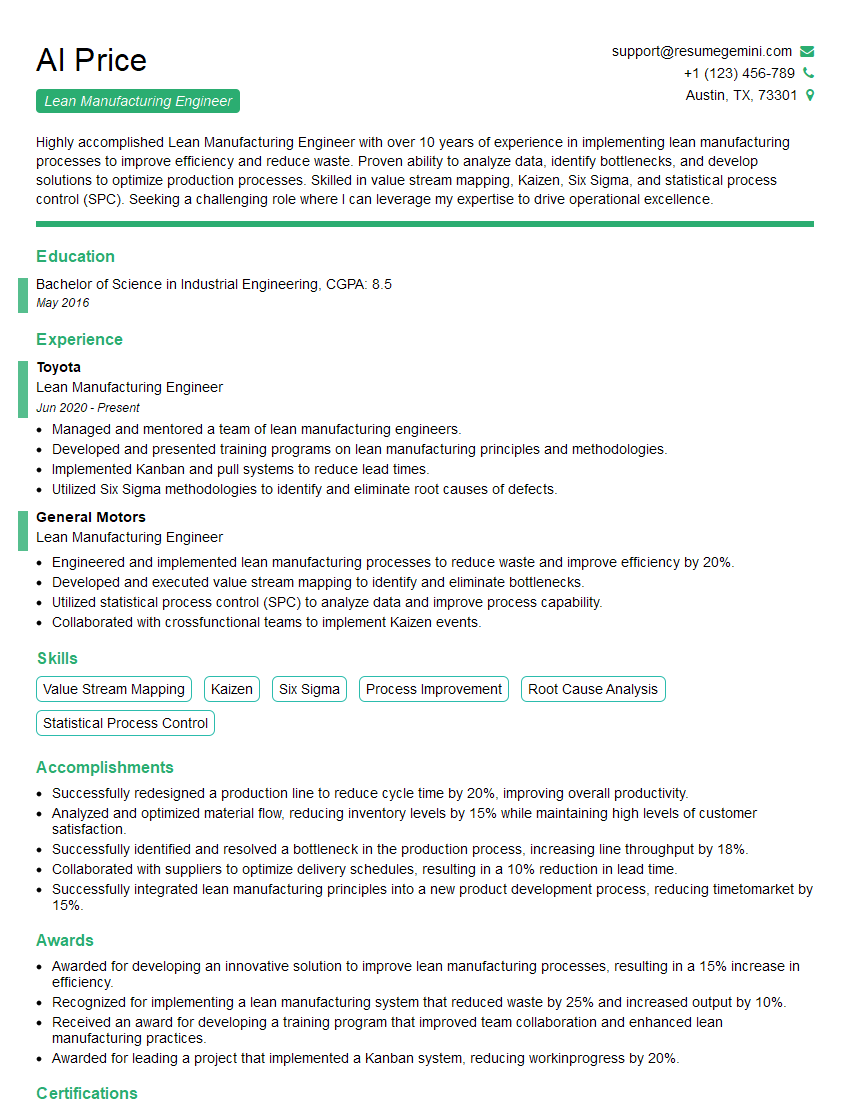

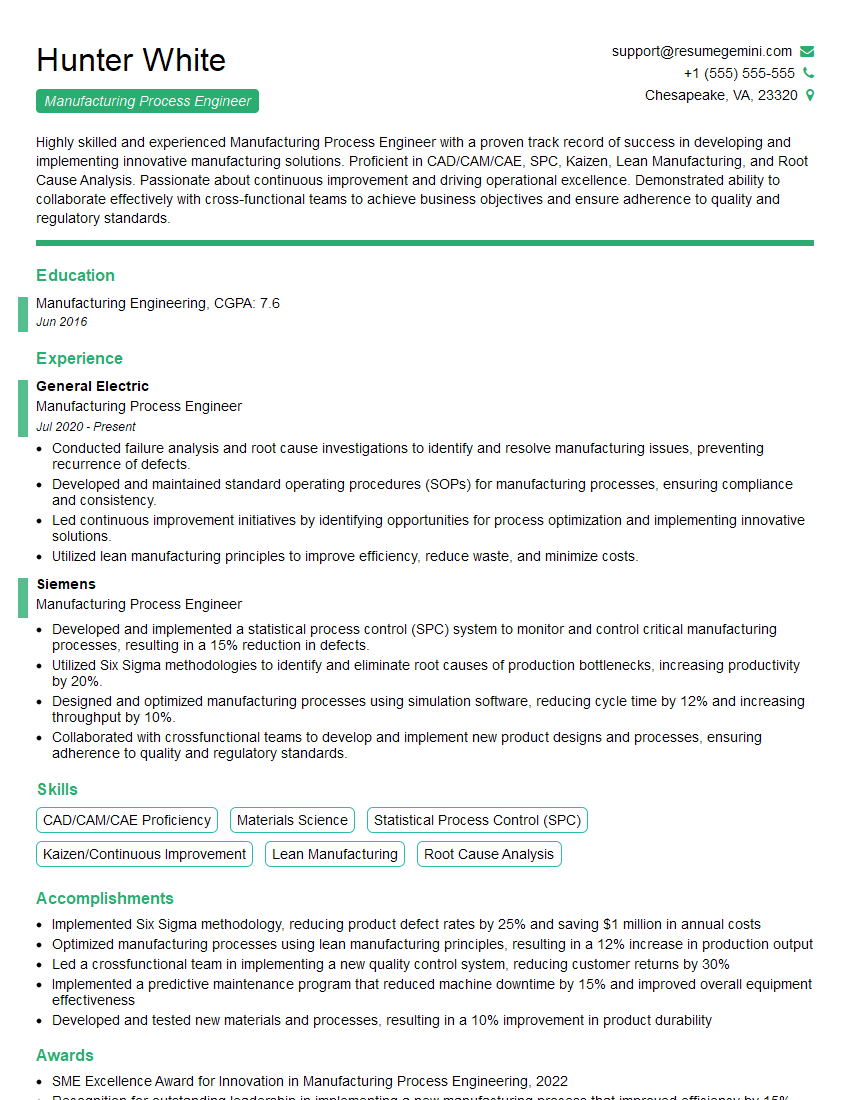

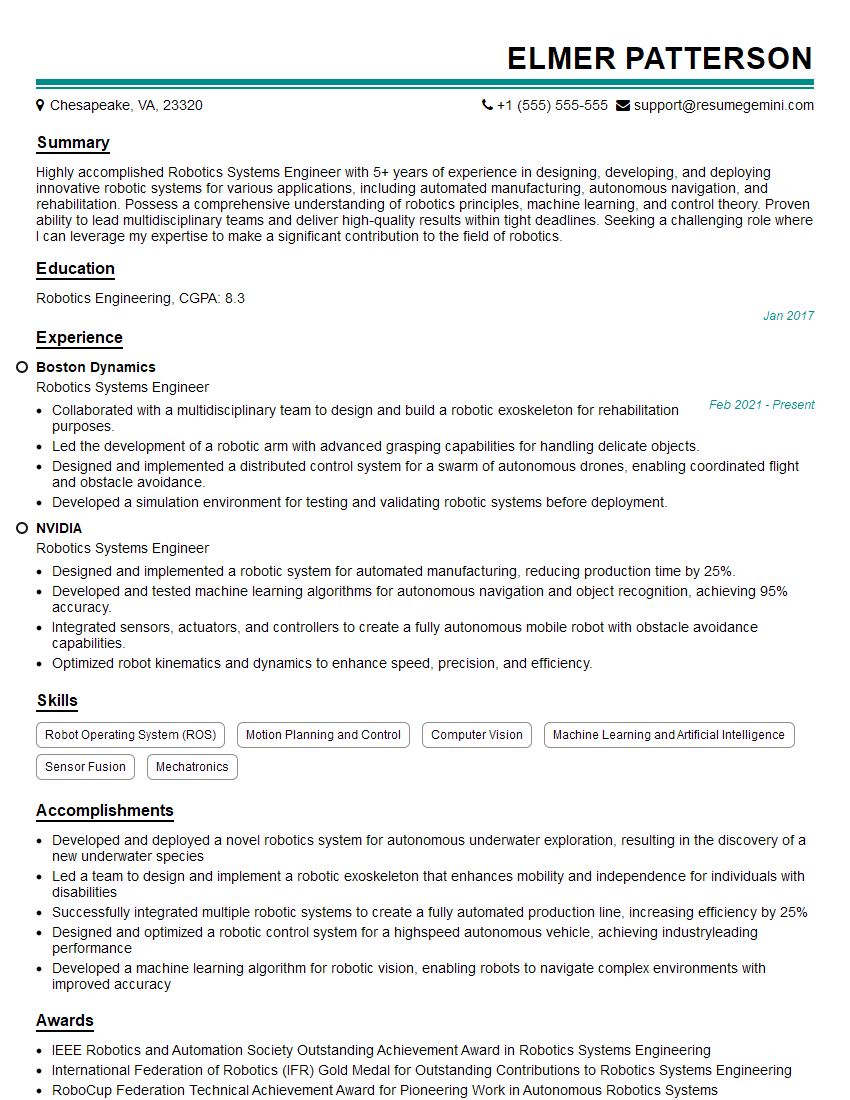

Mastering Advanced Manufacturing Systems Modeling is crucial for career advancement in today’s competitive landscape. Proficiency in these techniques demonstrates a valuable skillset highly sought after by leading manufacturing organizations. To significantly improve your job prospects, creating an ATS-friendly resume is essential. This ensures your application gets noticed by recruiters and hiring managers. We strongly recommend using ResumeGemini to build a professional and impactful resume. ResumeGemini provides a user-friendly platform and offers examples of resumes tailored to Advanced Manufacturing Systems Modeling, helping you showcase your skills and experience effectively.
