Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Process Engineering and Optimization interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Process Engineering and Optimization Interviews

Q 1. Explain your experience with process simulation software (e.g., Aspen Plus, HYSYS).

Throughout my career, I’ve extensively utilized process simulation software, primarily Aspen Plus and HYSYS, for designing, optimizing, and troubleshooting chemical processes. Aspen Plus, for instance, excels in rigorous thermodynamic calculations and its ability to model complex unit operations like distillation columns and reactors with great accuracy. I’ve used it to predict the performance of new designs, identify potential operational problems, and optimize existing processes for improved efficiency and yield. HYSYS, on the other hand, offers a more user-friendly interface, making it ideal for quick steady-state simulations and preliminary design evaluations. In one project, I used Aspen Plus to model a new methanol synthesis reactor, optimizing the operating conditions to maximize methanol production while minimizing energy consumption. The simulation allowed us to identify an optimal temperature and pressure range, ultimately leading to a 15% increase in yield compared to the initial design. In another project, HYSYS helped us quickly assess the impact of feedstock variations on an existing ethylene cracker, allowing for proactive adjustments to maintain product quality.

Q 2. Describe your approach to identifying bottlenecks in a manufacturing process.

Identifying bottlenecks in a manufacturing process requires a systematic approach. I typically begin with data analysis, examining process parameters like production rates, cycle times, equipment utilization, and defect rates. This data helps pinpoint areas of low efficiency. Visual management tools like Pareto charts and process flow diagrams are invaluable here. For instance, a Pareto chart can reveal the critical few factors contributing to the majority of production delays. Next, I conduct on-site observations and interviews with operators to understand the process better and identify hidden constraints – these are often non-quantifiable factors impacting production. Once potential bottlenecks are identified, I employ statistical process control (SPC) techniques like control charts to analyze variability and identify root causes. For example, if a specific machine consistently operates below its rated capacity, I’d investigate issues like maintenance needs, operator training, or material quality. Finally, I quantify the impact of the identified bottlenecks using simulation software to determine the improvement potential of various mitigation strategies.
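As a rough illustration of the Pareto step described above, the sketch below ranks delay causes and picks out the "critical few" that account for most of the lost time. The downtime figures, the cause names, and the 80% threshold are hypothetical values chosen for the example, not data from a real line.

```python
def pareto_table(delay_minutes):
    """Return (cause, minutes, cumulative_share) rows, largest contributor first."""
    total = sum(delay_minutes.values())
    rows = []
    cumulative = 0.0
    ranked = sorted(delay_minutes.items(), key=lambda kv: kv[1], reverse=True)
    for cause, minutes in ranked:
        cumulative += minutes
        rows.append((cause, minutes, cumulative / total))
    return rows


def vital_few(delay_minutes, threshold=0.8):
    """Causes needed to reach the given cumulative share of total delay."""
    selected = []
    for cause, _, share in pareto_table(delay_minutes):
        selected.append(cause)
        if share >= threshold:
            break
    return selected


# Hypothetical downtime log: minutes lost per cause over one month.
downtime = {
    "changeover": 420,
    "filler jam": 310,
    "material shortage": 90,
    "labeler fault": 60,
    "operator break overlap": 40,
    "other": 80,
}

for cause, minutes, share in pareto_table(downtime):
    print(f"{cause:<24}{minutes:>6} min  cumulative {share:.0%}")
print("Focus on:", vital_few(downtime))
```

In an interview, walking through a table like this is a concrete way to show how the data narrows the investigation before any on-site observation begins.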

Q 3. How would you optimize a process with limited data?

Optimizing a process with limited data requires a more creative and iterative approach. I would start by leveraging available data as effectively as possible, using techniques like Design of Experiments (DOE) to maximize the information gained from a small dataset. DOE allows for targeted experimentation to understand the influence of key variables on the process outcome. For example, a fractional factorial design could be used to explore several factors with relatively few experiments. If historical data is sparse, I would combine data analysis with expert knowledge and process understanding to develop a simplified model of the process. This model, while less accurate than one based on abundant data, still allows for the identification of potential improvement areas. Furthermore, I would focus on low-hanging fruit – quick, inexpensive changes that can yield significant improvement before investing in extensive data collection or complex modeling. Implementing these changes can then generate more data to refine the process understanding and further optimize the process iteratively.
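To make the fractional factorial idea concrete, here is a minimal sketch that builds a half-fraction two-level design in coded units (-1 for low, +1 for high). It assumes the common construction in which the last factor is set to the product of the others; the function names are illustrative only, and a real study would normally use a dedicated DOE package.

```python
from itertools import product


def full_factorial(n_factors):
    """All 2**n combinations of low (-1) and high (+1) levels."""
    return [list(run) for run in product((-1, 1), repeat=n_factors)]


def half_fraction(n_factors):
    """2**(k-1) runs: a full factorial in the first k-1 factors,
    with the last factor set to the product of the others."""
    design = []
    for run in full_factorial(n_factors - 1):
        last_level = 1
        for level in run:
            last_level *= level
        design.append(run + [last_level])
    return design


# Three factors in four runs instead of eight.
design = half_fraction(3)
for run in design:
    print(run)
```

The trade-off is aliasing: in this three-factor half fraction each main effect is confounded with a two-factor interaction, which is usually acceptable for screening but should be stated when presenting results.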

Q 4. What are your preferred statistical methods for process analysis?

My preferred statistical methods for process analysis are diverse and depend on the specific problem. For monitoring process stability and detecting shifts in process performance, I rely heavily on control charts (e.g., X-bar and R charts, CUSUM charts), and I assess process capability with indices such as Cp and Cpk. To understand the relationships between process variables, regression analysis (linear and non-linear) is a staple in my toolkit. For identifying significant factors affecting process performance and optimizing process parameters, I use ANOVA (Analysis of Variance) and DOE techniques (e.g., Taguchi methods, factorial designs). In addition, I regularly employ principal component analysis (PCA) for dimensionality reduction and exploratory data analysis, particularly when dealing with high-dimensional datasets. For example, in a recent project, PCA helped us identify latent variables influencing product quality, enabling us to focus on a smaller set of key process parameters for optimization.
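For the X-bar and R charts mentioned above, the control limits can be computed from subgroup data in a few lines. The sketch below uses the standard tabulated constants (A2, D3, D4) for subgroup sizes 2 to 5; the measurement values are made up for illustration.

```python
# Standard control chart constants by subgroup size n: (A2, D3, D4).
CHART_CONSTANTS = {
    2: (1.880, 0.0, 3.267),
    3: (1.023, 0.0, 2.574),
    4: (0.729, 0.0, 2.282),
    5: (0.577, 0.0, 2.114),
}


def xbar_r_limits(subgroups):
    """Return (LCL, center line, UCL) for the X-bar chart and the R chart."""
    n = len(subgroups[0])
    if any(len(s) != n for s in subgroups):
        raise ValueError("all subgroups must have the same size")
    a2, d3, d4 = CHART_CONSTANTS[n]
    means = [sum(s) / n for s in subgroups]
    ranges = [max(s) - min(s) for s in subgroups]
    grand_mean = sum(means) / len(means)
    r_bar = sum(ranges) / len(ranges)
    return {
        "xbar": (grand_mean - a2 * r_bar, grand_mean, grand_mean + a2 * r_bar),
        "range": (d3 * r_bar, r_bar, d4 * r_bar),
    }


# Hypothetical fill weights (g), three subgroups of five samples each.
samples = [
    [9, 10, 11, 10, 10],
    [10, 11, 12, 11, 11],
    [10, 10, 10, 11, 9],
]
limits = xbar_r_limits(samples)
print(limits)
```

In practice, limits would be estimated from 20 or more subgroups collected while the process is believed to be stable; three subgroups are used here only to keep the example short.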

Q 5. Explain your understanding of Lean Manufacturing principles.

Lean Manufacturing focuses on eliminating waste and maximizing value for the customer. It’s guided by the principles of identifying and eliminating seven types of waste (muda): transport, inventory, motion, waiting, overproduction, over-processing, and defects. In practice, this involves streamlining workflows, improving layout and material flow, reducing inventory levels, and empowering employees to identify and resolve problems. I’ve applied Lean principles in several projects, focusing on value stream mapping to visualize the entire production process and identify areas for improvement. For instance, in a previous project, we identified significant waiting time between processing steps in a food production line. By reorganizing the line layout and implementing Kanban systems, we drastically reduced lead times and improved throughput. The core of Lean is continuous improvement, involving regular review of processes, and the active engagement of all team members in identifying and addressing inefficiencies.

Q 6. Describe your experience with Six Sigma methodologies (DMAIC, DMADV).

I have significant experience with Six Sigma methodologies, specifically DMAIC (Define, Measure, Analyze, Improve, Control) and DMADV (Define, Measure, Analyze, Design, Verify). DMAIC is a data-driven approach used to improve existing processes, while DMADV is used for designing new processes or products. In a DMAIC project involving a semiconductor manufacturing process, we focused on reducing defect rates. The Define phase clarified the project goal, the Measure phase established baseline defect rates, the Analyze phase identified root causes using statistical tools like ANOVA and control charts, the Improve phase involved implementing solutions (e.g., process parameter adjustments, operator training), and the Control phase established monitoring systems to ensure sustained improvements. DMADV, on the other hand, would be used for designing a new product or process from scratch, ensuring robust design and minimizing variability from the start.

Q 7. How do you handle process deviations and unexpected issues?

Handling process deviations and unexpected issues requires a calm, systematic approach. My first step is to ensure the safety of personnel and equipment. Then, I initiate a thorough investigation to understand the root cause of the deviation. Data analysis, coupled with on-site observations and interviews with operators, plays a crucial role. Depending on the severity of the deviation, I may implement temporary corrective actions to stabilize the process while conducting the investigation. Root cause analysis tools like fishbone diagrams and 5 Whys help identify the underlying issues contributing to the deviation. Once the root cause is identified, I implement appropriate corrective actions, which may involve process parameter adjustments, equipment repairs, or operator retraining. Finally, I implement preventive measures to prevent similar incidents from occurring in the future, potentially including process control enhancements or revised operating procedures. Documentation is crucial throughout the entire process, ensuring lessons learned are captured and shared across the organization.

Q 8. Explain your understanding of Process Control strategies (PID control, advanced control).

Process control strategies aim to maintain a process variable at a desired setpoint. Two main categories are PID control and advanced control. PID (Proportional-Integral-Derivative) control is a widely used feedback control loop mechanism. It uses three terms to calculate the manipulated variable (e.g., valve position): Proportional (P) responds to the current error, Integral (I) addresses accumulated error, and Derivative (D) anticipates future error based on the rate of change. Imagine a thermostat: P adjusts the heating immediately based on the current temperature difference from the setpoint, I accounts for any lingering coldness, and D anticipates temperature changes based on the rate of heating/cooling. Advanced control strategies build upon PID, employing more sophisticated algorithms. Examples include Model Predictive Control (MPC), which uses a process model to predict future behavior and optimize control actions over a prediction horizon, resulting in better performance and constraint handling. Another example is cascade control, where multiple PID loops are nested, with one loop controlling the setpoint of another, improving control precision and stability. For example, in a chemical reactor, one loop might control the temperature, and a nested loop would control the flow rate of coolant to achieve the desired temperature.
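The three PID terms are easier to discuss with a small simulation in hand. The following is a minimal sketch, assuming a discrete-time controller with output clamping and a simple first-order heating process; the tuning values, process gain, and time constant are arbitrary illustrative choices, not recommended settings.

```python
class PID:
    """Textbook discrete PID with output limits and basic anti-windup."""

    def __init__(self, kp, ki, kd, setpoint, out_min=0.0, out_max=100.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.setpoint = setpoint
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, measurement, dt):
        error = self.setpoint - measurement            # P: current error
        candidate_integral = self.integral + error * dt  # I: accumulated error
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt  # D: rate of change
        self.prev_error = error
        raw = (self.kp * error
               + self.ki * candidate_integral
               + self.kd * derivative)
        output = min(max(raw, self.out_min), self.out_max)
        # Anti-windup: only keep integrating while the output is not saturated.
        if output == raw:
            self.integral = candidate_integral
        return output


def simulate(controller, steps=600, dt=1.0, gain=0.5, tau=30.0, ambient=20.0):
    """First-order process: tau * dT/dt = -(T - ambient) + gain * heater."""
    temperature = ambient
    history = []
    for _ in range(steps):
        heater = controller.update(temperature, dt)
        temperature += dt * (-(temperature - ambient) + gain * heater) / tau
        history.append(temperature)
    return history


history = simulate(PID(kp=2.0, ki=0.1, kd=1.0, setpoint=50.0))
print(f"final temperature: {history[-1]:.2f}")
```

Setting ki to zero in this sketch leaves a steady-state offset below the setpoint, which is a quick way to demonstrate why the integral term exists.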

Q 9. How do you ensure process safety in your work?

Process safety is paramount in my work. My approach is multifaceted and begins with a thorough understanding of hazards and risks using techniques like HAZOP (Hazard and Operability Study) and What-If analysis. These studies identify potential hazards and develop mitigating strategies. I ensure that all processes adhere to stringent safety standards (e.g., OSHA, IEC) and best practices. Implementing robust safety instrumented systems (SIS) with redundant components is critical. This includes emergency shutdown systems and interlocks to prevent dangerous situations. Furthermore, comprehensive training programs for operators and technicians are essential. Regular process audits and safety reviews are conducted to proactively identify and address potential hazards, and I champion a strong safety culture where reporting near misses and incidents is encouraged without blame.

Q 10. Describe your experience with process validation and qualification.

Process validation and qualification are crucial for ensuring consistent product quality and safety. Qualification focuses on confirming that equipment and systems perform as intended. This involves installation qualification (IQ), operational qualification (OQ), and performance qualification (PQ). IQ verifies correct installation, OQ checks system functionality under controlled conditions, and PQ demonstrates consistent performance under actual operating conditions. Validation verifies that a process consistently produces a product meeting predefined specifications. This often involves designing and executing experiments to demonstrate process capability. For example, in pharmaceutical manufacturing, we’d validate sterilization processes, ensuring that the desired sterility assurance level is consistently achieved. My experience includes developing validation protocols, executing validation studies, compiling validation reports, and managing deviations to ensure compliance with regulatory requirements like GMP (Good Manufacturing Practices).

Q 11. Explain your understanding of different process control strategies (e.g., PID, Model Predictive Control).

As previously mentioned, PID control is a foundational feedback control strategy that adjusts a manipulated variable based on the error between the setpoint and the measured process variable. Model Predictive Control (MPC) is an advanced control strategy that utilizes a process model (mathematical representation) to predict the future behavior of the process. This allows MPC to optimize the manipulated variables over a defined prediction horizon, taking into account constraints like equipment limits and product specifications. MPC is particularly useful for complex processes with multiple interacting variables and constraints. For instance, in an oil refinery, MPC might optimize the operating conditions of a distillation column to maximize product yield while respecting energy constraints and product quality specifications. Other strategies include feedforward control, where control actions are based on anticipated disturbances, and ratio control, which maintains a constant ratio between two process variables.

Q 12. How would you design an experiment to optimize a specific process parameter?

To optimize a specific process parameter, I’d employ a structured experimental design approach. This might involve a factorial design if multiple parameters need optimization, allowing for investigation of interactions between variables. Alternatively, a response surface methodology (RSM) could be used to model the relationship between the process parameters and the response variable (the outcome being optimized, such as yield). The design would include replications to assess experimental error. The chosen design depends on the complexity of the system and available resources. For example, if we want to optimize the yield of a chemical reaction by adjusting temperature and reactant concentration, we might use a 2² factorial design (two variables at two levels each) to explore the impact of each variable and their interaction. We’d then use statistical analysis (ANOVA) to determine significant effects and build an empirical model to find the parameter settings that maximize yield. Data analysis software like Minitab or JMP is often used for this purpose.
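The 2² example above can be worked through numerically. This sketch estimates the two main effects and the interaction from replicated runs in coded units; the yield values are hypothetical, and a full analysis would add an ANOVA to test which effects are significant.

```python
def factorial_2x2_effects(responses):
    """Estimate effects from a replicated 2x2 design.

    `responses` maps (temperature_level, concentration_level), each -1 or +1,
    to a list of replicate yields.
    """
    mean = {run: sum(vals) / len(vals) for run, vals in responses.items()}
    temperature_effect = (
        (mean[(1, -1)] + mean[(1, 1)]) - (mean[(-1, -1)] + mean[(-1, 1)])
    ) / 2
    concentration_effect = (
        (mean[(-1, 1)] + mean[(1, 1)]) - (mean[(-1, -1)] + mean[(1, -1)])
    ) / 2
    interaction = (
        (mean[(-1, -1)] + mean[(1, 1)]) - (mean[(1, -1)] + mean[(-1, 1)])
    ) / 2
    return temperature_effect, concentration_effect, interaction


# Hypothetical yields (%) with two replicates per run.
yields = {
    (-1, -1): [60, 62],
    (1, -1): [72, 70],
    (-1, 1): [65, 67],
    (1, 1): [85, 83],
}
temp_eff, conc_eff, inter_eff = factorial_2x2_effects(yields)
print(temp_eff, conc_eff, inter_eff)
```

With these made-up numbers, moving temperature from low to high raises yield more than moving concentration does, and the positive interaction suggests the two changes reinforce each other.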

Q 13. What are your experiences with different types of process instrumentation?

My experience encompasses a wide range of process instrumentation, including temperature sensors (thermocouples, RTDs), pressure sensors (pressure transmitters, differential pressure cells), flow meters (Coriolis, orifice plates, rotameters), level sensors (radar, ultrasonic), and analytical instruments (gas chromatographs, spectrometers). I’m familiar with both analog and digital instrumentation, signal conditioning, and data acquisition systems. Understanding the limitations and characteristics of each instrument is crucial for accurate process control. For instance, the selection of a suitable temperature sensor depends on the temperature range, accuracy requirements, and the environment. Similarly, understanding the pressure drop across an orifice plate is key to accurately measuring flow rate. Experience with calibration and maintenance procedures is vital for ensuring the accuracy and reliability of the measurements.

Q 14. How do you interpret and utilize process data for optimization purposes?

Process data is the lifeblood of optimization. My approach involves several steps. First, data quality is essential. I’d perform data validation and cleaning, identifying and addressing outliers or missing values. Then, I’d utilize statistical methods (e.g., correlation analysis, regression analysis) to identify relationships between process variables and key performance indicators (KPIs). Data visualization techniques are crucial—scatter plots, histograms, and control charts help to visualize trends, patterns, and deviations from normal operation. Advanced techniques like multivariate statistical process control (MSPC) can identify subtle patterns in high-dimensional data to diagnose process problems early. This data-driven approach enables predictive maintenance, proactive identification of process upsets, and development of improved control strategies. Software packages like Aspen InfoPlus.21 or PI System are commonly used for this purpose. Ultimately, the goal is to translate insights from data analysis into actionable changes to the process, leading to improved efficiency, reduced costs, and enhanced product quality.

Q 15. Explain your understanding of root cause analysis techniques (e.g., Fishbone diagram, 5 Whys).

Root cause analysis is crucial for identifying the fundamental reasons behind process problems, preventing recurrence, and achieving lasting improvements. Two popular techniques are the Fishbone diagram (Ishikawa diagram) and the 5 Whys.

Fishbone Diagram: This visual tool helps brainstorm potential causes categorized by major contributing factors (e.g., manpower, machinery, materials, methods, measurement, environment). Each ‘bone’ branches out from a central problem statement, representing a potential cause. We then delve deeper into each cause, exploring sub-causes. For example, if the problem is ‘low yield in a chemical reaction’, a ‘materials’ bone might branch into ‘impure reactants’ and ‘incorrect reactant ratios’. This structured approach ensures comprehensive exploration of possibilities.

5 Whys: This iterative questioning technique aims to uncover the root cause by repeatedly asking ‘Why?’ Starting with the initial problem, each answer leads to a new ‘Why?’ question, digging deeper until the fundamental issue is identified. For instance: Problem: ‘Reactor pressure is unstable.’ Why? ‘Pressure sensor is faulty.’ Why? ‘Sensor calibration is overdue.’ Why? ‘Calibration procedure wasn’t followed.’ Why? ‘Training on calibration procedures was insufficient.’ The final ‘why’ reveals the root cause: inadequate training.

In practice, I often use both methods. The Fishbone diagram provides a broad overview, while the 5 Whys focuses on a specific cause identified through the diagram, providing a deeper understanding. Combining them offers a powerful approach to root cause analysis.


Q 16. How do you balance process efficiency with product quality?

Balancing process efficiency and product quality is a constant challenge in process engineering. It’s not a zero-sum game; maximizing one doesn’t necessitate sacrificing the other. Instead, it requires a holistic approach focusing on optimization.

Efficiency often revolves around maximizing throughput, minimizing waste, and reducing cycle times. This might involve streamlining steps, automating tasks, or improving resource allocation. However, pushing efficiency too hard can compromise quality if shortcuts are taken or quality checks are neglected.

Quality encompasses meeting specifications, minimizing defects, and ensuring consistent performance. Maintaining high quality might seem to lower efficiency initially, but long-term it significantly reduces rework, waste, and customer complaints. Investing in robust quality control and improved process design ultimately leads to greater overall efficiency.

Statistical process control (SPC) is one of the main tools for striking this balance. SPC uses data analysis and control charts to monitor process variation and identify deviations from the target. By understanding the sources of variation (common cause vs. special cause), we can improve efficiency without compromising quality. For example, if we identify excessive variation in a specific step due to faulty equipment (special cause), we can address it directly, improving both efficiency and quality. If variation is due to inherent process variability (common cause), we can improve the process itself.
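One simple way to separate special-cause from common-cause variation is an individuals chart: limits are set from a stable baseline, and any later point outside them is flagged for investigation. The sketch below uses the standard 2.66 multiplier on the average moving range; the readings are invented for the example.

```python
def individuals_limits(baseline):
    """Control limits for an individuals chart from stable baseline data."""
    mean = sum(baseline) / len(baseline)
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    mr_bar = sum(moving_ranges) / len(moving_ranges)
    return mean - 2.66 * mr_bar, mean, mean + 2.66 * mr_bar


def special_cause_points(values, lcl, ucl):
    """Indices of points outside the control limits."""
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


# Hypothetical viscosity readings: a stable baseline, then new production data.
baseline = [50.1, 49.8, 50.3, 49.9, 50.0, 50.2, 49.7, 50.1]
new_readings = [50.0, 50.4, 51.6, 49.9]

lcl, center, ucl = individuals_limits(baseline)
flagged = special_cause_points(new_readings, lcl, ucl)
print(f"limits: {lcl:.2f} to {ucl:.2f}, flagged indices: {flagged}")
```

Points inside the limits reflect common-cause variation and call for process redesign if the spread is too wide; flagged points call for finding and removing a specific cause.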

Q 17. What are your experiences with different types of process modeling techniques?

I have extensive experience with various process modeling techniques, each suited for different purposes and scales. These range from simple spreadsheet models to complex simulations.

- Spreadsheet Models: Useful for simple processes, material balances, and preliminary cost estimations. I’ve used Excel extensively to create these, particularly in early-stage project development.

- Process Simulators (Aspen Plus, HYSYS): For complex systems requiring detailed thermodynamic and kinetic modeling, these are indispensable. I’ve used these for designing and optimizing chemical reactors, distillation columns, and entire process plants. For example, simulating different operating conditions to identify optimal reaction temperatures and pressures for maximizing yield while minimizing energy consumption.

- Dynamic Simulation (MATLAB/Simulink): Essential for understanding transient behavior and control system design. I’ve used these to model the dynamic responses of process units to disturbances and evaluate the effectiveness of control strategies. For instance, designing a control system to maintain a stable temperature in a reactor despite fluctuations in feed flow rate.

- Agent-Based Modeling: Useful for systems whose behavior emerges from the interactions of many autonomous actors, such as complex supply chains. I’ve explored this for modeling logistics and supply chain optimization problems, simulating the interactions between different actors in the system (e.g., suppliers, manufacturers, distributors).

The choice of modeling technique depends heavily on the complexity of the process, the available data, and the goals of the modeling effort.

Q 18. Explain your experience with process automation technologies (e.g., PLCs, SCADA).

My experience with process automation technologies is extensive, encompassing both Programmable Logic Controllers (PLCs) and Supervisory Control and Data Acquisition (SCADA) systems.

PLCs: These are the workhorses of industrial automation, controlling individual equipment and machinery. I’ve worked with various PLC platforms (e.g., Allen-Bradley, Siemens) programming ladder logic (LD) and function block diagrams (FBD) to automate tasks such as valve control, motor operation, and data acquisition from sensors. For example, I programmed a PLC to control the feed rate of reactants to a chemical reactor based on temperature and pressure readings.

SCADA: These systems provide a centralized view of the entire process, monitoring and controlling multiple PLCs and other equipment. I’ve been involved in designing and implementing SCADA systems for large-scale industrial processes, utilizing HMI (Human-Machine Interface) software to visualize process data, manage alarms, and provide operators with a comprehensive overview of the plant. For instance, I developed a SCADA system that monitored and controlled a complete wastewater treatment plant, including pumps, valves, and treatment units.

My experience also includes integrating PLCs and SCADA systems with advanced process control (APC) techniques, leading to improved efficiency, safety, and product quality. This often involves the use of model predictive control (MPC) algorithms to optimize process parameters in real-time.

Q 19. How would you implement a process improvement project using a structured methodology?

Implementing a process improvement project requires a structured methodology. I typically follow the DMAIC (Define, Measure, Analyze, Improve, Control) approach, the core improvement cycle of the Six Sigma framework.

- Define: Clearly define the project goals, scope, and metrics for success. What process needs improvement? What are the key performance indicators (KPIs)? What are the desired improvements in these KPIs?

- Measure: Gather baseline data to understand the current process performance. This often involves collecting data on various KPIs and identifying key process variables.

- Analyze: Analyze the data to identify the root causes of process problems. Techniques like Fishbone diagrams, 5 Whys, and statistical analysis are valuable here.

- Improve: Develop and implement solutions to address the root causes identified in the analysis phase. This may involve redesigning processes, implementing new technologies, or training employees.

- Control: Implement controls to sustain the improvements and prevent regression. This includes monitoring KPIs, implementing standard operating procedures (SOPs), and establishing regular process reviews.

For example, in a manufacturing plant experiencing high defect rates, DMAIC might involve defining the reduction of defect rate by 50% as the goal, measuring the current defect rate, analyzing the root causes through data analysis and process mapping, implementing improvements such as employee training and equipment upgrades, and finally establishing controls using SPC charts to ensure sustained improvements.

Q 20. Describe your experience with process monitoring and alarm management systems.

Process monitoring and alarm management are critical for ensuring safe and efficient operations. Effective systems provide real-time visibility into process parameters and alert operators to potential problems.

My experience includes working with various process monitoring systems, ranging from basic data loggers to sophisticated distributed control systems (DCS). I have extensive knowledge of developing and configuring alarm management systems, using techniques like alarm rationalization to minimize alarm floods and prioritize critical alarms. Alarm floods, where too many alarms occur simultaneously, are detrimental as they can overwhelm operators and lead to delayed responses to actual critical situations. I’ve applied alarm rationalization to reduce the number of alarms, increase the effectiveness of alarms through improved alarm settings, and minimize the delay of alarm acknowledgment.

I am proficient in using historian systems to store and analyze historical process data, which is crucial for troubleshooting, performance analysis, and regulatory compliance. I’ve used this data for trend analysis to predict equipment failures, for example, identifying patterns in sensor readings that indicate impending equipment malfunction. This proactive approach minimizes downtime and potential safety hazards.

Q 21. What are your experiences with different types of process reactors?

My experience encompasses a wide range of process reactors, each suited for different types of chemical reactions and operating conditions.

- Batch Reactors: These are suitable for small-scale production and reactions requiring precise control of parameters. I have experience designing and optimizing batch reactor processes for pharmaceuticals and specialty chemicals.

- Continuous Stirred Tank Reactors (CSTRs): These are ideal for large-scale production of homogeneous reactions. I’ve worked with CSTRs in the production of polymers and petrochemicals, focusing on optimizing residence time and maximizing yield.

- Plug Flow Reactors (PFRs): These are suitable for reactions that benefit from minimal back-mixing; for most positive-order kinetics, they reach a given conversion in a smaller volume than a CSTR. I’ve used PFR models for the design of catalytic reactors in refining processes.

- Fluidized Bed Reactors: These are well-suited for gas-solid reactions with high heat transfer requirements. I’ve worked with these in the design of catalytic cracking units in refineries.

The selection of a reactor type depends on factors such as reaction kinetics, heat transfer requirements, scalability, and cost considerations. My expertise lies in understanding these factors and choosing the optimal reactor configuration for a given process.
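The effect of reaction kinetics on reactor choice can be shown with the textbook design equations for a first-order, irreversible, constant-density reaction. This is a minimal sketch under those assumptions; the rate constant and target conversion are hypothetical.

```python
import math


def cstr_residence_time(rate_constant, conversion):
    """Ideal CSTR, first-order reaction: tau = X / (k * (1 - X))."""
    return conversion / (rate_constant * (1.0 - conversion))


def pfr_residence_time(rate_constant, conversion):
    """Ideal PFR, first-order reaction: tau = -ln(1 - X) / k."""
    return -math.log(1.0 - conversion) / rate_constant


k = 0.5   # 1/min, assumed rate constant
x = 0.9   # target conversion

tau_cstr = cstr_residence_time(k, x)
tau_pfr = pfr_residence_time(k, x)
print(f"CSTR: {tau_cstr:.1f} min, PFR: {tau_pfr:.1f} min")
```

At the same volumetric flow rate, reactor volume scales with residence time, so under these idealized assumptions the PFR is considerably smaller at high conversion. Heat removal, mixing needs, and cost can still favor a CSTR.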

Q 22. How do you manage competing priorities in a process optimization project?

Managing competing priorities in process optimization is akin to navigating a complex river system. You have multiple currents (priorities) pulling you in different directions, and you need a strategic plan to reach your destination (optimized process). My approach involves a multi-step process:

Prioritization Matrix: I use a matrix like MoSCoW (Must have, Should have, Could have, Won’t have) to categorize the priorities based on their urgency and importance. This helps visualize trade-offs and makes tough decisions more manageable. For example, improving safety is always a ‘Must have,’ while increasing throughput by a small margin might be a ‘Could have’ if it impacts safety or budget.

Stakeholder Alignment: I actively engage stakeholders early on to understand their perspectives and concerns. Open communication is key to identifying and addressing conflicting interests. A shared understanding of the project goals and constraints is crucial for making informed decisions.

Resource Allocation: Based on the prioritization and stakeholder input, I develop a detailed plan that allocates resources (time, budget, personnel) effectively. This includes setting clear milestones and deadlines to keep the project on track. For example, if budget is limited, we might prioritize low-cost, high-impact improvements initially.

Adaptive Planning: Process optimization is rarely a linear journey. I embrace iterative planning, incorporating feedback and adjusting the approach as new information emerges. Flexibility is key to navigating unexpected challenges and ensuring the project stays relevant.

Using this systematic approach ensures that the project stays focused while considering the various demands and constraints within the project.

Q 23. How would you handle a situation where a process is consistently underperforming?

When a process consistently underperforms, it’s like a car that keeps stalling. A systematic diagnostic approach is needed to identify the root cause. My strategy involves:

Data Collection and Analysis: First, I gather data on key performance indicators (KPIs) to quantify the underperformance. This might involve reviewing historical data, conducting process mapping, and gathering feedback from operators. For example, if a production line is consistently below target, we analyze production rates, defect rates, downtime, and material usage.

Root Cause Analysis (RCA): Techniques like the 5 Whys, Fishbone diagrams (Ishikawa diagrams), or Fault Tree Analysis help pinpoint the underlying reasons for the underperformance. This involves investigating potential causes, such as equipment malfunctions, process inefficiencies, operator errors, or material quality issues.

Solution Development and Implementation: Based on the RCA, we develop and implement solutions. This might include upgrading equipment, revising operating procedures, retraining operators, improving quality control, or redesigning the process flow. For example, if operator error is the root cause, we might invest in better training or ergonomic improvements.

Monitoring and Evaluation: After implementing the solution, we monitor KPIs to evaluate its effectiveness. Continuous monitoring ensures the improvements are sustained and allows for further adjustments if needed. This is like regularly checking the car’s engine performance after a repair.

This systematic approach minimizes guesswork and ensures effective and lasting improvements.

Q 24. Describe your experience with data analytics in a process engineering context.

Data analytics is the engine that drives process optimization. In my experience, I’ve used various statistical and machine learning techniques to analyze process data and extract actionable insights. For example, in a chemical plant, I used regression analysis to model the relationship between process parameters (temperature, pressure, flow rate) and product quality. This allowed us to identify optimal operating conditions that maximized yield and minimized defects. Furthermore, I’ve employed statistical process control (SPC) techniques to identify and address process variations, preventing costly deviations from target specifications. In another project, I leveraged machine learning algorithms to predict equipment failures based on sensor data, enabling proactive maintenance and preventing costly downtime. The software packages I am proficient with include statistical software like R and Minitab, and data visualization tools like Tableau.
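As a small illustration of the regression step, the sketch below fits a straight line between one process parameter and one quality measure using ordinary least squares and reports R². The temperature and purity values are hypothetical; real work would involve multiple variables and a statistics package such as R or Minitab.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x, with R-squared."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    r_squared = 1.0 - ss_res / ss_tot
    return slope, intercept, r_squared


# Hypothetical data: reactor temperature (deg C) vs. product purity (%).
temperature = [150, 160, 170, 180, 190]
purity = [90.1, 91.0, 92.2, 92.9, 94.0]

slope, intercept, r_squared = fit_line(temperature, purity)
print(f"purity = {intercept:.2f} + {slope:.3f} * T, R^2 = {r_squared:.3f}")
```

A fit like this only describes the range that was sampled; extrapolating beyond the tested temperatures would need further experiments.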

Q 25. Explain your understanding of different types of process flow diagrams (PFDs, P&IDs).

Process flow diagrams (PFDs) and piping and instrumentation diagrams (P&IDs) are essential tools for visualizing and understanding industrial processes. A PFD provides a simplified representation of the process flow, showing the major equipment and process streams. It’s like a roadmap, highlighting the overall process flow without intricate details. A P&ID, on the other hand, is a more detailed diagram that includes information on piping, instrumentation, and control systems. It’s like a detailed blueprint of a building, showing every pipe, valve, instrument, and control loop. The key differences lie in the level of detail: PFDs focus on the overall process flow, while P&IDs provide a detailed representation necessary for construction, operation, and maintenance.

Q 26. How would you assess the economic feasibility of a proposed process improvement?

Assessing the economic feasibility of a process improvement involves a thorough cost-benefit analysis. This is crucial to ensure the improvement justifies the investment. My approach includes:

Quantifying Costs: This involves estimating the initial investment costs (e.g., equipment upgrades, software licenses, training), operating costs (e.g., energy consumption, maintenance), and any potential downtime costs.

Quantifying Benefits: This includes calculating the increase in production rate, reduction in waste, improvement in product quality, and decrease in operating costs. It’s important to use realistic estimations based on data and expert knowledge.

Calculating Return on Investment (ROI): We calculate ROI as the net benefit (total benefits minus total costs) divided by the total costs. A higher ROI indicates a more economically attractive improvement. Because ROI ignores the timing of cash flows, we also use Net Present Value (NPV), which discounts future cash flows to today’s value, and the Payback Period, which is the time needed to recover the initial investment.

Sensitivity Analysis: To account for uncertainty, we conduct a sensitivity analysis to determine how changes in key parameters (e.g., raw material prices, production rates) affect the overall economic viability of the improvement. This helps assess the project’s robustness under different scenarios.

This comprehensive approach ensures that only economically sound process improvements are implemented.
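The metrics above can be sketched in a few lines. This is an illustrative calculation under assumed numbers (a 100,000 investment returning 40,000 per year for four years at a 10% discount rate); the function names and all figures are hypothetical, and the sensitivity loop varies only the annual benefit.

```python
def roi(total_benefit, total_cost):
    """Return on investment: net benefit divided by total cost."""
    return (total_benefit - total_cost) / total_cost

def npv(rate, initial_investment, cash_flows):
    """Net present value, with cash_flows[0] received at the end of year 1."""
    discounted = sum(cf / (1 + rate) ** t
                     for t, cf in enumerate(cash_flows, start=1))
    return discounted - initial_investment

def payback_period(initial_investment, cash_flows):
    """First year in which cumulative cash flow covers the investment, else None."""
    cumulative = 0.0
    for year, cf in enumerate(cash_flows, start=1):
        cumulative += cf
        if cumulative >= initial_investment:
            return year
    return None

investment = 100_000
annual_benefit = 40_000
flows = [annual_benefit] * 4

project_roi = roi(sum(flows), investment)
project_npv = npv(0.10, investment, flows)
project_payback = payback_period(investment, flows)

# Simple sensitivity analysis: scale the annual benefit by -20%, 0%, +20%.
sensitivity = {
    factor: npv(0.10, investment, [annual_benefit * factor] * 4)
    for factor in (0.8, 1.0, 1.2)
}
print(project_roi, round(project_npv, 2), project_payback)
```

A project whose NPV stays positive across the plausible range of inputs is a much safer recommendation than one that only works in the base case.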

Q 27. Describe your experience with process safety management systems (PSM).

Process safety management (PSM) systems are crucial for preventing accidents and protecting personnel and the environment. My experience covers PSM elements such as hazard identification and risk assessment (HAZOP studies, What-If analysis), development of safe operating procedures (SOPs), emergency response planning, and employee training. For example, I’ve participated in HAZOP studies to identify potential hazards in chemical processes and develop mitigation strategies. I’ve also been involved in designing and implementing alarm management systems so that operators receive clear, prioritized alarms during abnormal situations. Compliance with relevant regulations and standards (e.g., the OSHA PSM standard, 29 CFR 1910.119) is a fundamental aspect of my work. A strong safety culture is essential, and it is sustained by proactive hazard identification and risk mitigation, backed by regular training and audits.

Key Topics to Learn for Process Engineering and Optimization Interview

- Process Modeling and Simulation: Understanding different modeling techniques (e.g., steady-state, dynamic), software applications (e.g., Aspen Plus, MATLAB), and model validation. Practical application: Optimizing a chemical reactor’s operating conditions for maximum yield.

- Process Control and Instrumentation: Familiarity with control loops, PID controllers, and advanced control strategies (e.g., model predictive control). Practical application: Designing a control system to maintain temperature and pressure within specified limits in a distillation column.

- Optimization Techniques: Knowledge of linear and non-linear programming, gradient-based methods, and metaheuristic algorithms (e.g., genetic algorithms, simulated annealing). Practical application: Minimizing energy consumption in a refinery process using optimization algorithms.

- Process Safety and Hazard Analysis: Understanding hazard identification methods (e.g., HAZOP, What-If analysis) and safety instrumented systems (SIS). Practical application: Conducting a risk assessment for a new chemical plant design.

- Thermodynamics and Fluid Mechanics: A strong foundation in these core chemical engineering principles is crucial for understanding process behavior. Practical application: Calculating heat and mass transfer rates in a heat exchanger network.

- Data Analysis and Statistical Methods: Analyzing process data to identify trends, diagnose problems, and improve efficiency. Practical application: Using statistical process control (SPC) charts to monitor process performance and detect anomalies.

- Economic Evaluation and Project Management: Understanding cost estimation, profitability analysis, and project scheduling. Practical application: Evaluating the economic viability of a new process improvement project.

Next Steps

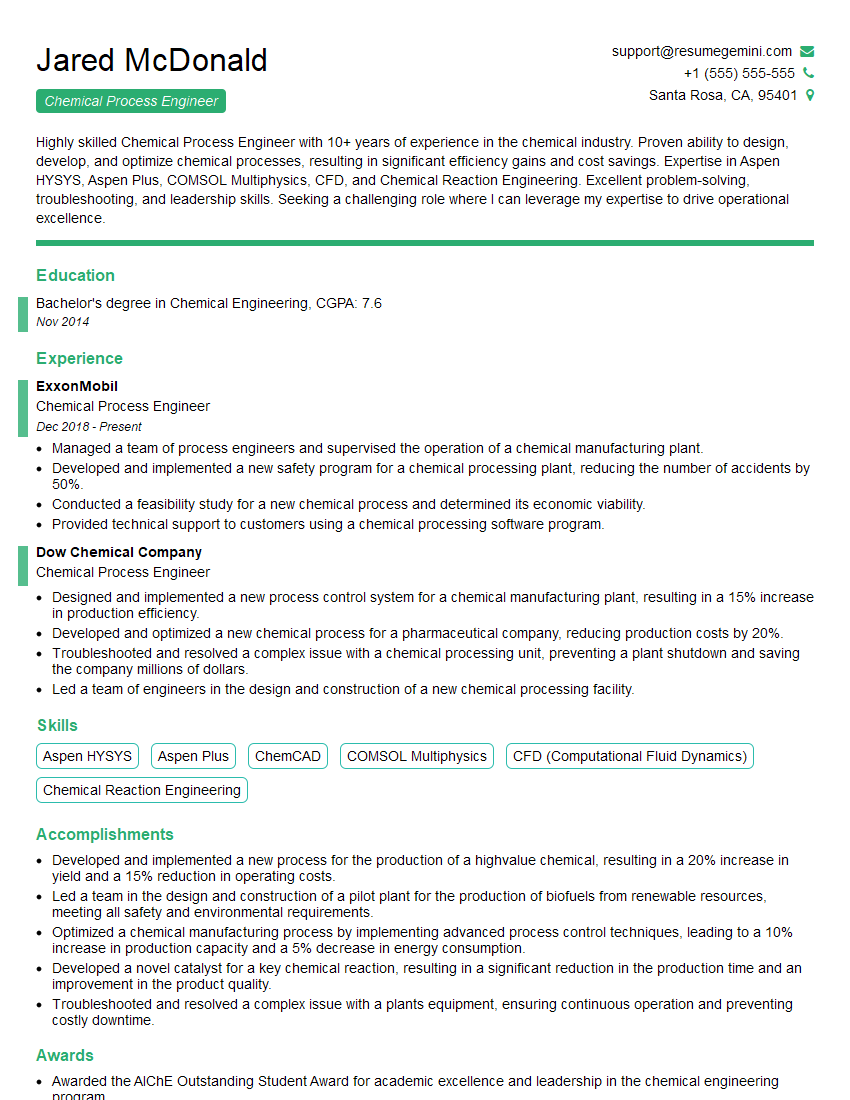

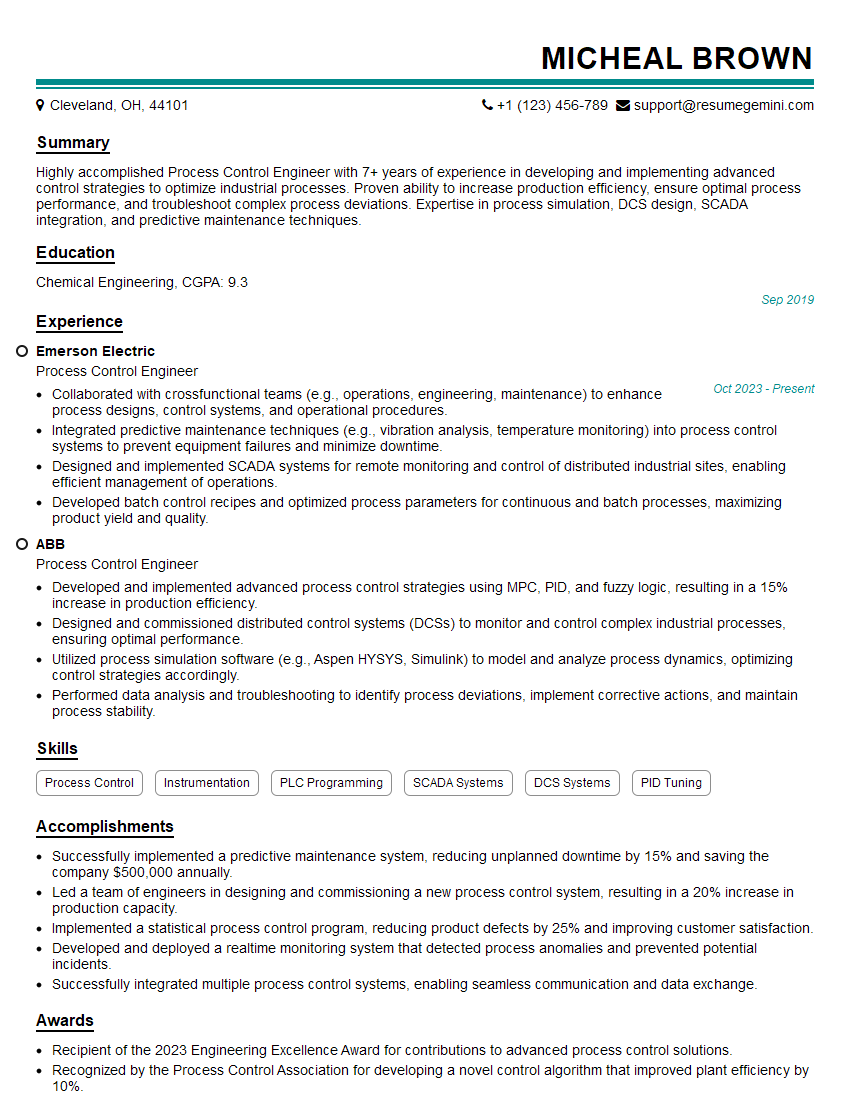

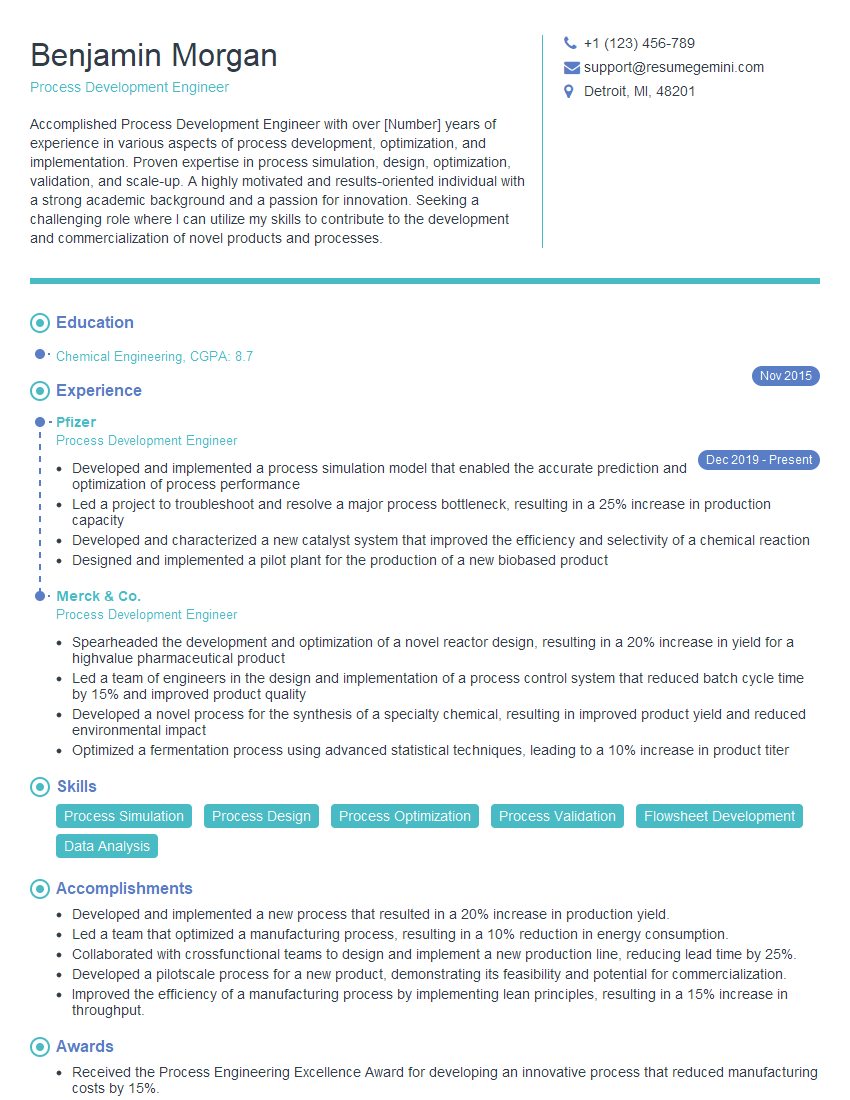

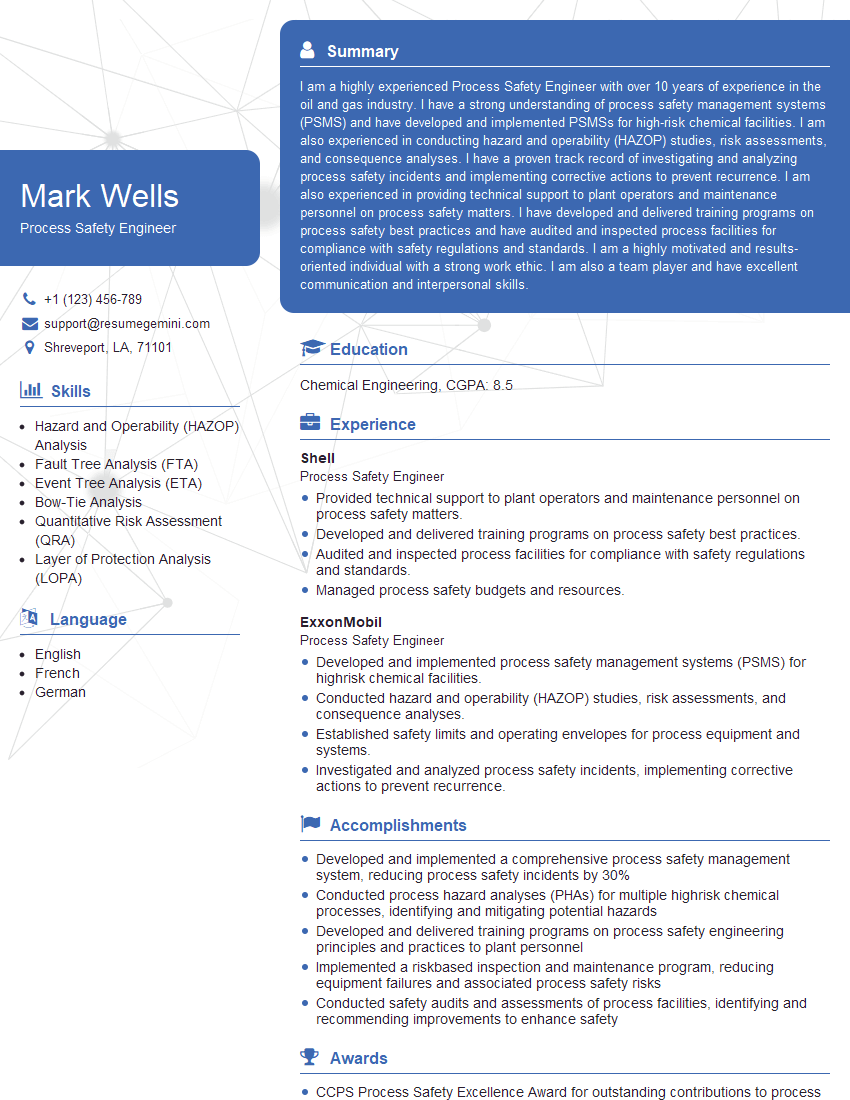

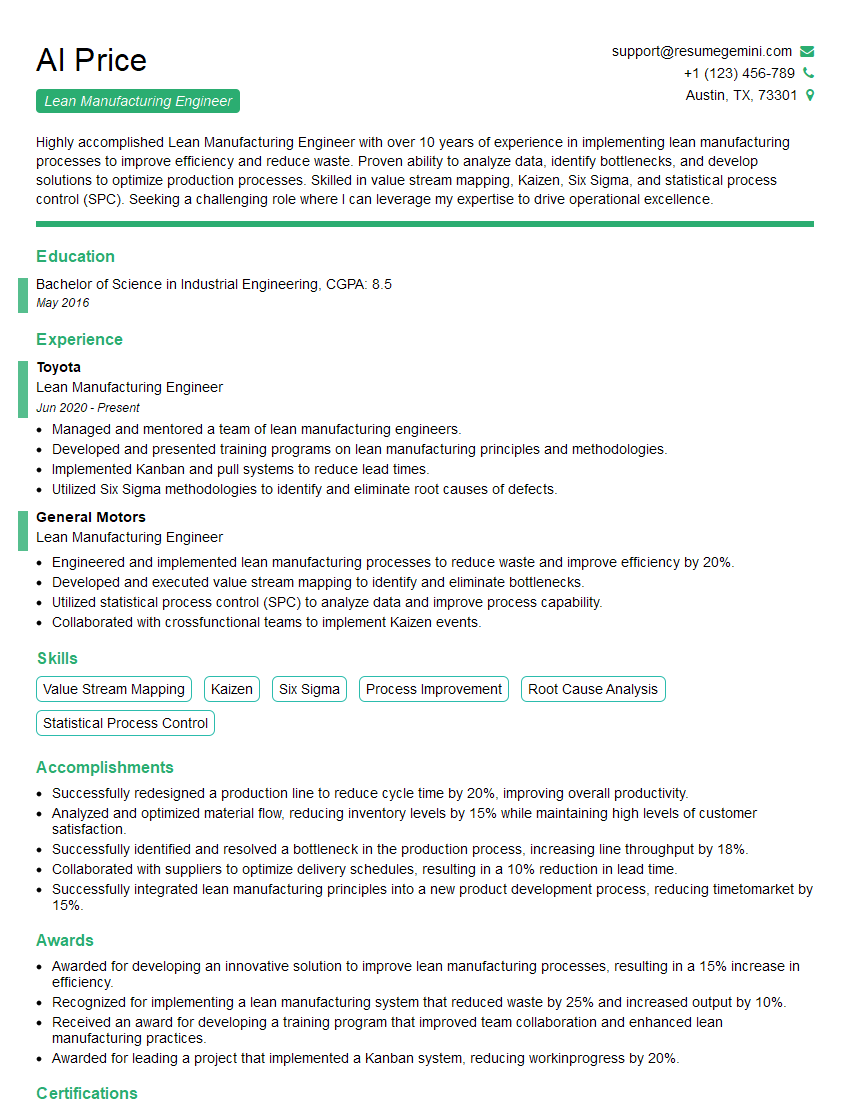

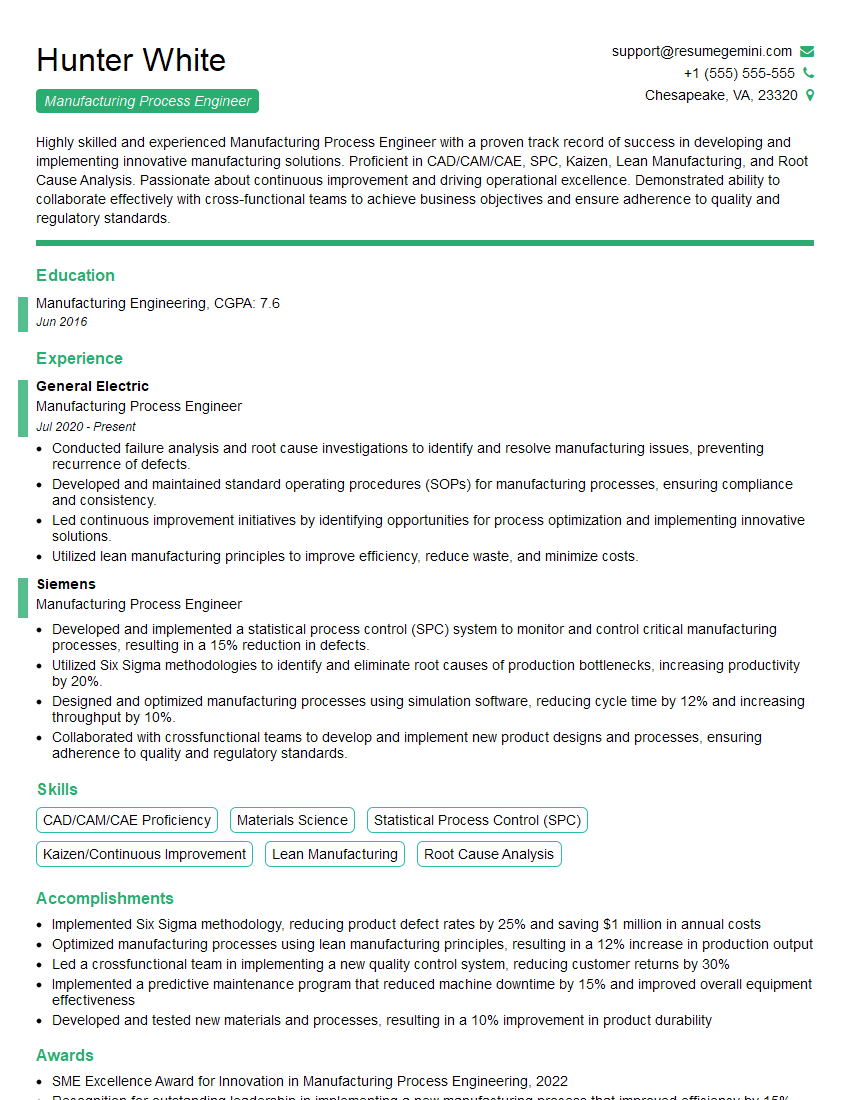

Mastering Process Engineering and Optimization is key to unlocking exciting career opportunities in various industries, offering significant growth potential and high demand. To maximize your job prospects, a well-crafted, ATS-friendly resume is essential. ResumeGemini is a trusted resource that can significantly enhance your resume-building experience. They provide examples of resumes specifically tailored to Process Engineering and Optimization roles, helping you present your skills and experience effectively to potential employers. Invest time in creating a compelling resume that showcases your expertise – it’s your first impression and a vital step toward landing your dream job.
