Are you ready to stand out in your next interview? Understanding and preparing for Load Measurement interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in a Load Measurement Interview

Q 1. Explain the difference between load testing, stress testing, and endurance testing.

Load testing, stress testing, and endurance testing are all crucial performance testing types, but they differ in their objectives and approaches. Imagine you’re testing a bridge’s strength:

Load Testing: This is like testing the bridge under its expected daily traffic. We apply a typical user load to determine if the system performs adequately under normal conditions. We look for response times, throughput, and resource utilization. For example, we might simulate 100 concurrent users browsing an e-commerce website and measure average page load time.

Stress Testing: This is like pushing the bridge beyond its designed limits. We gradually increase the load until the system breaks or performance degrades significantly, which reveals breaking points and shows how the system behaves under extreme pressure. This helps identify vulnerabilities and potential failure points. For the same e-commerce site, we might ramp toward 1000 concurrent users to see where the first bottleneck appears.

Endurance Testing (Soak Testing): This simulates long-term usage, akin to the bridge withstanding continuous traffic for months or years. We run a steady load for an extended period (hours or days) to detect memory leaks, resource exhaustion, or other problems that might surface over time. We might simulate 50 concurrent users over 72 hours to monitor server stability.

Q 2. Describe your experience with various load testing tools (e.g., JMeter, LoadRunner, Gatling).

I have extensive experience with JMeter, LoadRunner, and Gatling. Each has its strengths:

JMeter: An open-source tool, JMeter is highly versatile and customizable. I’ve used it extensively for testing REST APIs, web applications, and databases. Its Groovy scripting support (through JSR223 elements) is powerful, allowing for intricate test scenarios. For instance, I used JMeter to simulate thousands of concurrent users accessing a payment gateway, ensuring its stability under peak load.

LoadRunner: A commercial tool, LoadRunner offers robust features for complex scenarios and detailed analysis. Its correlation capabilities are superior, handling dynamic content in web applications efficiently. In a recent project, I leveraged LoadRunner’s advanced analytics to pinpoint performance bottlenecks in a large-scale ERP system.

Gatling: Gatling’s Scala-based DSL (recent releases also offer Java and Kotlin DSLs) provides a more developer-friendly experience and often leads to more concise and readable test scripts. Its efficient load-generation engine makes it well suited to scenarios requiring high-throughput simulations. I used Gatling to simulate a massive influx of users to a social media platform during a marketing campaign.

My choice of tool depends on project requirements, budget, and team expertise. For instance, open-source JMeter is often preferred for smaller projects or when budget is constrained, while LoadRunner’s sophisticated features might be necessary for large enterprise-level applications.

Q 3. How do you determine the appropriate number of virtual users for a load test?

Determining the appropriate number of virtual users (VUs) is critical. It’s not just about throwing as many VUs as possible at the system. Instead, we need a strategic approach:

Baseline Testing: Start with a small number of users to establish a baseline performance.

Understanding Business Requirements: How many concurrent users do we expect during peak times? This forms the basis of our testing.

Incremental Load: Gradually increase the number of VUs while monitoring system performance metrics. We look for performance degradation points. This helps identify thresholds, like the number of users before response time increases drastically.

Capacity Planning: Determine how many users the system can handle before reaching performance degradation, giving valuable input for capacity planning.

Real-World Scenarios: Consider realistic user behaviors. For an e-commerce site, this might involve simulating shopping carts, adding items, and checkout processes.

For example, if a website expects 500 concurrent users at peak, we might start with 100, gradually increasing to 500, and then pushing beyond to 750-1000 to see system capacity and breaking points. We analyze response times and resource usage at each stage.
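The incremental approach above can be sketched in a few lines. This is a minimal illustration, not a standard algorithm: the function name `find_degradation_point`, the "2x the baseline" rule, and the measurements are all assumptions chosen for the example.

```python
from typing import Optional


def find_degradation_point(
    steps: list[tuple[int, float]],
    max_slowdown: float = 2.0,
) -> Optional[int]:
    """Return the first VU level whose response time exceeds
    max_slowdown times the baseline (the smallest VU level), or None."""
    if not steps:
        return None
    ordered = sorted(steps)          # ascending by VU count
    baseline_ms = ordered[0][1]      # response time at the lowest load
    for vus, response_ms in ordered[1:]:
        if response_ms > baseline_ms * max_slowdown:
            return vus
    return None


# Hypothetical measurements: (virtual users, p95 response time in ms)
measurements = [(100, 220.0), (250, 240.0), (500, 310.0), (750, 520.0), (1000, 1400.0)]
knee = find_degradation_point(measurements)  # -> 750
```

With these made-up numbers the system holds up at the expected peak of 500 users and first crosses the chosen threshold at 750, which is the kind of input capacity planning needs.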

Q 4. Explain different load testing methodologies (e.g., spike testing, soak testing).

Various load testing methodologies exist, catering to different testing objectives:

Spike Testing: Simulates sudden surges in user traffic, like a flash sale or a viral social media campaign. We suddenly increase the load to observe how the system reacts to rapid changes.

Soak Testing (Endurance Testing): As previously discussed, this simulates long-term, sustained usage, identifying potential issues like memory leaks or resource exhaustion.

Volume Testing: Tests the system’s ability to handle large amounts of data. This might involve uploading massive files or processing a high volume of transactions.

Capacity Testing: This is designed to determine the maximum load a system can handle before performance degrades significantly. This helps define system limits.

The choice of methodology depends on the application and its anticipated usage patterns. For example, a social media platform might benefit from spike and endurance testing due to unpredictable surges and continuous user engagement.

Q 5. How do you identify performance bottlenecks in an application?

Identifying performance bottlenecks requires a systematic approach:

Monitor Server Metrics: Observe CPU utilization, memory consumption, disk I/O, and network traffic on servers. High CPU or memory usage often signals bottlenecks.

Database Analysis: Check query performance and database resource usage. Slow queries can significantly impact response times.

Application Logs: Examine application logs for errors, exceptions, or slow operations. This helps pinpoint code-level issues.

Profiling Tools: Employ profiling tools to identify performance hotspots within the application code.

Network Analysis: Analyze network traffic to detect slow network connections or network congestion.

For instance, during a load test, if we observe high CPU usage on the database server and slow query execution times, it points to a database bottleneck. We can then optimize database queries or upgrade the database hardware to resolve it.

Q 6. What are the key performance indicators (KPIs) you track during load testing?

Key performance indicators (KPIs) tracked during load testing include:

Response Time: Time taken for the system to respond to a user request.

Throughput: Number of requests processed per unit of time.

Error Rate: Percentage of failed requests.

Resource Utilization: CPU, memory, and disk I/O usage on servers and databases.

Virtual User Response Times: The end-to-end time a simulated user needs to complete a multi-step task (for example, a full checkout), as opposed to the response time of a single request.

These KPIs give a holistic view of system performance under load. For example, a high error rate might indicate problems with the application logic, while sustained high CPU utilization on the web server suggests either inefficient code or the need for more capacity.
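To make the first three KPIs concrete, here is a small sketch that derives them from raw request samples. The `Sample` record and the `summarize` helper are hypothetical names for this example; real tools such as JMeter or k6 produce these figures for you.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    elapsed_ms: float  # response time of one request
    ok: bool           # True if the request succeeded


def summarize(samples: list[Sample], duration_s: float) -> dict[str, float]:
    """Compute average response time, throughput, and error rate."""
    total = len(samples)
    if total == 0 or duration_s <= 0:
        raise ValueError("need at least one sample and a positive duration")
    failures = sum(1 for s in samples if not s.ok)
    return {
        "avg_response_ms": sum(s.elapsed_ms for s in samples) / total,
        "throughput_rps": total / duration_s,
        "error_rate_pct": 100.0 * failures / total,
    }


# Four made-up requests observed over a 2-second window
samples = [Sample(120.0, True), Sample(180.0, True), Sample(300.0, False), Sample(200.0, True)]
kpis = summarize(samples, duration_s=2.0)
# -> avg_response_ms 200.0, throughput_rps 2.0, error_rate_pct 25.0
```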

Q 7. Describe your experience with monitoring tools during load tests.

My experience encompasses various monitoring tools, including:

Application Performance Monitoring (APM) tools: Tools like Dynatrace or New Relic provide real-time insights into application performance, helping identify bottlenecks and pinpoint problematic code sections. I used Dynatrace to monitor a microservices architecture during load testing, easily identifying slow-performing services.

System monitoring tools: Tools like Nagios or Zabbix monitor system resources (CPU, memory, disk I/O, network traffic) across servers and other infrastructure components. These are crucial to track resource saturation under load.

Log management tools: Tools like the ELK stack (Elasticsearch, Logstash, Kibana) aggregate and analyze logs from various sources, providing valuable contextual data for troubleshooting. This is indispensable for identifying patterns within error logs during and after load tests.

The choice of monitoring tool is highly contextual, depending on the scale and complexity of the system being tested. For distributed systems, APM tools are very useful, while system monitoring tools provide comprehensive system-level visibility.

Q 8. How do you analyze load test results and generate reports?

Analyzing load test results involves a multi-step process focused on understanding system behavior under stress. First, I examine key performance indicators (KPIs) like response times, throughput, resource utilization (CPU, memory, network), and error rates. I use tools to visualize these metrics, often creating graphs and charts to identify trends and bottlenecks. For example, a sudden spike in response time might indicate a database query issue.

Next, I delve deeper into error logs and identify specific error messages. This helps pinpoint the root causes of failures. A common strategy is to correlate these errors with specific load levels to determine thresholds where the system becomes unstable.

Finally, I generate comprehensive reports summarizing findings, including key metrics, charts illustrating performance trends, and recommendations for performance improvements. These reports often include sections on potential bottlenecks, recommendations for scaling, and a comparison of the system’s performance against predefined service level agreements (SLAs).

For example, I once discovered a significant database bottleneck by analyzing response time graphs and correlating them with database server resource utilization data from the load test, leading to database optimization and a 30% improvement in overall application performance.
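Correlating errors with load levels, as described above, might look like the following sketch. The record shape (active virtual users, success flag), the helper names, and the 1% default limit are assumptions for illustration only.

```python
from collections import defaultdict
from typing import Iterable, Optional


def error_rate_by_load(records: Iterable[tuple[int, bool]]) -> dict[int, float]:
    """Group (active_vus, succeeded) records and return error % per load level."""
    totals: dict[int, int] = defaultdict(int)
    failures: dict[int, int] = defaultdict(int)
    for vus, ok in records:
        totals[vus] += 1
        if not ok:
            failures[vus] += 1
    # Built in ascending VU order so callers can scan from low to high load
    return {vus: 100.0 * failures[vus] / totals[vus] for vus in sorted(totals)}


def first_unstable_level(rates: dict[int, float], max_error_pct: float = 1.0) -> Optional[int]:
    """Return the lowest load level whose error rate exceeds the limit."""
    for vus in sorted(rates):
        if rates[vus] > max_error_pct:
            return vus
    return None


# Made-up results: no errors at 100 VUs, 2% at 500, 20% at 1000
records = (
    [(100, True)] * 50
    + [(500, True)] * 49 + [(500, False)]
    + [(1000, True)] * 40 + [(1000, False)] * 10
)
rates = error_rate_by_load(records)
unstable_at = first_unstable_level(rates)  # -> 500 with the 1% default
```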

Q 9. How do you handle unexpected errors or failures during a load test?

Handling unexpected errors during a load test requires a systematic approach. My first step is to immediately pause the test to prevent further damage and data corruption. Then, I carefully analyze the error logs and monitoring data to identify the root cause. Is it a server-side issue, a network problem, or a flaw in the test script itself?

Once identified, I implement a solution. This might involve restarting a failed server, adjusting network configurations, or debugging and correcting the test script. For example, a sudden increase in memory usage could require scaling up resources in the cloud.

After resolving the issue, I resume the test, ensuring it’s safe to continue. Post-mortem analysis helps us understand why the error occurred and prevents similar incidents in the future. This includes documenting root causes and implementing preventative measures. For instance, setting up robust monitoring and alerting systems can significantly reduce downtime and improve response time to unexpected events.

Q 10. Explain your experience with scripting load tests.

I have extensive experience scripting load tests using various tools like JMeter, LoadRunner, and Gatling. My scripting skills go beyond simply recording user actions. I understand the importance of creating robust and maintainable scripts that accurately simulate real-world user behavior. This involves parameterization to handle varying data inputs, using transactions to measure performance, and implementing appropriate assertions to validate responses.

For example, when testing an e-commerce site, I would parameterize the product IDs to simulate multiple users browsing and purchasing different items. Using JMeter, I’d create a test plan with separate thread groups for different user behaviors like browsing, searching, and adding items to a cart. I also incorporate realistic pauses and think times to mimic human interactions accurately. The scripts are designed to be easily scalable to simulate different load levels.

I’m adept at handling complex scenarios, such as simulating user authentication, handling dynamic content, and working with various protocols (HTTP, HTTPS, WebSockets).
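The scripting ideas above (parameterization, think time, and response assertions) are tool-independent. The sketch below shows them in plain Python rather than in JMeter, LoadRunner, or Gatling syntax; the `/products/<id>` path, the `view_product` and `run_user` names, and the injectable `send` transport are all hypothetical.

```python
import random
import time
from typing import Callable

# A transport is any callable that takes a request path and returns (status, body).
Transport = Callable[[str], tuple[int, str]]


class ResponseCheckError(Exception):
    """Raised when a response fails one of the script's assertions."""


def view_product(
    send: Transport,
    product_id: str,
    think_time_s: tuple[float, float] = (1.0, 3.0),
    sleep: Callable[[float], None] = time.sleep,
) -> float:
    """Run one 'view product' transaction and return its elapsed time in seconds."""
    start = time.perf_counter()
    status, body = send(f"/products/{product_id}")  # parameterized request
    elapsed = time.perf_counter() - start
    # Assertions: validate the response, not just that something came back
    if status != 200:
        raise ResponseCheckError(f"unexpected status {status}")
    if product_id not in body:
        raise ResponseCheckError("response does not mention the requested product")
    sleep(random.uniform(*think_time_s))  # think time between user actions
    return elapsed


def run_user(
    send: Transport,
    product_ids: list[str],
    sleep: Callable[[float], None] = time.sleep,
) -> list[float]:
    """Simulate one user viewing each product in turn."""
    return [view_product(send, pid, sleep=sleep) for pid in product_ids]
```

Passing the transport and the sleep function as arguments keeps the script testable without a live server; in a real run, `send` would wrap an HTTP client and the product IDs would typically come from a data file.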

Q 11. How do you design a load test plan?

Designing a load test plan is crucial for a successful test. It begins with clearly defining the objectives. What are we trying to achieve? Are we testing for scalability, identifying bottlenecks, or validating performance against SLAs? Next, we identify the critical user flows and transactions that need to be tested.

Then, we determine the load profile – the number of concurrent users, the ramp-up time, and the test duration. This is often informed by historical data on user traffic patterns or projections of future usage. I use various techniques to model user behavior, ensuring the test accurately reflects real-world scenarios. For instance, a realistic load profile wouldn’t have all users performing the same action at the same time.

The plan also outlines the test environment, the metrics to be collected, and the criteria for success or failure. A well-defined plan ensures consistency and provides a framework for analyzing the results and drawing meaningful conclusions. The final step involves creating a detailed schedule and assigning responsibilities for the test execution and reporting phases.

Q 12. Describe your experience with different load test environments (e.g., cloud, on-premise).

I’m proficient in designing and executing load tests in both cloud and on-premise environments. Cloud-based load testing (using services like AWS, Azure, or Google Cloud) offers scalability and flexibility. It’s ideal for simulating massive user loads that would be difficult to achieve on-premise. However, it requires careful consideration of network latency and costs.

On-premise load testing uses internal infrastructure. It provides better control over the environment but can be limited in terms of scalability. It’s often preferred for security-sensitive applications or when dealing with legacy systems. The choice depends on the project’s specific requirements, budget, and security concerns.

In either environment, I focus on setting up accurate load generation infrastructure and ensuring monitoring and logging are well-integrated to collect comprehensive performance data.

Q 13. How do you correlate load test results with real-world user behavior?

Correlating load test results with real-world user behavior involves a combination of techniques. First, we need accurate data on real user behavior. This often comes from analytics tools like Google Analytics or custom logging systems that track user actions, response times, and error rates.

Next, we design the load test to mimic this real-world behavior as closely as possible. This includes using realistic load profiles, including pauses and think times, and simulating various user scenarios. The key is to ensure the load test accurately reflects the distribution of user activity, such as the proportion of users performing different tasks.

Once the test is complete, we compare the load test results (response times, error rates, etc.) with the real-world metrics. Differences might highlight areas for improvement, indicating that the load test is not adequately simulating real-world usage or pointing to performance issues in the application. This iterative approach of comparing and refining the load tests ensures that results remain relevant to actual application performance.
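One way to carry the observed distribution of user activity into a test is to split the virtual users in the same proportions. The sketch below assumes per-scenario hit counts exported from an analytics tool; the scenario names, the counts, and the `scenario_mix` helper are made up for illustration.

```python
def scenario_mix(observed_counts: dict[str, int], total_vus: int) -> dict[str, int]:
    """Split total_vus across scenarios in proportion to observed traffic.

    Uses largest-remainder rounding so the allocations add up to total_vus.
    """
    total = sum(observed_counts.values())
    if total <= 0:
        raise ValueError("observed counts must sum to a positive number")
    exact = {name: total_vus * count / total for name, count in observed_counts.items()}
    alloc = {name: int(share) for name, share in exact.items()}
    leftover = total_vus - sum(alloc.values())
    # Hand the remaining users to the scenarios with the largest fractional parts
    by_remainder = sorted(exact, key=lambda name: exact[name] - alloc[name], reverse=True)
    for name in by_remainder[:leftover]:
        alloc[name] += 1
    return alloc


# Hypothetical analytics export: page hits per user flow over one day
observed = {"browse": 7000, "search": 2000, "checkout": 1000}
mix = scenario_mix(observed, total_vus=500)
# -> {"browse": 350, "search": 100, "checkout": 50}
```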

Q 14. What is your experience with performance tuning and optimization?

Performance tuning and optimization is an integral part of my load testing process. Once a load test identifies bottlenecks, I collaborate with developers to address them. This involves analyzing resource utilization, code profiling, database query optimization, and caching strategies.

For example, I might identify a slow database query that’s impacting overall response time. By working with the database administrator, we could optimize the query, add indexes, or implement caching mechanisms to improve performance. Similarly, code profiling can reveal inefficient algorithms or memory leaks that can be addressed through code refactoring and optimization.

After implementing these improvements, I perform another round of load testing to validate the effectiveness of the changes. This iterative process ensures that performance issues are systematically addressed and application performance is optimized. I also focus on proactive performance tuning, anticipating potential bottlenecks before they impact the application’s performance in production.

Q 15. How do you ensure the accuracy and reliability of your load tests?

Ensuring accurate and reliable load tests hinges on meticulous planning and execution. It’s not just about throwing a lot of virtual users at a system; it’s about understanding the system’s behavior under stress. We achieve this through several key steps:

- Realistic Test Scenarios: We begin by creating realistic test scenarios mirroring real-world user behavior. This includes simulating various user actions, data input patterns, and concurrent activity levels. For example, if we’re testing an e-commerce site, we’d model scenarios like browsing products, adding items to carts, proceeding to checkout, and making payments, replicating the expected distribution of user actions. Ignoring this can lead to skewed results.

- Representative Test Data: Using realistic data is crucial. We generate or prepare data sets that mimic the size, structure, and characteristics of production data. Using small or synthetic data can lead to inaccurate performance assessments.

- Proper Infrastructure Setup: The load testing environment should accurately mirror the production environment. This includes network configuration, hardware specifications (CPU, memory, network bandwidth), and database settings. Differences between test and production environments introduce variables that invalidate test results.

- Controlled Experimentation: We use a scientific approach. We conduct multiple runs, vary the load gradually (ramp-up), and monitor key performance indicators (KPIs). This helps us understand the system’s behavior across different load levels, identify bottlenecks, and verify results.

- Validation and Verification: We validate our results against historical data and baseline metrics. We also verify that the test environment is stable and consistent throughout the test execution, ensuring no external factors influence our results.

- Monitoring and Logging: Robust monitoring and logging during the test is essential. This captures system behavior across all levels (application, server, network) for post-test analysis and root cause diagnosis.

For instance, in a recent project testing a financial trading platform, we meticulously replicated their peak trading hours, using realistic market data and user interaction patterns. This allowed us to accurately assess the system’s ability to handle extreme load and identify potential performance bottlenecks before they impacted real users.
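The "multiple runs" idea from the controlled-experimentation step can be checked numerically. This sketch treats repeated runs as consistent when the coefficient of variation of their 95th-percentile response times stays under a limit; the 10% limit and the run values are assumptions, not an industry standard.

```python
import statistics


def runs_are_consistent(p95_per_run: list[float], max_cv: float = 0.10) -> bool:
    """Return True if run-to-run variation (stdev / mean) is within max_cv."""
    if len(p95_per_run) < 2:
        raise ValueError("need at least two runs to judge consistency")
    mean = statistics.mean(p95_per_run)
    cv = statistics.stdev(p95_per_run) / mean
    return cv <= max_cv


# Hypothetical p95 response times (ms) from three identical runs
stable_runs = [410.0, 395.0, 420.0]
noisy_runs = [400.0, 650.0, 380.0]
```

If identical runs disagree as much as `noisy_runs` does, the environment or test data is probably not under control, and conclusions drawn from any single run are suspect.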

Q 16. Explain your approach to capacity planning based on load test results.

Capacity planning based on load test results is a crucial step in ensuring system scalability and performance. It’s an iterative process involving several key phases:

- Analyzing Load Test Results: We thoroughly analyze the results, focusing on key metrics like response times, throughput, resource utilization (CPU, memory, network), and error rates. We identify the load level at which the system starts to degrade and pinpoint potential bottlenecks.

- Identifying Bottlenecks: Based on the analysis, we pinpoint the parts of the system causing performance degradation. This might be the database, application server, network infrastructure, or even the code itself. This step is often aided by performance profiling tools.

- Extrapolating to Future Load: We extrapolate the results to project future needs. Considering predicted growth in user base, traffic patterns, and transaction volumes, we forecast the infrastructure requirements to handle projected loads. We might use forecasting models or statistical analysis depending on the data.

- Recommending Capacity Adjustments: Based on the projections, we recommend adjustments to the infrastructure (hardware upgrades, software optimizations, database tuning). This could involve increasing server capacity, adding more network bandwidth, optimizing database queries, or upgrading software components. We also assess the feasibility of these changes and estimate their costs.

- Iterative Refinement: Capacity planning isn’t a one-time effort. We continuously monitor the system’s performance after capacity adjustments, regularly conduct load tests, and fine-tune the infrastructure as needed.

For example, if our load tests show the database becoming a bottleneck under peak load, we might recommend upgrading the database server hardware, optimizing database queries, or implementing database caching strategies. This iterative approach ensures the system remains performant and scalable.
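The extrapolation step can be illustrated with simple arithmetic. The sketch below assumes compound annual growth, roughly linear horizontal scaling, and a fixed safety headroom; all of these, along with the function name and the numbers, are simplifying assumptions rather than a forecasting model.

```python
import math


def servers_needed(
    peak_rps_today: float,
    annual_growth: float,
    years: int,
    rps_per_server: float,
    headroom: float = 0.3,
) -> int:
    """Estimate server count for a projected peak load.

    rps_per_server is the throughput one server sustained in the load test
    before degrading; headroom is the fraction of that capacity kept in reserve.
    """
    projected_rps = peak_rps_today * (1 + annual_growth) ** years
    usable_rps = rps_per_server * (1 - headroom)
    return math.ceil(projected_rps / usable_rps)


# Made-up inputs: 800 RPS peak today, 25% yearly growth, 2-year horizon,
# each server handled 300 RPS in the load test, 30% headroom
needed = servers_needed(800, 0.25, 2, 300)  # 1250 RPS / 210 RPS per server -> 6
```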

Q 17. How do you handle different types of load (e.g., uniform, ramp-up, spike)?

Load tests often need to simulate different user behavior patterns. We handle this by carefully designing test scenarios that incorporate various load profiles:

- Uniform Load: This simulates a consistent level of user activity over a defined period. It’s useful for establishing a baseline performance under steady-state conditions. We use this to establish the system’s capacity to handle a sustained level of traffic. The load generator might be configured to maintain a constant number of virtual users making requests.

- Ramp-up Load: This starts with a low load and gradually increases it over time, mimicking how user activity builds up during the day. This helps identify breaking points where the system struggles to keep up with the increasing load. The load generator increases the number of virtual users at a pre-defined rate.

- Spike Load: This simulates sudden bursts of activity. It’s designed to test the system’s resilience to unexpected surges in traffic. We’d use this to test the system’s ability to handle flash crowds or unexpected events. The load generator would simulate a very rapid increase in user activity followed by a decrease.

- Combination Load: In most real-world situations, we see a combination of these patterns. Our test scenarios often incorporate all three to capture the nuances of realistic user behavior, generating complex load profiles.

Consider an online gaming server; we would use a ramp-up test to mimic the increase in players during game launch, a spike test to simulate a sudden influx of players during a game event, and a uniform load to test the steady-state capacity during regular gameplay.
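The load profiles above can be expressed as functions that return the target number of virtual users at a given second of the test. This is an illustrative sketch; the function names and the numbers in the game-launch example are hypothetical and not tied to any particular load generator.

```python
def uniform(t: float, vus: int) -> int:
    """Constant load: the same number of users for the whole test."""
    return vus


def ramp_up(t: float, target_vus: int, ramp_s: float) -> int:
    """Linear increase from 0 to target_vus over ramp_s seconds, then hold."""
    return round(target_vus * min(t, ramp_s) / ramp_s)


def spike(t: float, base_vus: int, peak_vus: int, start_s: float, length_s: float) -> int:
    """Jump to peak_vus for length_s seconds starting at start_s, else base_vus."""
    return peak_vus if start_s <= t < start_s + length_s else base_vus


def game_launch_profile(t: float) -> int:
    """Combination: ramp to 200 players in 60 s, with a 30 s spike to 800 at t=120."""
    steady = ramp_up(t, target_vus=200, ramp_s=60)
    return spike(t, base_vus=steady, peak_vus=800, start_s=120, length_s=30)


# Sample the combined profile at a few points in time (seconds)
schedule = [game_launch_profile(t) for t in (0, 30, 90, 130, 160)]
# -> [0, 100, 200, 800, 200]
```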

Q 18. What is your experience with using synthetic monitoring tools?

Synthetic monitoring tools are invaluable for proactively identifying performance issues. My experience involves using tools like Datadog, New Relic, and Dynatrace. These tools allow us to simulate real-user requests from various geographical locations and continuously monitor key performance indicators (KPIs). This is distinct from load testing, which is typically a more focused, intensive effort.

- Proactive Issue Detection: Synthetic monitors continuously run, detecting performance degradation before users report problems. This allows for quicker identification and resolution of issues.

- Performance Baselines: They help establish baselines for performance, enabling us to identify deviations and trigger alerts.

- Geographical Coverage: Testing from various locations helps identify performance issues related to network latency or geographic limitations.

- Integration with Alerting Systems: These tools often integrate with alerting systems, instantly notifying teams of potential problems. This allows for swift intervention, minimizing user impact.

For example, using Datadog, we’ve been able to set up synthetic monitors that mimic user login attempts from various locations. If response times exceed pre-defined thresholds, we’re alerted immediately, allowing us to investigate and resolve issues before they affect many users.
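Conceptually, a synthetic check is a timed probe compared against a threshold. The sketch below is a generic illustration and not the Datadog, New Relic, or Dynatrace API; `run_check`, the `probe` callable, and the injectable clock are assumptions made so the example runs without a network.

```python
import time
from typing import Callable


def run_check(
    probe: Callable[[], bool],
    threshold_ms: float,
    clock: Callable[[], float] = time.perf_counter,
) -> dict:
    """Time one probe call and decide whether it should raise an alert."""
    start = clock()
    try:
        ok = bool(probe())   # probe returns True when the scripted step succeeded
    except Exception:
        ok = False           # any exception counts as a failed check
    elapsed_ms = (clock() - start) * 1000.0
    return {
        "ok": ok,
        "elapsed_ms": elapsed_ms,
        "alert": (not ok) or elapsed_ms > threshold_ms,
    }
```

In practice the probe would perform something like a scripted login from a given region, and a scheduler would call `run_check` at a fixed interval and forward alerts to the on-call system.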

Q 19. Describe your experience with different types of load generators.

I have extensive experience with various load generators, each with its own strengths and weaknesses:

- JMeter: An open-source tool ideal for simulating various types of user behavior. It’s highly customizable but can be complex to set up for large-scale tests.

- LoadRunner: A commercial tool offering robust features and scalability, but it’s expensive and requires specialized training.

- k6: A modern open-source tool with a focus on developer experience and JavaScript scripting. It’s well-suited for modern applications and integrates well with CI/CD pipelines.

- Gatling: Another open-source tool that utilizes Scala for scripting and offers good performance.

- BlazeMeter: A cloud-based load testing platform that scales easily to extremely high load levels. It simplifies test setup and provides excellent reporting.

The choice of load generator often depends on the specific needs of the project. For example, for a small project with a simple application, JMeter might suffice. For large-scale, complex projects requiring extreme scalability, BlazeMeter might be a better choice.

Q 20. How do you manage and troubleshoot load test infrastructure?

Managing and troubleshooting load test infrastructure requires a structured approach:

- Test Environment Setup: We establish a dedicated, isolated environment for load testing. This prevents interference with production systems and ensures consistent test results. This often involves using virtual machines or cloud resources.

- Load Generator Configuration: Proper configuration of load generators is crucial. This involves setting the number of virtual users, ramp-up rate, test duration, and the specific user scenarios to simulate.

- Monitoring Tools: We use system monitoring tools (like those mentioned earlier) to track server performance (CPU, memory, disk I/O), network traffic, and application metrics during the test. This helps identify potential bottlenecks.

- Logging and Analysis: Detailed logging is essential for identifying and diagnosing problems. This includes server logs, application logs, and load generator logs. We use tools to analyze these logs, correlating errors with system performance.

- Troubleshooting Strategies: Troubleshooting involves a systematic approach, starting with identifying the point of failure (network, server, application). We use network monitoring tools, performance profilers, and debugging techniques to pin down the root cause.

For example, if we observe high CPU utilization on a database server during a load test, we’d analyze database queries, consider adding more resources to the server, or optimize the database schema. If we see network latency, we’d investigate network configuration and connectivity.

Q 21. Explain your understanding of different performance testing metrics (e.g., response time, throughput, error rate).

Understanding performance testing metrics is crucial for accurate assessment and reporting. Here are some key metrics:

- Response Time: The time it takes for the system to respond to a request. This is often reported as an average, but percentiles (e.g., the 90th or 95th percentile) matter just as much because they expose tail latency, the slowest responses that an average hides. Lower response times indicate better performance.

- Throughput: The number of requests processed per unit of time, for example requests per second (RPS). Higher throughput indicates greater efficiency.

- Error Rate: The percentage of requests that fail. A high error rate points to system instability or bugs. We look at both the raw error count and the percentage.

- Resource Utilization: The consumption of system resources such as CPU, memory, disk I/O, and network bandwidth, often expressed as a percentage of capacity. High utilization might indicate bottlenecks and potential areas for optimization.

- Concurrency: The number of users simultaneously accessing the system. High concurrency puts greater strain on the system.

Interpreting these metrics requires understanding their interrelationships. For example, once the system saturates, throughput tends to plateau while response times keep climbing as requests queue up. Similarly, a low error rate doesn’t necessarily mean good performance if response times are slow. Analyzing these metrics together provides a comprehensive understanding of system performance.
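A short example shows why percentiles belong next to the average. The sketch uses the nearest-rank definition of a percentile, which is one of several conventions in use (tools may interpolate differently), and the latency values are made up.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Nine fast responses and one very slow one (milliseconds)
latencies = [100.0] * 9 + [2000.0]
mean_ms = sum(latencies) / len(latencies)  # -> 290.0
p50_ms = percentile(latencies, 50)         # -> 100.0
p95_ms = percentile(latencies, 95)         # -> 2000.0
```

The mean of 290 ms describes no actual request: most users saw 100 ms and one in ten waited two seconds, which only the high percentile reveals.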

Q 22. How do you integrate load testing into the CI/CD pipeline?

Integrating load testing into a CI/CD pipeline is crucial for ensuring application stability and performance under expected load. It’s not just about adding a single load test; it’s about creating a robust, automated process. This typically involves using a load testing tool that can be triggered via the CI/CD system, such as Jenkins, GitLab CI, or Azure DevOps.

The process usually involves these steps:

- Trigger: The load test is initiated automatically upon code deployment or at specific points in the CI/CD pipeline (e.g., after successful unit and integration tests).

- Execution: The load testing tool simulates user traffic against the deployed application, using predefined scenarios and load profiles.

- Results Analysis: The tool collects performance metrics (response times, error rates, resource utilization) and generates reports. These reports can be automatically checked for performance thresholds.

- Feedback and Integration: The results are integrated into the CI/CD pipeline. If performance thresholds are breached, the pipeline might halt, preventing the deployment of a potentially unstable application. This ensures that only applications that pass load tests are released.

For example, you might run JMeter (in non-GUI mode) or k6 as a command-line step in the pipeline, so that a deployment stage triggers the test and the tool’s exit status, or a follow-up check on its results, decides whether the pipeline continues. k6 supports pass/fail thresholds natively, and tools such as Prometheus and Grafana can be used to store and visualize the metrics over time.
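The threshold check that halts a pipeline can be as small as the following sketch. It assumes the load testing tool has already written its summary metrics somewhere the script can read; the metric names, the limits, and both helper functions are hypothetical.

```python
def check_thresholds(metrics: dict[str, float], limits: dict[str, float]) -> list[str]:
    """Return a human-readable message for every limit that is breached."""
    breaches = []
    for name, limit in limits.items():
        value = metrics.get(name)
        if value is None:
            # A missing metric is treated as a failure rather than silently passing
            breaches.append(f"{name}: metric missing from results")
        elif value > limit:
            breaches.append(f"{name}: {value} exceeds limit {limit}")
    return breaches


def exit_code(breaches: list[str]) -> int:
    """Non-zero exit status makes most CI systems fail the current stage."""
    return 1 if breaches else 0


# Hypothetical limits agreed with the team, and results from one run
limits = {"p95_response_ms": 500.0, "error_rate_pct": 1.0}
results = {"p95_response_ms": 480.0, "error_rate_pct": 0.4}
breaches = check_thresholds(results, limits)  # -> [] (the run passes)
```

A pipeline step would end with something like `sys.exit(exit_code(breaches))` after printing the messages, so that Jenkins, GitLab CI, or Azure DevOps marks the stage as failed when a limit is exceeded.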

Q 23. Explain your understanding of network protocols and their impact on performance.

Network protocols significantly impact application performance. Understanding their intricacies is crucial for effective load testing and performance optimization. Protocols like TCP (Transmission Control Protocol) and UDP (User Datagram Protocol) have different characteristics impacting performance differently.

TCP, a connection-oriented protocol, provides reliable, ordered data transmission through acknowledgments, error detection, and retransmission of lost segments. However, this reliability comes at the cost of overhead (connection setup, acknowledgments, congestion control), potentially leading to higher latency. UDP, a connectionless protocol, offers lower overhead but sacrifices reliability. Packets can be lost or arrive out of order, so applications that require data integrity must either add their own reliability layer or use TCP.

Furthermore, the choice of HTTP version (HTTP/1.1 vs. HTTP/2) affects performance. HTTP/2, with features like multiplexing and header compression, often delivers noticeable performance improvements over HTTP/1.1, particularly for pages that issue many requests.

Other protocols, such as DNS (Domain Name System) and database wire protocols (e.g., those of MySQL and PostgreSQL), also play roles. DNS resolution delays can add noticeably to overall response time. On the database side, inefficient queries, poorly configured connections, and an ill-suited database technology can drastically affect application performance.

During load testing, we monitor network metrics like packet loss, latency, and throughput to identify bottlenecks. Tools like Wireshark can be used to capture and analyze network traffic to pinpoint protocol-related performance issues.
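As a rough illustration of separating name resolution from connection setup, the sketch below times the two phases independently using only the standard `socket` module. The function name `time_connection_phases` and the returned keys are assumptions made for this example; a real investigation would normally rely on the load testing tool's own timing breakdown or a packet capture.

```python
import socket
import time


def time_connection_phases(host, port, timeout=2.0):
    """Measure name resolution and TCP connect time separately, in milliseconds.

    Returns a dict with the hypothetical keys "dns_ms" and "connect_ms".
    Raises OSError if resolution or the connection fails.
    """
    start = time.perf_counter()
    # Name resolution (served from the hosts file, a local cache, or DNS).
    infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    resolved = time.perf_counter()

    family, socktype, proto, _canonname, sockaddr = infos[0]
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        # The TCP three-way handshake happens inside connect().
        sock.connect(sockaddr)
        connected = time.perf_counter()

    return {
        "dns_ms": (resolved - start) * 1000,
        "connect_ms": (connected - resolved) * 1000,
    }
```

Calling this repeatedly against a target host during a test run gives a crude view of whether slow responses start with slow lookups or slow handshakes, before any application work begins.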

Q 24. Describe your experience with database performance tuning and optimization.

Database performance tuning is critical for overall application performance, especially under load. My experience includes optimizing database schemas, query performance, and connection pools.

Schema optimization involves designing efficient tables, indexes, and relationships. Poorly designed schemas can lead to slow queries and excessive resource consumption. For example, using appropriate data types and creating indexes on frequently queried columns can dramatically improve query speed.

Query optimization involves analyzing and improving slow SQL queries. Execution plans (for example, the output of EXPLAIN) show how the database intends to run a query and help identify bottlenecks such as full table scans. Techniques like query rewriting, adding indexes, and optimizing joins are frequently employed.
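The effect of an index on an execution plan can be seen with a small experiment. The sketch below uses SQLite (through Python's built-in `sqlite3` module) purely as a stand-in because it needs no server; the `orders` table, its columns, and the index name are hypothetical, and other databases print their plans in different formats.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return SQLite's plan for a query as one string (last column is the detail)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


def plans_before_and_after_index():
    """Build a hypothetical orders table and compare plans around CREATE INDEX."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
        [(i % 100, i * 1.5) for i in range(1000)],
    )
    sql = "SELECT * FROM orders WHERE customer_id = ?"

    before = query_plan(conn, sql, (42,))  # no index yet: full table scan
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    after = query_plan(conn, sql, (42,))   # index on the filtered column is used
    conn.close()
    return before, after
```

Printing the two strings shows the plan changing from a scan of the whole table to a search that uses `idx_orders_customer`, which is the kind of difference to look for when reading explain output.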

Connection pool management involves configuring the appropriate number of database connections. Too few connections can lead to queueing delays, while too many can exhaust database resources. I’ve used various monitoring tools to analyze connection pool utilization and adjust configuration accordingly.
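A useful starting point for pool sizing is Little's Law: the average number of connections in use equals the query arrival rate multiplied by the average time each query holds a connection. The sketch below applies that estimate; the function name and the `headroom_pct` parameter are assumptions for illustration, and the result is only a first guess to be validated under load.

```python
import math


def required_connections(queries_per_second, mean_query_ms, headroom_pct=0):
    """Estimate pool size via Little's Law: in-use = arrival rate * hold time.

    queries_per_second: expected peak query rate against the pool.
    mean_query_ms:      average time a query holds a connection, in ms.
    headroom_pct:       optional extra capacity for bursts (an assumption).
    """
    busy = queries_per_second * mean_query_ms / 1000
    return max(1, math.ceil(busy * (100 + headroom_pct) / 100))
```

For instance, 200 queries per second that each hold a connection for 50 ms keep about 10 connections busy on average, so `required_connections(200, 50, headroom_pct=20)` suggests a pool of 12. Observed pool utilization during a load test should then confirm or correct that figure.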

Finally, selecting the appropriate database technology for the application workload is essential. For instance, NoSQL databases might be better suited for certain types of applications than relational databases, depending on the application’s read/write ratio and data structure.

Q 25. How do you identify and mitigate performance issues related to caching?

Caching is a critical technique for enhancing performance by storing frequently accessed data in a readily accessible location, such as memory. When caching strategies aren’t properly implemented or maintained, performance issues can arise.

Identifying caching problems often involves analyzing slow response times, especially for data that should be cached. Monitoring tools can show cache hit ratios; a low hit ratio indicates that caching isn’t effective. This might be due to a cache that’s too small, improperly configured cache invalidation, or code that doesn’t utilize the cache correctly.

Mitigating caching issues might involve:

- Increasing cache size: More memory allocated to the cache might improve the hit ratio.

- Optimizing cache eviction policies: Adjusting policies to ensure that frequently accessed data remains in the cache.

- Improving cache invalidation: Ensuring that outdated data is promptly removed from the cache.

- Debugging cache usage in the application code: Ensuring the application is correctly utilizing the cache and handling cache misses efficiently.

For example, if you observe a large number of cache misses for a specific data item, you might need to adjust the cache’s eviction policy or increase the cache size to accommodate that data. Proper logging and monitoring are vital to diagnose and address caching problems effectively.
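The relationship between cache size, eviction policy, and hit ratio can be demonstrated with a small simulation. The sketch below implements a least-recently-used (LRU) cache that counts hits and misses; `LRUCache`, `replay`, and the loader function are hypothetical names for this example rather than the API of any caching product.

```python
from collections import OrderedDict


class LRUCache:
    """Tiny LRU cache that tracks its own hit and miss counts."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._data = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key, loader):
        """Return the cached value, calling loader(key) on a miss."""
        if key in self._data:
            self._data.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._data[key]
        self.misses += 1
        value = loader(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry
        return value

    @property
    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def replay(capacity, keys):
    """Replay an access pattern against a fresh cache and return its hit ratio."""
    cache = LRUCache(capacity)
    for key in keys:
        cache.get(key, loader=lambda k: f"value-for-{k}")
    return cache.hit_ratio
```

Replaying a repeating cycle of four keys shows how sharply the working set matters: with a capacity of 3, every access evicts the key that is needed next and the hit ratio is zero, while a capacity of 4 holds the whole set and only the first pass misses.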

Q 26. What is your experience with using APM tools for performance analysis?

APM (Application Performance Monitoring) tools are indispensable for performance analysis. I have extensive experience using tools like New Relic, Dynatrace, and AppDynamics. These tools provide real-time visibility into application performance, allowing for the identification and resolution of bottlenecks.

These tools offer various features, including:

- Real-time monitoring of application metrics: Response times, error rates, resource utilization (CPU, memory, I/O).

- Transaction tracing: Tracking the execution path of individual transactions to pinpoint slow components.

- Profiling: Identifying performance bottlenecks within application code or database queries.

- Alerting: Notifying teams of performance issues as they occur.

I’ve utilized these tools to identify performance bottlenecks, such as slow database queries, inefficient code, or network latency. The detailed information provided by APM tools allows for targeted optimization efforts, resulting in significant performance improvements. For example, using transaction tracing, I once identified a poorly performing API call that was causing significant delays in a critical application flow. This helped prioritize the optimization effort.
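The idea behind transaction tracing can be sketched without any APM product: wrap each step of a request in a timed span and then look for the span that dominates. The `Trace` class below is a deliberately simplified, hypothetical illustration; commercial APM agents instrument code automatically and record far more context.

```python
import time
from contextlib import contextmanager


class Trace:
    """Collects (name, duration_seconds) spans for a single transaction."""

    def __init__(self):
        self.spans = []

    @contextmanager
    def span(self, name):
        """Time the enclosed block and record it, even if it raises."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.spans.append((name, time.perf_counter() - start))

    def slowest(self):
        """Return the (name, duration_seconds) pair with the longest duration."""
        return max(self.spans, key=lambda item: item[1])
```

Wrapping the steps of a request, for example `with trace.span("db_query"): ...`, and then calling `trace.slowest()` points at the component that deserves attention first, which is the same question a transaction trace in an APM tool answers.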

Q 27. How do you ensure your load testing strategy aligns with business objectives?

Aligning load testing strategy with business objectives is paramount. Load testing shouldn’t be a standalone exercise; it must directly support business goals.

This alignment begins with understanding the business context: What are the critical success factors for the application? What are the expected user loads and usage patterns? What are the acceptable performance thresholds (e.g., response time, error rate)? These should be defined collaboratively with stakeholders, including product managers, developers, and operations teams.

For example, for an e-commerce platform, business objectives might include handling peak holiday traffic without service disruptions. This will shape the load test scenarios, focusing on simulating high concurrency and validating the system’s capacity to withstand such stress. Conversely, a mission-critical system might have stringent latency requirements, leading to a more precise focus on response time during load testing. The load testing strategy should be designed to quantitatively measure the application’s ability to meet these objectives. This means defining meaningful metrics, establishing clear pass/fail criteria, and reporting results in a business-friendly way, highlighting the impact of performance on key business indicators.

Q 28. Describe a challenging performance issue you solved and your approach to resolving it.

I once encountered a significant performance bottleneck in a high-traffic e-commerce application during a peak shopping event. The application experienced unacceptable response times, leading to customer frustration and lost sales.

My approach involved a systematic investigation:

- Monitoring: We used APM tools and system monitoring to identify the affected components and pinpoint areas with high resource utilization.

- Load Testing: We conducted targeted load tests to simulate the real-world traffic patterns and isolate the bottleneck. We discovered that a critical database query was taking an excessively long time to execute under high load.

- Database Optimization: We analyzed the problematic query using database explain plans and optimized it by adding appropriate indexes and rewriting the query for better efficiency.

- Caching: We implemented caching strategies to store frequently accessed data, reducing the load on the database.

- Scaling: We scaled up the database server’s resources to handle the increased load more effectively.

Through these steps, we significantly improved the application’s response time, ensuring a smooth user experience even during peak demand. The key was the systematic approach, using monitoring and load testing to pinpoint the problem before applying targeted solutions. Post-optimization, we conducted further load tests to validate the improvements and ensure the system’s stability under pressure.

Key Topics to Learn for Load Measurement Interview

- Understanding Load Types: Differentiate between different types of load (e.g., static, dynamic, concurrent) and their impact on system performance.

- Performance Testing Methodologies: Familiarize yourself with various performance testing methodologies like Load Testing, Stress Testing, Endurance Testing, and Spike Testing. Understand their applications and limitations.

- Load Testing Tools: Gain practical experience with popular load testing tools such as JMeter, LoadRunner, Gatling, and k6, and be ready to explain when you would choose one over another.

- Metrics and KPIs: Master key performance indicators (KPIs) such as response time, throughput, resource utilization (CPU, memory, network), and error rates. Understand how to interpret and analyze these metrics.

- Analyzing Performance Bottlenecks: Develop skills in identifying and analyzing performance bottlenecks in applications and infrastructure. Learn common bottleneck causes and debugging strategies.

- Load Testing Strategies: Understand different approaches to designing and executing effective load tests, including test planning, data generation, and result analysis.

- Scalability and Capacity Planning: Learn how load testing informs scalability and capacity planning decisions. Understand concepts like vertical and horizontal scaling.

- Reporting and Communication: Practice presenting load testing results and recommendations to both technical and non-technical audiences. Develop clear and concise communication skills.

- Cloud-Based Load Testing: Explore the use of cloud-based services for load testing and their advantages in scalability and cost-effectiveness.

Next Steps

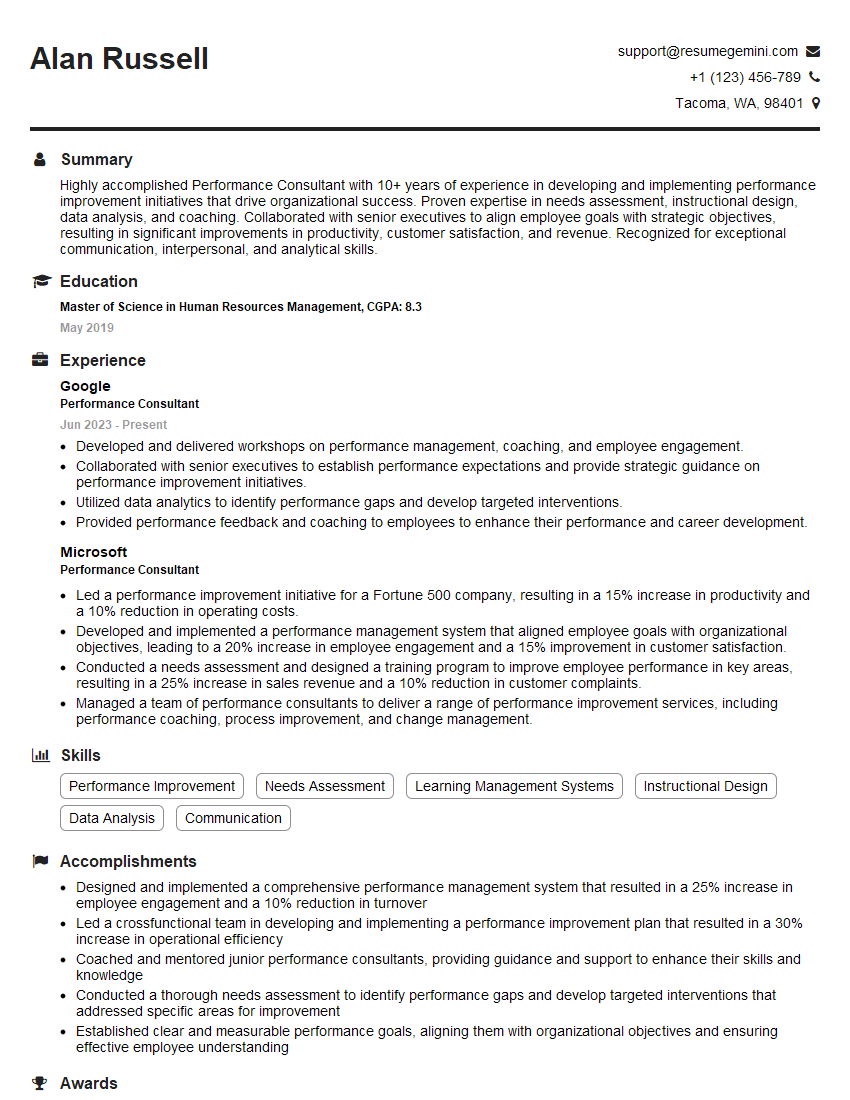

Mastering load measurement is crucial for a successful career in software engineering and related fields. A strong understanding of performance testing and optimization is highly sought after by employers. To maximize your job prospects, it’s essential to have a well-crafted, ATS-friendly resume that highlights your skills and experience. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. Examples of resumes tailored to Load Measurement are available to help you get started.
