Unlock your full potential by mastering the most common Computer Graphics and Visualization interview questions. This blog offers a deep dive into the critical topics, ensuring you’re not only prepared to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Computer Graphics and Visualization Interview

Q 1. Explain the difference between rasterization and ray tracing.

Rasterization and ray tracing are two fundamentally different approaches to rendering 3D scenes. Think of it like this: rasterization is like painting a picture, while ray tracing is like taking a photograph.

Rasterization projects each 3D primitive (usually a triangle) onto the 2D screen and then determines which pixels it covers. For each covered pixel it produces a fragment, resolves visibility (typically with a depth buffer), and assigns a color based on shading calculations. Because this maps very well onto GPU hardware, it is extremely efficient for real-time rendering and is the dominant method in games and interactive applications. Imagine a painter filling in the canvas shape by shape, pixel by pixel.

Ray tracing, on the other hand, simulates the paths of light rays. For each pixel, it traces a ray from the camera through that pixel into the scene (the reverse of the direction light actually travels) and finds the closest intersection with an object. From that hit point it can cast further rays toward the light sources (for shadows) and along reflected or refracted directions, combining the results into the pixel’s color. This produces highly realistic images with accurate shadows, reflections, and refractions, but it is computationally expensive. Real-time ray tracing of complex scenes remains challenging, although it is improving rapidly thanks to dedicated hardware.

In short: Rasterization is fast but can lack realism; ray tracing is slow but highly realistic.
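To make the contrast concrete, here is a minimal Python sketch of the core query each approach repeats for every pixel. Rasterization asks whether a projected triangle covers a pixel center (an edge-function test, assuming counter-clockwise winding), while ray tracing asks where a ray through that pixel first hits the scene (here, a single sphere). The function names are illustrative, not taken from any particular renderer.

```python
import math

def edge(ax, ay, bx, by, px, py):
    # Signed-area test: positive when P lies to the left of the edge A->B.
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)

def triangle_covers(tri, px, py):
    """Rasterization core: does a counter-clockwise 2D triangle cover (px, py)?"""
    (x0, y0), (x1, y1), (x2, y2) = tri
    return (edge(x0, y0, x1, y1, px, py) >= 0 and
            edge(x1, y1, x2, y2, px, py) >= 0 and
            edge(x2, y2, x0, y0, px, py) >= 0)

def ray_sphere(origin, direction, center, radius):
    """Ray tracing core: distance t to the nearest hit in front of the origin,
    or None on a miss. Assumes `direction` is unit length."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None                      # the ray misses the sphere
    sq = math.sqrt(disc)
    for t in (-b - sq, -b + sq):         # nearer root first
        if t > 1e-6:
            return t
    return None

# Rasterize: loop over a 10x10 grid of pixel centers and test coverage.
tri = [(1, 1), (8, 2), (3, 7)]
covered = [(x, y) for y in range(10) for x in range(10)
           if triangle_covers(tri, x + 0.5, y + 0.5)]

# Ray trace: one ray from the camera straight down -z toward a sphere.
t = ray_sphere((0, 0, 0), (0, 0, -1), (0, 0, -5), 1.0)
```

A rasterizer runs the first test per triangle over the pixels it might touch; a ray tracer runs the second per pixel over the objects the ray might hit, usually with an acceleration structure to skip most of them.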

Q 2. Describe the process of creating a realistic texture map.

Creating a realistic texture map involves several steps. First, you need a high-resolution image, ideally a photograph, of the surface you want to represent. This image forms the base of your texture.

Next, you might need to process this image. This often involves adjustments for color balance, contrast, and sharpness. You might also perform noise reduction or other filtering techniques to improve the quality. Sophisticated techniques like photogrammetry can be used to automatically create high-quality textures from multiple photographs of a real-world object.

Then, consider the texture’s purpose. For instance, a texture map for a brick wall might require additional steps to ensure the bricks are properly aligned, the mortar is accurately represented, and the texture tiles seamlessly when repeated across a surface. This often involves manually painting or editing certain elements to enhance details. Tools like Photoshop or Substance Painter are commonly used for this process.

Finally, the processed image is saved in a suitable file format: JPEG (lossy and compact), PNG (lossless, with support for an alpha channel for transparency), or DDS (DirectDraw Surface), which stores GPU block-compressed data and mipmaps and is common in game development. The choice of file format depends on the target application and the desired balance of quality and compression.

For example, creating a realistic wood texture requires capturing the grain, knots, and variations in color. This often necessitates shooting high-resolution images of various wood samples under controlled lighting conditions.

Q 3. What are the advantages and disadvantages of different shading models (e.g., Phong, Blinn-Phong, Cook-Torrance)?

Different shading models offer varying levels of realism and computational cost. Let’s compare Phong, Blinn-Phong, and Cook-Torrance.

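- Phong Shading: Strictly, Phong shading means interpolating normals across a polygon and lighting each pixel, and it is usually paired with the Phong reflection model, which sums ambient, diffuse, and specular terms. The specular term is based on the angle between the reflected light vector R and the view vector V, raised to a shininess exponent. It is cheap and avoids the Mach bands of per-vertex (Gouraud) shading, but it is not physically based, and at low shininess exponents its highlight can show a visible hard edge where the angle between R and V exceeds 90 degrees.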

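- Blinn-Phong Shading: A modification of Phong that replaces R·V with N·H, where H is the halfway vector: the normalized sum of the light and view directions. H is cheap to compute (and constant across a surface for a directional light and a distant viewer), the highlight falls off smoothly instead of being clipped, and highlight shapes at grazing angles match real materials more closely. For a similar highlight size it needs a shininess exponent roughly four times larger than Phong’s.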

- Cook-Torrance Shading: A physically based model built on microfacet theory, which treats the surface as many tiny mirror-like facets. It combines a distribution term (how the facet normals are spread, which models roughness), a Fresnel term (reflectance that increases toward grazing angles), and a geometry term (shadowing and masking between facets). The results are far more realistic, at a noticeably higher cost per pixel.

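In essence: Phong is cheap but simplistic; Blinn-Phong is a cheap, better-behaved refinement and was the long-time real-time default; Cook-Torrance costs more per pixel but is physically based and underpins the PBR materials of most modern engines. The choice depends on the desired visual fidelity and the performance constraints of the application.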
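The difference between Phong and Blinn-Phong comes down to a single dot product. Below is a minimal Python sketch of both specular terms (plus a Lambert diffuse term for reference), assuming unit-length vectors that all point away from the surface point; the helper names are illustrative, and Cook-Torrance is left out for brevity.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reflect(l, n):
    # Mirror the light direction L about the normal N: R = 2(N.L)N - L
    d = dot(n, l)
    return tuple(2 * d * nc - lc for nc, lc in zip(n, l))

def phong_specular(n, l, v, shininess):
    """Phong: max(R . V, 0)^shininess."""
    r = reflect(l, n)
    return max(dot(r, v), 0.0) ** shininess

def blinn_phong_specular(n, l, v, shininess):
    """Blinn-Phong: max(N . H, 0)^shininess, with H the normalized halfway vector."""
    h = normalize(tuple(a + b for a, b in zip(l, v)))
    return max(dot(n, h), 0.0) ** shininess

def lambert_diffuse(n, l):
    return max(dot(n, l), 0.0)

n = (0.0, 0.0, 1.0)                 # surface normal
l = normalize((1.0, 0.0, 1.0))      # light at 45 degrees
v = normalize((-1.0, 0.0, 1.0))     # viewer exactly on the mirror direction
off_axis = (0.0, 0.0, 1.0)          # viewer straight above the surface

phong_off = phong_specular(n, l, off_axis, 32)
blinn_off = blinn_phong_specular(n, l, off_axis, 32)
```

At the exact mirror direction both terms return 1. Away from it, N·H falls off more slowly than R·V (the angle between N and H is roughly half the angle between R and V), so `blinn_off` is much larger than `phong_off` at the same exponent, which is why Blinn-Phong needs a larger exponent for a highlight of similar size.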

Q 4. How does Z-buffering work and what are its limitations?

Z-buffering, or depth buffering, is a method used to solve the hidden surface problem in 3D graphics. Imagine looking at a stack of cards; you only see the card on top. Z-buffering works similarly.

It uses a buffer (an array) with one entry per pixel of the render target, where each entry stores the depth of the closest surface drawn so far at that pixel; the buffer starts out cleared to the farthest possible value. As polygons are rasterized, each fragment’s depth is compared with the stored value. If the fragment is closer (a smaller z-value under the common convention), its depth is written to the Z-buffer and its color to the color buffer, replacing the previous values. Otherwise, the fragment is hidden and discarded.

Limitations:

- Z-fighting: When two surfaces are extremely close together, their depth values might be so similar that the Z-buffer cannot accurately resolve which one is in front, leading to flickering or visual artifacts.

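- Limited Precision: The Z-buffer stores depth with a finite number of bits (commonly 24 or 32). With a perspective projection, the stored depth varies with 1/z rather than z, so most of the precision is concentrated near the near plane and little remains for distant objects. Placing the near plane very close to the camera makes this much worse and is a frequent cause of z-fighting in scenes with a large depth range.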

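- Overdraw: Any fragment that passes the depth test when it is drawn gets shaded, even if a closer surface drawn later overwrites it. When geometry arrives back-to-front, much of that shading work is wasted. Sorting opaque objects front-to-back or doing a depth-only pre-pass reduces this cost.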

Despite these limitations, Z-buffering remains a fundamental and efficient technique widely used in real-time rendering.
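Here is the depth test described above as a minimal Python sketch. It assumes the "smaller z is closer" convention and a depth buffer cleared to infinity; real GPUs do this in fixed-function hardware with configurable comparison functions. The helper names are illustrative.

```python
import math

WIDTH, HEIGHT = 4, 3

def make_buffers(width, height, clear_color=(0, 0, 0)):
    depth = [[math.inf] * width for _ in range(height)]    # "far" everywhere
    color = [[clear_color] * width for _ in range(height)]
    return depth, color

def write_fragment(depth, color, x, y, z, rgb):
    """Depth test: keep the fragment only if it is closer than what is stored."""
    if z < depth[y][x]:
        depth[y][x] = z      # remember the nearest depth seen so far
        color[y][x] = rgb    # and the color of that nearest surface
        return True
    return False             # hidden behind an earlier fragment: discard

depth, color = make_buffers(WIDTH, HEIGHT)
# Fragments may arrive in any order; the depth test resolves visibility.
fragments = [
    (1, 1, 5.0, (255, 0, 0)),    # red surface, far
    (1, 1, 2.0, (0, 0, 255)),    # blue surface, nearer: replaces red
    (1, 1, 3.0, (0, 255, 0)),    # green surface, in between: rejected
    (2, 0, 1.0, (255, 255, 0)),  # yellow surface at another pixel
]
for x, y, z, rgb in fragments:
    write_fragment(depth, color, x, y, z, rgb)
```

The red fragment was accepted and colored before the blue one replaced it; on a GPU that fragment would have been fully shaded for nothing, which is exactly the overdraw cost.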

Q 5. Explain the concept of a shader and its role in rendering.

A shader is a small program that runs on the graphics processing unit (GPU) and is responsible for various aspects of rendering, from calculating colors and lighting to applying textures and effects.

Shaders operate on individual vertices (the corners of polygons) or fragments (candidate pixels produced by rasterization), applying transformations and calculations to create the final image. Vertex shaders transform vertex positions and attributes, while fragment (pixel) shaders compute the color of each fragment; modern pipelines add further stages such as geometry, tessellation, and compute shaders. Shaders are written in specialized languages like HLSL (High-Level Shading Language) or GLSL (OpenGL Shading Language).

Role in Rendering: Shaders are essential for modern rendering techniques because they provide a highly flexible and programmable way to control the visual aspects of a scene. They allow developers to implement complex lighting models, realistic materials, and custom visual effects that would be impossible or incredibly difficult to achieve with fixed-function pipelines.

For example, a simple shader might apply a texture to a surface, while a more complex shader could approximate subsurface scattering: the way light penetrates and scatters inside a material such as skin or marble.

Q 6. Describe different interpolation techniques used in computer graphics.

Interpolation techniques are crucial for smoothly transitioning between values in computer graphics. They’re used extensively to create smooth surfaces, animations, and other visual effects.

Several common techniques exist:

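- Linear Interpolation: The simplest method, which computes a weighted average of two values: lerp(a, b, t) = a + (b - a)·t. It is computationally inexpensive, but a chain of linear segments has visible kinks at the sample points because the slope changes abruptly. Interpolating colors also needs care: blending gamma-encoded sRGB values directly gives midpoints that are too dark, so colors should be interpolated in linear space.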

- Bilinear Interpolation: Extends linear interpolation to two dimensions by interpolating along one axis and then the other. It is the standard texture filtering mode, blending the four nearest texels of a texture. It costs more than linear interpolation but produces smoother results.

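- Cubic Interpolation: Uses cubic polynomials to achieve smoother transitions than linear interpolation, since the slope can stay continuous across sample points. Common forms include cubic Hermite and Catmull-Rom curves, which pass through the data points, and bicubic filtering for images. Cubic Bézier curves are also cubic, but they pass only through their end points and use the inner points as shape handles. The extra computation buys higher visual quality.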

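- Spline Interpolation: Uses piecewise polynomial curves (splines), joined smoothly, to build long curves from many control points. Interpolating splines such as Catmull-Rom pass through every control point, while approximating splines such as B-splines are only pulled toward them. Splines are commonly used for smooth animation curves and for camera or object paths.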

The choice of interpolation technique depends on the application’s requirements for speed and quality. Simple linear interpolation might suffice for basic applications, while complex spline interpolation is often necessary for high-quality animations and modeling.
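A minimal Python sketch of three of these techniques follows: `lerp`, bilinear sampling of a small grid (as texture filtering does), and Catmull-Rom as one example of cubic interpolation that passes through its data points. The names are illustrative and edge handling is simplified to clamping.

```python
def lerp(a, b, t):
    """Linear interpolation: t = 0 gives a, t = 1 gives b."""
    return a + (b - a) * t

def bilinear(tex, u, v):
    """Sample a 2D grid of scalars at fractional texel coordinates (u, v).
    Assumes 0 <= u <= width - 1 and 0 <= v <= height - 1 (no wrapping)."""
    x0, y0 = int(u), int(v)
    x1 = min(x0 + 1, len(tex[0]) - 1)
    y1 = min(y0 + 1, len(tex) - 1)
    fx, fy = u - x0, v - y0
    top = lerp(tex[y0][x0], tex[y0][x1], fx)      # along x on row y0
    bottom = lerp(tex[y1][x0], tex[y1][x1], fx)   # along x on row y1
    return lerp(top, bottom, fy)                  # then along y

def catmull_rom(p0, p1, p2, p3, t):
    """Cubic interpolation between p1 and p2, using neighbors p0 and p3
    to set the slopes. Passes exactly through p1 (t = 0) and p2 (t = 1)."""
    t2, t3 = t * t, t * t * t
    return 0.5 * ((2 * p1) +
                  (-p0 + p2) * t +
                  (2 * p0 - 5 * p1 + 4 * p2 - p3) * t2 +
                  (-p0 + 3 * p1 - 3 * p2 + p3) * t3)

tex = [[0.0, 10.0],
       [20.0, 30.0]]
center_sample = bilinear(tex, 0.5, 0.5)   # average of all four texels
```

For evenly spaced collinear points Catmull-Rom reduces to a straight line, so its extra smoothness only shows once the data bends.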

Q 7. What are Bézier curves and how are they used in computer graphics?

Bézier curves are mathematically defined curves used extensively in computer graphics for creating smooth shapes and paths. They’re defined by a set of control points: the curve passes through the first and last points, while the intermediate points pull the curve toward them without, in general, lying on it.

How they’re used:

- Curve Design: Bézier curves are widely used for designing smooth curves in vector graphics editors, CAD software, and font design. The ability to control the curve’s shape through control points makes them highly versatile.

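- Animation: They define smooth motion paths for objects or cameras, and Bézier-shaped timing curves control how animated values ease in and out between keyframes. Moving the control points reshapes the path or the timing, producing complex, natural-looking movement.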

- Surface Modeling: A Bézier surface (patch) is the two-dimensional extension: a grid of control points (4 × 4 for a bicubic patch) blended over two parameters, u and v. Patches joined together are used to model complex 3D shapes.

Cubic Bézier curves are particularly common, defined by four control points P0 to P3. The position on the curve is computed from a parameter t ranging from 0 to 1: B(t) = (1 - t)³P0 + 3(1 - t)²t·P1 + 3(1 - t)t²·P2 + t³P3, which gives precise control over the curve’s shape.

For example, fonts define character outlines with Bézier curves: TrueType uses quadratic curves, while PostScript and CFF-based OpenType fonts use cubic ones. These smooth curves create the elegant forms we see in many typefaces.
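The Bézier formula can be evaluated in two equivalent ways, sketched here in Python: directly via the Bernstein polynomials, or with de Casteljau’s algorithm, which repeatedly interpolates linearly between neighboring control points. The control points below are arbitrary example values.

```python
from math import comb

def bezier_point(points, t):
    """Evaluate a Bézier curve of any degree in Bernstein form:
    B(t) = sum_i C(n, i) * (1 - t)^(n - i) * t^i * P_i, for t in [0, 1]."""
    n = len(points) - 1
    x = y = 0.0
    for i, (px, py) in enumerate(points):
        w = comb(n, i) * (1 - t) ** (n - i) * t ** i
        x += w * px
        y += w * py
    return (x, y)

def de_casteljau(points, t):
    """Same curve via repeated linear interpolation between neighbors."""
    pts = [tuple(p) for p in points]
    while len(pts) > 1:
        pts = [((1 - t) * ax + t * bx, (1 - t) * ay + t * by)
               for (ax, ay), (bx, by) in zip(pts, pts[1:])]
    return pts[0]

# A cubic curve: four control points P0..P3.
ctrl = [(0.0, 0.0), (1.0, 2.0), (3.0, 2.0), (4.0, 0.0)]
mid = bezier_point(ctrl, 0.5)
```

At t = 0.5 a cubic evaluates to (P0 + 3P1 + 3P2 + P3) / 8, here (2.0, 1.5). De Casteljau’s version uses only convex combinations, which makes it numerically robust, and its intermediate points can also be used to split the curve in two.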

Q 8. Explain the difference between forward and deferred rendering.

Forward and deferred rendering are two approaches to organizing lighting in a rasterizer. Think of it like painting a room: forward rendering lights each object fully as it is painted, while deferred rendering first records which surface is visible at each pixel (its position, normal, and material) and only then lights the finished picture.

Forward Rendering: In forward rendering, lighting is computed in the fragment shader while each object is drawn. For each light affecting the object, the shader computes that light’s contribution and adds it to the final color. It’s simple to implement, but the cost grows with the number of objects times the number of lights, and fragments that are later hidden by closer geometry have already been fully lit.

Deferred Rendering: Deferred rendering separates the geometry pass, which draws the scene once and stores per-pixel data such as position (or depth), normal, and material properties into a set of render targets called the G-buffer, from the lighting pass. In the lighting pass, each light is applied only to the pixels it can affect: the shader reads the G-buffer to reconstruct the visible surface at each pixel and evaluates that light. Lighting is therefore computed only for visible surfaces, and its cost scales with the number of pixels times the lights covering them rather than with scene geometry times lights. This is significantly more efficient with many light sources.

Key Differences Summarized:

- Light Calculations: Forward rendering lights every drawn fragment of every object for each light affecting it; deferred rendering lights only the final visible pixel, once for each light that covers it.

- Efficiency: Forward rendering is efficient with few lights; deferred rendering is efficient with many lights.

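- Complexity: Forward rendering is simpler to implement; deferred rendering is more complex, needs more memory and bandwidth for the G-buffer, and handles transparency and hardware MSAA poorly.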

In short: Choose forward rendering for scenes with few lights and simpler shaders, and deferred rendering for complex scenes with many lights and detailed shading effects.
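This toy Python model shows where the cost difference comes from. Forward shading lights every fragment that passes the depth test at the moment it is drawn, including ones later overwritten, while deferred shading lights only the surface left in the G-buffer. All names are hypothetical, the "G-buffer" is just a dictionary, and both versions still loop over every light per pixel; real deferred renderers additionally restrict each light to the pixels it can reach.

```python
def shade(albedo, normal, lights):
    """Toy per-pixel lighting: sum of Lambert terms from directional lights."""
    total = 0.0
    for light_dir, intensity in lights:
        n_dot_l = sum(n * d for n, d in zip(normal, light_dir))
        total += albedo * intensity * max(n_dot_l, 0.0)
    return total

def forward_render(fragments, lights):
    depth, image, evals = {}, {}, 0
    for pixel, z, albedo, normal in fragments:          # in submission order
        if z < depth.get(pixel, float("inf")):
            depth[pixel] = z
            image[pixel] = shade(albedo, normal, lights)  # lit now, even if overwritten later
            evals += len(lights)
    return image, evals

def deferred_render(fragments, lights):
    gbuffer = {}                                        # pixel -> (z, albedo, normal)
    for pixel, z, albedo, normal in fragments:          # geometry pass: no lighting
        if z < gbuffer.get(pixel, (float("inf"),))[0]:
            gbuffer[pixel] = (z, albedo, normal)
    image, evals = {}, 0
    for pixel, (_, albedo, normal) in gbuffer.items():  # lighting pass: visible surfaces only
        image[pixel] = shade(albedo, normal, lights)
        evals += len(lights)
    return image, evals

lights = [((0.0, 0.0, 1.0), 1.0), ((0.0, 1.0, 0.0), 0.5), ((1.0, 0.0, 0.0), 0.25)]
# Back-to-front submission: the far fragment at pixel (0, 0) is lit, then overwritten.
fragments = [
    ((0, 0), 9.0, 0.8, (0.0, 0.0, 1.0)),
    ((0, 0), 2.0, 0.5, (0.0, 1.0, 0.0)),
    ((1, 0), 4.0, 0.6, (1.0, 0.0, 0.0)),
]
fwd_img, fwd_evals = forward_render(fragments, lights)
def_img, def_evals = deferred_render(fragments, lights)
```

Both produce the same image, but the forward version spends 9 light evaluations against 6 for the deferred one, because it fully lit a fragment that ended up hidden. The gap widens with more lights and more overdraw.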

Q 9. What are the different types of projection matrices and when would you use each?

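Projection matrices transform 3D coordinates from camera (view) space into clip space. After the perspective divide by w, points inside the view volume land in normalized device coordinates, which the viewport transform then maps to 2D screen pixels. Several types exist, each suited to specific needs: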

- Perspective Projection: This is the most common type, simulating how we see the world. Objects farther away appear smaller. It’s defined by a field of view (FOV), aspect ratio, near and far clipping planes. The near plane defines the closest point that will be rendered, and the far plane defines the furthest.

- Orthographic Projection: This type maintains parallel lines and doesn’t have perspective distortion. Objects remain the same size regardless of distance. It’s used for technical drawings, CAD software, and top-down views in games.

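- Oblique Projection: A parallel projection in which the projection lines meet the projection plane at an angle rather than perpendicularly. Faces parallel to the plane keep their true shape, while depth is drawn receding along a slanted axis, as in cavalier and cabinet drawings. Like orthographic projection it has no perspective foreshortening, and it is used less often than the other two.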

When to use each:

- Perspective Projection: Use for most realistic 3D scenes, games, and simulations where the perspective effect is desired.

- Orthographic Projection: Use for technical drawings, architectural visualizations, or game elements such as mini-maps where a perspective-free view is needed.

- Oblique Projection: Use for specialized illustration styles, such as cabinet or cavalier drawings of architectural or engineering designs, where the front face should stay undistorted while depth is still suggested.

The choice depends on the desired visual effect and the application’s purpose. The projection matrix is crucial for rendering the 3D world correctly onto the 2D screen.
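As a rough sketch of the matrices themselves, here are OpenGL-style perspective and orthographic matrices in plain Python: row-major lists, camera looking down -z, and depth mapped to [-1, 1]. Direct3D and Vulkan use a different depth range and often different handedness, so treat these as one common convention. The oblique case is built as a cabinet-style shear followed by an orthographic matrix, which is one common way to construct it; all function names are illustrative.

```python
import math

def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective matrix (right-handed view space, NDC depth in [-1, 1])."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [[f / aspect, 0.0, 0.0, 0.0],
            [0.0, f, 0.0, 0.0],
            [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
            [0.0, 0.0, -1.0, 0.0]]

def orthographic(left, right, bottom, top, near, far):
    """OpenGL-style orthographic matrix: scale and translate the box into [-1, 1]^3."""
    return [[2.0 / (right - left), 0.0, 0.0, -(right + left) / (right - left)],
            [0.0, 2.0 / (top - bottom), 0.0, -(top + bottom) / (top - bottom)],
            [0.0, 0.0, -2.0 / (far - near), -(far + near) / (far - near)],
            [0.0, 0.0, 0.0, 1.0]]

def cabinet_shear(angle_deg, depth_scale=0.5):
    """Oblique (cabinet) shear: depth is drawn along a slanted axis at `angle_deg`,
    scaled by `depth_scale`. Apply before an orthographic matrix."""
    a = math.radians(angle_deg)
    return [[1.0, 0.0, depth_scale * math.cos(a), 0.0],
            [0.0, 1.0, depth_scale * math.sin(a), 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def project(m, point):
    """Camera-space point -> clip space -> NDC via the perspective divide by w."""
    x, y, z = point
    clip = [m[r][0] * x + m[r][1] * y + m[r][2] * z + m[r][3] for r in range(4)]
    w = clip[3]
    return tuple(c / w for c in clip[:3])

persp = perspective(90.0, 1.0, 0.1, 100.0)
ortho = orthographic(-2.0, 2.0, -2.0, 2.0, 0.1, 100.0)
oblique = matmul(ortho, cabinet_shear(45.0))
near_x = project(persp, (1.0, 0.0, -2.0))[0]
far_x = project(persp, (1.0, 0.0, -4.0))[0]
```

Moving a point from z = -2 to z = -4 halves its x coordinate under `persp` (0.5 to 0.25) but leaves it unchanged under `ortho`, which is the size-with-distance behavior described above.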

Q 10. Describe your experience with a specific 3D modeling software (e.g., Maya, Blender, 3ds Max).

I have extensive experience with Blender, an open-source 3D creation suite. I’ve used it for various projects, from modeling intricate characters to creating complex environments. My skills encompass:

- Modeling: I’m proficient in creating high-poly and low-poly models using a variety of techniques, including extrusion, sculpting, and remeshing. I’m comfortable working with different model types, like NURBS and meshes.

- Texturing: I can create and apply both procedural and image-based textures using Blender’s internal tools and external applications like Substance Painter. I understand UV unwrapping and its importance for efficient texture mapping.

- Rigging and Animation: I can create rigs for characters and objects using armature and constraints. I’ve animated characters, objects, and camera movements, understanding keyframes, curves, and various animation techniques.

- Lighting and Rendering: I have experience setting up lighting in Blender’s Cycles and Eevee render engines, using both physically based rendering (PBR) principles and stylized approaches. I’m familiar with different shaders, materials, and lighting techniques for creating realistic or stylized visuals.

- Compositing: I can combine rendered elements, adjust colors, and add post-processing effects to enhance the final output.

For example, I recently used Blender to model and animate a short film featuring a fantasy character. This involved creating a high-fidelity character model, rigging it for animation, designing environments, creating textures, lighting the scene, and finally rendering the animation sequences.

Q 11. Explain your experience with a specific rendering engine (e.g., Unreal Engine, Unity, RenderMan).

My experience primarily lies with Unreal Engine, a real-time 3D creation tool renowned for its capabilities in game development, architecture visualization, and film production. I’m proficient in:

- Blueprint Visual Scripting: I can create complex game logic and interactive systems using Unreal Engine’s visual scripting system, eliminating the need for extensive coding in many instances.

- C++ Programming: I can extend Unreal Engine’s functionality by writing custom C++ code for more performance-critical tasks and advanced features.

- Material Editing: I understand and utilize various material nodes to create realistic and stylized materials for surfaces, making use of PBR workflows and custom shader creation.

- Lighting and Post-Processing: I have experience setting up lighting scenes for different moods and atmospheres and utilizing post-processing effects to enhance the overall visual quality and cinematic look.

- Level Design: I’m familiar with creating and optimizing game levels for performance and playability, focusing on efficient resource management, collision handling, and visual appeal.

One project involved creating a virtual reality experience for architectural walkthroughs. This required optimizing the scene for VR performance, creating interactive elements, and implementing realistic lighting to showcase the building’s design effectively.

Q 12. How would you optimize a scene for real-time rendering?

Optimizing a scene for real-time rendering involves a multi-faceted approach focusing on reducing the computational load on the GPU and CPU. Here’s a breakdown of key strategies:

- Level of Detail (LOD): Use lower-polygon versions of objects as their distance from the camera grows. Far-away objects then use simpler models, reducing the number of vertices and triangles the GPU must process.

- Draw Call Optimization: Batch similar objects together to reduce the number of draw calls. This is often achieved through techniques like instancing.

- Texture Optimization: Use appropriately sized textures and utilize texture compression techniques like DXT or ASTC. Consider using texture atlases to reduce the number of texture binds.

- Shader Optimization: Optimize shader code for efficiency, avoiding unnecessary calculations and using optimized data types.

- Occlusion Culling: Use occlusion culling techniques to prevent rendering objects that are hidden behind others. This is especially crucial in complex scenes.

- Frustum Culling: Don’t render objects that fall outside the camera’s view frustum.

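- Shadow Optimization: Use efficient shadow techniques, such as cascaded shadow maps that spend resolution near the camera, lower-resolution or less frequently updated shadow maps for distant content, and baked shadows for static geometry, to limit the performance impact of shadows.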

- Light Optimization: Limit the number of lights in the scene, using techniques like light probes or lightmaps for static lighting. Utilize deferred rendering for better performance when handling many lights.

The specific optimization techniques will depend on the scene’s complexity, target platform, and performance requirements. Profiling tools are essential for identifying performance bottlenecks and guiding optimization efforts.
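Frustum culling and LOD selection are both cheap per-object tests. Here is a minimal Python sketch, assuming bounding spheres, a symmetric perspective frustum in camera space (camera at the origin looking down -z), and placeholder LOD distance thresholds; the object names are hypothetical.

```python
import math

def make_view_frustum(fov_y_deg, aspect, near, far):
    """Six inward-facing planes (normal, d) of a symmetric view frustum in camera
    space. A point p is on the inner side of a plane when dot(n, p) + d >= 0."""
    v = math.radians(fov_y_deg) / 2.0              # vertical half-angle
    h = math.atan(math.tan(v) * aspect)            # horizontal half-angle
    return [
        ((0.0, 0.0, -1.0), -near),                 # near:  z <= -near
        ((0.0, 0.0, 1.0), far),                    # far:   z >= -far
        ((math.cos(h), 0.0, -math.sin(h)), 0.0),   # left
        ((-math.cos(h), 0.0, -math.sin(h)), 0.0),  # right
        ((0.0, math.cos(v), -math.sin(v)), 0.0),   # bottom
        ((0.0, -math.cos(v), -math.sin(v)), 0.0),  # top
    ]

def sphere_visible(planes, center, radius):
    """Conservative test: cull only if the sphere lies fully outside some plane."""
    for normal, d in planes:
        if sum(n * c for n, c in zip(normal, center)) + d < -radius:
            return False
    return True

def select_lod(distance, thresholds=(10.0, 30.0, 80.0)):
    """LOD index 0 (full detail) .. len(thresholds) (coarsest), by camera distance.
    The threshold values are placeholders; real projects tune them per asset."""
    for level, limit in enumerate(thresholds):
        if distance < limit:
            return level
    return len(thresholds)

frustum = make_view_frustum(60.0, 16 / 9, 0.1, 100.0)
objects = {"tree": ((0.0, 0.0, -20.0), 2.0),      # straight ahead
           "rock": ((0.0, 0.0, 15.0), 1.0),       # behind the camera
           "tower": ((0.0, 5.0, -120.0), 10.0)}   # beyond the far plane
draw_list = [(name, select_lod(math.dist((0.0, 0.0, 0.0), c)))
             for name, (c, r) in objects.items() if sphere_visible(frustum, c, r)]
```

Only the tree survives culling, and at 20 units it is drawn with LOD 1. The sphere test is conservative: a sphere near a frustum corner can pass even though it is just outside, which costs a little extra drawing but never drops a visible object.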

Q 13. How do you handle memory management in large-scale 3D applications?

Memory management in large-scale 3D applications is critical for preventing crashes and maintaining smooth performance. Key techniques include:

- Streaming: Load and unload assets (models, textures, etc.) dynamically based on their proximity to the camera. This prevents loading everything at once and keeps memory usage under control.

- Level of Detail (LOD): Utilizing different levels of detail, as previously described, minimizes the amount of polygon data in memory.

- Memory Pooling: Allocate large blocks of memory upfront and reuse them efficiently, avoiding frequent allocations and deallocations.

- Reference Counting: Track how many parts of the application reference each asset. When the count reaches zero, the asset can be safely unloaded.

- Garbage Collection (where applicable): Utilize garbage collection if the rendering engine supports it. This helps automatically reclaim unused memory.

- Data Compression: Compress assets (textures, meshes) to reduce their memory footprint without significant quality loss.

- Data Structures: Employ efficient data structures (e.g., spatial partitioning) to quickly access and manage assets.

Effective memory management often requires a deep understanding of the application’s memory usage patterns and careful planning. Profiling tools are invaluable in identifying memory leaks and optimizing memory allocation strategies.
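Reference counting is easy to get subtly wrong, so here is a minimal Python sketch of a reference-counted asset cache. The loader and unloader callbacks and the asset name are hypothetical stand-ins for real file I/O and GPU uploads; a production version would also need thread safety and might defer unloading so that briefly unused assets are not reloaded immediately.

```python
class AssetCache:
    """Loads an asset on first acquire and unloads it when its last user releases it."""

    def __init__(self, loader, unloader):
        self._loader = loader
        self._unloader = unloader
        self._assets = {}   # name -> loaded asset
        self._refs = {}     # name -> number of live users

    def acquire(self, name):
        if name not in self._assets:
            self._assets[name] = self._loader(name)    # load on first use only
            self._refs[name] = 0
        self._refs[name] += 1
        return self._assets[name]

    def release(self, name):
        self._refs[name] -= 1
        if self._refs[name] == 0:                      # last user gone: free it
            self._unloader(name, self._assets.pop(name))
            del self._refs[name]

    def resident(self):
        return sorted(self._assets)

loads, unloads = [], []

def load_from_disk(name):
    # Hypothetical stand-in for reading a file and uploading it to the GPU.
    loads.append(name)
    return f"<data for {name}>"

def free_asset(name, data):
    # Hypothetical stand-in for releasing CPU/GPU memory.
    unloads.append(name)

cache = AssetCache(load_from_disk, free_asset)
tex_a = cache.acquire("rock_albedo.dds")   # first user: loaded
tex_b = cache.acquire("rock_albedo.dds")   # second user: cache hit, count = 2
cache.release("rock_albedo.dds")           # count = 1, stays resident
still_resident = cache.resident()
cache.release("rock_albedo.dds")           # count = 0, unloaded
```

A streaming system can sit on top of this: each nearby region acquires the assets it needs and releases them when the camera moves away, and the counts decide what may actually leave memory.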

Q 14. Explain your understanding of normal mapping and its benefits.

Normal mapping is a texture mapping technique that adds surface detail to a model without increasing the polygon count. Instead of defining detail through geometry, we use a normal map: a texture whose texels store surface normal directions, usually in tangent space and encoded into the RGB channels by remapping each component from [-1, 1] to [0, 1]. These normals are then used in the lighting calculation to simulate the effect of high-frequency details.

How it works: The normal map is sampled for each fragment, and the decoded normal (transformed from tangent space into the same space as the lights) replaces the interpolated geometric normal in the lighting calculation. This effectively tricks the lighting into treating the surface as far more detailed than the underlying geometry, giving detailed surfaces without the performance cost of a high-poly model.

Benefits:

- Increased Detail: Adds significant small-scale surface detail without adding polygons.

- Improved Visual Fidelity: Creates more realistic and visually appealing surfaces.

- Performance Gains: Significantly reduces the number of polygons needed for a given level of detail, leading to faster rendering times.

Practical Application: Normal maps are widely used in games, film, and other 3D applications to achieve high visual fidelity with efficient performance. Imagine a brick wall: instead of modeling each individual brick, we can use a low-poly wall and a normal map to simulate the bumps and indentations of the bricks, drastically reducing the polygon count. The illusion has limits, though: normal mapping changes only shading, so silhouettes and very oblique views still reveal the flat underlying geometry.
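The decode-and-light step can be sketched in Python. This assumes the common 8-bit encoding n = 2·(c / 255) - 1, an orthonormal tangent/bitangent/normal (TBN) basis, and a single directional light; tools disagree on details such as the sign of the green channel, and the texel values are made up for illustration.

```python
import math

def decode_normal(rgb):
    """Map an 8-bit RGB texel from [0, 255] back to a unit vector in [-1, 1]^3."""
    n = [2.0 * c / 255.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in n))
    return [c / length for c in n]

def tangent_to_world(n_ts, tangent, bitangent, normal):
    """Rotate a tangent-space normal into world space: x*T + y*B + z*N."""
    return [n_ts[0] * t + n_ts[1] * b + n_ts[2] * nn
            for t, b, nn in zip(tangent, bitangent, normal)]

def lambert(n, light_dir):
    return max(sum(a * b for a, b in zip(n, light_dir)), 0.0)

# A flat, upward-facing floor polygon: its geometric normal is +y everywhere.
T, B, N = [1.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]
light = [0.0, 1.0, 0.0]                     # light straight overhead

flat_texel = (128, 128, 255)                # "no bump": points along N
tilted_texel = (218, 128, 218)              # leans about 45 degrees toward +T

brightness_flat = lambert(tangent_to_world(decode_normal(flat_texel), T, B, N), light)
brightness_tilted = lambert(tangent_to_world(decode_normal(tilted_texel), T, B, N), light)
```

The polygon is perfectly flat, yet the tilted texel receives about 71% of the light (cos 45°) instead of nearly 100%. That per-texel variation in shading is all a normal map contributes.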

Q 15. What are the challenges of creating photorealistic images?

Creating photorealistic images is a complex undertaking that pushes the boundaries of computer graphics. The challenge lies in accurately simulating the intricate interplay of light, shadow, material properties, and atmospheric effects that define a real-world scene. Think about the subtle variations in color and texture on a piece of fruit, the way light refracts through water, or the delicate scattering of light in a foggy forest – replicating these details requires a deep understanding of physics and advanced rendering techniques.

- Subsurface Scattering: Accurately modeling how light penetrates and scatters beneath the surface of translucent materials like skin or leaves is computationally expensive but crucial for realism. Approximations exist, but achieving perfect accuracy is a persistent challenge.

- Global Illumination: This refers to the complex interaction of light bouncing off multiple surfaces. While techniques like path tracing aim to simulate this realistically, rendering times can be extremely long for high-resolution images. Finding the right balance between realism and render time is a key consideration.

- Micro-details and Texture: The small details, like pores in skin or the grain in wood, significantly impact realism. Creating and efficiently rendering these details requires high-resolution textures and efficient techniques like normal mapping and displacement mapping. However, managing the enormous data associated with extremely high resolution is still a challenge.

- Atmospheric Effects: Factors like fog, haze, and scattering of light in the atmosphere significantly influence the overall appearance of a scene. Accurately representing these effects requires advanced techniques and a good understanding of atmospheric physics.

In essence, the challenge is less about individual techniques and more about the comprehensive and accurate simulation of the whole physical process of light interacting with the scene.

Q 16. Discuss your experience with different animation techniques (e.g., keyframing, motion capture).

My experience spans a range of animation techniques, from traditional keyframing to cutting-edge motion capture. Keyframing, the foundation of animation, involves manually setting key poses at specific points in time, with the computer interpolating the in-between frames. This allows for fine-grained control over movement and expression, but it’s time-consuming and requires a strong artistic sense. I’ve worked extensively with this method, animating everything from subtle facial expressions to full performances on complex character rigs.

Motion capture, on the other hand, captures real-world movement using specialized sensors or cameras. This approach provides realistic movement but requires cleanup and often retargeting to fit character rigs. For instance, I worked on a project where we used motion capture data for a complex fight scene, then refined it in post-production to add more stylized movements and emotion to the characters’ performances. I’m familiar with both optical and inertial motion capture systems and their respective advantages and limitations. Often, a hybrid approach combining keyframing and motion capture gives the best results.
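Under the hood, interpolating between key poses is a lookup in a sorted list of keys followed by interpolation. Here is a minimal Python sketch with linear in-betweens; animation packages usually default to smoother spline or Bézier curves, and the track and its values are hypothetical.

```python
from bisect import bisect_right

def sample_track(keys, time):
    """Evaluate a keyframed value at `time`.
    keys: (time, value) pairs sorted by time. Between keys the value is linearly
    interpolated (the in-betweens); outside the keyed range it is held constant."""
    times = [t for t, _ in keys]
    if time <= times[0]:
        return keys[0][1]
    if time >= times[-1]:
        return keys[-1][1]
    i = bisect_right(times, time)          # keys[i-1].time <= time < keys[i].time
    (t0, v0), (t1, v1) = keys[i - 1], keys[i]
    u = (time - t0) / (t1 - t0)            # normalized position between the two keys
    return v0 + (v1 - v0) * u

# Hypothetical elbow-angle track in degrees, keyed at frames 0, 12 and 24.
elbow = [(0, 0.0), (12, 90.0), (24, 45.0)]
frames = [sample_track(elbow, f) for f in range(0, 25, 6)]
```

Sampling every sixth frame gives 0, 45, 90, 67.5, and 45 degrees. Swapping the linear step for a spline is what produces the ease-in and ease-out animators usually want.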

Q 17. Describe your experience with lighting techniques (e.g., global illumination, ambient occlusion).

My experience with lighting techniques encompasses a wide spectrum, from simple directional lights to sophisticated global illumination methods. Global illumination, in particular, has always been a passion of mine. I understand the different techniques for its approximation, such as photon mapping and radiosity. These techniques allow for realistic light bounces and indirect lighting, creating a sense of depth and realism that isn’t achievable with simple lighting models. For instance, I remember working on a project that required extremely realistic lighting in a cathedral; global illumination was crucial in capturing the complex light interactions from stained glass windows across the entire scene.

Ambient occlusion is another technique I use frequently to enhance the realism of my scenes. It approximates how much ambient light reaches each point by measuring how much of the surrounding hemisphere is blocked by nearby geometry, which darkens creases, corners, and contact areas. The effect is subtle but powerful in conveying the shape and volume of objects. I often combine global illumination and ambient occlusion to achieve a realistic and visually appealing final result. I also have experience with image-based lighting, using HDR images as environment maps to capture real-world lighting conditions, as well as physically based rendering (PBR), which models how materials interact with light in a physically plausible way.
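Offline renderers often estimate ambient occlusion by shooting random rays over the hemisphere above a point and counting how many escape; real-time engines usually approximate the same quantity in screen space. Here is a minimal Python Monte Carlo sketch, assuming an upward-facing (+y) surface, sphere occluders, cosine-weighted sampling, and an arbitrary occlusion radius `max_dist`.

```python
import math
import random

def ray_hits_sphere(origin, direction, center, radius, max_dist):
    # `direction` is unit length; solve |o + t*d - c|^2 = r^2 for a hit with 0 < t < max_dist.
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return False
    sq = math.sqrt(disc)
    return any(1e-6 < t < max_dist for t in (-b - sq, -b + sq))

def ambient_occlusion(point, spheres, samples=512, max_dist=5.0, seed=1):
    """Fraction of cosine-weighted hemisphere rays (about +y) that escape within
    `max_dist`: 1.0 means fully open, 0.0 means fully occluded."""
    rng = random.Random(seed)
    unoccluded = 0
    for _ in range(samples):
        r1, r2 = rng.random(), rng.random()
        phi, r = 2.0 * math.pi * r1, math.sqrt(r2)
        d = (r * math.cos(phi), math.sqrt(1.0 - r2), r * math.sin(phi))  # unit length
        if not any(ray_hits_sphere(point, d, c, rad, max_dist) for c, rad in spheres):
            unoccluded += 1
    return unoccluded / samples

p = (0.0, 0.0, 0.0)
open_ao = ambient_occlusion(p, [])
under_ball = ambient_occlusion(p, [((0.0, 1.2, 0.0), 1.0)])   # sphere just above p
```

With no occluders every ray escapes and the value is exactly 1.0. A sphere hovering just above the point blocks about 70% of the cosine-weighted hemisphere, so the estimate lands near 0.3, and more samples reduce its noise.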

Q 18. How do you approach creating realistic water or fire effects?

Creating realistic water and fire effects relies heavily on particle systems and fluid simulation techniques. For water, I often use metaballs or signed distance fields to represent the liquid surface (for example, reconstructing it from simulated particles), combined with particle systems for splashes, foam, and ripples. Realistic water simulation often involves modeling physical properties such as viscosity, surface tension, and buoyancy, which requires sophisticated algorithms that often come with a high computational cost. I’ve experimented with both procedural and physically based approaches, selecting the method most appropriate for the project’s scope and render-time requirements. For a quick visual effect, a procedural method might suffice; for highly accurate simulations, however, a physically based approach is usually preferable.

Fire, similarly, is commonly created with particle systems. Each particle represents a burning ember or a puff of smoke, with its properties (size, color, velocity) changing over time to simulate the dynamics of combustion. Advanced techniques also model the effects of wind, heat dissipation, and smoke interaction, creating complex visual effects. Understanding and simulating the thermodynamics of fire is challenging, but it is crucial for achieving a convincing level of realism.
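A metaball surface is just an implicit function summed over particles. Here is a minimal Python sketch, assuming a simple inverse-square falloff per ball and an iso-threshold of 1.0. Real implementations often use smoother falloffs with a finite radius, then extract the surface with marching cubes or render it by ray marching.

```python
def metaball_field(point, balls):
    """Sum of inverse-square falloffs, one per ball: f = sum(r^2 / d^2)."""
    total = 0.0
    for center, radius in balls:
        d2 = sum((p - c) ** 2 for p, c in zip(point, center))
        total += radius * radius / max(d2, 1e-12)   # avoid dividing by zero at a center
    return total

def inside(point, balls, threshold=1.0):
    """A point is inside the blobby surface where the field reaches the threshold."""
    return metaball_field(point, balls) >= threshold

# Two particles (for example, from a fluid simulation) 2.4 units apart.
drops = [((0.0, 0.0, 0.0), 1.0), ((2.4, 0.0, 0.0), 1.0)]
midpoint = (1.2, 0.0, 0.0)
```

Neither drop alone reaches the midpoint between them (its field there is about 0.69), but their fields add up to about 1.39, so the two drops merge into one continuous surface. This is how nearby fluid particles read as a single body of liquid.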

Q 19. Explain your experience with particle systems.

Particle systems are an indispensable tool in my workflow, enabling me to create a wide range of effects, from simple sparks and dust to complex simulations of liquids and explosions. My experience includes building custom particle systems from scratch as well as using pre-built engines, allowing me to tailor the performance to meet the project’s requirements. I understand the importance of efficient particle management, including techniques like spatial partitioning to handle large numbers of particles without compromising performance. For example, in a recent project simulating a crowd of people, efficient particle management was key to achieving a realistic density of individuals while still ensuring acceptable frame rates.

I’m also familiar with various particle behaviors and forces, and I can create realistic particle motion that reflects real-world phenomena such as gravity, wind, and collisions. Furthermore, I can use different rendering techniques, such as textured sprites or custom shaders, to add visual detail to the particles.
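The core of a particle system is a small update loop. Here is a minimal Python sketch, assuming explicit Euler integration, wind modeled as a constant acceleration, and a flat ground plane at y = 0. The emission ranges and bounce factor are arbitrary example values.

```python
import random
from dataclasses import dataclass

GRAVITY = (0.0, -9.81, 0.0)

@dataclass
class Particle:
    pos: list
    vel: list
    age: float = 0.0
    lifetime: float = 2.0

def emit(rng, origin, count):
    """Spawn particles with slightly randomized upward velocities and lifetimes."""
    return [Particle(pos=list(origin),
                     vel=[rng.uniform(-1, 1), rng.uniform(4, 6), rng.uniform(-1, 1)],
                     lifetime=rng.uniform(1.0, 2.0))
            for _ in range(count)]

def step(particles, dt, wind=(0.0, 0.0, 0.0), ground_y=0.0, bounce=0.5):
    """Advance one frame with explicit Euler integration; returns the survivors."""
    alive = []
    for p in particles:
        p.age += dt
        if p.age >= p.lifetime:
            continue                                  # expired: drop it
        for i in range(3):
            p.vel[i] += (GRAVITY[i] + wind[i]) * dt   # forces -> velocity
            p.pos[i] += p.vel[i] * dt                 # velocity -> position
        if p.pos[1] < ground_y:                       # simple ground collision
            p.pos[1] = ground_y
            p.vel[1] = -p.vel[1] * bounce
        alive.append(p)
    return alive

rng = random.Random(7)
particles = emit(rng, (0.0, 0.0, 0.0), 100)
for _ in range(30):                                   # half a second at 60 fps
    particles = step(particles, 1.0 / 60.0, wind=(2.0, 0.0, 0.0))
```

After half a second every particle is still alive, above the ground, and drifting downwind. Engines typically run the same loop on the GPU over flat arrays rather than per-object records, which is what makes very large particle counts affordable.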

Q 20. What are your preferred methods for creating believable characters?

Creating believable characters requires a multi-faceted approach. I believe in starting with a strong concept and design, focusing on anatomy, proportion, and personality. I’ll usually begin with concept art to establish the visual direction and character design details. Then, I focus on creating a realistic and expressive 3D model. I use advanced techniques such as high-polygon modeling and sculpting to capture fine details. Subdivision surface modeling often proves useful in capturing the nuances of human anatomy while allowing for efficient rendering.

Next, I focus on texturing and rigging. High-quality textures, using techniques like normal and displacement mapping, are essential for realism. The rigging process is vital for enabling realistic movement and facial expressions: joint placement, skinning, and weight painting determine how the character’s skin and muscles deform. Finally, animation brings the character to life, combining techniques like keyframing and motion capture for fluid and expressive movements.
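Skinning is what turns painted weights into deformation. Here is a minimal 2D Python sketch of linear blend skinning, the most common method: each vertex is moved by every bone that influences it, and the results are blended by the vertex’s weights. The two-bone arm and its values are hypothetical.

```python
import math

def rotation_about(pivot, angle_deg):
    """Return a 2D transform (as a function) that rotates points around `pivot`."""
    a = math.radians(angle_deg)
    ca, sa = math.cos(a), math.sin(a)
    px, py = pivot
    def apply(p):
        x, y = p[0] - px, p[1] - py
        return (px + ca * x - sa * y, py + sa * x + ca * y)
    return apply

def skin_vertex(vertex, influences):
    """Linear blend skinning: weighted sum of the vertex moved by each bone.
    `influences` is a list of (bone_transform, weight) with weights summing to 1,
    which is what normalized weight painting produces."""
    x = y = 0.0
    for transform, weight in influences:
        tx, ty = transform(vertex)
        x += weight * tx
        y += weight * ty
    return (x, y)

# Hypothetical two-bone arm along +x: shoulder at (0, 0), elbow at (2, 0).
upper_arm = rotation_about((0.0, 0.0), 0.0)    # upper arm stays put
forearm = rotation_about((2.0, 0.0), 90.0)     # forearm bends 90 degrees at the elbow

hand = skin_vertex((3.0, 0.0), [(forearm, 1.0)])                         # follows forearm
elbow_skin = skin_vertex((2.0, 0.5), [(upper_arm, 0.5), (forearm, 0.5)])  # blended
```

The blended elbow vertex lands halfway between the positions the two bones would give it, which smooths the joint. At strong bends or twists the same averaging causes the well-known volume loss of linear blend skinning, which dual-quaternion skinning was designed to reduce.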

Beyond technical skill, understanding human anatomy and behavior is crucial. Observing real people and understanding their movement, facial expressions, and nuances is what separates a merely adequate character from a believable one. This knowledge is essential for generating convincing animations and overall performances.

Q 21. Discuss your understanding of color spaces and color management.

Color spaces and color management are fundamental aspects of computer graphics. A thorough understanding is crucial for ensuring that colors are displayed accurately across different devices and workflows. A color space is a mathematical model that describes a range of colors. Common examples include sRGB (the standard for the web and most consumer displays) and Adobe RGB (a wider-gamut space common in photography and print-oriented workflows). Color management involves converting colors between different color spaces to ensure consistency. I’m proficient in using ICC (International Color Consortium) profiles to manage color accurately throughout the entire pipeline, from capture (e.g., using a calibrated camera) to display (e.g., a calibrated monitor).

Ignoring color management can lead to significant discrepancies in how colors are perceived across devices. For instance, a color that looks perfect on a calibrated monitor might appear washed out or oversaturated on an uncalibrated screen or in print. Therefore, I always ensure that color profiles are correctly embedded and that color conversions are handled appropriately using color management systems (CMS).

My experience encompasses working with different color spaces and adjusting color settings for displays and printers to achieve optimal color accuracy. Gamma correction and linear workflows are also central to color management: lighting and blending calculations should be performed on linear-light values, with the display’s transfer function applied only when the final image is encoded for output. Getting this right is what keeps color consistent across platforms and technologies.
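The linear-workflow point is easiest to see with a 50/50 blend of black and white. The sketch below uses the standard piecewise sRGB transfer functions and compares mixing the encoded values directly with mixing in linear light; the function names are illustrative.

```python
def srgb_to_linear(v):
    """Decode an sRGB-encoded channel value in [0, 1] to linear light."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(v):
    """Encode a linear-light value in [0, 1] with the sRGB transfer function."""
    if v <= 0.0031308:
        return v * 12.92
    return 1.055 * v ** (1 / 2.4) - 0.055


def blend_naive(a, b, t):
    """Mix the encoded values directly (what a non-linear workflow does)."""
    return (1 - t) * a + t * b


def blend_linear(a, b, t):
    """Decode to linear light, mix there, then re-encode for display."""
    mixed = (1 - t) * srgb_to_linear(a) + t * srgb_to_linear(b)
    return linear_to_srgb(mixed)
```

`blend_linear(0.0, 1.0, 0.5)` returns about 0.735, whereas `blend_naive` returns 0.5, a code value that displays at only about 21% of white's luminance. The same reasoning applies to summing lights and filtering textures.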

Q 22. Explain your experience with version control systems (e.g., Git).

Version control systems like Git are indispensable for managing code and assets in collaborative projects. Think of Git as a sophisticated ‘undo’ button for your entire project: it lets you track changes, revert to previous versions, and collaborate smoothly with others. I’ve used Git extensively for years, both on personal projects and in large team environments. My experience encompasses branching strategies (like Gitflow), merging, resolving conflicts, and using remote repositories like GitHub and GitLab. For instance, during the development of a real-time rendering system, Git enabled us to manage concurrent development of different features by multiple team members, merging their contributions without overwriting each other’s work. I’m proficient with Git commands for committing, pushing, pulling, rebasing, and cherry-picking. This allows for a robust and efficient workflow that prevents data loss and supports collaborative development.

Q 23. Describe your experience with collaborative workflows in a CG team.

Collaborative workflows in CG are crucial for success. In my experience, we typically utilize a combination of tools and processes. We use a project management system (like Jira or Trello) to define tasks, track progress, and manage deadlines. For asset management, we often rely on centralized repositories (like Perforce) and production-tracking tools (like Shotgun) to keep track of models, textures, and animations, ensuring everyone works with the latest approved versions. Communication is key, and we regularly use tools like Slack or Discord for quick updates and discussions. A typical workflow involves artists submitting their work for review, which is then marked up and iterated on until approval. Version control is vital here, allowing us to review specific revisions and revert if necessary. For example, on a recent animation project, we used a pipeline that included automated asset building and deployment processes, ensuring consistency and efficient collaboration across modeling, texturing, rigging, and animation teams.

Q 24. What is your experience with debugging graphics-related issues?

Debugging graphics issues requires a methodical approach combining technical skills and problem-solving. I systematically isolate the problem, usually starting by checking the rendering pipeline for errors: shaders, vertex data, and texture bindings. Visual debugging tools let me examine the rendering process step by step; RenderDoc is invaluable here, allowing me to capture a frame, inspect framebuffers, and identify discrepancies between expected and actual output. If the issue involves performance, profiling tools (like NVIDIA Nsight or Intel VTune) can pinpoint bottlenecks. For example, on a project involving physically based rendering, I used profiling tools to trace a performance bottleneck to inefficient shader code, then optimized that code, which significantly improved performance. Knowing the specifics of the graphics API (OpenGL, DirectX, Vulkan) is crucial, and I rely on their debugging facilities, such as OpenGL debug output, the Direct3D debug layer, and the Vulkan validation layers, to examine rendering state and catch errors early. This combination of practical debugging skills, understanding of the underlying technology, and the right tools makes me effective at resolving graphics-related problems.

Q 25. Explain your understanding of different image file formats and their uses.

Understanding image file formats is essential in computer graphics. Each format offers a different balance of compression, color depth, and features. For example, .jpg (JPEG) uses lossy compression, which suits photographs where some data loss is acceptable in exchange for smaller files. .png (Portable Network Graphics) uses lossless compression and supports an alpha channel; it preserves every pixel exactly, usually at the cost of larger files for photographic content, which makes it a good fit for graphics with sharp edges like logos or UI elements. .tiff (Tagged Image File Format) is a versatile format often used for high-quality images, with support for various color spaces and compression options. .exr (OpenEXR) is a high-dynamic-range (HDR) format widely used in professional CG pipelines; it stores floating-point channel data, so it can hold a much wider range of brightness levels than 8-bit formats, which makes it ideal for rendering and compositing. The choice of format depends on the project’s needs, balancing file size, quality, and compatibility.
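PNG's lossless behavior comes from compressing filtered scanlines with DEFLATE inside a zlib stream, which reproduces every byte exactly on decode. The minimal encoder below is an illustration only: it writes 8-bit RGB without alpha and uses filter type 0 on every row, whereas real encoders choose a filter per scanline for better compression. The function names are hypothetical.

```python
import struct
import zlib


def png_chunk(tag: bytes, data: bytes) -> bytes:
    """A PNG chunk: length, type, data, then CRC-32 over type + data."""
    crc = zlib.crc32(tag + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)


def encode_png_rgb8(width, height, pixels):
    """pixels: list of rows, each a list of (r, g, b) tuples with values 0-255."""
    raw = bytearray()
    for row in pixels:
        raw.append(0)  # filter type 0 (None) at the start of every scanline
        for r, g, b in row:
            raw += bytes((r, g, b))
    # IHDR: width, height, bit depth 8, color type 2 (truecolor RGB),
    # compression method 0, filter method 0, no interlacing.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 2, 0, 0, 0)
    return (b"\x89PNG\r\n\x1a\n"
            + png_chunk(b"IHDR", ihdr)
            + png_chunk(b"IDAT", zlib.compress(bytes(raw), 9))  # lossless DEFLATE
            + png_chunk(b"IEND", b""))
```

Writing the result with `open("out.png", "wb").write(encode_png_rgb8(2, 2, img))` should produce a file that standard image viewers open, and decompressing its IDAT data gives back the exact pixel bytes, which is what "lossless" means in practice.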

Q 26. How familiar are you with GPU programming (e.g., CUDA, OpenCL)?

I have significant experience with GPU programming using CUDA and OpenCL. CUDA is NVIDIA’s parallel computing platform and programming model, allowing me to leverage the massive parallelism of NVIDIA GPUs for tasks like ray tracing, physics simulations, and image processing. OpenCL is an open standard for heterogeneous computing, enabling cross-platform development across various GPU and CPU architectures. I’ve used both to accelerate computationally intensive tasks. For instance, I developed a CUDA-based ray tracer that significantly outperformed its CPU-only counterpart, rendering complex scenes in a fraction of the time. Understanding memory management, parallel algorithms, and optimization techniques for GPU architectures is crucial, and I’m comfortable writing, debugging, and optimizing kernels for both CUDA and OpenCL. This allows me to create efficient and visually stunning applications that would be impractical without GPU acceleration.
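The core of CUDA's programming model is that every thread derives a global index from its block and thread IDs and then works on one element. The Python sketch below only emulates that indexing sequentially to show the pattern, including the bounds check for the last, partially filled block. In real CUDA the kernel would be a `__global__` function launched as `kernel<<<blocks, threadsPerBlock>>>(...)`, with `blockIdx.x`, `blockDim.x`, and `threadIdx.x` as built-in variables; SAXPY and the helper names here are illustrative choices.

```python
def saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y, out):
    """Body of one GPU thread, written the way a CUDA kernel body would be."""
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < n:  # the last block may contain threads past the end of the data
        out[i] = a * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Sequential stand-in for kernel<<<grid_dim, block_dim>>>(args...).

    On a GPU every (block, thread) pair runs in parallel; running them in order
    here is only equivalent because each thread writes a distinct element.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def saxpy(a, x, y, threads_per_block=256):
    """Compute a * x + y element-wise using the emulated launch."""
    n = len(x)
    out = [0.0] * n
    blocks = (n + threads_per_block - 1) // threads_per_block  # ceil(n / block)
    launch(saxpy_kernel, blocks, threads_per_block, n, a, x, y, out)
    return out
```

The ceiling division for the block count plus the `i < n` guard is the standard way to cover an arbitrary problem size with fixed-size blocks.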

Q 27. What are some common performance bottlenecks in computer graphics and how do you address them?

Performance bottlenecks in computer graphics often stem from various sources. Fill rate limitations can occur when the GPU struggles to write pixels fast enough, leading to slow frame rates. Vertex processing bottlenecks can arise from complex geometry or inefficient vertex shaders. Memory bandwidth limitations restrict the speed at which data is transferred between the GPU’s memory and processing units. Shader performance can be hampered by poorly written shaders or excessive calculations. Addressing these bottlenecks requires a combination of profiling, code optimization, and algorithmic changes. For example, I once optimized a game by using level of detail (LOD) techniques to reduce the polygon count of distant objects, resolving a vertex processing bottleneck. Other strategies include using more efficient algorithms, optimizing shader code, and reducing texture resolutions where appropriate. Profiling tools are crucial in identifying the root cause of performance issues, enabling targeted optimization efforts.
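The LOD technique mentioned above usually picks a mesh from how large an object appears on screen rather than from raw distance alone. A minimal sketch, assuming a perspective camera with a known vertical field of view, a bounding-sphere radius per object, and hypothetical pixel thresholds that a project would tune:

```python
import math


def projected_radius_px(radius, distance, fov_y_rad, screen_height_px):
    """Approximate on-screen radius, in pixels, of a bounding sphere."""
    if distance <= radius:
        return float("inf")  # camera is inside or touching the sphere
    return radius / (distance * math.tan(fov_y_rad / 2)) * (screen_height_px / 2)


def select_lod(radius, distance, fov_y_rad, screen_height_px,
               thresholds_px=(200.0, 80.0, 30.0)):
    """Return 0 for the most detailed mesh and higher indices for coarser ones.

    thresholds_px are hypothetical tuning values: an object whose projected
    radius is at least thresholds_px[k] pixels uses LOD k.
    """
    size = projected_radius_px(radius, distance, fov_y_rad, screen_height_px)
    for lod, min_size in enumerate(thresholds_px):
        if size >= min_size:
            return lod
    return len(thresholds_px)  # coarsest level
```

With a 60° vertical field of view on a 1080-pixel-tall screen, a unit-radius object uses LOD 0 at distance 2, LOD 1 at distance 10, and LOD 2 at distance 20. Basing the choice on screen size means the same thresholds keep working when the resolution or field of view changes.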

Q 28. Describe your experience with virtual and augmented reality technologies.

I’ve worked with both virtual reality (VR) and augmented reality (AR) technologies and understand the unique challenges and opportunities they present. VR focuses on creating immersive, fully simulated environments, while AR overlays digital information onto the real world. My experience includes developing VR applications using Unity and Unreal Engine, leveraging their VR toolkits for interaction and rendering optimization. In AR, I’ve explored ARKit and ARCore for mobile AR applications. For instance, I developed a VR architectural walkthrough application and optimized its rendering for smooth performance. Understanding the constraints of VR/AR headsets, such as strict frame-rate requirements and the risk of motion sickness, is critical. Techniques like spatial audio and efficient rendering are key to creating comfortable and engaging experiences. The choice between VR and AR depends on the desired level of immersion and the application’s purpose.
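Frame-rate requirements translate directly into a per-frame time budget, which is the number VR performance work is measured against. A small sketch of the arithmetic; the refresh rates and frame times are example values, and treating every over-budget frame as a missed frame is a simplification.

```python
def frame_budget_ms(refresh_hz):
    """Time available to produce one frame at a given display refresh rate."""
    return 1000.0 / refresh_hz


def over_budget_frames(frame_times_ms, refresh_hz):
    """Count frames whose measured render time exceeded the per-frame budget."""
    budget = frame_budget_ms(refresh_hz)
    return sum(1 for t in frame_times_ms if t > budget)
```

At 90 Hz the budget is about 11.1 ms per frame, and both eye views have to be rendered within it.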

Key Topics to Learn for Computer Graphics and Visualization Interview

- 3D Transformations: Understanding translation, rotation, scaling, and their matrix representations is fundamental. Practical applications include modeling and animation in game development and virtual reality.

- Rendering Techniques: Explore rasterization, ray tracing, and path tracing. Be prepared to discuss their strengths, weaknesses, and real-world applications in film, architecture visualization, and scientific visualization.

- Shading and Lighting Models: Master the concepts of diffuse, specular, and ambient lighting. Understand different shading techniques like Phong and Blinn-Phong shading and their impact on realism.

- Texture Mapping and Image Processing: Discuss various texture mapping techniques (e.g., bump mapping, normal mapping) and their applications in creating detailed and realistic surfaces. Familiarity with image processing techniques is beneficial.

- Geometric Modeling: Understand different representations of 3D models (e.g., polygon meshes, NURBS surfaces) and their advantages and disadvantages. Be prepared to discuss algorithms for mesh simplification and manipulation.

- Data Visualization Techniques: Explore different methods for visualizing large datasets, including volume rendering, scatter plots, and heatmaps. Discuss the principles of effective data visualization and how to choose appropriate techniques for different datasets.

- Shader Programming (GLSL, HLSL): Understanding shader programming is crucial for advanced graphics work. Be ready to discuss your experience with writing shaders for various effects.

- Computer Graphics Pipelines: A strong grasp of the graphics pipeline stages (vertex processing, rasterization, pixel processing) is essential for efficient and optimized graphics development.

- Real-time Rendering Optimizations: Discuss techniques for optimizing performance in real-time applications, such as level of detail (LOD) and frustum culling.

- Advanced Topics (Depending on experience): Explore areas like physically based rendering (PBR), global illumination techniques, or advanced animation techniques (e.g., skeletal animation, motion capture).
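The first topic, composing transformations as matrices, is easy to check by hand. A minimal sketch, assuming column vectors (the OpenGL/GLSL convention) and 4x4 matrices stored as Python lists of rows; codebases that use row vectors, such as classic Direct3D math libraries, reverse the multiplication order. The helper names are illustrative.

```python
import math


def matmul(a, b):
    """Multiply two 4x4 matrices stored as lists of rows."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)]
            for r in range(4)]


def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def scaling(sx, sy, sz):
    return [[sx, 0, 0, 0], [0, sy, 0, 0], [0, 0, sz, 0], [0, 0, 0, 1]]


def rotation_z(angle_rad):
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]


def apply(m, point):
    """Transform a 3D point using homogeneous coordinates (w = 1)."""
    v = (*point, 1.0)
    out = [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]
    return (out[0] / out[3], out[1] / out[3], out[2] / out[3])


# Column-vector convention: M = T * R * S scales first, then rotates, then translates.
model = matmul(translation(5, 0, 0), matmul(rotation_z(math.pi / 2), scaling(2, 2, 2)))
```

Applying `model` to (1, 0, 0) gives (5, 2, 0): the point is scaled to (2, 0, 0), rotated to (0, 2, 0), then moved. Multiplying the same three matrices in the opposite order sends it to (0, 12, 0) instead, which is why the order of transformations matters.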

Next Steps

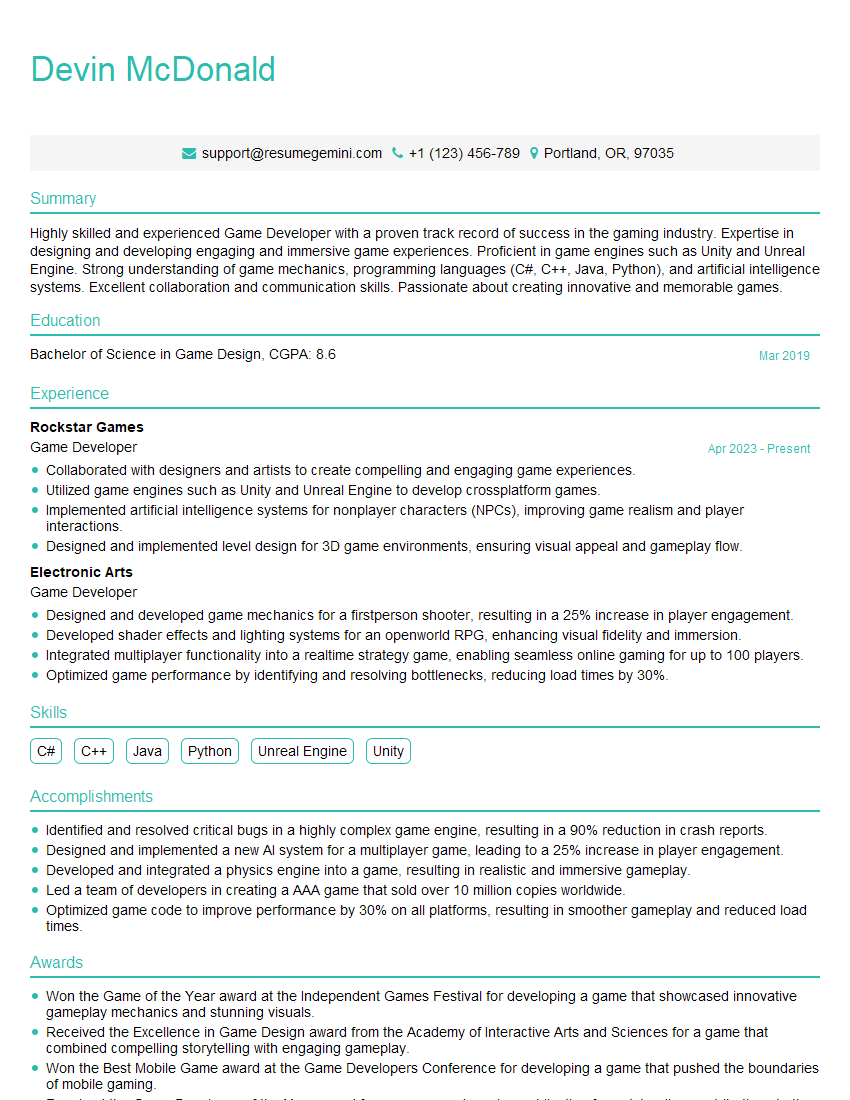

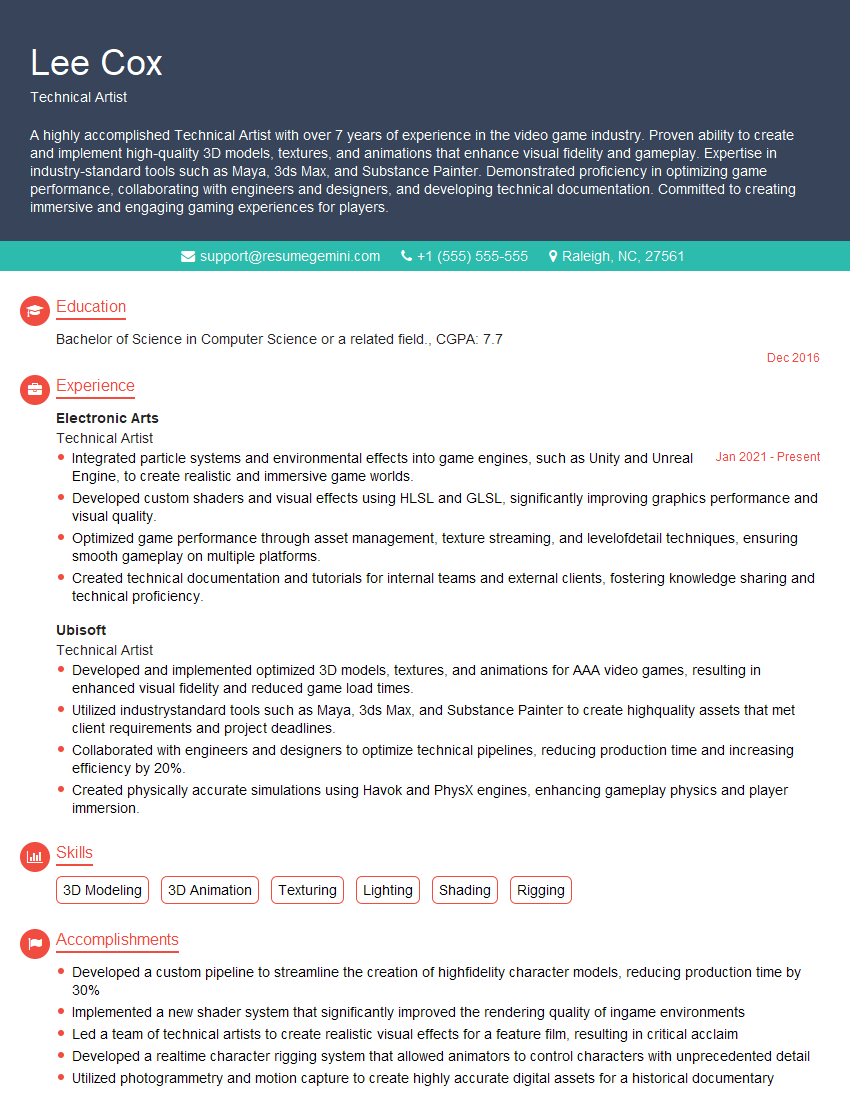

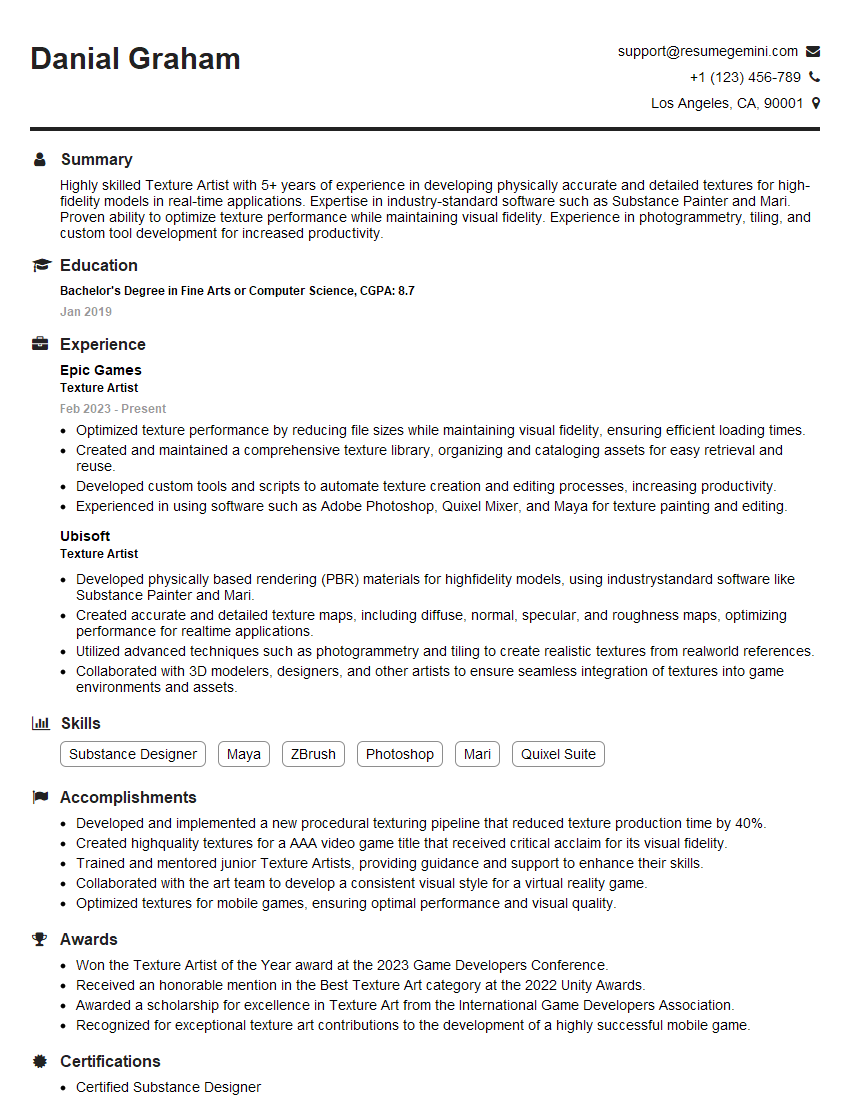

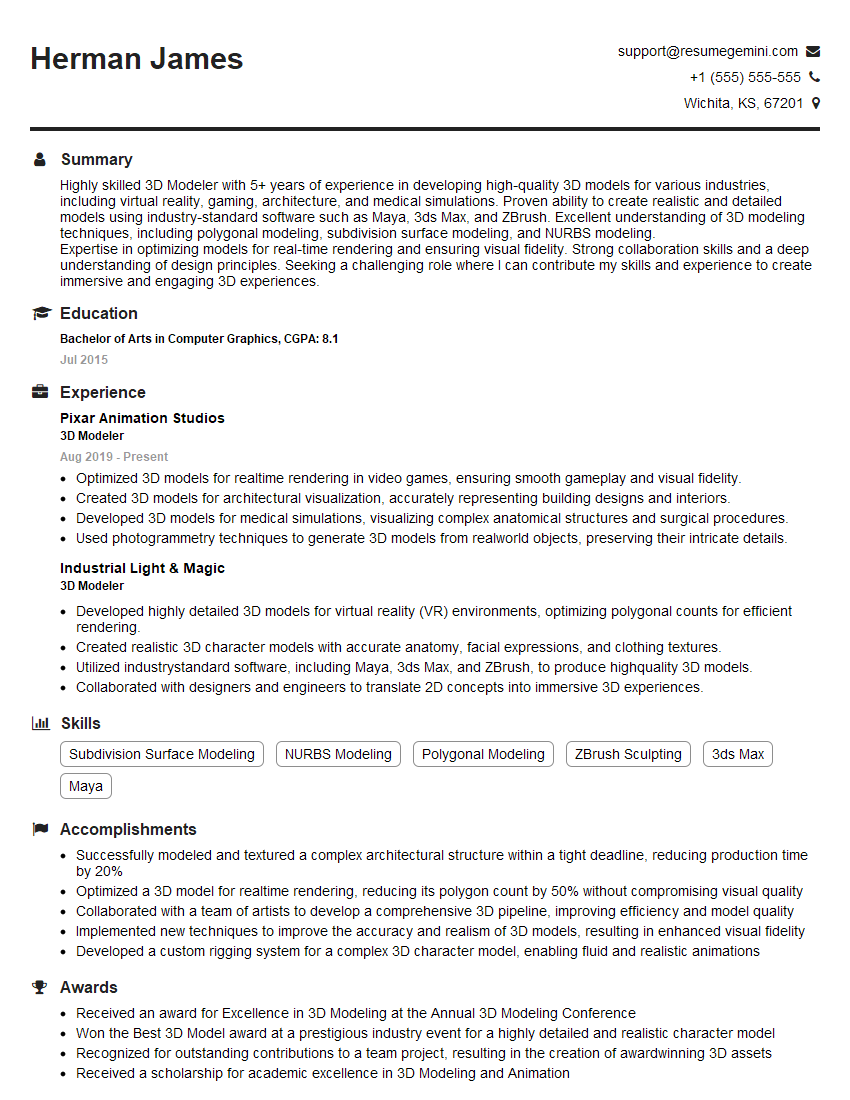

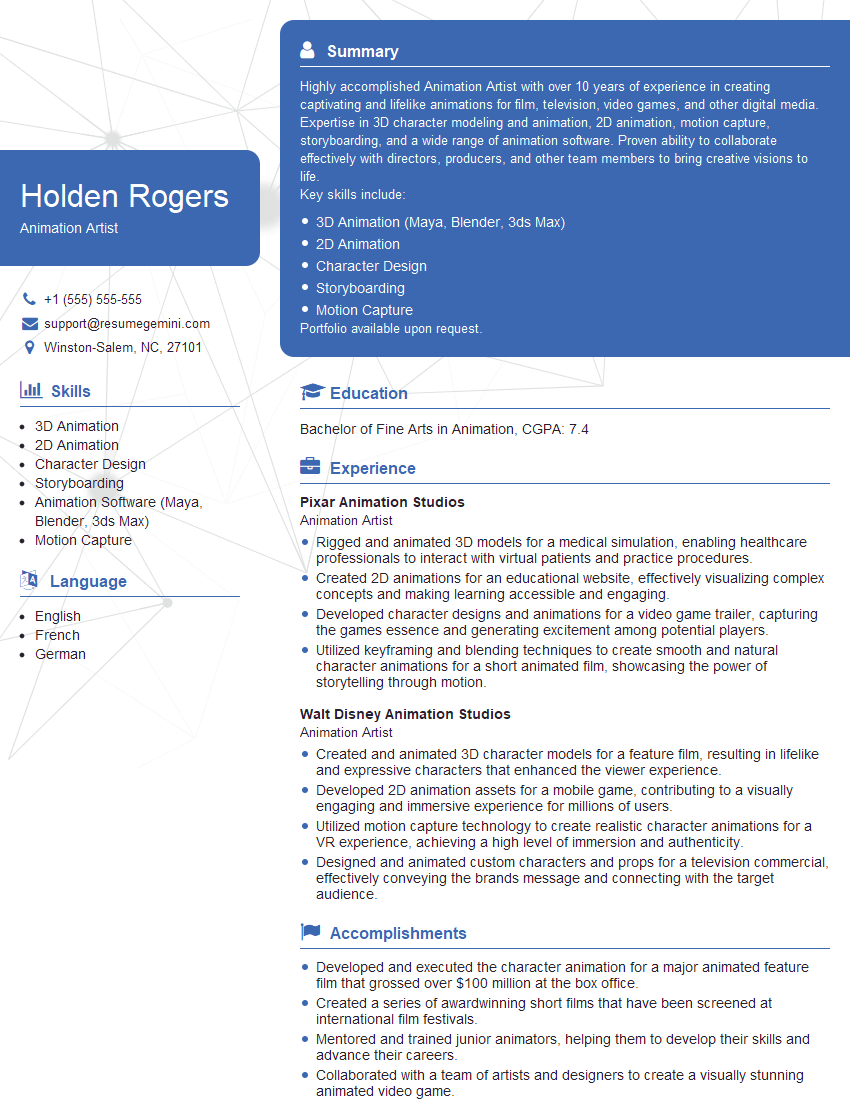

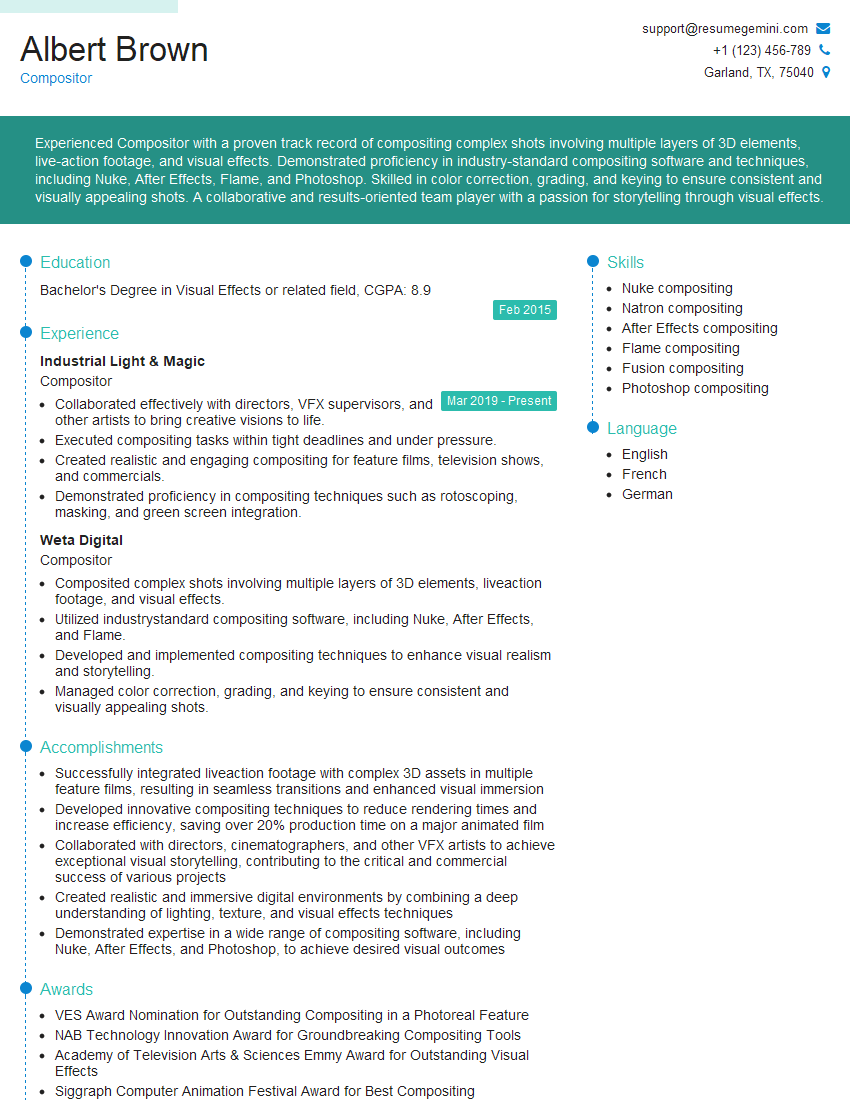

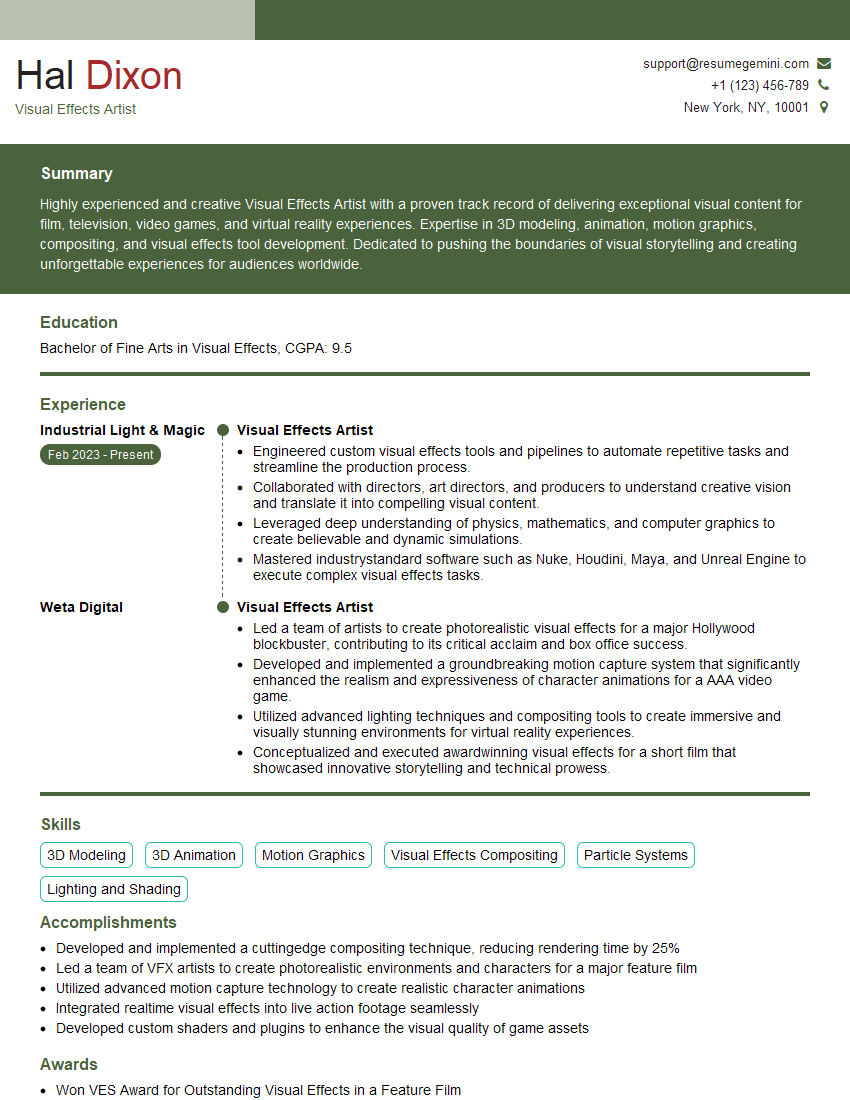

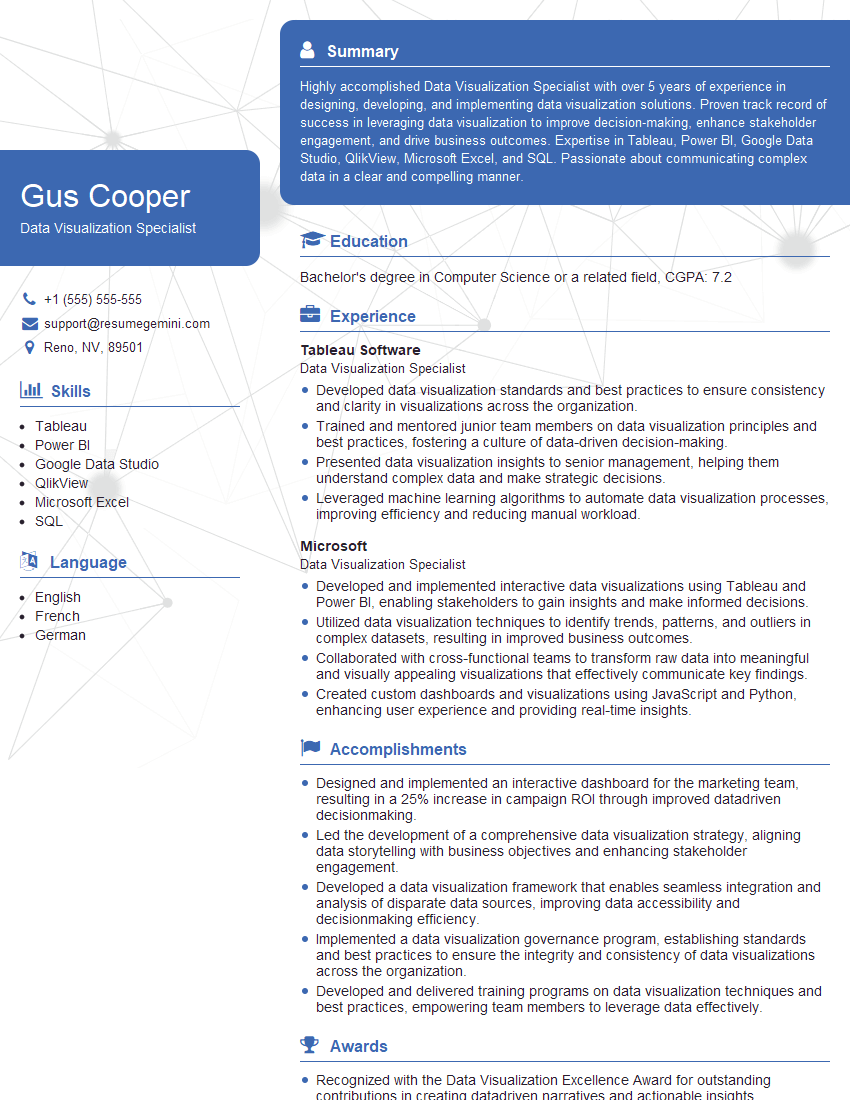

Mastering Computer Graphics and Visualization opens doors to exciting and rewarding careers in gaming, film, architecture, scientific research, and more. A strong foundation in these key areas significantly increases your job prospects. To make your application stand out, create an ATS-friendly resume that effectively highlights your skills and experience. ResumeGemini is a trusted resource to help you build a professional and impactful resume. We provide examples of resumes tailored to Computer Graphics and Visualization to guide you in crafting your own compelling application.
