Unlock your full potential by mastering the most common Audio Engineering and Acoustics Knowledge interview questions. This blog offers a deep dive into the critical topics, ensuring you’re not only prepared to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Audio Engineering and Acoustics Knowledge Interview

Q 1. Explain the difference between reverberation and echo.

Reverberation and echo are both reflections of sound waves, but they differ significantly in their characteristics. Think of it like this: an echo is a distinct repetition of a sound, like a single clap bouncing back from a canyon wall. Reverberation, on the other hand, is a diffuse, blended collection of reflections that gradually decays. It’s the ‘wash’ of sound you hear in a large, empty hall.

More technically, an echo is a single, delayed reflection that is easily distinguishable from the original sound. Its delay is long enough (roughly 50 ms or more) for our brains to perceive it as a separate sound event. Reverberation, by contrast, consists of many reflections arriving at the listener’s ears at shorter, varying delays and intensities. These reflections overlap and blend, creating a sense of spaciousness and ambience. The decay time (how long the reflections take to fade) is a key characteristic of reverberation.
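To connect the ~50 ms figure to physical space, note that the delay of a single reflection is its extra path length divided by the speed of sound. The sketch below makes three assumptions. Sound travels at 343 m/s (air at about 20 degrees C). The source and listener stand at the same spot. The 50 ms figure is a rough threshold rather than a hard limit. The function names are illustrative.

```python
SPEED_OF_SOUND = 343.0    # m/s, air at roughly 20 degrees C (assumption)
ECHO_THRESHOLD_S = 0.050  # rough perceptual threshold taken from the text

def reflection_delay(distance_to_wall_m):
    """Delay (s) of a wall reflection for a source and listener at the same spot."""
    # The sound travels to the wall and back, so the extra path is 2 * distance.
    return 2 * distance_to_wall_m / SPEED_OF_SOUND

def is_distinct_echo(distance_to_wall_m):
    """True if the reflection arrives late enough to be heard as a separate echo."""
    return reflection_delay(distance_to_wall_m) > ECHO_THRESHOLD_S

# Smallest wall distance that produces a distinct echo: 343 * 0.05 / 2 = 8.575 m.
min_distance = SPEED_OF_SOUND * ECHO_THRESHOLD_S / 2
```

By this rough measure, a wall about 8.6 m away or farther returns a clap as a separate echo. Closer surfaces instead blend into the early reflections and reverberation.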

In recording studios, we use artificial reverb units to simulate the acoustic properties of different spaces. Engineers control the decay time, pre-delay (the initial delay before the reverb starts), and other parameters to enhance recordings. Understanding the distinction between echo and reverb is crucial for achieving the desired acoustic character of a recording.

Q 2. Describe the frequency response of the human ear.

The human ear’s frequency response isn’t uniform; our sensitivity varies with frequency. Our hearing range typically extends from about 20 Hz to 20 kHz, although the upper limit falls with age and varies between individuals. We’re most sensitive in the upper mid-range (roughly 1 kHz to 5 kHz, peaking around 3 to 4 kHz). This overlaps the frequencies that carry much of speech intelligibility, which is why voices come across so prominently. Sensitivity drops off significantly at both the very low and very high ends of the range.

At low frequencies (below roughly 100 Hz), pitch becomes harder to judge. At high levels, sound in this range is increasingly felt in the body as vibration as well as heard. At higher frequencies (above about 10 kHz), our sensitivity gradually declines, reducing our ability to discern detail there. This is partly why some recordings emphasize the mid-range to ensure clarity and intelligibility.

The perception of loudness is also not linear. We perceive changes in sound pressure roughly logarithmically. A given absolute change in sound pressure is far more noticeable at low levels than at high levels, while equal ratios of pressure sound like roughly equal steps. The decibel (dB) scale is a logarithmic unit that reflects this, and it is the standard way to express sound pressure level (SPL).
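As a concrete case, sound pressure level is computed from RMS pressure relative to the standard 20 µPa reference in air: L = 20·log10(p / p0). The minimal sketch below converts in both directions; the function names are illustrative.

```python
import math

P_REF = 20e-6  # Pa, standard reference pressure for SPL in air

def spl_db(pressure_rms_pa):
    """Sound pressure level (dB SPL) from an RMS pressure in pascals."""
    return 20 * math.log10(pressure_rms_pa / P_REF)

def pressure_from_spl(level_db):
    """RMS pressure (Pa) corresponding to a level in dB SPL."""
    return P_REF * 10 ** (level_db / 20)
```

With this formula, a 1 Pa tone works out to about 94 dB SPL, and each doubling of pressure adds about 6 dB.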

Q 3. What are the common types of microphones and their applications?

Microphones convert sound waves into electrical signals. Several types exist, each suitable for different applications:

- Dynamic Microphones: Rugged, reliable, and handle high sound pressure levels (SPLs) well. They use a moving coil in a magnetic field to generate the signal. Commonly used for live sound reinforcement (vocals, instruments), broadcasting, and recording drums.

- Condenser Microphones: More sensitive and detailed than dynamic mics, often capturing a wider frequency range. Most require phantom power (typically +48 V) to operate, while tube condensers use their own power supply. Frequently used in recording studios for vocals, acoustic instruments, and detailed instrument miking.

- Ribbon Microphones: Use a thin metal ribbon suspended in a magnetic field. They are known for their smooth, warm sound. Traditional passive ribbons are fragile and can be damaged by high SPLs or strong blasts of air. Often used in recording for vocals, acoustic guitar, and orchestral instruments.

- Boundary Microphones (PZM): Designed to be mounted on a flat surface; they pick up sound from a wide area, making them useful for conference calls, recording meetings, and smaller venues.

The choice of microphone depends heavily on the application. For instance, a dynamic microphone is ideal for a loud live rock concert, whereas a condenser microphone might be preferred for capturing subtle nuances in a classical recording.

Q 4. How does a compressor work, and what are its parameters?

An audio compressor reduces the dynamic range of an audio signal. Imagine a recording with very loud peaks and very quiet passages – a compressor smooths out these differences, making the overall level more consistent. It works by attenuating signals that exceed a predetermined threshold. The amount of attenuation is controlled by the compression ratio.

Key parameters of a compressor include:

- Threshold: The level at which compression begins.

- Ratio: The ratio of input level change to output level change (e.g., a 2:1 ratio means that for every 2dB increase in input level, the output only increases by 1dB).

- Attack Time: How quickly the compressor reacts to a signal exceeding the threshold.

- Release Time: How quickly the compressor returns to its normal state after the signal falls below the threshold.

- Knee: Controls the transition between uncompressed and compressed signals (soft knee offers gradual compression).

- Output Gain (Make-up Gain): Compensates for the reduction in level caused by the compression.
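Together, the threshold, ratio, knee, and make-up gain define the compressor's static input/output curve. The sketch below computes that curve in dB using one common soft-knee formulation: a quadratic blend across a knee that is `knee_db` wide. Attack and release smoothing are deliberately left out, and real units differ in their exact knee shapes. The function name is illustrative.

```python
def compressor_gain_computer(input_db, threshold_db, ratio, knee_db=0.0, makeup_db=0.0):
    """Static output level (dB) of a compressor for a given input level (dB)."""
    over = input_db - threshold_db
    if knee_db > 0 and abs(over) <= knee_db / 2:
        # Inside the soft knee: blend smoothly from 1:1 toward the full ratio.
        out = input_db + (1 / ratio - 1) * (over + knee_db / 2) ** 2 / (2 * knee_db)
    elif over > 0:
        # Above the threshold: every `ratio` dB of input yields 1 dB of output.
        out = threshold_db + over / ratio
    else:
        # Below the threshold: the signal passes at unity gain.
        out = input_db
    return out + makeup_db

# Hard knee, -20 dB threshold, 4:1: an input at -8 dB (12 dB over) comes out at -17 dB.
example = compressor_gain_computer(-8.0, -20.0, 4.0)
```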

Used judiciously, compression together with make-up gain raises the average, perceived loudness. It also controls peaks that could otherwise clip, and it creates a more consistent, polished sound in many genres of music.

Q 5. Explain the concept of impedance matching in audio systems.

Impedance is the total opposition a circuit presents to alternating current, combining resistance and reactance, and it is measured in ohms (Ω). Every audio connection pairs a source impedance (the output of a microphone, instrument, or preamp) with a load impedance (the input it feeds, such as a preamp, amplifier, or audio interface). The relationship between the two determines how much of the signal arrives intact. A poor pairing can leave the signal weak, noisy, or tonally altered.

For most modern analog audio connections, the goal is not an exact match but impedance bridging. The load impedance should be much higher than the source impedance, commonly by a factor of about 10 or more. This maximizes voltage transfer, which is what matters for audio signals. Equal impedances would maximize power transfer but cost about 6 dB of signal level. The classic mistake is the reverse arrangement. Plugging a high-impedance source, such as a passive guitar pickup, into a low-impedance input loads the source down, reducing the level and dulling the high frequencies. True matched impedances still matter where cables behave like transmission lines, as in AES3 digital audio (110 Ω) and long 600 Ω telephone-style lines, because mismatches there cause signal reflections.
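To see why the input impedance should be much higher than the source impedance, treat both as plain resistances. The signal reaching the input then follows a voltage divider. This is a simplification: real pickups and transformers are reactive, so the actual loss also varies with frequency. The impedance values below are illustrative, and the function name is hypothetical.

```python
import math

def bridging_loss_db(source_ohms, load_ohms):
    """Level change (dB) when a source drives a load, modeled as a resistive divider."""
    fraction = load_ohms / (source_ohms + load_ohms)  # share of the voltage across the load
    return 20 * math.log10(fraction)

# A 150-ohm microphone into a 1.5-kilohm preamp input: under 1 dB of loss.
mic_into_preamp = bridging_loss_db(150, 1500)
# Equal 10-kilohm impedances (the maximum-power-transfer case): about 6 dB of loss.
matched = bridging_loss_db(10_000, 10_000)
```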

Q 6. What is signal-to-noise ratio (SNR), and why is it important?

Signal-to-noise ratio (SNR) is the ratio of the desired signal’s power to the power of the unwanted noise present in the signal. It’s usually expressed in decibels (dB). A higher SNR indicates a cleaner signal with less noise interference. In audio engineering, noise includes any unwanted sound like hiss, hum, or background interference.

A high SNR is crucial because it directly impacts the quality of the audio. Low SNR leads to audible noise, masking the subtleties of the audio and reducing the overall quality of the recording or reproduction. High SNR is particularly essential in professional settings where the fidelity of the audio is paramount, such as in mastering, film production, and broadcasting.

Imagine recording a quiet acoustic guitar in a noisy environment. A low SNR would mean the background noise would be more prominent in the recording, making it difficult to hear the delicate nuances of the instrument. A higher SNR would minimize the noise, allowing for a much clearer and more pleasing final product.
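SNR is a power ratio, so it is computed with 10·log10 on powers, or equivalently 20·log10 on RMS amplitudes. The minimal sketch below assumes the signal and the noise floor can be captured separately, for example by recording a few seconds of silence from the same setup. The function names are illustrative.

```python
import math

def mean_power(samples):
    """Average power of a list of samples (mean of squares)."""
    return sum(s * s for s in samples) / len(samples)

def snr_db(signal, noise):
    """SNR in dB from separately captured signal and noise sample lists."""
    return 10 * math.log10(mean_power(signal) / mean_power(noise))

def snr_db_from_rms(signal_rms, noise_rms):
    # Power scales with amplitude squared, so amplitude ratios use 20 * log10.
    return 20 * math.log10(signal_rms / noise_rms)
```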

Q 7. Describe different types of equalizers and their uses.

Equalizers (EQs) shape the frequency response of an audio signal. They boost or cut specific frequencies to enhance or correct the sound. There are several types:

- Graphic Equalizers: Feature multiple sliders controlling a range of frequencies in octaves or fractional octaves, allowing for visual adjustments. They’re often used for room correction, live sound, and general tonal shaping.

- Parametric Equalizers: Offer more control over each frequency band. You adjust not only the gain (boost or cut) but also the center frequency and the Q factor (bandwidth) of each band. They provide more precision in shaping a signal, often used for surgical adjustments in mixing and mastering.

- Shelving Equalizers: Affect the gain of frequencies above or below a specific cutoff frequency, useful for adjusting the overall tone (e.g., boosting low frequencies or cutting high frequencies).

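- Peaking (Bell) Equalizers: Boost or cut a bell-shaped region around a center frequency. Each band of a parametric EQ is usually a peaking filter; fixed or semi-parametric versions expose fewer of its controls.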

For example, a parametric EQ might be used to boost a vocal’s presence region or to cut a narrow band of muddiness. A graphic EQ could be used to compensate for frequency imbalances caused by a room’s acoustics.
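Each band of a typical parametric EQ is a peaking filter. One widely used digital design for it is the peaking biquad from Robert Bristow-Johnson's Audio EQ Cookbook. The sketch below computes that filter's coefficients, applies them to a list of samples, and evaluates the gain at a chosen frequency. It illustrates this one design only and does not describe how any particular EQ plugin works. The function names and the example setting are hypothetical.

```python
import cmath
import math

def peaking_eq_coeffs(sample_rate, center_hz, gain_db, q):
    """Peaking (bell) biquad coefficients per the RBJ Audio EQ Cookbook, normalized so a[0] == 1."""
    a_gain = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha / a_gain
    b = [(1 + alpha * a_gain) / a0, -2 * cos_w0 / a0, (1 - alpha * a_gain) / a0]
    a = [1.0, -2 * cos_w0 / a0, (1 - alpha / a_gain) / a0]
    return b, a

def biquad_filter(samples, b, a):
    """Apply the biquad sample by sample (direct form I)."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x0
        y2, y1 = y1, y0
        out.append(y0)
    return out

def gain_at(b, a, freq_hz, sample_rate):
    """Filter gain in dB at one frequency, evaluated on the unit circle."""
    z_inv = cmath.exp(-2j * math.pi * freq_hz / sample_rate)
    h = (b[0] + b[1] * z_inv + b[2] * z_inv ** 2) / (a[0] + a[1] * z_inv + a[2] * z_inv ** 2)
    return 20 * math.log10(abs(h))

# Hypothetical setting: +6 dB presence boost at 3 kHz, Q = 1, 48 kHz sample rate.
b, a = peaking_eq_coeffs(48_000, 3_000, 6.0, 1.0)
```

The gain at 3 kHz comes out at the requested +6 dB. Far below the center (at DC) the filter is flat, which is the bell shape described above.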

Q 8. What are the principles of room acoustics and how do they affect sound quality?

Room acoustics is the study of how sound behaves within an enclosed space. It’s governed by several key principles that directly impact sound quality. These include:

- Reflection: Sound waves bounce off surfaces (walls, ceilings, floor). The material and shape of these surfaces determine the nature of the reflection – some create clear, crisp reflections while others cause diffuse, scattered sounds.

- Absorption: Materials absorb sound energy, reducing its intensity. Carpets, curtains, and acoustic panels are examples of absorptive materials. Too much absorption creates a ‘dead’ sound, while too little leads to excessive reverberation.

- Diffraction: Sound waves bend around obstacles. This is why you can still hear sound from a source even if it’s partially obstructed.

- Refraction: Sound changes direction when its speed changes, for example when it passes into a different medium or through temperature gradients in air. The effect is negligible in typical rooms but can matter outdoors and in large venues with strong temperature layering.

- Reverberation: This is the persistence of sound after the source has stopped, caused by multiple reflections. The time it takes for the sound level to decay by 60 dB is called the reverberation time (RT60). An appropriate RT60 is crucial for good sound. Too long makes the sound muddy and unclear; too short makes it dry and lifeless.

- Standing Waves: These are patterns of sound pressure formed when reflected sound waves interfere with each other. They are strongest at frequencies whose half-wavelengths fit a whole number of times between room surfaces. Depending on position, certain frequencies are amplified or cancelled, causing an uneven frequency response. Poor room design can lead to strong standing waves at certain frequencies, especially in the bass.

For example, a recording studio needs precisely controlled acoustics to ensure accurate reproduction of sound, while a concert hall requires a longer reverberation time for a more spacious and resonant sound. A poorly treated room can result in muddy bass frequencies due to standing waves, overly bright high frequencies due to excessive reflection from hard surfaces, or a completely dead and unnatural sound.
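RT60 can be estimated before a room is built or treated. For rooms with a fairly diffuse sound field and modest absorption, Sabine's formula gives RT60 ≈ 0.161·V/A. Here V is the volume in m³ and A is the total absorption in metric sabins (each surface area multiplied by its absorption coefficient, summed). The room size, areas, and coefficients below are made-up illustrative values, not measured data. Real coefficients vary strongly with frequency.

```python
def sabine_rt60(volume_m3, surfaces):
    """Estimate RT60 (s) with Sabine's formula.

    surfaces: list of (area_m2, absorption_coefficient) pairs.
    """
    total_absorption = sum(area * alpha for area, alpha in surfaces)  # metric sabins
    return 0.161 * volume_m3 / total_absorption

# Hypothetical 6 m x 5 m x 3 m room with illustrative coefficients.
room_volume = 6 * 5 * 3
bare = [(2 * (6 * 3 + 5 * 3), 0.05),  # painted walls
        (6 * 5, 0.05),                # hard ceiling
        (6 * 5, 0.02)]                # concrete floor
# Add 12 m^2 of absorptive panels (simplification: ignores the wall area they cover).
treated = bare + [(12.0, 0.9)]
```

With these numbers the bare room rings for roughly 2.7 s, and the panels bring it down to under 1 s. That is the sort of change the treatment described above aims for.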

Q 9. Explain the concept of psychoacoustics.

Psychoacoustics is the study of how humans perceive sound. It bridges the gap between the physical properties of sound (frequency, intensity, etc.) and our subjective experience of it (loudness, pitch, timbre). It’s not just about the physics of sound waves, but also the complex neural processing in our brain.

For example, two sounds with the same physical intensity might be perceived as having different loudness depending on their frequency, because our hearing is less sensitive to very low and very high frequencies. Psychoacoustics also explains the masking effect, where a louder sound can obscure a quieter one. Masking happens when the two sounds occur simultaneously and also when one closely precedes or follows the other (temporal masking). Another example is the precedence effect: in a reverberant environment, the brain localizes a sound by its first-arriving wavefront and largely suppresses the direction of later reflections.

This knowledge is crucial in audio engineering for tasks like equalization (EQ), where we manipulate the frequency balance to optimize the perceived sound. It also underpins surround sound, where we leverage how our brain locates sound sources using time and intensity differences between our ears.
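The time-difference cue can be estimated with Woodworth's spherical-head approximation, ITD ≈ (a/c)(θ + sin θ). Here a is the head radius, c the speed of sound, and θ the source azimuth. The sketch assumes a typical adult head radius of 8.75 cm, a speed of sound of 343 m/s, and a distant source. The function name is illustrative.

```python
import math

HEAD_RADIUS_M = 0.0875  # typical adult head radius (assumption)
SPEED_OF_SOUND = 343.0  # m/s (assumption)

def itd_woodworth(azimuth_deg):
    """Interaural time difference (s) for a distant source, Woodworth's spherical-head model.

    Valid from 0 degrees (straight ahead) to 90 degrees (directly to one side).
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND) * (theta + math.sin(theta))
```

The result peaks at about 0.66 ms for a source directly to one side. Interaural delays of a fraction of a millisecond are therefore enough to shift where a sound appears to come from.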

Q 10. What are the different types of audio file formats and their characteristics?

Numerous audio file formats exist, each with its strengths and weaknesses. Here are some common ones:

- WAV (Waveform Audio File Format): A container that usually holds uncompressed PCM audio, so it retains all the original audio data. Files are large, and quality is limited only by the recording’s sample rate and bit depth. Often used for studio work and archiving.

- AIFF (Audio Interchange File Format): Another lossless format, similar to WAV but primarily used on Apple systems.

- MP3 (MPEG Audio Layer III): A lossy format that uses compression to reduce file size. It’s widely used for music distribution due to its smaller size and compatibility but sacrifices some audio quality in the process.

- AAC (Advanced Audio Coding): A lossy format generally considered to offer better audio quality than MP3 at comparable bitrates. Used in iTunes and many streaming services.

- FLAC (Free Lossless Audio Codec): A lossless compression format offering smaller file sizes than WAV while preserving all audio data. It’s gaining popularity as a good balance between quality and size.

- Ogg Vorbis: A royalty-free, open-source lossy format. Offers comparable quality to MP3 and AAC at similar bitrates.

The choice of format depends on the application. For professional work, lossless formats are preferred to preserve audio quality. For online streaming or distribution where file size is critical, lossy formats like MP3 or AAC are used.
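The size difference follows directly from the arithmetic of uncompressed PCM: sample rate × bit depth × channels. The sketch below compares CD-quality PCM with a lossy file. The 320 kbit/s MP3 bitrate is just an example, and container headers are ignored. FLAC sizes are not shown because its compression ratio depends on the material.

```python
def pcm_bitrate_bps(sample_rate_hz, bit_depth, channels):
    """Bit rate of uncompressed PCM audio (the WAV/AIFF payload, ignoring headers)."""
    return sample_rate_hz * bit_depth * channels

def size_bytes(bitrate_bps, seconds):
    """Bytes needed to store `seconds` of audio at a given bit rate."""
    return bitrate_bps * seconds / 8

cd_rate = pcm_bitrate_bps(44_100, 16, 2)  # 1,411,200 bit/s
cd_minute = size_bytes(cd_rate, 60)       # 10,584,000 bytes, about 10.6 MB
mp3_minute = size_bytes(320_000, 60)      # 2,400,000 bytes at an example 320 kbit/s
```

Even at a high MP3 bitrate, one minute of audio is roughly 4.4 times smaller than the same minute as CD-quality WAV.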

Q 11. How do you troubleshoot feedback in a sound reinforcement system?

Feedback in a sound reinforcement system is that annoying howling sound caused by a microphone picking up the amplified sound from speakers. Troubleshooting involves a systematic approach:

- Identify the source: Determine which microphone is causing the feedback. Mute mics one by one to isolate the culprit.

- Reduce gain: Lower the gain (volume) of the affected microphone channel and the main output level. Less amplification means less signal to feed back.

- Adjust microphone position: Move the microphone away from the speakers and aim it so the speakers sit in its least sensitive direction. This reduces the amount of amplified sound reaching the microphone.

- EQ the offending frequency: Use an equalizer to attenuate the specific frequency that is ringing. Feedback usually occurs in a narrow band, so a narrow parametric cut (a ‘notch’) removes it with minimal effect on the rest of the sound. A graphic EQ band also works but affects a wider range. A real-time analyzer (RTA) helps you see which frequency is problematic.

- Check cabling: Faulty or poorly shielded cables cause hum and buzz rather than acoustic feedback, but checking them helps rule out noise that can be mistaken for it. Also, keep microphones at an appropriate distance from speakers and reflective surfaces.

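- Use directional microphones: Cardioid and supercardioid mics reject sound from outside their front pickup area. Place monitors in the mic’s least sensitive direction. For a cardioid, that is directly behind. For a supercardioid, which has a small rear lobe, it is roughly 120 to 130 degrees off-axis.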

- Improve room acoustics: Treat the room’s acoustics to reduce unwanted reflections using absorptive materials. This can significantly help lower feedback.

Often, a combination of these strategies is needed to resolve feedback issues. It’s a balance between maximizing gain to get a desirable volume and minimizing the risk of feedback. Always protect the hearing of performers and audience members!
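An RTA essentially shows which frequency carries the most energy, and during feedback that is the ringing tone. The sketch below does a brute-force version of this with a naive DFT on a short buffer. Real analyzers use an FFT, windowing, and averaging. The 8 kHz sample rate and the synthetic 1 kHz "howl" are assumptions for illustration, and the function name is hypothetical.

```python
import math

def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) of the strongest DFT bin, skipping DC."""
    n = len(samples)
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):  # bins below the Nyquist frequency
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = -sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        mag = math.hypot(re, im)
        if mag > best_mag:
            best_bin, best_mag = k, mag
    # Bin spacing is sample_rate / n, so longer buffers give finer resolution.
    return best_bin * sample_rate / n

# Usage: a 1 kHz "howl" plus a quieter 300 Hz tone, 0.1 s at 8 kHz.
fs = 8000
buffer = [math.sin(2 * math.pi * 1000 * t / fs) + 0.3 * math.sin(2 * math.pi * 300 * t / fs)
          for t in range(800)]
```

Here `dominant_frequency(buffer, fs)` picks out the 1 kHz component, the frequency you would then cut with a narrow EQ notch.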

Q 12. Describe the process of mixing a song.

Mixing a song is the process of combining and balancing all the individual tracks (vocals, instruments, etc.) to create a cohesive and well-balanced final product. It involves creative decisions about levels, panning, EQ, dynamics processing, and effects. Think of it as sculpting a sonic landscape.

The process typically involves:

- Gain staging: Setting appropriate levels for each track to prevent clipping and maximize dynamic range.

- EQ: Adjusting the frequency balance of each track to enhance certain frequencies and attenuate others. For example, boosting the presence of vocals, cutting out muddiness in bass, or adding brightness to the cymbals.

- Dynamics processing: Using compressors, limiters, and gates to control the dynamic range of the tracks, creating a more consistent and powerful sound.

- Panning: Positioning instruments in the stereo field to create a wider and more immersive sound. For example, keeping the kick, snare, and lead vocals in the center while panning guitars left and right.

- Effects: Applying effects like reverb, delay, chorus, and flanger to add depth, space, and character to individual instruments and the overall mix.

- Automation: Programming parameter changes over time, for example to create dynamic builds and drops or to fade instruments in and out.

- Checking on different playback systems: A mix sounds different on studio monitors, earbuds, and a car stereo, so listening on several systems helps identify and correct flaws in the mix.

Mixing is an iterative process, requiring listening and adjusting to achieve a well-balanced and pleasing result. It demands a strong understanding of both musicality and the technical aspects of sound.
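Panning is usually implemented with a pan law, so that a source keeps roughly the same perceived loudness as it moves across the stereo field. DAWs differ in the law they use; centre attenuations of -3 dB, -4.5 dB, and -6 dB are all common. The sketch below assumes the constant-power sine/cosine law, and the function name is illustrative.

```python
import math

def constant_power_pan(pan):
    """Left/right gains for pan in [-1.0 (hard left), +1.0 (hard right)].

    Uses a sine/cosine (constant-power) law: left**2 + right**2 == 1 at every
    position, so the center is about -3 dB in each channel.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1 and 1")
    angle = (pan + 1) * math.pi / 4  # maps -1..1 onto 0..pi/2
    return math.cos(angle), math.sin(angle)
```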

Q 13. Explain the role of mastering in audio production.

Mastering is the final stage of audio production, where the mixed audio is prepared for distribution. Unlike mixing, which focuses on individual tracks, mastering treats the entire mix as a single unit, ensuring it’s optimized for different playback systems and formats. It involves subtle adjustments to make the sound polished and consistent across all listening environments.

Key aspects of mastering include:

- Level matching: Ensuring the overall loudness of the track is consistent with other commercially released music.

- Equalization (EQ): Making subtle adjustments to the overall frequency balance to improve clarity and presence.

- Stereo imaging: Optimizing the stereo width and placement of elements to create a well-balanced stereo image that translates well across different systems.

- Dynamic range processing: Carefully using compression and limiting to control the dynamic range and maximize loudness without sacrificing quality.

- Dithering: Adding a small amount of noise to reduce distortion when reducing the bit depth of the audio. This is commonly done during format conversion from a 24-bit to a 16-bit format.

- Format conversion: Preparing the audio for specific formats (CD, digital downloads, streaming services).

Mastering requires a critical ear, technical expertise, and an understanding of the target medium. A well-mastered track will translate well across different playback systems, offering a consistent and enjoyable listening experience.
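The dithering step can be illustrated for the 24-bit to 16-bit case. The minimal sketch below assumes the audio is held as floating-point samples in [-1.0, 1.0). It uses TPDF (triangular probability density function) dither, which is one common choice; mastering tools also offer noise-shaped variants that this does not attempt. The function name is hypothetical.

```python
import random

def float_to_int16(samples, dither=True, rng=None):
    """Quantize floats in [-1.0, 1.0) to 16-bit integers, optionally with TPDF dither."""
    rng = rng or random.Random()
    out = []
    for x in samples:
        scaled = x * 32768.0  # after scaling, one 16-bit step (LSB) equals 1.0
        if dither:
            # Difference of two independent uniform values: triangular noise spanning +/-1 LSB.
            scaled += rng.random() - rng.random()
        q = round(scaled)
        out.append(max(-32768, min(32767, q)))  # clip to the 16-bit range
    return out

# A signal a quarter of one 16-bit step above zero.
quarter_lsb = [0.25 / 32768] * 100_000
undithered = float_to_int16(quarter_lsb[:10], dither=False)
dithered = float_to_int16(quarter_lsb, rng=random.Random(1))
```

Without dither, every sample of the quarter-step signal rounds to 0 and the signal disappears. With TPDF dither, the output wanders between -1, 0, and 1, but its average stays close to 0.25 steps. This is the trade described above: distortion is replaced by a small amount of benign noise.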

Q 14. What are some common digital audio workstations (DAWs)?

Numerous Digital Audio Workstations (DAWs) are available, each with its strengths and weaknesses. The best choice depends on individual preferences and workflow. Here are some popular options:

- Pro Tools: Industry-standard DAW widely used in professional recording studios. It has a steep learning curve but offers comprehensive features and extensive plugin support.

- Logic Pro X: Powerful and user-friendly DAW exclusive to macOS, known for its intuitive interface and extensive features. It often requires less processing power compared to Pro Tools.

- Ableton Live: Popular for electronic music production, known for its intuitive workflow and loop-based approach. Its session view allows for spontaneous improvisation and arrangement.

- Cubase: Long-standing DAW with a loyal following, known for its precision and advanced features. It is well suited to both composing and recording music.

- FL Studio: Popular DAW particularly within the hip-hop and EDM community, known for its intuitive piano roll and pattern-based workflow. It’s excellent for beat creation and electronic music production.

- GarageBand: User-friendly, free DAW that is great for beginners. It comes pre-installed on many Apple computers.

Many other excellent DAWs exist, such as Studio One, Reaper, and Bitwig Studio, each offering unique features and workflows. It’s recommended to try out a few free trials or demos to find the one that best suits your needs and style.

Q 15. What are the principles of sound absorption and reflection?

Sound absorption and reflection are fundamental principles governing how sound waves interact with surfaces. Absorption refers to the conversion of sound energy into other forms of energy, primarily heat, when a sound wave encounters a material. Reflection, on the other hand, involves the bouncing of sound waves off a surface, with the angle of incidence equaling the angle of reflection. The extent of absorption or reflection depends on the material’s properties, specifically its porosity, density, and thickness.

Think of a soft, porous material like acoustic foam. Its porous structure traps sound energy, converting it into heat, leading to high absorption. In contrast, a hard, smooth surface like a concrete wall reflects most of the sound energy, resulting in echoes and reverberation. The balance between absorption and reflection is crucial in room acoustics, influencing the clarity, loudness, and overall quality of sound within a space.

- Absorption: Materials like acoustic panels, carpets, curtains, and even strategically placed furniture absorb sound energy.

- Reflection: Hard, smooth surfaces such as glass windows, concrete walls, and tile floors reflect sound, leading to potential problems like unwanted echoes and standing waves.


Q 16. How do you measure sound levels using a sound level meter?

Measuring sound levels involves using a sound level meter, a device that converts sound pressure into a measurable electrical signal. The meter typically displays the sound pressure level (SPL) in decibels (dB), a logarithmic scale that represents the relative intensity of sound. To measure accurately, you’ll need to understand the different weighting networks available on most sound level meters:

- A-weighting: This filter approximates the human ear’s sensitivity at moderate listening levels. It strongly de-emphasizes low frequencies and, to a lesser degree, the very highest ones. It’s the most common weighting for general environmental noise measurements.

- C-weighting: This filter has a flatter frequency response and is often used for measuring high-intensity sounds or impulsive noises.

- Z-weighting (Linear): This is a linear weighting, with no emphasis on any frequencies, and is often used for laboratory or precision measurements.
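The A-weighting curve has a closed-form analytic definition, the one published with the IEC 61672 sound level meter standard. The sketch below evaluates it to show how strongly low frequencies are de-emphasized. Meters implement the weighting as a filter rather than with this formula, so treat the sketch as a way to inspect the curve, not to weight a recording. The function name is illustrative, and `f` must be greater than 0.

```python
import math

def a_weighting_db(f):
    """A-weighting gain (dB) at frequency f (Hz, f > 0), from the analytic curve."""
    f2 = f * f
    ra = (12194.0 ** 2 * f2 * f2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20 * math.log10(ra) + 2.0  # the +2.0 dB offset puts 1 kHz at about 0 dB
```

The curve gives about -19 dB at 100 Hz and about 0 dB at 1 kHz, which is why A-weighted readings largely ignore low-frequency rumble.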

When using a sound level meter, hold it at arm’s length or mount it on a tripod at the measurement point, so your body doesn’t reflect sound into the microphone. Make sure the microphone isn’t obstructed or shielded. Take multiple readings, average them, and record the weighting used (A, C, or Z). Ambient noise can influence the readings, so minimize background sound as much as possible.
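Decibels are logarithmic, so taking the arithmetic mean of several dB readings understates the loud ones. The sketch below averages readings on an energy basis instead, which is the idea behind an Leq-style average. It assumes each reading covers an equal length of time, and the function name is illustrative.

```python
import math

def energy_average_db(readings_db):
    """Average dB readings on an energy basis (each reading weighted equally in time)."""
    mean_energy = sum(10 ** (level / 10) for level in readings_db) / len(readings_db)
    return 10 * math.log10(mean_energy)

readings = [70.0, 80.0]
naive = sum(readings) / len(readings)   # 75.0 dB, which understates the 80 dB period
energetic = energy_average_db(readings)  # about 77.4 dB
```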

Q 17. Explain the concept of sound insulation and its importance in building design.

Sound insulation, also known as acoustic insulation, is the reduction of sound transmission through building structures. Its importance in building design cannot be overstated, especially in spaces requiring noise control, like residential buildings, recording studios, and offices. Good sound insulation minimizes the transmission of noise between different areas, improving privacy and reducing noise pollution. It’s achieved through careful material selection, structural design, and construction techniques.

Materials with high mass and density, such as concrete or dense drywall, are better sound insulators than lightweight materials. Air gaps, resilient channels (used to decouple the wall from the structure), and sealed joints are also crucial for preventing sound leakage. Double- or triple-glazed windows, for instance, provide superior sound insulation compared to single-pane windows. The science behind it lies in the material’s ability to impede sound waves’ passage; a massive, dense structure is less likely to transmit sound energy compared to a less dense structure.
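A common first approximation for a single, homogeneous partition is the field-incidence mass law: TL ≈ 20·log10(m·f) - 47 dB, with m the surface mass in kg/m² and f the frequency in Hz. It shows why mass helps: each doubling of mass (or of frequency) adds about 6 dB. The law ignores stiffness, the coincidence dip, air leaks, and the extra benefit of decoupled double walls, so it is only a rough guide. The panel masses below are hypothetical.

```python
import math

def mass_law_tl_db(surface_mass_kg_m2, frequency_hz):
    """Approximate field-incidence transmission loss (dB) of a single limp panel."""
    return 20 * math.log10(surface_mass_kg_m2 * frequency_hz) - 47

# Hypothetical panels of 10 and 20 kg/m^2, evaluated at 500 Hz.
single = mass_law_tl_db(10, 500)       # about 27 dB
double_mass = mass_law_tl_db(20, 500)  # about 6 dB more
```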

Q 18. What are the different types of filters used in audio processing?

Filters in audio processing are used to modify the frequency content of a signal, selectively attenuating or boosting specific frequencies. There are many types, but some of the most commonly used are:

- High-pass filters: These allow high frequencies to pass through while attenuating low frequencies. Think of a high-pass filter as a ‘low-cut’ – it removes rumble or unwanted low-end frequencies.

- Low-pass filters: These allow low frequencies to pass through while attenuating high frequencies. They can be used to smooth a signal or reduce harsh high-end sounds.

- Band-pass filters: These allow a specific range of frequencies to pass while attenuating frequencies above and below this range. They are useful for focusing on one part of the spectrum, though they cannot fully isolate an instrument or vocal whose frequencies overlap with others.

- Band-reject (notch) filters: These attenuate a specific range of frequencies while allowing others to pass. They are used to remove unwanted resonant frequencies or noise, such as hum or buzz.

- Parametric EQ filters: These are highly adjustable filters allowing control over the center frequency, bandwidth (Q), and gain. This allows for precision control over specific frequencies within an audio signal.

These filters are essential for various audio tasks, from mixing and mastering to noise reduction and sound design.
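High-pass and low-pass filters can be as simple as first-order designs, which roll off at about 6 dB per octave. The minimal sketch below implements a one-pole low-pass and a complementary high-pass (the input minus its low-passed version). The coefficient formula is a common approximation that is most accurate when the cutoff is well below the sample rate. The function names are illustrative.

```python
import math

def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """First-order low-pass: each output moves part of the way toward the input."""
    alpha = 1 - math.exp(-2 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out

def one_pole_highpass(samples, cutoff_hz, sample_rate):
    """Complementary first-order high-pass: the input minus its low-passed version."""
    low = one_pole_lowpass(samples, cutoff_hz, sample_rate)
    return [x - l for x, l in zip(samples, low)]

# A step input (a sudden DC offset): the low-pass settles to 1, the high-pass decays to 0.
step = [1.0] * 2000
lp = one_pole_lowpass(step, 100, 48_000)
hp = one_pole_highpass(step, 100, 48_000)
```

A high-pass of this kind is what removes DC offset and slow rumble, the ‘low-cut’ role described above.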

Q 19. Describe the Nyquist-Shannon sampling theorem.

The Nyquist-Shannon sampling theorem is a fundamental principle of digital signal processing. It states that to represent a band-limited analog signal exactly in digital form, the sampling rate must be more than twice the highest frequency present in the signal. In simpler terms, if your audio signal contains frequencies up to 20 kHz (the upper limit of human hearing), you must sample it at more than 40 kHz to avoid information loss. This is why CD audio uses a 44.1 kHz sampling rate, slightly above that minimum.

Sampling at a rate lower than the Nyquist rate (twice the highest frequency) results in aliasing. Frequencies above half the sampling rate fold back and are captured as lower frequencies that were never in the original signal, producing undesirable artifacts. In practice, an anti-aliasing low-pass filter removes content above half the sampling rate before conversion. Adhering to the Nyquist-Shannon theorem is therefore crucial for accurate digital audio reproduction.
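The folding can be computed directly. A tone at frequency f yields exactly the same samples as a tone at f minus any multiple of the sampling rate, and the result is then reflected into the 0 to fs/2 band. The sketch below computes that apparent frequency for a pure tone; the function name is illustrative.

```python
import math

def alias_frequency(f_hz, sample_rate_hz):
    """Frequency (Hz) at which a pure tone at f_hz appears after sampling."""
    f = f_hz % sample_rate_hz             # sampling cannot tell f from f - k * fs
    return min(f, sample_rate_hz - f)     # fold into the 0..fs/2 band

# Without an anti-aliasing filter, a 30 kHz tone sampled at 44.1 kHz shows up at 14.1 kHz.
apparent = alias_frequency(30_000, 44_100)
```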

Q 20. Explain the concept of phase cancellation and how it affects audio quality.

Phase cancellation occurs when two or more sound waves of the same frequency arrive at a listener’s ear out of phase. This means their peaks and troughs are misaligned, resulting in a reduction of the overall amplitude, even to the point of complete cancellation if the waves are perfectly out of phase (180 degrees). Imagine two identical waves – if one is shifted by half a cycle, they effectively cancel each other out.

In audio, phase cancellation can lead to a significant loss of signal strength, a thinner or ‘hollow’ sound, and a lack of clarity. It’s often a problem when using multiple microphones on one sound source. The source sits at a different distance from each microphone, and these time-of-arrival differences become frequency-dependent phase shifts when the signals are mixed. Careful microphone placement and signal processing techniques are crucial to minimize phase cancellation and maintain audio quality.
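Summing a signal with a delayed copy of itself produces a comb filter. The level doubles (+6 dB) where the copies line up and drops to a deep null at odd multiples of 1/(2·delay). The sketch below assumes two equal-level microphone signals whose path lengths differ by 1 m, and a speed of sound of 343 m/s. The function names are illustrative.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s (assumption)

def comb_response_db(freq_hz, delay_s, gain=1.0):
    """Level (dB) of a signal summed with a delayed copy, relative to one copy alone."""
    h = 1 + gain * cmath.exp(-2j * math.pi * freq_hz * delay_s)
    return 20 * math.log10(max(abs(h), 1e-12))  # clamp to avoid log10(0) at exact nulls

def notch_frequencies(delay_s, count=3):
    """First cancellation frequencies for equal-level copies: odd multiples of 1/(2*delay)."""
    return [(2 * k + 1) / (2 * delay_s) for k in range(count)]

# Two mics on one source, with path lengths differing by 1 m (about 2.9 ms of delay).
delay = 1.0 / SPEED_OF_SOUND
notches = notch_frequencies(delay)  # about 171.5, 514.5, 857.5 Hz
```

Because the notches fall right in the low-mid range, even a small path difference between two mics can make a source sound hollow.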

Q 21. What are some common problems encountered in recording studios and how do you address them?

Recording studios often face several acoustic problems that can significantly affect the quality of recordings. Here are a few common issues and their solutions:

- Excessive reverberation/echoes: This is caused by excessive sound reflections from hard surfaces. Solution: Treat the room with absorbers, such as acoustic panels and bass traps, plus diffusers to scatter the remaining reflections, creating a more controlled acoustic environment.

- Standing waves: These are resonant frequencies that build up in a room due to reflections between parallel surfaces. Solution: Place bass traps, typically in corners where low-frequency pressure builds up, to absorb energy at these resonances and flatten the frequency response.

- Noise pollution: External or internal noises can intrude upon recordings. Solution: Use effective sound isolation techniques, such as double-walled construction, soundproof windows, and door seals. Consider using noise gates or other audio processing to remove unwanted background noise during post-production.

- Acoustic imbalances: Uneven frequency responses in different areas of the room. Solution: Careful acoustic treatment and measurement with sound level meters and analysis software can identify and correct these issues.

Addressing these issues requires a blend of careful room design, appropriate acoustic treatment, and proficient use of audio processing techniques. Regular maintenance and calibration of equipment are also essential.

Q 22. How do you design a listening room for optimal acoustics?

Designing a listening room for optimal acoustics involves controlling reflections, minimizing resonances, and achieving a balanced frequency response. Think of it like sculpting sound – we want to shape the sound waves to ensure they reach your ears as accurately and cleanly as possible.

Room Shape and Dimensions: Avoid parallel walls where possible, since they produce strong standing waves (areas of constructive and destructive interference) and flutter echoes. Asymmetrical shapes and diffusers help scatter sound, reducing unwanted reflections. Room dimensions are often chosen using ratios that spread the room’s resonant frequencies evenly, so that no frequency is reinforced by several coinciding resonances. In practice, space limitations often constrain this.
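The resonant frequencies (modes) of an idealized rectangular room follow f = (c/2)·sqrt((nx/L)² + (ny/W)² + (nz/H)²), where nx, ny, and nz are whole numbers. The sketch below lists them so you can check whether chosen dimensions make modes coincide. It assumes rigid walls and a speed of sound of 343 m/s. The function name and the 5 m × 4 m × 2.5 m room are hypothetical.

```python
import itertools
import math

SPEED_OF_SOUND = 343.0  # m/s (assumption)

def room_modes(length_m, width_m, height_m, max_order=2):
    """Sorted (frequency_hz, (nx, ny, nz)) modes of an ideal rigid-walled rectangular room."""
    modes = []
    for nx, ny, nz in itertools.product(range(max_order + 1), repeat=3):
        if nx == ny == nz == 0:
            continue  # (0, 0, 0) is not a mode
        f = (SPEED_OF_SOUND / 2) * math.sqrt(
            (nx / length_m) ** 2 + (ny / width_m) ** 2 + (nz / height_m) ** 2)
        modes.append((f, (nx, ny, nz)))
    return sorted(modes)

modes = room_modes(5.0, 4.0, 2.5)
by_index = {index: f for f, index in modes}
```

In this example, the second length mode (2, 0, 0) and the first height mode (0, 0, 1) both land at 68.6 Hz, because the length is exactly twice the height. Dimension ratios are chosen to avoid this kind of coincidence.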

Acoustic Treatment: This is crucial. Absorption materials (like bass traps in corners and acoustic panels on walls) soak up sound energy, reducing reverberation and echoes. Diffusion panels scatter sound waves, preventing harsh reflections and creating a more natural and spacious sound. The amount and type of treatment depend on room size, shape, and intended use (mixing, mastering, critical listening).

Speaker Placement: Speaker placement is vital for imaging (the perceived location of sounds) and for minimizing unwanted interactions with room boundaries. Experimentation and precise measurement with room analysis software are typically required.

Listening Position: The listening position should be carefully chosen, often at the ‘sweet spot’ where the sound is most balanced and accurate. This is usually in an equilateral triangle relationship with the speakers.

For example, in a home studio, strategically placed bass traps in the corners will significantly reduce low-frequency buildup. Acoustic panels on the walls will further help control reflections and create a more balanced listening environment. This ensures a more accurate representation of the sound being produced, crucial for mixing and mastering.

Q 23. Describe the process of creating a sound design for a video game.

Creating sound design for a video game is a multifaceted process that requires a deep understanding of audio technology and game mechanics. The goal is to create an immersive and engaging soundscape that complements the game’s visuals and enhances the player’s experience.

Concept and Design: First, I’d collaborate with the game designers and narrative team to establish the overall sound direction and mood. This includes identifying key sonic elements that match the game’s genre, setting, and intended emotional impact (e.g., a dark fantasy game versus a playful arcade game).

Sound Creation: This involves using Digital Audio Workstations (DAWs) like Pro Tools, Logic Pro X, or Ableton Live to create the sounds associated with actions within the game: footsteps, weapon sounds, character voices, environmental ambience, and music. The process might involve recording sounds (including Foley), synthesizing sounds, or manipulating existing audio.

Sound Effects Design: For sound effects, we build multiple layers to add realism and depth. For example, a gunshot might combine a primary blast, reverb, a metallic clang, and a tail-off.

Sound Integration: The sounds are integrated within the game engine. We implement spatial audio (allowing sounds to appear to come from specific directions), audio triggers (that activate sounds based on game events), and sound mixing to fine-tune the soundscape for the specific platform.
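
Game engines and audio middleware ship their own attenuation curves and spatializers, so the following is only a minimal 2-D sketch of two basic ingredients of spatial audio: distance attenuation (here a plain inverse-distance law, about 6 dB quieter per doubling of distance beyond a reference distance) and constant-power stereo panning. The coordinate convention (listener facing +y, +x to the right) and the function names are assumptions for illustration.

```python
import math


def distance_gain(distance_m: float, ref_distance_m: float = 1.0) -> float:
    """Inverse-distance attenuation; full level inside the reference distance."""
    return ref_distance_m / max(distance_m, ref_distance_m)


def constant_power_pan(pan: float) -> tuple[float, float]:
    """pan in [-1, 1] (left to right) -> (left, right) gains with L^2 + R^2 = 1."""
    angle = (pan + 1.0) * math.pi / 4.0  # maps -1..1 onto 0..pi/2
    return math.cos(angle), math.sin(angle)


def spatialize(source_xy: tuple[float, float],
               listener_xy: tuple[float, float]) -> tuple[float, float]:
    """Stereo gains for a source, with the listener facing +y and +x to the right."""
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    dist = math.hypot(dx, dy)
    pan = 0.0 if dist == 0.0 else dx / dist  # sine of the source's azimuth
    left, right = constant_power_pan(pan)
    gain = distance_gain(dist)
    return gain * left, gain * right


if __name__ == "__main__":
    # Hypothetical footstep 4 m away, directly to the player's right.
    print(spatialize((4.0, 0.0), (0.0, 0.0)))
```

Constant-power panning keeps perceived loudness steady as a source moves across the stereo field. Stereo panning alone cannot tell front from back, which is why engines also offer HRTF-based binaural rendering and multichannel output.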

Music Design and Implementation: Music plays an important role in setting the mood, driving action, and guiding the narrative. Game music often requires the creation of dynamic tracks that adapt to the game’s events.
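
One common way to make music adapt is vertical layering: the score is split into stems that fade in as a game parameter, such as combat intensity, rises. The sketch below maps a hypothetical 0-to-1 intensity value to per-stem gains with simple linear fades; the stem names and thresholds are invented, and audio middleware such as FMOD or Wwise typically exposes parameter-driven controls for this instead of hand-written code.

```python
# Hypothetical stems: (name, intensity where the fade-in starts, intensity at full level)
STEMS = [
    ("ambient_pads", 0.0, 0.1),
    ("percussion", 0.3, 0.5),
    ("brass_and_choir", 0.7, 0.9),
]


def stem_gains(intensity: float, stems=STEMS) -> dict[str, float]:
    """Linear gain (0..1) for each stem at the given intensity."""
    x = min(max(intensity, 0.0), 1.0)  # clamp the game parameter
    gains = {}
    for name, start, full in stems:
        if x <= start:
            gains[name] = 0.0
        elif x >= full:
            gains[name] = 1.0
        else:
            gains[name] = (x - start) / (full - start)
    return gains


def smooth(current: float, target: float, rate: float = 0.05) -> float:
    """Move a gain part of the way toward its target each frame to avoid abrupt jumps."""
    return current + (target - current) * rate


if __name__ == "__main__":
    for level in (0.0, 0.4, 1.0):
        print(level, stem_gains(level))
```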

For instance, in a racing game, the sound design might involve realistic engine sounds, tire squeals, and crowd cheers, all strategically timed to intensify the action. In a narrative-driven RPG, ambience and musical scoring might take center stage to build a specific atmosphere and guide the player’s emotional experience.

Q 24. What are some strategies for improving the clarity and intelligibility of speech?

Improving speech clarity and intelligibility involves optimizing both the recording and reproduction processes. Think of it as removing any obstacles that muddy the message.

Microphone Technique: Using a high-quality microphone appropriate for the situation is vital. Proper microphone placement relative to the speaker’s mouth is also crucial to minimize unwanted background noise and achieve optimal signal-to-noise ratio.
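
The effect of placement on signal-to-noise ratio can be estimated with the inverse square law: for a point source in a free field, the direct sound level changes by 20·log10(d_old / d_new) dB when the distance changes. The sketch assumes the room's background noise reaching the microphone stays roughly constant as the microphone moves, so any gain in direct voice level is roughly a gain in SNR; reflections and the proximity effect of directional microphones make these numbers approximate. The levels in the example are hypothetical.

```python
import math


def direct_level_change_db(old_distance_m: float, new_distance_m: float) -> float:
    """Change in direct-sound level (dB) when moving from old to new distance.

    Inverse square law: intensity ~ 1 / r^2, so level changes by 20*log10(r_old / r_new).
    """
    return 20.0 * math.log10(old_distance_m / new_distance_m)


def snr_db(voice_db: float, noise_db: float) -> float:
    """Signal-to-noise ratio when both levels are expressed in dB at the microphone."""
    return voice_db - noise_db


if __name__ == "__main__":
    # Hypothetical levels: voice at 30 cm reads 70 dB SPL, room noise 40 dB SPL.
    gain = direct_level_change_db(0.30, 0.15)  # move the mic from 30 cm to 15 cm
    print(f"closer mic adds {gain:.1f} dB of voice")
    print(f"SNR: {snr_db(70.0, 40.0):.1f} dB -> {snr_db(70.0 + gain, 40.0):.1f} dB")
```

Halving the distance adds about 6 dB of direct voice, which is why a closer microphone often helps more than any amount of noise reduction afterwards.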

Room Acoustics: Controlling reflections and reverberation in the recording environment is essential. A treated room reduces echoes that can obscure speech.

EQ and Compression: Equalization (EQ) can boost or cut certain frequencies to enhance clarity. For instance, slightly boosting frequencies around 2-4 kHz can make speech crisper. Compression can control dynamic range and prevent peaks that could cause distortion.
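
As a sketch of the "slight boost around 2-4 kHz" idea, the code below builds a peaking-EQ biquad using the widely published formulas from Robert Bristow-Johnson's Audio EQ Cookbook, evaluates its response, and filters samples with it. The +3 dB at 3 kHz, Q = 1 and 48 kHz sample rate are illustrative assumptions, not recommended settings.

```python
import cmath
import math


def peaking_eq(fs: float, f0: float, gain_db: float, q: float) -> tuple[list[float], list[float]]:
    """Peaking-EQ biquad coefficients (b, a), normalised so a[0] == 1 (Audio EQ Cookbook form)."""
    amp = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    b = [1.0 + alpha * amp, -2.0 * cos_w0, 1.0 - alpha * amp]
    a = [1.0 + alpha / amp, -2.0 * cos_w0, 1.0 - alpha / amp]
    return [c / a[0] for c in b], [c / a[0] for c in a]


def response_db(b: list[float], a: list[float], fs: float, freq: float) -> float:
    """Magnitude response in dB at freq, evaluating H(z) on the unit circle."""
    z_inv = cmath.exp(-2j * math.pi * freq / fs)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return 20.0 * math.log10(abs(num / den))


def biquad(samples: list[float], b: list[float], a: list[float]) -> list[float]:
    """Filter samples with a Direct Form I biquad."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


if __name__ == "__main__":
    b, a = peaking_eq(fs=48000.0, f0=3000.0, gain_db=3.0, q=1.0)  # gentle presence boost
    for f in (100.0, 1000.0, 3000.0, 10000.0):
        print(f"{f:>7.0f} Hz: {response_db(b, a, 48000.0, f):+.2f} dB")
```

The response peaks at the chosen +3 dB at 3 kHz and returns to 0 dB well away from it, so low-frequency body and extreme highs are left largely untouched.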

De-essing: De-essing is a process to reduce harsh sibilant sounds (‘s’ and ‘sh’ sounds) that can be fatiguing to listen to.

Noise Reduction: Removing background noise is often vital for enhancing intelligibility. Software tools help to identify and reduce unwanted sounds while preserving the speech signal.
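
Dedicated noise-reduction software usually works in the frequency domain, estimating a noise profile and subtracting it. A much simpler, different technique often used alongside it is a noise gate, which mutes the signal during pauses when only background noise is present. The sketch below is a minimal gate with a peak envelope follower; the threshold and time constants are assumptions, and it only helps between phrases, not with noise underneath the speech.

```python
def noise_gate(samples: list[float], threshold_db: float = -50.0,
               release: float = 0.999, smoothing: float = 0.01) -> list[float]:
    """Simple downward gate: mutes the signal while its envelope is below threshold_db (dBFS).

    release   -- per-sample decay factor of the peak envelope (closer to 1 = slower release)
    smoothing -- fraction of the way the gain moves toward its target each sample (avoids clicks)
    """
    threshold = 10.0 ** (threshold_db / 20.0)  # dBFS -> linear amplitude
    envelope = 0.0
    gain = 0.0
    out = []
    for x in samples:
        level = abs(x)
        # Peak envelope: jumps up immediately, decays exponentially afterwards.
        envelope = level if level > envelope else envelope * release
        target = 1.0 if envelope >= threshold else 0.0
        gain += (target - gain) * smoothing
        out.append(x * gain)
    return out


if __name__ == "__main__":
    hiss = [0.001, -0.001] * 4    # about -60 dBFS: stays below the gate threshold
    speech = [0.5, -0.5] * 400    # stand-in for a loud passage
    processed = noise_gate(hiss + speech)
    print(max(abs(y) for y in processed[:8]), processed[-1])
```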

Audio Restoration: If working with older or damaged recordings, audio restoration techniques such as click removal and crackle reduction can significantly improve speech clarity.

For example, in a podcast, using a directional microphone with a pop filter, recording in a treated room, and then applying gentle EQ and compression in post-production will result in significantly clearer and more intelligible audio.

Q 25. Explain the differences between near-field, mid-field, and far-field monitoring.

Near-field, mid-field, and far-field monitoring refer to the distance between the listener and the loudspeakers. Each distance has its advantages and disadvantages, making them suitable for different applications in audio production.

Near-field Monitoring: This involves placing the monitors very close to the listener (typically within arm’s reach). The benefit is a more accurate representation of the sound, as the direct sound from the speakers dominates the room's reflections at such close range. This is ideal for mixing and detailed editing, but near-field monitors are usually small and have limited maximum sound pressure level.

Mid-field Monitoring: Loudspeakers are placed further away from the listener (several feet), providing a more balanced soundstage, while still offering reasonable accuracy. This is a good compromise between accuracy and volume, often used in larger studios.

Far-field Monitoring: This involves listening from a greater distance. It is used primarily to evaluate the overall sound of a mix in a less controlled environment; the sound is significantly influenced by room acoustics and is typically a less accurate representation of the actual signal.

Imagine listening to music: near-field monitoring is like having the speakers right next to you; you hear the nuances, but at lower volumes. Mid-field is like sitting several feet from the speakers; you still hear the details, but at higher volumes. Far-field is like being at a concert; you get an impression of the overall sound but lose detail.
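
The claim that room acoustics matter less up close can be quantified with the critical distance, the distance at which direct and reverberant sound are equally loud. A common Sabine-based approximation is D_c ≈ 0.057·√(Q·V / T60) metres, with Q the speaker's directivity factor, V the room volume in m³ and T60 the reverberation time in seconds. Because the direct sound falls about 6 dB per doubling of distance while the reverberant level stays roughly constant, the direct-to-reverberant ratio is about 20·log10(D_c / r). The room values below are hypothetical.

```python
import math


def critical_distance_m(volume_m3: float, rt60_s: float, directivity_q: float = 1.0) -> float:
    """Approximate distance where direct and reverberant levels are equal (diffuse-field model)."""
    return 0.057 * math.sqrt(directivity_q * volume_m3 / rt60_s)


def direct_to_reverberant_db(distance_m: float, critical_m: float) -> float:
    """Direct level falls as 1/r^2 while reverberant level is ~constant: DRR = 20*log10(Dc / r)."""
    return 20.0 * math.log10(critical_m / distance_m)


if __name__ == "__main__":
    # Hypothetical control room: 60 m^3, RT60 of 0.3 s, monitor directivity Q = 4.
    dc = critical_distance_m(60.0, 0.3, 4.0)
    for label, r in (("near-field", 1.0), ("mid-field", 2.0), ("far-field", 4.0)):
        print(f"{label} at {r} m: {direct_to_reverberant_db(r, dc):+.1f} dB direct-to-reverberant")
```

Inside the critical distance the listener hears mostly the speakers; outside it, mostly the room, which matches the trade-off between near-field and far-field listening described above.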

Q 26. How do you calibrate studio monitors for accurate sound reproduction?

Calibrating studio monitors is crucial to ensuring accurate sound reproduction. It involves measuring how the monitors actually sound at the listening position and adjusting them toward a known reference response. This helps to minimize coloration and bias, allowing for more objective mixing and mastering decisions.

Room Correction Software: Tools such as Sonarworks Reference, Dirac Live, or Room EQ Wizard (REW) measure your room’s acoustic response and generate filters to compensate for frequency imbalances and other room-related issues. They are used in conjunction with a calibrated measurement microphone.

Calibration Microphone: A calibrated measurement microphone is essential for precise measurements of the frequency response. These microphones need regular calibration to ensure that the readings are still accurate.

Measurement and Analysis: The software measures the frequency response in multiple locations within the listening area, identifying peaks and dips that need to be addressed.

Filter Application: Based on the measurements, the software generates filters that are then applied to the monitors (either via the software itself or through a dedicated hardware unit). This effectively compensates for the room’s shortcomings.
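
The measure-and-correct loop can be sketched as follows: average the readings from several microphone positions, compare them with a target, and derive a correction per frequency. This is a hypothetical simplification; tools such as Sonarworks Reference, Dirac Live and REW also handle smoothing, phase, target curves and the actual filter design. The boost and cut limits are assumed values.

```python
import math


def power_average_db(levels_db: list[float]) -> float:
    """Average several dB readings as acoustic power rather than as plain numbers."""
    mean_power = sum(10.0 ** (level / 10.0) for level in levels_db) / len(levels_db)
    return 10.0 * math.log10(mean_power)


def correction_curve(measurements: dict[float, list[float]], target_db: float = 0.0,
                     max_boost_db: float = 3.0, max_cut_db: float = 12.0) -> dict[float, float]:
    """Per-frequency correction (dB) that moves the averaged response toward a flat target.

    Boosts are capped tightly: deep dips are often caused by reflections cancelling the
    direct sound, and extra gain mostly wastes amplifier headroom without filling them.
    """
    curve = {}
    for freq_hz in sorted(measurements):
        error_db = target_db - power_average_db(measurements[freq_hz])
        curve[freq_hz] = max(-max_cut_db, min(max_boost_db, error_db))
    return curve


if __name__ == "__main__":
    # Hypothetical readings (dB relative to target) from three mic positions.
    readings = {
        63.0: [7.0, 5.0, 6.0],        # bass build-up: cut
        125.0: [-11.0, -9.0, -10.0],  # cancellation dip: only a small boost
        1000.0: [0.5, -0.5, 0.0],
    }
    for freq, corr in correction_curve(readings).items():
        print(f"{freq:>7.0f} Hz: {corr:+.1f} dB")
```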

Listening Tests: After calibration, it is crucial to do subjective listening tests to confirm the results and make fine adjustments.

Without calibration, your mixes might sound good in your studio but terrible elsewhere. Calibration ensures consistency and accuracy throughout the mix and mastering process.

Q 27. What are some examples of different types of audio effects and their uses?

Audio effects are tools used to modify or enhance audio signals. They can transform a sound in countless ways, adding creative flair or correcting flaws. They’re like the spices and seasonings of audio.

Reverb: Simulates the natural reflections of sound in a space. It adds depth and ambience, making sounds seem larger than life (e.g., a hall reverb on vocals). There are many types, such as plate reverb, spring reverb, and algorithmic reverb. Each produces a different sonic character.

Delay: Creates echoes by repeating a sound after a specific amount of time. It can be used creatively to add rhythmic interest or used subtly to thicken sounds.
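
A minimal sketch of a feedback delay line, plus the usual conversion from tempo to a synced delay time (a quarter note lasts 60000 / BPM milliseconds, assuming the beat is a quarter note). The function names and default settings are assumptions; commercial delays add filtering, modulation and stereo routing.

```python
def delay_ms(bpm: float, note_value: float = 1 / 4) -> float:
    """Tempo-synced delay time; note_value is a fraction of a whole note (1/4 = quarter note).

    Assumes the beat is a quarter note, so a whole note lasts 4 * 60000 / bpm ms.
    """
    return 4 * 60000.0 / bpm * note_value


def feedback_delay(samples: list[float], delay_samples: int,
                   feedback: float = 0.4, mix: float = 0.3) -> list[float]:
    """Mono feedback delay using a circular buffer; feedback < 1 keeps repeats decaying."""
    if delay_samples < 1:
        raise ValueError("delay must be at least one sample")
    buffer = [0.0] * delay_samples
    index = 0
    out = []
    for x in samples:
        delayed = buffer[index]                 # sample written delay_samples ago
        buffer[index] = x + delayed * feedback  # feed part of the echo back in
        out.append((1.0 - mix) * x + mix * delayed)
        index = (index + 1) % delay_samples
    return out


if __name__ == "__main__":
    print(delay_ms(120))         # quarter note at 120 BPM
    print(delay_ms(120, 1 / 8))  # eighth note
    print(feedback_delay([1.0] + [0.0] * 9, delay_samples=3, feedback=0.5, mix=0.5))
```

Feeding an impulse through the delay shows each repeat arriving `delay_samples` later at half the level of the previous one, which is the decaying echo train described above.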

Chorus: Creates a thicker, richer sound by slightly detuning and delaying multiple copies of the original signal. This often adds a ‘wider’ sound to instruments.

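Flanger: A modulation effect closely related to phasing that creates a whooshing or jet-plane-like sound by mixing the signal with a copy of itself delayed by a very short, slowly varying amount (roughly 1 to 10 ms). The resulting comb filter sweeps up and down the spectrum. This effect is often used to add movement to a sound.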
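
The comb filter behind flanging is easy to check numerically. The sketch below assumes the simplest case, the dry signal summed with an equal-level delayed copy and no feedback, and lists where the notches fall for a given delay; real flangers sweep the delay with an LFO and often add feedback.

```python
def flanger_notches_hz(delay_ms: float, max_hz: float = 20000.0) -> list[float]:
    """Notch frequencies when a signal is summed with an equal-level copy delayed by delay_ms.

    y(t) = x(t) + x(t - tau) has response H(f) = 1 + exp(-j*2*pi*f*tau), which is zero
    where f * tau is an odd multiple of 1/2, i.e. f_k = (2k + 1) / (2 * tau).
    """
    tau = delay_ms / 1000.0
    notches = []
    k = 0
    while (2 * k + 1) / (2 * tau) <= max_hz:
        notches.append((2 * k + 1) / (2 * tau))
        k += 1
    return notches


if __name__ == "__main__":
    # Sweeping the delay moves every notch at once, producing the characteristic "whoosh".
    for d in (1.0, 2.0, 5.0):
        print(f"{d} ms: notches below 3 kHz at {[round(f) for f in flanger_notches_hz(d, 3000.0)]} Hz")
```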

EQ (Equalization): Adjusts the balance of frequencies in a sound. It can be used to boost certain frequencies (making things sound brighter or fuller) or cut others (reducing muddiness or harshness).

Compression: Reduces the dynamic range of a signal. It turns down the loud parts; once makeup gain is applied, the quiet parts end up relatively louder, resulting in a more even level and often a punchier sound.
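
The level relationship can be sketched with a static hard-knee gain computer: above the threshold, every `ratio` dB of input increase produces only 1 dB of output increase. The parameter values are illustrative, and a real compressor also smooths the gain with attack and release times (and often uses a soft knee).

```python
def compressor_gain_db(input_db: float, threshold_db: float = -18.0,
                       ratio: float = 4.0, makeup_db: float = 0.0) -> float:
    """Static hard-knee gain computer: gain (dB) to apply for a given input level (dBFS)."""
    if input_db <= threshold_db:
        output_db = input_db  # below threshold: untouched
    else:
        # above threshold: every `ratio` dB of input yields 1 dB of output
        output_db = threshold_db + (input_db - threshold_db) / ratio
    return output_db - input_db + makeup_db


if __name__ == "__main__":
    for level in (-30.0, -18.0, -6.0):
        print(level, compressor_gain_db(level, makeup_db=6.0))
```

With 6 dB of makeup gain, a -30 dBFS passage comes out 6 dB louder while a -6 dBFS peak comes out 3 dB quieter, so the gap between them shrinks from 24 dB to 15 dB.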

Distortion: Adds harmonic overtones to a sound, creating a more gritty or saturated texture. This can range from subtle warmth to heavy fuzz.
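
A common way to implement gentle saturation is a waveshaper based on the hyperbolic tangent. Because tanh is an odd (symmetric) function, a pure sine wave passed through it gains only odd harmonics; asymmetric curves add even harmonics as well. The drive value is an illustrative assumption, and practical implementations usually oversample to limit aliasing from the new harmonics.

```python
import math


def soft_clip(samples: list[float], drive: float = 4.0) -> list[float]:
    """tanh waveshaper; dividing by tanh(drive) maps a full-scale input of 1.0 back to 1.0."""
    norm = math.tanh(drive)
    return [math.tanh(drive * x) / norm for x in samples]


if __name__ == "__main__":
    # Quiet samples are lifted much more than loud ones, which are squashed toward 1.0.
    print(soft_clip([0.1, 0.5, 1.0], drive=3.0))
```

Raising `drive` pushes more of the waveform into the flat part of the curve, moving the sound from subtle warmth toward heavy saturation.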

For instance, adding reverb to a vocal track can make it sound more spacious and natural. Applying compression can make a drum track hit harder and be more consistent in volume. Distortion on a guitar can add a signature sound and aggression.

Q 28. Describe your experience with different audio editing software.

My experience encompasses a wide range of audio editing software, each with its strengths and weaknesses. I’m proficient in several industry-standard DAWs (Digital Audio Workstations).

Pro Tools: A mainstay in professional audio production, especially in film and television post-production. It’s known for its stability, extensive features, and industry-standard workflows.

Logic Pro X: A powerful and versatile DAW known for its intuitive interface and vast array of built-in instruments and effects. It is a popular choice among musicians and music producers.

Ableton Live: Often favored for electronic music production and live performance due to its Session View, which allows for flexible arrangement and improvisation. It’s known for its loop-based workflow.

Cubase: Another established DAW often used in various music genres. It is known for its extensive MIDI capabilities.

Beyond DAWs, I’m also experienced with audio editing tools such as Audacity (a free, open-source option great for basic editing) and specialized tools for mastering, restoration, and sound design. My choice of software depends on the project’s requirements and on personal preference, since each DAW’s strengths and workflows suit some projects better than others.

Key Topics to Learn for Audio Engineering and Acoustics Knowledge Interview

- Fundamentals of Acoustics: Understanding sound waves, frequency, amplitude, wavelength, and their relationship to human perception. Practical application: Designing acoustically treated rooms for optimal recording and mixing.

- Signal Processing: Digital Signal Processing (DSP) concepts, including filtering, equalization, compression, and effects processing. Practical application: Analyzing and manipulating audio signals in a Digital Audio Workstation (DAW).

- Microphones and Recording Techniques: Different microphone types (dynamic, condenser, ribbon), polar patterns, microphone placement techniques, and recording strategies for various instruments and vocals. Practical application: Capturing high-quality audio recordings for various projects.

- Audio Mixing and Mastering: Principles of mixing, including equalization, compression, reverb, delay, and other effects. Mastering techniques for optimizing loudness and dynamic range. Practical application: Creating a professional-sounding final mix for music production, podcasts, or film.

- Room Acoustics and Treatment: Understanding the impact of room size and shape on sound quality. Methods for acoustical treatment, including absorption, diffusion, and isolation. Practical application: Designing or optimizing listening rooms, recording studios, or performance spaces.

- Loudspeaker Systems and Design: Principles of loudspeaker operation, including driver selection, crossover networks, and cabinet design. Practical application: Designing or specifying sound systems for live events, installations, or home theaters.

- Digital Audio Workstations (DAWs): Proficiency in at least one DAW (Pro Tools, Logic Pro X, Ableton Live, etc.). Practical application: Demonstrate your ability to record, edit, mix, and master audio projects.

- Audio for Media: Understanding the specific requirements for audio in film, television, video games, and other media. Practical application: Designing soundtracks, creating sound effects, and integrating audio into multimedia projects.

Next Steps

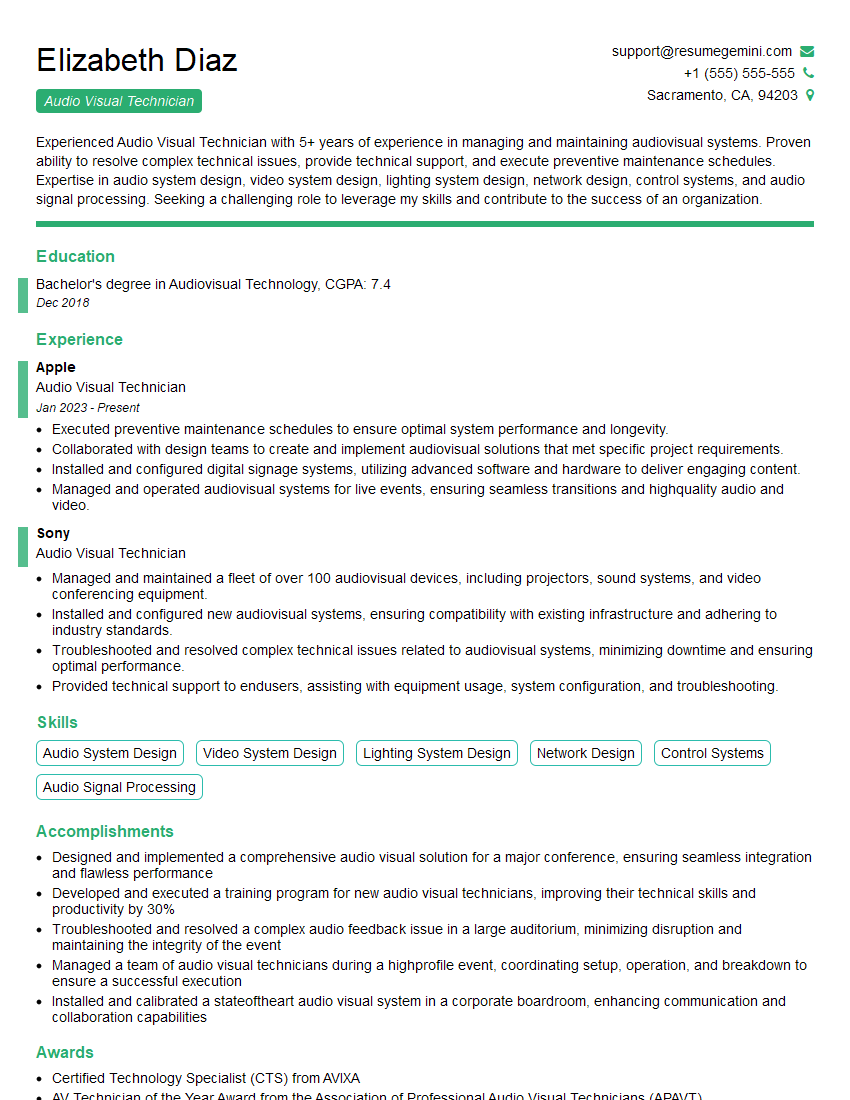

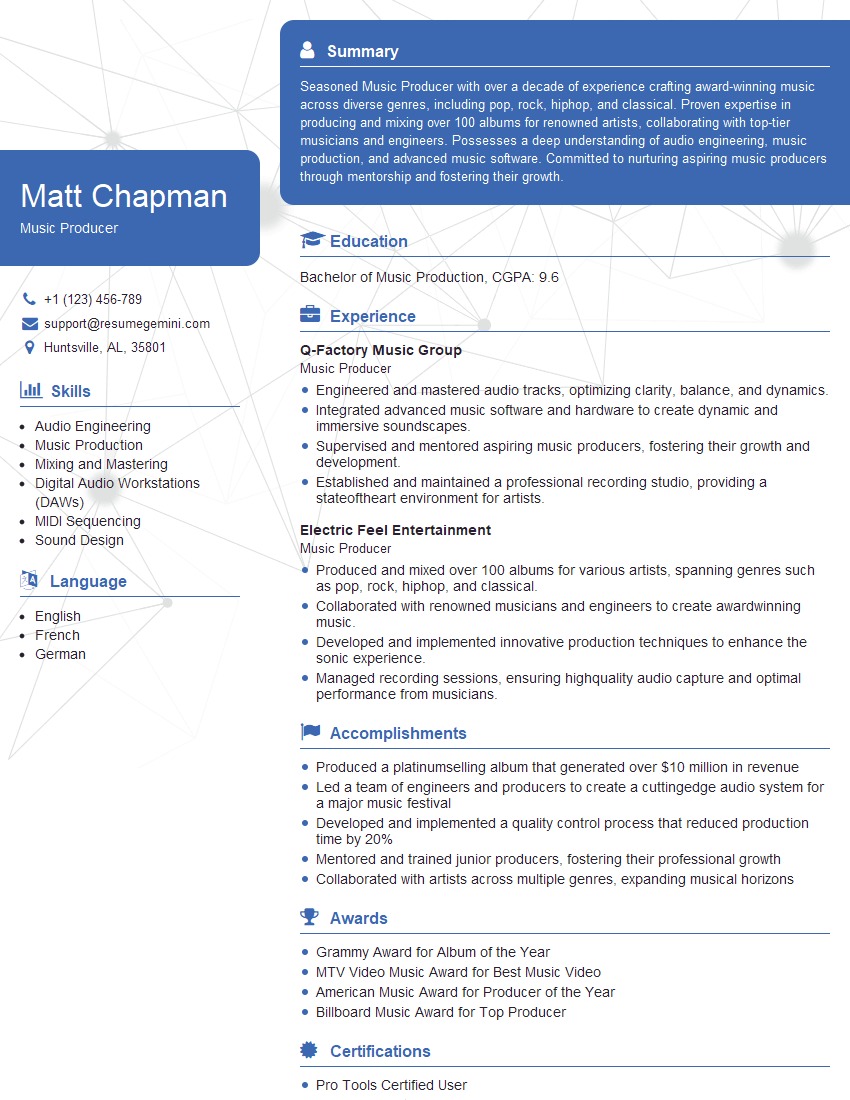

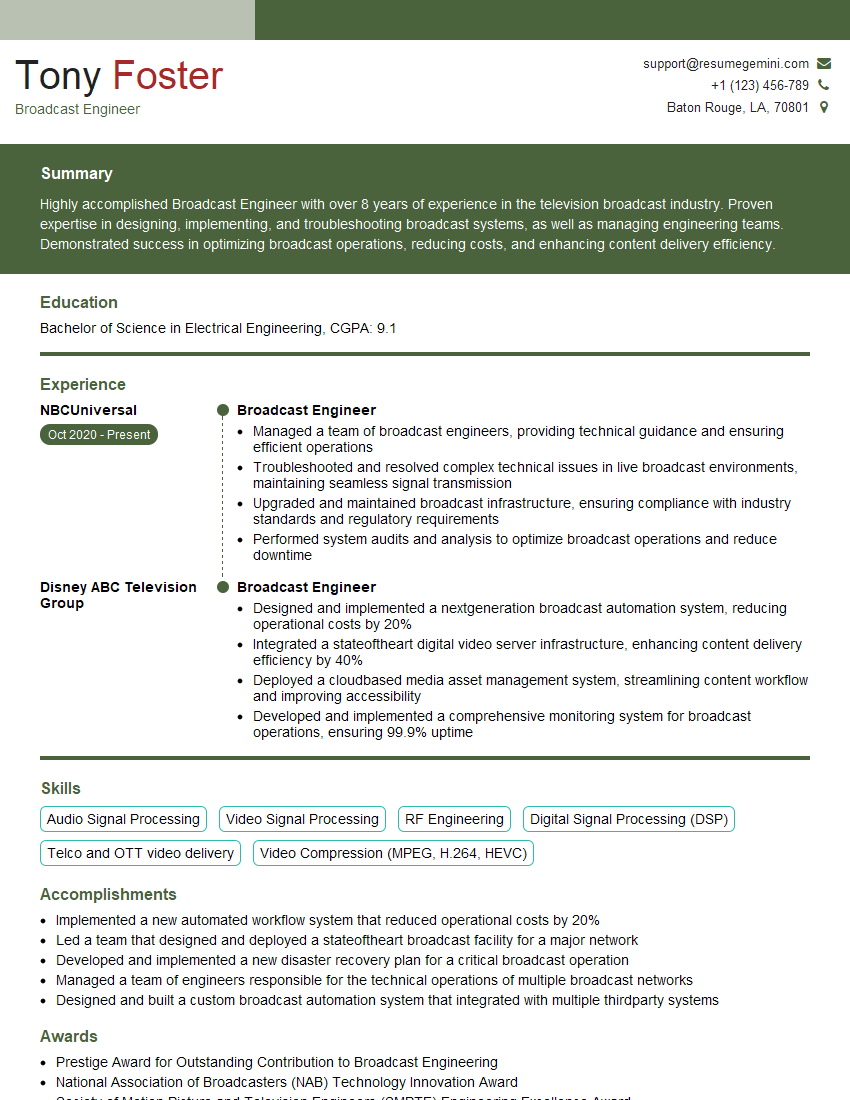

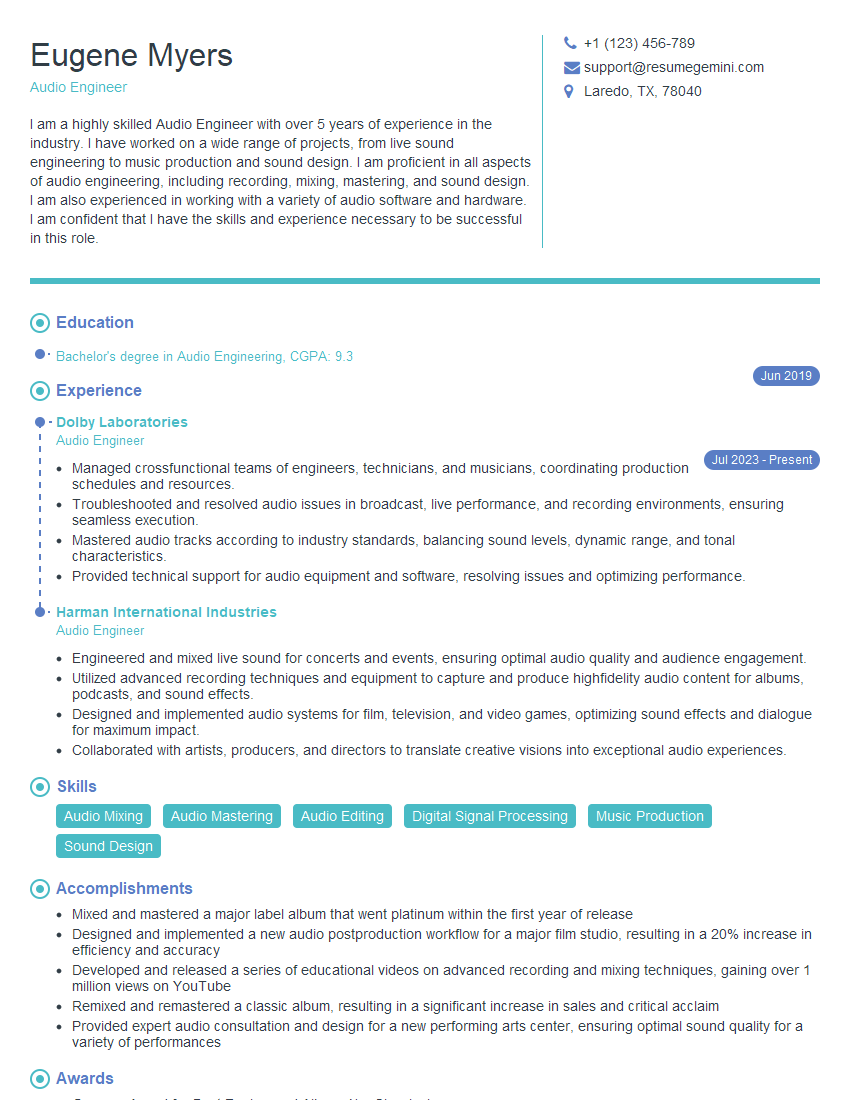

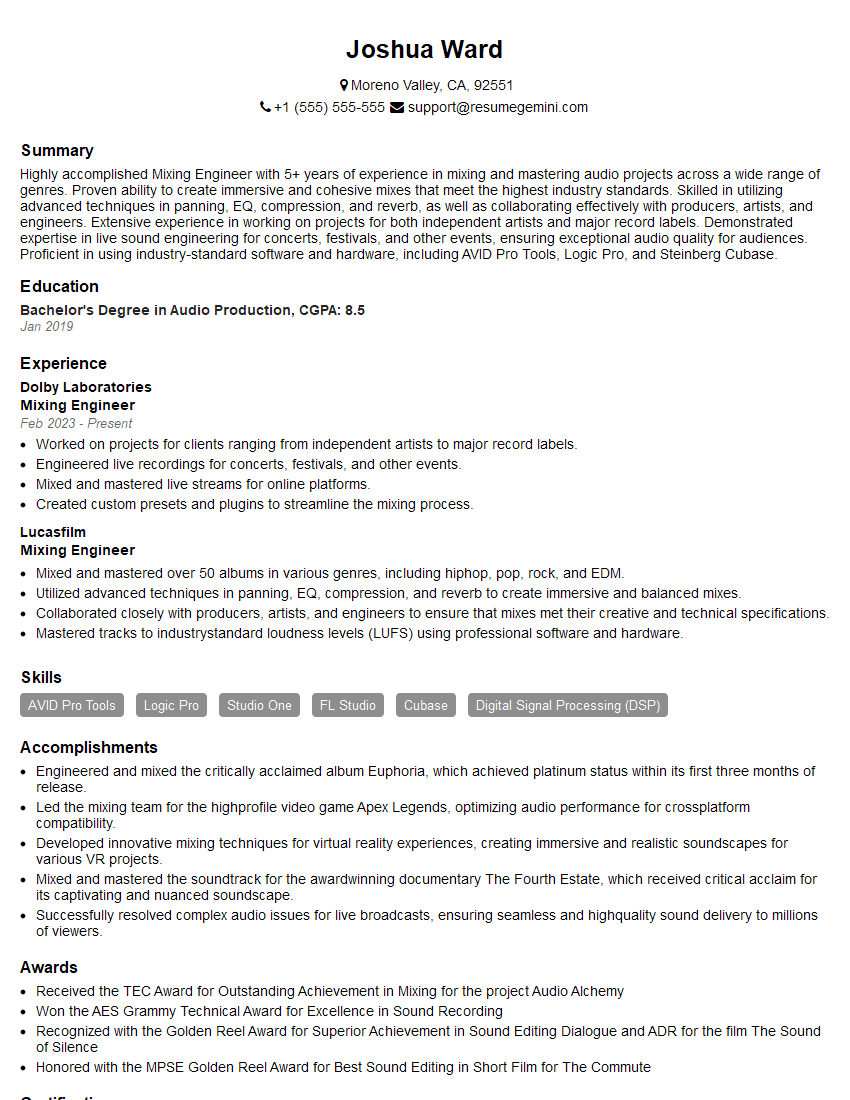

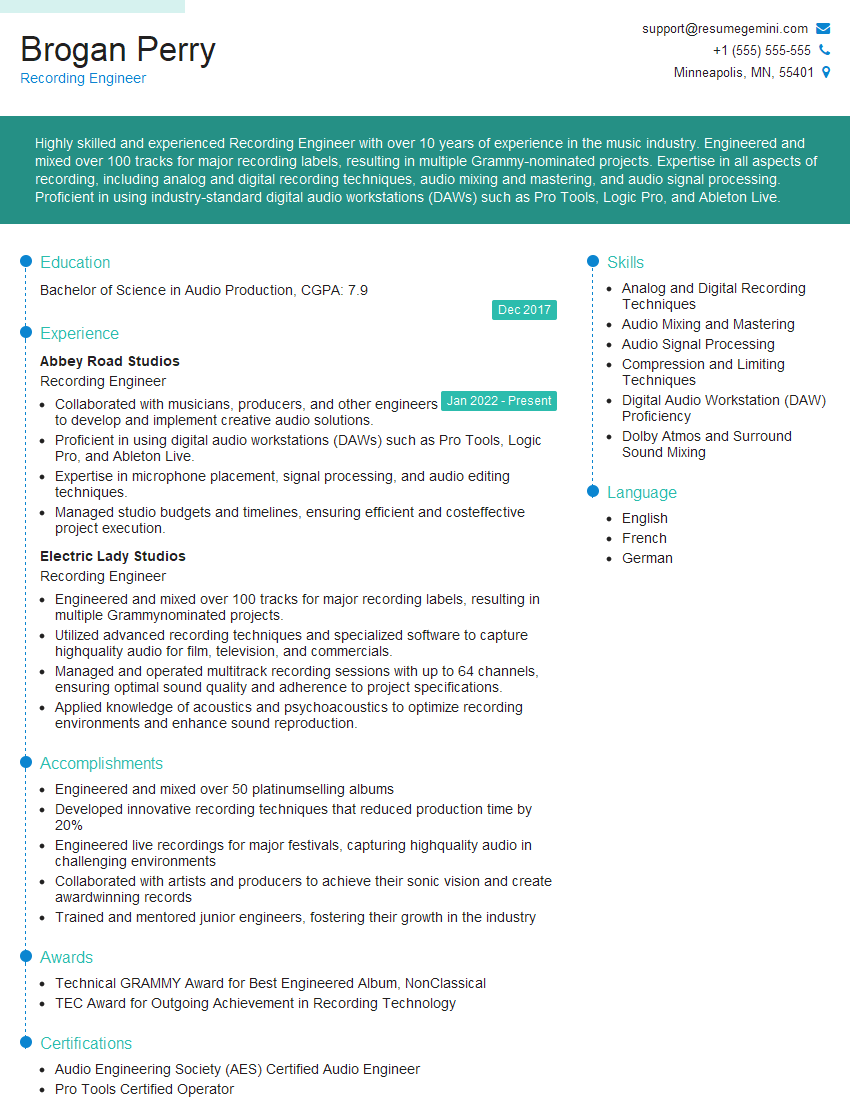

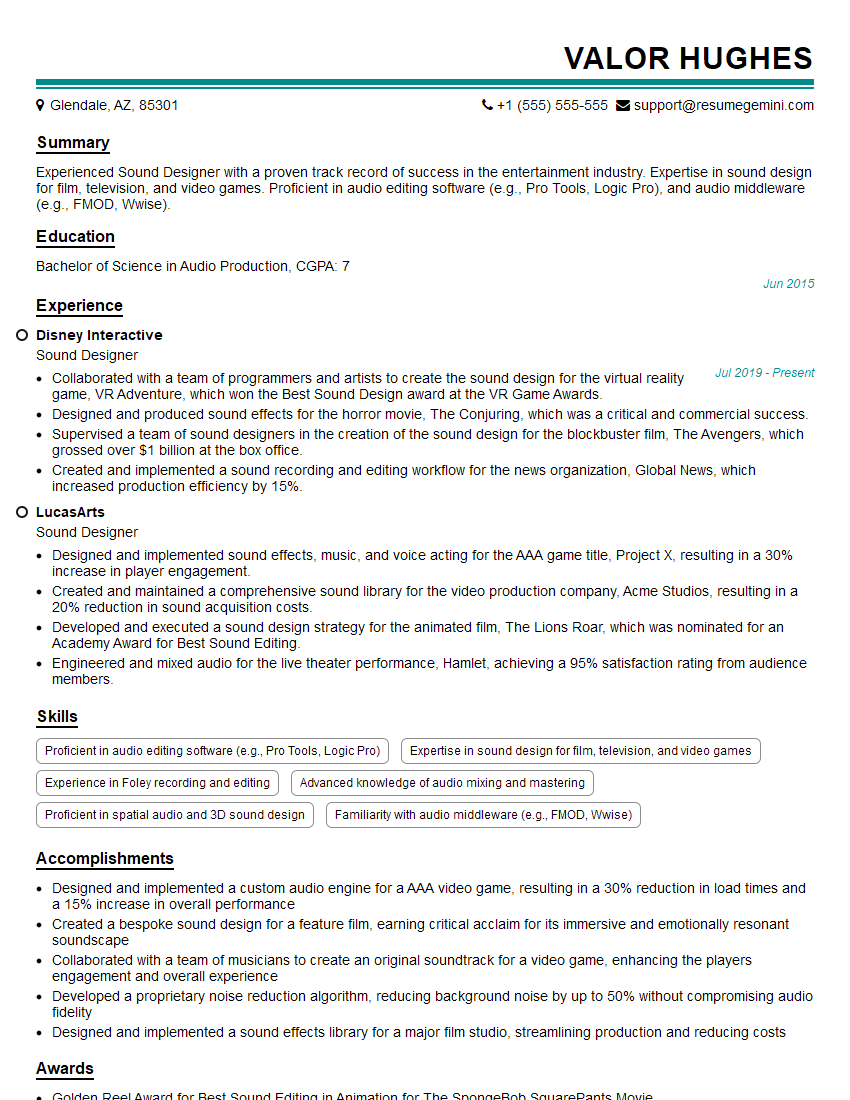

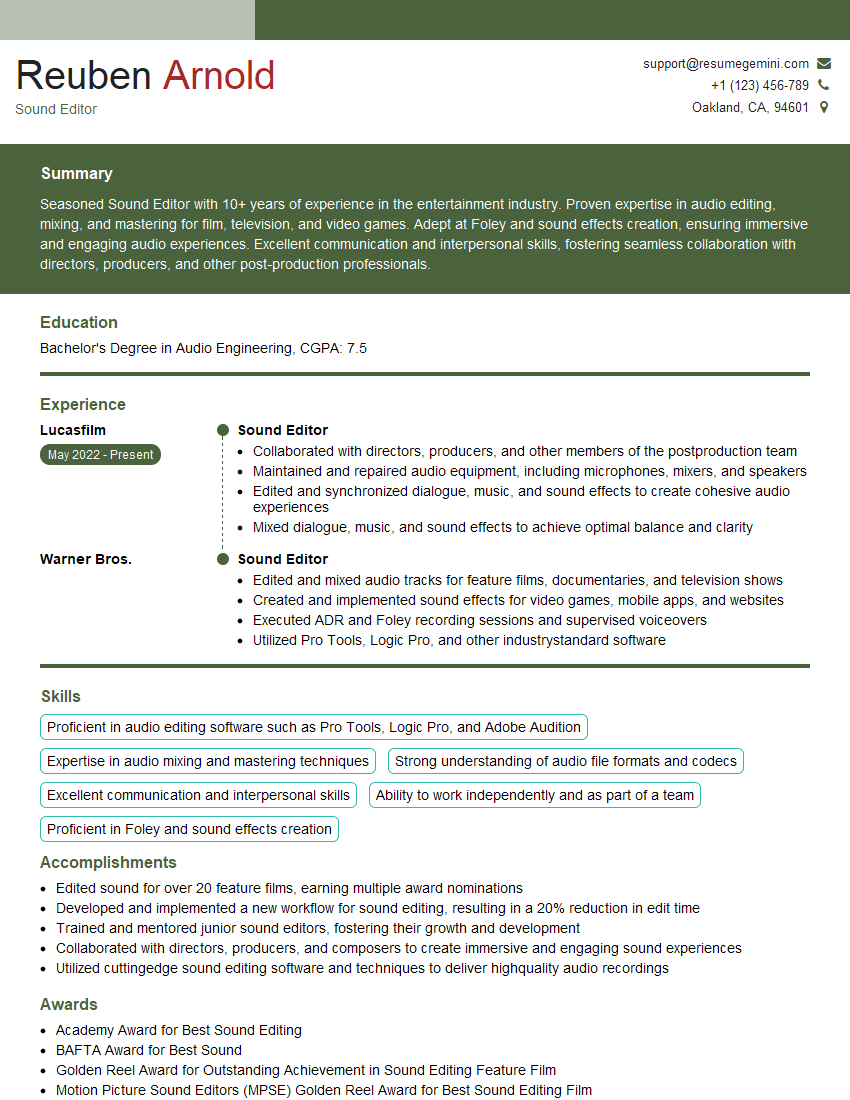

Mastering Audio Engineering and Acoustics Knowledge is crucial for career advancement in this exciting field. A strong understanding of these principles will significantly increase your chances of securing your dream role. To boost your job prospects, creating an ATS-friendly resume is essential. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. We provide examples of resumes tailored to Audio Engineering and Acoustics Knowledge to guide you. Let ResumeGemini help you make a powerful first impression.
