Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential System-Level Design and Architecture interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in System-Level Design and Architecture Interview

Q 1. Explain the difference between system architecture and system design.

System architecture and system design are closely related but distinct concepts. Think of architecture as the high-level blueprint of a building, outlining its major components and their relationships, while design is the detailed plan for constructing each component.

System architecture focuses on the overall structure, defining the key components (modules, services, databases), their interactions, and the technology choices (e.g., programming languages, platforms). It’s about the ‘what’ and ‘why’ of the system. A good architecture ensures the system meets its functional and non-functional requirements (scalability, security, maintainability).

System design, on the other hand, delves into the specifics of each component. It outlines the algorithms, data structures, and interfaces. It’s concerned with the ‘how’ – the detailed implementation of each architectural element. For example, the architecture might specify a microservice-based system, while the design would detail the individual microservices’ APIs, internal logic, and database interactions.

In short: Architecture is about the big picture; design is about the details.

Q 2. Describe your experience with different architectural patterns (e.g., microservices, layered architecture).

I have extensive experience with various architectural patterns. I’ve worked with:

- Microservices Architecture: I led the development of a large e-commerce platform using a microservices approach. This involved decomposing the system into independently deployable services (e.g., catalog service, order service, payment service), each responsible for a specific business function. This allowed for independent scaling, faster development cycles, and improved fault isolation. We used Kubernetes for orchestration and service discovery, and an API gateway for routing external requests to the right service.

- Layered Architecture (n-tier): In a previous project building a CRM system, we adopted a layered architecture with distinct layers for presentation (UI), business logic, data access, and database. This provided clear separation of concerns and enhanced maintainability. Changes in the database layer, for instance, had minimal impact on the UI.

- Event-Driven Architecture: I’ve used event-driven architectures to build real-time data processing pipelines. This involved services publishing events to a message broker or event streaming platform (like Kafka), and other services subscribing to these events and reacting accordingly. This enabled asynchronous communication and loose coupling between services.

Each architecture has its own strengths and weaknesses. Choosing the right one depends on the project’s specific needs and constraints.

Q 3. How do you choose the right architecture for a given project?

Selecting the appropriate architecture is a crucial decision impacting the entire project lifecycle. My approach involves a thorough analysis considering several factors:

- Project Requirements: Understanding functional requirements (what the system should do) and non-functional requirements (scalability, performance, security, maintainability) is paramount. A system processing massive amounts of data needs a different architecture than a small, internal tool.

- Scalability Needs: Will the system need to handle a large number of users or data volume? Microservices or event-driven architectures often offer better scalability compared to monolithic systems.

- Team Expertise: The team’s experience and skills influence architectural choices. Choosing a complex architecture without the necessary expertise can lead to project failure.

- Technology Landscape: Existing infrastructure, available tools, and technology trends are important considerations. Leveraging existing infrastructure can reduce costs and development time.

- Cost and Time Constraints: Microservices can be more complex to implement initially but offer long-term benefits. A simpler architecture might be more suitable for projects with tight deadlines and budgets.

I often use architecture decision records (ADRs) to document the rationale behind architecture choices, promoting transparency and making future modifications easier.

Q 4. Explain the concept of scalability and how you would design for it.

Scalability refers to a system’s ability to handle increasing workloads (e.g., more users, more data) without significant performance degradation. Designing for scalability involves several key strategies:

- Horizontal Scaling: Adding more servers to distribute the workload. This is generally preferred over vertical scaling (adding resources to a single server), which is capped by the largest available machine and leaves a single point of failure.

- Load Balancing: Distributing incoming requests across multiple servers to prevent overload on any single server. This can involve techniques like round-robin, least connections, or IP hashing.

- Database Scaling: Employing techniques like sharding (partitioning the database across multiple servers) or read replicas to improve database performance.

- Caching: Storing frequently accessed data in memory or a dedicated cache server to reduce database load and improve response times. A CDN (Content Delivery Network) helps in the same way by serving static assets from edge locations close to users.

- Asynchronous Processing: Processing tasks asynchronously (e.g., using message queues) allows for better resource utilization and improved responsiveness.

For example, in designing a social media platform, we’d use horizontal scaling to handle peak user loads during events. Load balancers would distribute incoming requests, and caching would improve performance for frequently accessed user profiles. The database would likely be sharded to manage large amounts of user data.
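The two load-balancing strategies named above, round-robin and least connections, can be sketched in a few lines. This is a minimal in-process illustration, not how a production load balancer is built; the `Server`, `RoundRobinBalancer`, and `LeastConnectionsBalancer` names are hypothetical.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical backend server tracked by the balancer."""
    name: str
    active_connections: int = 0


class RoundRobinBalancer:
    """Hands out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(list(servers))

    def pick(self) -> Server:
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Picks the server currently handling the fewest connections."""

    def __init__(self, servers):
        self._servers = list(servers)

    def pick(self) -> Server:
        # On a tie, min() returns the first server with the lowest count.
        return min(self._servers, key=lambda s: s.active_connections)
```

Round-robin ignores how busy each server is, which is fine when requests cost roughly the same; least connections adapts better when some requests are long-lived.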

Q 5. Describe your experience with various design patterns (e.g., Singleton, Factory, Observer).

I’m familiar with a wide range of design patterns, and my experience includes:

- Singleton: Used when only one instance of a class should exist (e.g., a database connection pool). Ensures controlled access and prevents resource conflicts. However, overuse can hinder testability.

- Factory: Used to create objects without specifying their concrete classes. Provides flexibility and allows for easy addition of new object types without modifying existing code. This is essential for creating objects whose creation is complex or varies depending on conditions.

- Observer (closely related to Publish-Subscribe): Used to define a one-to-many dependency between objects. When one object changes state, all its dependents are notified and updated automatically. This promotes loose coupling and facilitates event-driven architectures. A good example would be a stock ticker system where various clients subscribe to price updates. In publish-subscribe, a broker usually sits between publishers and subscribers, so the two sides do not reference each other directly.

- Strategy: Used when algorithms vary, providing interchangeable algorithms. This promotes flexibility and allows for easy swapping of algorithms without modifying the core code.

- Decorator: Used to dynamically add responsibilities to an object without altering its structure. It’s commonly used to add functionality like logging or security to existing objects.

The choice of design pattern depends on the specific problem and context. Understanding their trade-offs and applicability is crucial for effective system design.
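As an illustration of the Observer pattern, here is a minimal sketch of the stock ticker example mentioned above. The `StockTicker` class and its method names are assumptions made for this example, not part of any library.

```python
from typing import Callable, List

# A listener receives the ticker symbol and the new price.
PriceListener = Callable[[str, float], None]


class StockTicker:
    """Subject: keeps a list of listeners and notifies them of price changes."""

    def __init__(self) -> None:
        self._listeners: List[PriceListener] = []

    def subscribe(self, listener: PriceListener) -> None:
        self._listeners.append(listener)

    def unsubscribe(self, listener: PriceListener) -> None:
        self._listeners.remove(listener)

    def publish(self, symbol: str, price: float) -> None:
        # Iterate over a copy so listeners may unsubscribe while being notified.
        for listener in list(self._listeners):
            listener(symbol, price)
```

The ticker knows nothing about what its listeners do with a price, which is the loose coupling the pattern is meant to provide.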

Q 6. How do you handle trade-offs between different design considerations (e.g., performance, security, maintainability)?

Handling trade-offs between design considerations like performance, security, and maintainability is a constant challenge in system design. It requires careful prioritization and compromise. My approach involves:

- Prioritization: Clearly defining the most critical requirements for the system. For example, a high-frequency trading system prioritizes performance over other factors, while a banking system prioritizes security.

- Cost-Benefit Analysis: Evaluating the costs and benefits of different design choices. For instance, adding extra security features might improve security but reduce performance. It’s important to quantify the impact of each choice.

- Iterative Design: Starting with a simpler design and iteratively improving it based on testing and feedback. This allows for early identification and mitigation of trade-offs.

- Compromise and Negotiation: Finding a balance between competing requirements. This often involves making difficult decisions, documenting the trade-offs, and ensuring stakeholder buy-in.

- Monitoring and Evaluation: Continuously monitoring system performance and security to identify and address potential issues caused by design trade-offs.

For instance, in designing a web application, we might choose a slightly less performant database solution if it offers better security features and is easier to maintain.

Q 7. Explain your approach to system decomposition.

System decomposition is the process of breaking down a complex system into smaller, manageable modules or components. My approach is guided by several principles:

- Separation of Concerns: Each module should have a specific responsibility, minimizing dependencies and improving maintainability. This often follows architectural patterns like layered architecture or microservices.

- Functional Decomposition: Breaking down the system based on its functions or features. Each module implements a specific function, making the system easier to understand and modify.

- Data-Driven Decomposition: Organizing modules around data entities. This can be beneficial when dealing with large datasets.

- Modular Design: Designing modules with well-defined interfaces, promoting loose coupling and allowing for independent development and testing.

- Dependency Management: Carefully managing dependencies between modules to minimize impact of changes in one module on others.

I often use diagrams (e.g., UML diagrams) to visualize the system decomposition and relationships between modules. The choice of decomposition strategy depends on the system’s complexity, requirements, and development environment.
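Dependency management between modules can also be checked mechanically. The sketch below uses Python's standard `graphlib` module (Python 3.9+) to order modules so that each one comes after the modules it depends on, and to reject circular dependencies. The `build_order` helper and the module names in the usage note are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter


def build_order(dependencies):
    """Order modules so each appears after everything it depends on.

    `dependencies` maps a module name to the set of modules it depends on.
    Raises ValueError if the dependencies contain a cycle.
    """
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as exc:
        # exc.args[1] holds the list of nodes that form the cycle.
        raise ValueError(f"circular dependency: {exc.args[1]}") from exc
```

For example, `build_order({"ui": {"orders"}, "orders": {"billing"}, "billing": set()})` places `billing` before `orders` and `orders` before `ui`, while a pair of modules that depend on each other raises `ValueError`.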

Q 8. How do you ensure system security in your designs?

System security is paramount in any design. It’s not a bolt-on feature; it’s woven into the fabric of the system from the ground up. My approach involves a multi-layered strategy incorporating several key principles:

- Defense in Depth: Employing multiple security controls at different levels to prevent a single point of failure. This includes firewalls, intrusion detection systems, access control lists, and regular security audits. Think of it like a castle with multiple walls and guards – even if one layer is breached, others remain to protect the core.

- Least Privilege Principle: Granting users only the minimum access rights necessary to perform their tasks. This limits the damage a compromised account can inflict. For example, a database administrator shouldn’t have access to financial systems.

- Secure Coding Practices: Implementing secure coding standards throughout the development lifecycle to prevent vulnerabilities like SQL injection, cross-site scripting (XSS), and buffer overflows. This involves regular code reviews, static and dynamic analysis, and penetration testing.

- Data Encryption: Protecting data both in transit and at rest using appropriate encryption algorithms. This safeguards sensitive information from unauthorized access. For example, using HTTPS for communication and encrypting database backups.

- Regular Security Assessments: Conducting regular vulnerability scans, penetration testing, and security audits to proactively identify and remediate weaknesses. This is a continuous process, not a one-time event.

In a recent project involving a healthcare application, we implemented end-to-end encryption for patient data, used role-based access control to limit access to sensitive information, and conducted regular penetration testing to identify vulnerabilities before deployment. This layered approach ensured the system remained secure even in the face of potential threats.
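To make the secure coding point concrete, the sketch below contrasts a query built by string interpolation with a parameterized query, using an in-memory SQLite database from the standard library. The `users` table and both function names are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])


def find_user_unsafe(name):
    # Vulnerable: user input is spliced directly into the SQL text.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name):
    # Parameterized: the driver passes the value separately from the SQL text.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


# A classic injection payload that turns the WHERE clause into a tautology.
malicious = "x' OR '1'='1"
```

With the `malicious` input, the unsafe version returns every row in the table, while the safe version treats the whole string as a literal name and returns nothing.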

Q 9. Describe your experience with different databases (e.g., relational, NoSQL) and how you would choose one for a project.

I have extensive experience with both relational (SQL) and NoSQL databases. The choice depends heavily on the specific needs of the project.

- Relational Databases (e.g., MySQL, PostgreSQL): These are ideal for structured data with well-defined relationships between entities. They provide ACID guarantees (Atomicity, Consistency, Isolation, Durability), ensuring data integrity. They are a good fit for applications requiring complex joins and transactions, such as financial systems or e-commerce platforms.

- NoSQL Databases (e.g., MongoDB, Cassandra): These are better suited for unstructured or semi-structured data, handling large volumes of data with high velocity. They offer horizontal scalability and high availability, making them suitable for applications like social media platforms or real-time analytics dashboards. Different NoSQL databases (document, key-value, graph, column-family) cater to various data models.

When choosing a database, I consider factors like:

- Data Model: Is the data highly structured or unstructured?

- Scalability Requirements: How much data needs to be stored and how quickly does it need to be accessed?

- Transactionality: Are ACID properties crucial for data integrity?

- Cost and Maintenance: What’s the budget and available expertise?

For instance, in a project involving a social media application, we chose MongoDB for its scalability and flexibility in handling diverse user-generated content. For an inventory management system requiring strict data integrity, we opted for PostgreSQL. The selection always prioritizes aligning the database technology with the application’s specific needs.
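The value of ACID transactions for something like an inventory system is easiest to see with atomicity. The following sketch uses an in-memory SQLite database as a stand-in for a relational database such as PostgreSQL; the `stock` table, the warehouse names, and the `transfer` function are assumptions for illustration only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE stock ("
    "warehouse TEXT PRIMARY KEY, "
    "qty INTEGER NOT NULL CHECK (qty >= 0))"
)
conn.executemany("INSERT INTO stock VALUES (?, ?)", [("north", 10), ("south", 0)])
conn.commit()


def transfer(src, dst, qty):
    """Move stock between warehouses; both updates apply or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE stock SET qty = qty + ? WHERE warehouse = ?", (qty, dst)
            )
            # Violates the CHECK constraint if src would go negative.
            conn.execute(
                "UPDATE stock SET qty = qty - ? WHERE warehouse = ?", (qty, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False


def quantities():
    return dict(conn.execute("SELECT warehouse, qty FROM stock"))
```

If the second update fails, the credit already applied to the destination warehouse is rolled back too, so stock is never created out of thin air.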

Q 10. How do you design for fault tolerance and resilience?

Designing for fault tolerance and resilience ensures the system continues to function even when components fail. My strategy involves:

- Redundancy: Implementing redundant components (servers, networks, databases) to provide backup in case of failure. This can involve load balancing across multiple servers, mirroring databases, or using redundant network connections.

- Failover Mechanisms: Setting up automatic failover mechanisms to quickly switch to backup components in the event of a primary component failure. This minimizes downtime and ensures continued service.

- Error Handling and Logging: Implementing robust error handling and detailed logging to monitor system health and quickly identify and address issues. Comprehensive logging allows for root-cause analysis of failures and improvements to prevent recurrence.

- Circuit Breakers: Employing circuit breakers to prevent cascading failures by isolating faulty components and preventing repeated attempts to access them until they recover.

- Monitoring and Alerting: Using monitoring tools to track system performance, identify potential problems early, and generate alerts when critical thresholds are breached. This allows for proactive intervention to avoid major disruptions.

For example, in a financial transaction system, we implemented database replication with automatic failover, using a load balancer to distribute traffic among multiple servers. Comprehensive monitoring and alerting ensured we could quickly detect and react to any anomalies, minimizing disruptions to critical financial transactions.
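The circuit breaker idea above can be sketched as a small wrapper around calls to a dependency. This is a simplified, single-threaded illustration; the class name, the default threshold and timeout, and the injectable `clock` parameter are all assumptions, and production libraries handle concurrency and half-open limits more carefully.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; call skipped")
            # Timeout elapsed: half-open, so let one trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            # Open on reaching the threshold, or re-open after a failed trial.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

While the circuit is open, callers fail fast instead of piling more requests onto a component that is already struggling, which is what stops the failure from cascading.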

Q 11. Explain the concept of modularity and its importance in system design.

Modularity in system design refers to breaking down a complex system into smaller, independent, and interchangeable modules. It is crucial because:

- Improved Maintainability: Changes or updates to one module don’t necessarily affect others, simplifying maintenance and reducing the risk of unintended consequences. Imagine repairing a car – you can replace individual parts (modules) without dismantling the entire vehicle.

- Increased Reusability: Modules can be reused in different projects, saving time and resources. A well-designed module, like a user authentication system, can be used across multiple applications.

- Enhanced Testability: Smaller, independent modules are easier to test individually, improving the overall quality and reliability of the system.

- Simplified Development: Breaking down the problem into smaller, manageable pieces makes the development process easier to manage and allows for parallel development.

- Scalability: Modules can be scaled independently based on the demand for each part of the system. For instance, you can scale the database independently from the web server.

I often use a microservices architecture, a prime example of modularity, where the system is built as a collection of small, independent services that communicate with each other. This approach significantly enhances flexibility, scalability, and maintainability.

Q 12. How do you handle legacy systems when designing new systems?

Handling legacy systems is a common challenge in system design. My approach involves a phased strategy:

- Assessment: Thoroughly assess the legacy system to understand its architecture, functionality, data structure, and dependencies. This may involve reverse engineering or interviewing stakeholders.

- Integration Strategies: Determine the best way to integrate the new system with the legacy system. Options include:

  - Wrappers/Adapters: Create wrappers or adapters to bridge the gap between the old and new systems. This allows the new system to interact with the legacy system without requiring extensive modifications.

  - Gradual Migration: Gradually migrate functionality from the legacy system to the new system over time, minimizing disruption and risk. This may involve migrating one module at a time.

  - Replacement: Completely replace the legacy system with the new one. This is usually the most disruptive but can offer significant long-term benefits.

- Data Migration: Plan and execute a robust data migration strategy to transfer data from the legacy system to the new system. This often requires data cleansing and transformation.

- Testing: Thoroughly test the integration between the legacy and new systems to ensure seamless functionality and data integrity.

In a recent project, we used an adapter pattern to integrate a new e-commerce platform with a legacy order management system. This allowed us to deploy the new platform without immediately replacing the existing system, reducing risk and allowing for a phased migration.
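A minimal sketch of the adapter approach follows. It is not the actual integration from that project: the legacy record format, the `LegacyOrderSystem` stand-in, and the `OrderGateway` interface are all invented here to show the shape of the pattern.

```python
from typing import List, Protocol


class LegacyOrderSystem:
    """Stand-in for a legacy system that accepts fixed-format text records."""

    def __init__(self) -> None:
        self.records: List[str] = []

    def submit_record(self, record: str) -> int:
        self.records.append(record)
        return len(self.records)  # this stand-in numbers orders from 1


class OrderGateway(Protocol):
    """Interface the new platform codes against."""

    def place_order(self, sku: str, quantity: int) -> str: ...


class LegacyOrderAdapter:
    """Translates new-style calls into the legacy record format."""

    def __init__(self, legacy: LegacyOrderSystem) -> None:
        self._legacy = legacy

    def place_order(self, sku: str, quantity: int) -> str:
        record = f"{sku.upper()}|{quantity:04d}"
        legacy_id = self._legacy.submit_record(record)
        return f"ORD-{legacy_id}"
```

Because the new platform depends only on `OrderGateway`, the adapter can later be swapped for a native implementation without touching the calling code, which is what makes a phased migration practical.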

Q 13. Describe your experience with different software development methodologies (e.g., Agile, Waterfall).

I’m proficient in both Agile and Waterfall methodologies. The best choice depends on project requirements.

- Waterfall: This is a sequential approach with clearly defined phases. It’s well-suited for projects with stable requirements and a low degree of uncertainty. It’s easier to manage from a planning perspective but less adaptable to changing needs.

- Agile: This is an iterative approach emphasizing flexibility and collaboration. It’s ideal for projects with evolving requirements or a high level of uncertainty. Agile allows for continuous feedback and adaptation, leading to a more responsive development process.

My experience includes leading projects using Scrum (an Agile framework) where we delivered working software in short sprints (typically 2-4 weeks). I also have experience managing projects using a modified Waterfall approach where iterative development cycles were incorporated to accommodate changes in requirements. The key is choosing the methodology best aligned with project context and team dynamics.

Q 14. How do you manage technical debt in a project?

Technical debt represents compromises made during development to accelerate delivery, often sacrificing long-term quality and maintainability. Managing it effectively is crucial for long-term project success.

- Identification: Regularly identify technical debt through code reviews, automated testing, and performance monitoring, and record each item so that it can be assessed later.

- Prioritization: Not all technical debt is equal. Prioritize addressing debt that poses the highest risk (e.g., security vulnerabilities) or that impedes future development.

- Planning: Allocate time and resources to address technical debt in each development iteration. Don’t let it accumulate uncontrollably.

- Refactoring: Regularly refactor code to improve its structure, readability, and maintainability. This reduces future technical debt and makes it easier to maintain and enhance the system.

- Documentation: Maintain clear documentation of technical debt, including its nature, impact, and planned remediation. This ensures everyone is aware of the outstanding issues and facilitates informed decision-making.

In one project, we tracked technical debt using a spreadsheet, categorizing it by severity and impact. We dedicated a portion of each sprint to address high-priority items, preventing them from becoming major roadblocks later on. Regular code reviews and refactoring kept the codebase clean and maintainable, reducing the accumulation of future debt.

Q 15. Explain the concept of API design and your experience with RESTful APIs.

API design is the process of defining how different software systems or components will communicate with each other. A well-designed API is crucial for building robust, scalable, and maintainable systems. Think of it as a contract specifying how one system can request services from another. RESTful APIs are a popular architectural style for building APIs. They rely on standard HTTP methods (GET, POST, PUT, DELETE) to perform operations on resources, identified by URLs.

In my experience, I’ve designed and implemented numerous RESTful APIs using frameworks like Spring Boot (Java) and Node.js with Express.js. A key aspect of my approach is focusing on designing intuitive resource representations (often JSON) and using appropriate HTTP status codes to convey success or error conditions. For example, I might design an API endpoint /users/{userId} to retrieve a specific user’s information using a GET request. If the user is not found, a 404 Not Found status code is returned. Similarly, a POST request to /users would create a new user, returning a 201 Created status code and the newly created user’s information.

I prioritize versioning to ensure backward compatibility when making changes to the API, often using URL versioning (e.g., /v1/users, /v2/users). Thorough documentation, using tools like Swagger/OpenAPI, is also essential for making the API easy to understand and use by other developers.
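The endpoint behaviour described above (GET returning 200 or 404, POST returning 201, and a version prefix in the URL) can be sketched without any web framework. The `UserApi` class and its `handle` method are hypothetical; a real service would use a framework such as Spring Boot or Express.js for routing and serialization.

```python
from http import HTTPStatus


class UserApi:
    """Framework-free sketch of a versioned /v1/users resource."""

    def __init__(self):
        self._users = {}
        self._next_id = 1

    def handle(self, method, path, body=None):
        """Return a (status, payload) pair for the given request."""
        parts = path.strip("/").split("/")
        if parts[:2] != ["v1", "users"] or len(parts) > 3:
            return HTTPStatus.NOT_FOUND, {"error": "unknown resource"}

        if len(parts) == 2:  # collection: /v1/users
            if method != "POST":
                return HTTPStatus.METHOD_NOT_ALLOWED, {"error": "use POST"}
            user = {"id": self._next_id, "name": (body or {}).get("name", "")}
            self._users[user["id"]] = user
            self._next_id += 1
            return HTTPStatus.CREATED, user

        # item: /v1/users/{userId}
        if method != "GET":
            return HTTPStatus.METHOD_NOT_ALLOWED, {"error": "use GET"}
        user_id = parts[2]
        if not user_id.isdigit() or int(user_id) not in self._users:
            return HTTPStatus.NOT_FOUND, {"error": "user not found"}
        return HTTPStatus.OK, self._users[int(user_id)]
```

Returning the status code alongside the payload keeps the contract explicit: clients can branch on 201, 200, 404, or 405 without parsing error messages.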

Q 16. How do you conduct performance testing and optimization?

Performance testing and optimization are critical for ensuring the responsiveness and scalability of a system. My approach involves a multi-stage process. First, I define clear performance goals and metrics, such as response times, throughput, and resource utilization. Then, I use load testing tools like JMeter or Gatling to simulate realistic user loads and identify bottlenecks.

Once bottlenecks are identified (e.g., slow database queries, inefficient algorithms), I use profiling tools to pinpoint the precise areas for improvement. Optimization strategies might include database query tuning, code refactoring, caching, and load balancing. For example, if a database query is slow, I might add indexes to improve its performance. If a specific code section is computationally expensive, I might explore alternative algorithms or data structures. I iterate on testing and optimization until the system meets the defined performance goals.

Throughout the process, I carefully monitor resource usage (CPU, memory, network) to avoid unintended consequences. Performance testing is not a one-time activity; it’s an ongoing process integrated into the development lifecycle to proactively address performance issues.
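Response-time goals are usually stated as percentiles rather than averages, since a few slow requests can hide behind a good mean. The sketch below times a callable and summarizes the samples using the standard library; it is only an illustration of the metric, not a substitute for a load testing tool such as JMeter or Gatling, and the function names are assumptions.

```python
import statistics
import time


def measure_latencies(func, runs=50):
    """Call `func` repeatedly and return each call's duration in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        samples.append(time.perf_counter() - start)
    return samples


def summarize(samples):
    """Return median, 95th percentile, and worst-case latency."""
    # quantiles(n=100) returns 99 cut points; index 49 is p50, index 94 is p95.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "max": max(samples)}
```

Comparing `summarize(...)` output before and after a change, such as adding an index, shows whether the optimization actually moved the tail latency and not just the typical case.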

Q 17. Describe your experience with cloud platforms (e.g., AWS, Azure, GCP).

I have extensive experience with all three major cloud platforms: AWS, Azure, and GCP. My experience spans various services, including compute (EC2, Azure VMs, Compute Engine), storage (S3, Azure Blob Storage, Cloud Storage), databases (RDS, Azure SQL Database, Cloud SQL), and networking (Amazon VPC, Azure Virtual Network, Google Cloud VPC).

I’ve worked on projects leveraging serverless technologies like AWS Lambda, Azure Functions, and Google Cloud Functions. I understand the trade-offs between different cloud services and can select the best option based on project requirements and cost considerations. For example, I might choose serverless functions for event-driven architectures, but opt for virtual machines for applications requiring more control over the environment. I’m also proficient in managing cloud resources using Infrastructure as Code (IaC) tools like Terraform and CloudFormation, ensuring infrastructure consistency and repeatability.

Beyond the technical aspects, I understand the importance of security best practices within cloud environments. I have experience configuring security groups, access control lists, and other security features to protect cloud resources.

Q 18. How do you design for different deployment environments (e.g., on-premises, cloud)?

Designing for different deployment environments requires careful consideration of factors like infrastructure, security, and scalability. Key aspects include:

- Abstraction: The system architecture should be designed to minimize dependencies on specific environments. This can be achieved through configuration management and using environment variables to manage settings specific to each environment.

- Configuration Management: Tools like Ansible, Chef, or Puppet are used to automate the deployment and configuration process, ensuring consistency across environments.

- Infrastructure as Code (IaC): Using tools like Terraform allows you to define and manage infrastructure in a declarative manner, making it easy to deploy and manage the same system across different environments.

- Containerization: Containerization technologies like Docker significantly improve portability by packaging applications and their dependencies into isolated containers.

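- Security: Security considerations differ between on-premises and cloud environments. On-premises, the team owns the whole stack, including physical security and network-perimeter controls such as firewalls. In the cloud, the provider secures the underlying infrastructure, while the team remains responsible for configuring identity, network rules, and encryption correctly, typically with cloud-native security services.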

For example, I’ve designed systems that run equally well on-premises using virtual machines and in AWS, utilizing configuration management to adapt to the different environments. This abstraction layer ensured smooth deployment and reduced the effort involved in managing multiple environments.
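One simple way to keep application code independent of the deployment environment is to read environment-specific settings from environment variables at startup. The sketch below assumes hypothetical variable names (`APP_DATABASE_URL`, `APP_LOG_LEVEL`, `APP_REQUEST_TIMEOUT_S`) and a made-up `Settings` class.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    database_url: str
    log_level: str
    request_timeout_s: float


def load_settings(env=os.environ):
    """Build settings from environment variables (names are illustrative)."""
    return Settings(
        # Required: raises KeyError if missing, so misconfiguration fails fast.
        database_url=env["APP_DATABASE_URL"],
        # Optional values fall back to defaults that suit local development.
        log_level=env.get("APP_LOG_LEVEL", "INFO"),
        request_timeout_s=float(env.get("APP_REQUEST_TIMEOUT_S", "5")),
    )
```

The same build can then run on-premises or in the cloud, with only the variables differing. Passing a plain dictionary as `env` also makes the loader easy to test.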

Q 19. Explain your experience with containerization technologies (e.g., Docker, Kubernetes).

I have substantial experience with Docker and Kubernetes. Docker allows me to package applications and their dependencies into containers, making them easily portable and deployable across different environments. Kubernetes, on the other hand, is a container orchestration platform that automates the deployment, scaling, and management of containerized applications.

I utilize Docker for building and distributing application images. For example, I might create a Dockerfile to build an image containing a web application and its dependencies. This image can then be deployed to any environment with a Docker engine. Kubernetes extends this by providing features like automated rollouts, load balancing, and self-healing, crucial for managing large-scale applications. I’ve used Kubernetes to deploy and manage microservices architectures, ensuring high availability and scalability.

I’m also familiar with various Kubernetes concepts, such as deployments, services, pods, and namespaces. I understand how to manage resource allocation, configure networking, and monitor the health of applications running in a Kubernetes cluster. My experience also includes utilizing Helm for managing Kubernetes applications and their configurations.

Q 20. How do you ensure system maintainability?

System maintainability is paramount for long-term success. My approach involves several key strategies:

- Modular Design: Breaking down the system into loosely coupled modules makes it easier to understand, modify, and maintain individual components without impacting the entire system.

- Code Quality: Adhering to coding standards, writing clean and well-documented code, and employing code reviews are crucial. Tools like linters and static analyzers help maintain code quality.

- Automated Testing: Comprehensive automated testing (unit, integration, system) ensures that changes don’t introduce regressions. This also speeds up the testing process, enabling faster iteration cycles.

- Monitoring and Logging: Implementing robust monitoring and logging mechanisms allows for proactive identification and resolution of issues. For example, Prometheus can collect system metrics and Grafana can visualize them on dashboards.

- Documentation: Clear and up-to-date documentation is essential for anyone working with the system, making it easier to understand its functionality and maintenance procedures.

For instance, in a recent project, I implemented a microservices architecture with comprehensive unit and integration tests, drastically reducing maintenance time and improving the overall stability of the system.

Q 21. Describe your process for creating system documentation.

Creating system documentation is an iterative process that should happen throughout the system’s lifecycle. My approach incorporates various documentation types:

- Architectural Design Documents: These high-level documents describe the system’s overall architecture, including its components, interactions, and key technologies. Diagram tools like Lucidchart or draw.io are often used.

- API Documentation: Using tools like Swagger/OpenAPI, I generate comprehensive API documentation that includes detailed descriptions of endpoints, request/response formats, and usage examples.

- Code Documentation: Inline comments and docstrings are crucial for making the code easy to understand. Tools like JSDoc (for JavaScript) and Javadoc (for Java) automate documentation generation from code.

- User Manuals and Tutorials: These documents guide users on how to interact with and utilize the system.

- Maintenance and Troubleshooting Guides: This critical documentation outlines common issues, troubleshooting steps, and maintenance procedures.

I keep all documentation under version control (e.g., Git), ensuring that changes are tracked and that everyone has access to the latest version. The documentation should be easily accessible to all stakeholders, and I aim for simplicity and clarity so that it is genuinely useful and easy to understand.

Q 22. Explain your experience with version control systems (e.g., Git).

Version control systems, like Git, are indispensable for collaborative software development. They track changes to code over time, allowing for easy collaboration, rollback to previous versions, and efficient management of different code branches. My experience spans several years, encompassing everything from basic branching and merging to advanced techniques like rebasing and cherry-picking.

I’m proficient in using Git for both individual and team projects. For instance, in a recent project designing a microservice architecture, we utilized Git’s branching capabilities extensively. Each microservice had its own branch, allowing developers to work independently. Pull requests facilitated code reviews, ensuring code quality and consistency before merging into the main branch. We also leveraged Git’s tagging functionality to mark significant milestones and releases. Understanding and effectively employing Git’s workflow, including resolving merge conflicts, is crucial for maintaining a clean and manageable codebase.

Furthermore, I’m comfortable using Git with platforms like GitHub, GitLab, and Bitbucket, and with the collaborative features they offer, such as issue tracking and continuous integration/continuous deployment (CI/CD) pipelines.

Q 23. How do you prioritize features in a system design?

Prioritizing features in system design means balancing user needs against technical feasibility and business goals. I typically combine several techniques:

- MoSCoW Method: This involves categorizing features into Must have, Should have, Could have, and Won’t have. This provides a clear hierarchy based on their importance and urgency.

- Value vs. Effort Matrix: I plot features based on their business value and the effort required to implement them. This helps to identify high-value, low-effort features that should be prioritized.

- Stakeholder Input: Gathering requirements and feedback from various stakeholders, including clients, product managers, and engineering teams, ensures that priorities align with overall project goals.

- Risk Assessment: Features with high technical risk or dependencies on external factors may need to be tackled early, so that problems surface while there is still time to respond and project delays are less likely.

For example, in a project building a high-traffic e-commerce platform, we prioritized features related to payment processing and product search (Must have) over features like advanced user analytics (Should have) or personalized recommendations (Could have). This ensured core functionality was launched first, minimizing risk and time to market. Regularly revisiting and adjusting priorities based on feedback and progress is essential for a successful project.
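The MoSCoW and value-versus-effort techniques can be combined in a few lines. The sketch below is only illustrative: the `Feature` class, the `prioritize` function, and all value and effort scores are assumptions invented for this example, applied to the e-commerce features mentioned above.

```python
from dataclasses import dataclass

# MoSCoW categories in priority order (lower rank = more important).
MOSCOW_RANK = {"must": 0, "should": 1, "could": 2, "wont": 3}


@dataclass(frozen=True)
class Feature:
    name: str
    moscow: str   # one of the MOSCOW_RANK keys
    value: int    # estimated business value, 1 (low) to 10 (high)
    effort: int   # estimated implementation effort, 1 (low) to 10 (high)


def prioritize(features: list[Feature]) -> list[Feature]:
    """Order by MoSCoW category, then by value-to-effort ratio (highest first)."""
    return sorted(
        features,
        key=lambda f: (MOSCOW_RANK[f.moscow], -f.value / f.effort),
    )


# Hypothetical scores; in practice they come from stakeholders and estimates.
backlog = [
    Feature("personalized recommendations", "could", value=6, effort=8),
    Feature("advanced user analytics", "should", value=5, effort=5),
    Feature("product search", "must", value=9, effort=6),
    Feature("payment processing", "must", value=10, effort=5),
]

for feature in prioritize(backlog):
    print(f"{feature.moscow:<6} {feature.name}")
```

Sorting on the category first keeps every Must have ahead of any Should have, while the ratio only breaks ties inside a category. Whether that strict ordering is appropriate is a judgment call for each project.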

Q 24. Describe a time you had to make a difficult technical decision in a system design project.

In a recent project designing a distributed caching system, we faced a critical decision regarding the choice of caching technology. We were initially inclined towards a readily available, mature solution. However, after thorough analysis, we realized that its limitations in scalability and specific data structures might create bottlenecks in the long run.

The alternative was a less mature, open-source solution which offered better scalability and customization but had a steeper learning curve and potentially higher maintenance costs. We carefully weighed the pros and cons of both options, considering factors like performance requirements, team expertise, long-term maintainability, and potential risks. Ultimately, we opted for the open-source solution, investing time in thorough testing and documentation to mitigate the risks associated with its adoption. This decision proved beneficial, as the system scaled far beyond the initial expectations without experiencing performance degradation.

This experience highlighted the importance of considering both short-term gains and long-term scalability when making critical technical decisions. Thorough research, risk assessment, and clear communication within the team were crucial in navigating this challenging situation.

Q 25. How do you handle conflicts with other engineers in a design project?

Conflicts are inevitable in collaborative projects. My approach focuses on open communication and collaborative problem-solving. I believe in fostering a respectful environment where everyone feels comfortable expressing their opinions.

When a conflict arises, I first ensure everyone understands the context and the points of contention. I encourage active listening and strive to understand each perspective. Then, I try to find common ground and work towards a solution that satisfies everyone’s needs as much as possible. Sometimes, this requires compromise. Other times, a structured decision-making process, perhaps involving a neutral third party, can be helpful. Documentation plays a key role: keeping records of decisions and agreements prevents future misunderstandings.

For example, during a heated discussion about the optimal database design, I facilitated a meeting where each team member presented their reasoning. By understanding the underlying concerns, we found a hybrid solution that incorporated elements from each approach, resolving the conflict and resulting in a superior design.

Q 26. Explain your approach to system monitoring and logging.

System monitoring and logging are crucial for ensuring system health, identifying performance bottlenecks, and debugging issues. My approach is multifaceted and encompasses several key components:

- Centralized Logging: Employing a centralized logging system, such as the ELK stack (Elasticsearch, Logstash, and Kibana) or its Fluentd-based EFK variant, provides a unified view of all logs across different system components.

- Metrics Collection: Utilizing monitoring tools like Prometheus or Datadog to collect system metrics (CPU utilization, memory usage, network traffic, request latency) is essential for performance analysis and proactive identification of issues.

- Alerting: Setting up alerts based on predefined thresholds allows for timely responses to critical situations, such as high CPU usage or unexpected errors.

- Distributed Tracing: Implementing distributed tracing systems, like Jaeger or Zipkin, provides end-to-end visibility into request flow across various microservices, greatly aiding in troubleshooting and performance optimization.

- Log Aggregation and Analysis: Once logs are aggregated, searching and correlating them across services makes it easier to spot recurring patterns and trace problems back to their root cause.

The specific tools and techniques used would depend on the system’s scale and complexity. For a smaller system, simple logging and basic metrics monitoring might suffice. However, larger, complex systems require more sophisticated approaches, as described above, ensuring robust monitoring and efficient issue resolution.
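For the "simple logging and basic metrics" end of that spectrum, a minimal sketch using only the Python standard library might look like the following. It emits one JSON object per log line, which a centralized pipeline could later index, and raises a threshold alert on average latency. `JsonFormatter`, `LatencyAlert`, the `checkout` logger name, and the 200 ms threshold are all hypothetical choices for illustration; a real deployment would more likely delegate alerting to a tool such as Prometheus or Datadog.

```python
import json
import logging
from collections import deque


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object so a log aggregator can index it."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


class LatencyAlert:
    """Fire when the average of the last `window` samples exceeds `threshold_ms`."""

    def __init__(self, threshold_ms: float, window: int = 5) -> None:
        self.threshold_ms = threshold_ms
        self.samples: deque[float] = deque(maxlen=window)

    def observe(self, latency_ms: float) -> bool:
        self.samples.append(latency_ms)
        # Wait for a full window so a single slow request does not page anyone.
        window_full = len(self.samples) == self.samples.maxlen
        average = sum(self.samples) / len(self.samples)
        return window_full and average > self.threshold_ms


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
log = logging.getLogger("checkout")
log.addHandler(handler)
log.setLevel(logging.INFO)

alert = LatencyAlert(threshold_ms=200, window=3)
for latency in (120, 180, 450, 500):
    log.info("request served in %d ms", latency)
    if alert.observe(latency):
        log.warning("average latency above %d ms", alert.threshold_ms)
```

Alerting on a windowed average rather than on individual samples is one simple way to reduce noise; percentile-based thresholds are a common alternative.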

Q 27. How would you design a system to handle a high volume of concurrent users?

Designing a system for high volumes of concurrent users necessitates a scalable and robust architecture. Key considerations include:

- Horizontal Scaling: Utilizing multiple servers to distribute the load is essential for handling a large number of concurrent users. This allows for adding more servers as needed to accommodate increasing traffic.

- Load Balancing: A load balancer distributes incoming requests across multiple servers, preventing overload on any single server. Various load balancing strategies (round-robin, least connections, etc.) can be employed based on specific needs.

- Caching: Caching frequently accessed data (e.g., using Redis or Memcached) significantly reduces the load on the database and improves response times.

- Asynchronous Processing: Offloading slow or non-urgent work to background workers (e.g., through a message broker like RabbitMQ or an event log like Kafka) keeps request handlers responsive under high load and smooths out traffic spikes.

- Database Optimization: Proper database design, indexing, and query optimization are critical for efficient data retrieval and handling a large volume of transactions. Where the access patterns allow it, consider read replicas, sharding, or a horizontally scalable NoSQL store.

- Microservices Architecture: Breaking down the application into independent microservices allows for independent scaling and easier maintenance.
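The two load-balancing strategies named in the list, round-robin and least connections, can be sketched as follows. This is a toy in-memory model under stated assumptions: the class names and the `app-1` to `app-3` server names are hypothetical, and a real load balancer would also handle health checks, timeouts, and concurrency.

```python
import itertools


class RoundRobinBalancer:
    """Hand out servers in a fixed rotation, regardless of their current load."""

    def __init__(self, servers: list[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Send each request to the server with the fewest open connections."""

    def __init__(self, servers: list[str]) -> None:
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # On ties, min() returns the first server in insertion order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1


servers = ["app-1", "app-2", "app-3"]

rr = RoundRobinBalancer(servers)
print([rr.pick() for _ in range(4)])

lc = LeastConnectionsBalancer(servers)
first, second = lc.acquire(), lc.acquire()
lc.release(first)      # the first request finishes early
print(lc.acquire())    # picks a server that currently has no open connections
```

Round-robin is simple and works well when requests cost roughly the same; least connections adapts better when some requests are long-lived.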

Consider a social media platform; it needs to handle millions of concurrent users posting, liking, and commenting. Implementing all of these strategies would allow such a platform to gracefully handle peak loads and provide a responsive user experience.
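To make the caching strategy concrete, here is a minimal cache-aside sketch with a time-to-live. It uses an in-process dictionary as a stand-in for Redis or Memcached, so `TTLCache`, `get_or_load`, and `load_profile` are hypothetical names for illustration and not the API of either product.

```python
import time
from typing import Callable


class TTLCache:
    """In-process cache-aside helper; stands in for Redis or Memcached here."""

    def __init__(
        self,
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        # key -> (expiry timestamp, cached value)
        self._entries: dict[str, tuple[float, object]] = {}

    def get_or_load(self, key: str, loader: Callable[[str], object]) -> object:
        entry = self._entries.get(key)
        now = self._clock()
        if entry is not None and entry[0] > now:
            return entry[1]                  # cache hit
        value = loader(key)                  # miss or expired: hit the database
        self._entries[key] = (now + self.ttl_seconds, value)
        return value


db_calls = 0


def load_profile(user_id: str) -> dict:
    """Hypothetical slow database query."""
    global db_calls
    db_calls += 1
    return {"id": user_id, "name": f"user-{user_id}"}


cache = TTLCache(ttl_seconds=30)
cache.get_or_load("42", load_profile)
cache.get_or_load("42", load_profile)   # served from the cache
print(db_calls)
```

The TTL bounds how stale a cached entry can get. Choosing it is a trade-off between database load and freshness, and writes usually need explicit invalidation on top of it.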

Key Topics to Learn for System-Level Design and Architecture Interview

- Scalability and Performance: Understanding concepts like horizontal and vertical scaling, load balancing, caching strategies, and performance optimization techniques. Practical application includes designing systems capable of handling increasing user load and data volume.

- Distributed Systems: Mastering the principles of distributed systems, including consistency models, the trade-offs described by the CAP theorem, fault tolerance, and data replication. Practical application involves designing robust and reliable systems that operate across multiple machines.

- Data Modeling and Databases: Choosing the right database technology (SQL, NoSQL) based on system requirements. Designing efficient schemas and understanding data consistency and integrity. Practical application involves designing databases for specific system functionalities.

- API Design and Microservices: Understanding RESTful APIs, microservices architecture, and service discovery. Practical application involves designing modular and scalable systems using microservices.

- Security Considerations: Implementing security best practices throughout the system design process, including authentication, authorization, and data encryption. Practical application includes designing secure systems that protect against vulnerabilities and threats.

- System Monitoring and Logging: Designing systems with robust monitoring and logging capabilities for troubleshooting and performance analysis. Practical application involves implementing tools and strategies for real-time system monitoring.

- Architectural Patterns: Familiarity with common architectural patterns like MVC, layered architecture, and event-driven architecture. Understanding their trade-offs and applicability to different scenarios.

Next Steps

Mastering System-Level Design and Architecture is crucial for career advancement in the tech industry, opening doors to senior roles with significantly higher compensation and responsibility. A strong understanding of these concepts demonstrates a deep technical aptitude and problem-solving ability highly valued by employers.

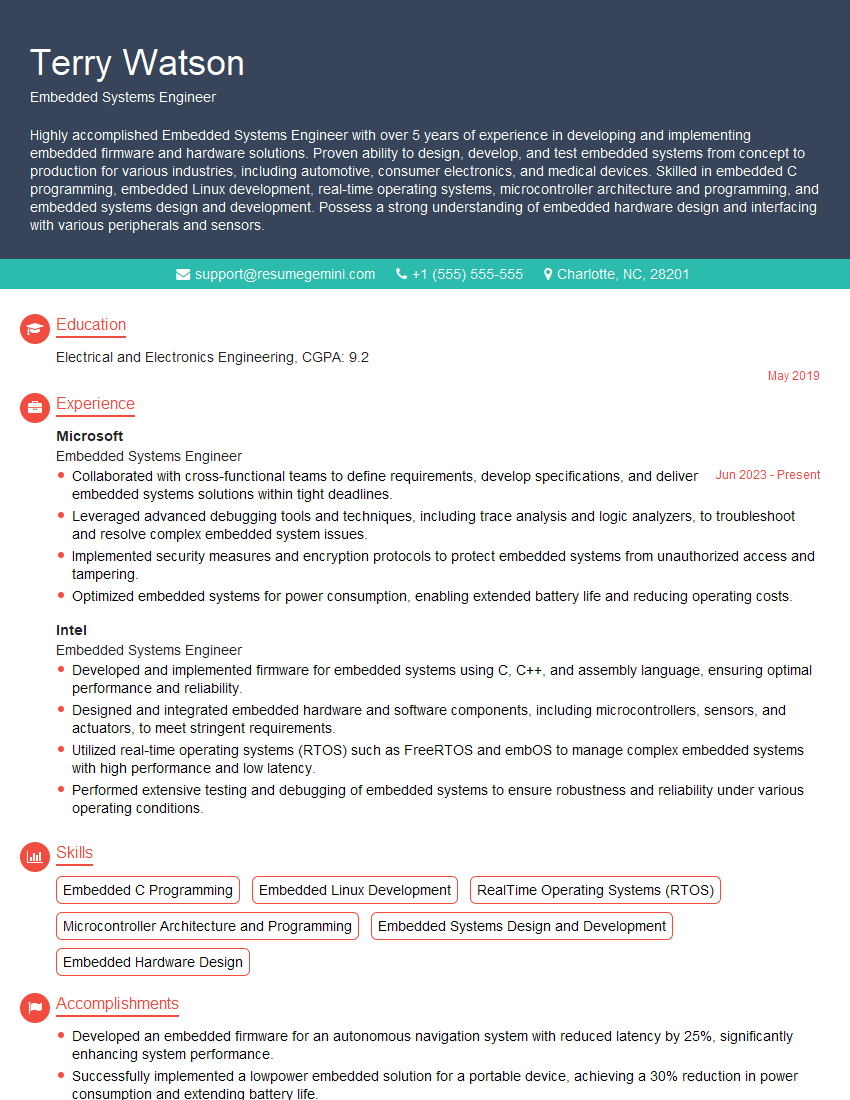

To maximize your job prospects, crafting an ATS-friendly resume is essential. A well-structured resume highlighting your relevant skills and experience will ensure your application gets noticed. We highly recommend using ResumeGemini to build a professional and effective resume. ResumeGemini provides tools and resources to create a compelling document that showcases your expertise. Examples of resumes tailored to System-Level Design and Architecture are available to guide you.
