Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Experience with Reverse Engineering Techniques interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Experience with Reverse Engineering Techniques Interview

Q 1. Explain the difference between static and dynamic analysis in reverse engineering.

Static and dynamic analysis are two fundamental approaches in reverse engineering, differing primarily in how they examine the target software.

Static analysis involves examining the software without executing it. Think of it like studying a blueprint of a house – you can understand its structure, rooms, and connections without actually living in it. Tools used include disassemblers (like IDA Pro or Ghidra) which translate machine code into human-readable assembly language, allowing us to analyze the code’s logic, data structures, and control flow. We can identify functions, variables, and potential vulnerabilities without ever running the program.

Dynamic analysis, on the other hand, involves running the software in a controlled environment (like a debugger) to observe its behavior in real-time. This is akin to actually living in the house – you experience how things work in practice. Debuggers like x64dbg allow us to set breakpoints, step through the code instruction by instruction, inspect registers and memory, and analyze program execution flow. This helps understand the program’s runtime behavior, identify hidden functionalities, and pinpoint vulnerabilities that might only manifest during execution.

For example, static analysis might reveal a potentially vulnerable function call, while dynamic analysis could confirm that it is actually exploitable with a specific user input.

Q 2. Describe your experience with disassemblers and debuggers (e.g., IDA Pro, Ghidra, x64dbg).

I have extensive experience using IDA Pro, Ghidra, and x64dbg for reverse engineering tasks. IDA Pro, with its powerful scripting capabilities and extensive plugin ecosystem, is my go-to for complex binary analysis. I’ve used its graph view extensively to visualize control flow and identify critical sections of code. Ghidra, being open-source, provides a flexible and collaborative environment, particularly useful for team projects. Its decompilation capabilities are incredibly helpful in understanding high-level code structures. Finally, x64dbg excels in dynamic analysis, offering fine-grained control over program execution and deep memory inspection – invaluable for understanding runtime behavior and debugging exploits.

For instance, in a recent project analyzing a malware sample, I used IDA Pro’s powerful search capabilities to locate specific API calls indicating malicious behavior, and then used x64dbg to dynamically trace the execution flow of those calls and pinpoint how data was being manipulated. This provided a comprehensive understanding of the malware’s functionalities and attack vectors.

Q 3. How do you identify and handle obfuscation techniques in reverse-engineered code?

Obfuscation techniques aim to make reverse engineering difficult. They range from simple techniques like renaming variables to more complex methods like control flow obfuscation (making code execution paths hard to follow) and packing (compressing or encrypting the code so that its original form only appears in memory at runtime).

My approach involves a multi-layered strategy. First, I identify the type of obfuscation present. Then, I apply appropriate countermeasures. For example, if control flow is obfuscated, I use tools that can reconstruct the original flow graph. If the code is packed with a known packer, I unpack it with the matching tool (for UPX-packed files, UPX’s own decompression option, upx -d); otherwise, I unpack it manually. String analysis and identifying API calls (even obfuscated ones) also help reveal the program’s purpose and functionality. Sometimes manual code analysis is unavoidable, requiring me to meticulously trace the execution path, often using the debugger to understand the logic behind the obfuscated code.

I recently encountered a program using extensive string encryption. By analyzing the encryption algorithm used, I was able to decrypt the strings and uncover hidden functions and their purpose.
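The answer does not say which algorithm that program used. As a hedged illustration only, assume the common case of a repeating-key XOR cipher: once the key has been recovered from the decryption routine, every stored string can be decrypted offline. The key and the string below are hypothetical.

```python
from itertools import cycle


def xor_decrypt(data: bytes, key: bytes) -> bytes:
    """XOR every byte with a repeating key.

    XOR is its own inverse, so the same function both encrypts and decrypts.
    """
    if not key:
        raise ValueError("key must not be empty")
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


# Hypothetical key, as it might be recovered from the sample's decryption routine.
KEY = b"\x5a\x13\x37"

# Simulate an encrypted string as it would sit in the binary's data section.
stored = xor_decrypt(b"CreateRemoteThread", KEY)

print(stored.hex())              # unreadable in a plain string dump
print(xor_decrypt(stored, KEY))  # b'CreateRemoteThread'
```

Running every candidate blob through the same function is how a whole table of hidden strings (API names, URLs, file paths) can be recovered at once.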

Q 4. Explain the process of identifying vulnerabilities in a piece of software through reverse engineering.

Identifying vulnerabilities through reverse engineering requires a systematic approach. I typically start with static analysis to gain an overview of the software architecture, identifying potential vulnerabilities through pattern recognition (e.g., buffer overflows, SQL injection points, insecure cryptographic implementations). Then, I use dynamic analysis to test these potential vulnerabilities. For example, if static analysis reveals a function vulnerable to buffer overflow, I would use a debugger to craft specific inputs that trigger the overflow, observing the program’s behavior and identifying potential memory corruption or crashes.
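For the buffer-overflow case, a common way to craft such inputs is a cyclic (de Bruijn) pattern, the idea behind Metasploit’s pattern_create and pwntools’ cyclic(): every 4-byte window is unique, so the value found in the overwritten return address reveals the exact offset. A minimal sketch, assuming a 32-bit little-endian target; the crash value is simulated rather than read from a real debugger.

```python
import struct
from functools import lru_cache

ALPHABET = b"abcdefghijklmnopqrstuvwxyz"
N = 4  # window length; 4 bytes matches a 32-bit register value


@lru_cache(maxsize=None)
def de_bruijn(alphabet: bytes = ALPHABET, n: int = N) -> bytes:
    """De Bruijn sequence: every length-n string over `alphabet` occurs exactly once."""
    k = len(alphabet)
    a = [0] * (k * n)
    seq = []

    def db(t: int, p: int) -> None:
        if t > n:
            if n % p == 0:
                seq.extend(a[1:p + 1])
        else:
            a[t] = a[t - p]
            db(t + 1, p)
            for j in range(a[t - p] + 1, k):
                a[t] = j
                db(t + 1, t)

    db(1, 1)
    return bytes(alphabet[i] for i in seq)


def cyclic(length: int) -> bytes:
    """Non-repeating input pattern: any 4 consecutive bytes identify their own offset."""
    pattern = de_bruijn()
    if length > len(pattern):
        raise ValueError("requested pattern is longer than the de Bruijn sequence")
    return pattern[:length]


def find_offset(register_value: int) -> int:
    """Offset of a 32-bit little-endian register value (e.g. a crashed EIP) in the pattern."""
    return de_bruijn().find(struct.pack("<I", register_value))


payload = cyclic(200)  # feed this as the oversized input
# Simulated crash: pretend the debugger shows EIP overwritten by bytes 140..143.
crashed_eip = struct.unpack("<I", payload[140:144])[0]
print(find_offset(crashed_eip))  # 140
```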

Memory analysis is crucial. I look for memory leaks, reads of uninitialized memory, missing or improper access checks, and race conditions that could be exploited. Furthermore, I analyze the interaction of the software with other components, such as operating systems or databases, to identify points of weakness.

Once potential vulnerabilities are identified, I meticulously document their nature, impact, and proof of concept, ensuring comprehensive reporting for appropriate remediation.

Q 5. What are some common techniques used to patch software vulnerabilities discovered through reverse engineering?

Patching vulnerabilities discovered through reverse engineering depends on the type and location of the vulnerability. Common techniques include:

- Code modification: Directly altering the assembly code to fix the vulnerability, for example, adding bounds checking to prevent buffer overflows.

- Function patching: Replacing a vulnerable function with a secure implementation. This could involve writing a new function or using a library function that handles the task securely.

- Data structure modification: Adjusting data structures to eliminate potential vulnerabilities, perhaps changing the size of buffers to prevent overflows.

- Control flow alteration: Changing the order of execution or adding conditional checks to prevent vulnerabilities from being triggered.

After patching, thorough testing is crucial to ensure the patch is effective and doesn’t introduce new problems. This typically involves retesting using the same exploitation techniques that were used to find the vulnerability.
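At the byte level, control flow alteration can be as small as rewriting one opcode. A minimal sketch, assuming the file is already loaded into memory and that the offset and bytes (a hypothetical test eax, eax; je +5 sequence) were found during analysis: the short conditional jump JE rel8 (0x74) becomes an unconditional JMP rel8 (0xEB). The function refuses to write unless the original bytes match, which guards against patching the wrong build.

```python
def apply_patch(image: bytearray, offset: int, expected: bytes, replacement: bytes) -> None:
    """Overwrite bytes at a file offset, but only if the original bytes match."""
    if len(expected) != len(replacement):
        # A different length would shift every later offset and break the binary.
        raise ValueError("replacement must be exactly as long as the original bytes")
    actual = bytes(image[offset:offset + len(expected)])
    if actual != expected:
        raise ValueError(f"unexpected bytes at {offset:#x}: {actual.hex()} != {expected.hex()}")
    image[offset:offset + len(replacement)] = replacement


# Hypothetical fragment: test eax, eax (85 C0); je +5 (74 05); nop; nop
image = bytearray(b"\x85\xc0\x74\x05\x90\x90")

# Turn the conditional JE rel8 (0x74) into an unconditional JMP rel8 (0xEB),
# keeping the same 1-byte displacement so the branch is always taken.
apply_patch(image, offset=2, expected=b"\x74\x05", replacement=b"\xeb\x05")
print(image.hex())  # 85c0eb059090
```

The patched buffer would then be written to a copy of the file and put through the same retesting described above.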

Q 6. How do you approach analyzing packed or compressed executables?

Analyzing packed or compressed executables requires unpacking them first. Many packers use standard algorithms, and dedicated unpackers exist for common packers like UPX. However, custom packers require manual unpacking, a significantly more complex process.

My approach involves a combination of techniques. First, I attempt to identify the packer by analyzing file headers, imports, and the program’s behavior. If no dedicated unpacker is available, I use a debugger to trace the program’s execution until I locate the unpacking routine. This typically involves setting breakpoints on memory-management APIs that unpacking stubs commonly call (such as VirtualAlloc and VirtualProtect on Windows) or monitoring memory allocation patterns. Once the unpacking routine has run and execution reaches the original entry point, I can dump the unpacked code from memory, either by stepping through the process in the debugger or by automating it with a script.

Manual unpacking requires a deep understanding of assembly language and of the packing algorithm; it is a time-consuming, challenging task that demands patience and meticulous attention to detail. I often use a hex editor to examine the raw data during the process, and I compare the file against signatures of known packers to identify which one was used.
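One heuristic not named above, but widely used to spot packed or encrypted sections, is Shannon entropy: compressed or encrypted bytes look nearly random. A minimal sketch; the 7.2 threshold is an assumed rule of thumb, and in practice each section’s raw bytes would come from a PE or ELF parser (not shown).

```python
import math
from collections import Counter

# Assumed rule-of-thumb threshold: compressed or encrypted data sits close to
# 8 bits per byte, ordinary code and text usually well below. Tune per corpus.
PACKED_THRESHOLD = 7.2


def shannon_entropy(data: bytes) -> float:
    """Entropy in bits per byte: H = sum(p * log2(1/p)) with p = count / n."""
    if not data:
        return 0.0
    n = len(data)
    return sum(c * math.log2(n / c) for c in Counter(data).values()) / n


def looks_packed(section: bytes) -> bool:
    return shannon_entropy(section) >= PACKED_THRESHOLD


print(shannon_entropy(b"\x00" * 64))       # 0.0
print(shannon_entropy(bytes(range(256))))  # 8.0
```

A high value only says the bytes look random; it cannot tell compression from encryption, so it points to where unpacking effort should go rather than identifying the packer.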

Q 7. Describe your experience with different programming languages commonly used in reverse engineering (e.g., C, C++, Assembly).

Proficiency in C, C++, and Assembly is paramount in reverse engineering. C and C++ are frequently used to develop software, so understanding their compilation processes and common coding styles is crucial to interpret decompiled or disassembled code.

Assembly language is the foundation of reverse engineering, providing a low-level view of the code’s execution. My expertise in x86 and x64 assembly allows me to understand the inner workings of programs, identifying crucial operations and data manipulations. I frequently use assembly to fix bugs or patch vulnerabilities directly within binary files.

Beyond these, familiarity with other languages like Python is beneficial for scripting and automating tasks within reverse engineering tools. For example, Python is extensively used to write scripts for IDA Pro and Ghidra, automating repetitive tasks like analyzing large codebases or constructing reports.

Q 8. Explain your understanding of different types of memory (stack, heap, data segment).

Understanding memory segments—the stack, heap, and data segment—is fundamental to reverse engineering. Think of them as different areas within a program’s memory where data is stored and managed.

Stack: This is a LIFO (Last-In, First-Out) structure used primarily for managing function calls and local variables. When a function is called, the return address, some of its arguments (depending on the calling convention; on x64 the first few are usually passed in registers), and space for its local variables are placed on the stack. When the function returns, this stack frame is discarded. It’s crucial for tracing function calls and understanding variable lifecycles during reverse engineering. Imagine a stack of plates: the last plate you put on is the first one you take off.

Heap: This is a dynamically allocated memory region. Memory is requested from the heap as needed during program execution, often for objects and large data structures whose size isn’t known at compile time. Memory leaks and heap overflows are common vulnerabilities that can be identified through reverse engineering by analyzing heap allocation and deallocation patterns. Think of the heap as a large pool of available memory, from which you can request chunks as needed.

Data Segment: This holds globally declared variables and constants. It’s initialized at program startup and persists throughout the program’s execution. Analyzing the data segment reveals initial values and program configurations. Think of this as a storehouse of static information for the program.

In reverse engineering, examining these segments helps reconstruct the program’s execution flow and understand how it handles data. For instance, if I see writes that run past the end of an allocated heap chunk, it could indicate a heap buffer overflow vulnerability.

Q 9. How do you handle anti-debugging techniques encountered during reverse engineering?

Anti-debugging techniques are designed to prevent reverse engineers from analyzing code. These techniques range from simple checks for debuggers to sophisticated obfuscation methods. My approach involves a combination of strategies:

Identifying Anti-Debugging Techniques: I use debuggers with features that help identify common anti-debugging tricks, which includes examining system and API calls for unusual behavior. Common examples are calling IsDebuggerPresent(), reading the BeingDebugged flag in the Process Environment Block (PEB), and scanning the process list for the names of known debuggers.

Circumventing Techniques: Once identified, I employ various techniques to bypass them. This might involve patching the code (in a controlled environment, of course!), modifying the debugger’s behavior, or using advanced debugging techniques like hardware breakpoints.

Dynamic Analysis Techniques: I heavily rely on dynamic analysis techniques such as tracing the execution flow of the program. This helps me to understand the flow of execution even if the anti-debugging mechanisms try to obscure certain parts of the code.

Using Specialized Tools: Debuggers (e.g., x64dbg, WinDbg), disassemblers (e.g., IDA Pro), and anti-anti-debugging plugins that hide the debugger from the target (such as ScyllaHide) assist in detecting and overcoming these obstacles.

For example, if a program checks for the presence of a debugger by querying the IsDebuggerPresent() API, I might patch the API call to return false or use a debugger that hides itself from the program. This always needs to be done ethically and responsibly, respecting legal and ethical boundaries.
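IsDebuggerPresent() is Windows-specific. For a runnable illustration on Linux, a well-known equivalent check reads the TracerPid field of /proc/self/status, which is non-zero while a tracer such as gdb or strace is attached. A minimal sketch of the check itself; bypassing it mirrors the Windows case (patch the comparison or intercept the read).

```python
import sys


def tracer_pid(status_text: str) -> int:
    """Parse TracerPid from the text of /proc/<pid>/status (0 means no tracer attached)."""
    for line in status_text.splitlines():
        if line.startswith("TracerPid:"):
            return int(line.split(":", 1)[1])
    raise ValueError("no TracerPid field found")


def being_traced() -> bool:
    """Linux-only: True while a tracer such as gdb or strace is attached to this process."""
    with open("/proc/self/status") as status:
        return tracer_pid(status.read()) != 0


if __name__ == "__main__" and sys.platform.startswith("linux"):
    print("traced" if being_traced() else "not traced")
```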

Q 10. How do you determine the function of a particular piece of code through reverse engineering?

Determining the function of a code snippet involves a combination of static and dynamic analysis. It’s like solving a puzzle, where you piece together clues from different sources.

Static Analysis: This involves examining the code without actually running it. I use disassemblers to translate the machine code into assembly, then look for patterns: API calls, variable usage, data structures, and so on. The context of the code within the surrounding functions is crucial for interpretation. Symbol names and debug information, if present (they rarely are in malicious code), can also be invaluable.

Dynamic Analysis: This involves running the code in a debugger and observing its behavior. I set breakpoints, step through the code, examine registers and memory, and track the flow of execution. This helps me understand the code’s interaction with other parts of the program and external systems. I can input various data to see how the function handles it.

Data Flow Analysis: Tracing the flow of data helps me understand how information is processed. It helps me identify how input variables influence the output and to spot errors or vulnerabilities.

For instance, if I see a series of instructions that involve comparing a string against several known patterns, I might hypothesize that it’s a validation function. Dynamic analysis would then allow me to test different inputs and verify this hypothesis.

Q 11. Describe your experience with using scripting languages (e.g., Python) for automating reverse engineering tasks.

Scripting languages like Python are indispensable in reverse engineering, mainly for automation. I use Python extensively to automate repetitive tasks, increasing efficiency and accuracy. Some examples:

Parsing Disassembly Output: Python can efficiently process the output of disassemblers (like IDA Pro) to extract relevant information, such as function calls or variable types. I can write scripts to automatically generate reports, helping me analyze large volumes of data quickly.

Generating Shellcode: Python can be used to create and modify shellcode (small programs designed to be executed in a target’s environment), which can be useful in penetration testing.

Automating Dynamic Analysis: I can create scripts to automate tasks like setting breakpoints, stepping through code, and examining memory during debugging sessions, thereby drastically reducing the time spent in manual analysis. This is especially useful when dealing with a lot of repetitive analyses.

Binary Patching: Scripts can also automate simple binary patching tasks, making code modifications faster and more repeatable. Patching still calls for extreme caution to avoid introducing unintended errors.

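Example: A simple Python script using Capstone to disassemble a given buffer.

```python
import capstone

# Disassembler for 64-bit x86 code.
md = capstone.Cs(capstone.CS_ARCH_X86, capstone.CS_MODE_64)

# Raw bytes for a RIP-relative load: mov rax, qword ptr [rip + 0]
code = b"\x48\x8b\x05\x00\x00\x00\x00"

# Disassemble as if the bytes were loaded at address 0x1000.
for insn in md.disasm(code, 0x1000):
    print(f"0x{insn.address:x}\t{insn.mnemonic}\t{insn.op_str}")
```

Q 12. Explain the concept of code caves and how they are utilized.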

A code cave is an unused or underutilized region of memory in a program. Think of it as a spare room in a house. Reverse engineers can use code caves to inject their own code. This is often used for instrumentation or to change program behavior.

Utilization:

Instrumentation: Inserting code to log events, track data, or modify variables. This can help in understanding the flow of execution.

Patching: To fix bugs, add features, or change functionality. This is especially useful when the source code is unavailable.

Hooking: To intercept function calls. This allows the injection of code to be executed before or after the original function.

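Finding Code Caves: Identifying suitable code caves requires careful analysis. They often appear as runs of padding bytes (commonly 0x00 or 0xCC) at the end of sections, where each section is padded up to the file alignment. Finding the right location is vital: the cave must be executable at runtime (or be made executable), and overwriting it must not disturb anything the program actually uses, to avoid errors or crashes.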
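Searching for padding-style caves can be automated. A minimal sketch, assuming the raw bytes of one section have already been extracted (for example with a PE or ELF parser, not shown); the section contents are hypothetical, offsets are relative to that buffer and would still need to be mapped to virtual addresses.

```python
from typing import List, Tuple


def find_code_caves(data: bytes, min_size: int = 32, fill: int = 0x00) -> List[Tuple[int, int]]:
    """Return (offset, length) for each run of at least `min_size` bytes equal to `fill`."""
    caves: List[Tuple[int, int]] = []
    run_start = None
    for i, b in enumerate(data):
        if b == fill:
            if run_start is None:
                run_start = i            # a candidate run begins
        elif run_start is not None:
            if i - run_start >= min_size:
                caves.append((run_start, i - run_start))
            run_start = None             # run interrupted by a non-fill byte
    # A run may extend to the very end of the section.
    if run_start is not None and len(data) - run_start >= min_size:
        caves.append((run_start, len(data) - run_start))
    return caves


# Hypothetical section: a little code, 0x00 padding, more code, then 0xCC padding.
section = b"\x55\x48\x89\xe5" + b"\x00" * 40 + b"\xc3" + b"\x00" * 10 + b"\xcc" * 48
print(find_code_caves(section))             # [(4, 40)]
print(find_code_caves(section, fill=0xCC))  # [(55, 48)]
```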

Ethical Considerations: It’s crucial to remember that injecting code into a program without authorization is unethical and, in many cases, illegal.

Q 13. What is the purpose of a control flow graph (CFG) in reverse engineering?

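A Control Flow Graph (CFG) is a graph representation of the possible execution paths through a piece of code. Its nodes are basic blocks (straight-line instruction sequences with a single entry and a single exit), and its edges are the jumps, branches, and fall-throughs between them; relationships between whole functions are captured by a separate call graph. It’s essentially a roadmap of the program.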
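In a CFG built from disassembly, nodes are usually basic blocks and edges are taken branches and fall-throughs. A minimal sketch of that construction over a hypothetical toy instruction format (address, mnemonic, branch target); real tools work on decoded instructions and must also handle indirect jumps such as jmp rax, which this sketch ignores.

```python
from typing import Dict, List, Optional, Tuple

# Toy instruction format (hypothetical, not any tool's API):
# (address, mnemonic, branch target or None)
Instr = Tuple[int, str, Optional[int]]

CONDITIONAL_JUMPS = {"je", "jne", "jz", "jnz", "jg", "jl", "ja", "jb"}


def is_branch(mnem: str) -> bool:
    return mnem == "jmp" or mnem in CONDITIONAL_JUMPS


def build_cfg(instrs: List[Instr]) -> Tuple[Dict[int, List[Instr]], Dict[int, List[int]]]:
    """Split an address-ordered instruction list into basic blocks and link them with edges."""
    # 1. Leaders: the first instruction, every branch target, and every
    #    instruction that directly follows a branch or a return.
    leaders = {instrs[0][0]}
    for i, (_, mnem, target) in enumerate(instrs):
        if is_branch(mnem) and target is not None:
            leaders.add(target)
        if (is_branch(mnem) or mnem == "ret") and i + 1 < len(instrs):
            leaders.add(instrs[i + 1][0])

    # 2. A basic block runs from one leader up to, but not including, the next.
    blocks: Dict[int, List[Instr]] = {}
    current = instrs[0][0]
    for ins in instrs:
        if ins[0] in leaders:
            current = ins[0]
            blocks[current] = []
        blocks[current].append(ins)

    # 3. Edges depend on how each block ends.
    starts = list(blocks)  # already in address order
    edges: Dict[int, List[int]] = {}
    for idx, start in enumerate(starts):
        _, mnem, target = blocks[start][-1]
        fallthrough = starts[idx + 1] if idx + 1 < len(starts) else None
        successors: List[int] = []
        if is_branch(mnem) and target is not None:
            successors.append(target)       # taken branch
        if mnem not in ("jmp", "ret") and fallthrough is not None:
            successors.append(fallthrough)  # fall-through path
        edges[start] = successors
    return blocks, edges


program = [
    (0x00, "cmp", None),
    (0x04, "je", 0x10),
    (0x08, "mov", None),
    (0x0C, "jmp", 0x14),
    (0x10, "xor", None),
    (0x14, "ret", None),
]
blocks, edges = build_cfg(program)
for start, succ in edges.items():
    print(hex(start), "->", [hex(s) for s in succ])
# 0x0 -> ['0x10', '0x8']
# 0x8 -> ['0x14']
# 0x10 -> ['0x14']
# 0x14 -> []
```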

Purpose in Reverse Engineering:

Understanding Program Structure: CFGs help visualize the program’s structure, making it easier to follow loops, branches, and the relationships between different code paths.

Identifying Control Flow Vulnerabilities: Analyzing the CFG can help locate paths where, for example, input reaches a memory copy without passing a bounds check, a pattern that can lead to buffer overflows and, in turn, control flow hijacking.

Data Flow Analysis: CFGs can be used in conjunction with data flow analysis to understand how data moves throughout the program. This is important in identifying data leaks or vulnerabilities where sensitive data is improperly handled.

Automated Analysis: Many reverse engineering tools automatically generate CFGs, greatly assisting in code analysis. This reduces human effort in mapping out the code’s execution paths.

Imagine trying to understand a complex maze. The CFG is like a map of that maze, guiding you through its twists and turns.

Q 14. How do you identify and analyze API calls within reverse-engineered code?

Identifying and analyzing API calls is crucial in reverse engineering, as they reveal how the program interacts with the operating system and other software components. It’s like examining a program’s conversations with its environment.

Methods:

Using Debuggers: Debuggers can be used to set breakpoints on API calls, allowing examination of the arguments passed to and returned from the calls. This shows the context and usage.

String Analysis: Searching for API names within the program’s strings can give clues about the functions being used. This is a good starting point in identifying potential API calls.

Disassembler Analysis: Examining the assembly code for instructions that call system functions or other libraries provides detailed information about the API calls being made.

Import Table (for executables): The import table of an executable file lists all the imported APIs, which is readily available and helpful in getting a high-level overview of the functionality.

Analysis: Once identified, analyzing the arguments and return values provides crucial context about what the program is doing. For example, if I see a program making repeated calls to the CreateFile() API with specific file names, I might suspect it’s accessing or manipulating those files. Knowing the function of the API gives critical information to interpret the program’s behavior.
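The string-analysis method above can be sketched in a few lines, in the spirit of the Unix strings tool. The watchlist and sample buffer are hypothetical, and only ASCII is handled; Windows binaries often store UTF-16LE strings as well, which would need a second pattern.

```python
import re
from typing import List


def extract_strings(data: bytes, min_len: int = 4) -> List[str]:
    """Return runs of printable ASCII at least `min_len` bytes long."""
    pattern = rb"[\x20-\x7e]{%d,}" % min_len
    return [m.group().decode("ascii") for m in re.finditer(pattern, data)]


# Hypothetical watchlist; a real one would be larger and depend on the investigation.
WATCHLIST = {"CreateFileA", "CreateFileW", "VirtualAlloc", "IsDebuggerPresent", "InternetOpenA"}

sample = b"\x00\x01CreateFileW\x00\x90\x90kernel32.dll\x00ab\x00IsDebuggerPresent\x00"
found = extract_strings(sample)
print(found)                                 # ['CreateFileW', 'kernel32.dll', 'IsDebuggerPresent']
print([s for s in found if s in WATCHLIST])  # ['CreateFileW', 'IsDebuggerPresent']
```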

Q 15. Describe your experience with analyzing different file formats (e.g., PE, ELF, Mach-O).

Analyzing different file formats is fundamental to reverse engineering. Each format—like PE (Portable Executable) for Windows, ELF (Executable and Linkable Format) for Linux, and Mach-O (Mach Object) for macOS—has its own structure and conventions. My experience involves understanding these structures to extract valuable information about the software’s functionality and behavior.

For example, with PE files, I’d focus on the header information (like the entry point, sections, imports, and exports) to understand the program’s initialization and dependencies. In ELF files, I’d look at the program header table, section headers, and symbol tables to get a similar understanding. Mach-O files have their own specific segments and load commands. I utilize tools like IDA Pro, Ghidra, and radare2 to aid in this process, visualizing the file structure and disassembling the code to understand the underlying logic.
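A first step common to all three formats is recognizing which one a file is. A minimal sketch based on the documented magic values: ELF files start with 0x7F "ELF", PE files start with an MZ DOS header whose e_lfanew field (offset 0x3C) points to a "PE\0\0" signature, and thin Mach-O files start with one of four magic numbers. Universal (fat) Mach-O binaries, whose magic 0xCAFEBABE is shared with Java class files, are deliberately not handled.

```python
import struct

MACHO_MAGICS = {
    b"\xfe\xed\xfa\xce": "Mach-O 32-bit, big-endian",
    b"\xce\xfa\xed\xfe": "Mach-O 32-bit, little-endian",
    b"\xfe\xed\xfa\xcf": "Mach-O 64-bit, big-endian",
    b"\xcf\xfa\xed\xfe": "Mach-O 64-bit, little-endian",
}


def identify_format(data: bytes) -> str:
    """Guess the executable format from its leading magic bytes."""
    if data[:4] == b"\x7fELF" and len(data) > 4:
        # e_ident[EI_CLASS] at offset 4: 1 = 32-bit, 2 = 64-bit
        return {1: "ELF 32-bit", 2: "ELF 64-bit"}.get(data[4], "ELF (unknown class)")
    if data[:2] == b"MZ" and len(data) >= 0x40:
        # e_lfanew at offset 0x3C holds the file offset of the "PE\0\0" signature
        (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
        if data[pe_offset:pe_offset + 4] == b"PE\x00\x00":
            return "PE"
        return "MZ (DOS header only)"
    return MACHO_MAGICS.get(data[:4], "unknown")
```

From there, each format needs its own parser: the PE optional header and section table, the ELF program and section header tables, or the Mach-O load commands.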

A real-world example: I once analyzed a PE file suspected of being malware. Its imports included network-related APIs that, in the context of the rest of the code, were consistent with malicious behavior, which confirmed my suspicions. The export table revealed the functions the malware exposed to other components, which pointed to how it could spread. This analysis allowed for effective mitigation.

Q 16. How do you deal with memory corruption vulnerabilities?

Memory corruption vulnerabilities, such as buffer overflows, use-after-free, and double-free errors, are critical security flaws. Dealing with them involves a combination of static and dynamic analysis techniques.

Static analysis helps identify patterns that might lead to memory corruption before the code is even executed, using static analyzers on the source code when it is available, or a disassembler and decompiler when it is not. Dynamic analysis uses debuggers like GDB or WinDbg to observe program behavior in real time: by setting breakpoints and tracing memory accesses, you can pinpoint exactly where memory corruption occurs. Tools like Valgrind can also detect memory errors at runtime.

My approach involves a combination of these methods. I start with static analysis to get an overview. Then, I use dynamic analysis to reproduce the vulnerability and examine the state of memory at the precise moment of the corruption. This detailed understanding allows for precise identification of the root cause and facilitates development of effective patches and mitigation strategies.

Q 17. What are some common techniques for software protection and how can they be bypassed?

Software protection techniques are employed to prevent unauthorized access, modification, or reverse engineering. Common techniques include obfuscation, code virtualization, anti-debugging, and software watermarking.

- Obfuscation: Makes the code harder to understand by renaming variables, inserting irrelevant instructions, and using complex control flow.

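- Code Virtualization: Translates parts of the original code into a custom bytecode that is executed by an interpreter (a virtual machine) embedded in the program, so the original instructions never appear directly in the binary.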

- Anti-debugging: Detects debugging tools and attempts to prevent analysis.

- Software Watermarking: Embeds hidden markers to identify the source of the software.

Bypassing these techniques requires a layered approach. For obfuscation, I use deobfuscation tools and techniques to simplify the code. For code virtualization, I’ll often employ dynamic analysis to trace the execution flow within the virtual machine. Anti-debugging techniques need to be carefully circumvented; this might involve patching or using advanced debugging techniques. Finally, identifying and removing software watermarks can be a challenging task, usually requiring a deep understanding of the watermarking algorithm.
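To see why code virtualization resists direct analysis, it helps to look at what the analyst actually faces: a dispatch loop that fetches custom opcodes and jumps to handlers. A minimal sketch of a toy stack VM with hypothetical opcodes; the recorded trace stands in for what a debugger trace of the real handlers would reveal.

```python
from typing import List, Tuple

# Hypothetical opcode values for a toy stack VM. Real protectors define their
# own instruction sets, often randomized per build.
PUSH, ADD, XOR, HALT = 0x01, 0x02, 0x03, 0xFF


def run_vm(bytecode: bytes) -> Tuple[int, List[str]]:
    """Interpret toy bytecode and return (result, execution trace)."""
    stack: List[int] = []
    trace: List[str] = []
    pc = 0
    while True:
        op = bytecode[pc]              # fetch
        if op == PUSH:                 # decode and dispatch to a "handler"
            value = bytecode[pc + 1]
            stack.append(value)
            trace.append(f"{pc:02x}: PUSH {value:#x}")
            pc += 2
        elif op in (ADD, XOR):
            b, a = stack.pop(), stack.pop()
            result = (a + b) & 0xFF if op == ADD else a ^ b
            stack.append(result)
            trace.append(f"{pc:02x}: {'ADD' if op == ADD else 'XOR'} -> {result:#x}")
            pc += 1
        elif op == HALT:
            trace.append(f"{pc:02x}: HALT")
            return stack[-1], trace
        else:
            raise ValueError(f"unknown opcode {op:#x} at {pc:#x}")


result, trace = run_vm(bytes([PUSH, 0x10, PUSH, 0x22, ADD, PUSH, 0x0F, XOR, HALT]))
print("\n".join(trace))
print(hex(result))  # 0x3d
```

Recovering the handler table and the meaning of each opcode from such a trace is what lets an analyst translate the bytecode back into readable logic.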

For example, I’ve successfully bypassed anti-debugging techniques in several instances by modifying the debugger’s behavior or by using a debugger with anti-anti-debugging (stealth) capabilities. This includes patching specific instructions so that common debugging flags go undetected.

Q 18. Explain your experience with hardware reverse engineering, such as chip analysis.

Hardware reverse engineering, particularly chip analysis, is a more challenging but rewarding aspect of the field. It involves physically examining and analyzing the hardware components of a device to understand its functionality. This can range from simple circuit board analysis to detailed microscopic examination of integrated circuits.

My experience includes using techniques such as:

- Decapsulation: Carefully removing the protective packaging from an integrated circuit to expose the internal structure.

- Microscopy: Using optical or electron microscopes to examine the circuit layout and components.

- Logic Analysis: Using logic analyzers to capture and analyze the signals between different parts of the circuit.

- Reverse Engineering tools and equipment: utilizing specialized equipment such as oscilloscopes and protocol analyzers to understand the data streams within the chip.
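As an example of logic analysis, many boards expose a UART debug port, and decoding its captured samples is often a first step. A minimal sketch, assuming the signal is 8N1 UART and the number of samples per bit (sample rate divided by baud rate) is known; the capture is synthetic, and real logic-analyzer software usually ships protocol decoders that do this for you.

```python
from typing import List


def decode_uart(samples: List[int], samples_per_bit: int) -> bytes:
    """Decode 8N1 UART: idle high, start bit 0, 8 data bits LSB first, stop bit 1."""
    out = bytearray()
    i, n = 0, len(samples)
    while i < n:
        if samples[i] == 1:            # idle line
            i += 1
            continue
        # Falling edge marks a start bit; sample every later bit mid-period.
        mid = i + samples_per_bit // 2
        stop = mid + 9 * samples_per_bit
        if stop >= n:
            break                      # frame truncated at the end of the capture
        byte = 0
        for bit in range(8):
            byte |= samples[mid + (bit + 1) * samples_per_bit] << bit
        if samples[stop] == 1:         # valid stop bit; otherwise drop as a framing error
            out.append(byte)
        i = stop + 1
    return bytes(out)


def encode_uart(data: bytes, samples_per_bit: int) -> List[int]:
    """Build a synthetic capture for testing (stand-in for real logic-analyzer samples)."""
    bits = [1, 1]                      # some idle time
    for byte in data:
        bits += [0] + [(byte >> k) & 1 for k in range(8)] + [1]
    bits += [1, 1]
    return [level for bit in bits for level in [bit] * samples_per_bit]


capture = encode_uart(b"OK", samples_per_bit=4)
print(decode_uart(capture, samples_per_bit=4))  # b'OK'
```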

Ethical considerations are paramount here; accessing and analyzing a chip without authorization is illegal. My work in this area is strictly within legally and ethically acceptable bounds.

Q 19. Describe your experience with analyzing network traffic related to malware.

Analyzing network traffic related to malware involves examining the communication patterns between the malware and its command-and-control servers or other external resources. This can reveal important insights into the malware’s behavior, functionality, and the attackers’ infrastructure.

Tools like Wireshark, tcpdump, and specialized network security monitoring systems are invaluable. I focus on identifying suspicious patterns, including unusual ports, protocols, and data payloads. Analyzing the data requires an understanding of various network protocols and common malware communication techniques.
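One pattern worth checking is beaconing: many implants contact their command-and-control server at near-regular intervals. A minimal sketch of that heuristic, assuming connection timestamps to a single host have been exported from Wireshark or tcpdump; the threshold and sample times are hypothetical.

```python
import statistics
from typing import List


def interval_cv(timestamps: List[float]) -> float:
    """Coefficient of variation of the gaps between connections (0 = perfectly regular)."""
    ts = sorted(timestamps)
    gaps = [b - a for a, b in zip(ts, ts[1:])]
    if len(gaps) < 2:
        raise ValueError("need at least three connections")
    mean = statistics.mean(gaps)
    if mean == 0:
        raise ValueError("all connections share one timestamp")
    return statistics.pstdev(gaps) / mean


def looks_like_beacon(timestamps: List[float], max_cv: float = 0.1) -> bool:
    # max_cv is an assumed threshold; malware often adds jitter, so tune it per case.
    return interval_cv(timestamps) <= max_cv


# Hypothetical connection times (seconds) to one external host.
regular = [0.0, 60.1, 119.8, 180.2, 240.0, 299.9]
bursty = [0.0, 2.0, 95.0, 96.5, 400.0, 403.0]
print(looks_like_beacon(regular), looks_like_beacon(bursty))  # True False
```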

A recent case involved a piece of malware that used a sophisticated encryption scheme to communicate with its command-and-control server. By carefully dissecting the network traffic, I was able to identify and subsequently break down the encryption algorithm and reveal the communications between the malware and the attackers. This allowed us to not only understand its actions but also to identify and disrupt the attacker’s infrastructure.

Q 20. How do you document your reverse engineering findings effectively?

Effective documentation is crucial in reverse engineering. My approach uses a multi-faceted strategy combining structured reports with visual aids.

I typically start with a high-level overview of the target system, detailing its overall architecture and functionality. This is followed by detailed descriptions of individual components, algorithms, and data structures. I use diagrams (flowcharts, network graphs, and data structure visualizations) extensively. Disassembly listings (with comments) are included, as are code snippets to highlight significant sections. Detailed logs of experiments and results are maintained. Finally, I often use wikis or dedicated documentation systems to allow for easier collaboration and updates as the project progresses. This meticulous approach ensures that my findings are clear, concise, and easily understandable to others.

Q 21. What are some ethical considerations in reverse engineering?

Ethical considerations are paramount in reverse engineering. It’s crucial to operate within legal and ethical boundaries.

- Consent: Obtain permission from the copyright holder before reverse engineering software or hardware, unless the work clearly falls under a legal exception that applies in your jurisdiction. Reverse engineering without authorization can lead to legal issues.

- Purpose: The purpose of reverse engineering must be ethically sound. It’s inappropriate to use the acquired knowledge for malicious purposes such as creating malware or exploiting vulnerabilities for personal gain.

- Confidentiality: Respect the confidentiality of any information discovered during the process, especially if it relates to sensitive data or intellectual property.

- Transparency: It is important to be transparent and open about the reverse engineering process and findings, particularly when working with others.

In my work, I always adhere to these principles. I only undertake projects where I have explicit permission or when it is legally permissible, for example, in security research to identify vulnerabilities and develop solutions.

Q 22. Explain your experience working with various debugging tools and techniques.

My experience with debugging tools spans a wide range, from the ubiquitous GDB (GNU Debugger) and LLDB (the LLVM project’s debugger) for low-level analysis to more specialized tools like IDA Pro (Interactive Disassembler) for reverse engineering and WinDbg for Windows kernel-level debugging. I’ve also extensively used debuggers integrated into IDEs such as Visual Studio and Eclipse. My techniques involve setting breakpoints to halt execution at specific points in the code, examining memory contents using memory watches, stepping through code line by line (single stepping), and using call stacks to trace function calls. For instance, while analyzing a piece of software exhibiting unexpected behavior, I might set a breakpoint at the function suspected to be causing the issue and then step through its execution, examining registers and memory to identify the source of the problem. This allows me to pinpoint issues such as incorrect calculations or unintended memory access.

Beyond traditional debuggers, I’m proficient in using dynamic analysis techniques. This includes employing tools like strace (on Linux) or Process Monitor (on Windows) to observe system calls made by a program, revealing file access patterns, network connections, and other interactions with the operating system. This is incredibly useful in understanding the runtime behavior of a program and can help identify malicious activities or unexpected side effects. For example, I used strace to identify a seemingly benign program’s attempt to connect to a suspicious IP address, revealing a hidden backdoor.
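The strace workflow can be partly automated. A minimal sketch that pulls IPv4 connect() targets out of a log, assuming it was captured with something like strace -f -e trace=connect -o trace.log ./target and that lines follow strace’s usual formatting (which can vary between versions). IPv6 connections are not matched, and the sample log is hypothetical and uses documentation-range addresses.

```python
import re
from typing import List, Tuple

# Matches IPv4 connect() lines as strace prints them, e.g.
# connect(3, {sa_family=AF_INET, sin_port=htons(443), sin_addr=inet_addr("203.0.113.7")}, 16) = 0
CONNECT_RE = re.compile(
    r'connect\(\d+, \{sa_family=AF_INET, sin_port=htons\((\d+)\), '
    r'sin_addr=inet_addr\("([\d.]+)"\)\}'
)


def connections(strace_log: str) -> List[Tuple[str, int]]:
    """Return (ip, port) for every IPv4 connect() attempt in a strace log."""
    return [(m.group(2), int(m.group(1))) for m in CONNECT_RE.finditer(strace_log)]


log = """\
openat(AT_FDCWD, "/etc/hosts", O_RDONLY|O_CLOEXEC) = 3
connect(3, {sa_family=AF_INET, sin_port=htons(53), sin_addr=inet_addr("192.0.2.53")}, 16) = 0
[pid  4242] connect(5, {sa_family=AF_INET, sin_port=htons(8443), sin_addr=inet_addr("203.0.113.7")}, 16) = -1 EINPROGRESS (Operation now in progress)
"""
print(connections(log))  # [('192.0.2.53', 53), ('203.0.113.7', 8443)]
```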

Q 23. Describe your approach to analyzing encrypted data.

Analyzing encrypted data requires a multifaceted approach. My initial step involves identifying the encryption algorithm used. This can often be inferred from the context of the data and the application that uses it. Common indicators might include specific file headers or known encryption libraries linked within the software. Once identified, the next step depends heavily on the type of encryption.

For symmetric encryption algorithms (like AES or DES), where the same key is used for encryption and decryption, I would explore possibilities like brute-forcing the key (feasible only for short or weak keys, such as 56-bit DES keys or keys derived from weak passwords; a properly generated AES key cannot be brute-forced), finding the key embedded within the program, or searching for vulnerabilities in the encryption process. For asymmetric encryption (like RSA), which involves public and private keys, recovering the private key is generally much harder. I might investigate weaknesses in the key generation process or explore known vulnerabilities in the specific implementation being used.

In cases where the encryption algorithm is unknown, I might try frequency analysis (for simple substitution ciphers), or explore using tools that can identify common encryption algorithms based on patterns in the ciphertext. I always try to understand the context of the data, such as where it was found, what program it’s associated with, and any metadata associated with it, as this often provides valuable clues.
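A concrete case of frequency analysis: single-byte XOR, often used for light obfuscation in malware, is a simple substitution cipher, so trying all 256 keys and scoring each output for English-like characters usually recovers the key. A minimal sketch with a crude, assumed scoring function; it does not apply to real ciphers such as AES.

```python
from typing import Tuple

# Rough scoring set of frequent English characters (an assumption, not a full frequency table).
COMMON = b" etaoinshrdlu"


def english_score(candidate: bytes) -> float:
    """Share of frequent English characters; -1.0 if empty or any byte is non-printable."""
    if not candidate or any(not (32 <= b < 127 or b in b"\t\r\n") for b in candidate):
        return -1.0
    return sum(b in COMMON for b in candidate.lower()) / len(candidate)


def crack_single_byte_xor(ciphertext: bytes) -> Tuple[int, bytes]:
    """Try all 256 keys; keep the one whose output scores as most English-like."""
    best_key = max(range(256), key=lambda k: english_score(bytes(c ^ k for c in ciphertext)))
    return best_key, bytes(c ^ best_key for c in ciphertext)


secret = bytes(b ^ 0x42 for b in b"the quick brown fox jumps over the lazy dog")
key, plaintext = crack_single_byte_xor(secret)
print(hex(key), plaintext)  # 0x42 b'the quick brown fox jumps over the lazy dog'
```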

Q 24. How do you handle software that employs self-modifying code?

Self-modifying code presents a significant challenge in reverse engineering, as the code’s behavior changes dynamically during execution. My approach involves a combination of static and dynamic analysis techniques. Static analysis, which examines the code without executing it, is crucial to understand the initial structure and identify any self-modification routines. I might use disassemblers like IDA Pro to analyze the code and identify areas where self-modification occurs.

Dynamic analysis is equally important for tracking these changes. I might use debuggers like GDB or LLDB to step through the code’s execution, carefully monitoring memory changes. Techniques like memory breakpoints can be particularly helpful in detecting modifications to specific code segments. Furthermore, I would try to trace the modification routines to understand their logic and ultimately reconstruct the original code or a stable representation of the running code at a particular point in time.
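
Tracking modifications often comes down to comparing the same code region at two points in time, for instance memory dumps taken with GDB’s `dump memory` command before and after a suspected decryption routine runs. This sketch reports each contiguous run of changed bytes with its address. The dump workflow and the example base address are assumptions.

```python
from typing import List, Tuple


def diff_snapshots(before: bytes, after: bytes, base: int) -> List[Tuple[int, bytes, bytes]]:
    """Return (address, old bytes, new bytes) for each contiguous run of modified bytes."""
    if len(before) != len(after):
        raise ValueError("snapshots must cover the same region")
    changes = []
    i = 0
    while i < len(before):
        if before[i] != after[i]:
            start = i
            # Extend the run while bytes keep differing.
            while i < len(before) and before[i] != after[i]:
                i += 1
            changes.append((base + start, before[start:i], after[start:i]))
        else:
            i += 1
    return changes
```

The reported addresses are natural places for hardware write breakpoints on the next run, and the `after` snapshot can be loaded back into the disassembler as a stable view of the modified code.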

Imagine a program that patches its own code to evade detection by anti-virus software. By using dynamic analysis and watching the memory regions during execution, I can identify the locations where the self-modification takes place and possibly determine the patched instructions, thereby understanding how it avoids detection. This would then help in the design of mitigation techniques.

Q 25. What are some common challenges you face during reverse engineering projects?

Reverse engineering projects often present numerous challenges. One common problem is dealing with obfuscation techniques used by developers to deliberately hinder analysis. This can involve code packing, encryption of code sections, and use of complex control-flow structures to make it difficult to understand the program’s logic.
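
Packed or encrypted code sections tend to look statistically random, so a common first check is to compute each section’s Shannon entropy in bits per byte. In this sketch, the 7.2 threshold is a rule-of-thumb assumption rather than a standard value. Compressed resources can also score high, so a high score is a reason to look closer, not a verdict.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Entropy in bits per byte: 0.0 for constant data, up to 8.0 for uniformly spread bytes."""
    if not data:
        return 0.0
    total = len(data)
    return -sum((count / total) * math.log2(count / total) for count in Counter(data).values())


def looks_packed(section: bytes, threshold: float = 7.2) -> bool:
    """Heuristic flag: compressed or encrypted data usually scores close to 8 bits per byte."""
    return shannon_entropy(section) >= threshold
```

Applied to the raw bytes of each section, a code section scoring above the threshold suggests that an unpacking stub rebuilds the real code at run time.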

Another significant challenge is handling different architectures and operating systems. A program compiled for an ARM processor, for example, will require different tools and techniques compared to one compiled for an x86 processor. Furthermore, the increasing sophistication of software, including the heavy use of dynamic libraries and virtual machines, makes analysis more complex.
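
Before choosing tools, it helps to confirm the target architecture from the file header itself. For ELF binaries, the `e_machine` field at offset 18 identifies the processor. This sketch decodes a few common values (the mapping is deliberately partial) and respects the endianness byte in `e_ident`. PE files carry the equivalent information in the `Machine` field of the COFF file header.

```python
import struct

# A few e_machine values from the ELF specification.
ELF_MACHINES = {3: "x86", 40: "ARM", 62: "x86-64", 183: "AArch64"}


def elf_architecture(header: bytes) -> str:
    """Read e_machine from an ELF header to decide which disassembler mode to use."""
    if header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    # EI_DATA (byte 5 of e_ident): 1 = little-endian, 2 = big-endian.
    endian = "<" if header[5] == 1 else ">"
    # e_ident is 16 bytes and e_type is 2 bytes, so e_machine starts at offset 18.
    (machine,) = struct.unpack_from(endian + "H", header, 18)
    return ELF_MACHINES.get(machine, f"unknown (e_machine={machine})")
```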

Finally, time constraints and limited resources are often significant challenges. A thorough analysis can be time-consuming, demanding patience, meticulous attention to detail, and strong problem-solving skills. This is exacerbated by the constant evolution of software development techniques and obfuscation methods.

Q 26. Describe your experience with different types of malware and how to analyze them.

My experience encompasses various malware families, from simple viruses and worms to sophisticated rootkits and ransomware. The analysis process typically begins with careful containment within a sandboxed environment to prevent infection of the analysis system. Then, I use a combination of static and dynamic analysis techniques.

Static analysis involves using disassemblers to examine the malware’s code to identify its functions and capabilities. This helps to understand the overall structure and functionalities without actually executing the code. Dynamic analysis, on the other hand, involves executing the malware in a controlled environment, using tools like debuggers and sandboxes to monitor its behavior and network communications. I might use tools that capture system calls and network traffic to identify its malicious actions such as file deletion, data exfiltration, or attempts to communicate with command-and-control servers.
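
Alongside disassembly, extracting printable strings is a quick static triage step that often exposes URLs, IP addresses, and suspicious API names before any code is read. The sketch below mimics the Unix `strings` tool for ASCII text and flags a couple of indicator patterns. The regular expressions are illustrative assumptions and will miss obfuscated or UTF-16 strings.

```python
import re
from typing import List, Tuple


def extract_strings(blob: bytes, min_len: int = 4) -> List[str]:
    """Return runs of printable ASCII at least `min_len` characters long, like `strings`."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [match.group().decode("ascii") for match in pattern.finditer(blob)]


# Illustrative indicator patterns; real triage uses much richer rule sets.
INDICATORS = {
    "url": re.compile(r"https?://\S+"),
    "ipv4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


def flag_indicators(strings: List[str]) -> List[Tuple[str, str]]:
    """Return (indicator kind, matched text) for every indicator found in the strings."""
    hits = []
    for text in strings:
        for kind, regex in INDICATORS.items():
            hits.extend((kind, match.group()) for match in regex.finditer(text))
    return hits
```

Hits such as a hard-coded URL or an API name like `CreateRemoteThread` give the disassembly work a starting point: cross-references to those strings usually lead to the relevant functions.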

For example, when analyzing ransomware, I’d focus on identifying the encryption algorithm, the key generation process, and where the encrypted data is stored. For a botnet component, I’d concentrate on analyzing its communication channels with the command-and-control server to learn about its functionalities and its communication patterns. Every malware analysis requires a tailored approach based on its specific functionality and methods of operation.

Q 27. Explain your approach to identifying and analyzing rootkits.

Rootkits are designed to hide their presence from the operating system, so identifying and analyzing them requires a thorough approach. The initial step is typically to use memory forensics tools to search system memory for signs of rootkit activity, such as hidden processes, drivers, or other components. I often use Volatility, a memory forensics framework, for this.
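
A core idea behind memory-forensic rootkit detection is cross-view comparison: enumerate the same objects in two different ways and look for disagreements. In Volatility, for example, `pslist` walks the kernel’s active process list while `psscan` scans memory for process structures, so a process that a rootkit has unlinked from the list can appear in only one view. The sketch below performs that comparison on PID sets already extracted from each plugin’s output. The input format is an assumption, not a Volatility API.

```python
from typing import Dict, Set


def hidden_processes(views: Dict[str, Set[int]]) -> Dict[int, Set[str]]:
    """Map each PID that some view misses to the set of view names that missed it.

    `views` maps an enumeration method (e.g. "pslist") to the PIDs it reported.
    """
    all_pids: Set[int] = set().union(*views.values())
    report: Dict[int, Set[str]] = {}
    for pid in sorted(all_pids):
        missing = {name for name, pids in views.items() if pid not in pids}
        if missing:
            report[pid] = missing
    return report
```

Not every discrepancy is malicious: `psscan` also finds processes that have already exited, so each reported PID still needs manual review.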

Static analysis of system files, such as drivers and bootloaders, is essential to identify any modifications that might have been made by the rootkit. Comparing these files against known clean versions is critical for detection. Dynamic analysis, including using system call monitors, helps to uncover any unusual system calls or activities that may suggest a rootkit’s presence. I might also run integrity checks on critical system files to pinpoint any unauthorized changes.
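
Comparing system files against known clean versions can be automated with cryptographic hashes. This sketch hashes files with SHA-256 and diffs two `{path: digest}` maps, reporting files that changed, disappeared, or appeared. It assumes the baseline was recorded on a known-clean system. The check should also be run offline or from trusted boot media, because a kernel rootkit on a live system can intercept file reads and return clean contents.

```python
import hashlib
from pathlib import Path
from typing import Dict, Iterable, List


def sha256_file(path: Path) -> str:
    """Hash a file in chunks so large drivers or images need not fit in memory."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(paths: Iterable[str]) -> Dict[str, str]:
    """Record {path: SHA-256 digest}; missing files are omitted, so they show up as removed."""
    return {path: sha256_file(Path(path)) for path in paths if Path(path).is_file()}


def find_modified(baseline: Dict[str, str], current: Dict[str, str]) -> List[str]:
    """Return paths whose digest differs or that exist in only one of the two maps."""
    return sorted(p for p in set(baseline) | set(current) if baseline.get(p) != current.get(p))
```

For example, `find_modified(baseline, snapshot(baseline))` rechecks exactly the files recorded in the baseline.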

Furthermore, I might employ behavioral analysis techniques by observing the system’s behavior for anomalies. For example, a sudden drop in system performance or unusual network activity could indicate a rootkit communicating with a command-and-control server. Once a rootkit has been identified, removing it typically involves carefully restoring system files to their clean states and removing any malicious processes or drivers.

Q 28. How do you prioritize tasks and manage your time effectively during a reverse engineering project?

Effective task prioritization and time management are vital in reverse engineering. My approach starts with a thorough understanding of the project’s objectives and scope. I break down the project into smaller, manageable tasks, focusing on the most critical ones first. This often involves prioritizing tasks based on their potential impact and complexity. For example, if dealing with a large binary, I might prioritize analyzing the entry point and critical functions before delving into less crucial code sections.

I use project management tools and techniques to track progress, deadlines, and dependencies between tasks. This ensures that I stay organized and focused on achieving the project goals. Regular reviews of my progress help identify potential delays or obstacles early on, allowing for adjustments to the schedule and task prioritization. Effective communication with stakeholders is also key, ensuring everyone is aligned with the progress and any emerging challenges.

In a real-world scenario, I might use a Kanban board to visually manage tasks, color-coding them based on priority. This visual representation helps maintain a clear overview of the progress and allows for adjustments to task order based on new information or shifting priorities during the project. The ability to adjust based on new findings is essential in reverse engineering due to its inherent exploratory nature.

Key Topics to Learn for Experience with Reverse Engineering Techniques Interview

- Disassembly and Decompilation: Understanding the process of converting machine code back into a higher-level representation (assembly or source code). Practical application: Analyzing malware for malicious behavior.

- Static Analysis: Examining software without executing it. Techniques include inspecting binaries, disassembling and decompiling code, and employing static analysis tools. Practical application: Identifying vulnerabilities in software before deployment.

- Dynamic Analysis: Observing software behavior during execution. This involves using debuggers, tracing tools, and monitoring system calls. Practical application: Debugging runtime errors and identifying memory leaks.

- Software Security Principles: Understanding common vulnerabilities (buffer overflows, injection flaws, etc.) and how they can be exploited. Practical application: Designing more secure software and identifying weaknesses in existing systems.

- Debugging and Troubleshooting: Mastering the art of identifying and resolving software issues through various debugging techniques. Practical application: Effective problem-solving and root cause analysis in complex software systems.

- Specific Tools and Technologies: Familiarity with common reverse engineering tools (e.g., IDA Pro, Ghidra, radare2) and their functionalities. Practical application: Efficiently navigating and analyzing different software types.

- Operating System Internals: A solid grasp of how operating systems work, including memory management, process scheduling, and system calls. Practical application: Understanding the underlying mechanisms exploited by malware and vulnerabilities.

- Programming Language Knowledge: Proficiency in at least one programming language (C, C++, Python, etc.) is essential for effective reverse engineering. Practical application: Writing scripts to automate analysis tasks and enhance efficiency.

Next Steps

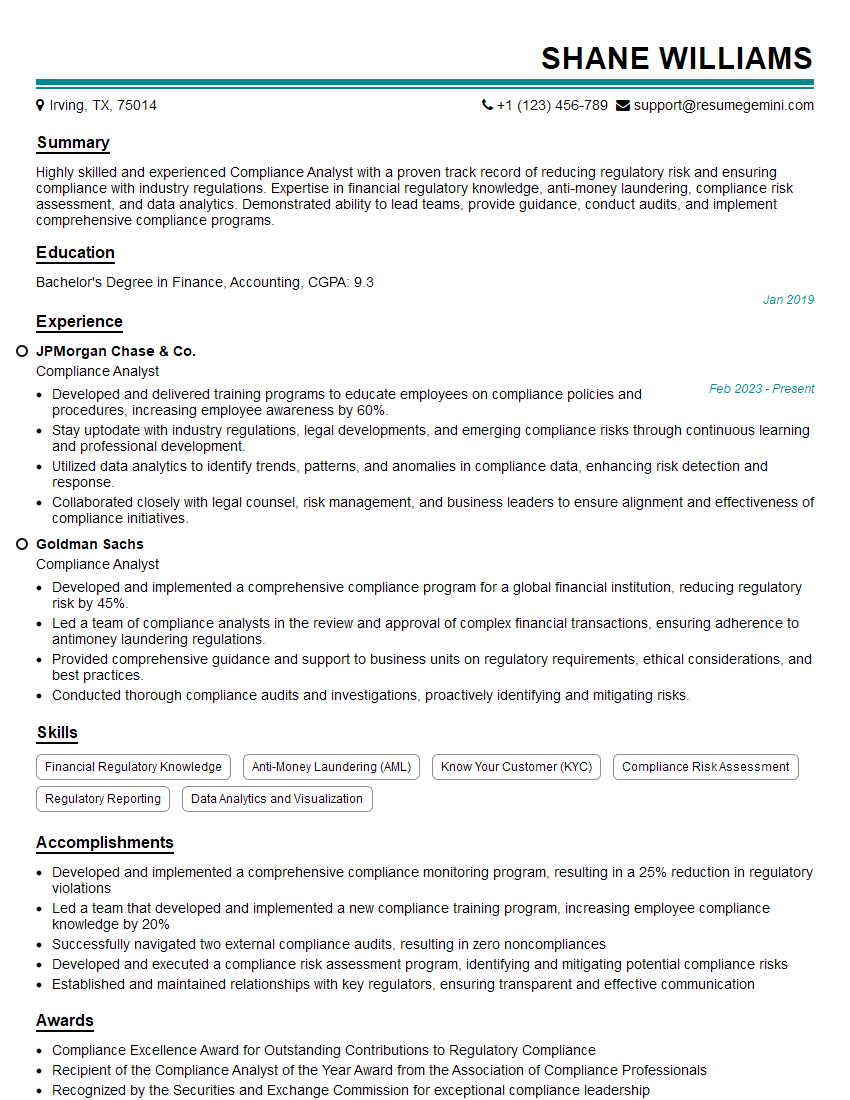

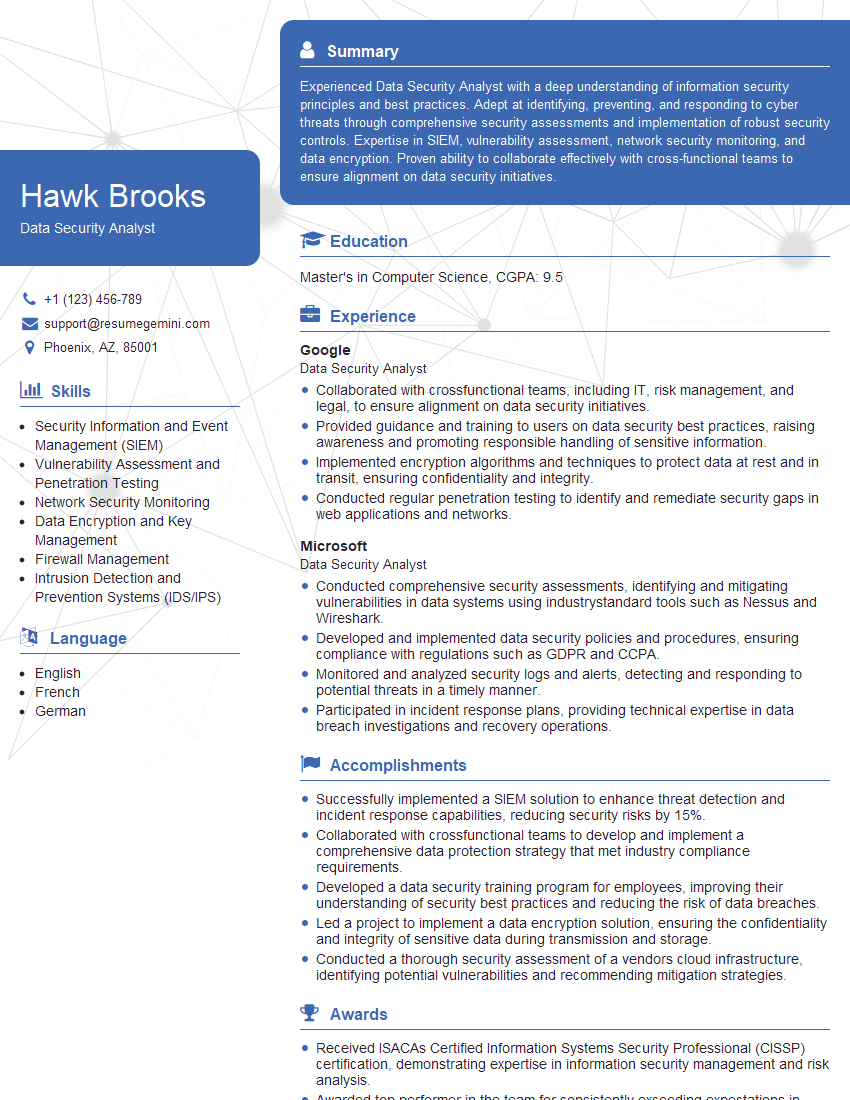

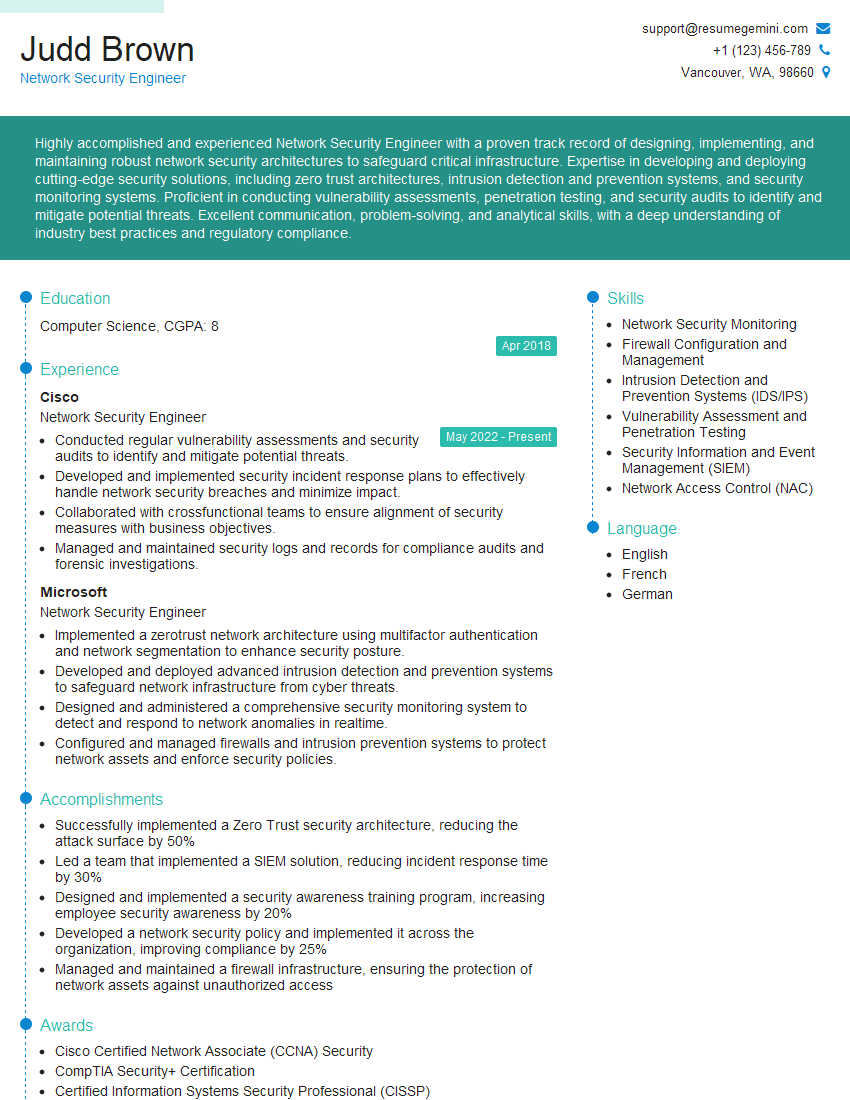

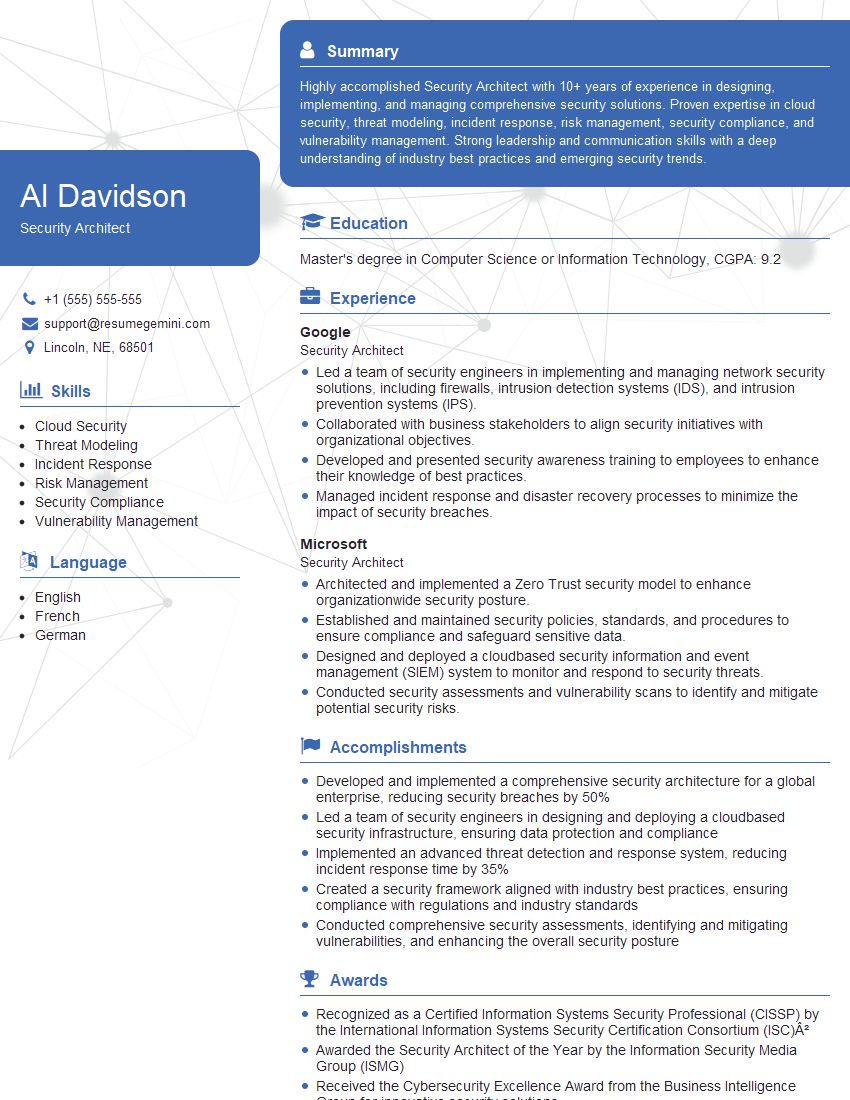

Mastering reverse engineering techniques significantly enhances your problem-solving skills and opens doors to exciting roles in cybersecurity, software development, and digital forensics. A strong understanding of these techniques is highly valued by employers and can significantly boost your career trajectory. To maximize your job prospects, focus on building an ATS-friendly resume that effectively showcases your expertise. ResumeGemini is a trusted resource to help you create a compelling and professional resume. Examples of resumes tailored to highlight experience with reverse engineering techniques are available to further guide you.
