The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Target Classification and Identification interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Target Classification and Identification Interview

Q 1. Explain the different methodologies used in target classification.

Target classification methodologies vary depending on the available data and the desired level of detail. Broadly, they fall into several categories:

- Signature-based classification: This relies on identifying unique characteristics or signatures of a target. For example, recognizing a specific type of aircraft based on its radar signature or a particular vehicle based on its engine sound. This method is efficient when signatures are well-defined and easily measurable.

- Machine learning-based classification: This approach uses algorithms to learn patterns from large datasets of target features. For instance, a neural network could be trained on images of various vehicles to classify them automatically. Machine learning excels when dealing with complex, high-dimensional data where manual signature definition is difficult.

- Knowledge-based classification: This method relies on expert knowledge and rules to classify targets. For example, a human analyst might use intelligence reports and geographical information to identify a specific building as a potential command center. This is valuable when dealing with incomplete or ambiguous data, but it can be subjective and time-consuming.

- Hybrid approaches: Often, a combination of these methods yields the most robust results. For example, a system might use machine learning for initial classification, followed by human review and knowledge-based refinement to ensure accuracy.

The choice of methodology depends heavily on factors such as the nature of the data, available resources (computational power, expert knowledge), and the desired level of accuracy and speed.
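A signature-based classifier can be sketched as a nearest-match lookup against a library of reference signatures. The sketch is illustrative only. The library entries, the three-element feature vectors and the `tolerance` value are all hypothetical, and real signature libraries are far richer. Rejecting any match above the tolerance reflects the point made above: signatures work well only when they are well defined.

```python
import math

# Hypothetical signature library: class label -> reference feature vector
# (e.g. normalized length, normalized width, normalized peak radar return).
SIGNATURE_LIBRARY = {
    "fighter_jet": [0.2, 0.15, 0.9],
    "transport_aircraft": [0.8, 0.7, 0.6],
    "helicopter": [0.3, 0.3, 0.4],
}


def match_signature(observed, library=SIGNATURE_LIBRARY, tolerance=0.25):
    """Return (label, distance) for the closest reference signature, or
    ("unknown", distance) if even the best match exceeds the tolerance."""
    best_label, best_dist = None, math.inf
    for label, reference in library.items():
        dist = math.dist(observed, reference)  # Euclidean distance
        if dist < best_dist:
            best_label, best_dist = label, dist
    if best_dist > tolerance:
        return "unknown", best_dist
    return best_label, best_dist


print(match_signature([0.22, 0.14, 0.88]))  # very close to the fighter_jet entry
print(match_signature([0.9, 0.1, 0.1]))     # far from every entry
```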

Q 2. Describe the process of target identification from sensor data.

Target identification from sensor data is a multi-step process. It begins with data acquisition from various sensors (radar, electro-optical, acoustic, etc.). This raw data then undergoes several stages:

- Preprocessing: This involves cleaning and normalizing the data to remove noise and artifacts. This might include filtering, smoothing, and calibration.

- Feature extraction: Relevant features are extracted from the preprocessed data. These features could include size, shape, speed, trajectory, spectral signature, or other relevant attributes depending on the sensor type and the target of interest.

- Classification: Using one of the methodologies described in the previous question (signature-based, machine learning, etc.), the extracted features are used to classify the target into a specific category (e.g., type of aircraft, type of vehicle).

- Identification: This final step involves pinpointing the specific identity of the target. For example, distinguishing a specific model of aircraft or a particular individual based on unique identifying features like registration numbers or biometric data. This often requires corroboration with other intelligence sources.

Imagine trying to identify a car from a distance: you might first classify it as a ‘car’ based on its overall shape, then identify it as a ‘red sedan’ based on colour and body style, and finally identify its specific make and model by examining details like the headlights or logo.
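The four stages can be chained as a toy pipeline over a one-dimensional sensor trace. Everything here is an assumption made for illustration:

- Moving-average smoothing stands in for preprocessing.
- Three simple statistics stand in for feature extraction.
- The classification rules and the identity `registry` are hypothetical.

```python
import statistics


def preprocess(samples, window=3):
    """Preprocessing: moving-average smoothing to suppress noise."""
    half = window // 2
    smoothed = []
    for i in range(len(samples)):
        segment = samples[max(0, i - half): i + half + 1]
        smoothed.append(sum(segment) / len(segment))
    return smoothed


def extract_features(smoothed, detection_level=0.5):
    """Feature extraction: peak, mean, and number of samples above an
    assumed detection level (a crude measure of target extent)."""
    return {
        "peak": max(smoothed),
        "mean": statistics.fmean(smoothed),
        "extent": sum(1 for s in smoothed if s > detection_level),
    }


def classify(features):
    """Classification: hypothetical rules mapping features to a category."""
    if features["extent"] >= 4 and features["peak"] > 2.0:
        return "large vehicle"
    if features["extent"] >= 1:
        return "small vehicle"
    return "no target"


def identify(category, features, registry):
    """Identification: find a registered instance of this category whose known
    peak amplitude is close to the observed one (hypothetical registry)."""
    for name, (reg_category, reg_peak) in registry.items():
        if reg_category == category and abs(reg_peak - features["peak"]) < 0.2:
            return name
    return None  # classified, but not individually identified


raw = [0.1, 0.2, 2.4, 2.6, 2.5, 2.7, 2.3, 0.2, 0.1]
registry = {"unit-A": ("large vehicle", 2.5), "unit-B": ("small vehicle", 0.9)}
feats = extract_features(preprocess(raw))
category = classify(feats)
print(category, identify(category, feats, registry))
```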

Q 3. How do you assess the reliability of different intelligence sources for target identification?

Assessing the reliability of intelligence sources is crucial for accurate target identification. We employ a multi-faceted approach:

- Source credibility: We evaluate the past performance and track record of the source. A source with a history of providing accurate information is considered more reliable than one with a history of inaccuracies or misinformation.

- Source corroboration: We cross-reference information from multiple independent sources to confirm its accuracy. If several independent sources report the same information, the reliability is significantly increased.

- Data validation: We verify the information against other available data sources, such as open-source intelligence (OSINT), geospatial data, and other sensor readings. Inconsistencies may indicate unreliable information.

- Bias identification: We assess the potential biases of the source, recognizing that sources may be influenced by political, ideological, or other factors that could distort the information.

- Methodological rigor: We evaluate the methods employed by the source to collect and process information. Rigorous methodologies increase confidence in the reliability of the information.

Think of it like a jury considering evidence: multiple witnesses supporting the same account are more believable than a single, potentially biased witness. We use a similar process of careful consideration and corroboration to make judgements about the reliability of intelligence sources.
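Two of these checks, track record and corroboration, lend themselves to a simple scoring sketch. It makes three assumptions:

- Each source's history is recorded as counts of confirmed and refuted reports.
- Laplace smoothing is used (an illustrative choice, not a standard), so a source with no history starts at 0.5.
- The listed sources are independent. In practice this must be verified.

Bias and methodology still require analyst judgement.

```python
from dataclasses import dataclass


@dataclass
class SourceRecord:
    """Hypothetical track record for one intelligence source."""
    name: str
    confirmed: int  # past reports later confirmed accurate
    refuted: int    # past reports later shown to be wrong


def track_record_score(src: SourceRecord) -> float:
    """Laplace-smoothed accuracy estimate, (confirmed + 1) / (total + 2).
    A source with no history scores 0.5 instead of 0 or 1."""
    return (src.confirmed + 1) / (src.confirmed + src.refuted + 2)


def corroborated(claim, reports, sources, min_sources=2, min_score=0.6):
    """Accept `claim` only if at least `min_sources` sufficiently reliable
    sources report it. `reports` maps source name -> reported value.
    Assumes the sources are independent (not copies of one original report)."""
    supporting = [
        name for name, value in reports.items()
        if value == claim and track_record_score(sources[name]) >= min_score
    ]
    return len(supporting) >= min_sources, supporting


sources = {
    "imagery": SourceRecord("imagery", confirmed=18, refuted=2),
    "informant": SourceRecord("informant", confirmed=3, refuted=5),
    "signals": SourceRecord("signals", confirmed=9, refuted=1),
}
reports = {"imagery": "command post", "informant": "command post", "signals": "command post"}
print(corroborated("command post", reports, sources))
```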

Q 4. What are the key characteristics used to classify targets?

Key characteristics used to classify targets depend heavily on the context and the available data. Some common characteristics include:

- Physical attributes: Size, shape, color, texture, material composition, unique markings or features.

- Behavioral attributes: Speed, direction of movement, trajectory, patterns of activity, communication signals.

- Functional attributes: Intended purpose, operational capabilities, role within a larger system (e.g., command and control, weapons deployment).

- Temporal attributes: Time of appearance, duration of activity, frequency of appearance.

- Spatial attributes: Location, geographic coordinates, proximity to other targets.

- Spectral attributes: Infrared, radar, acoustic, or electromagnetic signatures.

For example, classifying an aircraft might involve its size, shape, radar cross-section, flight pattern, and the presence of specific weapons systems.

Q 5. Discuss the challenges of classifying targets in complex environments.

Classifying targets in complex environments presents several challenges:

- Clutter and occlusion: Obstructions such as buildings, trees, or weather conditions can obscure the target, making it difficult to obtain clear observations.

- Camouflage and deception: Targets may be designed to blend in with their surroundings or actively deceive sensors.

- Data ambiguity: Incomplete or conflicting sensor data can lead to uncertainty in classification.

- Dynamic environments: The target’s characteristics or behavior may change rapidly over time, leading to challenges in tracking and classification.

- Computational complexity: Processing large amounts of data from multiple sensors in real-time can be computationally intensive.

Imagine trying to identify a single soldier in a crowded marketplace – the clutter and movement make it significantly harder than identifying a large tank in an open field. Overcoming these challenges often requires advanced signal processing techniques, sophisticated algorithms, and careful integration of multiple intelligence sources.

Q 6. Explain the concept of target prioritization.

Target prioritization involves ranking targets based on their importance and urgency. This involves considering various factors:

- Threat level: The potential damage or harm the target can inflict.

- Vulnerability: The ease with which the target can be neutralized or disrupted.

- Value: The strategic or operational importance of the target.

- Urgency: The time sensitivity of addressing the threat posed by the target.

- Feasibility: The likelihood of successfully engaging the target given available resources and capabilities.

A simple example: In a military context, a high-value target like an enemy command center might be prioritized over a lower-value target like a supply depot, even if the supply depot is more vulnerable. Prioritization is a critical step in resource allocation and mission planning.
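One common way to combine these factors is a weighted sum. The sketch assumes each factor is rated on a 0 to 1 scale and uses hypothetical weights. It reproduces the ordering in the example above, where the command center outranks the more vulnerable supply depot.

Real prioritization schemes may differ. For example, they may treat some factors as hard constraints rather than trading them off against each other.

```python
# Hypothetical weights; in practice these would come from doctrine or guidance.
WEIGHTS = {"threat": 0.3, "value": 0.3, "urgency": 0.2,
           "vulnerability": 0.1, "feasibility": 0.1}


def priority_score(target):
    """Weighted sum of factor ratings, each assumed to be on a 0-1 scale."""
    return sum(WEIGHTS[factor] * target[factor] for factor in WEIGHTS)


targets = {
    "command_center": {"threat": 0.9, "value": 0.95, "urgency": 0.7,
                       "vulnerability": 0.3, "feasibility": 0.6},
    "supply_depot": {"threat": 0.3, "value": 0.5, "urgency": 0.4,
                     "vulnerability": 0.9, "feasibility": 0.9},
}

ranked = sorted(targets, key=lambda name: priority_score(targets[name]), reverse=True)
for name in ranked:
    print(f"{name}: {priority_score(targets[name]):.3f}")
```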

Q 7. How do you handle uncertainty and ambiguity in target classification?

Uncertainty and ambiguity in target classification are inherent challenges. We use several strategies to handle them:

- Probabilistic approaches: Instead of assigning a single definitive classification, we assign probabilities to different possible classifications. This allows us to quantify our uncertainty.

- Data fusion: Combining data from multiple sensors or sources can reduce uncertainty and resolve ambiguities. Consistent information across multiple sources increases confidence in the classification.

- Human-in-the-loop systems: Integrating human analysts into the process allows for the incorporation of expert judgment and contextual knowledge to resolve ambiguities that algorithms may struggle with.

- Adaptive algorithms: Algorithms that can learn and adapt to changing conditions, incorporate new information, and refine their classifications over time.

- Sensitivity analysis: Assessing how sensitive the classification is to changes in the input data or parameters. This helps in identifying potential sources of error and uncertainty.

Essentially, we embrace uncertainty as a reality and employ methods that allow us to manage it effectively, making informed decisions despite the incomplete information.
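The probabilistic and human-in-the-loop strategies can be combined in three steps:

1. Convert classifier scores to probabilities.
2. Measure the remaining uncertainty.
3. Route low-confidence cases to an analyst.

This is a minimal sketch. The softmax conversion, the entropy measure and the 0.8 confidence threshold are illustrative choices, and the scores are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw classifier scores into probabilities that sum to 1."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - m) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def entropy_bits(probs):
    """Shannon entropy: 0 bits when certain, log2(n) bits when uniform."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)


def decide(scores, min_confidence=0.8):
    """Return the most probable label, or defer to a human analyst when the
    top probability falls below an assumed confidence threshold."""
    probs = softmax(scores)
    best = max(probs, key=probs.get)
    if probs[best] < min_confidence:
        return "refer to analyst", probs
    return best, probs


for scores in ({"truck": 4.0, "van": 1.0, "car": 0.5},
               {"truck": 1.2, "van": 1.0, "car": 0.9}):
    label, probs = decide(scores)
    print(label, f"entropy={entropy_bits(probs):.2f} bits")
```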

Q 8. What are the ethical considerations in target classification and identification?

Ethical considerations in target classification and identification are paramount. We’re not just dealing with data; we’re dealing with potential impacts on individuals, groups, and even national security. Bias in algorithms, for example, can lead to unfair or discriminatory outcomes. Imagine a system trained primarily on data from one demographic; it might misclassify individuals from other groups, leading to false positives or negatives with significant consequences.

Another key ethical consideration is privacy. The very act of classifying and identifying targets implies the collection and analysis of personal information. It’s crucial to have robust data protection measures in place and to adhere strictly to privacy regulations. Transparency is also vital; stakeholders should understand how the system works and what data is being used.

Finally, there’s the issue of accountability. Who is responsible if the system makes a mistake? Establishing clear lines of responsibility and implementing mechanisms for oversight and redress are essential to ensuring ethical and responsible use of these powerful technologies. These ethical frameworks guide the development and deployment of target classification systems, minimizing unintended harm and maximizing fairness and accuracy.

Q 9. Describe your experience with different target classification systems or software.

Throughout my career, I’ve worked with a variety of target classification systems, from rule-based expert systems to sophisticated machine learning models. Early in my career, I used primarily rule-based systems, where predefined rules and criteria determined target classifications. These systems, while simpler, required extensive manual effort to maintain and update the rules as new information emerged. For example, I worked on a system that classified ships based on their size, speed, and radar signature. This approach was effective for known vessels, but struggled with new or unconventional designs.

More recently, I’ve focused on machine learning approaches, specifically deep learning using convolutional neural networks (CNNs). These models can learn complex patterns from vast amounts of data, such as satellite imagery or sensor readings, achieving greater accuracy and adaptability compared to traditional rule-based systems. I was part of a project where we used a CNN to classify objects in aerial imagery, achieving over 95% accuracy in identifying vehicles and buildings. The flexibility of these systems is a significant advantage, allowing for continuous improvement through retraining with new data.

I’ve also worked with commercially available software packages such as ArcGIS for geospatial analysis and integration with various sensor data sources, and specialized platforms for image analysis and target tracking. This experience gives me a well-rounded understanding of the diverse approaches to target classification.

Q 10. How do you validate and verify target classifications?

Validating and verifying target classifications is crucial for ensuring accuracy and reliability. Validation involves assessing the system’s performance against a known standard or benchmark using a separate dataset not used in the training process. We typically employ metrics like precision, recall, and F1-score to quantify the system’s performance. A low precision score, for instance, might indicate many false positives, while a low recall score suggests many false negatives.

Verification, on the other hand, focuses on the overall integrity and trustworthiness of the classification process. This includes checking for bias in the data, evaluating the robustness of the algorithms to noise or adversarial attacks, and assessing the system’s resilience to various operational conditions. For example, we’d test how well the system performs under different weather conditions or with degraded sensor data.

A combination of quantitative metrics and qualitative assessments is often used. Expert review plays a vital role, with human analysts reviewing a sample of classifications to identify potential errors or biases that the automated metrics might miss. This iterative process of validation and verification ensures that the system is performing as expected and delivering reliable results.
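The verification check of testing under degraded sensor data can be sketched in two steps. First, fit a simple classifier on synthetic data. Then measure its accuracy on a held-out set as increasing noise is added.

The nearest-centroid classifier, the synthetic truck/car clusters and the Gaussian noise model are all assumptions, chosen to keep the example self-contained.

```python
import random


def fit_centroids(X, y):
    """Nearest-centroid 'training': the mean feature vector of each class."""
    sums, counts = {}, {}
    for features, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, v in enumerate(features):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Assign the class whose centroid is closest (squared Euclidean distance)."""
    return min(centroids, key=lambda c: sum((a - b) ** 2
                                            for a, b in zip(features, centroids[c])))


def accuracy(centroids, X, y):
    return sum(predict(centroids, x) == t for x, t in zip(X, y)) / len(y)


def degrade(X, noise_std, rng):
    """Simulate degraded sensor data by adding Gaussian noise to every feature."""
    return [[v + rng.gauss(0.0, noise_std) for v in x] for x in X]


def make_samples(n, rng):
    """Hypothetical two-class data: trucks near (3, 1), cars near (1, 3)."""
    X, y = [], []
    for _ in range(n):
        label = rng.choice(["truck", "car"])
        center = (3.0, 1.0) if label == "truck" else (1.0, 3.0)
        X.append([c + rng.gauss(0.0, 0.3) for c in center])
        y.append(label)
    return X, y


rng = random.Random(0)
X_train, y_train = make_samples(200, rng)
X_test, y_test = make_samples(100, rng)  # held out: never used for fitting
model = fit_centroids(X_train, y_train)

for noise_std in (0.0, 1.0, 3.0):
    acc = accuracy(model, degrade(X_test, noise_std, rng), y_test)
    print(f"noise_std={noise_std}: accuracy={acc:.2f}")
```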

Q 11. How do you integrate data from multiple sources for target identification?

Integrating data from multiple sources for target identification requires a systematic approach. It’s akin to piecing together a puzzle, where each data source provides a piece of the picture. This process usually starts with data fusion, combining data from different sensors and sources into a unified representation. For example, we might combine radar data (range, velocity), optical imagery (visual characteristics), and geospatial data (location) to create a comprehensive profile of a target.

Data normalization and standardization are critical steps to ensure compatibility. Different sensors might use different units or scales, so we need to convert them into a common format. This often involves transformations and scaling techniques. After the data is fused and normalized, we employ data analysis techniques to identify patterns and correlations which aid classification. Algorithms like machine learning models can be trained on this combined dataset to achieve more robust and accurate target identification than any single source alone could provide.

The choice of data fusion and analysis techniques depends on the specific application and data types involved. It’s often an iterative process, requiring experimentation and refinement to optimize performance and accuracy. A data management strategy that tracks the source, quality, and processing of each data element is crucial for maintaining data integrity and for auditing purposes.
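Normalization and feature-level fusion can be sketched in plain Python. The sketch assumes the three tracks have already been associated across sensors, so position i refers to the same object in every list. The readings are hypothetical.

Note that z-scoring alone removes units. The knots-to-m/s conversion therefore matters for stored values and for unit-based rules, not for the standardized features.

```python
import statistics

KNOTS_TO_MPS = 0.514444  # 1 knot is about 0.514444 m/s

# Hypothetical readings for three already-associated tracks.
radar_speed_knots = [12.0, 30.0, 8.0]
radar_rcs_m2 = [50.0, 400.0, 20.0]     # radar cross-section
eo_length_m = [18.0, 45.0, 11.0]       # length estimated from optical imagery


def zscore(values):
    """Standardize a feature to zero mean and unit population standard deviation."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    return [(v - mu) / sigma if sigma else 0.0 for v in values]


# Step 1: convert to common physical units so stored values and any
# unit-based rules (e.g. speed limits in m/s) agree across sensors.
speed_mps = [v * KNOTS_TO_MPS for v in radar_speed_knots]

# Step 2: put every feature on a comparable scale before fusion.
columns = {
    "speed_mps": zscore(speed_mps),
    "rcs_m2": zscore(radar_rcs_m2),
    "length_m": zscore(eo_length_m),
}

# Step 3: feature-level fusion, one combined record per track.
fused = [dict(zip(columns, row)) for row in zip(*columns.values())]

# Provenance kept alongside the features for auditing.
provenance = {"speed_mps": "radar (converted from knots)",
              "rcs_m2": "radar",
              "length_m": "electro-optical imagery"}
print(fused[1])
```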

Q 12. Describe a time you had to resolve a conflict in target classification.

In one project, we encountered a conflict in classifying a particular object detected in satellite imagery. One team, using a primarily shape-based classification algorithm, identified it as a storage facility. Another team, using spectral analysis, classified it as a military hangar. Both classifications had some supporting evidence, creating a discrepancy.

To resolve this, we implemented a structured approach. First, we carefully reviewed the data from both teams, comparing the methodologies, data sources, and supporting evidence. We then engaged a third-party expert in military infrastructure identification to review the imagery and provide an independent assessment. Through this collaborative review, we discovered a subtle but crucial detail in the spectral data: a pattern consistent with specialized materials used in military hangars. The expert confirmed this, and the classification was updated to ‘military hangar’.

This experience highlighted the importance of rigorous data analysis, expert review, and transparent communication in resolving conflicts. The ability to objectively assess conflicting evidence and arrive at a consensus is essential for maintaining accuracy and confidence in the classification process.

Q 13. Explain the role of geospatial information in target classification.

Geospatial information plays a fundamental role in target classification and identification. It provides the ‘where’ to complement the ‘what’ of the target. Think of it as adding context. For example, knowing the location of a detected object can significantly enhance our ability to classify it. A vehicle detected in a military base is much more likely to be military than one detected in a residential area.

Geospatial data, such as latitude and longitude coordinates, elevation, and proximity to other objects, can be integrated with other sensor data to improve the accuracy and reliability of classifications. It helps us establish relationships between targets, identify patterns, and understand the overall context of the operational environment. Techniques such as geospatial indexing and spatial analysis can be used to improve search efficiency and identification of targets.

In many applications, geospatial information is critical for prioritizing targets or determining the order of operations. For example, in disaster response, identifying the location of damaged infrastructure could assist in resource allocation. Similarly, in military operations, precise geolocation of enemy assets is crucial for effective targeting.
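A minimal sketch of using location as context works in two steps. First, compute the great-circle (haversine) distance from a detection to known sites. Then raise the odds that a vehicle is military when it lies near one, echoing the military-base example above.

The site coordinates, the 2 km radius and the odds multiplier are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def context_adjusted(p_military, detection, known_sites, radius_km=2.0, odds_boost=2.0):
    """Multiply the odds that a vehicle is military by `odds_boost` when the
    detection lies within `radius_km` of any known military site."""
    lat, lon = detection
    near_site = any(haversine_km(lat, lon, s_lat, s_lon) <= radius_km
                    for s_lat, s_lon in known_sites)
    if not near_site:
        return p_military
    odds = p_military / (1.0 - p_military) * odds_boost
    return odds / (1.0 + odds)


known_sites = [(50.0, 8.0)]  # hypothetical base location
print(context_adjusted(0.5, (50.005, 8.005), known_sites))  # inside the radius
print(context_adjusted(0.5, (51.0, 8.0), known_sites))      # roughly 111 km away
```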

Q 14. What are the limitations of automated target recognition systems?

Automated target recognition (ATR) systems, while powerful, have limitations. One major limitation is their reliance on training data. If the training data is biased, incomplete, or doesn’t represent the full range of potential targets, the system’s performance will be compromised. This can lead to inaccurate classifications, particularly with novel or unexpected targets.

Another significant limitation is the susceptibility to environmental factors. Adverse weather conditions, such as fog or heavy rain, can significantly degrade the quality of sensor data, leading to misclassifications. Similarly, camouflage, concealment, and deception techniques can make it difficult for ATR systems to identify targets accurately. Variations in lighting, shadows, and sensor noise can also affect the performance.

Furthermore, ATR systems can be computationally expensive, requiring significant processing power and memory, which can be a limitation in resource-constrained environments. Finally, the ‘black box’ nature of some advanced machine learning models makes it difficult to understand the reasoning behind their classifications, making it challenging to diagnose errors or build trust.

Q 15. How do you deal with false positives and false negatives in target classification?

False positives and false negatives are inevitable in target classification, representing errors in our identification process. A false positive occurs when the system incorrectly identifies a non-target as a target (e.g., classifying a bird as a drone). A false negative is the opposite – failing to identify a true target (e.g., missing a crucial object in an image). Managing these errors requires a multi-pronged approach.

Improving the Classifier: This involves refining the algorithm, potentially using more sophisticated machine learning techniques, incorporating additional features (like spectral data or temporal information), or retraining the model with a larger, more diverse dataset. For example, if misclassifying birds as drones is an issue, adding images of various birds to the training data might improve accuracy.

Adjusting Thresholds: The classification system often uses a threshold to determine whether a potential target is classified as positive or negative. Adjusting this threshold can impact the balance between false positives and false negatives. A higher threshold reduces false positives but increases false negatives, and vice versa. This involves careful consideration of the risks associated with each type of error. In a security scenario, a false negative might be more concerning than a false positive.

Ensemble Methods: Combining multiple classifiers (each potentially using different techniques and features) can significantly improve performance. The final classification can be based on a consensus decision, or a weighted average of individual classifier outputs, thus reducing the impact of individual classifier errors.

Post-Processing Techniques: These techniques analyze the output of the classifier to identify and correct errors. For example, spatial or temporal consistency checks can eliminate unlikely classifications (e.g., several detections labelled as the same target that lie too close together to be separate objects, and are therefore likely duplicates).

The optimal balance between false positives and false negatives depends heavily on the specific application. In medical diagnosis, a false negative is often far more critical than a false positive. In spam filtering, by contrast, a higher tolerance for false negatives (spam reaching the inbox) is usually accepted to avoid misfiling legitimate emails.
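Soft-voting ensembles and threshold adjustment can be shown together. The sketch averages the scores from two classifiers, then counts false positives and false negatives at several thresholds. The classifier outputs and labels are made up for illustration, continuing the bird-versus-drone example.

```python
def soft_vote(prob_lists, weights=None):
    """Weighted average of several classifiers' P(target) for each detection."""
    weights = weights or [1.0] * len(prob_lists)
    total = sum(weights)
    return [sum(w * probs[i] for w, probs in zip(weights, prob_lists)) / total
            for i in range(len(prob_lists[0]))]


def error_counts(scores, truth, threshold):
    """Count (false positives, false negatives) at a given decision threshold."""
    fp = sum(s >= threshold and not t for s, t in zip(scores, truth))
    fn = sum(s < threshold and t for s, t in zip(scores, truth))
    return fp, fn


# Hypothetical outputs on six detections (True = real drone, False = bird).
truth = [True, True, True, False, False, False]
model_a = [0.9, 0.6, 0.5, 0.7, 0.2, 0.1]
model_b = [0.8, 0.7, 0.6, 0.2, 0.4, 0.2]
ensemble = soft_vote([model_a, model_b])

for threshold in (0.25, 0.5, 0.7):
    fp, fn = error_counts(ensemble, truth, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

With these numbers, raising the threshold from 0.25 to 0.7 turns two false positives into two false negatives. This is the trade-off described above.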

Q 16. Explain the difference between target classification and target recognition.

Target classification is the process of categorizing an object into a predefined class (e.g., car, truck, person). It answers the question: “What is this?” Target recognition, on the other hand, goes further. It involves not only classifying the target but also identifying specific instances within a class. It answers the question: “What is this, and which specific one is it?”

For example, target classification might identify an object as a ‘Boeing 747’, whereas target recognition would further identify it as a specific Boeing 747 with a particular registration number, say, N747XX.

In essence, recognition builds upon classification. A successful recognition system requires accurate classification as a fundamental prerequisite.

Q 17. Describe your experience with image analysis for target identification.

My experience in image analysis for target identification spans several projects. I’ve worked extensively with various image processing techniques, including:

Feature Extraction: Employing techniques like Scale-Invariant Feature Transform (SIFT) and Speeded-Up Robust Features (SURF) to extract distinctive features from images, even under varying lighting and viewpoint conditions.

Object Detection: Using CNN-based detectors such as YOLO (You Only Look Once) and Faster R-CNN for precise object localization and classification within images.

Image Segmentation: Employing techniques like thresholding, region growing, and graph-cut algorithms to partition images into meaningful regions, isolating targets of interest from the background.

Image Registration: Aligning multiple images (e.g., from different sensors or viewpoints) to improve accuracy and completeness of target information.

In one project, we developed a system to automatically detect and classify vehicles in aerial imagery using CNNs. We addressed challenges like occlusions, variations in lighting, and varying vehicle types, ultimately achieving high accuracy in target identification.
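Of the segmentation techniques listed, thresholding followed by region growing is the easiest to show without libraries. The sketch thresholds a tiny synthetic intensity image, grows 4-connected regions with breadth-first search, and reports a bounding box for each region.

The image, the threshold of 5 and the minimum region size are assumptions. Production systems would typically rely on an image-processing library instead.

```python
from collections import deque


def threshold(image, level):
    """Binary mask: True where pixel intensity exceeds `level`."""
    return [[px > level for px in row] for row in image]


def connected_components(mask):
    """Grow 4-connected foreground regions with breadth-first search.
    Returns a list of regions, each a list of (row, col) pixels."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                queue, region = deque([(r, c)]), []
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    region.append((y, x))
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                regions.append(region)
    return regions


def bounding_box(region):
    """(min_row, min_col, max_row, max_col) enclosing a region."""
    ys = [p[0] for p in region]
    xs = [p[1] for p in region]
    return min(ys), min(xs), max(ys), max(xs)


image = [
    [0, 0, 0, 0, 0, 0],
    [0, 9, 9, 0, 0, 0],
    [0, 9, 9, 0, 0, 7],
    [0, 0, 0, 0, 0, 7],
]
regions = connected_components(threshold(image, 5))
min_pixels = 2  # assumed size filter to discard single-pixel specks
boxes = [bounding_box(r) for r in regions if len(r) >= min_pixels]
print(boxes)
```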

Q 18. How do you stay current with advancements in target classification technology?

Staying current in the rapidly evolving field of target classification necessitates a proactive approach.

Academic Publications: I regularly follow leading journals and conferences (like IEEE Transactions on Pattern Analysis and Machine Intelligence, CVPR, and ICCV) to stay informed about the latest research breakthroughs.

Industry Events and Webinars: Participating in industry conferences and online webinars allows me to learn about practical applications and new technologies.

Online Courses and Tutorials: Platforms like Coursera, edX, and Udacity offer valuable resources to upskill in specific areas of machine learning and image processing.

Open-Source Projects: Engaging with open-source projects on platforms like GitHub provides hands-on experience with the latest tools and algorithms.

Professional Networks: Participating in professional organizations and networking with experts in the field provides access to knowledge and insights.

This multi-faceted approach ensures I remain up-to-date on the cutting edge of target classification technology.

Q 19. What are some common errors to avoid in target classification?

Common errors to avoid in target classification include:

Insufficient Training Data: A model trained on a small or biased dataset will likely underperform on unseen data. A diverse and representative training set is crucial.

Overfitting: When a model memorizes the training data, including its noise, instead of learning general patterns, it fails to generalize to new data. Cross-validation helps detect overfitting, and regularization helps reduce it.

Ignoring Data Preprocessing: Neglecting steps like noise reduction, data normalization, and feature scaling can significantly degrade performance. Careful preprocessing is crucial.

Inadequate Feature Selection: Using irrelevant or redundant features can hinder model performance. Careful feature engineering and selection are vital.

Failure to Evaluate Performance: Not rigorously evaluating model performance using appropriate metrics (like precision, recall, F1-score) can lead to deploying poorly performing systems. Thorough testing and evaluation are paramount.

Careful consideration of these aspects is crucial for building robust and accurate target classification systems.
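Overfitting is easy to miss if a model is only scored on its own training data. The sketch uses a 1-nearest-neighbour classifier, which memorizes its training set. It runs on synthetic data with roughly 20% label noise and compares training accuracy with 5-fold cross-validated accuracy.

The data, the noise level and the choice of classifier are assumptions for illustration.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def one_nn_predict(train_X, train_y, x):
    """1-nearest-neighbour: copy the label of the closest training sample."""
    best = min(range(len(train_X)), key=lambda i: (train_X[i] - x) ** 2)
    return train_y[best]


def cross_val_accuracy(X, y, k=5):
    """Score each fold with a model trained only on the other folds."""
    correct = 0
    for fold in k_fold_indices(len(X), k):
        held_out = set(fold)
        tr_X = [X[i] for i in range(len(X)) if i not in held_out]
        tr_y = [y[i] for i in range(len(X)) if i not in held_out]
        correct += sum(one_nn_predict(tr_X, tr_y, X[i]) == y[i] for i in fold)
    return correct / len(X)


# Hypothetical 1-D feature; the true class is x > 5, with ~20% of labels flipped.
rng = random.Random(1)
X = [rng.uniform(0, 10) for _ in range(200)]
y = [(x > 5) != (rng.random() < 0.2) for x in X]

train_acc = sum(one_nn_predict(X, y, x) == t for x, t in zip(X, y)) / len(X)
cv_acc = cross_val_accuracy(X, y)
print(f"training accuracy={train_acc:.2f}, 5-fold CV accuracy={cv_acc:.2f}")
```

Training accuracy is 1.0 because every point is its own nearest neighbour. Cross-validation gives a noticeably lower, more honest estimate.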

Q 20. Explain the importance of proper documentation in target classification processes.

Proper documentation is paramount in target classification processes, ensuring transparency, reproducibility, and maintainability. It serves several key purposes:

Reproducibility: Detailed documentation allows others (or even yourself in the future) to replicate the classification process, validating results and allowing for further development or modifications.

Traceability: It creates a clear audit trail, facilitating the tracking of data sources, algorithms, and parameters used throughout the entire process.

Maintainability: Well-documented systems are easier to maintain and update, as changes can be tracked and understood easily.

Collaboration: Thorough documentation enhances collaboration among team members, facilitating smooth knowledge transfer and efficient problem-solving.

Compliance: In many applications (especially those with security or safety implications), detailed documentation is crucial for meeting regulatory requirements.

Documentation should include a description of the data used, the algorithms employed, the model parameters, the evaluation metrics used, and any assumptions made throughout the process. Version control of code and data is also crucial.
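A lightweight way to capture the items listed above is a machine-readable run manifest saved with each experiment. The field names and layout are assumptions, not a standard format. The file hash ties results to an exact dataset version and complements version control.

```python
import hashlib
import json
import platform
from datetime import datetime, timezone


def sha256_of_file(path, chunk_size=65536):
    """Fingerprint a dataset file so the exact version used can be checked later."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_path, data_sha256, algorithm, parameters, metrics, assumptions):
    """Collect the documented items (data, algorithm, parameters, evaluation
    metrics, assumptions) plus when and with which Python version it ran."""
    return {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "python_version": platform.python_version(),
        "data": {"path": data_path, "sha256": data_sha256},
        "algorithm": algorithm,
        "parameters": parameters,
        "evaluation_metrics": metrics,
        "assumptions": assumptions,
    }


def save_manifest(manifest, out_path):
    """Write the manifest as JSON so it can be committed next to the code."""
    with open(out_path, "w", encoding="utf-8") as fh:
        json.dump(manifest, fh, indent=2, sort_keys=True)
```

A typical call might pass `sha256_of_file("target_data.csv")` as the hash and the evaluation results as `metrics`, then write the result with `save_manifest(manifest, "run_manifest.json")`. Both file names are hypothetical.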

Q 21. How do you ensure the accuracy and completeness of target data?

Ensuring the accuracy and completeness of target data is crucial for building a reliable target classification system. My approach involves several key strategies:

Data Acquisition: Choosing appropriate data acquisition methods is paramount. The quality of the data directly impacts the accuracy of the classification system. This includes careful sensor selection, calibration, and data collection protocols.

Data Cleaning: Raw data often contains noise, inconsistencies, and errors. A thorough data cleaning process involves techniques like outlier removal, data imputation, and error correction.

Data Validation: Rigorous data validation checks are necessary to ensure data accuracy. This could include cross-referencing data from multiple sources, verifying against ground truth data, and using data consistency checks.

Data Augmentation: Augmenting the dataset by creating synthetic data (e.g., by applying transformations like rotations, scaling, and noise to existing images) can help address data scarcity and improve model robustness.

Data Versioning: Using version control for the dataset allows tracking changes, ensuring reproducibility, and facilitating rollback to previous versions if needed.

By employing these strategies, I strive to ensure high-quality, reliable target data, which forms the foundation for accurate and robust classification systems.
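Three of these strategies (cleaning, outlier screening and augmentation) can be sketched with the standard library. Median imputation and Tukey's IQR fences are common choices, but only two of many.

Rotation-based augmentation assumes target appearance does not depend on orientation. That is reasonable for overhead imagery but not for every sensor. The readings and the image chip are hypothetical.

```python
import random
import statistics


def impute_median(values):
    """Data cleaning: replace missing readings (None) with the observed median."""
    observed = [v for v in values if v is not None]
    med = statistics.median(observed)
    return [med if v is None else v for v in values]


def iqr_outliers(values, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    lo, hi = q1 - k * spread, q3 + k * spread
    return [not (lo <= v <= hi) for v in values]


def rotate90(chip):
    """Rotate a 2-D image chip 90 degrees clockwise."""
    return [list(row) for row in zip(*chip[::-1])]


def augment(chip, rng, noise_std=0.05):
    """Data augmentation: the four 90-degree rotations of a chip, each with
    small additive Gaussian noise."""
    out, current = [], chip
    for _ in range(4):
        out.append([[px + rng.gauss(0.0, noise_std) for px in row] for row in current])
        current = rotate90(current)
    return out


readings = [4.1, 4.3, None, 4.2, 40.0, 4.0]
cleaned = impute_median(readings)
flags = iqr_outliers(cleaned)
augmented = augment([[0.1, 0.9], [0.2, 0.8]], random.Random(0))
print(cleaned, flags, len(augmented))
```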

Q 22. How do you handle conflicting information in target classification?

Conflicting information is a common challenge in target classification. Imagine trying to identify a vehicle based on blurry images and inconsistent sensor data – some sensors might suggest a truck, others a van. My approach involves a multi-step process. First, I carefully assess the source and reliability of each data point. Is the radar data from a known reliable source, or is it potentially corrupted? Are the visual images clear and well-lit, or are they obscured by weather conditions? Second, I use data fusion techniques, combining information from different sources and applying weighting based on confidence levels. For example, if high-resolution imagery strongly suggests a specific type of vehicle, I might give it more weight than conflicting, lower-quality sensor data. Finally, I might employ probabilistic methods, assigning probabilities to different classifications and accepting uncertainty. The final classification would then be the most probable outcome, potentially accompanied by a confidence score reflecting the degree of certainty.

A real-world example would be identifying a target during a maritime surveillance operation. Visual identification might be hampered by fog, while radar could provide a speed and size estimate. By combining radar data with potentially lower-quality visual information, and by considering sensor reliability, a more accurate classification could be obtained – perhaps identifying a fast-moving patrol boat instead of a slower-moving fishing vessel.
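The reliability-weighted fusion described above can be sketched with log-linear pooling. Each sensor's class probabilities are raised to a power equal to its reliability weight, multiplied together, and renormalized. With all weights equal to 1, this reduces to a naive-Bayes-style product, which assumes independent sensors and equal priors.

This is one possible fusion rule, not the only one. The probabilities and weights are hypothetical and loosely follow the maritime fog example.

```python
import math


def fuse(sensor_probs, reliability):
    """Reliability-weighted log-linear pooling of per-sensor class probabilities."""
    classes = next(iter(sensor_probs.values())).keys()
    log_scores = {
        c: sum(reliability[s] * math.log(max(p[c], 1e-9))  # floor avoids log(0)
               for s, p in sensor_probs.items())
        for c in classes
    }
    m = max(log_scores.values())
    unnorm = {c: math.exp(v - m) for c, v in log_scores.items()}
    total = sum(unnorm.values())
    return {c: u / total for c, u in unnorm.items()}


# Fog degrades the camera, so it gets a lower weight than the radar.
sensor_probs = {
    "radar": {"patrol_boat": 0.7, "fishing_vessel": 0.3},
    "camera": {"patrol_boat": 0.4, "fishing_vessel": 0.6},
}
reliability = {"radar": 0.9, "camera": 0.3}
posterior = fuse(sensor_probs, reliability)
label = max(posterior, key=posterior.get)
print(label, round(posterior[label], 2))  # most probable class and its confidence
```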

Q 23. Describe your experience with using data analytics for target classification.

Data analytics is integral to modern target classification. I have extensive experience leveraging various techniques to extract meaningful insights from diverse datasets. For instance, I’ve used machine learning algorithms like Support Vector Machines (SVMs) and Random Forests to train classification models on vast amounts of historical target data, including radar cross-sections, infrared signatures, and visual imagery. This allows for automatic classification of new targets with high accuracy. Furthermore, I have employed dimensionality reduction techniques like Principal Component Analysis (PCA) to reduce the complexity of high-dimensional data, making the classification process more efficient and improving model performance. In one project, we used anomaly detection techniques to identify targets that deviated significantly from established patterns, flagging them for further investigation. This proved crucial in detecting previously unseen or unusual threats.

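```python
# Example Python code snippet illustrating data preprocessing using PCA
import pandas as pd
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Load the data (assumes every column except 'target_type' is a numeric feature)
data = pd.read_csv('target_data.csv')

# Separate features and labels
X = data.drop('target_type', axis=1)
y = data['target_type']

# Standardize first: PCA is sensitive to differences in feature scale
X_scaled = StandardScaler().fit_transform(X)

# Apply PCA to reduce dimensionality
pca = PCA(n_components=2)  # Reduce to 2 principal components
X_reduced = pca.fit_transform(X_scaled)
print(pca.explained_variance_ratio_)  # share of variance kept by each component
```

Q 24. What are the key performance indicators (KPIs) for target classification?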

Key Performance Indicators (KPIs) for target classification are crucial for evaluating the effectiveness of the system. These metrics vary depending on the specific application but generally include:

- Accuracy: The percentage of correctly classified targets. This is a fundamental measure of the system’s overall performance.

- Precision: The proportion of correctly identified targets among all targets predicted as a specific type. High precision is important when the cost of false positives is high (e.g., mistakenly identifying a friendly aircraft as hostile).

- Recall (Sensitivity): The proportion of actual targets of a specific type that are correctly identified. High recall is crucial when missing a target is highly undesirable (e.g., failing to detect an enemy missile).

- F1-score: The harmonic mean of precision and recall, offering a balanced measure that considers both false positives and false negatives.

- Processing Time: The time taken to classify a target. This is important for real-time applications where quick decisions are vital.

- False Positive Rate: The proportion of actual non-targets that are misclassified as targets, i.e. FP / (FP + TN). A high false positive rate can lead to wasted resources and potential safety issues.
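The definitions above can be computed directly from counts of true and false positives and negatives. The sketch below treats one class of interest (a hypothetical "hostile" label) as positive and every other label as negative; the ground-truth and predicted labels are invented for illustration. Processing time is left out because it is measured on the running system rather than derived from predictions.

```python
def classification_kpis(y_true, y_pred, positive):
    """One-vs-rest KPIs for a single class of interest; accuracy covers all classes."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)

    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    false_positive_rate = fp / (fp + tn) if fp + tn else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_positive_rate": false_positive_rate,
    }

# Hypothetical ground truth and classifier output for eight tracks.
y_true = ["hostile", "hostile", "hostile", "friendly", "friendly", "neutral", "neutral", "friendly"]
y_pred = ["hostile", "hostile", "friendly", "hostile", "friendly", "neutral", "friendly", "friendly"]

kpis = classification_kpis(y_true, y_pred, positive="hostile")
print(kpis)
```

Here one friendly track is wrongly called hostile (a false positive) and one hostile track is missed (a false negative), so precision and recall for "hostile" both come out at 2/3 while overall accuracy is 5/8.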

Choosing the most relevant KPIs depends heavily on the mission context. For example, in a search and rescue operation, maximizing recall (finding all potential survivors) might be prioritized over precision (avoiding false positives). In a military setting, precision and minimizing false positives may be paramount to avoid friendly fire incidents.

Q 25. How do you manage large datasets for target classification and identification?

Managing large datasets in target classification requires careful planning and the use of efficient techniques. Distributed computing frameworks like Hadoop or Spark are essential for processing datasets that exceed the capacity of a single machine. These frameworks allow for parallel processing, significantly reducing the computation time. Moreover, efficient data storage solutions, such as cloud-based object storage services (e.g., AWS S3, Azure Blob Storage), are necessary to manage the sheer volume of data. Data compression techniques can also reduce storage requirements and improve processing speed. Finally, feature selection and dimensionality reduction methods are crucial for managing the complexity of large datasets. Removing irrelevant or redundant features can simplify the classification process without significant loss of accuracy, improving computational efficiency and model performance. Regular data cleaning and validation steps are also vital to ensure the quality and reliability of the data being used.
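Frameworks like Spark and Hadoop get much of their speed by having each worker summarize its own partition and then merging the partial results. The plain-Python sketch below mimics that map-and-merge pattern for one common preprocessing need, per-feature mean and variance for standardization, using Welford's single-pass update and the pairwise merge formula of Chan et al. It does not use any Spark or Hadoop API, and the partitions and readings are hypothetical.

```python
from dataclasses import dataclass
from functools import reduce

@dataclass
class RunningStats:
    """Single-pass mean/variance (Welford) that can be merged across partitions."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def merge(self, other: "RunningStats") -> "RunningStats":
        # Combine two partial summaries (parallel variance formula of Chan et al.).
        n = self.n + other.n
        if n == 0:
            return RunningStats()
        delta = other.mean - self.mean
        mean = self.mean + delta * other.n / n
        m2 = self.m2 + other.m2 + delta * delta * self.n * other.n / n
        return RunningStats(n, mean, m2)

    @property
    def variance(self) -> float:
        """Sample variance (n - 1 denominator)."""
        return self.m2 / (self.n - 1) if self.n > 1 else 0.0

def summarize_partition(values):
    """'Map' step: each worker streams through its own chunk exactly once."""
    stats = RunningStats()
    for v in values:
        stats.update(v)
    return stats

# Hypothetical readings of one feature, split across three partitions.
partitions = [[1.2, 3.4, 2.2], [5.1, 0.7], [2.9, 3.3, 4.0, 1.8]]

# 'Reduce' step: merge the per-partition summaries into one.
total = reduce(RunningStats.merge, map(summarize_partition, partitions), RunningStats())
print(total.n, round(total.mean, 3), round(total.variance, 3))
```

Because each partition is reduced to just three numbers, the same logic works for many workers or for data streamed from object storage one chunk at a time, without ever holding the full dataset in memory.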

Q 26. Explain your understanding of different target signatures (radar, visual, etc.)

Target signatures are the unique characteristics used to identify a target. Different sensor modalities provide different types of signatures:

- Radar Signatures: These are based on how a target reflects radar signals. Factors like size, shape, material composition, and orientation affect the reflected signal. Radar signatures can reveal information about the target’s size, its radial speed (from the Doppler shift of the returned signal), and even its type (e.g., aircraft, ship, missile).

- Visual Signatures: These are based on visual characteristics like shape, color, size, and markings. Visual identification heavily relies on image analysis techniques, often augmented by computer vision algorithms for automated target recognition. Optical imagery provides detailed information for human analysts or machine learning algorithms to classify the target.

- Infrared (IR) Signatures: These signatures are based on the heat emitted by a target. Hotter objects radiate more infrared energy, allowing for the detection and identification of targets based on their thermal profiles. This is particularly useful in low-light or nighttime operations.

- Electromagnetic (EM) Signatures: These encompass a broader range of electromagnetic emissions, including radio frequencies, which can reveal operational details of certain targets. For example, communication signals or radar emissions can help identify and classify electronic warfare systems or communications equipment.
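Two of the physical relationships behind these signatures can be written down exactly. A monostatic radar measures radial speed through the Doppler shift, f_d = 2 v_r f_0 / c. The Stefan-Boltzmann law, M = ε σ T^4, explains why hotter objects stand out so strongly in thermal imagery. The sketch below evaluates both. The carrier frequency, Doppler shift, temperatures, and emissivity are hypothetical, and a real IR sensor sees only a band of wavelengths rather than the total emission this formula gives.

```python
# Physical constants (SI units).
SPEED_OF_LIGHT = 299_792_458.0     # m/s
STEFAN_BOLTZMANN = 5.670374419e-8  # W m^-2 K^-4

def radial_speed_from_doppler(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Monostatic radar: f_d = 2 * v_r * f0 / c, so v_r = f_d * c / (2 * f0).

    A positive value means the target is closing on the radar.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)

def radiant_exitance(temperature_k: float, emissivity: float = 1.0) -> float:
    """Stefan-Boltzmann law: total emitted power per unit area over all wavelengths."""
    return emissivity * STEFAN_BOLTZMANN * temperature_k ** 4

# Hypothetical X-band (10 GHz) return with a 2 kHz Doppler shift: roughly 30 m/s.
speed = radial_speed_from_doppler(2_000.0, 10e9)

# Hypothetical hot exhaust (600 K) against a 290 K background, same emissivity.
contrast = radiant_exitance(600.0, 0.9) / radiant_exitance(290.0, 0.9)
print(f"radial speed: {speed:.1f} m/s, thermal contrast: {contrast:.1f}x")
```

Because emission scales with the fourth power of absolute temperature, roughly doubling the temperature raises the emitted power about eighteen-fold in this example, which is why engines and exhausts dominate thermal signatures.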

Effective target classification often relies on fusing information from multiple signatures. For example, combining radar data (size, speed) with visual data (shape, markings) can provide a much more reliable classification than using either source independently.

Q 27. Describe your experience working with different classification algorithms.

My experience encompasses a wide range of classification algorithms. I’ve worked extensively with:

- Support Vector Machines (SVMs): Effective for high-dimensional data and complex classification problems. I’ve used SVMs in applications requiring high accuracy and robustness to noisy data.

- Random Forests: An ensemble learning method that combines multiple decision trees to improve accuracy and reduce overfitting. Random Forests are particularly useful when dealing with large datasets and high dimensionality.

- Neural Networks: Powerful models capable of learning complex patterns from data. I’ve used deep learning architectures, including Convolutional Neural Networks (CNNs) for image-based classification and Recurrent Neural Networks (RNNs) for time-series data analysis in sensor fusion applications.

- Naive Bayes: A simple probabilistic classifier based on Bayes’ theorem that assumes features are conditionally independent given the class. While less powerful than more advanced methods, it can be very efficient for certain types of data and is useful as a baseline for comparison.

- K-Nearest Neighbors (KNN): A simple non-parametric method that classifies a target by the majority class among its k nearest neighbors in the feature space. Useful for initial exploration because it needs no training phase, although prediction cost grows with the size of the training set.

The choice of algorithm depends on the specific characteristics of the data, the computational resources available, and the desired accuracy. I usually experiment with multiple algorithms and compare their performance to select the most suitable one for the task, typically using cross-validation to estimate how well each model generalizes and to avoid picking one that merely overfits the training data.
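A minimal sketch of that cross-validation step follows, using the K-Nearest Neighbors classifier described above so it runs without external libraries. The data are shuffled into folds, each fold is held out once, and mean accuracy is compared across values of k. The feature vectors and labels are hypothetical and deliberately well separated, so every setting scores perfectly here; on real data the scores would differ and guide the choice. With scikit-learn installed, `sklearn.model_selection.cross_val_score` runs the same loop for library models.

```python
import math
import random
from collections import Counter

def knn_predict(train_X, train_y, x, k):
    """Label x by majority vote among its k nearest training points (Euclidean distance)."""
    neighbors = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], x))[:k]
    return Counter(label for _, label in neighbors).most_common(1)[0][0]

def cross_val_accuracy(X, y, k, n_folds=5, seed=0):
    """Mean k-NN accuracy over n_folds shuffled folds; each fold is held out once."""
    order = list(range(len(X)))
    random.Random(seed).shuffle(order)
    folds = [order[i::n_folds] for i in range(n_folds)]
    scores = []
    for fold in folds:
        held_out = set(fold)
        train = [i for i in order if i not in held_out]
        train_X = [X[i] for i in train]
        train_y = [y[i] for i in train]
        correct = sum(knn_predict(train_X, train_y, X[i], k) == y[i] for i in fold)
        scores.append(correct / len(fold))
    return sum(scores) / len(scores)

# Hypothetical two-feature vectors (e.g. scaled size and speed) for two classes.
X = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5),
     (10, 10), (10, 11), (11, 10), (11, 11), (10.5, 10.5)]
y = ["fishing_vessel"] * 5 + ["patrol_boat"] * 5

for k in (1, 3, 5):
    print(f"k={k}: mean cross-validated accuracy {cross_val_accuracy(X, y, k):.2f}")
```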

Q 28. How do you adapt your target classification techniques to different mission objectives?

Adapting target classification techniques to different mission objectives is paramount. The requirements for identifying a potential threat in a military scenario differ significantly from identifying wildlife during a conservation effort. My approach involves a tailored design process that considers:

- Prioritization of KPIs: As discussed earlier, the emphasis on precision versus recall, for example, changes depending on the mission. A high-precision system is needed to avoid false alarms in civilian applications, whereas in some military settings, high recall (even with a higher false positive rate) may be more critical.

- Data Selection and Preprocessing: The type of data collected and how it’s preprocessed will depend on the target and the mission. High-resolution imagery might be needed for wildlife identification, while radar data could be sufficient for maritime surveillance.

- Algorithm Selection: The choice of classification algorithm is dictated by mission-specific constraints. In a real-time application with limited processing power, a faster but potentially less accurate algorithm might be chosen over a more complex and accurate one.

- Threshold Adjustment: Decision thresholds influence the balance between sensitivity and specificity. A low threshold might be acceptable in a search-and-rescue operation to ensure high sensitivity, while a higher threshold may be appropriate in security systems where false positives are costly.
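The effect of the decision threshold can be seen with a handful of hypothetical classifier confidence scores, where label 1 marks an actual target and 0 a non-target. Lowering the threshold catches every target at the cost of more false alarms; raising it does the reverse.

```python
def precision_recall_at(scores, labels, threshold):
    """Treat score >= threshold as a positive call; labels are 1 (target) or 0."""
    tp = sum(s >= threshold and label == 1 for s, label in zip(scores, labels))
    fp = sum(s >= threshold and label == 0 for s, label in zip(scores, labels))
    fn = sum(s < threshold and label == 1 for s, label in zip(scores, labels))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical confidence scores and ground truth for eight detections.
scores = [0.95, 0.85, 0.7, 0.6, 0.4, 0.35, 0.2, 0.1]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

for threshold in (0.3, 0.8):
    precision, recall = precision_recall_at(scores, labels, threshold)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
```

At 0.3 every target is found but a third of the alerts are false, which suits search and rescue; at 0.8 every alert is correct but half the targets are missed, which may suit a setting where false positives are costly.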

For example, a military target identification system will require high accuracy and few false negatives to avoid missing enemy threats, whereas a system for identifying commercial vessels might prioritize processing speed to handle high traffic volumes and minimize delays.

Key Topics to Learn for Target Classification and Identification Interview

- Data Acquisition and Preprocessing: Understanding various data sources, data cleaning techniques (handling missing values, outliers), and feature engineering for optimal classification.

- Classification Algorithms: Familiarize yourself with supervised learning algorithms like Support Vector Machines (SVMs), Random Forests, and Neural Networks. Understand their strengths, weaknesses, and appropriate applications in target classification.

- Feature Selection and Dimensionality Reduction: Mastering techniques like Principal Component Analysis (PCA) and feature importance analysis to improve model accuracy and efficiency.

- Model Evaluation Metrics: Know how to assess model performance using precision, recall, F1-score, ROC curves, and AUC. Understand the trade-offs between different metrics and their relevance to the specific problem.

- Practical Applications: Explore real-world examples of target classification and identification, such as image recognition, fraud detection, medical diagnosis, or risk assessment. Be prepared to discuss how different algorithms and techniques are applied in these contexts.

- Bias and Fairness in Classification: Understand potential biases in data and algorithms and strategies for mitigating them to ensure fair and equitable outcomes.

- Explainability and Interpretability: Be prepared to discuss techniques for understanding how a classification model arrives at its predictions, especially for high-stakes applications.
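For the model-evaluation topic, one useful way to understand AUC is its probabilistic reading: the area under the ROC curve equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as half. The sketch below computes it that way on hypothetical scores and labels. The pairwise loop is quadratic in the number of examples, so a library implementation such as `sklearn.metrics.roc_auc_score` is preferable for large datasets.

```python
def roc_auc(scores, labels):
    """AUC as P(score of a random positive > score of a random negative); ties count 1/2."""
    pos = [s for s, label in zip(scores, labels) if label == 1]
    neg = [s for s, label in zip(scores, labels) if label == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical classifier scores (1 = actual target, 0 = non-target).
scores = [0.95, 0.85, 0.7, 0.6, 0.4, 0.35, 0.2, 0.1]
labels = [1, 1, 0, 1, 0, 1, 0, 0]
print(roc_auc(scores, labels))
```

An AUC of 1.0 means every target outranks every non-target, while 0.5 is no better than random ordering; unlike precision and recall, it summarizes ranking quality across all thresholds at once.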

Next Steps

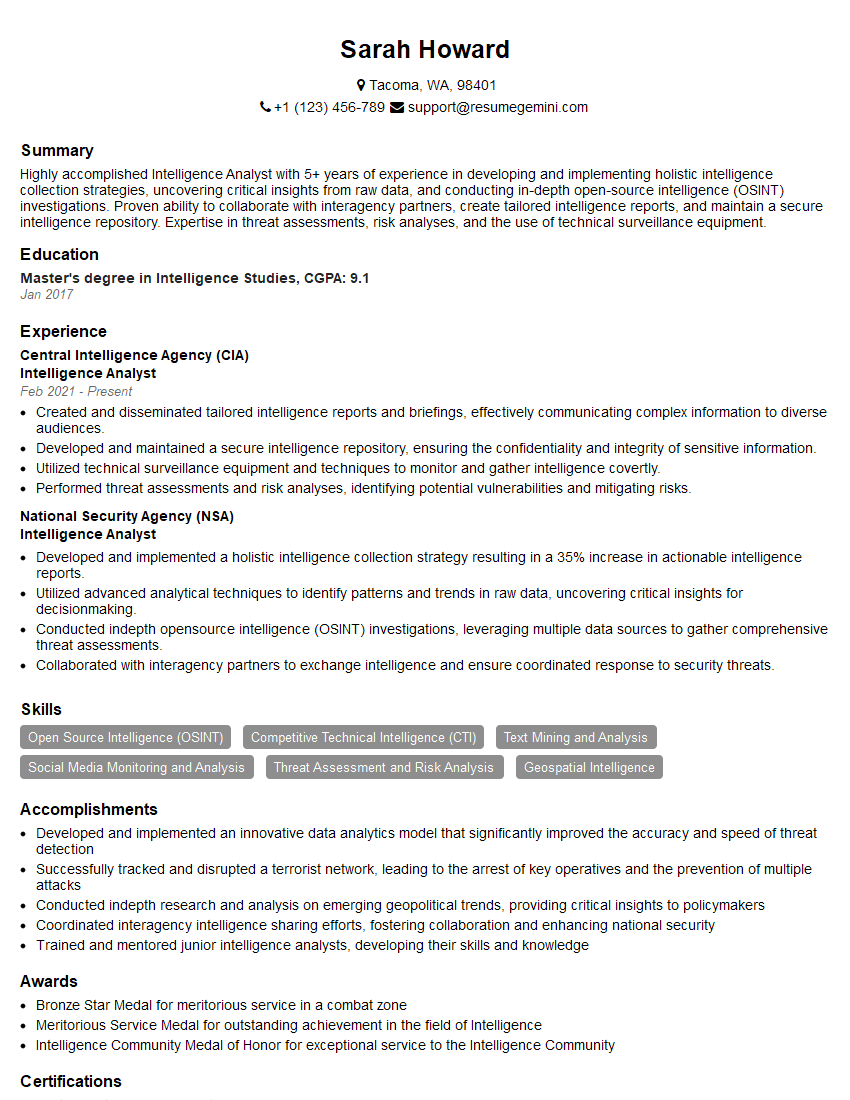

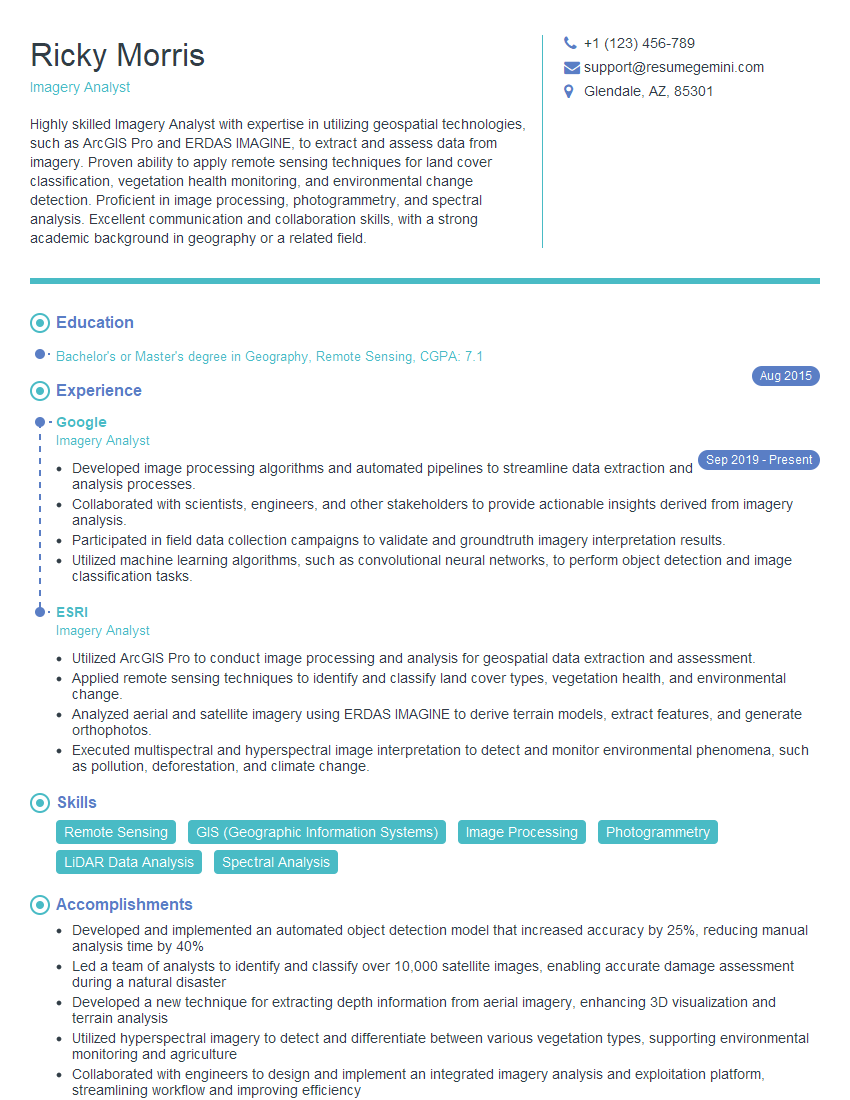

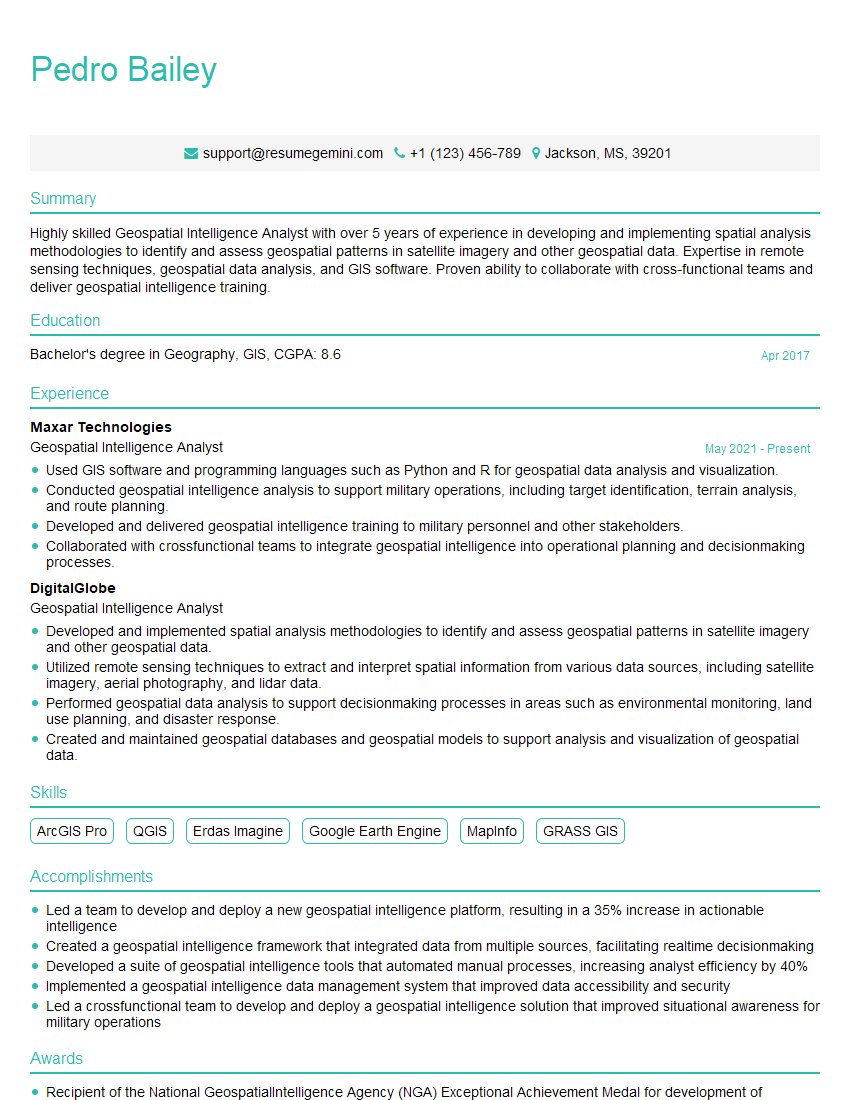

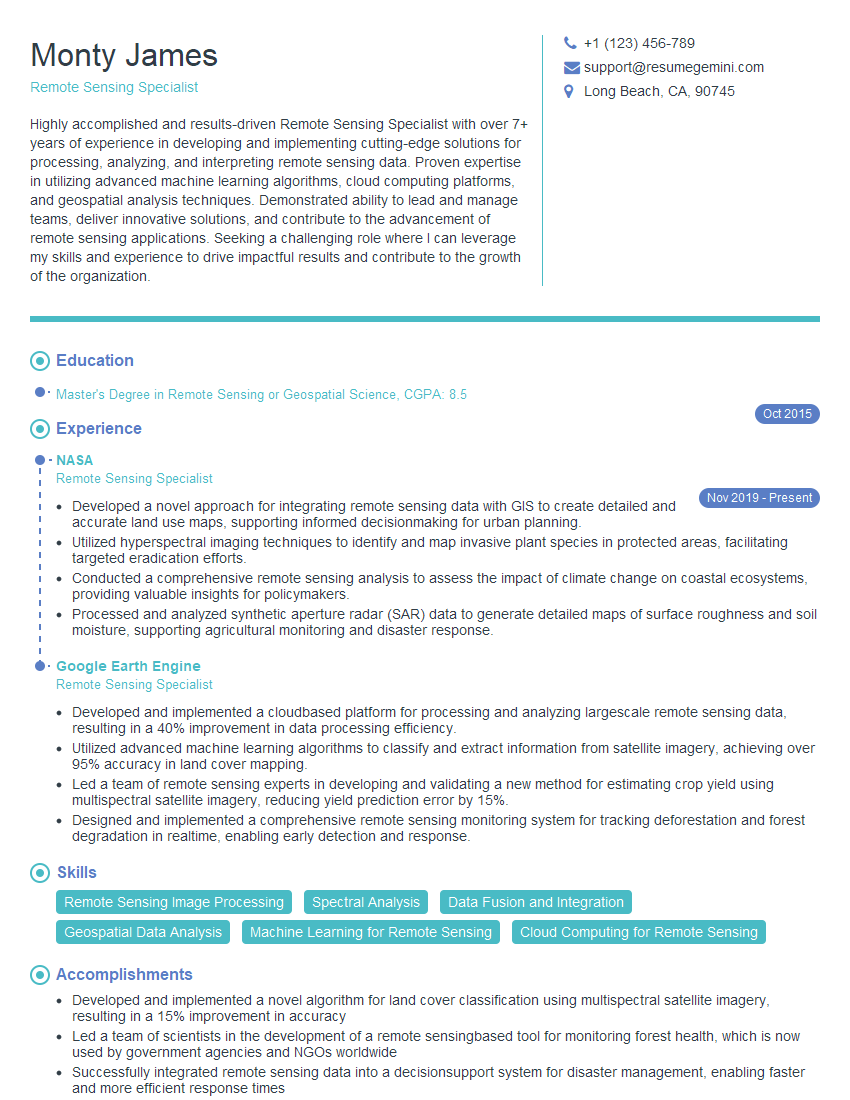

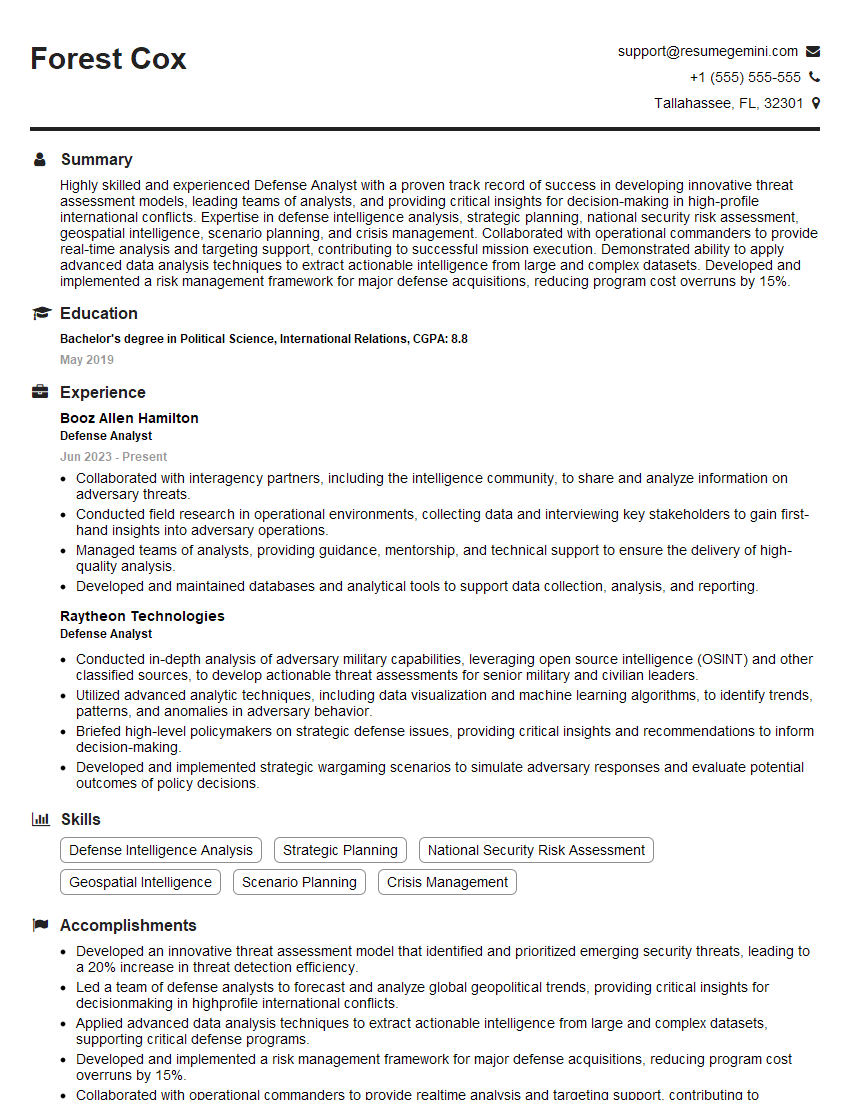

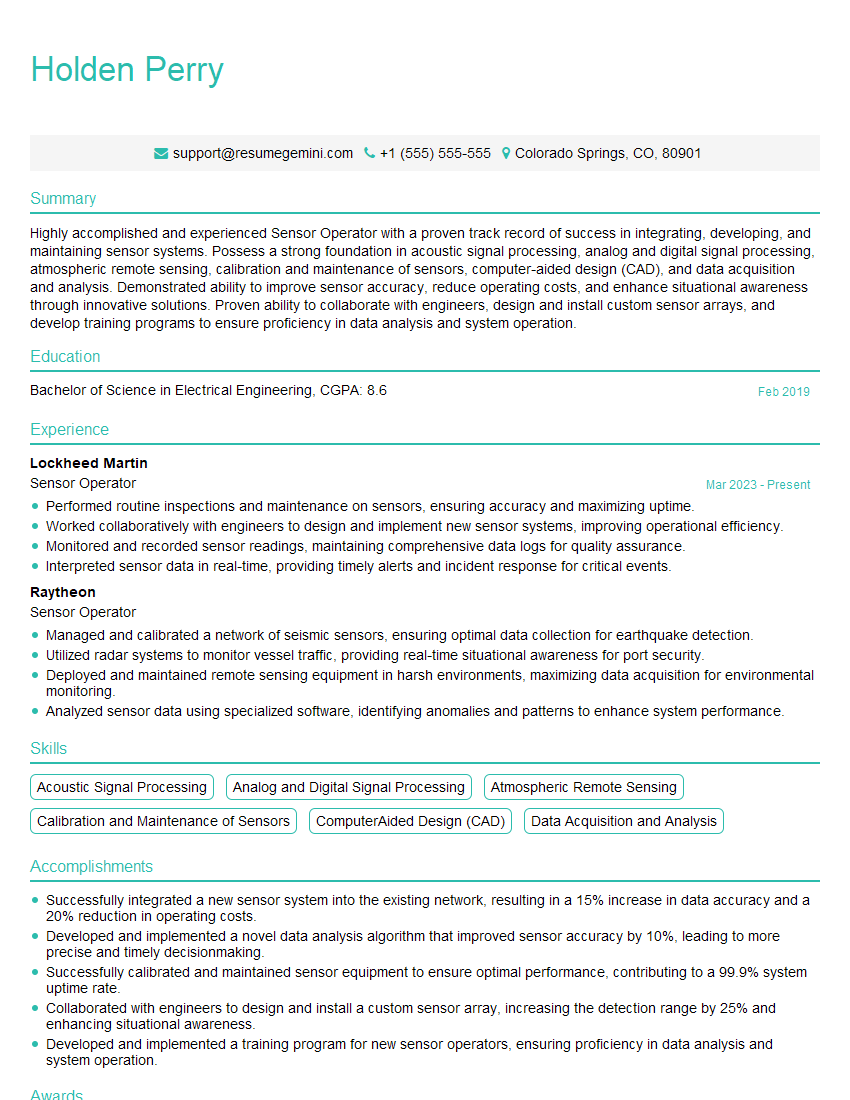

Mastering Target Classification and Identification opens doors to exciting and impactful careers in various fields. A strong foundation in these techniques is highly sought after, significantly enhancing your job prospects. To make your application stand out, it’s crucial to present your skills effectively. Crafting an ATS-friendly resume is key to ensuring your qualifications are recognized by recruiters. ResumeGemini is a trusted resource to help you build a professional and impactful resume that highlights your expertise. We provide examples of resumes tailored to Target Classification and Identification to guide you in showcasing your skills and experience. Take the next step towards your dream career – build a winning resume with ResumeGemini!
