The thought of an interview can be nerve-wracking, but the right preparation can make all the difference. Explore this comprehensive guide to Animation and Rendering interview questions and gain the confidence you need to showcase your abilities and secure the role.

Questions Asked in Animation and Rendering Interviews

Q 1. Explain the difference between keyframing and tweening.

Keyframing and tweening are fundamental animation techniques. Think of it like drawing a flipbook: keyframes are the major drawings (the most important poses), and tweening fills in the gaps between them, creating the illusion of smooth motion.

Keyframing involves explicitly defining the pose of an object at specific points in time. These are the crucial poses that define the overall action. For example, in an animation of a bouncing ball, keyframes would be the highest point of the bounce and the lowest point before the next bounce. The animator carefully sets the position, rotation, and other properties at these keyframes.

Tweening is the process of automatically generating intermediate frames between keyframes. The software interpolates (smoothly blends) the values of the properties between the keyframes, creating the illusion of continuous movement. Without tweening, you’d only see the keyframes, and the animation would be jerky.

In practice, you’d set keyframes for the most important moments of an animation, and then rely on tweening to generate the in-betweens, making the animation process significantly more efficient.
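As a rough illustration of what tweening does, here is a minimal Python sketch that linearly interpolates a single property between keyframes. The `tween` function and the bouncing-ball key values are hypothetical, and real packages normally use spline curves rather than straight lines.

```python
from bisect import bisect_right

def tween(keyframes, t):
    """Return the linearly interpolated value at time t.

    keyframes: list of (time, value) pairs sorted by time.
    """
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, t)           # index of the first keyframe after t
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    u = (t - t0) / (t1 - t0)             # normalized position between the two keys
    return v0 + (v1 - v0) * u

# Hypothetical bouncing-ball keys: (frame, height)
ball_keys = [(0, 10.0), (12, 0.0), (24, 10.0)]
in_betweens = [tween(ball_keys, f) for f in range(0, 25, 6)]
```

Only three poses are authored here; every other frame is computed from them.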

Q 2. Describe your experience with different animation software (e.g., Maya, Blender, Houdini).

My experience spans several leading animation software packages. I’ve extensively used Maya for high-end character animation and rigging, leveraging its robust toolset for complex setups and its industry-standard pipeline integration. I’ve particularly enjoyed using Maya’s powerful animation layers for non-destructive workflows, making complex adjustments clean and easy.

I’m also proficient in Blender, appreciating its open-source nature and constantly evolving feature set. Blender’s sculpting tools are fantastic for creating organic shapes quickly. I frequently use Blender for prototyping, quick animations and even some production tasks due to its versatility and free accessibility.

Finally, I’ve worked with Houdini for procedural animation and visual effects. Its node-based system allows for incredible control and automation, perfect for creating complex simulations like fluid dynamics, destruction, or particle effects. I utilized Houdini to build a procedural system for generating realistic forest environments in a previous project, saving significant time compared to manual creation.

Q 3. What are the advantages and disadvantages of different rendering engines (e.g., Arnold, V-Ray, Redshift)?

Each rendering engine offers a unique balance of features, performance, and render quality. Arnold is known for its physically accurate lighting and excellent subsurface scattering, making it ideal for realistic characters and environments. However, it can be resource-intensive and require significant rendering time.

V-Ray boasts broad industry adoption and extensive plugin support. Its versatility and speed make it a good all-rounder, suitable for various projects. It’s often praised for its ease of use, although its physically accurate features might not be as polished as Arnold’s.

Redshift shines with its speed and GPU acceleration, making it a favorite for projects with tight deadlines. While its features might be slightly less extensive than Arnold or V-Ray, its speed advantage often outweighs this for production pipelines. The choice often depends on project needs, budget, and available hardware.

Q 4. How do you optimize a scene for faster rendering?

Optimizing a scene for faster rendering involves a multi-faceted approach. It’s like streamlining a factory line—every step matters. Here are key strategies:

- Reduce polygon count: Use lower-resolution models where appropriate, employing techniques like level of detail (LOD) to switch to simpler models at a distance.

- Optimize geometry: Avoid excessive geometry and use efficient modeling techniques. For instance, use subdivisions only where visually necessary.

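- Simplify materials: Use simpler shaders where possible; complex shaders can significantly increase rendering time. Keep texture resolutions no higher than the shot requires, and weigh procedural textures against image-based ones, since either can be the cheaper option depending on the renderer and the shader network.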

- Use proxy geometry: Replace high-poly models with lower-poly representations during lighting and camera setup.

- Efficient lighting: Avoid excessive lights. Use light linking, portals, or importance sampling techniques to speed up global illumination calculations.

- Render layers and passes: Separate elements into render layers to render components independently and combine them in post-processing. This allows for easier troubleshooting and renders only what’s necessary at any given time.

- Properly use render settings: Adjust render settings like sampling rates and ray bounces to balance render time and quality. Don’t over-sample unless necessary.

Ultimately, combining these strategies can substantially reduce render times without a noticeable loss of visual quality.
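The level-of-detail idea from the list above can be sketched in a few lines of Python. The distance thresholds and mesh names are made up for illustration; production LOD systems often switch on screen-space size rather than raw distance.

```python
import math

# Hypothetical LOD table: (maximum camera distance, mesh name), nearest first.
LOD_LEVELS = [(10.0, "tree_high"), (50.0, "tree_medium"), (math.inf, "tree_low")]

def select_lod(camera_pos, object_pos, levels=LOD_LEVELS):
    """Pick the first mesh whose distance threshold covers the object."""
    distance = math.dist(camera_pos, object_pos)
    for max_distance, mesh_name in levels:
        if distance <= max_distance:
            return mesh_name
    return levels[-1][1]  # fall back to the coarsest mesh
```

For example, `select_lod((0, 0, 0), (0, 0, 30))` falls in the middle band and returns the medium-resolution mesh.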

Q 5. Explain the concept of global illumination.

Global illumination (GI) simulates how light bounces around a scene, creating realistic effects like indirect lighting, color bleeding, and ambient occlusion. Think of it as the light’s journey – not just from the light source directly to an object, but also the light bouncing off walls, floors, and other surfaces to illuminate the object indirectly.

Without GI, a scene would look flat and unrealistic, with only direct lighting affecting objects. GI adds realism by accurately calculating how light interacts with the scene’s geometry and materials, resulting in more natural-looking shadows, reflections, and ambient lighting. Different rendering engines use various methods to approximate GI, such as photon mapping, path tracing, and radiosity, each with its own strengths and weaknesses.
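To make the path-tracing idea more concrete, the sketch below estimates how much light a surface point receives from a uniformly bright sky by averaging random directions over the hemisphere. This is the kind of Monte Carlo estimate a path tracer repeats at every bounce. The function name and the uniform-sky setup are assumptions chosen so the exact answer (pi times the sky radiance) is known.

```python
import math
import random

def estimate_irradiance(sky_radiance, samples=100_000, seed=0):
    """Monte Carlo estimate of irradiance under a uniform sky.

    Directions are sampled uniformly over the hemisphere, for which the
    cosine of the angle to the surface normal is uniform in [0, 1].
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)           # uniform hemisphere sampling density
    total = 0.0
    for _ in range(samples):
        cos_theta = rng.random()           # angle between sample and normal
        total += sky_radiance * cos_theta / pdf
    return total / samples
```

With more samples the estimate converges on the exact value, which is why low sample counts show up as noise in path-traced renders.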

Q 6. Describe your experience with shaders and their applications.

Shaders are essentially miniature programs that define how light interacts with a surface. They determine the material’s appearance, including color, reflectivity, roughness, and more. Think of them as the ‘skin’ of the 3D models.

My experience includes creating and modifying shaders for various purposes. I’ve used shaders to create realistic skin, metallic surfaces, and stylized effects. For example, I created a custom shader for a project that simulated the iridescent effect of a beetle’s shell. This involved writing code to manipulate the color and reflection based on the viewing angle, producing a visually stunning result that wouldn’t have been possible with standard shaders.

I’m proficient in various shading languages, including HLSL and GLSL, and I understand the principles of physically-based rendering (PBR) and its importance in creating believable visuals.
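View-dependent reflectance of the kind described above is commonly approximated with Schlick's Fresnel term. The sketch below is written in Python for readability rather than HLSL or GLSL, and it is not the beetle-shell shader itself, only an illustration of how reflectivity can vary with viewing angle.

```python
def schlick_fresnel(cos_theta, f0):
    """Schlick's approximation of Fresnel reflectance.

    cos_theta: cosine of the angle between the view direction and the normal.
    f0: reflectance when looking straight at the surface (about 0.04 for
        many non-metals).
    """
    cos_theta = max(0.0, min(1.0, cos_theta))   # clamp to the valid range
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5
```

Head-on, the surface reflects only `f0` of the light; at grazing angles the reflectance rises toward 1, which is why surfaces look more mirror-like near their silhouettes.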

Q 7. How do you handle complex character rigs?

Handling complex character rigs requires a methodical approach and a deep understanding of rigging principles. It’s like building a sophisticated puppet that needs to move convincingly.

My strategy often involves a modular approach, breaking down the rig into smaller, manageable sections (e.g., head, limbs, body). This allows for easier maintenance and modification. I use constraints and hierarchies to create a system of interconnected controls, enabling intuitive and precise animation. The choice of rigging techniques depends on the character’s complexity and the animation style, but I always prioritize clean, efficient, and easy-to-use rigs.

Solving issues with complex rigs involves careful debugging, checking for conflicting constraints or unexpected interactions between different parts of the rig. I use techniques like baking animations to reduce the complexity of the rig during rendering or when there are performance issues.

Q 8. What are your preferred methods for creating realistic hair and fur?

Creating realistic hair and fur is a challenging but rewarding aspect of animation and rendering. My preferred methods involve a combination of techniques, prioritizing realism and performance. I generally start with a dedicated grooming system such as XGen or Yeti (both used with Maya). These systems allow for the procedural generation of individual strands, giving me control over parameters like density, length, curl, and overall style. The key to realism lies in the details. I’ll often use multiple passes to simulate different aspects of hair behavior: a main simulation for overall shape and movement, and potentially a secondary simulation for finer details like wisps and flyaways.

Beyond the simulation, rendering is crucial. I utilize shaders that incorporate subsurface scattering to simulate the way light penetrates and scatters within the hair strands, adding depth and realism. This is especially important for achieving believable shine and translucency. Furthermore, I’ll often use techniques like displacement mapping or micro-polygons to add fine-scale detail that’s not achievable with simulation alone, enhancing the overall visual fidelity. For example, on a character with long, flowing hair, I might use a combination of a primary simulation for large-scale movements, then add smaller strands simulated separately to create subtle details that would be computationally expensive to include in the main simulation.

Finally, post-processing steps like color grading and adding subtle imperfections can further enhance the realism, making the hair look less ‘perfect’ and more natural.

Q 9. Explain your process for creating believable cloth simulations.

Creating believable cloth simulations requires a deep understanding of physics and material properties. My process begins with selecting the appropriate simulation software; Maya nCloth and Marvelous Designer are both powerful tools, each with its own strengths. The choice depends heavily on the complexity of the cloth and the desired level of control. Once the software is chosen, I meticulously model the garment, paying close attention to its geometry and topology. A clean and efficient model is crucial for a smooth and stable simulation.

Next, I define the cloth’s physical properties: its mass, stiffness, damping, and friction. These parameters directly impact how the cloth behaves under different forces. Experimentation and iterative adjustments are critical here to achieve the desired realism. I might simulate the fabric falling naturally onto a character, adjusting parameters to mimic the drape of a specific material, such as silk or denim. For instance, I’d use a lower stiffness value for silk than I would for denim. The use of collision objects to represent characters or other objects within the scene is essential for realistic interactions. I’ll often refine the simulation by using cache systems or other optimization techniques to manage the computational demands, particularly for complex scenarios.
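The effect of stiffness can be seen in a toy model: a single damped spring carrying a weight, stepped with semi-implicit Euler. This is only a sketch; real cloth solvers connect thousands of such constraints, and the stiffness values below are illustrative rather than measured properties of silk or denim.

```python
def settle_extension(stiffness, mass=1.0, damping=2.0, gravity=9.81,
                     dt=0.01, steps=2000):
    """Simulate a weight hanging on a damped spring and return how far
    the spring is stretched once it has settled."""
    x, v = 0.0, 0.0                      # extension below rest length, velocity
    for _ in range(steps):
        a = gravity - (stiffness / mass) * x - (damping / mass) * v
        v += a * dt                      # semi-implicit Euler: velocity first...
        x += v * dt                      # ...then position with the new velocity
    return x

soft = settle_extension(stiffness=50.0)    # hypothetical "silk-like" value
stiff = settle_extension(stiffness=500.0)  # hypothetical "denim-like" value
```

The softer spring settles at a larger extension (mass times gravity divided by stiffness), mirroring how a low-stiffness fabric drapes and sags more.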

Finally, post-simulation, I refine the results in a 3D application to address any artifacts or minor inconsistencies, ensuring a seamless integration within the final rendered scene.

Q 10. How do you troubleshoot rendering errors and issues?

Rendering errors and issues can stem from numerous sources, requiring a systematic approach to troubleshooting. My first step is always to carefully examine the error messages or warnings generated by the renderer. These often provide valuable clues about the underlying problem. If the error isn’t immediately obvious, I start by isolating the problematic elements of the scene. This may involve progressively disabling parts of the scene (like lights, materials or geometry) to determine which component causes the issue.

Common rendering problems include texture mapping issues (incorrect UV mapping or missing textures), lighting problems (incorrect light settings or shadows), and geometry problems (overlapping or corrupted geometry). For texture errors, I carefully check the texture paths and ensure that the textures are correctly assigned and loaded. For lighting problems, I review my light sources’ intensities, shadows, and settings to ensure they’re properly configured. Geometric issues are often resolved by checking for problematic polygons, cleaning up the geometry, or employing tools for mesh optimization and repair.

If the problem persists, I might consider simplifying the scene, reducing the complexity to identify the core issue. Checking render settings, making sure the scene is correctly scaled and units are consistent, and looking for errors in the scene’s structure are other important troubleshooting steps. In some cases, it may be necessary to consult online resources, forums, or documentation to find solutions for specific render engine issues or unexpected behaviors.

Q 11. Describe your experience with compositing and post-processing.

Compositing and post-processing are essential for enhancing the final look of an animation. I have extensive experience using compositing software like Nuke and After Effects to combine different render passes, add visual effects, and perform color grading. This includes integrating elements from different renders, adjusting exposure and contrast, adding atmospheric effects (fog, haze), and incorporating additional visual effects such as motion blur or depth of field.

My workflow typically involves creating separate render passes for elements such as beauty, ambient occlusion, specular highlights, and depth. This layered approach allows me to manipulate individual elements non-destructively, allowing for flexible adjustments and experimentation. For example, I might use the depth pass to add a selective depth of field effect, blurring the background to draw focus to the main subject. The beauty pass provides the main image, while the other passes add details and realism.
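A minimal per-pixel sketch of this layered approach is shown below. It assumes a beauty pass that does not already include ambient occlusion or specular contributions, which varies by renderer; the function names and the blur parameters are hypothetical.

```python
def composite_pixel(beauty, ao, specular):
    """Combine passes for one RGB pixel: darken beauty by AO, add specular."""
    return tuple(b * ao + s for b, s in zip(beauty, specular))

def blur_radius(depth, focus_distance, strength=0.5, max_radius=8.0):
    """Depth-of-field blur that grows with distance from the focal plane,
    using the value read from the depth pass."""
    return min(max_radius, strength * abs(depth - focus_distance))
```

Because each pass stays separate until this step, the AO strength or the focus distance can be changed in compositing without re-rendering the scene.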

Color grading is another critical aspect of post-processing. I carefully adjust the color balance, contrast, and saturation to ensure a consistent and visually appealing final result, creating a unified mood and look for the entire piece. Post-processing techniques are applied based on the artistic direction and overall aesthetic desired for the project.

Q 12. What are your preferred techniques for creating realistic lighting and shadows?

Realistic lighting and shadows are fundamental to creating believable scenes. I employ a variety of techniques depending on the desired level of realism and performance requirements. For global illumination effects, I often use path tracing or photon mapping techniques, which simulate the way light bounces around the scene, creating realistic indirect lighting and soft shadows.

I use image-based lighting (IBL) extensively, which involves using high-resolution HDRI (High Dynamic Range Imaging) environment maps to create realistic lighting environments. This method allows me to simulate the lighting conditions of a real-world location, such as an outdoor scene illuminated by the sun and sky, without manually placing light sources. For example, an interior scene may use an HDRI of an office space to light the scene realistically, rather than manually placing each light fixture. For direct lighting, I’ll often combine various light types such as area lights, point lights, and spotlights to accurately represent the illumination within the scene.

Careful consideration of shadow parameters is important. I’ll adjust the softness and intensity of shadows to create a visually believable representation. The shadow’s quality can greatly impact the scene’s realism, so I spend time optimizing shadow settings to balance realism and render time. Finally, post-processing steps such as color grading can further enhance the lighting and shadows to produce the desired artistic effect.

Q 13. Explain your understanding of different camera projection types.

Understanding different camera projection types is essential for creating accurate and visually compelling imagery. The most common types are perspective and orthographic projections. Perspective projection simulates how the human eye perceives the world – objects appear smaller as they get farther away, creating depth and perspective. This is the default setting for most cameras in 3D applications and is best suited for most scenarios where realism is a priority. The field of view (FOV) parameter within perspective projection governs how much of the scene is captured by the camera, affecting the perceived depth and perspective.

Orthographic projection, in contrast, doesn’t have perspective. Objects remain the same size regardless of their distance from the camera. This projection is commonly used for technical drawings, architectural visualizations, or creating a more flat, 2D-like look. It’s useful when a specific viewpoint without perspective distortion is needed. For example, blueprints are often represented in orthographic projection.

Other less commonly used projections include fisheye and stereographic projections. Fisheye projection is a non-rectilinear projection used to simulate ultra-wide-angle lenses, capturing a very large field of view at the cost of strong barrel distortion. Stereographic projection maps a sphere onto a plane and is used for some maps and for "little planet" style panoramas.
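The difference between the two main projection types can be sketched as follows. The code assumes a camera-space point with the camera looking along +z and ignores aspect ratio and clipping planes, which a full projection matrix would handle.

```python
import math

def project_perspective(point, fov_degrees):
    """Project a camera-space point; farther points land closer to center."""
    x, y, z = point
    f = 1.0 / math.tan(math.radians(fov_degrees) / 2.0)  # focal scale from FOV
    return (f * x / z, f * y / z)

def project_orthographic(point):
    """Drop the depth coordinate; distance does not change apparent size."""
    x, y, _ = point
    return (x, y)
```

Doubling a point's distance halves its perspective coordinates but leaves its orthographic coordinates unchanged. A narrower field of view increases `f`, which magnifies the image.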

Q 14. How do you manage large datasets and complex scenes?

Managing large datasets and complex scenes requires a strategic approach combining efficient modeling techniques, optimized rendering workflows, and effective asset management. The first step is optimization during the modeling phase itself. I strive to use low-poly modeling for static elements wherever possible, reserving high-poly models for only those parts of the scene needing extreme detail. Using proxy geometry for distant objects can substantially reduce scene complexity and render times. For example, replacing distant trees with simple geometry instead of full, detailed models is a common optimization strategy.

During rendering, I use techniques like instancing and level of detail (LOD) systems to reduce the number of polygons processed by the renderer. Instancing allows me to reuse the same object multiple times in the scene, significantly reducing memory usage. LODs dynamically switch between different polygon counts depending on the object’s distance from the camera. Render layers can help break down a scene into smaller, manageable chunks, allowing for more targeted rendering and compositing.
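Instancing boils down to many lightweight placements that all reference one shared piece of geometry. The sketch below illustrates that idea with hypothetical `Mesh` and `Instance` classes; actual renderers expose instancing through their own scene APIs.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Mesh:
    name: str
    vertices: tuple          # geometry data, stored once

@dataclass
class Instance:
    mesh: Mesh               # reference to shared geometry, not a copy
    position: tuple          # per-instance placement

tree = Mesh("tree", vertices=((0, 0, 0), (0, 1, 0), (1, 0, 0)))
forest = [Instance(tree, (float(x), 0.0, 0.0)) for x in range(1000)]

# Every instance points at the same mesh object in memory.
unique_meshes = {id(inst.mesh) for inst in forest}
```

A thousand trees cost one copy of the vertex data plus a thousand small placement records.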

Efficient asset management is also critical. I meticulously organize my assets, using clear naming conventions and version control systems, ensuring easy access and avoiding duplication. Leveraging cloud storage solutions or network rendering systems enables collaborative work and distributes the computational load for large-scale projects. A well-defined pipeline, careful planning, and understanding of the render engine’s capabilities are essential for successful scene management.

Q 15. Describe your experience with version control systems (e.g., Git).

Version control systems, like Git, are fundamental to collaborative animation and rendering workflows. They track changes to files over time, allowing for easy collaboration, rollback to previous versions, and efficient management of large projects. My experience spans several years, using Git extensively for both individual projects and large team productions. I’m proficient in branching strategies (like Gitflow), merging, resolving conflicts, and using remote repositories like GitHub and Bitbucket. For instance, in a recent project I used a dedicated Git branch to work on a specific character animation while other team members worked on the environment in their own branches, so our changes stayed isolated until we merged them. This streamlined our process significantly and prevented potentially disastrous overwrites.

I’m also comfortable with using Git’s command line interface for more granular control and efficiency, though I also use GUI clients like Sourcetree for simpler tasks or visual oversight.

Q 16. What are your preferred methods for creating realistic water effects?

Creating realistic water effects involves a multi-faceted approach combining simulation techniques, shaders, and particle systems. My preferred methods often involve a blend of these. For calmer waters, I might use procedural noise textures combined with displacement maps to subtly animate the surface, reflecting the environment and creating believable ripples. For more dynamic scenes, such as ocean waves or waterfalls, I’d lean towards physically-based simulation techniques. These simulations, often handled within a dedicated fluid dynamics engine or plugin, calculate the movement and interactions of water particles, providing realistic splashes, foam, and breaking waves. I’ll commonly then use a custom shader to refine the appearance, incorporating transparency, refraction, and reflection, and adding details like foam and caustics (light patterns created by refracted light).

For example, in a recent project depicting a river, I used a combination of a fluid simulation for the river’s flow and a custom shader that used normal maps for fine surface detail, adding realistic ripples and roughness. Experimentation and iterative refinement are crucial, continuously tweaking parameters and shaders to achieve the desired visual fidelity.

Q 17. How do you collaborate effectively with other team members?

Effective collaboration hinges on clear communication, well-defined roles, and efficient use of project management tools. I prioritize regular check-ins with my team members, utilizing daily stand-up meetings or weekly progress reviews to stay aligned on goals and address any challenges. Version control (as discussed earlier) is paramount, ensuring everyone works with the most up-to-date assets and avoids conflicts. I actively participate in critiques, both giving and receiving feedback constructively, focusing on solutions rather than blame. I’m comfortable using communication tools like Slack or Discord for quick queries and updates, and project management software like Jira or Trello for task assignments and tracking progress.

One example where collaboration was critical involved a complex animation sequence. By using a collaborative online whiteboard and regular updates via Slack, the team (modelers, animators, riggers, and myself) could easily communicate issues and refine the scene together, which was far more effective than working in isolation.

Q 18. Explain your understanding of normal maps and their use in rendering.

Normal maps are crucial for adding surface detail to 3D models without significantly increasing polygon count. They store surface normal vectors (representing the direction of a surface’s orientation) in a color-coded format. In rendering, these maps are used to simulate the light interaction with a surface as if it were highly detailed, creating the illusion of bumps, grooves, and other surface features. This significantly increases visual fidelity while maintaining performance. For example, a simple sphere with a normal map applied can look like a rough rock or a detailed piece of metal.

The normal map data is sampled during the rendering process, modifying the surface normal at each pixel. This changes how light reflects off the surface and how it is shaded, producing a far more realistic representation than the base model alone would allow. Because the underlying geometry is unchanged, the silhouette stays smooth. I often use normal maps in conjunction with other maps, such as displacement maps for more pronounced geometry changes or ambient occlusion maps to darken crevices and contact areas.
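The sampling step can be sketched in Python as decoding an 8-bit RGB texel into a unit vector and feeding it to a simple Lambert diffuse term. The sketch assumes a tangent-space normal map where the typical light blue (128, 128, 255) means "unperturbed"; the function names are illustrative.

```python
import math

def decode_normal(rgb):
    """Map 8-bit channels from [0, 255] to [-1, 1] and normalize."""
    n = [c / 255.0 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n)

def lambert(normal, light_dir):
    """Diffuse intensity: cosine of the angle between normal and light."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))

light = (0.0, 0.0, 1.0)                               # light along the surface normal
flat = lambert(decode_normal((128, 128, 255)), light)
tilted = lambert(decode_normal((255, 128, 128)), light)
```

The flat texel is lit almost fully while the tilted one is nearly dark, even though both belong to the same flat polygon.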

Q 19. How do you handle feedback and critiques on your work?

I see feedback and critiques as valuable opportunities for growth and improvement. I actively seek out constructive criticism and try to approach it with an open mind. I start by actively listening to the feedback, asking clarifying questions to ensure I fully understand the points being made. Then, I carefully analyze the feedback to determine its validity and relevance to the project goals. If the criticism is valid, I take the necessary steps to implement the suggested changes, documenting the modifications made. Even if I disagree with a suggestion, I try to understand the reasoning behind it to learn from the perspective of the reviewer.

For example, in a previous project, I received feedback that the animation of a character’s walk cycle felt unnatural. I spent time reviewing reference videos and adjusting the keyframes, making subtle changes to the timing and weight shift to address this feedback, which significantly improved the final product.

Q 20. Describe a time you had to overcome a technical challenge in animation or rendering.

During a recent project involving a large-scale environment with complex lighting, we encountered significant rendering time issues. The scene was extremely demanding, and rendering a single frame took hours. My initial approach involved optimizing the scene geometry and reducing the polygon count wherever possible. However, this wasn’t sufficient to resolve the problem. We then analyzed the lighting setup, identifying areas with overly complex light sources and unnecessary shadows. By carefully simplifying these elements, replacing some complex shaders with more efficient ones, and strategically using instance geometry (reusing the same geometry multiple times with slight variations) we were able to significantly reduce rendering times without sacrificing significant visual quality. This involved a methodical process of experimentation and measurement, monitoring render times at each step to assess the impact of our optimizations.

It highlighted the importance of a staged approach to optimization, starting with broad improvements and then focusing on incremental refinements. The final result not only cut render times substantially but also improved the efficiency of the entire production pipeline.

Q 21. Explain your understanding of different interpolation methods.

Interpolation methods determine how values change between keyframes in animation. Different methods produce varying degrees of smoothness and control. Common methods include linear interpolation, which moves the value along a straight line between keyframes at constant speed and therefore changes velocity abruptly at each keyframe, and cubic interpolation (such as Catmull-Rom or Bezier curves), which creates smoother transitions. Cubic interpolation methods allow for more control over the curve’s shape, resulting in more natural and fluid animation.

Linear interpolation is simple and computationally inexpensive, suitable for situations where smooth transitions aren’t critical. Between two points (x1, y1) and (x2, y2), it is given by y = y1 + (x - x1) * ((y2 - y1) / (x2 - x1)). However, for character animation or complex movement, cubic splines offer far greater control, allowing animators to fine-tune the speed and acceleration of the animation. The choice of interpolation depends heavily on the context; for example, linear interpolation might suffice for simple UI animations, while complex animations might require a more sophisticated cubic spline approach, potentially requiring custom curves for extreme control.
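Both families can be compared side by side in a short Python sketch. `lerp` uses a normalized parameter t = (x - x1) / (x2 - x1), and `catmull_rom` is the standard uniform Catmull-Rom formula, which uses the two neighboring keys to shape the curve between the middle pair. The function names are illustrative.

```python
def lerp(y1, y2, t):
    """Linear interpolation between y1 and y2 for t in [0, 1]."""
    return y1 + (y2 - y1) * t

def catmull_rom(p0, p1, p2, p3, t):
    """Uniform Catmull-Rom spline between p1 and p2 for t in [0, 1].

    p0 and p3 are the neighboring keys that set the tangents at p1 and p2.
    """
    return 0.5 * (
        2.0 * p1
        + (-p0 + p2) * t
        + (2.0 * p0 - 5.0 * p1 + 4.0 * p2 - p3) * t ** 2
        + (-p0 + 3.0 * p1 - 3.0 * p2 + p3) * t ** 3
    )
```

The spline passes through p1 at t = 0 and p2 at t = 1, and its tangent is continuous across keyframes, which avoids the abrupt velocity changes of linear interpolation.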

Q 22. What are your experiences with motion capture data?

Motion capture (mocap) data is invaluable for creating realistic character animation. My experience encompasses working with various mocap systems, from optical systems using multiple cameras to inertial systems utilizing sensors on the actor. I’m proficient in processing and cleaning raw mocap data, which often involves removing noise, fixing inconsistencies, and retargeting the data to different character rigs. For example, I’ve worked on projects where we captured an actor’s performance and then applied it to a stylized cartoon character, requiring careful manipulation of the data to match the character’s unique movement style. This process involves scaling, adjusting joint rotations, and sometimes even adding secondary animation to enhance the realism or stylization.

Beyond the technical aspects, I understand the importance of working closely with the actors during the capture process to ensure optimal performance. Directing the actor’s performance and providing feedback is crucial to getting the best possible data. This collaboration ensures the animation remains expressive and natural, even after the post-processing stage.

Q 23. How familiar are you with different lighting techniques (e.g., three-point lighting)?

Lighting is fundamental to establishing mood, atmosphere, and realism in animation and rendering. Three-point lighting, a cornerstone technique, uses three lights: a key light (main light source), a fill light (softening shadows from the key light), and a back light (separating the subject from the background). I’m highly familiar with this and many other techniques, including:

- High-Key Lighting: Creates bright, cheerful scenes with minimal shadows, often used in comedies or upbeat settings.

- Low-Key Lighting: Employs strong contrasts between light and dark, ideal for dramatic or suspenseful scenes.

- Rim Lighting: Highlights the edges of a subject, providing separation and depth.

- Ambient Occlusion: Simulates the darkening of areas where surfaces are close together, adding realism to crevices and recesses.

- Global Illumination: Considers how light bounces and interacts with surfaces within a scene, leading to more realistic and subtle lighting.

I have extensive experience in utilizing these techniques in various rendering software packages like Arnold, RenderMan, and V-Ray. I understand how to strategically place lights to enhance a character’s features or highlight specific elements within a scene. I also consider the impact of different light types (point, area, spot) and color temperatures on the overall aesthetic.

Q 24. Describe your understanding of color spaces and color management.

Color spaces define how colors are numerically represented, while color management ensures consistency across different devices and software. Understanding this is crucial for achieving accurate and visually appealing results. Common color spaces include sRGB (for web and most monitors), Adobe RGB (wider gamut for print), and Rec.709 (for HD video). I’m experienced in working with these spaces and converting between them to prevent color shifts during the pipeline.

Color management involves setting up profiles for each device (monitor, printer, scanner) and software, ensuring that the colors perceived on one device match as closely as possible on another. Failure to manage color can lead to significant discrepancies, where colors appear drastically different depending on the output device. I routinely utilize color management tools within my pipeline to maintain consistent color accuracy throughout the entire process from initial concept through final render.

For instance, in a recent project involving both digital painting and 3D rendering, proper color management ensured a seamless transition between the different stages, resulting in a final product with unified and accurate color representation.
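One conversion that comes up constantly in rendering pipelines is between sRGB-encoded values and linear light, since lighting math should be done on linear values. The sketch below implements the standard piecewise sRGB transfer function for a single channel in the 0 to 1 range; production pipelines usually delegate this to a color management library rather than hand-written code.

```python
def srgb_to_linear(c):
    """Decode one sRGB-encoded channel (0..1) to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(c):
    """Encode one linear-light channel (0..1) as sRGB."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1.0 / 2.4) - 0.055
```

Note that an sRGB value of 0.5 corresponds to only about 21% linear intensity, which is why skipping this conversion makes renders look washed out or too dark.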

Q 25. How do you approach creating believable character animation?

Creating believable character animation requires a blend of technical skill and artistic sensibility. It’s more than just moving limbs; it’s about conveying emotion, personality, and weight through movement. My approach involves:

- Understanding Anatomy and Physics: A solid understanding of how the human (or animal) body moves is paramount. This knowledge informs the animation, ensuring realistic weight, balance, and fluidity of motion.

- Reference Gathering: I meticulously gather video and photographic references of real-life movements. This helps me accurately capture subtle nuances and details that contribute to realism.

- Spacing and Timing: Precise control over spacing (how far the subject travels from one frame to the next) and timing (how many frames an action takes) is essential for generating convincing movement.

- Secondary Animation: This involves adding subtle details like clothing simulation, hair movement, and facial expressions to enhance realism and emotional impact. These details are often what separates great animation from good animation.

- Acting and Performance: I strive to understand the underlying emotion or intention of the character’s actions. This drives the animation and helps to create a compelling performance.

For example, I once animated a character experiencing grief. By carefully observing references and focusing on subtle movements like slumped posture and slow, deliberate actions, I was able to convey a convincing sense of sadness and loss.
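The relationship between spacing and timing can be shown with a small sketch. Both moves below take the same number of frames (identical timing), but the eased version bunches positions together at the start and end and spreads them out in the middle (different spacing). The smoothstep curve is used here as an assumed stand-in for an ease-in/ease-out; animation packages offer their own curve editors, and all function names are illustrative.

```python
def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def ease_in_out(t: float) -> float:
    """Smoothstep easing: slow start, fast middle, slow end."""
    return t * t * (3 - 2 * t)


def sample_positions(start, end, frames, easing=None):
    """Return the position on every frame of a move lasting `frames` frames."""
    positions = []
    for frame in range(frames + 1):
        t = frame / frames
        if easing is not None:
            t = easing(t)
        positions.append(lerp(start, end, t))
    return positions


def spacing(positions):
    """Distance travelled between each pair of consecutive frames."""
    return [b - a for a, b in zip(positions, positions[1:])]


if __name__ == "__main__":
    linear = sample_positions(0.0, 10.0, 10)
    eased = sample_positions(0.0, 10.0, 10, ease_in_out)
    print("linear spacing:", [round(s, 2) for s in spacing(linear)])
    print("eased spacing: ", [round(s, 2) for s in spacing(eased)])
```

Even spacing tends to read as mechanical, while eased spacing suggests mass and intent, which is one reason the two properties are adjusted separately.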

Q 26. What are your experiences with procedural animation?

Procedural animation uses algorithms and rules to generate animation automatically, often eliminating the need for manual keyframing. My experience includes using various techniques such as:

- Particle Systems: Generating effects like fire, smoke, and crowds.

- Physically-Based Simulation: Simulating cloth, hair, and rigid bodies using physics engines.

- Noise Functions: Creating organic-looking movements and textures.

- L-systems: Generating complex branching structures like plants and trees.

Procedural animation is particularly useful for creating repetitive or complex animations that would be time-consuming to animate manually. For example, I used a particle system to simulate a large crowd of people moving through a city square, significantly reducing the time and effort required compared to manually animating each individual. It also gave me direct, parameter-driven control over variation and density.
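As a small illustration of the rule-based idea, here is a minimal sketch of the string-rewriting core of an L-system. The branching rule and symbol meanings (`F` for "draw forward", `+` and `-` for turns, brackets for push and pop of the drawing state) follow a common convention but are assumptions chosen for this example; turning the resulting string into geometry is left out.

```python
def expand_lsystem(axiom: str, rules: dict, iterations: int) -> str:
    """Apply the rewrite rules to every symbol, `iterations` times.

    Symbols without a rule are copied through unchanged.
    """
    current = axiom
    for _ in range(iterations):
        current = "".join(rules.get(symbol, symbol) for symbol in current)
    return current


# Illustrative branching rule: each segment sprouts a left and a right branch.
PLANT_RULES = {"F": "F[+F]F[-F]F"}

if __name__ == "__main__":
    for depth in range(3):
        result = expand_lsystem("F", PLANT_RULES, depth)
        print(depth, len(result), result[:40])
```

A single short rule produces rapidly growing structure, which is what makes the technique attractive for plants and trees: changing the rule or the iteration count reshapes the whole result without any manual modeling.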

Q 27. Explain your workflow for creating realistic textures.

Creating realistic textures involves a multi-step process that goes beyond simply applying a color map. My workflow typically includes:

- Photography and Scanning: Gathering high-resolution photographs or scans of real-world materials serves as a strong base for creating realistic textures.

- Image Manipulation: Using software like Photoshop or Substance Painter to clean up images, adjust colors, add detail, and create variations.

- Normal Maps, Displacement Maps, and other Maps: Creating these additional maps enhances the surface detail and realism. Normal maps simulate surface bumps and grooves, while displacement maps actually modify the geometry, providing even more detail.

- Procedural Texture Generation: Using software features to procedurally generate textures based on algorithms and patterns. This is useful for creating complex textures or variations on a base texture.

- Substance Designer (or similar): Specialized node-based software such as Substance Designer allows for a non-destructive workflow and efficient creation and modification of complex textures.

For instance, when creating a realistic wood texture, I might start with high-resolution photographs of different types of wood, then use image manipulation techniques to create variations in color, grain, and wear. I would then generate normal and displacement maps to add more surface detail and realism in the 3D rendering software.
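To make the normal-map step more concrete, the sketch below derives tangent-space normals from a grayscale height map using finite differences, then packs them into 8-bit RGB. It is a simplified illustration, not how any specific texturing tool implements the feature: the `strength` parameter, the edge clamping, and the choice of Y direction (which differs between OpenGL-style and DirectX-style normal maps) are all assumptions.

```python
import math


def height_to_normals(height, strength=1.0):
    """Compute a unit normal per texel from a 2D grid of height values."""
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Central differences, clamping sample coordinates at the borders.
            left = height[y][max(x - 1, 0)]
            right = height[y][min(x + 1, cols - 1)]
            up = height[max(y - 1, 0)][x]
            down = height[min(y + 1, rows - 1)][x]
            dx = (right - left) * strength
            dy = (down - up) * strength
            # The normal tilts away from the direction of increasing height.
            nx, ny, nz = -dx, -dy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append((nx / length, ny / length, nz / length))
        normals.append(row)
    return normals


def encode_rgb(normal):
    """Map a unit normal from [-1, 1] per component to 8-bit RGB."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in normal)


if __name__ == "__main__":
    ramp = [[0.0, 0.5, 1.0] for _ in range(3)]  # height rises left to right
    normals = height_to_normals(ramp, strength=2.0)
    print(encode_rgb(normals[1][1]))
```

A flat area encodes to the familiar light blue of a normal map, since its normal points straight out of the surface. Unlike a displacement map, none of this changes the geometry; it only changes how light appears to hit the surface.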

Q 28. What are your strengths and weaknesses as an animator/rendering artist?

Strengths: My strengths lie in my strong foundation in traditional animation principles coupled with a deep understanding of modern rendering techniques. I’m adept at problem-solving, consistently finding creative solutions to complex technical challenges. I collaborate effectively within a team and communicate my ideas clearly. I’m also a fast learner, readily adapting to new software and techniques. My ability to blend artistic vision with technical expertise allows me to deliver high-quality, realistic results efficiently.

Weaknesses: While I possess a strong skill set, there’s always room for improvement. I’m currently working on expanding my knowledge of certain specialized areas like rigging complex characters for realistic simulations and improving my proficiency in physically-based simulations, especially with fluids. I am actively addressing these by pursuing relevant online courses and engaging with the broader animation community. Continuous learning is paramount in this rapidly evolving field.

Key Topics to Learn for Your Animation and Rendering Interview

- Principles of Animation: Understand the 12 principles of animation (squash and stretch, anticipation, staging, etc.) and how they apply to different animation styles. Consider practical applications like character animation or motion graphics.

- 3D Modeling Techniques: Familiarize yourself with polygon modeling, NURBS modeling, and sculpting techniques. Be prepared to discuss the strengths and weaknesses of each method and their application in different projects.

- Rigging and Character Animation: Mastering character rigging is crucial. Understand skeletal animation, skinning techniques, and how to create realistic and expressive character movements. Explore different rigging software and workflows.

- Rendering Techniques: Explore various rendering pipelines, including path tracing, ray tracing, and rasterization. Discuss the trade-offs between speed and quality in different rendering approaches. Understand concepts like global illumination and shadow mapping.

- Shader Creation and Material Design: Know the basics of shader programming (e.g., using shaders to create realistic materials and effects). Be ready to discuss different shader types and their applications.

- Lighting and Composition: Mastering lighting techniques is key to creating impactful visuals. Understand different light types, their properties, and how to use lighting to enhance mood and storytelling. Discuss the importance of composition in animation and rendering.

- Software Proficiency: Demonstrate a solid understanding of industry-standard software such as Maya, Blender, 3ds Max, Houdini, or others relevant to the specific job description. Highlight your proficiency in relevant pipelines and workflows.

- Problem-Solving and Troubleshooting: Be ready to discuss challenges you’ve faced in previous projects and how you overcame them. This showcases your problem-solving skills and technical aptitude.

- Workflow Optimization: Discuss techniques to improve your workflow, such as using scripting, automation tools, and efficient file management practices. This demonstrates your understanding of time management and efficiency.

Next Steps

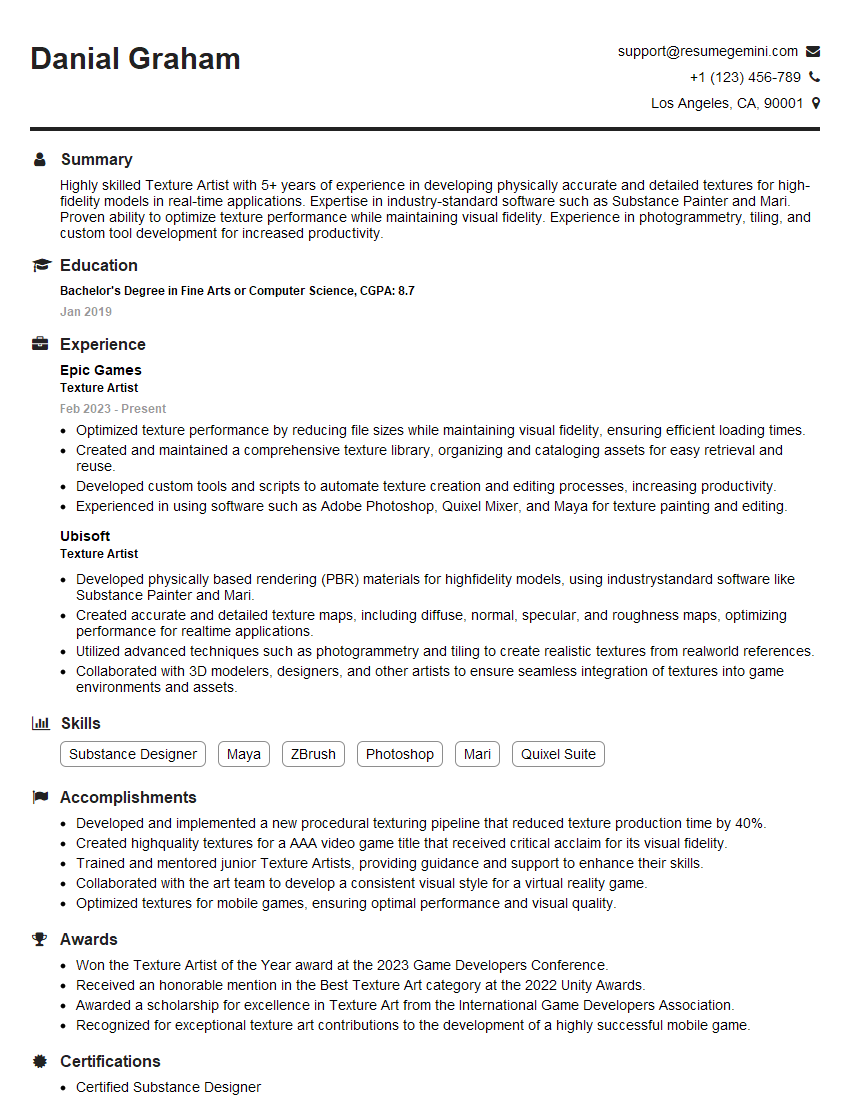

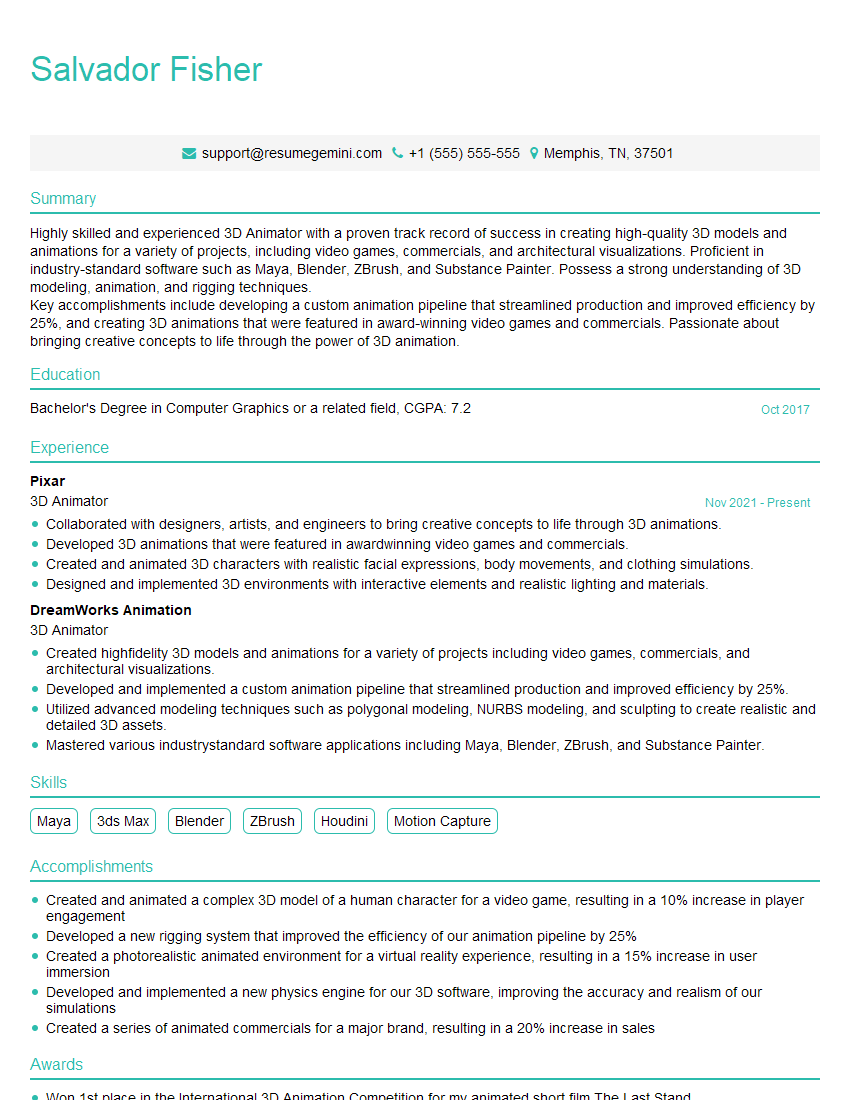

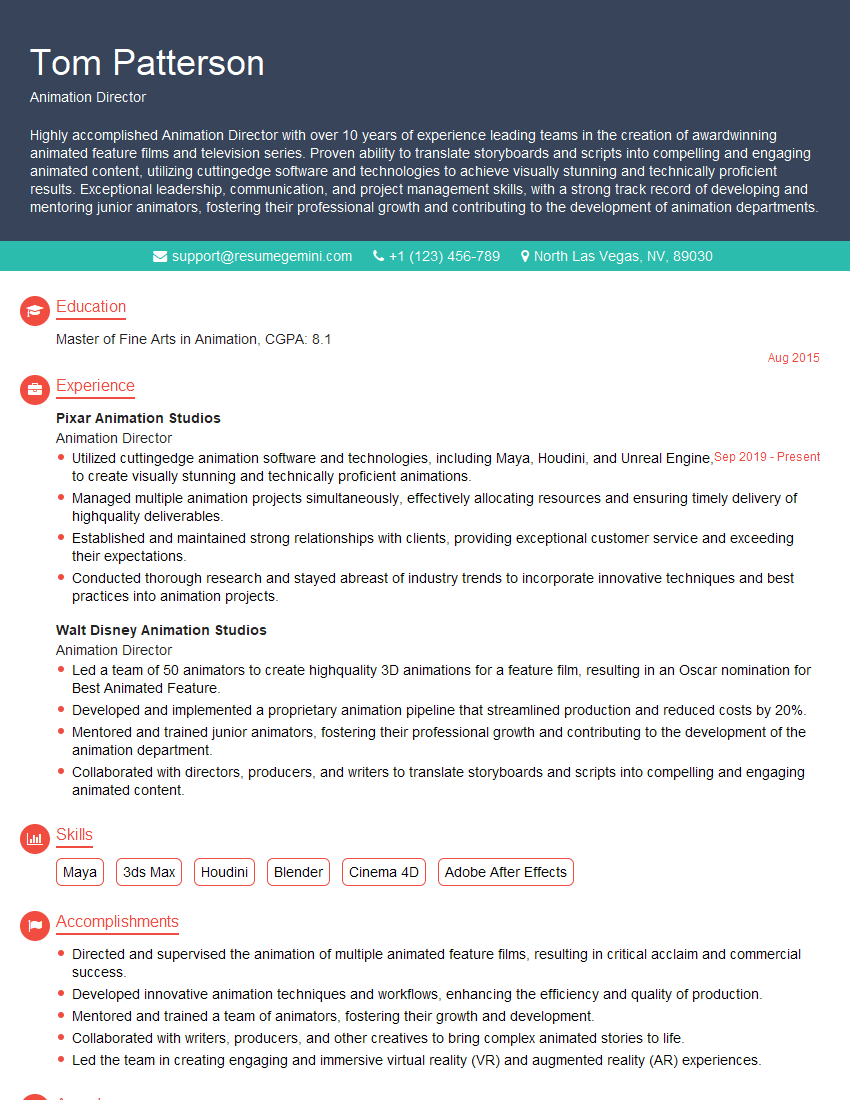

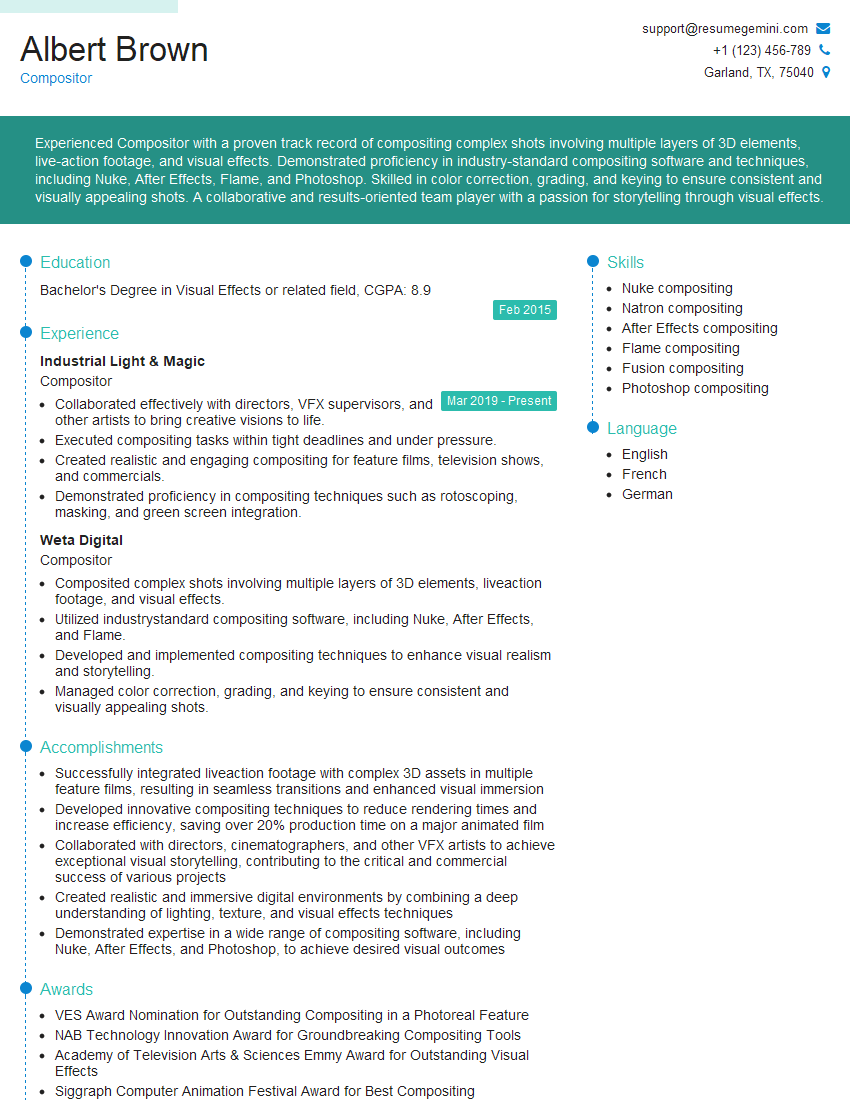

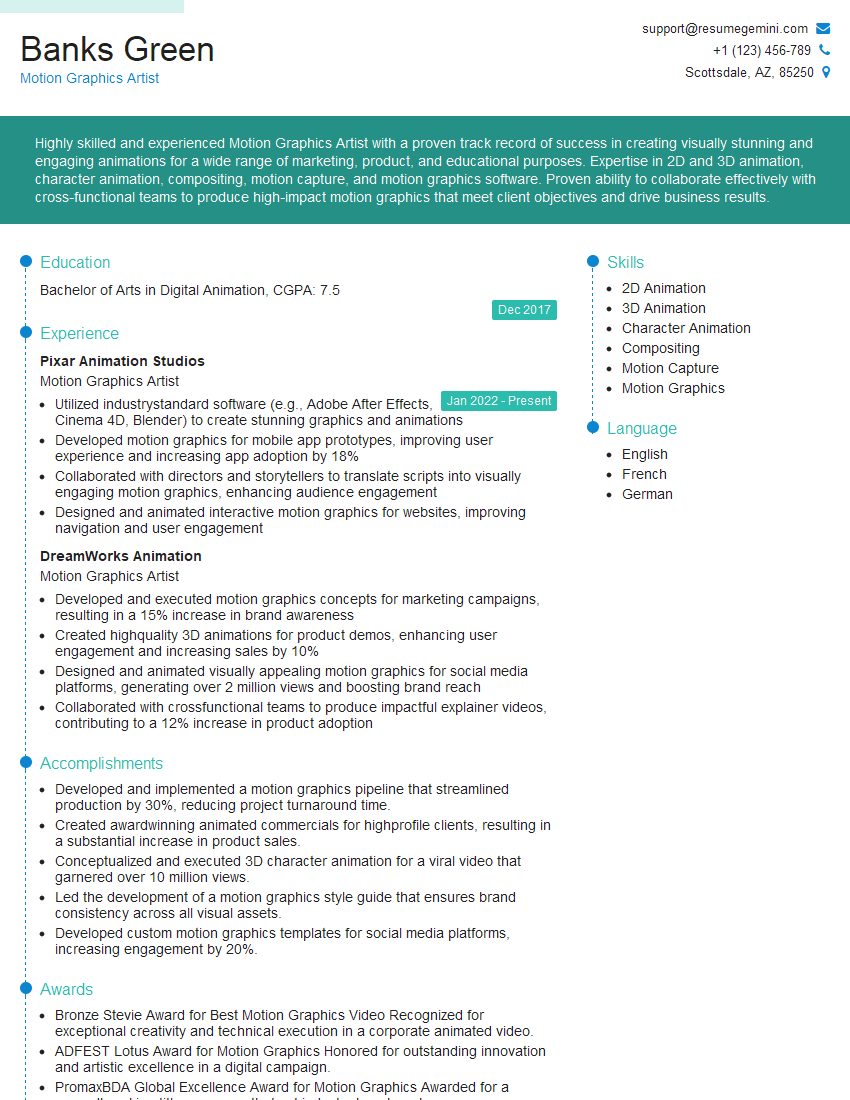

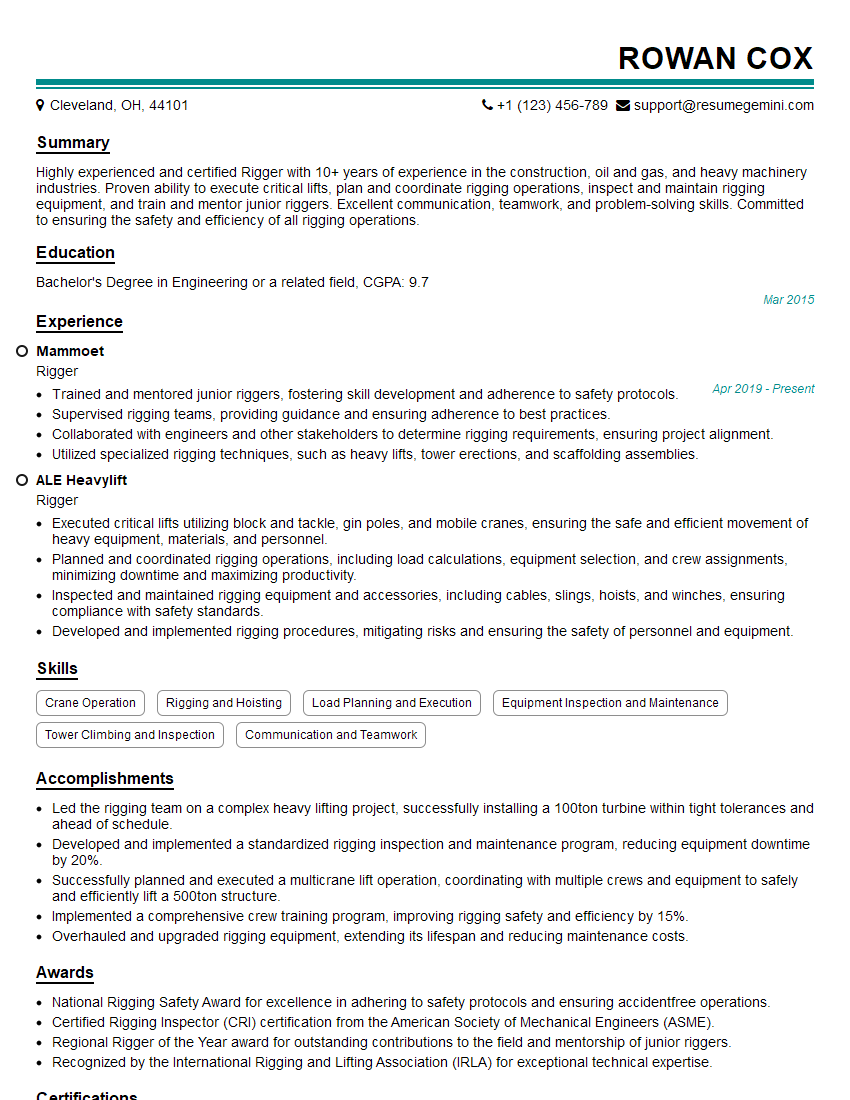

Mastering animation and rendering skills opens doors to exciting careers in film, games, advertising, and more! To maximize your job prospects, crafting an ATS-friendly resume is crucial. ResumeGemini is a trusted resource to help you build a professional and impactful resume that gets noticed by recruiters. We provide examples of resumes tailored to the Animation and Rendering industry to give you a head start. Invest the time to create a resume that truly showcases your skills and experience – it’s your first impression!
