Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important GPS/GIS interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in GPS/GIS Interview

Q 1. Explain the difference between geographic coordinates and projected coordinates.

Geographic coordinates, also known as latitude and longitude, represent a location on the Earth’s spherical surface using angles. Latitude measures the north-south position relative to the Equator (0°), ranging from -90° (South Pole) to +90° (North Pole). Longitude measures the east-west position relative to the Prime Meridian (0°), ranging from -180° to +180°. Think of it like finding a specific seat in a giant, spherical stadium: latitude tells you the row, and longitude tells you the seat number.

Projected coordinates, on the other hand, transform those spherical coordinates onto a flat, two-dimensional plane. This is necessary because it’s impossible to perfectly flatten a sphere without distortion. Projected coordinates are usually expressed in Cartesian coordinates (X, Y), representing eastings and northings, based on a specific map projection. Imagine taking a photo of that spherical stadium; you’ll have a flat image with distorted perspectives, but each seat still has a position on that flat image, represented by X and Y coordinates.
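To make the difference concrete, here is a minimal sketch of one projection: the spherical Mercator formulas, which turn latitude and longitude in degrees into planar X and Y in meters. The function name is made up for this illustration, and the radius is the sphere used by Web Mercator (an assumption of this sketch, not something the answer above prescribes).

```python
import math

# Sphere radius in metres, as used by Web Mercator (assumption for this sketch).
EARTH_RADIUS_M = 6378137.0

def to_spherical_mercator(lat_deg, lon_deg, radius=EARTH_RADIUS_M):
    """Project geographic coordinates (degrees) to planar x/y (metres).

    Valid for latitudes strictly between -90 and +90; the poles map to infinity.
    """
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    x = radius * lon                                        # easting
    y = radius * math.log(math.tan(math.pi / 4 + lat / 2))  # northing
    return x, y

if __name__ == "__main__":
    # Angles in, metres out.
    print(to_spherical_mercator(48.8566, 2.3522))
```

The input is a pair of angles and the output is a pair of distances, which is exactly the shift from a geographic to a projected coordinate system.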

Q 2. What are the different map projections and when would you use each?

There are many map projections, each with strengths and weaknesses depending on the application. The choice of projection depends critically on the area you are mapping and the type of analysis you are performing.

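- Mercator Projection: A conformal projection, so it preserves angles and local shapes and draws lines of constant compass bearing as straight lines, making it ideal for navigation. However, it severely distorts area, particularly at higher latitudes. Think of Greenland appearing larger than South America on a typical world map, even though South America is roughly eight times larger in reality; that’s the Mercator effect.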

- Lambert Conformal Conic Projection: A conformal projection that keeps distortion low in regions with a predominantly east-west extent. Commonly used for mid-latitude regions in aeronautical and topographic mapping.

- Albers Equal-Area Conic Projection: Preserves area, making it suitable for thematic mapping where area comparisons are critical, such as showing population density or resource distribution across a country or continent. Shape distortion will increase away from the standard parallels.

- UTM (Universal Transverse Mercator): Divides the Earth into 60 zones, each 6° of longitude wide and each using its own transverse Mercator projection, which keeps distortion low within a zone. Excellent for large-scale (detailed) mapping of relatively small areas that fit inside a single zone.

- Robinson Projection: A compromise projection that balances area and shape distortions. It’s often used for world maps intended for general use, emphasizing visual appeal over strict accuracy of area or shape.

For example, a navigation app would likely use a Mercator-based projection (web maps typically use Web Mercator) because it preserves direction locally, while a map comparing the land areas of continents should use an equal-area projection, such as Mollweide for the whole world or Albers for a single continent or country.
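Picking the right UTM zone is a common practical step, and it can be sketched in a few lines. The snippet below assumes the standard 6° zone layout and the EPSG numbering for WGS84 UTM (326xx for the northern hemisphere, 327xx for the southern). It deliberately ignores the special zones around Norway and Svalbard, and the function names are hypothetical.

```python
import math

def utm_zone(lon_deg):
    """Return the standard UTM zone number (1-60) for a longitude in degrees."""
    zone = int(math.floor((lon_deg + 180.0) / 6.0)) + 1
    # Longitude +180 would otherwise produce zone 61, so clamp to the valid range.
    return min(max(zone, 1), 60)

def utm_epsg_wgs84(lat_deg, lon_deg):
    """Return the EPSG code of the WGS84 UTM zone containing a point.

    Simplification: the irregular zones near Norway and Svalbard are not handled.
    """
    base = 32600 if lat_deg >= 0 else 32700
    return base + utm_zone(lon_deg)

if __name__ == "__main__":
    print(utm_zone(-122.42), utm_epsg_wgs84(37.77, -122.42))   # San Francisco
    print(utm_zone(151.21), utm_epsg_wgs84(-33.87, 151.21))    # Sydney
```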

Q 3. Describe the process of georeferencing a raster image.

Georeferencing is the process of assigning real-world coordinates (in a geographic or projected coordinate system) to a raster image, such as a scanned map or aerial photo, so it can be integrated into a GIS. This involves aligning the image to a known coordinate system using control points.

- Identify Control Points: Select points on the image with known coordinates (obtained from a map with coordinate information or high-accuracy GPS data). These points should be distributed evenly across the image.

- Obtain Coordinates: Determine the real-world coordinates of these control points in the target coordinate system using a reliable source, like a high-resolution base map with a known coordinate system.

- Transform the Image: Use georeferencing software (like ArcGIS, QGIS) to apply a transformation (e.g., polynomial, affine) to warp the image, aligning the control points in the image to their corresponding geographic coordinates. The software calculates the mathematical transformation parameters.

- Verify Accuracy: Assess the accuracy of the georeferencing by examining the RMS (Root Mean Square) error. A lower RMS error indicates a better fit and higher accuracy. You might need to add more control points or refine the transformation type to improve accuracy.

Think of it like fitting a puzzle: the control points are the pieces you align perfectly to put the entire puzzle (the raster image) in its correct geographic context. This is crucial for overlaying the image with other geospatial data and performing spatial analysis.
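The transform and verify steps can be sketched without any GIS software. The example below fits a first-order (affine) transformation to control points by least squares and reports the RMS error. The control point values and function names are invented for illustration, and real georeferencing tools may use different estimators or higher-order transformations.

```python
import math

def solve_3x3(m, v):
    """Solve m * p = v for a 3x3 system (Gaussian elimination with pivoting)."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]
    for i in range(3):
        pivot = max(range(i, 3), key=lambda r: abs(a[r][i]))
        a[i], a[pivot] = a[pivot], a[i]
        for r in range(i + 1, 3):
            factor = a[r][i] / a[i][i]
            for c in range(i, 4):
                a[r][c] -= factor * a[i][c]
    p = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        p[i] = (a[i][3] - sum(a[i][c] * p[c] for c in range(i + 1, 3))) / a[i][i]
    return p

def fit_affine(pixel_pts, world_pts):
    """Least-squares affine fit from (col, row) pixels to (x, y) map coordinates.

    Needs at least three control points that are not all on one line.
    """
    rows = [(c, r, 1.0) for c, r in pixel_pts]
    ata = [[sum(u[i] * u[j] for u in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in (0, 1):  # solve once for x, once for y
        atb = [sum(u[i] * w[axis] for u, w in zip(rows, world_pts)) for i in range(3)]
        coeffs.append(solve_3x3(ata, atb))
    return coeffs  # [[a, b, c], [d, e, f]] with x = a*col + b*row + c, y = d*col + e*row + f

def apply_affine(coeffs, col, row):
    (a, b, c), (d, e, f) = coeffs
    return a * col + b * row + c, d * col + e * row + f

def rms_error(coeffs, pixel_pts, world_pts):
    """Root mean square of the residual distances at the control points."""
    total = 0.0
    for (col, row), (wx, wy) in zip(pixel_pts, world_pts):
        px, py = apply_affine(coeffs, col, row)
        total += (px - wx) ** 2 + (py - wy) ** 2
    return math.sqrt(total / len(pixel_pts))

if __name__ == "__main__":
    # Hypothetical control points: image corners and their map coordinates in metres.
    pixels = [(0, 0), (1000, 0), (0, 1000), (1000, 1000)]
    world = [(500000, 4100000), (502000, 4100000), (500000, 4098000), (502000, 4098000)]
    coeffs = fit_affine(pixels, world)
    print(coeffs, rms_error(coeffs, pixels, world))
```

With more control points than unknowns, the RMS error becomes a useful signal: a single badly placed point shows up as a large residual.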

Q 4. What is a shapefile and what are its components?

A shapefile is a widely used geospatial vector data format for storing the location, shape, and attributes of geographic features. It’s not a single file but a collection of files that work together. Think of it like a database record for geographic features.

- .shp: The main file storing the feature geometry (points, lines, or polygons).

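- .shx: The shape index file, which stores the position of each geometry record in the .shp file so features can be located quickly. Together with .shp and .dbf, it is one of the three mandatory files.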

- .dbf: The attribute table storing descriptive information about each feature (e.g., name, population, land use).

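- .prj: The projection file defining the coordinate system (optional, but without it the software has to guess or be told which coordinate system the data uses).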

- .sbn and .sbx: Spatial index files for faster data access (optional).

For instance, a shapefile could represent road networks (lines), building footprints (polygons), or the locations of trees (points). Each feature would have associated attributes, such as road name, building address, or tree species, stored in the .dbf file.

Q 5. Explain the concept of spatial autocorrelation.

Spatial autocorrelation describes the degree to which values at one location are related to values at nearby locations. In simpler terms, it measures the similarity of nearby things. Positive spatial autocorrelation means similar values tend to cluster together, negative spatial autocorrelation means neighboring values tend to differ (a checkerboard-like pattern), and values near zero indicate a spatially random distribution.

For example, high house prices might show spatial autocorrelation if expensive homes tend to be located near each other in a particular neighborhood. Similarly, the occurrence of a disease might show spatial autocorrelation if cases cluster in a specific geographic area, potentially indicating a common source of infection.

Understanding spatial autocorrelation is crucial for accurate spatial analysis because ignoring it can lead to flawed conclusions. Techniques like Moran’s I statistic are commonly used to quantify the strength and significance of spatial autocorrelation.
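If the interviewer asks how Moran’s I is actually computed, a small sketch helps. The function below implements the global Moran’s I formula for a list of values and a spatial weights matrix. The weights matrix (simple neighbor adjacency along a line) and the sample values are assumptions made up for the example, and significance testing is left out.

```python
def morans_i(values, weights):
    """Global Moran's I.

    values  : list of n observations x_i
    weights : n x n matrix (list of lists) with w_ij > 0 for neighbours, 0 otherwise
    """
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]
    w_sum = sum(sum(row) for row in weights)
    # Cross-products of deviations for every pair of neighbours.
    num = sum(weights[i][j] * dev[i] * dev[j] for i in range(n) for j in range(n))
    den = sum(d * d for d in dev)
    return (n / w_sum) * (num / den)

if __name__ == "__main__":
    # Four locations in a row; each is a neighbour of the one next to it.
    chain = [
        [0, 1, 0, 0],
        [1, 0, 1, 0],
        [0, 1, 0, 1],
        [0, 0, 1, 0],
    ]
    print(morans_i([1, 1, 5, 5], chain))  # similar values side by side
    print(morans_i([1, 5, 1, 5], chain))  # alternating values
```

The clustered arrangement gives a positive value and the alternating arrangement a negative one, which matches the intuition behind the statistic.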

Q 6. What are the different types of spatial analysis techniques?

Spatial analysis involves various techniques used to analyze and interpret spatial data. Here are some key examples:

- Buffering: Creating zones of a fixed distance around features (e.g., a buffer around a river as a first approximation of the area at risk of flooding).

- Overlay Analysis: Combining multiple layers to find spatial relationships (e.g., overlaying soil type and land use maps).

- Proximity Analysis: Calculating distances between features (e.g., finding the nearest hospital to an accident location).

- Network Analysis: Analyzing features within a network (e.g., finding the shortest route between two points on a road network).

- Spatial Interpolation: Estimating values at unsampled locations based on known values at sampled locations (e.g., estimating rainfall across a region based on measurements at weather stations).

- Density Analysis: Calculating the concentration of features (e.g., calculating population density).

The choice of technique depends heavily on the research question and available data. For example, to assess the impact of a proposed highway on nearby residential areas, one might use buffer analysis to define areas affected and overlay analysis to examine the interaction of the highway with existing land use patterns.
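As a small illustration of proximity analysis, the sketch below finds the nearest facility to an incident using great-circle (haversine) distance. The mean Earth radius of 6371 km, the facility names, and the coordinates are assumptions for the example. A real project would more likely use a projected coordinate system or network distance.

```python
import math

def haversine_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Great-circle distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))

def nearest(origin, candidates):
    """Return (name, distance_km) of the candidate closest to origin (lat, lon)."""
    distances = (
        (name, haversine_km(origin[0], origin[1], lat, lon))
        for name, (lat, lon) in candidates.items()
    )
    return min(distances, key=lambda item: item[1])

if __name__ == "__main__":
    # Hypothetical hospitals and accident location.
    hospitals = {"north": (51.56, -0.12), "east": (51.50, 0.20)}
    print(nearest((51.50, -0.12), hospitals))
```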

Q 7. How do you handle spatial data errors and inconsistencies?

Spatial data errors and inconsistencies can significantly impact the results of any analysis. Handling them requires a multi-step approach:

- Data Cleaning: This involves identifying and correcting errors such as incorrect coordinates, inconsistencies in attribute data, or duplicate features. This might require manual editing or automated tools and scripts.

- Data Validation: Check the data for topological errors (e.g., overlapping polygons, gaps between adjacent polygons, lines that fail to connect). GIS software often provides tools to identify and resolve these issues.

- Error Propagation Assessment: Understand how errors in input data might affect the results of your analysis. This might involve error propagation models to estimate the uncertainty in your output.

- Data Transformation and Reclassification: Adjust the data or create derived variables to improve its quality or suitability for analysis. For instance, reclassify land use codes to simplify the analysis.

- Appropriate Analysis Techniques: Choose analysis methods robust to the known errors in your data. If you have noisy data with many errors, using robust statistical methods is critical.

For instance, if you’re analyzing crime data, missing or inaccurate location information would require careful data cleaning and error propagation analysis to make sure your crime mapping accurately reflects the reality on the ground.
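A first automated cleaning pass can be very simple. The sketch below flags missing, out-of-range, (0, 0), and duplicate coordinates in a list of (latitude, longitude) records. The rules, the rounding precision, and the function name are illustrative assumptions; which checks matter depends on the dataset.

```python
def find_coordinate_issues(records):
    """Return a list of (index, reason) for records with suspicious coordinates.

    records: list of (lat, lon) tuples in decimal degrees; None marks a missing value.
    """
    issues = []
    seen = {}
    for i, (lat, lon) in enumerate(records):
        if lat is None or lon is None:
            issues.append((i, "missing coordinate"))
            continue
        if not (-90 <= lat <= 90 and -180 <= lon <= 180):
            issues.append((i, "out of range"))
        elif lat == 0 and lon == 0:
            # (0, 0) is a common placeholder produced by failed geocoding.
            issues.append((i, "null island (0, 0)"))
        key = (round(lat, 6), round(lon, 6))
        if key in seen:
            issues.append((i, f"duplicate of record {seen[key]}"))
        else:
            seen[key] = i
    return issues

if __name__ == "__main__":
    sample = [(51.5, -0.12), (0, 0), (95.0, 10.0), (51.5, -0.12), (None, 3.0)]
    for index, reason in find_coordinate_issues(sample):
        print(index, reason)
```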

Q 8. Describe your experience with different GIS software (e.g., ArcGIS, QGIS).

My GIS software experience spans several leading platforms. I’ve extensively used ArcGIS, particularly ArcGIS Pro, for complex geoprocessing tasks, spatial analysis, and creating high-quality cartographic outputs. ArcGIS’s powerful geodatabase management capabilities and extensive toolset are invaluable for large-scale projects. I’m also proficient in QGIS, an open-source alternative that provides a cost-effective solution for many applications. I appreciate QGIS’s flexibility, its plugin ecosystem, and its accessibility for users who might not have access to commercial software. In comparing the two, ArcGIS excels in its robustness and specialized extensions for specific industries, while QGIS offers unparalleled flexibility and community support. I’ve used both for projects ranging from environmental monitoring to urban planning, leveraging each platform’s strengths depending on the project requirements.

For example, in a recent environmental impact assessment, ArcGIS Pro’s 3D analysis tools were crucial for visualizing potential flood zones, while in another project involving community mapping, QGIS’s ease of use and open-source nature made it the ideal choice for collaboration with non-technical stakeholders.

Q 9. Explain the concept of a geodatabase.

A geodatabase is a structured repository for storing and managing geographic data. Think of it as a highly organized database specifically designed to handle the complexities of spatial information. Unlike simple shapefiles, a geodatabase offers superior data integrity, versioning capabilities, and the ability to relate different types of geographic data through a relational database model. This allows for efficient data management, especially in projects involving large datasets and multiple users. It’s essentially a container that provides a framework for organizing various spatial and attribute data, ensuring consistency and avoiding data redundancy.

For example, a geodatabase for a city might contain feature classes for streets, parcels, buildings, and utility lines, all linked together through relationships. This allows for complex queries like finding all properties within a certain distance of a proposed highway or identifying buildings within a specific flood zone—tasks that would be significantly more difficult with a simple collection of shapefiles.

Q 10. What are the different data models used in GIS?

GIS uses several data models to represent geographic features. The most common are:

- Vector Data Model: This model represents geographic features as points, lines, and polygons. Think of it as using precise coordinates to define the location and shape of features. This is ideal for representing discrete features like buildings, roads, and rivers.

- Raster Data Model: This model represents geographic features as a grid of cells or pixels, each with an assigned value. This is well-suited for representing continuous phenomena like elevation, temperature, or satellite imagery. Think of a digital photograph; each pixel has a color value.

- TIN (Triangulated Irregular Network): This model is a vector-based representation of a surface using a network of triangles. It’s particularly useful for representing terrain surfaces and allows for the interpolation of elevation values.

The choice of data model depends on the specific application. For example, a map of city streets would likely use a vector model, while a digital elevation model would use a raster model. Often, projects combine vector and raster data for a complete picture.

Q 11. How do you perform spatial joins and overlay analysis?

Spatial joins and overlay analysis are fundamental geoprocessing techniques used to combine information from different geographic datasets.

Spatial Join: This operation merges attributes from one feature class (the ‘join’ features) to another (the ‘target’ features) based on their spatial relationship. For instance, you might spatially join census data (join features) to a polygon layer representing neighborhood boundaries (target features) to assign census statistics to each neighborhood. The relationship could be based on containment (e.g., points within polygons), proximity (e.g., nearest neighbor), or intersection.

Overlay Analysis: This involves combining two or more spatial layers to create a new layer that contains information from both. Common overlay operations include:

- Union: Combines all features from the input layers.

- Intersect: Creates a new layer containing only the features that overlap in the input layers.

- Erase: Removes the areas of one layer that overlap with another.

- Clip: Extracts the portion of a layer that falls within the boundaries of another.

For example, an intersect operation between a land-use layer and a soil type layer would create a new layer showing the area of each soil type within each land-use zone. These techniques are essential for tasks such as site selection, impact assessment, and resource management.
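To show what a containment-based spatial join does under the hood, here is a minimal sketch using the ray-casting point-in-polygon test. The polygons, point IDs, and function names are made up, and the code ignores holes, multipart polygons, and points exactly on a boundary, all of which real GIS software handles.

```python
def point_in_polygon(x, y, ring):
    """Ray-casting test; ring is a list of (x, y) vertices of a simple polygon."""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Does this edge cross the horizontal line through the point?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def spatial_join(points, polygons):
    """Attach the name of the first containing polygon (or None) to each point."""
    joined = []
    for pid, (x, y) in points.items():
        match = next(
            (name for name, ring in polygons.items() if point_in_polygon(x, y, ring)),
            None,
        )
        joined.append((pid, match))
    return joined

if __name__ == "__main__":
    # Hypothetical neighbourhoods (target features) and incident points (join features).
    neighbourhoods = {
        "north": [(0, 5), (10, 5), (10, 10), (0, 10)],
        "south": [(0, 0), (10, 0), (10, 5), (0, 5)],
    }
    incidents = {"a": (2, 7), "b": (3, 1), "c": (20, 20)}
    print(spatial_join(incidents, neighbourhoods))
```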

Q 12. Describe your experience with GPS data collection and processing.

My experience with GPS data collection and processing is extensive. I am familiar with various GPS receivers, from handheld units to those integrated into vehicles or drones. Data collection often involves planning a survey design, including selecting appropriate sampling strategies and ensuring sufficient data density. During data collection, careful attention is given to obtaining high-quality positioning data, minimizing signal obstruction, and documenting any potential errors. Post-processing involves cleaning the data by removing outliers, correcting for systematic errors using differential GPS (DGPS) or other techniques, and converting the data into appropriate formats for analysis and visualization within GIS software.

For example, I’ve used GPS data to map trails in remote areas, track vehicle movements for transportation analysis, and monitor wildlife movements for ecological studies. The process typically includes the use of specialized GPS software for planning routes, managing waypoints, and collecting data in the field. Subsequently, I’ve used GIS software to visualize the collected data, perform spatial analysis, and generate maps and reports.

Q 13. What are some common GPS error sources and how can they be mitigated?

GPS data is inherently subject to various error sources. Understanding and mitigating these errors is crucial for accurate geospatial analysis.

- Atmospheric Effects: Ionospheric and tropospheric delays can affect signal propagation, leading to positional errors.

- Multipath Errors: Signals reflecting off surfaces like buildings or water bodies can create inaccurate position readings.

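- Satellite Geometry (GDOP): The relative positions of the satellites being tracked influence the accuracy of the position estimate. This is summarized by the Geometric Dilution of Precision (GDOP); satellites bunched together in one part of the sky give a high GDOP and therefore higher error.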

- Receiver Noise: Electronic noise in the receiver can introduce errors in the measurements.

- Clock Errors: Inaccuracies in the clocks of GPS satellites and the receiver contribute to errors.

These errors can be mitigated through various techniques:

- Differential GPS (DGPS): Using a reference station with known coordinates to correct for systematic errors.

- Real-Time Kinematic (RTK) GPS: Using carrier-phase measurements together with real-time corrections from a base station or correction network, typically reaching centimeter-level accuracy.

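- Post-Processed Kinematic (PPK): Logging raw observations at the rover and at one or more reference stations, then applying the corrections after the survey. This is useful where no real-time correction link is available in the field.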

- Data Filtering: Removing outliers and smoothing data to reduce the impact of noise.

Careful planning of data collection, appropriate receiver selection, and robust post-processing techniques are critical for minimizing GPS errors and achieving the desired level of accuracy.
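The data filtering step can be illustrated with a simple speed check: a fix is dropped when reaching it from the last accepted fix would require an implausible speed. The sketch assumes the track is already in a projected coordinate system (meters), that the first fix is trustworthy, and that the threshold and sample track are invented for the example.

```python
import math

def drop_speed_outliers(fixes, max_speed_mps):
    """Keep fixes whose implied speed from the last kept fix is plausible.

    fixes: list of (t_seconds, x_m, y_m) tuples sorted by time, in a projected CRS.
    The first fix is assumed to be good.
    """
    if not fixes:
        return []
    kept = [fixes[0]]
    for t, x, y in fixes[1:]:
        t0, x0, y0 = kept[-1]
        dt = t - t0
        if dt <= 0:
            continue  # duplicate or out-of-order timestamp
        speed = math.hypot(x - x0, y - y0) / dt
        if speed <= max_speed_mps:
            kept.append((t, x, y))
    return kept

if __name__ == "__main__":
    # Hypothetical walking track with one multipath spike at t = 2.
    track = [(0, 0.0, 0.0), (1, 1.5, 0.0), (2, 250.0, 40.0), (3, 4.4, 0.2), (4, 6.0, 0.0)]
    print(drop_speed_outliers(track, max_speed_mps=3.0))
```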

Q 14. Explain the concept of coordinate systems and datums.

Coordinate systems and datums are fundamental concepts in GPS and GIS. They define how locations on the Earth’s surface are represented numerically.

Datum: A datum is a reference model of the Earth’s shape and size, used as a basis for defining coordinate systems. It defines the origin and orientation of a coordinate system and is crucial because the Earth is not perfectly spherical. Different datums exist because various organizations have developed their own models based on different survey data and mathematical approaches (e.g., WGS84, NAD83). The choice of datum influences the accuracy and consistency of spatial data.

Coordinate System: A coordinate system is a mathematical framework used to define the location of points on the Earth’s surface. Every coordinate system is tied to a datum; a projected coordinate system additionally specifies a map projection and its parameters.

- Geographic Coordinate Systems (GCS): Use latitude and longitude to express coordinates. Latitude and longitude are angles measuring a position relative to the equator and prime meridian, respectively.

- Projected Coordinate Systems (PCS): Project the curved surface of the Earth onto a flat plane, introducing distortions. Different projections are designed to minimize different types of distortion depending on the application (e.g., UTM, State Plane).

Understanding and selecting the appropriate coordinate system and datum are essential for ensuring the accuracy and compatibility of geospatial data. Mixing coordinate systems or datums without transforming between them can shift features by anything from about a meter to hundreds of meters and corrupt spatial analysis and mapping. For example, a projected system such as the UTM zone covering the study area is the right choice for measuring distances and areas in regional and local projects, while geographic coordinates on WGS84 (EPSG:4326) are the usual choice for storing and exchanging global data.
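One way to see why the datum matters is to convert latitude, longitude, and height into Earth-centered Cartesian (ECEF) coordinates, which requires the ellipsoid’s size and flattening. The sketch below uses the WGS84 ellipsoid constants; the function name is hypothetical, and the example only covers the ellipsoid part of a datum, not the shifts between datums.

```python
import math

WGS84_A = 6378137.0           # semi-major axis in metres
WGS84_F = 1 / 298.257223563   # flattening

def geodetic_to_ecef(lat_deg, lon_deg, h_m=0.0, a=WGS84_A, f=WGS84_F):
    """Convert geodetic coordinates on an ellipsoid to ECEF x, y, z in metres."""
    e2 = f * (2 - f)  # first eccentricity squared
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    # Radius of curvature in the prime vertical at this latitude.
    n = a / math.sqrt(1 - e2 * math.sin(lat) ** 2)
    x = (n + h_m) * math.cos(lat) * math.cos(lon)
    y = (n + h_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1 - e2) + h_m) * math.sin(lat)
    return x, y, z

if __name__ == "__main__":
    print(geodetic_to_ecef(0.0, 0.0))    # on the Equator at the Prime Meridian
    print(geodetic_to_ecef(90.0, 0.0))   # North Pole
```

The pole is about 21 km closer to the Earth’s center than a point on the Equator, which is the flattening a spherical model would miss.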

Q 15. How do you ensure data accuracy and integrity in a GIS project?

Ensuring data accuracy and integrity in a GIS project is paramount. It’s like building a house – you need a solid foundation to avoid cracks later. We achieve this through a multi-faceted approach:

- Data Source Validation: I meticulously evaluate the reliability of data sources. This includes assessing the source’s reputation, methodology used for data collection (e.g., GPS accuracy, survey methods), and the potential for biases. For example, using data from a well-established government agency is generally more reliable than an unverified online source.

- Metadata Management: Comprehensive metadata documentation is crucial. This involves recording details about the data’s origin, projection, accuracy, and any processing steps applied. Think of it as a data passport, ensuring traceability and understandability.

- Data Cleaning and Transformation: Raw data often contains errors or inconsistencies. I employ techniques like spatial consistency checks (e.g., ensuring lines connect properly), attribute error correction (identifying and fixing incorrect values), and projection transformations to maintain uniformity. This is akin to editing a poorly written manuscript before publication.

- Data Validation and Quality Control: Regular data validation using automated checks and visual inspections is essential. I use both manual checks (visualizing the data on a map) and automated tools within GIS software to detect inconsistencies. For instance, I might use spatial queries to identify overlaps or gaps in polygon features.

- Version Control: Employing a version control system (like Git) allows tracking changes made to the data, enabling rollback to previous versions if necessary. This prevents accidental data loss and aids in collaborative work.

By combining these methods, I create a robust system for data quality assurance, ultimately leading to more accurate and reliable GIS projects.

Q 16. Describe your experience with spatial statistics.

My experience with spatial statistics is extensive, encompassing both descriptive and inferential techniques. I regularly use spatial statistics to understand patterns and relationships within geospatial data. Imagine trying to understand the spread of a disease—spatial statistics helps pinpoint hotspots and identify influencing factors.

- Descriptive Statistics: I frequently utilize tools such as spatial autocorrelation analysis (e.g., Moran’s I) to assess the degree of clustering or dispersion in a dataset. For instance, I might use this to analyze the spatial distribution of crime incidents to identify high-risk areas.

- Inferential Statistics: I use geostatistical methods like kriging to interpolate values at unsampled locations. This is very useful in environmental modeling, like estimating soil contamination levels based on a limited set of samples.

- Spatial Regression: I have experience building regression models that account for spatial dependence. For example, I might model the relationship between property values and proximity to parks, considering the spatial influence of neighboring properties.

Software packages like ArcGIS Spatial Analyst and R are my go-to tools for these analyses. I’ve successfully applied these techniques to projects involving environmental monitoring, urban planning, and public health.

Q 17. What is remote sensing and how is it integrated with GIS?

Remote sensing involves acquiring information about the Earth’s surface without physical contact. Think of it as getting a bird’s-eye view using satellites or airplanes. GIS, on the other hand, is the system for organizing, managing, and analyzing this spatial data. The integration of remote sensing and GIS is synergistic; they empower each other.

- Data Acquisition: Remote sensing provides the data—satellite imagery, aerial photos, LiDAR data—which is then processed and analyzed using GIS software.

- Data Processing: GIS tools are used to georeference (assign geographical coordinates) and process remotely sensed data, correcting for geometric distortions and enhancing image quality, for example by masking cloud cover or pan-sharpening multispectral bands with a higher-resolution panchromatic band.

- Spatial Analysis: GIS allows spatial analysis of remotely sensed data, enabling tasks such as land cover classification, change detection, and environmental monitoring. For example, we can analyze satellite images to track deforestation over time.

- Visualization and Mapping: GIS creates compelling visualizations to communicate insights derived from remote sensing data, fostering better understanding and decision-making.

A practical example is using satellite imagery to monitor the spread of wildfires. Remote sensing provides the imagery showing the fire’s progression, while GIS allows for analysis of its movement, impact, and prediction of its spread.

Q 18. How do you handle large spatial datasets?

Handling large spatial datasets requires strategic approaches. Think of it like managing a massive library—you need a system for efficient organization and retrieval. My strategies involve:

- Data Compression and Tiling: Storing rasters in tiled, compressed formats with overviews (e.g., compressed GeoTIFF instead of raw image formats) reduces storage space and speeds up reading subsets of the data.

- Database Management Systems (DBMS): Utilizing spatial DBMS (e.g., PostGIS, Oracle Spatial) allows efficient storage, querying, and retrieval of large spatial datasets. This is like having a sophisticated cataloging system for your library.

- Data Partitioning and Subsetting: Dividing large datasets into smaller, manageable chunks (partitioning) allows focusing analysis on specific areas of interest (subsetting). This is similar to organizing the library by subject matter.

- Cloud Computing: Cloud-based platforms (e.g., AWS, Azure, Google Cloud) provide scalable resources for processing and analyzing large datasets that would be too demanding for local machines.

- Parallel Processing: Utilizing parallel processing capabilities within GIS software or custom scripts can drastically reduce processing time, making analysis of large datasets feasible.

I routinely work with datasets exceeding hundreds of gigabytes, leveraging these strategies to ensure efficient and timely analysis.
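The partitioning idea can be sketched as a generator that splits a raster’s pixel grid into fixed-size windows, so each tile can be read and processed on its own (or handed to a separate worker). The function name and the tile size are assumptions for illustration; raster libraries usually offer their own windowed reading.

```python
def tile_windows(width, height, tile_size):
    """Yield (col_off, row_off, tile_width, tile_height) windows covering a raster.

    Edge tiles are clipped so no window extends past the raster.
    """
    for row_off in range(0, height, tile_size):
        for col_off in range(0, width, tile_size):
            yield (
                col_off,
                row_off,
                min(tile_size, width - col_off),
                min(tile_size, height - row_off),
            )

if __name__ == "__main__":
    # A hypothetical 2500 x 1000 pixel raster processed in 1024-pixel tiles.
    for window in tile_windows(2500, 1000, 1024):
        print(window)
```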

Q 19. Explain your experience with creating maps and visualizations.

Map creation and visualization are core to my GIS expertise. It’s about communicating complex spatial information effectively – like translating a scientific paper into a visually compelling story.

- Software Proficiency: I’m proficient in various GIS software packages, including ArcGIS Pro, QGIS, and Mapbox Studio, utilizing their cartographic capabilities to produce high-quality maps.

- Cartographic Principles: I apply established cartographic principles, such as map design, symbolization, and labeling, to create clear, informative, and aesthetically pleasing maps. This ensures effective communication of geographic information.

- Data Visualization Techniques: Beyond static maps, I use various visualization techniques, including 3D visualizations, interactive web maps, and animated maps, depending on the project’s goals. This is akin to using different storytelling formats to suit different audiences.

- Customization: I tailor map design elements (e.g., color schemes, fonts, legends) to the specific needs of the audience and the data being presented.

For instance, I created interactive web maps for a city’s planning department, allowing citizens to explore proposed infrastructure projects and provide feedback. In another project, I used animated maps to demonstrate the impact of climate change on coastal erosion over time.

Q 20. What are the ethical considerations in handling geospatial data?

Ethical considerations in handling geospatial data are paramount. It’s not just about technology; it’s about responsibility and social impact. Think of it like wielding a powerful tool – you must use it wisely.

- Data Privacy and Security: Protecting sensitive personal information embedded within geospatial data (e.g., location data, demographic information) is crucial. This includes adhering to relevant privacy regulations and implementing secure data storage and access controls.

- Data Accuracy and Transparency: Ensuring data accuracy and transparency is essential to prevent bias and misrepresentation. Clearly communicating data limitations and potential sources of error is paramount.

- Data Ownership and Intellectual Property: Respecting data ownership and intellectual property rights is vital. Proper attribution of data sources and adherence to licensing agreements is non-negotiable.

- Social Equity and Justice: Geospatial data can be used to reinforce existing inequalities or create new ones if not handled carefully. I strive to use GIS for equitable outcomes, considering potential biases and their impact on marginalized communities.

- Misuse Prevention: Understanding how geospatial data could be misused and taking steps to prevent such misuse is essential. For example, location data could be exploited for harmful purposes, and it’s important to mitigate such risks.

I approach every project with an ethical framework, striving to use geospatial technology responsibly and for the benefit of society.

Q 21. Describe your experience with GIS programming (e.g., Python, R).

GIS programming is an essential part of my workflow, significantly enhancing my analytical and automation capabilities. I primarily use Python and R, employing them for various tasks.

- Python: I use Python with libraries like geopandas, shapely, and rasterio for data manipulation, spatial analysis, and automation of repetitive tasks. For example, I wrote a Python script to automate the process of converting large raster datasets into a more efficient format, saving significant time and effort. A snippet illustrating this would be:

```python
import rasterio
from rasterio.warp import calculate_default_transform, reproject, Resampling

# ... (code for opening and reprojecting raster data) ...
```

- R: R, with packages such as sf and sp, is my preferred tool for advanced spatial statistics and creating publication-quality maps. I’ve used R to develop complex spatial regression models to understand the factors influencing urban sprawl.

My programming skills allow me to tailor my GIS workflow to specific project needs, increasing efficiency and enabling analyses that are otherwise impossible with standard GIS software alone.

Q 22. How do you use GPS data for navigation and routing?

GPS data forms the backbone of navigation and routing systems. Essentially, a GPS receiver determines its location by receiving signals from a constellation of satellites. This location data, typically expressed in latitude and longitude coordinates, is then fed into a routing algorithm.

This algorithm uses a digital map (often stored as a road network dataset in a GIS), which contains information about roads, intersections, speed limits, and other relevant features. The algorithm calculates the shortest or fastest route between a starting point and a destination, considering various factors like traffic conditions (if available), road closures, and user preferences (e.g., avoiding highways).

For example, a navigation app on your smartphone receives your GPS coordinates, identifies your location on the digital map, and then computes the route to your chosen destination, providing turn-by-turn instructions based on the calculated path. This process is constantly updated as your location changes, allowing for dynamic rerouting if necessary.
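The routing step is often implemented with a shortest-path algorithm such as Dijkstra’s. Below is a minimal sketch on a tiny, made-up road network where edge costs could be travel time in minutes. Production routing engines use far larger graphs and faster variants, so treat this as an illustration of the idea only.

```python
import heapq

def shortest_route(graph, start, goal):
    """Dijkstra's algorithm; graph maps node -> {neighbour: non-negative cost}.

    Returns (total_cost, path) or (inf, []) if the goal cannot be reached.
    """
    queue = [(0.0, start, [start])]
    best = {start: 0.0}
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry; a cheaper route to this node was found
        for nxt, weight in graph.get(node, {}).items():
            new_cost = cost + weight
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                heapq.heappush(queue, (new_cost, nxt, path + [nxt]))
    return float("inf"), []

if __name__ == "__main__":
    # Hypothetical one-way road segments with travel times in minutes.
    roads = {
        "A": {"B": 4, "C": 2},
        "B": {"D": 5},
        "C": {"B": 1, "D": 8},
        "D": {},
    }
    print(shortest_route(roads, "A", "D"))
```

Rerouting after a closure amounts to removing or re-weighting an edge and running the search again from the current position.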

Q 23. Explain your experience with different types of spatial interpolation.

Spatial interpolation is a crucial technique in GIS for estimating values at unsampled locations based on known values at sampled locations. I have extensive experience with several methods, each with its strengths and weaknesses.

- Inverse Distance Weighting (IDW): This is a simple and widely used method where the value at an unsampled point is a weighted average of the values at nearby sampled points. The weights are inversely proportional to the distance from the unsampled point raised to a chosen power (commonly 2). It’s easy to implement but can be sensitive to outliers and tends to produce bull’s-eye patterns around sample points.

- Kriging: A more sophisticated geostatistical method that takes into account the spatial autocorrelation of the data. It provides estimates along with associated uncertainties, which is a significant advantage. Different Kriging variants exist, such as ordinary Kriging and universal Kriging, each suitable for different data characteristics.

- Spline Interpolation: This method fits a smooth surface through the known data points. Different types of splines exist, offering varying degrees of smoothness and flexibility. It’s useful when a smooth surface is desired, but it can overfit the data if not carefully parameterized.

In my experience, the choice of interpolation method depends heavily on the nature of the data, the desired level of accuracy, and the computational resources available. For example, in a project involving soil contamination mapping, I opted for Kriging to account for the spatial correlation in contamination levels and provide uncertainty estimates for regulatory reporting.
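As an illustration of the simplest of these methods, the following is a minimal IDW sketch in plain Python. The function name `idw`, the sample tuples, and the default power of 2 are assumptions made for this example; production work would normally rely on a GIS package’s interpolation tools.

```python
import math

def idw(samples, x, y, power=2.0):
    """Estimate the value at (x, y) from (sx, sy, value) samples using IDW."""
    numerator = 0.0
    denominator = 0.0
    for sx, sy, value in samples:
        distance = math.hypot(x - sx, y - sy)
        if distance == 0.0:
            return value  # the query point coincides with a sample
        weight = 1.0 / distance ** power
        numerator += weight * value
        denominator += weight
    return numerator / denominator

if __name__ == "__main__":
    # Two hypothetical measurements along a line.
    readings = [(0.0, 0.0, 10.0), (2.0, 0.0, 20.0)]
    print(idw(readings, 1.0, 0.0))  # midpoint: both samples weigh equally
    print(idw(readings, 0.5, 0.0))  # closer to the first sample
```

Raising `power` makes the estimate follow the nearest samples more closely, while lowering it produces a smoother surface.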

Q 24. Describe your experience working with various map projections and coordinate systems.

Map projections and coordinate systems are fundamental in GIS. A map projection is a systematic transformation of the Earth’s three-dimensional surface onto a two-dimensional plane. This process inevitably introduces distortions in area, shape, distance, or direction. Different projections are optimized for different purposes and geographic regions.

I’ve worked extensively with various projections, including:

- Equidistant projections: Preserve accurate distances from one or more central points.

- Conformal projections: Preserve local angles, and therefore the shape of small areas. The Mercator projection (commonly used for navigation) is a well-known example.

- Equal-area projections: Preserve the relative areas of features.

Coordinate systems define how locations are represented numerically. I am proficient with geographic coordinate systems (GCS), such as latitude and longitude, and projected coordinate systems (PCS), which transform geographic coordinates into a planar system. Understanding the implications of projection choices is critical to avoid errors in spatial analysis. For instance, distances measured directly on a Mercator projection can be significantly inaccurate at high latitudes, because the projection’s scale grows with latitude in every direction (by a factor of roughly 1/cos(latitude), which is about 2 at 60°).

In a project involving analyzing land use change, I carefully selected an appropriate projection (Albers Equal-Area Conic) to minimize distortions in area calculations.
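The Mercator distance distortion mentioned above can be checked numerically. The sketch below compares a great-circle (haversine) distance with the straight-line distance between the same two points after a spherical Mercator projection. It assumes a spherical Earth with a mean radius of 6,371 km, and the function names are chosen for this example only.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # assumed mean radius, spherical approximation

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points (degrees)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def mercator_xy(lat, lon):
    """Spherical Mercator projection of a lat/lon point (degrees) to metres."""
    x = EARTH_RADIUS_M * math.radians(lon)
    y = EARTH_RADIUS_M * math.log(math.tan(math.pi / 4 + math.radians(lat) / 2))
    return x, y

def mercator_planar_m(lat1, lon1, lat2, lon2):
    """Straight-line distance between two points on the Mercator plane."""
    x1, y1 = mercator_xy(lat1, lon1)
    x2, y2 = mercator_xy(lat2, lon2)
    return math.hypot(x2 - x1, y2 - y1)

def distortion_ratio(lat, dlon=1.0):
    """Planar-to-true distance ratio for a short east-west segment at `lat`."""
    return mercator_planar_m(lat, 0.0, lat, dlon) / haversine_m(lat, 0.0, lat, dlon)

if __name__ == "__main__":
    for lat in (0.0, 30.0, 60.0):
        print(lat, round(distortion_ratio(lat), 3))
```

The ratio stays close to 1 at the Equator and approaches 2 at 60° latitude, which is why distance and area measurements are better made in a projection chosen for that purpose.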

Q 25. Explain your approach to problem-solving in a GIS context.

My approach to GIS problem-solving is systematic and iterative. I typically follow these steps:

- Problem Definition: Clearly define the problem, including the objectives, data requirements, and desired outputs.

- Data Acquisition and Preparation: Gather relevant data from various sources, ensuring data quality and consistency. This may involve data cleaning, transformation, and projection adjustments.

- Spatial Analysis: Apply appropriate GIS techniques (e.g., overlay analysis, buffer analysis, network analysis) to address the problem.

- Visualization and Interpretation: Create informative maps and charts to visualize the results and interpret their meaning. This often involves careful consideration of symbology and cartographic principles.

- Validation and Refinement: Validate the results against known information or independent data sources. Iterate on the process to refine the analysis and improve accuracy.

For example, when tasked with identifying suitable locations for a new hospital, I used a multi-criteria decision analysis approach, integrating data on population density, accessibility, proximity to existing healthcare facilities, and land availability to identify optimal sites.
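One common way to implement a multi-criteria analysis like this is a weighted sum of normalized criterion scores. The sketch below is a simplified illustration, not the actual project workflow: the site names, criterion names, scores, and weights are all hypothetical, and each score is assumed to be already normalized to the range 0 to 1 (higher is better).

```python
def weighted_suitability(sites, weights):
    """Rank candidate sites by a weighted sum of criterion scores in [0, 1]."""
    total_weight = sum(weights.values())
    ranked = []
    for name, scores in sites.items():
        score = sum(weights[c] * scores[c] for c in weights) / total_weight
        ranked.append((name, round(score, 3)))
    # Highest suitability first.
    ranked.sort(key=lambda item: item[1], reverse=True)
    return ranked

# Hypothetical criterion weights (relative importance).
WEIGHTS = {
    "population_density": 0.4,
    "accessibility": 0.3,
    "distance_from_existing": 0.2,
    "land_availability": 0.1,
}

# Hypothetical candidate sites with normalized scores.
SITES = {
    "Site A": {"population_density": 0.9, "accessibility": 0.6,
               "distance_from_existing": 0.3, "land_availability": 0.5},
    "Site B": {"population_density": 0.5, "accessibility": 0.8,
               "distance_from_existing": 0.9, "land_availability": 0.7},
    "Site C": {"population_density": 0.4, "accessibility": 0.4,
               "distance_from_existing": 0.6, "land_availability": 1.0},
}

if __name__ == "__main__":
    for name, score in weighted_suitability(SITES, WEIGHTS):
        print(name, score)
```

In a GIS the same calculation is usually applied cell by cell to raster layers, and the weights are worth testing with a sensitivity analysis because they drive the ranking.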

Q 26. What is your experience with spatial decision support systems?

Spatial Decision Support Systems (SDSS) are computer-based systems that integrate GIS with other tools to assist in making location-based decisions. My experience includes developing and using SDSS for various applications.

For example, I developed an SDSS for optimizing the placement of new wind turbines, incorporating factors such as wind speed data, land use restrictions, and proximity to transmission lines. The system allowed users to interactively explore different scenarios and evaluate the potential impact of various placement strategies. This involved integrating GIS with optimization algorithms and creating a user-friendly interface for decision-makers.

Another example involved using an existing SDSS for transportation planning, helping to identify optimal locations for new bus stops based on population density, accessibility, and proximity to existing transit routes. The SDSS significantly streamlined the decision-making process by providing a quantitative basis for evaluating different options.

Q 27. How do you stay up-to-date with the latest advancements in GPS and GIS technology?

Staying current in the rapidly evolving fields of GPS and GIS is crucial. I employ a multi-pronged approach:

- Professional Conferences and Workshops: Attending conferences such as Esri User Conferences and participating in specialized workshops keeps me informed about the latest software and techniques.

- Peer-Reviewed Publications: Regularly reading journals like the International Journal of Geographical Information Science and Geoinformatics provides access to cutting-edge research.

- Online Courses and Webinars: Utilizing online platforms like Coursera and edX allows me to learn new skills and deepen my understanding of specific topics.

- Professional Networks: Engaging with other professionals through organizations such as the Urban and Regional Information Systems Association (URISA) fosters knowledge exchange and exposure to new ideas.

- Software Updates and Documentation: Keeping abreast of software updates and consulting online documentation ensures I am proficient with the latest functionalities.

This combination helps me maintain a high level of expertise and adapt to the constantly evolving technological landscape.

Q 28. Describe a challenging GIS project and how you overcame the difficulties.

One particularly challenging project involved creating a flood risk assessment model for a coastal city. The difficulty stemmed from the need to integrate diverse datasets of varying quality and spatial resolutions. These included high-resolution elevation data, historical flood extent maps, land use data, and sea-level rise projections.

The challenge was compounded by inconsistencies in the data’s coordinate systems and the need to account for the complex interactions between various factors influencing flood risk. To overcome these difficulties, I employed a rigorous data cleaning and pre-processing workflow, involving extensive georeferencing and projection adjustments to ensure data consistency. I used advanced spatial analysis techniques, such as hydrological modeling, to simulate flood inundation under various scenarios.

Crucially, I collaborated closely with stakeholders, including city officials, emergency management personnel, and local residents, to ensure that the model’s outputs were relevant, understandable, and useful for informing risk mitigation strategies. Through meticulous attention to data quality, robust analytical techniques, and effective stakeholder engagement, we successfully delivered a reliable and impactful flood risk assessment that has significantly contributed to improving the city’s resilience to flooding.
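To give a feel for the inundation step, here is a deliberately simplified sketch. It is not the hydrological model described above but a connectivity-aware “bathtub” approach: a cell floods only if it lies below the water level and is connected to a source cell (for example, the coastline) through other flooded cells. The elevation grid, source cell, and water levels are hypothetical.

```python
from collections import deque

def flooded_cells(elevation, water_level, sources):
    """Return the set of (row, col) cells below water_level reachable from a source."""
    rows, cols = len(elevation), len(elevation[0])
    # Start from source cells that are themselves below the water level.
    queue = deque(s for s in sources if elevation[s[0]][s[1]] < water_level)
    flooded = set(queue)
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in flooded
                    and elevation[nr][nc] < water_level):
                flooded.add((nr, nc))
                queue.append((nr, nc))
    return flooded

# Hypothetical elevation grid in metres; the sea borders cell (0, 0).
DEM = [
    [0.5, 1.0, 3.0, 1.2],
    [0.8, 2.5, 3.5, 1.0],
    [1.5, 2.8, 4.0, 0.9],
]

if __name__ == "__main__":
    print(sorted(flooded_cells(DEM, 2.0, [(0, 0)])))
```

The low-lying right-hand column stays dry at a 2.0 m water level because higher ground separates it from the source, which a plain elevation threshold would miss.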

Key Topics to Learn for Your GPS/GIS Interview

Ace your next interview by mastering these core concepts. Remember, understanding the “why” behind the technology is as crucial as knowing the “how.”

- GPS Fundamentals: Understand GPS signal acquisition, trilateration (position fixing from measured distances to several satellites), error sources (like atmospheric delays and multipath), and differential GPS techniques. Consider the limitations and how to mitigate inaccuracies.

- GIS Data Models: Become proficient in vector and raster data structures, their strengths and weaknesses, and when to use each. Explore different map projections and coordinate systems and their impact on spatial analysis.

- Spatial Analysis Techniques: Practice common spatial analysis methods such as buffering, overlay analysis (union, intersect, erase), proximity analysis, and network analysis. Be ready to discuss real-world applications of these techniques.

- GIS Software Proficiency: Showcase your expertise in industry-standard software like ArcGIS, QGIS, or other relevant platforms. Highlight your experience with data manipulation, geoprocessing tools, and map creation.

- Data Management and Quality: Understand the importance of data quality, metadata, and data cleaning processes. Be prepared to discuss strategies for ensuring data accuracy and reliability.

- Cartography and Visualization: Demonstrate your ability to create effective and visually appealing maps, choosing appropriate symbology, labeling, and map layouts to effectively communicate spatial information.

- Remote Sensing Principles (Optional): Depending on the role, understanding basic remote sensing concepts (e.g., image interpretation, spectral signatures) can be a significant advantage.

- Problem-Solving and Case Studies: Prepare to discuss how you’ve used GPS/GIS to solve real-world problems. Frame your examples using the STAR method (Situation, Task, Action, Result) to clearly articulate your contributions.

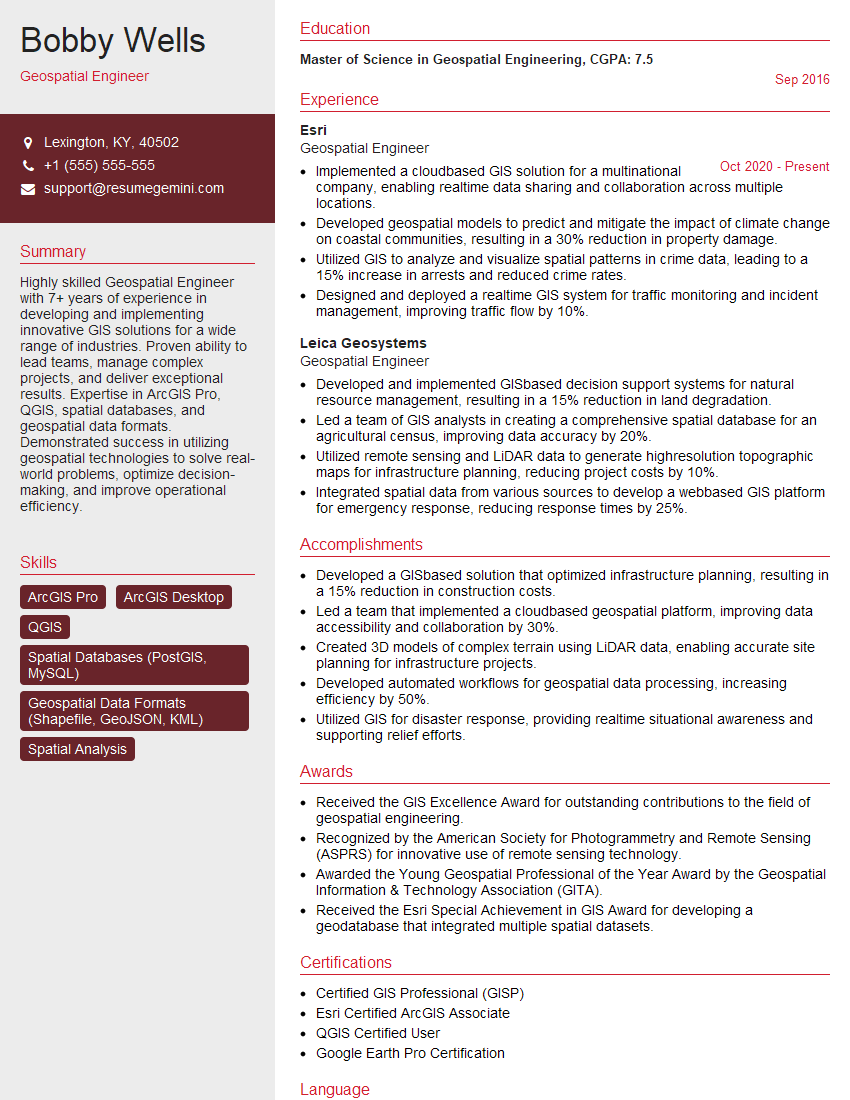

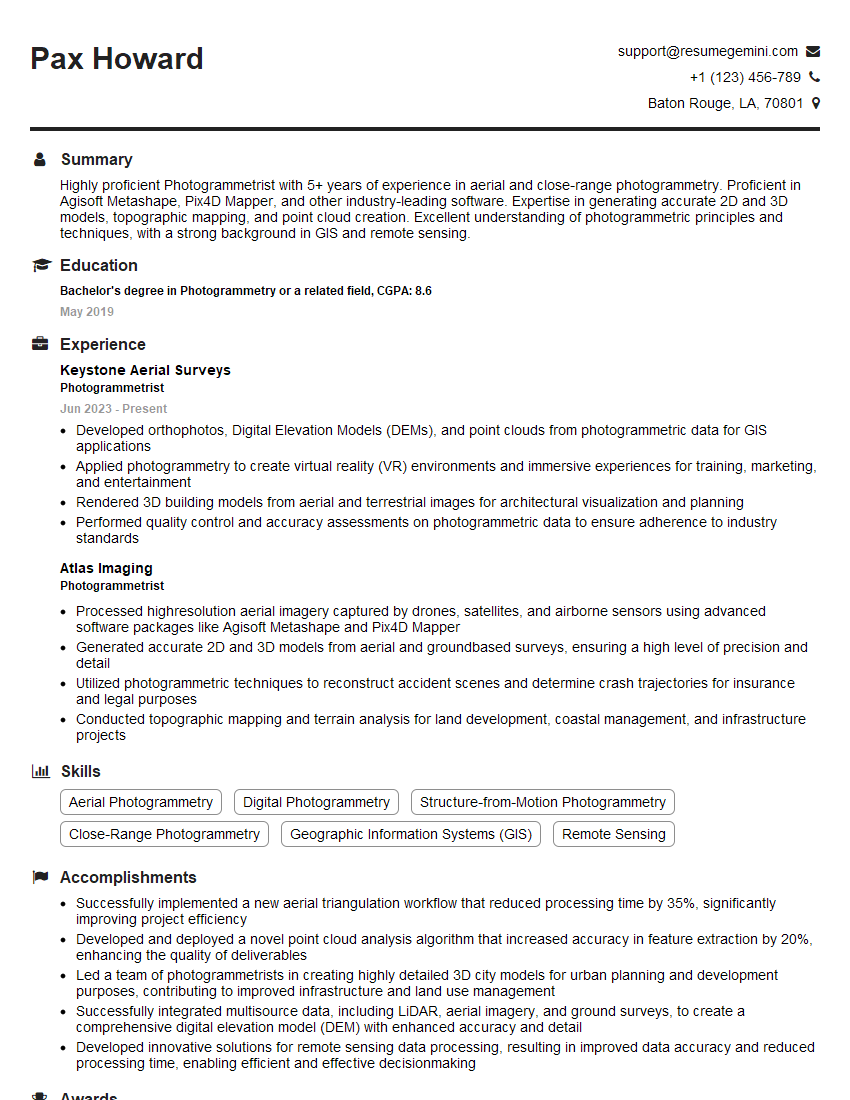

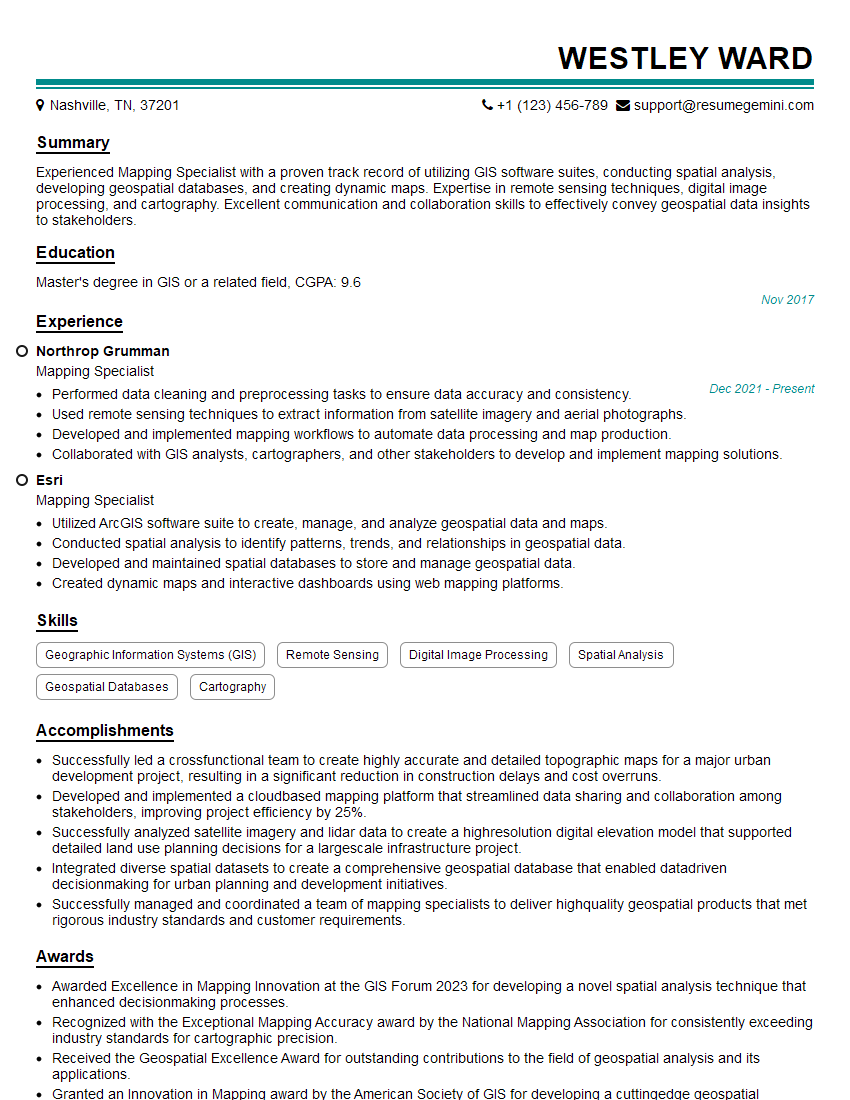

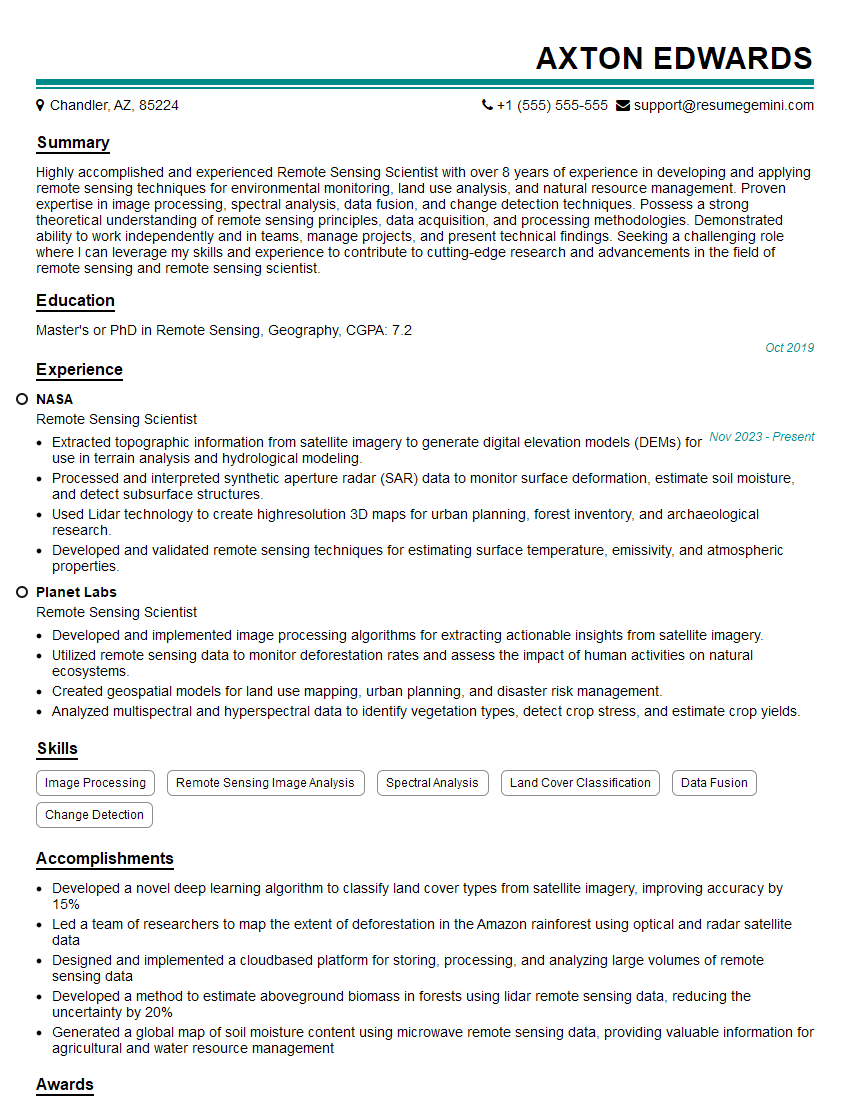

Next Steps: Unlock Your GPS/GIS Career

Mastering GPS/GIS opens doors to exciting and impactful careers. To maximize your job prospects, create a compelling, ATS-friendly resume that showcases your skills and experience. ResumeGemini is a trusted resource to help you build a professional resume that stands out. They offer examples of resumes tailored to the GPS/GIS field to give you a head start. Invest time in crafting a strong resume – it’s your first impression with potential employers.
