Interviews are more than a Q&A session: they’re a chance to prove your worth. This blog covers essential interview questions on software development and scripting experience, with expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Interview Questions on Software Development and Scripting Experience

Q 1. Explain the difference between procedural and object-oriented programming.

Procedural programming and object-oriented programming (OOP) represent fundamentally different approaches to structuring software. Procedural programming focuses on procedures or functions that operate on data. Think of it like a recipe: you have a set of instructions (functions) that act on ingredients (data). Object-oriented programming, on the other hand, organizes code around ‘objects’ that encapsulate both data and the functions that manipulate that data. These objects interact with each other to accomplish tasks.

Procedural Programming:

- Data and functions are separate.

- Emphasis on procedures and how data is processed.

- Often uses global variables, which can lead to maintainability issues.

- Example (pseudocode):

function calculateArea(length, width) { return length * width; }

Object-Oriented Programming:

- Data and functions are bundled together within objects.

- Emphasis on objects and their interactions.

- Uses concepts like encapsulation, inheritance, and polymorphism to improve code organization and reusability.

- Example (pseudocode):

    class Rectangle {
      constructor(length, width) {
        this.length = length;
        this.width = width;
      }

      calculateArea() {
        return this.length * this.width;
      }
    }

In essence, OOP promotes modularity, reusability, and maintainability, which often makes it a better fit for large, complex projects. Procedural programming is often simpler for smaller projects but can become unwieldy as complexity increases.
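To make the contrast concrete, here is a minimal Python sketch of the same area calculation written both ways, with a second shape added to show polymorphism. The names `Shape`, `Circle`, and `total_area` are illustrative assumptions, not part of any library.

```python
import math


# Procedural style: data is passed to a free-standing function.
def calculate_area(length, width):
    return length * width


# Object-oriented style: each object carries its own data and behavior.
class Shape:
    def area(self):
        raise NotImplementedError


class Rectangle(Shape):
    def __init__(self, length, width):
        self.length = length
        self.width = width

    def area(self):
        return self.length * self.width


class Circle(Shape):
    def __init__(self, radius):
        self.radius = radius

    def area(self):
        return math.pi * self.radius ** 2


def total_area(shapes):
    # Polymorphism: the caller does not need to know each concrete type.
    return sum(shape.area() for shape in shapes)


print(calculate_area(3, 4))  # 12
print(total_area([Rectangle(3, 4), Circle(1)]))
```

Adding a new shape only requires a new class with an `area` method; `total_area` stays unchanged, which is the kind of reusability the OOP bullet points describe.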

Q 2. What are the advantages and disadvantages of using interpreted vs. compiled languages?

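Interpreted and compiled languages differ primarily in how the source code is executed. Compiled languages translate the entire source code into machine code ahead of time, before execution, while interpreted languages are run by an interpreter at runtime, statement by statement, without a separate build step. Strictly speaking, this is a property of a language’s implementation rather than of the language itself.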

Compiled Languages (e.g., C, C++):

- Advantages: Faster execution speed, better performance for computationally intensive tasks, often produce smaller and more efficient executables.

- Disadvantages: Slower edit-and-test cycle because of the compilation step, platform-dependent executables (you may need to compile separately for each operating system and CPU architecture), and slower debugging iterations because every code change requires a recompile.

Interpreted Languages (e.g., Python, JavaScript):

- Advantages: Faster development cycle as there’s no separate compilation step, platform-independent (as long as an interpreter is available), easier debugging, and more flexibility from dynamic language features such as dynamic typing.

- Disadvantages: Slower execution speed, often requires more resources during runtime, typically less efficient for resource-intensive applications.

The choice between compiled and interpreted languages depends on the project requirements. For performance-critical applications, compiled languages are usually preferred. For rapid prototyping or scripting tasks, interpreted languages are generally more suitable. Many modern languages blend both approaches: Java, for example, compiles source code to bytecode, which the JVM then interprets and just-in-time (JIT) compiles to machine code.
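The blending of both approaches can be observed directly in Python. The sketch below assumes CPython, the reference implementation, which first compiles source text to bytecode and then executes that bytecode in its virtual machine. The expression and variable names are made up for illustration.

```python
import dis
import types

source = "price * quantity"

# Step 1: CPython compiles the source text into a bytecode object.
code = compile(source, "<example>", "eval")

# Step 2: the interpreter's virtual machine executes that bytecode.
result = eval(code, {"price": 4, "quantity": 3})

print(isinstance(code, types.CodeType))  # True
print(result)                            # 12

# Show the bytecode instructions; exact opcodes vary by Python version.
dis.dis(code)
```

So even a language usually described as interpreted has a compilation step; it simply happens automatically at runtime rather than as a separate build.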

Q 3. Describe your experience with version control systems (e.g., Git).

I have extensive experience using Git for version control. I’m proficient in all core Git functionalities, including branching, merging, rebasing, and resolving conflicts. I utilize Git on a daily basis for individual and collaborative projects. In collaborative settings, I’m comfortable using Git workflows like Gitflow or GitHub Flow.

My typical workflow involves frequent commits with descriptive messages, utilizing branches for feature development or bug fixes, and performing regular pull requests for code reviews before merging changes into the main branch. I’m adept at using Git’s command-line interface as well as various GUI clients. I’ve utilized Git for managing projects of varying scales, from small personal scripts to large-scale enterprise applications. One project involved resolving a complex merge conflict on a large codebase by carefully analyzing the changes and selectively merging sections of code to avoid data loss.

Q 4. How do you handle debugging in your development process?

My debugging process is systematic and iterative. It generally starts with reproducing the issue consistently. I then leverage a range of tools and techniques. I heavily rely on print statements or logging tools for tracking the program’s flow and variable values. Integrated Development Environments (IDEs) are invaluable with their debugging capabilities: breakpoints, step-through execution, and variable inspection. Using a debugger allows me to pinpoint the exact line of code causing the problem. For more complex issues, I employ techniques like binary search to narrow down the problematic code section or utilize profiling tools to identify performance bottlenecks.

Beyond the technical aspects, I find that clear and well-structured code drastically reduces debugging time. Writing unit tests upfront also helps catch bugs early in the development process. When faced with challenging bugs, I often collaborate with team members, sharing information and brainstorming potential solutions. In essence, my debugging approach emphasizes both systematic techniques and proactive coding practices.
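As a rough illustration of the binary search technique mentioned above, the sketch below finds the first failing item in an ordered sequence by halving the search range. It is the same idea that `git bisect` applies to commit history. The function name and the sample data are hypothetical, and the approach assumes everything before the culprit passes and everything from the culprit onward fails.

```python
def first_failing(items, passes):
    """Return the index of the first item for which passes(item) is False.

    Assumes every item before the culprit passes and every item from the
    culprit onward fails, which is what makes halving the range valid.
    Returns None when nothing fails.
    """
    low, high = 0, len(items)
    while low < high:
        mid = (low + high) // 2
        if passes(items[mid]):
            low = mid + 1  # culprit is later
        else:
            high = mid     # culprit is here or earlier
    return low if low < len(items) else None


# Hypothetical history: builds from version 6 onward are broken.
versions = list(range(1, 11))
print(first_failing(versions, lambda v: v < 6))  # 5 (the index of version 6)
```

With ten candidates this takes at most four checks instead of ten, and the saving grows quickly with longer histories.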

Q 5. What are your preferred scripting languages and why?

My preferred scripting languages are Python and Bash.

Python: I find Python’s readability and versatility incredibly valuable. Its extensive libraries (like NumPy, Pandas, and scikit-learn) make it highly suitable for data analysis, machine learning, and scripting tasks. Its clean syntax reduces development time and improves code maintainability. I’ve used Python extensively for automating tasks, building web applications, and creating data analysis pipelines.

Bash: Bash, the default shell on many Linux distributions, is invaluable for automating system administration tasks. Its power lies in its ability to easily integrate with various command-line tools and its scripting capabilities for managing files, processes, and system configurations. I frequently use Bash for automating deployments, managing servers, and streamlining workflows in my development process.

My preference stems from the practical benefits these languages offer in terms of efficiency, readability, and widespread community support. Each has unique strengths that complement each other in various development contexts.

Q 6. Explain the concept of RESTful APIs.

REST (Representational State Transfer) is an architectural style for designing networked applications, and APIs that follow it are called RESTful. They use standard HTTP methods (GET, POST, PUT, PATCH, DELETE) to interact with resources, each of which is identified by a unique URI (Uniform Resource Identifier). The communication is stateless, meaning each request contains all the information needed to process it, which is crucial for scalability and maintainability.

Key characteristics of a RESTful API:

- Client-server architecture: The client (e.g., a web browser or mobile app) sends requests to the server, which processes the request and sends back a response.

- Stateless communication: Each request is independent of previous requests.

- Cacheable responses: Responses can be cached to improve performance.

- Uniform interface: A consistent way of interacting with resources through standard HTTP methods.

- Layered system: The API can be composed of multiple layers, providing flexibility and scalability.

- Code on demand (optional): The server can extend client functionality by transferring executable code.

RESTful APIs are ubiquitous in modern web development, enabling seamless communication between different applications and services. They form the backbone of many web-based interactions, facilitating features such as fetching data, updating information, and processing transactions.
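The mapping from HTTP methods and URIs to operations on resources can be sketched without any web framework. The following is a simplified, in-memory illustration only: the `books` resource, the `handle` function, and the response shapes are assumptions made for this example, and a real service would sit behind an HTTP server and a database.

```python
import json

# In-memory stand-in for a database; keys are resource IDs.
books = {1: {"title": "Example Book"}}


def handle(method, path, body=None):
    """Map an HTTP-style request to a (status_code, response_body) pair."""
    parts = path.strip("/").split("/")
    if parts[0] != "books":
        return 404, {"error": "unknown resource"}

    if len(parts) == 1:  # collection URI: /books
        if method == "GET":
            return 200, list(books.values())
        if method == "POST":
            new_id = max(books, default=0) + 1
            books[new_id] = json.loads(body)
            return 201, {"id": new_id}
        return 405, {"error": "method not allowed"}

    # Item URI: /books/<id>
    if not parts[1].isdigit() or int(parts[1]) not in books:
        return 404, {"error": "not found"}
    book_id = int(parts[1])
    if method == "GET":
        return 200, books[book_id]
    if method == "PUT":
        books[book_id] = json.loads(body)
        return 200, books[book_id]
    if method == "DELETE":
        del books[book_id]
        return 204, None
    return 405, {"error": "method not allowed"}


print(handle("GET", "/books/1"))
print(handle("POST", "/books", '{"title": "Another Book"}'))
print(handle("DELETE", "/books/1"))
print(handle("GET", "/books/1"))
```

Each call carries everything needed to process it (method, URI, body), which mirrors the stateless constraint: the handler keeps no per-client session between requests.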

Q 7. Describe your experience with database technologies (e.g., SQL, NoSQL).

My experience with database technologies encompasses both SQL and NoSQL databases. I’m proficient in SQL, having worked extensively with relational databases like PostgreSQL and MySQL. I have experience designing database schemas, writing complex queries (including joins, subqueries, and window functions), optimizing query performance, and managing database security. I’ve used SQL in various projects, from simple data storage to complex data warehousing solutions.

In the realm of NoSQL databases, I have experience with MongoDB and Cassandra. I understand the benefits and limitations of NoSQL databases and when they are appropriate compared to SQL databases. I’ve used NoSQL databases for projects requiring high scalability, flexibility, and handling large volumes of unstructured or semi-structured data. For example, I used MongoDB for a project requiring rapid ingestion and retrieval of large-scale social media data, while Cassandra was leveraged for a project needing high availability and fault tolerance.

My database expertise includes not only the technical skills but also the understanding of when to select the most appropriate database technology based on the specific needs of the project. This involves considering factors such as data structure, query patterns, scalability requirements, and performance needs.

Q 8. How do you ensure code quality and maintainability?

Ensuring code quality and maintainability is paramount for long-term software success. It’s not just about writing code that works; it’s about writing code that’s easy to understand, modify, and extend. This involves a multi-faceted approach:

Coding Standards and Style Guides: Adhering to a consistent coding style (e.g., PEP 8 for Python) makes code readable and understandable by everyone on the team. This reduces ambiguity and improves collaboration.

Code Reviews: Having another developer review your code before it’s merged into the main branch catches bugs early and ensures adherence to standards. It’s also a great learning opportunity.

Testing: Comprehensive testing, including unit, integration, and system tests, is crucial. Tests act as documentation and ensure that changes don’t break existing functionality. I employ Test-Driven Development (TDD) whenever feasible, writing tests *before* writing the code they’re intended to verify.

Version Control (Git): Using a version control system like Git allows for easy tracking of changes, collaboration, and rollback to previous versions if needed. Meaningful commit messages are essential for understanding the evolution of the codebase.

Documentation: Clear and concise documentation is vital. This includes comments within the code itself, explaining complex logic, and external documentation explaining the system’s architecture and usage.

Refactoring: Regularly reviewing and refactoring code to improve its structure and readability is a proactive measure to prevent technical debt from accumulating. This ensures the code remains maintainable over time.

For example, in a recent project, we implemented a rigorous code review process using pull requests on GitHub. This significantly reduced the number of bugs found in production and improved the overall quality of the codebase.

Q 9. Explain the difference between a stack and a queue data structure.

Stacks and queues are both fundamental data structures used to store and manage collections of elements, but they differ significantly in how elements are added and removed.

Stack (LIFO): A stack operates on the Last-In, First-Out (LIFO) principle. Imagine a stack of plates; you can only add a new plate to the top, and you can only remove the top plate. The most recently added element is the first to be removed. Common stack operations include push (add an element) and pop (remove an element). Stacks are used in function calls (managing the call stack), undo/redo functionality, and expression evaluation.

Queue (FIFO): A queue operates on the First-In, First-Out (FIFO) principle. Think of a queue at a grocery store; the first person in line is the first person served. The element added first is the first to be removed. Common queue operations are enqueue (add an element) and dequeue (remove an element). Queues are used in managing tasks, handling requests (e.g., in a web server), and implementing breadth-first search algorithms.

Here’s a simple Python example illustrating the difference:

    import collections

    # Stack: a list supports push and pop at its end.
    stack = []
    stack.append(1)  # push
    stack.append(2)
    stack.append(3)
    print(stack.pop())  # Output: 3

    # Queue: a deque supports efficient removal from the left end.
    queue = collections.deque()
    queue.append(1)  # enqueue
    queue.append(2)
    queue.append(3)
    print(queue.popleft())  # Output: 1

Q 10. What is the purpose of a design pattern?

Design patterns are reusable solutions to commonly occurring problems in software design. They are not finished code implementations but rather templates or blueprints that provide a general structure and best practices for solving specific design challenges. They promote code reusability, readability, and maintainability.

The purpose of a design pattern is to offer a well-tested, proven approach to a recurring design problem, avoiding reinventing the wheel. They also enhance communication among developers, as everyone understands the implications of using a particular pattern (e.g., Singleton, Factory, Observer).

For instance, the Singleton pattern ensures that only one instance of a class is created, which is useful for managing resources or controlling access to a specific object. The Factory pattern provides a way to create objects without specifying their concrete classes, making the code more flexible and extensible.

Using design patterns helps to create more robust, scalable, and maintainable systems by leveraging established solutions and best practices. In a recent project, employing the Model-View-Controller (MVC) pattern significantly simplified the development and maintenance of our web application’s user interface.
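As an illustration of the Factory pattern described above, here is a minimal Python sketch. The exporter classes and the `make_exporter` function are hypothetical names chosen for this example; the point is only that calling code asks for an object by format name and never references a concrete class.

```python
import json


class JsonExporter:
    def export(self, data):
        return json.dumps(data, sort_keys=True)


class CsvExporter:
    def export(self, data):
        # Assumes a flat dict; emits one header row and one value row.
        keys = sorted(data)
        return ",".join(keys) + "\n" + ",".join(str(data[k]) for k in keys)


# The factory maps a format name to a concrete class.
_EXPORTERS = {"json": JsonExporter, "csv": CsvExporter}


def make_exporter(fmt):
    try:
        return _EXPORTERS[fmt]()
    except KeyError:
        raise ValueError(f"unsupported format: {fmt}") from None


record = {"id": 7, "name": "widget"}
print(make_exporter("json").export(record))  # {"id": 7, "name": "widget"}
print(make_exporter("csv").export(record))
```

Supporting a new format means adding one class and one dictionary entry; code that calls `make_exporter` does not change.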

Q 11. Describe your experience with agile development methodologies.

I have extensive experience with Agile development methodologies, primarily Scrum and Kanban. I’ve participated in numerous projects using these frameworks, taking on various roles including developer, team lead, and scrum master.

In Scrum, I’m comfortable with sprint planning, daily stand-ups, sprint reviews, and retrospectives. I understand the importance of iterative development, frequent feedback, and adapting to changing requirements. I’ve found that the short iteration cycles (sprints) provide a mechanism for frequent checkpoints and continuous improvement.

With Kanban, I’ve focused on visualizing workflow, limiting work in progress (WIP), and continuously optimizing the flow of tasks. The flexibility of Kanban has proved useful in projects with evolving priorities and unpredictable demands.

My experience with Agile has taught me the value of collaboration, communication, and continuous improvement. I’ve found that Agile methodologies facilitate the creation of high-quality software that meets customer needs effectively.

For example, in one project using Scrum, we successfully delivered a complex feature set on time and within budget through consistent sprint planning, daily communication, and proactive problem-solving.

Q 12. Explain your understanding of software testing methodologies.

Software testing methodologies are crucial for ensuring the quality and reliability of software. They involve a systematic approach to identifying and resolving defects before the software is released. I’m familiar with various testing methodologies, including:

Unit Testing: Testing individual components or modules of the software in isolation. This helps identify defects early and ensures the correct functioning of individual units.

Integration Testing: Testing the interaction between different modules or components of the software to ensure they work together correctly.

System Testing: Testing the entire system as a whole to ensure it meets the specified requirements.

Acceptance Testing: Testing the software against the customer’s requirements to ensure it meets their expectations and is acceptable for use.

Regression Testing: Retesting the software after making changes to ensure that new changes haven’t introduced new bugs or broken existing functionality.

Black Box Testing: Testing the software without knowledge of its internal workings, focusing solely on its inputs and outputs.

White Box Testing: Testing the software with knowledge of its internal structure and workings.

I believe in a multi-layered approach to testing, combining different methodologies to ensure thorough coverage. Automated testing is essential for regression testing and ensuring consistent quality throughout the development lifecycle. I have experience using various testing frameworks such as JUnit, pytest, and Selenium.
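A unit test in practice can be as small as the sketch below. It uses Python’s built-in `unittest` module rather than pytest so that it runs without extra installs; the `apply_discount` function and its rules are invented purely for illustration.

```python
import unittest


def apply_discount(price, percent):
    """Return price reduced by percent; reject percentages outside 0-100."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_returns_original_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


# Run the tests programmatically so the script continues afterwards.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(result.wasSuccessful())
```

Each test exercises one behavior in isolation, including an error case, so a later change that breaks any of them is caught the next time the suite runs. That is what makes such tests useful for regression testing.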

Q 13. How do you handle conflicts within a team environment?

Handling conflicts within a team environment is a crucial skill. My approach focuses on open communication, active listening, and finding mutually agreeable solutions. I believe in addressing conflicts directly and constructively, rather than avoiding them.

My strategy typically involves:

Understanding the root cause: Before attempting to resolve a conflict, I strive to understand the underlying reasons for the disagreement. This often involves actively listening to all parties involved.

Open communication: Creating a safe space where everyone feels comfortable expressing their opinions and concerns is critical. I encourage respectful dialogue and avoid interrupting or making judgmental statements.

Finding common ground: Focusing on shared goals and objectives helps to de-escalate the conflict and identify potential areas of compromise.

Collaborative problem-solving: Working together to brainstorm solutions that address the concerns of all parties involved. This may involve compromising or finding creative solutions.

Mediation (if necessary): In situations where I am unable to resolve a conflict independently, I may seek the help of a more experienced mediator or team lead.

In a past project, a disagreement arose regarding the best approach to implement a critical feature. By facilitating open discussion and collaboration, we identified a hybrid solution that incorporated the strengths of both approaches, ultimately resulting in a superior solution.

Q 14. What is your experience with cloud platforms (e.g., AWS, Azure, GCP)?

I have experience working with several cloud platforms, including AWS (Amazon Web Services), Azure (Microsoft Azure), and GCP (Google Cloud Platform). My experience spans various services, including:

Compute: Deploying and managing virtual machines (VMs) using EC2 (AWS), Virtual Machines (Azure), and Compute Engine (GCP).

Storage: Utilizing object storage (S3, Azure Blob Storage, Cloud Storage), and managed databases (RDS, Azure SQL Database, Cloud SQL).

Networking: Configuring virtual networks, load balancers, and firewalls.

Serverless Computing: Developing and deploying serverless functions using Lambda (AWS), Azure Functions, and Cloud Functions (GCP).

I’m proficient in using the command-line interfaces (CLIs) and management consoles of these platforms. I understand the importance of security best practices when working with cloud environments, such as IAM (Identity and Access Management) and network security groups. My experience allows me to select the appropriate cloud services for specific project needs, optimizing for cost, performance, and scalability.

For example, in a recent project, we migrated a legacy on-premise application to AWS, leveraging EC2, S3, and RDS. This migration resulted in improved scalability, reduced infrastructure costs, and enhanced reliability.

Q 15. Describe your experience with containerization technologies (e.g., Docker, Kubernetes).

Containerization technologies like Docker and Kubernetes have revolutionized software development and deployment. Docker provides a lightweight, portable way to package applications and their dependencies into containers, ensuring consistent execution across different environments. Think of it like a standardized shipping container for your software – it guarantees the software inside will run the same way regardless of where the ship (your server) is docked.

Kubernetes, on the other hand, orchestrates the deployment, scaling, and management of containerized applications. It’s like a sophisticated port authority managing many Docker containers across a fleet of servers, ensuring efficient resource utilization and high availability. I’ve extensively used Docker to build and ship microservices, creating consistent development and production environments. My Kubernetes experience includes deploying and managing complex applications using deployments, services, and ingress controllers, optimizing for performance and scalability. For example, I used Kubernetes to manage a cluster of microservices for an e-commerce application, automatically scaling up during peak shopping periods and scaling down during less busy times, significantly reducing infrastructure costs.

In one project, I leveraged Docker Compose to define and manage multi-container applications, simplifying the development workflow and making it easier for team members to reproduce the application’s environment locally. This drastically improved collaboration and reduced discrepancies between development and production.

Q 16. What is your approach to problem-solving when faced with a complex coding challenge?

My approach to complex coding challenges involves a structured, iterative process. First, I thoroughly understand the problem, breaking it down into smaller, manageable sub-problems. I use techniques like drawing diagrams or writing pseudo-code to visualize the problem’s structure and potential solutions. This helps me identify dependencies and potential pitfalls early on. Then, I develop a solution for each sub-problem, testing and validating each piece independently before integrating them into a complete solution.

I employ a test-driven development (TDD) approach whenever feasible, writing unit tests to verify the functionality of each component. This not only ensures the correctness of the code but also serves as documentation and aids in refactoring. Throughout the process, I continuously evaluate my progress, adapting my approach as needed. If I encounter roadblocks, I don’t hesitate to seek help from colleagues or online resources. It’s a collaborative effort, and leveraging the collective knowledge of the team is crucial for solving challenging problems efficiently and effectively. For instance, recently, I tackled a performance bottleneck in a data processing pipeline by systematically profiling the code, identifying the slowest parts, and implementing optimized algorithms and data structures.
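The profile-then-optimize loop from the pipeline example can be sketched with Python’s standard `cProfile` and `pstats` modules. The two deduplication functions and the sample data are hypothetical stand-ins for a real bottleneck and its fix.

```python
import cProfile
import io
import pstats


def slow_unique(values):
    # Quadratic: each membership test scans the whole list.
    seen = []
    for v in values:
        if v not in seen:
            seen.append(v)
    return seen


def fast_unique(values):
    # Linear: dict keys keep insertion order and have fast lookups.
    return list(dict.fromkeys(values))


data = [i % 500 for i in range(20_000)]

profiler = cProfile.Profile()
profiler.enable()
slow_result = slow_unique(data)
fast_result = fast_unique(data)
profiler.disable()

# Collect the five most expensive entries by cumulative time.
report = io.StringIO()
pstats.Stats(profiler, stream=report).sort_stats("cumulative").print_stats(5)
print(report.getvalue())
```

The report should show `slow_unique` dominating the runtime, which points at the data structure choice rather than at the surrounding code. Both functions return the same result, so the faster one is a safe replacement.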

Q 17. How do you stay up-to-date with the latest technologies and trends in software development?

Staying current in the ever-evolving world of software development requires a multi-pronged approach. I actively participate in online communities, such as Stack Overflow and Reddit’s programming subreddits, engaging in discussions and learning from experienced developers. I regularly read technical blogs and articles from reputable sources like Medium and InfoQ, focusing on areas relevant to my current projects and career goals. Attending webinars and online courses on platforms like Coursera and Udemy keeps me abreast of new technologies and best practices.

Furthermore, I actively contribute to open-source projects on GitHub. This hands-on experience exposes me to different coding styles, problem-solving approaches, and the latest technologies used in real-world applications. Finally, I make it a point to attend industry conferences and workshops whenever possible, networking with peers and learning from experts in the field. For example, I recently completed a course on serverless computing and implemented a proof-of-concept project utilizing AWS Lambda, expanding my skillset and broadening my understanding of cloud-native architectures.

Q 18. Explain your understanding of SOLID principles.

SOLID principles are a set of five design principles intended to make software designs more understandable, flexible, and maintainable. They are:

- Single Responsibility Principle (SRP): A class should have only one reason to change. This promotes modularity and reduces complexity.

- Open/Closed Principle (OCP): Software entities (classes, modules, functions, etc.) should be open for extension, but closed for modification. This prevents unintended side effects when adding new features.

- Liskov Substitution Principle (LSP): Subtypes should be substitutable for their base types without altering the correctness of the program. This ensures that inheritance is used correctly.

- Interface Segregation Principle (ISP): Clients should not be forced to depend upon interfaces they don’t use. This improves the cohesion of interfaces and reduces unnecessary dependencies.

- Dependency Inversion Principle (DIP): High-level modules should not depend on low-level modules. Both should depend on abstractions. Abstractions should not depend on details. Details should depend on abstractions. This promotes loose coupling and improves testability.

I apply these principles consistently in my designs, striving for modularity, maintainability, and extensibility. For example, when designing a user authentication system, I would separate the user interface from the authentication logic, adhering to the SRP. I would also use interfaces to abstract the authentication method, allowing me to easily add new authentication providers (e.g., OAuth, SAML) without modifying existing code, demonstrating the OCP.
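The authentication example above might look roughly like this in Python. Every class name here is hypothetical, and the credential checks are deliberately simplistic (a real system would hash passwords and validate tokens properly). The sketch only aims to show the Single Responsibility, Open/Closed, and Dependency Inversion principles working together.

```python
from typing import Protocol


class AuthProvider(Protocol):
    """Abstraction that high-level code depends on (DIP)."""

    def authenticate(self, credentials: dict) -> bool: ...


class PasswordProvider:
    def __init__(self, users: dict):
        # Plain-text passwords only to keep the sketch short.
        self._users = users

    def authenticate(self, credentials: dict) -> bool:
        expected = self._users.get(credentials.get("username"))
        return expected is not None and expected == credentials.get("password")


class TokenProvider:
    def __init__(self, valid_tokens: set):
        self._valid_tokens = valid_tokens

    def authenticate(self, credentials: dict) -> bool:
        return credentials.get("token") in self._valid_tokens


class LoginService:
    """Single responsibility: orchestrate login, not verify credentials."""

    def __init__(self, provider: AuthProvider):
        self._provider = provider

    def login(self, credentials: dict) -> str:
        return "welcome" if self._provider.authenticate(credentials) else "denied"


password_login = LoginService(PasswordProvider({"alice": "s3cret"}))
token_login = LoginService(TokenProvider({"abc123"}))

print(password_login.login({"username": "alice", "password": "s3cret"}))  # welcome
print(token_login.login({"token": "wrong"}))                              # denied
```

Adding `TokenProvider` required no change to `LoginService`, which is the Open/Closed Principle in action. Because `LoginService` depends only on the `AuthProvider` abstraction, it can also be tested with a trivial fake provider.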

Q 19. What are your experiences with different software development life cycles (SDLC)?

My experience spans several software development life cycle (SDLC) models and practices, including Waterfall, Agile (Scrum and Kanban), and DevOps. Waterfall, with its sequential phases, is suitable for projects with well-defined requirements and minimal changes. However, its rigidity makes it less adaptable to evolving requirements. Agile methodologies, such as Scrum and Kanban, are better suited for iterative development and embrace change. Scrum uses sprints and daily stand-ups to ensure continuous progress and collaboration, while Kanban focuses on visualizing workflow and limiting work in progress.

I’ve worked extensively with Agile methodologies, utilizing Scrum in several projects. The iterative nature of Scrum allows for continuous feedback and adaptation, resulting in higher quality products and improved customer satisfaction. DevOps focuses on automation and collaboration between development and operations teams, enabling faster and more reliable deployments. I’ve incorporated DevOps principles into my workflow, using tools such as Jenkins and GitLab CI/CD for continuous integration and continuous delivery, leading to quicker releases and reduced deployment risks. My experience with these different SDLCs allows me to choose the most appropriate methodology for each project, based on its specific requirements and context.

Q 20. Describe your experience with different types of testing (unit, integration, system).

I have extensive experience with different types of software testing, including unit, integration, and system testing. Unit testing verifies the functionality of individual components or modules in isolation. I typically use frameworks like JUnit or pytest, ensuring high test coverage and early detection of bugs. Integration testing focuses on verifying the interaction between different components or modules, ensuring that they work together as expected. System testing is performed on the entire system, validating that all components function correctly as a whole and meet the specified requirements.

My approach emphasizes a multi-layered testing strategy, combining unit, integration, and system tests to achieve comprehensive coverage. Automated testing is crucial for ensuring efficiency and consistency. I employ various testing techniques, such as black-box and white-box testing, depending on the specific needs of the project. For example, in a recent project, I implemented unit tests for each function in a data processing module, ensuring that each function performed its intended task correctly. I then proceeded with integration testing to verify that the modules interacted correctly, followed by system testing to ensure the overall system met the defined specifications. This comprehensive testing approach helped identify and resolve bugs early in the development cycle, improving the quality and reliability of the software.

Q 21. How do you handle time constraints and tight deadlines?

Handling time constraints and tight deadlines requires a proactive and organized approach. My strategy involves prioritizing tasks based on their importance and urgency, focusing on the critical path to meet the deadlines. I utilize project management tools to track progress, identify potential bottlenecks, and adjust the schedule as needed. Effective communication with stakeholders is paramount to ensure everyone is aligned on priorities and expectations.

I’m adept at breaking down complex tasks into smaller, manageable units, allowing for incremental progress and better risk management. I’m not afraid to ask for help when needed, leveraging the skills and expertise of team members to ensure efficient task completion. Furthermore, I actively seek ways to optimize the development process, using automation and efficient coding practices to reduce development time without compromising quality. For example, I might use code generation tools or build scripts to automate repetitive tasks. In a recent project with a tight deadline, I successfully used this strategy to deliver the project on time and within budget. Effective time management, clear communication, and a willingness to adapt are crucial for successfully navigating tight deadlines.

Q 22. Explain your experience with using different IDEs or code editors.

Throughout my career, I’ve worked with a variety of IDEs and code editors, each suited to different tasks and programming languages. My experience spans from heavyweight IDEs like IntelliJ IDEA (primarily for Java and Kotlin development) and Visual Studio (for C# and .NET projects), to lighter-weight options such as VS Code (highly versatile, used extensively for JavaScript, Python, and many other languages), and Sublime Text (favored for its speed and customization options, particularly for scripting and quick edits).

Choosing the right IDE or editor often depends on the project’s scale, the programming language used, and personal preference. For instance, IntelliJ IDEA’s robust refactoring tools and intelligent code completion are invaluable for large Java projects, while VS Code’s extensive extension ecosystem makes it adaptable to nearly any development workflow. Sublime Text’s speed and minimal footprint are advantageous when working with resource-constrained systems or when rapid prototyping is critical. My proficiency in these tools allows me to seamlessly transition between projects with diverse requirements.

I’m also comfortable with terminal-based editors like Vim and Emacs. This proficiency is essential for debugging on remote servers or for quick edits where a full IDE isn’t necessary.

Q 23. What is your understanding of security best practices in software development?

Security best practices in software development are paramount. They encompass a holistic approach, starting from the design phase and continuing through development, testing, and deployment. Key aspects include:

- Secure coding practices: This involves avoiding common vulnerabilities like SQL injection, cross-site scripting (XSS), and cross-site request forgery (CSRF). Regular code reviews and the use of static and dynamic analysis tools are crucial for identifying potential weaknesses. For example, using parameterized queries instead of directly embedding user input into SQL statements prevents SQL injection attacks.

- Input validation and sanitization: Thoroughly validating and sanitizing all user inputs before using them in the application is crucial. This reduces the risk of malicious data compromising the system.

- Authentication and authorization: Implementing robust authentication mechanisms to verify user identity and authorization mechanisms to control access to resources is non-negotiable. This includes using strong passwords, multi-factor authentication, and role-based access control.

- Data protection: Protecting sensitive data involves employing encryption, both in transit and at rest. Data loss prevention (DLP) measures are also essential to monitor and prevent unauthorized data exfiltration.

- Regular security updates and patching: Keeping all software components, including the operating system, libraries, and frameworks, up-to-date with the latest security patches is vital to mitigate known vulnerabilities.

- Security testing: Regular penetration testing and vulnerability assessments are necessary to identify and address security weaknesses proactively.

In my experience, integrating security considerations throughout the software development lifecycle (SDLC) – rather than as an afterthought – is essential for building secure and reliable applications. A failure to do so can lead to severe consequences, including data breaches, financial losses, and reputational damage.
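The parameterized-query point above can be illustrated with a minimal sketch using Python's built-in `sqlite3` module. The `users` table, the function names, and the sample payload are assumptions made up for this illustration; the unsafe variant is included only for contrast.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user with a parameterized query (the ? placeholder)."""
    # The driver sends `username` as data, so it is never parsed as SQL.
    cur = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cur.fetchall()


def find_user_unsafe(conn: sqlite3.Connection, username: str):
    """Anti-pattern, shown for contrast only: input is spliced into the SQL text."""
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def demo():
    # Hypothetical in-memory table used only for this example.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    payload = "nobody' OR '1'='1"  # classic injection attempt
    return find_user(conn, payload), find_user_unsafe(conn, payload)


if __name__ == "__main__":
    safe_rows, unsafe_rows = demo()
    print("parameterized:", safe_rows)
    print("string-built: ", unsafe_rows)
```

With the parameterized version, the payload is treated as an ordinary (non-matching) username, while the string-built version lets it rewrite the WHERE clause and match every row.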

Q 24. Describe your experience with working in different programming paradigms.

I have extensive experience working with various programming paradigms. My experience includes:

- Object-Oriented Programming (OOP): I’m proficient in using OOP principles like encapsulation, inheritance, and polymorphism in languages such as Java, C#, and Python. For example, designing a banking system using OOP would involve creating classes for accounts, customers, and transactions, each with its own properties and methods, and interacting through inheritance and polymorphism.

- Procedural Programming: I understand and have used procedural programming in languages like C and Pascal. This approach is particularly useful for simpler programs where a structured, step-by-step approach is sufficient.

- Functional Programming: I have experience with functional programming concepts in languages like Python, JavaScript (using functional programming libraries), and Scala. This approach emphasizes immutability and pure functions, leading to more concise and predictable code. For example, using map, filter, and reduce functions in Python to process lists provides a clean and functional approach.

Understanding different paradigms allows me to choose the most appropriate approach for a given problem. The choice depends on factors such as project complexity, team expertise, and the specific requirements of the application. Often, a hybrid approach combining elements of different paradigms can be the most effective solution.
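As a rough illustration of the hybrid approach, the sketch below combines a small class hierarchy (OOP, with one overridden method) and a `filter`/`map`/`reduce` pipeline (functional style). The `Account` and `SavingsAccount` classes, the fee values, and `total_fees` are hypothetical names invented for this example, loosely following the banking scenario mentioned above.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass
class Account:
    """Hypothetical account type; the fee amount is an arbitrary assumption."""
    owner: str
    balance: float

    def monthly_fee(self) -> float:
        return 5.0


class SavingsAccount(Account):
    """Inherits the fields of Account and overrides one behavior."""

    def monthly_fee(self) -> float:
        # Polymorphism: callers use the same method name on any account type.
        return 0.0


def total_fees(accounts) -> float:
    """Functional pipeline: keep funded accounts, map to fees, reduce to a sum."""
    funded = filter(lambda a: a.balance > 0, accounts)
    fees = map(lambda a: a.monthly_fee(), funded)
    return reduce(lambda acc, fee: acc + fee, fees, 0.0)


accounts = [
    Account("ann", 100.0),
    SavingsAccount("ben", 250.0),
    Account("cy", 0.0),
    Account("di", 40.0),
]

if __name__ == "__main__":
    print(total_fees(accounts))
```

The pipeline does not mutate the account objects, and it does not need to know which concrete account class it is handling.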

Q 25. Explain your understanding of Big O notation and its importance in algorithm analysis.

Big O notation is a mathematical notation used to describe the performance or complexity of an algorithm. It expresses an upper bound on how the runtime or space requirements grow as the input size (n) increases. It focuses on the growth rate, ignoring constant factors and lower-order terms.

For example:

- O(1) – Constant time: The algorithm’s runtime remains constant regardless of the input size. Accessing an element in an array by index is an example of O(1) complexity.

- O(log n) – Logarithmic time: The runtime increases logarithmically with the input size. Binary search in a sorted array is an example of O(log n) complexity.

- O(n) – Linear time: The runtime increases linearly with the input size. Searching for an element in an unsorted array is an example of O(n) complexity.

- O(n log n) – Linearithmic time: Merge sort and heap sort have this complexity.

- O(n^2) – Quadratic time: The runtime increases quadratically with the input size. Bubble sort and selection sort have this complexity.

- O(2^n) – Exponential time: The runtime doubles with each additional input element. Generating all subsets of a set is an example.

Understanding Big O notation is crucial for selecting efficient algorithms, particularly when dealing with large datasets. Choosing an algorithm with a lower Big O complexity can significantly improve the performance of an application. For instance, on a sorted list of one million items, a binary search (O(log n)) needs at most about 20 comparisons, whereas a linear search (O(n)) may need up to one million.
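To make the binary-versus-linear comparison concrete, here is a minimal sketch that counts how many steps each search takes on a sorted list of one million integers. The function names and the step-counting return value are choices made for this illustration, not a standard API.

```python
def linear_search(items, target):
    """Return (index, steps); steps counts the elements examined."""
    steps = 0
    for i, value in enumerate(items):
        steps += 1
        if value == target:
            return i, steps
    return -1, steps


def binary_search(items, target):
    """Return (index, steps) for a sorted list; steps counts loop iterations."""
    lo, hi = 0, len(items) - 1
    steps = 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if items[mid] == target:
            return mid, steps
        if items[mid] < target:
            lo = mid + 1  # target can only be in the upper half
        else:
            hi = mid - 1  # target can only be in the lower half
    return -1, steps


data = list(range(1_000_000))  # already sorted

if __name__ == "__main__":
    # Searching for the last element is the worst case for the linear scan.
    print("linear:", linear_search(data, 999_999))
    print("binary:", binary_search(data, 999_999))
```

The linear scan has to examine every element before it reaches the last one, while the binary search halves the remaining range on each iteration.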

Q 26. How do you optimize code for performance?

Optimizing code for performance involves a multi-faceted approach that considers various aspects of the application. Strategies include:

- Algorithmic optimization: Choosing efficient algorithms with lower Big O complexity is fundamental. For example, switching from a bubble sort (O(n^2)) to a merge sort (O(n log n)) can greatly improve performance for large datasets.

- Data structure selection: Selecting appropriate data structures based on access patterns is crucial. Using a hash table for fast lookups instead of a linear search in an array is a classic example.

- Code profiling: Using profiling tools to identify performance bottlenecks is essential. Profilers provide insights into which parts of the code consume the most time and resources, allowing developers to focus optimization efforts effectively.

- Caching: Storing frequently accessed data in a cache (like memory cache or database cache) reduces the need for repeated computations or database queries. Caching is particularly effective for read-heavy applications.

- Code refactoring: Improving code readability and efficiency through refactoring can lead to performance gains. Removing redundant calculations, optimizing loops, and reducing function call overhead are common refactoring techniques.

- Database optimization: Optimizing database queries, using appropriate indexes, and tuning the database configuration can significantly impact application performance, especially in database-intensive applications.

- Asynchronous programming: Using asynchronous operations can improve responsiveness, especially in I/O-bound operations such as network requests.
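The caching strategy from the list above can be sketched with Python's standard `functools.lru_cache` decorator. The `load_profile` function and the `CALLS` counter are hypothetical stand-ins for an expensive lookup such as a database query; the cache size of 128 is an arbitrary choice.

```python
from functools import lru_cache

# Counts how often the "expensive" body actually runs (illustration only).
CALLS = {"count": 0}


@lru_cache(maxsize=128)
def load_profile(user_id: int) -> str:
    """Hypothetical expensive lookup, standing in for a database query."""
    CALLS["count"] += 1
    return f"user-{user_id}"


if __name__ == "__main__":
    for uid in (7, 7, 8, 7):
        load_profile(uid)
    # Repeated ids are served from the cache instead of re-running the body.
    print("body executions:", CALLS["count"])
    print(load_profile.cache_info())
```

An in-process cache like this suits read-heavy data that rarely changes. Data that is updated elsewhere also needs an invalidation strategy, which this sketch does not cover.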

The optimization process is often iterative. It involves profiling, identifying bottlenecks, applying optimizations, and then re-profiling to measure the impact of the changes. This cyclical approach allows for incremental improvements in application performance.
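A simplified version of this measure, change, and re-measure loop might look like the sketch below, which swaps a list membership test for a set (hash-based) lookup and times both. The function names, data sizes, and the `timed` helper are assumptions for illustration. A real investigation would more likely start from a profiler such as the standard `cProfile` module.

```python
import time


def count_hits_list(haystack: list, needles: list) -> int:
    """Baseline: each `in` check scans the list, O(n) per needle."""
    return sum(1 for n in needles if n in haystack)


def count_hits_set(haystack: list, needles: list) -> int:
    """Optimized: build a set once, then O(1) average lookups."""
    lookup = set(haystack)
    return sum(1 for n in needles if n in lookup)


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


haystack = list(range(5_000))
needles = list(range(0, 10_000, 20))  # 500 needles, half of them present

if __name__ == "__main__":
    before, t_before = timed(count_hits_list, haystack, needles)
    after, t_after = timed(count_hits_set, haystack, needles)
    # Always confirm the optimized version still gives the same answer.
    assert before == after
    print(f"list: {t_before:.4f}s  set: {t_after:.4f}s")
```

Checking that both versions return the same result before comparing timings guards against an "optimization" that quietly changes behavior.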

Q 27. Describe your experience with different software architectures (e.g., microservices, monolithic).

My experience encompasses both monolithic and microservices architectures.

Monolithic Architecture: In monolithic architectures, all components of the application are tightly coupled and deployed as a single unit. This approach is simpler to develop and deploy initially, but scalability and maintainability can become challenges as the application grows in size and complexity. I have worked on several monolithic applications, and experienced firsthand the difficulties in maintaining them. Changes often require redeploying the entire application, even if only a small part needs updating.

Microservices Architecture: Microservices architecture breaks down the application into smaller, independent services that communicate with each other over a network. Each microservice focuses on a specific business function. This approach offers benefits such as improved scalability, fault isolation, and independent deployment of services. I’ve been involved in designing, developing, and deploying microservice-based applications. Operational complexity increases, but the advantages regarding maintainability, scalability, and flexibility are substantial.

The choice between monolithic and microservices architecture depends heavily on factors such as project scale, team size, and the complexity of the application. Smaller projects may benefit from the simplicity of a monolithic approach, while large, complex applications often benefit from the flexibility and scalability of microservices.

Key Topics to Learn for Experience with Software Development and Scripting Interviews

- Data Structures and Algorithms: Understanding fundamental data structures (arrays, linked lists, trees, graphs) and algorithms (searching, sorting, graph traversal) is crucial for efficient code design and problem-solving. Practical application includes optimizing code for speed and memory usage.

- Object-Oriented Programming (OOP): Mastering OOP principles (encapsulation, inheritance, polymorphism) is essential for building scalable and maintainable software. Practical application includes designing robust and reusable code components.

- Software Design Patterns: Familiarize yourself with common design patterns (e.g., Singleton, Factory, Observer) to build better structured and more adaptable software. Practical application includes improving code organization and reducing complexity in larger projects.

- Version Control (Git): Proficiency in Git is a must-have. Understand branching, merging, and resolving conflicts. Practical application includes collaborative coding and managing code changes effectively.

- Scripting Languages (e.g., Python, JavaScript, Bash): Develop expertise in at least one scripting language, focusing on its practical applications in automation, web development, or system administration. Practical application includes automating repetitive tasks and creating efficient workflows.

- Databases (SQL, NoSQL): Understand relational and NoSQL databases, including querying, data modeling, and database design principles. Practical application includes efficient data storage and retrieval for applications.

- Testing and Debugging: Learn different testing methodologies (unit, integration, system) and effective debugging techniques. Practical application includes ensuring code quality and identifying and resolving errors efficiently.

- Problem-Solving and Communication: Practice articulating your thought process clearly and effectively. Demonstrate your problem-solving skills by breaking down complex problems into smaller, manageable parts.

Next Steps

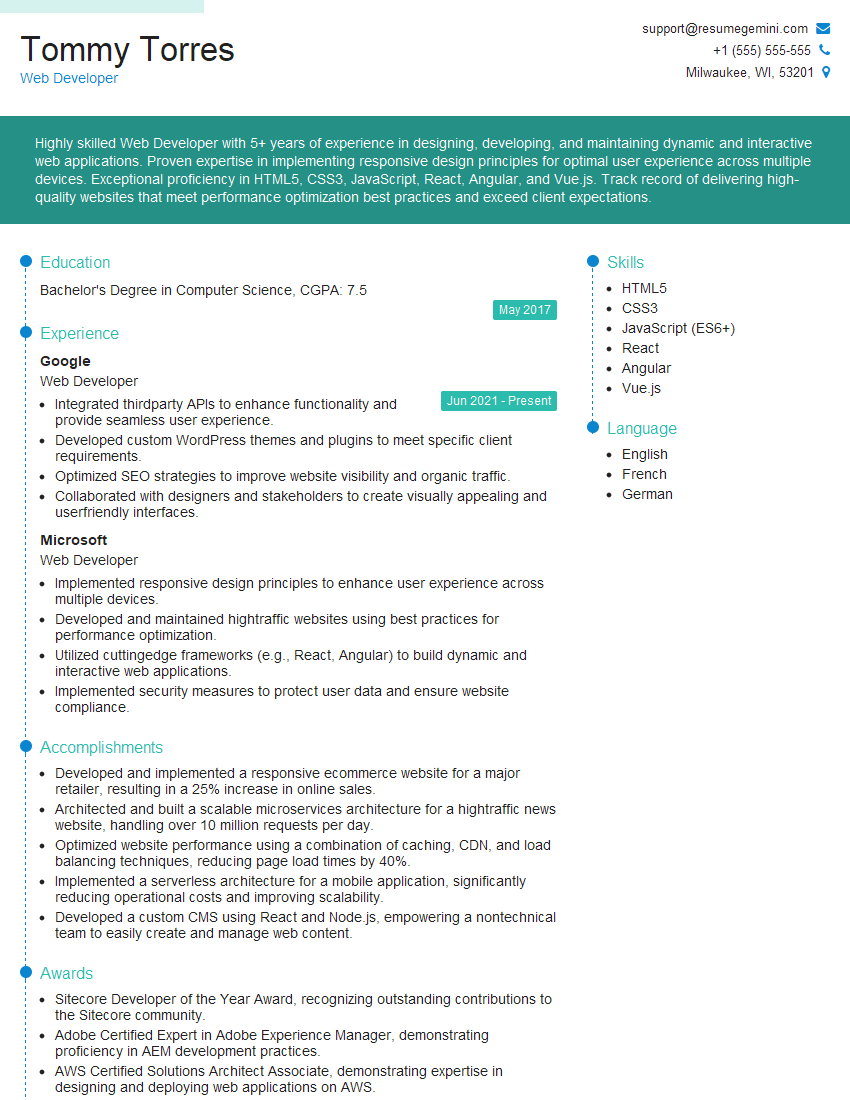

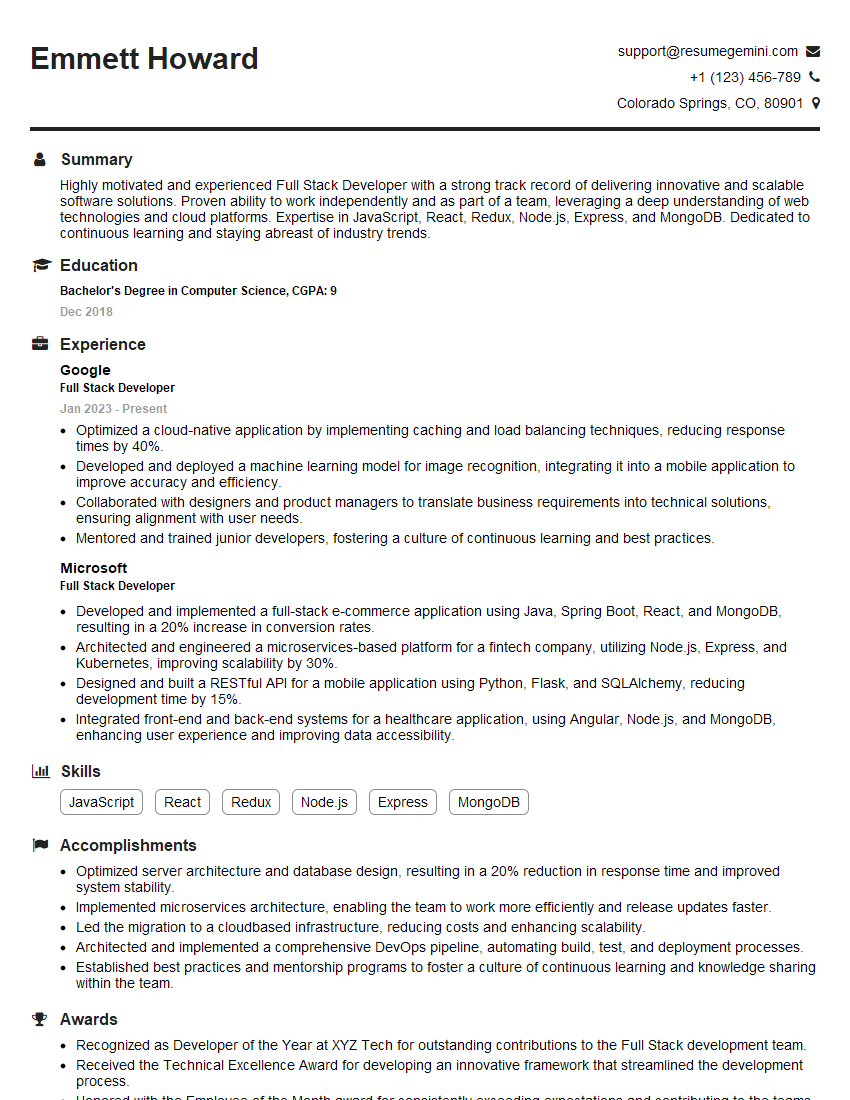

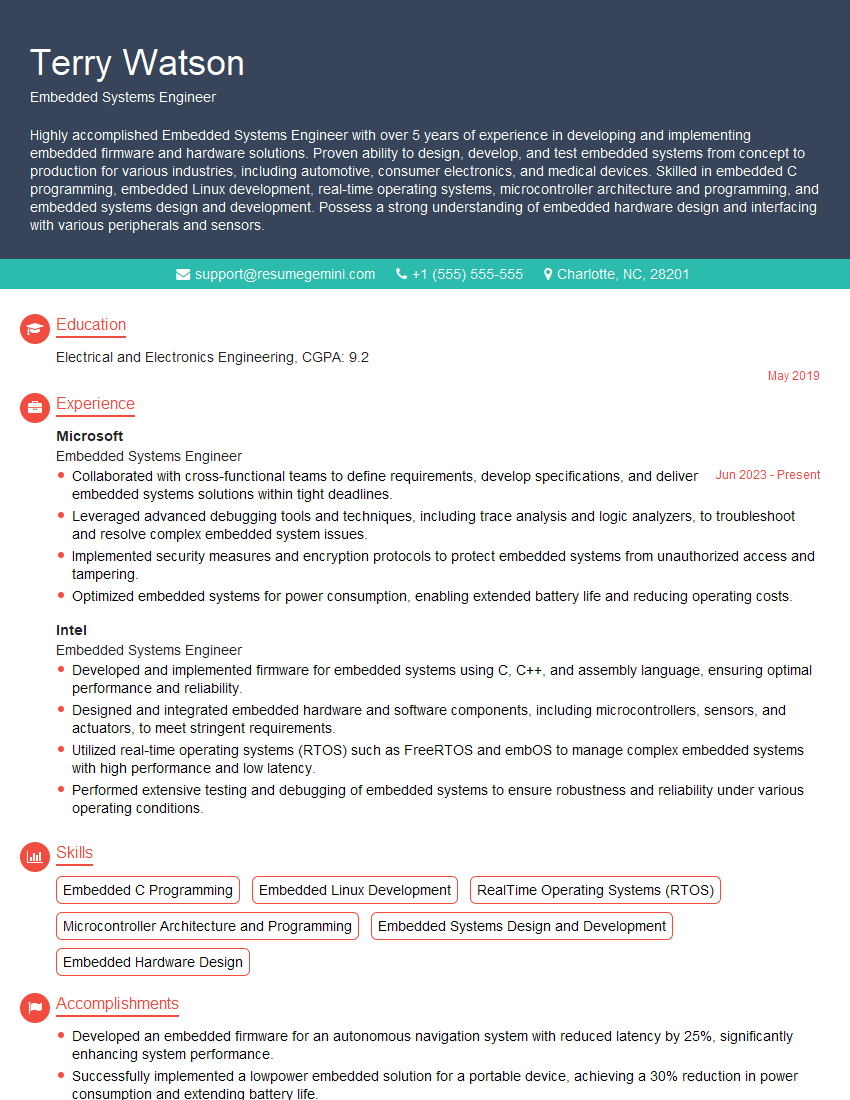

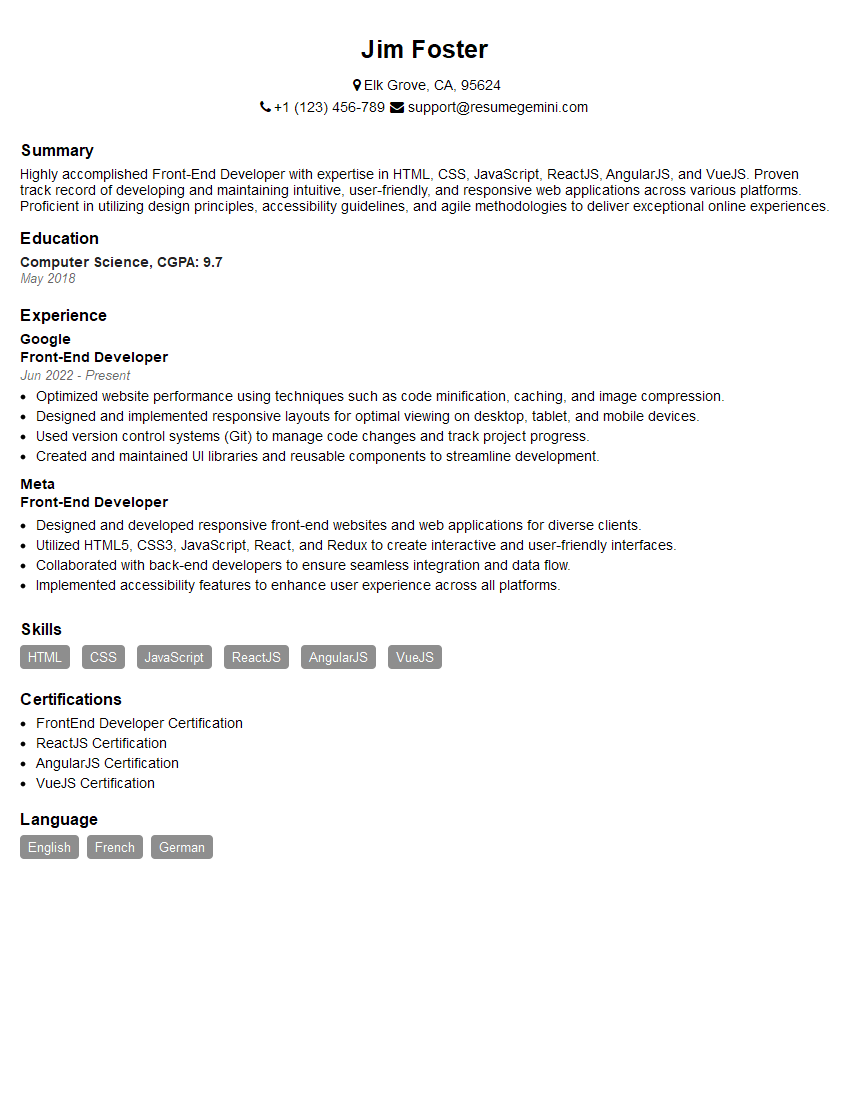

Mastering software development and scripting is pivotal for career advancement in the tech industry, opening doors to diverse and exciting roles. A strong resume is your key to unlocking these opportunities. Creating an ATS-friendly resume, optimized for Applicant Tracking Systems, significantly increases your chances of getting noticed by recruiters. To build a professional and impactful resume that highlights your skills and experience effectively, leverage the power of ResumeGemini. ResumeGemini provides a user-friendly platform and examples of resumes tailored to software development and scripting roles to help you create a winning application. Invest the time to craft a compelling resume – it’s your first impression and a crucial step towards your dream career.
