Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Cross-Browser and Cross-OS Testing interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Cross-Browser and Cross-OS Testing Interview

Q 1. Explain the importance of cross-browser and cross-OS testing.

Cross-browser and cross-OS testing is crucial for ensuring your web application provides a consistent and reliable user experience across different browsers (Chrome, Firefox, Safari, Edge) and operating systems (Windows, macOS, Linux, iOS, Android). Imagine building a house – you wouldn’t want a window that only opens in one room, right? Similarly, a website that functions flawlessly on Chrome but crashes on Firefox is unacceptable. This type of testing verifies functionality, layout, and performance are consistent, regardless of the user’s environment. This is especially important because users access websites from diverse setups, and a broken experience can lead to lost customers and a damaged brand reputation.

Q 2. What are some common challenges encountered during cross-browser testing?

Cross-browser testing presents several challenges. Inconsistent Rendering Engines: Each browser interprets and renders HTML, CSS, and JavaScript differently, leading to visual discrepancies or functional errors. For example, a perfectly positioned element on Chrome might be misaligned in Firefox. Browser-Specific APIs and Features: Some browsers support features others don’t, requiring conditional logic in your code. JavaScript Compatibility: JavaScript libraries and frameworks may behave differently across browsers and versions. Handling different screen sizes and resolutions adds further complexity. And finally, the sheer number of browser/OS combinations to test makes comprehensive coverage a significant undertaking.

Q 3. Describe your experience with different testing frameworks (e.g., Selenium, Cypress, Playwright).

I have extensive experience with Selenium, Cypress, and Playwright. Selenium, a mature and widely-used framework, provides excellent cross-browser compatibility and supports multiple programming languages. I’ve used it for large-scale, automated tests, including data-driven testing and parallel execution. Cypress, known for its developer-friendly approach and real-time testing capabilities, is excellent for front-end testing. Its ability to debug tests directly within the browser is a significant advantage. Playwright, a newer framework, offers excellent performance and cross-browser compatibility, particularly for testing complex applications. My preference depends on the project’s needs – Selenium for robust, large-scale tests, Cypress for rapid development cycles and ease of debugging, and Playwright for its speed and modern approach. For instance, on a recent project, I used Selenium for comprehensive regression testing, while Cypress streamlined our front-end component testing.

Q 4. How do you handle browser-specific bugs or inconsistencies?

When encountering browser-specific bugs, my approach is systematic. First, I isolate the bug, confirming it’s not a general issue. Then, I carefully document the bug, including browser version, OS, steps to reproduce, and expected vs. actual behavior. I then investigate the root cause. Is it a rendering issue, a JavaScript incompatibility, or a browser-specific bug in a third-party library? If the bug is in the code, I’ll fix it, implementing browser-specific workarounds if necessary using conditional statements (e.g., feature detection). If the bug stems from a browser or library, I might use CSS hacks or JavaScript polyfills to enhance compatibility, ensuring graceful degradation. Finally, I add a new test case to ensure the bug is not reintroduced.
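
The feature-detection idea mentioned above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not a definitive implementation: `supports` and `chooseCopyStrategy` are hypothetical helper names, and the environment object is passed in explicitly so the logic can also be exercised outside a browser.

```javascript
// Hypothetical helper: returns true if a dotted path such as
// "navigator.clipboard.writeText" resolves to a defined value on `env`.
function supports(env, path) {
  let current = env;
  for (const key of path.split('.')) {
    if (current == null || !(key in Object(current))) return false;
    current = current[key];
  }
  return current !== undefined;
}

// Choose a code path from capabilities rather than from the browser name.
// The strategy labels are illustrative only.
function chooseCopyStrategy(env) {
  return supports(env, 'navigator.clipboard.writeText')
    ? 'async-clipboard-api'
    : 'legacy-fallback';
}
```

In a page, `chooseCopyStrategy(window)` would pick the branch based on what the current browser actually exposes, which tends to age better than checking the user agent string.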

Q 5. What are some strategies for optimizing cross-browser test execution time?

Optimizing cross-browser test execution time involves several strategies. Parallel execution: Running tests across multiple machines or virtual machines simultaneously dramatically reduces overall time. Test prioritization: Focus on high-priority test cases (critical functionality) first. Smart test selection: Only run tests relevant to the specific changes in a given release. Test data optimization: Use realistic but minimal test data sets. Efficient selectors: Employ efficient CSS selectors or XPaths in your automation scripts to reduce lookup times. Utilize cloud-based testing platforms: These provide infrastructure for fast parallel execution and access to a wide range of browser and OS combinations. For instance, I recently reduced our test suite execution time by 70% by implementing parallel execution and a refined test selection strategy.
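
To make the parallel-execution point concrete, here is a small sketch of one way to split a suite across workers. It assumes you have a rough duration per test from earlier runs; `shardByDuration` and the test names are hypothetical, and real runners or cloud platforms may balance work differently.

```javascript
// Greedy balancing: assign the longest remaining test to the
// currently lightest worker.
function shardByDuration(tests, workerCount) {
  const shards = Array.from({ length: workerCount }, () => ({ total: 0, tests: [] }));
  const sorted = [...tests].sort((a, b) => b.seconds - a.seconds);
  for (const test of sorted) {
    // Find the shard with the smallest accumulated duration so far.
    const lightest = shards.reduce((min, s) => (s.total < min.total ? s : min));
    lightest.tests.push(test.name);
    lightest.total += test.seconds;
  }
  return shards;
}

// Illustrative durations, not measurements.
const exampleSuite = [
  { name: 'checkout', seconds: 60 },
  { name: 'login', seconds: 30 },
  { name: 'search', seconds: 20 },
  { name: 'profile', seconds: 10 },
];
const exampleShards = shardByDuration(exampleSuite, 2);
```

With these made-up numbers, two workers each end up with about 60 seconds of work instead of one worker running 120 seconds serially.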

Q 6. Explain the difference between functional and compatibility testing.

Functional testing validates that the application works as expected, regardless of the browser. It focuses on core functionality, verifying that buttons click, forms submit, and data is processed correctly. Compatibility testing, on the other hand, checks if the application functions and renders correctly across different browsers and operating systems. It focuses on ensuring consistent user experience irrespective of the browser/OS combination. For example, functional testing would verify if the login form submits successfully, while compatibility testing would check if the login form renders correctly and is usable on various browsers and screen sizes.

Q 7. How do you ensure consistent test results across different browsers and operating systems?

Consistent test results require a structured approach. First, use a version control system to manage the test scripts, ensuring everyone works with the same code. Employ a reliable test framework (Selenium, Cypress etc.) that provides consistent behavior across platforms. Utilize clear and concise test cases with specific steps to reproduce and expected outcomes. Parameterize test data to run tests on various inputs. Use a consistent testing environment – set up your test environment carefully to avoid inconsistencies caused by differences in OS configurations or browser extensions. Regularly review and update your test cases to reflect changes in the application and browser landscape. Use CI/CD integration to automatically run your tests after every code change, ensuring timely identification of any compatibility issues.

Q 8. Describe your experience with automated cross-browser testing tools.

My experience with automated cross-browser testing tools spans several years and numerous projects. I’ve worked extensively with tools like Selenium, Cypress, Playwright, and Sauce Labs. Selenium, for example, offers a powerful framework for writing automated tests in various programming languages, allowing for comprehensive coverage across different browsers. I’m proficient in crafting robust test suites that verify functionality, UI elements, and responsiveness across different browser versions and operating systems. With Cypress, I appreciate its ease of use and the ability to debug tests effectively. Sauce Labs and similar cloud-based platforms have been invaluable for scaling testing efforts and accessing a wide range of browser and operating system combinations without the need for maintaining a large local infrastructure. Beyond the tools themselves, my experience includes designing effective test strategies, managing test environments, and analyzing test results to identify and report on cross-browser compatibility issues.

For instance, in a recent e-commerce project, we used Selenium and a Jenkins pipeline to automate regression testing across Chrome, Firefox, Safari, and Edge on Windows and macOS. This automation significantly reduced testing time and improved the overall quality of the application.

Q 9. How do you prioritize testing across various browsers and platforms?

Prioritizing cross-browser and cross-platform testing involves a multi-faceted approach. It’s not simply about testing on every possible combination; instead, it’s about strategic prioritization based on several key factors:

- Target Audience: Identify the browsers and operating systems most frequently used by your target audience. Analytics data from your website or application can provide valuable insights into browser usage statistics.

- Business Criticality: Prioritize testing features that are essential for core functionality and the user experience. For example, the checkout process on an e-commerce website would receive higher priority than a less critical feature.

- Risk Assessment: Analyze which browsers or platforms might be more prone to compatibility issues based on past experience or known browser-specific quirks. Older browser versions, for instance, are often higher risk.

- Resource Constraints: Realistically assess the available time, resources, and budget. Testing on every single combination is often not feasible. A well-defined testing matrix allows for balancing thoroughness with practical limitations.

In practice, I often start with the most popular browsers and operating systems among the target audience, followed by the next most popular, and then address any known compatibility issues from previous releases. This phased approach ensures that we cover the most critical aspects while still leaving room to address less common scenarios if needed.
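
The phased approach can be sketched as a small function that walks the analytics data from most to least used and stops once a coverage target is reached. The usage shares below are invented for illustration, and `buildTestMatrix` is a hypothetical name.

```javascript
// Pick browser/OS combinations, most used first, until the cumulative
// share of the audience reaches `targetCoverage` (in percent).
function buildTestMatrix(usage, targetCoverage) {
  const sorted = [...usage].sort((a, b) => b.share - a.share);
  const matrix = [];
  let covered = 0;
  for (const entry of sorted) {
    if (covered >= targetCoverage) break;
    matrix.push(entry);
    covered += entry.share;
  }
  return { matrix, covered };
}

// Made-up shares (percent of sessions); use your own analytics instead.
const exampleUsage = [
  { browser: 'Chrome', os: 'Windows', share: 45 },
  { browser: 'Safari', os: 'iOS', share: 20 },
  { browser: 'Chrome', os: 'Android', share: 15 },
  { browser: 'Firefox', os: 'Windows', share: 8 },
  { browser: 'Edge', os: 'Windows', share: 7 },
  { browser: 'Safari', os: 'macOS', share: 5 },
];
const examplePlan = buildTestMatrix(exampleUsage, 85);
```

Combinations that fall outside the resulting matrix can still be added by hand when risk assessment or a known past issue justifies it.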

Q 10. What is the role of responsive design in cross-browser testing?

Responsive design is absolutely crucial for cross-browser testing. It’s a development approach that ensures a website or application adapts seamlessly to different screen sizes and devices. Without responsive design, cross-browser testing would be significantly more challenging, as you’d need to test multiple versions of the site tailored to each device. Responsive design significantly reduces this testing burden.

During cross-browser testing, we need to verify that the responsive design implementation works correctly across all target browsers and screen sizes. We need to ensure that the layout, content, and functionality adapt smoothly across various devices, including desktops, tablets, and smartphones, and maintain usability across all these screen sizes and orientations. Testing with different screen resolutions and device emulators/simulators is a key aspect of this.

Q 11. How do you handle testing for different screen resolutions and devices?

Testing across different screen resolutions and devices is vital for ensuring a consistent user experience. The approach involves a combination of techniques:

- Browser Developer Tools: Modern browsers provide excellent developer tools that allow us to simulate different screen resolutions and device pixel ratios. This is a quick way to get a first impression of how the application looks and functions on different screen sizes.

- Emulators and Simulators: Emulators (such as the Android Emulator in Android Studio) and simulators (such as the iOS Simulator in Xcode) give a more accurate representation of how an application behaves on specific devices than the device mode in browser developer tools, which mainly emulates viewport size and user agent. They handle system interactions more realistically.

- Real Devices: For critical features or situations requiring high fidelity, testing on actual devices is invaluable. This helps capture subtle differences that emulators or simulators may miss.

- Responsive Testing Frameworks: Automated testing frameworks such as Selenium can be configured to test against various screen sizes and resolutions by dynamically adjusting the browser window size during the test execution.

A combination of these approaches provides a comprehensive strategy, balancing speed and accuracy. For example, I might begin by using developer tools for initial checks, then move to emulators for more thorough testing, and finally test on real devices for the most critical functionality.
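
For the automated part of this strategy, a test run usually needs a list of viewport sizes to loop over. The sketch below only builds that list; the sizes are illustrative assumptions, `expandOrientations` is a hypothetical helper, and the actual resizing would be done through whichever framework you use.

```javascript
// Illustrative viewport sizes; replace with sizes from your own analytics.
const baseViewports = [
  { name: 'phone', width: 375, height: 667, rotatable: true },
  { name: 'tablet', width: 768, height: 1024, rotatable: true },
  { name: 'desktop', width: 1440, height: 900, rotatable: false },
];

// Expand rotatable devices into portrait and landscape entries.
function expandOrientations(viewports) {
  return viewports.flatMap((v) => {
    if (!v.rotatable) {
      return [{ name: v.name, width: v.width, height: v.height }];
    }
    return [
      { name: `${v.name}-portrait`, width: v.width, height: v.height },
      { name: `${v.name}-landscape`, width: v.height, height: v.width },
    ];
  });
}

const allViewports = expandOrientations(baseViewports);
```

Each entry can then drive one pass of the same responsive test, so orientation changes are covered without writing separate tests.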

Q 12. How do you identify and document cross-browser compatibility issues?

Identifying and documenting cross-browser compatibility issues requires a systematic approach:

- Detailed Test Cases: Clearly defined test cases ensure consistent testing and help pinpoint the exact location and nature of the issues.

- Screenshots and Screen Recordings: Visual documentation is essential for illustrating rendering discrepancies, layout problems, or UI inconsistencies across browsers. High-quality screenshots and screen recordings effectively communicate the issue to developers.

- Browser Developer Tools: Utilizing browser developer tools allows for detailed analysis of the HTML, CSS, and JavaScript that might be responsible for any discrepancies or errors. This facilitates problem diagnosis.

- Error Logs and Console Messages: Examining browser console logs and network requests to pinpoint JavaScript errors or other runtime issues helps understand the root cause of failures.

- Bug Tracking System: Formalizing bug reports in a system like Jira or similar helps maintain consistency, track progress, and prioritize fixes.

A well-documented bug report typically includes the browser and version, operating system, steps to reproduce the issue, screenshots, error messages (if any), and an explanation of the expected versus the actual behavior.
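
One way to keep reports consistent is to validate and format them in code before they reach the bug tracker. The following is a sketch only; the field names and the `formatBugReport` helper are assumptions, not the format of any particular tracker.

```javascript
// Fields every cross-browser bug report should carry (assumed names).
const REQUIRED_FIELDS = ['browser', 'browserVersion', 'os', 'steps', 'expected', 'actual'];

// Return the names of required fields that are absent or empty.
function missingFields(report) {
  return REQUIRED_FIELDS.filter((field) => {
    const value = report[field];
    return value == null || value.length === 0;
  });
}

// Render a plain-text report, refusing incomplete input.
function formatBugReport(report) {
  const missing = missingFields(report);
  if (missing.length > 0) {
    throw new Error(`Bug report is missing: ${missing.join(', ')}`);
  }
  const steps = report.steps.map((step, i) => `  ${i + 1}. ${step}`).join('\n');
  return [
    `Environment: ${report.browser} ${report.browserVersion} on ${report.os}`,
    'Steps to reproduce:',
    steps,
    `Expected: ${report.expected}`,
    `Actual: ${report.actual}`,
  ].join('\n');
}
```

Rejecting incomplete reports early means developers rarely have to ask which browser version or operating system an issue was seen on.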

Q 13. What are some common browser rendering engines and how do they impact testing?

Different browsers utilize various rendering engines, which significantly impact cross-browser testing. The rendering engine parses HTML and CSS and, together with the browser’s JavaScript engine, produces the final visual representation in the browser window. Inconsistencies between these engines lead to compatibility issues.

- Blink (Chrome, Edge, Opera): One of the most prevalent engines, known for its speed and performance. It is updated frequently, so behavior can differ between the current release and older versions that some users still run.

- Gecko (Firefox): A long-standing rendering engine known for its conformance to web standards. It has its own quirks and differences compared to other engines.

- WebKit (Safari): Originally developed by Apple, WebKit is the engine behind Safari on macOS and iOS. While it aims for standards compatibility, slight rendering differences from Blink and Gecko exist.

- Trident (older versions of Internet Explorer): Now largely obsolete but important to acknowledge in legacy projects. It was infamous for rendering inconsistencies.

Understanding the quirks of each rendering engine is crucial for anticipating potential problems. For example, a CSS property might be rendered differently in Blink compared to Gecko, requiring careful consideration of cross-browser compatible alternatives. This highlights the necessity of thorough testing across all relevant engines.
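
Because several browsers share an engine, grouping the target list by engine is a quick way to check that every engine is represented. The mapping below reflects the engines listed above for current desktop browsers; `groupByEngine` is a hypothetical helper, and the sketch does not account for older browser versions that used different engines.

```javascript
// Engine used by each browser family, per the list above
// (Edge here means the current Chromium-based Edge).
const ENGINE_BY_BROWSER = {
  chrome: 'Blink',
  edge: 'Blink',
  opera: 'Blink',
  firefox: 'Gecko',
  safari: 'WebKit',
  ie: 'Trident',
};

// Group browser names by rendering engine.
function groupByEngine(browsers) {
  const groups = {};
  for (const browser of browsers) {
    const engine = ENGINE_BY_BROWSER[browser.toLowerCase()] || 'unknown';
    if (!groups[engine]) groups[engine] = [];
    groups[engine].push(browser);
  }
  return groups;
}
```

If a planned matrix produces only a `Blink` group, for example, that is a hint that Gecko and WebKit still need at least one representative each.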

Q 14. How do you handle JavaScript errors during cross-browser testing?

Handling JavaScript errors during cross-browser testing is a critical aspect of ensuring application stability and reliability. The strategies I employ are:

- Browser Developer Tools: Actively using browser developer tools (specifically the console) to monitor and identify any JavaScript errors. The console provides detailed information about the error, including the line number, file, and stack trace, enabling quicker pinpointing of the problem.

- Automated Testing Frameworks: Leveraging the error handling capabilities of automated testing frameworks such as Selenium or Cypress. These frameworks can catch exceptions thrown by the JavaScript code, recording errors and making them part of the test report.

- Debugging Tools: Utilizing debugging tools (in browsers or IDEs) to step through the JavaScript code line by line and examine variables to understand the sequence of events that led to the error. Setting breakpoints at specific points aids diagnosis.

- JavaScript Linting and Static Analysis: Employing linters (like ESLint) and static analysis tools to proactively identify potential issues in the JavaScript code *before* testing. These tools can catch errors like syntax errors, undefined variables, and other common programming pitfalls.

- Conditional Code: When a specific JavaScript feature or API is found to be inconsistently handled by certain browsers, I sometimes implement conditional code to adapt behavior as needed, providing fallbacks or alternative implementations. Where possible this is based on feature detection, with checks against the detected browser reserved for cases where no capability test exists.

A proactive approach that combines these techniques dramatically reduces the time spent debugging errors and improves the overall quality of the application across different browsers.
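
As a rough illustration of recording errors as part of the test report, the sketch below runs a list of steps and captures any exception instead of stopping at the first one. It is framework-independent and simplified: `runSteps` is a hypothetical helper, and real frameworks have their own mechanisms for surfacing uncaught page errors.

```javascript
// Run each step, recording pass/fail and the error details on failure.
// Later steps still run after a failure so the report is complete.
function runSteps(steps) {
  const report = [];
  for (const { name, action } of steps) {
    try {
      action();
      report.push({ name, status: 'passed' });
    } catch (err) {
      report.push({ name, status: 'failed', error: `${err.name}: ${err.message}` });
    }
  }
  return report;
}
```

Whether to continue after a failed step is a design choice; continuing gives a fuller picture per browser, while stopping early saves time when later steps depend on earlier ones.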

Q 15. Explain your approach to reporting and tracking cross-browser test results.

My approach to reporting and tracking cross-browser test results centers around a structured, easily accessible, and visually clear system. I typically use a combination of tools and techniques to ensure comprehensive reporting.

- Test Management Tools: I leverage tools like TestRail, Jira, or Azure DevOps to log individual tests, their execution status (pass/fail), and detailed descriptions of any failures. This allows for easy tracking of progress and identification of recurring issues.

- Automated Reporting: For automated tests, I integrate reporting features from testing frameworks like Selenium or Cypress. These tools often generate reports with screenshots, logs, and videos, providing a detailed record of each test run across different browsers and operating systems.

- Custom Dashboards: For larger projects, I often create custom dashboards summarizing test results across different browsers and metrics (e.g., pass/fail rates, execution time). These dashboards offer a high-level overview of the testing progress and help in quick identification of critical issues.

- Visual Comparison Tools: For visual regression testing, I utilize tools capable of taking screenshots and comparing them across browsers to detect unexpected changes in the UI. Differences are highlighted clearly in the report.

- Defect Tracking: All identified bugs are meticulously documented with detailed steps to reproduce, browser/OS information, and screenshots. This ensures that the development team can easily understand and resolve the issues.

For example, in a recent project, using Selenium and TestRail, we were able to identify a rendering issue specific to Safari 15 by automatically generating screenshots and a detailed report with browser-specific logs, leading to a swift resolution.
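
The kind of per-browser summary a custom dashboard might show can be computed with a few lines of code. This sketch assumes a flat list of results with `browser` and `passed` fields, which is an invented shape rather than the output format of any specific tool.

```javascript
// Aggregate raw results into pass/fail counts and a pass rate per browser.
function summarizeByBrowser(results) {
  const summary = {};
  for (const { browser, passed } of results) {
    if (!summary[browser]) summary[browser] = { passed: 0, failed: 0 };
    summary[browser][passed ? 'passed' : 'failed'] += 1;
  }
  for (const stats of Object.values(summary)) {
    const total = stats.passed + stats.failed;
    stats.passRate = Math.round((stats.passed / total) * 100);
  }
  return summary;
}
```

A browser whose pass rate drops while the others stay flat is usually the first place to look for an engine-specific regression.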

Q 16. What are some best practices for managing cross-browser test environments?

Managing cross-browser test environments effectively requires a blend of infrastructure, automation, and strategy. Here are some best practices:

- Virtualization: Running virtual machines (VMs) with tools like VirtualBox or VMware allows us to create isolated environments for different browser and OS combinations, preventing conflicts and ensuring consistency across test runs.

- Cloud-Based Testing Platforms: Platforms like BrowserStack, Sauce Labs, or LambdaTest provide access to a vast range of browser and OS configurations, eliminating the need for extensive local infrastructure setup and maintenance.

- Automated Environment Provisioning: Using tools like Docker and Ansible enables automated setup and configuration of test environments, ensuring consistency and repeatability. This also simplifies the process of scaling up or down the test infrastructure based on project needs.

- Version Control: Pinning browser versions in test environment configuration files and tracking those files in a version control system like Git prevents accidental changes and ensures that everyone works with the same setup. This is incredibly important for reproducibility and collaboration.

- Regular Updates: Keeping browsers and operating systems updated with security patches and bug fixes is vital to ensure accurate and reliable testing. However, this needs to be managed carefully to avoid introducing unexpected behavior related to new versions.

For instance, in a previous project, we utilized Docker containers to create consistent test environments across the team, preventing discrepancies caused by local configurations, ensuring everyone ran tests in identical setups.

Q 17. How do you use debugging tools to identify and fix cross-browser issues?

Debugging cross-browser issues is a crucial part of my workflow. I extensively utilize browser developer tools (Chrome DevTools, Firefox Developer Tools, etc.) along with other debugging strategies:

- Inspecting the DOM: The first step is often inspecting the Document Object Model (DOM) to see how the HTML, CSS, and JavaScript are being rendered across different browsers. Differences in rendering can often reveal the root cause of inconsistencies.

- Network Monitoring: Analyzing network requests using the browser’s developer tools helps identify if there are issues with loading resources (images, scripts, stylesheets) that are browser-specific.

- Console Logging: Strategically placed console.log() statements in the JavaScript code help track variable values, execution flow, and identify errors that occur only in certain browsers. For example: console.log('Checking variable value:', myVariable);

- JavaScript Debuggers: Step-by-step debugging allows me to pinpoint exactly where JavaScript errors or unexpected behavior arises.

- Browser-Specific Debugging Tools: Some browsers offer unique debugging tools or extensions that can be invaluable for identifying browser-specific problems.

For example, I once used Chrome DevTools’ performance profiler to identify a performance bottleneck in a JavaScript animation that was only affecting Chrome. Optimizing that section improved the overall performance across all browsers.

Q 18. Explain your experience with different testing methodologies (e.g., Agile, Waterfall).

My experience spans both Agile and Waterfall methodologies. Both have their place depending on the project’s size, complexity, and client requirements.

- Agile: In Agile projects, cross-browser testing is integrated into short sprints. Tests are often automated to ensure continuous feedback and quick iteration. The focus is on rapid feedback loops, adapting to changing requirements, and frequent releases.

- Waterfall: In Waterfall, cross-browser testing is typically a dedicated phase of the project lifecycle. Testing happens after development is completed. This approach is better suited for projects with well-defined requirements and minimal expected changes.

In Agile, I might use a framework like BDD (Behavior-Driven Development) for testing, defining acceptance criteria collaboratively with the development team. In Waterfall, a more structured test plan is essential, outlining all necessary tests and their execution order. The choice depends greatly on the context of the project and the client’s preferences.

Q 19. How do you integrate cross-browser testing into a CI/CD pipeline?

Integrating cross-browser testing into a CI/CD pipeline is crucial for continuous delivery. This ensures that every code change is tested across different browsers before deployment.

- Selenium Grid/Sauce Labs/BrowserStack Integration: Automate test execution using frameworks like Selenium and integrate with cloud-based testing platforms. These platforms allow running tests in parallel across multiple browsers and OS combinations.

- CI/CD Tool Integration: Integrate the automated tests into your chosen CI/CD tool (Jenkins, GitLab CI, CircleCI, Azure DevOps). This triggers the tests automatically upon code changes.

- Reporting and Alerting: Configure the pipeline to generate test reports and send alerts for test failures. This allows for immediate notification of any issues.

- Test Environment Management: Manage test environments using Docker or other containerization technologies to ensure consistency and reproducibility in the CI/CD pipeline.

For example, in a recent project, we integrated Selenium tests into our Jenkins pipeline. Upon each commit, the pipeline would automatically trigger the tests across different browsers, generating reports and notifying the team if any failures occurred. This significantly accelerated the feedback loop and improved the overall quality of the product.

Q 20. Describe your experience with performance testing in cross-browser environments.

Performance testing in cross-browser environments is crucial for ensuring a good user experience. It focuses on identifying performance bottlenecks and ensuring responsiveness across different browsers and devices.

- Load Testing: Simulating multiple users accessing the application simultaneously helps identify performance issues under load. Tools like JMeter or k6 are commonly used for this.

- Browser Performance Profiling: Using browser developer tools, we can profile JavaScript execution, network requests, rendering times, and other performance metrics for each browser.

- Synthetic Monitoring: Setting up synthetic monitors to check application performance proactively helps identify and alert on performance degradation.

- Real User Monitoring (RUM): RUM tools provide insights into real user behavior and performance from actual users’ perspectives.

In one instance, we used JMeter to simulate high user traffic on our application. This revealed a slow database query that degraded response times under load. Because the query ran on the server, optimizing it improved the performance of the application across all browsers.
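
When comparing timing data collected per browser, percentiles are often more informative than averages because a few slow loads can hide behind a good mean. The sketch below uses the nearest-rank method; `percentile` and `p95ByBrowser` are hypothetical helpers, and the input is assumed to be load times in milliseconds gathered by whatever profiling or monitoring tool is in use.

```javascript
// Nearest-rank percentile: the smallest sample such that at least
// p percent of all samples are less than or equal to it.
function percentile(samples, p) {
  if (samples.length === 0) throw new Error('no samples');
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p * sorted.length) / 100));
  return sorted[rank - 1];
}

// Compute the 95th percentile for each browser's samples.
function p95ByBrowser(timingsByBrowser) {
  return Object.fromEntries(
    Object.entries(timingsByBrowser).map(([browser, samples]) => [browser, percentile(samples, 95)])
  );
}
```

Tracking the same percentile per browser across releases makes it easier to tell a browser-specific slowdown from a general one.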

Q 21. How do you ensure your tests are scalable and maintainable?

Scalability and maintainability of cross-browser tests are paramount for long-term success. Here’s how I ensure both:

- Modular Test Design: Breaking down tests into smaller, independent modules improves reusability, simplifies maintenance, and facilitates scaling. Changes in one module do not necessarily require changes in others.

- Page Object Model (POM): The POM pattern enhances test maintainability by separating test logic from UI elements. Changes to UI elements only require updating the Page Objects, not the entire test suite.

- Data-Driven Testing: Using data-driven testing allows running the same test with different input data, reducing test code duplication and enabling scalable testing across multiple scenarios and browsers.

- Test Automation Framework: Selecting a robust test automation framework like Selenium or Cypress provides a structured environment for developing, organizing, and maintaining tests.

- Regular Code Reviews and Refactoring: Regular code reviews help maintain code quality and identify areas for improvement. Refactoring ensures that the test suite remains easy to understand and modify.

For example, by adopting the Page Object Model, we significantly reduced the impact of UI changes on our test suite. Modifying a single Page Object addressed the changes instead of needing to modify multiple tests individually. This made the tests much easier to maintain in the long term.
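
A stripped-down sketch of the Page Object Model is shown below. To keep it self-contained, the `driver` is a hypothetical minimal interface with `type` and `click` methods, and `createRecordingDriver` is a stand-in that only records calls; in practice the page object would wrap a real framework driver, and the selectors are assumptions.

```javascript
// Page object: the only place that knows the login page's selectors.
class LoginPage {
  constructor(driver) {
    this.driver = driver;
    this.selectors = {
      username: '#username',
      password: '#password',
      submit: 'button[type="submit"]',
    };
  }

  // Tests call this intent-level method instead of touching selectors.
  login(username, password) {
    this.driver.type(this.selectors.username, username);
    this.driver.type(this.selectors.password, password);
    this.driver.click(this.selectors.submit);
  }
}

// Stand-in driver that records interactions instead of driving a browser.
function createRecordingDriver() {
  const calls = [];
  return {
    calls,
    type: (selector, text) => calls.push(['type', selector, text]),
    click: (selector) => calls.push(['click', selector]),
  };
}
```

If the username field's selector changes, only `LoginPage.selectors` needs updating; every test that calls `login()` stays as it is.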

Q 22. What is your experience with cloud-based cross-browser testing platforms?

I have extensive experience with cloud-based cross-browser testing platforms like Sauce Labs, BrowserStack, and LambdaTest. These platforms are invaluable for efficiently testing across a wide range of browsers and operating systems without needing to maintain a large, in-house infrastructure. I’m proficient in utilizing their features, including parallel testing for faster execution, automated test scripting integrations (Selenium, Cypress, etc.), and comprehensive reporting and debugging tools. For example, in a recent project, using BrowserStack’s parallel testing capabilities allowed us to reduce our testing time by 70%, significantly accelerating our release cycle. I’m also comfortable working with their integrations with various CI/CD pipelines like Jenkins and GitLab CI, ensuring seamless test automation within our development workflow.

Q 23. How do you handle testing for older or less common browsers?

Testing on older or less common browsers requires a strategic approach. We can’t ignore them entirely, as a significant portion of users might still use them. I typically start by identifying the target audience and their browser usage statistics. Tools like StatCounter can provide valuable data on browser market share. Based on this analysis, I prioritize testing on the most prevalent older browsers. For those with lower usage, we might use a combination of techniques. We can leverage cloud-based platforms offering access to older browser versions and virtual machines. Alternatively, for very niche browsers, we might opt for manual testing on a dedicated machine, focusing on critical functionalities rather than exhaustive testing. This prioritization ensures we focus our efforts where the impact is greatest.

Q 24. How do you ensure accessibility compliance in cross-browser testing?

Accessibility compliance is crucial. In cross-browser testing, we ensure this through several strategies. First, we use automated accessibility testing tools like axe DevTools and WAVE to scan our web application for common accessibility violations across different browsers. These tools flag issues like missing alt text for images, insufficient color contrast ratios, and keyboard navigation problems. Second, we conduct manual accessibility testing to verify how well the application functions for users with visual, auditory, motor, or cognitive impairments. This involves testing with screen readers, navigating by keyboard alone, and checking for sufficient color contrast. We also carefully review our code to follow accessibility best practices, such as using ARIA attributes to provide semantic meaning to interactive elements.
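
The color contrast check that such tools perform can be reproduced by hand. The sketch below follows the WCAG 2.x definitions of relative luminance and contrast ratio, with 4.5:1 as the AA threshold for normal-size text; the function names are our own, and colors are assumed to be opaque sRGB triples in the 0 to 255 range.

```javascript
// Relative luminance of an sRGB color given as [r, g, b] in 0..255.
function relativeLuminance([r, g, b]) {
  const [R, G, B] = [r, g, b].map((channel) => {
    const c = channel / 255;
    return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
  });
  return 0.2126 * R + 0.7152 * G + 0.0722 * B;
}

// Contrast ratio between two colors, from 1 (identical) up to 21.
function contrastRatio(foreground, background) {
  const l1 = relativeLuminance(foreground);
  const l2 = relativeLuminance(background);
  const lighter = Math.max(l1, l2);
  const darker = Math.min(l1, l2);
  return (lighter + 0.05) / (darker + 0.05);
}

// AA threshold for normal-size text.
function meetsAA(foreground, background) {
  return contrastRatio(foreground, background) >= 4.5;
}
```

Black text on a white background gives the maximum ratio of 21:1, while light gray on white falls well short of the threshold.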

Q 25. What is your experience with testing different browser extensions and plugins?

My experience with browser extensions and plugins is extensive. These can significantly impact the functionality and appearance of a web application, requiring careful consideration during cross-browser testing. We treat extensions as different ‘browser versions’ in a sense. We create test plans that encompass different plugin configurations or their absence. We often test with the most popular extensions in our target market, paying attention to any conflicts or unexpected interactions with our application. Using browser profiles or dedicated virtual machines helps to isolate the impact of specific extensions without affecting other tests. For example, I’ve dealt with scenarios where a specific ad blocker triggered rendering errors in a certain browser, requiring adjustments to our CSS or JavaScript to resolve the compatibility issues.

Q 26. Describe a time when you had to troubleshoot a difficult cross-browser issue.

During a recent project, we encountered an unexpected layout issue in Internet Explorer 11. The application displayed correctly in all modern browsers but suffered from significant rendering problems in IE11. The issue stemmed from a specific CSS property that IE11 interpreted differently than other browsers. Our initial automated tests failed to detect this since we primarily focused on more modern browsers. To troubleshoot, I utilized the IE developer tools to meticulously inspect the page’s rendered CSS and DOM structure. Through a process of elimination and careful debugging, we identified the problematic CSS property. We then implemented a CSS hack specifically targeting IE11 to override the incorrect behavior. Because CSS cannot read the user agent directly, this meant scoping the override so that only IE11 applied it (for example, with an -ms- prefixed media feature that other browsers ignore), which kept the application’s layout consistent across all supported browsers.

Q 27. How do you stay up-to-date with the latest advancements in cross-browser testing?

Staying updated in this rapidly evolving field requires a multi-pronged approach. I regularly follow industry blogs and publications focusing on web development and testing. I actively participate in online communities and forums, engaging with other testers and developers to share knowledge and learn about new challenges and solutions. Attending webinars and conferences provides exposure to cutting-edge tools and methodologies. Furthermore, I regularly experiment with new testing tools and technologies to gain practical experience. Continuous learning and hands-on practice are key to remaining proficient in cross-browser and cross-OS testing.

Q 28. What is your experience with using different test data management strategies during cross-browser tests?

Effective test data management is vital for reliable cross-browser testing. We typically employ different strategies depending on the project’s scope and complexity. For smaller projects, we might use a simple approach of maintaining a separate test data set. This data set includes various inputs to exercise different code paths and UI elements. For larger projects, we often utilize database-driven test data. This allows for more efficient management and reuse of test data across different browsers and test runs. Furthermore, we use techniques like data masking to protect sensitive information, and we often employ data generators to create realistic but synthetic test data for scenarios that require high volume or privacy protection. This structured approach ensures the quality and integrity of the test data, leading to more reliable cross-browser test results.
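As a rough illustration of the masking and data generation techniques mentioned above, the sketch below masks email addresses deterministically and generates seeded synthetic users, so every browser run sees the same data. The field names, the `example.test` domain, and both helper functions are assumptions made for the example. A production setup would more likely use a keyed hash or a dedicated masking tool.

```python
import hashlib
import random

_FIRST_NAMES = ["Ada", "Grace", "Alan", "Linus", "Margaret"]
_LAST_NAMES = ["Lovelace", "Hopper", "Turing", "Torvalds", "Hamilton"]


def mask_email(email: str) -> str:
    """Replace a real address with a stable, non-reversible stand-in."""
    digest = hashlib.sha256(email.lower().encode("utf-8")).hexdigest()[:10]
    return f"user_{digest}@example.test"


def generate_users(count: int, seed: int = 0):
    """Create synthetic users; the same seed always yields the same data."""
    rng = random.Random(seed)
    users = []
    for user_id in range(1, count + 1):
        users.append({
            "id": user_id,
            "name": f"{rng.choice(_FIRST_NAMES)} {rng.choice(_LAST_NAMES)}",
            "email": f"user{user_id}@example.test",
            "age": rng.randint(18, 90),
        })
    return users


if __name__ == "__main__":
    print(mask_email("jane.doe@company.com"))
    for user in generate_users(3, seed=42):
        print(user)
```

Seeding the generator matters for cross-browser work: when a test fails in only one browser, identical input data rules out the data set as the cause.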

Key Topics to Learn for Cross-Browser and Cross-OS Testing Interview

- Understanding Browser Rendering Engines: Learn the differences between the major engines (Blink, Gecko, WebKit) and how they affect rendering, including the layout inconsistencies and bugs that can result.

- Cross-Browser Compatibility Issues: Gain practical experience identifying and resolving common compatibility issues related to CSS, JavaScript, and HTML across different browsers (Chrome, Firefox, Safari, Edge).

- Testing Frameworks and Tools: Familiarize yourself with popular testing frameworks (Selenium, Cypress, Playwright, Puppeteer) and browser developer tools for efficient debugging and testing.

- Responsive Design and Testing: Understand the principles of responsive web design and how to effectively test responsiveness across various screen sizes and devices.

- Cross-OS Compatibility: Explore the nuances of testing across different operating systems (Windows, macOS, Linux) and how OS-specific configurations can affect application behavior.

- Virtual Machines and Emulators: Learn how to utilize virtual machines and emulators to efficiently test on various operating systems and browser combinations without needing physical hardware.

- Test Case Design and Execution: Master the techniques of designing comprehensive test cases to cover various scenarios and efficiently executing these tests to identify bugs.

- Reporting and Bug Tracking: Learn how to effectively document and report bugs, ensuring clear and concise communication with developers.

- Performance Testing in Cross-Browser Environments: Understand how browser performance can vary and the techniques for identifying and optimizing performance bottlenecks.

- Accessibility Testing: Learn how to ensure your application is accessible to users with disabilities, considering implications across different browsers and assistive technologies.

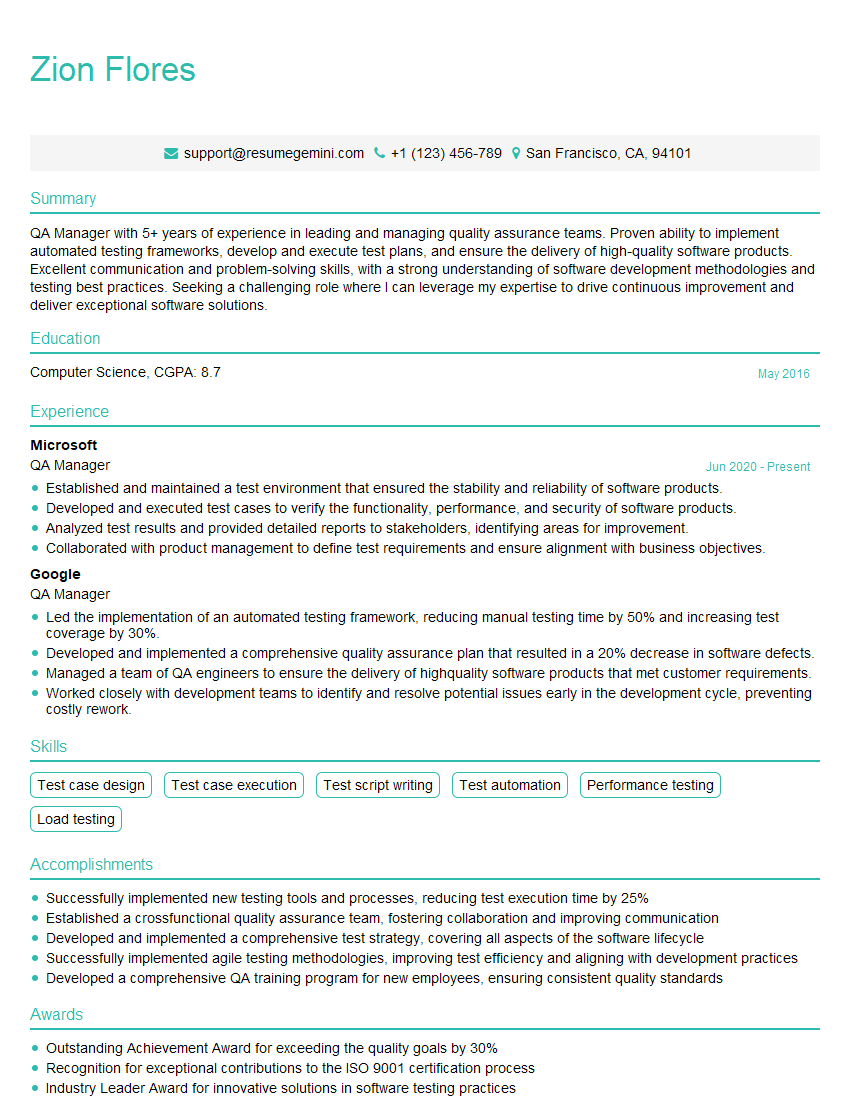

Next Steps

Mastering cross-browser and cross-OS testing is crucial for a successful career in software quality assurance. It demonstrates a deep understanding of web technologies and problem-solving skills highly valued by employers. To maximize your job prospects, it’s essential to create a strong, ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource for building professional and impactful resumes. We provide examples of resumes tailored specifically for candidates in Cross-Browser and Cross-OS Testing to help you showcase your qualifications to potential employers.
