The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to interview questions on the ability to analyze and interpret complex data sets provides key insights and tips to help you sharpen your responses and stand out as a top candidate.

Questions Asked in Ability to analyze and interpret complex data sets Interview

Q 1. Explain your approach to cleaning and preparing a large, messy dataset.

Cleaning a large, messy dataset is like renovating an old house – it requires a systematic approach. My process typically involves several key stages:

- Data Profiling and Exploration: I begin by understanding the data’s structure, identifying variable types, and looking for initial inconsistencies (e.g., unexpected values, incorrect data types). Tools like the pandas-profiling package for Python (since renamed ydata-profiling) are invaluable here.

- Handling Missing Data: Missing values are common. I assess the extent and pattern of missingness (e.g., Missing Completely at Random (MCAR), Missing at Random (MAR), Missing Not at Random (MNAR)). Strategies include imputation (filling in missing values using mean, median, mode, or more sophisticated methods like k-Nearest Neighbors), deletion (removing rows or columns with excessive missing data), or using algorithms designed to handle missing data.

- Data Cleaning and Transformation: This involves addressing inconsistencies, such as correcting typos, standardizing formats (dates, currency), and handling outliers (discussed later). I use regular expressions for text cleaning and functions like pandas.to_datetime() for date standardization.

- Data Reduction: For very large datasets, dimensionality reduction techniques like Principal Component Analysis (PCA) or feature selection can be applied to reduce the number of variables while retaining essential information.

- Data Validation: Once cleaned, I rigorously validate the data to ensure accuracy and consistency, often using automated checks and manual spot-checking.

For example, in a project analyzing customer data, I encountered inconsistencies in the ‘address’ field. I used regular expressions to standardize addresses and identified and corrected numerous typos. I also handled missing values in the ‘age’ field by imputing using the median age for that customer segment.
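The cleaning steps described above can be sketched in pandas. This is a minimal illustration, not the actual project code: the table, the column names (`segment`, `signup_date`, `city`, `age`), and the values are all hypothetical.

```python
import pandas as pd

# Hypothetical customer records with typical problems: an unparseable date,
# inconsistent spacing and casing in a text field, and missing ages.
raw = pd.DataFrame({
    "customer_id": [1, 2, 3, 4, 5],
    "segment": ["retail", "retail", "retail", "business", "business"],
    "signup_date": ["2023-01-15", "2023-02-20", "not recorded", "2023-03-05", "2023-04-11"],
    "city": ["  new york", "New York ", "NEW  YORK", "boston", "Boston"],
    "age": [34, None, 29, 51, None],
})

clean = raw.copy()

# Standardize dates; values that do not match the format become NaT.
clean["signup_date"] = pd.to_datetime(
    clean["signup_date"], format="%Y-%m-%d", errors="coerce"
)

# Text cleaning with a regular expression: trim, collapse repeated spaces, fix casing.
clean["city"] = (
    clean["city"].str.strip().str.replace(r"\s+", " ", regex=True).str.title()
)

# Impute missing ages with the median age of the same customer segment.
segment_median_age = clean.groupby("segment")["age"].transform("median")
clean["age"] = clean["age"].fillna(segment_median_age)

# A simple automated validation check after cleaning.
assert clean["age"].notna().all()

print(clean)
```

Coercing bad dates to NaT rather than raising keeps the pipeline running while leaving the problem rows easy to find with `isna()`.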

Q 2. Describe a time you identified an unexpected pattern in a dataset. What did you do?

During an analysis of social media sentiment towards a new product launch, I noticed a significant, unexpected negative spike in sentiment around a specific time. Initial analysis suggested a correlation with an unrelated news event. However, further investigation revealed a minor bug in the product that impacted a small user segment and was being discussed heavily on social media. This wasn’t captured in traditional customer feedback channels. I immediately alerted the product team, which allowed for a swift bug fix and a proactive communication strategy to mitigate negative sentiment. This highlighted the power of data to uncover hidden issues not revealed by traditional methods.

Q 3. How do you handle missing data in a dataset?

Handling missing data is crucial. The best approach depends on the nature and extent of the missing data, as well as the analysis goals. Common techniques include:

- Deletion: Removing rows or columns with missing values. This is simple but can lead to significant information loss if many values are missing. This is best used only if the missing data is completely random and constitutes a small portion of the dataset.

- Imputation: Replacing missing values with estimated values. Methods include:

  - Mean/Median/Mode Imputation: Replacing missing values with the mean, median, or mode of the respective column. Simple but can distort the distribution if missingness is not random.

  - Regression Imputation: Predicting missing values using a regression model based on other variables.

  - K-Nearest Neighbors (KNN) Imputation: Imputing missing values based on the values of similar data points.

- Model-based techniques: Using algorithms like multiple imputation or maximum likelihood estimation which explicitly model the missing data mechanism.

The choice depends on the context. For example, in a medical study, simply deleting rows with missing data points could lead to biased results, so imputation or model-based approaches might be more suitable. However, in a large dataset with a small amount of missing data that is randomly distributed, simple deletion might be acceptable.
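To make the trade-offs concrete, here is a small sketch comparing deletion, median imputation, and KNN imputation using scikit-learn's `SimpleImputer` and `KNNImputer`. The age and income values are invented for illustration, and `n_neighbors=2` is an arbitrary choice.

```python
import numpy as np
from sklearn.impute import KNNImputer, SimpleImputer

# Hypothetical [age, income] rows: one young customer is missing income,
# one high-income customer is missing age.
X = np.array([
    [25.0, 50_000.0],
    [27.0, np.nan],
    [26.0, 52_000.0],
    [52.0, 110_000.0],
    [np.nan, 105_000.0],
    [50.0, 108_000.0],
])

# Deletion: keep only rows with no missing values (loses 2 of 6 rows here).
complete_rows = X[~np.isnan(X).any(axis=1)]

# Median imputation: every gap in a column gets that column's median.
median_filled = SimpleImputer(strategy="median").fit_transform(X)

# KNN imputation: each gap is filled from the 2 most similar rows.
knn_filled = KNNImputer(n_neighbors=2).fit_transform(X)

print("rows kept after deletion:", len(complete_rows))
print("median-imputed income for the 27-year-old:", median_filled[1, 1])
print("KNN-imputed income for the 27-year-old:", knn_filled[1, 1])
```

In this toy data the column median hands the 27-year-old an income typical of the older group, while the KNN estimate stays close to the two nearest customers by age. That is the kind of distortion the simple methods can introduce.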

Q 4. What are some common data visualization techniques you use and when are they most appropriate?

Data visualization is key to understanding data. My go-to techniques include:

- Histograms: Show the distribution of a single numerical variable. Ideal for understanding the frequency of different values and identifying skewness.

- Scatter plots: Show the relationship between two numerical variables. Helpful for identifying correlations and clusters.

- Box plots: Display the distribution of a numerical variable, highlighting median, quartiles, and outliers. Useful for comparing distributions across different groups.

- Bar charts: Compare categorical data. Ideal for visualizing counts or proportions across different categories.

- Heatmaps: Visualize correlation matrices or other tabular data. Great for spotting patterns in large datasets.

For instance, to analyze sales data, I’d use a bar chart to compare sales across different product categories and a scatter plot to explore the relationship between advertising spend and sales revenue. A heatmap could be used to see correlations between different product features.

Q 5. Compare and contrast different regression techniques.

Several regression techniques exist, each with strengths and weaknesses:

- Linear Regression: Models the relationship between a dependent variable and one or more independent variables assuming a linear relationship. Simple to interpret, but can be inaccurate if the relationship is non-linear.

- Polynomial Regression: Extends linear regression to model non-linear relationships using polynomial terms. More flexible than linear regression, but can overfit if the degree of the polynomial is too high.

- Logistic Regression: Predicts the probability of a categorical dependent variable (usually binary). Widely used in classification problems.

- Ridge Regression and Lasso Regression: Regularized versions of linear regression that address multicollinearity and prevent overfitting by adding penalty terms. Useful when dealing with many predictors.

The choice depends on the nature of the data and the research question. If the relationship between variables is linear, linear regression might suffice. If the relationship is non-linear, polynomial regression or other non-linear methods might be more appropriate. Logistic regression is used when predicting a categorical outcome.
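As a rough illustration of how regularization changes the fit, the sketch below trains ordinary least squares, Ridge, and Lasso with scikit-learn on synthetic data that contains two nearly collinear predictors and one irrelevant predictor. The data-generating process and the `alpha` values are assumptions chosen only for demonstration.

```python
import numpy as np
from sklearn.linear_model import Lasso, LinearRegression, Ridge

rng = np.random.default_rng(42)
n = 200

x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.05, size=n)  # nearly collinear with x1
x3 = rng.normal(size=n)                   # unrelated to the target
X = np.column_stack([x1, x2, x3])
y = 3.0 * x1 + rng.normal(scale=0.5, size=n)

models = {
    "ols": LinearRegression(),
    "ridge": Ridge(alpha=1.0),   # L2 penalty shrinks coefficients
    "lasso": Lasso(alpha=0.1),   # L1 penalty can push coefficients to zero
}
coefs = {name: model.fit(X, y).coef_ for name, model in models.items()}

for name, coef in coefs.items():
    print(f"{name:>5}: {np.round(coef, 3)}")
```

With collinear inputs, the unregularized coefficients for `x1` and `x2` tend to be unstable, while the penalized fits pull them toward smaller values.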

Q 6. How do you determine the appropriate statistical test for a given dataset and hypothesis?

Selecting the right statistical test hinges on the type of data (categorical, numerical), the research question (comparing means, testing associations), and the number of groups being compared. A step-by-step process helps:

- Define the Hypothesis: Clearly state the null and alternative hypotheses.

- Identify the Data Type: Are your variables categorical or numerical?

- Determine the Number of Groups: Are you comparing two groups or more?

- Consider the Relationship between Variables: Are you testing for differences or associations?

Examples:

- Comparing means of two independent groups: Independent samples t-test

- Comparing means of more than two independent groups: ANOVA

- Comparing means of two dependent groups: Paired samples t-test

- Testing the association between two categorical variables: Chi-square test

- Testing the association between two numerical variables: Correlation analysis

Choosing the wrong test can lead to inaccurate conclusions. Carefully considering these factors is essential for a valid statistical analysis. Consult statistical resources to ensure the correct test is selected for your specific situation.
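The mapping from scenario to test listed above can be tried out with `scipy.stats`. The measurements below are made up, and the sketch skips assumption checks (normality, equal variances, expected cell counts) that a real analysis would need.

```python
from scipy import stats

# Hypothetical measurements for three independent groups.
group_a = [12.1, 11.8, 12.5, 12.0, 11.9, 12.3]
group_b = [13.0, 13.4, 12.9, 13.2, 13.5, 13.1]
group_c = [12.6, 12.4, 12.8, 12.7, 12.5, 12.9]

# Two independent groups: independent samples t-test.
t_independent = stats.ttest_ind(group_a, group_b)

# More than two independent groups: one-way ANOVA.
anova = stats.f_oneway(group_a, group_b, group_c)

# Two dependent (paired) measurements on the same subjects: paired t-test.
before = [72, 75, 71, 78, 74, 73]
after = [70, 72, 70, 75, 72, 71]
t_paired = stats.ttest_rel(before, after)

# Two categorical variables, as a 2x2 table of counts: chi-square test.
table = [[30, 10], [15, 25]]
chi2, chi2_p, dof, expected = stats.chi2_contingency(table)

# Two numerical variables: Pearson correlation.
x = [1, 2, 3, 4, 5, 6]
y = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2]
r, r_p = stats.pearsonr(x, y)

print("independent t-test p-value:", t_independent.pvalue)
print("ANOVA p-value:", anova.pvalue)
print("paired t-test p-value:", t_paired.pvalue)
print("chi-square p-value:", chi2_p, "dof:", dof)
print("Pearson r:", r)
```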

Q 7. Explain the concept of outliers and how you would address them in your analysis.

Outliers are data points that significantly deviate from the rest of the data. They can be caused by errors in data collection, genuine unusual events, or simply natural variation. Addressing outliers depends on their nature and the impact on analysis:

- Identification: Use box plots, scatter plots, or statistical methods like the Z-score (values more than 3 standard deviations from the mean) to identify outliers.

- Investigation: Examine outliers to understand their cause. Are they errors? Are they meaningful data points that reflect real variability?

- Treatment:

  - Removal: Only remove outliers if they are clearly errors. Document the reasons for removal.

  - Transformation: Apply transformations (e.g., log transformation) to reduce the influence of outliers.

  - Winsorizing or Trimming: Cap extreme values at a chosen percentile (winsorizing, e.g., replacing values above the 95th percentile with the 95th-percentile value) or drop them entirely (trimming).

  - Robust methods: Use statistical methods that are less sensitive to outliers (e.g., median instead of mean).

For instance, in analyzing real estate prices, a single property priced extremely high might be an outlier. I’d investigate—was it a data entry error or a genuinely unique property? If it’s an error, I’d correct it. If genuine, I might use a robust statistical method (e.g., median price) or transformation to lessen its disproportionate influence on the analysis.
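A minimal sketch of the identification step, using only Python's standard library. The prices are hypothetical (in thousands), and the 1.5 × IQR fences are the conventional box-plot rule rather than anything specific to this example.

```python
import statistics

# Hypothetical sale prices in thousands; the last value looks suspicious.
prices = [250, 260, 245, 255, 270, 265, 240, 258,
          262, 248, 252, 268, 256, 244, 261, 2500]

# Z-score rule: flag values more than 3 standard deviations from the mean.
mean = statistics.mean(prices)
stdev = statistics.stdev(prices)
z_outliers = [p for p in prices if abs(p - mean) / stdev > 3]

# IQR rule (the one box plots use): flag values beyond 1.5 * IQR from the quartiles.
# In very small samples no point can reach |z| > 3, so this is a useful cross-check.
q1, _, q3 = statistics.quantiles(prices, n=4)
iqr = q3 - q1
low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
iqr_outliers = [p for p in prices if p < low_fence or p > high_fence]

# Robust summary: the median barely moves, while the mean is dragged upward.
median = statistics.median(prices)

print("z-score outliers:", z_outliers)
print("IQR outliers:", iqr_outliers)
print("mean:", mean, "median:", median)
```

Flagging is only the first step; whether a flagged point is corrected, kept, or down-weighted still depends on the investigation described above.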

Q 8. How do you interpret correlation coefficients?

Correlation coefficients measure the strength and direction of a linear relationship between two variables. They range from -1 to +1. A coefficient of +1 indicates a perfect positive correlation (as one variable increases, the other increases proportionally), -1 indicates a perfect negative correlation (as one increases, the other decreases proportionally), and 0 indicates no linear correlation.

For example, a correlation coefficient of 0.8 between ice cream sales and temperature suggests a strong positive correlation: higher temperatures tend to lead to higher ice cream sales. A coefficient of -0.6 between the number of hours spent exercising and body fat percentage suggests a moderate negative correlation: more exercise is associated with less body fat. It’s crucial to remember that correlation doesn’t imply causation; a correlation might be due to a third, unmeasured variable.

Interpreting the strength of the correlation is subjective, but a commonly used guideline for the absolute value of the coefficient is: 0.0-0.3 (weak), 0.3-0.7 (moderate), and 0.7-1.0 (strong). Always consider the context and visualize the data (scatter plot) alongside the coefficient for a comprehensive understanding.
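For reference, the Pearson coefficient can be computed directly from its definition. The sketch below uses invented temperature and sales figures, and the helper names `pearson_r` and `strength` are hypothetical. The thresholds mirror the guideline above and apply to the size of the coefficient regardless of sign.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation: covariance divided by the product of the spreads."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def strength(r):
    """Label a coefficient using the rough weak/moderate/strong guideline."""
    size = abs(r)
    if size < 0.3:
        return "weak"
    if size < 0.7:
        return "moderate"
    return "strong"


# Hypothetical daily temperature (°C) and ice cream sales (units).
temperature = [18, 21, 24, 27, 30, 33]
sales = [120, 135, 160, 170, 200, 210]

r = pearson_r(temperature, sales)
print(f"r = {r:.3f} ({strength(r)} positive correlation)")
```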

Q 9. Describe your experience with different data mining techniques.

My experience encompasses a wide range of data mining techniques, including clustering, classification, association rule mining, and regression. In a previous role, I used k-means clustering to segment customers based on their purchasing behavior, revealing distinct groups with different needs and preferences. This allowed for targeted marketing campaigns and improved customer retention. I’ve also applied decision tree classification to predict customer churn, leveraging features like customer tenure and engagement metrics. Further, I’ve utilized the Apriori algorithm for association rule mining to discover frequently purchased product combinations, leading to optimized product placement and cross-selling strategies. Finally, I have extensive experience with various regression techniques (linear, logistic, polynomial) for predictive modeling tasks.

I am proficient in using tools like Python’s scikit-learn library and R’s caret package for implementing and evaluating these techniques. My approach always involves careful data preprocessing, feature engineering, and model selection to ensure robustness and accuracy.
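To give a feel for what association rule mining looks for, here is a deliberately simplified sketch that counts how often pairs of products appear in the same basket and keeps the pairs above a support threshold. It is not the full Apriori algorithm (there is no level-wise candidate pruning), and the baskets and the `min_support` value are hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical shopping baskets.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer"},
    {"milk", "diapers", "beer"},
    {"bread", "milk", "diapers"},
    {"bread", "milk", "beer"},
]
min_support = 0.6  # a pair must appear in at least 60% of baskets

# Count every unordered pair of items that occurs together in a basket.
pair_counts = Counter()
for basket in transactions:
    for pair in combinations(sorted(basket), 2):
        pair_counts[pair] += 1

# Support = share of baskets containing the pair.
frequent_pairs = {
    pair: count / len(transactions)
    for pair, count in pair_counts.items()
    if count / len(transactions) >= min_support
}

print(frequent_pairs)
```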

Q 10. Explain the difference between predictive and descriptive analytics.

Descriptive analytics summarizes past data to understand what happened. Think of it as looking in the rearview mirror. It involves techniques like calculating summary statistics (mean, median, standard deviation), creating visualizations (charts, graphs), and generating reports. For example, analyzing past sales data to identify the best-selling product in a given month is descriptive analytics.

Predictive analytics uses historical data to forecast future outcomes. It’s like using the map to plan your journey. It leverages machine learning algorithms to build models that predict future events. Predicting customer churn or future sales based on historical trends is an example of predictive analytics.

In short, descriptive analytics tells you what happened, while predictive analytics tells you what might happen.

Q 11. How would you approach building a predictive model for customer churn?

Building a predictive model for customer churn involves a systematic approach. First, I’d gather historical data, including customer demographics, purchase history, engagement metrics (website visits, app usage), customer service interactions, and churn status. Then, I’d perform exploratory data analysis to understand the data, identify patterns, and handle missing values. Feature engineering would be crucial here—creating new features from existing ones that might be more predictive (e.g., average purchase frequency, days since last interaction).

Next, I would select appropriate machine learning algorithms, potentially starting with simpler models like logistic regression and then exploring more complex models like support vector machines (SVMs), random forests, or gradient boosting machines. I’d split the data into training, validation, and testing sets. The model would be trained on the training set, tuned on the validation set (to avoid overfitting), and finally evaluated on the testing set to assess its performance on unseen data. Model performance metrics such as precision, recall, F1-score, and AUC would be used to compare models and select the best one.

Finally, I’d deploy the chosen model, ensuring it’s easily integrated into the existing systems for real-time predictions and monitoring. Regularly reassessing the model’s performance and retraining it with updated data are vital for maintaining accuracy.
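The train/validation/test workflow described above might look like the following in scikit-learn. Everything here is an assumption made for illustration: the data is synthetic, the two features (`tenure_months`, `days_since_last_visit`) are stand-ins for real engineered features, and logistic regression is just the simple starting model mentioned earlier.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic customers: shorter tenure and longer inactivity raise churn risk.
rng = np.random.default_rng(0)
n = 2000
tenure_months = rng.uniform(1, 60, n)
days_since_last_visit = rng.uniform(0, 90, n)
logit = 0.5 - 0.08 * tenure_months + 0.04 * days_since_last_visit
churned = (rng.random(n) < 1 / (1 + np.exp(-logit))).astype(int)
X = np.column_stack([tenure_months, days_since_last_visit])

# 60% train, 20% validation, 20% test, stratified on the churn label.
X_train, X_rest, y_train, y_rest = train_test_split(
    X, churned, test_size=0.4, stratify=churned, random_state=0
)
X_val, X_test, y_val, y_test = train_test_split(
    X_rest, y_rest, test_size=0.5, stratify=y_rest, random_state=0
)

# Tune the regularization strength on the validation set only.
best_auc, best_model = -1.0, None
for C in (0.01, 0.1, 1.0, 10.0):
    model = make_pipeline(StandardScaler(), LogisticRegression(C=C))
    model.fit(X_train, y_train)
    val_auc = roc_auc_score(y_val, model.predict_proba(X_val)[:, 1])
    if val_auc > best_auc:
        best_auc, best_model = val_auc, model

# The test set is touched once, at the end, to estimate performance on unseen data.
test_auc = roc_auc_score(y_test, best_model.predict_proba(X_test)[:, 1])
print(f"validation AUC: {best_auc:.3f}, test AUC: {test_auc:.3f}")
```

Keeping the test set out of the tuning loop is what makes the final AUC an honest estimate. More complex models can be slotted into the same loop for comparison.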

Q 12. How do you evaluate the performance of a machine learning model?

Evaluating a machine learning model depends on the type of problem (classification, regression, clustering). For classification, common metrics include:

- Accuracy: The overall correctness of predictions.

- Precision: The proportion of correctly predicted positive instances out of all predicted positive instances.

- Recall (Sensitivity): The proportion of correctly predicted positive instances out of all actual positive instances.

- F1-score: The harmonic mean of precision and recall.

- AUC (Area Under the ROC Curve): Measures the model’s ability to distinguish between classes.

For regression problems, common metrics include:

- Mean Squared Error (MSE): Measures the average squared difference between predicted and actual values.

- Root Mean Squared Error (RMSE): The square root of MSE, providing a measure in the original units.

- R-squared: Represents the proportion of variance in the dependent variable explained by the model.

Beyond these, techniques like cross-validation help confirm that the model generalizes to unseen data and help detect overfitting. Visualizations like confusion matrices and ROC curves are also helpful in understanding model performance.
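The definitions above are easy to verify by hand. This sketch computes the classification metrics from confusion-matrix counts and the regression metrics from raw errors, using small made-up label and prediction lists and only the standard library.

```python
import math

# Hypothetical binary labels (1 = positive class) and model predictions.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

accuracy = (tp + tn) / len(pairs)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean

# Hypothetical regression targets and predictions.
actual = [3.0, 5.0, 7.0]
predicted = [2.0, 5.0, 9.0]
mse = sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)
rmse = math.sqrt(mse)

print(f"accuracy={accuracy}, precision={precision}, recall={recall}, f1={f1}")
print(f"mse={mse:.3f}, rmse={rmse:.3f}")
```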

Q 13. What are some common challenges in working with big data?

Working with big data presents several challenges:

- Volume: The sheer size of data makes processing, storage, and analysis computationally intensive. Distributed computing frameworks like Hadoop and Spark are often needed.

- Velocity: Data arrives at a high speed, requiring real-time or near real-time processing capabilities. Stream processing technologies are essential.

- Variety: Data comes in various formats (structured, semi-structured, unstructured), demanding flexible processing methods capable of handling different data types.

- Veracity: Data quality can be inconsistent, requiring careful cleaning, validation, and error handling.

- Value: Extracting meaningful insights from vast amounts of data is a challenge; effective data mining and analysis techniques are vital.

Addressing these challenges often necessitates a combination of specialized tools, technologies, and expertise in distributed computing, data engineering, and machine learning.

Q 14. What is your experience with SQL and data manipulation?

I have extensive experience with SQL and data manipulation. I’m proficient in writing complex queries to extract, transform, and load (ETL) data from various sources. I’ve worked with relational databases like MySQL, PostgreSQL, and SQL Server, as well as NoSQL databases like MongoDB. My SQL skills include:

- Data retrieval: Using SELECT, JOIN, WHERE, GROUP BY, and HAVING clauses to extract specific data.

- Data manipulation: Employing functions like SUM(), AVG(), COUNT(), and DATE() for calculations and data transformations.

- Data modification: Using UPDATE and DELETE statements to modify or remove data.

- Data definition: Creating and managing database tables using CREATE TABLE, ALTER TABLE, and other DDL commands.

For example, I’ve used SQL to join customer data with transaction data to analyze purchasing patterns, creating visualizations showing customer lifetime value and identifying high-value customers. I can also efficiently handle large datasets using optimized queries and indexing strategies. Beyond SQL, I’m familiar with data manipulation tools and languages like Python’s Pandas library, enabling me to process and analyze data efficiently in diverse formats.

-- Total spend per customer, highest spenders first
SELECT customers.customer_id, SUM(purchase_amount) AS total_spent
FROM customers
JOIN transactions ON customers.customer_id = transactions.customer_id
GROUP BY customers.customer_id
ORDER BY total_spent DESC;

Q 15. How familiar are you with statistical software packages (e.g., R, Python, SAS)?

I’m highly proficient in several statistical software packages. My primary tools are R and Python, which I use extensively for data manipulation, statistical modeling, and visualization. I’m also familiar with SAS, particularly its strengths in handling large datasets and its robust procedures for statistical analysis. My experience spans a wide range of packages within these environments; for example, in R, I regularly utilize packages like dplyr for data manipulation, ggplot2 for visualization, and caret for machine learning. In Python, I leverage libraries such as pandas, NumPy, scikit-learn, and matplotlib for similar purposes. This diverse skill set allows me to adapt to various project needs and choose the most appropriate tool for the task at hand.

Q 16. How do you handle conflicting data from multiple sources?

Handling conflicting data from multiple sources requires a systematic approach. First, I carefully examine the metadata of each dataset to understand its origin, collection methods, and potential biases. Then, I identify the nature of the conflict – are the discrepancies minor variations or significant disagreements? For minor inconsistencies, I might use techniques like averaging or weighted averaging, giving more weight to data from sources deemed more reliable. For major conflicts, I investigate the underlying reasons. This might involve contacting data providers for clarification, examining data quality indicators, or conducting further data validation. If inconsistencies remain irreconcilable, I document them clearly and discuss their implications in my analysis. Sometimes, I may need to exclude the conflicting data points if they are few and their impact is deemed minimal. Ultimately, transparency and thorough documentation are key to handling conflicting data responsibly.
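For the minor-inconsistency case, reliability-weighted averaging can be sketched as follows. The source names, the reported values, the reliability weights, and the 10% tolerance for calling a conflict "minor" are all hypothetical choices made for illustration.

```python
def weighted_average(values, weights):
    """Average of values where more reliable sources count for more."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Hypothetical monthly revenue (in thousands) for one account, as reported
# by three systems, each paired with an assumed reliability weight.
reports = {
    "crm": (102.0, 0.5),
    "billing": (98.0, 0.3),
    "survey": (100.0, 0.2),
}
values = [value for value, _ in reports.values()]
weights = [weight for _, weight in reports.values()]

# Treat the conflict as minor only if the sources agree within 10% of their mean.
spread = max(values) - min(values)
is_minor = spread / (sum(values) / len(values)) < 0.10

if is_minor:
    reconciled = weighted_average(values, weights)
    print(f"reconciled value: {reconciled:.1f}")
else:
    reconciled = None  # major conflict: investigate instead of averaging
    print("sources disagree too much; escalate for investigation")
```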

Q 17. Describe a time you had to explain complex data analysis findings to a non-technical audience.

In a previous project analyzing customer churn for a telecommunications company, I needed to present complex statistical findings to the executive team, most of whom lacked a deep understanding of statistics. Instead of using technical jargon, I used clear and concise language, focusing on the key findings and their business implications. I employed visualizations like bar charts and line graphs to illustrate trends and patterns in customer churn. For example, instead of saying “The logistic regression model showed a statistically significant relationship between customer tenure and churn,” I explained it as: “Customers who have been with us for less than six months are significantly more likely to switch providers.”

I also created a narrative around the data, explaining the “story” it told, focusing on actionable insights. This involved highlighting the most important drivers of churn, such as issues with customer service and insufficient data plans, and suggesting specific strategies to address these issues. The presentation resulted in actionable changes to the company’s customer retention strategies.

Q 18. How do you identify and address bias in your data analysis?

Addressing bias is crucial for reliable data analysis. My approach starts with understanding the potential sources of bias – sampling bias (e.g., non-representative sample), measurement bias (e.g., flawed survey questions), reporting bias (e.g., selective reporting of results), and confirmation bias (e.g., interpreting data to support pre-existing beliefs). I employ several strategies to mitigate these biases. Firstly, I carefully scrutinize data collection methods and sample design to identify potential sources of bias. Secondly, I use rigorous statistical techniques to account for known biases. For example, I might use propensity score matching to control for confounding variables or employ regression models to account for systematic differences between groups. Finally, I document all potential biases and their potential impact on my results, ensuring complete transparency in my analysis.

Q 19. What are your preferred methods for data storytelling?

My preferred methods for data storytelling prioritize clarity and impact. I believe in using a combination of visualizations and narratives to convey complex information effectively. I frequently use charts, graphs, and dashboards to present key findings visually. I also employ narrative techniques to provide context, explain trends, and highlight important insights. For example, I might start with a compelling hook, followed by a clear explanation of the problem, the data used to analyze it, the methods employed, and, finally, the key conclusions and their implications. I often use analogies or metaphors to make complex concepts more relatable to the audience. The goal is not just to present the data but to engage the audience and lead them to a better understanding of the underlying message.

Q 20. What is your approach to identifying root causes from data analysis findings?

Identifying root causes from data analysis findings requires a structured approach. I often use techniques like the “5 Whys” – repeatedly asking “why” to drill down to the underlying cause of a problem. I also use data mining techniques like association rule mining to uncover relationships between variables and identify potential root causes. Additionally, I might employ statistical process control (SPC) charts to monitor processes over time and identify the point at which problems emerged. Combining quantitative analysis with qualitative data, such as interviews or surveys, can provide a more holistic understanding of the root causes. The process is iterative – initial findings often lead to further investigation and refinement of hypotheses.

Q 21. How do you ensure the accuracy and reliability of your data analysis results?

Ensuring the accuracy and reliability of my data analysis results is paramount. This involves several key steps: Firstly, I meticulously check the data for errors, inconsistencies, and outliers. Secondly, I utilize appropriate statistical methods and validate them. This includes ensuring that the chosen statistical techniques are appropriate for the type of data and the research question. Thirdly, I meticulously document my entire analytical process – from data cleaning to model building and interpretation. This allows for reproducibility and verification of results by others. Finally, I cross-validate my results using multiple methods, comparing findings from different statistical models or datasets. By employing these rigorous methods, I strive for transparency, reproducibility, and robust findings.

Q 22. Describe your process for validating data analysis findings.

Validating data analysis findings is crucial to ensuring the reliability and accuracy of your conclusions. My process involves a multi-step approach focusing on both the data and the analysis itself. I begin by rigorously checking data quality, looking for inconsistencies, outliers, and missing values. This often involves using descriptive statistics and data visualization to identify potential problems. For instance, I might create histograms to detect skewed distributions or scatter plots to identify correlations.

Next, I validate the analytical methods used. This means verifying the appropriateness of statistical tests, ensuring the assumptions underlying those tests are met, and checking for any potential biases in the methodology. For example, if I’m using regression analysis, I carefully examine the residuals to ensure they are normally distributed and randomly scattered.

Finally, I perform sensitivity analysis to assess the robustness of my findings. This involves changing assumptions, input parameters, or analytical techniques and observing the impact on the results. If the conclusions remain consistent across different scenarios, it increases confidence in the validity of the findings. A crucial aspect is documenting each step of this process meticulously, so the entire analysis is transparent and reproducible.

Q 23. How do you prioritize different analytical tasks when facing tight deadlines?

Prioritizing analytical tasks under tight deadlines requires a structured approach. My strategy involves a combination of urgency, importance, and impact assessment. I use a prioritization matrix, often a simple Eisenhower Matrix (urgent/important), to visually categorize tasks. Tasks that are both urgent and important get immediate attention. For example, resolving a critical production issue that’s impacting revenue would fall into this category.

Tasks that are important but not urgent are scheduled for later, but I ensure they are included in the plan. These could include developing a new forecasting model or performing a thorough competitor analysis. I delegate tasks where appropriate, based on team members’ skills and availability. This ensures efficient use of resources and accelerates the completion of the overall project. Finally, I use project management tools to track progress, manage dependencies between tasks, and maintain accountability. This helps me keep everything on schedule and prevent bottlenecks.

Q 24. Explain the concept of A/B testing and its applications.

A/B testing, also known as split testing, is a controlled experiment where two versions (A and B) of a webpage, application, or other element are shown to different user groups to determine which performs better. The key is that only one variable is changed between the two versions, allowing for clear attribution of the results. This ensures that any observed differences are directly attributable to the modification and not other confounding factors.

Applications are vast. In e-commerce, A/B testing might compare different button colors or product placement to see which drives higher conversion rates. In marketing, different subject lines or email designs can be tested to optimize open and click-through rates. In software development, A/B testing can compare different UI designs to improve user experience and engagement. The core principle is to use data-driven decision-making to optimize user experience, marketing strategies, product features, and other important aspects of a business.
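One common way to judge an A/B test on conversion rates is a two-proportion z-test. The sketch below uses the standard library only. The visitor and conversion counts are invented, and the helper name `two_proportion_z_test` is hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical experiment: 5,000 visitors per variant.
z, p_value = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=260, n_b=5000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

A small p-value suggests the difference in conversion is unlikely to be chance alone. Sample size and test duration should still be fixed before the experiment starts, so that results are not checked repeatedly until they look significant.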

Q 25. How would you use data to improve a business process?

Data can significantly improve business processes by identifying inefficiencies, optimizing workflows, and informing strategic decisions. A common example is supply chain optimization. By analyzing sales data, inventory levels, and lead times, you can identify patterns and predict future demand more accurately. This allows for better inventory management, reducing warehousing costs and minimizing stockouts.

Another example is improving customer service. Analyzing customer feedback, support tickets, and call center data can highlight common problems and areas for improvement. This information can be used to train staff, streamline processes, and enhance the customer experience. Similarly, analyzing website analytics can reveal user behavior patterns, identify friction points in the conversion funnel, and inform website design improvements. In essence, data-driven insights inform adjustments and upgrades at every stage, from production and logistics to sales and customer relations, resulting in a smoother and more efficient operation.

Q 26. Describe a time you made a significant impact using data analysis.

In a previous role, our marketing team was struggling to optimize campaign spending. We were allocating resources across multiple channels without a clear understanding of their ROI. I analyzed campaign data, including impressions, clicks, conversions, and cost-per-acquisition (CPA) across different platforms. I used regression analysis to identify the key factors driving conversion rates and then developed a predictive model to forecast the performance of different channels.

The results revealed that one particular social media platform was significantly underperforming compared to others. Based on these findings, I recommended reallocating budget towards more efficient channels. The subsequent campaigns saw a 20% increase in conversion rates and a 15% reduction in CPA, leading to significant cost savings and a substantial boost to marketing ROI. This project highlighted the power of data-driven decision-making in resource allocation and achieving optimal campaign performance.

Q 27. What are some ethical considerations in data analysis?

Ethical considerations in data analysis are paramount. Privacy is a major concern. Ensuring data is collected, stored, and used responsibly is vital, adhering to regulations like GDPR and CCPA. This includes obtaining informed consent, anonymizing data where possible, and implementing robust security measures to prevent data breaches.

Another key aspect is bias. Algorithms and datasets can reflect existing societal biases, leading to unfair or discriminatory outcomes. It’s crucial to be aware of potential biases and actively work to mitigate them during the entire analytical process, from data collection to model deployment. Transparency and accountability are equally critical. Clearly communicating the methods used, limitations of the analysis, and potential implications of the findings is crucial to building trust and avoiding misuse of results.

Q 28. What are your strategies for continuous learning in the field of data analysis?

Continuous learning is vital in the rapidly evolving field of data analysis. My strategies include actively pursuing online courses and certifications offered by platforms like Coursera, edX, and Udacity. I also regularly attend industry conferences and webinars to stay updated on the latest tools, techniques, and best practices.

I actively engage with the data science community by contributing to online forums, attending meetups, and participating in Kaggle competitions. This provides opportunities to learn from others, collaborate on projects, and gain practical experience. I also dedicate time to reading research papers, journals, and industry blogs to stay informed about cutting-edge developments in the field. By combining formal learning with practical application and community engagement, I ensure my skills remain relevant and up to date.

Key Topics to Learn for Ability to analyze and interpret complex data sets Interview

- Data Cleaning and Preprocessing: Understanding techniques like handling missing values, outlier detection, and data transformation is crucial for accurate analysis. Practical application: Preparing a messy dataset for a regression model.

- Exploratory Data Analysis (EDA): Mastering techniques to summarize and visualize data to identify patterns, trends, and anomalies. Practical application: Using histograms, scatter plots, and box plots to understand the distribution of variables and their relationships.

- Statistical Analysis: Proficiency in hypothesis testing, regression analysis, and other statistical methods to draw meaningful conclusions from data. Practical application: Determining the statistical significance of the relationship between two variables.

- Data Visualization: Creating clear and effective visualizations to communicate insights derived from data analysis. Practical application: Building interactive dashboards to present key findings to stakeholders.

- Data Interpretation and Storytelling: Translating data findings into actionable insights and communicating them effectively to both technical and non-technical audiences. Practical application: Presenting complex analytical results in a concise and understandable manner.

- Choosing the Right Analytical Tools: Demonstrating familiarity with relevant software and programming languages (e.g., Python with Pandas and Scikit-learn, R, SQL) and their application in data analysis. Practical application: Selecting the appropriate statistical test based on the data type and research question.

- Bias and Limitations: Understanding potential sources of bias in data and acknowledging limitations of analytical approaches. Practical application: Critically evaluating the reliability and generalizability of analytical findings.
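To make the "statistical significance of the relationship between two variables" item concrete, here is a minimal sketch using a permutation test on the Pearson correlation. The permutation test is chosen here only because it needs nothing beyond the standard library; the guide itself does not prescribe a specific test. The data and the function names `pearson_r` and `permutation_p_value` are assumptions for the example.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def permutation_p_value(xs, ys, n_permutations=2000, seed=0):
    """Two-sided p-value for the correlation via random permutations of ys."""
    rng = random.Random(seed)
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)
        if abs(pearson_r(xs, shuffled)) >= observed:
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


# Illustrative data: ad spend vs. sales, invented for the example.
ad_spend = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
sales = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 16.1, 18.0, 20.2]

r = pearson_r(ad_spend, sales)
p = permutation_p_value(ad_spend, sales)
print(f"r = {r:.3f}, permutation p-value = {p:.4f}")
```

The p-value estimates how often shuffled data would show a correlation at least as strong as the observed one. A small value suggests the relationship is unlikely to be due to chance alone, though it says nothing about causation.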

Next Steps

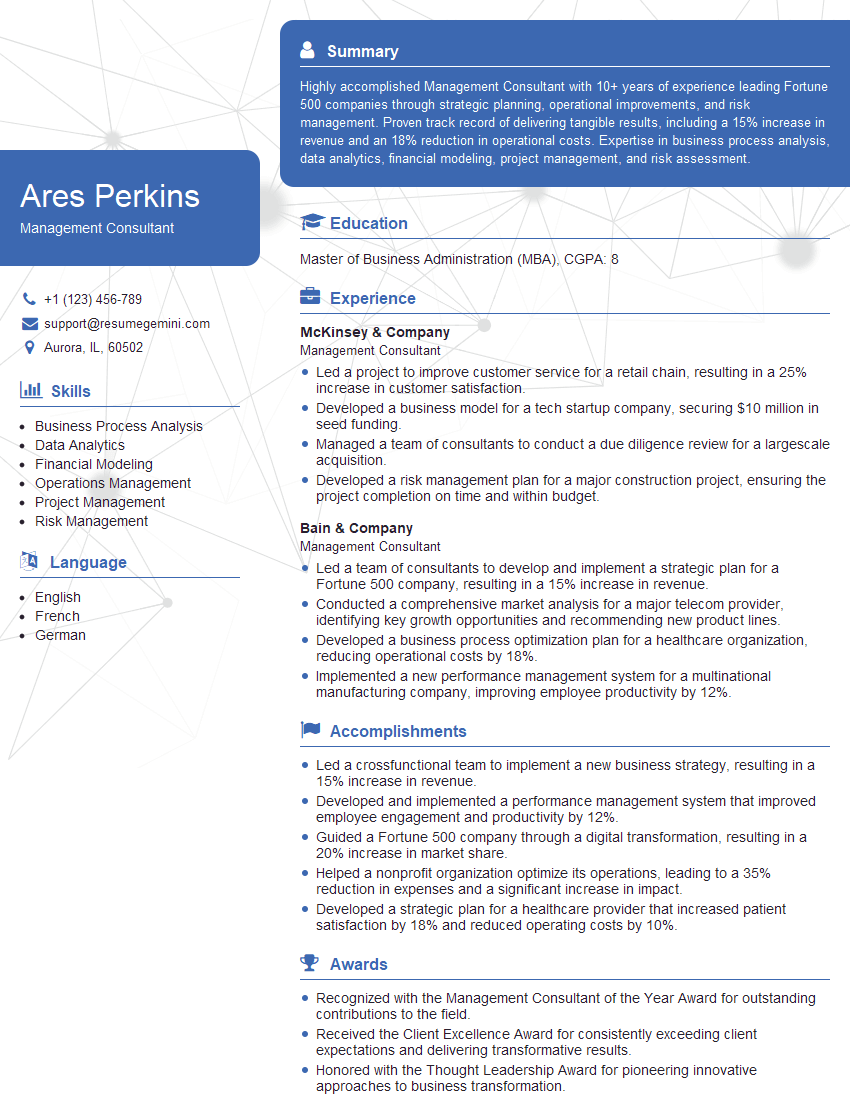

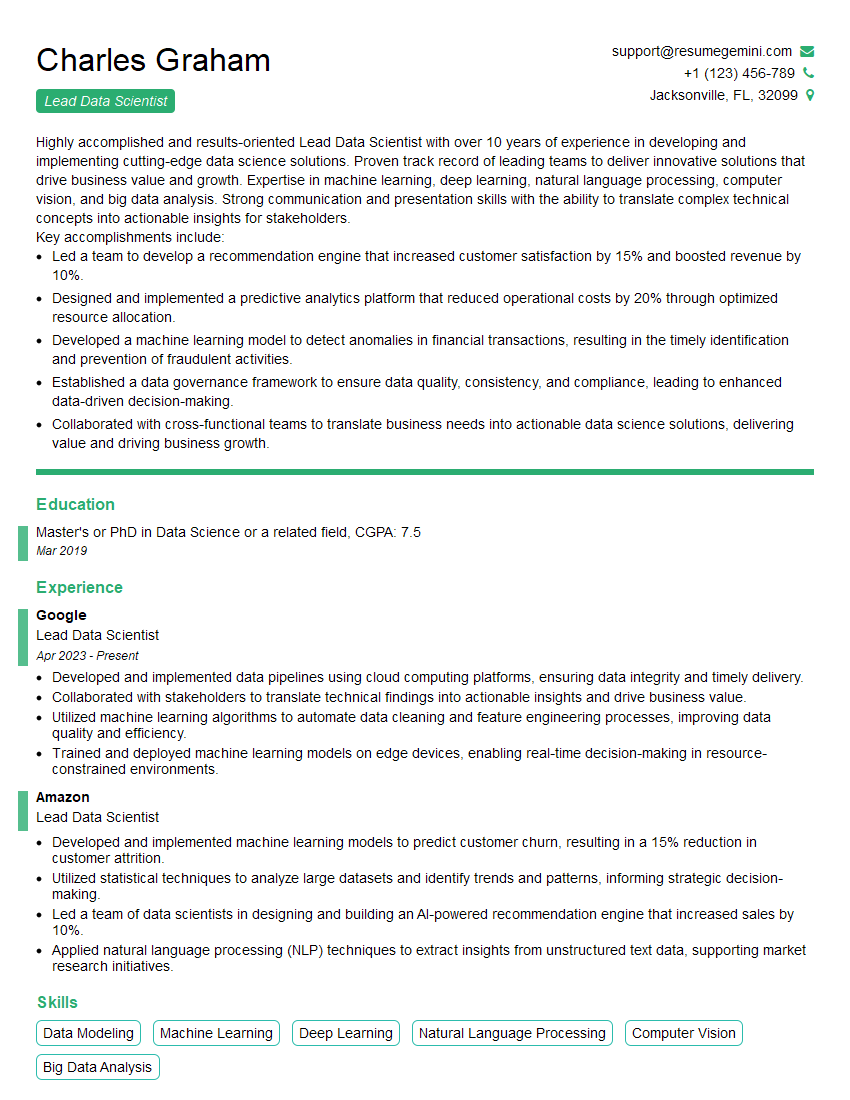

Mastering the ability to analyze and interpret complex data sets is paramount for career advancement in today’s data-driven world. It opens doors to exciting roles and higher earning potential across various industries. To significantly increase your job prospects, it’s essential to create a resume that effectively showcases your skills and experience. Build an ATS-friendly resume that highlights your accomplishments and quantifies your impact. ResumeGemini is a trusted resource to help you craft a professional and compelling resume. We provide examples of resumes tailored to highlight expertise in analyzing and interpreting complex data sets, helping you present your qualifications in the most impactful way possible.
