Cracking a skill-specific interview, like one for Advanced Knowledge of Cloud Computing and Data Management, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Advanced Knowledge of Cloud Computing and Data Management Interview

Q 1. Explain the difference between IaaS, PaaS, and SaaS.

IaaS, PaaS, and SaaS are three fundamental service models in cloud computing, differing primarily in the level of abstraction and control offered to the user. Think of it like this: you’re building a house.

- IaaS (Infrastructure as a Service): This is like renting the land and basic building materials. You are responsible for building the entire house – the foundation, walls, roof, everything. You have complete control but significant responsibility. Examples include renting virtual machines (VMs), storage, and networking from providers like AWS EC2, Azure Virtual Machines, or GCP Compute Engine. You manage the operating system, applications, and everything else.

- PaaS (Platform as a Service): This is like renting a pre-fabricated house frame. The foundation and basic structure are already in place. You focus on interior design and fitting out the house – installing plumbing, electrical wiring, and adding your personal touches. You manage your applications and data, but the underlying infrastructure (servers, operating systems, etc.) is managed by the provider. Examples include AWS Elastic Beanstalk, Azure App Service, and Google App Engine.

- SaaS (Software as a Service): This is like moving into a fully furnished and maintained apartment. You only need to bring your belongings and start living. You don’t manage any infrastructure or even the application; you just use it. Examples are Salesforce, Gmail, and Microsoft 365.

In essence, the higher the level of service (SaaS > PaaS > IaaS), the less control and responsibility you have, but the easier it is to get started and use.

Q 2. Describe your experience with different cloud providers (AWS, Azure, GCP).

I’ve had extensive experience with all three major cloud providers – AWS, Azure, and GCP – across various projects. My experience includes:

- AWS: I’ve used AWS extensively to build and deploy highly scalable microservices on EC2, ECS, and EKS. I’ve also used S3 for object storage, RDS for relational databases, and DynamoDB for NoSQL needs. I’m proficient with AWS services for monitoring and logging (CloudWatch), API activity auditing (CloudTrail), and access management (IAM).

- Azure: My Azure experience centers around building and managing virtual machines using Azure Virtual Machines, implementing Azure SQL Database for relational data, and leveraging Azure Cosmos DB for NoSQL document databases. I’ve also worked with Azure DevOps for CI/CD pipelines.

- GCP: On GCP, I’ve utilized Compute Engine for VM instances, Cloud SQL for relational databases, and Cloud Storage for object storage. I have experience in setting up and managing Kubernetes clusters using Google Kubernetes Engine (GKE) and working with BigQuery for large-scale data analytics.

I find each provider offers unique strengths; choosing the right one depends heavily on the specific project requirements, existing infrastructure, and team expertise. For instance, AWS often excels in sheer breadth of services, while Azure has strong integrations with Microsoft technologies, and GCP shines with its big data and machine learning offerings.

Q 3. How would you design a highly available and scalable data pipeline?

Designing a highly available and scalable data pipeline requires a robust architecture considering fault tolerance and elasticity. Here’s a sample design:

- Data Ingestion: Multiple sources feed data into the pipeline. Each source should have redundancy (e.g., two separate connections to each database). Consider using message queues (like Kafka or RabbitMQ) to buffer data and decouple ingestion from processing.

- Data Processing: Utilize distributed processing frameworks like Apache Spark or Apache Flink, which can handle large volumes of data and distribute the workload across multiple nodes. Implement fault tolerance mechanisms like checkpointing and retry mechanisms.

- Data Storage: Use a distributed storage system like HDFS, S3, or Azure Blob Storage to store the processed data. Ensure data is replicated across multiple availability zones to prevent data loss.

- Data Transformation (ETL): Employ tools like Apache Airflow or AWS Glue to orchestrate and monitor the ETL process. Break down complex ETL tasks into smaller, independent units to improve manageability and fault isolation.

- Monitoring and Alerting: Set up comprehensive monitoring using tools such as Prometheus, Grafana, or cloud provider-specific monitoring services (CloudWatch, Azure Monitor, Google Cloud Monitoring, formerly Stackdriver). Configure alerts to notify administrators of any anomalies or failures.

Scalability is achieved through distributed processing and storage. High availability comes from redundancy at each stage, automated failover, and self-healing mechanisms; no design can guarantee it outright, but these measures reduce the impact of any single failure. The entire pipeline should be designed with fault tolerance in mind, so that if one component fails, the system can continue to operate without significant disruption.
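The checkpointing and retry mechanisms mentioned above can be sketched in a few lines. This is a minimal illustration, not how Spark or Flink implement them: `run_with_retries`, `process_batches`, and `flaky_handle` are hypothetical names, and the checkpoint is a plain dictionary standing in for durable storage.

```python
import time

def run_with_retries(task, max_attempts=3, base_delay=0.01):
    """Call task(); on failure wait base_delay * 2**attempt, then retry."""
    for attempt in range(max_attempts):
        try:
            return task()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error
            time.sleep(base_delay * 2 ** attempt)

def process_batches(batches, handle, checkpoint):
    """Process batches in order, skipping those already checkpointed."""
    start = checkpoint.get("next_batch", 0)
    for index in range(start, len(batches)):
        run_with_retries(lambda: handle(batches[index]))
        checkpoint["next_batch"] = index + 1  # record progress after success
    return checkpoint

# Hypothetical handler that fails once, on its first attempt at batch "b".
calls = {"count": 0}
processed = []

def flaky_handle(batch):
    calls["count"] += 1
    if batch == "b" and calls["count"] == 2:
        raise RuntimeError("transient failure")
    processed.append(batch)

state = process_batches(["a", "b", "c"], flaky_handle, {})
print(processed, state)
```

Because progress is recorded only after a batch succeeds, a restarted run that is given the same checkpoint resumes at the first unfinished batch instead of reprocessing everything.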

Q 4. Explain your understanding of different database models (relational, NoSQL).

Relational and NoSQL databases represent distinct approaches to data modeling and management.

- Relational Databases (RDBMS): These databases organize data into tables with rows and columns, linked through relationships (e.g., foreign keys). They’re structured, enforce data integrity through schemas, and are ideal for applications requiring ACID (Atomicity, Consistency, Isolation, Durability) properties, like financial transactions. Examples include MySQL, PostgreSQL, Oracle, and SQL Server. They excel at complex queries and data relationships.

- NoSQL Databases: These databases offer a more flexible schema and are designed to handle large volumes of unstructured or semi-structured data, usually by scaling horizontally. They come in several types:

  - Key-value stores (Redis, Memcached): simple key-value pairs.

  - Document databases (MongoDB): data stored in JSON-like documents.

  - Column-family stores (Cassandra, HBase): suited to large datasets with many columns.

  - Graph databases (Neo4j): suited to representing relationships between data.

Choosing between RDBMS and NoSQL depends on the application’s requirements. RDBMS are suitable for applications needing strong consistency and data integrity, while NoSQL databases are better for high-volume, unstructured data, and horizontal scalability.
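To make the contrast concrete, here is a small sketch using Python's built-in `sqlite3` module for the relational side and a plain JSON document for the document-store side. The table and field names are illustrative assumptions, and a real document database such as MongoDB adds indexing and querying that a bare dictionary does not show.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE orders ("
    " id INTEGER PRIMARY KEY,"
    " customer_id INTEGER NOT NULL REFERENCES customers(id),"
    " total REAL NOT NULL)"
)
conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Ada')")
conn.execute("INSERT INTO orders (customer_id, total) VALUES (1, 42.5)")

# The schema rejects an order that points at a missing customer.
try:
    conn.execute("INSERT INTO orders (customer_id, total) VALUES (99, 10.0)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

# A join reassembles the related rows.
joined = conn.execute(
    "SELECT c.name, o.total FROM orders o JOIN customers c ON c.id = o.customer_id"
).fetchall()

# Document style: the same data as one self-contained record with no fixed schema.
document = {"customer": {"name": "Ada"}, "orders": [{"total": 42.5}], "tags": ["new"]}
serialized = json.dumps(document)
print(rejected, joined, serialized)
```

The relational version pays for its integrity checks with a fixed schema and joins; the document version can gain a new field such as `tags` at any time, but nothing stops inconsistent records from being written.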

Q 5. What are the common challenges in migrating data to the cloud?

Migrating data to the cloud presents various challenges:

- Data Volume and Velocity: Moving massive datasets can be time-consuming and resource-intensive. The speed of data transfer and processing needs careful planning.

- Data Format and Structure: Data may be in various formats requiring conversion or transformation before loading into cloud storage. Inconsistent data structures across different source systems also need to be addressed.

- Data Security and Compliance: Ensuring data security and meeting regulatory compliance standards during and after migration is crucial. Data encryption, access control, and audit trails must be established.

- Downtime and Business Continuity: Minimizing downtime during the migration process is essential. A well-defined migration plan with thorough testing is critical.

- Cost Optimization: Cloud migration costs can be significant if not managed effectively. Proper cost modeling and resource optimization are important.

- Integration with Existing Systems: Integrating cloud-based data with on-premises systems or other cloud platforms requires careful planning and potentially custom-built integration solutions.

Addressing these challenges involves a phased approach, thorough planning, and using appropriate migration tools and techniques.

Q 6. How do you ensure data security and compliance in the cloud?

Data security and compliance in the cloud are paramount. Here’s how to ensure them:

- Data Encryption: Employ encryption at rest (for data stored in cloud storage) and in transit (for data transferred over networks). Use strong encryption algorithms and regularly update encryption keys.

- Access Control: Implement the principle of least privilege, granting users only the necessary access rights. Use Identity and Access Management (IAM) services provided by cloud providers.

- Data Loss Prevention (DLP): Implement DLP solutions to monitor and prevent sensitive data from leaving the cloud environment.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration tests to identify vulnerabilities and ensure compliance with security standards.

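- Compliance Frameworks: Pursue the certifications and attestations relevant to your business, such as ISO 27001 certification or a SOC 2 audit report, and meet the regulations that apply to your data, such as HIPAA for health information. Note that HIPAA is a regulation to comply with rather than a certification to obtain. Together these demonstrate your commitment to data security and regulatory compliance.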

- Security Information and Event Management (SIEM): Use SIEM tools to centralize security logs and monitor for suspicious activities. This aids in incident detection and response.

- Vulnerability Management: Regularly scan for and address software vulnerabilities in your cloud infrastructure.

Proactive security measures are key. Regularly review and update security policies and procedures to adapt to evolving threats and compliance requirements.

Q 7. Describe your experience with data warehousing and ETL processes.

My experience with data warehousing and ETL processes includes designing, building, and maintaining data warehouses for various organizations. I’ve worked with both traditional ETL processes and modern cloud-based solutions.

- Data Warehousing: I’ve designed and implemented data warehouses using various technologies, including Snowflake, Amazon Redshift, and Google BigQuery. I have expertise in dimensional modeling and designing efficient star and snowflake schemas. I’m skilled in optimizing query performance and managing data warehouse capacity.

- ETL Processes: I’ve used ETL tools such as Informatica PowerCenter and cloud-based services like AWS Glue and Azure Data Factory to extract, transform, and load data into data warehouses, with Apache Kafka as the streaming layer for ingesting data continuously. I understand the importance of data quality, cleansing, and transformation to ensure data accuracy and consistency within the warehouse.

In practice, I focus on creating efficient and scalable ETL pipelines that can handle large volumes of data while maintaining data quality. I employ various techniques like data profiling, data validation, and error handling to ensure data integrity throughout the ETL process. Performance tuning and monitoring are also crucial aspects of my approach.

Q 8. What are your preferred tools for data visualization and analysis?

My preferred tools for data visualization and analysis depend on the project, but I typically combine tools that cover exploratory analysis, statistical modeling, and presentation. For interactive dashboards and exploratory analysis, I frequently use Tableau and Power BI. These tools excel at creating visually appealing dashboards that let stakeholders interact with the data and gain insights quickly. Their drag-and-drop interfaces simplify the process, making them well suited to collaborative projects and to presenting findings to non-technical audiences.

For more in-depth statistical modeling and analysis, I rely on Python with libraries like Pandas, NumPy, Matplotlib, and Seaborn. Pandas provides powerful data manipulation capabilities, while NumPy offers efficient numerical computation. Matplotlib and Seaborn create high-quality static, interactive, and animated visualizations. This combination allows for greater flexibility and control over the analytical process, particularly for tasks requiring complex statistical modeling or custom visualizations. Finally, I often use R for specific statistical analyses, especially when dealing with advanced statistical techniques not readily available in Python libraries.

For example, in a recent project analyzing customer churn, I used Power BI to build a dashboard showing key metrics like churn rate by customer segment. Then, I employed Python with Scikit-learn to build a predictive model to identify customers at high risk of churning, visualizing the model’s performance using Matplotlib.

Q 9. Explain your experience with big data technologies (Hadoop, Spark, etc.).

My experience with big data technologies encompasses both Hadoop and Spark ecosystems. I’ve worked extensively with Hadoop Distributed File System (HDFS) for storing and managing large datasets, utilizing its scalability and fault tolerance. I’ve leveraged MapReduce for processing large datasets in parallel, writing custom MapReduce jobs using Java and understanding its limitations regarding iterative algorithms.

However, for iterative tasks and near-real-time stream processing, Spark offers significant advantages. I’ve used Spark SQL for querying large datasets stored in various formats (Parquet, Avro) and implemented machine learning algorithms using Spark MLlib. Spark’s in-memory computation significantly speeds up processing, which is essential for many big data applications. I’m also proficient in PySpark for writing Spark applications in Python, which simplifies development and integration with other Python libraries.

For instance, in a project involving the analysis of web server logs, I employed Spark to process terabytes of data, aggregating user activity and identifying patterns in near real time. The speed and efficiency of Spark were crucial in providing these insights, something batch-oriented MapReduce couldn’t deliver effectively.
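The map, shuffle, and reduce phases that MapReduce and Spark distribute across a cluster can be illustrated on a single machine. The sketch below is plain Python, not Hadoop or PySpark code; the log format and the function names are assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical web server log lines: "<user> <method> <path>".
log_lines = [
    "alice GET /home",
    "bob GET /cart",
    "alice POST /checkout",
]

def map_phase(line):
    """Emit a (user, 1) pair for each log line."""
    user = line.split()[0]
    return (user, 1)

def shuffle(pairs):
    """Group values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Combine all values for one key into a single count."""
    return (key, sum(values))

mapped = [map_phase(line) for line in log_lines]
requests_per_user = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(requests_per_user)
```

In a real cluster the map and reduce calls run in parallel on different nodes; Spark's advantage for iterative work comes from keeping intermediate results like `mapped` in memory rather than writing them to disk between stages.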

Q 10. How do you handle data inconsistencies and anomalies?

Handling data inconsistencies and anomalies is a critical aspect of data management. My approach involves a multi-step process starting with data profiling and exploration to identify potential issues. I use descriptive statistics, data visualization, and anomaly detection algorithms to pinpoint inconsistencies and outliers.

For inconsistencies, I investigate the root cause: is it due to data entry errors, system glitches, or conflicting data sources? The solution depends on the nature of the inconsistency. For missing values, I may use imputation techniques such as mean/median imputation or k-Nearest Neighbors (KNN) imputation. For more significant or systematic errors, I may need to review the data sources, implement data validation rules, or cleanse the data manually.

Anomaly detection often uses statistical methods or machine learning algorithms. For instance, I might use the Isolation Forest algorithm to identify unusual data points and then investigate them manually to determine if they’re legitimate outliers or data errors. After identifying and addressing inconsistencies and anomalies, I rigorously validate the cleaned data to ensure accuracy and consistency.

In a recent project involving sensor data, I detected anomalous readings using a combination of statistical process control (SPC) charts and the Isolation Forest algorithm. Upon investigation, these anomalies were attributed to sensor malfunctions, which allowed us to address the equipment issues and avoid inaccurate conclusions.
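As a rough illustration of the profiling, imputation, and outlier steps, the sketch below uses only Python's `statistics` module. It substitutes the simple interquartile-range (IQR) rule for Isolation Forest, which would require scikit-learn, and the sensor readings are made-up values.

```python
import statistics

# Hypothetical temperature readings; None marks a missing value.
readings = [20.1, 19.8, None, 20.4, 20.0, 85.0, 19.9, 20.2]

# Median imputation: fill gaps with the median of the observed values.
observed = [r for r in readings if r is not None]
median = statistics.median(observed)
filled = [median if r is None else r for r in readings]

# IQR rule: flag points more than 1.5 * IQR outside the quartiles.
q1, _, q3 = statistics.quantiles(filled, n=4)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [r for r in filled if r < lower or r > upper]
print(filled, outliers)
```

The median is used rather than the mean because a single extreme reading would otherwise distort the imputed value. Flagged points still need manual review to decide whether they are errors or genuine events.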

Q 11. Describe your experience with different cloud storage solutions.

My experience with cloud storage solutions includes AWS S3, Azure Blob Storage, and Google Cloud Storage (GCS). I understand the nuances of each platform and choose the best option based on factors like cost, scalability, security, and specific project requirements.

AWS S3 offers excellent scalability and a wide range of features, including versioning and lifecycle management. I’ve used S3 for storing large datasets, backups, and application assets. Azure Blob Storage provides similar functionality with strong integration into the Azure ecosystem. GCS also offers comparable capabilities, often excelling in cost-effectiveness for certain workloads.

The selection depends on the bigger picture. If the project is heavily integrated with the AWS ecosystem, S3 is often the natural choice. If tighter integration with other Azure services is needed, Azure Blob Storage is preferable. Choosing a cloud storage provider involves careful consideration of all aspects of your data storage and management strategy.

Q 12. How do you optimize database performance?

Optimizing database performance requires a holistic approach encompassing several strategies. I start by analyzing query performance using tools like database explain plans. This identifies bottlenecks – slow queries or inefficient indexing.

Key optimization techniques include creating appropriate indexes to speed up data retrieval, optimizing database schemas to reduce data redundancy and improve query efficiency, and using query optimization techniques such as rewriting queries to use efficient join methods or using appropriate data types. Regular database maintenance, like running statistics updates and defragmentation, is also crucial. Additionally, database tuning parameters, such as buffer pool size and connection pooling, can be adjusted to optimize performance based on workload characteristics.

For example, in a project with a slow-performing SQL Server database, I identified a poorly performing query using the execution plan. By adding an index to the relevant columns, I reduced query execution time by over 80%. Proper indexing is often the low-hanging fruit that yields immediate and significant performance improvement.
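The effect of an index on a query plan can be reproduced with Python's built-in `sqlite3` module. This is a sketch on SQLite rather than SQL Server, so the plan text differs from a SQL Server execution plan; the `orders` table and index name are assumptions for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)

query = "SELECT * FROM orders WHERE customer_id = ?"

def plan(sql):
    """Return the human-readable detail column of SQLite's query plan."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (7,)).fetchall()
    return " | ".join(row[-1] for row in rows)

before = plan(query)  # without an index SQLite has to scan the whole table
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(query)   # with the index the plan names idx_orders_customer
print(before)
print(after)
```

The exact wording of the plan varies between SQLite versions, but the shift from a table scan to an index search is the same kind of change an execution plan reveals in other databases.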

Q 13. Explain your understanding of data modeling techniques.

Data modeling is crucial for building efficient and scalable databases. My understanding encompasses various techniques, including relational modeling (using ER diagrams), NoSQL modeling (document, key-value, graph), and dimensional modeling for data warehousing.

Relational modeling focuses on designing tables with clear relationships between them, ensuring data integrity and consistency. I use ER diagrams to visually represent the entities, attributes, and relationships within the database. NoSQL modeling offers flexible schemas better suited for unstructured or semi-structured data, often seen in big data applications. Choosing between relational and NoSQL depends on the specific needs of the application. Dimensional modeling structures data into fact tables and dimension tables, ideal for analytical processing and business intelligence.

For example, in designing a database for an e-commerce website, I’d use a relational model with tables for customers, products, orders, and order items, establishing relationships between them. For a social media application, a graph database might be preferable, modeling user relationships efficiently.
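Dimensional modeling is easier to picture with a tiny star schema. The sketch below, again using `sqlite3`, builds one fact table and two dimension tables with made-up names and data, then runs a typical analytical query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, month TEXT);
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    amount REAL
);
INSERT INTO dim_product VALUES (1, 'books'), (2, 'games');
INSERT INTO dim_date VALUES (1, '2024-01'), (2, '2024-02');
INSERT INTO fact_sales VALUES (1, 1, 10.0), (1, 2, 15.0), (2, 1, 30.0);
""")

# Analytical query: join the fact table to a dimension and aggregate a measure.
sales_by_category = conn.execute("""
    SELECT p.category, SUM(f.amount)
    FROM fact_sales f
    JOIN dim_product p ON p.product_id = f.product_id
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
print(sales_by_category)
```

The fact table holds the numeric measures and foreign keys, while the dimensions hold the descriptive attributes used for grouping and filtering, which keeps most analytical queries down to a single level of joins.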

Q 14. How do you manage data backups and recovery in the cloud?

Managing data backups and recovery in the cloud requires a robust and reliable strategy. My approach focuses on several key aspects: frequent backups to ensure minimal data loss, utilizing cloud-native backup services for ease of management and scalability, and implementing a recovery plan to quickly restore data in case of failure.

I typically rely on cloud-provided backup services such as AWS Backup, Azure Backup, or Google Cloud’s Backup and DR Service. These services automate the backup process, providing features like incremental backups, versioning, and lifecycle management. I also define a comprehensive recovery plan outlining the steps to restore data from backups, ensuring all teams are aware of the procedures. This includes defining recovery time objectives (RTOs), the maximum acceptable time to restore service, and recovery point objectives (RPOs), the maximum acceptable window of data loss. Regular testing of the backup and recovery plan is vital to verify its effectiveness and identify any potential weaknesses.

For instance, using AWS Backup, I would configure automated daily backups of a relational database hosted on Amazon RDS, storing the recovery points in a backup vault. The recovery plan would specify the steps to restore the database from the latest backup within the defined RTO and RPO.
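The link between backup frequency and the RPO comes down to simple arithmetic, sketched below. The function names and timestamps are hypothetical, and the check ignores restore duration, which would be measured against the RTO separately.

```python
from datetime import datetime, timedelta

def latest_backup_before(backups, failure_time):
    """Return the most recent backup taken at or before the failure."""
    candidates = [b for b in backups if b <= failure_time]
    return max(candidates) if candidates else None

def meets_rpo(backups, failure_time, rpo):
    """True if the data lost since the last backup is within the RPO."""
    last = latest_backup_before(backups, failure_time)
    return last is not None and failure_time - last <= rpo

# Daily backups at 02:00, and a failure 18 hours after the last one.
backups = [datetime(2024, 1, d, 2, 0) for d in (1, 2, 3)]
failure = datetime(2024, 1, 3, 20, 0)

ok_24h = meets_rpo(backups, failure, timedelta(hours=24))
ok_4h = meets_rpo(backups, failure, timedelta(hours=4))
print(ok_24h, ok_4h)
```

A daily schedule satisfies a 24-hour RPO but not a 4-hour one; meeting the tighter target would need more frequent backups or continuous mechanisms such as transaction log shipping.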

Q 15. What are your strategies for monitoring and managing cloud resources?

Monitoring and managing cloud resources effectively requires a multi-faceted approach. It’s not just about knowing *what* to monitor, but *how* and *why*. My strategy centers around establishing a robust observability pipeline incorporating metrics, logs, and traces. This allows for comprehensive insights into resource utilization, performance, and potential issues.

- Metrics-based monitoring: I use cloud provider tools like CloudWatch (AWS), Cloud Monitoring (Google Cloud), or Azure Monitor to track key performance indicators (KPIs) such as CPU utilization, memory usage, network traffic, and disk I/O. Setting up alerts based on predefined thresholds is crucial for proactive issue detection. For instance, if CPU utilization consistently exceeds 80% for a prolonged period, an alert triggers an investigation.

- Log aggregation and analysis: Centralized log management solutions like Splunk, ELK stack (Elasticsearch, Logstash, Kibana), or cloud-native logging services are essential for analyzing application logs, system logs, and security logs. This helps identify errors, pinpoint bottlenecks, and track security events. For example, analyzing application logs can reveal patterns in error messages, pointing to faulty code or configuration issues.

- Distributed tracing: In microservices architectures, distributed tracing tools like Jaeger or Zipkin are invaluable for understanding the flow of requests across different services. This facilitates identifying slowdowns or failures within complex systems. If a request is taking unusually long, tracing helps pinpoint the specific service responsible for the delay.

- Automated scaling and resource optimization: I utilize auto-scaling features to dynamically adjust resource allocation based on demand. This ensures optimal performance while minimizing costs. For example, scaling up compute resources during peak hours and scaling down during off-peak hours saves significant expense.

- Cost optimization tools: Cloud providers offer cost management tools that help identify areas of potential cost savings. Regularly reviewing these reports and making adjustments based on usage patterns is crucial for maintaining budget control.

In a recent project, I used a combination of CloudWatch and Prometheus to monitor a large-scale microservices application. By setting up custom dashboards and alerts, we were able to quickly detect and resolve a performance bottleneck caused by a database query issue, preventing a major service disruption.
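The threshold rule described under metrics-based monitoring, alerting only when CPU stays above 80% for a prolonged period, can be expressed as a small function. This is a sketch of the logic only; in practice the rule would be configured in CloudWatch, Cloud Monitoring, or Prometheus, and the sample values here are invented.

```python
def sustained_breach(samples, threshold=80.0, min_consecutive=3):
    """Return True if `min_consecutive` samples in a row exceed `threshold`."""
    streak = 0
    for value in samples:
        streak = streak + 1 if value > threshold else 0
        if streak >= min_consecutive:
            return True
    return False

# Hypothetical CPU utilization samples, one per minute.
spike = [40, 95, 50, 60, 91, 45]      # brief spikes only
sustained = [70, 85, 88, 92, 60]      # three consecutive readings above 80
print(sustained_breach(spike), sustained_breach(sustained))
```

Requiring consecutive breaches filters out short spikes that would otherwise cause alert fatigue, at the cost of a slightly slower reaction to real problems.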

Q 16. Explain your experience with containerization technologies (Docker, Kubernetes).

Containerization technologies, particularly Docker and Kubernetes, are fundamental to modern cloud-native application deployments. My experience spans the entire lifecycle, from image creation and management to orchestration and deployment.

- Docker: I’m proficient in creating and managing Docker images, using Dockerfiles to define application dependencies and configurations. This ensures consistent and reproducible application deployments across different environments. I understand best practices for minimizing image size and optimizing for security.

- Kubernetes: My expertise extends to deploying and managing containerized applications using Kubernetes. I’m familiar with concepts like pods, deployments, services, and namespaces. I’ve utilized Kubernetes for automating deployment, scaling, and managing containerized applications in production environments. I have practical experience with configuring resource limits, setting up health checks, and implementing rollouts and rollbacks to minimize disruption during updates.

- Orchestration and deployment strategies: I’m adept at using various deployment strategies like rolling updates, blue/green deployments, and canary releases to minimize downtime and risk during application updates. I understand the importance of using strategies that match the application’s specific needs and risk tolerance.

For example, in a recent project, we migrated a monolithic application to a microservices architecture using Docker and Kubernetes. This significantly improved scalability, resilience, and deployment speed. We used Kubernetes’ rolling update feature to ensure a seamless transition with minimal downtime.

Q 17. How do you ensure data quality and integrity?

Ensuring data quality and integrity is paramount. My approach is proactive and multi-layered, incorporating data validation, cleansing, monitoring, and governance.

- Data validation: I implement data validation rules at various stages of the data pipeline to ensure data conforms to predefined standards and constraints. This includes data type validation, range checks, and consistency checks. For instance, ensuring that a date field contains a valid date format and is within a reasonable range.

- Data cleansing: I use techniques to identify and correct or remove inaccurate, incomplete, irrelevant, or duplicate data. This often involves using ETL (Extract, Transform, Load) processes and data quality tools.

- Data monitoring: Continuous monitoring of data quality metrics is crucial. This involves setting up alerts for anomalies or deviations from expected patterns. An example would be monitoring the completeness of a particular field to ensure it’s not missing too many values.

- Data governance: Establishing clear data governance policies and procedures is essential for maintaining data quality over the long term. This includes defining data ownership, access controls, and data quality standards.

In a previous role, I implemented a data quality framework that included automated data validation checks, data profiling, and real-time data quality monitoring. This led to a significant improvement in data accuracy and reliability, reducing errors and improving business decisions.
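The validation and monitoring checks above (date format, range checks, and field completeness) might look like the following sketch. The field names, date bounds, and sample records are assumptions chosen for illustration.

```python
from datetime import date, datetime

def validate_record(record, min_date=date(2000, 1, 1), max_date=date(2030, 12, 31)):
    """Return a list of rule violations for one record (empty means valid)."""
    errors = []
    try:
        parsed = datetime.strptime(record.get("order_date") or "", "%Y-%m-%d").date()
        if not min_date <= parsed <= max_date:
            errors.append("order_date out of range")
    except ValueError:
        errors.append("order_date invalid")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        errors.append("amount must be a non-negative number")
    return errors

def completeness(records, field):
    """Fraction of records where `field` is present and non-empty."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)

records = [
    {"order_date": "2024-03-15", "amount": 20.0, "email": "a@example.com"},
    {"order_date": "2024-02-30", "amount": -5, "email": ""},
    {"order_date": "1990-01-01", "amount": 7, "email": None},
]
report = [validate_record(r) for r in records]
email_completeness = completeness(records, "email")
print(report, email_completeness)
```

Returning a list of violations rather than raising on the first error lets a pipeline route bad records to a quarantine area with a full explanation, while the completeness ratio is the kind of metric an alert can track over time.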

Q 18. Describe your experience with serverless computing.

Serverless computing offers significant advantages in terms of scalability, cost efficiency, and reduced operational overhead. My experience involves designing and deploying serverless applications using platforms like AWS Lambda, Google Cloud Functions, and Azure Functions.

- Function development and deployment: I’m proficient in developing and deploying functions written in various programming languages, including Python, Node.js, and Java. I understand the best practices for writing efficient and scalable serverless functions.

- Event-driven architectures: I have experience designing and implementing event-driven architectures using serverless technologies. This involves integrating functions with various event sources, such as databases, message queues, and APIs.

- API Gateways: I leverage API gateways to manage access and routing of requests to serverless functions. This allows for secure and efficient management of API calls.

- Monitoring and logging: I implement robust monitoring and logging mechanisms to track function performance and identify potential issues. This includes setting up alerts and integrating with centralized logging systems.

In one project, I migrated a batch processing job to a serverless architecture using AWS Lambda. This resulted in a significant reduction in infrastructure costs and improved scalability. The application scaled automatically based on demand, eliminating the need for manual provisioning and management of servers.
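A serverless function is usually just a handler that receives an event and returns a response. The sketch below follows the `lambda_handler(event, context)` convention used by AWS Lambda's Python runtime and assumes an API Gateway proxy-style event with a JSON `body`; the payload shape (`numbers`) is invented for the example.

```python
import json

def lambda_handler(event, context):
    """Sum the numbers in a JSON request body and return an HTTP-style response."""
    try:
        payload = json.loads(event.get("body") or "{}")
        total = sum(payload["numbers"])
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, a missing key, or non-numeric values.
        return {"statusCode": 400, "body": json.dumps({"error": "expected a 'numbers' list"})}
    return {"statusCode": 200, "body": json.dumps({"total": total})}

# Local invocation with hand-built events; no AWS account is needed.
response = lambda_handler({"body": json.dumps({"numbers": [1, 2, 3]})}, None)
bad = lambda_handler({"body": "not json"}, None)
print(response, bad)
```

Keeping the handler free of server or framework state is what lets the platform run many copies in parallel and scale them to zero when idle; it also makes the function easy to test locally, as shown.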

Q 19. What are your strategies for implementing data governance?

Data governance is a crucial aspect of data management, ensuring data is handled responsibly and ethically. My strategy involves a multi-pronged approach focused on policy, process, and technology.

- Policy definition: Establishing comprehensive data governance policies that cover data quality, security, privacy, and compliance is the foundation. These policies clearly define roles, responsibilities, and procedures for handling data.

- Process implementation: Defining and implementing processes for data collection, storage, access, usage, and disposal is crucial. This includes procedures for data discovery, metadata management, and data retention.

- Technology implementation: Leveraging data governance tools and technologies to automate and streamline data governance processes is essential. This includes data catalogs, data lineage tools, and access control systems.

- Data discovery and cataloging: Understanding what data exists, where it is located, and how it is used is fundamental. A data catalog helps manage and organize this information.

- Data lineage: Tracking the movement and transformation of data throughout its lifecycle is critical for understanding data dependencies and identifying potential risks.

In a past project, I implemented a data governance framework for a financial institution. This involved defining data ownership, access control policies, and data quality standards. We used a data catalog and lineage tool to track data across multiple systems, ensuring compliance with regulatory requirements.

Q 20. How do you handle data encryption and decryption?

Data encryption and decryption are critical for protecting sensitive data. My approach involves using appropriate encryption algorithms and key management practices, tailored to the specific data sensitivity and security requirements.

- Encryption algorithms and protocols: I select appropriate encryption based on the sensitivity of the data and the level of security required. This typically means AES (Advanced Encryption Standard) for data at rest, and the TLS (Transport Layer Security) protocol for data in transit.

- Key management: Secure key management is crucial. I utilize cloud provider’s Key Management Services (KMS) or other secure key management systems to generate, store, and manage encryption keys. This ensures that keys are protected from unauthorized access.

- Data at rest encryption: This involves encrypting data when it is stored on disk or in databases. Cloud providers offer various options for encrypting data at rest, such as server-side encryption or client-side encryption.

- Data in transit encryption: This involves encrypting data while it is being transmitted over a network. TLS, the successor to the now-deprecated SSL, is commonly used for secure communication between applications and servers.

In a recent project, we implemented end-to-end encryption for a customer database using AWS KMS to manage the encryption keys. This ensured that data was protected both at rest and in transit, meeting stringent security requirements.
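For data in transit, much of the work is configuring TLS correctly on the client. The sketch below uses Python's standard `ssl` module to build a context that verifies certificates and refuses protocol versions older than TLS 1.2; the minimum version is an assumption that should follow your own security policy. Encryption at rest with AES and KMS is not shown, since it depends on provider SDKs.

```python
import ssl

def strict_client_context():
    """Build a client-side TLS context that verifies server certificates."""
    context = ssl.create_default_context()  # enables cert and hostname checks
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocols
    return context

ctx = strict_client_context()
print(ctx.verify_mode, ctx.check_hostname, ctx.minimum_version)
```

A context like this can be passed to standard library clients, for example through the `context` argument of `urllib.request.urlopen`, so that every connection inherits the same policy.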

Q 21. Explain your understanding of different data integration patterns.

Data integration patterns describe how different data sources are combined and processed. Understanding these patterns is key to building robust and scalable data integration solutions.

- Data virtualization: This pattern presents a unified view of data from multiple sources without physically moving or copying the data. It’s useful when dealing with large volumes of data or when you need a real-time view of data from disparate sources.

- ETL (Extract, Transform, Load): A traditional approach where data is extracted from source systems, transformed to a standardized format, and loaded into a target system (e.g., a data warehouse). This is suitable for batch processing of large datasets.

- ELT (Extract, Load, Transform): Similar to ETL, but the transformation step happens after the data is loaded into the target system. This can be advantageous for very large datasets, because the transformations run on the target platform’s own scalable compute rather than on a separate transformation server, and the raw data remains available for reprocessing.

- Change Data Capture (CDC): This focuses on capturing only the changes made to data sources, minimizing the amount of data processed and improving efficiency. It’s especially useful for real-time data integration scenarios.

- Message Queues: This is an asynchronous integration pattern that uses a message broker such as Kafka or RabbitMQ to decouple data producers from consumers. This improves system resilience and allows each side to scale independently.

- API-based integration: Using APIs to directly access and integrate data from various sources. This is suitable for real-time data integration or when accessing data from cloud-based services.

For example, in a recent project, we used a combination of CDC and ETL processes to integrate data from various operational systems into a data warehouse. CDC was used for real-time integration of critical transactional data, while ETL was used for batch processing of larger datasets.
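The idea behind CDC can be sketched in a few lines. The example below assumes the simplest variant, comparing two keyed snapshots of a table; production CDC tools usually read the database's transaction log instead. The function name `capture_changes` and the sample rows are hypothetical.

```python
from __future__ import annotations

def capture_changes(previous: dict, current: dict) -> list:
    """Return (operation, key, row) tuples describing how `current` differs from `previous`."""
    changes = []
    for key, row in current.items():
        if key not in previous:
            changes.append(("insert", key, row))
        elif row != previous[key]:
            changes.append(("update", key, row))
    for key in previous:
        if key not in current:
            # Deleted rows carry no payload.
            changes.append(("delete", key, None))
    return changes

# Hypothetical snapshots of an orders table, keyed by order id.
previous = {1: {"status": "pending"}, 2: {"status": "paid"}, 3: {"status": "paid"}}
current = {1: {"status": "paid"}, 2: {"status": "paid"}, 4: {"status": "pending"}}

changes = capture_changes(previous, current)
```

Only three of the rows appear in `changes`; the unchanged order 2 is skipped, which is where the efficiency gain over a full reload comes from.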

Q 22. How do you troubleshoot cloud infrastructure issues?

Troubleshooting cloud infrastructure issues requires a systematic approach. Think of it like diagnosing a car problem – you wouldn’t just start replacing parts randomly. Instead, you’d follow a process of elimination.

- Identify the problem: Start by clearly defining the issue. Is it a performance bottleneck, an outage, a security alert, or something else? Gather all relevant logs and metrics (CPU utilization, memory usage, network latency, error messages).

- Isolate the affected component: Pinpoint the specific service, instance, or network component experiencing the problem. This might involve checking resource monitors, examining network traffic, and using tracing tools.

- Analyze logs and metrics: Scrutinize logs for error messages, warnings, and unusual activity. Analyze metrics to identify performance trends and anomalies. For example, a sudden spike in CPU usage might indicate a resource exhaustion problem.

- Implement solutions: Based on the analysis, implement the appropriate fix. This could involve restarting services, scaling resources, deploying patches, or even rolling back to a previous version. Always test your solution in a controlled environment (like a staging environment) before deploying it to production.

- Monitor and validate: After implementing a solution, closely monitor the system to ensure the problem is resolved and doesn’t reoccur. Utilize alerting systems to proactively identify potential issues.

For example, if a web application is slow, I’d first check the application logs for errors, then examine the web server’s CPU and memory usage. If those are high, I might scale up the server instances or optimize the application code. If the problem persists, I’d investigate the database and network connections.
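The "analyze metrics" step can be partly automated. As a rough sketch, the function below flags samples that sit far above a trailing baseline. The window size, the threshold, and the sample CPU readings are all assumptions chosen for illustration, not recommended values.

```python
from statistics import mean, stdev

def find_spikes(samples, window=5, threshold=3.0):
    """Return indexes whose value exceeds the trailing-window mean by `threshold` standard deviations."""
    spikes = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]
        mu = mean(baseline)
        # Floor sigma at 1.0 so a nearly flat baseline does not flag tiny fluctuations.
        sigma = max(stdev(baseline), 1.0)
        if samples[i] > mu + threshold * sigma:
            spikes.append(i)
    return spikes

# Hypothetical CPU utilization readings, in percent.
cpu_percent = [22, 25, 24, 23, 26, 24, 25, 91, 93, 27]
spikes = find_spikes(cpu_percent)
```

This simple version reports only the onset of a spike, because later high samples inflate the baseline they are compared against.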

Q 23. Describe your experience with cloud automation tools.

I have extensive experience with various cloud automation tools, including Terraform, Ansible, and CloudFormation. These tools are essential for efficiently managing and scaling cloud infrastructure. Think of them as blueprints and construction crews for your cloud environment.

- Terraform: I use Terraform to define and provision infrastructure as code (IaC), which lets me manage resources across multiple cloud providers consistently and reproducibly. I typically review changes with terraform plan before deploying them with terraform apply.

- Ansible: Ansible is great for automating configuration management and application deployments. It uses a simple YAML-based language to describe tasks, making it relatively easy to learn and use. I’ve used it extensively for automating tasks like installing software, configuring servers, and deploying applications across multiple instances.

- CloudFormation: AWS CloudFormation lets me define and manage AWS resources declaratively. I’ve used it to create entire application stacks, including databases, servers, and load balancers, from a single template. As a native AWS service, it integrates closely with the rest of the AWS ecosystem.

Through automation, I’ve significantly reduced manual effort, improved consistency, and shortened deployment times. This allows for faster iteration and reduces the risk of human error during infrastructure deployments.

Q 24. How do you design a scalable data lake or data warehouse?

Designing a scalable data lake or data warehouse depends heavily on the specific requirements of the project, such as data volume, velocity, variety, and the types of queries that will be run. However, several core principles apply to both.

- Data Ingestion: Design a robust and scalable ingestion pipeline to handle high volumes of data from diverse sources. Consider using tools like Apache Kafka, Apache Flume, or cloud-native services like AWS Kinesis or Azure Event Hubs.

- Storage: For a data lake, use cloud storage like AWS S3, Azure Blob Storage, or Google Cloud Storage. For a data warehouse, consider columnar storage databases like Snowflake, Amazon Redshift, or Google BigQuery, which are optimized for analytical queries.

- Processing and Transformation: Utilize distributed processing frameworks like Apache Spark or cloud-native services like AWS EMR or Azure Databricks for data cleaning, transformation, and feature engineering. This allows for parallel processing of large datasets.

- Metadata Management: Implement a robust metadata management system to track data lineage, schemas, and data quality. This is crucial for data governance and understanding your data.

- Scalability and Performance: Design your architecture to handle increasing data volumes and query loads. This includes using auto-scaling features, distributing workloads across multiple nodes, and optimizing query performance.

- Security and Access Control: Implement appropriate security measures, such as access control lists (ACLs) and encryption, to protect your data.

For example, a data lake might use S3 for raw data storage, Spark for processing, and Hive for querying. A data warehouse might use Snowflake for storage and querying, with data being loaded from the data lake after cleansing and transformation.
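One design detail that helps a data lake scale is a predictable, partitioned object layout. The sketch below builds Hive-style `key=value` paths so that engines such as Spark or Hive can skip partitions a query does not need. The `raw/` prefix, the source name, and the file name are assumptions for illustration.

```python
from datetime import datetime, timezone

def raw_object_key(source: str, event_time: datetime, file_name: str) -> str:
    """Build a Hive-style partitioned object key from a source name and event time."""
    # Normalize to UTC so the same event always lands in the same partition.
    utc = event_time.astimezone(timezone.utc)
    return (
        f"raw/{source}/"
        f"year={utc:%Y}/month={utc:%m}/day={utc:%d}/"
        f"{file_name}"
    )

key = raw_object_key(
    "orders",
    datetime(2024, 3, 9, 14, 30, tzinfo=timezone.utc),
    "part-0001.parquet",
)
```

Here `key` is `raw/orders/year=2024/month=03/day=09/part-0001.parquet`. Partitioning by date suits time-range queries; other access patterns may call for different partition columns.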

Q 25. What are your preferred methods for data profiling and cleansing?

Data profiling and cleansing are crucial steps in ensuring data quality. Think of it as preparing ingredients before cooking a delicious meal – you wouldn’t use spoiled ingredients, would you?

- Data Profiling: I utilize tools like Talend, Informatica, or open-source tools like Pandas (in Python) to profile data. This involves analyzing the data to understand its structure, identify data types, check for missing values, and assess data quality. For example, identifying the frequency of different values in a column or detecting outliers.

- Data Cleansing: Based on the profiling results, I implement cleansing techniques to address data quality issues. This might involve:

  - Handling Missing Values: Imputation using mean, median, or mode, or removal of rows with excessive missing values.

  - Handling Outliers: Removing outliers or transforming them using techniques like winsorization or clipping.

  - Data Transformation: Converting data types, standardizing formats, and creating new features.

  - Deduplication: Identifying and removing duplicate records.

For example, I might use Python’s Pandas library with functions like df.fillna() for imputation, df.drop_duplicates() for deduplication, and various data cleaning packages to handle inconsistencies in data formats.
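To show what these cleansing steps do under the hood, here is a small standard-library sketch of median imputation, clipping, and deduplication. It is an illustration with made-up data and bounds, not a substitute for Pandas on real datasets; the function names are hypothetical.

```python
from statistics import median

def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

def clip(values, lower, upper):
    """Clamp every value into the closed range [lower, upper]."""
    return [min(max(v, lower), upper) for v in values]

def deduplicate(rows):
    """Drop exact duplicate rows, keeping the first occurrence and the original order."""
    seen = set()
    unique = []
    for row in rows:
        fingerprint = tuple(sorted(row.items()))
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(row)
    return unique

# Hypothetical data: two missing ages and one implausible outlier.
ages = [34, None, 29, 41, None, 230]
cleaned_ages = clip(impute_median(ages), lower=0, upper=120)

rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com"},
    {"id": 1, "email": "a@example.com"},
]
unique_rows = deduplicate(rows)
```

Note that the outlier still influences the median here because imputation runs before clipping; which step comes first is a judgment call that depends on the data.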

Q 26. Explain your understanding of cloud cost optimization strategies.

Cloud cost optimization is a continuous process, not a one-time project. It requires a proactive and data-driven approach.

- Rightsizing Instances: Choose the instance sizes appropriate for your workload. Avoid over-provisioning resources. Regularly review resource utilization and resize instances as needed. Tools like AWS Cost Explorer or Azure Cost Management can help identify underutilized resources.

- Reserved Instances/Savings Plans: Consider purchasing reserved instances or savings plans to get discounts on compute resources. This is particularly beneficial for consistent workloads.

- Spot Instances: Use spot instances for fault-tolerant, non-critical workloads. Spot instances provide significant cost savings but may be interrupted with short notice.

- Serverless Computing: Leverage serverless functions (AWS Lambda, Azure Functions, Google Cloud Functions) for event-driven architectures. You only pay for the compute time used.

- Data Storage Optimization: Use the most cost-effective storage options for your data. For example, use infrequent access storage (IA) for less frequently accessed data. Implement lifecycle policies to automatically move data to cheaper storage tiers.

- Monitoring and Alerting: Set up monitoring and alerting to track cloud spending and identify potential cost anomalies. This allows for proactive cost management.

Imagine you’re managing a large apartment building. Rightsizing is like ensuring tenants have the right-sized apartment; Reserved instances are like offering long-term leases at a discount; Spot instances are like renting out rooms for short periods; Serverless is like subletting rooms only when needed.
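The reserved-versus-on-demand decision comes down to simple arithmetic. The sketch below assumes a commitment that is billed for every hour of the month whether or not the instance runs, and uses hypothetical hourly prices; real pricing varies by provider, region, and term.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12

def break_even_hours(on_demand_rate, committed_rate, hours_in_month=HOURS_PER_MONTH):
    """Monthly running hours above which the commitment is cheaper than on-demand.

    The commitment is billed for every hour of the month, used or not,
    while on-demand is billed only for the hours actually run.
    """
    return committed_rate * hours_in_month / on_demand_rate

# Hypothetical hourly prices, for illustration only.
on_demand = 0.10
committed = 0.06

threshold_hours = break_even_hours(on_demand, committed)
threshold_utilization = threshold_hours / HOURS_PER_MONTH
```

With these example prices the break-even point is about 438 hours, or roughly 60% of the month. A workload that runs less than that is cheaper on-demand.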

Q 27. Describe your experience with implementing data security best practices.

Implementing data security best practices is paramount. It’s about building a strong defense against potential threats.

- Access Control: Implement strong access control measures, using least privilege principles. Only grant users the access they need to perform their jobs. Utilize IAM roles and policies in cloud environments.

- Data Encryption: Encrypt data at rest and in transit. Utilize encryption services provided by cloud providers or implement your own encryption solutions. This keeps data unreadable to an attacker who obtains the stored files or intercepts traffic, as long as the keys themselves remain protected.

- Network Security: Secure your network infrastructure with firewalls, intrusion detection/prevention systems, and VPNs. Regularly review and update security configurations.

- Vulnerability Management: Regularly scan your systems for vulnerabilities and apply patches promptly. Utilize vulnerability scanning tools and keep your software updated.

- Data Loss Prevention (DLP): Implement DLP measures to prevent sensitive data from leaving your organization’s control. This might include data masking, monitoring, and alert systems.

- Security Auditing and Logging: Regularly audit your security controls and review logs to detect and respond to security incidents.

For example, I might use AWS KMS to encrypt databases, implement network ACLs to control access to specific resources, and integrate with SIEM (Security Information and Event Management) tools to monitor security events.
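Least privilege is easiest to see in an actual policy document. The sketch below builds an AWS IAM policy that allows reading objects under a single prefix and nothing else. The bucket name, prefix, and statement ID are hypothetical, and a real policy would be reviewed against the workload's actual access needs.

```python
import json

def read_only_prefix_policy(bucket_name: str, prefix: str) -> dict:
    """Return an IAM policy document that allows only s3:GetObject under one prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadReportsOnly",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/{prefix}*",
            }
        ],
    }

# Hypothetical bucket and prefix.
policy = read_only_prefix_policy("example-reports-bucket", "reports/")
policy_json = json.dumps(policy, indent=2)
```

The policy deliberately avoids wildcard actions such as `s3:*`; write or delete permissions would be granted separately, only to the roles that need them.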

Q 28. How do you ensure data privacy and compliance with regulations?

Ensuring data privacy and compliance with regulations such as GDPR, CCPA, and HIPAA requires a multi-faceted approach.

- Data Inventory and Mapping: Create a comprehensive inventory of your data, including its location, sensitivity, and purpose. Map your data flows to understand how data is processed and shared.

- Data Minimization and Purpose Limitation: Only collect and process the data necessary for the specified purpose. Avoid collecting unnecessary personal information.

- Data Security Measures: Implement strong data security measures as outlined above (encryption, access control, etc.).

- Consent Management: Implement mechanisms for obtaining and managing user consent for data processing, especially for sensitive data. This is particularly crucial for GDPR compliance.

- Data Subject Rights: Establish processes for handling data subject requests, such as the right to access, rectification, erasure, and data portability (as required by regulations like GDPR).

- Privacy Impact Assessments (PIAs): Conduct PIAs, known as Data Protection Impact Assessments (DPIAs) under GDPR, to assess the privacy risks associated with new data processing activities.

- Regular Audits and Compliance Reviews: Conduct regular audits and reviews to ensure ongoing compliance with relevant regulations.

Imagine you’re running a library. You need a catalog (data inventory), clear lending rules (purpose limitation), secure storage (encryption), a system for tracking who borrowed what (consent management), and a process for handling requests (data subject rights). Compliance is about ensuring your library operates according to the law and protects the privacy of its patrons.
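Data minimization can be sketched in code. The example below assumes an analytics pipeline that needs only two attributes plus a stable user reference, so it drops every other field and replaces the email address with a keyed hash. The field names, the allow-list, and the demo key are all hypothetical; in practice the key would come from a key management service.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only attributes the analytics purpose needs.
ALLOWED_FIELDS = {"country", "plan"}

def pseudonymize(value: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest so the raw identifier is not stored downstream."""
    return hmac.new(secret_key, value.lower().encode("utf-8"), hashlib.sha256).hexdigest()

def minimize(record: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and swap the email for a pseudonymous reference."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["user_ref"] = pseudonymize(record["email"], secret_key)
    return reduced

demo_key = b"demo-only-key"  # placeholder; never hard-code real keys
record = {
    "email": "Jane@Example.com",
    "full_name": "Jane Doe",
    "country": "DE",
    "plan": "pro",
}
minimized = minimize(record, demo_key)
```

Pseudonymized data can still count as personal data under GDPR, so this reduces exposure rather than removing compliance obligations.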

Key Topics to Learn for Advanced Cloud Computing & Data Management Interviews

- Cloud Architectures: Understanding different cloud deployment models (public, private, hybrid), service models (IaaS, PaaS, SaaS), and their practical implications for designing scalable and resilient systems. Consider exploring trade-offs between cost, performance, and security.

- Data Warehousing & Big Data Technologies: Mastering concepts like data lakes, data warehouses, ETL/ELT processes, and technologies like Hadoop, Spark, and cloud-based data warehousing solutions (e.g., Snowflake, BigQuery). Focus on practical application in data modeling, querying, and performance optimization.

- Database Management Systems (DBMS): Deep dive into relational (SQL) and NoSQL databases. Understand database design principles, normalization, indexing, query optimization, and transaction management. Explore cloud-based database services and their unique features.

- Data Security & Governance: Learn about data encryption, access control, compliance regulations (e.g., GDPR, HIPAA), and data loss prevention strategies within cloud environments. Practice explaining how to implement robust security measures for data at rest and in transit.

- Cloud Security Best Practices: Explore topics such as IAM (Identity and Access Management), security auditing, vulnerability management, and incident response in cloud environments. Be ready to discuss practical strategies for securing cloud-based applications and data.

- Containerization & Orchestration: Understand Docker and Kubernetes, and how they enable efficient deployment and management of applications in cloud environments. Practice explaining their benefits and practical use cases.

- Serverless Computing: Explore the concepts and benefits of serverless architectures. Be prepared to discuss function-as-a-service (FaaS) platforms and their use in building scalable and cost-effective applications.

- Data Analytics & Machine Learning in the Cloud: Understand how to leverage cloud platforms for data analysis and machine learning tasks. Explore relevant services and tools, and be prepared to discuss practical applications and challenges.

- Cost Optimization Strategies in the Cloud: Learn how to effectively manage and optimize cloud spending. Discuss techniques for resource right-sizing, automation, and cost monitoring.

Next Steps

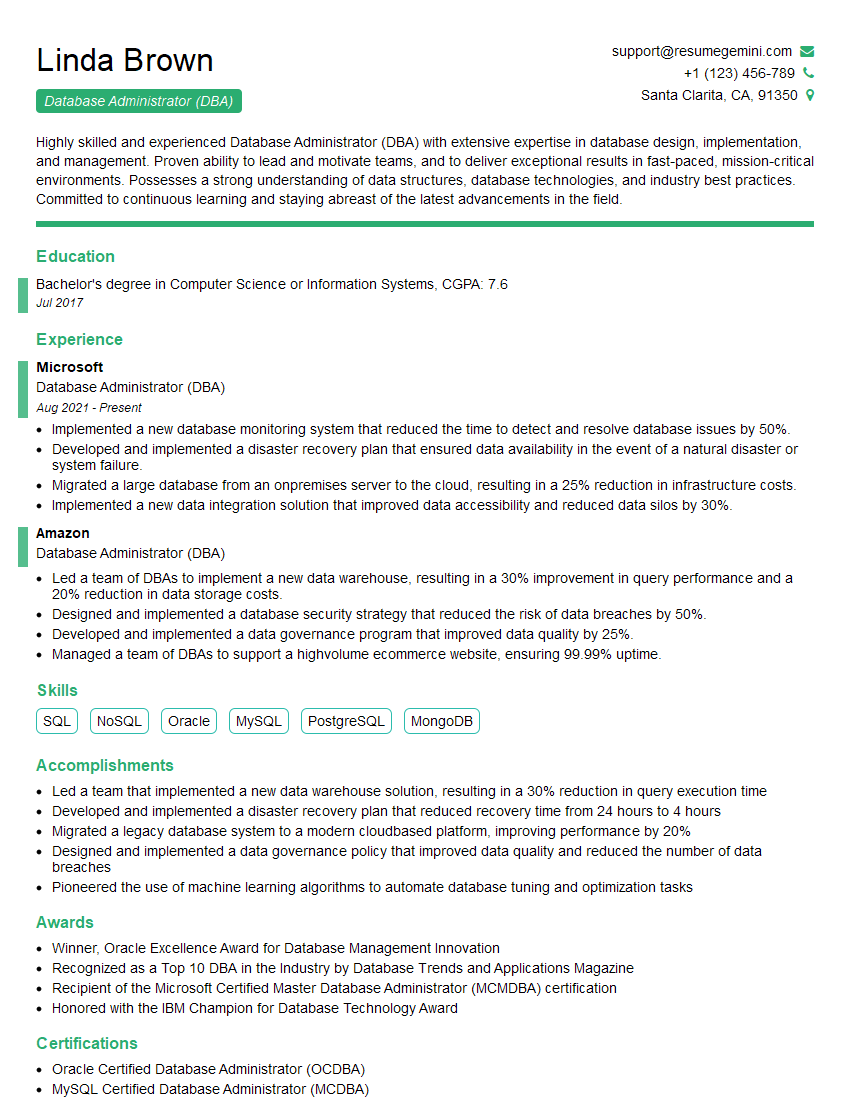

Mastering advanced cloud computing and data management skills is crucial for career advancement in today’s technology landscape. These skills are highly sought after, opening doors to lucrative and challenging roles. To maximize your job prospects, creating an ATS-friendly resume is essential. ResumeGemini is a trusted resource that can help you build a compelling and effective resume tailored to highlight your expertise. Examples of resumes specifically designed for candidates with advanced knowledge of cloud computing and data management are available to help guide you. Invest the time to build a strong resume – it’s your first impression on potential employers.
