Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Calibration of Sensors and Transducers interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Calibration of Sensors and Transducers Interview

Q 1. Explain the difference between accuracy and precision in calibration.

Accuracy and precision are crucial in calibration, but they represent different aspects of measurement quality. Think of it like shooting arrows at a target.

Accuracy refers to how close the measured value is to the true value. A highly accurate measurement means your arrows are clustered near the bullseye. High accuracy implies minimal systematic error – a consistent bias in your measurements.

Precision, on the other hand, describes the repeatability of measurements. High precision means your arrows are clustered tightly together, regardless of whether they hit the bullseye. High precision indicates minimal random error – variations in your measurements that are unpredictable.

You can have high precision but low accuracy (arrows clustered tightly but far from the bullseye – a systematic error), high accuracy but low precision (arrows scattered around the bullseye – large random error), or ideally, both high accuracy and high precision (arrows clustered tightly around the bullseye).

In calibration, we strive for both. A calibration process aims to minimize both systematic and random errors, leading to high accuracy and precision in the sensor’s readings.
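To make the distinction concrete, the short sketch below estimates bias (related to accuracy) and spread (related to precision) from repeated readings of one known input. The function name `bias_and_spread` and the readings are illustrative assumptions, not data from a real instrument.

```python
from statistics import mean, stdev

def bias_and_spread(readings, true_value):
    """Return (bias, spread) for repeated readings of one known input.

    bias   -> mean error versus the reference (systematic part, accuracy)
    spread -> sample standard deviation (random part, precision)
    """
    return mean(readings) - true_value, stdev(readings)

# Hypothetical readings of a 100.0 kPa reference pressure.
precise_but_biased = [102.1, 102.0, 102.2, 101.9, 102.0]
accurate_but_noisy = [98.5, 101.6, 99.2, 100.9, 99.8]

for name, data in [("precise/biased", precise_but_biased),
                   ("accurate/noisy", accurate_but_noisy)]:
    bias, spread = bias_and_spread(data, 100.0)
    print(f"{name}: bias={bias:+.2f} kPa, spread={spread:.2f} kPa")
```

The first data set has a tight spread but sits about 2 kPa high, while the second is centred on the reference but scattered.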

Q 2. Describe the process of calibrating a pressure transducer.

Calibrating a pressure transducer involves comparing its output to a known standard under controlled conditions. Here’s a typical process:

- Preparation: Ensure the transducer is clean, undamaged, and properly connected. Allow it to stabilize at the ambient temperature.

- Setup: Connect the transducer to a calibration system, usually including a pressure source (e.g., deadweight tester, calibrated pressure regulator) and a high-resolution data acquisition system (DAQ).

- Calibration Points: Apply known pressure levels, starting from zero (or atmospheric pressure) and increasing to the transducer’s maximum rated pressure. Include several intermediate points for a more comprehensive calibration curve.

- Data Acquisition: The DAQ simultaneously records the applied pressure from the standard and the corresponding output voltage (or other output signal) from the transducer at each point.

- Curve Fitting: A calibration curve is generated by plotting the applied pressure against the transducer’s output. Common curve fitting techniques include linear regression or polynomial fits, depending on the transducer’s behavior.

- Uncertainty Analysis: Determine the uncertainty associated with the calibration process, considering errors from the pressure standard, the DAQ, and the curve fitting method.

- Certificate Generation: A calibration certificate is issued, documenting the calibration curve, uncertainties, and relevant metadata.

For example, a pressure transducer used in a process control system needs regular calibration to ensure its readings are reliable and accurate for maintaining the desired process parameters. Inaccurate readings could lead to process upsets or even safety hazards.
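The curve-fitting step can be sketched as a simple least-squares line that maps transducer output to pressure. This is a minimal illustration that assumes a near-linear transducer. The helper `fit_line`, the function `volts_to_bar`, and all data values are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx

# Hypothetical data: transducer output (V) recorded at each reference pressure (bar).
output_v = [0.02, 1.01, 2.03, 3.02, 4.04, 5.03]
applied_bar = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]

slope, intercept = fit_line(output_v, applied_bar)

def volts_to_bar(v):
    """Convert a raw voltage to pressure using the fitted calibration line."""
    return slope * v + intercept

# Residuals show how well the straight line describes the calibration points.
residuals = [p - volts_to_bar(v) for v, p in zip(output_v, applied_bar)]
max_residual = max(abs(r) for r in residuals)
print(f"P = {slope:.4f} * V + {intercept:.4f} bar, worst residual {max_residual:.4f} bar")
```

If the residuals show a clear curve rather than random scatter, a higher-order polynomial fit may be more appropriate than a straight line.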

Q 3. What are the common types of calibration standards used?

Calibration standards are devices or systems with known and traceable values used to verify the accuracy of other measuring instruments. Common types include:

- Deadweight Testers: Highly accurate for pressure calibration, using precisely weighted pistons to generate known pressure levels. They are often the primary standard for pressure.

- Standard Resistors and Voltage Sources: Used in calibrating electrical sensors (e.g., thermocouples). These standards are often traceable to national metrology institutes.

- Thermometers (e.g., platinum resistance thermometers (PRTs)): Used for temperature calibration, typically traceable to national standards.

- Calibration Blocks: Used for calibrating dimensional measuring equipment (e.g., calipers, micrometers). They provide precise length standards.

- Calibrated Digital Multimeters (DMMs): High-accuracy DMMs with a valid calibration serve as traceable reference instruments for measuring voltage, current, and resistance.

The choice of standard depends on the type of sensor being calibrated and the required accuracy level. The key is ensuring traceability to a recognized national or international standard.

Q 4. How do you handle calibration uncertainties?

Calibration uncertainties are inevitable and must be carefully managed. These uncertainties arise from various sources, including:

- Standard Uncertainty: Uncertainty inherent in the calibration standard itself.

- Method Uncertainty: Uncertainty associated with the calibration method used.

- Environmental Uncertainty: Uncertainty due to variations in temperature, pressure, or humidity.

- Instrument Uncertainty: Uncertainty in the measuring instrument used during calibration.

Handling uncertainties involves a quantitative approach:

- Identify Sources: List all potential sources of uncertainty.

- Quantify Uncertainty: Estimate the magnitude of each uncertainty source, often using statistical methods.

- Combine Uncertainties: Combine individual uncertainties using appropriate statistical techniques (e.g., root-sum-square method) to obtain the overall calibration uncertainty.

- Report Uncertainty: Clearly report the expanded uncertainty on the calibration certificate along with its coverage factor (usually k=2, which corresponds to a level of confidence of approximately 95% for a normal distribution).

Proper uncertainty analysis is crucial for ensuring the reliability and validity of calibration results. It allows us to understand the limits of the measurement and make informed decisions based on the precision required for the application.
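As a rough illustration of the combine-and-report steps, the sketch below applies the root-sum-square method to a small uncertainty budget and then expands it with k=2. It assumes the components are uncorrelated and already expressed as standard uncertainties in the same unit. The budget values are invented for the example.

```python
import math

def combined_standard_uncertainty(components):
    """Root-sum-square of uncorrelated standard uncertainties (same units)."""
    return math.sqrt(sum(u ** 2 for u in components.values()))

# Hypothetical budget for a pressure calibration, all values in kPa (1-sigma).
budget = {
    "reference standard": 0.030,
    "DAQ resolution": 0.010,
    "temperature effects": 0.020,
    "repeatability": 0.015,
}

u_c = combined_standard_uncertainty(budget)
U = 2 * u_c  # expanded uncertainty with coverage factor k = 2
print(f"u_c = {u_c:.4f} kPa, U (k=2) = {U:.4f} kPa")
```

Because the terms are squared before summing, the largest component (here the reference standard) dominates the result, which is why improving the smallest contributors rarely helps much.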

Q 5. Explain the concept of traceability in calibration.

Traceability in calibration is the ability to link a measurement result to national or international standards through an unbroken chain of calibrations. It’s like a lineage for your measurements, showing that they’re dependable and consistent. Imagine a family tree – traceability demonstrates the connection back to a universally accepted ‘ancestor’ (the primary standard).

It ensures that measurements performed in different laboratories or by different organizations are comparable and consistent. Traceability is typically achieved through a chain of calibrations, where each instrument is calibrated against a more accurate standard, ultimately leading to a national or international standard maintained by a metrology institute (e.g., NIST in the US or NPL in the UK).

For example, a company calibrating pressure transducers might use a deadweight tester which is calibrated by a calibration laboratory accredited to ISO/IEC 17025, which in turn uses national standards for verification.

Q 6. What is a calibration certificate and what information does it contain?

A calibration certificate is a formal document that records the results of a calibration process. It’s crucial evidence demonstrating the accuracy and reliability of the calibrated instrument. Key information included:

- Instrument Identification: Serial number, model, and manufacturer of the calibrated instrument.

- Calibration Date and Procedure: The date the calibration was performed and the methods used.

- Calibration Results: The calibration curve, correction factors, or other relevant data that describes the instrument’s performance.

- Uncertainty Analysis: Detailed information on the uncertainties associated with the measurements.

- Traceability Statement: A statement confirming the traceability to national or international standards.

- Accreditation Information (if applicable): Information on the accreditation of the calibration laboratory.

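- Recalibration Due Date (where agreed with the customer): The date by which the instrument should next be calibrated. Accredited laboratories typically state this only at the customer’s request, because the appropriate interval depends on how the instrument is used.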

The certificate serves as proof of the instrument’s accuracy and ensures confidence in the measurements obtained using the instrument. It’s often a requirement for regulatory compliance and quality control in various industries.

Q 7. Describe different calibration methods (e.g., comparison, substitution).

Several calibration methods exist, each with its strengths and weaknesses:

- Comparison Calibration: The most common method. The output of the instrument under test (IUT) is compared directly to a known standard under the same conditions. The difference between the readings gives the correction or error.

- Substitution Calibration: The standard and the IUT are used alternately to measure the same quantity. This method minimizes the impact of environmental variations or drift in the measuring system. It’s particularly useful when comparing standards with similar output levels.

- Multi-Point Calibration: Several calibration points are established across the transducer’s measurement range to create a more comprehensive calibration curve (linear or non-linear as needed). This reflects real-world performance better than a one- or two-point check.

- In-situ Calibration: The IUT is calibrated in its actual operating environment. While practical, this method can be challenging to perform accurately as environmental influences need to be taken into account.

The choice of method depends on several factors, including the type of sensor, the required accuracy, and the available resources. In many cases, a combination of methods might be used to achieve the desired level of confidence.

Q 8. How do you select the appropriate calibration method for a specific sensor?

Selecting the right calibration method depends heavily on the sensor’s type, its intended application, and the required accuracy. It’s like choosing the right tool for a job – a screwdriver won’t work for hammering a nail.

We consider several factors:

- Sensor Type: A thermocouple (temperature) will require a different calibration approach than a load cell (force). Thermocouples often use comparison against a known standard, while load cells might involve applying known weights.

- Accuracy Requirements: High-precision applications demand more rigorous methods, such as multi-point calibration with traceability to national standards. Less critical applications might suffice with a simpler two-point calibration.

- Calibration Standards: Access to suitable standards (e.g., certified weights, temperature baths) dictates the feasibility of certain methods. For example, calibrating a high-pressure transducer requires access to a pressure calibrator capable of generating the necessary pressures with known accuracy.

- Calibration Equipment: The available equipment influences the choice. A simple multimeter might be adequate for certain sensors, while others need specialized equipment like a digital pressure gauge or an automated calibration system.

For instance, a pressure sensor used in a critical aerospace application would demand a meticulous multi-point calibration traceable to national standards, using a high-accuracy pressure calibrator in a controlled environment. A less critical application, such as a simple water pressure gauge, might only need a two-point calibration.
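For the simpler case, a two-point calibration can be sketched as a gain and offset correction derived from two reference points. The function name `two_point_calibration` and the gauge readings below are hypothetical.

```python
def two_point_calibration(raw_low, raw_high, ref_low, ref_high):
    """Return (gain, offset) so that corrected = gain * raw + offset."""
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low
    return gain, offset

# Hypothetical gauge: reads 0.3 bar at a true 0.0 bar and 10.1 bar at a true 10.0 bar.
gain, offset = two_point_calibration(0.3, 10.1, 0.0, 10.0)

def correct(raw):
    """Apply the two-point correction to a raw gauge reading."""
    return gain * raw + offset

print(f"gain={gain:.4f}, offset={offset:.4f}, corrected 5.2 -> {correct(5.2):.3f} bar")
```

A two-point correction removes offset and gain error only. It cannot reveal nonlinearity between the two points, which is one reason critical applications use more points.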

Q 9. What are the common sources of error in sensor calibration?

Sensor calibration errors stem from various sources, broadly categorized as:

- Systematic Errors: These are consistent, repeatable errors that are predictable. Examples include:

  - Offset Error: A consistent deviation from the true value, even when the input is zero.

  - Gain Error: A consistent scaling error, leading to readings consistently higher or lower than the true value.

  - Nonlinearity: Deviations from a linear relationship between the input and output. This is common in many sensors.

  - Hysteresis: Different readings depending on whether the input is increasing or decreasing.

- Random Errors: These are unpredictable and vary randomly. Sources include:

  - Noise: Electrical or environmental noise affecting the sensor signal.

  - Resolution Limitations: The sensor’s inability to distinguish between closely spaced values.

  - Environmental Factors: Temperature fluctuations, vibrations, electromagnetic interference (EMI).

- Human Errors: Incorrect reading, recording, or handling of the sensor or calibration equipment. For example, a technician might misinterpret a gauge reading or improperly connect the calibration equipment.

Q 10. How do you identify and mitigate these errors?

Identifying and mitigating errors requires a systematic approach:

- Careful Calibration Procedure: Following a well-defined calibration procedure that specifies the equipment, methods, and environmental conditions.

- Multiple Measurements: Taking multiple measurements at each calibration point and calculating statistics (mean, standard deviation) to reduce random error effects.

- Data Analysis: Analyzing the calibration data to identify patterns indicative of systematic errors. Curve fitting or linear regression can help model sensor behavior and compensate for nonlinearities.

- Environmental Control: Maintaining a stable and controlled environment during calibration to minimize environmental effects (temperature, pressure, humidity).

- Equipment Calibration: Regular calibration and verification of the calibration equipment itself to ensure its accuracy.

- Error Compensation: Incorporating error compensation algorithms into the sensor’s data processing to correct for known systematic errors, such as using polynomial curve fitting to model nonlinearities.

- Operator Training: Proper training of personnel on the correct procedures for calibration and handling of the sensors and equipment helps minimize human error.

For example, if we observe significant hysteresis in a pressure sensor, we can account for this during the data analysis by creating separate calibration curves for increasing and decreasing pressures. If the sensor shows excessive noise, we could use signal filtering techniques to reduce its impact.
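One way to quantify the hysteresis mentioned above is to compare readings taken at the same reference points with the input rising and then falling. The sketch below reports the largest gap as a percentage of full scale. The sweep data are invented for illustration.

```python
# Hypothetical sweep: transducer output (mV) at the same reference pressures,
# first with pressure increasing, then with pressure decreasing.
pressure_bar = [0, 2, 4, 6, 8, 10]
up_mv = [0.0, 20.1, 40.3, 60.2, 80.1, 100.0]
down_mv = [0.4, 20.9, 41.2, 60.9, 80.5, 100.0]

full_scale_mv = max(up_mv) - min(up_mv)

# Hysteresis at each point is the gap between the two directions.
gaps = [abs(d - u) for u, d in zip(up_mv, down_mv)]
worst_gap = max(gaps)
hysteresis_pct_fs = 100.0 * worst_gap / full_scale_mv

worst_pressure = pressure_bar[gaps.index(worst_gap)]
print(f"max hysteresis {hysteresis_pct_fs:.2f} % FS at {worst_pressure} bar")
```

If this figure is large compared with the required accuracy, separate up and down calibration curves are worth the extra effort. Otherwise a single averaged curve is usually simpler.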

Q 11. Explain the importance of environmental factors in calibration.

Environmental factors significantly influence sensor readings and calibration results. Think of a thermometer left in direct sunlight – its reading will be far higher than the actual ambient temperature.

Temperature, pressure, humidity, and electromagnetic fields can all affect sensor performance. These effects need careful consideration during calibration:

- Temperature Effects: Many sensors exhibit temperature sensitivity, requiring temperature compensation or calibration at multiple temperatures. This is especially crucial for high-precision applications.

- Pressure Effects: Pressure can influence the readings of certain sensors, such as those measuring strain or flow.

- Humidity Effects: High humidity can affect the readings of some sensors, causing drift or inaccurate readings.

- Electromagnetic Interference (EMI): Electromagnetic fields can induce noise or errors in sensors’ signals, especially those sensitive to electrical interference.

To mitigate these effects, calibration should ideally occur in a controlled environment that minimizes variations in these factors. Temperature-controlled chambers or environmental test chambers are often used for precise calibration. Temperature compensation algorithms or sensor designs that minimize environmental effects can also be employed.

Q 12. What is the role of statistical process control (SPC) in calibration?

Statistical Process Control (SPC) plays a vital role in ensuring the ongoing accuracy and reliability of calibrated sensors. It provides a framework to monitor sensor performance over time and detect potential drifts or issues early on.

SPC techniques, such as control charts, help us track sensor readings and identify patterns indicative of degradation or changes in accuracy. Control charts plot sensor readings over time and visually indicate when a sensor deviates from its expected performance range.

By using SPC, we can:

- Detect trends and shifts in sensor accuracy: Early detection of such trends allows for preventative maintenance or recalibration, avoiding costly errors or downtime.

- Determine the calibration interval: Data from SPC charts can help determine how frequently a sensor should be recalibrated to maintain its required accuracy.

- Improve calibration processes: By analyzing SPC data, we can identify areas where calibration procedures can be improved to enhance accuracy and reduce variability.

Imagine monitoring a temperature sensor in a critical industrial process using a control chart. If the sensor’s readings consistently drift outside predefined control limits, it signals a potential problem, prompting investigation and possibly recalibration to prevent faulty process control.
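The control-chart idea can be sketched in a few lines. This simplified version derives the centre line and 3-sigma limits from a baseline period using the sample standard deviation, whereas a formal individuals chart would usually estimate sigma from moving ranges. The function names and readings are assumptions for illustration.

```python
from statistics import mean, stdev

def control_limits(baseline):
    """Centre line and +/-3 sigma limits from an in-control baseline period."""
    centre = mean(baseline)
    sigma = stdev(baseline)
    return centre - 3 * sigma, centre, centre + 3 * sigma

def out_of_control(readings, lower, upper):
    """Indices of readings that fall outside the control limits."""
    return [i for i, r in enumerate(readings) if r < lower or r > upper]

# Hypothetical check-standard readings (degrees C) of a 50.00 C reference bath.
baseline = [50.02, 49.98, 50.01, 49.99, 50.00, 50.03, 49.97, 50.00]
recent = [50.01, 50.03, 50.05, 50.08, 50.11]

lcl, cl, ucl = control_limits(baseline)
flagged = out_of_control(recent, lcl, ucl)
print(f"LCL={lcl:.3f}, CL={cl:.3f}, UCL={ucl:.3f}, flagged indices={flagged}")
```

In this example the recent readings climb steadily, and the last two cross the upper limit. A steady run in one direction is itself a warning sign, even before a limit is exceeded.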

Q 13. How do you manage and maintain calibration records?

Maintaining accurate and accessible calibration records is essential for regulatory compliance, quality assurance, and troubleshooting. A robust system should include:

- Calibration Certificates: Formal documentation from a calibration laboratory attesting to the accuracy and traceability of the sensor’s calibration.

- Calibration Logs: Detailed records of each calibration event, including the date, time, equipment used, calibration procedures followed, results, and any deviations or observations.

- Database Management: Utilizing a computerized database system to manage calibration records efficiently, providing easy access and searchability.

- Unique Identification: Each sensor should have a unique identifier (e.g., serial number) to ensure accurate record-keeping.

- Version Control: Maintaining versions of calibration procedures and related documents to track any changes over time.

- Audit Trail: Tracking who made changes to calibration data or records, and when, providing transparency and accountability.

A well-maintained calibration database can be instrumental in identifying trends, scheduling calibrations, and providing evidence of compliance. It can also help in diagnosing issues if a sensor malfunctions, providing historical performance data.
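As a small illustration of how such records support scheduling, the sketch below models one calibration record and lists the sensors that are overdue. The class `CalibrationRecord`, its fields, and the sample entries are hypothetical and far simpler than a real calibration management system.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass(frozen=True)
class CalibrationRecord:
    sensor_id: str          # unique identifier, e.g. a serial number
    calibrated_on: date
    interval_days: int
    technician: str
    certificate_no: str
    in_tolerance: bool

    @property
    def due_on(self):
        """Date on which the next calibration falls due."""
        return self.calibrated_on + timedelta(days=self.interval_days)

def overdue(records, today):
    """Return the IDs of sensors whose due date has already passed."""
    return [r.sensor_id for r in records if r.due_on < today]

# Hypothetical entries for two pressure transducers.
records = [
    CalibrationRecord("PT-001", date(2024, 1, 10), 365, "A. Tech", "CERT-0001", True),
    CalibrationRecord("PT-002", date(2024, 11, 1), 180, "B. Tech", "CERT-0002", True),
]

print(overdue(records, date(2025, 3, 1)))
```

Making the record immutable (`frozen=True`) mirrors the audit-trail idea: a correction is stored as a new record rather than by editing an old one.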

Q 14. Describe your experience with different types of sensors (e.g., temperature, pressure, flow).

My experience encompasses a broad range of sensors, including:

- Temperature Sensors: Extensive experience with thermocouples (Type K, Type T, etc.), RTDs (resistance temperature detectors), and thermistors, including calibration using various methods such as ice point calibration and comparison against certified reference standards. I’ve worked with both contact and non-contact temperature sensors in diverse applications.

- Pressure Sensors: Experience with various pressure sensor technologies, including strain gauge-based, piezoelectric, and capacitive sensors. Calibration experience includes using deadweight testers, electronic pressure calibrators, and specialized software for data acquisition and analysis.

- Flow Sensors: Experience with various flow sensor types, including differential pressure flow meters, ultrasonic flow meters, and vortex flow meters. Calibration involves using known flow standards, such as calibrated flow meters or gravimetric methods.

- Other Sensors: I’ve also worked with other sensor types such as load cells, accelerometers, and displacement sensors, each requiring specialized calibration techniques appropriate to the sensor’s operating principle and required accuracy.

In each case, my approach involves selecting the appropriate calibration method based on the sensor type, required accuracy, and available equipment and resources. I meticulously document the process, analyze results, and implement appropriate error correction methods to ensure accurate and reliable sensor readings.

Q 15. Explain the principles of linearization in sensor calibration.

Linearization in sensor calibration is the process of correcting a sensor’s non-linear output to produce a more linear response. Many sensors, especially those based on physical phenomena, don’t produce a perfectly straight line relationship between the input stimulus and the output signal. This non-linearity can introduce significant errors in measurement. Linearization aims to compensate for this by applying mathematical transformations to the sensor’s raw data, making it easier to interpret and use.

Common techniques include curve fitting (e.g., polynomial regression, spline interpolation) to model the non-linear relationship and applying the inverse function to map the raw sensor data to a linear scale. For example, a thermocouple’s voltage output versus temperature is non-linear; we can use a polynomial fit based on its lookup table to approximate this relationship and get a linearized temperature reading. Software tools often provide automated linearization routines, allowing users to input calibration data and generate a linearization function.

Consider a pressure sensor. Its raw output might follow a slightly curved response. We can use a second-order polynomial, y = a + bx + cx², where y is the linearized pressure and x is the raw sensor output. By carefully selecting the coefficients a, b, and c through calibration data points, we can accurately model the non-linearity and thus achieve a linearized output that is more suitable for further processing and analysis.
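The second-order fit described above can be sketched with a small least-squares routine. This version uses only the standard library and solves the normal equations directly, which is adequate for a low-order fit on a handful of points. A numerical library would be the usual choice in practice. The helper `polyfit`, the function `linearize`, and the data are assumptions made for the example.

```python
def polyfit(x, y, degree):
    """Least-squares polynomial fit; returns coefficients [a, b, c, ...]
    for y = a + b*x + c*x**2 + ... (lowest order first)."""
    n = degree + 1
    # Augmented normal equations (A^T A | A^T y), built from power sums.
    m = [[sum(xi ** (r + c) for xi in x) for c in range(n)] +
         [sum(yi * xi ** r for xi, yi in zip(x, y))] for r in range(n)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [a - f * b for a, b in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

# Hypothetical sensor: raw output x (V) at reference pressures y (bar).
# The reference values were generated from y = 2x + 0.1x^2.
raw_v = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ref_bar = [0.0, 2.1, 4.4, 6.9, 9.6, 12.5]

a, b, c = polyfit(raw_v, ref_bar, 2)

def linearize(x):
    """Map a raw reading to pressure using y = a + b*x + c*x^2."""
    return a + b * x + c * x * x

print(f"a={a:.4f}, b={b:.4f}, c={c:.4f}, linearize(2.5)={linearize(2.5):.3f} bar")
```

Because the sample data lie exactly on a quadratic, the fit recovers the generating coefficients. With real calibration data the residuals would indicate whether a second-order model is sufficient.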

Career Expert Tips:

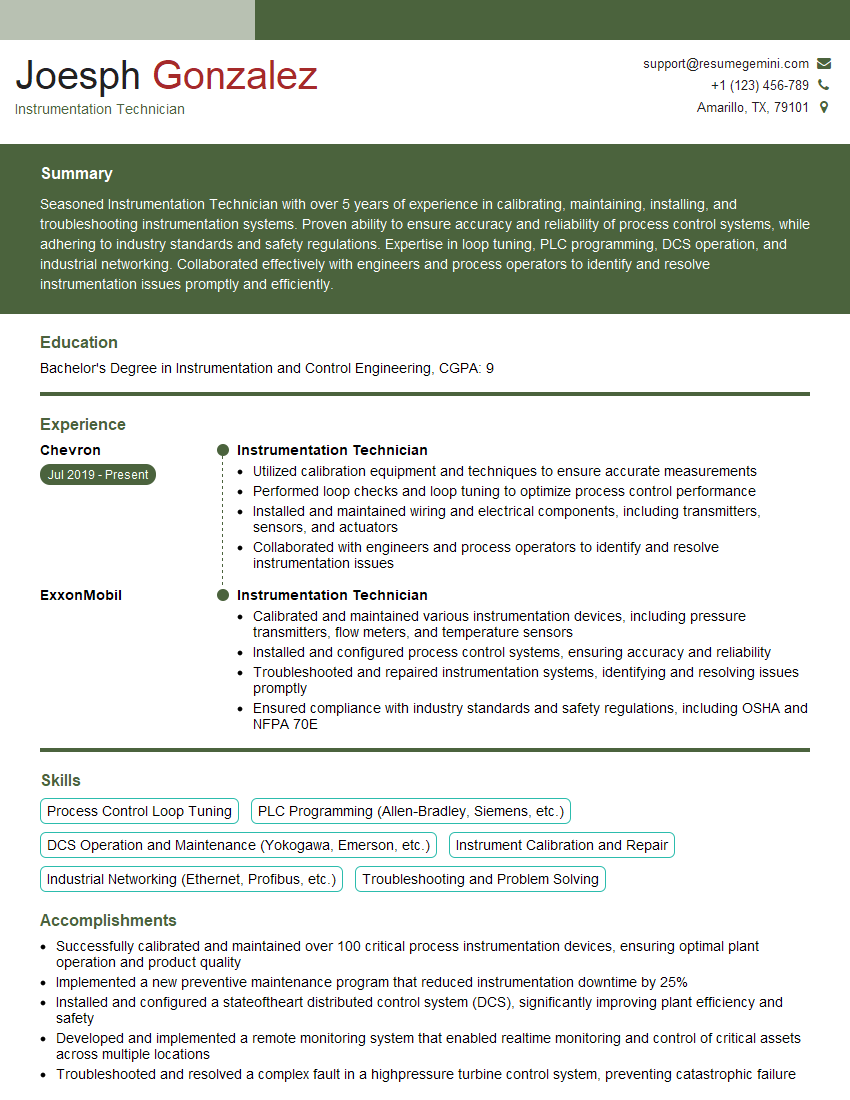

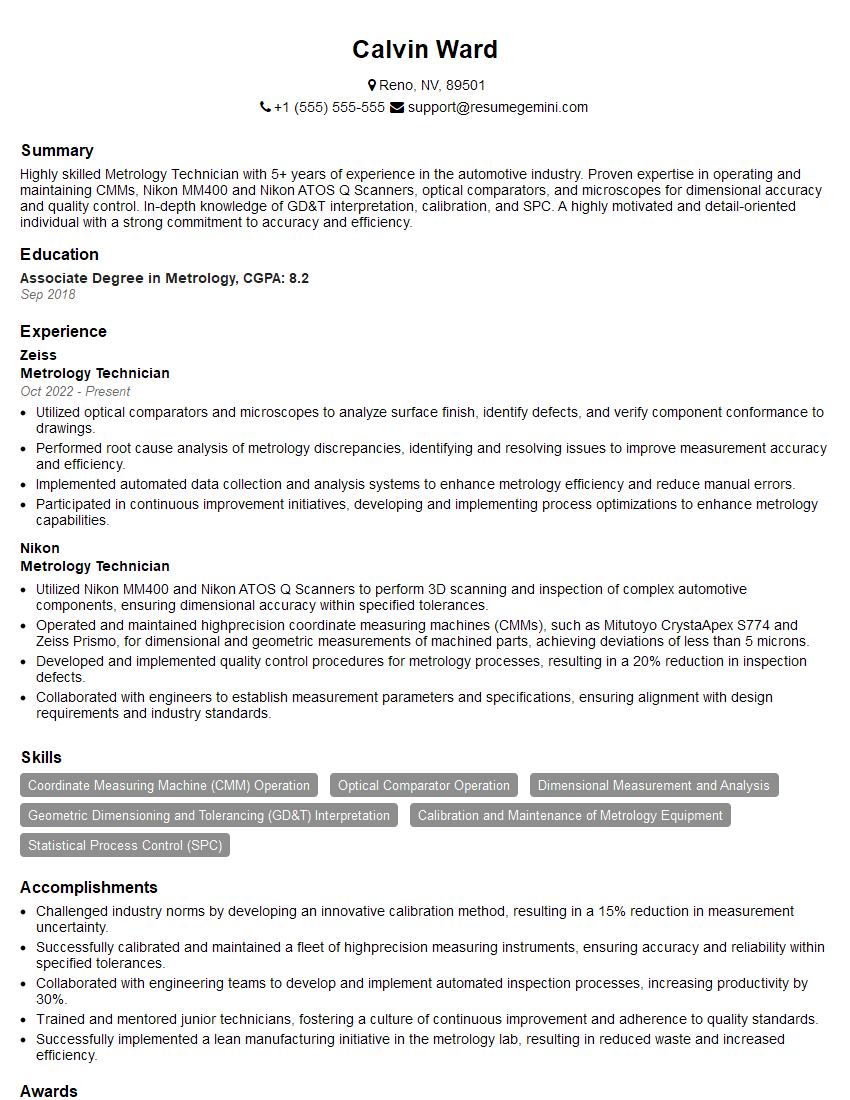

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Don’t miss out on holiday savings! Build your dream resume with ResumeGemini’s ATS optimized templates.

Q 16. How do you assess the health and integrity of a sensor before calibration?

Assessing sensor health before calibration is crucial for ensuring accurate and reliable results. The process involves a series of checks that vary based on the sensor type but generally includes:

- Visual Inspection: Check for physical damage, corrosion, loose connections, or any obvious signs of wear and tear.

- Continuity Test: For electrical sensors, a simple continuity test verifies the electrical path from the sensor to the measurement system is intact. This helps identify open circuits or shorts.

- Zero and Span Check: A quick check at the sensor’s zero and full-scale points provides an initial indication of its performance. Large deviations might indicate drift or other problems.

- Comparison with known standards: If possible, compare the sensor’s reading against a reference standard or a known good sensor under similar conditions to get a quick measure of accuracy before fully calibrating.

- Reviewing previous calibration data: Analyzing historical calibration reports reveals trends in sensor performance, indicating potential issues such as drift or degradation.

For instance, before calibrating a temperature sensor, I would visually inspect for any physical damage and check its resistance using a multimeter. This preliminary assessment allows for early detection of potential issues, preventing wasted time and resources during full calibration if the sensor needs repair or replacement.

Q 17. What are the common types of transducer technologies?

Transducer technologies encompass a vast array of devices that convert one form of energy into another for measurement purposes. Common types include:

- Resistive Transducers: These transducers change their resistance in response to the measured parameter. Examples include potentiometers (measuring position), strain gauges (measuring strain or force), and thermistors (measuring temperature).

- Capacitive Transducers: These use changes in capacitance to measure parameters. Examples include capacitive proximity sensors and level sensors.

- Inductive Transducers: These use changes in inductance for measurements. Examples include LVDTs (Linear Variable Differential Transformers) for displacement measurements and inductive proximity sensors.

- Piezoelectric Transducers: These generate an electrical charge in response to mechanical stress or pressure. They’re used in accelerometers, pressure sensors, and microphones.

- Photoelectric Transducers: These convert light into electrical signals, used in photodiodes, phototransistors, and photomultiplier tubes for various light-related measurements.

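- Thermoelectric Transducers (Thermocouples): These generate a small voltage that depends on the temperature difference between the measuring junction and the reference junction of two dissimilar metals. The relationship is only approximately linear, so standard reference tables or polynomials are used for conversion.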

The choice of transducer depends heavily on the application and the parameter being measured. For example, measuring very small displacements might necessitate the use of an LVDT, while a simple pressure measurement could utilize a piezoresistive sensor.

Q 18. Describe your experience with calibration software and equipment.

My experience with calibration software and equipment spans several years and diverse applications. I’m proficient in using various calibration software packages, including LabVIEW, MATLAB, and specialized calibration software provided by manufacturers of specific equipment. These packages allow for automated data acquisition, analysis, and report generation. This automation is crucial for handling large datasets efficiently and ensuring traceability.

I’ve worked extensively with calibration equipment such as digital multimeters (DMMs), precision power supplies, temperature baths, pressure calibrators, and signal generators. The choice of equipment is dictated by the sensor type and the required accuracy. My expertise extends to setting up and troubleshooting calibration systems, ensuring the integrity and accuracy of the entire measurement chain. For example, I am experienced in selecting appropriate standards and calculating measurement uncertainties to comply with relevant standards.

Q 19. How do you handle out-of-tolerance readings during calibration?

Handling out-of-tolerance readings during calibration is a critical aspect of the process, requiring systematic investigation and careful documentation. The first step is to verify the reading. This involves rechecking the connections, confirming the proper functioning of the equipment, and repeating the measurement several times.

If the reading consistently falls outside the tolerance limits, I would:

- Investigate the cause: The issue might stem from sensor drift, damage to the sensor, malfunctioning equipment, or even environmental factors. A thorough investigation is crucial to identify the root cause.

- Implement corrective actions: Corrective actions may include sensor repair, recalibration of equipment, or even sensor replacement if necessary.

- Document the findings: All findings, including the out-of-tolerance readings, the investigation process, the corrective actions, and the final result, must be meticulously documented in the calibration report.

- Assess the impact: Determine if the out-of-tolerance readings affect the reliability and accuracy of the sensor. Depending on the context, this may necessitate a re-evaluation of the measurement process or even lead to a re-calibration of associated systems.

For instance, if a pressure sensor consistently reads high, I might check for leaks in the system or verify the accuracy of the pressure calibrator used. Thorough documentation is key for traceability and accountability.
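The verification step can be illustrated with a small check that averages repeated readings before comparing them with the tolerance, so that a single noisy reading does not trigger an investigation. The function name `check_tolerance` and the values are hypothetical.

```python
from statistics import mean

def check_tolerance(readings, reference, tolerance):
    """Compare the mean of repeated readings with the reference value."""
    error = mean(readings) - reference
    return {"error": error, "in_tolerance": abs(error) <= tolerance}

# Hypothetical repeats at a 5.00 bar reference point with a +/-0.05 bar tolerance.
first_attempt = check_tolerance([5.08, 5.07, 5.09], reference=5.00, tolerance=0.05)
after_leak_fix = check_tolerance([5.02, 5.01, 5.03], reference=5.00, tolerance=0.05)

print(first_attempt)
print(after_leak_fix)
```

Both results, together with the corrective action taken between them, belong in the calibration report.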

Q 20. What are the different types of calibration intervals?

Calibration intervals vary depending on the sensor’s type, application, and environmental conditions. Common types include:

- Daily Calibration: Used for sensors requiring high accuracy and those subject to significant environmental variations, like some industrial process sensors.

- Weekly Calibration: Applicable for sensors used in less critical applications or where environmental conditions are relatively stable.

- Monthly Calibration: A more common interval for many sensors, particularly those in less demanding environments.

- Quarterly Calibration: Suitable for sensors exhibiting good stability and those used in relatively benign conditions.

- Annual Calibration: Often used for sensors that show minimal drift or those in controlled environments. This is common for sensors used in laboratory settings.

The frequency is not fixed and can be adjusted based on the sensor’s performance history and its criticality in the overall system. If a sensor shows signs of drift or instability, more frequent calibration is necessary.

Q 21. How do you determine the appropriate calibration interval for a specific sensor?

Determining the appropriate calibration interval for a specific sensor involves careful consideration of several factors:

- Sensor stability: Sensors with low drift and high stability can have longer calibration intervals.

- Environmental conditions: Sensors operating in harsh environments (high temperature, humidity, vibration) require more frequent calibration.

- Sensor usage: Continuous operation or frequent use may necessitate shorter intervals.

- Criticality of the measurement: Sensors used in safety-critical systems or those whose readings have significant consequences require more frequent calibration.

- Historical data: Analyzing past calibration records helps in identifying trends and predicting future performance. A history of rapid drift may indicate a need for shorter intervals.

- Relevant standards and regulations: Regulatory bodies often specify calibration intervals for certain applications.

For example, a temperature sensor used in a food processing plant, where accurate temperature control is crucial for safety and product quality, would require more frequent calibration (e.g., weekly or monthly) than a temperature sensor used in a general laboratory setting where a yearly calibration might suffice. A formal risk assessment can help guide the selection of the appropriate calibration interval.
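The use of historical data can be sketched as a simple drift projection: fit a line to past as-found errors and estimate how long the sensor takes to consume a chosen fraction of its tolerance. This is only one possible heuristic. It assumes roughly linear drift and no adjustment between calibrations, and the function names, the `safety_fraction` parameter, and the data are all illustrative assumptions.

```python
def drift_rate(months, errors):
    """Least-squares slope of as-found error versus elapsed time."""
    n = len(months)
    mt, me = sum(months) / n, sum(errors) / n
    sxx = sum((t - mt) ** 2 for t in months)
    sxy = sum((t - mt) * (e - me) for t, e in zip(months, errors))
    return sxy / sxx

def suggested_interval(months, errors, tolerance, safety_fraction=0.5):
    """Months until projected drift uses safety_fraction of the tolerance."""
    rate = abs(drift_rate(months, errors))
    if rate == 0:
        return None  # no measurable drift; the history alone sets no limit
    return safety_fraction * tolerance / rate

# Hypothetical as-found errors (degrees C) from five past calibrations.
history_months = [0, 6, 12, 18, 24]
as_found_error_c = [0.00, 0.05, 0.10, 0.15, 0.20]

interval = suggested_interval(history_months, as_found_error_c, tolerance=0.5)
print(f"suggested interval: about {interval:.0f} months")
```

A projection like this is a starting point for the risk assessment, not a replacement for it. Regulatory requirements and the criticality of the measurement can still call for a shorter interval.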

Q 22. Explain your experience with different calibration standards (e.g., NIST, ISO).

My experience covers NIST (National Institute of Standards and Technology) traceability and ISO (International Organization for Standardization) standards. NIST-traceable reference standards link our calibrations to the US national measurement system through an unbroken chain of comparisons. This is crucial for applications demanding high accuracy, such as those in the aerospace and pharmaceutical industries. ISO/IEC 17025 sets out the requirements for the competence of testing and calibration laboratories and provides the framework for a quality management system that keeps our processes reliable and consistent.

For instance, in a recent project calibrating pressure transducers for a medical device manufacturer, we adhered strictly to NIST-traceable pressure standards, so our calibration results were validated against nationally recognized values. The ISO/IEC 17025 framework guided our laboratory’s quality management system, with meticulous record-keeping, proficiency testing, and regular audits to maintain confidence in our results.

Q 23. Describe your experience with calibration procedures and documentation.

Calibration procedures are meticulously documented and follow a standardized format to ensure repeatability and traceability. This includes identifying the instrument, its unique serial number, the calibration standards used, the date and time of calibration, and the results obtained. We use specialized software to manage calibration data, generate certificates of calibration, and track instrument performance over time.

For example, a typical procedure for calibrating a thermocouple involves comparing its readings to a known standard, often a platinum resistance thermometer, at multiple temperature points. This data is then plotted to generate a calibration curve, which is used to correct future measurements made with that thermocouple.

The entire process is meticulously documented, including any deviations from standard procedures and corrective actions taken. The documentation ensures that anyone reviewing the calibration can fully understand the process and the results.

Q 24. What is your approach to problem-solving in a calibration environment?

My approach to problem-solving in a calibration environment is systematic and data-driven. I start by clearly defining the problem, gathering all relevant data (e.g., calibration history, instrument specifications, environmental conditions), and then analyzing the data to identify potential causes. This might involve examining calibration curves for anomalies, checking for equipment malfunctions, or reviewing environmental factors. Once the root cause is identified, I develop and implement a solution. This could be as simple as adjusting instrument settings or replacing a faulty component, or it might involve more complex solutions, such as developing a new calibration procedure or upgrading equipment. For example, I once encountered a situation where a series of pressure transducers showed consistently high readings. Through a systematic investigation, I identified a pressure leak in the calibration system. The leak was repaired, and subsequent calibrations produced accurate results.

Q 25. How do you ensure the accuracy and reliability of calibration results?

Ensuring the accuracy and reliability of calibration results involves a multi-pronged approach. Firstly, using NIST-traceable standards is paramount. Secondly, regular calibration of our own equipment is crucial to prevent errors propagating through the system. We use a combination of preventative maintenance, regular checks and recalibrations on our reference standards. Thirdly, rigorous adherence to documented procedures minimizes human error. Statistical process control techniques are also employed to monitor the calibration process and identify any trends or anomalies. For example, we track the uncertainties associated with each calibration to understand the overall measurement uncertainty and provide a realistic confidence interval for our results. Finally, regular proficiency testing through participation in interlaboratory comparison programs further validates our results and ensures our procedures are meeting the standards.
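
As a rough illustration of tracking the uncertainties behind a calibration, the sketch below combines independent standard uncertainties by root-sum-square and applies a coverage factor. It assumes the contributions are uncorrelated and already expressed as standard uncertainties in the same unit. The budget values and function names are hypothetical.

```python
import math


def combined_standard_uncertainty(components):
    """Root-sum-square of uncorrelated standard uncertainties."""
    return math.sqrt(sum(u ** 2 for u in components))


def expanded_uncertainty(components, k=2.0):
    """Combined uncertainty multiplied by a coverage factor k.
    k = 2 corresponds to roughly 95 % coverage for a normal distribution."""
    return k * combined_standard_uncertainty(components)


# Hypothetical uncertainty budget for a pressure calibration, in kPa.
budget = {
    "reference standard": 0.03,
    "repeatability": 0.04,
    "resolution": 0.012,
}

u_c = combined_standard_uncertainty(budget.values())
U = expanded_uncertainty(budget.values())
print(f"combined: {u_c:.4f} kPa, expanded (k=2): {U:.4f} kPa")
```

A real budget would also need to justify each entry's distribution and account for any correlation between contributions, which this sketch deliberately leaves out.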

Q 26. Explain your experience working in a calibration laboratory setting.

My experience in a calibration laboratory setting spans several years, where I’ve worked on a wide range of instruments including pressure transducers, thermocouples, and flow meters across different industries. My role involves planning and performing calibrations, analyzing results, generating reports, maintaining calibration equipment, and managing the laboratory’s quality system. I’m adept at utilizing various calibration techniques and am proficient in operating sophisticated testing equipment. This work requires attention to detail, strong analytical skills, and a deep understanding of metrology principles. Moreover, I’m experienced in training and supervising junior technicians, ensuring consistency in our work and adherence to quality standards. The collaborative nature of the lab fosters a strong sense of teamwork, crucial in ensuring accuracy and efficiency.

Q 27. Describe a challenging calibration project you have worked on and how you overcame it.

One challenging project involved calibrating a highly specialized optical sensor used in a cutting-edge research facility. The sensor was incredibly sensitive to environmental variations and exhibited non-linear behavior. Initially, standard calibration techniques proved inadequate. To overcome this, I collaborated with the sensor manufacturer and researchers to thoroughly investigate the sensor’s behavior under different conditions. This involved developing a customized calibration setup that meticulously controlled environmental factors such as temperature and humidity. We also utilized advanced statistical modeling techniques to characterize the sensor’s non-linear response. By adapting our approach to the unique characteristics of the sensor, we successfully generated a robust and accurate calibration curve that met the researchers’ exacting requirements. This project highlighted the importance of adaptability and collaboration in overcoming challenging calibration tasks.
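
The answer above does not say which model was used for the non-linear response. As a simple stand-in, the sketch below fits a quadratic curve to calibration data by least squares and reports the largest residual as a quick check of fit quality. It is written in plain Python for self-containment. The function names are hypothetical, and for real work a numerical library routine would usually be a better choice than solving the normal equations by hand.

```python
def fit_quadratic(xs, ys):
    """Least-squares fit of y = a + b*x + c*x**2 using the normal equations."""
    if len(xs) != len(ys) or len(xs) < 3:
        raise ValueError("need at least three (x, y) pairs")
    # Sums of powers of x and of y * x**p that make up the normal equations.
    s = [sum(x ** p for x in xs) for p in range(5)]
    t = [sum(y * x ** p for x, y in zip(xs, ys)) for p in range(3)]
    # Augmented 3x4 matrix [A | rhs].
    m = [[s[i + j] for j in range(3)] + [t[i]] for i in range(3)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        if abs(m[col][col]) < 1e-12:
            raise ValueError("calibration points do not determine a quadratic")
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [a - f * b for a, b in zip(m[r], m[col])]
    a, b, c = (m[i][3] / m[i][i] for i in range(3))
    return a, b, c


def max_residual(xs, ys, coeffs):
    """Largest absolute difference between the data and the fitted curve."""
    a, b, c = coeffs
    return max(abs(y - (a + b * x + c * x * x)) for x, y in zip(xs, ys))
```

If the largest residual is still large compared with the required accuracy, that suggests the chosen model does not capture the sensor's behavior and a different model or tighter environmental control is needed.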

Key Topics to Learn for Calibration of Sensors and Transducers Interview

- Sensor Principles: Understanding the fundamental operating principles of various sensor types (e.g., resistive, capacitive, inductive, piezoelectric, optical) and their limitations.

- Transducer Characteristics: Analyzing key transducer parameters like sensitivity, linearity, accuracy, precision, hysteresis, drift, and resolution. Knowing how to interpret datasheets and specifications.

- Calibration Methods: Mastering different calibration techniques, including two-point, multi-point, and linearization methods. Understanding the difference between traceable and non-traceable calibrations.

- Calibration Equipment: Familiarity with common calibration equipment such as multimeters, signal generators, data acquisition systems, and standards. Knowing their limitations and proper usage.

- Uncertainty Analysis: Understanding the concept of measurement uncertainty and its propagation through the calibration process. Being able to perform uncertainty calculations and express results appropriately.

- Practical Applications: Discussing real-world applications of sensor calibration across various industries (e.g., automotive, aerospace, manufacturing, healthcare). Being able to relate theoretical concepts to specific scenarios.

- Troubleshooting and Diagnostics: Demonstrating problem-solving skills in identifying and resolving common issues encountered during sensor calibration, such as drift, noise, and offset errors.

- Calibration Standards and Traceability: Understanding the importance of traceable calibration standards and their role in ensuring the accuracy and reliability of measurements.

- Data Acquisition and Analysis: Knowing how to acquire, process, and analyze calibration data using appropriate software and techniques. Being able to interpret graphs and charts effectively.

- Calibration Procedures and Documentation: Understanding the importance of following established calibration procedures and maintaining proper documentation. Being able to create and interpret calibration certificates.
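
To make the two-point method from the list above concrete, here is a minimal Python sketch. It assumes the sensor responds linearly between the two reference points, and the 4–20 mA to 0–100 kPa mapping is a hypothetical example, not a value taken from this article.

```python
def two_point_calibration(raw_low, raw_high, ref_low, ref_high):
    """Return (gain, offset) so that gain * raw + offset reproduces
    the reference values at the two calibration points."""
    if raw_high == raw_low:
        raise ValueError("the two raw readings must differ")
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low
    return gain, offset


def apply_calibration(raw, gain, offset):
    """Convert a raw sensor output to engineering units."""
    return gain * raw + offset


# Hypothetical example: 4 mA at 0 kPa and 20 mA at 100 kPa.
gain, offset = two_point_calibration(4.0, 20.0, 0.0, 100.0)
print(apply_calibration(12.0, gain, offset))
```

A two-point calibration corrects offset and gain errors only. Non-linearity between the two points is exactly what multi-point calibration and linearization are meant to address.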

Next Steps

Mastering Calibration of Sensors and Transducers opens doors to exciting career opportunities in diverse fields, offering strong prospects for advancement and higher earning potential. To maximize your chances of securing your dream role, a well-crafted, ATS-friendly resume is crucial. ResumeGemini is a trusted resource that can help you build a professional resume that highlights your skills and experience effectively. Examples of resumes tailored specifically to Calibration of Sensors and Transducers are available to guide you, ensuring your application stands out.
