Are you ready to stand out in your next interview? Understanding and preparing for Cloud Monitoring and Logging interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Cloud Monitoring and Logging Interview

Q 1. Explain the difference between metrics, logs, and traces.

Metrics, logs, and traces are three fundamental pillars of observability, each providing a different perspective on the health and performance of a system. Think of them as different lenses through which you view your application.

- Metrics: These are numerical data points collected at regular intervals, representing the state of your system at a specific point in time. Examples include CPU utilization, memory usage, request latency, and error rate. They’re great for understanding trends and identifying anomalies over time. Imagine a dashboard showing these metrics – a quick glance reveals the overall health. They’re often aggregated and visualized using tools like Grafana.

- Logs: These are textual records of events that occur within your system. They provide rich context about individual events, including timestamps, error messages, and user actions. Logs are like detailed event reports, useful for diagnosing specific problems. For instance, a log might record a specific database error with details about the query and affected data. They’re usually aggregated and analyzed using tools like Elasticsearch, Fluentd, and Kibana (EFK stack) or Splunk.

- Traces: These are chronological sequences of events that track the flow of a single request through a distributed system. They show how a request traverses multiple services, highlighting latency at each stage. If you imagine a complex system like an e-commerce site with multiple microservices (payment, inventory, etc.), traces can help you pinpoint the service causing a delay. Tools like Jaeger and Zipkin help to visualize these traces.

In essence: Metrics provide a summary, logs give details, and traces reveal the flow. Effective monitoring utilizes all three for a comprehensive understanding.

Q 2. Describe your experience with various monitoring tools (e.g., Prometheus, Grafana, Datadog, CloudWatch).

I have extensive experience with several prominent monitoring tools, each with its own strengths and weaknesses. My experience spans from basic setup and configuration to advanced query optimization and alert management.

- Prometheus: I’ve used Prometheus for its powerful querying capabilities (PromQL) and its ability to scrape metrics from various sources. I’ve built dashboards visualizing metrics related to application performance, resource utilization, and error rates. Its open-source nature and flexibility made it ideal for several projects.

- Grafana: I’ve leveraged Grafana extensively for building interactive dashboards and visualizing metrics collected from Prometheus, InfluxDB, and other data sources. I’ve created customized visualizations, alerts, and reports, allowing for efficient monitoring and troubleshooting.

- Datadog: In projects requiring a more comprehensive, out-of-the-box solution, I’ve employed Datadog. Its automated discovery, built-in integrations, and user-friendly interface simplified the monitoring and alerting process, especially in complex, multi-cloud environments. I’ve designed custom dashboards, created and managed alerts, and integrated Datadog with other tools in the DevOps pipeline.

- CloudWatch: Within AWS environments, I’ve extensively used CloudWatch. I’ve configured CloudWatch to monitor EC2 instances, Lambda functions, databases, and other AWS services. I’m proficient in creating custom metrics, alarms, and log groups, integrating them with other AWS services for comprehensive monitoring and troubleshooting.

My experience allows me to select the right tool for each specific project based on factors like scale, budget, existing infrastructure, and specific monitoring needs.

Q 3. How do you design a monitoring system for a high-volume, low-latency application?

Designing a monitoring system for a high-volume, low-latency application requires a focus on efficiency and minimal overhead. The key is to avoid impacting the performance you’re trying to monitor.

- Essential Metrics: Collect only the metrics that directly reflect performance (e.g., request latency percentiles, error rates, queue lengths). Every additional metric adds collection, transmission, and storage overhead, so leave out anything you would never alert or act on.

- Sampling and Aggregation: Implement appropriate sampling techniques to reduce the volume of data transmitted. Aggregate metrics at the appropriate level to minimize the number of data points sent to the monitoring system.

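- Asynchronous Monitoring: Record metrics in memory and export them off the request path so that application threads never block on the monitoring backend. This applies to both collection models. With a pull-based system such as Prometheus, the application only updates in-process counters and the server scrapes them on its own schedule. With a push-based system (e.g., StatsD over UDP), batch the data points and send them from a background worker.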

- Distributed Tracing: Employ distributed tracing to identify bottlenecks across different services. This provides insights into where latency originates, allowing for targeted optimization efforts. Zipkin or Jaeger are excellent tools for this purpose.

- Efficient Data Storage: Use time-series databases (like Prometheus or InfluxDB) optimized for storing and querying large volumes of metrics efficiently. Configure appropriate data retention policies to manage storage costs and prevent data overload.

- Alerting Strategies: Establish sophisticated alerting based on critical thresholds and trends, minimizing false positives to prevent alert fatigue. Use alerting on percentiles (e.g., 99th percentile latency) rather than averages to catch outlier performance issues.

A layered approach with detailed metrics at lower levels and aggregated metrics at higher levels provides the balance between granular insights and overall system health understanding.
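To see why percentiles matter more than averages for a latency-sensitive service, here is a minimal sketch. The latency values are made up for illustration, and `percentile` is a hypothetical helper using the nearest-rank method, not a function from any monitoring library.

```python
def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # 1-based rank computed with integer arithmetic: ceil(pct * n / 100)
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]

# Hypothetical latencies in milliseconds: 98 fast requests and 2 slow outliers.
latencies_ms = [20] * 98 + [2000] * 2

mean_ms = sum(latencies_ms) / len(latencies_ms)
p50_ms = percentile(latencies_ms, 50)
p99_ms = percentile(latencies_ms, 99)

print(f"mean={mean_ms:.1f} ms  p50={p50_ms} ms  p99={p99_ms} ms")
# prints: mean=59.6 ms  p50=20 ms  p99=2000 ms
```

The mean looks healthy while the 99th percentile exposes the two-second outliers, which is the case an alert on averages would miss.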

Q 4. What are some common challenges in implementing cloud monitoring and logging?

Implementing cloud monitoring and logging presents several challenges:

- Data Volume and Cost: Cloud-based systems generate massive amounts of data. Managing this volume effectively, while keeping costs under control, requires careful planning and the use of techniques like data sampling, aggregation, and log rotation.

- Data Security and Compliance: Ensuring the security and privacy of monitoring and log data is crucial. Meeting regulatory compliance requirements (e.g., GDPR, HIPAA) requires implementing appropriate access controls, encryption, and data retention policies.

- Tool Integration and Complexity: Integrating various monitoring and logging tools with existing infrastructure can be complex, requiring expertise in different technologies and APIs. Managing this diverse ecosystem efficiently is key.

- Alert Fatigue: Poorly configured alerting systems can lead to a flood of alerts, making it difficult to identify and address actual issues. Implementing robust alert filtering and prioritization mechanisms is critical.

- Scalability and Reliability: Monitoring systems themselves need to be scalable and highly available to avoid becoming a single point of failure. Implementing redundancy, fault tolerance, and automated scaling mechanisms is necessary.

- Lack of Centralized Visibility: The lack of a unified view of data across different services and clouds complicates troubleshooting and performance analysis. Centralized logging and monitoring dashboards are needed to provide a holistic view.

Successfully addressing these challenges requires careful planning, selection of appropriate tools and technologies, and a strong understanding of DevOps principles.

Q 5. Explain different strategies for log aggregation and analysis.

Log aggregation and analysis are critical for understanding system behavior and troubleshooting issues. Several strategies exist, each with its strengths and weaknesses:

- Centralized Logging Systems: Tools like Elasticsearch, Fluentd, and Kibana (EFK stack), or Splunk, collect logs from various sources, providing a unified view. They offer features like indexing, searching, and analysis.

- Cloud-Based Logging Services: Cloud providers (AWS CloudWatch, Azure Monitor, Google Cloud Logging) offer managed logging services with built-in features for scalability, security, and analysis. These are often integrated with other cloud services.

- Log Shippers: Tools like Fluentd and Logstash collect, process, and forward logs to a central repository. They support various log formats and can transform logs before sending them.

- Log Aggregation with Filtering and Enrichment: Before storing logs, it’s crucial to filter out unnecessary information and add relevant context (enrichment) through tools like Grok (for pattern extraction). This improves the efficiency of analysis.

- Log Analysis Tools: Utilize tools that support structured logging (JSON or other formats). Querying on specific fields within the logs lets you pinpoint problems quickly.

The chosen strategy depends on factors such as scale, budget, existing infrastructure, and the complexity of the logging requirements.
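As a rough illustration of the filtering and enrichment step, the sketch below uses a plain regular expression in place of a Grok pattern. The line layout, the `service` field, and the function names are assumptions made for this example. A real shipper such as Fluentd or Logstash would do this in its own configuration.

```python
import json
import re

# Pattern for the assumed line layout "<date> <time> <LEVEL>: <message>".
LINE_PATTERN = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2}) (?P<time>\d{2}:\d{2}:\d{2}) "
    r"(?P<level>[A-Z]+): (?P<message>.*)$"
)

def parse_line(line, service):
    """Return a structured record, or None if the line does not match."""
    match = LINE_PATTERN.match(line.strip())
    if match is None:
        return None
    record = match.groupdict()
    record["timestamp"] = f"{record.pop('date')}T{record.pop('time')}"
    record["service"] = service  # enrichment: context the raw line lacked
    return record

def filter_and_enrich(lines, service, drop_levels=("DEBUG",)):
    """Yield parsed records, skipping unparseable lines and noisy levels."""
    for line in lines:
        record = parse_line(line, service)
        if record is not None and record["level"] not in drop_levels:
            yield record

raw_lines = [
    "2024-10-27 10:00:00 ERROR: Database connection failed",
    "2024-10-27 10:00:01 DEBUG: cache warm-up tick",
    "not a log line",
]
records = list(filter_and_enrich(raw_lines, service="checkout"))
for record in records:
    print(json.dumps(record, sort_keys=True))
```

Only the ERROR line survives: the DEBUG line is filtered out and the malformed line is skipped rather than stored.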

Q 6. How do you handle alert fatigue in a large-scale monitoring system?

Alert fatigue is a significant problem in large-scale monitoring systems. It happens when the constant stream of alerts overwhelms engineers, leading to missed critical alerts and a decrease in responsiveness.

- Smart Alerting: Alert on correlations, trends, and sustained deviations from the system’s normal behavior rather than on static, single-metric thresholds alone. This requires first establishing a baseline of what normal looks like.

- Alert Grouping and Suppression: Group related alerts into single incidents to reduce noise. Implement alert suppression to avoid repeated alerts for the same issue within a defined timeframe.

- Contextual Information: Provide rich context with alerts to help engineers quickly understand the problem. Include relevant metrics, log extracts, and other information.

- Alert Prioritization: Assign severity levels to alerts based on their potential impact. Prioritize alerts based on severity and business criticality.

- On-Call Rotation and Escalation Policies: Implement robust on-call rotation schedules and escalation procedures to ensure that alerts are addressed efficiently. Consider using tools to automate on-call and escalation.

- Feedback Loop: Regularly review and adjust alert configurations based on feedback from engineers to fine-tune the system and reduce false positives.

A well-designed alerting system should be proactive and informative, not overwhelming. Continuous monitoring and optimization are crucial.
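The grouping and suppression idea can be sketched in a few lines. The class name, the five-minute window, and the alert stream below are hypothetical. Dedicated tools such as Alertmanager or PagerDuty offer this as configuration rather than code.

```python
from collections import defaultdict

class AlertSuppressor:
    """Notify once per (service, alert name) key within a quiet window."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self._last_notified = {}            # key -> time of last notification
        self.suppressed = defaultdict(int)  # key -> number of muted repeats

    def should_notify(self, service, name, now):
        key = (service, name)
        last = self._last_notified.get(key)
        if last is not None and now - last < self.window_seconds:
            self.suppressed[key] += 1
            return False
        self._last_notified[key] = now
        return True

# Hypothetical alert stream: (seconds since start, service, alert name).
events = [
    (0, "payments", "HighErrorRate"),
    (30, "payments", "HighErrorRate"),
    (60, "inventory", "HighLatency"),
    (200, "payments", "HighErrorRate"),
    (400, "payments", "HighErrorRate"),
]
suppressor = AlertSuppressor(window_seconds=300)
notified = [e for e in events if suppressor.should_notify(e[1], e[2], now=e[0])]
print(notified)
```

Of the five events, three produce a notification. The two repeats inside the window are counted in `suppressed`, so that count can still be attached to the incident as context.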

Q 7. Discuss your experience with different log formats (e.g., JSON, CSV, plain text).

Different log formats impact the ease of aggregation, analysis, and search.

- Plain Text: Simplest format, but lacks structure, making analysis challenging. Useful for simple applications, but quickly becomes unwieldy for large-scale systems. Example:

2024-10-27 10:00:00 ERROR: Database connection failed

- CSV (Comma Separated Values): Structured format, easy to parse. Suitable for simple log structures with a fixed number of fields. Example:

2024-10-27,10:00:00,ERROR,Database connection failed

- JSON (JavaScript Object Notation): Highly structured, flexible, and widely used. Allows for complex log structures with hierarchical data. Ideal for large-scale systems and easier to query. Example:

{"timestamp": "2024-10-27 10:00:00", "level": "ERROR", "message": "Database connection failed", "details": {"error_code": 1234}}

JSON is generally preferred for its flexibility and machine-readability, especially for complex systems where structured logging is critical for efficient analysis. However, plain text remains useful for simpler scenarios where structured data is unnecessary.
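For readers who want to emit JSON logs from application code, here is one possible approach using only Python’s standard `logging` module. The `JsonFormatter` class, the `orders` logger name, and the `details` field are choices made for this sketch, not a standard.

```python
import io
import json
import logging

class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object per line."""

    def format(self, record):
        payload = {
            "timestamp": self.formatTime(record, "%Y-%m-%d %H:%M:%S"),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        details = getattr(record, "details", None)  # set via logging's `extra`
        if details is not None:
            payload["details"] = details
        return json.dumps(payload)

stream = io.StringIO()  # stand-in for stdout or a log file
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("orders")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False  # keep the demo output out of the root logger

logger.error("Database connection failed", extra={"details": {"error_code": 1234}})

entry = json.loads(stream.getvalue())
print(entry["level"], entry["details"]["error_code"])
```

Because every line is a complete JSON object, an aggregator can index fields such as `level` or `details.error_code` without custom parsing rules.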

Q 8. Describe your experience with distributed tracing.

Distributed tracing is crucial for understanding the flow of requests across microservices in a complex application. Imagine a user placing an order – the request might travel through multiple services: inventory, payment processing, shipping, etc. Distributed tracing allows us to follow that request’s journey, measuring latency at each step and identifying bottlenecks. I’ve extensively used tools like Jaeger and Zipkin, which inject unique trace IDs into requests. This allows us to correlate logs and metrics from different services, providing a holistic view of the request’s performance. For example, I once used distributed tracing to pinpoint a slow database query in the payment processing service that was causing significant delays in order completion. This was only revealed by examining the trace’s timings across all involved services; simply monitoring individual services wouldn’t have surfaced the root cause.

In practice, this involves instrumenting services to capture events, propagating trace context (e.g., via HTTP headers), and using a backend system to aggregate and visualize the traces. Understanding the relationship between spans (individual operations within a service) within a larger trace is key to effective troubleshooting. This method is indispensable in modern, distributed architectures.
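Context propagation is easier to picture with a small example. The sketch below builds headers in the W3C Trace Context `traceparent` layout (version, trace ID, parent span ID, flags). The choice of that header and the helper names are assumptions for illustration. In practice an instrumentation library does this for you.

```python
import secrets

def new_traceparent():
    """Start a trace: version 00, 16-byte trace ID, 8-byte span ID, sampled flag."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"

def child_traceparent(parent_header):
    """Keep the trace ID and mint a new span ID for the downstream call."""
    version, trace_id, _parent_span_id, flags = parent_header.split("-")
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}"

def trace_id_of(header):
    return header.split("-")[1]

def span_id_of(header):
    return header.split("-")[2]

# Hypothetical hop: an order service receives a request and calls a payment service.
incoming_headers = {"traceparent": new_traceparent()}
outgoing_headers = {"traceparent": child_traceparent(incoming_headers["traceparent"])}

print(incoming_headers["traceparent"])
print(outgoing_headers["traceparent"])
```

Both headers share the same trace ID, which is what lets the backend stitch spans from different services into one trace, while each hop gets its own span ID.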

Q 9. How do you ensure the security and compliance of your monitoring and logging data?

Security and compliance are paramount. We employ a multi-layered approach. First, data at rest is encrypted using robust encryption algorithms. We leverage technologies like AES-256 for data stored in databases or cloud storage. Second, data in transit is secured using HTTPS and TLS. All communication between monitoring agents, collection points, and the central logging/monitoring platform is encrypted. Third, access control is strictly enforced using role-based access control (RBAC). Only authorized personnel have access to sensitive data, and their access is regularly audited. Fourth, we adhere to relevant compliance standards like GDPR, HIPAA, or PCI DSS, depending on the specific data we handle and the industry regulations that apply. This includes data anonymization techniques where appropriate.

Regular security assessments and penetration testing are performed to identify and address vulnerabilities proactively. We employ intrusion detection systems (IDS) and security information and event management (SIEM) solutions to monitor for suspicious activity. Finally, robust logging of all monitoring and logging system operations is crucial for security auditing and incident response. We maintain detailed audit logs to track who accessed what data, when, and from where.

Q 10. What are some best practices for designing effective dashboards?

Effective dashboards are concise, informative, and focused on key performance indicators (KPIs). The goal is to provide a clear, at-a-glance overview of system health and performance. Think of them as the cockpit of your application’s health. I adhere to the following best practices:

- Focus on key metrics: Only include the most important metrics relevant to the user’s needs and role. Avoid overwhelming the dashboard with unnecessary data.

- Clear visualization: Utilize appropriate chart types (line graphs for trends, bar charts for comparisons, gauges for thresholds) and use clear, consistent legends and labels.

- Effective use of color: Use color strategically to highlight important information, but avoid excessive or distracting color schemes.

- Appropriate granularity: Choose the right time intervals and aggregation levels to show both high-level trends and granular details when needed.

- Actionable insights: Dashboards should not just display data but provide insights that drive action. Include alerts or notifications when thresholds are breached.

- User roles and permissions: Tailor dashboards to different user roles, showing only relevant information to maintain focus and avoid information overload.

For example, a dashboard for a DevOps engineer might focus on CPU utilization, memory usage, and error rates. A business-oriented dashboard could focus on revenue, order processing time, and customer satisfaction scores. A well-designed dashboard helps reduce alert fatigue and supports proactive problem-solving.

Q 11. Explain how you would troubleshoot a performance issue using monitoring and logging data.

Troubleshooting with monitoring and logging data is a systematic process. It’s like being a detective, piecing together clues to solve a mystery. My approach usually involves:

- Identify the symptom: Pinpoint the performance issue. Is it slow response times, high error rates, or something else?

- Gather data: Collect relevant metrics and logs from the affected system(s). This might involve reviewing system logs, application logs, database queries, and network traffic.

- Correlate data: Look for patterns and correlations between metrics and logs. For example, a spike in CPU usage might coincide with a surge in error logs.

- Isolate the root cause: Use the gathered data to pinpoint the underlying cause. This could involve examining specific code sections, database queries, or network configurations.

- Test and validate: Implement changes to verify the fix and monitor for recurrence.

For instance, if I see slow response times on a web application, I’d start by checking the application logs for errors. Then I’d look at metrics like CPU usage, memory usage, and database query performance. If I find high CPU utilization and slow database queries, the investigation would narrow down to resolving the database performance issues or optimizing the application code impacting the database.
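The correlation step can be as simple as lining up metrics and logs on a shared time axis. This sketch assumes per-minute CPU samples and error-log timestamps already bucketed by minute. The data and the 85% threshold are invented for the example.

```python
from collections import Counter

# Hypothetical samples: (minute, cpu_percent) and the minute of each error log.
cpu_samples = [(0, 35), (1, 40), (2, 92), (3, 95), (4, 45)]
error_log_minutes = [2, 2, 3, 3, 3, 4]

def correlate(cpu_samples, error_minutes, cpu_threshold=85):
    """Return (minute, cpu, error count) rows for minutes above the CPU threshold."""
    errors_per_minute = Counter(error_minutes)
    return [
        (minute, cpu, errors_per_minute[minute])
        for minute, cpu in cpu_samples
        if cpu > cpu_threshold
    ]

suspects = correlate(cpu_samples, error_log_minutes)
for minute, cpu, errors in suspects:
    print(f"minute {minute}: cpu={cpu}% errors={errors}")
```

Minutes 2 and 3 show high CPU together with a burst of errors, which narrows the log search to a two-minute window. Overlap in time is a lead to investigate, not proof of cause.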

Q 12. How do you use monitoring and logging data to improve the reliability and performance of applications?

Monitoring and logging data are invaluable for improving application reliability and performance. They provide continuous feedback on the health and performance of applications, enabling proactive problem-solving and optimization. Using this data, I can:

- Identify and resolve performance bottlenecks: By analyzing metrics like CPU usage, memory consumption, and network latency, I can identify and address performance bottlenecks before they impact users.

- Proactive problem detection: Monitoring tools can detect anomalies and potential issues before they escalate into major outages. This allows us to address problems before they impact users, enhancing reliability.

- Capacity planning: Monitoring data allows us to predict future resource needs, enabling efficient capacity planning and preventing performance degradation due to resource limitations.

- Root cause analysis: Examining logs helps identify the root cause of errors and incidents, enabling us to fix them and prevent recurrence.

- Performance optimization: Analyzing performance data helps identify areas for optimization, like inefficient code or database queries, leading to better application performance.

For example, if the monitoring system detects a consistent spike in error rates during peak hours, I’d examine the logs to identify the underlying issues. This might reveal a database query that needs optimization or a code bug that needs fixing. By continuously monitoring and analyzing these data points, we can keep our applications running reliably and efficiently.

Q 13. Describe your experience with setting up and managing monitoring agents.

My experience with monitoring agents involves deploying, configuring, and managing agents across various environments (cloud, on-premise). I’ve worked with the Datadog Agent, Prometheus exporters (which expose metrics for the Prometheus server to scrape), and the agents provided by various cloud platforms (e.g., for Google Cloud Monitoring and AWS CloudWatch). Setting up these agents involves configuring them to collect the required metrics and logs, ensuring proper security settings, and defining appropriate reporting intervals.

The key challenges I’ve addressed include managing agent upgrades (ensuring minimal disruption to services), scaling the agent deployment to accommodate growing infrastructure, handling agent failures (implementing automatic restarts and alerts), and securing agent communication (using encryption and authentication). For example, during a large-scale infrastructure migration, I automated the deployment and configuration of monitoring agents using configuration management tools like Ansible, ensuring consistent monitoring coverage across all environments. This was critical in minimizing downtime and ensuring a smooth transition.

Q 14. How do you handle large volumes of log data efficiently?

Handling large volumes of log data efficiently requires a multi-pronged approach. The sheer volume can overwhelm traditional methods. Here’s what I’d do:

- Centralized Logging: Employ a centralized logging platform capable of handling high throughput, such as Elasticsearch, Splunk, or the cloud-native logging services offered by major providers (Google Cloud Logging, AWS CloudWatch Logs, Azure Monitor Logs).

- Log Aggregation and Filtering: Efficiently aggregate logs from multiple sources, and utilize filtering and query optimization techniques to reduce the amount of data processed. This minimizes storage and improves search performance.

- Log Shippers: Use efficient log shippers like Fluentd or Logstash to collect, preprocess, and forward logs to the centralized platform. This improves throughput and reduces latency.

- Log Rotation and Archiving: Implement log rotation policies to manage storage space. Archive older logs to cheaper storage solutions (like cloud storage) once they are no longer needed for real-time analysis.

- Data Compression: Compress logs to reduce storage space and improve transfer speeds. Various compression algorithms like gzip or zstd can be employed.

- Log Management Tools: Utilize advanced log management tools offering features like log parsing, searching, visualization, and analytics to make sense of the large volume of data efficiently. This includes using advanced search techniques and filters to retrieve only relevant information.

For example, in a large e-commerce platform, I’d use a distributed logging architecture with Fluentd collecting logs from numerous microservices, forwarding them to Elasticsearch for indexing and searching. We would then use Kibana (or a similar tool) for data visualization and analysis. By employing these methods, we can manage terabytes of logs efficiently without impacting system performance.
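To give a feel for how much compression helps, the sketch below gzips a batch of synthetic JSON log lines with Python’s standard library. The log content is made up, and real ratios depend on how repetitive your logs are.

```python
import gzip
import json

# Hypothetical batch of structured log lines; real logs are often similarly repetitive.
lines = [
    json.dumps({
        "timestamp": f"2024-10-27 10:00:{i % 60:02d}",
        "level": "INFO",
        "message": "request served",
        "status": 200,
    })
    for i in range(1000)
]
raw = "\n".join(lines).encode("utf-8")

compressed = gzip.compress(raw)         # what you would archive or ship
restored = gzip.decompress(compressed)  # lossless round trip

print(f"raw={len(raw)} bytes, gzip={len(compressed)} bytes")
```

Compressing in batches like this, rather than line by line, gives the compressor enough repeated structure to work with.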

Q 15. Explain your experience with different alerting mechanisms (e.g., email, SMS, PagerDuty).

Alerting mechanisms are crucial for timely responses to critical events in a cloud environment. My experience spans various systems, each with its strengths and weaknesses. I’ve extensively used email for less critical alerts, like scheduled maintenance notifications or minor performance dips. SMS is ideal for urgent situations requiring immediate attention, such as a significant service outage or a security breach; the immediacy makes it perfect for quick responses and escalation. PagerDuty, however, represents a more sophisticated approach. It offers intelligent routing, escalation policies, and detailed incident management capabilities. This means alerts are sent to the right person at the right time, ensuring a rapid and effective response, even outside of business hours. For example, in one project, we integrated PagerDuty with our application monitoring system, triggering alerts based on error rates and response times. The customizable escalation policies ensured that on-call engineers were notified immediately, preventing minor issues from escalating into major outages.

- Email: Suitable for less urgent alerts, providing context and details.

- SMS: Best for critical alerts demanding immediate action.

- PagerDuty: Sophisticated system for intelligent routing, escalation, and incident management.
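The routing and escalation logic described above might look like this in its simplest form. The routing table, the escalation chain, and the 15-minute step are hypothetical values. An incident management tool such as PagerDuty expresses the same rules as configuration.

```python
# Hypothetical routing table: severity -> notification channels.
ROUTES = {
    "info": ["email"],
    "warning": ["email"],
    "critical": ["pager", "sms"],
}

# Hypothetical escalation chain, walked while an alert stays unacknowledged.
ESCALATION_CHAIN = ["primary on-call", "secondary on-call", "engineering manager"]

def route_alert(alert):
    """Return the channels for an alert; unknown severities fall back to email."""
    return ROUTES.get(alert.get("severity", ""), ["email"])

def escalation_target(minutes_unacknowledged, step_minutes=15):
    """Pick who is paged, moving one step down the chain every step_minutes."""
    step = min(minutes_unacknowledged // step_minutes, len(ESCALATION_CHAIN) - 1)
    return ESCALATION_CHAIN[step]

alert = {"name": "HighErrorRate", "severity": "critical"}
print(route_alert(alert), escalation_target(20))
```

Keeping routing and escalation as data rather than scattered conditionals makes the policy easy to review when on-call rotations change.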

Q 16. Describe your experience with different types of monitoring (e.g., application monitoring, infrastructure monitoring, network monitoring).

My experience encompasses a wide range of monitoring types, each serving different purposes. Infrastructure monitoring focuses on the health and performance of the underlying hardware and network, including servers, storage, and network devices. Metrics like CPU utilization, memory usage, and network latency are constantly tracked. Application monitoring delves deeper, concentrating on the performance and availability of applications themselves. Key metrics include response times, error rates, and transaction throughput. Network monitoring ensures the smooth flow of data across the network, identifying bottlenecks and potential outages. It involves monitoring bandwidth usage, packet loss, and latency. I’ve used tools like Prometheus, Grafana, and Datadog to implement these different monitoring types. For instance, in a recent project, we used Prometheus to collect infrastructure metrics from Kubernetes clusters and Grafana to visualize dashboards displaying key performance indicators. This allowed us to quickly identify and address performance bottlenecks affecting application performance, resulting in improved user experience and reduced downtime.

Q 17. How do you ensure the accuracy and completeness of your monitoring data?

Data accuracy and completeness are paramount. I employ several strategies to ensure this. First, I meticulously validate monitoring configurations, ensuring that the right metrics are collected from the right sources and that data is properly processed and transformed. This involves regular reviews of configuration files and dashboards to detect any discrepancies. Second, I implement robust data quality checks and alerts, triggering notifications if anomalies or unexpected patterns are detected. This proactive approach enables early identification and resolution of data quality issues. Third, I use data validation techniques to check for inconsistencies and outliers in the collected data. For instance, I might compare metric values against expected ranges or historical trends to identify suspicious values which should be investigated. Fourth, I maintain detailed documentation of all monitoring processes and configurations, including data sources, transformation rules, and alert thresholds to ensure the accuracy and reliability of our monitoring data. This documentation is regularly reviewed and updated to reflect any changes made to the monitoring systems.
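Two of the checks mentioned above, expected ranges and completeness, can be sketched directly. The 15-second scrape interval, the tolerance, and the sample values are assumptions chosen for the example.

```python
def find_gaps(timestamps, expected_interval, tolerance=0.5):
    """Return (earlier, later) pairs where samples are further apart than expected."""
    limit = expected_interval * (1 + tolerance)
    return [
        (earlier, later)
        for earlier, later in zip(timestamps, timestamps[1:])
        if later - earlier > limit
    ]

def out_of_range(samples, low, high):
    """Return samples whose value falls outside the expected [low, high] range."""
    return [(ts, value) for ts, value in samples if not low <= value <= high]

# Hypothetical CPU samples scraped every 15 seconds: (unix seconds, percent).
samples = [(0, 41.0), (15, 43.5), (30, 250.0), (90, 44.0), (105, -3.0)]

gaps = find_gaps([ts for ts, _ in samples], expected_interval=15)
bad_values = out_of_range(samples, low=0.0, high=100.0)

print("gaps:", gaps)
print("out of range:", bad_values)
```

The gap between seconds 30 and 90 points to missed scrapes, and the 250% and -3% readings are impossible for a percentage, so both would warrant a data-quality alert.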

Q 18. Explain your experience with using monitoring and logging data for capacity planning.

Monitoring and logging data are invaluable for capacity planning. By analyzing historical trends in resource utilization, I can forecast future demand and proactively scale resources to meet expected growth. This includes analyzing CPU utilization, memory consumption, disk I/O, and network traffic over time to identify patterns and predict future needs. I often use time series databases to store and analyze this historical data, leveraging forecasting algorithms to predict future resource requirements. For example, in a previous project, I analyzed historical web server logs to project future traffic based on seasonality and user growth trends. This informed decisions on server provisioning and scaling, preventing performance bottlenecks during peak usage periods. This data-driven approach enabled us to optimize resource allocation and minimize infrastructure costs.
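A very simple form of this forecasting is a straight-line fit over historical peaks. The sketch below uses ordinary least squares on invented weekly disk usage figures. It deliberately ignores seasonality, which a real forecast for the traffic example above would need to model.

```python
def fit_line(ys):
    """Ordinary least squares fit of y = slope * x + intercept for x = 0, 1, 2, ..."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two points")
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

def periods_until(ys, capacity):
    """Periods after the last sample until the fitted line reaches capacity."""
    slope, intercept = fit_line(ys)
    if slope <= 0:
        return None  # flat or shrinking usage never reaches capacity
    return (capacity - intercept) / slope - (len(ys) - 1)

# Hypothetical weekly peak disk usage in GB on a 1000 GB volume.
weekly_peak_gb = [500, 520, 540, 560, 580]
slope, intercept = fit_line(weekly_peak_gb)
weeks_left = periods_until(weekly_peak_gb, capacity=1000)

print(f"growth={slope:.1f} GB/week, weeks until full={weeks_left:.1f}")
```

With growth of 20 GB per week from a 580 GB peak, the fitted line reaches 1000 GB in 21 weeks, which gives a concrete date to plan provisioning around.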

Q 19. How do you integrate monitoring and logging data with other systems?

Integrating monitoring and logging data with other systems is crucial for a holistic view of the infrastructure. This involves using APIs and standardized formats like JSON to facilitate data exchange. Common integration points include ticketing systems (like Jira or ServiceNow) for automating incident management, SIEM (Security Information and Event Management) systems for security monitoring and analysis, and BI (Business Intelligence) tools for generating reports and dashboards. For example, we implemented an automated workflow where alerts from our monitoring system automatically trigger tickets in our Jira instance, providing incident details and context for faster resolution. We also integrated our logging data with our SIEM system to correlate security events with application performance metrics, providing a richer understanding of security incidents.

Q 20. Describe your experience with different cloud platforms (e.g., AWS, Azure, GCP) and their monitoring services.

I possess extensive experience with AWS, Azure, and GCP, leveraging their respective monitoring services. AWS CloudWatch provides comprehensive monitoring for AWS resources and custom applications, offering metrics and logs, with tracing available through its AWS X-Ray integration. Azure Monitor offers similar capabilities, integrating seamlessly with Azure services. Google Cloud Monitoring provides robust monitoring for GCP resources and applications, with strong integration with other GCP services. Each platform has its strengths and nuances. For instance, while all three platforms provide log aggregation, the specific features and functionalities differ in areas such as log filtering, searching, and querying. My familiarity extends to configuring and managing these services, designing custom dashboards, creating alerts, and analyzing data to identify performance bottlenecks or potential issues. Selecting the right platform depends on the specific requirements of the project and existing infrastructure.

Q 21. How do you use monitoring and logging data to identify security threats?

Monitoring and logging data are essential for identifying security threats. By analyzing logs for suspicious activities, unusual patterns, or anomalies, I can detect potential security breaches or intrusions. This involves correlating data from different sources, such as network logs, application logs, and security events, to identify patterns that might indicate malicious activity. For instance, a sudden spike in failed login attempts from unusual IP addresses could indicate a brute-force attack. Similarly, unexpected access to sensitive data or unusual file modifications might signal an insider threat. I leverage SIEM systems and security monitoring tools to facilitate these analyses. The identification of these patterns allows for timely response and mitigation of security threats, protecting sensitive data and ensuring system integrity. These insights are also crucial for enhancing our security posture and implementing preventative measures.
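The brute-force example translates into a short counting exercise over parsed authentication logs. The event field names, the threshold of five, and the IP addresses (taken from documentation ranges) are assumptions. A production detector would also apply a time window.

```python
from collections import Counter

def suspicious_ips(events, threshold=5):
    """Return IPs whose failed-login count reaches the threshold, most active first."""
    failures = Counter(e["ip"] for e in events if e["event"] == "login_failed")
    return [(ip, count) for ip, count in failures.most_common() if count >= threshold]

# Hypothetical parsed auth log events.
events = (
    [{"ip": "203.0.113.7", "event": "login_failed"}] * 8
    + [{"ip": "198.51.100.4", "event": "login_failed"}] * 2
    + [{"ip": "198.51.100.4", "event": "login_ok"}]
)

flagged = suspicious_ips(events)
print(flagged)
```

Only the address with eight failures is flagged. The user who mistyped a password twice and then logged in stays below the threshold.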

Q 22. What are the key performance indicators (KPIs) you would track for a typical application?

Choosing the right Key Performance Indicators (KPIs) for an application is crucial for understanding its health and performance. Think of KPIs as vital signs for your application – they tell you if it’s thriving or struggling. For a typical application, I’d focus on a combination of:

- Request Latency: How long does it take for a request to be processed? High latency indicates slowdowns, potentially due to overloaded servers or inefficient code. I’d track this using percentiles (e.g., 95th percentile latency) to get a sense of the worst-case scenarios.

- Error Rate: What percentage of requests result in errors (for HTTP services, typically 5xx responses)? A rising error rate is a clear sign of problems needing immediate attention. Tracking different error types separately can aid in pinpointing the cause (e.g., database errors vs. network errors).

- Throughput/Requests per Second (RPS): How many requests is the application handling per second? Compared against known capacity, this KPI helps identify potential bottlenecks. A sudden drop in RPS that is not explained by lower traffic indicates a potential issue.

- Resource Utilization (CPU, Memory, Disk I/O): Monitoring CPU usage, memory consumption, and disk I/O helps identify resource constraints. High utilization often points towards scaling needs or inefficient resource management.

- Database Performance (Query Times, Connections): If your application relies on a database, monitoring query execution times and the number of active connections is critical. Slow queries can significantly impact application performance.

The specific KPIs will vary depending on the application type, but these provide a solid foundation for comprehensive monitoring. For example, a real-time chat application might prioritize RPS and latency, while an e-commerce site would focus on transaction success rate and order processing time.
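
To make the first three KPIs concrete, here is a small sketch that derives p95 latency, error rate, and RPS from raw request records. The sample data, the 5-second window, and the `percentile` and `summarize` helpers are assumptions for illustration; a monitoring system would normally compute these for you.

```python
import math

# Hypothetical request records: (latency_ms, http_status)
requests = [
    (120, 200), (95, 200), (310, 200), (80, 200), (1500, 500),
    (105, 200), (99, 404), (130, 200), (2200, 503), (110, 200),
]
WINDOW_SECONDS = 5  # assumed length of the observation window


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(requests, window_seconds):
    """Compute p95 latency, 5xx error rate, and requests per second."""
    latencies = [latency for latency, _ in requests]
    server_errors = sum(1 for _, status in requests if 500 <= status <= 599)
    return {
        "p95_latency_ms": percentile(latencies, 95),
        "error_rate": server_errors / len(requests),
        "rps": len(requests) / window_seconds,
    }


print(summarize(requests, WINDOW_SECONDS))
```

Note that the 404 response is not counted as an error here, since only 5xx statuses are treated as server-side failures in this sketch.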

Q 23. How do you create custom metrics for your application?

Creating custom metrics allows for granular monitoring tailored to your specific application needs. The process typically involves instrumenting your application code to record relevant events and then sending these metrics to your monitoring system. Here’s a breakdown:

- Identify Key Events: First, pinpoint the critical events or actions within your application that you want to track. These could be anything from successful logins to completed transactions or specific function call durations.

- Instrumentation: You’ll need to add code to your application to capture these events and their associated data (e.g., timestamps, durations, error codes). Many popular libraries and frameworks provide functionality for this. For example, in Python you can use the official Prometheus client library (prometheus_client).

- Metrics Format: Custom metrics usually follow a standard format (e.g., name, value, timestamp, labels). The labels allow for further categorization and filtering of your metrics.

- Export to Monitoring System: Finally, you send these metrics to your chosen monitoring system (e.g., Prometheus, Datadog, CloudWatch) using a suitable method (e.g., a dedicated agent, a library).

Example (Conceptual): Let’s say you want to track the time taken to process an image. You would add code that measures the processing time and then sends a metric like image_processing_time{image_size="large",status="success"} 1234 to your monitoring system, where 1234 represents the processing time in milliseconds. The labels allow you to analyze processing times for different image sizes and success/failure statuses.
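
The image-processing example can be sketched with the standard library alone. This is only an illustration of the instrumentation idea: the `timed` context manager, the in-memory `samples` list, and the `render` helper are hypothetical names, and a real application would typically use a client library or agent rather than hand-formatting metric lines.

```python
import time
from contextlib import contextmanager

# In-memory store of recorded samples: (name, labels, value_in_ms).
# A real setup would hand these to a client library or agent instead.
samples = []


@contextmanager
def timed(name, **labels):
    """Measure the wrapped block and record its duration in milliseconds."""
    start = time.perf_counter()
    status = "success"
    try:
        yield
    except Exception:
        status = "failure"
        raise
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        samples.append((name, {**labels, "status": status}, elapsed_ms))


def render(name, labels, value):
    """Format one sample as name{label="value",...} value."""
    label_text = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
    return f"{name}{{{label_text}}} {value:.0f}"


def process_image(size):
    # Placeholder for real image work (hypothetical function).
    time.sleep(0.01)


with timed("image_processing_time", image_size="large"):
    process_image("large")

for sample in samples:
    print(render(*sample))
```

Recording the status label inside the `finally` block means failed runs are timed as well, which keeps success and failure durations comparable.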

Q 24. Explain your experience with using log analysis tools to identify trends and patterns.

Log analysis is indispensable for identifying trends and patterns in application behavior. It’s like being a detective, piecing together clues from log entries to understand what’s happening within your system. I’ve extensively used tools like Elasticsearch, Kibana, Splunk, and Graylog. My experience involves:

- Centralized Logging: I ensure all application logs are routed to a central repository for easier analysis and correlation. This eliminates the need to search across multiple servers or log files.

- Log Aggregation and Parsing: These tools effectively aggregate logs from various sources and parse them to extract key information. This involves configuring parsers to understand the format of your logs and extract fields like timestamps, error codes, user IDs, etc. This structured data is key for effective analysis.

- Pattern Recognition: These tools offer powerful search and query capabilities, enabling the identification of recurring errors, performance bottlenecks, and suspicious activities. Regular expressions are often used to match specific patterns within the log data.

- Visualization and Dashboards: Creating dashboards that visualize key metrics from logs provides a quick overview of system health and performance. I usually focus on error rates, request counts, and latency distributions. This enables rapid identification of issues.

- Alerting: Configuring alerts based on identified patterns in logs (e.g., a sudden spike in error rates) ensures proactive identification of problems before they impact users.

For example, during a recent project, using Splunk’s search functionality and visualizations, I identified a recurring pattern of database connection timeouts. This led to the discovery of a configuration issue in the database connection pool.
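
The parsing and pattern-recognition steps above can be sketched as follows. The log format, the sample lines, and the `parse` and `top_error_patterns` helpers are assumptions for illustration; tools such as Splunk or Elasticsearch do this at scale with their own parsers and query languages.

```python
import re
from collections import Counter

# Assumed log format: "<ISO timestamp> <LEVEL> <message>"
LINE_RE = re.compile(r"^(?P<ts>\S+) (?P<level>[A-Z]+) (?P<message>.*)$")

raw_logs = """\
2024-05-01T10:00:01Z INFO request served path=/cart
2024-05-01T10:00:02Z ERROR db connection timeout pool=primary
2024-05-01T10:00:05Z ERROR db connection timeout pool=primary
2024-05-01T10:00:09Z WARN slow response path=/checkout
2024-05-01T10:01:03Z ERROR db connection timeout pool=replica
not a structured line
"""


def parse(lines):
    """Yield a dict of fields for each line that matches the assumed format."""
    for line in lines:
        match = LINE_RE.match(line)
        if match:
            yield match.groupdict()


def top_error_patterns(records, n=3):
    """Count ERROR messages after stripping trailing key=value details."""
    counts = Counter(
        re.sub(r"\s+\w+=\S+", "", record["message"])
        for record in records
        if record["level"] == "ERROR"
    )
    return counts.most_common(n)


records = list(parse(raw_logs.splitlines()))
print(top_error_patterns(records))  # [('db connection timeout', 3)]
```

Stripping the variable parts of each message before counting is what lets the recurring timeout stand out as a single pattern rather than several distinct lines.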

Q 25. Describe a time you had to debug a complex issue using monitoring and logging data.

One time, we experienced a sudden surge in application latency, impacting user experience. Using our monitoring system (Datadog), we saw a spike in database query times. This wasn’t immediately obvious from the application logs themselves, but through correlating the monitoring data (latency) with the slow database queries, we uncovered the root cause:

- Initial Observation: Datadog dashboards alerted us to the increased latency. The application logs showed general errors, but nothing specific.

- Investigating Database Performance: We switched to our database monitoring tools (also integrated with Datadog) and found a specific slow query impacting a large number of users. The query was poorly optimized, leading to slow execution times.

- Analyzing the Query: We used query analysis tools within our database management system to understand the query’s performance bottlenecks. This revealed an inefficient join operation.

- Solution: After optimizing the query using appropriate indexes and refactoring the join, the latency issue was resolved. This involved modifying the database schema and updating the application’s database interactions.

This experience underscored the value of correlating data from multiple monitoring and logging sources when debugging. Relying on application logs alone might not reveal the whole picture, so it is important to monitor every major component of your application, including the database.

Q 26. What are some techniques for optimizing the performance of your monitoring system?

Optimizing a monitoring system is crucial for maintaining its efficiency and preventing performance degradation. This can involve several strategies:

- Filtering and Aggregation: Avoid sending unnecessary data to your monitoring system. Use filtering rules to exclude irrelevant or low-value data points. Aggregate metrics at appropriate intervals to reduce data volume.

- Efficient Data Storage: Choose a monitoring system that offers efficient data storage and retrieval mechanisms. This may involve leveraging techniques such as data compression, partitioning, and sharding.

- Alerting Optimization: Ensure your alerting rules are well-defined and avoid generating excessive or false-positive alerts. Prioritize alerts based on their severity and impact.

- Sampling: For high-volume metrics, consider using sampling to reduce the amount of data transmitted. Ensure that the sampling rate preserves the essential characteristics of the data.

- Data Retention Policies: Establish appropriate data retention policies to avoid storage overload. Keep the data you need for long-term trend analysis, and delete or archive older data that no longer has diagnostic value.

For example, instead of storing every single database query log, we might aggregate the number of queries executed per minute and only store exceptions. This significantly reduces data volume while still providing valuable insight.
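
A rough sketch of that aggregation is shown below. The `query_log` entries and the `compact` helper are hypothetical; the point is only that per-minute counts plus the full exception entries are much smaller than the raw log.

```python
from collections import Counter

# Hypothetical raw query log entries: (ISO timestamp, outcome)
query_log = [
    ("2024-05-01T10:00:01", "ok"),
    ("2024-05-01T10:00:14", "ok"),
    ("2024-05-01T10:00:40", "error: deadlock detected"),
    ("2024-05-01T10:01:05", "ok"),
    ("2024-05-01T10:01:59", "ok"),
    ("2024-05-01T10:02:30", "error: connection timeout"),
]


def compact(entries):
    """Reduce raw entries to per-minute counts plus the full exception entries."""
    # Truncating the timestamp to YYYY-MM-DDTHH:MM groups entries by minute.
    per_minute = Counter(ts[:16] for ts, _ in entries)
    exceptions = [(ts, outcome) for ts, outcome in entries if outcome != "ok"]
    return dict(per_minute), exceptions


per_minute, exceptions = compact(query_log)
print(per_minute)
print(exceptions)
```

Here six raw entries shrink to three counters and two exception records; on real traffic the ratio is far larger.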

Q 27. How do you stay up-to-date with the latest trends and technologies in cloud monitoring and logging?

Keeping up with the ever-evolving landscape of cloud monitoring and logging requires a multi-faceted approach:

- Industry Publications and Blogs: I regularly read publications like InfoQ, The Register, and various vendor blogs (e.g., Datadog, AWS, Google Cloud) to stay informed about new technologies and best practices. This helps me understand industry trends and emerging technologies.

- Conferences and Webinars: Attending conferences (like KubeCon, AWS re:Invent) and participating in webinars allows me to learn from experts and network with fellow professionals. This exposes me to new ideas and solutions.

- Online Courses and Certifications: Platforms like Coursera, Udemy, and A Cloud Guru offer valuable courses on cloud technologies, including monitoring and logging. Obtaining relevant certifications demonstrates proficiency and helps in career advancement.

- Open Source Projects: Engaging with open-source projects (e.g., Prometheus, Grafana) allows me to learn from the code and understand the inner workings of popular monitoring tools. It helps build hands-on experience.

- Community Forums and Groups: Participating in online communities and forums allows for the exchange of knowledge and best practices with other professionals. This provides insights and helps to understand the current challenges in the industry.

This ongoing learning helps me adapt to new technologies, improve my skills, and stay ahead of the curve in this rapidly changing field.

Key Topics to Learn for Your Cloud Monitoring and Logging Interview

- Cloud Monitoring Fundamentals: Understanding the core principles of monitoring cloud-based systems, including metrics, logs, and traces. Explore different monitoring approaches and their trade-offs.

- Log Management and Analysis: Mastering techniques for collecting, processing, and analyzing log data from various cloud services. Practice using log collection and aggregation tools like Fluentd and query languages such as the Cloud Logging query language.

- Metrics and Dashboards: Designing effective dashboards to visualize key performance indicators (KPIs) and gain insights into system health and performance. Understand different charting techniques and best practices for dashboard design.

- Alerting and Notifications: Setting up robust alerting systems to proactively identify and respond to critical events. Learn to define alert thresholds and escalation policies effectively.

- Distributed Tracing: Understanding distributed tracing techniques for debugging and performance analysis in microservices architectures. Familiarize yourself with tools like Jaeger or Zipkin.

- Security in Monitoring and Logging: Implementing secure logging practices to protect sensitive data and ensure compliance with security regulations. Understand the importance of data encryption and access control.

- Specific Cloud Provider Services: Deep dive into the monitoring and logging services offered by major cloud providers (AWS CloudWatch, Azure Monitor, Google Cloud Monitoring). Understand their strengths, weaknesses, and best use cases.

- Problem-Solving and Troubleshooting: Develop your skills in using monitoring and logging data to diagnose and resolve performance issues and system failures. Practice analyzing logs to identify root causes of problems.

Next Steps: Level Up Your Career

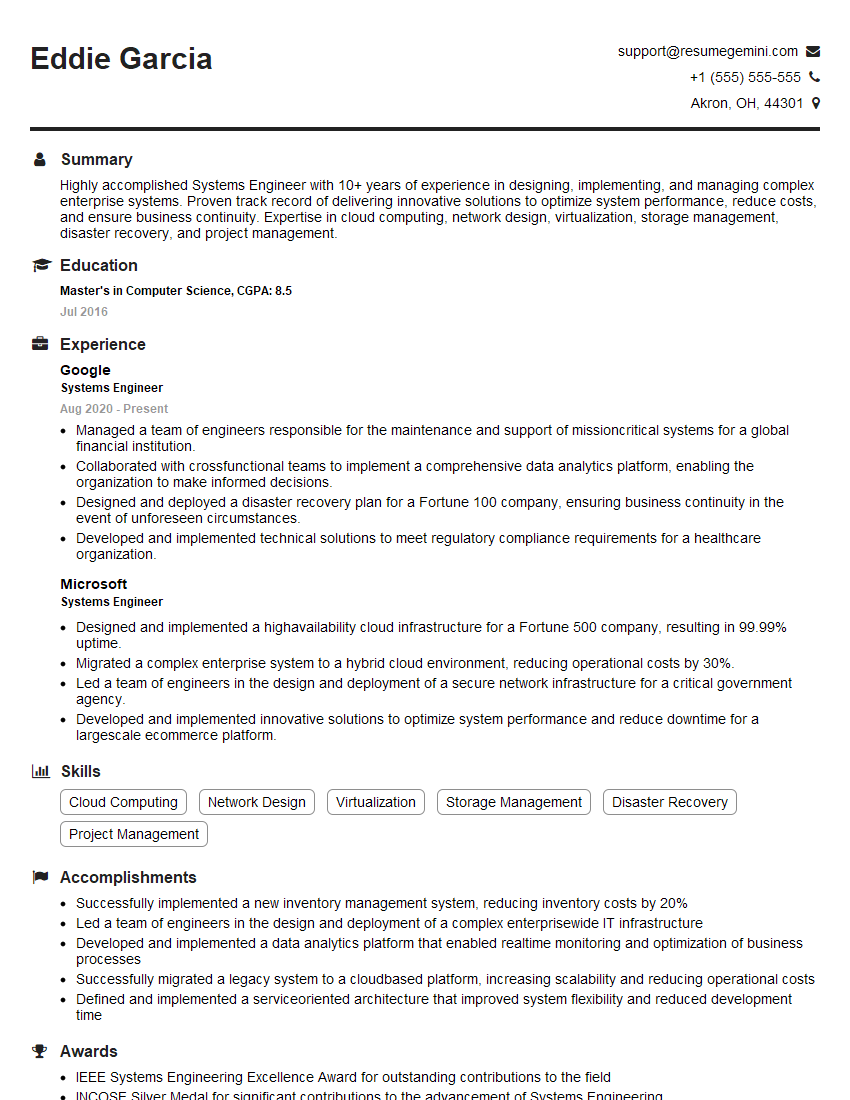

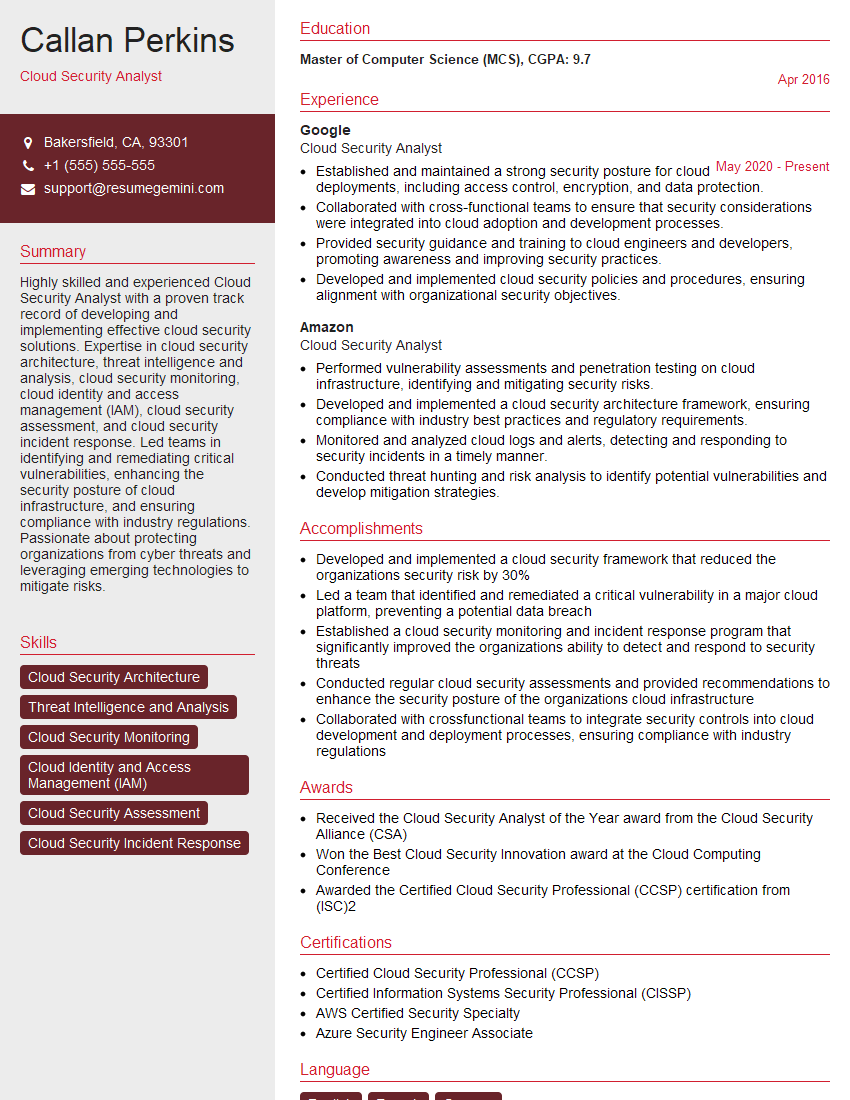

Mastering Cloud Monitoring and Logging is crucial for career advancement in today’s cloud-centric world. These skills are highly sought after, opening doors to exciting opportunities and higher earning potential. To maximize your job prospects, focus on building an ATS-friendly resume that effectively highlights your expertise. ResumeGemini is a trusted resource to help you craft a professional and impactful resume, ensuring your skills and experience shine through. We provide examples of resumes tailored to Cloud Monitoring and Logging roles to help you get started.
