Preparation is the key to success in any interview. In this post, we’ll explore crucial Component and Device Testing interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in a Component and Device Testing Interview

Q 1. Explain your experience with different testing methodologies (e.g., black box, white box, grey box).

Throughout my career, I’ve extensively utilized various testing methodologies, each offering unique strengths depending on the project and component complexity. Black box testing treats the component as a ‘black box,’ focusing solely on input and output without considering the internal workings. This is ideal for early-stage testing or when internal design is unavailable. For instance, I’ve used black box techniques to verify the functionality of a newly received microcontroller by applying various input signals and observing the resulting outputs, checking against the datasheet specifications. White box testing, conversely, requires intimate knowledge of the component’s internal structure, allowing for comprehensive testing of individual code paths or hardware components. I’ve employed this method while debugging firmware issues, tracing the flow of execution to pinpoint the source of a malfunction. Grey box testing sits between these two extremes; it leverages partial knowledge of the internal structure to guide test design. A practical example would be testing a network card where I know the internal protocols but not the exact implementation details. By combining these approaches, I ensure thorough testing across various levels of abstraction.

Q 2. Describe your experience with test equipment such as oscilloscopes, multimeters, and logic analyzers.

My experience with test equipment is extensive and encompasses a wide range of tools. Oscilloscopes are indispensable for analyzing analog signals, allowing me to visually inspect waveforms for anomalies such as noise, distortion, or timing issues. For example, I recently used an oscilloscope to identify a timing glitch in a high-speed data transmission line. Multimeters provide essential measurements of voltage, current, and resistance, crucial for verifying power supply levels, continuity, and component values. In one project, a multimeter helped pinpoint a faulty resistor causing a voltage drop in a circuit. Logic analyzers are crucial for digital systems, providing a detailed view of digital signals and their timing relationships. I once used a logic analyzer to troubleshoot a communication problem between two microcontrollers, identifying a misalignment in their clock signals. Proficiency with these tools, coupled with a strong theoretical understanding of electronics, is essential for accurate and efficient testing.

Q 3. How do you approach debugging a failing component or device?

Debugging a failing component or device is a systematic process. My approach involves several key steps. First, I reproduce the failure consistently. This requires careful documentation of the conditions under which the failure occurs. Next, I employ a divide-and-conquer strategy, isolating sections of the circuit or software to determine the source of the problem. This may involve using test equipment to isolate faulty components or using debugging tools to trace the execution flow of software. If a hardware fault is suspected, I would use techniques like visual inspection, in-circuit testing, or even component substitution. For software-related failures, I utilize logging, breakpoints, and code tracing to isolate the faulty section of code. Throughout the process, clear documentation is critical, allowing me to track progress and maintain a record of findings. Ultimately, the goal is to pinpoint the root cause rather than simply masking the symptoms.

Q 4. What are your preferred methods for documenting test procedures and results?

Comprehensive documentation is paramount in component and device testing. My preferred method uses a combination of structured test plans and detailed reports. Test plans meticulously define the test objectives, procedures, and expected results. This ensures consistency and repeatability across multiple tests and testers. I typically use a version-controlled document system to maintain the test plans, and I employ detailed checklists to track progress through each test step. Once tests are conducted, comprehensive reports are generated documenting the test setup, observed results, and any deviations from the expected outcomes. I use tables and figures to present data effectively, and I include clear and concise summaries outlining the overall test results and conclusions. This methodical approach ensures that the test process and its results are completely auditable and can be easily reviewed and understood by others.

Q 5. Explain your experience with automated testing frameworks and tools.

I have significant experience with automated testing frameworks and tools. These tools are essential for increasing testing efficiency and improving the overall quality of the test process. I’ve used various tools, including Python-based frameworks such as pytest and unittest for software testing. These frameworks allow for the creation of automated tests that can be easily executed and checked for failures. I have also used LabVIEW for automated hardware testing, allowing me to programmatically control instruments like oscilloscopes and multimeters, significantly streamlining data acquisition and analysis. Automated testing is particularly beneficial for repetitive tasks and regression testing, ensuring that new changes don’t introduce unintended problems. For example, in one project, we used a combination of Python and LabVIEW to automate a series of functional tests for a complex embedded system, dramatically reducing testing time and improving reliability.
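To make the pytest-style workflow concrete, here is a minimal sketch of an automated tolerance check. The `FakeRegulator` class, its droop model, and the 5% tolerance are hypothetical stand-ins for a real device under test, not part of any real project.

```python
class FakeRegulator:
    """Hypothetical stand-in for a 3.3 V regulator under test."""

    def __init__(self, nominal_v=3.3):
        self.nominal_v = nominal_v

    def output_voltage(self, load_ma):
        # Assumed droop model: output falls 0.1 mV per mA of load.
        return self.nominal_v - 0.0001 * load_ma


def within_tolerance(measured, nominal, tol_pct):
    """Return True if measured is within +/- tol_pct percent of nominal."""
    return abs(measured - nominal) <= nominal * tol_pct / 100.0


# pytest collects any function whose name starts with "test_".
def test_output_within_5_percent_at_light_load():
    dut = FakeRegulator()
    assert within_tolerance(dut.output_voltage(load_ma=10), 3.3, 5.0)


def test_output_within_5_percent_at_full_load():
    dut = FakeRegulator()
    assert within_tolerance(dut.output_voltage(load_ma=500), 3.3, 5.0)
```

Saved in a file such as `test_regulator.py` (a name chosen for illustration), these checks would run with the `pytest` command and can be repeated unchanged after every firmware or hardware revision.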

Q 6. How do you ensure the accuracy and reliability of your test results?

Ensuring the accuracy and reliability of test results is critical. This relies on a multi-faceted approach. Firstly, the use of calibrated test equipment is paramount. Regular calibration ensures that measurements are accurate and traceable to national standards. Secondly, employing appropriate statistical methods is important to analyze test data. Analyzing variance, standard deviation, and confidence intervals helps determine whether observed differences are significant or simply due to random variations. Thirdly, employing multiple testing methods and comparing results is crucial for validation. For example, I might compare results from a functional test with those obtained from a stress test or a performance test. Finally, meticulous documentation of the entire testing process, including equipment used, test procedures, and environmental conditions, enhances transparency and reproducibility. By adhering to these principles, I strive to generate results that are not only accurate but also reliable and trustworthy.
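The statistical step can be sketched with Python's standard library. The readings below are invented for illustration, and the critical value 2.262 is the two-sided 95% Student's t value for 9 degrees of freedom (ten samples); a different sample size needs a different value.

```python
import math
import statistics


def summarize(samples, t_crit):
    """Return (mean, sample standard deviation, confidence interval)."""
    n = len(samples)
    mean = statistics.mean(samples)
    stdev = statistics.stdev(samples)  # sample (n - 1) standard deviation
    half_width = t_crit * stdev / math.sqrt(n)
    return mean, stdev, (mean - half_width, mean + half_width)


# Hypothetical repeated measurements of a nominal 5 V rail, in volts.
readings = [4.98, 5.01, 5.02, 4.99, 5.00, 5.03, 4.97, 5.01, 5.00, 4.99]
mean, stdev, ci = summarize(readings, t_crit=2.262)
print(f"mean={mean:.4f} V, stdev={stdev:.4f} V, "
      f"95% CI=({ci[0]:.4f}, {ci[1]:.4f}) V")
```

If the specification limit falls outside the interval, the difference is unlikely to be random variation alone; if it falls inside, more samples may be needed before drawing a conclusion.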

Q 7. Describe your experience with different types of testing (functional, performance, stress, etc.).

My experience spans a variety of testing types. Functional testing verifies that the component or device meets its specified functionality. I’ve conducted numerous functional tests using various test benches and equipment to confirm whether components like sensors, actuators, or communication interfaces meet their intended operational parameters. Performance testing focuses on measuring speed, throughput, resource usage, and other performance metrics. For instance, I’ve characterized the latency and throughput of network interfaces under different load conditions. Stress testing assesses the robustness and reliability under extreme conditions, such as high temperatures, voltage fluctuations, or heavy load. I’ve used stress tests to identify design weaknesses in a system operating under high-temperature environments. Other types include endurance testing (evaluating lifespan), security testing (assessing vulnerability), and usability testing (evaluating user experience, which is more relevant in higher-level systems incorporating user interfaces). A comprehensive testing strategy typically involves a combination of these techniques to ensure thorough evaluation across various aspects of the device’s performance and reliability.

Q 8. How do you handle conflicting priorities or deadlines in a testing environment?

Conflicting priorities and deadlines are a common challenge in testing. My approach involves a structured prioritization process. First, I make sure I understand every deadline and its associated risks. This often involves discussions with stakeholders to understand the business impact of each task. Then, I use a prioritization matrix, categorizing tasks based on urgency and impact. High-impact, urgent tasks get top priority. For tasks that need to be deferred, I communicate proactively and transparently with stakeholders, providing realistic revised timelines and outlining any potential risks. When time is tight, I may also suggest a risk-based testing strategy, such as covering the high-risk areas first and deferring lower-risk checks.

For example, if faced with a critical bug fix deadline and a less critical feature testing deadline, I would prioritize the bug fix, communicating the potential delay in feature testing and seeking agreement on a revised timeline. This proactive communication ensures everyone is aligned and aware of potential consequences.

Q 9. What is your experience with statistical analysis of test data?

Statistical analysis is crucial in component and device testing for drawing reliable conclusions from test data. My experience encompasses a wide range of statistical techniques, including hypothesis testing (e.g., t-tests, ANOVA), regression analysis, and statistical process control (SPC). I’m proficient in using statistical software packages like Minitab and R to analyze test results, identify outliers, and determine the statistical significance of observed variations. For example, I’ve used ANOVA to compare the mean lifetime of components from different manufacturing batches, helping to identify variations in quality. I’ve also used regression analysis to model the relationship between environmental factors and component performance, allowing for better prediction and control of reliability. Furthermore, I regularly use control charts (e.g., X-bar and R charts) to monitor process stability and flag potential quality issues in real time.
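As an illustration of the control charts mentioned above, the following sketch computes X-bar and R chart limits. It assumes subgroups of exactly five measurements and uses the standard tabulated constants for that size (A2 = 0.577, D3 = 0, D4 = 2.114); the sample data is invented.

```python
# Standard control chart constants for subgroup size n = 5.
A2, D3, D4 = 0.577, 0.0, 2.114


def xbar_r_limits(subgroups):
    """Return (LCL, center line, UCL) for the X-bar chart and the R chart."""
    if any(len(g) != 5 for g in subgroups):
        raise ValueError("constants in this sketch are only valid for n = 5")
    means = [sum(g) / len(g) for g in subgroups]
    ranges = [max(g) - min(g) for g in subgroups]
    grand_mean = sum(means) / len(means)
    mean_range = sum(ranges) / len(ranges)
    return {
        "xbar": (grand_mean - A2 * mean_range, grand_mean,
                 grand_mean + A2 * mean_range),
        "r": (D3 * mean_range, mean_range, D4 * mean_range),
    }


# Hypothetical resistance measurements in ohms, two subgroups of five.
limits = xbar_r_limits([[10, 11, 9, 10, 10], [10, 10, 10, 12, 8]])
print(limits)
```

A subgroup mean or range that falls outside these limits signals that the process may have shifted and is worth investigating.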

Q 10. Describe your experience with different types of test reports and documentation.

I have extensive experience creating various test reports and documentation, catering to different audiences and purposes. This includes:

- Test plans: Detailing the scope, objectives, methodologies, and resources for testing.

- Test cases: Specifying the steps, inputs, and expected outputs for individual test scenarios.

- Test reports: Summarizing the testing results, including the number of tests executed, passed, failed, and not executed. These often include detailed failure analysis and root cause identification.

- Defect reports: Documenting identified defects, including their severity, priority, and reproduction steps.

- Traceability matrices: Linking requirements to test cases, ensuring comprehensive test coverage.

- Summary reports: Providing high-level overviews of the testing process and outcomes for management and stakeholders. These often include key metrics such as defect density and test coverage.

I adhere to standardized formats and templates, ensuring consistency and clarity across all documentation. I tailor my reporting style to the specific audience, using appropriate technical language while maintaining readability and avoiding unnecessary jargon.
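A traceability matrix can be checked mechanically. The sketch below, with made-up requirement and test case IDs, reports requirements that no test case covers and test cases that point at requirements that do not exist.

```python
def coverage_gaps(requirements, test_cases):
    """Compare a requirement list against a test-case-to-requirement map.

    Returns (uncovered requirements, unknown requirement IDs), both sorted.
    """
    covered = set()
    for req_ids in test_cases.values():
        covered.update(req_ids)
    known = set(requirements)
    return sorted(known - covered), sorted(covered - known)


# Hypothetical IDs for illustration only.
requirements = ["REQ-001", "REQ-002", "REQ-003"]
test_cases = {
    "TC-01": ["REQ-001"],
    "TC-02": ["REQ-001", "REQ-003"],
    "TC-03": ["REQ-009"],  # points at a requirement that is not defined
}
uncovered, unknown = coverage_gaps(requirements, test_cases)
print("Uncovered:", uncovered)
print("Unknown:", unknown)
```

Running a check like this before each test cycle makes coverage gaps visible before they reach the summary report.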

Q 11. How familiar are you with version control systems (e.g., Git)?

I’m highly familiar with version control systems, primarily Git. I utilize Git for managing source code, test scripts, and test data across multiple projects. I understand the core concepts of branching, merging, committing, and pushing changes. I’m comfortable using Git workflows like Gitflow for managing feature development and releases in a collaborative team environment. My experience includes using Git repositories hosted on platforms like GitHub and GitLab. I leverage Git’s capabilities for version tracking and collaboration to ensure clear lineage of test artifacts and easy rollback options if necessary. I also use Git for code review and collaboration with developers, aiding in early defect identification and improving communication.

Q 12. What is your experience with defect tracking and management tools (e.g., Jira)?

I have significant experience using Jira and similar defect tracking and management tools. I’m proficient in creating and managing issues, assigning tasks, tracking progress, and generating reports. I’m familiar with Jira’s workflows and customizable features, allowing me to tailor the system to meet the specific needs of a project. I utilize Jira for the entire defect lifecycle—from initial identification and reporting to resolution and verification. This includes assigning priorities and severities to defects based on their impact, providing detailed descriptions, attaching relevant evidence, and effectively managing communication between developers and testers. My workflow in Jira ensures traceability from defect identification to its closure, enabling efficient debugging and product improvement.

Q 13. Explain your understanding of different failure modes and mechanisms in electronic components.

Understanding failure modes and mechanisms is fundamental to effective component testing. Electronic components can fail due to various reasons, categorized into different failure modes. These include:

- Mechanical failures: Such as cracks, fractures, or wear and tear in physical structures.

- Electrical failures: Including shorts, opens, dielectric breakdown, or excessive voltage/current stresses.

- Thermal failures: Resulting from overheating, temperature cycling, or thermal shock.

- Chemical failures: Caused by corrosion, degradation, or material interactions.

- Electrochemical failures: Involving migration, diffusion, or plating issues due to electric current or chemical reactions.

The mechanisms behind these failures are complex and often involve multiple factors. Understanding these mechanisms is essential for developing effective test methods and predicting component lifetime. For instance, electromigration, the gradual displacement of metal atoms in a conductor under high current density, can lead to open circuits in integrated circuits. Similarly, thermal cycling can cause cracks in solder joints, leading to intermittent contact and eventual failure.

Q 14. How do you determine the root cause of a hardware failure?

Determining the root cause of a hardware failure is a systematic process requiring meticulous investigation. My approach follows these steps:

- Gather evidence: Collect information on the failure, including symptoms, environmental conditions, operating parameters, and any error logs or messages.

- Visual inspection: Carefully examine the failed component for any physical damage, such as cracks, burns, or discoloration.

- Electrical testing: Use appropriate instrumentation (e.g., multimeters, oscilloscopes) to measure electrical parameters and identify anomalies in voltage, current, or impedance.

- Component level testing: Isolate the failed component and conduct further tests to confirm its malfunction and identify the type of failure (e.g., short circuit, open circuit).

- Analysis of failure mode: Identify the failure mode based on observations and test results (e.g., thermal overload, electrical stress).

- Root cause analysis: Use techniques like the ‘5 Whys’ or fault tree analysis to trace back to the underlying cause of the failure. This often involves analyzing design, manufacturing, and operational factors.

- Corrective action: Implement appropriate corrective actions, including design changes, process improvements, or updated procedures, to prevent similar failures in the future.

For instance, if a power supply fails, I might find evidence of overheating, leading to a visual inspection showing burnt components. Through electrical testing, I can confirm the short circuit and, using root cause analysis, determine that inadequate heat sinking was the primary cause. This would lead to corrective actions, such as improving the heat dissipation mechanism.

Q 15. What is your experience with environmental testing (e.g., temperature, humidity, vibration)?

Environmental testing is crucial for ensuring the robustness and reliability of components and devices. My experience encompasses a wide range of environmental stressors, including temperature cycling (both high and low temperature extremes), humidity testing (assessing performance under various moisture levels), and vibration testing (simulating real-world shocks and vibrations). I’ve worked extensively with industry-standard test chambers and equipment to perform these tests, adhering to relevant specifications like MIL-STD-810 and IEC 60068.

For example, in a recent project involving a ruggedized handheld device, we subjected the device to temperature cycling from -40°C to +85°C, followed by humidity testing at 95% relative humidity. We monitored key performance indicators (KPIs) such as power consumption, signal strength, and functionality throughout the testing process. Vibration testing involved subjecting the device to sinusoidal and random vibration profiles, mimicking the stresses it might experience during transportation or operation in a harsh environment. By carefully analyzing the test results, we were able to identify potential weaknesses in the design and implement improvements to enhance the device’s resilience.

Q 16. Describe your experience with designing and implementing test fixtures.

Designing and implementing test fixtures is a critical aspect of effective component and device testing. A well-designed fixture ensures accurate and repeatable test results, protecting the device under test (DUT) from damage and providing the necessary interfaces for applying stimuli and measuring responses. My experience includes designing fixtures for various types of components, from small integrated circuits (ICs) to larger printed circuit boards (PCBs).

The design process typically begins with a thorough understanding of the DUT’s specifications, the types of tests to be performed, and the required measurement accuracy. I use CAD software (such as SolidWorks or AutoCAD) to create detailed 3D models of the fixtures, considering factors such as material selection (for thermal and mechanical properties), connector types, and ease of assembly and disassembly. I’ve also worked with custom fabrication techniques, including 3D printing and CNC machining, to create specialized fixtures for unique testing requirements. For example, I designed a custom fixture for testing the thermal performance of a high-power amplifier using a combination of thermal interface materials and precision temperature sensors.

Q 17. How do you ensure that your tests are comprehensive and cover all relevant scenarios?

Ensuring comprehensive test coverage is paramount. My approach involves a multi-faceted strategy that combines various test methodologies and techniques. I begin by developing a comprehensive test plan based on requirements specifications and risk assessments. This plan outlines the various tests to be performed, the test conditions, the expected results, and the pass/fail criteria.

To ensure thorough coverage, I utilize several techniques, including:

- Functional testing: Verifying that the component or device performs its intended functions correctly.

- Stress testing: Pushing the component or device beyond its normal operating limits to assess its robustness.

- Boundary value analysis: Testing at the limits of acceptable input values.

- Equivalence partitioning: Dividing the input domain into equivalence classes and testing one representative value from each class.

- Fault injection testing: Deliberately injecting faults into the system to assess its fault tolerance.

Furthermore, I utilize a combination of automated and manual testing, leveraging automated test equipment (ATE) for repeatable and high-throughput testing, while employing manual testing for tasks requiring human judgment or complex interactions.
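Boundary value analysis and equivalence partitioning from the list above can be sketched as follows. The 3000 mV to 3600 mV supply range and the 10 mV step are assumed values for illustration; integers are used to avoid floating-point rounding at the boundaries.

```python
def boundary_values(lo, hi, step=1):
    """Return test points just outside, on, and just inside each limit."""
    return [lo - step, lo, lo + step, hi - step, hi, hi + step]


def equivalence_representatives(lo, hi, step=1):
    """Return one representative value per class: below, valid, above."""
    return {
        "below_range": lo - 10 * step,
        "valid": (lo + hi) // 2,
        "above_range": hi + 10 * step,
    }


def expected_valid(value, lo, hi):
    """Expected result: the input is accepted only inside [lo, hi]."""
    return lo <= value <= hi


# Hypothetical supply voltage range in millivolts.
LO_MV, HI_MV = 3000, 3600
for mv in boundary_values(LO_MV, HI_MV, step=10):
    print(mv, "accept" if expected_valid(mv, LO_MV, HI_MV) else "reject")
```

Six boundary points plus three class representatives give nine test inputs in place of an exhaustive sweep of the range.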

Q 18. What is your experience with test plan development and execution?

Test plan development and execution are fundamental to successful testing. My experience includes developing comprehensive test plans that cover all aspects of the testing process, from test objective definition to reporting. The plans include a detailed description of the test environment, the test procedures, the required equipment, and the acceptance criteria. I utilize various tools and techniques for test management, such as Jira or TestRail, to track progress, manage defects, and generate reports.

During test execution, I meticulously follow the test plan, documenting all test results and any deviations from the plan. I employ rigorous change management procedures to ensure that any modifications to the plan are properly documented and approved. A crucial aspect of my approach is thorough documentation, ensuring that all test results are clearly recorded and readily available for review and analysis. For instance, in a recent project involving the validation of a new communication protocol, the test plan was meticulously designed to cover various scenarios such as data rate variations, signal noise, and network congestion. The use of a test management tool ensured efficient tracking of test cases and defects.

Q 19. How do you manage and prioritize testing activities within a project?

Managing and prioritizing testing activities involves careful planning and resource allocation. I typically use a risk-based approach to prioritize tests, focusing on the most critical functionalities and potential failure points. This often involves using a risk matrix to assess the likelihood and impact of potential failures. High-risk areas receive priority, ensuring that the most crucial aspects of the system are thoroughly tested early in the development cycle.

I often employ agile methodologies for managing testing activities, working closely with developers and project managers to ensure that testing tasks are integrated seamlessly into the overall development process. Effective communication and collaboration are key to successfully managing testing efforts, preventing bottlenecks, and resolving issues promptly. Regular status meetings and progress reports help track progress and identify potential problems early on. Using project management tools allows for better visualization of tasks, dependencies, and resource allocation, leading to efficient prioritization and execution of testing activities.
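The risk matrix described above can be reduced to a simple score. This sketch assumes a 1 to 5 scale for both likelihood and impact and multiplies them; the test areas and their ratings are invented examples.

```python
from dataclasses import dataclass


@dataclass
class RiskItem:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (critical)

    @property
    def risk_score(self) -> int:
        return self.likelihood * self.impact


def prioritize(items):
    """Return items ordered from highest to lowest risk score."""
    return sorted(items, key=lambda item: item.risk_score, reverse=True)


# Hypothetical test areas for illustration.
areas = [
    RiskItem("LED indicator", likelihood=2, impact=1),
    RiskItem("Power-on reset", likelihood=3, impact=5),
    RiskItem("Bootloader update", likelihood=2, impact=5),
]
for item in prioritize(areas):
    print(f"{item.risk_score:>2}  {item.name}")
```

The resulting order is a starting point for discussion with stakeholders, not a replacement for engineering judgment.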

Q 20. What is your experience with different types of component packaging and handling?

My experience includes working with a variety of component packaging types, including surface-mount technology (SMT) packages (e.g., QFN, BGA, SOIC), through-hole packages (e.g., DIP, SIP), and various connector types. I understand the handling requirements for each type of package, and I take precautions to prevent damage during handling, testing, and storage. For example, I’m familiar with the use of anti-static mats and wrist straps to protect sensitive components from electrostatic discharge (ESD).

Proper handling is crucial to avoid damage. For delicate components like BGAs, special tools and techniques are required for soldering and desoldering. Understanding the package’s mechanical strength and thermal characteristics is also important during handling. For instance, applying excessive force during insertion or removal of components can lead to damage. Likewise, exceeding the component’s thermal limits during soldering can lead to failure. My experience ensures that all handling procedures comply with the manufacturer’s recommendations and best practices to prevent damage and ensure accurate testing results.

Q 21. Describe your understanding of signal integrity and power integrity.

Signal integrity and power integrity are critical aspects of electronic design and testing. Signal integrity refers to the quality of electrical signals as they travel through a circuit, ensuring that signals arrive at their destination without significant distortion or attenuation. Power integrity, on the other hand, focuses on ensuring that sufficient power is delivered to the components, with minimal noise and voltage fluctuations.

Poor signal integrity can lead to data errors, timing violations, and system malfunctions. Similarly, poor power integrity can cause unexpected voltage drops, component damage, and system instability. My understanding encompasses the use of simulation tools and device models (e.g., SPICE simulators with IBIS or IBIS-AMI models) to analyze signal and power integrity issues. I’m also familiar with various techniques for mitigating these issues, such as proper impedance matching, careful routing of traces, and the use of decoupling capacitors. For example, during testing, I use oscilloscopes and spectrum analyzers to measure signal quality and identify potential problems. In the case of power integrity, I use power analyzers to monitor voltage and current levels and identify any potential issues like excessive voltage drop or noise.

Q 22. How familiar are you with various communication protocols (e.g., I2C, SPI, UART)?

I possess extensive experience with various communication protocols crucial for component and device testing. I2C, SPI, and UART are fundamental, and I’m proficient in analyzing their data streams, troubleshooting communication errors, and integrating them into automated test systems.

I2C (Inter-Integrated Circuit): I’m familiar with its two-wire, master-slave architecture. I can effectively debug I2C issues, such as clock stretching, arbitration, and address conflicts, using logic analyzers and oscilloscopes. For instance, I recently resolved a timing issue in an I2C-based sensor by carefully analyzing the bus activity with a logic analyzer, identifying a delay introduced by a faulty pull-up resistor.

SPI (Serial Peripheral Interface): My expertise includes understanding SPI’s flexible clocking and data transfer mechanisms. I can configure SPI devices, handle different modes (clock polarity and phase), and interpret the data to verify proper functionality. I’ve used SPI extensively to test communication with flash memory chips, ensuring reliable data read and write operations.

UART (Universal Asynchronous Receiver/Transmitter): I’m adept at using UART for both low-speed data communication and debugging. I’m comfortable using terminal programs to monitor UART data, identify baud rate issues, and analyze data packets for errors. I once used UART logging to track down an intermittent failure in a microcontroller’s firmware during a power cycle.

Beyond these, I also have working knowledge of protocols like CAN, USB, and Ethernet, depending on the project requirements. My understanding extends to both hardware and software aspects, ensuring effective testing across the entire system.
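To illustrate the UART points above, here is a minimal sketch of 8N1 framing (one start bit, eight data bits sent least significant bit first, one stop bit) and a baud rate error calculation. The function names are invented for this example, and the 117647 baud figure is an assumed value for what a hardware clock divider might actually produce.

```python
def uart_frame_8n1(byte):
    """Return the 10 line-level bits for one byte: start, 8 data (LSB first), stop."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must be in the range 0..255")
    data_bits = [(byte >> i) & 1 for i in range(8)]
    return [0] + data_bits + [1]


def decode_8n1(bits):
    """Recover the byte from a 10-bit frame, raising on a framing error."""
    if len(bits) != 10 or bits[0] != 0 or bits[9] != 1:
        raise ValueError("framing error")
    return sum(bit << i for i, bit in enumerate(bits[1:9]))


def baud_error_percent(actual_baud, nominal_baud):
    """Relative baud rate mismatch between the two ends, in percent."""
    return (actual_baud - nominal_baud) / nominal_baud * 100.0


frame = uart_frame_8n1(0x55)
print(frame, hex(decode_8n1(frame)))
print(f"{baud_error_percent(117647, 115200):.2f}% baud error")
```

Comparing a captured bit sequence against the expected frame is often the quickest way to tell a baud rate mismatch from a genuine data error.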

Q 23. Explain your experience with PCB design and testing.

My experience with PCB design and testing is comprehensive, encompassing the entire lifecycle – from schematic capture and component selection to board bring-up and failure analysis. I’m proficient in using EDA tools like Altium Designer and Eagle to create and verify schematics and PCB layouts.

During testing, I utilize a range of techniques, including in-circuit testing (ICT), functional testing, and boundary scan testing (JTAG). ICT helps identify shorts and open circuits early in the process. Functional testing ensures that the board performs according to specifications, while JTAG allows for deeper access and control of internal signals. For instance, I recently used JTAG to diagnose a complex timing issue within a microcontroller on a high-speed digital board, tracing the problem to poor trace routing that degraded signal integrity.

I also have practical experience with signal integrity analysis, employing oscilloscopes and spectrum analyzers to identify signal noise and impedance mismatches. This is crucial for high-speed designs, where even minor signal degradation can lead to significant performance issues or data corruption.

Q 24. What is your experience with high-speed digital testing?

High-speed digital testing demands specialized skills and equipment. My experience includes testing systems operating at clock rates of several hundred MHz and above, often dealing with signal integrity challenges and timing-critical circuits.

I’m proficient in using high-speed oscilloscopes, logic analyzers, and protocol analyzers to capture and analyze signals. I understand the importance of proper termination, grounding, and shielding to minimize signal reflections and noise. Furthermore, I employ techniques like eye diagrams and jitter analysis to assess signal quality and determine compliance with standards.
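Jitter analysis can be sketched from a list of edge timestamps. This example computes time interval error (TIE) against an ideal clock anchored at the first edge, which is a simplifying assumption; real instruments usually compare against a recovered clock. Timestamps are in picoseconds and invented for illustration.

```python
import statistics


def time_interval_error(edges_ps, period_ps):
    """Deviation of each edge from an ideal clock anchored at the first edge."""
    t0 = edges_ps[0]
    return [t - (t0 + i * period_ps) for i, t in enumerate(edges_ps)]


def jitter_summary(edges_ps, period_ps):
    """Return peak-to-peak and RMS jitter (standard deviation of TIE)."""
    tie = time_interval_error(edges_ps, period_ps)
    return {
        "pk_pk_ps": max(tie) - min(tie),
        "rms_ps": statistics.pstdev(tie),
    }


# Hypothetical rising-edge timestamps for a nominal 1 GHz clock (1000 ps period).
edges = [0, 1000, 2010, 2990, 4000]
print(jitter_summary(edges, period_ps=1000))
```

The two numbers can then be compared with the jitter budget in the relevant interface standard or datasheet.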

A recent project involved testing a high-speed data acquisition system. Using a high-bandwidth oscilloscope, I identified signal reflections caused by impedance mismatches in the PCB traces, leading to data errors. By modifying the trace routing and implementing appropriate termination, I improved the signal integrity and resolved the issue, resulting in reliable data acquisition.
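The size of a reflection from an impedance mismatch follows from the reflection coefficient, (Z_load - Z0) / (Z_load + Z0). The sketch below assumes purely resistive impedances and a 50 ohm trace by default; the load values are illustrative.

```python
import math


def reflection_coefficient(z_load, z0=50.0):
    """Voltage reflection coefficient for a resistive load on a line of impedance z0."""
    if math.isinf(z_load):
        return 1.0  # open circuit: the full wave is reflected in phase
    return (z_load - z0) / (z_load + z0)


for z in (50.0, 75.0, 100.0, 0.0, math.inf):
    gamma = reflection_coefficient(z)
    print(f"Z_load={z:>6} ohm  gamma={gamma:+.3f}")
```

A matched 50 ohm load gives zero reflection, which is why correct termination removes the ringing seen on the oscilloscope.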

Q 25. How do you ensure test coverage in complex systems?

Ensuring comprehensive test coverage in complex systems requires a structured and methodical approach. I typically employ a combination of techniques, including requirement traceability, risk analysis, and code coverage analysis.

Requirement Traceability: Each test case should directly address a specific requirement, ensuring all aspects of the system are verified.

Risk Analysis: Identifying high-risk areas of the system allows for focused testing and prioritization of critical test cases.

Code Coverage Analysis: For embedded systems, I use code coverage tools to ensure that a significant portion of the code base is exercised during testing. This helps identify untested code paths that could lead to potential failures.

Test Case Design Techniques: I utilize various techniques like equivalence partitioning, boundary value analysis, and state transition testing to efficiently design comprehensive test suites.

Beyond these, effective test management tools and processes are crucial for tracking progress and managing the large number of tests that may be required for a complex system.

Q 26. Explain your experience with using scripting languages (e.g., Python) for test automation.

I’m proficient in using Python for test automation, leveraging its extensive libraries for data manipulation, instrument control, and reporting. This allows me to create automated test sequences, reducing manual effort and improving testing efficiency.

I use libraries such as PyVISA to control instruments like oscilloscopes and power supplies, NumPy for data processing, and Matplotlib for creating informative graphs and reports. For example, I automated the testing of a power supply using PyVISA to control a programmable power supply, measuring output voltage and current with a multimeter, and verifying the results against specifications. This significantly reduced testing time and enabled repeatable, accurate measurements.
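A power supply check of this kind might look like the sketch below. It is written against any object with a `query()` method, which is how a PyVISA resource is typically used, but it runs here against a hypothetical `FakeMeter` so no hardware is needed. The `MEAS:VOLT:DC?` command is a common SCPI query, yet the exact command set depends on the instrument, so treat it as an assumption.

```python
class FakeMeter:
    """Hypothetical stand-in that mimics the query() method of a VISA resource."""

    def __init__(self, reading_v):
        self.reading_v = reading_v

    def query(self, command):
        if command == "*IDN?":
            return "FAKE,METER,0,0\n"
        if command == "MEAS:VOLT:DC?":  # assumed SCPI command
            return f"{self.reading_v:.6E}\n"
        raise ValueError(f"unsupported command: {command}")


def measure_dc_voltage(instrument):
    """Ask the instrument for one DC voltage reading and parse it."""
    return float(instrument.query("MEAS:VOLT:DC?"))


def check_rail(instrument, nominal_v, tol_pct):
    """Measure a supply rail and compare it with its tolerance band."""
    measured = measure_dc_voltage(instrument)
    limit = nominal_v * tol_pct / 100.0
    return {"measured_v": measured,
            "passed": abs(measured - nominal_v) <= limit}


# With real hardware, the instrument would come from PyVISA instead, e.g.:
#   import pyvisa
#   meter = pyvisa.ResourceManager().open_resource("<VISA address>")
meter = FakeMeter(reading_v=5.02)
print(check_rail(meter, nominal_v=5.0, tol_pct=1.0))
```

Keeping the measurement logic independent of the instrument object makes the script testable on a laptop and reusable across different meters.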

My automation scripts are well-structured, documented, and easily maintainable, promoting collaboration and efficient test updates as the system evolves.

Q 27. Describe a challenging testing situation you encountered and how you overcame it.

During the testing of a high-power amplifier, we encountered intermittent failures that were extremely difficult to reproduce. Initial diagnostic tests indicated no obvious problems. The failure mode was unpredictable, occurring only under specific load conditions and at elevated temperatures.

To resolve this, I employed a multi-pronged approach: First, we enhanced our test setup by incorporating temperature control and precise load monitoring. This allowed us to systematically reproduce the failure under controlled conditions. Second, we used a high-speed oscilloscope to monitor the amplifier’s internal signals during operation, revealing a transient overvoltage spike occurring just before the failure. Finally, we meticulously examined the amplifier’s schematic and PCB layout, identifying a poorly placed decoupling capacitor that was the root cause of the overvoltage.

By systematically analyzing the failure, enhancing the test environment, and employing advanced diagnostic tools, we were able to identify and resolve the underlying cause of the intermittent failures. This experience highlighted the importance of methodical troubleshooting, coupled with the appropriate test equipment and expertise, to successfully diagnose and overcome complex testing challenges.

Q 28. What are your long-term career goals in the field of Component and Device Testing?

My long-term career goals center on becoming a recognized expert in advanced component and device testing methodologies. I aim to specialize in areas such as high-speed digital testing and embedded system verification, continually expanding my knowledge of emerging technologies and test techniques. I aspire to lead and mentor teams, contributing to the development of innovative test solutions that enhance product quality and reliability. This involves staying at the forefront of technological advancements and contributing to the development of industry best practices in component and device testing.

Key Topics to Learn for Component and Device Testing Interview

- Functional Testing: Understanding the core functionalities of components and devices and verifying their correct operation. This includes defining test cases and expected outcomes.

- Performance Testing: Analyzing response times, throughput, resource utilization (CPU, memory, power), and scalability under various load conditions. Practical application includes load testing and stress testing methodologies.

- Reliability Testing: Assessing the mean time between failures (MTBF), mean time to repair (MTTR), and overall system robustness. This involves techniques like accelerated life testing and fault injection.

- Compatibility Testing: Ensuring seamless operation across different hardware platforms, software versions, and operating systems. This includes testing compatibility with various peripherals and interfaces.

- Security Testing: Identifying and mitigating vulnerabilities in components and devices to prevent unauthorized access, data breaches, and malicious attacks. This involves penetration testing and vulnerability assessments.

- Test Automation: Developing and implementing automated test scripts using various tools and frameworks to improve efficiency and reduce testing time. Practical application includes selecting appropriate automation tools and frameworks based on project needs.

- Test Reporting and Analysis: Documenting test results, identifying defects, and providing actionable insights to development teams. This includes interpreting test data and generating concise, informative reports.

- Debugging and Troubleshooting: Effectively identifying, isolating, and resolving issues encountered during testing. This involves utilizing debugging tools and applying systematic problem-solving techniques.
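For the reliability metrics in the list above, a small worked example may help. The sketch uses the common definitions: MTBF is total operating time divided by the number of failures, MTTR is total repair time divided by the number of repairs, and steady-state availability is MTBF / (MTBF + MTTR). The hours and failure counts are made-up sample data.

```python
def mtbf(operating_hours: float, failures: int) -> float:
    """Mean time between failures: operating time per observed failure."""
    if failures <= 0:
        raise ValueError("need at least one failure to estimate MTBF")
    return operating_hours / failures


def mttr(repair_hours: float, repairs: int) -> float:
    """Mean time to repair: average duration of a repair."""
    if repairs <= 0:
        raise ValueError("need at least one repair to estimate MTTR")
    return repair_hours / repairs


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: fraction of time the unit is operational."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Made-up data: 10,000 cumulative operating hours, 4 failures, 8 repair hours.
m_between = mtbf(10_000, 4)
m_repair = mttr(8, 4)
print(f"MTBF = {m_between:.0f} h, MTTR = {m_repair:.1f} h")
print(f"Availability = {availability(m_between, m_repair):.4%}")
```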

Next Steps

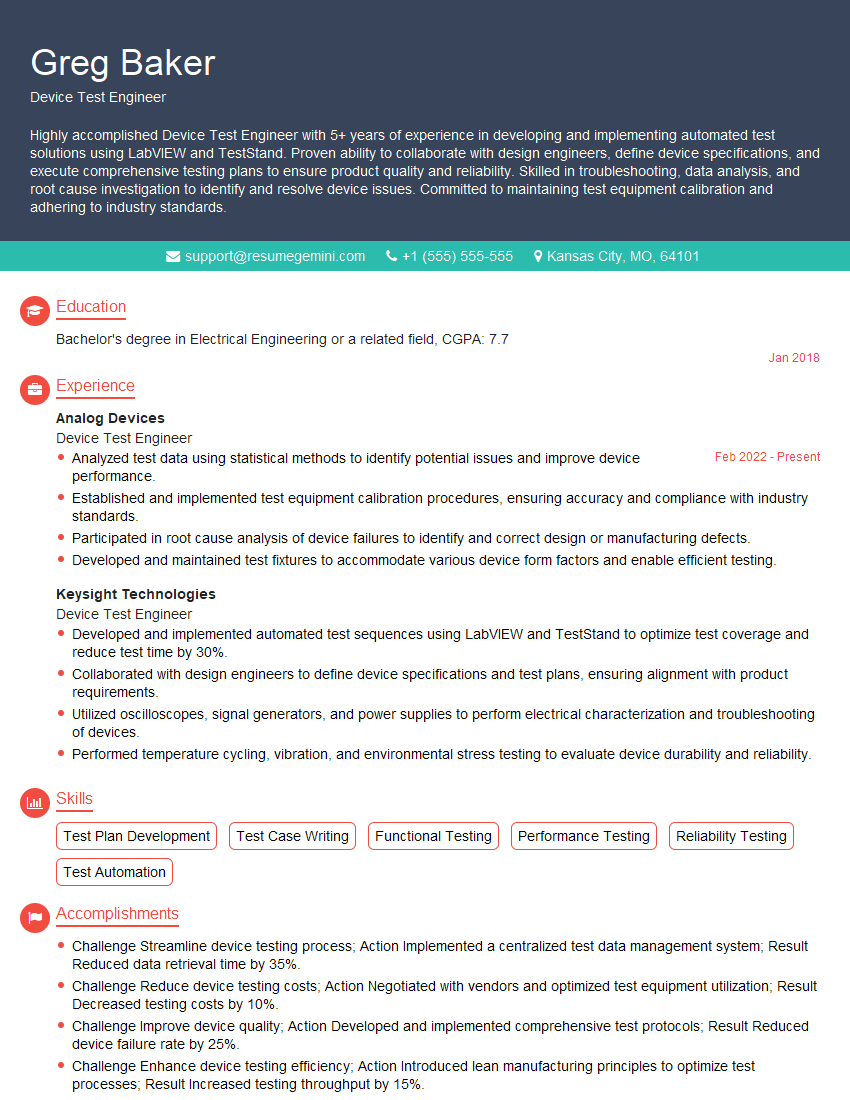

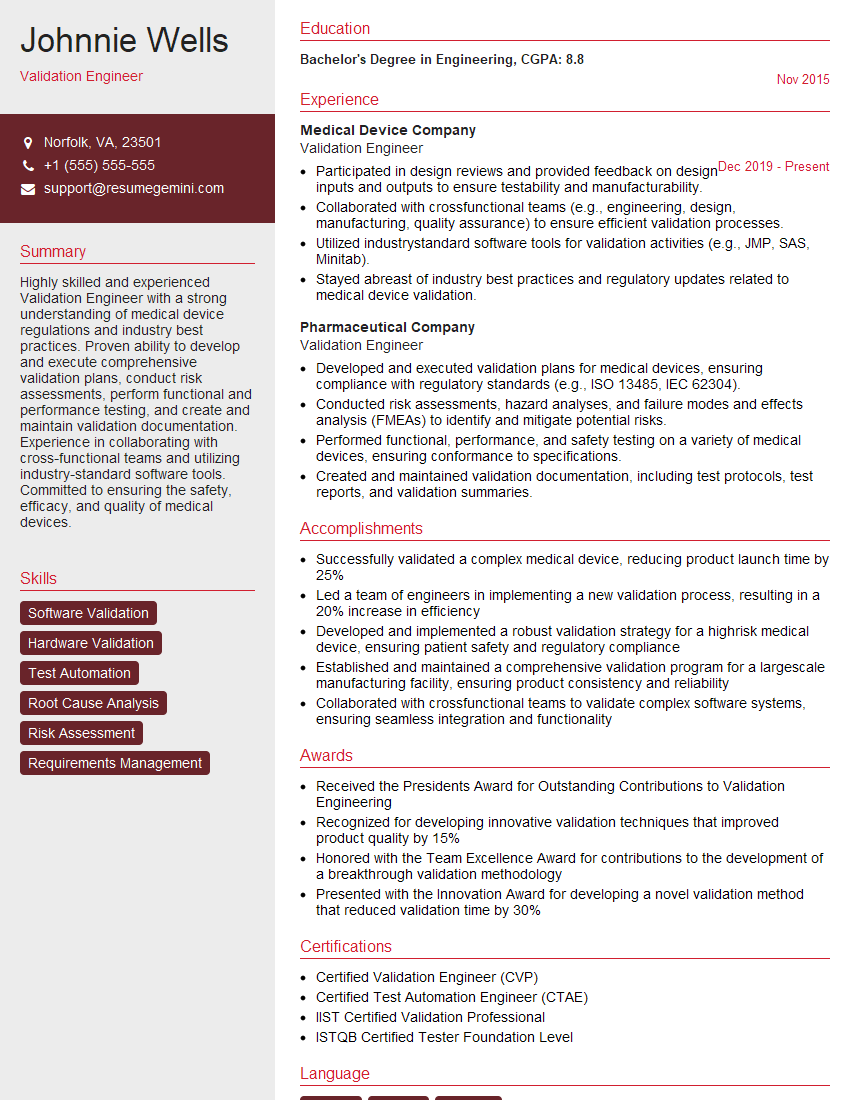

Mastering Component and Device Testing opens doors to exciting career opportunities in various industries, offering excellent growth potential and competitive salaries. A strong resume is crucial for showcasing your skills and experience to potential employers. Creating an ATS-friendly resume is essential to ensure your application gets noticed. ResumeGemini is a trusted resource to help you build a professional, impactful resume that highlights your expertise in Component and Device Testing. Examples of resumes tailored to this field are available on ResumeGemini to guide you in building yours.
