The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Computer Vision and Machine Learning interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Computer Vision and Machine Learning Interviews

Q 1. Explain the difference between supervised, unsupervised, and reinforcement learning.

Machine learning algorithms are broadly categorized into three types based on the nature of the data and learning process: supervised, unsupervised, and reinforcement learning.

- Supervised Learning: This approach involves training a model on a labeled dataset, where each data point is paired with its corresponding correct output. Think of it like a teacher guiding a student – the teacher (labeled data) provides the correct answers, allowing the student (model) to learn the relationship between inputs and outputs. Examples include image classification (where images are labeled with their respective categories) and object detection (where images are annotated with bounding boxes around objects).

- Unsupervised Learning: Here, the model learns from unlabeled data, identifying patterns and structures without explicit guidance. It’s like giving a student a puzzle without the picture on the box; they must figure out the structure and relationships on their own. Common techniques include clustering (grouping similar data points together) and dimensionality reduction (reducing the number of variables while preserving important information). In computer vision, this could involve grouping similar images together based on visual features or identifying underlying features in high-dimensional image data.

- Reinforcement Learning: This approach trains an agent to make decisions in an environment by interacting with it and receiving rewards or penalties. Imagine training a robot to navigate a maze; the robot learns by trial and error, receiving rewards for reaching the goal and penalties for hitting walls. This paradigm is less commonly applied to image processing itself but is increasingly relevant for tasks like robot navigation guided by image inputs.

In essence, the key difference lies in the availability of labeled data and the feedback mechanism used during the learning process.

Q 2. Describe different types of convolutional neural networks (CNNs) and their applications.

Convolutional Neural Networks (CNNs) are a specialized type of neural network designed for processing grid-like data, such as images. Different architectures exist, each suited for specific tasks:

- LeNet-5: One of the earliest and most influential CNNs, primarily used for digit recognition. Its simple architecture paved the way for more complex models.

- AlexNet: A deeper CNN that significantly improved the accuracy of image classification on the ImageNet dataset. It popularized ReLU activation functions and dropout regularization in deep CNNs.

- VGGNet: Characterized by its use of small convolutional filters (3×3) stacked multiple times, achieving higher accuracy with increased depth.

- GoogLeNet (Inception): Introduced the inception module, which combines different convolutional filters of various sizes to capture multi-scale features efficiently.

- ResNet: Addresses the vanishing gradient problem in deep networks through residual connections, enabling the training of extremely deep models with improved accuracy.

- U-Net: Specifically designed for biomedical image segmentation, its architecture allows for precise localization of objects within images.

Applications are diverse and include:

- Image Classification: Identifying the main object in an image (e.g., cat, dog, car).

- Object Detection: Locating and classifying multiple objects within an image (e.g., self-driving cars identifying pedestrians and vehicles).

- Image Segmentation: Pixel-level labeling of objects in an image (e.g., medical image analysis to segment organs).

- Image Generation: Creating new images (e.g., generating realistic faces or artistic styles).

Q 3. What are the common challenges in object detection and how can they be addressed?

Object detection, while significantly advanced, still faces several challenges:

- Small Objects: Detecting tiny objects can be difficult as their features may be poorly represented in the input image. This can be mitigated by using higher resolution images, feature pyramids (such as a Feature Pyramid Network backbone paired with Faster R-CNN), or specialized architectures designed for multi-scale feature extraction.

- Occlusion: When objects are partially hidden behind others, detecting them becomes challenging. Advanced techniques involving context modeling and attention mechanisms can help address this issue.

- Class Imbalance: Some object classes may be far more frequent than others in a dataset, leading to biased models that perform poorly on less frequent classes. Strategies such as data augmentation for under-represented classes or cost-sensitive learning can help balance the training process.

- Background Clutter: Distinguishing objects from complex or noisy backgrounds can be challenging. Effective feature extraction and robust background subtraction techniques are essential.

- Computational Cost: Real-time object detection in high-resolution video streams demands efficient algorithms and hardware. Optimization techniques like pruning, quantization, and efficient architectures are necessary for deployment on resource-constrained platforms.

Addressing these challenges often involves a combination of improved algorithms, architectural innovations, and data preprocessing techniques.

Q 4. Explain the concept of transfer learning and its benefits in computer vision.

Transfer learning leverages knowledge gained from solving one problem to improve performance on a related but different problem. Instead of training a model from scratch, we use a pre-trained model (often trained on a large dataset like ImageNet) and fine-tune it on a smaller dataset specific to our task. Imagine learning to drive a car after already knowing how to ride a bicycle – the underlying principles of balance and control transfer, making learning to drive easier.

Benefits in computer vision are numerous:

- Reduced Training Time: Significantly less training data and computational resources are required compared to training from scratch.

- Improved Accuracy: Leveraging the knowledge from a pre-trained model on a massive dataset often leads to better performance, especially when dealing with limited data.

- Easier Implementation: Pre-trained models are readily available and can be easily integrated into projects.

A common approach involves freezing the weights of the initial layers of a pre-trained CNN (which learn general features) and only training the later layers (which are specific to the task). This allows the model to adapt to the new dataset while retaining valuable features learned from the larger dataset.

Q 5. What are some common image preprocessing techniques used in computer vision?

Image preprocessing is crucial for improving the performance and efficiency of computer vision algorithms. Common techniques include:

- Resizing: Scaling images to a consistent size ensures uniformity in input to the model.

- Cropping: Removing irrelevant parts of the image to focus on the region of interest.

- Normalization: Scaling pixel values to a specific range (e.g., 0-1) to stabilize training and improve model convergence.

- Data Augmentation: Artificially increasing the size of the dataset by creating modified versions of existing images through techniques like rotation, flipping, cropping, and adding noise. This helps improve model robustness and generalization.

- Noise Reduction: Applying filters to remove unwanted noise from images (e.g., Gaussian blur, median filter).

- Color Space Conversion: Changing the color space (e.g., RGB to grayscale, HSV) to highlight specific features or simplify processing.

The choice of preprocessing techniques depends on the specific task and the characteristics of the dataset.
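As a rough illustration of three of the techniques above (normalization, flipping as an augmentation, and cropping), here is a minimal sketch in plain Python. The 4x4 image and the helper names `normalize`, `horizontal_flip`, and `center_crop` are made up for this example; real pipelines would normally rely on an image library instead of nested lists.

```python
def normalize(image, max_value=255.0):
    """Scale pixel values from [0, max_value] to the range [0, 1]."""
    return [[pixel / max_value for pixel in row] for row in image]


def horizontal_flip(image):
    """Mirror the image left to right (a simple augmentation)."""
    return [row[::-1] for row in image]


def center_crop(image, size):
    """Keep the central size x size window of the image."""
    height, width = len(image), len(image[0])
    top = (height - size) // 2
    left = (width - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]


# A hypothetical 4x4 grayscale image with 8-bit intensities.
image = [
    [0, 51, 102, 153],
    [51, 102, 153, 204],
    [102, 153, 204, 255],
    [153, 204, 255, 0],
]

normalized = normalize(image)      # values now lie in [0, 1]
flipped = horizontal_flip(image)   # same pixels, mirrored columns
cropped = center_crop(image, 2)    # central 2x2 region
```

Each helper returns a new image rather than modifying its input, which makes it easy to chain the steps in whatever order a given task needs.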

Q 6. How does backpropagation work in neural networks?

Backpropagation is an algorithm used to train neural networks by calculating the gradient of the loss function with respect to the network’s weights. Imagine it as figuring out how much each weight contributed to the error in the prediction. This gradient then guides the adjustment of weights to minimize the error. It’s a chain rule application where the error is propagated backward through the network layer by layer.

The process involves:

- Forward Pass: The input data is fed forward through the network, producing an output.

- Loss Calculation: The difference between the predicted output and the actual target is computed using a loss function.

- Backward Pass: The gradient of the loss function with respect to each weight is calculated using the chain rule. This involves propagating the error signal back through the network, layer by layer.

- Weight Update: The weights are updated using an optimization algorithm (e.g., gradient descent) based on the calculated gradients. This adjustment aims to reduce the loss function’s value.

This iterative process of forward and backward passes continues until the loss function is minimized to an acceptable level, indicating that the network has learned the underlying patterns in the data.
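The four steps can be sketched on a deliberately tiny network with one hidden unit, so that every chain-rule factor is visible. The network shape, the input, the target, and the learning rate below are all assumptions chosen for illustration; frameworks compute these gradients automatically.

```python
import math


def forward(x, w1, w2):
    """Forward pass: input -> tanh hidden unit -> linear output."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y


def loss_fn(y, target):
    """Squared error between prediction and target."""
    return (y - target) ** 2


def backward(x, target, w1, w2):
    """Backward pass: apply the chain rule from the loss back to each weight."""
    h, y = forward(x, w1, w2)
    dloss_dy = 2.0 * (y - target)      # d(loss)/d(output)
    dloss_dw2 = dloss_dy * h           # output = w2 * h
    dloss_dh = dloss_dy * w2           # error propagated to the hidden unit
    dh_dz = 1.0 - h ** 2               # derivative of tanh
    dloss_dw1 = dloss_dh * dh_dz * x   # z = w1 * x
    return dloss_dw1, dloss_dw2


# Hypothetical training example and starting weights.
x, target = 1.5, 0.5
w1, w2 = 0.8, -0.3
learning_rate = 0.1

initial_loss = loss_fn(forward(x, w1, w2)[1], target)
for step in range(200):
    grad_w1, grad_w2 = backward(x, target, w1, w2)
    w1 -= learning_rate * grad_w1      # gradient descent weight update
    w2 -= learning_rate * grad_w2
final_loss = loss_fn(forward(x, w1, w2)[1], target)
```

A useful sanity check in practice is to compare the analytic gradients from `backward` against a finite-difference estimate of the loss.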

Q 7. Describe different loss functions used in computer vision tasks.

Various loss functions are used in computer vision, each suited for specific tasks:

- Mean Squared Error (MSE): Measures the average squared difference between predicted and actual values. Commonly used for regression tasks, such as depth estimation.

- Cross-Entropy Loss: Used for classification problems. Measures the difference between the predicted probability distribution and the true distribution. Commonly used in image classification tasks.

- Hinge Loss: Used in support vector machines (SVMs) and some other classification algorithms. Encourages large margins between classes.

- Binary Cross-Entropy: A special case of cross-entropy loss used for binary classification problems (e.g., determining if an image contains a specific object or not).

- Focal Loss: Addresses class imbalance by down-weighting the loss from easy, well-classified examples so that training concentrates on hard ones; an optional per-class weighting factor can be applied on top. Particularly useful in object detection where the number of positive samples (objects) is usually much smaller than negative samples (background).

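- Intersection over Union (IoU) Loss: Based on the overlap between the predicted bounding box and the ground truth bounding box, typically computed as 1 − IoU. Used in object detection to train bounding box regression directly on localization quality; IoU itself is also the standard measure for evaluating localization.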

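- Dice Loss: Used for image segmentation, measuring the overlap between the predicted segmentation mask and the ground truth mask. Because it is computed from true positives, false positives, and false negatives and ignores true negatives, it is less dominated by a large background region than pixel-wise cross-entropy.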

The selection of the appropriate loss function significantly impacts the performance of the model. The choice depends on the specific computer vision task and the desired characteristics of the model’s output.
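To make a few of these losses concrete, here is a small sketch of binary cross-entropy, a binary focal loss, and Dice loss. The focal loss follows the usual formulation, cross-entropy scaled by (1 − p_t)^γ with a class weight α; the defaults γ = 2 and α = 0.25 are commonly quoted values and should be treated as assumptions here, as should the example probabilities.

```python
import math


def binary_cross_entropy(p, y):
    """p: predicted probability of the positive class; y: label in {0, 1}."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss: cross-entropy scaled by (1 - p_t) ** gamma."""
    p_t = p if y == 1 else 1 - p            # probability given to the true class
    alpha_t = alpha if y == 1 else 1 - alpha
    return -alpha_t * (1 - p_t) ** gamma * math.log(p_t)


def dice_loss(pred_mask, true_mask, eps=1e-7):
    """1 - Dice coefficient for two flat binary masks of equal length."""
    intersection = sum(p * t for p, t in zip(pred_mask, true_mask))
    total = sum(pred_mask) + sum(true_mask)
    return 1.0 - (2.0 * intersection + eps) / (total + eps)


# An easy positive (confidently correct) versus a hard positive.
easy_ce = binary_cross_entropy(0.95, 1)
hard_ce = binary_cross_entropy(0.30, 1)
easy_fl = focal_loss(0.95, 1)
hard_fl = focal_loss(0.30, 1)

# Dice loss on hypothetical 4-pixel masks.
perfect = dice_loss([1, 1, 0, 0], [1, 1, 0, 0])
partial = dice_loss([1, 1, 0, 0], [1, 0, 0, 0])
```

Relative to cross-entropy, the focal loss shrinks the easy example's contribution far more than the hard example's, which is the mechanism that keeps abundant easy background samples from dominating training.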

Q 8. Explain the difference between precision and recall in object detection.

In object detection, precision and recall are crucial metrics that assess the accuracy of your model’s predictions. Think of it like a search for a specific type of flower (your object) in a vast field (your image).

Precision answers: “Out of all the flowers my model identified, how many were actually the right kind?” A high-precision model makes few false positive errors – it’s very careful not to label something as the target flower unless it’s extremely confident. A low precision means many of its identifications were wrong.

Recall answers: “Out of all the flowers of that type actually present in the field, how many did my model correctly identify?” A high-recall model finds most or all of the target flowers; it minimizes false negative errors – it tries to find every instance of the target flower, even at the cost of some false positives. Low recall means it missed a lot of flowers.

Example: Imagine a self-driving car detecting pedestrians. High recall is crucial here; we would rather have the car be overly cautious and occasionally mistake a mailbox for a person (a false positive, which lowers precision) than miss a real pedestrian (a false negative, which lowers recall) and cause an accident. Likewise, a medical image analysis system looking for cancerous cells might prioritize high recall: it is better to have some false positives (further investigation required) than to miss a single cancerous cell (false negative). Precision takes priority when false alarms are the costly error, for example when every detection triggers an expensive manual review.
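Sticking with the flower analogy, both metrics reduce to simple ratios of counts. The sketch below assumes a field with 10 target flowers in which the model flags 8 plants and 6 of those are correct; the counts and the function name are invented for illustration.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Return (precision, recall) computed from detection counts."""
    predicted = true_positives + false_positives   # everything the model flagged
    actual = true_positives + false_negatives      # everything really present
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


# 8 detections, 6 correct -> 2 false positives; 10 real flowers -> 4 missed.
precision, recall = precision_recall(
    true_positives=6, false_positives=2, false_negatives=4
)
```

Here precision is 6/8 = 0.75 and recall is 6/10 = 0.6: most of what the model flagged was right, but it still missed four of the ten flowers.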

Q 9. What are the advantages and disadvantages of using different activation functions?

Activation functions introduce non-linearity into neural networks, enabling them to learn complex patterns. Different activation functions have distinct characteristics that make them suitable for various tasks.

- Sigmoid: Outputs values between 0 and 1, often used in binary classification for the output layer. Disadvantages: Suffers from the vanishing gradient problem (gradients become very small during backpropagation, hindering learning), and outputs are not zero-centered.

- Tanh (Hyperbolic Tangent): Similar to sigmoid but outputs values between -1 and 1. Advantages: Zero-centered, which can speed up training. Disadvantages: Still suffers from the vanishing gradient problem.

- ReLU (Rectified Linear Unit): Outputs the input if positive, otherwise 0. Advantages: Simple, computationally efficient, mitigates the vanishing gradient problem. Disadvantages: Can suffer from the ‘dying ReLU’ problem where neurons become inactive.

- Leaky ReLU: A variation of ReLU that allows a small, non-zero gradient for negative inputs. Advantages: Addresses the dying ReLU problem.

- Softmax: Often used in the output layer for multi-class classification; outputs a probability distribution over multiple classes.

The choice of activation function depends on the specific problem. For hidden layers, ReLU or its variations are often preferred for their efficiency and ability to avoid the vanishing gradient problem. For the output layer, sigmoid or softmax is usually chosen depending on whether the task is binary or multi-class classification, respectively.
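The functions above are short enough to write out directly. This is a plain-Python sketch for scalar inputs; the leaky slope of 0.01 is a typical but arbitrary choice, and `sigmoid_grad` is included only to show how small the gradient becomes for large inputs.

```python
import math


def sigmoid(x):
    """Squash x into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of the sigmoid; at most 0.25 and tiny for large |x|."""
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh(x):
    """Squash x into (-1, 1); zero-centered."""
    return math.tanh(x)


def relu(x):
    """Pass positive inputs through, clamp negatives to 0."""
    return max(0.0, x)


def leaky_relu(x, slope=0.01):
    """Like ReLU, but keeps a small slope for negative inputs."""
    return x if x > 0 else slope * x


def softmax(logits):
    """Turn a list of scores into a probability distribution."""
    largest = max(logits)                            # subtract max for stability
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


probabilities = softmax([1.0, 2.0, 3.0])
saturated_gradient = sigmoid_grad(10.0)   # illustrates the vanishing gradient
```

Comparing `sigmoid_grad(0.0)` with `sigmoid_grad(10.0)` shows why saturated sigmoid units pass almost no gradient back through the network.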

Q 10. How do you evaluate the performance of a computer vision model?

Evaluating a computer vision model involves using appropriate metrics to assess its performance on unseen data. The process usually involves these steps:

- Data Splitting: Divide your dataset into training, validation, and test sets. The test set is crucial for obtaining an unbiased estimate of the model’s generalization performance.

- Metric Selection: Choose relevant metrics based on the task. For example:

  - Classification: Accuracy, precision, recall, F1-score, AUC (Area Under the ROC Curve)

  - Object Detection: Mean Average Precision (mAP), Intersection over Union (IoU)

  - Segmentation: IoU, Dice coefficient

- Evaluation on Test Set: Run the trained model on the held-out test set and calculate the chosen metrics. This gives you an objective measure of the model’s performance on data it has never seen before.

- Error Analysis: Examine the model’s errors on the test set to understand its weaknesses. This helps guide improvements to the model or data.

Example: A facial recognition system might be evaluated using accuracy and F1-score on a test set of images. Low accuracy might indicate that the model is not generalizing well, while low F1-score might suggest an imbalance in precision and recall, indicating potentially more false positives or false negatives.
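IoU appears in both the detection and segmentation metrics above, so a short sketch of the bounding-box version may help. Boxes are assumed to be `(x_min, y_min, x_max, y_max)` tuples, and the two example boxes are hypothetical.

```python
def box_iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    x_left = max(box_a[0], box_b[0])
    y_top = max(box_a[1], box_b[1])
    x_right = min(box_a[2], box_b[2])
    y_bottom = min(box_a[3], box_b[3])

    # Width and height are clamped at zero when the boxes do not overlap.
    intersection = max(0.0, x_right - x_left) * max(0.0, y_bottom - y_top)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


prediction = (50, 50, 150, 150)
ground_truth = (100, 100, 200, 200)
iou = box_iou(prediction, ground_truth)
```

For these boxes the overlap is 2,500 pixels out of a union of 17,500, giving an IoU of about 0.14. Detection benchmarks typically count a prediction as correct only when its IoU with a ground-truth box exceeds a chosen threshold (0.5 is a common choice), and mAP is then computed from those matches.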

Q 11. Explain the concept of overfitting and how to mitigate it.

Overfitting occurs when a model learns the training data too well, including its noise and outliers, resulting in poor generalization to unseen data. Imagine a student memorizing the answers to a practice test instead of understanding the underlying concepts; they’ll do well on that specific test but fail a similar one.

Mitigation Techniques:

- Cross-validation: Train and validate the model on different subsets of the training data, giving a more robust estimate of performance and helping identify overfitting early.

- Regularization: Adding penalty terms to the loss function to discourage complex models. This includes techniques like L1 and L2 regularization (discussed in the next question).

- Data Augmentation: Artificially increasing the size of the training dataset by creating modified versions of existing data (e.g., rotating, flipping, cropping images). This helps the model become more robust to variations in the data.

- Early Stopping: Monitor the model’s performance on a validation set during training and stop training when the performance starts to decrease. This prevents the model from overfitting to the training data.

- Dropout: Randomly ignoring neurons during training, forcing the network to learn more robust features.

By applying these techniques, you can build models that generalize well to new data, avoiding the pitfalls of overfitting.
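Early stopping is the easiest of these to show in a few lines. The sketch below works on a precomputed list of validation losses rather than a real training loop; the loss values and the `patience` of 3 epochs are illustrative assumptions.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs; the weights from `best_epoch` would be restored.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Hypothetical validation curve: improves, then starts to rise (overfitting).
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(val_losses)
```

With this curve the best validation loss occurs at epoch 3, and training halts at epoch 6 after three epochs without improvement. The patience window avoids stopping on a single noisy epoch.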

Q 12. Describe different regularization techniques used in machine learning.

Regularization techniques constrain the complexity of a machine learning model to prevent overfitting. Most work by adding penalty terms to the loss function that discourage excessively large weights; others, such as dropout, inject noise during training instead.

- L1 Regularization (Lasso): Adds a penalty term proportional to the absolute value of the weights. This tends to drive some weights to exactly zero, leading to feature selection; it encourages sparsity in the model.

- L2 Regularization (Ridge): Adds a penalty term proportional to the square of the weights. This shrinks the weights towards zero but doesn’t drive them to exactly zero. It reduces the influence of individual features.

- Elastic Net: A combination of L1 and L2 regularization. It benefits from both feature selection (L1) and smaller weights (L2), making it a robust choice.

- Dropout: A regularization technique specific to neural networks. It randomly ignores neurons during training, forcing the network to learn more robust features that aren’t reliant on any single neuron.

The choice of regularization technique often depends on the data and the specific model. L2 regularization is a common starting point due to its computational efficiency. L1 might be preferred if feature selection is desirable. Elastic net often provides a good balance.
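The difference between the L1 and L2 penalties shows up clearly in a one-weight toy problem. The sketch below computes each penalty and then applies the closed-form minimizers of 0.5·(w − w0)² plus the penalty: soft-thresholding for L1 and proportional shrinkage for L2. The weights and the strength `lam = 0.1` are made up for illustration.

```python
import math


def l1_penalty(weights, lam):
    """Lasso penalty: lam * sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """Ridge penalty: lam * sum of squared weights."""
    return lam * sum(w * w for w in weights)


def elastic_net_penalty(weights, lam, l1_ratio=0.5):
    """Weighted mix of the L1 and L2 penalties."""
    return (l1_ratio * l1_penalty(weights, lam)
            + (1.0 - l1_ratio) * l2_penalty(weights, lam))


def l1_shrink(w0, lam):
    """Minimizer of 0.5 * (w - w0)**2 + lam * |w| (soft-thresholding)."""
    return math.copysign(max(abs(w0) - lam, 0.0), w0)


def l2_shrink(w0, lam):
    """Minimizer of 0.5 * (w - w0)**2 + lam * w**2."""
    return w0 / (1.0 + 2.0 * lam)


weights = [0.05, -0.4, 2.0]
lam = 0.1
after_l1 = [l1_shrink(w, lam) for w in weights]   # small weight becomes exactly 0
after_l2 = [l2_shrink(w, lam) for w in weights]   # all weights scaled, none zero
```

The smallest weight is set to exactly zero under L1 but only scaled down under L2, which is the sparsity behaviour described above.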

Q 13. What are some common metrics used to evaluate the performance of a machine learning model?

The choice of metrics depends heavily on the specific machine learning task and the goals. However, some commonly used metrics include:

- Accuracy: The ratio of correctly classified instances to the total number of instances. Simple to understand but can be misleading in imbalanced datasets.

- Precision: The proportion of correctly predicted positive instances out of all instances predicted as positive. High precision means few false positives.

- Recall (Sensitivity): The proportion of correctly predicted positive instances out of all actual positive instances. High recall means few false negatives.

- F1-Score: The harmonic mean of precision and recall. Provides a balanced measure considering both false positives and false negatives. Useful when dealing with imbalanced datasets.

- AUC (Area Under the ROC Curve): Measures the ability of a classifier to distinguish between classes across different thresholds. Useful for evaluating classifiers with varying thresholds.

- RMSE (Root Mean Squared Error): Measures the average magnitude of errors in regression tasks. Lower RMSE indicates better performance.

- R-squared: Represents the proportion of variance in the dependent variable explained by the model in regression tasks. Higher R-squared indicates a better fit.

For example, in a fraud detection system, recall (minimizing false negatives) might be more important than precision (minimizing false positives), whereas in spam filtering, precision (minimizing false positives) might be more critical.
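A quick numerical example shows why accuracy can mislead on imbalanced data, alongside the two regression metrics. The label lists below are hypothetical: 2 positives in 100 samples, scored against a model that always predicts the negative class.

```python
import math


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Fraction of actual positives that were predicted positive."""
    actual = sum(1 for t in y_true if t == positive)
    found = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    return found / actual if actual else 0.0


def rmse(y_true, y_pred):
    """Root mean squared error for regression."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def r_squared(y_true, y_pred):
    """1 - (residual sum of squares / total sum of squares)."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Imbalanced classification: a model that never predicts the rare class.
labels = [1] * 2 + [0] * 98
always_negative = [0] * 100
acc = accuracy(labels, always_negative)
rec = recall(labels, always_negative)

# Small regression example.
error = rmse([3.0, 5.0, 7.0], [2.0, 5.0, 9.0])
fit = r_squared([3.0, 5.0, 7.0], [2.0, 5.0, 9.0])
```

The always-negative model reaches 98% accuracy while its recall is zero, which is why accuracy alone says little when classes are imbalanced.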

Q 14. Explain the difference between classification and regression.

Classification and regression are two fundamental types of supervised machine learning tasks, differing in the nature of the output variable.

Classification: Predicts a categorical output variable. The output belongs to a finite set of classes or categories. Examples include image classification (cat, dog, bird), spam detection (spam, not spam), and medical diagnosis (disease A, disease B, healthy).

Regression: Predicts a continuous output variable. The output can take any value within a range. Examples include predicting house prices, stock prices, temperature, or the age of a person based on an image.

Key Differences Summarized:

| Feature | Classification | Regression |
|---|---|---|
| Output Variable | Categorical | Continuous |
| Goal | Assign data points to categories | Predict a numerical value |
| Evaluation Metrics | Accuracy, precision, recall, F1-score, AUC | RMSE, R-squared, MAE (Mean Absolute Error) |

The choice between classification and regression depends entirely on the nature of the problem and the desired output.

Q 15. What is the role of feature engineering in machine learning?

Feature engineering is the process of using domain knowledge to create features that make machine learning algorithms work better. It’s like preparing the ingredients for a recipe – the better the ingredients, the better the final dish. Instead of raw data, we create new features that are more informative and relevant to the task at hand. This can involve transforming existing features, combining them, or creating entirely new ones.

For example, imagine you’re building a model to predict house prices. Raw data might include square footage, number of bedrooms, and location. Feature engineering could involve creating new features like ‘price per square foot’, ‘distance to nearest school’, or ‘average income in the neighborhood’. These new features often capture more meaningful relationships than the raw data alone.

Effective feature engineering requires a deep understanding of the data and the problem you’re trying to solve. It’s an iterative process – you create features, test them, and refine them based on the model’s performance. Poor feature engineering can lead to inaccurate and unreliable models, while good feature engineering can drastically improve model accuracy and efficiency.

Q 16. Describe different dimensionality reduction techniques.

Dimensionality reduction techniques aim to reduce the number of variables in a dataset while preserving important information. High-dimensional data can lead to computational challenges (the ‘curse of dimensionality’), and often contains redundant or irrelevant information. These techniques are crucial for improving model performance, reducing computational cost, and visualizing data.

- Principal Component Analysis (PCA): A linear transformation that projects data onto a lower-dimensional subspace spanned by principal components – directions of maximum variance. It’s widely used for data visualization and feature extraction.

- t-distributed Stochastic Neighbor Embedding (t-SNE): A non-linear technique that excels at visualizing high-dimensional data in a low-dimensional space (often 2D or 3D). It focuses on preserving local neighborhood structures in the data.

- Linear Discriminant Analysis (LDA): A supervised technique that aims to maximize the separation between different classes in the data. It projects data onto a lower-dimensional subspace that optimizes class separability.

- Autoencoders: Neural networks trained to reconstruct their input. By forcing the network to learn a compressed representation (bottleneck layer), we can extract lower-dimensional features that capture essential information.

Choosing the right technique depends on the specific dataset and task. For example, PCA is suitable for unsupervised tasks where the goal is to reduce dimensionality without considering class labels, while LDA is better suited for supervised classification tasks.
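As a sketch of what PCA does, the example below reduces hypothetical 2-D points to 1-D. It finds the direction of maximum variance with power iteration on the covariance matrix, which is a simplification chosen to avoid external libraries; library implementations typically use an SVD or a full eigendecomposition.

```python
import math


def covariance_matrix(points):
    """Center 2-D points and return (centered_points, 2x2 covariance)."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    centered = [(p[0] - mean_x, p[1] - mean_y) for p in points]
    cxx = sum(x * x for x, _ in centered) / n
    cyy = sum(y * y for _, y in centered) / n
    cxy = sum(x * y for x, y in centered) / n
    return centered, [[cxx, cxy], [cxy, cyy]]


def top_component(cov, iterations=100):
    """Power iteration: repeatedly multiply by cov and renormalize.

    Assumes the start vector is not orthogonal to the top eigenvector.
    """
    v = [1.0, 0.0]
    for _ in range(iterations):
        w = [cov[0][0] * v[0] + cov[0][1] * v[1],
             cov[1][0] * v[0] + cov[1][1] * v[1]]
        norm = math.hypot(w[0], w[1])
        v = [w[0] / norm, w[1] / norm]
    return v


# Hypothetical points lying close to the line y = x.
points = [(2.0, 1.9), (1.0, 1.1), (0.0, 0.1), (-1.0, -0.9), (-2.0, -2.2)]
centered, cov = covariance_matrix(points)
pc1 = top_component(cov)

# Project each centered point onto the first principal component (2-D -> 1-D).
projected = [x * pc1[0] + y * pc1[1] for x, y in centered]
explained = (sum(p * p for p in projected) / len(projected)) / (cov[0][0] + cov[1][1])
```

Because the points lie almost on a line, the single projected coordinate retains nearly all of the variance, which is what `explained` measures.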

Q 17. Explain the concept of bias-variance tradeoff.

The bias-variance tradeoff is a fundamental concept in machine learning. It describes the balance between a model’s ability to fit the training data (low bias) and its ability to generalize to unseen data (low variance).

Bias refers to the error introduced by approximating a real-world problem, which might be complex, by a simplified model. High bias leads to underfitting, where the model is too simple to capture the underlying patterns in the data. Think of it like trying to fit a straight line to data that’s clearly curved.

Variance refers to the model’s sensitivity to fluctuations in the training data. High variance leads to overfitting, where the model learns the training data too well, including its noise, and performs poorly on unseen data. Imagine a model that memorizes the training examples instead of learning general patterns.

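The goal is to find the sweet spot that minimizes total error. Making a model more flexible typically reduces bias but increases variance, and vice versa, so the two usually cannot both be driven to zero. In practice this means choosing an appropriate model complexity and using techniques like regularization to prevent overfitting.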
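The tradeoff can be observed empirically by retraining two extreme models on many noisy training sets and looking at their predictions at one test point. Everything in this sketch is an assumption made for illustration: the true function x², the noise level, the test point, and the two toy models (a constant predictor and a 1-nearest-neighbor predictor).

```python
import random


def true_function(x):
    return x ** 2


def constant_model(train_x, train_y, x0):
    """Very simple model: always predicts the mean training target."""
    return sum(train_y) / len(train_y)


def nearest_neighbor_model(train_x, train_y, x0):
    """Very flexible model: copies the target of the closest training point."""
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x0))
    return train_y[nearest]


def bias_and_variance(model, x0, noise_std=0.5, trials=2000, seed=0):
    """Estimate squared bias and variance of a model's prediction at x0."""
    rng = random.Random(seed)
    train_x = [i / 10 for i in range(11)]
    predictions = []
    for _ in range(trials):
        # Each trial draws a fresh noisy training set from the same process.
        train_y = [true_function(x) + rng.gauss(0.0, noise_std) for x in train_x]
        predictions.append(model(train_x, train_y, x0))
    mean_prediction = sum(predictions) / trials
    bias_squared = (mean_prediction - true_function(x0)) ** 2
    variance = sum((p - mean_prediction) ** 2 for p in predictions) / trials
    return bias_squared, variance


simple_bias, simple_var = bias_and_variance(constant_model, x0=1.0)
flexible_bias, flexible_var = bias_and_variance(nearest_neighbor_model, x0=1.0)
```

The constant model shows high bias and low variance (underfitting), while the nearest-neighbor model shows almost no bias but a variance close to the noise level (overfitting to each training set).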

Q 18. What are some common challenges in deploying machine learning models?

Deploying machine learning models in real-world applications comes with several challenges:

- Data drift: The statistical properties of the input data change over time, causing model performance to degrade. This requires model retraining or adaptation.

- Monitoring and maintenance: Models need continuous monitoring to detect performance degradation and potential issues. This includes tracking key metrics, retraining schedules, and handling unexpected input data.

- Scalability: Models need to handle large volumes of data and high throughput in production environments. This may involve distributed computing and optimized infrastructure.

- Integration with existing systems: Seamless integration with existing software and hardware systems is essential for successful deployment.

- Explainability and interpretability: Understanding why a model makes a particular prediction is crucial, especially in high-stakes applications. This requires using interpretable models or techniques to explain model decisions.

- Security and privacy: Protecting sensitive data and ensuring model security are paramount concerns.

Addressing these challenges requires careful planning, robust infrastructure, and a continuous monitoring and maintenance process.

Q 19. How do you handle imbalanced datasets in machine learning?

Imbalanced datasets, where one class significantly outnumbers others, pose a challenge for machine learning models because they tend to be biased towards the majority class. Several techniques can help address this:

- Resampling: This involves either oversampling the minority class (duplicating existing samples or synthesizing new ones, for example with SMOTE) or undersampling the majority class (removing samples). Careful consideration is needed to avoid overfitting when oversampling and loss of information when undersampling.

- Cost-sensitive learning: Assigning different misclassification costs to different classes. For example, misclassifying a minority class sample might be given a higher cost than misclassifying a majority class sample, encouraging the model to focus more on the minority class.

- Ensemble methods: Combining multiple models trained on different subsets of the data or with different resampling techniques can improve performance on imbalanced datasets.

- Anomaly detection techniques: If the minority class represents anomalies or outliers, techniques specifically designed for anomaly detection may be more appropriate than traditional classification methods.

The best approach depends on the specific dataset and the nature of the imbalance. Often, a combination of techniques is used to achieve optimal performance.
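Two of these ideas, cost-sensitive class weights and random oversampling, can be sketched with the standard library alone. The weighting formula `n_samples / (n_classes * class_count)` is one common inverse-frequency heuristic, not the only option, and the 90/10 dataset is hypothetical.

```python
import random
from collections import Counter


def class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen minority samples until all classes match the largest."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_samples, out_labels = list(samples), list(labels)
    for label, count in counts.items():
        pool = [s for s, l in zip(samples, labels) if l == label]
        for _ in range(target - count):
            out_samples.append(rng.choice(pool))
            out_labels.append(label)
    return out_samples, out_labels


# Hypothetical dataset: 90 majority samples (class 0), 10 minority (class 1).
samples = list(range(100))
labels = [0] * 90 + [1] * 10

weights = class_weights(labels)
balanced_samples, balanced_labels = random_oversample(samples, labels)
```

The weights would multiply each sample's loss term so that errors on the minority class cost more. Oversampling should be applied to the training split only, so that duplicated samples do not leak into validation or test data.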

Q 20. Explain the difference between a generative and discriminative model.

Generative and discriminative models represent two different approaches to machine learning. They differ primarily in what they learn and how they make predictions:

Discriminative models learn the boundary between different classes. They focus on directly modeling the conditional probability P(y|x), where x is the input and y is the output (class label). Think of them as learning a decision boundary to separate different classes. Examples include Support Vector Machines (SVMs), Logistic Regression, and most neural networks used for classification.

Generative models learn the probability distribution of each class. They model the joint probability P(x, y), allowing them to generate new samples that resemble the training data. Think of them as learning the underlying data structure of each class. Examples include Naive Bayes, Gaussian Mixture Models, and Generative Adversarial Networks (GANs).

In essence, discriminative models learn what distinguishes classes, while generative models learn how classes are formed. The choice depends on the task. Discriminative models generally perform better for classification, while generative models are useful for tasks like data generation, anomaly detection, and density estimation.
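A tiny generative classifier makes the P(x, y) view concrete: fit one Gaussian per class, combine it with the class prior to get the joint probability, and recover P(y|x) with Bayes' rule. The single "brightness" feature, the class names, and the data values are invented for this sketch.

```python
import math
import random


def fit_gaussian(values):
    """Return the (mean, variance) of a list of numbers."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, var


def gaussian_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


# Hypothetical 1-D feature (mean image brightness) for two classes.
data = {
    "dark": [0.10, 0.20, 0.15, 0.25],
    "bright": [0.80, 0.90, 0.85, 0.75],
}
total = sum(len(values) for values in data.values())
priors = {label: len(values) / total for label, values in data.items()}   # P(y)
params = {label: fit_gaussian(values) for label, values in data.items()}  # P(x|y)


def joint(x, label):
    """P(x, y) = P(x | y) * P(y)."""
    mean, var = params[label]
    return gaussian_pdf(x, mean, var) * priors[label]


def posterior(x):
    """P(y | x) via Bayes' rule: normalize the joint over all classes."""
    joints = {label: joint(x, label) for label in data}
    evidence = sum(joints.values())
    return {label: j / evidence for label, j in joints.items()}


def sample(label, rng):
    """Generate a new feature value for a class, which a purely
    discriminative model has no way to do."""
    mean, var = params[label]
    return rng.gauss(mean, math.sqrt(var))


prediction = posterior(0.2)
new_bright_value = sample("bright", random.Random(0))
```

A discriminative model such as logistic regression would instead fit P(y|x) directly and would have no `sample` counterpart.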

Q 21. Describe different types of recurrent neural networks (RNNs) and their applications.

Recurrent Neural Networks (RNNs) are designed to process sequential data, such as text, speech, and time series. Their key feature is the hidden state, which is updated at each time step and carries information from previous steps. This allows them to maintain context across the sequence.

- Vanilla RNNs: The simplest type of RNN, but they suffer from the vanishing gradient problem, making it difficult to learn long-term dependencies.

- Long Short-Term Memory (LSTM): LSTMs address the vanishing gradient problem using sophisticated gating mechanisms that control the flow of information into and out of the hidden state. They excel at capturing long-range dependencies in sequential data and are widely used in natural language processing and speech recognition.

- Gated Recurrent Units (GRUs): Similar to LSTMs, GRUs also use gating mechanisms to control information flow, but they have a simpler architecture with fewer parameters. They often offer a good balance between performance and computational efficiency.

Applications of RNNs include:

- Natural Language Processing (NLP): Machine translation, text generation, sentiment analysis, named entity recognition.

- Speech Recognition: Converting spoken language into text.

- Time Series Analysis: Forecasting stock prices, weather prediction, anomaly detection in sensor data.

The choice of RNN architecture (Vanilla RNN, LSTM, GRU) depends on the specific task and the length of the sequences being processed. LSTMs are often preferred for long sequences due to their ability to handle long-term dependencies, while GRUs might be a more efficient alternative for shorter sequences.
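The hidden-state update at the heart of a vanilla RNN is h_t = tanh(W_x·x_t + W_h·h_{t−1} + b). The sketch below implements that recurrence with plain lists; the weight values, the 2-unit hidden size, and the input sequence are arbitrary assumptions, and no training is performed.

```python
import math


def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One vanilla RNN step: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    h_t = []
    for i in range(len(h_prev)):
        total = b[i]
        total += sum(w_x[i][j] * x_t[j] for j in range(len(x_t)))
        total += sum(w_h[i][j] * h_prev[j] for j in range(len(h_prev)))
        h_t.append(math.tanh(total))
    return h_t


def run_rnn(sequence, w_x, w_h, b):
    """Feed a sequence through the RNN and return every hidden state."""
    h = [0.0] * len(b)          # initial hidden state
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states


# Hypothetical weights: 1 input feature, 2 hidden units.
w_x = [[0.5], [-0.3]]
w_h = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.1]

sequence = [[1.0], [0.0], [-1.0]]
states = run_rnn(sequence, w_x, w_h, b)
reversed_states = run_rnn(sequence[::-1], w_x, w_h, b)
```

Feeding the same inputs in reverse order yields a different final hidden state, which shows that the state encodes order and not just the set of inputs. LSTMs and GRUs replace `rnn_step` with gated updates but keep the same loop structure.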

Q 22. What are some common techniques for image segmentation?

Image segmentation is the process of partitioning an image into multiple segments, each representing a different object or region. Think of it like coloring in a picture – each distinct area gets a different color. This is crucial for many computer vision tasks, such as object detection, medical image analysis, and autonomous driving.

- Thresholding: A simple technique where pixels above a certain threshold value are assigned to one segment, and those below to another. This works well for images with high contrast.

- Edge Detection: Identifying boundaries between objects using algorithms like Sobel or Canny edge detectors. These edges form the basis for segmenting the image.

- Region-based Segmentation: Grouping pixels based on their similarity in color, texture, or intensity. This often involves techniques like region growing or watershed algorithms.

- Clustering-based Segmentation: Using clustering algorithms like k-means to group pixels into clusters representing different segments. This approach is useful when the image doesn’t have well-defined boundaries.

- Deep Learning-based Segmentation: Using neural networks such as U-Net (semantic segmentation) or Mask R-CNN (instance segmentation). These models learn complex features and relationships from data to achieve highly accurate segmentation. Deep learning approaches currently give the strongest results on most segmentation benchmarks.

For example, in medical imaging, we might use segmentation to identify tumors in an MRI scan. In autonomous driving, we could segment the road, cars, pedestrians, and other objects to enable safe navigation.
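The two simplest techniques above, thresholding and clustering, can be sketched in a few lines of NumPy. This is an illustrative example on a synthetic image. The function names, the threshold of 128, and the basic k-means loop are assumptions made for the sketch, and real pipelines would typically use a library such as OpenCV or scikit-image.

```python
import numpy as np

def threshold_segment(image, threshold):
    """Label pixels 1 if brighter than the threshold, else 0."""
    return (image > threshold).astype(np.uint8)

def kmeans_segment(image, k=2, iters=10):
    """Cluster pixel intensities with a basic k-means loop."""
    pixels = image.reshape(-1).astype(float)
    # Spread the initial centers evenly over the intensity range.
    centers = np.linspace(pixels.min(), pixels.max(), k)
    for _ in range(iters):
        # Assign each pixel to its nearest center.
        labels = np.argmin(np.abs(pixels[:, None] - centers[None, :]), axis=1)
        # Move each center to the mean of its assigned pixels.
        for j in range(k):
            if np.any(labels == j):
                centers[j] = pixels[labels == j].mean()
    return labels.reshape(image.shape)

# Synthetic 8x8 image: dark background with a bright 4x4 square.
image = np.full((8, 8), 20, dtype=np.uint8)
image[2:6, 2:6] = 200

mask = threshold_segment(image, threshold=128)
clusters = kmeans_segment(image, k=2)
print(mask.sum(), np.array_equal(mask, clusters))
```

On a high-contrast image like this one, both methods recover the same region. They diverge on real images, where a single global threshold is often too crude.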

Q 23. Explain the concept of attention mechanisms in deep learning.

Attention mechanisms allow neural networks to focus on the most relevant parts of the input data. Imagine reading a book – you don’t focus equally on every word; you pay more attention to important phrases and sentences. Similarly, attention mechanisms guide the network to concentrate on specific features, improving accuracy and efficiency.

In deep learning, attention is implemented by assigning weights to different parts of the input. These weights represent the importance of each part in predicting the output. A higher weight indicates greater relevance. This is particularly useful in processing sequential data (like text or time series) and images, where not all parts of the input are equally informative.

The Transformer architecture, famous for its use in natural language processing (NLP) models like GPT-3, relies heavily on attention mechanisms. In computer vision, attention can help a model focus on specific regions of an image, leading to more accurate object detection and image classification.

There are different types of attention, such as self-attention (where each element of a sequence attends to the other elements of the same sequence) and cross-attention (where elements of one sequence attend to the elements of a different input, such as a decoder attending to encoder outputs).
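As a rough illustration of how attention weights are computed, here is a minimal NumPy sketch of scaled dot-product attention, the form used in the Transformer. The learned query, key, and value projections are omitted for brevity, so the raw input `X` stands in for all three. That simplification is an assumption of this sketch, not how a trained model works.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Return the attended values and the attention weight matrix."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # similarity of each query to each key
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))  # 4 tokens with 8 features each (made-up sizes)

# Self-attention: queries, keys, and values all come from the same sequence.
output, weights = scaled_dot_product_attention(X, X, X)
print(weights.round(2))
```

Each row of `weights` shows how much one token draws on every other token. For cross-attention, `Q` would come from one sequence and `K` and `V` from another.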

Q 24. How do you handle missing data in a dataset?

Missing data is a common problem in machine learning. The best way to handle it depends on the nature and extent of the missingness. There’s no one-size-fits-all solution, but here are some common strategies:

- Deletion: The simplest approach is to remove data points (or entire features) with missing values. However, this can discard a lot of information, and it can bias the results when the values are not missing completely at random.

- Imputation: Replacing missing values with estimated ones. Common methods include using the mean, median, or mode of the available data (simple imputation), or using more sophisticated techniques like k-Nearest Neighbors (k-NN) imputation or multiple imputation. k-NN imputation finds the most similar data points that have the value present and uses their average to fill in the missing value.

- Model-based imputation: Employing machine learning models to predict the missing values. This can be more accurate than simpler imputation methods but requires careful model selection and validation.

- Native handling of missing values: Some algorithms and implementations, such as the gradient-boosted tree libraries XGBoost and LightGBM, can handle missing values directly without imputation or deletion. This is convenient when such a model suits the task.

The choice of method depends on the dataset and the learning algorithm used. For example, if the missing data is significant and not randomly distributed, more advanced techniques such as multiple imputation or model-based imputation are preferred over simple deletion or imputation.
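The first two strategies, deletion and simple mean imputation, can be sketched as follows. The small array `X` is made-up data for illustration only.

```python
import numpy as np

# Hypothetical feature matrix with missing entries marked as NaN.
X = np.array([
    [1.0, 10.0],
    [2.0, np.nan],
    [np.nan, 30.0],
    [4.0, 40.0],
])

# Deletion: keep only the rows that have no missing values.
complete_rows = X[~np.isnan(X).any(axis=1)]

# Mean imputation: replace each NaN with the mean of its column,
# computed from the values that are present.
col_means = np.nanmean(X, axis=0)
X_imputed = np.where(np.isnan(X), col_means, X)

print(complete_rows.shape)  # half of the rows are lost
print(X_imputed)
```

Deletion throws away half of this tiny dataset, which shows the information loss mentioned above. In a real project, the imputation statistics should be computed on the training split only and then reused for validation and test data, to avoid leakage.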

Q 25. Describe different methods for data augmentation in computer vision.

Data augmentation artificially increases the size and diversity of your training dataset by generating modified versions of existing images, improving the robustness and generalization ability of your model. It’s like showing a child many variations of a cat (a black cat, a white cat, a cat sitting, a cat sleeping) to ensure they can recognize a cat in any situation.

- Geometric Transformations: Rotating, flipping, cropping, scaling, and shearing images. This helps the model become invariant to these changes.

- Color Space Augmentation: Adjusting brightness, contrast, saturation, and hue. This improves the model’s robustness to variations in lighting conditions.

- Noise Addition: Adding Gaussian noise, salt-and-pepper noise, or other types of noise to simulate real-world conditions.

- Random Erasing: Randomly removing rectangular regions from the image. This simulates occlusions and forces the model to learn more robust features.

- Mixup: Linearly interpolating pairs of images and their labels with a random mixing weight. This creates synthetic training examples and improves model generalization.

- Cutout: Similar to random erasing, but it masks out a fixed-size square region rather than a rectangle of random size and aspect ratio.

For instance, when training an object detection model for self-driving cars, we might augment images by adding random noise to simulate poor weather conditions or by rotating images to account for different camera angles.
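Several of the augmentations listed above can be sketched directly in NumPy. The helper names, image sizes, noise level, and mixing weight below are illustrative assumptions. In practice a library such as torchvision or Albumentations would normally be used, and the mixing weight for mixup is usually sampled at random rather than fixed.

```python
import numpy as np

rng = np.random.default_rng(0)

def horizontal_flip(img):
    """Mirror the image left to right."""
    return img[:, ::-1]

def add_gaussian_noise(img, sigma=0.05):
    """Add zero-mean Gaussian noise and keep values in [0, 1]."""
    noisy = img + rng.normal(scale=sigma, size=img.shape)
    return np.clip(noisy, 0.0, 1.0)

def erase_square(img, size=8):
    """Zero out a randomly placed fixed-size square (Cutout-style)."""
    out = img.copy()
    h, w = img.shape[:2]
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    out[top:top + size, left:left + size] = 0.0
    return out

def mixup(img_a, label_a, img_b, label_b, lam):
    """Blend two images and their one-hot labels with weight lam."""
    img = lam * img_a + (1 - lam) * img_b
    label = lam * label_a + (1 - lam) * label_b
    return img, label

# Two random 32x32 RGB "images" with one-hot labels, for illustration only.
img_a, img_b = rng.random((32, 32, 3)), rng.random((32, 32, 3))
label_a, label_b = np.array([1.0, 0.0]), np.array([0.0, 1.0])

flipped = horizontal_flip(img_a)
noisy = add_gaussian_noise(img_a)
erased = erase_square(img_a)
mixed_img, mixed_label = mixup(img_a, label_a, img_b, label_b, lam=0.7)
print(mixed_label)
```

Note that geometric transformations used for object detection or segmentation must be applied to the bounding boxes or masks as well, not only to the image.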

Q 26. Explain the concept of GANs (Generative Adversarial Networks) and their applications.

Generative Adversarial Networks (GANs) are a type of deep learning model consisting of two neural networks: a generator and a discriminator. Imagine a counterfeiter (generator) trying to create fake banknotes and a detective (discriminator) trying to identify them. They compete against each other, with the generator improving its ability to create realistic fakes and the discriminator improving its ability to detect them. This adversarial training leads to remarkably realistic outputs.

The generator takes random noise as input and tries to generate realistic data (e.g., images, text, music). The discriminator takes both real and generated data as input and tries to distinguish between them. This process is repeated iteratively, improving both networks’ performance over time.
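The competition between the two networks is expressed through their loss functions. The sketch below computes the standard binary cross-entropy discriminator loss and the commonly used non-saturating generator loss from discriminator outputs. The probability values are made up for illustration, and no actual networks are trained here.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy: real samples should score 1, generated ones 0."""
    return -np.mean(np.log(d_real + eps)) - np.mean(np.log(1.0 - d_fake + eps))

def generator_loss(d_fake, eps=1e-12):
    """Non-saturating loss: low when the discriminator scores fakes near 1."""
    return -np.mean(np.log(d_fake + eps))

# Hypothetical discriminator outputs (probability that a sample is real).
d_real = np.array([0.9, 0.8, 0.95])
confident_d_fake = np.array([0.1, 0.2, 0.05])  # discriminator spots the fakes
fooled_d_fake = np.array([0.6, 0.7, 0.8])      # generator fools the discriminator

print(discriminator_loss(d_real, confident_d_fake), generator_loss(confident_d_fake))
print(discriminator_loss(d_real, fooled_d_fake), generator_loss(fooled_d_fake))
```

When the discriminator is fooled, its loss rises and the generator's loss falls, which is the adversarial dynamic described above. In training, the two losses are minimized in alternating steps.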

Applications of GANs are numerous:

- Image Generation: Creating realistic images of faces, objects, scenes, etc.

- Image Enhancement: Upscaling low-resolution images, inpainting missing parts of images.

- Style Transfer: Transferring the style of one image to another.

- Drug Discovery: Generating new molecules with desired properties.

- Anomaly Detection: Identifying unusual patterns in data.

For example, GANs have been used to generate realistic images of human faces, helping in creating realistic avatars or assisting in designing new fashion items.

Q 27. What are some ethical considerations in using AI and machine learning?

Ethical considerations in AI and machine learning are paramount. The powerful capabilities of these technologies necessitate careful consideration of their potential societal impact:

- Bias and Fairness: AI models trained on biased data can perpetuate and amplify existing societal biases, leading to unfair or discriminatory outcomes. Careful data curation and algorithmic fairness techniques are crucial.

- Privacy and Security: AI systems often process sensitive personal data, raising concerns about privacy violations and data breaches. Robust security measures and data anonymization techniques are essential.

- Transparency and Explainability: Many AI models, especially deep learning models, are often ‘black boxes’, making it difficult to understand how they arrive at their decisions. This lack of transparency can be problematic, particularly in high-stakes applications such as healthcare and criminal justice.

- Job Displacement: Automation driven by AI could lead to significant job displacement, requiring proactive measures to support affected workers and adapt the workforce.

- Accountability and Responsibility: Determining who is responsible when an AI system makes a mistake or causes harm is a complex issue that needs careful consideration.

For example, a facial recognition system trained primarily on images of white faces might perform poorly on faces of other ethnicities, leading to inaccurate and potentially harmful outcomes.

Q 28. Describe your experience with a specific computer vision or machine learning project.

In a previous role, I led a project to develop a computer vision system for automated defect detection in manufactured circuit boards. The challenge was to identify subtle defects – scratches, cracks, missing components – in high-resolution images of circuit boards, with high accuracy and low false positives. This was crucial for maintaining high quality control standards in manufacturing.

We approached this using a combination of deep learning techniques. Initially, we explored various CNN architectures like ResNet and Inception, but found that a U-Net-based model provided the best performance for semantic segmentation of the defects. We also implemented data augmentation techniques to increase the robustness of the model and handle variations in lighting and image quality. A key element of the success was carefully curating a large, high-quality dataset of images with annotated defects.

The project involved extensive experimentation with different model architectures, hyperparameters, and data augmentation strategies. We used precision-recall curves and F1-scores to evaluate and compare the models. The final model outperformed the existing manual inspection process, reducing defect detection time and increasing accuracy. This resulted in substantial cost savings for the manufacturing company and contributed to improved product quality.
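For reference, the metrics mentioned in this answer can be computed from raw detection counts as in the short sketch below. The counts are hypothetical and are not results from the project described.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical counts: 80 defects found, 20 false alarms, 10 defects missed.
precision, recall, f1 = precision_recall_f1(tp=80, fp=20, fn=10)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```

For defect detection, low precision means too many false alarms, while low recall means defective boards slip through. A precision-recall curve shows how this trade-off changes as the decision threshold moves.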

Key Topics to Learn for Computer Vision and Machine Learning Interviews

- Image Processing Fundamentals: Understanding image formation, filtering techniques (e.g., Gaussian, median), and transformations (e.g., Fourier, wavelet).

- Feature Extraction and Detection: Exploring SIFT, SURF, HOG, and other methods for identifying key features within images. Practical application: object recognition in security systems.

- Deep Learning Architectures for Computer Vision: Mastering Convolutional Neural Networks (CNNs), including architectures like AlexNet, VGG, ResNet, and understanding their applications in image classification, object detection (e.g., YOLO, Faster R-CNN), and semantic segmentation.

- Machine Learning Algorithms: A solid grasp of supervised learning (regression, classification), unsupervised learning (clustering, dimensionality reduction), and their applications in Computer Vision tasks. Practical application: anomaly detection in manufacturing processes using image data.

- Model Evaluation and Optimization: Understanding metrics like precision, recall, F1-score, AUC, and employing techniques like cross-validation and hyperparameter tuning for optimal model performance.

- Object Detection and Tracking: Familiarize yourself with different approaches to object detection and tracking, including single-object and multi-object tracking algorithms. Practical application: autonomous driving systems.

- Image Segmentation: Learn various segmentation techniques, including thresholding, region-based methods, and deep learning-based approaches (e.g., U-Net). Practical application: medical image analysis.

- 3D Computer Vision: Understanding concepts related to depth estimation, stereo vision, and point cloud processing. Practical application: robotics and augmented reality.

- Practical Problem-Solving: Develop your ability to approach real-world problems, analyze datasets, choose appropriate algorithms, and interpret results effectively.

Next Steps

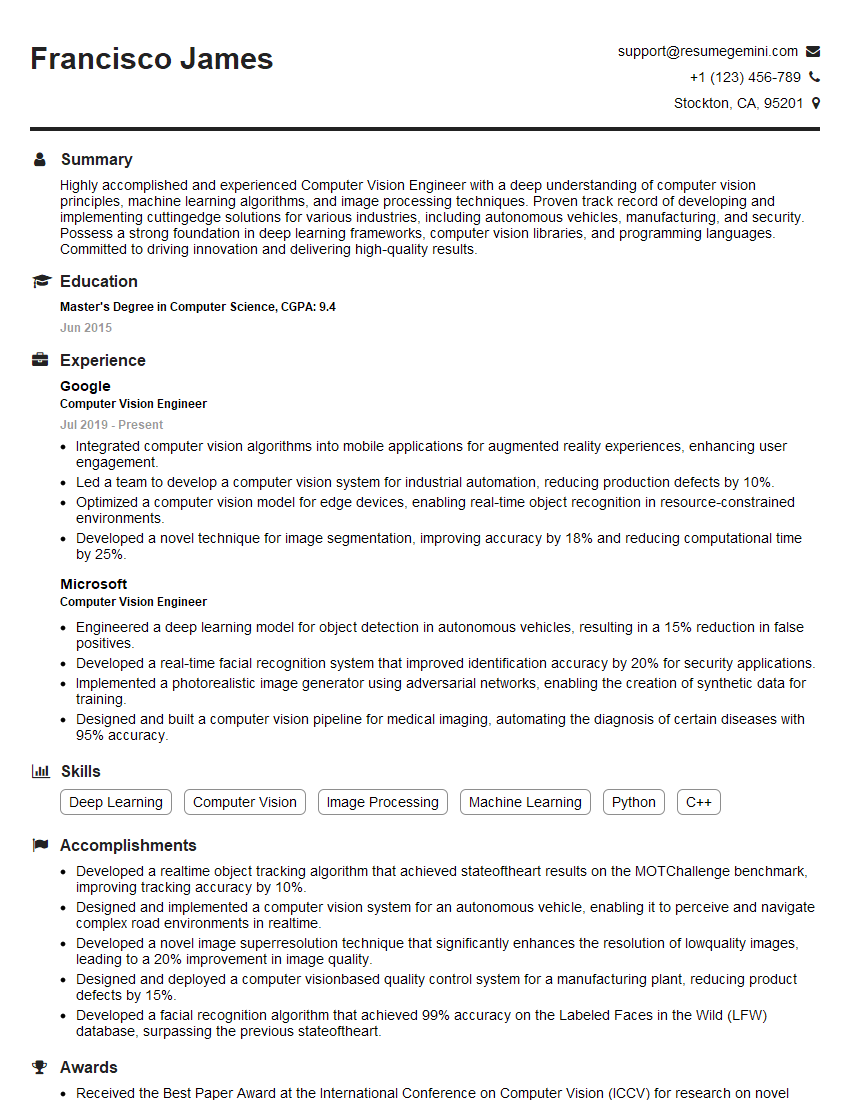

Mastering Computer Vision and Machine Learning opens doors to exciting and high-demand careers in various industries. To maximize your job prospects, creating a strong, ATS-friendly resume is crucial. ResumeGemini is a trusted resource to help you build a professional and impactful resume that highlights your skills and experience effectively. ResumeGemini provides examples of resumes tailored specifically to Computer Vision and Machine Learning roles to help guide you in showcasing your unique qualifications. Invest time in crafting a compelling resume—it’s your first impression on potential employers.
