The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Data Collection and Research interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Data Collection and Research Interview

Q 1. Explain the difference between quantitative and qualitative data collection methods.

Quantitative and qualitative data collection methods differ fundamentally in their approach to data and the type of insights they provide. Quantitative methods focus on numerical data and statistical analysis to establish relationships between variables and test hypotheses. Think of it like measuring ingredients in a recipe – you get precise numbers. Qualitative methods, on the other hand, explore in-depth understanding of experiences, perspectives, and meanings. It’s like interviewing the chef to understand their culinary philosophy behind the recipe.

- Quantitative: Employs methods like surveys with closed-ended questions, experiments, and structured observations. The goal is to quantify phenomena and test for statistical significance. For example, a survey measuring customer satisfaction on a scale of 1 to 5 would be a quantitative approach.

- Qualitative: Uses methods such as interviews (structured, semi-structured, or unstructured), focus groups, ethnography (observing people in their natural settings), and case studies. The goal is to gain rich, detailed insights and understand the ‘why’ behind observed phenomena. For instance, conducting in-depth interviews to understand why customers are dissatisfied would be a qualitative approach.

The choice between these methods depends heavily on the research question and the type of insights sought.

Q 2. Describe your experience with various data collection tools and techniques.

My experience spans a wide range of data collection tools and techniques. I’ve extensively used survey platforms like Qualtrics and SurveyMonkey for large-scale quantitative data collection. I’m proficient in designing and administering questionnaires, ensuring they’re clear, concise, and free from bias. For qualitative data, I’ve utilized interview software like Otter.ai for transcription and analysis, as well as audio and video recording equipment for capturing richer data from focus groups and ethnographic studies. Furthermore, I have experience using specialized software for data management and analysis, such as SPSS and R, to process and interpret data effectively.

In one project, we used a mixed-methods approach, employing online surveys for gathering quantitative data on user behavior and conducting follow-up interviews to gain deeper qualitative insights into the motivations behind their actions. This combination allowed for a more comprehensive understanding of the phenomenon under investigation.

Q 3. How do you ensure the reliability and validity of your data collection process?

Ensuring reliability and validity is paramount. Reliability refers to the consistency of the data collection process; will you get the same results if you repeat the process? Validity refers to the accuracy of the data – does it actually measure what it intends to measure?

- Reliability: We achieve this through rigorous instrument design, using standardized protocols, and piloting the data collection instruments before full-scale implementation to identify and correct any inconsistencies. For example, pretesting a questionnaire helps identify confusing questions or ambiguities. Inter-rater reliability is ensured through training for multiple researchers involved in data collection, especially in qualitative studies requiring subjective judgment.

- Validity: This is achieved through careful instrument design, using established scales and validated measures where possible, and employing appropriate sampling techniques to ensure the data is representative of the population of interest. Triangulation, which involves using multiple data sources or methods to corroborate findings, is particularly important for enhancing the validity of qualitative research.

Documenting every step of the data collection process is crucial for ensuring both reliability and validity, creating a transparent and auditable trail.
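
Inter-rater reliability can also be quantified rather than only trained for. The sketch below computes Cohen’s kappa, one common agreement statistic for two raters; the function name and the sample codes are hypothetical and serve only as illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labeled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Share of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned by two researchers to eight interview excerpts.
coder_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
coder_2 = ["pos", "pos", "neg", "pos", "pos", "neg", "neg", "neg"]
kappa = cohens_kappa(coder_1, coder_2)
print(f"kappa = {kappa:.2f}")
```

A kappa near 1 indicates strong agreement beyond chance, while a value near 0 suggests the coding scheme or the rater training needs another look.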

Q 4. What are some common challenges in data collection, and how have you overcome them?

Data collection invariably presents challenges. Some common ones include:

- Low response rates: This is a frequent issue in surveys. I’ve addressed this by employing strategies such as offering incentives, ensuring questionnaires are concise and engaging, and utilizing multiple modes of contact (email, phone, mail).

- Data quality issues: Inconsistent or incomplete data can significantly impact the results. This can be mitigated through data cleaning and validation, and by incorporating data quality checks throughout the process. For instance, online surveys can run automated checks on each response, and interview transcripts can be reviewed manually for inconsistencies.

- Access limitations: Gaining access to the target population can be difficult. This requires careful planning, obtaining appropriate permissions and building trust with participants. For example, navigating ethical review boards and gaining informed consent is crucial.

- Resource constraints: Time and budget limitations can constrain data collection efforts. Careful planning, prioritizing data collection methods, and efficient use of resources are vital.

Problem-solving in data collection often requires a flexible, adaptive approach and a willingness to adjust strategies in response to unforeseen challenges.

Q 5. How do you handle missing data in your analyses?

Missing data is a common problem. The best approach depends on the extent and pattern of missing data and the nature of the data itself.

- Listwise deletion: This simple method removes any cases with missing data. However, it can lead to significant loss of data and bias, particularly if the missing data is not random. It’s suitable only when the amount of missing data is minimal and the values are missing completely at random (MCAR), meaning the missingness is unrelated to any observed or unobserved values.

- Imputation: This involves replacing missing values with estimated values. Several techniques exist, such as mean imputation, regression imputation, or multiple imputation, each with its strengths and weaknesses. Multiple imputation is generally preferred as it accounts for uncertainty in the imputed values.

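- Model-based approaches: Some estimation methods, such as full-information maximum likelihood, use all available observations and can be applied directly without imputation, provided the missing data mechanism is understood (typically, that the data are missing at random).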

The choice of method should always be carefully considered, and any imputation strategy should be clearly documented and justified.
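
The trade-off between deletion and simple imputation can be seen on a toy dataset. The following is a minimal sketch using only the Python standard library; the records and helper names are made up for illustration, and mean imputation is shown because it is the simplest option, not because it is recommended.

```python
import statistics


def listwise_delete(rows):
    """Keep only the rows in which every field has a value."""
    return [row for row in rows if all(v is not None for v in row.values())]


def mean_impute(rows, column):
    """Replace missing values in one column with the mean of the observed values."""
    observed = [row[column] for row in rows if row[column] is not None]
    fill = statistics.mean(observed)
    return [{**row, column: fill if row[column] is None else row[column]} for row in rows]


# Hypothetical survey responses; None marks a skipped question.
responses = [
    {"id": 1, "age": 34, "satisfaction": 4},
    {"id": 2, "age": 29, "satisfaction": None},
    {"id": 3, "age": 41, "satisfaction": 2},
    {"id": 4, "age": 52, "satisfaction": None},
    {"id": 5, "age": 38, "satisfaction": 5},
]

complete = listwise_delete(responses)
imputed = mean_impute(responses, "satisfaction")

sd_complete = statistics.stdev([r["satisfaction"] for r in complete])
sd_imputed = statistics.stdev([r["satisfaction"] for r in imputed])
print(len(complete), len(imputed), round(sd_complete, 2), round(sd_imputed, 2))
```

Deletion keeps three of the five cases, while mean imputation keeps all five but shrinks the standard deviation, which is one reason multiple imputation is generally preferred when the analysis depends on variability.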

Q 6. Explain your understanding of sampling techniques and bias mitigation.

Sampling techniques are crucial for selecting a representative subset of the population for study, minimizing the cost and time involved in studying the entire population. Bias mitigation is equally critical to ensure the results accurately reflect the population and are not skewed by systematic errors.

- Sampling Techniques: Probability sampling (simple random, stratified, cluster) ensures every member of the population has a known, non-zero chance of being selected, reducing selection bias. Non-probability sampling (convenience, purposive, snowball) is more convenient but may introduce bias. The choice depends on the research objectives and available resources.

- Bias Mitigation: Careful consideration of the sampling frame (the list of potential participants) is vital. Avoiding biased sampling frames is key. Randomization is critical in experimental designs to minimize confounding factors and ensure fair comparisons. Blinding, where participants or researchers are unaware of the treatment assignment, is another powerful bias mitigation technique.

Understanding the strengths and limitations of various sampling techniques and actively mitigating potential biases are essential for producing credible and generalizable research findings.
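
As an illustration of probability sampling, the sketch below draws a proportionally allocated stratified sample. The population, the `region` field, and the function name are assumptions made for the example, not part of any particular study.

```python
import random
from collections import defaultdict


def stratified_sample(units, stratum_of, fraction, seed=0):
    """Draw the same fraction from every stratum by simple random sampling."""
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced
    strata = defaultdict(list)
    for unit in units:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))  # at least one unit per stratum
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical sampling frame: 800 urban and 200 rural households.
population = [{"id": i, "region": "urban" if i < 800 else "rural"} for i in range(1000)]
sample = stratified_sample(population, lambda unit: unit["region"], fraction=0.1)

rural_in_sample = sum(unit["region"] == "rural" for unit in sample)
print(len(sample), rural_in_sample)
```

Because each stratum is sampled at the same rate, the sample mirrors the urban and rural split of the frame, which a simple random sample only achieves on average.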

Q 7. Describe your experience with data cleaning and preprocessing.

Data cleaning and preprocessing are essential steps that significantly impact the quality of the analysis. They involve identifying and correcting errors, inconsistencies, and missing values in the dataset to ensure its accuracy and reliability.

- Error detection and correction: This involves identifying and correcting data entry errors, inconsistencies, and outliers. This often involves using data validation rules and visual inspections of the data.

- Handling missing data: Strategies outlined in the answer to Q 5 (imputation or deletion) are employed based on the characteristics of missing data.

- Data transformation: This involves converting data into a suitable format for analysis. This may include converting categorical variables into numerical representations (dummy coding), standardizing or normalizing variables, or creating new variables based on existing ones.

- Data reduction: Techniques such as principal component analysis (PCA) can reduce the dimensionality of the data by creating a smaller set of uncorrelated variables that capture most of the variation in the original dataset. This is useful for high-dimensional datasets.

Thorough data cleaning and preprocessing are crucial for ensuring the integrity of the data and the validity of the results. It’s often an iterative process requiring careful attention to detail and domain knowledge.
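
Two of the transformations mentioned above, dummy coding and standardization, can be sketched in a few lines. The variable names and values below are hypothetical, and the example uses only the standard library; in practice a data-frame library would usually handle this.

```python
import statistics


def dummy_code(values, reference):
    """Turn a categorical variable into 0/1 indicators, omitting the reference level."""
    levels = sorted(set(values) - {reference})
    return [{f"is_{level}": int(v == level) for level in levels} for v in values]


def standardize(values):
    """Rescale values to z-scores (mean 0, sample standard deviation 1)."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


# Hypothetical customer records.
plans = ["basic", "premium", "basic", "enterprise"]
spend = [20.0, 80.0, 35.0, 65.0]

plan_dummies = dummy_code(plans, reference="basic")
spend_z = standardize(spend)
print(plan_dummies[1], [round(z, 2) for z in spend_z])
```

Dropping the reference level avoids perfect collinearity among the indicators when they are used together in a regression with an intercept.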

Q 8. What statistical methods are you proficient in using for data analysis?

My proficiency in statistical methods spans a wide range, encompassing both descriptive and inferential statistics. Descriptive statistics, such as calculating means, medians, modes, and standard deviations, are fundamental to summarizing and understanding data. I regularly use these to provide initial insights into datasets.

For inferential statistics, I’m experienced with hypothesis testing (t-tests, ANOVA, chi-squared tests), regression analysis (linear, logistic, multiple), and correlation analysis. For instance, in a recent project analyzing customer churn, I used logistic regression to identify key predictors of customer attrition, allowing the company to proactively target at-risk customers. I also frequently employ non-parametric methods like the Mann-Whitney U test when dealing with data that doesn’t meet the assumptions of parametric tests. Finally, I’m comfortable working with more advanced techniques like time series analysis and survival analysis when appropriate for the data and research question.

Q 9. How do you determine the appropriate sample size for a research project?

Determining the appropriate sample size is crucial for ensuring the reliability and validity of research findings. It’s not a one-size-fits-all approach; it depends on several factors. These include the desired level of precision (margin of error), the confidence level (typically 95%), the expected variability in the population (standard deviation), and the effect size you’re trying to detect.

I typically use power analysis to calculate the necessary sample size. This involves specifying the above parameters and using statistical software (like G*Power or R) to determine the minimum number of participants needed. For example, if we’re studying the effectiveness of a new drug, a larger sample size would be needed to detect a small effect size compared to a large effect size. Furthermore, I always consider practical constraints, such as budget and accessibility to the population, when finalizing the sample size. It’s a balance between statistical rigor and feasibility.
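
For a survey that estimates a proportion, the margin of error and confidence level translate into a sample size through the standard formula n = z² · p(1 − p) / e². The sketch below implements that precision-based calculation, not a full power analysis; the function name and default values are illustrative assumptions.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(margin_of_error, confidence=0.95,
                               expected_proportion=0.5, population_size=None):
    """Minimum sample size to estimate a proportion within a given margin of error."""
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p = expected_proportion  # 0.5 is the most conservative assumption
    n = z ** 2 * p * (1 - p) / margin_of_error ** 2
    if population_size is not None:
        # Finite population correction for small populations.
        n = n / (1 + (n - 1) / population_size)
    return math.ceil(n)


n_large_population = sample_size_for_proportion(0.05)
n_small_population = sample_size_for_proportion(0.05, population_size=2000)
print(n_large_population, n_small_population)
```

Halving the margin of error roughly quadruples the required sample, which is where the budget and feasibility trade-offs usually start.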

Q 10. Describe your experience with data visualization and reporting.

Data visualization and reporting are critical for communicating insights effectively. I’m proficient in using various tools, including Tableau, Power BI, and Python libraries like Matplotlib and Seaborn, to create compelling visuals. My approach is to select the most appropriate chart type for the data and the message I want to convey.

For instance, I might use bar charts for comparing categories, line charts for showing trends over time, scatter plots for exploring relationships between variables, and heatmaps for visualizing correlations. Beyond the charts themselves, I meticulously design reports that are clear, concise, and easy to navigate, ensuring that key findings are prominently displayed. I always tailor the report to the audience, simplifying technical details for non-technical stakeholders while providing greater depth for technical audiences.

Q 11. How do you ensure data privacy and security during collection and analysis?

Data privacy and security are paramount in my work. I strictly adhere to relevant regulations like GDPR and HIPAA, depending on the context of the project. My approach involves several key strategies: First, I anonymize or pseudonymize data wherever possible, removing or replacing identifying information. Second, I utilize secure data storage and transmission methods, including encryption both in transit and at rest. Third, I implement access control measures, restricting access to data based on the principle of least privilege. Only authorized personnel have access to sensitive information. Fourth, I follow strict data governance protocols, documenting all data handling procedures and ensuring data is handled according to ethical guidelines.

For example, in a project involving sensitive health data, we used differential privacy techniques to add noise to the data while preserving aggregate trends, ensuring individual privacy was maintained. Regular security audits and vulnerability assessments also form an integral part of my data handling process.
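
Pseudonymization, as mentioned above, can be sketched with a keyed hash: the same identifier always maps to the same token, but the mapping cannot be recomputed without the key. The key value, field names, and token length below are placeholders chosen for the example; a real project would load the key from a secrets manager and follow its own governance rules.

```python
import hashlib
import hmac


def pseudonymize(identifier, secret_key):
    """Map an identifier to a stable token using HMAC-SHA256."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]  # shortened token, purely for readability


# Placeholder key for illustration only; never hard-code a real key.
SECRET_KEY = b"replace-with-a-key-from-a-secrets-manager"

records = [
    {"email": "ana@example.com", "score": 4},
    {"email": "raj@example.com", "score": 2},
]

# Drop the direct identifier and keep only the token and the analysis variables.
pseudonymized = [
    {"participant": pseudonymize(r["email"], SECRET_KEY), "score": r["score"]}
    for r in records
]
print(pseudonymized)
```

Unlike full anonymization, this remains reversible in principle by whoever holds the key, so access to the key needs the same least-privilege controls as the raw data.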

Q 12. What is your experience with different types of databases (e.g., relational, NoSQL)?

My experience with databases encompasses both relational and NoSQL databases. I’m proficient in working with relational databases like MySQL, PostgreSQL, and SQL Server, using SQL for data manipulation and querying. I understand the importance of database normalization and designing efficient relational schemas.

In addition, I’m experienced with NoSQL databases like MongoDB and Cassandra. I understand the advantages of NoSQL databases for handling large volumes of unstructured or semi-structured data. For example, in a project involving social media data, the flexibility and scalability of MongoDB were crucial in managing the high volume and variety of data. The choice between relational and NoSQL depends on the specific project requirements, and I’m comfortable making informed decisions based on factors such as data structure, scalability needs, and query patterns.

Q 13. Explain your experience with data mining techniques.

Data mining techniques are central to my work, allowing me to uncover hidden patterns and insights within large datasets. I’m experienced in various techniques, including association rule mining (using Apriori or FP-Growth algorithms), classification (using decision trees, support vector machines, or naive Bayes), clustering (K-means, hierarchical clustering), and regression.

For example, in a retail setting, I used association rule mining to identify frequently purchased item sets, enabling the business to optimize product placement and improve sales. I also frequently use dimensionality reduction techniques like PCA to simplify datasets before applying other data mining methods. The selection of appropriate data mining techniques depends heavily on the data and the research questions. I always emphasize careful model evaluation and validation to ensure the reliability of results.
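
The metrics behind association rules (support, confidence, and lift) can be illustrated with a brute-force count over item pairs. This is a simplified sketch, not an implementation of Apriori or FP-Growth, and the baskets are invented for the example.

```python
from collections import Counter
from itertools import combinations


def pair_rules(transactions, min_support=0.3):
    """Compute support, confidence, and lift for rules between pairs of items."""
    n = len(transactions)
    item_counts = Counter(item for basket in transactions for item in set(basket))
    pair_counts = Counter(
        pair for basket in transactions for pair in combinations(sorted(set(basket)), 2)
    )
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n  # share of baskets containing both items
        if support < min_support:
            continue
        for lhs, rhs in ((a, b), (b, a)):
            confidence = count / item_counts[lhs]  # P(rhs | lhs)
            lift = confidence / (item_counts[rhs] / n)  # P(rhs | lhs) / P(rhs)
            rules.append({"lhs": lhs, "rhs": rhs, "support": support,
                          "confidence": confidence, "lift": lift})
    return rules


# Hypothetical shopping baskets.
baskets = [
    ["bread", "butter", "milk"],
    ["bread", "butter"],
    ["bread", "jam"],
    ["milk", "cereal"],
    ["bread", "butter", "jam"],
]
rules = pair_rules(baskets)
butter_to_bread = next(r for r in rules if r["lhs"] == "butter" and r["rhs"] == "bread")
print(butter_to_bread)
```

A lift above 1 means the two items appear together more often than independence would predict, which is the signal used for placement or bundling decisions.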

Q 14. How do you communicate complex data findings to both technical and non-technical audiences?

Communicating complex data findings effectively to diverse audiences is a crucial skill. My approach involves tailoring my communication style and the level of detail to the audience. For technical audiences, I use precise terminology and delve into the details of the methodology and results. For non-technical audiences, I prioritize clear and concise explanations, focusing on the key takeaways and using visualizations to illustrate complex concepts.

I often use storytelling techniques to make data more engaging. For instance, instead of simply presenting statistical results, I might weave a narrative around the findings, highlighting the implications and potential actions. I believe effective communication requires strong visualization skills, the ability to translate technical language into plain English, and a good understanding of the audience’s needs and level of expertise. Interactive dashboards and presentations, coupled with clear written reports, are invaluable tools in this regard.

Q 15. Describe your experience with A/B testing or other experimental designs.

A/B testing, also known as split testing, is a controlled experiment where two versions of a webpage, email, or other element are shown to different user groups to determine which performs better. My experience encompasses designing, implementing, and analyzing A/B tests across various platforms. For instance, I once worked on optimizing the checkout process for an e-commerce website. We tested two versions: one with a streamlined design and the other with a more traditional layout. We used a statistical significance test (like a chi-squared test or a t-test) to compare conversion rates between the two groups. The streamlined design showed a statistically significant improvement in conversion rates, leading to a substantial increase in sales. Beyond A/B testing, I’m also experienced with more complex experimental designs such as factorial designs (testing multiple variables simultaneously), multivariate testing (testing multiple variations of multiple elements), and interrupted time series designs (measuring the impact of an intervention on a metric over time). Each design choice depends heavily on the research question and available resources. The key is careful planning to isolate the effects of the variables being tested.
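
A comparison of conversion rates like the one described above can be sketched as a two-proportion z-test, which for a 2×2 table is equivalent to the chi-squared test without continuity correction. The visitor and conversion counts below are invented for illustration and are not results from the project.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: variant A is the traditional layout, B the streamlined one.
z, p_value = two_proportion_z_test(conv_a=400, n_a=5000, conv_b=470, n_b=5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

The test assumes users were randomly assigned to variants and that the sample size was fixed in advance; checking the p-value repeatedly while the test runs inflates the false-positive rate.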

Q 16. How do you evaluate the success of a data collection and analysis project?

Evaluating the success of a data collection and analysis project goes beyond simply producing numbers. It requires a multi-faceted approach focusing on both the process and the outcome. Firstly, we must consider whether the project met its objectives. Did we gather the data needed to answer our research questions? Was the data of sufficient quality and quantity? Secondly, the analysis should be rigorously evaluated: was the methodology appropriate? Are the conclusions supported by the evidence? Did we consider potential biases or limitations? Thirdly, the impact of the findings needs to be assessed. Did the results lead to actionable insights and changes? Did the project produce a return on investment (ROI) either directly through improved efficiency or indirectly through better decision-making? Finally, we assess the process itself. Was the project completed on time and within budget? Was the collaboration effective? Were there any unexpected challenges, and how were they overcome? This holistic approach ensures we learn from both successes and failures and improve future projects.

Q 17. What ethical considerations do you consider when conducting research?

Ethical considerations are paramount in any research project. My approach adheres to a strict code of ethics, prioritizing the well-being and rights of participants. Key considerations include:

- Informed Consent: Participants must be fully informed about the purpose of the study, their rights, and the potential risks and benefits before participating. This includes obtaining explicit consent, either written or verbal depending on the context.

- Privacy and Confidentiality: Data should be anonymized or pseudonymized whenever possible to protect participants’ identities. Secure storage and handling of data are essential to prevent breaches of confidentiality.

- Data Security: Robust measures should be in place to protect data from unauthorized access, use, disclosure, disruption, modification, or destruction.

- Bias and Fairness: We must be mindful of potential biases in our research design, data collection, and analysis, striving for fairness and inclusivity. We avoid perpetuating stereotypes or discrimination.

- Transparency and Honesty: All findings must be reported honestly and transparently, acknowledging any limitations or potential biases. This includes properly citing all sources and adhering to academic integrity principles.

Q 18. What software and tools are you familiar with for data collection and analysis?

My expertise spans a wide range of software and tools for data collection and analysis. For data collection, I’m proficient in using online survey platforms like Qualtrics and SurveyMonkey, as well as specialized tools for collecting specific data types, such as social media listening tools or web analytics platforms like Google Analytics. For data analysis, I’m highly experienced with programming languages like R and Python, leveraging Python libraries like pandas, NumPy, and scikit-learn and R packages like ggplot2 for data manipulation, statistical analysis, and visualization. I’m also comfortable using statistical software packages such as SPSS and SAS. For data visualization, I utilize tools like Tableau and Power BI to create insightful and compelling dashboards and reports. Finally, I have experience with database management systems like MySQL and PostgreSQL for efficient data storage and retrieval.

Q 19. Explain your experience with data warehousing and ETL processes.

Data warehousing and ETL (Extract, Transform, Load) processes are essential for consolidating data from disparate sources into a central repository for analysis. My experience involves designing and implementing data warehouses using dimensional modeling techniques, creating efficient ETL pipelines using tools like Apache Kafka and Apache Spark, and ensuring data quality throughout the entire process. For example, in a previous role, I worked on building a data warehouse for a large telecommunications company. This involved extracting data from various operational systems (billing, customer service, network performance), transforming it to conform to a common data model, and loading it into a central data warehouse. The process involved resolving inconsistencies across different data sources, handling missing values, and ensuring data integrity. The resulting data warehouse provided a single source of truth for business intelligence and analytics, enabling data-driven decision-making across the organization.

Q 20. Describe a time you had to deal with conflicting data sources.

In a previous project analyzing customer satisfaction, we encountered conflicting data from two sources: customer surveys and social media feedback. The surveys indicated high overall satisfaction, while social media sentiment analysis revealed a significant number of negative comments related to a specific product feature. To resolve this conflict, I first investigated the potential reasons for the discrepancy. This involved examining the survey methodology (e.g., sampling bias) and the social media data collection process (e.g., the use of appropriate keywords and filtering of irrelevant data). We found that the survey had oversampled loyal customers, leading to an overly positive view. The social media data, though containing negative sentiment, was not representative of the entire customer base either. To synthesize the data, we weighted the survey results to better represent the broader customer population, using demographic data to adjust the sampling bias. We also segmented the social media data to focus on comments related to the specific product feature in question. By carefully analyzing and interpreting the data from both sources, we obtained a more nuanced understanding of customer sentiment.
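
The reweighting step described above can be sketched as post-stratification: each group is weighted by its population share divided by its sample share. The group names, counts, shares, and scores below are hypothetical and only illustrate the arithmetic.

```python
def poststratification_weights(sample_counts, population_shares):
    """Weight per group = population share / sample share."""
    n = sum(sample_counts.values())
    return {g: population_shares[g] / (sample_counts[g] / n) for g in sample_counts}


def weighted_mean(group_means, sample_counts, weights):
    """Mean across groups after applying the post-stratification weights."""
    total = sum(sample_counts[g] * weights[g] for g in group_means)
    return sum(group_means[g] * sample_counts[g] * weights[g] for g in group_means) / total


# Hypothetical survey in which loyal customers are oversampled.
sample_counts = {"loyal": 700, "occasional": 300}
population_shares = {"loyal": 0.4, "occasional": 0.6}
mean_satisfaction = {"loyal": 4.5, "occasional": 3.2}

weights = poststratification_weights(sample_counts, population_shares)
unweighted = sum(mean_satisfaction[g] * sample_counts[g] for g in sample_counts) / 1000
adjusted = weighted_mean(mean_satisfaction, sample_counts, weights)
print(round(unweighted, 2), round(adjusted, 2))
```

In this toy case the overall score drops once the underrepresented group counts in proportion to its real share, which is the kind of shift that can reconcile survey results with other sources.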

Q 21. How do you ensure data quality throughout the research process?

Ensuring data quality is an ongoing process that begins at the design phase and continues throughout the research project. Key strategies include:

- Data Validation: Implementing rigorous checks and validation rules to ensure data accuracy and consistency at every step.

- Data Cleaning: Identifying and handling missing values, outliers, and inconsistencies in the data. This may involve imputation techniques, outlier removal, or data transformation.

- Data Standardization: Establishing consistent data formats and coding schemes to enable accurate comparisons and analyses across different data sources.

- Documentation: Maintaining comprehensive documentation of data collection procedures, cleaning processes, and analysis methods to ensure transparency and reproducibility.

- Regular Audits: Performing regular audits of the data to identify and address any emerging quality issues.

- Version Control: Using version control systems to track changes and modifications made to the data over time, allowing for rollback if necessary.
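
Validation rules of the kind listed above can be expressed as small, testable checks. The sketch below assumes a hypothetical survey record with `respondent_id`, `age`, and `satisfaction` fields; the specific ranges are illustrative, not prescriptive.

```python
from collections import Counter


def validate_record(record):
    """Return a list of rule violations for one survey record."""
    errors = []
    if not record.get("respondent_id"):
        errors.append("missing respondent_id")
    age = record.get("age")
    if not isinstance(age, int) or not 18 <= age <= 120:
        errors.append("age must be an integer between 18 and 120")
    if record.get("satisfaction") not in {1, 2, 3, 4, 5}:
        errors.append("satisfaction must be an integer from 1 to 5")
    return errors


def duplicate_ids(records):
    """Respondent IDs that appear more than once."""
    counts = Counter(r.get("respondent_id") for r in records)
    return sorted(rid for rid, c in counts.items() if rid and c > 1)


# Hypothetical batch with one clean record, one bad record, and one duplicate ID.
batch = [
    {"respondent_id": "r001", "age": 34, "satisfaction": 4},
    {"respondent_id": "r002", "age": 17, "satisfaction": 7},
    {"respondent_id": "r001", "age": 51, "satisfaction": 3},
]
report = {i: validate_record(r) for i, r in enumerate(batch)}
duplicates = duplicate_ids(batch)
print(report, duplicates)
```

Running checks like these at the point of collection, and again before analysis, turns quality problems into a logged list of violations rather than silent errors.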

Q 22. Describe your experience with different data types (e.g., structured, unstructured).

My experience encompasses a wide range of data types, from neatly organized structured data to the more chaotic world of unstructured data. Structured data, like that found in relational databases, is easily searchable and analyzed because it adheres to a predefined format. Think of a spreadsheet with clearly defined columns and rows – each entry fits neatly into its designated category. I’ve extensively used structured data in projects involving customer demographics, sales figures, and website analytics. Unstructured data, however, is far less organized. This includes text documents, images, audio files, and social media posts. Working with this requires different techniques, often involving natural language processing (NLP) or image recognition to extract meaningful information. For example, in a recent project analyzing customer reviews, we used NLP to identify sentiment (positive, negative, neutral) and then correlated it with product features to improve design.

Semi-structured data falls between these two extremes. XML and JSON files are good examples. They have some organizational structure, but not the rigid constraints of relational databases. My experience with this type of data frequently involves web scraping and API interactions, where I’ve had to clean and transform the data to make it suitable for analysis. Successfully handling diverse data types is crucial for comprehensive research, enabling richer insights and more robust conclusions.

Q 23. How do you stay updated with the latest trends in data collection and research?

Staying current in the rapidly evolving field of data collection and research requires a multi-pronged approach. I regularly attend industry conferences like KDD and NeurIPS, where I network with colleagues and learn about cutting-edge techniques and tools. I also subscribe to several leading journals and publications, such as the Journal of the American Statistical Association and Communications of the ACM, to stay abreast of the latest research. Beyond formal publications, I actively participate in online communities, forums, and webinars focusing on data science and research methodologies. Following key influencers and researchers on platforms like Twitter and LinkedIn provides valuable insights into emerging trends. Furthermore, I dedicate time to exploring open-source tools and libraries, constantly testing and evaluating new technologies and their applications in data collection and analysis. Continuous learning is vital in this field.

Q 24. What is your experience with working with large datasets?

I have significant experience handling large datasets, often exceeding a terabyte in size. Working with such datasets requires careful planning and leveraging the power of distributed computing frameworks like Hadoop and Spark. These tools allow for parallel processing, making it possible to efficiently analyze massive amounts of data. Furthermore, I’m proficient in using cloud-based solutions such as AWS S3 and Google Cloud Storage for storing and managing large datasets. One project involved analyzing a petabyte-scale dataset of sensor readings to predict equipment failures. This involved not only efficient storage and processing but also sophisticated data reduction and feature engineering techniques to avoid computational bottlenecks and ensure accurate results. Proper data management is paramount when dealing with big data, minimizing storage costs while ensuring data integrity and accessibility.

Q 25. How do you approach designing a data collection instrument (e.g., survey, interview guide)?

Designing a data collection instrument is a crucial step. It begins with clearly defining the research question and objectives. This guides the selection of appropriate data collection methods (survey, interviews, observations, etc.) and the design of the instruments. For example, if we’re researching customer satisfaction, a survey might be ideal. However, if we’re exploring the complexities of a particular decision-making process, in-depth interviews would provide richer qualitative data. Each question must be carefully worded to avoid ambiguity and bias, using clear and concise language appropriate for the target audience. Pilot testing is essential to identify and rectify any flaws in the instrument before full-scale deployment. This involves testing the instrument on a smaller sample group to gather feedback and make necessary adjustments. The goal is to ensure the instrument effectively captures the relevant information, is easy to administer, and yields reliable and valid data.

Q 26. Describe your experience with hypothesis testing and statistical significance.

Hypothesis testing and statistical significance are fundamental aspects of my work. A hypothesis is a testable statement about a population parameter. We use statistical methods to determine whether the data collected provides enough evidence to reject the null hypothesis (typically a statement of no effect). The p-value is the probability of observing the obtained results (or more extreme results) if the null hypothesis were true. A result is called statistically significant when the p-value falls below a predetermined significance level (usually 0.05), in which case the null hypothesis is rejected. However, statistical significance doesn’t automatically imply practical significance. A small effect might be statistically significant in a large sample, but it might not be practically meaningful. I always consider both statistical and practical significance when interpreting results. For instance, in a clinical trial, a statistically significant improvement in a treatment’s effectiveness might not be practically important if the improvement is very small.
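
The gap between statistical and practical significance can be shown numerically. The sketch below runs a two-sample z-test on the same tiny mean difference at two sample sizes; it assumes a known common standard deviation and equal group sizes, and all numbers are invented for illustration.

```python
import math
from statistics import NormalDist


def two_sample_z(mean_diff, sd, n_per_group):
    """Two-sided p-value and Cohen's d for a difference in means (known sd)."""
    se = sd * math.sqrt(2 / n_per_group)  # standard error of the difference
    z = mean_diff / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    cohens_d = mean_diff / sd  # effect size, independent of sample size
    return p_value, cohens_d


# Same hypothetical 0.2-point difference on a scale with sd = 10.
p_small, d_small = two_sample_z(mean_diff=0.2, sd=10.0, n_per_group=100)
p_large, d_large = two_sample_z(mean_diff=0.2, sd=10.0, n_per_group=100_000)
print(f"n=100: p={p_small:.3f}, d={d_small:.2f}")
print(f"n=100000: p={p_large:.2g}, d={d_large:.2f}")
```

The effect size is identical in both runs; only the sample size changes the p-value, which is why reporting an effect size alongside the p-value helps readers judge whether a result matters in practice.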

Q 27. Explain your understanding of different types of research designs (e.g., experimental, observational).

Research designs are the frameworks that guide the collection and analysis of data. Experimental designs involve manipulating an independent variable to observe its effect on a dependent variable, often with a control group for comparison. This allows for causal inferences. A classic example is a randomized controlled trial in medicine. Observational designs, on the other hand, do not involve manipulation of variables. Researchers observe and measure variables without intervention. Types include cohort studies, case-control studies, and cross-sectional studies. These designs are useful when manipulating variables is unethical or impractical. Choosing the right research design depends heavily on the research question and ethical considerations. The strength of causal inference differs significantly between these designs, with experimental studies typically offering stronger evidence of causality.

Q 28. How do you manage and prioritize multiple data collection projects simultaneously?

Managing multiple data collection projects simultaneously requires robust organizational skills and effective time management. I use project management tools like Jira or Asana to track tasks, deadlines, and resources for each project. This includes assigning priorities to tasks based on deadlines and importance. Clear communication with team members is crucial to ensure everyone is aligned and working efficiently. Regular progress meetings help identify and resolve any bottlenecks or challenges early on. Prioritization is based on factors like project deadlines, resource availability, and strategic importance. For instance, a time-sensitive project with a critical deadline would naturally take precedence over a less urgent one. Careful delegation of tasks based on team members’ skills and expertise is also essential to maximize efficiency. By employing these strategies, I ensure that all projects are completed successfully and within the allotted timeframes.

Key Topics to Learn for Data Collection and Research Interview

- Research Design: Understanding qualitative vs. quantitative methods, choosing appropriate methodologies for different research questions, and outlining the research process from inception to conclusion. Practical application: Designing a study to assess customer satisfaction with a new product.

- Data Collection Techniques: Mastering various data gathering methods such as surveys (online, phone, in-person), interviews (structured, semi-structured, unstructured), focus groups, observations, and document analysis. Practical application: Selecting the optimal data collection method for a specific research objective, considering budget and time constraints.

- Sampling Strategies: Understanding probability and non-probability sampling techniques, determining appropriate sample sizes, and minimizing sampling bias. Practical application: Justifying the choice of a specific sampling method for a given research project and addressing potential biases.

- Data Management and Cleaning: Techniques for organizing, cleaning, and preparing data for analysis, including handling missing data and outliers. Practical application: Using software like R or Python to clean and transform a large dataset for analysis.

- Data Analysis and Interpretation: Familiarity with descriptive and inferential statistics, visualizing data effectively, and drawing meaningful conclusions from research findings. Practical application: Interpreting statistical results to support or refute a research hypothesis.

- Ethical Considerations in Research: Understanding principles of informed consent, confidentiality, and data privacy. Practical application: Developing an ethical research protocol that addresses potential risks and safeguards participant rights.

- Data Visualization and Reporting: Creating clear and concise reports that effectively communicate research findings to various audiences. Practical application: Presenting research results using graphs, charts, and tables to highlight key insights.
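For the sampling topic above, determining an appropriate sample size often starts with a quick calculation. The sketch below uses Cochran's formula for estimating a proportion, with an optional finite population correction. The function name, default values, and the population of 2,000 are illustrative assumptions, not recommendations for any particular study.

```python
from math import ceil
from statistics import NormalDist


def sample_size_for_proportion(margin_of_error, confidence=0.95,
                               expected_proportion=0.5, population=None):
    """Cochran's sample size for a proportion, optionally corrected for a finite population."""
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n0 = z**2 * expected_proportion * (1 - expected_proportion) / margin_of_error**2
    if population is not None:
        # Finite population correction shrinks the requirement for small populations.
        n0 = n0 / (1 + (n0 - 1) / population)
    return ceil(n0)


# 95% confidence, +/-5 percentage points, most conservative proportion (0.5).
n_large = sample_size_for_proportion(0.05)

# Same target, but drawn from a hypothetical population of 2,000 customers.
n_small = sample_size_for_proportion(0.05, population=2000)

print(n_large, n_small)
```

The first call gives the familiar figure of 385 respondents. The second is smaller because the correction accounts for sampling a sizeable fraction of a limited population. Both are required completed responses, so expected non-response should be factored in separately.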

Next Steps

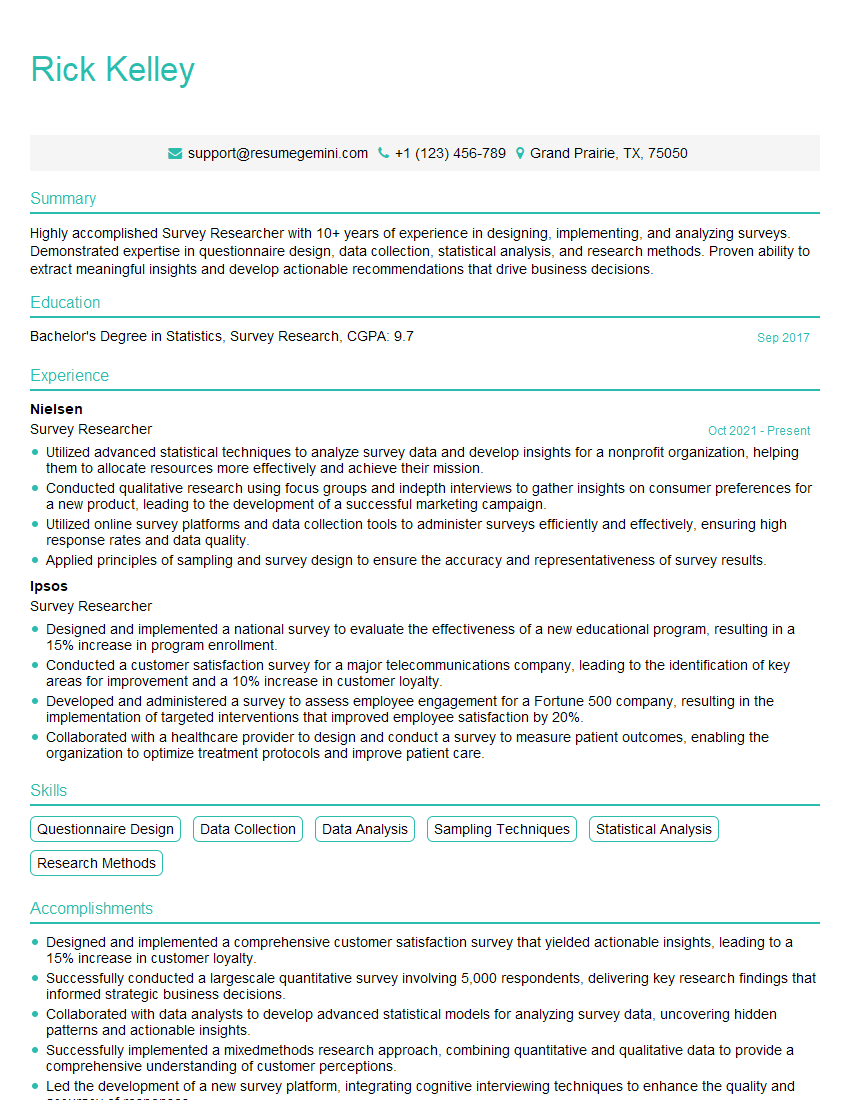

Mastering Data Collection and Research is crucial for career advancement in many fields. A strong understanding of these principles opens doors to exciting opportunities and allows you to make a significant impact. To maximize your job prospects, crafting an ATS-friendly resume is essential. This ensures your application gets noticed by recruiters and hiring managers. We highly recommend using ResumeGemini, a trusted resource, to build a professional and impactful resume. ResumeGemini provides examples of resumes tailored specifically to Data Collection and Research roles, helping you present your skills and experience effectively. Take the next step towards your dream career today!
