Cracking a skill-specific interview, like one for Data Integration Patterns, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Data Integration Patterns Interview

Q 1. Explain the difference between ETL and ELT processes.

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are both data integration processes, but they differ significantly in *when* the data transformation occurs. Think of it like preparing a meal:

- ETL is like prepping all your ingredients (extracting), chopping them up and mixing them perfectly (transforming), and then finally putting everything in the oven to cook (loading). The transformation happens *before* the data is loaded into the target system. This means data is transformed in a staging area before reaching the data warehouse. This is suitable for smaller datasets where transformation can be done efficiently.

- ELT is like throwing all the raw ingredients into a slow cooker (extracting and loading), and letting it simmer and blend together naturally (transforming). The transformation happens *after* the data is loaded into the target system, typically using the target system’s capabilities (like SQL or cloud-based services). This approach is beneficial for larger datasets, enabling faster initial data ingestion and leveraging the power of cloud data warehouses for transformation.

In short, ETL prioritizes transformation *before* loading, leading to potentially smaller data volumes in the target system, while ELT prioritizes speed of loading, leaving the transformation to be handled by the target system’s capabilities. The best approach depends on factors like data volume, data velocity, transformation complexity, and the capabilities of the target system.
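To make the ordering difference concrete, here is a minimal sketch that uses SQLite as a stand-in for the target system. The table names, columns, and sample rows are illustrative assumptions, not part of any particular toolchain.

```python
import sqlite3

# Hypothetical raw extract: (order_id, amount as text, currency code).
raw_orders = [("A-1", " 19.50 ", "usd"), ("A-2", "5.00", "USD")]

def clean(row):
    """Transform one raw row in application code."""
    order_id, amount, currency = row
    return (order_id, float(amount.strip()), currency.upper())

# ETL: transform first, then load only the cleaned rows.
etl_db = sqlite3.connect(":memory:")
etl_db.execute("CREATE TABLE orders (order_id TEXT, amount REAL, currency TEXT)")
etl_db.executemany("INSERT INTO orders VALUES (?, ?, ?)", [clean(r) for r in raw_orders])

# ELT: load the raw rows untouched, then transform inside the target with SQL.
elt_db = sqlite3.connect(":memory:")
elt_db.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, currency TEXT)")
elt_db.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_orders)
elt_db.execute(
    """
    CREATE TABLE orders AS
    SELECT order_id,
           CAST(TRIM(amount) AS REAL) AS amount,
           UPPER(currency) AS currency
    FROM raw_orders
    """
)

etl_rows = etl_db.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
elt_rows = elt_db.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
```

Both paths end with the same cleaned table. The difference is that the ELT target also keeps `raw_orders`, so the transformation can be rerun or changed later without extracting again.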

Q 2. Describe three common data integration patterns and their use cases.

Three common data integration patterns are:

- Data Virtualization: This pattern creates a unified view of data from disparate sources without physically moving or copying the data. Imagine a librarian creating a catalog of all the books in various library branches – you don’t need to physically move the books, just a consolidated index. Use cases include providing a single point of access to multiple data sources for reporting and analytics, reducing data redundancy and improving data governance.

- Change Data Capture (CDC): This involves tracking only the changes in data sources instead of replicating the entire dataset every time. Think of it like using a ‘track changes’ feature in a document instead of rewriting the whole thing every time you make a small correction. This is highly efficient for handling large volumes of data where only incremental updates are required. Use cases include real-time data warehousing, operational data stores, and providing near real-time analytics.

- Message Queues (e.g., Kafka, RabbitMQ): These act as intermediaries, buffering and routing data between systems asynchronously. Imagine a post office receiving letters (data) and distributing them to different addresses (target systems). This allows for decoupling systems, improving scalability, and handling high data volumes with high throughput. Use cases include real-time data streaming, event-driven architectures, and microservices integration.

Q 3. What are the advantages and disadvantages of using a message queue in data integration?

Using message queues in data integration offers several advantages, but also has some drawbacks:

- Advantages:

- Decoupling: Systems can operate independently, improving resilience and flexibility.

- Asynchronous processing: Data can be processed asynchronously, increasing throughput and preventing bottlenecks.

- Scalability: Message queues can easily scale to handle high data volumes.

- Fault tolerance: Brokers can persist messages to disk, so data is not lost when a consumer or downstream system fails.

- Disadvantages:

- Complexity: Introducing a message queue adds complexity to the architecture.

- Monitoring overhead: Requires monitoring of message queues to ensure reliable delivery.

- Potential for message loss: If persistence, acknowledgements, or retention are misconfigured, messages can be dropped. This is rare with a properly configured and managed broker.

- Latency: Adding a message queue might introduce some latency, although this is usually minimal compared to the benefits.
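The decoupling and asynchronous-processing points can be illustrated with a small in-process sketch. It uses Python's standard `queue.Queue` as a stand-in for a real broker such as Kafka or RabbitMQ, so it shows the producer/consumer shape only, with no persistence or network delivery. The event structure is an assumption.

```python
import queue
import threading

def producer(q, events):
    """Publish events without knowing who consumes them."""
    for event in events:
        q.put(event)   # blocks when the queue is full, which gives back-pressure
    q.put(None)        # sentinel meaning "no more messages"

def consumer(q, sink):
    """Process events at the consumer's own pace."""
    while True:
        event = q.get()
        if event is None:
            break
        sink.append({**event, "processed": True})

events = [{"id": i} for i in range(5)]
processed = []
q = queue.Queue(maxsize=2)

t_prod = threading.Thread(target=producer, args=(q, events))
t_cons = threading.Thread(target=consumer, args=(q, processed))
t_cons.start()
t_prod.start()
t_prod.join()
t_cons.join()
```

The producer never calls the consumer directly, so either side could be replaced or scaled without changing the other.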

Q 4. How would you handle data inconsistencies during data integration?

Handling data inconsistencies during data integration is crucial. A multi-step approach is typically required:

- Identify inconsistencies: Use data profiling and comparison techniques to detect inconsistencies. This involves comparing data from different sources to identify discrepancies in data types, formats, values, and structures.

- Analyze the root cause: Understand *why* the inconsistencies exist. Are they due to different data entry practices, system limitations, or data quality issues?

- Establish a resolution strategy: Decide how to handle the inconsistencies. This might involve:

- Data cleansing: Correcting the inaccurate data by applying rules or algorithms.

- Data standardization: Transforming data into a consistent format.

- Data deduplication: Identifying and merging duplicate records.

- Data enrichment: Supplementing data with additional information.

- Conflict resolution rules: Defining rules to prioritize data from specific sources in case of conflicts.

- Implement and monitor: Put your chosen strategy into action and continuously monitor for new inconsistencies.

Remember, proper documentation of these rules and processes is critical for maintainability and repeatability.
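As one example of a conflict resolution rule, the sketch below merges records for the same entity by source priority. The source names and their ranking are hypothetical; a real project would agree on them with the data owners.

```python
# Hypothetical source systems, most trusted first.
SOURCE_PRIORITY = ["crm", "billing", "web_form"]

def resolve(records_by_source):
    """Take each field from the most trusted source that has a non-empty value."""
    merged = {}
    fields = {f for rec in records_by_source.values() for f in rec}
    for field in sorted(fields):
        for source in SOURCE_PRIORITY:
            value = records_by_source.get(source, {}).get(field)
            if value not in (None, ""):
                merged[field] = value
                break
    return merged

customer = resolve({
    "web_form": {"email": "ann@example.com", "phone": "555-0100"},
    "crm": {"email": "ann.lee@example.com", "phone": ""},
})
```

Here the email comes from the CRM because it ranks highest, while the phone number falls back to the web form because the CRM value is empty.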

Q 5. Explain the concept of change data capture (CDC) and its importance in data integration.

Change Data Capture (CDC) is the process of identifying and tracking only the changes made to data sources, rather than replicating the entire dataset. Think of it as a ‘diff’ function for databases. This is extremely important in data integration because it allows for:

- Increased efficiency: Only changed data is processed, dramatically reducing processing time and bandwidth consumption, especially beneficial with large datasets and frequent updates.

- Near real-time data integration: Changes are captured and integrated almost immediately, providing up-to-the-minute insights.

- Reduced storage costs: Only the changes, not the entire dataset, need to be stored and processed.

- Improved data quality: By focusing on changes, inconsistencies can be identified and resolved more quickly.

CDC is commonly used in scenarios requiring real-time analytics dashboards, operational data stores, and applications requiring constant data synchronization.
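Production CDC tools usually read the database transaction log. The sketch below uses a simpler technique, comparing two snapshots keyed by primary key, just to show what a stream of change events looks like and how a replica applies it. The snapshot contents are made up for illustration.

```python
def capture_changes(previous, current):
    """Compare two snapshots keyed by primary key and return change events."""
    changes = []
    for key, row in current.items():
        if key not in previous:
            changes.append(("insert", key, row))
        elif previous[key] != row:
            changes.append(("update", key, row))
    for key in previous:
        if key not in current:
            changes.append(("delete", key, None))
    return changes

def apply_changes(target, changes):
    """Bring a replica up to date by applying only the change events."""
    for op, key, row in changes:
        if op == "delete":
            target.pop(key, None)
        else:
            target[key] = row
    return target

previous = {1: {"name": "Ann"}, 2: {"name": "Bob"}, 3: {"name": "Cy"}}
current = {1: {"name": "Ann"}, 2: {"name": "Robert"}, 4: {"name": "Dee"}}

changes = capture_changes(previous, current)
replica = apply_changes(dict(previous), changes)
```

Only three events travel to the replica even though the snapshot holds more rows, which is the efficiency gain described above.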

Q 6. What are some common data quality challenges in data integration and how do you address them?

Common data quality challenges in data integration include:

- Inconsistent data formats: Data from different sources may use different formats (dates, currencies, etc.).

- Data duplication: The same data may exist in multiple sources, leading to inconsistencies and data bloat.

- Missing values: Some data points may be missing, requiring imputation or other handling techniques.

- Inaccurate data: Data may contain errors due to human input, system failures, or data corruption.

- Data inconsistency across sources: Different definitions or interpretations of data across systems.

Addressing these challenges requires a combination of:

- Data profiling: Analyzing data to understand its structure, quality, and characteristics.

- Data cleansing: Identifying and correcting errors in the data.

- Data standardization: Transforming data into a consistent format.

- Data validation: Implementing rules to ensure data quality.

- Metadata management: Providing clear descriptions of data sources, formats, and meanings.

A robust data quality framework, including automated checks and monitoring, is essential for addressing these challenges effectively.
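An automated check can be as small as the sketch below, which standardizes dates to ISO 8601 and reports missing or unparseable values. The accepted input formats and the field names `customer_id` and `signup_date` are assumptions for the example.

```python
from datetime import datetime

# Assumed input formats seen across the source systems.
DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y"]

def standardize_date(text):
    """Return an ISO 8601 date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def check_record(record):
    """Return a list of data quality problems found in one record."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")
    if standardize_date(record.get("signup_date") or "") is None:
        problems.append("unparseable signup_date")
    return problems

records = [
    {"customer_id": "C1", "signup_date": "2024-03-05"},
    {"customer_id": "C2", "signup_date": "05/03/2024"},
    {"customer_id": "", "signup_date": "March 5th"},
]
quality_report = {i: check_record(r) for i, r in enumerate(records)}
```

Records with an empty problem list can continue through the pipeline, and the rest can be routed to a quarantine table for review.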

Q 7. Describe your experience with different data integration tools (e.g., Informatica, Talend, Matillion).

I’ve had extensive experience with several data integration tools, including Informatica PowerCenter, Talend Open Studio, and Matillion. My experience encompasses:

- Informatica PowerCenter: I’ve utilized PowerCenter for large-scale ETL processes in enterprise environments. Its robust features for data transformation, mapping, and scheduling are invaluable for complex integration projects. I have experience with its metadata management capabilities and performance tuning techniques.

- Talend Open Studio: I’ve leveraged Talend’s open-source capabilities for building and deploying ETL processes for smaller-scale projects and prototyping. Its user-friendly interface and extensive connectors make it ideal for rapid development.

- Matillion: I’ve worked with Matillion for cloud-based data integration projects within AWS and Azure. Its ease of use and integration with cloud data warehouses is very beneficial for cloud-native architectures. I particularly appreciate its strong capabilities for data transformation within the cloud environment.

In each case, my work involved designing, implementing, testing, and deploying data integration solutions, addressing various challenges related to data quality, performance, and scalability.

Q 8. How do you ensure data security and privacy during data integration?

Data security and privacy are paramount during data integration. Think of it like transporting valuable goods – you need robust security measures at every stage of the journey. We need to protect data at rest and in transit.

- Encryption: Both data at rest (within databases and storage) and data in transit (during transfer between systems) should be encrypted using strong algorithms like AES-256. This ensures that even if data is intercepted, it remains unreadable without the decryption key.

- Access Control: Implementing robust access control mechanisms, such as role-based access control (RBAC), is crucial. Only authorized personnel should have access to sensitive data, and their access should be limited to what’s necessary for their roles. This minimizes the risk of unauthorized data access.

- Data Masking and Anonymization: For development and testing environments, consider using data masking techniques to replace sensitive data with fake, but similar, data. Anonymization techniques can be used to remove personally identifiable information (PII) from data before it enters the integration pipeline.

- Data Loss Prevention (DLP): DLP tools monitor data flows and can detect and prevent the exfiltration of sensitive data. This is especially useful for detecting and blocking attempts to steal data during integration processes.

- Auditing and Logging: Maintain detailed logs of all data access, modifications, and transfers. This allows for tracking data movement and identifying any unauthorized activities. Regular auditing ensures compliance with regulations and helps in incident response.

- Compliance with Regulations: Adherence to relevant regulations, such as GDPR, CCPA, and HIPAA, is crucial. This involves implementing appropriate technical and organizational measures to protect personal data.

For example, in a project integrating customer data from multiple sources, we encrypted data in transit with TLS and data at rest with AES-256. We also implemented RBAC to restrict access to sensitive customer information based on user roles.
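Data masking for non-production environments can be sketched with the standard library alone. The example below pseudonymizes an identifier with a keyed hash and partially masks an email address. The key is a placeholder and the field names are hypothetical; in practice the key would come from a secrets manager.

```python
import hashlib
import hmac

# Placeholder only; a real key would be loaded from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value):
    """Replace an identifier with a keyed hash so joins still work on the masked value."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * max(len(local) - 1, 0)}@{domain}"

row = {"customer_id": "C-1001", "email": "ann.lee@example.com", "plan": "pro"}
masked = {
    "customer_id": pseudonymize(row["customer_id"]),
    "email": mask_email(row["email"]),
    "plan": row["plan"],
}
```

Because the hash is deterministic for a given key, the same customer maps to the same pseudonym in every table, so test data can still be joined.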

Q 9. Explain the concept of data virtualization and its benefits.

Data virtualization creates a unified view of data from disparate sources without physically moving or replicating the data. Imagine it as a virtual library catalog – you can search and access information from various books (databases) without having to physically combine them into one giant book.

- Benefits:

- Reduced Data Movement: Avoids the overhead and cost of data replication and ETL processes.

- Improved Data Agility: Allows for quick access to data from various sources without major data transformation and pipeline building.

- Enhanced Data Governance: Provides a single point of access for data, making it easier to manage and govern.

- Simplified Integrations: Enables faster and easier integration of new data sources.

- Cost Savings: Reduces storage costs and the need for complex ETL processes.

In a recent project, we used data virtualization to provide a unified view of customer data from our CRM, marketing automation, and sales systems. This eliminated the need to create a massive data warehouse and significantly reduced integration time and costs. The business users could easily access a consolidated view through a single interface.
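The idea can be sketched with two separate SQLite databases standing in for the CRM and sales systems. The unified record is assembled at query time and nothing is copied into a shared store. Table and column names are assumptions for illustration.

```python
import sqlite3

# Two independent sources; each keeps its own data.
crm = sqlite3.connect(":memory:")
crm.execute("CREATE TABLE contacts (customer_id TEXT, name TEXT)")
crm.execute("INSERT INTO contacts VALUES ('C1', 'Ann Lee')")

sales = sqlite3.connect(":memory:")
sales.execute("CREATE TABLE orders (customer_id TEXT, total REAL)")
sales.executemany("INSERT INTO orders VALUES (?, ?)", [("C1", 40.0), ("C1", 60.0)])

def customer_view(customer_id):
    """Build a unified customer record on demand from both sources."""
    name_row = crm.execute(
        "SELECT name FROM contacts WHERE customer_id = ?", (customer_id,)
    ).fetchone()
    total_row = sales.execute(
        "SELECT COALESCE(SUM(total), 0) FROM orders WHERE customer_id = ?", (customer_id,)
    ).fetchone()
    return {
        "customer_id": customer_id,
        "name": name_row[0] if name_row else None,
        "lifetime_value": total_row[0],
    }

before = customer_view("C1")
sales.execute("INSERT INTO orders VALUES ('C1', 25.0)")
after = customer_view("C1")
```

The second call reflects the new order immediately because the view always reads from the live sources rather than from a copy.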

Q 10. How do you handle large data volumes in data integration?

Handling large data volumes requires employing strategies that optimize processing and minimize resource consumption. Think of it like building a highway system to efficiently manage traffic – you wouldn’t just have one road for all vehicles.

- Parallel Processing: Break down large datasets into smaller chunks and process them concurrently using multiple processors or machines. This significantly reduces processing time.

- Distributed Computing: Distribute the processing workload across a cluster of machines, leveraging the power of multiple computers to handle large datasets efficiently.

- Data Partitioning: Divide the dataset into smaller, manageable partitions based on relevant criteria (e.g., date, region). This allows for parallel processing and reduces the load on individual machines.

- Streaming Data Processing: For continuous data streams (e.g., sensor data, financial transactions), use streaming platforms like Apache Kafka or Apache Flink to process data in real time without needing to store everything before processing.

- Data Compression: Compress the data to reduce storage space and network bandwidth requirements. Efficient compression algorithms can save significant resources.

- Columnar Storage: Store data in columnar file formats like Apache Parquet or ORC. These are optimized for analytical queries, compress well, and efficiently handle large volumes of data.

For instance, when integrating terabytes of log data, we utilized a distributed processing framework like Apache Spark to process data in parallel across a cluster of machines. This allowed us to process the data significantly faster than with a single-machine approach.
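Partitioning and parallel processing fit together as in the sketch below, which splits rows by region and summarizes each partition concurrently. It uses a thread pool from the standard library for simplicity; CPU-heavy workloads would more likely use processes or a framework such as Spark. The log rows are invented for the example.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

def partition_by(rows, key):
    """Group rows into partitions based on the value of one column."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row[key]].append(row)
    return partitions

def summarize(partition):
    """Aggregate one partition independently of the others."""
    region, rows = partition
    return region, sum(r["bytes"] for r in rows)

log_rows = [
    {"region": "eu", "bytes": 120},
    {"region": "us", "bytes": 300},
    {"region": "eu", "bytes": 80},
    {"region": "ap", "bytes": 50},
]

partitions = partition_by(log_rows, "region")
with ThreadPoolExecutor(max_workers=3) as pool:
    totals = dict(pool.map(summarize, partitions.items()))
```

Each partition is processed without touching the others, which is what lets the work spread across workers or machines.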

Q 11. What are some best practices for designing and implementing a data integration pipeline?

Designing and implementing a robust data integration pipeline requires a well-defined strategy. Think of it as constructing a well-engineered building – you wouldn’t just start laying bricks without a blueprint.

- Clearly Defined Requirements: Begin with detailed requirements gathering to understand data sources, transformations, and target systems.

- Modular Design: Build the pipeline using modular components for better maintainability, testability, and scalability. This allows for easier updates and modifications.

- ETL Process Optimization: Optimize the Extract, Transform, Load (ETL) process to minimize data latency and resource usage. This might involve techniques like incremental loading and data filtering.

- Error Handling and Logging: Implement robust error handling and logging mechanisms to detect and manage errors during pipeline execution. Detailed logs are crucial for debugging and monitoring.

- Testing and Validation: Thoroughly test the pipeline at each stage (unit testing, integration testing, end-to-end testing) to ensure data quality and accuracy.

- Monitoring and Alerting: Set up monitoring tools to track pipeline performance and generate alerts for failures or anomalies.

- Version Control: Use version control systems (like Git) to manage code and configuration changes, enabling rollback to previous versions if needed.

- Documentation: Document the entire pipeline, including data sources, transformations, target systems, and error handling procedures. This facilitates maintenance and troubleshooting.

In a recent project, we adopted a modular design, breaking down the integration pipeline into reusable components for data extraction, transformation, and loading. This improved maintainability and allowed us to easily add new data sources.
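A modular pipeline with error handling and logging might look like the following sketch. The stage functions and the `sku` and `qty` fields are hypothetical; the point is that each stage can be tested and replaced on its own, and bad rows are logged and set aside instead of failing the run.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")

def extract(source):
    """Read all rows from the source."""
    return list(source)

def transform(rows):
    """Clean rows; collect rejects instead of aborting the whole run."""
    good, rejected = [], []
    for row in rows:
        try:
            good.append({"sku": row["sku"].strip().upper(), "qty": int(row["qty"])})
        except (KeyError, ValueError, AttributeError) as exc:
            log.warning("rejected row %r: %s", row, exc)
            rejected.append(row)
    return good, rejected

def load(rows, target):
    """Append cleaned rows to the target and report how many were written."""
    target.extend(rows)
    return len(rows)

def run_pipeline(source, target):
    rows = extract(source)
    good, rejected = transform(rows)
    loaded = load(good, target)
    log.info("extracted=%d loaded=%d rejected=%d", len(rows), loaded, len(rejected))
    return {"extracted": len(rows), "loaded": loaded, "rejected": len(rejected)}

warehouse = []
stats = run_pipeline(
    [{"sku": " ab-1 ", "qty": "3"}, {"sku": "cd-2", "qty": "n/a"}],
    warehouse,
)
```

The returned counts double as simple run metrics that a monitoring system could collect.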

Q 12. Describe your experience with different database technologies (e.g., SQL Server, Oracle, MySQL).

I possess extensive experience with various database technologies, including SQL Server, Oracle, and MySQL. Each has its strengths and weaknesses, making them suitable for different applications.

- SQL Server: Highly reliable and scalable, excellent for enterprise-level applications, strong integration with other Microsoft products. I’ve used it extensively in projects requiring robust transaction management and high availability.

- Oracle: Known for its robust features and scalability, often preferred for large-scale, mission-critical applications. My experience includes working with Oracle’s advanced features, such as RAC (Real Application Clusters) for high availability.

- MySQL: A popular open-source database, very versatile and suitable for a wide range of applications, especially web applications. I’ve used it in numerous projects requiring cost-effective solutions and ease of deployment.

For example, in one project, we used SQL Server for our core transactional database due to its excellent transaction management capabilities. In another, we chose MySQL for a web application due to its ease of use and cost-effectiveness.

Q 13. How do you monitor and troubleshoot data integration pipelines?

Monitoring and troubleshooting data integration pipelines are crucial for ensuring data quality and timely delivery. Think of it as monitoring the health of a vital organ – you need to know if anything is malfunctioning.

- Monitoring Tools: Utilize monitoring tools to track pipeline performance, including data volume, processing time, error rates, and resource utilization. Examples include Grafana, Prometheus, and Datadog.

- Logging and Alerting: Implement robust logging and alerting systems to notify administrators of errors, failures, or performance bottlenecks. This allows for timely intervention and prevents major issues.

- Data Quality Checks: Implement data quality checks at various stages of the pipeline to detect and address data anomalies or inconsistencies.

- Root Cause Analysis: When issues arise, conduct thorough root cause analysis to identify the underlying problem and implement appropriate fixes. This often involves examining logs, reviewing data transformations, and checking source systems.

- Performance Tuning: Optimize pipeline performance by identifying bottlenecks and improving data processing efficiency. This may involve adjusting query optimization strategies or upgrading hardware.

In a recent project, we implemented a monitoring system that tracked pipeline performance metrics and sent alerts for failures. This allowed us to quickly identify and resolve issues before they impacted downstream systems.

Q 14. Explain the concept of data lineage and its importance.

Data lineage tracks the journey of data from its origin to its final destination. Think of it as a detailed map showing the entire path of a package, from its point of origin to its final delivery address. This is vital for understanding data transformations and ensuring data quality and compliance.

- Importance:

- Data Governance: Enables efficient data governance by providing a clear understanding of data origins, transformations, and usage.

- Data Quality: Helps in identifying and resolving data quality issues by tracing the source of errors or inconsistencies.

- Compliance: Essential for demonstrating compliance with data privacy and security regulations (e.g., GDPR, CCPA).

- Auditing: Supports auditing processes by providing a detailed history of data transformations and access.

- Debugging: Aids in debugging and troubleshooting data integration issues by tracing the flow of data through the pipeline.

In a project involving sensitive customer data, maintaining accurate data lineage allowed us to quickly identify the source of a data breach and comply with regulatory requirements for data privacy.
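At its core, lineage is a graph of datasets and the steps that connect them. The sketch below records those edges and traces everything upstream of a given dataset. The class, dataset names, and step names are all hypothetical.

```python
from collections import defaultdict

class LineageGraph:
    """Record which datasets feed which outputs, and through which step."""

    def __init__(self):
        # output dataset -> {input dataset: transformation step name}
        self._inputs = defaultdict(dict)

    def record(self, inputs, output, step):
        for name in inputs:
            self._inputs[output][name] = step

    def upstream(self, dataset):
        """Return every dataset that feeds into `dataset`, directly or indirectly."""
        seen, stack = set(), [dataset]
        while stack:
            for parent in self._inputs.get(stack.pop(), {}):
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen

lineage = LineageGraph()
lineage.record(["crm.contacts", "web.signups"], "staging.customers", "dedupe_customers")
lineage.record(["staging.customers", "sales.orders"], "mart.customer_revenue", "join_orders")

sources = lineage.upstream("mart.customer_revenue")
```

When a report looks wrong, a query like `upstream` narrows the investigation to the datasets that could have contributed to it.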

Q 15. How do you test and validate data integration processes?

Testing and validating data integration processes is crucial for ensuring data quality and accuracy. It’s like building a house – you wouldn’t skip inspections! My approach is multifaceted, incorporating various techniques at different stages.

Unit Testing: I test individual components, such as data transformation scripts or ETL (Extract, Transform, Load) jobs, in isolation. This helps pinpoint issues early. For example, I’d test a script that cleanses addresses to ensure it correctly handles various address formats.

Integration Testing: Once individual components work, I test their interaction. This verifies data flows correctly between systems. Think of it as testing the plumbing in your house – are all the pipes connected correctly?

System Testing: This involves testing the entire integration process end-to-end, from source to target system. It’s the final check to ensure everything works as expected. Similar to a final inspection of the house.

Data Quality Checks: I implement checks to validate data accuracy, completeness, and consistency after integration. This includes verifying data types, checking for null values, and comparing data counts against expected values. This is like making sure the electrical wiring meets code.

Performance Testing: This assesses the speed and efficiency of the integration process. It identifies bottlenecks and ensures it can handle the expected data volume and throughput. Think load testing – how many people can live comfortably in the house?

These tests are often automated using tools like Jenkins or pytest for efficient and repeatable validation.
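For the address-cleansing example, unit tests could look like the sketch below. The `clean_address` function and its abbreviation table are hypothetical stand-ins for a real cleansing script, and the `test_` functions follow the naming convention that pytest discovers automatically.

```python
import re

# Illustrative subset of street-type abbreviations.
ABBREVIATIONS = {"st": "Street", "ave": "Avenue", "rd": "Road"}

def clean_address(raw):
    """Collapse whitespace, capitalize words, and expand a few abbreviations."""
    words = re.sub(r"\s+", " ", raw).strip().split(" ")
    cleaned = []
    for word in words:
        key = word.rstrip(".").lower()
        cleaned.append(ABBREVIATIONS.get(key, word.capitalize()))
    return " ".join(cleaned)

def test_expands_abbreviations():
    assert clean_address("12 main st.") == "12 Main Street"

def test_collapses_whitespace():
    assert clean_address("  7   OAK   ave ") == "7 Oak Avenue"

def test_leaves_clean_input_unchanged():
    assert clean_address("1 Elm Court") == "1 Elm Court"
```

Each test covers one address format, so a failure points directly at the case that broke.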

Q 16. What are some common performance bottlenecks in data integration and how do you optimize them?

Performance bottlenecks in data integration are common, often stemming from inefficient processes or inadequate infrastructure. Imagine a highway with a narrow bottleneck – traffic jams ensue! Here are some common culprits and solutions:

Network Latency: Slow network connections between systems significantly impact performance. Solution: Optimize network infrastructure, use faster connections, or consider data replication techniques.

Inefficient Data Transformations: Complex or poorly optimized transformations can consume excessive processing time. Solution: Optimize scripts, leverage parallel processing, and use efficient algorithms. For instance, using vectorized operations in pandas (Python) rather than looping.

Database Bottlenecks: Slow database queries or inadequate database infrastructure can slow the entire process. Solution: Optimize database queries, ensure sufficient database resources (CPU, memory, I/O), and consider indexing strategies.

Inadequate Hardware Resources: Insufficient server resources (CPU, memory, storage) can lead to performance issues. Solution: Upgrade server hardware, distribute the load across multiple servers, or utilize cloud-based solutions with scalable resources.

Poorly Designed ETL Processes: Inefficient ETL jobs can be major bottlenecks. Solution: Analyze ETL processes to identify and eliminate redundancy, optimize data filtering, and implement efficient data loading strategies.

Profiling tools and performance monitoring are invaluable in identifying and addressing these bottlenecks. Regular monitoring and optimization are key.
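The effect of an indexing strategy can be checked by inspecting the query plan before and after adding an index. The sketch below does this in SQLite with an invented `orders` table; the exact plan wording varies between SQLite versions, so it only looks for the index name.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
db.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)

def query_plan(sql):
    """Return SQLite's plan description for a statement (last column of each plan row)."""
    return " | ".join(row[-1] for row in db.execute("EXPLAIN QUERY PLAN " + sql))

lookup = "SELECT total FROM orders WHERE customer_id = 42"

plan_before = query_plan(lookup)   # expected to be a full table scan
db.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(lookup)    # expected to mention idx_orders_customer
```

Comparing the two plans confirms that the lookup stops scanning the whole table once the index exists.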

Q 17. Describe your experience with different data formats (e.g., JSON, XML, CSV).

I have extensive experience working with various data formats, each with its own strengths and weaknesses. It’s like having a toolbox with different tools for different jobs.

JSON (JavaScript Object Notation): A lightweight, human-readable format ideal for web applications and APIs. I frequently use JSON in microservices architectures and NoSQL databases. I’m proficient in parsing and manipulating JSON using libraries like `json.loads()` in Python.

XML (Extensible Markup Language): A more structured format often used for data exchange between different systems, especially in enterprise settings. XML’s hierarchical structure requires more robust parsing, often using libraries like `xml.etree.ElementTree` in Python.

CSV (Comma Separated Values): A simple, widely used format for storing tabular data. It’s great for quick data exploration but lacks the richness and schema definition of JSON or XML. Python’s `csv` module is my go-to for processing CSV files.

My experience extends to handling nested structures, complex schemas, and data validation within these formats. I’m also familiar with other formats like Avro, Parquet, and ORC, which are particularly useful for big data processing.
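As a quick illustration, the sketch below parses the same made-up customer record from JSON, XML, and CSV using only the Python standard library modules named above.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# The same hypothetical record in three formats.
json_text = '{"id": "C1", "name": "Ann Lee"}'
xml_text = "<customer><id>C1</id><name>Ann Lee</name></customer>"
csv_text = "id,name\nC1,Ann Lee\n"

# JSON maps directly onto Python dictionaries.
from_json = json.loads(json_text)

# XML needs a walk over child elements to build the same structure.
root = ET.fromstring(xml_text)
from_xml = {child.tag: child.text for child in root}

# CSV carries no types or nesting; every value arrives as a string.
from_csv = dict(next(csv.DictReader(io.StringIO(csv_text))))
```

All three produce the same dictionary here, but only because the record is flat and every value is a string; nested or typed data would expose the differences between the formats.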

Q 18. How do you handle data transformations in data integration?

Data transformation is the heart of data integration, where raw data is converted into a usable format. It’s like refining raw ore into usable metal. I utilize various techniques depending on the needs:

ETL Tools: I leverage ETL tools like Informatica PowerCenter or Talend to perform complex transformations at scale. These tools offer features for data cleansing, enrichment, and aggregation.

Scripting Languages: Python is my primary choice for custom transformations. Libraries like Pandas provide powerful tools for data manipulation, including data cleaning, filtering, aggregation, and joining. For instance, `df['column'] = df['column'].str.lower()` converts a column to lowercase.

SQL: I use SQL for database-centric transformations, leveraging functions and stored procedures to modify data directly within the database. `UPDATE table SET column = value WHERE condition;` is a common SQL update statement.

Mapping and Rules Engines: These tools define transformations through rules or mappings, providing a declarative approach. They are especially useful for complex transformations involving multiple data sources.

My approach always emphasizes data quality and consistency. I implement comprehensive error handling and logging to track transformations and identify potential issues. The choice of method depends on factors like data volume, complexity of transformation, and available tools.
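The declarative, rule-based style combined with error tracking can be sketched in plain Python. The rule list, column names, and country mapping below are hypothetical; a real rules engine would load them from configuration.

```python
# Each rule is (column, function); rules run in order.
RULES = [
    ("email", str.strip),
    ("email", str.lower),
    ("country", lambda v: {"uk": "GB", "usa": "US"}.get(v.lower(), v.upper())),
]

def apply_rules(row, rules=RULES):
    """Apply each rule to a copy of the row; collect errors instead of stopping."""
    out, errors = dict(row), []
    for column, func in rules:
        try:
            out[column] = func(out[column])
        except Exception as exc:
            errors.append(f"{column}: {exc!r}")
    return out, errors

clean_row, clean_errors = apply_rules({"email": "  Ann@Example.COM ", "country": "uk"})
bad_row, bad_errors = apply_rules({"email": None, "country": "de"})
```

The second call leaves the invalid email untouched and records one error per failed rule, so the problem is visible in logs without blocking the rest of the row.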

Q 19. Explain the concept of metadata management in data integration.

Metadata management is the systematic organization and control of data about data – the metadata. It’s like a map for your data landscape. Effective metadata management is essential for understanding, accessing, and managing your data assets.

Technical Metadata: Describes the technical characteristics of data, such as data format, location, schema, and data quality metrics. This is vital for ETL processes and data discovery.

Business Metadata: Provides context about the data, such as its meaning, origin, and intended use. This ensures that data is understood and used correctly by business users.

Operational Metadata: Tracks the data integration process itself, including job execution times, data volumes, and error logs. This information is useful for monitoring and performance tuning.

I use metadata repositories to store and manage this information. Tools like Data Catalogs help centralize metadata, making it easier to discover, understand, and govern data. Proper metadata management greatly improves data governance, data quality, and the overall efficiency of data integration projects.

Q 20. What are the key considerations for cloud-based data integration?

Cloud-based data integration offers scalability, cost-effectiveness, and flexibility compared to on-premises solutions. However, several considerations are crucial:

Scalability and Elasticity: Cloud platforms offer scalability to handle fluctuating data volumes. However, careful planning is needed to ensure resources are appropriately provisioned.

Security and Compliance: Protecting data in the cloud requires robust security measures, including encryption, access control, and compliance with relevant regulations like GDPR or HIPAA.

Data Governance and Management: Establishing clear data governance policies and utilizing cloud-based data governance tools are essential to ensure data quality, consistency, and compliance.

Cost Optimization: Cloud costs can vary greatly. Careful monitoring and optimization of cloud resources are crucial to avoid unexpected expenses.

Vendor Lock-in: Choosing a cloud provider involves potential vendor lock-in. Carefully assess your long-term needs and choose a provider with open standards and APIs to minimize this risk.

Integration with Existing Systems: Ensure seamless integration with existing on-premises systems and applications. Hybrid cloud strategies may be necessary to manage this effectively.

Careful planning and selection of appropriate cloud services are critical to realizing the full benefits of cloud-based data integration.

Q 21. How do you ensure data consistency across different data sources?

Ensuring data consistency across different data sources is a major challenge in data integration. It’s like harmonizing different musical instruments in an orchestra. My approach involves these strategies:

Data Standardization: Establishing common data definitions, formats, and naming conventions across all data sources. This includes data cleansing and transformation to align inconsistent data.

Data Quality Rules: Implementing data quality rules to enforce consistency and identify inconsistencies early in the integration process. This can involve data validation rules, data profiling, and anomaly detection.

Master Data Management (MDM): Implementing an MDM system to create a single, authoritative source of truth for critical data entities. This ensures consistency across different systems and applications.

Data Reconciliation: Regularly reconciling data across different systems to identify and resolve discrepancies. Techniques like data matching and merge algorithms are employed for this purpose.

Data Versioning: Maintaining a history of data changes and versions to enable tracking and rollback in case of errors or inconsistencies. This is especially important for audit trails and compliance requirements.

A combination of these strategies, implemented within a robust data governance framework, is vital for maintaining data consistency across various data sources.
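Data reconciliation often comes down to comparing keys and row fingerprints between two systems. The sketch below shows one way to do that with hashes; the sample rows and the choice of compared columns are assumptions.

```python
import hashlib

def row_fingerprint(row, columns):
    """Hash the compared columns so rows can be matched without comparing field by field."""
    joined = "|".join(str(row.get(c, "")) for c in columns)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()

def reconcile(source_rows, target_rows, key, columns):
    """Report keys that are missing, unexpected, or different between two systems."""
    src = {r[key]: row_fingerprint(r, columns) for r in source_rows}
    tgt = {r[key]: row_fingerprint(r, columns) for r in target_rows}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }

source = [
    {"id": 1, "email": "a@x.com"},
    {"id": 2, "email": "b@x.com"},
    {"id": 3, "email": "c@x.com"},
]
target = [
    {"id": 1, "email": "a@x.com"},
    {"id": 2, "email": "B@x.com"},
    {"id": 4, "email": "d@x.com"},
]

discrepancies = reconcile(source, target, key="id", columns=["email"])
```

Run on a schedule, a report like this catches drift between systems before it reaches downstream consumers.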

Q 22. Explain the concept of schema mapping in data integration.

Schema mapping is the crucial process of aligning the structures of data from different sources to enable seamless integration. Think of it like translating between different languages – each source has its own ‘dialect’ (schema), and schema mapping creates a common understanding. This involves identifying corresponding fields, data types, and relationships between different schemas. For example, one source might have a field called ‘customer_name’ while another uses ‘clientName’. Schema mapping would identify these as equivalent and ensure consistent naming in the integrated data.

Key Aspects of Schema Mapping:

- Field Mapping: Identifying corresponding fields across different schemas.

- Data Type Conversion: Transforming data types (e.g., parsing a date stored as a VARCHAR string into a DATE).

- Data Transformation: Applying functions to clean, standardize, or enrich data (e.g., removing leading/trailing spaces).

- Relationship Mapping: Defining relationships between entities across schemas (e.g., one-to-many relationships between customers and orders).

Example: Consider integrating customer data from a CRM system and an e-commerce platform. The CRM might store customer addresses in three separate fields (street, city, state), while the e-commerce platform uses a single ‘address’ field. Schema mapping would involve combining the three fields from the CRM into a single address field, matching the e-commerce platform’s structure.
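The CRM example can be sketched in Python roughly as follows. This is a minimal illustration, not a mapping tool: the `CRM_FIELD_MAP` table, the `signupDate` field, and the `map_crm_record` function are assumptions made for the example, while `clientName`, `customer_name`, and the three address fields follow the scenario described above.

```python
from datetime import date, datetime

# Hypothetical mapping table: target field -> (source field, converter).
# Field mapping and data type conversion are both expressed here.
CRM_FIELD_MAP = {
    "customer_name": ("clientName", str.strip),
    "signup_date": (
        "signupDate",
        lambda s: datetime.strptime(s, "%Y-%m-%d").date(),
    ),
}


def map_crm_record(record):
    """Translate one CRM record into the target (e-commerce style) schema."""
    mapped = {
        target: convert(record[source])
        for target, (source, convert) in CRM_FIELD_MAP.items()
    }
    # Structural mapping: three CRM fields collapse into one address field.
    mapped["address"] = ", ".join(
        record[field] for field in ("street", "city", "state")
    )
    return mapped


crm_record = {
    "clientName": "  Ada Lovelace ",
    "signupDate": "2024-03-01",
    "street": "1 Main St",
    "city": "Springfield",
    "state": "IL",
}
print(map_crm_record(crm_record))
```

Keeping the mapping in a table rather than in scattered assignments makes it easier to review and to extend when a new source field appears.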

Q 23. Describe your experience with data integration using cloud services (e.g., AWS, Azure, GCP).

I have extensive experience leveraging cloud services for data integration, primarily using AWS and Azure. In AWS, I’ve utilized services like AWS Glue for ETL (Extract, Transform, Load) processes, leveraging its serverless capabilities for scalability and cost-efficiency. I’ve built ETL pipelines using Apache Spark on EMR (Elastic MapReduce) for large-scale data processing. Additionally, I’ve used S3 for data storage and Amazon RDS for relational databases.

On Azure, my experience includes using Azure Data Factory for creating and managing data integration pipelines. This involved defining data flows, transformations, and scheduling tasks. I’ve also worked with Azure Synapse Analytics, a powerful analytics service that integrates data warehousing, data integration, and big data analytics capabilities. In both environments, I focused on building robust, fault-tolerant, and scalable solutions, incorporating best practices for monitoring and logging.

A recent project involved integrating sales data from various regional databases (hosted on-premises) into a centralized data warehouse on Azure. We used Azure Data Factory to extract data, perform cleansing and transformations, and load it into Azure Synapse Analytics. The entire process was automated, improving data accessibility and reporting speed significantly.

Q 24. How do you handle data errors and exceptions during data integration?

Robust error handling is paramount in data integration. I employ a multi-layered approach, starting with proactive data validation at the source. This involves data profiling to understand data quality issues like null values, inconsistencies, and outliers. Then, I implement checks within the integration pipelines themselves using error handling mechanisms provided by the chosen tools (e.g., try-catch blocks in code, exception handling in cloud services).

Strategies for Handling Errors:

- Data Validation: Implementing checks for data types, ranges, and constraints before processing.

- Error Logging and Monitoring: Detailed logging provides insights into errors, facilitating debugging and identifying patterns. Real-time monitoring dashboards help identify and address issues proactively.

- Error Handling Mechanisms: Utilizing built-in exception handling features in ETL tools, allowing the pipeline to continue processing even with some errors (with appropriate logging).

- Dead-letter Queues: Storing failed records in a separate queue for later review and correction.

- Retry Mechanisms: Automatically retrying operations that fail for transient reasons (such as timeouts) a specified number of times, often with a delay between attempts, before escalation.

Example: In an ETL process, if a data transformation fails due to an invalid data format, the error is logged, and the problematic record is moved to a dead-letter queue for manual inspection. The pipeline continues processing the rest of the data.
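A minimal Python sketch of that flow is shown below, combining logging, retries, and a dead-letter list. The names `TransientError`, `with_retries`, and `run_pipeline` are hypothetical, and an in-memory list stands in for a real dead-letter queue, which in practice would be a durable queue or table.

```python
import logging
import time

logger = logging.getLogger("etl")


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (e.g. a timeout)."""


def with_retries(operation, attempts=3, delay_seconds=0.0):
    """Call operation(), retrying on TransientError up to `attempts` times."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == attempts:
                raise  # Retries exhausted: let the caller handle it.
            logger.warning("attempt %d failed, retrying", attempt)
            time.sleep(delay_seconds)


def run_pipeline(records, transform, load):
    """Process every record; failed records go to a dead-letter list."""
    dead_letters = []
    loaded = 0
    for record in records:
        try:
            row = transform(record)
            with_retries(lambda: load(row))
            loaded += 1
        except Exception as exc:
            # Log the failure, park the record, and keep processing the rest.
            logger.error("record failed: %r (%s)", record, exc)
            dead_letters.append({"record": record, "error": str(exc)})
    return loaded, dead_letters


sink = []
loaded, dead_letters = run_pipeline(["1", "x", "3"], int, sink.append)
print(loaded, sink, dead_letters)
```

Here the value `"x"` cannot be converted to an integer, so it lands in `dead_letters` while the other two records are loaded. Catching a broad `Exception` per record is a deliberate choice in this sketch so that one bad record never stops the batch.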

Q 25. What are some common challenges in integrating data from different sources?

Integrating data from diverse sources presents numerous challenges. These stem from differences in data formats, structures, data quality, and governance policies. Here are some common issues:

- Data Format Inconsistency: Sources might use different formats (CSV, JSON, XML, databases).

- Schema Discrepancies: Different sources have varied field names, data types, and structures.

- Data Quality Issues: Inconsistent data, missing values, duplicates, and outliers impact data reliability.

- Data Governance and Compliance: Sources may have varying levels of data security, privacy, and access controls.

- Data Volume and Velocity: High-volume, high-velocity data streams require robust and scalable solutions.

- Data Integration Complexity: Integrating numerous sources with complex relationships and transformations can be challenging.

Example: Integrating data from a legacy system (using a flat file) with a modern cloud-based application (using a NoSQL database) requires careful consideration of data format conversion, schema mapping, and handling data quality issues in the legacy data.

Q 26. Explain your experience with data governance and compliance in data integration.

Data governance and compliance are critical aspects of data integration. My experience includes designing and implementing data governance frameworks that ensure data quality, security, and compliance with relevant regulations (e.g., GDPR, CCPA). This involves defining data policies, roles, and responsibilities, and establishing data lineage tracking.

Key aspects of data governance in data integration:

- Data Quality Management: Establishing processes for data profiling, cleansing, and validation.

- Data Security: Implementing access controls, encryption, and other security measures to protect sensitive data.

- Data Lineage: Tracking data’s journey from source to destination, ensuring accountability and traceability.

- Metadata Management: Maintaining comprehensive metadata about data sources, schemas, and transformations.

- Compliance with Regulations: Ensuring the integration process adheres to relevant data privacy and security regulations.

In a recent project, we implemented a data governance framework that included data masking and encryption for sensitive customer data during integration, ensuring compliance with GDPR. We also established a robust metadata repository, allowing easy tracking of data lineage and assisting in auditing activities.
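To make the data masking idea concrete, here is a small Python sketch using only the standard library. The function names, the `customer_id` and `email` fields, and the sample key are assumptions for illustration. It shows pseudonymization with a keyed hash and partial masking of an email address; it does not show encryption, which would normally rely on a dedicated cryptography library or the platform's key management service.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Return a stable keyed hash so records can still be joined on the token."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * max(len(local) - 1, 0)}@{domain}"


def mask_record(record, secret_key):
    """Return a copy of the record with sensitive fields masked."""
    masked = dict(record)
    masked["customer_id"] = pseudonymize(str(record["customer_id"]), secret_key)
    masked["email"] = mask_email(record["email"])
    return masked


# Hypothetical key; a real key would come from a secrets manager, not source code.
key = b"example-key-do-not-use"
customer = {"customer_id": 42, "email": "alice@example.com", "country": "DE"}
print(mask_record(customer, key))
```

Because the keyed hash is deterministic, the same customer maps to the same token in every source, so joins across masked datasets still work.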

Q 27. How do you choose the appropriate data integration pattern for a given scenario?

Choosing the right data integration pattern depends heavily on the specific requirements of the integration scenario. Several factors influence this decision, including the number of sources, data volume, data velocity, data structures, and the desired level of real-time processing.

Common Data Integration Patterns:

- ETL (Extract, Transform, Load): A batch-oriented approach suited to data that doesn’t require real-time processing and that must be cleansed or reshaped before it reaches the target.

- ELT (Extract, Load, Transform): Loads raw data first, then transforms it within the target data warehouse, advantageous for large datasets and flexible transformations.

- Change Data Capture (CDC): Tracks changes in data sources and replicates only the changes to the target, suitable for real-time or near real-time updates.

- Data Virtualization: Creates a unified view of data from multiple sources without physically moving or transforming data. Ideal for quick integration of read-only data.

- Message Queues and Event Streaming (e.g., Kafka): Used for high-volume, real-time data streaming and integration.

Decision Process:

- Assess data sources and targets: Understand data volume, velocity, structure, and location.

- Define integration requirements: Determine the level of real-time processing needed and data transformation requirements.

- Evaluate available tools and technologies: Consider scalability, cost, and maintainability.

- Select appropriate pattern: Choose the pattern that best aligns with the assessment and requirements.

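For example, for a scenario with high-volume, real-time data streams, a streaming approach built on a platform like Kafka might be preferred. For batch integration with complex transformations that must happen before loading, an ETL approach would be suitable, while very large datasets landing in a cloud data warehouse are often better served by ELT.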
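Of the patterns listed above, Change Data Capture is the one that benefits most from a concrete example. The Python sketch below uses a simplified, timestamp-based variant: it assumes every source row carries an `updated_at` column (a hypothetical name) and copies only rows changed since the last run. Log-based CDC tools read the database transaction log instead, which also captures deletes; this watermark approach does not.

```python
from datetime import datetime


def extract_changes(source_rows, last_watermark):
    """Return rows modified after the watermark, plus the new watermark."""
    changed = [r for r in source_rows if r["updated_at"] > last_watermark]
    new_watermark = max(
        (r["updated_at"] for r in changed), default=last_watermark
    )
    return changed, new_watermark


def apply_changes(target, changes, key="id"):
    """Upsert changed rows into the target, keyed by primary key."""
    for row in changes:
        target[row[key]] = row
    return target


# Hypothetical source table and an already partially loaded target.
source = [
    {"id": 1, "status": "shipped", "updated_at": datetime(2024, 5, 1, 9, 0)},
    {"id": 2, "status": "pending", "updated_at": datetime(2024, 5, 2, 14, 30)},
    {"id": 3, "status": "new", "updated_at": datetime(2024, 5, 3, 8, 15)},
]
target = {1: source[0]}

watermark = datetime(2024, 5, 1, 12, 0)  # Time of the previous successful run.
changes, watermark = extract_changes(source, watermark)
apply_changes(target, changes)
print(sorted(target), watermark)
```

Only rows 2 and 3 are transferred, and the watermark advances to the latest change seen, so the next run starts from there.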

Q 28. Describe your experience with data profiling and its role in data integration.

Data profiling is an essential step in data integration. It involves analyzing data to understand its characteristics, quality, and structure. This information guides schema mapping, data cleansing, and transformation efforts, helping to identify and address potential issues early in the process.

Key aspects of data profiling in data integration:

- Data Quality Assessment: Identifying issues like null values, inconsistencies, duplicates, and outliers.

- Data Type Analysis: Determining the data types of each field and identifying inconsistencies.

- Data Distribution Analysis: Understanding the distribution of values within each field (e.g., frequency, range).

- Data Structure Analysis: Understanding the relationships between different fields and tables.

- Data Volume Analysis: Determining the size and volume of the data to be integrated.

Benefits of Data Profiling:

- Improved Data Quality: Early detection and correction of data quality issues.

- Enhanced Schema Mapping: Accurate identification of corresponding fields and data types.

- Optimized Data Transformations: More efficient and effective data transformation processes.

- Reduced Integration Risks: Minimized errors and delays during the integration process.

In a recent project, data profiling revealed significant inconsistencies in date formats across multiple sources. This informed the design of data transformation rules to standardize date formats, ensuring data consistency in the integrated data warehouse. This proactive approach saved considerable time and effort later in the project.
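A profiling pass like the one described can be sketched in a few lines of Python. The candidate formats, function names, and sample values below are assumptions for illustration; the sketch counts nulls and distinct values for a column, tallies which date format each value matches, and then standardizes values to ISO 8601.

```python
from collections import Counter
from datetime import datetime

# Hypothetical formats expected across sources. They use different separators,
# so a value can match only one; ambiguous cases such as 01/02/2024 would need
# a per-source rule rather than detection.
CANDIDATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y")


def detect_format(value):
    """Return the first candidate format the value parses with, else None."""
    for fmt in CANDIDATE_FORMATS:
        try:
            datetime.strptime(value, fmt)
            return fmt
        except ValueError:
            continue
    return None


def profile_column(values):
    """Summarize null count, distinct count, and date formats in a column."""
    non_null = [v for v in values if v not in (None, "")]
    return {
        "count": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(set(non_null)),
        "formats": Counter(detect_format(v) for v in non_null),
    }


def standardize_date(value):
    """Convert a value in any candidate format to an ISO 8601 date string."""
    fmt = detect_format(value)
    if fmt is None:
        raise ValueError(f"unrecognized date format: {value!r}")
    return datetime.strptime(value, fmt).date().isoformat()


order_dates = ["2024-01-15", "15/01/2024", "01-15-2024", None, "2024-01-15", "n/a"]
profile = profile_column(order_dates)
print(profile)
```

The `formats` counter makes the inconsistency visible at a glance, and any value counted under `None` is a candidate for the data quality rules or a dead-letter queue rather than silent conversion.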

Key Topics to Learn for Data Integration Patterns Interview

- ETL (Extract, Transform, Load) Processes: Understand the core stages, different ETL tools (e.g., Informatica, Talend), and their optimal application in various scenarios. Consider challenges like data cleansing and transformation strategies.

- Data Integration Architectures: Explore different architectural patterns such as hub-and-spoke, data virtualization, and message queues. Analyze their strengths and weaknesses in relation to scalability, performance, and maintainability.

- Data Synchronization Techniques: Master various methods for keeping data consistent across multiple systems, including change data capture (CDC), real-time synchronization, and batch processing. Discuss the trade-offs between consistency and performance.

- Data Quality and Governance: Learn about data profiling, cleansing, and validation techniques. Understand the role of data governance in ensuring data accuracy and compliance. Discuss strategies for handling data inconsistencies and errors.

- API-Based Integration: Explore REST and SOAP APIs, their functionalities, and how they’re used in data integration. Understand the design principles of robust and scalable APIs for data exchange.

- Cloud-Based Data Integration: Familiarize yourself with cloud-based data integration services offered by major providers (e.g., AWS, Azure, GCP). Understand serverless architectures and their implications for data integration projects.

- Data Modeling for Integration: Understand various data models (e.g., relational, NoSQL) and how they influence the design and implementation of data integration solutions. Discuss techniques for data transformation between different models.

- Problem-Solving and Troubleshooting: Practice identifying and resolving common data integration challenges such as data inconsistencies, performance bottlenecks, and error handling. Develop your approach to debugging and troubleshooting complex integration issues.

Next Steps

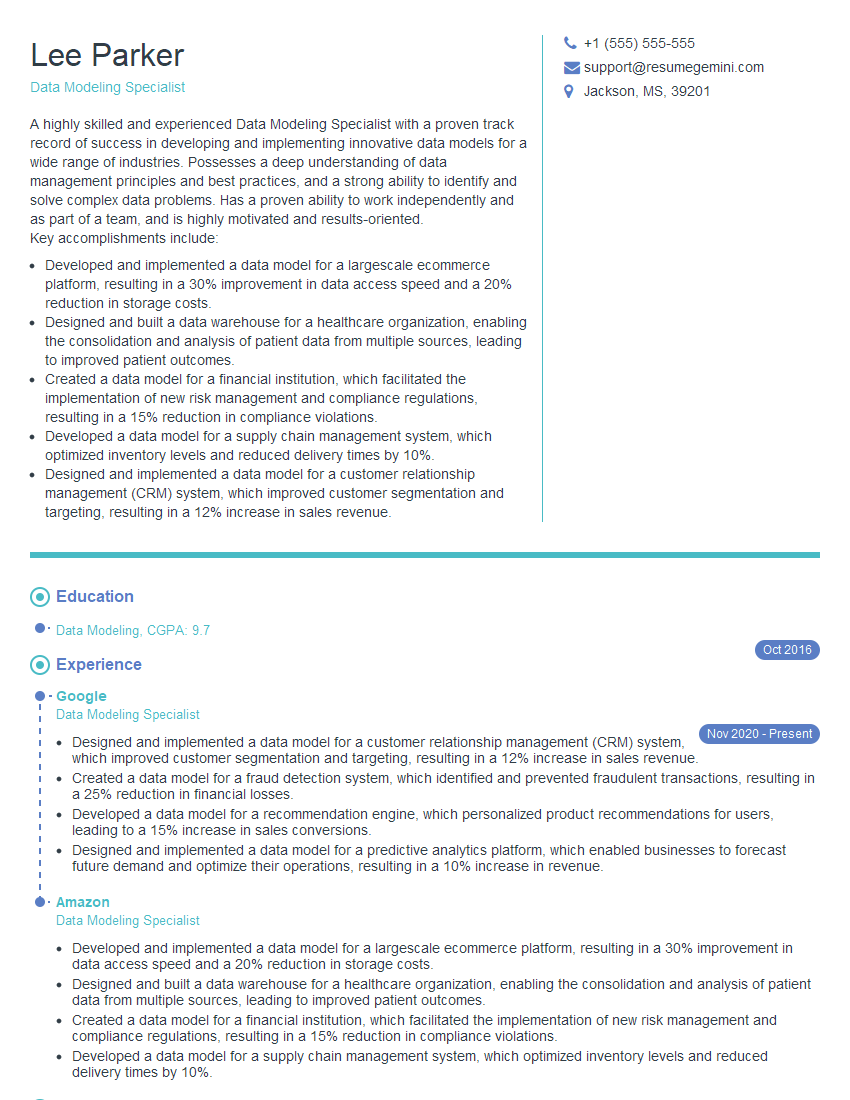

Mastering data integration patterns is crucial for career advancement in today’s data-driven world. It demonstrates a strong understanding of data management, architecture, and problem-solving, highly valued by employers. To significantly boost your job prospects, create an ATS-friendly resume that showcases your skills effectively. ResumeGemini is a trusted resource that can help you build a compelling and professional resume tailored to highlight your expertise in data integration. Examples of resumes optimized for Data Integration Patterns roles are available to guide you.
