Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important Database Connectivity and Management interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in Database Connectivity and Management Interview

Q 1. Explain the difference between clustered and non-clustered indexes.

Indexes are special lookup tables that the database search engine can use to speed up data retrieval. Think of them like the index in the back of a book – they point you to the right page (data row) quickly, instead of making you read every page.

A clustered index is a special type of index that defines the physical ordering of rows in a table. There can only be one clustered index per table. Imagine a library where books are sorted alphabetically by author’s last name; the physical placement of books reflects the author’s last name. That’s analogous to a clustered index. If you query by author’s last name, the database can very efficiently locate the relevant rows.

A non-clustered index, on the other hand, doesn’t change the physical order of the data. Instead, it creates a separate structure that contains the index key and a pointer to the actual row in the table. This is like having a separate alphabetical index in the book; it lists the topics and page numbers but doesn’t rearrange the pages themselves. You can have many non-clustered indexes on a single table.

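In short: A clustered index determines the order in which the table’s rows are stored, which makes range scans and lookups on the indexed columns especially efficient, and a table can have only one. Non-clustered indexes are separate structures that point back to the rows. A table can have many of them, each speeding up lookups on different columns, at the cost of an extra step to fetch any columns the index doesn’t contain.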
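SQLite doesn’t use the terms clustered and non-clustered, but it offers close analogues, so the distinction can be sketched with Python’s built-in sqlite3 module. In this minimal sketch (table, column, and index names are hypothetical), a WITHOUT ROWID table is stored as a B-tree ordered by its primary key, much like a table with a clustered index. CREATE INDEX then builds a separate B-tree of (indexed column, primary key) entries that points back to the rows. In SQL Server the corresponding statements would be CREATE CLUSTERED INDEX and CREATE NONCLUSTERED INDEX.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# WITHOUT ROWID: the table itself is a B-tree kept in primary-key order,
# SQLite's closest analogue to a table with a clustered index.
conn.execute("""
    CREATE TABLE books (
        author_last TEXT NOT NULL,
        title       TEXT NOT NULL,
        year        INTEGER,
        PRIMARY KEY (author_last, title)
    ) WITHOUT ROWID
""")
conn.executemany(
    "INSERT INTO books VALUES (?, ?, ?)",
    [("Orwell", "1984", 1949), ("Austen", "Emma", 1815), ("Tolkien", "The Hobbit", 1937)],
)

# Secondary (non-clustered) index: a separate B-tree of (year, primary key)
# entries. The rows in the table are not reordered.
conn.execute("CREATE INDEX idx_books_year ON books(year)")

def plan(sql, params=()):
    """Return the human-readable steps (column 3) of EXPLAIN QUERY PLAN."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

by_author = conn.execute("SELECT title FROM books WHERE author_last = ?", ("Austen",)).fetchall()
by_year = conn.execute("SELECT title FROM books WHERE year = ?", (1937,)).fetchall()
year_plan = plan("SELECT title FROM books WHERE year = ?", (1937,))
print(year_plan)  # the plan step should name idx_books_year
```

Because the index entries carry the primary key, a lookup by year finds the key in the index and then, if it needs more columns, follows that key into the table. This is the “extra hop” that distinguishes a non-clustered lookup.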

Q 2. Describe different types of database joins (INNER, LEFT, RIGHT, FULL).

Database joins combine rows from two or more tables based on a related column between them. Think of it like combining different pieces of information that belong together – customer details from one table and their order history from another. Let’s explore the different join types:

- INNER JOIN: Returns only the rows where the join condition is met in both tables. Imagine finding customers who have placed orders; only those customers appearing in both the customer and order tables are returned.

- LEFT (OUTER) JOIN: Returns all rows from the left table (the one named before LEFT JOIN), even if there’s no match in the right table. All customers are returned, whether or not they have any orders. Where no match exists in the right table, the right table’s columns contain NULL values.

- RIGHT (OUTER) JOIN: The mirror image of LEFT JOIN: it returns all rows from the right table, regardless of matches in the left table. All orders are returned, and an order with no matching customer row shows NULL in the customer columns.

- FULL (OUTER) JOIN: Returns all rows from both tables, combining the results of LEFT JOIN and RIGHT JOIN. All customers and all orders are included, and unmatched rows have NULL values in the columns from the other table.
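To see the join types side by side, here is a minimal, self-contained sketch using Python’s sqlite3 module and a few made-up rows. RIGHT and FULL OUTER JOIN only arrived in SQLite 3.39, so to run on older builds the sketch emulates them. A RIGHT JOIN is written as a LEFT JOIN with the tables swapped, and a FULL JOIN as a LEFT JOIN plus the right-side rows that have no match.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, Name TEXT);
CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, CustomerID INTEGER, Amount REAL);
INSERT INTO Customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
-- Order 13 points at a customer that does not exist.
INSERT INTO Orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0), (13, 99, 5.0);
""")

def rows(sql):
    return conn.execute(sql).fetchall()

# INNER: only customers that have orders (and orders that have customers).
inner = rows("""SELECT c.Name, o.OrderID FROM Customers c
                INNER JOIN Orders o ON c.CustomerID = o.CustomerID
                ORDER BY o.OrderID""")

# LEFT: every customer; Linus has no orders, so OrderID is NULL (None).
left = rows("""SELECT c.Name, o.OrderID FROM Customers c
               LEFT JOIN Orders o ON c.CustomerID = o.CustomerID
               ORDER BY c.CustomerID, o.OrderID""")

# RIGHT, emulated: every order; order 13 has no customer, so Name is NULL.
right = rows("""SELECT c.Name, o.OrderID FROM Orders o
                LEFT JOIN Customers c ON c.CustomerID = o.CustomerID
                ORDER BY o.OrderID""")

# FULL, emulated: the LEFT JOIN rows plus orders with no matching customer.
full = rows("""SELECT c.Name, o.OrderID FROM Customers c
               LEFT JOIN Orders o ON c.CustomerID = o.CustomerID
               UNION ALL
               SELECT NULL, o.OrderID FROM Orders o
               WHERE NOT EXISTS (SELECT 1 FROM Customers c WHERE c.CustomerID = o.CustomerID)""")

print(inner, left, right, full, sep="\n")
```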

Example (SQL):

SELECT * FROM Customers INNER JOIN Orders ON Customers.CustomerID = Orders.CustomerID;

Q 3. What is normalization and why is it important?

Normalization is a database design technique aimed at organizing data to reduce redundancy and improve data integrity. It’s like tidying up your closet – you separate your clothes by type and season to make it easier to find what you need and avoid clutter.

Redundancy leads to several problems: wasted storage space, inconsistent data (different entries for the same information), and update anomalies (changes need to be made in multiple places, leading to errors). Normalization eliminates or minimizes these issues by following specific rules (normal forms), such as separating data into different related tables, and defining relationships between them.

Why is it important?

- Data Integrity: Ensures data accuracy and consistency.

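- Efficiency: Reduces storage space and makes writes cheaper, since each fact is updated in only one place. (Read queries may need more joins, which is why some read-heavy systems selectively denormalize.)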

- Maintainability: Makes it easier to update, modify, and maintain the database.

Different normal forms (1NF, 2NF, 3NF, etc.) have specific guidelines to address different levels of redundancy. A well-normalized database provides a robust and reliable foundation for applications.
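The redundancy problem can be made concrete with a small Python sketch (the field names are hypothetical). It splits flat order records, which repeat the customer’s name and city on every order, into a customers table keyed by ID and an orders table that keeps only a foreign key. It also rejects input where two copies of the same customer disagree, which is exactly the inconsistency normalization is meant to rule out.

```python
def normalize(flat_rows):
    """Split flat order rows into (customers by id, orders with a foreign key)."""
    customers = {}
    orders = []
    for row in flat_rows:
        cid = row["customer_id"]
        details = {"name": row["customer_name"], "city": row["customer_city"]}
        # Redundant copies must agree; a mismatch is an update anomaly.
        if cid in customers and customers[cid] != details:
            raise ValueError(f"conflicting details for customer {cid}")
        customers[cid] = details  # each customer's attributes stored once
        # The order keeps only the customer_id reference, not the details.
        orders.append({"order_id": row["order_id"], "customer_id": cid, "total": row["total"]})
    return customers, orders

flat_orders = [
    {"order_id": 1, "customer_id": 7, "customer_name": "Ada", "customer_city": "London", "total": 25.0},
    {"order_id": 2, "customer_id": 7, "customer_name": "Ada", "customer_city": "London", "total": 40.0},
    {"order_id": 3, "customer_id": 9, "customer_name": "Grace", "customer_city": "Paris", "total": 15.0},
]
customers, orders = normalize(flat_orders)
print(customers)  # {7: {'name': 'Ada', 'city': 'London'}, 9: {'name': 'Grace', 'city': 'Paris'}}
```

After the split, changing Ada’s city is a single update to customers[7] instead of one edit per order row.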

Q 4. Explain ACID properties in the context of database transactions.

ACID properties are a set of four characteristics that guarantee reliable database transactions. They are crucial for maintaining data consistency and integrity, especially in situations involving concurrent access or failures.

- Atomicity: A transaction is treated as a single unit of work. Either all operations within the transaction are completed successfully, or none are. It’s like an all-or-nothing approach – if one part fails, the entire transaction is rolled back.

- Consistency: A transaction must preserve the database’s integrity constraints, moving the database from one valid state to another. Think of it like a well-organized room: whatever you rearrange, the room must be organized again by the time you finish.

- Isolation: Concurrent transactions should not interfere with each other. Each transaction should behave as if it’s the only one operating on the database. This is especially important in multi-user environments.

- Durability: Once a transaction is committed, the changes are permanent and survive even system failures. It’s like writing data in stone – once it’s recorded, it cannot easily be lost.

These properties ensure that database operations are reliable and predictable, even in complex scenarios.
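Atomicity is easy to observe with Python’s sqlite3 module. In this sketch (table and account names are made up), a transfer credits one account and then debits the other. If the debit would break the CHECK constraint, the connection’s context manager rolls back the whole transaction, including the credit that had already succeeded.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.execute("INSERT INTO accounts VALUES ('alice', 100), ('bob', 50)")
conn.commit()

def transfer(conn, src, dst, amount):
    """Credit dst, then debit src, as one atomic unit. Returns True if committed."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:  # the CHECK (balance >= 0) was violated
        return False

def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts"))

ok = transfer(conn, "alice", "bob", 30)       # committed
failed = transfer(conn, "bob", "alice", 500)  # debit fails, so the credit is undone too
print(ok, failed, balances(conn))
```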

Q 5. How do you handle database deadlocks?

Database deadlocks occur when two or more transactions are blocked indefinitely, waiting for each other to release resources. Imagine two people trying to pass each other in a narrow hallway; neither can move until the other does, creating a deadlock.

Handling Deadlocks:

- Prevention: Careful database design and transaction management can help prevent deadlocks. This might involve ordering resource access consistently (always acquiring locks in the same order).

- Detection and Rollback: Database systems typically have deadlock detection mechanisms. When a deadlock is detected, one or more of the involved transactions are rolled back (aborted), freeing the resources and allowing others to proceed. This is usually the most common solution.

- Timeout: Setting lock timeouts prevents indefinite waiting. If a transaction cannot acquire a lock within the specified time, the statement fails with a timeout error, and the application typically rolls back and retries the transaction.

The best approach is often a combination of these techniques. Designing transactions that acquire resources in a predictable manner can significantly reduce the likelihood of deadlocks.
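The lock-ordering idea behind prevention can be shown outside a database with Python threads; this is an in-process analogy, not a DBMS feature. Two threads transfer money in opposite directions. Because each transfer always locks the account with the smaller (hypothetical) id first, neither thread can hold one lock while waiting for the other, so both finish.

```python
import threading

class Account:
    def __init__(self, account_id, balance):
        self.id = account_id
        self.balance = balance
        self.lock = threading.Lock()

def transfer(src, dst, amount):
    # Always lock the lower id first, whatever the transfer direction.
    first, second = sorted((src, dst), key=lambda acct: acct.id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount

def run_transfers(src, dst, times):
    for _ in range(times):
        transfer(src, dst, 1)

a, b = Account(1, 1000), Account(2, 1000)
threads = [
    threading.Thread(target=run_transfers, args=(a, b, 10_000), daemon=True),
    threading.Thread(target=run_transfers, args=(b, a, 10_000), daemon=True),
]
for t in threads:
    t.start()
for t in threads:
    t.join(timeout=10)  # a deadlock would show up as a thread still alive here
print([t.is_alive() for t in threads], a.balance, b.balance)
```

If each transfer instead locked src before dst, each thread could grab its first lock and wait forever for the second, which is the hallway standoff described above.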

Q 6. What are stored procedures and how are they beneficial?

Stored procedures are named blocks of SQL code stored on the database server; most systems compile them and cache their execution plans. They encapsulate a set of SQL statements and can accept input parameters and return output values. Think of them as reusable, modular units of database functionality.

Benefits:

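- Performance: Execution plans can be cached and reused, and running several statements on the server cuts network round trips between the application and the database.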

- Security: Stored procedures can help enforce security policies by controlling access to underlying data.

- Maintainability: Easier to modify and maintain than scattered SQL code.

- Modularity: Promotes code reusability and simplifies application development.

- Data Integrity: Enforces business rules and data constraints through the stored procedure logic.

Example (SQL):

CREATE PROCEDURE GetCustomerOrders (@CustomerID INT)
AS
BEGIN
    SELECT * FROM Orders WHERE CustomerID = @CustomerID;
END;

This procedure (written in SQL Server’s T-SQL syntax) retrieves all orders for a specific customer ID. The @CustomerID is an input parameter.

Q 7. What are triggers and how do they work?

Database triggers are stored procedures automatically executed in response to certain events on a particular table or view. They act like event listeners, performing actions whenever data is inserted, updated, or deleted.

How they work:

A trigger is associated with a specific table and a specific event (INSERT, UPDATE, DELETE). When the event occurs, the database system automatically executes the trigger’s code. Triggers can perform various actions, including data validation, auditing, and cascading updates.

Example (Scenario):

Imagine an inventory management system. You might have a trigger on the Orders table that automatically updates the Inventory table when an order is placed, decreasing the quantity of the ordered items. This ensures data consistency across the two tables without requiring explicit code in the application.

Triggers are powerful but should be used judiciously, as they can affect database performance if not designed and implemented carefully. It’s important to consider potential performance implications, particularly for complex triggers affecting large datasets.
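The inventory scenario above can be sketched as an SQLite trigger driven from Python’s sqlite3 module. Table, column, and trigger names are hypothetical, and trigger syntax differs between database systems. Each inserted order decrements stock. If that would drive stock below zero, the CHECK constraint fails inside the trigger and the whole INSERT is rejected.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Inventory (
    product_id INTEGER PRIMARY KEY,
    quantity   INTEGER NOT NULL CHECK (quantity >= 0)
);
CREATE TABLE Orders (
    order_id   INTEGER PRIMARY KEY,
    product_id INTEGER NOT NULL,
    quantity   INTEGER NOT NULL
);

-- Fires once per inserted order row and decrements stock for that product.
CREATE TRIGGER trg_orders_decrement_stock
AFTER INSERT ON Orders
FOR EACH ROW
BEGIN
    UPDATE Inventory
    SET quantity = quantity - NEW.quantity
    WHERE product_id = NEW.product_id;
END;

INSERT INTO Inventory VALUES (1, 10), (2, 3);
""")

conn.execute("INSERT INTO Orders (product_id, quantity) VALUES (1, 4)")
conn.commit()

try:
    # Would leave product 2 at -2 stock: the trigger's UPDATE fails the CHECK,
    # so SQLite rejects the INSERT as a whole.
    conn.execute("INSERT INTO Orders (product_id, quantity) VALUES (2, 5)")
    conn.commit()
    oversold_rejected = False
except sqlite3.IntegrityError:
    conn.rollback()
    oversold_rejected = True

stock = dict(conn.execute("SELECT product_id, quantity FROM Inventory"))
print(stock, oversold_rejected)  # {1: 6, 2: 3} True
```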

Q 8. Explain the concept of database views.

Database views are essentially virtual tables based on the result-set of an SQL statement. They don’t store data themselves; instead, they provide a customized perspective or subset of data from one or more underlying base tables. Think of them as pre-defined queries that simplify data access and provide a layer of abstraction.

Benefits of using views:

- Data simplification: Views can present complex data in a more manageable and understandable format, hiding the underlying complexity from the user.

- Data security: Views can restrict access to sensitive data by only exposing specific columns or rows from the base tables. A user might only see the data relevant to their role, even if they don’t have permission to access the entire table.

- Logical data independence: If the underlying tables are restructured, the view definition can be updated so that queries written against the view keep working unchanged. This shields applications and reports from schema changes.

- Query simplification: Complex queries can be encapsulated within a view, making it easier for other users (or even the same user later on) to access and reuse that data.
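Here is a minimal sketch of the simplification and security points above, using Python’s sqlite3 module with hypothetical table and column names. The view exposes only non-sensitive columns, and because a view stores no rows of its own, a row added to the base table appears through the view immediately. SQLite has no GRANT statement; in a server database you would grant users SELECT on the view rather than on the base table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Customers (
    customerId   INTEGER PRIMARY KEY,
    customerName TEXT,
    email        TEXT,
    creditCard   TEXT
);
INSERT INTO Customers VALUES (1, 'Ada', 'ada@example.com', '4111-0000-0000-0001');

-- Exposes only non-sensitive columns; creditCard is not part of the view.
CREATE VIEW CustomerDirectory AS
    SELECT customerId, customerName, email FROM Customers;
""")

# Column names visible through the view.
cols = [d[0] for d in conn.execute("SELECT * FROM CustomerDirectory").description]

# The view is evaluated on each query, so new base-table rows show up at once.
conn.execute("INSERT INTO Customers VALUES (2, 'Grace', 'grace@example.com', '4111-0000-0000-0002')")
names = [r[0] for r in conn.execute("SELECT customerName FROM CustomerDirectory ORDER BY customerId")]
print(cols, names)
```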

Example: Imagine an e-commerce database with a ‘Customers’ table and an ‘Orders’ table. We could create a view called ‘CustomerOrderSummary’ that shows each customer’s name and their total order value. This view simplifies the process for reporting on customer spending, avoiding the need to write a complex JOIN query every time.

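CREATE VIEW CustomerOrderSummary AS
SELECT c.customerId, c.customerName, SUM(o.orderTotal) AS totalSpent
FROM Customers c
JOIN Orders o ON c.customerId = o.customerId
GROUP BY c.customerId, c.customerName;

Grouping by customerId as well as customerName keeps two different customers who share a name from being merged into one row.

Q 9. How do you optimize database queries for performance?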

Optimizing database queries for performance is crucial for application responsiveness and scalability. It involves analyzing query execution plans and identifying bottlenecks. Here are some key strategies:

- Indexing: Create indexes on frequently queried columns. Indexes are like the index in a book – they speed up searches significantly. However, overuse can slow down data modification operations, so choose wisely.

- Query Rewriting: Rewrite inefficient queries to use more efficient SQL constructs. For example, avoid SELECT *; select only the columns you need.

- Data Type Optimization: Ensure data types are appropriate for the data. Choosing overly large data types consumes unnecessary space and can slow down operations.

- Proper use of Joins: Avoid Cartesian products (joins without proper join conditions). Understand the different types of joins (INNER, LEFT, RIGHT, FULL) and use the one best suited to your needs. Consider using indexes on join columns.

- Database Caching: Leverage database caching mechanisms to store frequently accessed data in memory for faster retrieval. Many database systems have built-in caching capabilities.

- Query Planning and Execution Analysis: Use your database system’s tools to analyze query execution plans. These tools show how the database plans to execute the query, allowing you to identify performance bottlenecks and optimize accordingly.

- Normalization: A properly normalized schema reduces redundancy, keeps writes small (each fact is updated in one place), and saves storage. For read-heavy reporting workloads, selective denormalization can reduce the number of joins a query needs, so choose based on the workload.

- Partitioning: Divide large tables into smaller, more manageable partitions based on some criteria (e.g., date, region). Queries that filter on the partition key can skip irrelevant partitions entirely (partition pruning), and some systems can scan partitions in parallel, which is especially useful for large datasets.

Example of inefficient query: SELECT * FROM Customers WHERE city = 'London'; (selects all columns, inefficient if only a few are needed)

Improved query: SELECT customerId, customerName, email FROM Customers WHERE city = 'London'; (selects only necessary columns).
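The execution-plan advice and the indexing advice can be tried together in a few lines. This sketch uses Python’s sqlite3 module and SQLite’s EXPLAIN QUERY PLAN (other systems use EXPLAIN or EXPLAIN ANALYZE with different output); the table contents and index name are made up. It checks the plan for the improved query above before and after adding an index on city.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Customers (customerId INTEGER PRIMARY KEY, customerName TEXT, email TEXT, city TEXT)"
)
conn.executemany(
    "INSERT INTO Customers (customerName, email, city) VALUES (?, ?, ?)",
    [(f"user{i}", f"user{i}@example.com", "London" if i % 10 == 0 else "Paris") for i in range(1000)],
)

QUERY = "SELECT customerId, customerName, email FROM Customers WHERE city = ?"

def plan(sql, params=()):
    """Return the human-readable steps (column 3) of EXPLAIN QUERY PLAN."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

before = plan(QUERY, ("London",))  # expect a full SCAN of Customers
conn.execute("CREATE INDEX idx_customers_city ON Customers(city)")
after = plan(QUERY, ("London",))   # expect a SEARCH using idx_customers_city
london = conn.execute(QUERY, ("London",)).fetchall()
print(before, after, len(london))
```

The exact wording of plan steps varies between SQLite versions, so it is safer to look for the index name than to compare whole strings.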

Q 10. What are database constraints and give examples.

Database constraints are rules enforced by the database management system (DBMS) to ensure data integrity and consistency. They prevent invalid or inconsistent data from being entered into the database.

- NOT NULL: Ensures that a column cannot contain NULL values.

e.g., CREATE TABLE Employees (employeeID INT NOT NULL, ...);

- UNIQUE: Ensures that all values in a column are distinct. Often used for natural identifiers other than the primary key, such as usernames or email addresses.

e.g., CREATE TABLE Users (username VARCHAR(255) UNIQUE, ...);

- PRIMARY KEY: Uniquely identifies each row in a table. It combines the NOT NULL and UNIQUE constraints, and a table can have only one.

e.g., CREATE TABLE Products (productID INT PRIMARY KEY, ...);

- FOREIGN KEY: Creates a link between two tables. It ensures that each value in a column of one table matches a value in the primary key (or another unique) column of the referenced table.

e.g., CREATE TABLE Orders (orderID INT PRIMARY KEY, customerID INT, FOREIGN KEY (customerID) REFERENCES Customers(customerID));

- CHECK: Ensures that values in a column satisfy a specific condition.

e.g., CREATE TABLE Ages (age INT CHECK (age >= 0));

- DEFAULT: Specifies a default value for a column if no value is provided during insertion.

e.g., CREATE TABLE Products (productStatus VARCHAR(20) DEFAULT 'Active');

Constraints are crucial for maintaining data quality and preventing errors, leading to a more reliable and robust database system.
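A quick way to see constraints at work is to break them on purpose. This sketch uses Python’s sqlite3 module with hypothetical tables and a hypothetical helper, violates(). Note that SQLite enforces FOREIGN KEY constraints only after PRAGMA foreign_keys = ON, while most server databases enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite-specific: enable FK enforcement
conn.executescript("""
CREATE TABLE Customers (
    customerID INTEGER PRIMARY KEY,
    email      TEXT NOT NULL UNIQUE
);
CREATE TABLE Orders (
    orderID    INTEGER PRIMARY KEY,
    customerID INTEGER NOT NULL REFERENCES Customers(customerID),
    quantity   INTEGER CHECK (quantity > 0),
    status     TEXT DEFAULT 'Active'
);
INSERT INTO Customers VALUES (1, 'ada@example.com');
""")

def violates(sql, params=()):
    """Return True if the statement is rejected by a constraint."""
    try:
        conn.execute(sql, params)
        return False
    except sqlite3.IntegrityError:
        return True

results = {
    "not_null": violates("INSERT INTO Customers (customerID, email) VALUES (2, NULL)"),
    "unique": violates("INSERT INTO Customers VALUES (3, 'ada@example.com')"),
    "check": violates("INSERT INTO Orders (customerID, quantity) VALUES (1, 0)"),
    "foreign_key": violates("INSERT INTO Orders (customerID, quantity) VALUES (99, 1)"),
}

# No status supplied, so the DEFAULT applies.
conn.execute("INSERT INTO Orders (orderID, customerID, quantity) VALUES (10, 1, 2)")
status = conn.execute("SELECT status FROM Orders WHERE orderID = 10").fetchone()[0]
print(results, status)
```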

Q 11. Describe different types of database backups and recovery strategies.

Database backups are copies of your database that allow you to recover data in case of data loss. Recovery strategies define how you use these backups to restore your database. Different types of backups cater to different needs and recovery times.

- Full Backup: A complete copy of the entire database. It’s the most comprehensive but also the slowest and most space-consuming.

- Incremental Backup: A backup of only the data that has changed since the last backup of any type (full or incremental). It’s the fastest and smallest backup to take, but a restore needs the last full backup plus every incremental taken after it, applied in order.

- Differential Backup: A backup of all data that has changed since the last full backup. Each differential grows until the next full backup, so it is usually larger and slower to take than an incremental. Restoring is faster, though, because only the last full backup and the latest differential are needed.

- Transaction Log Backups: Backups of the transaction log, which records all database modifications. These are critical for point-in-time recovery, restoring the database to a specific point in time before a failure.

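Recovery Strategies (the terms below are SQL Server’s recovery models):

- Full Recovery: All operations are fully logged and the log is backed up regularly. Restoring a full backup plus all subsequent transaction log backups allows recovery to any point in time.

- Bulk-Logged Recovery: Like full recovery, but bulk operations (such as large imports or index rebuilds) are minimally logged. This speeds up those operations at the cost of point-in-time recovery within any log backup that contains bulk-logged changes.

- Simple Recovery: The transaction log is truncated automatically and cannot be backed up, so you can restore only to the most recent full or differential backup. It has the least log-management overhead but offers the least recovery flexibility.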

Choosing the right backup and recovery strategy depends on factors like the criticality of the data, the recovery time objective (RTO), and the recovery point objective (RPO).
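The restore trade-off between incremental and differential backups comes down to which backups you need. This Python sketch computes that chain under stated assumptions. A differential contains changes since the last full backup, an incremental contains changes since the previous backup of any kind, and integer days stand in for backup timestamps. Transaction log backups are ignored, and the Backup type and restore_chain function are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Backup:
    day: int
    kind: str  # "full", "differential", or "incremental"

def restore_chain(backups, target_day):
    """Backups to restore, in order, to recover the state as of target_day."""
    usable = sorted((b for b in backups if b.day <= target_day), key=lambda b: b.day)
    fulls = [b for b in usable if b.kind == "full"]
    if not fulls:
        raise ValueError("no full backup on or before target_day")
    base = fulls[-1]  # always start from the most recent full backup
    chain = [base]
    later = [b for b in usable if b.day > base.day]
    diffs = [b for b in later if b.kind == "differential"]
    if diffs:
        chain.append(diffs[-1])  # only the newest differential is needed
    last_day = chain[-1].day
    # Every incremental after that point is needed; none can be skipped.
    chain.extend(b for b in later if b.kind == "incremental" and b.day > last_day)
    return chain

with_incrementals = [Backup(0, "full")] + [Backup(d, "incremental") for d in range(1, 6)]
with_differentials = [Backup(0, "full")] + [Backup(d, "differential") for d in range(1, 6)]
print(len(restore_chain(with_incrementals, 5)))  # the full plus five incrementals
print(restore_chain(with_differentials, 5))      # the full plus only the day-5 differential
```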

Q 12. How do you monitor database performance?

Monitoring database performance is essential for proactive issue detection and optimization. It involves tracking various metrics to identify bottlenecks and potential problems.

- Query Performance: Monitor the execution time of queries, especially slow-running queries. This can be done using database monitoring tools and query profiling.

- Resource Utilization: Track CPU usage, memory usage, disk I/O, and network traffic. High resource utilization can indicate bottlenecks or performance issues.

- Transaction Logs: Monitor the size and growth rate of transaction logs. Rapidly growing logs may indicate excessive changes or issues.

- Deadlocks and Blocking: Detect and resolve deadlocks and blocking situations, which occur when multiple transactions interfere with each other.

- Connection Pool Usage: Monitor the usage of connection pools to ensure they are sized appropriately and not causing contention.

- Error Rates: Track error rates to identify potential issues in the database system or applications interacting with the database.

- Wait Statistics: Analyze wait statistics to find out what resources database processes are waiting for.

Most database systems provide built-in monitoring tools or support integration with third-party monitoring software. Regular performance monitoring is critical for maintaining a healthy and efficient database.
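Query timing can also be captured in the application layer. The hypothetical TimedConnection wrapper below, a sketch built on Python’s sqlite3 module, times each statement and keeps those above a threshold so they can be logged or exported to a monitoring system. A threshold of 0.0 records everything, which is handy for a demo.

```python
import sqlite3
import time

class TimedConnection:
    """Hypothetical wrapper that records statements slower than a threshold."""

    def __init__(self, conn, slow_threshold_s=0.5):
        self.conn = conn
        self.slow_threshold_s = slow_threshold_s
        self.slow_queries = []  # list of (sql, elapsed_seconds)

    def query(self, sql, params=()):
        start = time.perf_counter()
        # Fetch all rows so the timing covers execution and row retrieval.
        rows = self.conn.execute(sql, params).fetchall()
        elapsed = time.perf_counter() - start
        if elapsed >= self.slow_threshold_s:
            self.slow_queries.append((sql, elapsed))
        return rows

db = TimedConnection(sqlite3.connect(":memory:"), slow_threshold_s=0.0)
rows = db.query("SELECT 1")
print(rows, db.slow_queries)
```

In production you would usually pair this with the database’s own tooling (slow query logs, statistics views), since the wrapper only sees queries issued through it.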

Q 13. Explain different database replication methods.

Database replication involves creating copies of data on multiple servers. This enhances availability, scalability, and performance. Several methods exist:

- Asynchronous Replication: The primary commits locally and ships changes to secondary servers afterward. Commits are faster, but replicas lag behind, so a read from a replica may return stale data, and a primary failure can lose the most recently committed changes.

- Synchronous Replication: The primary waits until the secondary servers acknowledge each change before confirming the commit. This gives stronger data consistency and no loss of committed data on failover, at the cost of higher write latency.

- Master-Slave Replication: One server (the master) acts as the source of data, and changes are copied to one or more slave servers. This is a common approach for read scaling.

- Multi-Master Replication: Multiple servers can act as masters, allowing for independent data modification on each server. Data conflicts need to be resolved, typically using conflict resolution strategies.

- Circular Replication: Changes are propagated in a circular fashion among multiple servers. This distributes the load and enhances redundancy.

The choice of replication method depends on the specific application requirements. Synchronous replication suits applications that cannot tolerate losing committed data, provided they can absorb the extra write latency. Asynchronous replication is a better fit where eventual consistency is acceptable and low write latency and high throughput matter most.
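The difference between synchronous and asynchronous replication is mostly about when a write is acknowledged. This toy in-memory Python model (class and method names are hypothetical; there is no network, failure handling, or conflict resolution) shows the resulting replication lag. In asynchronous mode, a replica can serve stale data until pending changes are shipped.

```python
class Replica:
    def __init__(self):
        self.data = {}

    def apply(self, key, value):
        self.data[key] = value

class Primary:
    """Toy primary that ships each write to replicas synchronously or asynchronously."""

    def __init__(self, replicas, synchronous):
        self.data = {}
        self.replicas = replicas
        self.synchronous = synchronous
        self.pending = []  # writes not yet shipped (async mode only)

    def write(self, key, value):
        self.data[key] = value
        if self.synchronous:
            # The write is not acknowledged until every replica has applied it.
            for replica in self.replicas:
                replica.apply(key, value)
        else:
            # Acknowledge immediately; replicas catch up later.
            self.pending.append((key, value))

    def ship_pending(self):
        for key, value in self.pending:
            for replica in self.replicas:
                replica.apply(key, value)
        self.pending.clear()

async_replica = Replica()
async_primary = Primary([async_replica], synchronous=False)
async_primary.write("x", 1)
stale = async_replica.data.get("x")      # replica has not seen the write yet
async_primary.ship_pending()
caught_up = async_replica.data.get("x")

sync_replica = Replica()
Primary([sync_replica], synchronous=True).write("x", 1)
print(stale, caught_up, sync_replica.data)
```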

Q 14. What is database sharding?

Database sharding, also known as horizontal partitioning, is a technique for dividing a large database into smaller, more manageable parts called shards. Each shard is stored on a separate database server. This distributes the data across multiple servers, improving scalability, performance, and availability.

How it works: A sharding key is used to determine which shard a specific piece of data belongs to. For example, a user ID could be used as a sharding key, with user IDs 1-1000 on shard A, 1001-2000 on shard B, and so on. Queries are then routed to the appropriate shard based on the sharding key.

Benefits:

- Scalability: Adding more shards is simpler than scaling a single, massive database.

- Performance: Queries are executed on a smaller dataset within a single shard.

- Availability: A failure of one shard makes only that shard’s data unavailable, rather than taking down the entire database.

Challenges:

- Shard Key Selection: Choosing an appropriate sharding key that distributes the data evenly across the shards is crucial.

- Data Distribution: Maintaining an even distribution of data across the shards is essential for optimal performance.

- Cross-Shard Queries: Queries that span multiple shards must be sent to each relevant shard and their results merged, and writes that touch several shards need distributed transactions or careful application-level coordination.

Sharding is a powerful technique for managing extremely large databases, but it introduces complexities that must be carefully considered in the design and implementation phases.
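Routing by sharding key can be sketched in a few lines of Python. The shard names and functions are hypothetical. The range router follows the user ID example above (1 to 1000 on one shard, 1001 to 2000 on the next). The hash router is a common alternative that spreads sequential IDs evenly across shards.

```python
import bisect
import hashlib

# Range sharding: inclusive upper bound of user IDs for each shard.
RANGE_UPPER_BOUNDS = [1000, 2000, 3000]
RANGE_SHARDS = ["shard_a", "shard_b", "shard_c"]

def range_shard(user_id):
    """Pick the first shard whose upper bound is >= user_id."""
    i = bisect.bisect_left(RANGE_UPPER_BOUNDS, user_id)
    if i == len(RANGE_SHARDS):
        raise ValueError(f"no shard covers user_id {user_id}")
    return RANGE_SHARDS[i]

def hash_shard(user_id, shards):
    """Hash sharding: a stable hash of the key, modulo the number of shards."""
    # sha256 rather than hash(), which is randomized per process for strings.
    digest = hashlib.sha256(str(user_id).encode()).digest()
    return shards[int.from_bytes(digest[:8], "big") % len(shards)]

print(range_shard(1), range_shard(1000), range_shard(1001))  # shard_a shard_a shard_b
```

Simple modulo hashing remaps most keys when the number of shards changes; consistent hashing is the usual way to limit that data movement.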

Q 15. How do you ensure database security?

Database security is paramount, and ensuring it involves a multi-layered approach. Think of it like protecting a valuable castle: you need strong walls, vigilant guards, and secure entry points. In the database world, this translates to several key strategies.

- Access Control: This is fundamental. We use robust authentication mechanisms (like strong passwords and multi-factor authentication) to verify user identities. Then, we implement authorization, defining what each user or role can access (e.g., read-only access, write access, administrative privileges). Think of this as assigning specific keys to different guards, allowing them to access only their designated areas.

- Data Encryption: Encrypting data both in transit (while it’s traveling across networks) and at rest (when it’s stored) is crucial. This is like locking valuable items in chests within the castle. Even if someone breaches the walls, they can’t easily access the contents without the key (the encryption key).

- Regular Audits and Monitoring: Continuous monitoring of database activity helps detect suspicious behavior, such as unauthorized access attempts or unusual data modifications. This is like having security patrols within the castle, always vigilant for any suspicious activity.

- Input Validation and Parameterized Queries: SQL injection is a common attack in which malicious input is spliced into a query’s text. It is prevented primarily by using parameterized queries (prepared statements), which send user input to the database as data rather than as SQL; validating input is a useful additional layer. This is like carefully inspecting all packages entering the castle to ensure they contain nothing dangerous.

- Database Patching and Updates: Keeping the database software up-to-date with the latest security patches closes known vulnerabilities. This is akin to regularly reinforcing the castle walls to withstand new attack methods.

- Principle of Least Privilege: Granting users only the minimum necessary permissions helps limit the damage from potential breaches. If a guard is compromised, they can’t access more of the castle than they are authorized for.

By combining these strategies, we create a robust defense against threats and protect sensitive data.
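The difference between building SQL from strings and using parameters is easiest to see side by side. In this sqlite3 sketch (table and function names are hypothetical), the classic payload x' OR '1'='1 turns the string-built query into one that matches every row. The parameterized version treats the same input as an ordinary username that matches nothing.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "alice@example.com"), ("bob", "bob@example.com")],
)

def find_user_unsafe(name):
    # Vulnerable: user input becomes part of the SQL text.
    return conn.execute(f"SELECT username FROM users WHERE username = '{name}'").fetchall()

def find_user_safe(name):
    # Parameterized: the driver sends `name` as a value, never as SQL.
    return conn.execute("SELECT username FROM users WHERE username = ?", (name,)).fetchall()

payload = "x' OR '1'='1"
print(len(find_user_unsafe(payload)), len(find_user_safe(payload)))  # 2 0
```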

Q 16. What are the advantages and disadvantages of NoSQL databases?

NoSQL databases offer a different approach to data management compared to traditional relational (SQL) databases. They are designed for flexibility and scalability, particularly with large volumes of unstructured or semi-structured data. Let’s look at the advantages and disadvantages:

- Advantages:

- Scalability: NoSQL databases can easily scale horizontally by adding more servers to handle growing data volumes and user traffic. Imagine expanding a warehouse by adding more storage units instead of building a larger, single warehouse.

- Flexibility: They can handle various data models (key-value, document, graph, etc.), accommodating diverse data structures. This is like having different compartments in a warehouse to store various types of goods.

- Performance: For specific use cases, NoSQL databases can be significantly faster than relational databases, particularly for read-heavy applications. This is analogous to having a well-organized warehouse allowing quick retrieval of goods.

- Disadvantages:

- Data Consistency: NoSQL databases may not always offer the same level of data consistency as relational databases, depending on the specific database system used. This could lead to potential data discrepancies if not carefully managed.

- ACID Properties: The strict ACID properties (Atomicity, Consistency, Isolation, Durability) which guarantee reliable transactions in relational databases are often relaxed or not fully implemented in NoSQL databases. This is a critical consideration for financial transactions or similar use cases.

- Complex Queries: Performing complex joins or other sophisticated queries can be more challenging in NoSQL databases compared to relational databases.

The choice between SQL and NoSQL depends heavily on the specific application requirements. NoSQL is a great fit for applications needing high scalability and flexibility, while relational databases are better suited for applications demanding strict data consistency and complex transactional operations.

Q 17. Explain the difference between OLTP and OLAP databases.

OLTP (Online Transaction Processing) and OLAP (Online Analytical Processing) databases serve different purposes and have distinct characteristics. Think of OLTP as handling daily business transactions and OLAP as providing insights from historical data.

- OLTP: Designed for high-speed transaction processing, handling many short, simple queries. Examples include ATM withdrawals, online shopping orders, or booking flights. These databases prioritize data integrity and transactional consistency (ACID properties). The structure is typically normalized to reduce data redundancy.

- OLAP: Focuses on complex analytical queries, data mining, and business intelligence. It uses large historical datasets and performs aggregations, summarizations, and trend analysis. Imagine analyzing sales data over the past five years to identify seasonal trends. OLAP databases often utilize data warehousing techniques and prioritize fast query response times even on massive datasets. They might use denormalized structures to speed up queries.

In short, OLTP is about handling individual transactions efficiently, while OLAP is about analyzing aggregated data to gain insights. They often work together: data from OLTP systems is frequently loaded into an OLAP data warehouse for analysis.

Q 18. What is data warehousing?

A data warehouse is a central repository of integrated data from multiple sources, designed for analytical processing and business intelligence. It’s like a giant, organized library of historical data, specifically crafted for insightful analysis rather than daily operational tasks. Data is extracted from various operational systems (OLTP databases, spreadsheets, etc.), transformed to a consistent format, and loaded into the warehouse for querying and reporting.

Key characteristics include:

- Subject-oriented: Data is organized around business subjects (e.g., customers, products, sales) rather than operational processes.

- Integrated: Data from disparate sources is combined into a consistent and unified view.

- Time-variant: Data is stored historically, allowing analysis of trends and changes over time.

- Non-volatile: Once loaded, data is not changed or deleted by day-to-day operations; new data is added in periodic loads, so historical records stay stable for analysis.

Data warehouses empower businesses to make data-driven decisions by providing a holistic view of their operations and market trends.

Q 19. What is ETL (Extract, Transform, Load)?

ETL (Extract, Transform, Load) is the process of collecting data from various sources, converting it into a usable format, and loading it into a target database, typically a data warehouse. Think of it as the pipeline that fills the data warehouse.

- Extract: Data is extracted from source systems. These sources can be databases, flat files, APIs, or other data repositories. This step involves connecting to the sources, retrieving the necessary data, and handling any data-specific challenges such as different data formats or schemas.

- Transform: Data is cleaned, standardized, and transformed into a consistent format suitable for the target data warehouse. This includes handling missing values, data type conversions, data cleansing, and any necessary calculations or aggregations.

- Load: The transformed data is loaded into the target data warehouse. This involves efficient data loading techniques to minimize downtime and ensure data integrity.

ETL processes are often automated using specialized ETL tools, which help manage the complexity of extracting, transforming, and loading large datasets from diverse sources. Efficient ETL is crucial for ensuring a high-quality and timely data warehouse, enabling accurate business intelligence.
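The three stages map naturally onto three functions. This is a deliberately small Python sketch, not a production ETL tool. It extracts rows from an in-memory CSV and transforms them under assumed cleaning rules: trim and title-case names, convert types, default a missing amount to 0.0, and reject rows whose amount isn’t numeric. It then loads the result into a hypothetical fact_orders table in SQLite inside one transaction.

```python
import csv
import io
import sqlite3

RAW_CSV = """order_id,customer,amount,order_date
1, Ada ,25.50,2024-01-05
2,grace,,2024-01-06
3,Linus,abc,2024-01-07
4,ADA,40,2024-01-08
"""

def extract(text):
    # Extract: read rows from a CSV source (a string here; a file or API in practice).
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    # Transform: standardize names, convert types, handle missing/bad amounts.
    clean = []
    for row in rows:
        amount_text = row["amount"].strip()
        try:
            amount = float(amount_text) if amount_text else 0.0  # assumed default
        except ValueError:
            continue  # reject rows whose amount is not a number
        customer = row["customer"].strip().title()
        clean.append((int(row["order_id"]), customer, amount, row["order_date"]))
    return clean

def load(conn, rows):
    # Load: write into the target table as a single transaction.
    with conn:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS fact_orders "
            "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, order_date TEXT)"
        )
        conn.executemany("INSERT INTO fact_orders VALUES (?, ?, ?, ?)", rows)

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
totals = conn.execute(
    "SELECT customer, SUM(amount) FROM fact_orders GROUP BY customer ORDER BY customer"
).fetchall()
print(totals)
```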

Q 20. Explain the concept of database indexing.

Database indexing is like creating a detailed table of contents for a book. It significantly speeds up data retrieval by creating a separate data structure that contains pointers to the actual data rows in the database table. Instead of searching through every row, the database can use the index to quickly locate the relevant rows.

Indexes are typically created on columns that are frequently used in WHERE clauses of SQL queries. For example, if you frequently search for customers based on their last name, you would create an index on the ‘last_name’ column. Different types of indexes exist, including B-tree, hash, and full-text indexes, each suited for different types of queries.

While indexes improve query performance, they also consume storage space and can slow down data insertion and update operations. The optimal indexing strategy involves carefully considering the trade-off between query performance and update overhead.
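Why different index types suit different queries can be shown with a toy in-memory model in Python (the row data is made up). A dict plays the role of a hash index, which answers equality lookups but knows nothing about ordering. A sorted list searched with bisect plays the role of a B-tree, which can also answer range queries. Real indexes are on-disk structures, but the trade-off is the same.

```python
import bisect

rows = [
    {"id": 1, "last_name": "Smith", "age": 34},
    {"id": 2, "last_name": "Jones", "age": 28},
    {"id": 3, "last_name": "Smith", "age": 45},
    {"id": 4, "last_name": "Brown", "age": 51},
]

# Hash-style index: key -> row positions. Fast equality lookups, no ordering.
hash_index = {}
for pos, row in enumerate(rows):
    hash_index.setdefault(row["last_name"], []).append(pos)

# Ordered index (what a B-tree provides): sorted (key, row position) pairs.
ordered_index = sorted((row["age"], pos) for pos, row in enumerate(rows))
ages = [key for key, _ in ordered_index]

def lookup_equal(name):
    """ids with last_name == name, without scanning every row."""
    return [rows[pos]["id"] for pos in hash_index.get(name, [])]

def lookup_range(lo, hi):
    """ids with lo <= age <= hi, found by binary search instead of a full scan."""
    start = bisect.bisect_left(ages, lo)
    end = bisect.bisect_right(ages, hi)
    return [rows[pos]["id"] for _, pos in ordered_index[start:end]]

print(lookup_equal("Smith"), lookup_range(30, 50))  # [1, 3] [1, 3]
```

Every insert or update must also maintain both structures, which is the write overhead described above.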

Q 21. What are the different types of database relationships?

Database relationships define how different tables in a relational database are connected and interact. They’re crucial for ensuring data integrity and preventing redundancy.

- One-to-one: One record in a table relates to only one record in another table. Example: A person might have only one passport.

- One-to-many: One record in a table can relate to multiple records in another table. Example: One customer can have many orders.

- Many-to-many: Records in one table can relate to multiple records in another table, and vice versa. Example: Many students can enroll in many courses.

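These relationships are implemented using foreign keys, which are columns in one table that reference the primary key of another table. A one-to-one relationship typically adds a UNIQUE constraint on the foreign key, and a many-to-many relationship needs a junction (linking) table that holds a foreign key to each side. Properly designed relationships are essential for maintaining data consistency and efficiency in relational databases.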
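The many-to-many case is where the junction table appears. This sketch, using Python’s sqlite3 module and hypothetical table names, links students and courses through an Enrollments table whose composite primary key also prevents duplicate enrollments.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite-specific: enable FK enforcement
conn.executescript("""
CREATE TABLE Students (student_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE Courses  (course_id  INTEGER PRIMARY KEY, title TEXT NOT NULL);

-- Junction table: one row per (student, course) pair.
CREATE TABLE Enrollments (
    student_id INTEGER NOT NULL REFERENCES Students(student_id),
    course_id  INTEGER NOT NULL REFERENCES Courses(course_id),
    PRIMARY KEY (student_id, course_id)  -- no duplicate enrollments
);

INSERT INTO Students VALUES (1, 'Ada'), (2, 'Grace');
INSERT INTO Courses  VALUES (10, 'Databases'), (20, 'Compilers');
INSERT INTO Enrollments VALUES (1, 10), (1, 20), (2, 10);
""")

# How many students are in each course?
roster = conn.execute("""
    SELECT c.title, COUNT(*) FROM Courses c
    JOIN Enrollments e ON e.course_id = c.course_id
    GROUP BY c.course_id, c.title
    ORDER BY c.title
""").fetchall()
print(roster)  # [('Compilers', 1), ('Databases', 2)]
```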

Q 22. How do you handle database errors and exceptions?

Handling database errors and exceptions is crucial for building robust and reliable applications. It involves anticipating potential issues, implementing appropriate error handling mechanisms, and providing informative feedback to users. This typically involves using try-except blocks (or similar constructs depending on your programming language) to catch specific exceptions.

- Specific Exception Handling: Instead of a generic except Exception:, catch specific exceptions like OperationalError (for connection issues), IntegrityError (for constraint violations), or ProgrammingError (for SQL syntax errors) to provide targeted error responses. This allows you to handle each situation appropriately.

- Logging Errors: Thoroughly log all database errors, including timestamps, error messages, and relevant context (e.g., SQL query). This information is vital for debugging and identifying patterns in errors. Consider using a structured logging library for easier analysis.

- Retry Mechanisms: For transient errors (like network glitches), implementing retry logic with exponential backoff can significantly improve the application’s resilience. This involves retrying the database operation after a short delay, increasing the delay exponentially with each retry attempt to avoid overwhelming the database.

- User-Friendly Error Messages: Don’t present raw database error messages to users directly. Translate them into user-friendly messages that avoid technical jargon. For example, instead of “IntegrityError: UNIQUE constraint failed,” you might display “This entry already exists.”
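The retry-with-backoff advice can be sketched generically in Python. The `TransientError` class, the `flaky_query` stand-in, and the default delays are hypothetical. In a real application you would catch your driver's transient error type (for example, `psycopg2.OperationalError`) instead. Random jitter is a common refinement that keeps many clients from retrying in lockstep.

```python
import random
import time

class TransientError(Exception):
    """Hypothetical stand-in for a driver's transient error (e.g. a dropped connection)."""

def with_retry(operation, max_attempts=5, base_delay=0.1, max_delay=5.0, sleep=time.sleep):
    """Run operation(), retrying transient failures with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # Out of attempts: surface the error to the caller
            # Delay doubles each attempt (0.1s, 0.2s, 0.4s, ...), capped at max_delay
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay * random.uniform(0.5, 1.0))  # Jitter spreads out concurrent retries

# Usage: a query stand-in that fails twice before succeeding
calls = {"n": 0}

def flaky_query():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("connection reset")
    return [("alice",), ("bob",)]

rows = with_retry(flaky_query, sleep=lambda s: None)  # skip real sleeping in this demo
```

Only errors known to be transient should be retried. Retrying an `IntegrityError`, for example, would fail the same way every time.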

Example (Python with psycopg2 for PostgreSQL):

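```python
import logging

import psycopg2

def register_user(dsn, name, email):
    # Assumes a users table with a UNIQUE email column; dsn is a libpq connection string
    connection = None
    cursor = None
    try:
        connection = psycopg2.connect(dsn)
        cursor = connection.cursor()
        cursor.execute("INSERT INTO users (name, email) VALUES (%s, %s)", (name, email))
        connection.commit()
    except psycopg2.IntegrityError:
        print("Error: This email is already registered.")
    except psycopg2.OperationalError:
        print("Error connecting to database. Please try again later.")
    except Exception as e:
        print(f"An unexpected error occurred: {e}")
        logging.exception(e)  # Log the full traceback for debugging
    finally:
        # Guard against a connection or cursor that was never opened
        if cursor is not None:
            cursor.close()
        if connection is not None:
            connection.close()  # Uncommitted changes are discarded on close
```

Q 23. What is a transaction log and why is it important?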

A transaction log is a crucial component of a database management system (DBMS) that records all changes made to the database. Think of it as a detailed history of every database update. Its importance stems from its role in ensuring data integrity and enabling recovery from failures.

- Data Recovery: In case of a system crash, a database can be restored to a consistent state using the information stored in the transaction log. The log allows the DBMS to ‘redo’ committed transactions whose changes had not yet reached the data files and to ‘undo’ uncommitted transactions that were in progress when the failure occurred.

- Data Integrity: The transaction log helps maintain the ACID properties (Atomicity, Consistency, Isolation, Durability) of database transactions. If a transaction fails midway, the log ensures that the changes made by that transaction are rolled back, maintaining data consistency.

- Auditing and Tracking: The transaction log provides an audit trail of all database changes, which is invaluable for security audits, tracking down errors, and troubleshooting data discrepancies.

- Replication: Transaction logs are essential for database replication. Changes are replicated from the primary database to secondary databases by applying the changes recorded in the transaction log to the secondary instances.

Real-World Scenario: Imagine an online banking system. A transaction log would record every deposit, withdrawal, and transfer. If the system crashes, the transaction log allows the bank to restore every completed transaction and roll back any that were still in progress, avoiding inconsistencies in account balances.
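The redo/undo idea can be illustrated with a toy write-ahead log in Python. This is a simplified sketch, not how any particular DBMS implements recovery. Production systems add checkpoints, log sequence numbers, and page-level bookkeeping. The `LogRecord` structure and the account data are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class LogRecord:
    txid: int
    kind: str          # "UPDATE" or "COMMIT"
    key: str = ""
    old: int = 0       # value before the update (needed for undo)
    new: int = 0       # value after the update (needed for redo)

def recover(data, log):
    """Restore a consistent state from a write-ahead log after a crash."""
    committed = {r.txid for r in log if r.kind == "COMMIT"}
    # Redo: reapply updates of committed transactions, oldest first
    for r in log:
        if r.kind == "UPDATE" and r.txid in committed:
            data[r.key] = r.new
    # Undo: roll back updates of unfinished transactions, newest first
    for r in reversed(log):
        if r.kind == "UPDATE" and r.txid not in committed:
            data[r.key] = r.old
    return data

# T1 (transfer 50 from alice to bob) committed; T2 (withdraw 50 from alice) did not
log = [
    LogRecord(1, "UPDATE", "alice", 100, 50),
    LogRecord(1, "UPDATE", "bob", 0, 50),
    LogRecord(1, "COMMIT"),
    LogRecord(2, "UPDATE", "alice", 50, 0),
]
# On-disk state at crash time: T2's write reached disk, T1's write to bob did not
disk = {"alice": 0, "bob": 0}
print(recover(disk, log))  # {'alice': 50, 'bob': 50}
```

After recovery, the committed transfer is fully applied and the unfinished withdrawal is gone, so the total balance is back to 100.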

Q 24. Describe your experience with a specific database management system (e.g., MySQL, SQL Server, Oracle).

I have extensive experience with MySQL, particularly in designing and managing large-scale, high-availability database systems. I’ve worked with versions ranging from MySQL 5.7 to 8.0, utilizing various storage engines like InnoDB (for transactional applications) and MyISAM (for non-transactional applications).

- Performance Optimization: I’ve extensively tuned MySQL performance using techniques like indexing (covering indexes, composite indexes), query optimization (using `EXPLAIN` to analyze query plans), and database partitioning for large datasets. For instance, I optimized a large e-commerce database by implementing a sharding strategy, improving query response times by 70%.

- Replication and High Availability: I’ve set up and managed MySQL replication configurations (master-slave, multi-master) to ensure high availability and data redundancy. I’ve implemented failover mechanisms and load balancing solutions using tools like MySQL Proxy and MaxScale to maintain database uptime during maintenance or failures.

- Security Best Practices: I’m well-versed in securing MySQL deployments, implementing measures such as strong password policies, user access control, regular security audits, and encryption to protect sensitive data. I’ve also worked with integrating MySQL with various authentication mechanisms and implementing firewalls.

- Data Modeling and Schema Design: I have a solid grasp of database normalization principles, and have designed and implemented efficient database schemas using ER diagrams, ensuring data integrity and scalability. I’ve worked with various data types and relational database concepts extensively.
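The sharding strategy mentioned in this answer is not described in detail. As a generic illustration only, one common approach is hash-based sharding, where each row is routed to a shard by hashing a shard key. The shard names and the use of a customer ID as the key are hypothetical.

```python
import hashlib

SHARDS = ["shard-0", "shard-1", "shard-2", "shard-3"]  # hypothetical shard names

def shard_for(customer_id: int, shards=SHARDS) -> str:
    """Map a shard key to a shard with a stable hash (Python's hash() varies per process)."""
    digest = hashlib.sha256(str(customer_id).encode()).digest()
    return shards[int.from_bytes(digest[:8], "big") % len(shards)]

# All rows for one customer land on the same shard, so per-customer queries hit one server
print(shard_for(42))
```

Plain modulo hashing remaps most keys when a shard is added. Consistent hashing or a lookup directory is often used instead to limit data movement during rebalancing.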

In one project, I migrated a legacy MySQL database to a cloud-based solution, optimizing the process to minimize downtime and ensure data integrity. This involved careful planning, thorough testing, and the use of database migration tools.

Q 25. How do you troubleshoot database connection issues?

Troubleshooting database connection issues involves a systematic approach, moving from the simplest checks to more complex investigations. The first step is to ensure the basics are correct: that the database server is running, the network is accessible, and the user credentials are valid.

- Check Server Status: Confirm that the database server is running and listening on the expected port. Use tools like `netstat` or `ss` (on Linux) or Resource Monitor (on Windows) to verify the server is actively listening.

- Network Connectivity: Verify that your application can reach the database server. Check for network firewalls, network segmentation, or DNS resolution problems. Use tools like `ping` and `traceroute` to check network reachability.

- Credentials Verification: Ensure that the username and password used for the connection are correct and that the user has the necessary privileges to access the database. Double-check for typos and special characters.

- Check Connection String: Scrutinize the connection string for errors. Verify the server address, port number, database name, and other parameters are accurate. Make sure the correct database driver is being used.

- Examine Database Logs: Check the database server’s error log for any clues related to connection attempts or failures. These logs frequently provide insightful information about the reason for connection failures.

- Driver/Library Issues: Ensure that the correct database driver or client library is installed and properly configured for your programming language and environment.

If the problem persists after these basic checks, consider using more advanced diagnostic tools such as database monitoring systems or network analysis tools to identify deeper issues.
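The first two checks, DNS resolution and network reachability of the database port, can be scripted with Python's standard `socket` module. This is a minimal sketch. The `check_db_endpoint` helper is hypothetical, and a successful TCP connection only shows the port is open. Credentials, database name, and driver problems still have to be tested with the real client library.

```python
import socket

def check_db_endpoint(host: str, port: int, timeout: float = 3.0) -> str:
    """Return a short diagnosis of whether host:port accepts TCP connections."""
    try:
        # Step 1: DNS resolution (fails on a mistyped or unresolvable hostname)
        socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror as e:
        return f"DNS resolution failed: {e}"
    try:
        # Step 2: TCP handshake (fails if the server is down or a firewall blocks the port)
        with socket.create_connection((host, port), timeout=timeout):
            return "OK: port is reachable"
    except ConnectionRefusedError:
        return "Connection refused: nothing listening on that port"
    except socket.timeout:
        return "Timed out: host unreachable or traffic filtered by a firewall"
    except OSError as e:
        return f"Network error: {e}"

# Example: check_db_endpoint("db.example.internal", 5432)
# (hypothetical host; 5432 is PostgreSQL's default port, 3306 is MySQL's)
```

A "refused" result points to the server or its listen address. A timeout more often points to a firewall or routing problem.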

Q 26. Explain your experience with database performance tuning.

Database performance tuning is an iterative process aimed at optimizing query execution time, resource utilization, and overall database responsiveness. It requires a deep understanding of the database system, query optimization techniques, and application logic.

- Query Optimization: Analyze slow queries using tools like `EXPLAIN PLAN` (Oracle) or `EXPLAIN` (PostgreSQL, MySQL) to identify performance bottlenecks. Common issues include missing indexes, inefficient join operations, or poorly written SQL queries. Rewrite queries, add indexes, or optimize the schema to improve query performance.

- Indexing Strategies: Proper indexing is crucial. Create indexes on frequently queried columns, and consider composite indexes for queries that filter on multiple columns. Avoid over-indexing, as every extra index slows down data modification operations.

- Schema Optimization: Optimize the database schema by considering normalization techniques (to reduce data redundancy), data types, and table structures. Analyze data usage patterns to identify areas for schema refinement.

- Caching Strategies: Implement appropriate caching mechanisms (at the application level or database level) to reduce the load on the database server and speed up data retrieval. Use database-specific caching features, such as a properly sized buffer pool. Note that MySQL’s query cache was removed in MySQL 8.0.

- Hardware Resources: Ensure that the database server has sufficient CPU, memory, and storage resources. Monitor resource utilization metrics (CPU usage, memory usage, disk I/O) to identify potential hardware bottlenecks.

- Connection Pooling: Implement connection pooling to reuse database connections, minimizing the overhead of establishing and closing connections for each request.
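The query-plan and indexing points above can be tried out on a small scale with SQLite through Python's `sqlite3` module. In this sketch, `EXPLAIN QUERY PLAN` plays the role of `EXPLAIN`. The `orders` table and the index name are hypothetical, and the exact plan wording differs between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, status, total) VALUES (?, ?, ?)",
    [(i % 100, "shipped" if i % 3 else "pending", i * 1.5) for i in range(1000)],
)

query = "SELECT total FROM orders WHERE customer_id = ? AND status = ?"

def plan(sql, params):
    """Return the plan detail strings SQLite reports for a query."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

before = plan(query, (7, "pending"))  # a full table scan (detail text contains "SCAN")

# Composite index on both filter columns; appending total lets the index "cover" the query,
# so SQLite can answer it from the index alone without visiting the table rows
conn.execute("CREATE INDEX idx_orders_cust_status_total ON orders (customer_id, status, total)")
after = plan(query, (7, "pending"))  # an index search (detail text contains "COVERING INDEX")
```

The trade-off from the list still applies: every `INSERT`, `UPDATE`, and `DELETE` on `orders` now has to maintain this index as well.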

In one instance, I improved the performance of a customer relationship management (CRM) database by over 50% by optimizing queries and adding indexes strategically, resulting in significant improvement in the user experience.
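The connection-pooling point from the list can be sketched with the standard library. This is a minimal, hypothetical `ConnectionPool` wrapped around `sqlite3`. Production code would normally use the pool built into its driver or framework, such as `psycopg2.pool` or SQLAlchemy's engine pooling.

```python
import queue
import sqlite3
from contextlib import contextmanager

class ConnectionPool:
    """Minimal fixed-size pool: connections are created once and then reused."""

    def __init__(self, factory, size=5):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())

    @contextmanager
    def connection(self, timeout=5.0):
        conn = self._idle.get(timeout=timeout)  # Blocks (then raises queue.Empty) if all are busy
        try:
            yield conn
        finally:
            conn.rollback()          # Discard any uncommitted work before reuse
            self._idle.put(conn)     # Return the connection instead of closing it

pool = ConnectionPool(lambda: sqlite3.connect(":memory:", check_same_thread=False), size=2)

with pool.connection() as conn:
    result = conn.execute("SELECT 1 + 1").fetchone()[0]
```

Each request borrows an already-open connection, so it avoids the connect and authenticate round trips. The fixed size also caps how many connections the application can open against the server.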

Q 27. What is your experience with database migration?

Database migration is the process of moving data and schema from one database system to another, often involving different versions or platforms. This process requires careful planning and execution to ensure data integrity and minimal downtime.

- Assessment and Planning: Begin by thoroughly assessing the source and target database systems, including their schemas, data volumes, and performance requirements. Develop a detailed migration plan that includes steps for data extraction, transformation, loading (ETL), testing, and cutover.

- Data Extraction: Extract data from the source database using appropriate tools and techniques. This might involve using database backup utilities, export functions, or specialized ETL tools.

- Data Transformation: Transform the extracted data to match the structure and data types of the target database schema. This may involve data cleansing, conversion, and mapping.

- Data Loading: Load the transformed data into the target database using appropriate methods. Consider using bulk loading techniques to minimize loading time for large datasets.

- Testing and Validation: Thoroughly test the migrated data and schema to ensure data integrity and consistency. This might involve performing data validation checks, running test queries, and verifying application functionality.

- Cutover: Implement the final cutover to the new database system, minimizing downtime and ensuring a seamless transition.
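The extract, transform, load, and validate steps can be sketched end to end with two in-memory SQLite databases standing in for the source and target systems. The `legacy_customers` and `customers` tables and the transformation rules (trimming names, lowercasing emails, mapping a `Y`/`N` flag to 1/0) are hypothetical. A real migration would use dedicated tools and the target platform's bulk loader.

```python
import sqlite3

# Hypothetical source (legacy) and target databases, both in memory for the demo
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE legacy_customers (cust_no INTEGER, full_name TEXT, email TEXT, active_flag TEXT)")
source.executemany(
    "INSERT INTO legacy_customers VALUES (?, ?, ?, ?)",
    [(1, "  Ada Lovelace ", "ADA@Example.com", "Y"), (2, "Alan Turing", "alan@example.com", "N")],
)
target = sqlite3.connect(":memory:")
target.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, email TEXT UNIQUE, is_active INTEGER NOT NULL)"
)

def extract(conn):
    """Stream rows out of the source system."""
    return conn.execute("SELECT cust_no, full_name, email, active_flag FROM legacy_customers")

def transform(rows):
    """Cleanse values and map legacy types onto the target schema."""
    for cust_no, full_name, email, flag in rows:
        yield cust_no, full_name.strip(), email.lower(), 1 if flag == "Y" else 0

def load(conn, rows):
    """Bulk insert inside one transaction so a failed load leaves no partial data."""
    with conn:
        conn.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", rows)

load(target, transform(extract(source)))

# Validation: compare row counts and an aggregate between source and target
src_count, src_active = source.execute(
    "SELECT COUNT(*), SUM(active_flag = 'Y') FROM legacy_customers").fetchone()
dst_count, dst_active = target.execute("SELECT COUNT(*), SUM(is_active) FROM customers").fetchone()
validated = (src_count, src_active) == (dst_count, dst_active)
```

Comparing counts and aggregates is a cheap first check. For a real cutover, it is usually followed by spot checks on individual rows and by running the application's own test suite.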

I’ve successfully migrated several databases, including one project where we migrated a large legacy Oracle database to a cloud-based PostgreSQL instance, leveraging tools like pgloader and ensuring minimal disruption to business operations.

Q 28. Describe your experience with cloud-based database services (e.g., AWS RDS, Azure SQL Database).

I have significant experience with cloud-based database services, primarily AWS RDS (Relational Database Service) and Azure SQL Database. These services offer managed database instances, reducing the need for manual server administration and simplifying scaling and high availability.

- AWS RDS: I’ve worked with various RDS database engines (MySQL, PostgreSQL, Oracle, SQL Server), provisioning and managing databases across multiple availability zones for high availability and disaster recovery. I’ve utilized RDS features like read replicas for scaling read operations, automated backups, and point-in-time recovery.

- Azure SQL Database: I’ve deployed and managed Azure SQL databases, leveraging features like elastic pools for efficient resource utilization, geo-replication for disaster recovery, and various performance tuning options. I’ve utilized Azure’s monitoring and diagnostic tools to optimize database performance and identify potential issues.

- Cost Optimization: With cloud databases, I’ve focused on cost optimization strategies like right-sizing instances based on workload demands, utilizing reserved instances or committed use discounts, and optimizing database configurations to minimize costs while maintaining required performance levels.

- Security Best Practices: I’ve implemented robust security measures for cloud databases, including network security groups (NSGs), VPC peering, encryption at rest and in transit, and database access controls to secure the data and meet compliance standards.
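Read replicas only help if the application actually sends reads to them. Below is a minimal, hypothetical routing sketch: writes go to the primary endpoint, and reads rotate across replica endpoints. The endpoint strings are placeholders, not real RDS or Azure hostnames, and checking for a `SELECT` prefix is a simplification. Replicas can lag behind the primary, so reads that must see a just-committed write are sent to the primary.

```python
import itertools

class ReadWriteRouter:
    """Route statements: writes to the primary, reads round-robin across replicas."""

    def __init__(self, primary, replicas):
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def endpoint_for(self, sql, require_fresh=False):
        is_read = sql.lstrip().upper().startswith("SELECT")
        # Writes, reads that must see the latest data, or no replicas -> primary
        if not is_read or require_fresh or self._replicas is None:
            return self.primary
        return next(self._replicas)

router = ReadWriteRouter(
    primary="primary.db.example",  # hypothetical endpoints
    replicas=["replica-1.db.example", "replica-2.db.example"],
)
```

In practice, this routing is often handled by a proxy or by the driver's configuration rather than by hand-written application code.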

In a recent project, I migrated an on-premises SQL Server database to Azure SQL Database, leveraging Azure’s migration tools and automated processes to ensure a smooth and efficient transition while reducing infrastructure management overhead.

Key Topics to Learn for Database Connectivity and Management Interview

- Database Models: Understanding relational (SQL) and NoSQL databases, their strengths, weaknesses, and appropriate use cases. Practical application: Choosing the right database for a specific project based on data structure and performance needs.

- SQL Proficiency: Mastering essential SQL commands (SELECT, INSERT, UPDATE, DELETE, JOINs) and writing efficient queries for data retrieval and manipulation. Practical application: Optimizing database queries for speed and scalability in a production environment.

- Database Connectivity: Familiarizing yourself with various database connectors (ODBC, JDBC, etc.) and understanding how to establish connections and manage transactions. Practical application: Building a robust and secure application that interacts with a database.

- Data Integrity and Security: Implementing measures to ensure data accuracy, consistency, and security (access control, encryption). Practical application: Designing a database schema that prevents data corruption and unauthorized access.

- Database Administration Basics: Understanding fundamental database administration tasks like backup and recovery, user management, performance tuning, and monitoring. Practical application: Troubleshooting database performance issues and implementing solutions.

- Transaction Management: Understanding ACID properties and how to manage transactions to ensure data consistency. Practical application: Implementing error handling and rollback mechanisms in database applications.

- Normalization and Data Modeling: Designing efficient and well-structured databases using normalization techniques. Practical application: Creating a database schema that minimizes data redundancy and improves data integrity.

- Indexing and Query Optimization: Understanding indexing strategies and how to optimize database queries for improved performance. Practical application: Improving the response time of database applications by using appropriate indexes.
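For the transaction-management topic, here is a short sketch of an atomic transfer with rollback, again using `sqlite3` as a stand-in. The `accounts` table and the `transfer` helper are hypothetical. The credit is applied before the debit on purpose, to show that the rollback also undoes work already done inside the transaction.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 0)])
conn.commit()

def transfer(conn, src, dst, amount):
    """Move money atomically: both updates commit together or neither does."""
    try:
        with conn:  # Commits on success, rolls back if an exception escapes
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:  # CHECK constraint: src balance would go negative
        return False

ok = transfer(conn, "alice", "bob", 30)       # succeeds
failed = transfer(conn, "alice", "bob", 500)  # rejected; bob's credit is rolled back too
balances = dict(conn.execute("SELECT name, balance FROM accounts"))
```

After both calls, alice has 70 and bob has 30. The failed transfer leaves no trace, which is the atomicity guarantee in ACID.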

Next Steps

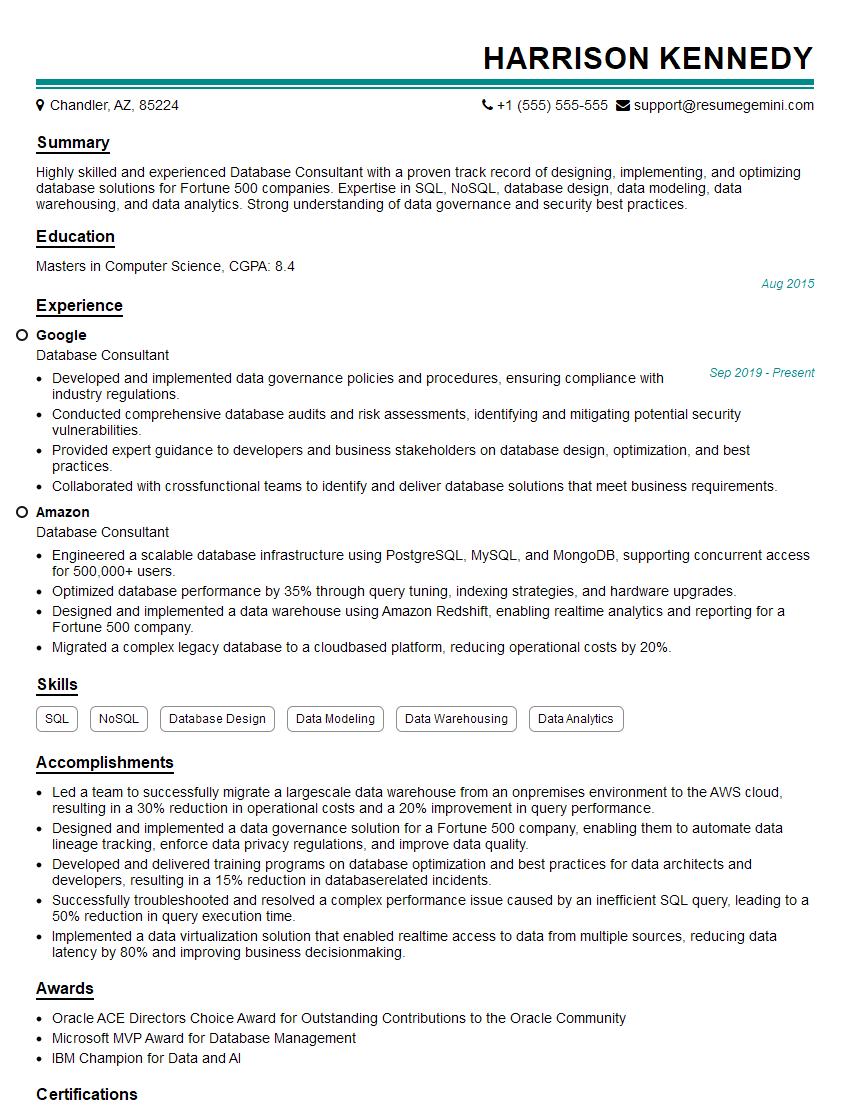

Mastering Database Connectivity and Management is crucial for a successful career in software development, data science, and many other technology fields. Strong database skills are highly sought after, opening doors to exciting and rewarding opportunities. To maximize your job prospects, focus on creating a compelling and ATS-friendly resume that highlights your relevant skills and experience. ResumeGemini is a trusted resource that can help you build a professional and impactful resume tailored to your specific skills and target roles. Examples of resumes tailored to Database Connectivity and Management are available, showcasing how to effectively present your qualifications to potential employers.
