Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Defect Detection and Resolution interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Defect Detection and Resolution Interview

Q 1. Describe your experience with different defect tracking systems (e.g., Jira, Bugzilla).

I have extensive experience with various defect tracking systems, primarily Jira and Bugzilla. Jira, with its flexible workflow and Kanban/Scrum board integration, is my preferred choice for agile development environments. I’ve used it to manage everything from small bug fixes to complex feature development, leveraging its issue linking, custom fields, and reporting capabilities for efficient tracking and analysis. Bugzilla, while less visually appealing, offers a robust and customizable system ideal for larger projects or those requiring more granular control over workflows. For instance, in one project involving a large enterprise software suite, Bugzilla’s powerful querying features were essential for identifying and addressing interconnected defects across different modules. I’m proficient in using both systems to create, assign, track, and resolve defects, ensuring clear communication between developers, testers, and project managers throughout the development lifecycle.

Q 2. Explain your process for prioritizing defects.

Prioritizing defects is crucial for effective bug resolution and project success. My approach involves a multi-faceted strategy, combining severity and priority levels. Severity focuses on the impact of the defect on the system (e.g., critical, major, minor), while priority considers the urgency of fixing it (e.g., high, medium, low). I use a matrix that combines these, allowing a clear understanding of what needs immediate attention. For example, a low-severity, high-priority bug might be a usability issue impacting many users, needing quick resolution even if it doesn’t crash the system. Conversely, a high-severity, low-priority bug—a rare edge case causing a system crash—may be addressed after more critical issues. I also consider factors like frequency of occurrence, customer impact, and business requirements when assigning priority. Furthermore, regular prioritization meetings with developers and stakeholders are essential to validate my assessment and ensure alignment with project goals.
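A severity/priority matrix like the one described above can be sketched in a few lines. This is a minimal illustration, not a standard: the numeric scores, the `Defect` class, and the rule "sort by priority first, then severity" are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical numeric weights; a real team would agree on its own scale.
SEVERITY_SCORE = {"critical": 3, "major": 2, "minor": 1}
PRIORITY_SCORE = {"high": 3, "medium": 2, "low": 1}


@dataclass
class Defect:
    key: str
    severity: str  # impact on the system
    priority: str  # urgency of the fix


def triage_order(defects):
    """Order defects by urgency first, then by impact, most pressing first."""
    return sorted(
        defects,
        key=lambda d: (PRIORITY_SCORE[d.priority], SEVERITY_SCORE[d.severity]),
        reverse=True,
    )


backlog = [
    Defect("BUG-1", "critical", "low"),   # rare edge-case crash
    Defect("BUG-2", "minor", "high"),     # usability issue hitting many users
    Defect("BUG-3", "major", "high"),
]
ordered = [d.key for d in triage_order(backlog)]
print(ordered)
```

Here the rare crash (`BUG-1`) lands last even though it is the most severe, which mirrors the high-severity, low-priority case described above.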

Q 3. How do you differentiate between a bug, a defect, and a failure?

While the terms are often used interchangeably, there are subtle but important differences. A defect is a flaw in the code, design, or requirements that deviates from the specified or expected behavior. A bug is the informal term for a defect, most often one introduced by a coding error. A failure is the observable consequence: the running system does not perform its intended function because the defect was executed. For example, a coding error in the routine that calculates user balances is the defect (the bug). When that code runs, balances are displayed incorrectly and users cannot access accurate account information (the failure). Understanding these distinctions is vital for accurate reporting and effective problem solving.

Q 4. What are the different testing methodologies you are familiar with?

I’m proficient in several testing methodologies, including Waterfall, Agile (Scrum and Kanban), and V-model. My experience in Agile environments has honed my skills in iterative testing and continuous feedback. In Waterfall, I’ve managed thorough test plans and execution in clearly defined phases. The V-model’s emphasis on verification and validation at each stage complements my structured approach. I adapt my approach to the project’s needs, recognizing that the best methodology depends heavily on the context and project complexity.

Q 5. Describe your experience with test case design techniques.

Test case design is a critical aspect of my work. I employ various techniques, including equivalence partitioning (dividing inputs into groups likely to behave similarly), boundary value analysis (focusing on boundary values to catch errors), decision table testing (mapping inputs to outputs based on decision logic), and state transition testing (modeling system behavior through different states). For example, when testing a login form, equivalence partitioning would involve creating test cases with valid and invalid usernames and passwords, while boundary value analysis would test inputs at the edges of the valid range (e.g., minimum and maximum password lengths). I also utilize error guessing, based on my experience and intuition, to identify potential areas prone to errors. Choosing the right technique depends on the complexity of the software component under test.
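As a rough sketch of how equivalence partitioning and boundary value analysis translate into test inputs for the password-length example, consider the following. The limits of 8 and 64 characters and the `is_valid_length` function are assumptions for illustration only.

```python
# Assumed limits for the example; the real rules come from the requirements.
MIN_LEN, MAX_LEN = 8, 64


def is_valid_length(password: str) -> bool:
    """Hypothetical rule under test: length must be within the allowed range."""
    return MIN_LEN <= len(password) <= MAX_LEN


def boundary_lengths(lo: int, hi: int) -> list:
    """Boundary value analysis: values at and immediately around each edge."""
    return [lo - 1, lo, lo + 1, hi - 1, hi, hi + 1]


# Equivalence partitioning: one representative length per class.
partitions = {"too_short": 3, "valid": 20, "too_long": 100}
partition_results = {
    name: is_valid_length("x" * n) for name, n in partitions.items()
}

bva_results = {
    n: is_valid_length("x" * n) for n in boundary_lengths(MIN_LEN, MAX_LEN)
}
print(partition_results)
print(bva_results)
```

Three partition representatives plus six boundary values cover the length rule with nine inputs instead of every possible length.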

Q 6. How do you handle a situation where a developer disagrees with your defect report?

Disagreements with developers are a normal part of the defect resolution process. My approach is to remain professional and objective. I begin by carefully reviewing the developer’s arguments and the code in question. If I find errors in my report, I acknowledge them promptly and correct the report. If I still believe the defect is valid, I present a clear and concise explanation of the issue, providing concrete evidence like screenshots, logs, or test steps to reproduce the problem. I emphasize the impact of the defect on the system and the user experience. Open communication and collaborative debugging are essential; I find that working closely with the developer, stepping through the code together, often clarifies the situation and leads to a mutual understanding of the problem and a solution. If the disagreement persists, I escalate the issue to the project lead or senior management for mediation.

Q 7. Explain your experience with different types of testing (e.g., unit, integration, system, acceptance).

My experience encompasses all levels of software testing. Unit testing involves verifying individual components or modules of the code. Integration testing focuses on interactions between different units or modules. System testing validates the entire system as a whole. Finally, acceptance testing ensures the system meets the requirements defined by the stakeholders. Each level is critical; a failure at any level can propagate to higher levels, creating more significant issues. For instance, if unit tests fail to catch a bug in a single function, that bug could lead to integration issues, eventually resulting in system failures that are costly to fix in later stages. My approach is to ensure comprehensive testing at each level, maximizing early detection of defects and minimizing their downstream impact.

Q 8. How do you ensure test coverage?

Ensuring comprehensive test coverage is crucial for identifying defects early in the software development lifecycle. It’s about strategically designing tests to exercise as much of the system’s functionality as possible, preventing defects from slipping through the cracks. My approach involves a multi-pronged strategy:

- Requirement-based testing: I begin by meticulously reviewing requirements and specifications to identify all functionalities and scenarios that need to be tested. This ensures that every requirement is verified.

- Risk-based testing: I prioritize testing areas with a higher risk of failure or those impacting core functionalities. This is a cost-effective way to focus efforts where they matter most.

- Test case design techniques: I utilize various techniques such as equivalence partitioning, boundary value analysis, and state transition testing to create a robust set of test cases covering a wide range of inputs and conditions. For example, when testing a password field, I would use boundary value analysis to test empty strings and strings at, just below, and just above the maximum length, and equivalence partitioning to cover classes of input such as strings containing special characters.

- Code coverage analysis: Using tools, I assess the extent to which the codebase is executed during testing. This provides a quantitative measure of test coverage, highlighting areas that might need additional testing. For example, a low statement coverage indicates that parts of the code have not been exercised during testing.

- Review and Iteration: Test cases are continuously reviewed and refined based on the defects found and feedback received, leading to improved coverage over time.

For instance, in a recent project involving an e-commerce website, we achieved over 95% statement coverage through a combination of unit, integration, and system tests, ensuring minimal risk of defects impacting critical functionalities such as order processing and payment gateway integration.

Q 9. Describe your approach to root cause analysis of defects.

Root cause analysis (RCA) is a systematic process of identifying the underlying reasons for defects, not just their symptoms. My approach follows a structured methodology:

- Defect Reproduction: The first step is to reliably reproduce the defect. This ensures that everyone is on the same page and that we’re investigating the correct issue. This may involve gathering detailed logs and steps to reproduce.

- Data Gathering: I collect relevant information, such as error logs, system logs, network traces, and user input, to build a comprehensive picture of the problem. The more data you have, the better you can pinpoint the problem.

- 5 Whys Analysis: A simple yet powerful technique where we repeatedly ask ‘Why?’ to drill down to the root cause. For example, ‘Why did the application crash?’ ‘Because of a null pointer exception.’ ‘Why was there a null pointer exception?’ ‘Because a variable wasn’t initialized.’ This continues until the fundamental issue is identified.

- Fishbone Diagram (Ishikawa Diagram): This visual tool helps to categorize potential causes and explore their relationships. Categories might include people, process, environment, materials, and methods.

- Fault Tree Analysis (FTA): This more sophisticated technique maps out the sequence of events that led to the defect, providing a clear visualization of the causal chain.

In a recent instance, a performance issue was traced to an inefficient database query using the 5 Whys method. We identified the inefficient query, modified the database schema to reduce data redundancy and optimized the query, which dramatically improved the response time.

Q 10. How do you write effective defect reports?

A well-written defect report is vital for efficient resolution. It needs to be clear, concise, and contain all the necessary information for developers to understand and fix the issue. I follow these guidelines:

- Unique ID: Assign a unique identifier to each defect for easy tracking and referencing.

- Summary: A concise description of the problem, easily understood at a glance. Include what was expected versus what actually happened.

- Steps to Reproduce: Clear and detailed steps to reproduce the defect, including any necessary input data or configurations.

- Actual Result: Precisely describe what happened when the steps were followed.

- Expected Result: Specify the correct or intended outcome.

- Severity: Rate the impact of the defect (e.g., critical, major, minor). This helps prioritize fixing.

- Priority: Indicate the urgency of resolving the defect based on business needs.

- Environment: Specify the operating system, browser, database version, and other relevant system details.

- Attachments: Include screenshots, logs, or any other relevant files to support the report.

A poorly written defect report, missing key information, can lead to wasted time, miscommunication, and ultimately, ineffective bug fixes. I strive for clarity to ensure that my reports facilitate efficient resolution.
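One way to make these guidelines checkable is to model the report as a small data structure and flag empty required fields before it is filed. The sketch below is an assumption-laden illustration: the `DefectReport` class, its field names, and the sample values are hypothetical and not tied to Jira, Bugzilla, or any other tracker.

```python
from dataclasses import dataclass, field, fields


@dataclass
class DefectReport:
    defect_id: str
    summary: str
    steps_to_reproduce: list
    actual_result: str
    expected_result: str
    severity: str
    priority: str
    environment: str
    attachments: list = field(default_factory=list)  # optional


def missing_fields(report: DefectReport) -> list:
    """Return the names of required fields that were left empty."""
    return [
        f.name
        for f in fields(report)
        if f.name != "attachments" and not getattr(report, f.name)
    ]


report = DefectReport(
    defect_id="DEF-101",
    summary="Balance shows 0.00 after transfer; expected updated balance",
    steps_to_reproduce=["Log in", "Transfer 10.00", "Open account overview"],
    actual_result="Balance displayed as 0.00",
    expected_result="Balance reduced by 10.00",
    severity="major",
    priority="high",
    environment="",  # deliberately left blank to show the check
)
print(missing_fields(report))
```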

Q 11. What metrics do you use to track the effectiveness of defect detection and resolution?

Tracking the effectiveness of defect detection and resolution involves using key metrics to monitor and improve the software development process. These include:

- Defect Density: The number of defects relative to the size of the code, usually expressed per thousand lines of code (KLOC). This metric indicates the overall quality of the codebase.

- Defect Severity Distribution: Tracks the number of defects categorized by severity (critical, major, minor). This helps identify patterns and areas needing improvement.

- Defect Resolution Time: Measures the time taken to fix a defect from its identification to resolution. This highlights areas for process optimization.

- Mean Time To Failure (MTTF): For systems, it represents the average time until a failure occurs. A higher MTTF indicates greater reliability.

- Mean Time To Repair (MTTR): Measures the average time required to restore a system to operational state after a failure. A shorter MTTR reflects efficient resolution.

- Escape Rate: The percentage of defects that made it into production. A low escape rate is the ultimate goal.

By regularly monitoring these metrics, we can pinpoint areas needing improvement and make data-driven decisions to enhance the quality of software and efficiency of development. Trends in these metrics can highlight systemic issues needing attention.
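The arithmetic behind a few of these metrics is simple enough to sketch. The functions and sample figures below are illustrative assumptions, with density expressed per thousand lines of code (KLOC), a common convention.

```python
def defect_density(defect_count: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    return defect_count / (lines_of_code / 1000)


def escape_rate(production_defects: int, total_defects: int) -> float:
    """Percentage of all known defects that were first found in production."""
    return 100 * production_defects / total_defects


def mean_resolution_hours(durations: list) -> float:
    """Average time from identification to resolution, in hours."""
    return sum(durations) / len(durations)


# Made-up sample figures, purely for illustration.
density = defect_density(defect_count=45, lines_of_code=30_000)
escaped = escape_rate(production_defects=5, total_defects=50)
resolution = mean_resolution_hours([4, 10, 16])
print(density, escaped, resolution)
```

Tracking these values per release, rather than as one-off numbers, is what makes the trends mentioned above visible.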

Q 12. How do you use test automation to improve defect detection?

Test automation significantly enhances defect detection by enabling faster, more thorough, and repeatable testing. It automates repetitive tasks, freeing up human testers to focus on more complex and creative testing scenarios.

- Regression Testing: Automating regression tests ensures that new code changes don’t introduce regressions or break existing functionality. This is particularly important in agile development where frequent code changes are made.

- Unit Testing: Automating unit tests ensures that individual components of the software function correctly, catching defects early in the development cycle. Frameworks like JUnit and pytest facilitate this.

- Integration Testing: Automating integration tests verifies that different modules of the software work correctly together. For end-to-end checks through the user interface, tools like Selenium and Cypress automate browser interactions.

- Performance Testing: Automated performance testing tools, such as JMeter and LoadRunner, simulate real-world user loads to identify performance bottlenecks and ensure scalability. For example, a JMeter script can simulate 100 concurrent users accessing a login page.

- Data-Driven Testing: This technique allows you to use test data from external sources like spreadsheets or databases, which enhances test coverage and efficiency.

For example, in a recent project, we used Selenium to automate functional tests, reducing the time spent on regression testing by 80% and enabling earlier detection of defects.
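To make the data-driven idea concrete, here is a minimal sketch that reads test rows from CSV text and checks each against a function under test. The `login` function, the credentials, and the column names are hypothetical; in practice the data would usually come from a file or database rather than an in-memory string.

```python
import csv
import io

# Stand-in for an external spreadsheet or CSV file.
TEST_DATA = """username,password,expected
alice,correct-horse,True
alice,wrong,False
,correct-horse,False
"""


def login(username: str, password: str) -> bool:
    """Hypothetical system under test."""
    return username == "alice" and password == "correct-horse"


def run_data_driven(csv_text: str) -> list:
    """Run one check per data row and return the rows that failed."""
    failures = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        expected = row["expected"] == "True"
        actual = login(row["username"], row["password"])
        if actual != expected:
            failures.append(row)
    return failures


failures = run_data_driven(TEST_DATA)
print(f"{len(failures)} failing row(s)")
```

Adding a new scenario then means adding a data row, not writing another test function.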

Q 13. What are some common causes of defects in software development?

Defects in software development can stem from various sources. Some common causes include:

- Requirements Issues: Ambiguous, incomplete, or inconsistent requirements can lead to misunderstandings and defects in the implementation.

- Design Flaws: Poorly designed systems with inadequate architecture or insufficient consideration of edge cases can introduce vulnerabilities and defects.

- Coding Errors: These are the most common type of defect, including logic errors, syntax errors, and off-by-one errors.

- Testing Gaps: Insufficient or inadequate testing can allow defects to slip through to production.

- Communication Breakdown: Poor communication between developers, testers, and stakeholders can lead to misunderstandings and errors.

- Lack of Code Review: Absent code reviews can miss potential defects before they are integrated into the codebase.

- Time Pressure: Rushed development often leads to shortcuts and compromises in quality, resulting in more defects.

- Inadequate Tools and Technologies: Utilizing outdated tools or technologies can increase the likelihood of errors and defects.

Addressing these root causes through improved processes, better communication, and robust testing strategies is key to reducing the number of defects in the final product. A culture of quality embedded throughout the software development lifecycle is the key.

Q 14. Explain your experience with performance testing and identifying performance bottlenecks.

Performance testing is crucial for identifying and resolving performance bottlenecks before they affect users. My experience encompasses various aspects of performance testing, including:

- Load Testing: Simulating high user loads to determine the system’s behavior under stress. This helps identify the breaking point and capacity limits.

- Stress Testing: Pushing the system beyond its expected load to understand its behavior under extreme conditions and identify failure points.

- Endurance Testing (Soak Testing): Running the system under a sustained load over an extended period to assess its stability and identify memory leaks or other degradation issues.

- Spike Testing: Simulating sudden, dramatic increases in user load to determine the system’s response to unexpected surges in traffic.

- Performance Bottleneck Analysis: Using profiling tools and analyzing metrics like response time, throughput, and resource utilization to pinpoint areas needing optimization. This often involves analyzing logs, database queries, network traffic, and code execution.

In a past project involving a high-traffic website, we used JMeter to simulate realistic user load. We identified a bottleneck in the database query process that was causing slow response times. By optimizing the database queries and adding caching mechanisms, we significantly improved the overall website performance. Tools like New Relic and Dynatrace are invaluable in this process.
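The core of a load test (many concurrent requests, then a look at the latency distribution) can be sketched with the standard library alone. This is not a substitute for JMeter; `fake_request` is a stand-in that only sleeps, and the user and request counts are arbitrary assumptions.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(_):
    """Stand-in for a real HTTP call; returns the observed latency in seconds."""
    start = time.perf_counter()
    time.sleep(0.001)  # simulated server work
    return time.perf_counter() - start


def run_load(concurrent_users: int, requests_total: int) -> dict:
    """Fire requests from a pool of worker threads and summarize latency."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = list(pool.map(fake_request, range(requests_total)))
    percentiles = statistics.quantiles(latencies, n=100)  # 99 cut points
    return {
        "count": len(latencies),
        "median": statistics.median(latencies),
        "p95": percentiles[94],
    }


report = run_load(concurrent_users=20, requests_total=100)
print(report)
```

Comparing the median with a high percentile such as p95 is often more revealing than an average, because bottlenecks tend to show up in the slowest requests first.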

Q 15. Describe your experience with security testing and identifying security vulnerabilities.

Security testing is crucial for identifying vulnerabilities that could expose a system to attacks. My experience encompasses various techniques, including penetration testing, static and dynamic code analysis, and security audits. I’ve used tools like OWASP ZAP and Burp Suite to identify vulnerabilities such as SQL injection, cross-site scripting (XSS), and cross-site request forgery (CSRF). For example, during a recent penetration test on an e-commerce website, I identified a SQL injection vulnerability in the login form by manipulating input parameters. This allowed me to bypass authentication and gain unauthorized access to the database. My process typically involves defining the scope, performing vulnerability scans, analyzing the results, validating findings through manual testing, and ultimately reporting the vulnerabilities with clear remediation recommendations. I prioritize understanding the business context to focus testing efforts on the most critical areas.
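The SQL injection scenario described above can be reproduced in miniature with an in-memory SQLite database. The table, the credentials, and both login functions are invented for this sketch; passwords are stored in plain text only to keep the example short, which a real system should never do.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")


def login_vulnerable(username: str, password: str) -> bool:
    """Builds SQL by string interpolation, so input can change the query."""
    query = (
        "SELECT 1 FROM users "
        f"WHERE username = '{username}' AND password = '{password}'"
    )
    return conn.execute(query).fetchone() is not None


def login_safe(username: str, password: str) -> bool:
    """Uses bound parameters, so input is always treated as data."""
    query = "SELECT 1 FROM users WHERE username = ? AND password = ?"
    return conn.execute(query, (username, password)).fetchone() is not None


payload = "' OR '1'='1"  # classic authentication-bypass input
print(login_vulnerable("alice", payload))
print(login_safe("alice", payload))
```

The vulnerable version accepts the payload because the injected `OR '1'='1'` makes the `WHERE` clause true for every row, while the parameterized version rejects it.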

Q 16. How do you handle defects found in production?

Handling production defects requires a calm, systematic approach that prioritizes minimizing impact and resolving the issue swiftly. My process involves these key steps:

1. Immediate Impact Assessment: I determine the severity and scope of the issue: how many users are affected, what data is at risk, and the potential financial repercussions.

2. Containment: We implement immediate measures like temporarily disabling the affected functionality or rolling back to a previous stable version to prevent further damage.

3. Root Cause Analysis: A thorough investigation pinpoints the underlying cause of the defect. This might involve reviewing logs, debugging code, and collaborating with developers.

4. Resolution & Testing: The defect is fixed, and thorough testing is performed to validate the solution and ensure it doesn’t introduce new problems.

5. Deployment & Monitoring: The fix is deployed to production, and we closely monitor the system’s performance to ensure stability.

6. Post-mortem Analysis: We review the incident to understand what went wrong, identify areas for process improvement, and prevent similar issues in the future.

For example, a recent critical defect caused a system crash during peak hours. We immediately implemented a rollback, identified the root cause as a memory leak, fixed it, thoroughly tested the fix, and deployed it within two hours. A post-mortem review resulted in improved monitoring and stricter code review processes.

Q 17. What is your experience with Agile methodologies and their impact on defect detection?

Agile methodologies, with their iterative nature and emphasis on continuous feedback, significantly improve defect detection. The short development cycles and frequent testing incorporated in sprints allow for early detection and resolution of defects, reducing the cost and effort associated with fixing them later in the development lifecycle. Techniques such as daily stand-up meetings and sprint reviews provide regular opportunities for collaboration and feedback, further enhancing defect detection. The continuous integration and continuous delivery (CI/CD) pipeline also automates testing at various stages, enabling faster identification and resolution of issues. In one project employing Scrum, we implemented automated unit and integration tests, which caught several critical defects during the sprint itself, preventing them from reaching production. This approach fostered a culture of quality and accountability across the entire development team.

Q 18. How do you collaborate with developers and other stakeholders to resolve defects?

Effective collaboration is key to successful defect resolution. I work closely with developers, product owners, and other stakeholders using various tools and techniques. This includes:

- Clear and Concise Communication: I ensure that defect reports are detailed, reproducible, and easily understood by all parties. I use a defect tracking system (e.g., Jira) to manage and track defects throughout their lifecycle.

- Collaborative Problem Solving: I participate in code reviews and debugging sessions, working alongside developers to understand the root cause and implement effective solutions.

- Regular Updates: I provide regular updates to stakeholders on the status of defects, including estimated timelines for resolution.

- Proactive Communication: I proactively communicate potential risks and roadblocks, allowing for proactive mitigation strategies.

For instance, if a defect involves a complex piece of code, I collaborate closely with the developer to provide detailed reproduction steps and logs, facilitating a faster resolution.

Q 19. Describe a time you had to deal with a critical defect under pressure.

During a critical system upgrade, a defect emerged that caused a significant performance degradation during peak usage. The pressure was immense as users experienced severe slowdowns, impacting business operations. We immediately formed a rapid response team comprising developers, system administrators, and myself. My role was to quickly analyze the issue, provide reproducible steps, and prioritize the resolution effort. We isolated the problem to a database query and, through collaborative debugging and performance profiling, identified the cause as an inefficient join operation. By optimizing the query and implementing temporary caching, we resolved the issue within four hours, minimizing the impact and restoring system performance. This incident highlighted the importance of proactive monitoring, rapid response, and effective teamwork under pressure.

Q 20. What are some common challenges you face in defect detection and resolution?

Several challenges complicate defect detection and resolution:

- Inconsistent Reporting: Lack of standardized reporting can make reproducing and analyzing defects difficult.

- Insufficient Test Data: A lack of comprehensive test data can prevent the detection of certain defects.

- Time Constraints: Pressure to meet deadlines sometimes leads to rushed testing, increasing the risk of missed defects.

- Complex Systems: In large and complex systems, identifying the root cause of a defect can be challenging due to numerous interdependencies.

- Lack of Collaboration: Poor communication and collaboration between developers and testers can hamper defect resolution.

Addressing these challenges requires clear processes, adequate resources, effective communication, and a culture of quality that prioritizes defect prevention.

Q 21. How do you stay updated on the latest testing tools and techniques?

Staying current with testing tools and techniques is essential for my role. I actively engage in various methods:

- Professional Development: I attend conferences, webinars, and workshops related to software testing and quality assurance.

- Online Resources: I regularly consult industry blogs, publications, and online communities to learn about the latest trends and best practices.

- Certifications: I pursue relevant certifications to enhance my skills and stay up-to-date with industry standards.

- Hands-on Experience: I actively experiment with new tools and techniques on personal projects to gain practical experience.

For example, I recently completed a course on performance testing and implemented JMeter into our testing process, improving our ability to identify performance bottlenecks early in the development lifecycle.

Q 22. What is your experience with static code analysis tools?

Static code analysis tools are automated programs that examine source code without actually executing it. They’re invaluable for identifying potential bugs, security vulnerabilities, and coding style inconsistencies early in the development process. My experience spans several tools, including SonarQube, FindBugs, and Coverity. I’ve used them across various projects involving Java, Python, and C++. For example, with SonarQube, I’ve successfully identified potential null pointer exceptions and uncovered instances of insecure coding practices like SQL injection vulnerabilities before they made it to the testing phase. This proactive approach significantly reduces the cost and time required for defect resolution later in the SDLC.

I’m proficient in configuring these tools to tailor their analysis to specific project needs, focusing on relevant rule sets and managing false positives. The results of these analyses are used to prioritize remediation efforts, focusing on the most critical issues first.
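To illustrate what "examining source code without executing it" means in practice, here is a toy check built on Python's standard `ast` module. It is a sketch of the general idea only and does not reflect how SonarQube, FindBugs, or Coverity are implemented; the rule (flagging bare `except:` clauses) and the sample source are chosen purely for illustration.

```python
import ast

# Sample code to analyze; it is parsed, never run.
SOURCE = '''
def load(path):
    try:
        return open(path).read()
    except:
        return None
'''


def find_bare_excepts(source: str) -> list:
    """Return line numbers of `except:` clauses that name no exception type."""
    tree = ast.parse(source)
    return [
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]


findings = find_bare_excepts(SOURCE)
print(findings)
```

Real tools apply hundreds of such rules and add data-flow analysis on top, which is where rule-set tuning and false-positive management come in.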

Q 23. How do you measure the quality of your testing process?

Measuring testing quality isn’t just about the number of bugs found; it’s about the effectiveness and efficiency of the entire process. I use a multi-faceted approach. Key metrics include defect density (number of defects per thousand lines of code), defect discovery rate (how quickly defects are found), and the escape rate (the share of defects that reach production). Analyzing trends in these metrics over time helps identify areas needing improvement. For instance, a consistently high escape rate suggests problems with testing coverage or testing methodology.

Beyond quantifiable metrics, I also assess the quality of test cases themselves, ensuring they cover different scenarios and edge cases. Regular test case reviews and peer reviews also contribute to higher quality, preventing errors in the tests themselves. Finally, customer feedback post-release is incorporated to inform future testing strategies, allowing continuous improvement.

Q 24. Explain your understanding of software development life cycle (SDLC) and its impact on testing.

The Software Development Life Cycle (SDLC) encompasses all stages involved in software development, from initial conception to deployment and maintenance. Understanding the SDLC is critical for effective testing because it dictates when and how testing should be integrated. For example, in a waterfall model, testing happens primarily at the end, while in agile methodologies, testing is integrated throughout each iteration (sprint).

Different SDLC models impact the intensity and type of testing required. Agile demands continuous testing with rapid feedback loops, often involving automated tests. In contrast, waterfall models may prioritize extensive testing at the final stages. My experience includes working with both agile and waterfall models, adapting my testing approach accordingly. Early involvement in the SDLC, regardless of the chosen methodology, is key to influencing design decisions that can improve testability and reduce defects.

Q 25. Describe your experience with different testing environments.

My experience covers a variety of testing environments, ranging from simple development machines to complex cloud-based infrastructure. I’ve worked with virtual machines, containers (Docker), and cloud platforms (AWS, Azure). I’m comfortable setting up and configuring test environments that mirror the production environment as closely as possible. This includes configuring databases, network settings, and other relevant infrastructure components. For example, when testing a web application, I’d ensure the test environment replicates the production server’s configuration, including load balancing and security settings, to catch environment-specific issues.

The choice of testing environment depends on factors like the application’s complexity, the scale of the testing, and resource constraints. The goal is always to create a reliable and repeatable environment that facilitates accurate and comprehensive testing.

Q 26. How do you manage your workload when dealing with multiple defects simultaneously?

Managing multiple defects simultaneously requires a structured approach. I utilize defect tracking systems (like Jira or Bugzilla) to organize and prioritize defects based on severity, impact, and priority. I employ techniques like triaging (assessing the severity and impact of each defect) to ensure I focus on the most critical issues first. This often involves prioritizing defects impacting core functionality or those with the highest user impact.

I utilize time management techniques, such as timeboxing (allocating a specific amount of time to each defect) and task switching minimization. I also collaborate closely with developers to establish clear communication channels and ensure efficient resolution. Regular status updates and meetings help track progress and address potential roadblocks promptly.

Q 27. What are your preferred techniques for preventing defects?

Defect prevention is paramount. My preferred techniques focus on proactive measures rather than solely reactive defect fixing. This includes:

- Code reviews: Peer code reviews help catch potential errors before they are integrated into the main codebase. This approach promotes knowledge sharing and improves overall code quality.

- Static code analysis: As mentioned earlier, tools like SonarQube and FindBugs play a crucial role in proactively identifying potential issues.

- Unit testing: Developers writing unit tests for their code ensures that individual components function correctly, catching bugs early.

- Design reviews: Reviewing the architecture and design of the software can identify potential problems before significant coding begins.

- Test-driven development (TDD): Writing tests *before* writing code forces developers to think carefully about the intended functionality and edge cases.

Ultimately, a strong emphasis on best practices, clear communication, and a culture of quality across the development team is essential for effective defect prevention.

Q 28. Explain your experience with regression testing.

Regression testing is crucial to ensure that new code changes haven’t introduced new bugs or broken existing functionality. My experience includes designing and executing various types of regression tests, including:

- Rerunning existing tests: Rerunning previously passing tests to verify that working functionality is still intact and that previously fixed defects haven’t reappeared.

- Confirmation testing (retesting): Verifying that fixed defects are indeed resolved.

- Regression test suites: Maintaining a comprehensive suite of automated tests that can be run quickly and efficiently after every code change.

I use automation extensively for regression testing. Tools like Selenium and JUnit are employed to automate the execution of test cases, saving time and improving consistency. I prioritize automating critical functionalities and those prone to regression. Regular maintenance and updates to regression test suites ensure their ongoing effectiveness. The goal is to achieve rapid feedback on the impact of code changes and to prevent regressions from reaching the production environment.

Key Topics to Learn for Defect Detection and Resolution Interview

- Defect Lifecycle Management: Understanding the complete lifecycle of a defect, from identification to closure, including stages like reporting, verification, and validation.

- Defect Reporting Techniques: Mastering clear, concise, and reproducible defect reports using established methodologies and tools. Practical application includes using bug tracking systems effectively.

- Root Cause Analysis (RCA): Developing proficiency in identifying the underlying causes of defects, not just the symptoms, using techniques like the 5 Whys or Fishbone diagrams.

- Testing Methodologies: Familiarity with various testing approaches (e.g., unit, integration, system, regression testing) and their application in defect detection.

- Debugging and Troubleshooting: Developing strong skills in identifying and resolving defects using debugging tools and techniques specific to your technology stack (e.g., logging, breakpoints, etc.).

- Defect Prevention Strategies: Understanding and implementing proactive measures to prevent defects from occurring in the first place, such as code reviews, static analysis, and design improvements.

- Metrics and Reporting: Analyzing defect data to identify trends, assess quality, and improve processes. This includes understanding key metrics like defect density and resolution time.

- Communication and Collaboration: Effectively communicating defect information to developers, testers, and other stakeholders to facilitate efficient resolution.

Next Steps

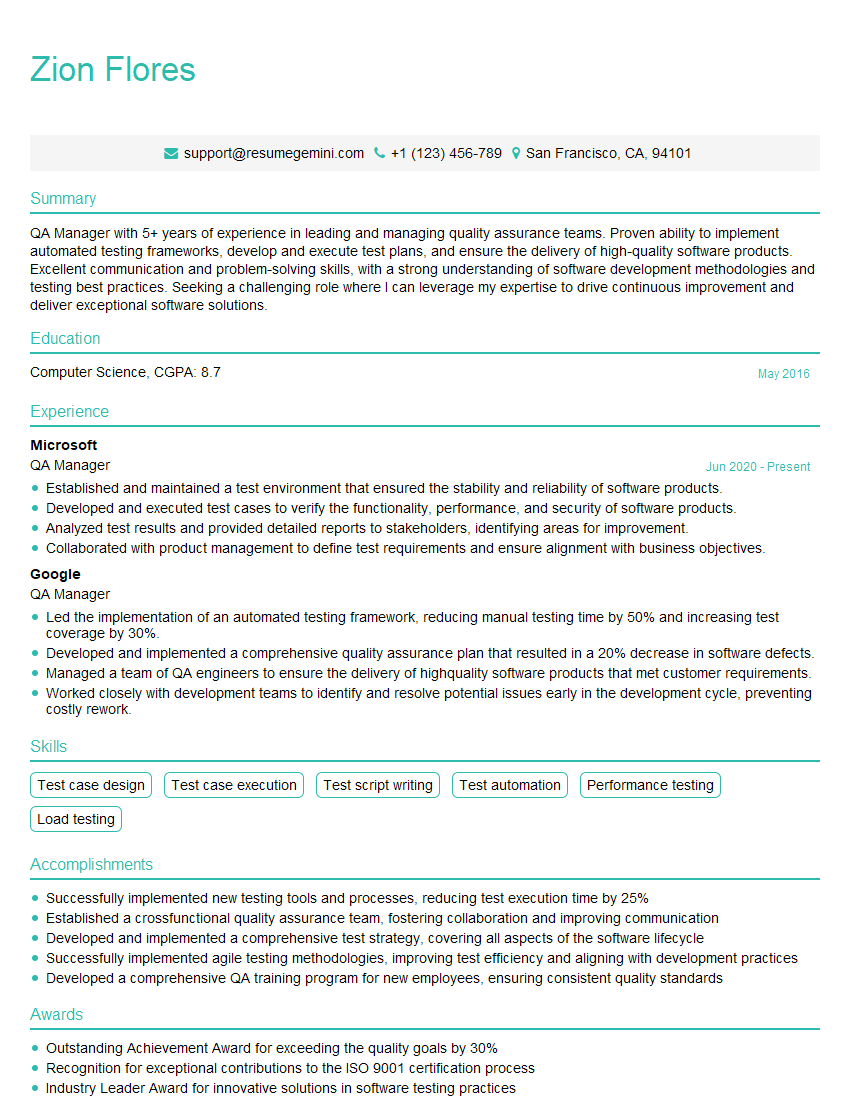

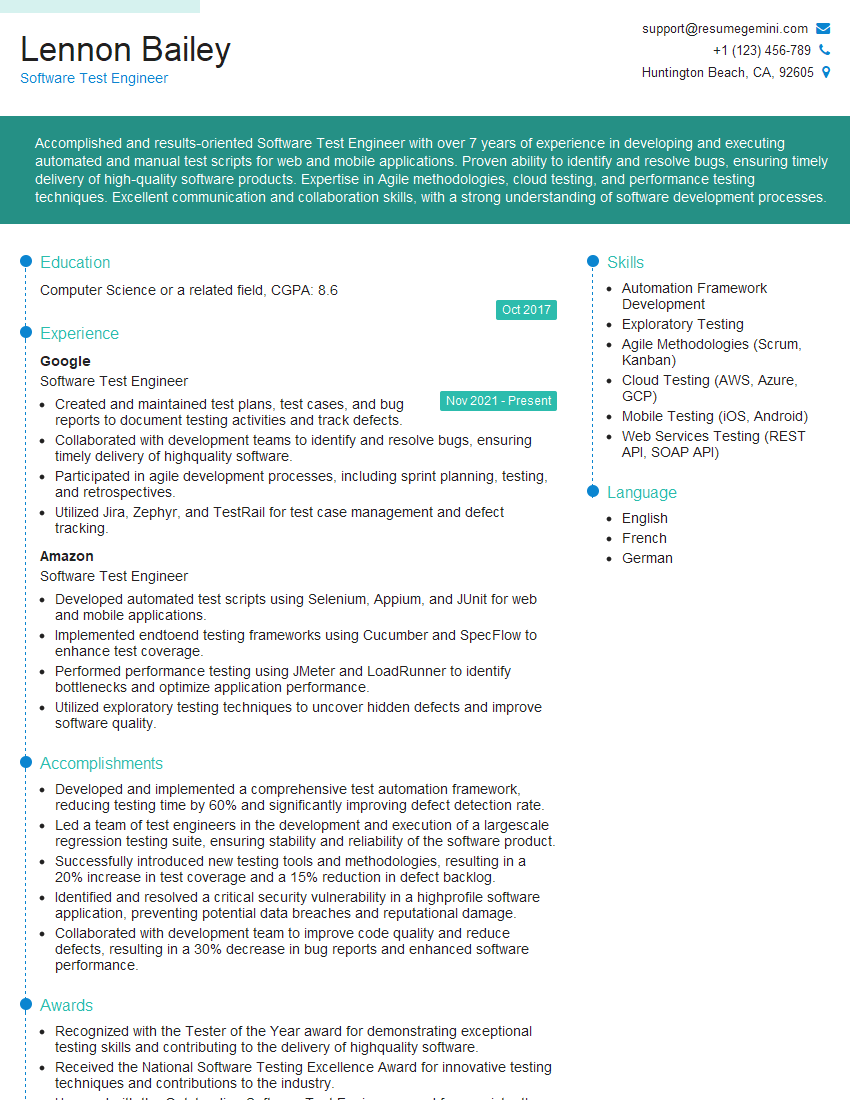

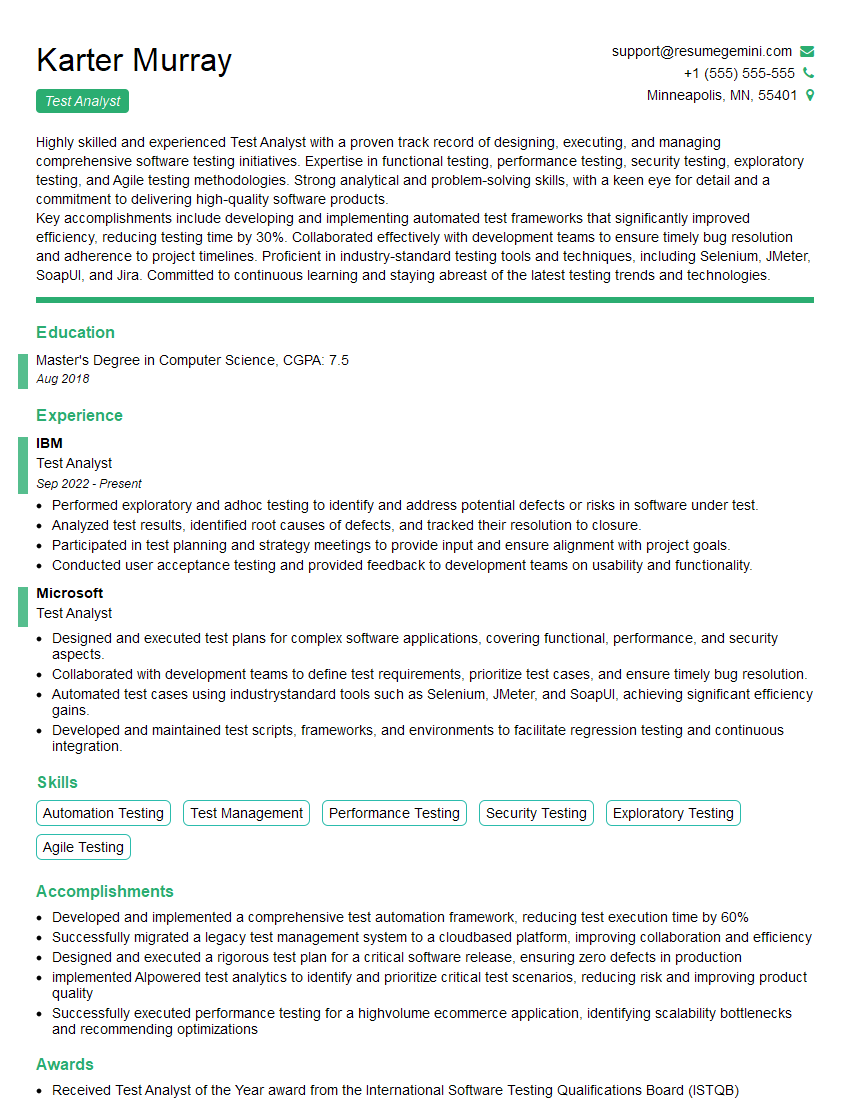

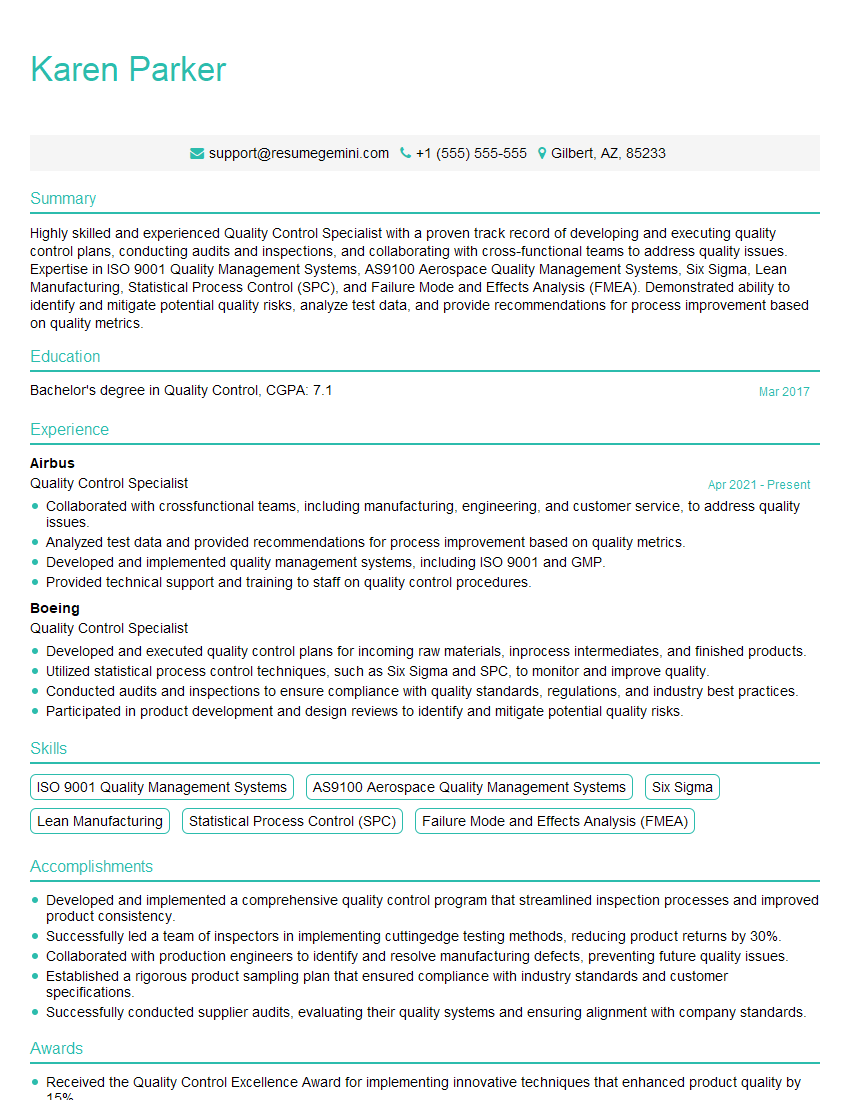

Mastering Defect Detection and Resolution is crucial for career advancement in software development and related fields. It demonstrates your ability to contribute to high-quality products and efficient development processes, leading to increased responsibility and earning potential. To enhance your job prospects, create an ATS-friendly resume that showcases your skills and experience effectively. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. Take advantage of the resume examples tailored to Defect Detection and Resolution professionals available to help you present your qualifications in the best possible light.
